Abstract

Current knowledge regarding the processing of observed manipulative actions (OMAs) (e.g., grasping, dragging, or dropping) is limited to grasping, and the underlying neural circuitry remains controversial. Here, we addressed these issues by combining chronic neuronal recordings along the anteroposterior extent of monkeys’ anterior intraparietal (AIP) area with tracer injections into the recorded sites. We found robust neural selectivity for 7 distinct OMAs, particularly in the posterior part of AIP (pAIP), where it was associated with motor coding of grip type and own-hand visual feedback. This cluster of functional properties appears to be specifically grounded in stronger direct connections of pAIP with the temporal regions of the ventral visual stream and the prefrontal cortex, as connections with skeletomotor-related areas and regions of the dorsal visual stream exhibited opposite or no rostrocaudal gradients. Temporal and prefrontal areas may provide visual and contextual information relevant for manipulative action processing. These results revise existing models of the action observation network, suggesting that pAIP constitutes a parietal hub for routing information about OMA identity to the other nodes of the network.

Keywords: action observation, anatomical connectivity, macaque monkey, parietal cortex, visuomotor processing

Introduction

The interest in the brain networks underlying the processing of others’ observed actions was triggered by the discovery, in the monkey ventral premotor area F5, of the so-called “mirror neurons,” which respond during both action execution and the observation of others’ actions (di Pellegrino et al. 1992; Gallese et al. 1996; Rizzolatti et al. 1996). This finding demonstrated that observed action processing is not limited to visual brain areas (Perrett et al. 1989; Vangeneugden et al. 2009; Singer and Sheinberg 2010) but also involves frontoparietal areas belonging to the motor system (see Materials and Methods for the anatomofunctional criteria defining the areas of interest). Subsequently, neurons responding to the observation of another individual’s action have been described in 2 parietal areas: PFG, in the inferior parietal lobule (IPL) convexity (Fogassi et al. 2005; Rozzi et al. 2008; Bonini et al. 2010), and the anterior intraparietal (AIP) area (Pani et al. 2014; Maeda et al. 2015). Until recently, based on fMRI and connectional evidence (Nelissen et al. 2011), PFG and AIP were believed to operate in parallel with distinct visual inputs from the superior temporal sulcus (STS), each preferentially associated with the processing of information related to observed agents (upper bank of the STS–PFG–F5 convexity) or target objects (lower bank of the STS–AIP–F5 bank sector). However, the direct anatomical link between area PFG and the STS is highly variable (Rozzi et al. 2006; Frey et al. 2014), and it was even undetectable in a recent study (Bruni et al. 2018) in which tracers were injected into the core of functionally identified IPL sites hosting neurons that respond to others’ observed actions. In contrast, area AIP is strongly connected with the STS (Lewis and Van Essen 2000; Nakamura et al. 2001; Borra et al. 2008), including the region in which hand action observation neurons have been found (Perrett et al. 1989).
Altogether, these findings suggest that existing models, which assign a prominent role to the STS–PFG–F5 circuit in the action observation network, may need to be revised. Area AIP is a likely candidate for being the main parietal node of the monkey’s action observation network; yet, in the absence of combined anatomofunctional evidence, its role remains controversial.

The importance of area AIP in observed action processing is also in line with human fMRI studies, which have documented that the putative human AIP, the homolog of monkey AIP (Grefkes et al. 2002; Orban 2016), is activated by observed manipulative actions (OMAs) such as grasping, dragging, or dropping (Shmuelof and Zohary 2005; 2006; 2008; Jastorff et al. 2010; Abdollahi et al. 2013; Ferri et al. 2015; Corbo and Orban 2017). In contrast, single-neuron evidence for observed action processing in AIP is sparse and mostly limited to the encoding of own-hand visual feedback (HVF) during grasping (Sakata et al. 1995; Murata et al. 2000) or the observation of another’s grasping action seen from a first-person visual perspective (Pani et al. 2014; Maeda et al. 2015). The only study that has tested AIP single-neuron activity during the observation of others’ actions from an allocentric (side-view) perspective reported that <10% of the recorded neurons responded in this condition (Maeda et al. 2015), leaving the relevance of AIP neurons to the processing of others’ actions unclear. Furthermore, most likely because of the widely recognized role of AIP in the visual guidance of reaching-grasping actions (Janssen and Scherberger 2015), grasping is the only observed action tested thus far (Nelissen et al. 2011; Pani et al. 2014; Maeda et al. 2015). Here, we investigated whether AIP plays a major role in the visual processing of a wider variety of OMAs beyond grasping, in accordance with the richness of primates’ behavioral repertoire. We further predict that neuronal responsiveness and selectivity for OMAs may be prevalent in posterior AIP (pAIP), where previous studies reported greater selectivity for several types of visual information other than observed actions (Orban et al. 2006; Durand et al. 2007; Baumann et al. 2009; Premereur et al. 2015).
The presence of a rostrocaudal gradient in visual tuning of AIP neurons is also supported by combined electrical microstimulation and fMRI experiments (Premereur et al. 2015), which demonstrated stronger activation of visual areas following pAIP stimulation. Nevertheless, anatomical evidence of rostrocaudal connectional gradients supporting this functional organization is lacking.

Other studies have reported an opposite rostrocaudal motor gradient in AIP in addition to the visual one (Baumann et al. 2009), raising intriguing questions about the integration of visual and motor information at the single neuron and network level. Indeed, visuomotor matching is a hallmark of observed action processing in the parietofrontal motor system, and mirror neuron studies indicate that there is a generally broad correspondence between the action evoking the strongest discharge during observation and execution (Gallese et al. 1996; Ferrari et al. 2003; Rozzi et al. 2008; Maeda et al. 2015; Papadourakis and Raos 2017; Mazurek et al. 2018). Overall, these studies strongly emphasize the convergence between motor and visual representations of action up to the single-neuron level. However, whether and how such integration occurs in AIP remains unknown.

On these bases, we hypothesize that 1) area AIP may encode a variety of OMAs, particularly in its caudal part; 2) among OMAs, grasping may be the preferred exemplar, possibly linked to its widespread motor representation in AIP; and 3) rostrocaudal gradients of visual and motor properties should be linked to corresponding gradients in anatomical connections. To address these issues, we performed chronic single-neuron recordings along the entire anteroposterior extent of AIP in 2 monkeys while they passively viewed videos depicting 7 OMA exemplars (i.e., drag, drop, grasp, push, roll, rotate, and squeeze) and actively performed a visuomotor reaching-grasping task (Bonini et al. 2014b). We found evidence of neural selectivity for various OMAs, particularly in pAIP, where it was associated with motor coding of grip type and own-HVF. After the recordings, we injected neural tracers into each functionally characterized AIP site, revealing that regions hosting OMA-selective neurons showed strong direct connections with a set of temporal and prefrontal areas thought to be involved in context-dependent visual processing of information regarding others’ actions and objects. These results revise current models of the action observation network, suggesting that pAIP constitutes a parietal hub for routing information about OMA identity to the other nodes of the network.

Materials and Methods

Experiments were carried out on 2 Macaca mulatta, 1 female (Mk1, 4 kg) and 1 male (Mk2, 7 kg). Before recordings, monkeys were habituated to sit in a primate chair and to interact with the experimenters. They were then trained to perform a visuomotor task (VMT) (Bonini et al. 2014a; Maranesi et al. 2015) and an observation task (OT), both described below. When the training was completed, a head fixation system was implanted under general anesthesia (ketamine hydrochloride, 5 mg/kg i.m. and medetomidine hydrochloride, 0.1 mg/kg i.m.), followed by postsurgical pain medications. Surgical procedures were the same as previously described (Bruni et al. 2017). All experimental protocols complied with the European law on the humane care and use of laboratory animals (directives 86/609/EEC, 2003/65/CE, and 2010/63/EU), they were approved by the Veterinarian Animal Care and Use Committee of the University of Parma (Prot. 78/12 17/07/2012) and authorized by the Italian Ministry of Health (D.M. 294/2012-C, 11/12/2012).

Apparatus and Behavioral Paradigm

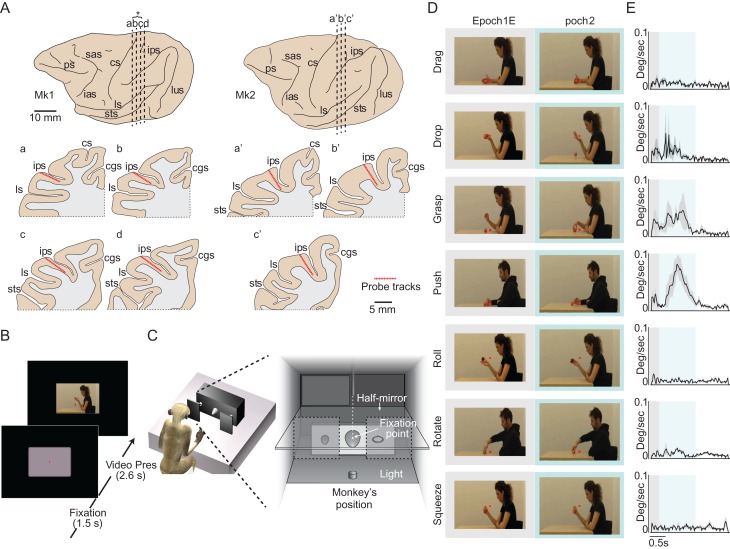

During the VMT, the monkey was seated on a primate chair in front of a box, shown in Figure 1C from the monkey’s point of view, whereas during OT it was sitting in front of a video monitor located on the opposite side. The 2 tasks were carried out in distinct, subsequent blocks during the same recording session.

Figure 1.

Anatomical reconstruction and behavioral paradigms. (A) Reconstruction of the probe locations along the intraparietal sulcus of Mk1 and Mk2. Vertical dashed lines indicate the position of each probe’s track, illustrated in the coronal sections below (a–d for Mk1 and a′–c′ for Mk2). Asterisk indicates the location of the 2 probes that have been considered together for the analysis of neuronal responses. Cgs, cingulate gyrus; cs, central sulcus; ias, inferior arcuate sulcus; ips, intraparietal sulcus; ls, lateral sulcus; lus, lunate sulcus; ps, principal sulcus; sas, superior arcuate sulcus; sts, superior temporal sulcus. (B) Observation task (OT). (C) Behavioral setup for the visuomotor task (VMT). Temporal sequence of task events is shown in Figure S1A. (D) Examples of initial (Epoch 1) and middle (Epoch 2) frames for each OMA exemplar. (E) Average speed of motion (degrees/second) for the 4 variants of each OMA exemplar during the 2.6 s video presentation period.

Visuomotor Task

The VMT was performed by using a box divided horizontally into 2 sectors by a half-mirror: the upper sector contained a small black tube with a white light-emitting diode (LED) that could project a spot of light on the half-mirror surface; the lower sector contained a sliding plane hosting 3 different objects. When the LED was turned on (in complete darkness), the half-mirror reflected the spot of light so that it appeared to the monkey as located in the lower sector (fixation point), in the very position of the center of mass of the not-yet-visible target object. The objects—a ring, a small cone, and a large cone—were chosen because they afforded 3 different grip types: hook grip (in which the index finger enters the ring); side grip (performed by opposing the thumb and the lateral surface of the index finger); whole-hand prehension (achieved by opposing all the fingers to the palm). Objects were presented one per trial, through a 7 cm opening located on the monkey’s sagittal plane within reach of its hand’s starting position. A stripe of white LEDs located on the lower sector of the box allowed us to illuminate objects during specific phases of the task. Note that, because of the half-mirror, the fixation point remained visible in the middle of the object even when the lower sector of the box was illuminated.

The VMT included 3 fully randomized conditions, as illustrated in Figure S1A: grasping in the light, grasping in the dark and a no-go condition. Each of them started when the monkey held its hand on a starting button, after a variable intertrial period ranging from 1 to 1.5 s from the end of the previous trial.

Grasping in the Light

The fixation point was presented, and the monkey was required to acquire it within 1.2 s. Fixation onset triggered a cue sound (a pure high tone consisting of a 1200 Hz sine wave), which instructed the monkey to grasp the subsequently presented object (go-cue). After 0.8 s, the lower sector of the box was illuminated and one of the objects became visible. Then, after a variable time lag (0.8–1.2 s), the sound ceased (go-signal), at which point the monkey had to reach, grasp, and pull the object within 1.2 s. It then had to hold the object steadily for 0.8 s. If the task was performed correctly without breaking fixation, the reward was automatically delivered. We collected 12 correctly performed trials with each object.

Grasping in the Dark

The temporal sequence of events in this condition was identical to that of grasping in the light. However, when the cue sound (the same high tone as in grasping in the light) ceased (go signal), the light inside the box was automatically switched off and the monkey performed the subsequent motor acts in complete darkness. The fixation point was visible for the entire duration of each trial, providing a spatial guidance for reaching the object in the absence of visual feedback. In this paradigm, grasping in the light and grasping in the dark trials were identical and unpredictable until the occurrence of the go signal, to ensure that action planning was the same in both conditions. We collected 12 correctly performed trials with each object.

No-Go Condition

The basic sequence of events was the same as in the go conditions, but a different cue sound (a pure low tone consisting of a 300 Hz sine wave) instructed the monkey to remain still and continue fixating the object for 1.2 s in order to receive the reward. We collected 12 correctly performed trials with each object.

Observation Task

The OT was performed with the monkey’s chair rotated by 180° to face a video monitor (1920 × 1080, 60 Hz). The monitor was located 57 cm from the monkey’s face, and the video subtended 13.04° × 9.85° of the visual field in the horizontal and vertical dimensions, respectively. Videos of 7 different OMA exemplars were presented, each performed by 2 actors (one male and one female) on 2 target objects of the same size and different color (4 variants for each exemplar). First, the monkey had to gaze at a red square on a scrambled background. Then, the video stimulus started and lasted 2.6 s. The monkey was required only to remain still, with its hand on the starting button, and to maintain fixation for the entire duration of the trial. Details on the OMA exemplars are provided in Figure 1D and E. If the monkey maintained fixation within a 3° spatial window centered on the fixation point for the entire duration of the trial, the reward was automatically delivered. Each stimulus was presented 3 times in random order, for a total of 12 trials for each exemplar.
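As a worked check of the stimulus geometry, the sketch below converts the reported visual angles into on-screen size with the standard relation size = 2 · d · tan(θ/2) and tallies the trial counts. At a 57 cm viewing distance, 1 cm on the screen subtends roughly 1°, so the video should measure roughly 13 × 10 cm. The helper name `visual_extent_cm` is hypothetical.

```python
import math


def visual_extent_cm(angle_deg: float, distance_cm: float) -> float:
    """Linear size on the screen that subtends angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


VIEWING_DISTANCE_CM = 57.0  # viewing distance reported in the text

width_cm = visual_extent_cm(13.04, VIEWING_DISTANCE_CM)   # horizontal extent
height_cm = visual_extent_cm(9.85, VIEWING_DISTANCE_CM)   # vertical extent

# Trial bookkeeping: 7 exemplars, each with 2 actors x 2 objects = 4 variants,
# and each variant presented 3 times.
n_exemplars, n_actors, n_objects, n_reps = 7, 2, 2, 3
trials_per_exemplar = n_actors * n_objects * n_reps
total_trials = n_exemplars * trials_per_exemplar

print(f"video area ~ {width_cm:.1f} x {height_cm:.1f} cm")
print(trials_per_exemplar, total_trials)
```

The result (about 13.0 × 9.8 cm, 12 trials per exemplar, 84 in total) matches the 12 trials per exemplar stated above.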

The phases of both tasks were automatically controlled and monitored by LabView-based software, enabling the interruption of the trial if the monkey broke fixation, made an incorrect movement or did not respect the task temporal constraints described above. In all these cases, no reward was delivered. After correct completion of a trial, the monkey was automatically rewarded with the same amount of juice in all conditions (pressure reward delivery system, Crist Instruments, Hagerstown, MD).

Recording Techniques

Neuronal recordings were performed by means of chronically implanted arrays of linear silicon probes with 32 recording channels per shaft. Probes were implanted by estimating the angle of penetration with MRI-based reconstruction of the outline of the intraparietal sulcus at the selected site of insertion (Fig. 1A). Previous reports provide more details on the methodology of probe fabrication, assembly, and implantation (Herwik et al. 2011; Barz et al. 2014; Bonini, Maranesi, Livi, Bruni, et al. 2014), as well as on probes’ recording performance over time in chronic applications (Barz et al. 2017).

The signal from the 128 channels was simultaneously amplified and sampled at 30 kHz with four 32-channel Intan amplifier boards (Intan Technologies, Los Angeles, CA, USA), controlled in parallel via the electrophysiology platform Open Ephys (http://open-ephys.org/). All formal signal analyses were performed off-line with the fully automated spike sorting software MountainSort (Chung et al. 2017), using a threshold of −3.0 standard deviations of each channel’s signal-to-noise ratio for unit detection. To distinguish single units from multiunit activity, we used the noise overlap, a parameter that can vary between 0 and 1; units with a value below 0.1 were considered single units. Single-unit isolation was further verified using standard criteria (ISI distribution, refractory period >1 ms, and absence of cross-correlated firing at a time lag of ≈0 relative to other isolated units, to avoid oversampling), possible artifacts were removed, and all remaining waveforms that could not be classified as single units formed the multiunit activity.
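The detection threshold and the single-unit/multiunit criteria above can be illustrated with the simplified sketch below. It is not MountainSort’s algorithm, which operates on preprocessed data and computes noise overlap itself. Here the noise-overlap value is assumed to be supplied by the sorter, the helper names are hypothetical, and the tolerated fraction of sub-millisecond ISIs (`max_violation_rate`, zero by default) is an assumption that the text does not state.

```python
import numpy as np

NOISE_OVERLAP_MAX = 0.1  # from the text: units below this value count as single
REFRACTORY_S = 0.001     # from the text: refractory period > 1 ms


def detect_threshold_crossings(signal, n_sd=3.0):
    """Sample indices where the signal first drops below -n_sd * SD (simplified)."""
    signal = np.asarray(signal, dtype=float)
    below = signal < -n_sd * np.std(signal)
    # a crossing is a sample below threshold whose predecessor was not
    return np.flatnonzero(below[1:] & ~below[:-1]) + 1


def refractory_violation_rate(spike_times_s, refractory_s=REFRACTORY_S):
    """Fraction of inter-spike intervals shorter than the refractory period."""
    isi = np.diff(np.sort(np.asarray(spike_times_s, dtype=float)))
    return float(np.mean(isi < refractory_s)) if isi.size else 0.0


def classify_unit(noise_overlap, spike_times_s, max_violation_rate=0.0):
    """Label a sorted cluster 'single' or 'multi' (hypothetical helper)."""
    well_isolated = noise_overlap < NOISE_OVERLAP_MAX
    clean_isi = refractory_violation_rate(spike_times_s) <= max_violation_rate
    return "single" if well_isolated and clean_isi else "multi"
```

The cross-correlation check against other units (for oversampling) is not covered by this sketch.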

Recording of Behavioral Events and Definition of Epochs of Interest

Distinct contact sensitive devices (Crist Instruments) were used to detect when the monkey’s hand (grounded) touched the metal surface of the starting button or one of the target objects. To signal the onset and tonic phase of object pulling, an additional device was connected to the switch located behind each object. Each of these devices provided a TTL signal, which was used by the LabView-based software to monitor the monkey’s performance and to control the generation and presentation of the behavioral paradigm’s auditory and visual cue signals.

Eye position was monitored in parallel with neuronal activity with an eye tracking system consisting of a 50 Hz CCD video camera provided with an infrared filter and 2 spots of infrared light. Two identical but independent systems were used for monitoring eye position during VMT and OT. Analog signal related to horizontal and vertical eye position was fed to a computer equipped with dedicated software, enabling calibration and basic processing of eye position signals. The monkey was required to maintain fixation throughout each task, and the eye position signal was monitored by the same LabView-based software dedicated to the control of the behavioral paradigm.

The same software also generated different digital output signals associated with various input and output events of both the VMT and OT. These signals were recorded and stored together with the neuronal activity and subsequently used to construct the response histograms and the data files for statistical analysis.

Unit activity was analyzed in relation to the digital signals associated with the main behavioral events. In the VMT we considered the following epochs of interest: 1) baseline, 500 ms before object presentation; 2) object presentation, from 0 to 500 ms after switching on the light; 3) reaching-grasping, from −500 to 0 ms before pulling onset; and 4) object pulling, from pulling onset to 500 ms after this event. Note that during baseline the monkey kept its hand immobile on the starting button, was staring at the fixation point and was already aware of whether the ongoing trial was a go or a no-go trial: these features enabled us to assess possible variation in neural discharge specifically linked with the subsequent task stages within the ongoing behavioral set.

In the OT we considered the following epochs of interest: 1) baseline, 500 ms before video presentation onset; 2) Epoch 1, the first 300 ms after video onset; and 3) Epoch 2, the subsequent 1200 ms of the video.
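A minimal sketch of how per-trial firing rates in the VMT and OT epochs listed above could be computed from spike timestamps. The event names (`object_on`, `pull_onset`, `video_on`) are hypothetical labels for the digital behavioral signals. Windows are half-open intervals in seconds relative to the aligning event.

```python
import numpy as np

# (aligning event, window start, window stop) in seconds, as listed in the text
VMT_EPOCHS = {
    "baseline": ("object_on", -0.5, 0.0),
    "object_presentation": ("object_on", 0.0, 0.5),
    "reaching_grasping": ("pull_onset", -0.5, 0.0),
    "pulling": ("pull_onset", 0.0, 0.5),
}
OT_EPOCHS = {
    "baseline": ("video_on", -0.5, 0.0),
    "epoch1": ("video_on", 0.0, 0.3),
    "epoch2": ("video_on", 0.3, 1.5),
}


def epoch_rates(spike_times, events, epochs):
    """Mean firing rate (spikes/s) of one unit in one trial for each epoch.

    spike_times: spike timestamps (s) of the unit in this trial.
    events: dict mapping event names to their timestamps (s) in this trial.
    """
    spikes = np.asarray(spike_times, dtype=float)
    rates = {}
    for name, (event, start, stop) in epochs.items():
        t0, t1 = events[event] + start, events[event] + stop
        n_spikes = np.count_nonzero((spikes >= t0) & (spikes < t1))
        rates[name] = n_spikes / (t1 - t0)
    return rates
```

Per-trial rates of this kind would be the input to the Epoch × Exemplar and Object × Epoch ANOVAs described below.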

Data Analyses

Single- and Multiunit Classification

Units (single- and multi-) were first classified, using OT responses, based on possible modulation of their activity in Epoch 1 and/or Epoch 2 of video presentation relative to baseline, either as facilitation (response stronger than baseline) or suppression (response weaker than baseline), according to Vigneswaran et al. (2013). These 2 epochs were chosen because, in the videos of some OMA exemplars, the amount of motion differed markedly between them, with Epoch 1 including primarily static information about the depicted action conveyed by the actor’s initial body posture. The analysis was carried out by means of a 3 × 7 repeated measures ANOVA (factors: Epoch and Exemplar): we classified as action-related all units showing a significant effect (P < 0.05) of the factor Epoch, either as a main effect or in interaction with the factor Exemplar. Action-related units also showing a significant effect (P < 0.05) of the factor Exemplar, either as a main effect or in interaction with the factor Epoch, were classified as OMA-selective units.
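The classification rule above reduces to simple logic on the ANOVA p-values. In the sketch below, the p-values for the Epoch and Exemplar main effects and their interaction are assumed to come from whichever statistics package ran the 3 × 7 ANOVA. The function name and labels are hypothetical. The sign of the modulation (facilitation vs. suppression) would be determined separately by comparing epoch and baseline means.

```python
ALPHA = 0.05


def classify_ot_unit(p_epoch, p_exemplar, p_interaction, alpha=ALPHA):
    """Apply the OT classification rule to the p-values of a 3 x 7 ANOVA."""
    # Action-related: Epoch significant as a main effect or via the interaction
    action_related = p_epoch < alpha or p_interaction < alpha
    # OMA-selective: action-related AND Exemplar significant (main or interaction)
    oma_selective = action_related and (p_exemplar < alpha or p_interaction < alpha)
    if oma_selective:
        return "OMA-selective"
    if action_related:
        return "action-related (non-selective)"
    return "not action-related"
```

Note that a significant Exemplar main effect alone does not qualify a unit: selectivity is assessed only among action-related units.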

Next, units were classified as motor-related based on VMT responses, according to their possible modulation (facilitation or suppression) in one or both epochs of action execution in the dark (i.e., reaching-grasping and object pulling) relative to baseline. The analysis was carried out by means of a 3 × 3 repeated measures ANOVA (factors: Object and Epoch). The same analysis was also performed to assess possible responses during grasping in the light. Responses to object presentation during the VMT were assessed by means of a 3 × 2 × 2 repeated measures ANOVA (factors: Object, Condition, and Epoch). All ANOVAs were followed by Bonferroni post hoc tests (P < 0.05) in the case of significant interaction effects or to identify specific effects of factors with more than 2 levels.
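For the Bonferroni post hoc step, a common formulation multiplies each pairwise p-value by the number of comparisons, capping the result at 1. The sketch below assumes that formulation and uses illustrative p-values, not recorded data. For a 3-level factor such as Object there are 3 pairwise comparisons. The helper name is hypothetical.

```python
from itertools import combinations


def bonferroni_adjust(raw_p):
    """Bonferroni-adjust a dict {comparison: p}; adjusted p = min(1, p * m)."""
    m = len(raw_p)
    return {key: min(1.0, p * m) for key, p in raw_p.items()}


objects = ["ring", "small_cone", "large_cone"]
pairs = list(combinations(objects, 2))        # 3 pairwise comparisons
raw = dict(zip(pairs, [0.004, 0.03, 0.4]))    # illustrative values only
adjusted = bonferroni_adjust(raw)
significant = [pair for pair, p in adjusted.items() if p < 0.05]
```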

Heat maps were constructed to show the temporal activation profile of individual units in selected neuronal populations. Each line represents the activity of a unit averaged across trials of a given condition. The color code represents the net normalized activity, computed as follows: for each unit, a mean baseline value across trials was calculated and subtracted bin-by-bin from the activity in the task period to be plotted, and the result was then normalized to the absolute maximum bin value across the compared conditions. All final plots were made using a bin size of 60 ms and steps of 20 ms. The same data used to produce the heat maps (averaged in 60 ms bins slid forward in steps of 20 ms) were also used to plot the time course of the net normalized population activity. To represent population selectivity for a given variable (i.e., OMA or grip type), we performed one-way repeated measures sliding ANOVAs (P < 0.05, uncorrected) on each unit’s firing rate over time. This analysis was performed in 200 ms bins, advanced in steps of 20 ms, over the entire task-unfolding period. The results were plotted (relative to the center of each epoch) as the percentage of significantly tuned units in each epoch relative to the entire neuronal population.
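The net normalization and sliding-window binning described above might look like the following numpy sketch. It assumes trial-averaged rates per condition and a scalar baseline, and the function names are hypothetical. The sliding one-way ANOVA (200 ms bins, 20 ms steps) is not included. It would be applied bin by bin to trial-level rates obtained in the same way.

```python
import numpy as np


def sliding_rates(spike_times, t_start, t_stop, width=0.060, step=0.020):
    """Firing rate (spikes/s) in windows of `width` s advanced by `step` s.

    Returns window centers and rates; windows are half-open [s, s + width).
    """
    spikes = np.asarray(spike_times, dtype=float)
    # small epsilon so that the last full window is included despite rounding
    starts = np.arange(t_start, t_stop - width + 1e-9, step)
    counts = np.array(
        [np.count_nonzero((spikes >= s) & (spikes < s + width)) for s in starts]
    )
    return starts + width / 2, counts / width


def net_normalized(cond_rates, baseline):
    """Subtract the mean baseline and scale by the largest absolute bin value
    across all compared conditions.

    cond_rates: array (n_conditions, n_bins) of trial-averaged rates.
    baseline: scalar mean baseline rate across trials.
    """
    net = np.asarray(cond_rates, dtype=float) - baseline
    peak = np.max(np.abs(net))
    return net / peak if peak > 0 else net
```

Normalizing by the maximum across all compared conditions, rather than per condition, preserves the relative response strength between conditions within each heat-map row.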

Preference Indices

Neural selectivity for a given variable of interest (e.g., OMA, grip type, object, own HVF) was quantified for each unit by calculating a preference index (PI) for that variable, using the activity in a specific epoch of interest, with the following equation:

$$\mathrm{PI}_v = \frac{n - \left( \sum_{i=1}^{n} r_i \right) / r_{\mathrm{pref}}}{n - 1}$$

where v is the selected variable of interest, n is the number of conditions of v, r_i is the unit response associated with condition i, and r_pref is the unit response associated with the preferred condition. Regardless of the number of conditions of the selected variable, the PI ranges from 0 to 1, with a value of 0 corresponding to identical response magnitude for all conditions and a value of 1 corresponding to a response to only one condition.
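Below is a direct transcription of the preference index. It assumes non-negative responses (e.g., mean firing rates in the epoch of interest), since the text does not specify how suppressed responses were handled. The function name is hypothetical.

```python
import numpy as np


def preference_index(responses):
    """PI = (n - sum(r_i) / r_pref) / (n - 1).

    responses: one non-negative response per condition. Returns 0 when all
    conditions evoke the same response and 1 when only the preferred one does.
    """
    r = np.asarray(responses, dtype=float)
    n = r.size
    r_pref = r.max()
    if n < 2 or r_pref <= 0:
        raise ValueError("need >= 2 conditions and a positive preferred response")
    return (n - r.sum() / r_pref) / (n - 1)
```

For example, responses of 4, 2, and 2 spikes/s to 3 grip types give (3 − 8/4) / 2 = 0.5.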

Decoding Analyses

We assessed the decoding accuracy with a maximum correlation coefficient classifier trained to discriminate between the 7 OMA exemplars, using the methodology previously described by Meyers (2013) and adopted in other studies (Zhang et al. 2011; Rutishauser et al. 2015; Kaminski et al. 2017).
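The maximum correlation coefficient classifier can be sketched as a nearest-template rule. Training computes one mean population vector per class, and each test vector is assigned to the class whose template has the highest Pearson correlation with it. This is a minimal re-implementation under that reading, not the code of Meyers’ toolbox. Class and method names are hypothetical.

```python
import numpy as np


def _standardize_rows(A):
    """Center and unit-normalize each row so that a dot product equals Pearson's r."""
    A = A - A.mean(axis=1, keepdims=True)
    return A / np.linalg.norm(A, axis=1, keepdims=True)


class MaxCorrelationClassifier:
    """Nearest-template classifier based on Pearson correlation."""

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y)
        self.classes_ = np.unique(y)
        # one template (mean population vector) per class
        self.templates_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        r = _standardize_rows(X) @ _standardize_rows(self.templates_).T
        return self.classes_[np.argmax(r, axis=1)]
```

Because correlation ignores overall scale and offset, the classifier responds to the pattern of activity across neurons rather than to global changes in firing.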

For each neuron, data were first converted from raster format into binned format. Specifically, we created binned data containing the average firing rate in 150 ms bins sampled at 50 ms intervals for each trial (data point). We obtained a population of binned data characterized by a number of data points corresponding to the minimum number of correct trials across all units × exemplars (i.e., 10 × 7 = 70 data points for OMA exemplar decoding during the OT) in an N-dimensional space (where N is the total number of neurons considered for the analysis). Next, we randomly grouped the 70 available data points into a number of splits equal to the number of data points per condition (n = 10), with each split corresponding to one replication of all 7 conditions and containing a “pseudopopulation,” that is, a population of neurons that were partially recorded separately but treated as if recorded simultaneously. Before being sent to the classifier, data were normalized by z-score conversion so that neurons with higher levels of activity did not dominate the decoding procedure. We used a 10-fold cross-validation procedure whereby the classifier was trained using all but one of the 10 splits of the data and then tested on the remaining one; this procedure was repeated as many times as the number of splits (i.e., 10), leaving out a different test split each time. To increase the robustness of the results, the overall decoding procedure was run 50 times with different selections of data in the training and test splits, and the decoding accuracy from all these runs was then averaged. The analysis was performed on data collected from both monkeys.
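The pseudopopulation and cross-validation scheme can be sketched as follows for a single time bin. The sketch assumes a rates array already truncated to the minimum trial count (10 trials × 7 exemplars). It shuffles each neuron’s trials independently to build the splits and z-scores test data with training-set statistics. That last step is an assumption, since the text only states that the data were z-scored. Function names and the `seed` parameter are hypothetical.

```python
import numpy as np


def _row_corr(A, B):
    """Pearson correlation between every row of A and every row of B."""
    A = A - A.mean(axis=1, keepdims=True)
    B = B - B.mean(axis=1, keepdims=True)
    A = A / np.linalg.norm(A, axis=1, keepdims=True)
    B = B / np.linalg.norm(B, axis=1, keepdims=True)
    return A @ B.T


def pseudopopulation_decoding(rates, n_runs=50, seed=0):
    """Mean leave-one-split-out accuracy for one time bin.

    rates: array (n_neurons, n_classes, n_trials) of binned firing rates;
    trials of different neurons are treated as if recorded simultaneously.
    """
    rates = np.asarray(rates, dtype=float)
    rng = np.random.default_rng(seed)
    n_neurons, n_classes, n_trials = rates.shape
    accuracies = []
    for _ in range(n_runs):
        # independent trial shuffle per neuron and class; split k = k-th shuffled trial
        order = np.argsort(rng.random(rates.shape), axis=2)
        splits = np.take_along_axis(rates, order, axis=2).transpose(2, 1, 0)
        # splits: (n_splits, n_classes, n_neurons)
        for test in range(n_trials):
            train = np.delete(splits, test, axis=0).reshape(-1, n_neurons)
            y_train = np.tile(np.arange(n_classes), n_trials - 1)
            mu, sd = train.mean(axis=0), train.std(axis=0)
            sd[sd == 0] = 1.0
            z_train = (train - mu) / sd
            z_test = (splits[test] - mu) / sd
            templates = np.stack(
                [z_train[y_train == c].mean(axis=0) for c in range(n_classes)]
            )
            predicted = np.argmax(_row_corr(z_test, templates), axis=1)
            accuracies.append(np.mean(predicted == np.arange(n_classes)))
    return float(np.mean(accuracies))
```

With 7 classes, chance accuracy is about 1/7 (≈14%).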

To assess whether the classification accuracy was above chance, we performed a permutation test in which we randomly shuffled the labels assigned to the different trials (50 repetitions) and then ran the full decoding procedure to obtain a null distribution to be compared with the accuracy of the real decoding. The P-value was obtained by counting how many points in the null distribution were greater than those in the real decoding distribution, and only periods of at least 3 consecutive significant bins were retained. Decoding results were considered statistically significant only if accuracy was greater than all the shuffled data in the null distribution (P = 0).
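The significance criterion above requires the real accuracy to exceed every shuffled value, in runs of at least 3 consecutive bins. It can be sketched as follows. The null accuracies are assumed to have been produced by rerunning the decoding with shuffled labels. The function name is hypothetical.

```python
import numpy as np


def significant_bins(real_acc, null_acc, min_run=3):
    """Flag bins where real accuracy beats every shuffled value, keeping only
    runs of at least `min_run` consecutive such bins.

    real_acc: (n_bins,) accuracy with the true labels.
    null_acc: (n_shuffles, n_bins) accuracy with shuffled labels.
    """
    real_acc = np.asarray(real_acc, dtype=float)
    null_acc = np.asarray(null_acc, dtype=float)
    # empirical P: fraction of null values >= real; P == 0 means real beat them all
    p = np.mean(null_acc >= real_acc[None, :], axis=0)
    above = p == 0
    keep = np.zeros_like(above)
    start = None
    # a trailing False closes a run that reaches the last bin
    for i, flag in enumerate(np.append(above, False)):
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            if i - start >= min_run:
                keep[start:i] = True
            start = None
    return keep, p
```

With 50 shuffles, P = 0 means that no shuffled run reached the real accuracy in that bin.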

Tracer Injections and Histological Procedures

The investigated region was defined anatomofunctionally based on previous studies (Murata et al. 2000; Borra et al. 2008), which identified as AIP the cortical sector extending from AP 6 mm to AP −2 mm. Electrode implantation was thus performed within this region. At the end of the electrophysiological experiments, the probes were removed and neural tracers were injected within the functionally characterized region according to the following criteria: 1) achieving a match between injection sites and probe locations and 2) avoiding overlap between adjacent injection sites (minimum distance of 1.5 mm in AP between injections). To fulfill these criteria, we injected 3 different tracers in each animal, as detailed in Table 1.
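The injection criteria can be checked against the AP coordinates reported in Table 1 with the short sketch below. It verifies that each site lies within the AP 6 to −2 mm range and that adjacent sites are at least 1.5 mm apart. The helper name is hypothetical.

```python
# AP coordinates (mm) of the injection sites as listed in Table 1
INJECTIONS_AP_MM = {
    "Mk1": [4.0, 2.0, 0.0],
    "Mk2": [4.5, 3.0, 1.0],
}
AIP_RANGE_MM = (-2.0, 6.0)  # AP extent of AIP used in the text
MIN_SPACING_MM = 1.5        # minimum AP distance between adjacent injections


def check_injections(ap_sites, ap_range=AIP_RANGE_MM, min_spacing=MIN_SPACING_MM):
    """Return (criteria met?, AP gaps between adjacent sites)."""
    sites = sorted(ap_sites)
    inside = all(ap_range[0] <= s <= ap_range[1] for s in sites)
    gaps = [b - a for a, b in zip(sites, sites[1:])]
    return inside and all(g >= min_spacing for g in gaps), gaps


for monkey, sites in INJECTIONS_AP_MM.items():
    print(monkey, check_injections(sites))
```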

Table 1.

Injected regions and details for each site in both animals

| Monkey | Species | Hemisphere | Injected site | Tracer | Amount |
|---|---|---|---|---|---|
| Mk1 | Macaca mulatta | Right | AP 4 mm | FB 3% | 1 × 0.2 μL |
| Mk1 | Macaca mulatta | Right | AP 2 mm | CTBg Alexa 488 1% | 1 × 1.2 μL |
| Mk1 | Macaca mulatta | Right | AP 0 mm | DY 2% | 1 × 0.2 μL |
| Mk2 | Macaca mulatta | Left | AP 4.5 mm | FB 3% | 1 × 0.2 μL |
| Mk2 | Macaca mulatta | Left | AP 3 mm | DY 2% | 1 × 0.2 μL |
| Mk2 | Macaca mulatta | Left | AP 1 mm | CTBg Alexa 488 1% | 1 × 1.2 μL |

Before tracer injection, each monkey was anesthetized (ketamine, 5 mg/kg i.m. and medetomidine, 0.08–0.1 mg/kg i.m.), and tracers were slowly pressure-injected at the desired depth using a Hamilton microsyringe (Reno, NV, USA). In the right hemisphere of Mk1, we injected 2 retrograde tracers, Fast Blue (FB, 3% in distilled water, Dr. Illing Plastics GmbH, Breuberg, Germany) and Diamidino Yellow (DY, 2% in distilled water, Dr. Illing Plastics GmbH, Breuberg, Germany), and a bidirectional (anterograde and retrograde) tracer, analyzed here as a retrograde one, cholera toxin B subunit conjugated with Alexa 488 (CTBg, 1% in phosphate-buffered saline; Molecular Probes). In the left hemisphere of Mk2, we injected FB, DY, and CTBg. After an appropriate survival period for tracer transport (21 days), each animal was deeply anesthetized with an overdose of sodium thiopental and perfused through the left cardiac ventricle with saline, 3.5% paraformaldehyde, and 5% glycerol (in this order), prepared in 0.1 M phosphate buffer, pH 7.4. Each brain was then coronally blocked on a stereotaxic apparatus, removed from the skull, photographed, and placed in 10% buffered glycerol for 4 days. Finally, each brain was cut frozen into coronal sections of 60 μm thickness. For visualizing DY and FB by fluorescence microscopy, one section out of every 5 was mounted, air-dried, and quickly coverslipped. For visualizing CTBg by bright-field microscopy, one section out of every 5 was processed immunohistochemically as follows. After inactivation of endogenous peroxidase (methanol:hydrogen peroxide, 4:1), selected sections were incubated for 72 h at 4 °C in a primary antibody solution of rabbit anti-Alexa 488 (1:15 000, Life Technologies) in 0.3% Triton, 5% normal goat serum in phosphate buffer solution (PBS), and then incubated in biotinylated secondary antibody (1:200, Vector Laboratories, Burlingame, CA, USA) in 0.3% Triton, 5% normal goat serum in PBS.
Finally, CTBg labeling was visualized using the Vectastain ABC kit (Vector) and the Vector SG peroxidase substrate kit (SK-4700, Vector) as a chromogen. For both monkeys, 1 section out of 5 was stained using the Nissl method (thionin, 0.1% in 0.1 M acetate buffer, pH 3.7).

Reconstruction of the Injection Sites, Identification of the Recorded Regions, Distribution of Labeled Neurons and Quantitative Analysis

The locations of the probe tracks and injection sites were assessed under an optical microscope in a series of Nissl-stained coronal sections, then plotted and digitized together with the outer and inner borders of the cerebral cortex using a computer-based charting system. The distribution of retrograde cortical labeling was plotted in sections spaced 600 μm apart, together with the outer and inner cortical borders. The digitized sections were then imported into CARET software to create reconstructions of the cortical surface (http://www.nitrc.org/projects/caret/, Van Essen et al. 2001), as described in other studies (Galletti et al. 2005; Gamberini et al. 2009). The same software was used to prepare density maps of labeled neurons by projecting the location of each neuron onto the nearest mid-thickness contour used to build the 3D reconstruction (Bakola et al. 2010; Passarelli et al. 2011). The strength of projections from each cortical area was quantified as the percentage of labeled cells in that area relative to the total number of labeled cells in the whole brain, excluding the core and halo of the injection site.
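The projection-strength measure amounts to a per-area percentage computed after discarding cells in the injection core and halo. In the sketch below, the area labels (including `injection_core` and `injection_halo`) are hypothetical placeholders for the assignments produced by the charting and parcellation steps, and the example counts are illustrative, not data.

```python
from collections import Counter


def projection_strength(cell_areas, excluded=("injection_core", "injection_halo")):
    """Percentage of labeled cells per area, excluding injection core and halo.

    cell_areas: iterable with the area label assigned to each labeled neuron.
    """
    counts = Counter(area for area in cell_areas if area not in excluded)
    total = sum(counts.values())
    return {area: 100.0 * n / total for area, n in counts.items()}


# Illustrative counts only
cells = ["TEa"] * 30 + ["46v"] * 10 + ["F5"] * 60 + ["injection_core"] * 500
print(projection_strength(cells))
```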

Identification of Cortical Areas Containing Extrinsic Labeled Cells

The nomenclature and boundaries of the cortical areas that contained labeled cells were based on published criteria or sulcal landmarks, using previously published maps as a guide. The architectonic criteria of Pandya and Seltzer (1982) were used to subdivide the superior parietal lobule into areas PE and PEc. The IPL was subdivided according to Gregoriou et al. (2006). Areas of the lateral intraparietal sulcus were identified based on descriptions by Blatt et al. (1990), Durand et al. (2007), and Lewis and Van Essen (2000). The subdivision of the medial parietal areas and cingulate sulcus (PGm, 23, 24) was defined according to the criteria of Kobayashi and Amaral (2000), Luppino et al. (2005), Matelli et al. (1991), Morecraft et al. (2004), Passarelli et al. (2018), and Vogt et al. (2005). The temporal cortex and the STS were subdivided according to Boussaoud et al. (1990), Lewis and Van Essen (2000), and Saleem and Tanaka (1996). The frontal motor and premotor cortices were subdivided into areas F1–F7 according to the criteria of Belmalih et al. (2007) and Matelli et al. (1991). The lateral prefrontal cortex was subdivided based on the architectonic and connectional definitions of Borra et al. (2011, 2017), Carmichael and Price (1994), and Gerbella et al. (2007, 2010, 2013).

Results

We recorded neuronal activity from 4 locations along the anteroposterior extent of area AIP in Monkey 1 (Mk1) and from 3 locations in Monkey 2 (Mk2) using linear multielectrode (32-channel) silicon probes. The probes were spaced at 2 to 3 mm intervals along the lower bank of the intraparietal sulcus (Fig. 1A), covering most of the rostrocaudal extent of the area. Note that the data recorded from intermediate probes b and c in Mk1 have been combined in subsequent analyses to facilitate comparisons between animals and to evaluate correspondences with the results of the tracing study (see below). The entire recorded region corresponds to the functionally defined area AIP (Sakata et al. 1995; Murata et al. 2000), as even the most posterior probes of both monkeys show neuronal properties known to characterize area AIP (Fig. S1). During all recording sessions (n = 6, 2 in Mk1 and 4 in Mk2), monkeys performed the OT (Fig. 1B). In addition, in 4 out of the 6 sessions (n = 2 in Mk1 and n = 2 in Mk2), we also recorded neuronal activity while monkeys performed the visuomotor reaching-grasping task (VMT, see Fig. S1A) using the apparatus (Fig. 1C) originally devised for studies of other areas (Bonini et al. 2014b; Lanzilotto et al. 2016).

During the OT, monkeys had to fixate a square in the middle of a screen in a dark room while they were randomly presented with one of the 4 variants (2 objects and 2 actors) of 7 different manipulative action exemplars (Fig. 1D). Each video lasted 2.6 s and was preceded by a 1.5 s blank screen with the fixation point (Fig. 1B). Each OMA exemplar was characterized by a specific speed profile (Fig. 1E), used as a proxy for the dynamic body shape changes characterizing the action (Vangeneugden et al. 2009, 2011; Theusner et al. 2014). Within the first 300 ms of video presentation (Epoch 1), OMA exemplars were characterized by the appearance of specific postures involving the actor’s body and right arm, with relatively little dynamic information (Fig. 1D, gray squared pictures and Fig. 1E). In contrast, during the subsequent 1200 ms (Epoch 2), dynamic body shape changes varied widely across exemplars, with some of them (Fig. 1D, light-blue squared pictures and Fig. 1E) being characterized by greater changes. These 2 epochs were used in most subsequent analyses comparing neural activity to baseline, defined as the 500 ms preceding video onset. Notably, despite the wide differences in OMA dynamics, monkeys correctly performed most OT trials, and the frequency of fixation breaks during video presentation did not differ significantly amongst exemplars (Kruskal–Wallis, χ2 = 3.61, P = 0.73; Fig. S2A).
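The epoch windows above lend themselves to a compact definition. As a minimal sketch, assuming spike times in milliseconds relative to video onset and half-open windows (a boundary convention we assume, as it is not specified here), per-epoch firing rates for a single trial could be computed as follows; the spike times in the example are invented for illustration.

```python
from typing import Dict, List, Tuple

# Analysis windows in ms relative to video onset (t = 0), as described above.
EPOCHS: Dict[str, Tuple[float, float]] = {
    "baseline": (-500.0, 0.0),  # 500 ms preceding video onset
    "epoch1": (0.0, 300.0),     # first 300 ms: mostly static posture
    "epoch2": (300.0, 1500.0),  # next 1200 ms: dynamic body shape changes
}


def epoch_rates(spike_times_ms: List[float]) -> Dict[str, float]:
    """Mean firing rate (spikes/s) of one trial in each epoch.

    Windows are treated as half-open [start, end) (an assumed convention),
    so a spike at an epoch boundary is counted only once.
    """
    rates = {}
    for name, (start, end) in EPOCHS.items():
        n_spikes = sum(1 for t in spike_times_ms if start <= t < end)
        rates[name] = n_spikes / ((end - start) / 1000.0)
    return rates


# Invented spike times for a single trial.
print(epoch_rates([-400, -100, 50, 120, 250, 400, 900]))
# baseline 4.0, epoch1 about 10.0, epoch2 about 1.67 spikes/s
```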

Neuronal activity was sorted off-line with automated spike-sorting software (Chung et al. 2017). We extracted both multi- and single-unit activity, here jointly referred to as “units”. Because single units and multiunits encoded functional properties similarly (see Fig. 2 and Fig. S2B and C), we pooled all (single- and multi-) units in most subsequent analyses of functional data, to match the unbiased sampling of the tracing study performed on the physiologically characterized sites.

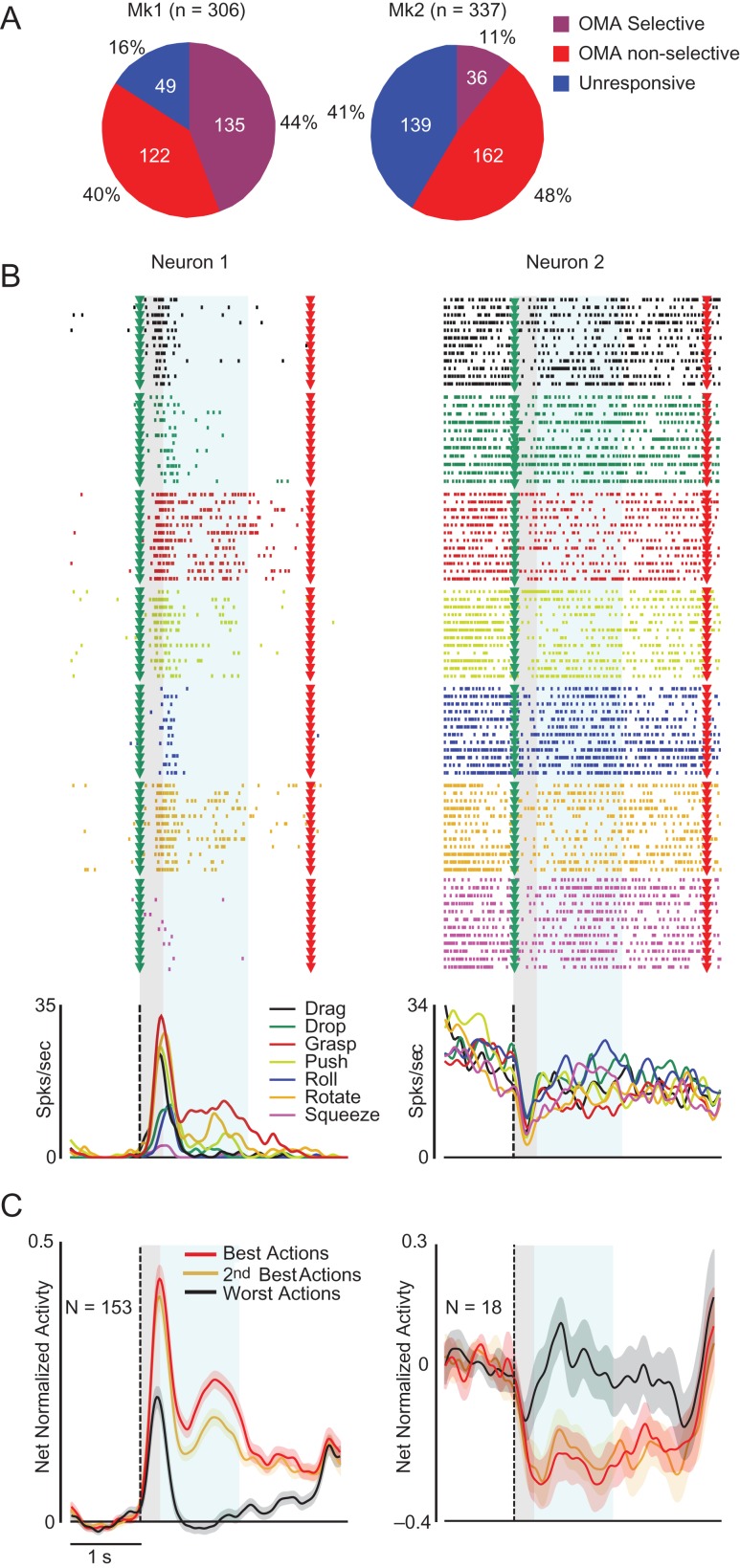

Figure 2.

Single neuron examples, population activity and tuning properties of AIP units in the OT. (A) Percentage of OMA-selective, -nonselective, and -unresponsive units in each monkey. (B) Examples of facilitation (Neuron 1) and suppression (Neuron 2) OMA-selective neurons. Each neuron’s raster and peri-stimulus response is aligned to the video presentation (green triangles and dashed lines). Red triangles, reward delivery. (C) Time course of the net normalized population activity (including single- and multiunits) of OMA-selective facilitated (left) and suppressed (right) units. The shaded area around each line indicates 1 standard error; gray and light-blue shaded areas superimposed on each plot represent Epochs 1 and 2 used for statistical analysis.

Neuronal Selectivity for OMAs in Area AIP

We isolated 643 units (Fig. 2A), 131 of which were classified as well-isolated single units (n = 89 in Mk1 and n = 42 in Mk2) based on strict standard criteria (see Materials and Methods). Of all isolated units, 455 (70.8%, including 123 single units) responded to OMAs (7 × 3 repeated measures ANOVA, factors: Exemplar and Epoch, P < 0.05), and 171 of these (including 40 single units) exhibited action selectivity (see Materials and Methods). Thus, over a quarter (26.6%) of all recorded AIP units (30.5% of single units) exhibited OMA selectivity, despite interindividual differences (44% and 11% of units in Mk1 and Mk2, respectively).
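The percentages above follow directly from the reported counts. The short check below reproduces them and also derives the proportion of responsive multiunits (all units minus single units), which the text does not report directly.

```python
# Counts reported in the text above.
total_units, total_single = 643, 131
responsive_units, responsive_single = 455, 123
selective_units, selective_single = 171, 40


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100.0 * part / whole, 1)


print(pct(responsive_units, total_units))    # 70.8  responsive, all units
print(pct(selective_units, total_units))     # 26.6  selective, all units
print(pct(selective_single, total_single))   # 30.5  selective, single units
print(pct(responsive_single, total_single))  # 93.9  responsive, single units
print(pct(responsive_units - responsive_single,
          total_units - total_single))       # 64.8  responsive, multiunits only
```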

Most AIP OMA-selective units showed increased activity in response to the video presentation (facilitation units, N = 153, 89.5%), whereas a few exhibited suppressed activity (suppression units, N = 18, 10.5%). Figure 2B shows examples of facilitation (Neuron 1) and suppression (Neuron 2) OMA-selective single units. In line with these examples, the population activity of all units (Fig. 2C) and of single neurons only (Fig. S2B) discriminated between the best and worst OMA in a similar manner. Interestingly, the time course of facilitation units (Fig. 2C) and single neurons (Fig. S2B) showed a strong, transient activation peaking in Epoch 1, followed by sustained activity during Epoch 2. Considering the differences in motion strength (taken as a proxy for the magnitude of body shape changes) between Epochs 1 and 2 of the videos (Fig. 1E), the early population response (Epoch 1) may derive largely from static information conveyed by the initially different body postures of the actor, whereas the later response (Epoch 2) should reflect only dynamic visual information. To test this directly, we performed a series of single-unit and population analyses.

OMA selectivity was evident during both Epochs 1 and 2: the OMA PI for Epoch 2 was slightly greater than for Epoch 1 (Fig. 3A), but the 2 indices did not differ significantly (t = 0.81, P = 0.42) and were positively correlated (r = 0.57, P < 0.001). These findings suggest that the distinctive initial static body postures of some OMA exemplars were sufficient to elicit OMA selectivity, which was sustained during the subsequent dynamic changes in body shape. To test this idea more directly, we independently ranked the 7 OMA exemplars based on the discharge rate of each unit in Epochs 1 and 2. OMA exemplars with lower ranks based on the units’ responses during Epoch 2 also showed the lowest ranks when scored based on their responses during Epoch 1 (Fig. 3B and S2D). Thus, the representation of OMA exemplars in area AIP at the single-unit level appears to be relatively invariant with regard to the strength of the dynamic information characterizing them. AIP neurons may therefore code OMA identity regardless of whether static or dynamic information conveys it.
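The cross-epoch comparison of exemplar rankings can be sketched as follows. This is a simplified illustration under stated assumptions: rank 1 denotes the exemplar with the highest mean rate, ties are broken by exemplar order, and no trial-level cross-validation is performed (the cross-validated procedure behind Fig. 3B is described in Materials and Methods). When the Epoch 1 ranking mirrors the Epoch 2 ranking, the output runs from 1 through 7.

```python
from typing import List, Sequence


def ranks_desc(values: Sequence[float]) -> List[int]:
    """Rank of each entry (1 = highest value); ties keep exemplar order."""
    order = sorted(range(len(values)), key=lambda i: -values[i])
    ranks = [0] * len(values)
    for position, idx in enumerate(order, start=1):
        ranks[idx] = position
    return ranks


def cross_epoch_ranking(e1_rates: Sequence[Sequence[float]],
                        e2_rates: Sequence[Sequence[float]]) -> List[float]:
    """Mean Epoch 1 rank of the exemplar holding each Epoch 2 rank.

    e1_rates[u][k], e2_rates[u][k]: mean rate of unit u for exemplar k.
    Entry r - 1 of the result averages, across units, the Epoch 1 rank of
    the exemplar ranked r-th in Epoch 2.
    """
    n_ex = len(e2_rates[0])
    sums = [0.0] * n_ex
    for r1_vals, r2_vals in zip(e1_rates, e2_rates):
        r1, r2 = ranks_desc(r1_vals), ranks_desc(r2_vals)
        for k in range(n_ex):
            sums[r2[k] - 1] += r1[k]
    return [s / len(e2_rates) for s in sums]


# Two invented units whose preferences are identical in both epochs.
e1 = [[1, 2, 3, 4, 5, 6, 7], [7, 6, 5, 4, 3, 2, 1]]
e2 = [[2, 3, 4, 5, 6, 7, 9], [8, 6, 5, 4, 3, 2, 0]]
print(cross_epoch_ranking(e1, e2))  # [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
```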

Figure 3.

Temporal dynamics of OMA processing in AIP. (A) Regression plot of preference index (PI) values calculated on OMA-selective unit activity during Epochs 1 and 2. See also Figure S2D. (B) Cross-validation of the ranking of all OMA exemplars performed with the average activity (±1 standard error) during Epoch 1 as a function of the same ranking performed with the average activity during Epoch 2 (Kruskal–Wallis, χ2 = 139.34, P < 0.001). (C) Percentage of units selective for each OMA exemplar in each monkey during Epoch 2. (D) Classification accuracy of OMA exemplars as a function of test and training time. The superimposed white line represents the classification accuracy of the population along the diagonal (scale on the right). The red line in the lower part of the plot indicates the period of time during which the decoding accuracy is significantly above chance level (see Materials and Methods). (E) Cross-validation of the best OMA exemplar (rank = 1) calculated with the activity during Epoch 2 (E2) as a function of time during the entire action unfolding period (bin width 300 ms, step 20 ms). For each bin, the color code (see inset) represents the local rank of the OMA exemplar ranked first in Epoch 2. Bins in which neural activity was not significantly different from baseline (sliding window ANOVA, bin width 300 ms, step 20 ms, P > 0.05 uncorrected) have been blanked out (E1 = Epoch 1).

Encoding OMAs requires not only selective units but also adequate coverage of the various exemplars, which we investigated using responses in Epoch 2. Figure 3C shows that grasping was the most represented OMA: for more than 30% of the recorded units (34% in Mk1 and 36% in Mk2), grasping evoked the strongest response (see also Fig. S2C). In contrast, Roll, Squeeze and Rotate were the least represented OMAs amongst selective AIP units. Moreover, the similarity between ranks in Epochs 1 and 2 (Fig. 3B) was greater for the 4 most frequently preferred exemplars than for the 3 less well-represented ones (Fig. S2E). Further analyses were performed to rule out the possibility that neuronal selectivity for OMAs could be accounted for by stimulus features other than the action (i.e., actor gender, type of object, or their interaction): Figure S2F shows that action exemplar was the only manipulated factor significantly represented by AIP neuronal activity.

Finally, we trained a classifier (Meyers 2013) to discriminate between the 7 OMA exemplars using the entire AIP neuronal population. Classification accuracy (Fig. 3D) was high and significantly above chance level (1/7, about 14%) during the entire video presentation period, reaching a maximum of nearly 80% near the end of Epoch 1 and remaining close to maximal during most of Epoch 2. By training the classifier at one point in time (using 150 ms bins of data shifted by 50 ms) and then testing its decoding performance at either the same or a different time point, we investigated whether a dynamic or static population code underlies OMA representation in AIP (Fig. 3D). High decoding accuracy restricted to the diagonal indicates that the neural representation of OMA identity in AIP emerges mainly from a dynamic code, consistent with previous studies of perceptual and cognitive processes (Meyers et al. 2008, 2012; Crowe et al. 2010). This dynamic representation of OMA identity in AIP (Fig. 3D) mainly reflects the contribution of OMA-selective units (Fig. S3A), rather than that of task-related but OMA-unselective (Fig. S3B) or task-unrelated (Fig. S3C) units. Although accurate decoding of AIP population activity is time dependent, most OMA-selective units display a remarkable stability over time in their preference for a given exemplar (Fig. 3E), in line with the comparison of selectivity between the 2 epochs reported above. Thus, OMA representation emerges dynamically at the population level from individual units with a relatively stable code for exemplar identity.
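The cross-temporal (train at one time, test at another) analysis can be illustrated with a small NumPy sketch. It assumes pre-binned firing rates of shape (trials, units, bins) and uses a maximum-correlation template classifier, one simple choice for this kind of population decoding; the classifier, binning and cross-validation scheme actually used are specified in Materials and Methods. The synthetic data implement a purely dynamic code, in which each time bin carries a new class-specific pattern, so accuracy should be high on the diagonal and near chance (1/7) off it.

```python
import numpy as np


def max_corr_predict(templates: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Assign each trial to the template with the highest Pearson correlation.

    templates: (n_classes, n_units); x: (n_trials, n_units).
    """
    t = templates - templates.mean(axis=1, keepdims=True)
    t /= np.linalg.norm(t, axis=1, keepdims=True) + 1e-12
    z = x - x.mean(axis=1, keepdims=True)
    z /= np.linalg.norm(z, axis=1, keepdims=True) + 1e-12
    return np.argmax(z @ t.T, axis=1)


def temporal_generalization(x_train, y_train, x_test, y_test, n_classes):
    """Accuracy matrix acc[i, j]: templates built at bin i, tested at bin j.

    x_*: (n_trials, n_units, n_bins) binned firing rates; y_*: integer labels.
    """
    n_bins = x_train.shape[2]
    acc = np.zeros((n_bins, n_bins))
    for i in range(n_bins):
        # Class templates = mean population vector of training trials at bin i.
        templates = np.stack([x_train[y_train == c, :, i].mean(axis=0)
                              for c in range(n_classes)])
        for j in range(n_bins):
            pred = max_corr_predict(templates, x_test[:, :, j])
            acc[i, j] = np.mean(pred == y_test)
    return acc


# Synthetic example: 7 classes, 50 units, 6 bins, with an independent
# class-specific pattern in every bin (a purely dynamic code).
rng = np.random.default_rng(0)
n_classes, n_units, n_bins, n_per_class = 7, 50, 6, 20
patterns = rng.normal(size=(n_classes, n_units, n_bins))
y = np.repeat(np.arange(n_classes), n_per_class)
x = patterns[y] + 0.5 * rng.normal(size=(y.size, n_units, n_bins))
is_train = np.arange(y.size) % 2 == 0  # alternate trials for training/testing
acc = temporal_generalization(x[is_train], y[is_train],
                              x[~is_train], y[~is_train], n_classes)
print(np.round(acc, 2))  # high values on the diagonal, near 1/7 elsewhere
```

A model with a static code would instead show high accuracy across the whole matrix, because templates built at one bin would still match the patterns at other bins.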

Relationship Between OMA Selectivity and Visuomotor Properties in AIP

A subset of 487 units (Mk1 n = 306; Mk2 n = 181) was tested in the VMT in addition to the OT, and the vast majority of them (91%) proved task-related. Table 2 summarizes the relationship between visual responses to OMAs and selectivity for the grip type in the dark, which is considered the most reliable marker of hand grasping motor coding. A few AIP units (n = 25, 5%) discharged only during visual presentation of the target object, many (n = 133, 27%) responded during at least 1 of the 2 action execution epochs in the dark, and most (n = 287, 59%) responded during both object presentation and action execution (in the dark).

Table 2.

Properties of all units recorded during both VMT in the dark (reaching-grasping/pulling epoch) and OT (Epochs 1/2)

| | Grip selective | Grip nonselective | Unresponsive | Total |
|---|---|---|---|---|
| OMA-selective | 67 | 75 | 15 | 157 |
| OMA nonselective | 68 | 125 | 25 | 218 |
| OMA unresponsive | 34 | 51 | 27 | 112 |
| Total | 169 | 251 | 67 | 487 |

Among OMA-responsive units (Table 2), grip-selective units showed OMA selectivity more frequently (49.6%, 67/135) than grip-nonselective ones (37.5%, 75/200; χ2 = 4.86, P = 0.028), and this association remained significant when OMA-unresponsive units were also considered (χ2 = 6.5, P = 0.011). Since grasping was the best-represented OMA (Fig. 3C and S2C), we next investigated the possible association between neuronal selectivity for observed and executed grasping. We found no evidence of an association between grip selectivity during the VMT and visual selectivity for observed grasping relative to other OMAs in the OT (χ2 = 2.75, P = 0.097; Table 3). Indeed, the proportion of grip-selective units was even smaller (though not significantly) among units with OMA selectivity for grasping (36%, 14/39) than among those preferring other OMAs (51%, 53/103).
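Both of the first and third reported χ2 values can be reproduced from the table counts with Pearson's test without continuity correction, provided that OMA-unresponsive units are excluded for Table 2 (the same subset that yields the 49.6% and 37.5% figures). The helper below is a sketch of that check; for 1 degree of freedom the upper-tail probability equals erfc(sqrt(χ2/2)), which avoids a statistics library.

```python
import math
from typing import Tuple


def chi2_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-square without continuity correction for [[a, b], [c, d]].

    Returns (chi2, p). With 1 degree of freedom, P(chi2_1 > x) equals
    erfc(sqrt(x / 2)), since chi2_1 is the square of a standard normal.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    return chi2, math.erfc(math.sqrt(chi2 / 2))


# Table 2, OMA-responsive units only.
# Rows: grip selective / nonselective; columns: OMA-selective / OMA-nonselective.
print(chi2_2x2(67, 68, 75, 125))  # about (4.86, 0.028)
# Table 3. Rows: grip selective / nonselective; columns: grasp / other OMAs.
print(chi2_2x2(14, 53, 25, 50))   # about (2.75, 0.097)
```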

Table 3.

Properties of OMA-selective units recorded during both VMT in the dark (reaching-grasping/pulling epoch) and OT (Epochs 1/2)

| | Observed manipulative action selectivity: Grasp | Observed manipulative action selectivity: Other OMAs | Total |
|---|---|---|---|
| Grip selective | 14 | 53 | 67 |
| Grip nonselective | 25 | 50 | 75 |
| Total | 39 | 103 | 142 |

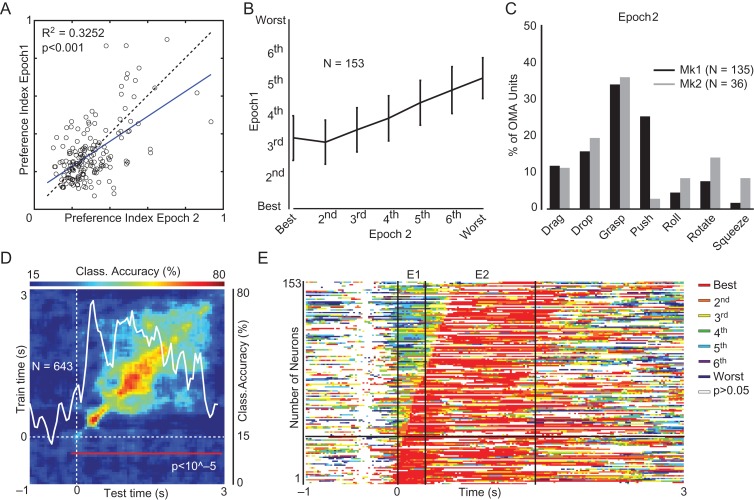

To address this issue further, we investigated the relationship between motor (grip type) and visual (OMA) selectivity as a function of time. Figure 4A shows that, relative to grip-nonselective units (red), a greater proportion of grip-selective units (blue) not only exhibited grip selectivity over time but also showed OMA selectivity in the later phase of video presentation (Fig. 4B), confirming the epoch-based contingency analysis. Nonetheless, this effect cannot be specifically accounted for by greater visual selectivity for grasping, since the proportion of grasping OMA-selective units (Fig. 4B) did not differ significantly between grip-selective (light blue) and nonselective (orange) units at any moment during video presentation (sliding χ2 test, P > 0.05 throughout the whole period).
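The Figure 4 legend states that only runs of at least 5 contiguous significant 20 ms bins are displayed. A minimal sketch of that run-length criterion, assuming the per-bin p-values have already been computed (the values in the example are invented), could look like this:

```python
from typing import List, Optional, Sequence, Tuple


def significant_runs(p_values: Sequence[float], alpha: float = 0.05,
                     min_run: int = 5) -> List[Tuple[int, int]]:
    """Return (first_bin, last_bin) of each run of >= min_run consecutive
    bins with p < alpha; shorter runs are discarded."""
    runs: List[Tuple[int, int]] = []
    start: Optional[int] = None
    # A trailing non-significant sentinel closes a run that reaches the end.
    for i, p in enumerate(list(p_values) + [1.0]):
        if p < alpha and start is None:
            start = i
        elif p >= alpha and start is not None:
            if i - start >= min_run:
                runs.append((start, i - 1))
            start = None
    return runs


# Invented p-values for 12 consecutive 20 ms bins.
p = [0.2, 0.01, 0.01, 0.01, 0.3] + [0.01] * 6 + [0.5]
print(significant_runs(p))  # [(5, 10)]: the 3-bin run is discarded
```

With 20 ms bins, a run (i, j) spans 20·i to 20·(j + 1) ms from the alignment event.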

Figure 4.

Relationship between motor selectivity for the grip type and visual selectivity for observed grasping actions. (A) Percentage of grip-selective (blue) and nonselective (red) units showing grip selectivity over time during the VMT performed in darkness. The dashed line below the plot indicates the time bins in which the relative number of tuned units was significantly different between the 2 subpopulations (sliding χ2 test performed on 20 ms bins, P < 0.05; only sets of at least 5 contiguous bins are shown). (B) Percentage of grip-selective (blue) and nonselective (red) units (same as in panel A) showing OMA selectivity over time. All conventions as in panel A. The additional curves indicate the percentage of units with specific selectivity for observed grasping (sliding χ2 tests, P < 0.05) among grip-selective (light blue) and nonselective (orange) units. (C, D) Heat maps of facilitation OMA-selective units with visual preference for grasping (C) or for OMAs other than grasping (D) during the OT. Units in the heat maps have been ordered (from bottom to top) according to the timing of their peak activity after video presentation onset (vertical dashed line in the panels on the right). In the left panels, the superimposed black lines represent the percentage of units of the entire subpopulation showing significant tuning for grip type (sliding window one-way ANOVA, bin size 200 ms, step 20 ms, P < 0.05 uncorrected). In the right panels, the colored curves on the heat maps indicate the percentage of units in the subpopulation displaying preference for a specific OMA exemplar (i.e., the exemplar with the highest activity value; see color code in the legend; sliding window one-way ANOVA with 7 levels of the factor “OMA,” bin size 200 ms, step 20 ms, P < 0.05 uncorrected). Further analyses on the same set of data are provided in Figure S4.
(E) Time course of the net normalized mean activity for each subpopulation illustrated in panels C and D during VMT (left) and OT (right). Shaded regions around each line represent 1 standard error.

In the analyses described so far, the relationship between observed and executed grasping was explored using motor selectivity as a criterion for grouping the units. We also investigated this relationship by grouping the units based on their selectivity for OMAs. Figure 4C shows, for units selective for observed grasping, the temporal activation pattern in both the VMT in the dark (left) and the OT (right). Figure 4D shows the same information for units selective for OMAs other than grasping. A sliding χ2 test (P < 0.05) carried out over the entire VMT period provided no evidence for a greater proportion of grip-selective units among those with visual selectivity for observed grasping relative to those with visual selectivity for other OMAs. Furthermore, there was no difference in the relative levels of visual (t = 0.42, P = 0.68) and motor (t = −0.77, P = 0.44) activity in the VMT between units with visual selectivity for grasping and those with selectivity for the other 6 OMA exemplars (Fig. 4E). Since nongrasp OMA exemplars can be subdivided into high- versus low-motion (reflecting the magnitude of dynamic body shape changes), we also compared the visuomotor properties of units with visual preference for either of these 2 categories (Fig. S4) but found no significant difference between them. The response pattern of OMA-selective suppression units tested in the VMT was radically different from that of OMA-selective facilitation units (Fig. S5): suppression units showed no significant modulation during grasping execution, suggesting that they were essentially visual in nature.

The present findings show that AIP neurons with grip-selective motor responses play a major role in encoding OMA identity. Although grasping was the most frequently represented OMA, we found no privileged association between motor and visual representations of grasping relative to other OMAs, suggesting that the convergence of visual and motor signals onto the same neurons in AIP is not grounded in a visuomotor congruence between the 2 formats of action exemplar representation.

Rostrocaudal Distribution of OMA Selectivity and Visuomotor Properties in AIP

Previous functional evidence obtained with static visual stimuli suggested that visual processing increases along a rostral-to-caudal gradient within area AIP (Durand et al. 2007; Baumann et al. 2009). Here, we compared the functional properties of the 3 populations of units located in the rostral, intermediate and caudal parts of area AIP of each monkey (see Fig. 1A and Materials and Methods), exploiting electrophysiological data collected with both the OT and the VMT. For this purpose, we computed PIs for each factor of interest (OMA selectivity, object visual selectivity, grip selectivity and HVF), using the same procedure for all factors (see Materials and Methods) and including all task-related units.
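The PI formula is defined in Materials and Methods and is not repeated in this section. As an illustration only, the sketch below assumes a normalized form widely used for such indices, in which a unit responding equally to all n conditions scores 0 and a unit responding to a single condition scores 1; the authors' exact definition may differ in detail.

```python
from typing import Sequence


def preference_index(responses: Sequence[float]) -> float:
    """Assumed form: PI = (n - sum(r_i / r_pref)) / (n - 1).

    r_i: response to condition i (e.g., mean net firing rate);
    r_pref: the largest r_i; n: number of conditions.
    PI = 0 when all conditions evoke the same response and PI = 1 when only
    one condition evokes a response. Assumes non-negative responses.
    """
    n = len(responses)
    if n < 2:
        raise ValueError("need at least 2 conditions")
    r_pref = max(responses)
    if r_pref <= 0:
        raise ValueError("need a positive maximum response")
    return (n - sum(r / r_pref for r in responses)) / (n - 1)


print(preference_index([10, 0, 0, 0, 0, 0, 0]))  # 1.0, e.g. 7 OMA exemplars
print(preference_index([5] * 7))                 # 0.0
print(preference_index([10, 5]))                 # 0.5, e.g. light vs. dark (HVF)
```

Because the same formula applies to any number of conditions, it allows factors with different numbers of levels (7 OMAs, 3 objects or grips, 2 HVF conditions) to be compared on a common 0 to 1 scale.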

Figure 5A illustrates OMA selectivity along AIP for the 2 monkeys. Caudal populations showed greater OMA selectivity than more rostral ones (F(2) = 4.78, P = 0.009). This rostrocaudal gradient in OMA coding was accompanied by a rostrocaudal increase in shape selectivity during the object presentation epoch of the VMT (Fig. 5B), although this effect did not reach significance (F(2) = 2.39, P = 0.09). In addition, grip selectivity was significantly greater at caudal sites during the reaching-grasping epoch, both in the dark (F(2) = 5.09, P = 0.006) and in the light (F(2) = 7.19, P = 0.0008) (Fig. 5C), but not during the object pulling epoch (dark, F(2) = 1.26, P = 0.28; light, F(2) = 0.81, P = 0.45). The stronger gradient observed during the VMT in the light may derive from input regarding the monkey’s own HVF during grasping. Indeed, HVF preference (Fig. 5D) was greater in neural activity recorded more caudally (F(2) = 26.01, P < 0.001). Notably, the proportion of AIP units showing combined grip and OMA selectivity also varied as a function of rostrocaudal position, from only 7% (6/90) at the rostral position up to 16% (37/238) and 15% (24/159) at the intermediate and caudal levels, respectively (χ2 = 4.68, P < 0.05). These findings demonstrate a rostrocaudal gradient in AIP for a variety of visual information related to own and others’ actions.
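The percentages above follow from the reported counts. The reported χ2 = 4.68 is reproduced by one particular 2 × 2 arrangement, rostral sites against the intermediate and caudal sites pooled, tested with Pearson's χ2 without continuity correction; this reconstruction is ours, since the exact test design is not described in this section.

```python
import math

# Units with combined grip and OMA selectivity / units tested at each level.
levels = {"rostral": (6, 90), "intermediate": (37, 238), "caudal": (24, 159)}
percent = {name: round(100 * k / n) for name, (k, n) in levels.items()}
print(percent)  # {'rostral': 7, 'intermediate': 16, 'caudal': 15}

# Assumed arrangement: rostral vs. intermediate + caudal pooled,
# Pearson chi-square without continuity correction (1 df).
a, b = 6, 90 - 6                           # rostral: combined / other
c, d = 37 + 24, (238 + 159) - (37 + 24)    # pooled: combined / other
n = a + b + c + d
chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
p = math.erfc(math.sqrt(chi2 / 2))
print(round(chi2, 2), p < 0.05)  # 4.68 True
```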

Figure 5.

Rostrocaudal differences in neural selectivity for OMA, visually presented object and grip type along AIP. (A) Preference index for OMA during OT. (B) Preference index for the visually presented object during go trials of the VMT. (C) Preference index for the grip type during reaching/grasping execution (left column) and during object pulling (right column) in the dark (top) and in the light (bottom). (D) Preference index for hand visual feedback (HVF, light vs. dark) calculated from reaching/grasping (left column) and object pulling (right column) responses. Each set of data has been analyzed by means of a 2 × 3 factorial ANOVA (factors: monkey, position), **P < 0.001 for the factor position.

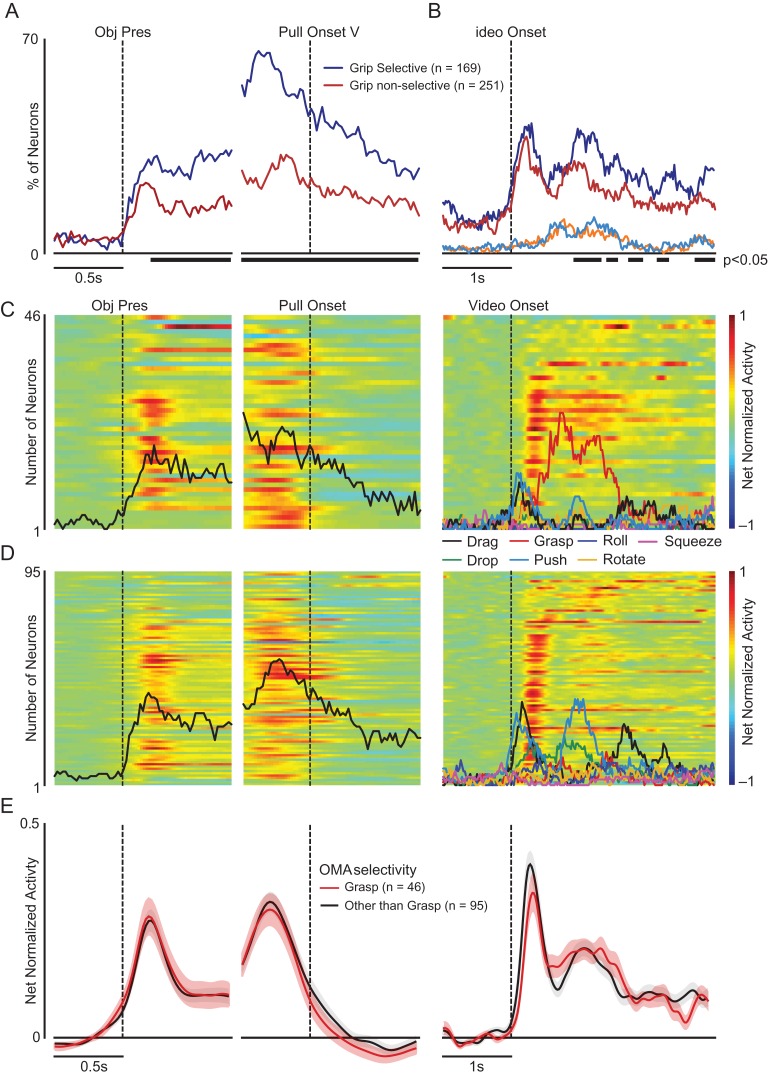

Rostrocaudal Connectivity of Functionally Characterized AIP Sites

To elucidate rostrocaudal changes in AIP connectivity, at the end of the neurophysiological experiments we injected 3 different neural tracers at the anteroposterior positions (see Materials and Methods and Fig. 6A) corresponding to the locations of the explanted probes (Fig. 1A). Consistent with previous studies (Lewis and Van Essen 2000; Nakamura et al. 2001; Borra et al. 2008), all the injections showed the connectivity pattern typical of AIP, which includes areas of the IPL, the IPS, the parietal operculum (PO), as well as different subdivisions of the premotor cortex (Fig. 6A). In particular, all the injected sites shared similar connectivity with the anatomical subdivisions of area F5, which is the best-established connectional hallmark of AIP (Borra et al. 2008). Nonetheless, we observed quantitative differences in specific connectivity patterns within AIP, depending on the position of the injected site along the intraparietal sulcus (Fig. 6B).

Figure 6.

Anatomical connectivity of the rostral, intermediate and caudal sectors of area AIP in the 2 monkeys. (A) Three-dimensional anatomical reconstructions illustrating the distribution of labeled cells after injections at the different AIP levels of Mk1 (top) and Mk2 (bottom). For each monkey, connectivity maps are presented for the rostral (left), intermediate (center) and caudal (right) injections. To facilitate the comparison, the maps of Mk1 (right hemisphere) were flipped and shown as a left hemisphere, and the mesial walls were shown as right hemispheres. The color scale indicates the relative density of labeled cells, counted within regions of 600 × 600 μm², and expressed as a percentage of the maximum value obtained within the cortical surface for any given injection. Cgs, cingulate sulcus. Other abbreviations as in Figure 1. (B) Graphical representation of the strength of the main (>1%) connections of the rostral, intermediate and caudal sectors of AIP (data from corresponding injection positions in the 2 monkeys have been merged). The width of the wedges indicates the percentage of labeled neurons following injections at the various anteroposterior positions (see scale on the left). Acronyms: rIPL, rostral inferior parietal lobule; cIPL, caudal inferior parietal lobule; cIPS, caudal intraparietal sulcus; Par.Op, parietal operculum; SPL, superior parietal lobule; mIPS, medial intraparietal sulcus; vIPS, ventral intraparietal sulcus.
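The normalization used for the density maps (counts per 600 × 600 μm² region, expressed as a percentage of the maximum) can be sketched in 2D. This simplified version assumes that cell positions have already been projected onto a flattened map in micrometres; it does not reproduce the surface-based procedure performed in CARET, and the coordinates in the example are invented.

```python
import numpy as np


def density_map(x_um: np.ndarray, y_um: np.ndarray,
                bin_um: float = 600.0) -> np.ndarray:
    """Count labeled cells in bin_um x bin_um squares of a flattened map and
    express each count as a percentage of the densest square."""
    def edges(v: np.ndarray) -> np.ndarray:
        # At least one bin, even if all cells share the same coordinate.
        n_bins = max(1, int(np.ceil((v.max() - v.min()) / bin_um)))
        return v.min() + bin_um * np.arange(n_bins + 1)

    counts, _, _ = np.histogram2d(x_um, y_um, bins=[edges(x_um), edges(y_um)])
    return 100.0 * counts / counts.max()


# Invented coordinates (micrometres) of 5 labeled cells.
x = np.array([0.0, 100.0, 700.0, 710.0, 720.0])
y = np.array([0.0, 50.0, 0.0, 10.0, 20.0])
print(density_map(x, y))  # 2 x 1 grid: about 66.7% and 100%
```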

The most caudal injections were characterized by stronger connections with temporal areas (PITd/PITv, TEa/TEm, and IPa/PGa), ventrolateral prefrontal areas 12r/46v and areas FEF/8A/45B, in addition to caudal parietal areas Opt and LIP. The intermediate injections, and even more so the rostral ones, exhibited weaker (or no) connectivity with these temporal and prefrontal regions. In contrast, the intermediate and even more so the rostral injections yielded strong connections with ventral premotor area F5p, inferior parietal areas PFG and PF, and the PO, as well as with areas PEip and MIP in the superior parietal lobule and the medial intraparietal sulcus (Fig. 6B).

To clarify the possible relationship between specific pathways and the information they may convey to the different AIP sectors, we calculated the relative percentage of retrograde labeling within 6 functional clusters of areas following tracer injections at the 3 rostrocaudal positions in each monkey. This analysis revealed 3 connectivity gradients (Fig. 7, Table S1 and Fig. S6) increasing in the rostrocaudal direction, involving 1) ventral visual areas, 2) oculomotor parietofrontal areas (particularly area LIP, see Table S1), and 3) prefrontal areas (particularly areas 46v/12r, see Table S1). In addition, we found an opposite, caudorostral gradient for a large set of sensorimotor, mainly parietal, regions processing somatosensory information. Connections with dorsal visual areas, including the MT cluster, CIP, PIP, and V6A, showed little or no gradient, being strongest for the intermediate AIP injection.
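The cluster analysis amounts to summing per-area labeling percentages within each functional cluster and inspecting the trend across injection positions. In the sketch below, the cluster membership is a hypothetical, partial assignment drawn from areas named above (the full assignment is listed in Figure 7), and the percentages are toy values.

```python
from typing import Dict, List, Sequence

# Hypothetical, partial cluster membership for illustration; the full list of
# areas in each of the 6 clusters is given under the histograms of Figure 7.
CLUSTERS: Dict[str, List[str]] = {
    "ventral visual": ["PITd", "PITv", "TEa", "TEm"],
    "prefrontal": ["46v", "12r"],
}


def cluster_totals(area_pct: Dict[str, float],
                   clusters: Dict[str, List[str]]) -> Dict[str, float]:
    """Sum per-area labeling percentages within each cluster (absent areas count as 0)."""
    return {name: sum(area_pct.get(area, 0.0) for area in areas)
            for name, areas in clusters.items()}


def classify_gradient(values: Sequence[float]) -> str:
    """Describe values ordered rostral -> intermediate -> caudal injection."""
    pairs = list(zip(values, values[1:]))
    if all(a < b for a, b in pairs):
        return "increasing rostrocaudally"
    if all(a > b for a, b in pairs):
        return "increasing caudorostrally"
    return "no monotonic gradient"


# Toy per-area percentages for one monkey's 3 injections (not real data).
injections = {
    "rostral": {"PITd": 0.1, "TEa": 0.2, "46v": 0.5},
    "intermediate": {"PITd": 0.5, "TEa": 0.6, "46v": 0.4, "12r": 0.8},
    "caudal": {"PITd": 1.5, "TEm": 1.0, "46v": 1.2, "12r": 0.9},
}
totals = [cluster_totals(injections[pos], CLUSTERS)
          for pos in ("rostral", "intermediate", "caudal")]
for cluster in CLUSTERS:
    print(cluster, classify_gradient([t[cluster] for t in totals]))
# ventral visual increasing rostrocaudally
# prefrontal increasing rostrocaudally
```

A profile peaking at the intermediate injection, as described for the dorsal visual cluster, falls into the "no monotonic gradient" case.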

Figure 7.

Rostrocaudal gradients in AIP anatomical connectivity. Each bar represents the percentage of labeled cells observed in the various functional territories. Each territory is defined based on functional similarities of the labeled areas. The areas included in each cluster are listed under the histograms. The bars in each cluster are arranged in a rostral to caudal fashion relative to the location of the injection sites. Acronyms as in Figures 1 and 6.

Discussion

In this study we show that, as predicted, AIP neurons encode the identity of specific OMAs, whether it is conveyed by mostly static (body posture) or dynamic (body motion) information. Visual selectivity for OMA identity was stronger in pAIP, where it was associated with motor selectivity for the grip type as well as with high sensitivity to visual feedback of the monkey’s own hand during grasping execution. The rostrocaudal increase in preference for the visual encoding of manual actions of self and others parallels a rostrocaudal increase in anatomical connectivity with temporal areas of the ventral visual stream, oculomotor regions and the prefrontal cortex, which may provide visual and contextual information relevant for manipulative action processing. These results revise current models of the action observation network in the macaque, indicating that pAIP constitutes a parietal hub for routing information about OMA identity to the other parietal, premotor and prefrontal nodes of the network (Bonini 2017; Rozzi and Fogassi 2017).

AIP Neurons Encode a Variety of OMAs

Previous single-neuron studies reported that 23% (Maeda et al. 2015) to 59% (Pani et al. 2014) of AIP neurons respond to observed grasping actions. Here we found much greater percentages of units exhibiting facilitated or suppressed responses to OMAs, both among single units (94%) and among all units, single- and multiunits pooled (71%), and over a quarter of all recorded units displayed selectivity for the various exemplars. Importantly, our chronic recording approach is unbiased, as it excludes any preselection of the recorded neurons, making these percentages less prone to sampling bias than estimates based on preselected neurons. The discrepancies with the 2 previous studies may be reconciled by considering that both focused only on the rostral half of area AIP and investigated a single OMA exemplar (grasping).

Different OMA exemplars are primarily characterized by specific patterns of body-shape changes. However, the distinctive static body postures of the actor before action onset allow, in many cases, prediction of the action that will be observed (Theusner et al. 2014), even if the prediction may not be particularly accurate (Platonov and Orban 2016). Interestingly, a general feature of AIP neurons evidenced in the present study is that they exhibit tuning for specific OMAs both during the initial epoch, dominated by the actor’s static body posture at video onset, and during the subsequent epoch, in which the pattern of body-shape changes characterizes each OMA. The fact that OMA selectivity was quantitatively (i.e., magnitude of preference) and qualitatively (i.e., action exemplar) similar between the 2 epochs indicates that AIP neurons can identify a given OMA regardless of the type of (static or dynamic) visual information available and independently of the magnitude of the body-shape changes. Indeed, OMA identity can be accurately decoded from AIP neuronal population activity during the entire video presentation period. Notably, the AIP population code appears to be mostly dynamic (Mendoza-Halliday and Martinez-Trujillo 2017; Meyers 2018), suggesting that distinct AIP neurons provide specific contributions to the representation of OMA exemplars at distinct periods in time. This does not mean, however, that single-neuron tuning for OMAs changes randomly over time. Indeed, we showed that the OMA preference of selective units displays a remarkable temporal stability, despite changes in the magnitude of neuronal activity, suggesting that OMA representation emerges dynamically at the population level from individual units with a relatively stable code for exemplar identity.

In spite of the neuronal coverage of all OMA exemplars, a greater number of units showed selectivity for the exemplars characterized by large changes in body shape. Among them, grasping was by far the most widely represented in both animals, although it was not the exemplar characterized by the largest body motion and all 7 OMAs were evenly sampled. The overrepresentation of observed grasping is consistent with its crucial ethological role in primates (Hashimoto et al. 2013; Graziano 2016; Tia et al. 2017). Furthermore, the complexity of its biomechanical control (Grafton 2010) and the extent of its motor representation in the cerebral cortex (Filimon 2010; Nelissen and Vanduffel 2011; Baldwin et al. 2017) may require devoting a large number of neurons even to its visual processing. Indeed, previous studies have shown that distinct sets of parietal (Fogassi et al. 2005; Maeda et al. 2015) and premotor (Caggiano et al. 2009; Bonini et al. 2010; Papadourakis and Raos 2017; Mazurek et al. 2018) neurons represent specific types of observed grasps as well as the specific context in which the observed grasp is embedded. Thus, the finer and more articulated granularity of the neural representation of grasping relative to other manual actions may explain its overrepresentation.

Because the visual representation of grasping predominates in AIP, one might expect its motor and visual formats to converge at the single neuron level. Even if grasping was the only manipulative action tested with the motor task, grip selective neurons should exhibit selectivity for observed grasping more frequently than for other OMAs. In contrast, we found the motor specificity for grasping in AIP neurons preferentially associated with visual selectivity for the OMAs other than grasping. A possible interpretation of this lack of congruence at the exemplar level is that the neuronal population investigated may be involved not only in the motor planning of grasping, but also of other manipulative actions. Indeed, a recent study using long-train intracortical microstimulation (Baldwin et al. 2017) suggested that the IPL, including AIP, hosts the neural substrates underlying a variety of ethologically relevant hand and digit movements beyond grasping, consistent with previous proposals (Tunik et al. 2007). Intriguingly, our evidence demonstrating OMA selectivity in AIP suggests that object size and shape should no longer be considered the only visual information utilized for action planning in AIP. Indeed, the observed actions of others may also play a role in the selection and planning of appropriate manipulative actions, especially in social contexts, a view supported by a recent evidence that AIP is a crucial component of the cortical network underlying the visual processing of social interactions (Sliwa and Freiwald 2017). Finally, a recent study on ventral and dorsal premotor mirror neurons demonstrated that in both these nodes of the cortical action observation network the probability to get strict visuomotor congruence between motor and visual representations of grip type in single neurons was at chance level (Papadourakis and Raos 2018). 
Although in the execution mode we could not test the same variety of actions as in the observation mode, the present and previous (Papadourakis and Raos 2018) findings suggest the following. In most areas of the action observation network (Bonini 2017; Bruni et al. 2018; Fiave et al. 2018), the encoding of executed and observed actions recruits largely overlapping sets of neurons. These neurons may nonetheless specify highly distinct variants of the encoded features when switching between the visual and motor modes of action representation.

The neural machinery underlying observed action processing revealed by the present study also suggests that area AIP should prove an ideal focus for future investigations into the neural basis of monkeys’ ability to discriminate and categorize hand actions (Nelissen and Vanduffel 2017), as previously done for 3D shapes (Verhoef et al. 2015). Interestingly, this function may also benefit from the activity of OMA-selective suppression units. Unlike facilitation units, these units did not respond during grasping in the dark but did respond during grasping in the light. This suggests that, despite their essentially visual nature, they differentiate the visual feedback from the subject’s own hand during active grasping from others’ observed manual actions.

Rostrocaudal Anatomofunctional Gradients Within AIP

Beyond demonstrating the role played by AIP in the neural representation of OMAs, one of the most important contributions of our study is the finding that visual selectivity for observed actions prevails in the caudal portion of the area. There it is associated with stronger visuomotor selectivity for grip type and, most interestingly, with stronger tuning for the visual feedback of the monkey’s own hand during active grasping. These findings are consistent with previous AIP studies indicating a rostral-to-caudal increase in selectivity for visual features such as object shape and orientation (Durand et al. 2007; Baumann et al. 2009). In our study, object selectivity exhibited the same rostrocaudal trend, although it did not reach significance. This is probably because we used only 3 objects, all grasped by the monkey with the same wrist orientation (pronation). Furthermore, previous single-neuron studies of AIP reported responses to the visual image of the monkey’s own hand during grasping (Sakata et al. 1995; Maeda et al. 2015). Here we provide the first evidence that this effect is more marked in the caudal part of the area, where it is combined with increased sensitivity to OMAs. Our findings therefore suggest that pAIP is more specifically committed to processing visual information about self and others’ manipulative actions.

Importantly, we were able to directly match two sets of findings obtained in the same animals. On the neurophysiological side, pAIP neurons are tuned to self and others’ observed actions. On the neuroanatomical side, there are 3 connectivity gradients that increase from rostral to caudal. Compared with the intermediate and rostral levels of AIP, pAIP displays stronger connections with the following regions:

1) a set of visual areas of the ventral stream that convey information about object features (Sary et al. 1993; Logothetis et al. 1995; Saleem and Tanaka 1996; Koteles et al. 2008; Hong et al. 2016) and observed actions (Perrett et al. 1989; Nelissen et al. 2011), in particular the dynamic body shape changes defining the action (Vangeneugden et al. 2009);

2) prefrontal cortical areas, including the visually recipient areas 12r and 46v (Borra et al. 2011; Gerbella et al. 2013), which are involved in manual action planning (Bruni et al. 2015; Simone et al. 2015) and observation (Raos and Savaki 2017; Simone et al. 2017; Fiave et al. 2018);

3) oculomotor regions, including area LIP, which may drive spatial attention processes aimed at proactively capturing the goals and targets of others’ observed actions (Flanagan and Johansson 2003; Falck-Ytter et al. 2006; Elsner et al. 2013; Maranesi et al. 2013; Lanzilotto et al. 2017).

Two further observations underscore the specificity of this anatomofunctional association. First, there is no gradient in the connections with dorsal visual and skeletomotor-related areas. Second, the connections with a large set of mainly parietal somatosensory regions show a reversed gradient, increasing from caudal to rostral, consistent with previous studies (Lewis and Van Essen 2000; Borra et al. 2008; Baumann et al. 2009). These somatosensory connections provide the rostral sector of AIP with rich information about the state of the body parts (Buneo and Andersen 2012). They also convey the relationship between body state and objects in the outside world, which is needed for updating action planning and control (Tunik et al. 2007; Borra et al. 2017; Gerbella et al. 2017). Of course, all these connectional patterns may also subserve several other functions not directly linked with observed action processing. These include 3D-shape processing (Taira et al. 2000; Durand et al. 2007; Rosenberg et al. 2013), eye-hand coordination (Lehmann and Scherberger 2013; Borra et al. 2014), and spatial attention toward possible targets of planned actions (Bisley and Goldberg 2010). These additional functions do not, however, detract from the present evidence indicating that pAIP plays a major role in OMA coding.

Conclusions

The well-established visuomotor properties and the previously described functional gradients in AIP have supported the view of a gradual transformation of visual shape information into motor signals underlying the planning and execution of grasping actions (Murata et al. 2000; Janssen and Scherberger 2015). Our results suggest that a similar mechanism may link the motor representations of a variety of manipulative actions with visual and contextual information about others’ observed actions, with pAIP acting as a hub in this process. Exploring the variety of manual actions in the monkeys’ behavioral repertoire may require recording neuronal activity during unconstrained behaviors in individual and social contexts. Our results underscore the need for such studies, which would use wireless recording techniques to explore the neuronal correlates of behavior in freely moving monkeys. These studies would also remove the biases introduced into the literature by the focus on a very limited set of actions. They may also provide single-neuron evidence for “social affordance” (Loveland 1991). Just as observed objects trigger a variety of motor affordances depending on their physical properties (Maranesi et al. 2014), others’ observed actions may trigger different possible reactive actions in the observer’s brain depending on the social context.

Supplementary Material

Funding

This work was supported by the European Union’s framework program FP7/2007-2013 under grant agreement 600925 to G.A.O. and by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme under grant agreement 678307 to L.B.

Notes

Conflict of Interest: None declared.

References

- Abdollahi RO, Jastorff J, Orban GA. 2013. Common and segregated processing of observed actions in human SPL. Cereb Cortex. 23(11):2734–2753.

- Bakola S, Gamberini M, Passarelli L, Fattori P, Galletti C. 2010. Cortical connections of parietal field PEc in the macaque: linking vision and somatic sensation for the control of limb action. Cereb Cortex. 20(11):2592–2604.

- Baldwin MKL, Cooke DF, Goldring AB, Krubitzer L. 2017. Representations of fine digit movements in posterior and anterior parietal cortex revealed using long-train intracortical microstimulation in macaque monkeys. Cereb Cortex. 9:1–20.

- Barz F, Livi A, Lanzilotto M, Maranesi M, Bonini L, Paul O, Ruther P. 2017. Versatile, modular 3D microelectrode arrays for neuronal ensemble recordings: from design to fabrication, assembly, and functional validation in non-human primates. J Neural Eng. 14(3):1741–2552.

- Barz F, Paul O, Ruther P. 2014. Modular assembly concept for 3D neural probe prototypes offering high freedom of design and alignment precision. Conf Proc IEEE Eng Med Biol Soc. 80(10):6944495.

- Baumann MA, Fluet MC, Scherberger H. 2009. Context-specific grasp movement representation in the macaque anterior intraparietal area. J Neurosci. 29(20):6436–6448.

- Belmalih A, Borra E, Contini M, Gerbella M, Rozzi S, Luppino G. 2007. A multiarchitectonic approach for the definition of functionally distinct areas and domains in the monkey frontal lobe. J Anat. 211(2):199–211.

- Bisley JW, Goldberg ME. 2010. Attention, intention, and priority in the parietal lobe. Annu Rev Neurosci. 33:1–21.

- Blatt GJ, Andersen RA, Stoner GR. 1990. Visual receptive field organization and cortico-cortical connections of the lateral intraparietal area (area LIP) in the macaque. J Comp Neurol. 299(4):421–445.

- Bonini L. 2017. The extended mirror neuron network: anatomy, origin, and functions. Neuroscientist. 23(1):56–67.

- Bonini L, Maranesi M, Livi A, Bruni S, Fogassi L, Holzhammer T, Paul O, Ruther P. 2014. Application of floating silicon-based linear multielectrode arrays for acute recording of single neuron activity in awake behaving monkeys. Biomed Tech. 59(4):273–281.

- Bonini L, Maranesi M, Livi A, Fogassi L, Rizzolatti G. 2014a. Space-dependent representation of objects and other’s action in monkey ventral premotor grasping neurons. J Neurosci. 34(11):4108–4119.

- Bonini L, Maranesi M, Livi A, Fogassi L, Rizzolatti G. 2014b. Ventral premotor neurons encoding representations of action during self and others’ inaction. Curr Biol. 24(14):1611–1614.

- Bonini L, Rozzi S, Serventi FU, Simone L, Ferrari PF, Fogassi L. 2010. Ventral premotor and inferior parietal cortices make distinct contribution to action organization and intention understanding. Cereb Cortex. 20(6):1372–1385.

- Borra E, Belmalih A, Calzavara R, Gerbella M, Murata A, Rozzi S, Luppino G. 2008. Cortical connections of the macaque anterior intraparietal (AIP) area. Cereb Cortex. 18(5):1094–1111.

- Borra E, Gerbella M, Rozzi S, Luppino G. 2017. The macaque lateral grasping network: a neural substrate for generating purposeful hand actions. Neurosci Biobehav Rev. 75:65–90.

- Borra E, Gerbella M, Rozzi S, Luppino G. 2011. Anatomical evidence for the involvement of the macaque ventrolateral prefrontal area 12r in controlling goal-directed actions. J Neurosci. 31(34):12351–12363.

- Borra E, Gerbella M, Rozzi S, Tonelli S, Luppino G. 2014. Projections to the superior colliculus from inferior parietal, ventral premotor, and ventrolateral prefrontal areas involved in controlling goal-directed hand actions in the macaque. Cereb Cortex. 24(4):1054–1065.

- Boussaoud D, Ungerleider LG, Desimone R. 1990. Pathways for motion analysis: cortical connections of the medial superior temporal and fundus of the superior temporal visual areas in the macaque. J Comp Neurol. 296(3):462–495.

- Bruni S, Gerbella M, Bonini L, Borra E, Coude G, Ferrari PF, Fogassi L, Maranesi M, Roda F, Simone L, et al. 2018. Cortical and subcortical connections of parietal and premotor nodes of the monkey hand mirror neuron network. Brain Struct Funct. 223(4):1713–1729.

- Bruni S, Giorgetti V, Bonini L, Fogassi L. 2015. Processing and integration of contextual information in monkey ventrolateral prefrontal neurons during selection and execution of goal-directed manipulative actions. J Neurosci. 35(34):11877–11890.

- Bruni S, Giorgetti V, Fogassi L, Bonini L. 2017. Multimodal encoding of goal-directed actions in monkey ventral premotor grasping neurons. Cereb Cortex. 27(1):522–533.

- Buneo CA, Andersen RA. 2012. Integration of target and hand position signals in the posterior parietal cortex: effects of workspace and hand vision. J Neurophysiol. 108(1):187–199.

- Caggiano V, Fogassi L, Rizzolatti G, Thier P, Casile A. 2009. Mirror neurons differentially encode the peripersonal and extrapersonal space of monkeys. Science. 324(5925):403–406.

- Carmichael ST, Price JL. 1994. Architectonic subdivision of the orbital and medial prefrontal cortex in the macaque monkey. J Comp Neurol. 346(3):366–402.

- Chung JE, Magland JF, Barnett AH, Tolosa VM, Tooker AC, Lee KY, Shah KG, Felix SH, Frank LM, Greengard LF. 2017. A fully automated approach to spike sorting. Neuron. 95(6):1381–1394.

- Corbo D, Orban GA. 2017. Observing others speak or sing activates spt and neighboring parietal cortex. J Cogn Neurosci. 29(6):1002–1021.

- Crowe DA, Averbeck BB, Chafee MV. 2010. Rapid sequences of population activity patterns dynamically encode task-critical spatial information in parietal cortex. J Neurosci. 30(35):11640–11653.