Abstract

Mental disorders often emerge in adolescence and young adulthood, and these disorders can have lasting effects on students’ health, social functioning, and education. Although evidence-based treatments have been established for many mental disorders, few community therapists use such treatments. What is needed is a practical, economically feasible means of training clinicians to implement evidence-based treatments suitable for widespread use. This cluster randomized trial will randomize 26 college counseling centers to one of two implementation strategies for training counselors to use interpersonal psychotherapy (IPT), an evidence-based treatment for depression and eating disorders: 1) an external expert consultation model comprising a workshop, therapy manual, and expert follow-up consultation (n = 13); or 2) a train-the-trainer model in which a staff member from the counseling center is coached to train other staff members to implement IPT (n = 13). The primary outcome is therapist adherence to IPT, with secondary outcomes of therapist competence in IPT and client outcomes for depression and eating disorders. Therapist and organizational characteristics will be explored as potential moderators and mediators of implementation outcomes. Implementation costs for each of the training methods will also be assessed. The present study involves partnering with college counseling centers to determine the most effective method to implement IPT for depression and eating disorders in these settings. The results of this study will inform future large-scale dissemination of clinical interventions to mental health service providers by providing evidence for the selection of training methods when an agency chooses to adopt new interventions.

Keywords: college mental health, evidence-based treatment, dissemination, implementation, interpersonal psychotherapy, training

Introduction

When individuals with mental disorders receive care, it is typically not an evidence-based treatment (EBT) [1–3]. For example, in the National Comorbidity Survey-Replication, only 32.7% of respondents with mental disorders were classified as receiving at least minimally adequate treatment based on evidence-based guidelines [4]. Furthermore, even when clinicians say they are using an EBT, they omit crucial elements [5].

As stated by Insel [6], “We have powerful, evidence-based psychosocial interventions, but they are not widely available…A serious deficit exists in training for evidence-based psychosocial interventions.” Indeed, Cook et al. [7] identified “training issues” (e.g., lack of local training and ongoing supervision) as the major reason for the lack of dissemination and implementation of new treatments. What is needed is a practical, economically feasible means of training clinicians to implement EBTs. This paper describes the design of a study that aims to address this need by comparing two strategies for training therapists to implement an EBT in college counseling centers.

College counseling centers are an ideal setting in which to study implementation of EBTs because mental disorders often emerge in adolescence and young adulthood; indeed, three quarters of lifetime mental disorders emerge by age 24 [8]. These disorders can have lasting effects on students’ health, social functioning, and education [9–13]; yet, only 16–36% of students with mental disorders receive treatment [14, 15].

In this study, we selected interpersonal psychotherapy (IPT) as the EBT to disseminate for several reasons. First, IPT is an EBT for at least two of the most common mental health problems seen by college counseling centers—depression and eating disorders (EDs) [16–20]. As a transdiagnostic treatment, IPT offers clinical range, which may enhance adoption [21]. Second, IPT is readily acceptable to therapists and clients [22]. Third, mental disorders in young people are often marked by interpersonal problems [23–26], making IPT particularly appropriate [22].

Current approaches to training therapists typically consist of a two-day workshop delivered by an expert and provision of a manual [27]. Although workshops increase therapists’ knowledge, their impact on skills is short-lived without further consultation [28–31]. Alternatively, there is a strong theoretical case for the “train-the-trainer” approach, which centers on developing an internal coach and champion who subsequently trains therapists in the setting; this approach has been recommended as the most effective means of changing actual therapist behavior [27].

Much of the past research on the train-the-trainer approach relied on methodologically flawed pilot studies (e.g., limited power, absence of comparison groups) [28, 32], although more rigorous studies have begun to emerge. For instance, Martino et al. [33] found that expert-led and train-the-trainer strategies, in comparison to self-study, improved community mental health center clinicians’ adherence and competence for motivational interviewing. While there is emerging evidence supporting the train-the-trainer approach, this approach still needs to be tested specifically for implementing EBTs for mental disorders on college campuses. Data on the cost-effectiveness of training methods for EBTs are also lacking, as are data on predictors, moderators, and mediators of implementation outcomes.

Methods and Design

Specific Aims

The aim of the current study is to compare the implementation outcomes of two methods of training therapists to treat mental disorders, namely depression and EDs, on college campuses using IPT.

We have the following specific aims:

To compare the effectiveness of two methods to train therapists to implement IPT for the treatment of depression and EDs in college counseling centers: 1) an external expert consultation model comprising a workshop, therapy manual, and 12 months of expert follow-up consultation—a “low intensity” method; and 2) a train-the-trainer model in which a staff member from the counseling center is coached to train other staff members to implement IPT—a “high intensity” method. These inside, or on-site, experts will also be trained to implement quality-control procedures to ensure treatment fidelity and enhance sustainability. The primary outcome will be therapist adherence to IPT assessed by auditing audio recordings of selected therapy sessions. The secondary outcomes will be therapist competence in IPT (assessed by auditing audio recordings) and client outcomes, which are the ultimate goal of effective implementation [27, 34, 35].

To compare the incremental cost-effectiveness ratios of the two training methods in exploratory analyses.

To examine predictors, moderators, and mediators of implementation outcomes in exploratory analyses.

Experimental Design

Using a cluster randomized design, counseling centers (N = 26), with approximately 230 counselors, will be randomly allocated to one of the two implementation methods using a computer-generated randomization sequence implemented by study statisticians: 1) the external expert consultation condition; or 2) the train-the-trainer condition. Randomization of sites to conditions will take place after sites agree to participate in the study and will take into account the ratio of students to counselors at each site so that the two conditions are balanced on this variable. Efforts will also be made to recruit a variety of colleges and universities (e.g., private and public, small and large) to support the generalizability of study results. We chose a cluster randomized design, with randomization occurring at the college counseling center level, because we plan to train therapists in IPT within their already established groups and want to prevent contamination across treatment arms. Colleges will be eligible to participate if they have a student counseling center, at least three therapists interested in consenting into the study, and a center staff member in a leadership position who agrees to serve as the director for the study.
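The protocol does not name the algorithm used to balance the arms on the student-to-counselor ratio. As an illustration only, the sketch below assumes a matched-pair scheme: sites are sorted by ratio, adjacent sites are paired, and a random draw within each pair decides the arm. The function name, site labels, ratios, and seed are all hypothetical.

```python
import random


def randomize_sites(ratios, seed=0):
    """Assign sites to two arms, balancing on student-to-counselor ratio.

    ratios: dict mapping site id -> students per counselor (made-up data).
    Sites are sorted by ratio and paired; within each pair a random shuffle
    decides which site goes to which arm. Assumes an even number of sites.
    """
    rng = random.Random(seed)  # fixed seed keeps the sequence reproducible
    ordered = sorted(ratios, key=ratios.get)
    allocation = {}
    for i in range(0, len(ordered), 2):
        pair = [ordered[i], ordered[i + 1]]
        rng.shuffle(pair)
        allocation[pair[0]] = "external_expert"
        allocation[pair[1]] = "train_the_trainer"
    return allocation


# Illustrative input: 26 invented sites with invented ratios.
example_ratios = {f"site_{k:02d}": 800 + 75 * k for k in range(26)}
allocation = randomize_sites(example_ratios, seed=2024)
```

Pairing guarantees 13 sites per arm and keeps the two arms close on the balancing variable; other schemes (e.g., stratified blocks or minimization) would serve the same purpose.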

Each participating therapist (eligible if they see students at the counseling center at least 25% of the time), consented by study staff, will be asked to consent up to two student clients (eligible if they are 18 years or older and presented to the counseling center with symptoms of either depression or EDs [excluding symptoms of anorexia nervosa]) during each of the three study phases, which is reasonable given the high frequency of depression and EDs in college populations [36, 37]. We note that the therapist will decide whether the client meets criteria for depression or EDs (excluding anorexia nervosa). The three study phases are baseline, post-training, and sustainability. The sustainability phase is defined as the period after expert consultation (with therapists in the case of the external expert consultation condition and with trainers in the case of the train-the-trainer condition) has ceased. The primary outcome will be therapist adherence to IPT, determined by auditing audio recordings of selected therapy sessions. Of note, therapists will not be blinded to study condition given the nature of the study. Students will be blinded to study condition.

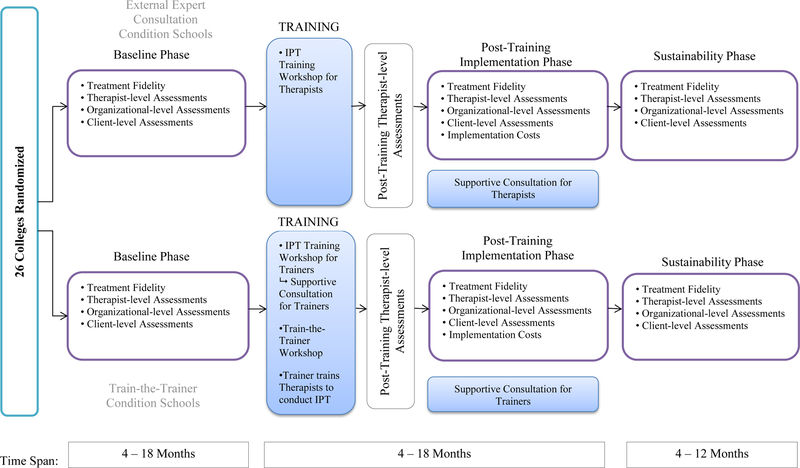

At each site randomized to the train-the-trainer condition, one trainer will be selected by the staff member consented into the study’s director role at that counseling center (i.e., either the actual director of the counseling center or an individual with some leadership role at the center). The selected trainers (N = 13) will be judged by consented directors to have the following characteristics: interest in the project; competency as a therapist and supervisor; and a stable position within the service setting. Based on general rates of utilization of care and prevalence of depression and EDs, it is expected that each therapist will carry at any one time approximately 8–11 patients for whom IPT may be appropriate. However, patients will not be randomized to treatment conditions, and therapists, regardless of training condition to which their site is randomized, will not be required to use IPT since they will be expected to provide whatever form of care they deem in the best interests of the patients who present for treatment. For these reasons, targeted enrollment estimates are conducted at the therapist level for this cluster randomized research design. Notably, while the therapists are the primary participants of interest in the current study, we will also collect organization-level data from consented directors (N = 26) and data on the participating clients of the study therapists. See Figure 1 for an overview of the experimental design and sources of data.

Figure 1.

Study design and data collection components

Integration of study procedures into clinic functioning.

Prior to participation in the first training workshop, a member of the study team will survey the consented director of each counseling center to determine what resources are available for screening, assessing, tracking, and evaluating students presenting for treatment of depression and EDs. The study team will work to integrate study procedures within participating centers to minimize disruption to current operating procedures.

Training Conditions

External Expert Consultation

IPT training workshops.

We will provide a two-day workshop on IPT at each site randomized to this condition to train therapists to become competent in administering the treatment. These workshops will be conducted by a study team member who is an expert in IPT training. Two weeks prior to the workshop, therapists will be asked to review a copy of the IPT treatment manual. The workshops will comprise: (a) a detailed, interactive review of key principles and procedures of IPT using PowerPoint slides; and (b) role-plays and case examples to demonstrate the various treatment phases. Therapists will be given copies of the slides.

Supportive consultation for therapists.

Therapists at each counseling site will have the opportunity to engage in a one-hour phone call every month to receive guidance on their implementation of IPT from the study team member who conducted the IPT training. These calls will be scheduled at times that are most convenient for the counseling sites (e.g., lunch hour, early morning). This phase will span up to 12 months post-workshop for each site.

Train-the-Trainer

In contrast to the external expert consultation strategy, with its focus on consultation from outside experts (both in the workshop and subsequent consultation calls), the focus of the train-the-trainer strategy is on building expertise capacity in the use of IPT within the organization. This approach, which is based on social cognitive learning theory [38], features active learning via modeling, feedback on performance, building self-efficacy, and supportive interactions among therapists learning the treatment. Indeed, the goal of the train-the-trainer strategy is to teach the selected trainers to provide active, ongoing involvement in instruction of IPT, consultation on the use of IPT, and quality control within their centers. This on-site “internal expert” serves as a role model or “champion” [39, 40], provides guidance in the use of IPT when necessary, oversees the quality of IPT, and encourages accountability for competent implementation of IPT to the extent that this is feasible. On-site trainers will be able to work with their counseling center directors and colleagues from within to change the site’s culture regarding the treatment of depression and EDs specifically.

Selected trainers in the train-the-trainer condition will attend, as a group, two separate workshops at one of the study team sites; the first two-day workshop will be identical in content to the workshop provided in the external expert consultation condition and is designed to teach participants to conduct IPT. The second workshop will provide the participants in the train-the-trainer condition with specific training in how to train others in the use of IPT. This cross-site training process for the train-the-trainer condition is intended to begin building an “esprit de corps” among the trainers. It is anticipated that this form of peer support from colleagues across the country will facilitate implementation of IPT over the long-term.

Supportive consultation for trainers.

Following participation in the first, two-day workshop, each trainer will return to their site and be encouraged to treat up to two cases with IPT, audio recording each session. The study team member who conducted the IPT training will review a selection of the recorded sessions from each case treated by the trainers and will provide feedback regarding treatment quality. This procedure allows the expert IPT trainer to model the provision of feedback on treatment quality for the trainers in the high-intensity condition, preparing them for providing feedback on implementation efforts to their trainees following their participation in the second workshop.

Train-the-trainer workshop.

The goal of this second workshop is to prepare therapists to train other therapists at their sites in IPT and to manage the quality-control procedures as modeled by the study team member following the first workshop. The emphasis in this second workshop will be on teaching participants how to engage therapists at their center in the learning and use of IPT. The workshop will also focus on the rationale for and the process of utilizing quality-control methods (see below) in the implementation of IPT with their own trainees, as well as problem solving potential barriers to conducting the training and providing ongoing consultation. Trainers will also be provided with video-recorded role-plays demonstrating the various treatment phases for use in conducting training with their colleagues, if they so choose. This phase, including the two workshops, and the trainer’s practice treating up to two cases and receiving feedback, will span approximately six months. Trainers in the train-the-trainer condition will then be encouraged to train other therapists at their sites.

On-site quality-control procedures.

Once trainers have trained their colleagues in the use of IPT, they will be encouraged to meet weekly with their trainee colleagues for one-hour group consultations to monitor treatment quality, provide specific feedback, and track client progress. In addition, trainers will be encouraged to provide their trainees with checklists and forms designed to facilitate the use of IPT with their clients.

Peer support for trainers.

Trainers will be encouraged to join monthly group implementation review calls with participants from other sites, which will be arranged and facilitated by study staff. This system of peer support will empower the participants in the train-the-trainer condition, enhancing their self-efficacy and sense of cohesion.

See Table 1 for comparison of the two implementation strategies.

Table 1.

Components of different training methods

| Components | External Expert Consultation Method | Train-the-Trainer Method |
|---|---|---|
| Increased knowledge of IPT | X | X |
| Practice of IPT with external consultation | X | X |
| Practice of IPT with internal consultation | | X |
| Internal quality control | | X |
| Peer support | | X |

Note. IPT = interpersonal psychotherapy.

During the final 12 months of the study, supportive consultation with the study team will be discontinued (consultation calls with therapists in the case of the external expert consultation method and facilitation of peer support calls with trainers in the case of the train-the-trainer method), and participants across all sites will be encouraged to continue to utilize IPT. Trainers in the train-the-trainer condition will be encouraged to continue to provide on-site consultation to their trainees for quality-control purposes.

Measures

Treatment fidelity comprises both doing the right thing (adherence) and doing it well (competence). The primary implementation outcome will be therapist adherence to IPT. Secondary outcomes will be therapist competence in IPT and change in depressive and ED symptoms for the sample of clients treated. Treatment fidelity and client outcomes will be assessed at three intervals: baseline; during the post-workshop implementation phase (when the two conditions are fully implemented); and during the sustainability phase (once study team involvement with the sites has ended). The length of these assessment intervals may vary by site depending on data collection rates but will likely span anywhere from 4 to 18 months (with the exception that the sustainability phase will only last up to 12 months). See Table 2 for a list of assessments and time points. All assessments will be web-based self-report surveys apart from the assessment of treatment fidelity in delivering IPT, which will be assessed by rating audio recordings (see more information below). Additionally, after consented directors complete web-based self-report surveys regarding implementation costs, telephone interviews will be conducted to verify their responses and ensure that all costs related to implementation have been captured.

Table 2.

Assessments and time points

| Assessment | Baseline | Immediately Post-Training | Post-Training Implementation Phase | Sustainability Phase |
|---|---|---|---|---|
| TREATMENT FIDELITY | | | | |
| Adherence to IPT | X | X | X | |
| Competence in IPT | X | X | X | |
| THERAPIST LEVEL | | | | |
| Therapist characteristics | X | | | |
| Therapist acceptance of evidence-based treatments | X | X | X | |
| Therapist acceptance of IPT | X | X | X | |
| Therapist knowledge of IPT | X | X | X | |
| Uptake of IPT | X | X | X | |
| Penetration of use of IPT | X | X | X | |
| ORGANIZATION LEVEL | | | | |
| Site characteristics | X | | | |
| Organizational acceptance | X | X | | |
| Work satisfaction | X | X | | |
| Commitment | X | X | | |
| CLIENT LEVEL^ | | | | |
| Depression | X | X | X | |
| Eating disorder symptoms | X | X | X | |
| Satisfaction with care | X | X | X | |
| IMPLEMENTATION COSTS | | | | |
| Implementation costs | X | | | |

Note. IPT = interpersonal psychotherapy.

^ All client-level assessments, except satisfaction with care, will be assessed at each therapy session. Satisfaction with care will be assessed only once per client, at the end of the course of treatment.

Treatment fidelity.

During each of the assessment intervals (i.e., baseline, post-training implementation, and sustainability), we will ask each therapist for all audio recordings of sessions from their consented cases (i.e., up to two cases per study phase). Two recordings—the first session recording and one randomly selected recording from the third recording and on (unless only two recordings are submitted, in which case both will be rated)—will then be selected from each case and audited by study staff. Auditors will be blind to implementation condition. Fidelity (i.e., adherence and competence, which will be rated separately) will be assessed using the IPT fidelity assessment instrument, which was informed by our own work and work done by the Veterans’ Health Administration (VHA) and other IPT researchers training therapists to conduct EBT. Auditors of fidelity to IPT procedures will be graduate student research assistants blinded to implementation condition and study phase who have received training in the delivery of IPT, including an in-person graduate course on IPT and an in-person IPT workshop with the study team member conducting IPT training with participants. A senior study team member will train these assistants in rating recordings for IPT adherence and competence. A portion of the audited recordings will be rated by the senior study team member to assess inter-rater reliability at the three time points. This method for training raters and for auditing therapy session audio recordings for treatment integrity and fidelity to IPT has demonstrated validity and reliability in other clinical trials [41, 42]. Notably, we will examine treatment fidelity for depression and ED cases combined, with exploratory analyses examining treatment fidelity for depression and ED cases separately.
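The recording-selection rule described above can be sketched as follows. This is a minimal illustration, not the study's actual tooling: the function name and the recording identifiers are hypothetical, and returning a single recording when only one is submitted is an assumption that the protocol does not address.

```python
import random


def select_recordings(recordings, rng=None):
    """Pick the session recordings to audit for one consented case.

    recordings: list of recording ids in session order.
    Returns the first recording plus one drawn at random from the third
    recording onward. If two or fewer recordings were submitted, all of
    them are returned (the one-recording case is an assumption).
    """
    rng = rng or random.Random()
    if len(recordings) <= 2:
        return list(recordings)
    return [recordings[0], rng.choice(recordings[2:])]


# Hypothetical case with five recorded sessions.
picked = select_recordings(["s1", "s2", "s3", "s4", "s5"], random.Random(7))
```

Under this rule the second recording is never audited when three or more are available, which keeps the random draw within the later phase of treatment.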

Therapist-level assessments.

(1) Therapist characteristics. These include: age, degree, years of experience working in student counseling, years employed in present position, and current experience treating EDs (yes/no) and will be assessed at baseline (moderator variables). (2) Acceptability. (a) The Evidence Based Practice Attitude Scale (EBPAS) [43] will be used to ascertain the degree of acceptance of EBTs in general by the therapists. This 15-item scale has four subscales: Appeal, Requirements, Openness, and Divergence. Norms for this scale have been established from a large-scale study [44]. The EBPAS will be administered at baseline (moderator variable), immediately post-training (mediator variable), and during the sustainability phase (mediator variable). (b) The 11-item Acceptance of IPT scale was developed and will be used to assess degree of acceptance of IPT as a relevant and useful treatment. Assessed at baseline (moderator variable), immediately post-training (mediator variable), and during the sustainability phase (mediator variable). (3) Knowledge. A 20-item questionnaire developed to assess knowledge of IPT. Assessed at baseline (moderator variable), immediately post-training (mediator variable), and during the sustainability phase (mediator variable). (4) Uptake. This measure is the ratio of the number of therapist cases rated satisfactorily on treatment fidelity to the total number of cases assessed (mediator variable). (5) Penetration. Full penetration is defined as the proportion of therapists conducting at least one satisfactory case of IPT during an assessment period, and partial penetration is defined as the proportion of therapists who are rated as applying IPT to at least one case, but whose application was rated as unsatisfactory.
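To make the uptake and penetration definitions concrete, the sketch below computes both from fidelity ratings. It is illustrative only: the function names and data are hypothetical, each case is assumed to already carry a satisfactory/unsatisfactory rating (the protocol does not state the cutoff), and partial penetration is interpreted here as therapists who applied IPT to at least one case with none rated satisfactory.

```python
def uptake(case_ratings):
    """Share of assessed cases rated satisfactory on treatment fidelity.

    case_ratings: list of booleans, True when a case was rated satisfactory.
    Returns None when no cases were assessed.
    """
    if not case_ratings:
        return None
    return sum(case_ratings) / len(case_ratings)


def penetration(ratings_by_therapist):
    """Return (full, partial) penetration for one assessment period.

    ratings_by_therapist: dict mapping therapist id -> list of booleans,
    one per case in which IPT was applied (empty list = IPT not applied).
    """
    n = len(ratings_by_therapist)
    if n == 0:
        return None, None
    full = sum(1 for r in ratings_by_therapist.values() if any(r))
    partial = sum(1 for r in ratings_by_therapist.values() if r and not any(r))
    return full / n, partial / n


# Invented ratings for three therapists at one site.
example = {"t1": [True, False], "t2": [False], "t3": []}
full, partial = penetration(example)
site_uptake = uptake([rating for r in example.values() for rating in r])
```

In this invented example one of three therapists reaches full penetration, one reaches partial penetration, and one of the three assessed cases counts toward uptake.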

Organization-level assessments.

(1) Site characteristics. Variables such as size of student body, number of counseling visits per year for depression and EDs, and number of counselors employed. Reported on by consented counseling center directors at baseline (moderator variables). (2) Organizational acceptance. This measure, based on the TCU Organizational Readiness for Change scale [45, 46], assesses the degree of support from the consented directors of the counseling centers for the training program and implementation strategy. Reported on by counseling center directors post-training and during the sustainability phase (moderator variables). (3) Work satisfaction. The job satisfaction scale [47], a 14-item scale from the Job Diagnostic Scale, assesses various dimensions of satisfaction. The scale has good internal consistency and validity in that it correlates with other hypothesized work behaviors. This scale will be completed by therapists at baseline and during the sustainability phase (moderator variables). (4) Commitment. Commitment to the work organization, which is a more stable construct than job satisfaction, will be measured by the 15-item Organizational Commitment Scale [48]. The scale has good internal consistency and validity in that it correlates with other hypothesized work behaviors. This scale will be completed by therapists at baseline and during the sustainability phase.

Client-level assessments.

Therapists will consent student clients during all three study phases (i.e., baseline, post-training implementation, and sustainability). Clients will complete the following measures at each visit (with the exception of satisfaction with care, which will be assessed only once). (1) Depression. The Patient Health Questionnaire (PHQ-9), a 9-item scale scored 0–27 that differentiates various levels of depression and has good test-retest reliability, will be used to assess depression [49, 50]. One item (“poor appetite or overeating”) was modified to only ask about poor appetite. Assessed over the past two weeks on the first visit and over the past week thereafter. (2) ED symptoms. ED psychopathology will be assessed using items adapted from the Eating Disorder Examination (EDE) [51], a widely used, semi-structured interview for assessing ED symptoms. The primary ED symptom outcome will be number of objective binge eating episodes. Assessed over the past 28 days on the first visit and over the past week thereafter. Of note, we will examine client outcomes combined for depression and EDs, by examining percent change in symptoms over the course of treatment, and also separately. When examining client outcomes separately, in addition to examining depression and ED symptoms continuously, we will also examine abstinence (in the case of EDs, abstinence will be defined as abstinence from all ED behaviors [e.g., binge eating, self-induced vomiting]). (3) Client satisfaction with care. The treatment satisfaction scale developed by the Agency for Healthcare Research and Quality [52] is a single-item rating of satisfaction with care. A link to a survey website will be provided to clients by their therapists during their termination session, and clients will be given the space to complete this question confidentially.
If a client does not show up for a termination session (or if treatment ends without a formal termination session), the therapist will e-mail the link to the client to complete the survey.

To assure as complete data collection as possible, all client measures, except satisfaction with care, will be assessed as part of routine clinical care at the beginning of each therapy session using web-based measures.

Implementation costs.

The principal reason to evaluate implementation costs is to understand if the train-the-trainer condition is “worth it” when it comes to treatment fidelity. Given the design of the train-the-trainer condition, it is likely that it will be more expensive to deploy. But what is the added improvement in outcomes that might result from this higher expense? If this increased cost of the train-the-trainer condition compared to the external expert consultation condition can be quantified, and then compared to the improved outcomes that are expected from the train-the-trainer condition, then decision makers can evaluate these competing implementation strategies from an economic perspective. A comparison of relative costs versus relative outcomes allows us to arrive at an incremental cost-effectiveness ratio for these competing strategies, which is one additional tool that agency administrators and policymakers can use when deciding whether or not to invest in an implementation strategy.

The overall approach to capturing implementation costs is described elsewhere [53]. Briefly, this approach is an example of an activity-based costing strategy [54], which has its origins in the field of accounting. The basic premise of an activity-based strategy is that it is activities performed by various individuals that primarily consume resources, and that it is these activities that are also responsible for producing outcomes. Consequently, understanding the resources consumed by these various activities can be used to allocate costs associated with these activities, and serve as a basis for evaluating the outcomes that result from such activities. In our study, we use this approach to calculate all indirect labor costs and all non-labor costs associated with each implementation strategy. Indirect labor costs are generated as a result of activities undertaken by clinicians, administrators, and supervisors. These costs are associated with both pre-work (e.g., familiarization with the implementation protocol) and implementation activities (e.g., case consultation). Non-labor costs of procuring training in the implementation strategy (tuition and other materials), as well as travel expenses for (minimally) one clinician, will also be captured. We will develop implementation cost-effectiveness ratios by subtracting the costs of the external expert consultation condition from those of the train-the-trainer condition and dividing this difference by the difference in fidelity achieved between these two conditions. We will adopt the counseling center’s perspective for these analyses, and use a time horizon coterminous with study completion to study fidelity, which obviates the need to use a formal discounting strategy.
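The ratio described above is the difference in cost divided by the difference in fidelity. A minimal sketch of that arithmetic follows; the function name, the dollar figures, and the fidelity scale are hypothetical and are not study data.

```python
def icer(cost_ttt, cost_expert, fidelity_ttt, fidelity_expert):
    """Incremental cost per unit of fidelity gained by train-the-trainer.

    Costs are totals per condition from the counseling center's perspective;
    fidelity values are mean scores on whatever fidelity scale is used.
    """
    delta_fidelity = fidelity_ttt - fidelity_expert
    if delta_fidelity == 0:
        raise ValueError("ratio is undefined when fidelity does not differ")
    return (cost_ttt - cost_expert) / delta_fidelity


# Invented figures: train-the-trainer costs 12,000 more and scores
# 0.5 points higher on a hypothetical fidelity scale.
example_icer = icer(30000, 18000, 4.0, 3.5)  # -> 24000.0
```

A positive ratio with higher fidelity means the center pays that amount for each additional fidelity point; a negative ratio needs separate interpretation, since it can mean either that one strategy dominates or that it is dominated.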

Data Analysis

Primary analysis for Specific Aim 1.

The primary implementation outcome in this project is treatment adherence in the use of IPT (examined for depression and ED cases combined and separately in exploratory analyses). Based on the assessment of fidelity by audits of audio recordings from selected therapy sessions, we will construct a continuous measure of treatment adherence. We will compare treatment adherence across the two randomized implementation methods accounting for the nested structure of the data collected from college settings. Given that therapists are nested within universities, it is possible that therapists in the same university are somewhat more similar in terms of their implementation behavior compared to therapists in different universities. Although we do not expect a high correlation among therapists within universities, we will take this possibility into account in our analyses. To take this correlation (i.e., intraclass correlation; ICC) into account, we will employ multilevel or mixed effects analysis [55, 56], treating universities as clusters (second level analysis unit). Since we will have only 26 campuses in total as the cluster units, in addition to the formal multilevel approach, we plan to employ an intermediate approach (i.e., sandwich estimator), where standard errors are adjusted without introducing separate parameters to represent variation within clusters and variation between clusters. In line with Amemiya [57] and Carroll, Ruppert, and Stefanski [58], we will use a maximum likelihood estimation method to implement the sandwich estimator. According to our Monte Carlo simulations, the sandwich estimator using 26 clusters resulted in a type I error rate close to the nominal 5% in the simple group comparison setting we propose. For both formal multilevel and sandwich estimator analyses, we will use a maximum likelihood estimation method implemented in Mplus [59].
Using these methods, we will first compare treatment adherence across the two implementation methods at each assessment point, which will show us whether the difference is clinically (effect size) and statistically significant. We will also estimate the longitudinal trends of treatment adherence using multilevel growth curve analysis and compare them across the two methods. These analyses will let us test whether the obtained treatment fidelity is sustained at the final follow-up. The results will also tell us how longitudinal trends differ across the two implementation strategies. These analyses fit in the framework of general mixed effects analysis [55, 56] and can be conducted in Mplus.
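
To make the idea of a sandwich (cluster-robust) standard error concrete, the sketch below computes one for a simple two-arm comparison of means when treatment is assigned at the cluster level. It is a simplified least-squares analogue with no small-sample correction, not the maximum likelihood implementation in Mplus described above. The function name `cluster_robust_mean_difference` and the toy data are hypothetical.

```python
from collections import defaultdict
from math import sqrt


def cluster_robust_mean_difference(records):
    """Difference in arm means with a cluster-robust (sandwich) standard error.

    records: iterable of (cluster_id, arm, outcome), with arm coded 0 or 1.
    Assumes every cluster belongs to exactly one arm (cluster randomization).
    For this design, the uncorrected sandwich variance of the mean difference
    reduces to the sum over arms of
        sum_over_clusters(cluster residual total ** 2) / (arm sample size ** 2).
    """
    by_arm = {0: [], 1: []}
    for cluster, arm, y in records:
        by_arm[arm].append((cluster, y))

    means = {}
    variance = 0.0
    for arm, rows in by_arm.items():
        n = len(rows)
        mean = sum(y for _, y in rows) / n
        means[arm] = mean
        # Sum residuals within each cluster; correlated residuals do not cancel.
        residual_totals = defaultdict(float)
        for cluster, y in rows:
            residual_totals[cluster] += y - mean
        variance += sum(s * s for s in residual_totals.values()) / n ** 2

    return means[1] - means[0], sqrt(variance)


# Toy adherence scores (hypothetical): two centers per arm, two therapists each.
toy = [
    ("A", 1, 6.0), ("A", 1, 6.0), ("B", 1, 2.0), ("B", 1, 2.0),
    ("C", 0, 1.0), ("C", 0, 3.0), ("D", 0, 2.0), ("D", 0, 2.0),
]
difference, standard_error = cluster_robust_mean_difference(toy)
print(difference, standard_error)
```

Because residuals are summed within each cluster before squaring, similarity among therapists at the same center widens the standard error without requiring separate within-cluster and between-cluster variance parameters.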

Secondary analyses for Specific Aim 1.

First, we will examine competence in IPT using methods similar to those described above. Next, the relative effectiveness of the two implementation strategies will be estimated in terms of client outcomes (combined for depression and EDs, by examining percent change in symptoms over the course of treatment, and also separately in exploratory analyses). In the proposed study, the unit of randomization is a college. Therapists are nested within colleges (about 115 therapists in each treatment group), and clients are nested within therapists. The basic unit of analysis will be college student clients (up to two clients per therapist, per study phase). Although we do not expect a high correlation among therapists within colleges (e.g., due to age gaps and different training/specialties), we do expect a considerable correlation among student clients nested within therapists. To take this correlation (ICC) into account, we will employ mixed effects analyses, treating therapists as clusters (second level analysis unit). We expect that the effect of ICC among students belonging to the same universities will be minimal in our analyses after taking into account ICC due to sharing the same therapist. Unlike the primary outcome (treatment fidelity), client outcomes will be measured in different samples at each assessment point; therefore, we will focus on change in symptoms at each assessment point and will not formally estimate longitudinal trends across assessments. The results will still have implications for the sustainability of the implementation method in terms of client outcomes, but they will not support longitudinal inferences as strong as those based on therapist outcomes.

Specific Aim 2.

Once costs have been tracked for each of the implementation strategies, we will aggregate costs to annual figures. These annual costs will be expressed in two ways: total annual cost per university and average annual incremental cost per depression or ED client (calculated by dividing total incremental costs by the number of clients served in each year in each university). All costs will be converted to constant dollars using the Consumer Price Index. We will estimate preliminary models to evaluate incremental cost-effectiveness ratios, each defined as an incremental cost divided by an incremental outcome.

The numerator reflects the incremental cost of the train-the-trainer implementation strategy over the external expert consultation implementation strategy; the denominator reflects differences between the outcomes of interest under the train-the-trainer condition and the external expert consultation condition. All outcomes will be adjusted for risk and case-mix. These ratios will be calculated separately for each short-term and long-term outcome. The economic decision rule would likely be to recommend that universities adopt the implementation arm with the lowest incremental cost-effectiveness ratios. From a planning perspective, these ratios will tell universities how much they would have to pay to achieve the additional outcomes measured using each of the implementation strategies.
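
The ratio described in this paragraph can be written compactly as follows. The symbols are notation introduced here for illustration and are not taken from the original protocol: C denotes the cost and E the risk- and case-mix-adjusted outcome under the train-the-trainer (TTT) and external expert consultation (EXP) conditions.

```latex
\[
\mathrm{ICER}
  = \frac{C_{\mathrm{TTT}} - C_{\mathrm{EXP}}}
         {E_{\mathrm{TTT}} - E_{\mathrm{EXP}}}
\]
```

Under this notation, one ratio would be computed per outcome, with the same cost difference in the numerator and the outcome-specific difference in the denominator.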

Since this study will evaluate cost-effectiveness of the train-the-trainer approach across a number of different dimensions, this strategy may prove more cost-effective for some but not for other outcomes. In this case, the outcomes for which the train-the-trainer strategy is more cost-effective will be identified and discussed. However, with up to six patients per therapist, nine therapists per university on average, and 13 universities per treatment group, we will not be able to assign standard errors to the cost-effectiveness ratios (e.g., bootstrap procedures would require a greater pool from which to resample to get meaningful standard error estimates). For this reason, we will discuss these analyses as exploratory, and our conclusions as suggestive rather than definitive.

Specific Aim 3.

For our primary outcome, adherence to IPT, we will examine variables such as site and therapist characteristics, therapist acceptance of EBTs and IPT, and other baseline assessments as potential moderators. Potential mediators include therapist knowledge and acceptance of IPT assessed post-workshop and number of cases in which IPT was attempted. For our secondary client-level outcomes, moderators consist of variables such as therapist characteristics, site characteristics, and organizational support. Hypothesized mediators consist of variables such as therapist knowledge, uptake of IPT, and treatment fidelity. For moderator analyses, we will apply the MacArthur framework [60, 61], in which the interaction between baseline covariates and training assignment in predicting the post-training outcome is defined as a moderation effect. For mediator analyses, we will first apply the MacArthur framework, in which post-training intermediate outcomes qualify as mediators if they are affected by training assignment and if they have a main and/or interaction (training × mediator) effect on the final outcome of interest. Second, we will apply a more rigorous causal modeling approach, in which the intention is to make causal inferences and identify conditions under which the effect estimates can be interpreted as causal. Since this approach relies on identifying assumptions that cannot be directly tested with observed data, the plausibility of the assumptions and the quality of the sensitivity analysis determine the quality of the causal inference.

Power

The current study was powered for the primary analysis for Specific Aim 1. If resemblance among therapists in each cluster (i.e., each college counseling center in our study) is ignored in analyzing outcomes in a cluster randomized trial, standard errors are usually underestimated, which inflates the type I error rate and overstates statistical power. Previous studies have established various guidelines regarding design strategies and remedies at the analysis stage in this context [62–64]. Following these guidelines, we estimated statistical power of 0.8. We expect to have nine therapists at each college counseling center on average. For ICC, we considered the size that is commonly seen in social or psychological cluster randomized trials, where ICC of 0.01–0.05 is common and 0.1 is considered quite large. Given that, we considered ICC of 0.05 to 0.1 in our power estimation. We assumed a simple group comparison of continuous means with the nominal significance level of .05 (two-tailed) and a medium effect size (Cohen’s d = 0.5). Under this scenario, the estimated power ranges from 0.814 to 0.899 with a total of 26 universities (13 universities per condition).
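
The following Python sketch shows one common way to approximate power in this setting. It deflates the sample size by the design effect 1 + (m − 1) × ICC and applies a normal approximation to a two-sample comparison of means. This approach is an assumption made for illustration, and the authors' exact procedure may differ. The function name `cluster_trial_power` is hypothetical; the inputs (13 centers per arm, nine therapists per center, d = 0.5, two-tailed alpha of .05, ICC of 0.05 or 0.1) come from the paragraph above.

```python
from math import sqrt
from statistics import NormalDist


def cluster_trial_power(clusters_per_arm, cluster_size, icc, effect_size, alpha=0.05):
    """Approximate power for a two-arm cluster randomized comparison of means."""
    # Design effect: variance inflation due to within-cluster correlation.
    design_effect = 1 + (cluster_size - 1) * icc
    # Effective number of independent observations per arm.
    n_effective = clusters_per_arm * cluster_size / design_effect

    z = NormalDist()
    z_critical = z.inv_cdf(1 - alpha / 2)
    # Expected z statistic for a standardized mean difference of effect_size.
    noncentrality = effect_size * sqrt(n_effective / 2)
    # Two-tailed power under the normal approximation.
    return z.cdf(noncentrality - z_critical) + z.cdf(-noncentrality - z_critical)


for icc in (0.05, 0.10):
    power = cluster_trial_power(13, 9, icc, effect_size=0.5)
    print(f"ICC = {icc:.2f}: approximate power = {power:.3f}")
```

Under these assumptions the approximation gives power of roughly 0.90 for an ICC of 0.05 and roughly 0.81 for an ICC of 0.1, close to the range reported above.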

Discussion

The goal of the current study is to evaluate the effectiveness of two different implementation methods for training college therapists to deliver IPT, an EBT for depression and EDs, under “real world” conditions, in both the short and long term. To be useful, EBTs must be disseminated successfully to multiple environments so that more patients can receive recommended treatment. Indeed, in order to optimize psychological science’s impact on public health, dissemination and implementation research questions must be tested [65]. As such, the ultimate objective of this project is to provide guidance as to the best way to train college counseling therapists in EBTs for a number of disorders. Further, this study may identify a model of training that can be used in other settings, such as community mental health centers.

This study has several strengths. First, it will be the first to evaluate the comparative effectiveness of two different methods of training college counseling center therapists to increase their use of EBT for depression and EDs and to evaluate the sustainability of these methods in this setting. Second, the study will assess actual change in therapist behavior in delivering treatment (fidelity to IPT assessed by review of audio recordings of treatment sessions, with the primary outcome being adherence to IPT) instead of simply obtaining self-reported proficiency, as has typically been the case. Third, client outcomes will be measured so that we can evaluate whether greater treatment fidelity mediates client outcome. Fourth, the comparative analysis of the cost-effectiveness of two implementation strategies differing in level of intensity will be the first of its kind in these settings. Lastly, recruitment will target all types of college counseling centers across the country (e.g., public and private schools, large and small schools) to increase the generalizability of the findings. Despite these strengths, the study also has several potential weaknesses. The power of the study may be inadequate to provide definitive information on some cost analyses and other secondary analyses. In addition, because therapist participation is voluntary, we expect some non-participation, which will reduce penetration.

Outcomes will inform the best training model to incorporate EBT for depression and EDs into routine clinical practice and could generalize to the implementation of other EBTs for different clinical disorders not only in college counseling centers but also in other routine clinical care services. Importantly, since the launch of this study, research has revealed that not only is IPT an EBT for depression and EDs, but it has also emerged as an EBT for anxiety disorders [17, 66]. As such, findings from the current study may be readily generalizable to the implementation of IPT for anxiety disorders. IPT is thus an EBT for three psychiatric disorders that account for a substantial proportion of the mental health burden on college campuses and is readily applicable to nearly all of the top concerns of students presenting for treatment at college counseling centers [16]. Further, this study may provide insight into the organizational and therapist factors underlying therapist adoption of an EBT and the relation between therapist fidelity to the IPT treatment protocol, other therapist factors, and clinical outcomes. Therefore, the findings from the present study will provide novel and immediately useful information to organizations by establishing an evidence base for the selection of training methods when an agency chooses to adopt new interventions. Most importantly, in line with the primary aim, these results will inform the best methods for implementing and sustaining EBT for depression and EDs on the college campus, a setting in which dissemination has been slow considering the gravity of these conditions in college students. Furthermore, this study will provide a basis for developing and evaluating potentially even more cost-effective and far-reaching training methods, such as web-based training platforms and treatment simulations.

Acknowledgments

This study is supported by National Institute of Mental Health R01 MH095748. The authors thank Ms. Vanessa Teng and Ms. Hannah Toyama for their invaluable assistance in the preparation of this manuscript.

Abbreviations

- EBT: evidence-based treatment

- ED: eating disorder

- ICC: intraclass correlation

- IPT: interpersonal psychotherapy

- VHA: Veterans’ Health Administration

References

- 1. Fairburn CG, Wilson GT. The dissemination and implementation of psychological treatments: problems and solutions. Int J Eat Disord 2013;46:516–21. doi: 10.1002/eat.22110.

- 2. Harvey AG, Gumport NB. Evidence-based psychological treatments for mental disorders: modifiable barriers to access and possible solutions. Behav Res Ther 2015;68:1–12. doi: 10.1016/j.brat.2015.02.004.

- 3. Kazdin AE, Fitzsimmons-Craft EE, Wilfley DE. Addressing critical gaps in the treatment of eating disorders. Int J Eat Disord 2017;50:170–89. doi: 10.1002/eat.22670.

- 4. Wang PS, Lane M, Olfson M, Pincus HA, Wells KB, Kessler RC. Twelve-month use of mental health services in the United States: results from the National Comorbidity Survey Replication. Arch Gen Psychiatry 2005;62:629–40. doi: 10.1001/archpsyc.62.6.629.

- 5. Waller G. Evidence-based treatment and therapist drift. Behav Res Ther 2009;47:119–27. doi: 10.1016/j.brat.2008.10.018.

- 6. Insel TR. Translating scientific opportunity into public health impact: a strategic plan for research on mental illness. Arch Gen Psychiatry 2009;66:128–33. doi: 10.1001/archgenpsychiatry.2008.540.

- 7. Cook JM, Biyanova T, Coyne JC. Barriers to adoption of new treatments: an internet study of practicing community psychotherapists. Adm Policy Ment Health 2009;36:83–90. doi: 10.1007/s10488-008-0198-3.

- 8. Kessler RC, Berglund P, Demler O, Jin R, Merikangas KR, Walters EE. Lifetime prevalence and age-of-onset distributions of DSM-IV disorders in the National Comorbidity Survey Replication. Arch Gen Psychiatry 2005;62:593–602. doi: 10.1001/archpsyc.62.6.593.

- 9. Arria AM, Caldeira KM, Vincent KB, Winick ER, Baron RA, O’Grady KE. Discontinuous college enrollment: associations with substance use and mental health. Psychiatr Serv 2013;64(2):165–72. doi: 10.1176/appi.ps.201200106.

- 10. De Hert M, Correll CU, Bobes J, Cetkovich-Bakmas M, Cohen D, Asai I, et al. Physical illness in patients with severe mental disorders. I. Prevalence, impact of medications and disparities in health care. World Psychiatry 2011;10:52–77.

- 11. Kafali N, Cook B, Wang S, Martinez PG, Selke Z, Blanco C. Impact of mental disorders on employment and receipt of public assistance: an instrumental variables approach. J Ment Health Policy Econ 2015;18:137–45.

- 12. Kessler RC, Foster CL, Saunders WB, Stang PE. Social consequences of psychiatric disorders, I: Educational attainment. Am J Psychiatry 1995;152:1026–32. doi: 10.1176/ajp.152.7.1026.

- 13. Kessler RC, Walters EE, Forthofer MS. The social consequences of psychiatric disorders, III: probability of marital stability. Am J Psychiatry 1998;155(8):1092–6. doi: 10.1176/ajp.155.8.1092.

- 14. Auerbach RP, Alonso J, Axinn WG, Cuijpers P, Ebert DD, Green JG, et al. Mental disorders among college students in the World Health Organization World Mental Health Surveys. Psychol Med 2016;46:2955–70. doi: 10.1017/S0033291716001665.

- 15. Eisenberg D, Hunt J, Speer N, Zivin K. Mental health service utilization among college students in the United States. J Nerv Ment Dis 2011;199:301–8. doi: 10.1097/NMD.0b013e3182175123.

- 16. Center for Collegiate Mental Health. 2016 annual report. Publication No. STA 17–174; 2017. https://sites.psu.edu/ccmh/files/2017/01/2016-Annual-Report-FINAL_2016_01_09-1gc2hj6.pdf Accessed 4 May 2017.

- 17. Cuijpers P, Donker T, Weissman MM, Ravitz P, Cristea IA. Interpersonal psychotherapy for mental health problems: a comprehensive meta-analysis. Am J Psychiatry 2016;173:680–7. doi: 10.1176/appi.ajp.2015.15091141.

- 18. Weissman MM, Hankerson SH, Scorza P, Olfson M, Verdeli H, Shea S, et al. Interpersonal counseling (IPC) for depression in primary care. Am J Psychother 2014;68:359–83.

- 19. Blanco C, Okuda M, Wright C, Hasin DS, Grant BF, Liu SM, et al. Mental health of college students and their non-college-attending peers: results from the National Epidemiologic Study on Alcohol and Related Conditions. Arch Gen Psychiatry 2008;65:1429–37. doi: 10.1001/archpsyc.65.12.1429.

- 20. Eisenberg D, Nicklett EJ, Roeder K, Kirz NE. Eating disorder symptoms among college students: prevalence, persistence, correlates, and treatment-seeking. J Am Coll Health 2011;59:700–7. doi: 10.1080/07448481.2010.546461.

- 21. Weisz J, Bearman SK, Santucci LC, Jensen-Doss A. Initial test of a principle-guided approach to transdiagnostic psychotherapy with children and adolescents. J Clin Child Adolesc Psychol 2017;46:44–58. doi: 10.1080/15374416.2016.1163708.

- 22. Tanofsky-Kraff M, Wilfley DE. Interpersonal psychotherapy for bulimia nervosa and binge-eating disorder. In: Grilo CM, Mitchell JE, editors. The treatment of eating disorders: a clinical handbook. New York: Guilford Press; 2010. p. 271–293.

- 23. Blomquist KK, Ansell EB, White MA, Masheb RM, Grilo CM. Interpersonal problems and developmental trajectories of binge eating disorder. Compr Psychiatry 2012;53:1088–95. doi: 10.1016/j.comppsych.2012.05.003.

- 24. Hames JL, Hagan CR, Joiner TE. Interpersonal processes in depression. Annu Rev Clin Psychol 2013;9:355–77. doi: 10.1146/annurev-clinpsy-050212-185553.

- 25. Hartmann A, Zeeck A, Barrett MS. Interpersonal problems in eating disorders. Int J Eat Disord 2010;43:619–27. doi: 10.1002/eat.20747.

- 26. Rieger E, Van Buren DJ, Bishop M, Tanofsky-Kraff M, Welch R, Wilfley DE. An eating disorder-specific model of interpersonal psychotherapy (IPT-ED): causal pathways and treatment implications. Clin Psychol Rev 2010;30:400–10. doi: 10.1016/j.cpr.2010.02.001.

- 27. Beidas RS, Kendall PC. Training therapists in evidence-based practice: a critical review of studies from a systems-contextual perspective. Clin Psychol 2010;17:1–30. doi: 10.1111/j.1468-2850.2009.01187.x.

- 28. Herschell AD, Kolko DJ, Baumann BL, Davis AC. The role of therapist training in the implementation of psychosocial treatments: a review and critique with recommendations. Clin Psychol Rev 2010;30:448–66. doi: 10.1016/j.cpr.2010.02.005.

- 29. Karlin BE, Cross G. From the laboratory to the therapy room: national dissemination and implementation of evidence-based psychotherapies in the US Department of Veterans Affairs Health Care System. Am Psychol 2014;69:19. doi: 10.1037/a0033888.

- 30. Karlin BE, Brown GK, Trockel M, Cunning D, Zeiss AM, Taylor CB. National dissemination of cognitive behavioral therapy for depression in the Department of Veterans Affairs health care system: therapist and patient-level outcomes. J Consult Clin Psychol 2012;80:707–18. doi: 10.1037/a0029328.

- 31. Walser RD, Karlin BE, Trockel M, Mazina B, Barr Taylor C. Training in and implementation of Acceptance and Commitment Therapy for depression in the Veterans Health Administration: therapist and patient outcomes. Behav Res Ther 2013;51:555–63. doi: 10.1016/j.brat.2013.05.009.

- 32. Pearce J, Mann MK, Jones C, van Buschbach S, Olff M, Bisson JI. The most effective way of delivering a train-the-trainers program: a systematic review. J Contin Educ Health Prof 2012;32:215–26.

- 33. Martino S, Ball SA, Nich C, Canning-Ball M, Rounsaville BJ, Carroll KM. Teaching community program clinicians motivational interviewing using expert and train-the-trainer strategies. Addiction 2011;106:428–41. doi: 10.1111/j.1360-0443.2010.03135.x.

- 34. Powell BJ, Proctor EK, Glass JE. A systematic review of strategies for implementing empirically supported mental health interventions. Res Soc Work Pract 2014;24:192–212. doi: 10.1177/1049731513505778.

- 35. Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Adm Policy Ment Health 2009;36:24–34. doi: 10.1007/s10488-008-1097-4.

- 36. Schwitzer A, Hatfield T, Jones AR, Duggan MH, Jurgens J, Winninger A. Confirmation among college women: the eating disorders not otherwise specified diagnostic profile. J Am Coll Health 2008;56:607–15. doi: 10.3200/JACH.56.6.607-616.

- 37. Zivin K, Eisenberg D, Gollust SE, Golberstein E. Persistence of mental health problems and needs in a college student population. J Affect Disord 2009;117:180–5. doi: 10.1016/j.jad.2009.01.001.

- 38. Bandura A. Social foundations of thought and action. Englewood Cliffs, NJ: Prentice-Hall; 1986.

- 39. Stetler CB, McQueen L, Demakis J, Mittman BS. An organizational framework and strategic implementation for system-level change to enhance research-based practice: QUERI Series. Implement Sci 2008;3:30. doi: 10.1186/1748-5908-3-30.

- 40. Zazzali JL, Sherbourne C, Hoagwood KE, Greene D, Bigley MF, Sexton TL. The adoption and implementation of an evidence based practice in child and family mental health services organizations: a pilot study of functional family therapy in New York State. Adm Policy Ment Health 2008;35:38–49. doi: 10.1007/s10488-007-0145-8.

- 41. Loeb KL, Wilson GT, Labouvie E, Pratt EM, Hayaki J, Walsh BT, et al. Therapeutic alliance and treatment adherence in two interventions for bulimia nervosa: a study of process and outcome. J Consult Clin Psychol 2005;73:1097–107. doi: 10.1037/0022-006X.73.6.1097.

- 42. Wilfley DE, Welch RR, Stein RI, Spurrell EB, Cohen LR, Saelens BE, et al. A randomized comparison of group cognitive-behavioral therapy and group interpersonal psychotherapy for the treatment of overweight individuals with binge-eating disorder. Arch Gen Psychiatry 2002;59:713–21.

- 43. Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: the Evidence-Based Practice Attitude Scale (EBPAS). Ment Health Serv Res 2004;6:61–74.

- 44. Aarons GA, Glisson C, Hoagwood K, Kelleher K, Landsverk J, Cafri G. Psychometric properties and U.S. National norms of the Evidence-Based Practice Attitude Scale (EBPAS). Psychol Assess 2010;22:356–65. doi: 10.1037/a001988.

- 45. Institute of Behavioral Research. TCU organizational readiness for change: treatment: director version (TCU ORC-D). Fort Worth: Texas Christian University, Institute of Behavioral Research; 2003a. https://ibr.tcu.edu Accessed 1 June 2017.

- 46. Institute of Behavioral Research. TCU organizational readiness for change: treatment staff version (TCU ORC-S). Fort Worth: Texas Christian University, Institute of Behavioral Research; 2003b. https://ibr.tcu.edu Accessed 1 June 2017.

- 47. Hackman JR, Oldham GR. Development of the job diagnostic survey. J Appl Psychol 1975;60:159. doi: 10.1037/h0076546.

- 48. Mowday RT, Steers RM, Porter LW. The measurement of organizational commitment. J Vocat Behav 1979;14:224–47. doi: 10.1016/0001-8791(79)90072-1.

- 49. Richardson LP, McCauley E, Grossman DC, McCarty CA, Richards J, Russo JE, et al. Evaluation of the Patient Health Questionnaire-9 Item for detecting major depression among adolescents. Pediatrics 2010;126:1117–23. doi: 10.1542/peds.2010-0852.

- 50. Zuithoff NP, Vergouwe Y, King M, Nazareth I, van Wezep MJ, Moons KG, et al. The Patient Health Questionnaire-9 for detection of major depressive disorder in primary care: consequences of current thresholds in a cross-sectional study. BMC Fam Pract 2010;11:98. doi: 10.1186/1471-2296-11-98.

- 51. Fairburn CG, Cooper Z. The eating disorder examination. In: Fairburn CG, Wilson GT, editors. Binge eating: nature, assessment, and treatment. 12th ed. New York: Guilford Press; 1993. p. 317–360.

- 52. Agency for Healthcare Research and Quality. CAHPS 2.0 survey and reporting kit. Silver Springs, MD: Publications Clearinghouse; 2002.

- 53. Raghavan R. The role of economic evaluation in dissemination and implementation research. In: Brownson R, Colditz G, Proctor EK, editors. Dissemination and implementation research in health: translating science to practice. New York: Oxford University Press; 2012. p. 94–113.

- 54. Baker JJ. Activity-based costing and activity-based management for health care. Gaithersburg, MD: Aspen; 1998.

- 55. Raudenbush SW, Bryk AS. Hierarchical linear models: applications and data analysis methods. Thousand Oaks, CA: Sage; 2002.

- 56. Snijders TAB, Bosker RJ. Multilevel analysis: an introduction to basic and advanced multilevel modeling. Newbury Park: Sage; 1999.

- 57. Amemiya T. Advanced econometrics. Cambridge, MA: Harvard University Press; 1985.

- 58. Carroll RJ, Ruppert D, Stefanski LA. Measurement error in nonlinear models. London: Chapman & Hall; 1995.

- 59. Muthén LK, Muthén BO. Mplus user’s guide. 7th ed. Los Angeles: Muthén & Muthén; 2010.

- 60. Kraemer HC, Wilson GT, Fairburn CG, Agras WS. Mediators and moderators of treatment effects in randomized clinical trials. Arch Gen Psychiatry 2002;59:877–83.

- 61. Kraemer HC, Kiernan M, Essex M, Kupfer DJ. How and why criteria defining moderators and mediators differ between the Baron & Kenny and MacArthur approaches. Health Psychol 2008;27:S101–8. doi: 10.1037/0278-6133.27.2(Suppl.).S101.

- 62. Donner A, Birkett N, Buck C. Randomization by cluster: sample size requirements and analysis. Am J Epidemiol 1981;114:906–14.

- 63. Donner A, Klar N. Statistical considerations in the design and analysis of community intervention trials. J Clin Epidemiol 1996;49:435–9.

- 64. Murray DM. Design and analysis of group-randomized trials. New York: Oxford University Press; 1998.

- 65. Lewis CC, Larson M, Marriott BR, Jackson CB, Dorsey CN, Kerns SE, et al. Optimizing Psychological Science’s Impact on Public Health. APS Observer 2018;31(30/3).

- 66. Markowitz JC, Petkova E, Neria Y, Van Meter PE, Zhao Y, Hembree E, et al. Is exposure necessary? a randomized clinical trial of interpersonal psychotherapy for PTSD. Am J Psychiatry 2015;172:430–40. doi: 10.1176/appi.ajp.2014.14070908.