Abstract

Motivation

Co-inertia analysis (CIA) is a multivariate statistical analysis method that can assess relationships and trends in two sets of data. Recently CIA has been used for an integrative analysis of multiple high-dimensional omics data. However, for classical CIA, all elements in the loading vectors are nonzero, presenting a challenge for the interpretation when analyzing omics data. For other multivariate statistical methods such as canonical correlation analysis (CCA), penalized least squares (PLS), various approaches have been proposed to produce sparse loading vectors via l1-penalization/constraint. We propose a novel CIA method that uses l1-penalization to induce sparsity in estimators of loading vectors. Our method simultaneously conducts model fitting and variable selection. Also, we propose another CIA method that incorporates structure/network information such as those from functional genomics, besides using sparsity penalty so that one can get biologically meaningful and interpretable results.

Results

Extensive simulations demonstrate that our proposed penalized CIA methods achieve the best or close to the best performance compared to the existing CIA method in terms of feature selection and recovery of true loading vectors. Also, we apply our methods to the integrative analysis of gene expression data and protein abundance data from the NCI-60 cancer cell lines. Our analysis of the NCI-60 cancer cell line data reveals meaningful variables for cancer diseases and biologically meaningful results that are consistent with previous studies.

Availability and implementation

Our algorithms are implemented as an R package which is freely available at: https://www.med.upenn.edu/long-lab/.

Supplementary information

Supplementary data are available at Bioinformatics online.

1 Introduction

Recently, there has been rapid progress in high-throughput technologies to generate various -omics datasets (e.g. gene expression data and metabolomics data) from same biological subjects or samples. As a result, there has been increasing interest in an integrative analysis of multiple omics datasets. Such analysis integrates and concatenates information from multiple datasets leading to a better understanding of biological underpinnings of diseases.

There are several statistical techniques to conduct an integrative analysis of multivariate datasets. In specific, methods for two multivariate datasets include canonical correlation analysis (CCA), partial least squares (PLS), canonical correspondence analysis and multiple factor analysis (MFA). For example, CCA (Hotelling, 1936) is one of the popular statistical multivariate methods, which finds linear transformations of two multivariate datasets so that the correlation between transformed datasets is maximized. PLS (Wold, 1966) is similar with the CCA, which is widely used in chemometrics. Estimated loading vectors of PLS maximize a covariance between two linearly transformed multivariate data. However, a number of multivariate methods are not applicable if datasets lie in a high-dimensional space, which is also one of the natural features that -omics data have. For example, the CCA typically requires an inverse of a sample covariance matrix, which can be singular because the number of variables exceeds the sample size. To overcome this drawback of the CCA, researchers adopt a regression framework (Lykou and Whittaker, 2010; Parkhomenko et al., 2009; Waaijenborg et al., 2008) or assume that a covariance matrix as an identity matrix (Witten et al., 2009). Those analysis methods also suffer from a lack of interpretability. Typically estimators from high-dimensional datasets combine thousands of variables in a linear fashion and there is no zero coefficient. This causes difficulties on interpreting the results. There have been recent works in the literatures that propose a sparsity-constrained approach as a remedy for the high dimensionality of the data, such as sparse CCA (Hardoon and Shawe-Taylor, 2011; Parkhomenko et al., 2009; Safo et al., 2018; Waaijenborg et al., 2008; Witten et al., 2009) and sparse PLS (Chun and Keleş, 2010; Chung et al., 2012; Lee et al., 2011).

Co-inertia analysis (CIA) is another multivariate statistical analysis technique proposed by Dolédec and Chessel (1994), which can be considered as a generalized CCA or PLS. Co-inertia analysis takes two multivariate datasets as an input and seeks for multiple sets of axis pairs that maximize the concordance between two datasets projected on those new axis pairs. This goal is achieved by maximizing the global measure called ‘co-inertia’ that calculates the degree of the co-variability between two heterogeneous datasets. This analysis approach has been widely used in the ecology area (Dray et al., 2003; Thioulouse, 2011) to uncover the relationship between species and environment. CIA can be applied directly to the high dimensional datasets without any constraints or problems since CIA does not require inverting covariance matrices. Due to this advantage of the CIA, it has been used to the analysis of various biological datasets such as gene expression and proteomics data (Culhane et al., 2003; Fagan et al., 2007; Lê Cao et al., 2009). Culhane et al. conducted a cross-platform comparison of two gene expression datasets using CIA and Fagan et al. used CIA to conduct an integrative analysis of proteomic and gene expression data. In Lê Cao et al. (2009), they consider three methods, sparse PLS, sparse CCA and CIA for the integrative analysis of two datasets. They pointed out that objective goals of three methods are different so that it is difficult to compare them directly. As means of an indirect comparison, they focused on the biological interpretation of the selected genes and graphical outputs from the real data analysis results of each method. In that process, it is pointed out that lack of sparsity is one of weaknesses of the CIA since nonsparse estimated loading vectors make hard to interpret the analysis results and identify robust biomarkers (Lê Cao et al., 2009; Meng et al., 2016). Lê Cao et al. (2009) apply hard thresholding on estimated loading vectors to select important variables as a heuristic approach. Recently Tenenhaus et al. (2017) proposed regularized generalized canonical correlation analysis framework, which includes many methods as its special cases. The CIA also can be regarded as a special case of the RGCCA framework, but this framework cannot includes the sparse CIA as its special case. To the best of our knowledge, there has been no work on combining penalization with CIA to obtain sparse loading vectors.

In this paper, we propose two novel penalized CIA methods conducting estimation and features selection simultaneously to improve interpretability of analysis result and get enhanced identification of significant biomarkers from analysis result. By converting the CIA problem into a penalized regression problem, we achieve the sparsity of estimators. Also, we adopt another penalty that uses network information among genes such as those from functional genomics data so that the estimated model is expected to select relevant genes guided by the prior knowledge about relationships between genes. All penalty parameters are selected by cross-validation, and the performance of our algorithms is investigated by extensive simulation studies. We illustrate our methods by analyzing the NCI60 cell line data, gene expression and proteomics datasets on 57 cell lines.

The rest of this paper is organized as follows. In Section 2, we first review the CIA problem starting from the case dealing with one dataset to the case with two datasets. In Section 3, we present two proposed methods namely the sparse CIA (sCIA) and the structured sparse CIA (ssCIA) the latter of which incorporates biological/structural information. In Section 4, we conduct simulation studies to investigate the performance of our proposed algorithms in comparison with the CIA. We apply our methods to the NCI-60 cancer cell line data in Section 5 and some discussions and remarks are addressed in Section 6.

2 Co-inertia analysis

Suppose that we have a given dataset observed from n subjects and assume that is centered without loss of generality. Let be the positive weights for samples (row space) of and be the positive weights for variables (column space) of . Define the inner product of in the column space of as . Based on the above notations, the inertia of is defined as . The inertia is a global measure of the variability of the data , which has a variance as its specific case. If is centered, is the Euclidean metric, and is the , the inertia is the sum of variances of n data points of . There are several approaches to construct and . For example, can be used to adjust possible sampling bias or duplicated observations. Specifically, we can estimate the probability of selection for each individual in the sample using available covariates in the data and use the inverse of the estimated probability as a weight for each individuals to adjust sampling bias. Also can be used to put strong emphasis on some reliable samples compared to the other samples. can be used to give weights for specific variables, a column sum is one of the choice for the diagonal elements of (Dray et al., 2003). As another approach, can be chosen such that genes in that are known to be associated with a clinical phenotype of interest have larger weights. It may be also a good approach to compute based on functional annotation following some recent proposed methods, originally proposed for rare-variant test for integrative analysis (Byrnes et al., 2013; He et al., 2017).

Let denote the projection of to the vector normalized with , where is known as the inertia axis (Culhane et al., 2003; Dray et al., 2003) or the inertia loading vector (Lê Cao et al., 2009). Following the latter, we call the loading vector. The projected inertia is defined as . There exists p orthogonal vectors that are normalized with such that sum of projected inertias becomes the total inertia . Those orthogonal vectors are the eigenvectors of the matrix , which also can be calculated sequentially by solving the following problem,

| (1) |

Suppose that there is another set of data collected from the same subjects. Analogous to the definition of the inertia, we define the ‘co-inertia’ that measures the concordance between two datasets (Dray et al., 2003) as . For two projections and , where a -normed vector and a -normed vector , the co-inertia between two projections is defined as . In addition to the centering the data, it is recommended to scale both datasets if variables in each data are measured on different scales (Dolédec and Chessel, 1994; Dray et al., 2003).The goal of CIA is to find the optimal loading vector pair that maximizes the projected co-inertia. Pairs of optimal co-inertia loading vectors can be obtained simultaneously via eigenvalue decomposition of the matrix . First R co-inertia loadings are with respect to and , where is set of eigenvectors and is corresponding eigenvalues. By solving following optimization problem, the first loading vectors can be acquired,

| (2) |

We can reformulate problem (2) as follows,

| (3) |

where and , which is a singular decomposition (SVD) problem. Subsequent pairs of orthogonal loadings can be estimated by applying SVD to the deflated data with respect to all previously estimated loading vector pairs . In following sections, we will develop our methods based on above problem representation (3).

3 Penalized co-inertia analysis

3.1 Sparse co-inertia analysis (sCIA)

To get a sparse loading vector, we impose the l1-constraint on the optimization problem (3) as follows.

| (4) |

where c1, c2 are pre-defined constants. Note that we relax the l2-equality penalty on into inequality penalty to achieve the convexity of the problem following Witten et al. (2009). The problem (4) has constraints on and , which are transformed and , not directly on and . However, the sparsity that and achieved is transferred to and because and are diagonal matrices. Lagrangian formulation of the problem (4) is

| (5) |

where λ1 and λ2 are Lagrangian multipliers. The objective function of the problem (5) is a biconvex function in and such that we can use iterative approach. By fixing one loading vector at a time, the problem (5) can be reformulated into the iterative algorithm that consists of two penalized least squares problem as follows,

| (6) |

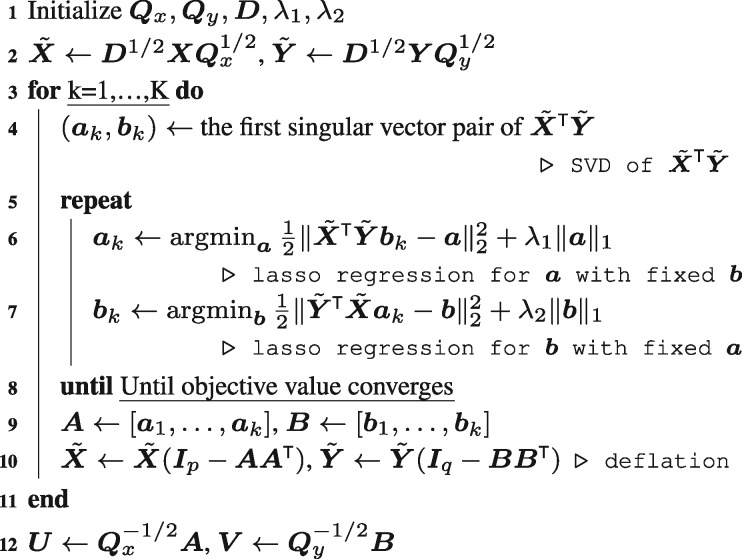

We can get the optimal pair of by solving iterative problem (6) until it converges. More than two orthogonal sCIA loading vector pairs can be estimated by applying the above iterative procedure to the deflated data with respect to all previous estimated loading vector pairs. The complete overall procedure for the sCIA is summarized in Algorithm 1.

Algorithm 1: Sparse Co-Inertia Analysis

3.2 Structured sparse co-inertia analysis (ssCIA)

In this section, we extend the proposed sCIA method by incorporating prior knowledge about the network information among variables so that relevant variables can be identified more efficiently. To this end, we adopt the Laplacian penalty function proposed by Li and Li (2008). Let contains a weighted undirected graph information of variables in , where is the set of vertices corresponding to the p features (or nodes), is the set of edges showing that features i and j are direct neighbors in the network, and is the weight of each node. Using , the (i, j)th element of the normalized Laplacian matrix is defined by

| (7) |

where is the weight of the edge and di is the degree of the vertex i defined as . The normalized Laplacian matrix for the data can be defined in the same way. With definitions of and and given positive constants and c4, we propose the following structured sparse CIA (ssCIA) criterion that is the extended model of the sCIA problem (4),

| (8) |

where and . Like as the sCIA problem, the sparsity that and achieved is transferred to and due to the diagonality of and . Also, the Laplacian penalty in the problem (8) smoothes the estimated loading vectors such that variables within a same network can be selected or neglected together. Our structure penalty function uses the Laplacian matrices that and are pre-/post-multiplied respectively. Thus, this penalty function encourages smoothness of the and . Smaller values of c3 and c4 result in smoother estimates of loading vectors and respectively. Lagrangian formulation of the problem (8) is

| (9) |

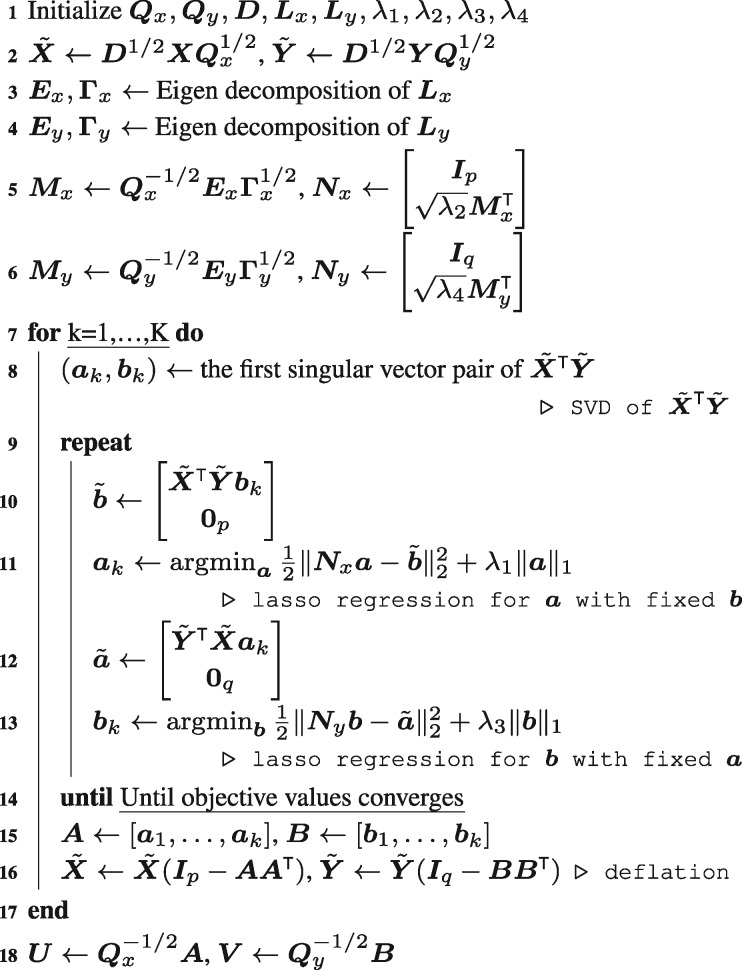

which is a biconvex problem for and such that we can find the optimal and using an iterative algorithm. By fixing one loading vector at a time, the problem (9) can be recast into the following iterative algorithm that consists of two simple lasso regression problem as in Li and Li (2008) and Chen et al. (2013),

| (10) |

where

and are eigenvectors and eigenvalues of and respectively. More than two orthogonal ssCIA loading vectors can be derived by applying above iterative procedure again to the deflated data that is projected to the orthogonal space of all previously estimated pairs of loading vectors. The complete overall procedure for the ssCIA is summarized in Algorithm 2.

Algorithm 2: Structured Sparse Co-Inertia Analysis

4 Numerical studies

4.1 Generative model for synthetic data

We generate the simulation data following the model presented in Parkhomenko et al. (2009), which assumes the existence of a latent variable to make the dependency between two sets of random variables. Consider a pair of random variable vectors and . Suppose that μ from is a latent variable that generates dependency between two random variables. We construct two random variables and where and are pre-defined true sparse co-inertia loading vectors such that . Then the covariance matrix of and have a block matrix structure, and we can show that and are left and right singular vectors of , which maximize the objective function of the CIA. Detailed description of the covariance matrix and the simple proof that and be the singular vectors of can be found in the Section A.1 of the Supplementary Material.

4.2 Design of experiments

One hundred Monte Carlo (MC) datasets are generated, where and are drawn for n = 200 times for each. We make as an identity matrix without loss of generality, and diagonal weight matrices and are randomly generated. To mimic networks existing in the omics data, we assume that the first 300 variables each of and form 30 networks. Each network has 10 variables and the first one of them within each network is the main variable connected to the rest of 9 variables. Variance matrices for and are generated so as to contain each assumed network information.

For the construction of the true loading vectors, we assume that the most of the genes have no effects to the relationship between two datasets and only small portion of genes affects to that relationship. Thus true loading vectors are sparse across all simulations. We consider seven scenarios by changing three conditions, a number of networks affecting on dependency between two datasets, different signal direction of genes within each network, and degree of sparsity within each network. The first condition decides the sparsity of the true loading vector. There are more nonzero elements in the true loadings if there are more effective networks we have. The second and third conditions decide whether the ssCIA can benefits from the prior network information. If signals of coefficients vary or there are zero coefficients within a network, that scenario is not favorable to the ssCIA since true loading vectors cannot make the network penalty zero. Specific values of the constructed variance matrices and true sparse loading vectors for each scenario design are described in Section A.2 and A.3 of the Supplementary Material.

4.3 Tuning parameter selection and performance measures

We use five-fold cross validation (CV) method for selecting the optimal tuning parameters. For each data, we divide the data into five subgroups and calculate CV objective values, , where are kth subgroup of the data, are estimators from the data except the kth subgroup using tuning parameter λ. We select the optimal tuning parameters that maximize the above cross validation criteria. For the grid search of sparsity tuning parameters of both methods, we use evenly spaced grid points between the maximum and minimum value of the sparsity parameter that gives almost zero loading vectors to almost non-sparse loading vectors. For the network penalty parameters of the ssCIA, we conduct preliminary analyses to obtain a rough guess for the range containing tuning parameter values. After narrowing down the ranges, evenly distributed grid points are used in the simulation. According to the case-specific situation, different grid density generated using different gaps can be used instead.

We assess the feature selection performance of our methods using sensitivity, specificity, Matthews correlation coefficient (MCC), and the estimation performance using the angle between the true and an estimated loading vector. Each measure is defined as and , where TP, TN, FP and FN are true positives, true negatives, false positives and false negatives and are the estimator of the true loading vector .

4.4 Results

Simulation results are shown in Table 1. The loading estimators from sCIA having better angles in all scenarios compared to the classical CIA. Since the estimators from sCIA have better angles with fewer nonzero elements in the estimated loadings, we can think that the sCIA shows improvements in interpretability and precision. Also, the ssCIA shows higher angle values in most of the cases, which indicates that the ssCIA shows improvements in the interpretability and precision in most cases.

Table 1.

Simulation results of sCIA and ssCIA

|

in |

in |

||||||||

|---|---|---|---|---|---|---|---|---|---|

| Sens | Spec | MCC | Angle | Sens | Spec | MCC | Angle | ||

| Scen1 | CIA | – | – | – | – | – | – | ||

| sCIA | |||||||||

| ssCIA | |||||||||

| Scen2 | CIA | – | – | – | – | – | – | ||

| sCIA | |||||||||

| ssCIA | |||||||||

| Scen3 | CIA | – | – | – | – | – | – | ||

| sCIA | |||||||||

| ssCIA | |||||||||

| Scen4 | CIA | – | – | – | – | – | – | ||

| sCIA | |||||||||

| ssCIA | |||||||||

| Scen5 | CIA | – | – | – | – | – | – | ||

| sCIA | |||||||||

| ssCIA | |||||||||

| Scen6-1 | CIA | – | – | – | – | – | – | ||

| sCIA | |||||||||

| ssCIA | |||||||||

| Scen6-2 | CIA | – | – | – | – | – | – | ||

| sCIA | |||||||||

| ssCIA | |||||||||

| Scen7-1 | CIA | – | – | – | – | – | – | ||

| sCIA | |||||||||

| ssCIA | |||||||||

| Scen7-2 | CIA | – | – | – | – | – | – | ||

| sCIA | |||||||||

| ssCIA | |||||||||

Note: As a measure for the performance of proposed algorithms, sensitivity (Sens), specificity (Spec), Mattews correlation coefficient (MCC), and the angle between estimated loadings and true loadings are shown. Numbers inside the parenthesis are Monte Carlo standard deviation.

We evaluate the performance of the ssCIA compared to the CIA and the sCIA when the true underlying loadings do not agree with the graph structure assumed by comparing results of the first, second and sixth scenarios to the rest. Scenario 1, 2 and 6 are favorable designs to the ssCIA, because the true loading vectors in those simulation settings agree with the network information incorporated in the model. The ssCIA outperforms the CIA and the sCIA in those favorable scenarios. The estimators from ssCIA have higher values in sensitivity, specificity and MCC in general compared to estimators from the sCIA. In the first and second scenario, the ssCIA finds all effective variables while it keep showing high specificity. Also, measured angle values of the ssCIA are higher in every cases compared to that of the sCIA. Based on the above observations, we conclude that our ssCIA shows better feature selection performance compared to the CIA and the sCIA with a help of graph information if incorporated graph information agrees with true loading vectors.

In the other settings such as scenario 3, 4, 5 and 7, the penalty function has nonzero value when the input is the true loading vector. Thus in those simulation settings, the ssCIA cannot enjoy the benefit of incorporated prior information. However, the ssCIA still shows comparable values in all measures even though those designs are not favorable to them. The difference of four measures between the ssCIA and the CIA/sCIA are relatively small. We conclude that still our ssCIA shows competitive performance, especially in specificity, despite of the discordance between some elements of the true loading vectors and incorporated graph information.

From the comparison between results of scenario 1, 3 and scenario 2, 4, we compare the performance of our penalized CIA compared to the classical CIA when the true loading vectors become more sparse. The classical CIA performs better if the true loading vectors are less sparse, which is expected result. For our proposed methods, there is a trade off between sensitivity and specificity. Our algorithms lose some sensitivity but get a lot more specificity so that MCC and angle are improved. This observation implies that our proposed methods shows better performance when the true loading is sparse that fits with our original purpose.

We also generate averaged ROC curves and corresponding approximated AUC values. Those results are presented in Section B of the Supplementary Material. Figure 1 and Table 1 in the Supplementary Material confirms the above simulation results.

5 Real data analysis

5.1 NCI-60 cell line data

The NCI-60 is a panel of 60 diverse human cancer cell lines used by the Developmental Therapeutics Program (DTP) of the U.S. National Cancer Institute (NCI) to screen over 100 000 chemical compounds and natural products. It consists of 10 kinds of cancerous cell lines, leukemia, lymphomas, melanomas, ovarian, renal, breast, prostate, colon, lung and CNS origin. There are various -omics datasets available for those cell lines including gene expression data from various platforms, protein abundance data and methylation data. We use the gene expression data used in the CIA application (Culhane et al., 2003), which contains 1517 probes that have the minimum change in gene expressions greater than 500 units across all cell lines. For the other data, we use the protein abundance data generated by Nishizuka et al. (2003) using the high-density RP lysate arrays (Paweletz et al., 2001), which can be downloaded from CellMiner (http://discover.nci.nih.gov/cellminer/) web application for the NCI-60 data (Reinhold et al., 2012). In this study, abundance levels of 162 proteins are available for the NCI-60 cell lines. After matching labels of cell lines of two datasets, 57 of 60 matched cell line data were used for the analysis. For the weight matrices in the CIA, sCIA and the ssCIA, we use the identity matrix for and the column sum divided by total sum of the absolute values of the data each as diagonal values of the weight matrix following Culhane et al. (2003). Network information incorporated in the ssCIA method are collected from KEGG pathway database (Kanehisa et al., 2017).

5.2 Analysis results

To compare the performance of proposed algorithms with the classical CIA, the number of nonzero elements in the first two estimated loading vectors and the cumulative percentage of explained variance of data by the estimated loading vectors are calculated in Table 2. Cumulated percentage of the explained variability is the ratio of sum of estimated co-inertia to the total co-inertia. Since the total co-inertia between and does not change (Dray et al., 2003), we can use the cumulated percentage of explained variability as a measure to compare the performance of the CIA, sCIA and the ssCIA how much they explain the co-variability between two datasets. We can observe that our penalized algorithms select much fewer elements in loading vectors but still explains almost same portion of the data variability explained by the CIA. The ssCIA collects more variables compared to the sCIA, but it explains more variability than the sCIA, and much fewer variables compared to the CIA to explain the similar percentage of the data variability explained by the CIA.

Table 2.

Analysis results of CIA, sCIA and ssCIA for the NCI60 cell line data

| Result of the CIA, sCIA and ssCIA | ||||||

|---|---|---|---|---|---|---|

| Number of nonzero elements |

Cumul % explained by estimated loadings |

|||||

| 1st loading | 1st, 2nd loadings | |||||

| CIA | 1517 | 1517 | 162 | 162 | 0.359 | 0.641 |

| sCIA | 1038 | 573 | 92 | 153 | 0.336 | 0.598 |

| ssCIA | 1206 | 1036 | 113 | 132 | 0.348 | 0.624 |

Note: First four columns are number of nonzero elements in the estimated first two co-inertia loadings for each and , next two columns are cumulated percentage of explained variability of the data.

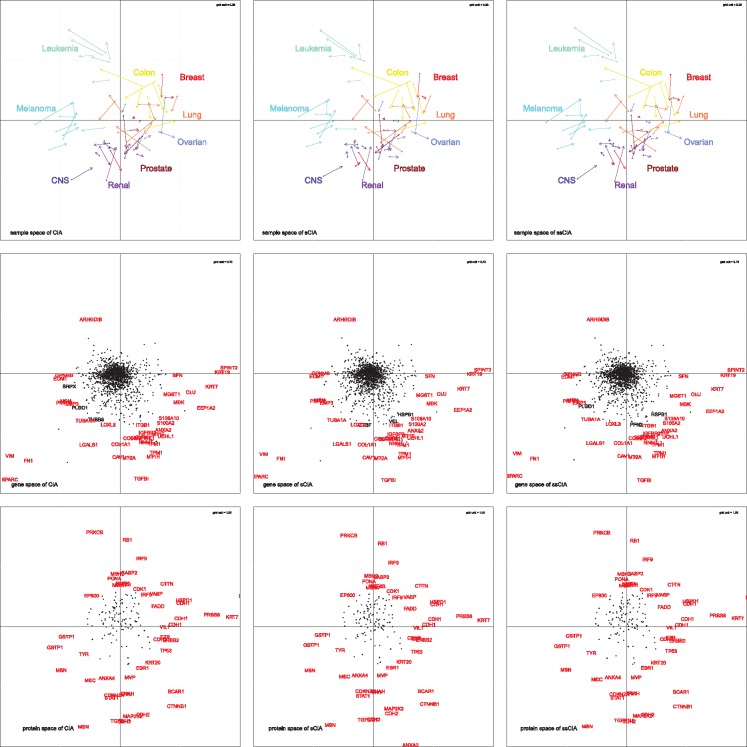

Following Culhane et al. (2003), Fagan et al. (2007) and Meng et al. (2014), we generate three figures for each method and shown in Figure 1. The figures in the first row show the sample space of the gene expression and protein abundance data. The arrow base pointed as a dot is the projection of the cell line from the gene space while the tip of the arrow is the projected coordinate of the cell line from the protein space. The length of the arrow indicates the degree of the concordance between two datasets, the shorter arrow stands for the higher consensus between two datasets. For example, the arrows of the CNS cell line data exhibits relatively short-length compared to others, which suggests that consensus between the gene expression and the protein abundance data of the CNS cell line is higher compared to that of leukemia, melanoma, or lung cell lines. This plot also shows the global pattern of the data, the distance between the tip and the origin tells us which cell line contributes more and have higher weights on the specific co-inertia axis. Gene space (arrow base) of the melanoma cell lines is projected further than its protein space in the direction of the first co-inertia axis, which suggests that the gene expression data contributes more to the trend of the first axis compared to the protein abundance data. Similarly, the protein abundance data of the leukemia cell lines may contributes more to the first axis compared to the gene expression data. Clustering pattern is another information that we can get from the figures in the first row. First we observed is that samples are clustered by their cell lines, especially, samples from leukemia, melanoma and colon cell lines are well clustered compared to others. We notice that both datasets from lung carcinomas and breast cancer are more dispersed than others, which may suggest that datasets for those disease is more heterogeneous than others. In second, we can observe that cell lines are separated by their characteristics. Cell lines clustered to the right of the second axis are colon, breast, lung, ovarian, prostate and renal cell lines. All those cell lines are from epithelial cell tissues (Marshall et al., 2017) while lueukemia, melanoma and CNS are not from that origin of tissues.

Fig. 1.

NCI60 Cell Line data analysis result from the CIA, the sCIA and the ssCIA, left to right respectively at each row. The first row shows the sample space of analysis result. The starting point of an arrow is a normalized score of a sample in the gene data, while the endpoint of arrow is a normalized score of a sample in the protein data. The figures second row show the distribution of samples in the gene space by the first and second scores of estimated CIA axis for the gene space, while the figures in the third row show the distribution of samples in the protein space by the first and second score of estimated CIA axis. The value of d in the upper right part of each figure is the unit length of the grid in each figure

The plots in the second and the third row in Figure 1 show the gene and protein projections in their respective spaces. Labeled genes in each plot are top 50 genes that are the most extreme from the ends of each co-inertia axis and red color-labeled ones are commonly chosen in all three methods (Culhane et al., 2003). We observe that the sCIA and the ssCIA select similar sets of significant genes and proteins while their estimators are sparse compared to estimators of CIA. This suggests that our methods perform well in feature selection, important features are selected while less important features are neglected. Also, we observe that there is a group of genes and proteins projected onto the same direction in those plots across the methods and spaces. For example, the gene TGFBI is located in the bottom-end of the second axis in all figures in the second and third rows. Another observation we make is that the mesenchymal biomarker VIM is located in the left-end of the first axis while epithelial markers KRT7 and KRT19 are located at the right-end of the first axis of the gene space figures. Also, another epithelial maker CDH1 has high positive weight on the first axis of the protein space figures. These observations agree with the results from the figures in the first row. Those findings suggest that our penalized CIA methods give us biologically meaningful results.

We also conduct the pathway enrichment analysis for each selected genes and proteins in each method using ToppGene Suite (Chen et al., 2009). In general, both sCIA and ssCIA finds biologically meaningful results, while the ssCIA can detect more enriched pathways compared to the sCIA. The most highly enriched diseases in the selected genes from the sCIA and the ssCIA are neoplasma, tumor progression, glioma, non-small cell lung carcinoma, ovarian carcinoma, colon carcinoma and a number of other cancers such as leukemia, renal cell carcinoma and malignant tumor of colon. All those listed diseases are included in the list of diseases selected for the NCI60 cell line data. Pathways in cancer (Bonferroni adjusted q-value for sCIA: , ssCIA: ), non-small cell lung cancer (Bonferroni adjusted q-value for sCIA: , ssCIA: ) and many other cancers related pathways are enriched among the selected genes of results from our methods. We also find GO terms that are significantly enriched in the selected genes and proteins. In both the sCIA and the ssCIA results, highly enriched GO term include RNA binding (ID GO: 0003723), enzyme binding (ID GO: 0019899) and structural constituent of the ribosome (ID GO: 0003735) (Ross et al., 2000). GO terms related tissue development, metastasis are expected to be detected in the enrichment analysis of the first estimates since the first axis separates epithelial cancers. And we find that cell adhesion (GO: 0007155), extracellular matrix structural constituent (GO: 0005201) are enriched from both results of the sCIA and the ssCIA. There exists some other GO term such as structural molecule activity (GO: 0005198), structural constituent of cytoskeleton (GO: 0005200) that are related to cell structure but only enriched in the results of the ssCIA. From this, we confirm that the ssCIA enjoys the benefits of network information incorporated via network penalty.

6 Discussion

We proposed two sparse CIA methods that impose penalties on the CIA loading vectors. We use the l1 penalty and the network penalty that utilizes the prior knowledge about relationships among variables. Our approach is useful when data is high dimensional, since estimated loading vectors from our model are sparse while explaining a similar amount of variation between two datasets as the CIA, particularly when the ssCIA is used and our methods are computationally efficient and scalable to analysis of high-dimensional -omics data. Regarding the scalability, computational complexity of our algorithms are discussed in Section B of the Supplementary Material. Numerical studies prove that our proposed penalized CIA methods achieve close performance compared to the CIA, with small number of selected variables. For the future research, we plan to extend the methods to multiple co-inertia analysis (MCIA) for analysis of more than two datasets.

Funding

This work is partly supported by NIH grants P30CA016520, R21NS091630 and R01GM124111. The content is the responsibility of the authors and does not necessarily represent the views of NIH.

Conflict of Interest: none declared.

Supplementary Material

References

- Byrnes A.E. et al. (2013) The value of statistical or bioinformatics annotation for rare variant association with quantitative trait. Genet. Epidemiol., 37, 666–674. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen J. et al. (2009) ToppGene Suite for gene list enrichment analysis and candidate gene prioritization. Nucleic Acids Res., 37, W305–W311. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen J. et al. (2013) Structure-constrained sparse canonical correlation analysis with an application to microbiome data analysis. Biostatistics, 14, 244–258. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chun H., Keleş S. (2010) Sparse partial least squares regression for simultaneous dimension reduction and variable selection. J. R. Stat. Soc. Ser. B (Stat. Methodol.), 72, 3–25. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chung D. et al. (2012) SPLS: sparse partial least squares (SPLS) regression and classification. R Package Version, 2, 1–1. [Google Scholar]

- Culhane A.C. et al. (2003) Cross-platform comparison and visualisation of gene expression data using co-inertia analysis. BMC Bioinformatics, 4, 59.. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dolédec S., Chessel D. (1994) Co-inertia analysis: an alternative method for studying species-environment relationships. Freshwater Biol., 31, 277–294. [Google Scholar]

- Dray S. et al. (2003) Co-inertia analysis and the linking of ecological data tables. Ecology, 84, 3078–3089. [Google Scholar]

- Fagan A. et al. (2007) A multivariate analysis approach to the integration of proteomic and gene expression data. Proteomics, 7, 2162–2171. [DOI] [PubMed] [Google Scholar]

- Hardoon D.R., Shawe-Taylor J. (2011) Sparse canonical correlation analysis. Mach. Learn., 83, 331–353. [Google Scholar]

- He Z. et al. (2017) Unified sequence-based association tests allowing for multiple functional annotations and meta-analysis of noncoding variation in metabochip data. Am. J. Hum. Genet., 101, 340–352. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hotelling H. (1936) Relations between two sets of variates. Biometrika, 28, 321–377. [Google Scholar]

- Kanehisa M. et al. (2017) KEGG: new perspectives on genomes, pathways, diseases and drugs. Nucleic Acids Res., 45, D353–D361. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lê Cao K.-A. et al. (2009) Sparse canonical methods for biological data integration: application to a cross-platform study. BMC Bioinformatics, 10, 34.. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee D. et al. (2011) Sparse partial least-squares regression and its applications to high-throughput data analysis. Chemometr. Intell. Lab. Syst., 109, 1–8. [Google Scholar]

- Li C., Li H. (2008) Network-constrained regularization and variable selection for analysis of genomic data. Bioinformatics, 24, 1175–1182. [DOI] [PubMed] [Google Scholar]

- Lykou A., Whittaker J. (2010) Sparse CCA using a lasso with positivity constraints. Comput. Stat. Data Anal., 54, 3144–3157. [Google Scholar]

- Marshall E.A. et al. (2017) Small non-coding rna transcriptome of the nci-60 cell line panel. Sci. Data, 4, 170157.. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Meng C. et al. (2014) A multivariate approach to the integration of multi-omics datasets. BMC Bioinformatics, 15, 162.. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Meng C. et al. (2016) Dimension reduction techniques for the integrative analysis of multi-omics data. Brief. Bioinf., 17, 628–641. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nishizuka S. et al. (2003) Proteomic profiling of the NCI-60 cancer cell lines using new high-density reverse-phase lysate microarrays. Proc. Natl. Acad. Sci., 100, 14229–14234. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Parkhomenko E. et al. (2009) Sparse canonical correlation analysis with application to genomic data integration. Stat. Appl. Genet. Mol. Biol., 8, 1–34. [DOI] [PubMed] [Google Scholar]

- Paweletz C.P. et al. (2001) Reverse phase protein microarrays which capture disease progression show activation of pro-survival pathways at the cancer invasion front. Oncogene, 20, 1981.. [DOI] [PubMed] [Google Scholar]

- Reinhold W.C. et al. (2012) CellMiner: a web-based suite of genomic and pharmacologic tools to explore transcript and drug patterns in the nci-60 cell line set. Cancer Res., 72, 3499–3511. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ross D.T. et al. (2000) Systematic variation in gene expression patterns in human cancer cell lines. Nat. Genet., 24, 227.. [DOI] [PubMed] [Google Scholar]

- Safo S.E. et al. (2018) Sparse generalized eigenvalue problem with application to canonical correlation analysis for integrative analysis of methylation and gene expression data. Biometrics, doi:10.1111/biom.12886. [DOI] [PubMed] [Google Scholar]

- Tenenhaus M. et al. (2017) Regularized generalized canonical correlation analysis: a framework for sequential multiblock component methods. Psychometrika, 82, 737–777. [DOI] [PubMed] [Google Scholar]

- Thioulouse J. (2011) Simultaneous analysis of a sequence of paired ecological tables: a comparison of several methods. Ann. Appl. Stat., 5, 2300–2325. [Google Scholar]

- Waaijenborg S. et al. (2008) Quantifying the association between gene expressions and DNA-markers by penalized canonical correlation analysis. Stat. Appl. Genet. Mol. Biol., 7, Article 3. [DOI] [PubMed] [Google Scholar]

- Witten D.M. et al. (2009) A penalized matrix decomposition, with applications to sparse principal components and canonical correlation analysis. Biostatistics, 10, 515–534. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wold H. (1966) Estimation of principal components and related models by iterative least squares In: Multivariate Analysis. Academic Press, New York: pp. 391–420. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.