Abstract

Background:

Biased patterns of attention are implicated as key mechanisms across many forms of psychopathology and have given rise to automated mechanistic interventions designed to modify such attentional preferences. However, progress is substantially hindered by limitations in widely-used methods to quantify attention bias leading to imprecision of measurement.

Methods:

In a clinically anxious sample (n=70), we applied a well-validated form of computational modeling (Drift-Diffusion Model; DDM) to trial-level reaction time data from a two-choice “dot-probe task” (the dominant paradigm used in hundreds of attention bias studies to date) in order to model distinct components of task performance.

Results:

While DDM-derived attentional bias indices exhibited convergent validity with previous approaches (e.g., conventional bias scores, eyetracking), our novel analytic approach yielded substantially improved split-half reliability, modestly improved test-retest reliability, and revealed novel mechanistic insights regarding neural substrates of attentional bias and the impact of an automated attention retraining procedure.

Conclusions:

Computational modeling of attentional bias task data may represent a new way forward to improve precision.

[clinicaltrials.gov: NCT02303691, “Attention Bias Modification for Transdiagnostic Anxiety”]

Keywords: drift-diffusion model, attentional bias, anxiety, attention bias modification, computational psychiatry, computational modeling

Introduction

Computational psychiatry aims to apply computational methods to observational data in psychiatric populations in the hopes of more precisely quantifying underlying mental processes, which may then track with observations at other levels of analysis (e.g., clinical symptoms, neural substrates)(1-3). Many psychiatric conditions are characterized by alterations in attention, a fundamental building block of cognition that dictates which stimuli are preferentially selected from within a dynamic environment and promoted for further processing (4). A prominent example comes from clinical anxiety, a prevalent and disabling condition (5,6), in which individuals demonstrate selective attentional preference (henceforth, attention bias) in response to threat-relevant stimuli (7). Attention Bias Modification (ABM) procedures, a fully automated, computer-based, mechanistic intervention designed to target vigilance to threat, have shown some promise in reducing emotional vulnerability and/or alleviating clinical anxiety symptoms (8,9), supporting the theory that attention bias may play a causal role in anxiety. Though there are well-replicated behavioral findings regarding both the existence of attention bias across a wide range of anxious phenotypes (7) and the ability of ABM procedures to effectively modify attention (8,9), this literature has suffered from widely acknowledged psychometric issues (10-12), including: a) the predominant use of tasks and analysis procedures that conflate numerous components of task performance; and b) a lack of sufficient test-retest stability to facilitate addressing key, clinically relevant questions related to individual differences (e.g., relationships between change in mechanism and change in symptoms; personalized treatment outcome prediction).

One form of computational modeling, Drift-Diffusion Modeling [DDM; (13)], has been strongly validated and widely used in both simulated and empirical datasets as a method of dissecting and modeling diverse components of trial-level behavioral data in the context of two-choice decision tasks (14). The dominant task paradigm used to quantify attention bias in hundreds of anxiety studies to date (7), the dot-probe task (15), is a two-choice decision task which is appropriate for application of DDM, though DDM has not been previously applied within the dot-probe literature, and rarely (16-22) has been applied in affective psychopathology research. Briefly, DDM utilizes each individual’s distribution of reaction times and errors across task trials to quantify a set of parameters that together produce the optimal fit to the individual’s actual distribution of responses. Most relevant to the study of attentional bias is the capacity to separate out two parameters, “drift” and “extradecisional time,” effectively enabling the response process to be divided into: 1) a measure of performance related to the response decision itself (represented by the “drift” parameter), which, in the case of the dot-probe, is an incidental decision task which is irrelevant to affective processing (e.g., does a probe cue display as an “E” or an “F”), and 2) the “extradecisional” components that occur before and after a probe decision is made. Though DDM analysis often focuses on decision-related parameters (e.g., drift, decisional threshold), in the case of the dot-probe task, it is this latter, extradecisional component that encompasses the attentional processes of theoretical interest (as well as more extraneous components such as response preparation and execution—e.g., pressing the correct button). 
More precisely, the extradecisional component includes the time an individual takes to orient attention to the probe location (which must occur before a decision about the probe can commence), which can then be compared for trials in which the probe location follows either an affective (e.g., threat-related) or neutral cue, providing an index of attentional preference. By separately modeling and thereby removing some irrelevant features of task performance (parameters related to the incidental probe decision), DDM could produce a purer behavioral measure of the attentional patterns of interest, and might yield more precise and/or psychometrically sound estimates, enabling potential reanalysis of response data from many hundreds of studies that have previously utilized the dot-probe task in the study of psychopathology.

To test this thesis, we applied DDM to dot-probe behavioral data collected from a transdiagnostic clinically anxious sample in the context of a mechanistic intervention study, in which participants were randomized to complete ABM procedures intended to modify attentional patterns or a sham control condition. We aimed to: 1) explore the viability of DDM to achieve good model fits and/or superior reliability when applied to response data from a fairly typical dot-probe paradigm; 2) assess convergence regarding how novel attentional bias scores derived via DDM relate to other variables, including conventional dot-probe attention bias scores, eye movements, and neural correlates; and 3) assess whether use of DDM enabled more sensitive detection of mechanistic shifts in attention over the course of the RCT that were not observable when applying conventional analyses to task data.

Methods

Full methods and primary clinical findings from the randomized controlled trial (clinicaltrials.gov: NCT02303691) have been reported previously (23). In brief, seventy unmedicated patients reporting clinically elevated levels of trait anxiety and associated clinician-rated disability were randomized to receive active ABM (n=49) or a sham control variant (n=21). See Table S1 in Supplement for sample characteristics.

Dot-probe task.

The dot-probe assessment task was completed at baseline, at acute post-treatment, and at 1-month follow-up. At baseline, ten idiographic threat words were selected collaboratively by the participant and clinical interviewer and idiographically matched (on familiarity and word length) to 10 neutral words; these idiographic lists were supplemented by 20 threat words and 20 neutral words from a normative corpus used previously in ABM research (e.g., 24). Word pairs (80% threat-neutral; 20% neutral-neutral) were presented vertically for either 500ms (50% of trials) or 1500ms (50% of trials), followed by a probe (‘E’ or ‘F’) in either the upper or lower word location. Participants responded via button press to indicate the probe letter displayed. A total of 300 trials were given. The present analyses focused on 2 conditions of shorter (500ms) duration trials, comprising 60 trials each, which were randomly interspersed over the course of the experiment: “congruent” trials (in which the probe appeared in the previous location of the threat word) and “incongruent” trials (in which the probe replaced the neutral word). Given that the ABM intervention specifically trained attention using 500ms stimulus presentations (see details below), we specifically hypothesized that intervention effects would be evident on these trials. The remaining task trials, comprising congruent and incongruent trials of longer (1500ms) duration, and neutral-neutral word pairs of both short and long duration, were exploratory and not included in the present analyses. To provide a direct measure of overt eye movements, an RK-768 eyetracker concurrently measured eye gaze during the task (see Supplement for details).
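The trial counts reported above can be checked with a few lines of arithmetic. The sketch below uses only the proportions given in the text; the even split of probe locations between the threat and neutral word is an assumption, made because it reproduces the stated 60 trials per condition.

```python
# Trial counts implied by the task description (figures taken from the text).
TOTAL_TRIALS = 300
P_THREAT_NEUTRAL = 0.80   # the remaining 20% are neutral-neutral pairs
P_SHORT = 0.50            # 500 ms presentations; the remaining 50% are 1500 ms

threat_neutral = round(TOTAL_TRIALS * P_THREAT_NEUTRAL)
short_threat_neutral = round(threat_neutral * P_SHORT)

# Assumption: the probe replaces the threat word on half of these trials.
congruent = short_threat_neutral // 2
incongruent = short_threat_neutral - congruent

print(congruent, incongruent)
```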

In a separate fMRI session prior to the onset of treatment, the same dot-probe task was administered, with minor modifications (e.g., only 500ms duration word pairs were included; randomly jittered intertrial intervals of 0-8s). BOLD data were acquired simultaneously on a 3 Tesla Siemens Trio scanner (TR=2000; TE=28; flip angle=73°; 38 slices; FOV=200×200; 3.125×3.125×3.2mm voxels). Standard preprocessing steps were applied in AFNI. Of the 70 randomized participants, 68 had usable data (n=1 excluded for excessive motion; n=1 did not complete scan). Whole-brain analyses were conducted to identify regions robustly linked to DDM-derived attentional bias scores across individuals [voxel threshold: p<.005; map-wise p<.05 via 3dClustSim with AFNI’s spatial autocorrelation function, which provides accurate type I error control under our conditions (25)]. See Supplement for full details of fMRI data acquisition and analysis.

DDM analysis.

Analyses were completed using fast-dm software (26; see Supplement for software commands used and for preliminary model comparison steps that led to our adopted approach). A distribution of reaction times and correct/incorrect values of each trial response was compiled for each individual, at each assessment point, for each of the 2 trial types described above. Each of these discrete distributions was then modeled using the Kolmogorov-Smirnov method to search through the parameter space and identify the optimal combination of the following parameters: extradecisional time (t0), drift (v), differences in speed of response execution for correct/incorrect responses (d), decisional threshold separation (a), variability in t0, variability in v, and inter-trial variability in starting point (sz). Consistent with recommendations when applying DDM to correct/incorrect response distributions with a similar number of trials (14), a priori decisional bias (zr) was set to 0.5 (representing no starting bias towards correct/incorrect responding) and percentage of contaminants (p) was set to 0. Simulations (see Supplement) verified that the DDM methods could accurately identify model parameter shifts in extradecisional time, as distinct from shifts in other relevant parameters (e.g., drift rates), for datasets with the current number of trials per condition and similar RT and error rate distributions.
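To make the roles of the main parameters concrete, the following is a minimal simulation sketch of a two-boundary diffusion process. It is not the fast-dm estimation procedure and is not the simulation code reported in the Supplement. It omits the variability parameters and the response-execution difference (d), fixes the diffusion constant at 1, and uses hypothetical parameter values chosen only for illustration. It shows that a shift in extradecisional time (t0) moves every reaction time by a constant amount without touching the decision process itself.

```python
import random
from statistics import mean


def simulate_trial(v, a, t0, zr=0.5, dt=0.001, rng=random):
    """Simulate one two-boundary diffusion trial.

    Returns (reaction_time_seconds, correct), where reaching the upper
    boundary (a) counts as a correct response and the lower boundary (0)
    as an error.
    """
    x = zr * a            # starting point; zr=0.5 means no starting bias
    t = 0.0
    sd = dt ** 0.5        # within-trial noise, diffusion constant fixed at 1
    while 0.0 < x < a:
        x += v * dt + sd * rng.gauss(0.0, 1.0)
        t += dt
    return t0 + t, x >= a  # extradecisional time is added to decision time


def simulate_condition(n_trials, v, a, t0, seed=0):
    """Simulate n_trials with a dedicated, seeded random generator."""
    rng = random.Random(seed)
    return [simulate_trial(v, a, t0, rng=rng) for _ in range(n_trials)]


# Hypothetical parameter values (not estimates from the study).
V, A = 2.0, 1.0
T0_CONGRUENT, T0_INCONGRUENT = 0.30, 0.33

# Same seed: identical decision processes, so only t0 differs.
congruent = simulate_condition(60, V, A, T0_CONGRUENT, seed=1)
incongruent = simulate_condition(60, V, A, T0_INCONGRUENT, seed=1)

rt_shift = mean(rt for rt, _ in incongruent) - mean(rt for rt, _ in congruent)
print(f"mean RT shift: {rt_shift:.3f} s")
```

Because both conditions reuse the same random seed, the printed shift equals the 0.03 s difference in t0. In real data the decision process also varies between conditions, which is why the parameters have to be estimated jointly from the full response distribution.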

Bias scores.

The extradecisional time parameters from each of the two conditions were used to generate an attentional bias score for each participant/timepoint as follows: extradecisional_bias = t0_incongruent − t0_congruent. Likewise, conventional attention bias scores were generated from mean RT values from each trial type, after applying data handling/cleaning recommendations to optimize reliability (10). Across all bias scores, larger scores indicate greater degree of vigilance to threat.
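The two bias scores can be sketched as simple difference scores. The function names and input values below are hypothetical. The conventional score is assumed to use the same incongruent-minus-congruent direction, which is consistent with larger scores indicating greater vigilance to threat. The data cleaning steps recommended in (10) are not reproduced here.

```python
from statistics import mean


def extradecisional_bias(t0_incongruent, t0_congruent):
    """DDM-derived bias: difference in fitted extradecisional time (seconds)."""
    return t0_incongruent - t0_congruent


def conventional_bias(rts_incongruent, rts_congruent):
    """Conventional bias: difference in mean reaction time (seconds).

    Assumes the reaction times have already been cleaned.
    """
    return mean(rts_incongruent) - mean(rts_congruent)


# Hypothetical values for one participant at one timepoint.
ddm_score = extradecisional_bias(t0_incongruent=0.42, t0_congruent=0.40)
rt_score = conventional_bias([0.61, 0.66, 0.59], [0.58, 0.62, 0.57])

print(f"extradecisional bias: {ddm_score:.3f} s")
print(f"conventional bias:    {rt_score:.3f} s")
```

Positive values on either score indicate slower responding when the probe replaces the neutral word, that is, attention held at the threat location.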

ABM and sham interventions.

The ABM and sham conditions were modeled after prior studies (e.g., 24) and have been described in a previous report which focused on fMRI indices, collected during a distinct task, predicting clinical outcomes (see (23) and Supplement for details). Briefly, participants in both groups completed 8 twice-weekly sessions in the laboratory, using an abbreviated version of the dot-probe task as described above, with a few modifications. In all trials during training, word pairs were presented for 500ms. The only distinction between the ABM and control conditions was in the relationship between the probe location and the threat word in each word pair. In ABM, for 100% of threat-neutral trials (80% of all trials), the probe replaced the neutral word, thereby shaping attention away from threatening cues through practice. In the sham condition, the distribution of trials was the same as in the assessment dot-probe, with the probe replacing either the threat or neutral word with equal likelihood.

Results

Model fit.

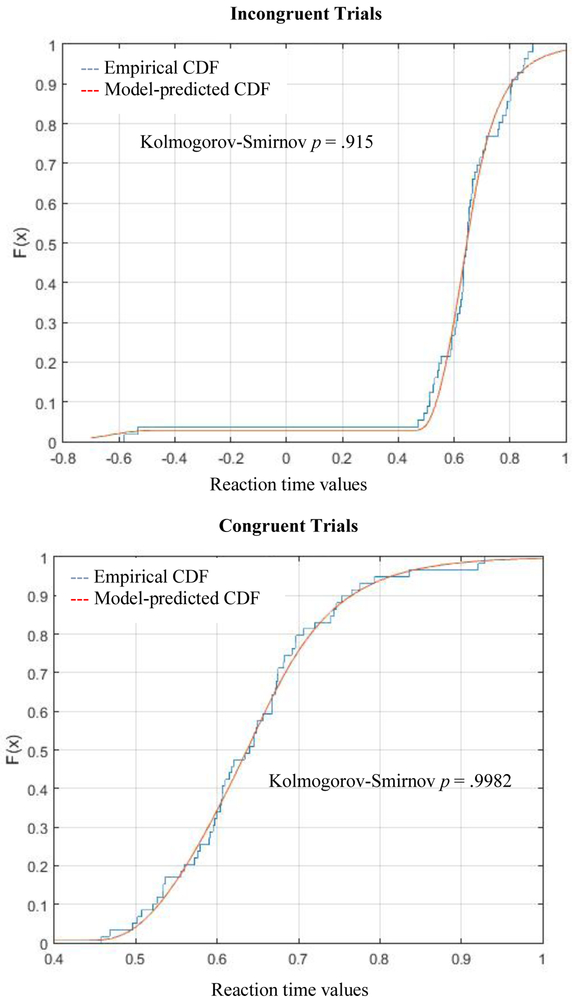

DDM models were a good fit for every participant’s datasets (for each assessment point and trial type) according to Kolmogorov-Smirnov tests, which assess the probability that empirical and predicted data distributions differ (all p’s≥.59; mean p=.983; SD=.017). Model fits did not differ by trial type, timepoint, or group (ABM vs. control) (p’s≥.18). Figure 1 illustrates the model fit for the empirical data distribution in a representative subject. See Table S3 in Supplement for descriptive statistics for all model parameters.

Figure 1.

Representative empirical and model-predicted cumulative distribution functions (CDFs) of trial-level behavioral performance on the dot-probe task, constructed from a single individual at baseline. For graphical purposes only, error trials (present in the top panel only) are represented as negative values reflecting the inverse of observed reaction time.
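The Kolmogorov-Smirnov approach compares two cumulative distribution functions through their largest vertical gap. As an illustration only, the sketch below computes the two-sample form of that statistic for two lists of reaction times. fast-dm compares the empirical distribution against the model-predicted distribution, and its implementation may differ in detail. The reaction times shown are hypothetical.

```python
def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: max |ECDF_a(x) - ECDF_b(x)|."""
    a, b = sorted(sample_a), sorted(sample_b)
    i = j = 0
    largest_gap = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Advance both empirical CDFs past every observation <= x.
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        largest_gap = max(largest_gap, abs(i / len(a) - j / len(b)))
    return largest_gap


# Hypothetical observed and model-generated reaction times (seconds).
observed = [0.52, 0.55, 0.58, 0.61, 0.67, 0.74]
predicted = [0.51, 0.56, 0.57, 0.63, 0.66, 0.75]

print(f"K-S statistic: {ks_statistic(observed, predicted):.3f}")
```

A statistic near 0 means the two distributions are nearly indistinguishable, which corresponds to the high p values reported for model fit.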

Reliability.

Test-retest reliability of attentional bias scores was examined across baseline, post-treatment, and 1-month follow-up assessments in the sham control group only, as test-retest stability would not be expected among individuals who received active ABM specifically intended to alter attention bias. By conventional psychometric standards, Intraclass Correlation Coefficient (ICC) values ≤0 indicate no reliability, while values that are statistically significant (relative to 0) but <.5 indicate low reliability (27). Extradecisional_bias scores were significantly reliable across the three sessions (baseline, post-treatment, 1-month follow-up) spanning a roughly 2-month period, though the reliability coefficient was low (ICC=0.25; p=.037). Reliability was particularly strong across the first two (baseline and post-training) assessments (r=.63; p=.003). By contrast, conventional bias scores showed no reliability (ICC=.001; p=.48; max pairwise r=.20, ns), even when using only trials with probes appearing on the bottom of the screen, as recommended previously (10) to improve dot-probe reliability (ICC= −0.14; p=.82; max pairwise r=.27, ns).

To assess split-half reliability, DDM models were re-calculated allowing the t0 parameter to vary as a function of odd vs. even trials (see Supplement for alternative split-half reliability results when allowing additional model parameters to vary as a function of odd vs. even trials). Extradecisional bias scores were derived separately from odd vs. even trials, correlated with one another, and a Spearman-Brown prediction formula was applied to estimate reliability. Split-half reliability was adequate at each assessment point (baseline: Spearman-Brown reliability=0.85, r=0.732, p<.001; post-treatment: reliability=0.52, r=0.355, p<.001; follow-up: reliability=0.83, r=0.716, p<.001). By contrast, the split-half reliability for conventional bias scores was uniformly unacceptable (non-significant and near or below 0; r’s ranging from −0.185 to 0.187, p’s≥.15), both when analyzing all relevant trials and for dot-on-bottom trials only.
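The Spearman-Brown step can be reproduced directly from the reported odd/even correlations. The sketch below applies the standard two-half prediction formula, reliability = 2r / (1 + r), to the three r values given above. The function name is ours.

```python
def spearman_brown(r_half):
    """Predicted full-length reliability from a split-half correlation."""
    return 2 * r_half / (1 + r_half)


# Odd/even correlations of extradecisional bias scores, as reported in the text.
reported_r = {"baseline": 0.732, "post-treatment": 0.355, "follow-up": 0.716}

for timepoint, r in reported_r.items():
    print(f"{timepoint}: r={r:.3f} -> reliability={spearman_brown(r):.2f}")
```

The results round to 0.85, 0.52, and 0.83, matching the reliabilities reported in the text.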

Correlates of extradecisional time.

Age.

For both trial types at baseline, extradecisional time was correlated with participant age (r’s≥.44; p’s<.001), while drift rates were not (∣r∣’s<.09; p’s>.45). This replicates a very well-established pattern within the DDM literature when applied to other two-choice tasks (28), suggesting the parameters derived from the dot-probe task in this sample conformed to established expectations.

Eye movements.

For both trial types at baseline, extradecisional time was moderately correlated with the percentage of trials in which overt eye fixations on one or both of the words in the word pair were registered by the eyetracker (r’s≥.35; p’s<.004), while drift rates were not related to eye fixations (∣r∣’s<.05, p’s>.71) (see Supplement for eyetracking details). This further supports the DDM’s ability to accurately separate extradecisional components of task performance, which include overt attentional shifts in eye gaze (which would be expected to increase extradecisional time). However, unlike eye movement data, the extradecisional time component preserves the ability to capture important covert extradecisional processes [e.g., covert/mental shifts in attention; (29)] not reflected in overt eye movements.

Conventional bias scores.

Extradecisional_bias scores were moderately correlated with conventional bias scores at two out of the three assessment points (baseline: r=.34; p=.005; post-treatment: r=.20; p=.113; follow-up: r=.27; p=.039), suggesting the novel DDM-derived indices track with conventional methods, but are not fully collinear with them.

Neural correlates.

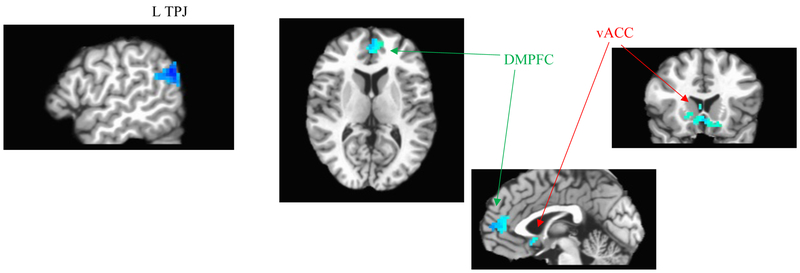

In whole-brain analyses, extradecisional_bias scores from the baseline assessment were negatively correlated with fMRI contrast values (incongruent-minus-congruent trial responses) across several regions implicated in emotional and attentional regulation of salient stimuli, including left temporoparietal junction, ventral anterior cingulate, and dorsomedial PFC (Figure 3). This pattern may implicate decreased regulatory control as a neural substrate linked to increased vigilance to threat (indexed by extradecisional_bias) across individuals (30,31). When using conventional attentional bias scores in an identical whole-brain search, no significant clusters tracking with degree of bias across individuals were identified.

Figure 3:

Clusters where incongruent-minus-congruent fMRI contrast values negatively correlate with DDM-derived extradecisional bias scores across individuals (from whole-brain analysis with map-wise p<.05; voxel-wise p<.005). From left to right, panels display the following clusters from Results text: L temporoparietal junction (TPJ; n=121 voxels; peak voxel: x=−46, y=−69, z=38; R²=.28); dorsomedial prefrontal cortex (DMPFC; n=93 voxels; peak voxel: x=−1, y=45, z=14; R²=.20); ventral anterior cingulate cortex (vACC; n=96 voxels; peak voxel: x=5, y=15, z=−3; R²=.21). No significant clusters exhibiting positive correlations were found.

Intervention effects.

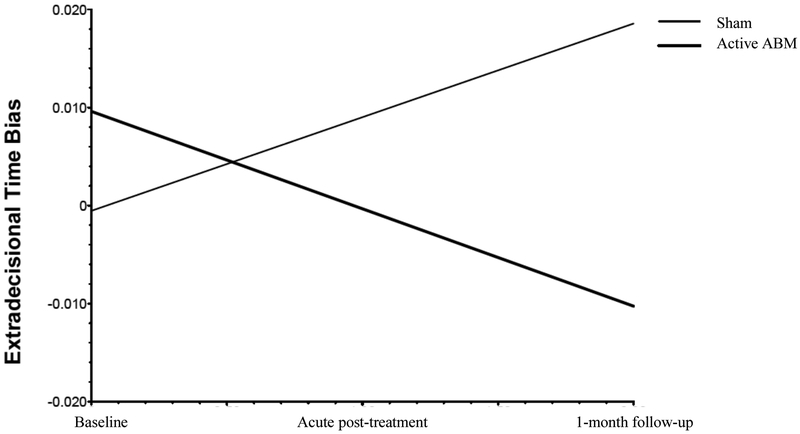

Intent-to-treat mixed models regression with extradecisional_bias as the dependent measure, training group (ABM or control) as a between-subjects factor, subject as a random factor, and timepoint (baseline, acute post-treatment, 1-month follow-up) as a within-subject factor revealed a significant effect of training condition on the slope of extradecisional_bias over time, indicating that the trajectory of scores over time differed across training conditions: t(68)=−3.41, p=.001, effect size r=.38 (a large effect (32)). As shown in Figure 2, extradecisional_bias decreased steadily over the course of the assessment points in the ABM group, such that individuals in this group became less biased towards threat over the course of the baseline, acute post-treatment, and 1-month follow-up [within-group effect of time: t(48)=−2.71, p=.009, effect size r=.36], while extradecisional_bias increased over time in the control training group [t(20)=2.15, p=.044, effect size r=.43]. These findings were maintained in models that included participant gender and age as covariates.
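The text does not state how the effect size r was computed. One common conversion from a t statistic and its degrees of freedom, r = sqrt(t² / (t² + df)), reproduces all three reported values, so the sketch below assumes that conversion. This is an inference from the numbers, not a description of the authors' procedure.

```python
from math import sqrt


def r_from_t(t, df):
    """Effect size r from a t statistic (assumed conversion, see lead-in)."""
    return sqrt(t * t / (t * t + df))


# (label, t, df) as reported in the text.
reported_tests = [
    ("group x time", -3.41, 68),
    ("ABM, time", -2.71, 48),
    ("control, time", 2.15, 20),
]

for label, t, df in reported_tests:
    print(f"{label}: t({df})={t:.2f} -> r={r_from_t(t, df):.2f}")
```

The conversion yields .38, .36, and .43, in line with the reported effect sizes. The sign of t is lost, so direction has to be read from the t statistic itself.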

Figure 2.

Hierarchical linear regression equation plots depicting changes (slope) in extradecisional bias as a function of training group.

Conversely, identical mixed models regression analyses with conventional bias scores as the dependent measure revealed no evidence of intervention effects. Specifically, there were no effects of group on either the slope or the intercept of attentional bias over the three assessment points (group effect on slope of attentional bias: p’s>.46; effect size r≤.09). There were also no effects of time on conventional attentional bias scores when considering the ABM group alone [p’s≥.40, effect size r≤.12].

Exploratory analysis: Relationship to clinical outcomes.

We explored whether change in extradecisional bias (delta score: post-treatment - baseline) was related to change in our primary clinical outcomes (clinician-rated hypervigilance; MASQ-Anxious Arousal and General Distress subscales; see Supplement for details), in either the ABM or sham training groups or across the full sample. No significant relationships were found (∣r∣ < .15; p’s>.31).

Discussion

The dot-probe task has been used in many hundreds of studies of affective conditions, spanning both internalizing [e.g., anxiety(7), depression(33), trauma(34), suicidality(35)] and externalizing [e.g., substance use(36), unhealthy eating(37)] conditions. The task assesses the clinically relevant construct of attentional bias—or preferential allocation of attention to disorder-relevant stimuli—which is a feature of information processing believed to have wide-reaching effects on the generation and/or maintenance of a range of psychopathological symptoms. In this first, proof-of-principle application of a well-validated computational modeling approach to dissect behavioral data collected during the dot-probe task, we introduce a novel analytic approach for dot-probe data and show that good model fits were readily achievable using publicly available software. We further demonstrate that split-half reliability of resulting DDM-derived attentional bias indices was strong—a necessary precondition for evaluating individual differences (38)—and test-retest reliability, though still modest, clearly outperformed conventional attention bias scores, where widely-acknowledged problems with reliability threaten to stall progress (10,11). With respect to convergent validity, the DDM-derived extradecisional parameters tracked as expected with participant age, eye movements measured concurrently during the task, and conventional bias scores, and with altered salience network activation during a dot-probe task performed in the fMRI scanner (Figure 3). Finally, the novel extradecisional bias scores appeared more sensitive to treatment change—when using these indices, hypothesized mechanistic intervention effects were unveiled which were not detectable using conventional bias quantification methods.

The advantages of computational modeling include the ability to make full use of trial-level data to obtain more fine-grained detail about cognitive processes (3). In the context of anxiety research, computational modeling approaches have previously revealed novel insights including altered learning mechanisms [e.g., increased threat expectations, decreased ability to leverage causal statistics to accurately predict outcomes;(39,40)], which may contribute to the development of affective biases such as attention bias (41); dynamic processes of task performance such as an increased tendency to apply caution in response to errors (19); and effects of threatening content on memory performance which were not detectable using traditional memory bias indices (17,18). In an effort to encourage replications and extensions of the current work, we used computational modeling methods which are freely available for download, making the enormous corpus of previously collected dot-probe data—which has produced replicable (7) yet notoriously mixed and unreliable (11) findings using conventional analysis techniques—ripe for the picking. Our results suggest the potential benefits may include improved reliability, interpretability, and sensitivity of attentional bias indices.

Our fMRI analyses suggest the DDM-derived index could also be readily traced backwards to neural substrates, while conventional bias scores could not. Findings implicated decreased recruitment of attentional and emotional control regions that regulate responses to salient stimuli (30,31) in those individuals showing the largest degree of extradecisional bias. While this finding may be consistent with the hope that computational psychiatry will assist in establishing linkages across levels of analysis, we were unable to further link extradecisional bias forward to clinical symptoms in the current sample. Specifically, in exploratory analyses we found no evidence that shifts in extradecisional bias over time mediated acute improvements in clinical symptoms (following either ABM or sham training). These analyses were limited by the relatively small sample sizes in the two arms, the (still suboptimal) test-retest reliability of the DDM indices, and by the fact that robust between-group differences in clinical outcomes were not observed in the current study (23). Larger or more clinically homogeneous samples may be required to adequately test for such linkages. Given the prevalent usage of the dot-probe task across numerous ABM studies conducted to date, reanalysis of existing datasets may present an accessible opportunity to address these questions in sufficiently powered samples.

Limitations.

The DDM extradecisional time parameter conflates both cognitive processes of theoretical interest [e.g., time taken to orient attention to the probe location] and those of less interest (response preparation/execution). Although good model fits to the empirical data were obtained in the present analyses, computational modeling necessarily involves assumptions that may vary in their applicability across participants and/or studies, and model fits, though uniformly adequate, did vary to some degree across individual datasets. With respect to test-retest reliability, the DDM-derived bias scores surpassed that of conventional bias scores in the current dataset, as well as exceeding the maximum reliability achieved in previous exhaustive analyses of conventional bias scores conducted across multiple datasets (max ICC=.19; (10)); yet test-retest reliability still remained suboptimal, which may be particularly problematic if correlational analyses of individual differences in trajectories over time within a group (e.g., mediation analyses) are desired. This may be partly due to inherent (mathematically determined) challenges in obtaining good reliability whenever a bias score is computed as a difference score between two task conditions which are themselves highly correlated across individuals (11) or may be a natural by-product of state (rather than trait-like) influences on performance. Unexpectedly, extradecisional_bias increased over repeated assessments within the sham training group, which could be linked to the aforementioned factors limiting stability of bias scores over time, coupled with the relatively small control group sample; or could suggest that the control training had an unanticipated (and potentially unwanted) effect in this sample. The dot-probe task has been critiqued for conflating other features of attention which DDM cannot separate—e.g., initial engagement with threat vs. disengagement from threat; overt and covert aspects of attention (29,42). 
While the current findings may inform improved analysis strategies applicable to a large volume of existing data, future work should aim to develop and refine new assessment methods (e.g., 40), ideally with an explicit eye towards task features that will maximize the ability to apply computational analytic methods and precisely quantify cognitive components most relevant to the attentional mechanisms of interest. Finally, the current dataset was suboptimal for examining key clinically relevant questions (e.g., mediation of clinical effects), particularly given small within-treatment sample sizes and the lack of robust group differences in clinical outcomes.

Conclusions.

The present analyses suggest a well-validated computational modeling method (DDM) is appropriate for modeling of the dot-probe task in clinically anxious samples and represents an improved analytic technique that can yield novel insights. Widespread interest in quantifying and mechanistically targeting attentional bias in clinical populations has led to widespread use of the dot-probe task. However, efforts to better understand the nature, role, and neurobiological substrates of attentional patterns are stymied by recent recognition of the limitations of conventional analytic methods. Our results introduce one possible method of extracting more reliable, precise, and sensitive attentional bias metrics from existing behavioral dot-probe datasets—which likely span tens of thousands of participants—and could therefore improve the potential to translate this fundamental, transdiagnostic mechanism, both backward to neural substrates and forward to clinical applications.

Supplementary Material

Acknowledgement.

This research was supported by NIH Career Development Award K23MH100259. We gratefully acknowledge Danielle Gilchrist, Logan Cummings, Simona Graur, and the study participants for their contributions to this work.

Footnotes

Financial Disclosures. Dr. Price, Ms. Brown, and Dr. Siegle reported no biomedical financial interests or potential conflicts of interest.

References

- 1. Pine DS: Clinical Advances From a Computational Approach to Anxiety. Biol Psychiatry 2017;82(6):385–7

- 2. Ferrante M, Redish AD, Oquendo MA, Averbeck BB, Kinnane ME, Gordon JA: Computational psychiatry: a report from the 2017 NIMH workshop on opportunities and challenges. Mol Psychiatry 2018

- 3. Huys QJ, Maia TV, Frank MJ: Computational psychiatry as a bridge from neuroscience to clinical applications. Nat Neurosci 2016;19(3):404–13

- 4. Carrasco M: Visual attention: the past 25 years. Vision Res 2011;51(13):1484–525

- 5. Kessler RC: The global burden of anxiety and mood disorders: putting the European Study of the Epidemiology of Mental Disorders (ESEMeD) findings into perspective. J Clin Psychiatry 2007;68 Suppl 2:10–9

- 6. Kessler RC, Chiu WT, Demler O, Merikangas KR, Walters EE: Prevalence, severity, and comorbidity of 12-month DSM-IV disorders in the National Comorbidity Survey Replication. Arch Gen Psychiatry 2005;62(6):617–27

- 7. Bar-Haim Y, Lamy D, Pergamin L, Bakermans-Kranenburg MJ, van IJzendoorn MH: Threat-related attentional bias in anxious and nonanxious individuals: a meta-analytic study. Psychol Bull 2007;133(1):1–24

- 8. Price RB, Wallace M, Kuckertz JM, Amir N, Graur S, Cummings L, Popa P, Carlbring P, Bar-Haim Y: Pooled patient-level meta-analysis of children and adults completing a computer-based anxiety intervention targeting attentional bias. Clin Psychol Rev 2016;50:37–49

- 9. MacLeod C, Clarke PJF: The attentional bias modification approach to anxiety intervention. Clin Psychol Sci 2015;3(1):58–78

- 10. Price RB, Kuckertz JM, Siegle GJ, Ladouceur CD, Silk JS, Ryan ND, Dahl RE, Amir N: Empirical recommendations for improving the stability of the dot-probe task in clinical research. Psychol Assess 2015;27(2):365–76

- 11. Rodebaugh TL, Scullin RB, Langer JK, Dixon DJ, Huppert JD, Bernstein A, Zvielli A, Lenze EJ: Unreliability as a threat to understanding psychopathology: The cautionary tale of attentional bias. J Abnorm Psychol 2016;125(6):840–51

- 12. Kruijt AW, Field AP, Fox E: Capturing Dynamics of Biased Attention: Are New Attention Variability Measures the Way Forward? PLoS One 2016;11(11):e0166600

- 13. Ratcliff R, McKoon G: The diffusion decision model: Theory and data for two-choice decision tasks. Neural Comp 2008;20:873–922

- 14. Voss A, Nagler M, Lerche V: Diffusion models in experimental psychology: a practical introduction. Exp Psychol 2013;60(6):385–402

- 15. MacLeod C, Mathews A, Tata P: Attentional bias in emotional disorders. J Abnorm Psychol 1986;95(1):15–20

- 16. White C, Ratcliff R, Vasey M, McKoon G: Dysphoria and memory for emotional material: A diffusion-model analysis. Cogn Emot 2009;23(1):181–205

- 17. White CN, Ratcliff R, Vasey MW: Anxiety-related threat bias in recognition memory: the moderating effect of list composition and semantic-similarity effects. Cogn Emot 2015:1–15

- 18. White CN, Ratcliff R, Vasey MW, McKoon G: Anxiety enhances threat processing without competition among multiple inputs: a diffusion model analysis. Emotion 2010;10(5):662–77

- 19. White CN, Ratcliff R, Vasey MW, McKoon G: Using diffusion models to understand clinical disorders. J Math Psychol 2010;54(1):39–52

- 20. Dillon DG, Wiecki T, Pechtel P, Webb C, Goer F, Murray L, Trivedi M, Fava M, McGrath PJ, Weissman M, Parsey R, Kurian B, Adams P, Carmody T, Weyandt S, Shores-Wilson K, Toups M, McInnis M, Oquendo MA, Cusin C, Deldin P, Bruder G, Pizzagalli DA: A computational analysis of flanker interference in depression. Psychol Med 2015;45(11):2333–44

- 21. Pe M, Vandekerckhove J, Kuppens P: A diffusion model account of the relationship between the emotional flanker task and rumination and depression. Emotion 2013;13(4):739–47

- 22. Tipples J: Rapid temporal accumulation in spider fear: Evidence from hierarchical drift diffusion modelling. Emotion 2015;15(6):742–51

- 23. Price R, Cummings L, Gilchrist D, Graur S, Banihashemi L, Kuo S, Siegle G: Toward personalized, brain-based behavioral intervention for transdiagnostic anxiety: Transient neural responses to negative images predict outcomes following a targeted computer-based intervention. J Consult Clin Psychol In press

- 24. Amir N, Beard C, Burns M, Bomyea J: Attention modification program in individuals with generalized anxiety disorder. J Abnorm Psychol 2009;118:28–33

- 25. Cox RW, Reynolds RC, Taylor PA: AFNI and clustering: false positive rates redux. bioRxiv 2016; doi: 10.1101/065862

- 26. Voss A, Voss J: Fast-dm: a free program for efficient diffusion model analysis. Behav Res Methods 2007;39(4):767–75

- 27. Koo T, Li M: A guideline of selecting and reporting Intraclass Correlation Coefficients for reliability research. J Chiropr Med 2016;15(2):155–63

- 28. Ratcliff R: Modeling aging effects on two-choice tasks: response signal and response time data. Psychol Aging 2008;23(4):900–16

- 29. Weierich MR, Treat TA, Hollingworth A: Theories and measurement of visual attentional processing in anxiety. 2008;22(6):985–1018

- 30. Etkin A, Egner T, Kalisch R: Emotional processing in anterior cingulate and medial prefrontal cortex. Trends Cogn Sci 2011;15(2):85–93

- 31. Corbetta M, Shulman GL: Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci 2002;3(3):201–15

- 32. Cohen J: Statistical power analysis for the behavioral sciences. Hillsdale, NJ, Lawrence Erlbaum Associates, 1988

- 33. Peckham AD, McHugh RK, Otto MW: A meta-analysis of the magnitude of biased attention in depression. Depress Anxiety 2010;27(12):1135–42

- 34. Naim R, Wald I, Lior A, Pine DS, Fox NA, Sheppes G, Halpern P, Bar-Haim Y: Perturbed threat monitoring following a traumatic event predicts risk for post-traumatic stress disorder. 2014;44(10):2077–84

- 35. Cha CB, Najmi S, Park JM, Finn CT, Nock MK: Attentional bias toward suicide-related stimuli predicts suicidal behavior. J Abnorm Psychol 2010;119(3):616–22

- 36. Waters AJ, Marhe R, Franken IH: Attentional bias to drug cues is elevated before and during temptations to use heroin and cocaine. Psychopharmacology (Berl) 2012;219(3):909–21

- 37. Calitri R, Pothos EM, Tapper K, Brunstrom JM, Rogers PJ: Cognitive biases to healthy and unhealthy food words predict change in BMI. 2010;18(12):2282–7

- 38. Hajcak G, Meyer A, Kotov R: Psychometrics and the neuroscience of individual differences: Internal consistency limits between-subjects effects. J Abnorm Psychol 2017;126(6):823–34

- 39. Browning M, Behrens TE, Jocham G, O'Reilly JX, Bishop SJ: Anxious individuals have difficulty learning the causal statistics of aversive environments. Nat Neurosci 2015;18(4):590–6

- 40. Browning M, Harmer CJ: Expectancy and surprise predict neural and behavioral measures of attention to threatening stimuli. Neuroimage 2012;59:1942–8

- 41. Pulcu E, Browning M: Affective bias as a rational response to the statistics of rewards and punishments. eLife 2017;6

- 42. Grafton B, MacLeod C: Enhanced probing of attentional bias: the independence of anxiety-linked selectivity in attentional engagement with and disengagement from negative information. Cogn Emot 2014;28(7):1287–302
