Abstract

We propose an attention-based method that aggregates local image features into a subject-level representation for predicting disease severity. In contrast to classical deep learning methods that require a fixed-dimensional input, our method operates on a set of image patches; hence it can accommodate input images of variable size without resizing. The model learns a clinically interpretable subject-level representation that reflects the disease severity. Our model consists of three mutually dependent modules which regulate each other: (1) a discriminative network that learns a fixed-length representation from local features and maps it to disease severity; (2) an attention mechanism that provides interpretability by focusing on the areas of the anatomy that contribute the most to the prediction task; and (3) a generative network that encourages the diversity of the local latent features. The generative term ensures that the attention weights are non-degenerate while maintaining the relevance of the local regions to the disease severity. We train our model end-to-end in the context of a large-scale lung CT study of Chronic Obstructive Pulmonary Disease (COPD). Our model gives state-of-the-art performance in predicting clinical measures of severity for COPD. The distribution of the attention provides the regional relevance of lung tissue to the clinical measurements.

1. Introduction

We propose a deep learning model that learns a subject-level representation from a set of local features. Our model represents the image volume as a bag (or set) of local features (or patches) and can accommodate input images of variable sizes. We target diseases where the pathology is diffuse and is not always located in the same anatomical region. The model learns by optimizing an objective function that balances two goals: (1) to build a fixed-length subject-level feature that is predictive of the disease severity, and (2) to extract interpretable local features that identify the regions of anatomy that contribute the most to the disease. Our motivation comes from the study of COPD, but the proposed model is applicable to a wide range of heterogeneous disorders.

Many diseases such as emphysema are highly heterogeneous [16] and show a diffuse pattern in computed tomographic (CT) images of the lung. Having an objective way to characterize local patterns of the disease is important in diagnosis, risk prediction, and sub-typing [18,7,13,5]. Although various intensity- and texture-based feature descriptors have been proposed to characterize the visual appearance of the disease [2,19,20], most image features are generic and are not necessarily optimized for the disease. Recent advances in deep learning enable researchers to go directly from the raw image to the clinical outcome without specifying radiological features [6,4]. However, classical deep learning methods that operate on entire volumes or slices [6] are challenging to interpret, and they require resizing the input images to a fixed dimension. Resizing is particularly problematic for lung images because of the significant variation in lung volume among individuals. Reshaping the voxels in a CT image without adjusting for the density changes the interpretation of the intensity values.

In this paper, we address these issues. We view each subject as a set of image patches from the lung region. Different lung sizes result in bags with different numbers of elements. Previously, [2,17] viewed the subjects as sets and used handcrafted image features. In contrast, the discriminative part of our model uses a deep learning approach and extracts features directly from the volumetric patches. Next, we use an attention mechanism [1] to adaptively weight the local features and build the subject-level representation, which is predictive of the disease severity. Our model is inspired by Deep Sets [21]. However, our method adopts generative regularization to prevent redundancy in the hidden features. Furthermore, the attention mechanism provides interpretability by quantifying the relevance of a region to the disease. We evaluate the performance of our method on a simulated dataset as well as a large-scale COPD lung CT dataset, where our method gives state-of-the-art performance in predicting the clinical measurements.

2. Method

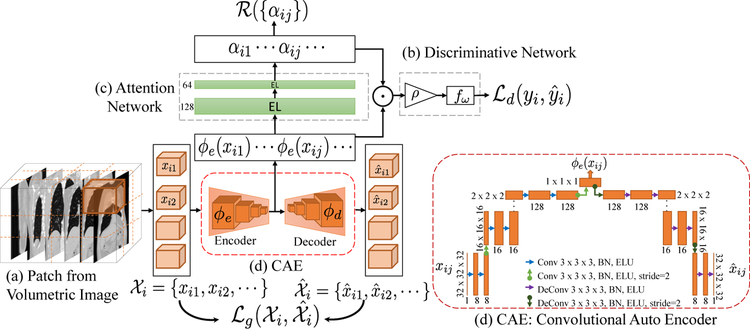

We represent each subject as a set (bag) of volumetric image patches extracted from the lung region, $\mathcal{X}_i = \{x_{ij}\}_{j=1}^{N_i}$, where $N_i$ is the number of patches for subject $i$. Our method maps each patch $x_{ij}$ to a low-dimensional latent space. It then aggregates the latent features to form a fixed-length representation by adaptively weighting the patches based on their contribution to the prediction of the disease severity ($y_i$). The general idea of our approach is shown in Fig. 1.

Fig. 1.

(a) A subject is represented as a set of 3D image patches. (b) Discriminative Network: aggregates local features to form a fixed-length representation for the subject and predicts the disease severity. (c) Attention Network: focuses attention on critical patches to provide interpretability. (d) Convolutional Auto-Encoder (Generative Network): prevents redundancy of latent features.

The method consists of three networks that are trained jointly: (1) a discriminative network that aggregates the local information from the patches in the set to predict the disease severity $y_i$, (2) an attention mechanism that helps the discriminative network to selectively focus on patch features by assigning weights to the patches in the input set $\mathcal{X}_i$, and (3) a generative network that regularizes the discriminative network to avoid a redundant representation of the patches in the latent space.

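The model is trained end-to-end by minimizing the following objective function:

$$\min_{\theta_e, \theta_d, \theta_a, \omega} \sum_i \Big[ \mathcal{L}_d(y_i, \hat{y}_i; \theta_e, \theta_a, \omega) + \lambda_1 \mathcal{L}_g(\mathcal{X}_i, \hat{\mathcal{X}}_i; \theta_e, \theta_d) + \lambda_2 \mathcal{R}(\alpha_i; \theta_e, \theta_a) \Big] \tag{1}$$

where $\mathcal{L}_d$ and $\mathcal{L}_g$ are the discriminative and generative loss functions, respectively, and the third term $\mathcal{R}$ is a regularization over the attention weights $\alpha_i$. Here $\hat{y}_i$ is the predicted severity and $\hat{\mathcal{X}}_i$ is the set of reconstructed patches for subject $i$. $\theta_e$, $\theta_d$, $\theta_a$ and $\omega$ are the parameters of the loss functions; some of the parameters are shared. $\lambda_1$ and $\lambda_2$ control the balance between the terms. The sum is over the subjects. In the following sections, we discuss each term in more detail.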
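As a rough illustration of how the three terms of Eq. 1 combine for a single subject, the sketch below adds a discriminative term, a weighted generative term, and a weighted attention regularizer. It rests on assumptions that are not taken from the text: the generative loss is taken to be a squared reconstruction error, and the entropy of the attention weights is used as a stand-in for the attention regularizer, whose exact form is not reproduced here. All function names are hypothetical.

```python
import math

def squared_error(a, b):
    """Sum of squared differences between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def entropy(alpha, eps=1e-12):
    """Shannon entropy of the attention weights.
    ASSUMPTION: used only as a stand-in for the attention regularizer."""
    return -sum(a * math.log(a + eps) for a in alpha)

def subject_objective(y, y_hat, patches, recons, alpha, lam1, lam2):
    """Discriminative loss + lam1 * generative loss + lam2 * regularizer (one subject)."""
    l_d = squared_error(y, y_hat)                                    # l2 prediction loss
    l_g = sum(squared_error(p, r) for p, r in zip(patches, recons))  # assumed reconstruction loss
    return l_d + lam1 * l_g + lam2 * entropy(alpha)

# Toy subject: two clinical targets and three flattened "patches" of two values each.
y, y_hat = [1.0, 0.5], [0.8, 0.5]
patches = [[0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
recons = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]
alpha = [0.7, 0.2, 0.1]
loss = subject_objective(y, y_hat, patches, recons, alpha, lam1=10.0, lam2=1e-4)
```

Setting `lam1` to zero recovers a purely discriminative objective, while a very large `lam1` lets the reconstruction term dominate.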

2.1. Discriminative Network

The discriminative network transforms the input set of image patches $\mathcal{X}_i$ and estimates the disease severity as

$$\hat{y}_i = f\big(\rho(\phi_e(\mathcal{X}_i; \theta_e)); \omega\big) \tag{2}$$

The transformation is composed of three functions: (1) $\phi_e(\cdot; \theta_e)$ is an encoder function parameterized by $\theta_e$. It extracts features from the patches in the set and outputs a set of features. (2) $\rho(\cdot)$ operates on the elements of the set and converts the variable-length set into a fixed-length vector. It is a permutation-invariant function, such as the maximum function $\rho(\cdot) = \max(\phi_e(x_{i1}), \dots, \phi_e(x_{iN_i}))$ or the mean function $\rho(\cdot) = \frac{1}{N_i}\sum_{j=1}^{N_i} \phi_e(x_{ij})$. This formulation ensures that $\hat{y}_i$ is invariant to the order of the patches in $\mathcal{X}_i$. In our experiments, we tried different choices of $\rho$, and the mean function works well for our task. The mean function assumes that all instances within the set contribute equally to the set-level feature vector. We extend it to a weighted mean, where the weights are learned by the attention network in Section 2.2. (3) $f(\cdot; \omega)$ is a prediction function parameterized by $\omega$. It takes the set-level feature vector extracted by $\rho(\cdot)$ as input and estimates the disease severity. In this paper, we predict two continuous clinical variables. Finally, $\mathcal{L}_d$ is an $\ell_2$ loss between the predicted and true values.
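The pooling step can be illustrated with a small sketch. It assumes the encoder has already produced one feature vector per patch (plain Python lists here) and shows mean, max, and attention-weighted mean pooling followed by a linear predictor. The function names are hypothetical, and a real implementation would operate on tensors inside a deep learning framework.

```python
def mean_pool(features):
    """Average the per-patch feature vectors into one fixed-length vector."""
    n = len(features)
    return [sum(col) / n for col in zip(*features)]

def max_pool(features):
    """Element-wise maximum over the per-patch feature vectors."""
    return [max(col) for col in zip(*features)]

def weighted_mean_pool(features, weights):
    """Weighted mean; `weights` are assumed non-negative and to sum to 1."""
    return [sum(w * v for w, v in zip(weights, col)) for col in zip(*features)]

def predict(pooled, omega, bias=0.0):
    """Linear prediction from the pooled, subject-level feature vector."""
    return sum(w * v for w, v in zip(omega, pooled)) + bias

# Three patches with 2-dimensional latent features; the order should not matter.
features = [[1, 2], [3, 4], [5, 0]]
shuffled = [features[2], features[0], features[1]]
same_mean = mean_pool(features) == mean_pool(shuffled)
same_max = max_pool(features) == max_pool(shuffled)
```

With uniform weights, `weighted_mean_pool` reduces to `mean_pool`, which is the sense in which the attention-weighted version generalizes the plain mean.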

2.2. Attention Mechanism

The goal of our proposed model is twofold: first, to predict the disease severity, and second, to provide a qualitative assessment of the prediction that enhances the interpretability of the results. For the given problem, it is reasonable to assume that different regions in the lung contribute differently to the disease severity. We model this contribution by adaptively weighting the patches. The weight indicates the importance of a patch in predicting the overall disease severity of the lung. This idea is similar to the attention mechanism used in the computer vision [1] and natural language processing [10] communities.

We estimate the attention weights for subject $i$, $\alpha_i = \{\alpha_{i1}, \dots, \alpha_{iN_i}\}$, with the attention network as

$$\alpha_i = A\big(\phi_e(\mathcal{X}_i; \theta_e); \theta_a\big) \tag{3}$$

Unlike the $\rho(\cdot)$ function in Section 2.1, $A(\cdot; \theta_a)$ maps a set to another set. Permuting the order of the elements in the input set $\mathcal{X}_i$ should permute the output set $\alpha_i$ in the same way. To ensure that $A(\cdot)$ is permutation equivariant, we construct it as a neural network with equivariant layers (EL) [21]. Assuming $H_i \in \mathbb{R}^{N_i \times d}$, where the $k$th row is $\phi_e(x_{ik}; \theta_e)$, one possible way of modeling the equivariant layer is

$$[\mathrm{EL}(H_i)]_k = \sigma\big(b + [H_i]_k W - \max(H_i, 1)\,\Gamma\big) \tag{4}$$

where $[H_i]_k$ denotes the $k$th row of $H_i$, $\max(H_i, 1)$ is the max over rows, and $\sigma$ is an element-wise non-linearity. $W$, $\Gamma$ and $b$ are the parameters of the EL. It is straightforward to show that the function in Eq. 4 is permutation equivariant. Because a composition of permutation-equivariant functions is itself permutation equivariant, we construct $A(\cdot; \theta_a)$ by composing a few ELs. We also require the weights $\alpha_i$ to be non-negative and to sum to 1, so we pass the output of the last EL through a softmax to obtain a distribution of weights over the patches of the subject. Finally, to encourage the weights to be sparsely distributed, we add a regularization term to the loss function in Eq. 1.
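The following sketch illustrates one way to build such an attention network: equivariant layers in the style of Deep Sets [21] with a sigmoid non-linearity, followed by a softmax over the patches. The parameter names (`W`, `G`, `b`), the layer widths, and the toy values are assumptions chosen for illustration, not the trained model.

```python
import math

def vec_mat(v, M):
    """Row vector times matrix, i.e. v @ M."""
    return [sum(v[r] * M[r][c] for r in range(len(v))) for c in range(len(M[0]))]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def equivariant_layer(H, W, G, b):
    """Row k of the output is sigmoid(b + H[k] @ W - max_over_rows(H) @ G)."""
    pooled = vec_mat([max(col) for col in zip(*H)], G)  # shared by every row
    return [[sigmoid(bi + hi - pi) for bi, hi, pi in zip(b, vec_mat(row, W), pooled)]
            for row in H]

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(H, layers):
    """Stack equivariant layers (the last must have width 1), then softmax over patches."""
    for W, G, b in layers:
        H = equivariant_layer(H, W, G, b)
    return softmax([row[0] for row in H])

# Toy example: 3 patches with 2-dimensional latent features, two stacked layers.
H = [[0.1, 0.9], [0.8, 0.2], [0.4, 0.4]]
layers = [
    ([[0.5, -0.3], [0.2, 0.7]], [[0.1, 0.0], [0.0, 0.1]], [0.0, 0.1]),
    ([[1.5], [-2.0]], [[0.3], [0.3]], [0.0]),
]
alpha = attention(H, layers)
```

Because the max-pooled term is identical for every row, reordering the rows of `H` simply reorders the entries of `alpha`.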

2.3. Generative Network

The encoder function $\phi_e$ projects the raw image patch $x_{ij}$ to a $d$-dimensional latent representation $\phi_e(x_{ij}; \theta_e) \in \mathbb{R}^d$. Without extra regularization, the loss function focuses only on the prediction task, forcing the encoder to extract only the information that is relevant to $y$. If $y$ is low dimensional, the encoder learns a highly redundant latent representation for each patch. Since $\alpha_{ij}$ is a function of $\phi_e(x_{ij}; \theta_e)$, redundant features result in almost uniform weights, i.e., $\alpha_{ij} \approx 1/N_i$. This makes interpretation very difficult. We demonstrate this effect in our experiments.

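To discourage this loss of information, we add a convolutional auto-encoder (CAE) [12] whose decoder, parameterized by $\theta_d$, reconstructs the input patch $x_{ij}$ from its latent representation as $\hat{x}_{ij}$. Finally, we add a generative loss that penalizes the reconstruction error to the cost function in Eq. 1.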

2.4. Architecture Details

The prediction function $f(\cdot; \omega)$ is a linear function predicting the disease severity $y_i$. The architecture of the generative network is detailed in Fig. 1. Each convolutional layer employs batch normalization for regularization, followed by an exponential linear unit (ELU) [3] as the non-linearity. The attention network $A(\cdot; \theta_a)$ has 2 equivariant layers with sigmoid activation functions, followed by a softmax layer. The model is trained using the Adam optimizer [8] with a fixed learning rate of 0.001.

3. Experiments

We evaluate the prediction and interpretation of our method on synthetic and real datasets. To evaluate the interpretability of our method quantitatively, we synthesize a dataset where the set-level target ($y$) is simulated from a subset of the instances. Hence, by viewing the attention weights as a detector of the relevant instances, we are able to evaluate the interpretability of our approach.

3.1. Synthetic Data

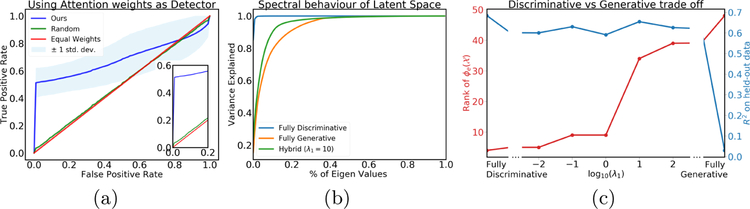

In this experiment, we build 10,000 training and 8,000 testing sets. The instances in each set are images drawn at random from the MNIST [9] dataset. The size of the sets varies between 20 and 100 instances. Each image is a 28 × 28 pixel monochrome image of a handwritten digit between 0 and 9. The set label ($y$) is the sum of the prime digits (2, 3, 5, 7) in that set. Our method predicts the set label with high accuracy ($R^2 = 0.99$ on held-out data). We view the attention weights as detectors of prime numbers. Note that no instance-level supervision is used. We compute a Receiver Operating Characteristic (ROC) curve per set and average the curves across the held-out dataset. Fig. 2(a) shows the average and the error bars over all the sets. The figure compares our method (blue) with equal weights (red) and uniform random weights (green). Our method can detect the correct instances in the set with only weak supervision over the set (i.e., the set-level label $y$). Here we used $\lambda_1 = 100$ and $\lambda_2 = 0.01$.
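The construction of the synthetic targets, and the idea of scoring attention weights as a detector, can be sketched as follows. This is only an illustration under stated assumptions: digit labels stand in for the MNIST images, and the area under the ROC curve (computed by pairwise ranking) is used as a single-number summary, whereas the experiment reports full ROC curves. The function names are hypothetical.

```python
import random

PRIMES = {2, 3, 5, 7}

def make_set(rng, min_size=20, max_size=100):
    """Draw digit labels for one set and compute its set-level target."""
    digits = [rng.randrange(10) for _ in range(rng.randint(min_size, max_size))]
    target = sum(d for d in digits if d in PRIMES)  # sum of the prime digits
    return digits, target

def detection_auc(weights, relevant):
    """AUC of ranking relevant instances above irrelevant ones by attention weight."""
    pos = [w for w, r in zip(weights, relevant) if r]
    neg = [w for w, r in zip(weights, relevant) if not r]
    if not pos or not neg:
        return float("nan")  # undefined when one class is missing
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

rng = random.Random(0)
digits, target = make_set(rng)
relevant = [d in PRIMES for d in digits]  # instance-level truth, used for evaluation only
```

Uniform weights give an AUC of 0.5 under this measure, which corresponds to the equal-weight reference curve.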

Fig. 2.

(a) ROC curve of detecting true relevant instances on synthetic dataset using attention weights, (b) Spectral properties of patch-level features for different values of λ1. (c) The trade-off between rank of latent space (red, y-axis on left) and predictive power (blue, y-axis on right) for different values of λ1. Left represents fully discriminative and right represents fully generative models.

3.2. COPD

We evaluate our model on 6,400 subjects with different degrees of COPD severity from the COPDGene dataset [14]. As clinical measures of the disease severity, we use the Forced Expiratory Volume in one second (FEV1), the ratio of FEV1 to Forced Vital Capacity (FVC), and a discrete score (between 0 and 4) defined by the Global Initiative for Chronic Obstructive Lung Disease (GOLD). We first segment the lung area on the inspiratory images using the CIP library [15]. Each subject is represented as a bag of equal-size 3D patches with some overlap. A large patch size or a large percentage overlap leads to GPU memory issues. We experimented with different values and finally used a patch size of 32 × 32 × 32 with 40% overlap in our experiments.
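To make the patch layout concrete, the sketch below enumerates the corner coordinates of 32 × 32 × 32 patches with 40% overlap on a regular grid. The stride rounding (19 voxels), the omission of the lung mask, and the handling of the volume boundary are assumptions made for illustration; the function names are hypothetical.

```python
from itertools import product

def patch_starts(length, patch=32, overlap=0.4):
    """Start indices along one axis for patches with the given fractional overlap."""
    stride = max(1, round(patch * (1.0 - overlap)))  # 32 * 0.6 = 19.2, rounded to 19
    return list(range(0, length - patch + 1, stride))

def patch_grid(shape, patch=32, overlap=0.4):
    """Corner coordinates of all patches that fit entirely inside the volume.
    ASSUMPTION: no lung mask is applied; a real pipeline would keep only lung patches."""
    return list(product(*(patch_starts(n, patch, overlap) for n in shape)))

small = patch_grid((64, 64, 64))
large = patch_grid((100, 64, 64))
```

Volumes of different sizes yield different numbers of patches, which is why each subject is treated as a variable-size set.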

We perform three experiments: (1) Prediction: we compare the performance of our method against the state-of-the-art for predicting the clinical measurements, (2) Generative regularizer ($\lambda_1$): we study the effect of the generative regularizer (i.e., $\lambda_1$) in terms of prediction accuracy and information preserved in the latent space, (3) Visualization: we visualize the interpretation of the model at the subject and population levels. Unlike $\lambda_1$, the choice of $\lambda_2$ does not have any significant effect on the prediction accuracy. The value of $\lambda_2$ influences the sparsity and diversity of the attention weights. In the experiments, we fixed $\lambda_2$ to 0.0001.

Prediction:

We compare to several baselines: (a) Baseline: two threshold-based features measuring the percentage of voxels with intensity values less than a threshold in the images: −950 Hounsfield units (HU) for the inspiratory image and −856 HU for the expiratory image. These measurements reflect what is clinically used to quantify emphysema and the degree of gas trapping. (b) Non-parametric: Schabdach et al. [17] view each subject as a set of hand-crafted histogram and texture features from supervoxels. They represent each subject in an embedding space using a non-parametric distance between sets. (c) CNN: González et al. [6] use deep features learned from a composite image of four canonical views of a CT scan to quantify FEV1 and stage COPD. (d) BOW: This method views each subject as a set of hand-crafted features from supervoxels but applies k-means to extract the subject-level representation. We perform 10-fold cross-validation and report $R^2$ for the continuous measurements (i.e., FEV1 and FEV1/FVC) and accuracy for the GOLD score. Since the GOLD score is a discrete but ordered value, we also report the percentage of cases whose classification lies within one class of the true value (one-off). Table 1 summarizes the results of the experiments. Our method outperforms the state-of-the-art on predicting FEV1 and the GOLD score. Adding the generative regularization ($\lambda_1 = 10$) reduces the accuracy but results in much better interpretability. In the following, we study the effect of $\lambda_1$.
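Two of the quantities used in this comparison are simple enough to sketch directly: the threshold-based baseline feature (percentage of voxels below a Hounsfield-unit threshold) and the exact and one-off accuracy for the ordered GOLD score. The function names and the toy values are hypothetical, and the sketch assumes the voxel intensities have already been restricted to the lung region.

```python
def percent_below(hu_values, threshold):
    """Percentage of voxels whose intensity (in HU) is below the threshold."""
    values = list(hu_values)
    return 100.0 * sum(v < threshold for v in values) / len(values)

def accuracy(true, pred, tolerance=0):
    """Fraction of cases with |pred - true| <= tolerance.
    tolerance=0 gives exact accuracy; tolerance=1 gives one-off accuracy."""
    return sum(abs(t - p) <= tolerance for t, p in zip(true, pred)) / len(true)

# Toy example: four expiratory voxel intensities and five GOLD predictions.
gas_trapping = percent_below([-900, -870, -850, -700], threshold=-856)
gold_true = [0, 1, 2, 3, 4]
gold_pred = [0, 2, 4, 3, 1]
exact = accuracy(gold_true, gold_pred)
one_off = accuracy(gold_true, gold_pred, tolerance=1)
```

One-off accuracy is never lower than exact accuracy, since every exact match is also within one class of the true value.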

Generative regularizer (λ1):

Fig. 2(b) reports the spectral behavior of the latent features for different values of $\lambda_1$. For small $\lambda_1$, the generative loss contributes little to the objective. Hence, the latent representation becomes highly redundant, and all the attention weights $\alpha_{ij}$ become similar and converge to $1/N_i$. Fig. 2(c) shows the trade-off between the effective rank of the latent features (red, y-axis on left) and $R^2$ for predicting FEV1 (blue, y-axis on right). Although $R^2$ drops slightly, the rank, which represents the diversity of the latent features, improves drastically. The gap between the accuracies at $\lambda_1 = 0$ and $\lambda_1 > 0$ is the price we pay for interpretability. A fully generative model ($\lambda_1 \to \infty$) does not produce good predictions.

Visualization:

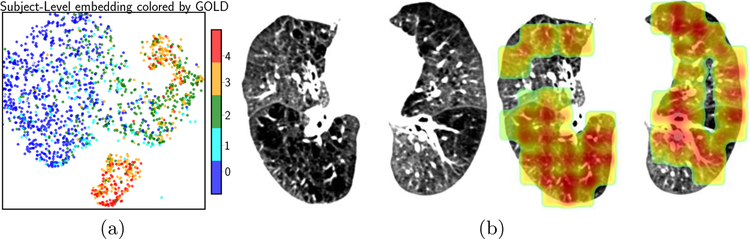

We use t-SNE [11] to visualize the subject-level features in two dimensions. In Fig. 3(a), each dot represents a subject colored by the GOLD score. Even in two dimensions, subjects with GOLD scores of (0,1) and (3,4) are quite separable, and subjects with a score of 2 lie in between. The bimodal distribution of GOLD stages 3 and 4 is sensitive to the t-SNE parameterization and requires further investigation. Fig. 3(b) visualizes the attention weights on one subject. The dark area on the left lung, which is severely damaged, received high attention.

Fig. 3.

(a) Embedding of the subjects in 2D using t-SNE. Each dot represents one subject colored by the GOLD score. (b) An axial view of the attention map on a subject. Red indicates higher relevance to the disease severity.

4. Conclusion

We developed a novel attention-based model that achieves high prediction accuracy while maintaining interpretability. The method outperforms the state-of-the-art and detects the correct instances on the simulated data. Our current model does not account for the spatial locations of the patches. As a future direction, we plan to extend the model to accommodate the relationships between patches.

Table 1.

Clinical measurement regression and GOLD stage classification accuracy by different methods on the COPDGene dataset.

Acknowledgement

This work is partially supported by NIH Award Number 1R01HL141813–01. We gratefully acknowledge the support of NVIDIA Corporation with the donation of the Titan X Pascal GPU. We thank the Competitive Medical Research Fund (CMRF) for its funding.

References

- 1. Bengio Y, Simard P, Frasconi P, Xu K, Ba J, Kiros R, Cho K, Courville A, Salakhutdinov R, Zemel R: Show, attend and tell. IEEE Transactions on Neural Networks 5(2), 157–166 (2015)

- 2. Cheplygina V, Pena IP, Pedersen JH, Lynch DA, Sørensen L, de Bruijne M: Transfer learning for multi-center classification of chronic obstructive pulmonary disease (1 2017)

- 3. Clevert DA, Unterthiner T, Hochreiter S: Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs) (11 2015)

- 4. Dubost F, Bortsova G, Adams H: GP-Unet: Lesion detection from weak labels with a 3D regression network. In: MICCAI. vol. 10435 LNCS, pp. 214–221 (2017)

- 5. Estépar RSJ, Kinney GL: Computed tomographic measures of pulmonary vascular morphology in smokers and their clinical implications. AJRCCM (2013)

- 6. González G, Ash SY, V S G: Disease Staging and Prognosis in Smokers Using Deep Learning in Chest Computed Tomography. AJRCCM pp. 201705–0860 (2017)

- 7. Hayhurst MD, MacNee W, Flenley DC: Diagnosis of pulmonary emphysema by computerised tomography. Lancet 2(8398), 320–322 (1984)

- 8. Kingma DP, Ba J: Adam: A Method for Stochastic Optimization (12 2014)

- 9. LeCun Y, Cortes C: MNIST handwritten digit database. AT&T Labs (2010)

- 10. Luong MT, Pham H, Manning CD: Effective Approaches to Attention-based Neural Machine Translation (8 2015)

- 11. Maaten L v.d., Hinton G: Visualizing Data using t-SNE. Journal of Machine Learning Research 9(Nov), 2579–2605 (2008)

- 12. Masci J, Meier U, Ciresan D, Schmidhuber J: Stacked convolutional autoencoders for hierarchical feature extraction. In: LNCS. pp. 52–59 (2011)

- 13. Müller NL, Staples CA, Miller RR, Abboud RT: Density mask: An objective method to quantitate emphysema using computed tomography. (10 1988)

- 14. Regan EA, Hokanson JE, Murphy JR, Make B, Lynch DA, Beaty TH, Curran-Everett D, Silverman EK, Crapo JD: Genetic epidemiology of COPD (COPDGene) study design. Journal of COPD 7(1), 32–43 (2010)

- 15. Ross JC, Harmouche R, Onieva J, Diaz AA, Washko GR, Estépar RSJ: CIP: an open-source library and workstation for quantitative chest imaging. Am J Respir Crit Care Med 191, A4975 (2015)

- 16. Satoh K, Kobayashi T, Murota M: CT assessment of subtypes in pulmonary emphysema in smokers. JJCR 46(1), 98–102 (2001)

- 17. Schabdach J, Wells WM, Cho M, Batmanghelich KN: A likelihood-free approach for characterizing heterogeneous diseases in large-scale studies. In: IPMI. vol. 10265 LNCS, pp. 170–183 (2017)

- 18. Shapiro SD: Evolving concepts in the pathogenesis of chronic obstructive pulmonary disease. Clinics in chest medicine 21(4), 621–632 (2000)

- 19. Sørensen L, Nielsen M, Lo P, Ashraf H, Pedersen JH, de Bruijne M: Texture-based analysis of COPD: A data-driven approach. IEEE Transactions on Medical Imaging 31(1), 70–78 (2012)

- 20. Yang J, Angelini ED, Balte PP, Hoffman EA, Austin JHM, Smith BM, Song J, Barr RG, Laine AF: Unsupervised discovery of spatially-informed lung texture patterns for pulmonary emphysema: The MESA COPD study. In: MICCAI. vol. 10433 LNCS, pp. 116–124 (2017)

- 21. Zaheer M, Kottur S, Ravanbakhsh S, Poczos B, Salakhutdinov R, Smola A: Deep Sets (3 2017)