Abstract

Computed tomography (CT) is a popular medical imaging modality with wide clinical applications. At the same time, the x-ray radiation dose associated with CT scans raises public concern because of its potential risks to patients. Over the past years, major efforts have been dedicated to the development of low-dose CT (LDCT) methods. However, radiation dose reduction compromises the signal-to-noise ratio (SNR), leading to strong noise and artifacts that degrade CT image quality. In this paper, we propose a novel 3D noise reduction method, called Structurally-sensitive Multi-scale Generative Adversarial Net (SMGAN), to improve LDCT image quality. Specifically, we incorporate three-dimensional (3D) volumetric information to improve the image quality. We also investigate different loss functions for training denoising models. Experiments show that the proposed method can effectively preserve structural and textural information in reference to normal-dose CT (NDCT) images, and significantly suppress noise and artifacts. Qualitative visual assessments by three experienced radiologists demonstrate that the proposed method retrieves more information and outperforms competing methods.

Keywords: Machine Learning, Low-dose CT, Image denoising, Deep learning, Loss function

I. INTRODUCTION

X-RAY computed tomography (CT) is one of the most popular imaging modalities in clinical, industrial, and other applications [1]. Nevertheless, the potential risks (i.e., a chance to induce cancer and cause genetic damage) of ionizing radiation associated with medical CT scans cause a public concern [2]. Studies from the National Council on Radiation Protection and Measurements (NCRP) demonstrate a 600% increase in medical radiation dose to the US population from 1980 to 2006, showing both great successes of the CT technology and an elevated alert to patients [3].

The main drawback of radiation dose reduction is increased image background noise, which could severely compromise diagnostic information. How to minimize the exposure to ionizing radiation while maintaining the diagnostic utility of low-dose CT (LDCT) has been a challenge for researchers, who follow the well-known ALARA (as low as reasonably achievable) guideline [1]. Numerous methods have been designed for LDCT noise reduction. These methods can be categorized as follows: (1) Sinogram filtering-based techniques [4]–[9]: these methods directly process projection data in the projection domain [6]. The main advantage of these methods is computational efficiency. However, they may result in loss of structural information and spatial resolution [6], [7], [10]; (2) Iterative reconstruction (IR) [11]–[20]: IR techniques may potentially produce a high signal-to-noise ratio (SNR). However, these methods incur a substantial computational cost and require troublesome parameter tuning; (3) Image space denoising techniques [20]–[27]: these techniques can be performed directly on reconstructed images so that they can be applied across various CT scanners at a very low cost. Examples are non-local means-based filters [16], [21], the dictionary-learning-based K-singular value decomposition (KSVD) method [20], and the block-matching 3D (BM3D) algorithms [24], [25]. Even though these algorithms greatly suppress noise and artifacts, edge blurring or resolution loss may persist in processed LDCT images.

Deep learning (DL) has recently received tremendous attention in the field of medical imaging [28], [29], in tasks such as brain image segmentation [30], image registration [31], [32], image classification [33], and LDCT noise reduction [34]–[40]. For example, Chen et al. [35] proposed a Residual Encoder-Decoder Convolutional Neural Network (RED-CNN) to predict NDCT images from noisy LDCT images. This method greatly reduces the background noise and artifacts. However, a limitation is that the results sometimes look blurry, since the method minimizes the mean-squared error between the denoised LDCT images and the corresponding NDCT images. To cope with this problem, the generative adversarial network (GAN) [41] offers an attractive solution. In the GAN, the generator G learns to capture a real data distribution Pr while the discriminator D attempts to discriminate between the synthetic data distribution and the real counterpart. Note that the loss used in GAN, called the adversarial loss, measures the distance between the synthetic data distribution and the real one in order to improve the performance of G and D simultaneously. Originally, GAN uses the Jensen-Shannon (JS) divergence to evaluate the similarity of the two data distributions [41]. However, several problems exist in training GAN, such as unstable training and non-convergence. To address these issues, Arjovsky et al. introduced the Wasserstein distance in place of the Jensen-Shannon divergence to improve the neural network training [42]. We discuss this aspect in more detail in Section II-D3.

In our previous work [37], we first introduced the perceptual loss to capture perceptual differences between denoised LDCT images and the reference NDCT images, providing perceptually better results for clinical diagnosis at the cost of lower scores in traditional image quality metrics. Since the traditional image quality metrics evaluate the generated images with reference to the gold-standard in generic ways, minimizing the perceptual loss does not ensure that the results are optimal in terms of these metrics. To address this discrepancy, and inspired by the work in [36], [43], here we propose a novel 3D clinical Structurally-sensitive Multi-scale Generative Adversarial Network (SMGAN) to capture subtle structural features while maintaining high visual sensitivity. The proposed structurally-sensitive loss leverages a combination of adversarial loss [42], perceptually-favorable structural loss, and pixel-wise L1 loss. Moreover, to validate the diagnostic quality of images processed by our method, we report qualitative image assessments by three expert radiologists. Systematically, we demonstrate the feasibility and merits of mapping LDCT images to corresponding NDCT images in the GAN framework.

Our main contributions in this paper are summarized as follows:

1) To keep the underlying structural information in LDCT images, we adopt a 3D CNN model as a generator based on WGAN, which can enhance the image quality for better diagnosis.

2) To measure the structural difference between generated LDCT images and the NDCT gold-standard, a structurally-sensitive loss is used to enhance the accuracy and robustness of the algorithm. Different from [37], we replace the perceptual loss with a combination of L1 loss and structural loss.

3) To compare the performance of the 2D and the 3D models, we perform an extensive evaluation of their convergence rate and denoising performance.

This paper is organized as follows: Section II introduces the proposed approach and analyzes the impact of each component loss function on the image quality. Section III presents the experimental design and results. Section IV discusses relevant issues. Finally, the concluding remarks and future plans are given in Section V.

II. METHODS

A. Problem Inversion

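Assuming that $y \in \mathbb{R}^{H \times W \times D}$ denotes the original LDCT image, and $x \in \mathbb{R}^{H \times W \times D}$ denotes the corresponding NDCT image, the relationship between them can be expressed as:

$$y = T(x) + \epsilon \tag{1}$$

where $T: \mathbb{R}^{H \times W \times D} \rightarrow \mathbb{R}^{H \times W \times D}$ is a generic noising process that degrades a real sample x of NDCT to a corresponding LDCT sample y in a non-linear way, $\epsilon \in \mathbb{R}^{H \times W \times D}$ stands for the additive noise and unmodeled factors, and H, W, D are the height, width, and depth respectively.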

From another standpoint, considering that the real NDCT distribution Pr is unknown, we focus on extracting information to recover desired images x from the noisy LDCT images y. In general, the noise distribution in CT images is regarded as a mixture of Poisson quantum noise and Gaussian electronic noise [44]. Compared with traditional denoising methods, the DL-based method is capable of effectively modeling any type of data distribution, since the DL-based denoising model itself can be easily adapted to any practical noise model with statistical properties of typical noise distributions in combination. Therefore, the proposed DL-based denoising network is to solve the inverse problem $T^{\dagger} \approx T^{-1}$ to retrieve feasible images, and the solution can be expressed as:

$$T^{\dagger}(y) \approx x \tag{2}$$

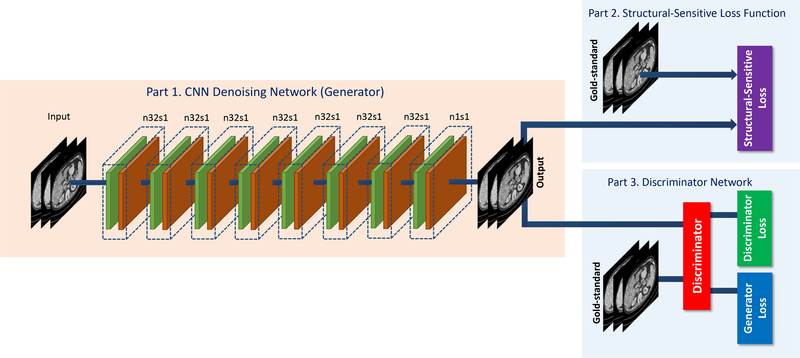

As shown in Fig. 1, the overall network comprises three parts. Part 1 is the generator G, part 2 is the Structurally-Sensitive loss (SSL) function, and part 3 is the discriminator D. G maps a volumetric LDCT image to the NDCT feature space, thereby estimating an NDCT image. The SSL function computes the structurally-sensitive dissimilarity, which encodes multi-scale structural information. The loss computed by the SSL function aims to improve the ability of G to generate realistic results. D distinguishes between synthetic and real NDCT images. If D can correctly identify the input image as “synthetic” or “real” and report the discrepancy between the estimated CT image and the corresponding real NDCT image, we will know whether G yields a high-quality estimate. With this indication from D, G can improve its performance, and D can improve its ability as well. Hence, G and D are in competition: G attempts to generate a convincing estimate of an NDCT image while D aims to distinguish the estimated image from real NDCT images. See Sections II-C and II-D for more details. For convenience, the notations used in this paper are summarized in Table V.

Fig. 1:

The overall structure of the proposed SMGAN network. Note that the variable n denotes the number of filters and s denotes the stride size.

Table V:

Summary of notations.

| Notation | Meaning |
|---|---|
| NDCT | Normal-dose CT |
| LDCT | Low-dose CT |
| SSL | Structurally-sensitive loss, integrating the structural loss and the L1 loss as defined in Eq. 10 |
| SSIM | Structural similarity index [49] |
| MS-SSIM | Multi-scale structural similarity index [51] |
| SL-net (CNN-SL) | 8-layer CNN with only structural similarity loss |
| MSL-net (CNN-MSL) | 8-layer CNN with only multi-scale structural similarity loss |
| WGAN | Wasserstein generative adversarial network with L2 loss |
| BM3D | Block-matching and 3D filtering |
| RED-CNN | Residual encoder-decoder CNN with only L2 loss |
| WGAN-VGG | Wasserstein generative adversarial network with perceptual loss |
| SMGAN-2D | 2D Wasserstein generative adversarial network with SSL loss |
| SMGAN-3D | 3D Wasserstein generative adversarial network with SSL loss |

B. 3D Spatial Information

The advantages of using 3D spatial information are evident, and volumetric imaging and 3D visualization have become standards in diagnostic radiology [45]. A large number of 3D NDCT and LDCT volumetric images are available in practice. However, most denoising networks use 2D architectures. Adjacent cross-sectional slices from a 3D CT image volume exhibit strong spatial correlation, which a 3D network architecture can utilize to preserve more information than 2D models.

As mentioned above, here we use a 3D ConvNet as the generator and introduce a 3D Structurally-Sensitive loss (SSL) function. Accordingly, we extract 3D image patches and use 3D filters instead of 2D filters. The generator in our network takes 3D volumetric LDCT patches as the input and processes them with 3D non-linear transform operations. For convenience and comparison, the 2D and 3D denoising networks are referred to as SMGAN-2D and SMGAN-3D respectively. The details of the network architecture are given in Section II-C.

C. Network Structure

Inspired by the studies in [36], [37], we introduce our proposed SMGAN-3D network structure. First, in Section II-C1 we present the 3D generator G which captures local anatomical features. Then, in Section II-C2 we define the 3D SSL function which guides the learning process. Finally, we outline the 2.5D discriminator D in Section II-C3.

1). 3D CNN Generator:

The generator G consists of eight 3D convolutional (Conv) layers. The first seven layers each have 32 filters, and the last layer has only one filter. The odd-numbered convolutional layers apply 3 × 3 × 1 filters, while the even-numbered convolutional layers use 3 × 3 × 3 filters. The extracted 3D patches fed to the whole network are of size 80 × 80 × 11; see Fig. 1. Note that the variable n denotes the number of filters and s denotes the stride size, which is the step size of the filter when moving across an image, so that n32s1 stands for 32 feature maps with a unit stride. Furthermore, a pooling layer after each Conv layer may lead to loss of subtle textural and structural information. Therefore, pooling layers are not used in this network. The Rectified Linear Unit (ReLU) [46] is the activation function after each Conv layer.
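The patch-size arithmetic implied by this layer layout can be checked with a short sketch. It assumes unpadded ("valid") convolutions with unit stride, which the text does not state explicitly; under that assumption the 80 × 80 × 11 input shrinks to 64 × 64 × 3, the same size as the discriminator input reported in Section II-C3. The helper names are hypothetical.

```python
# Sketch: track the patch shape through the eight 3D Conv layers of G.
# ASSUMPTION: unpadded ("valid") convolutions with stride 1.

def valid_conv_shape(shape, kernel, stride=1):
    """Output shape of an unpadded convolution, computed per axis."""
    return tuple((n - k) // stride + 1 for n, k in zip(shape, kernel))

def generator_output_shape(input_shape=(80, 80, 11), num_layers=8):
    shape = input_shape
    for layer in range(1, num_layers + 1):
        # Odd-numbered layers use 3x3x1 filters, even-numbered layers 3x3x3.
        kernel = (3, 3, 1) if layer % 2 == 1 else (3, 3, 3)
        shape = valid_conv_shape(shape, kernel)
    return shape

print(generator_output_shape())  # (64, 64, 3)
```

Each layer removes two voxels in-plane (8 × 2 = 16), while only the four even-numbered layers remove two voxels in depth (4 × 2 = 8).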

2). Structurally-Sensitive Loss (SSL) Function:

The proposed 3D SSL function measures the patch-wise discrepancy between a 3D output from the 3D ConvNet and the 3D NDCT image in the spatial domain. This measure is back-propagated [47] through the neural network to update the parameters of the network; see Section II-D for more details.

3). Discriminator:

The discriminator D consists of six convolutional layers with 64, 64, 128, 128, 256, and 256 filters and a kernel size of 3 × 3. Two fully-connected (FC) layers follow, with 1024 and 1 outputs respectively. Each layer is followed by a leaky ReLU defined as max(0, x) − α max(0, −x) [46], where α is a small constant. A stride of one pixel is applied for odd-numbered Conv layers and a stride of two pixels for even-numbered Conv layers. The input fed to D is of size 64 × 64 × 3, which comes from the output of G. We use 2D filters in D to reduce the computational complexity. Since the adversarial loss between each two adjacent slices in one volumetric patch contributes equally to the weighted average in one iteration, it can be easily computed. Following the suggestion in [42], we do not use the sigmoid cross entropy layer in D.
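As a minimal illustration of the activation defined above, the following NumPy sketch evaluates max(0, x) − α max(0, −x) element-wise. The default α = 0.2 is the slope reported in Section III-B; the function name is ours.

```python
import numpy as np

def leaky_relu(x, alpha=0.2):
    """max(0, x) - alpha * max(0, -x): identity for x >= 0, slope alpha for x < 0."""
    x = np.asarray(x, dtype=float)
    return np.maximum(0.0, x) - alpha * np.maximum(0.0, -x)

# Negative inputs are scaled by alpha instead of being zeroed out.
print(leaky_relu([-2.0, 0.0, 3.0]).tolist())
```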

D. Loss Functions for Noise Reduction

In this sub-section, we evaluate the impact of different loss functions on LDCT noise reduction. This justifies the use of a hybrid loss function for optimal diagnostic quality.

1). L2 loss:

The L2 loss can efficiently suppress the background noise, but it could make the denoised results unnatural and blurry. This is expected due to its regression-to-mean nature [43], [48]. Furthermore, the L2 loss assumes that background noise is white Gaussian noise, which is independent of local image features [49] and not desirable for LDCT imaging.

The formula of L2 loss is expressed as:

$$\mathcal{L}_{2} = \frac{1}{HWD}\left\| G(y) - x \right\|_{2}^{2} \tag{3}$$

where H, W, D stand for the height, width, and depth of a 3D image patch respectively, x denotes the gold-standard (NDCT), and G(y) represents the generated result from the source (LDCT) image y. It is worth noting that since the L2 loss has appealing properties of differentiability, convexity, and symmetry, the mean squared error (MSE) or L2 loss is still a popular choice in denoising tasks [50].

2). L1 Loss:

Although the L1 and L2 losses are both mean-based measures, their impacts on denoising results are different. Compared with the L2 loss, the L1 loss neither over-penalizes large differences nor tolerates small errors between denoised and gold-standard images. Thus, the L1 loss can alleviate some drawbacks of the L2 loss mentioned earlier. Additionally, the L1 loss enjoys the same fine characteristics as the L2 loss except for differentiability. The formula for the L1 loss is written as:

$$\mathcal{L}_{1} = \frac{1}{HWD}\left\| G(y) - x \right\|_{1} \tag{4}$$

As shown in Figs. 3–6, compared with the L2 loss, the L1 loss suppresses blurring, but does not help reduce blocky artifacts. For more details, see Section III.
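The different behavior of the two mean-based losses can be seen on a toy patch. The sketch below assumes the usual mean-over-voxels definitions for Eqs. 3 and 4 (mean squared and mean absolute voxel difference); the arrays are made-up values, not CT data.

```python
import numpy as np

def l2_loss(generated, reference):
    """Mean squared voxel difference over an H x W x D patch."""
    diff = np.asarray(generated, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.mean(diff ** 2))

def l1_loss(generated, reference):
    """Mean absolute voxel difference over an H x W x D patch."""
    diff = np.asarray(generated, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.mean(np.abs(diff)))

# Two toy outputs with the same total absolute error against a zero reference:
reference = np.zeros((2, 2, 1))
small_errors = np.full((2, 2, 1), 0.1)   # error spread evenly over 4 voxels
one_outlier = np.zeros((2, 2, 1))
one_outlier[0, 0, 0] = 0.4               # error concentrated in 1 voxel

print(l1_loss(small_errors, reference), l1_loss(one_outlier, reference))
print(l2_loss(small_errors, reference), l2_loss(one_outlier, reference))
```

The L1 loss scores both outputs equally, whereas the L2 loss penalizes the single large deviation four times more heavily than the evenly spread error, which is the over-penalization of large differences discussed above.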

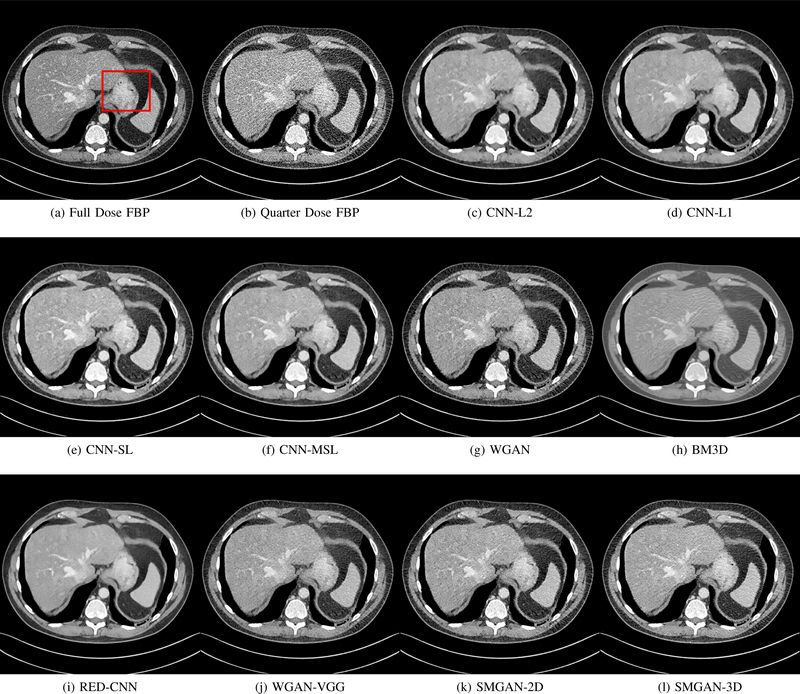

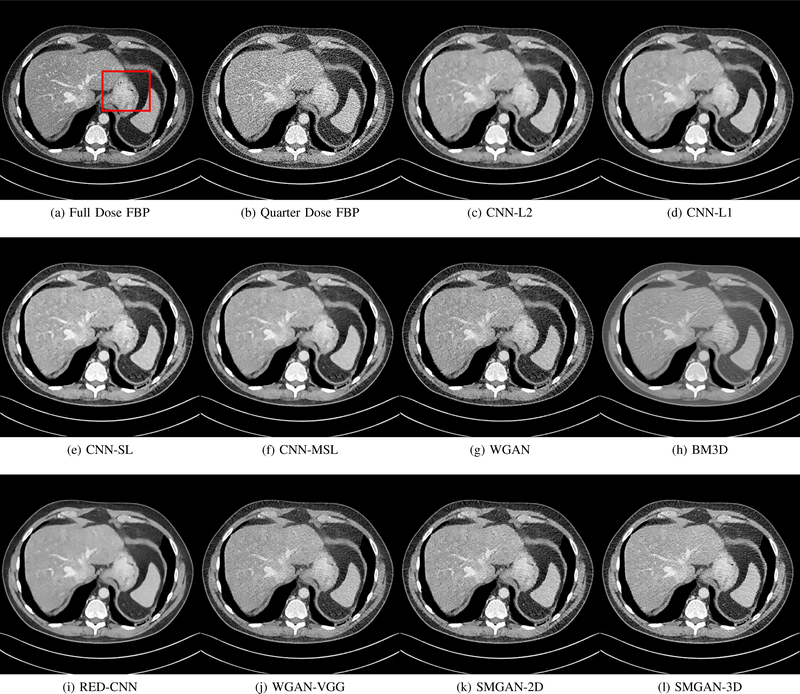

Fig. 3:

Results from abdomen CT images. (a) NDCT, (b) LDCT, (c) CNN-L2, (d) CNN-L1, (e) SL-net, (f) MSL-net, (g) WGAN, (h) BM3D, (i) RED-CNN, (j) WGAN-VGG, (k) SMGAN-2D, and (l) SMGAN-3D. The red rectangle indicates the region zoomed in Fig. 4. The display window is [-160, 240] HU.

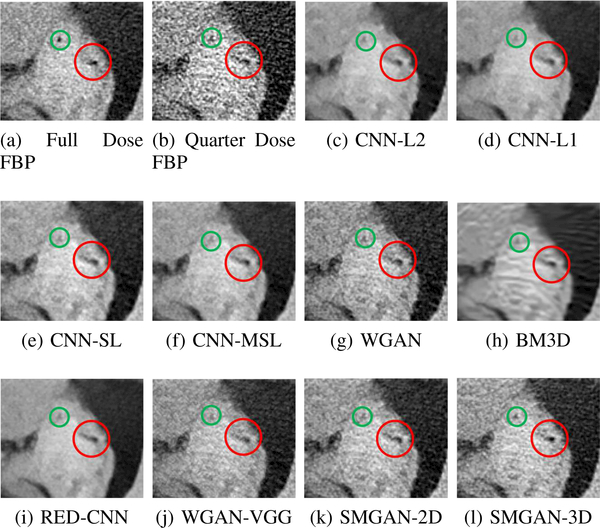

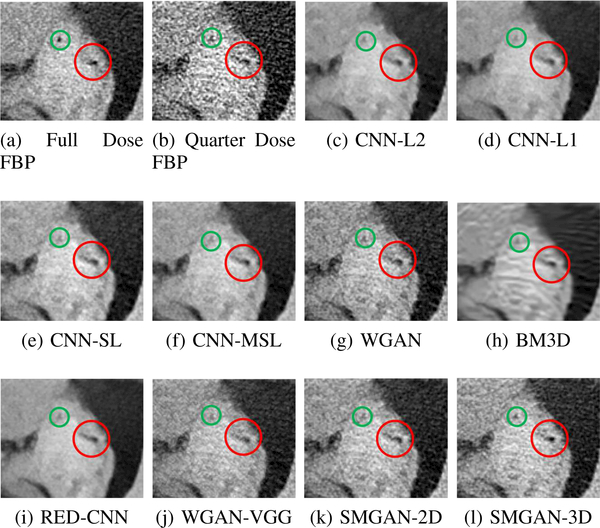

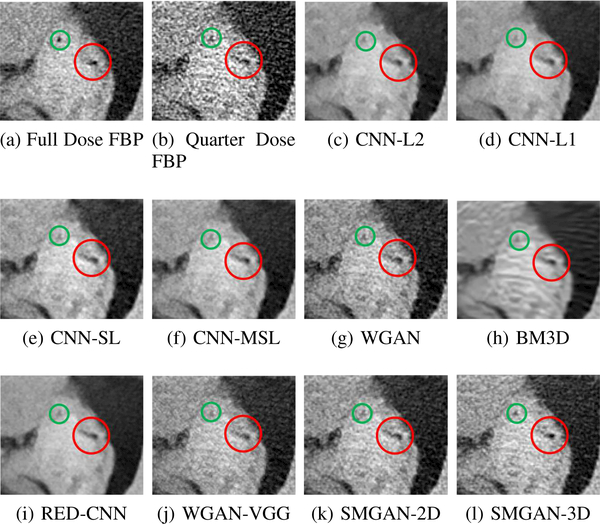

Fig. 6:

Zoomed parts of the regions of interest (ROIs) marked by the red rectangle in Fig. 5. (a) NDCT, (b) LDCT, (c) CNN-L2, (d) CNN-L1, (e) SL-net, (f) MSL-net, (g) WGAN, (h) BM3D, (i) RED-CNN, (j) WGAN-VGG, (k) SMGAN-2D, and (l) SMGAN-3D. The red circle indicates the metastasis, and the green and blue arrows indicate two subtle structures. The display window is [-160, 240] HU.

3). Adversarial Loss:

The Wasserstein distance with the regularization term was proposed in [48], which is formulated as

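$$\mathcal{L}_{adv} = -\mathbb{E}_{x}\left[D(x)\right] + \mathbb{E}_{z}\left[D(z)\right] + \lambda\, \mathbb{E}_{\hat{x}}\left[\left(\left\|\nabla_{\hat{x}} D(\hat{x})\right\|_{2} - 1\right)^{2}\right] \tag{5}$$

where the first two terms are for the Wasserstein distance, and the third term implements the gradient penalty with weight λ. Note that z denotes G(y) for brevity. $\hat{x}$ is uniformly sampled along the straight line between a pair of points sampled from G and corresponding NDCT images.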
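To make the three terms concrete, the following sketch evaluates the standard gradient-penalized Wasserstein critic loss for a toy linear critic D(v) = ⟨w, v⟩. This is an illustrative stand-in, not the convolutional discriminator of Section II-C3: for a linear critic the gradient with respect to the input equals w everywhere, so the penalty can be computed without automatic differentiation. The function name and the toy data are hypothetical; λ = 10 is the value reported in Section III-B.

```python
import numpy as np

def wgan_gp_critic_loss(w, real, fake, lam=10.0, rng=None):
    """-E[D(x)] + E[D(z)] + lam * E[(||grad D(x_hat)||_2 - 1)^2]
    for a TOY linear critic D(v) = <w, v> (gradient is w at every point)."""
    rng = np.random.default_rng(0) if rng is None else rng
    real = np.asarray(real, dtype=float).reshape(len(real), -1)
    fake = np.asarray(fake, dtype=float).reshape(len(fake), -1)
    # x_hat is sampled uniformly on the segment between each real/generated pair.
    eps = rng.uniform(size=(len(real), 1))
    x_hat = eps * real + (1.0 - eps) * fake
    # Gradient of the linear critic at each x_hat (independent of x_hat here).
    grads = np.broadcast_to(w, x_hat.shape)
    grad_norms = np.linalg.norm(grads, axis=1)
    penalty = np.mean((grad_norms - 1.0) ** 2)
    wasserstein = -np.mean(real @ w) + np.mean(fake @ w)
    return float(wasserstein + lam * penalty)

real = np.ones((3, 2, 2, 1))    # stand-in for NDCT patches x
fake = np.zeros((3, 2, 2, 1))   # stand-in for generated patches z = G(y)
unit_w = np.full(4, 0.5)        # ||w||_2 = 1, so the penalty vanishes
print(wgan_gp_critic_loss(unit_w, real, fake))
print(wgan_gp_critic_loss(2 * unit_w, real, fake))
```

With a real network, the gradient at each interpolated sample would be obtained by back-propagation; the penalty then pushes its norm toward one.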

4). Structural Loss:

Medical images contain strong feature correlations. For example, their voxels have strong interdependencies. The structural similarity index (SSIM) [49] and the multi-scale structural similarity index (MS-SSIM) [51] are perceptually motivated metrics, and perform better in visual pattern recognition than mean-based metrics [49]. To measure the structural and perceptual similarity between two images, the SSIM [49] is formulated as follows:

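$$\mathrm{SSIM}(x, z) = \frac{2\mu_{x}\mu_{z} + C_{1}}{\mu_{x}^{2} + \mu_{z}^{2} + C_{1}} \cdot \frac{2\sigma_{xz} + C_{2}}{\sigma_{x}^{2} + \sigma_{z}^{2} + C_{2}} \tag{6}$$

$$\phantom{\mathrm{SSIM}(x, z)} = l(x, z) \cdot cs(x, z) \tag{7}$$

where $C_1, C_2$ are constants, and $\mu_x, \mu_z, \sigma_x, \sigma_z, \sigma_{xz}$ denote the means, standard deviations, and cross-covariance of the image pair (x, z), i.e., the corresponding NDCT image and the output of G, respectively. $l(x, z)$ and $cs(x, z)$ are the first and second factors in Eq. 6.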
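A minimal sketch of the SSIM computation follows. It uses statistics over the whole patch rather than a sliding local window, and it assumes the commonly used constants C1 = (0.01)² and C2 = (0.03)² for intensities scaled to [0, 1]; neither choice is stated in the text, and the function name is ours.

```python
import numpy as np

def ssim_global(x, z, c1=1e-4, c2=9e-4):
    """SSIM from patch-wide statistics; returns (ssim, l, cs).
    ASSUMPTION: c1, c2 are the common defaults for a [0, 1] intensity range."""
    x = np.asarray(x, dtype=float).ravel()
    z = np.asarray(z, dtype=float).ravel()
    mu_x, mu_z = x.mean(), z.mean()
    var_x, var_z = x.var(), z.var()
    cov_xz = np.mean((x - mu_x) * (z - mu_z))
    l = (2 * mu_x * mu_z + c1) / (mu_x ** 2 + mu_z ** 2 + c1)   # luminance factor
    cs = (2 * cov_xz + c2) / (var_x + var_z + c2)               # contrast-structure factor
    return l * cs, l, cs

rng = np.random.default_rng(0)
patch = rng.random((8, 8, 3))
print(ssim_global(patch, patch)[0])        # identical patches give SSIM = 1
print(ssim_global(patch, patch + 0.2))     # a uniform bias lowers l but leaves cs near 1
```

The second call shows why an SSIM-only loss can leave a uniform intensity bias largely unpenalized: only the luminance factor responds to it.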

The multiscale SSIM provides more flexibility for multiscale analysis [51]. The formula for MS-SSIM [51] is expressed as:

$$\text{MS-SSIM}(x, z) = \prod_{j=1}^{M} \mathrm{SSIM}(x_{j}, z_{j}) \tag{8}$$

where $x_j, z_j$ are the local image content at the j-th level, and M is the number of scale levels. Clearly, SSIM is a special case of MS-SSIM (M = 1).

The formula for the structural loss (SL) is generally expressed as:

$$\mathcal{L}_{SL} = 1 - \text{MS-SSIM}(x, z) \tag{9}$$

Note that the loss can be easily back-propagated to update weights in the network, since it can be differentiated [43].

5). Objective Function:

As mentioned in the recent studies [37], [43], minimizing the L2 loss leads to over-smoothed appearance. The adversarial loss in GAN may yield sharp images, but it does not exactly match the corresponding real NDCT images [37]. The perceptual loss computed by a VGG network [47] evaluates the perceptual differences between the generated images and real NDCT images in a high-level feature space instead of the voxel space. Since the VGG network is trained on a large dataset of natural images, not CT images, it may result in distortions of processed CT images. To tackle these issues, we propose to utilize different loss terms together for high image quality.

As revealed in [43], the L1 loss allows noise suppression and SNR improvement. However, it blurs anatomical structures to some extent. In contrast, the structural loss discourages blurring and keeps high contrast resolution. To have the merits of both loss functions, the structurally-sensitive loss (SSL) is expressed as:

$$\mathcal{L}_{SSL} = \tau \times \mathcal{L}_{SL} + (1 - \tau) \times \mathcal{L}_{1} \tag{10}$$

where τ is the weighting factor to balance between structure preservation in the first term (from Eq. 9) and noise suppression in the second term (from Eq. 4).

Nevertheless, the above-mentioned two losses may still miss some diagnostic features. Hence, the adversarial loss is incorporated to keep textural and structural features as much as possible. In summary, the overall objective function of SMGAN is expressed as:

$$\mathcal{L}_{obj} = \mathcal{L}_{SSL} + \beta \times \mathcal{L}_{adv} \tag{11}$$

where β is the weight for the adversarial loss. In the last step of the network, we compare the difference between the output volume and the target volume, and then the error can be back-propagated for optimization [52].
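How the loss terms combine can be shown with plain arithmetic. The sketch assumes that the SSL is a convex combination of the structural loss and the L1 loss weighted by τ, and that the adversarial loss is added with weight β; the defaults τ = 0.89 and β = 10⁻³ are the values reported in Section III-B, and the input numbers are made up.

```python
def ssl_loss(structural, l1, tau=0.89):
    """Structurally-sensitive loss: ASSUMED form tau * SL + (1 - tau) * L1."""
    return tau * structural + (1.0 - tau) * l1

def smgan_objective(structural, l1, adversarial, tau=0.89, beta=1e-3):
    """Overall objective: SSL plus the beta-weighted adversarial loss."""
    return ssl_loss(structural, l1, tau) + beta * adversarial

# Hypothetical per-batch loss values, for illustration only.
print(smgan_objective(structural=0.2, l1=0.1, adversarial=-5.0))
```

With these weights the structural term dominates, the L1 term acts as a mild intensity anchor, and the adversarial term contributes a small correction.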

III. EXPERIMENTS AND RESULTS

A. Experimental Datasets and Setup

To show the effectiveness of the proposed network for LDCT noise reduction, we used a real clinical dataset, published by Mayo Clinic for the 2016 NIH-AAPM-Mayo Clinic Low Dose CT Grand Challenge [53]. The Mayo dataset consists of 2,378 normal-dose CT (NDCT) and low-dose (quarter-dose) CT (LDCT) images from 10 anonymous patients. The reconstruction interval and slice thickness in the dataset were 0.8 mm and 1.0 mm respectively.

For limited data, the denoising performance of DL-based methods depends on the size of the training datasets, so large-scale valid training datasets can improve the denoising performance. However, it is worth noting that the training image library may not contain many valid images. To enhance the performance of the network, the strategies we utilized are as follows. First of all, in order to improve generalization performance of the network and avoid over-fitting, we adopted the “10-fold cross validation” strategy. The original dataset was partitioned into 10 equal size subsets. Then, a single subset was used in turn as the validation subset and the rest of data were utilized for training. Moreover, considering the limited number of CT images, we applied the overlapping patches strategy because it can not only consider patch-wise spatial interconnections, but also significantly increase the size of the training patch dataset [54], [55].
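The 10-fold procedure can be sketched as an index generator. Whether the partition is made over patients, slices, or patches is not specified in the text, so the sketch works on generic item indices; the random shuffle and the function name are our assumptions.

```python
import numpy as np

def k_fold_splits(num_items, k=10, seed=0):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(num_items)     # ASSUMPTION: shuffle before splitting
    folds = np.array_split(order, k)       # k subsets of (nearly) equal size
    for i in range(k):
        validation = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, validation

for fold, (train, validation) in enumerate(k_fold_splits(100)):
    print(fold, len(train), len(validation))
```

Each item appears in exactly one validation subset, so every item is used for both training and validation across the ten runs.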

For data preprocessing, the original LDCT and NDCT images are of 512 × 512 pixels. Since directly processing entire patient images is computationally inefficient and infeasible, our denoising model was applied to image patches. First, we applied an overlapping sliding window with a step size of 1 × 1 × 1 to obtain image patches, and then randomly extracted 100,100 pairs of training patches and 5,100 pairs for validation from the remaining patient images, all of the same size 80 × 80 × 11. Then, the “10-fold cross validation” strategy was used to ensure the accuracy of the proposed algorithm. Next, the CT Hounsfield Unit (HU) scale was normalized to [0, 1] before the images were fed to the network.
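The preprocessing steps can be sketched with NumPy as follows. The HU clipping range used for normalization is a placeholder, since the text only states that the HU scale was mapped to [0, 1]; the function names, the small synthetic volume, and the number of sampled pairs are likewise for illustration only.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def normalize_hu(volume, hu_min=-1000.0, hu_max=1000.0):
    """Clip to [hu_min, hu_max] and rescale to [0, 1].
    ASSUMPTION: the HU range is hypothetical, not taken from the paper."""
    volume = np.clip(np.asarray(volume, dtype=float), hu_min, hu_max)
    return (volume - hu_min) / (hu_max - hu_min)

def sample_patch_pairs(ldct, ndct, patch_shape=(80, 80, 11), num_pairs=4, seed=0):
    """Randomly pick co-located overlapping patches from a paired volume."""
    rng = np.random.default_rng(seed)
    # Views over every stride-1 window position; nothing is copied until indexing.
    ld_windows = sliding_window_view(ldct, patch_shape)
    nd_windows = sliding_window_view(ndct, patch_shape)
    grid = ld_windows.shape[:3]            # number of window positions per axis
    i, j, k = (rng.integers(0, n, size=num_pairs) for n in grid)
    return ld_windows[i, j, k], nd_windows[i, j, k]

# Small synthetic paired volume standing in for one patient's LDCT/NDCT data.
demo_rng = np.random.default_rng(1)
ndct_demo = normalize_hu(demo_rng.uniform(-1000.0, 1000.0, size=(96, 96, 16)))
ldct_demo = ndct_demo + 0.05
ld_patches, nd_patches = sample_patch_pairs(ldct_demo, ndct_demo)
print(ld_patches.shape, nd_patches.shape)  # (4, 80, 80, 11) (4, 80, 80, 11)
```

Because the windows overlap with unit stride, even a small volume yields many distinct training patches, which is the data-augmentation effect described above.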

For qualitative comparison, in order to validate the performance of our proposed methods (SMGAN-2D and SMGAN-3D), we compare them with eight state-of-the-art denoising methods, including CNN-L2 (L2-net), CNN-L1 (L1-net), structural-loss net (SL-net), multi-scale structural-loss net (MSL-net), WGAN, BM3D [25], RED-CNN [35], and WGAN-VGG [37]. Among these existing denoising methods, BM3D is a classical image space denoising algorithm, WGAN-VGG represents a 2D perceptual-loss-based network, and RED-CNN refers to a 2D pixel-wise network. Note that the parameter settings of these methods [25], [35], [37] followed the suggestions in the original papers.

For quantitative comparison, to evaluate the effectiveness of the proposed methods, three metrics were chosen for image quality evaluation: peak signal-to-noise ratio (PSNR), structural similarity index (SSIM) [51], and root-mean-square error (RMSE).
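Two of these metrics have short closed forms, sketched below with their standard definitions: RMSE is the square root of the mean squared difference, and PSNR = 20 log10(MAX / RMSE). The data range of 1.0 assumes images normalized to [0, 1]; the exact range used for the reported scores is not stated in this section.

```python
import numpy as np

def rmse(x, z):
    """Root-mean-square error between two images of equal shape."""
    diff = np.asarray(x, dtype=float) - np.asarray(z, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))

def psnr(x, z, data_range=1.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = rmse(x, z)
    return float("inf") if err == 0 else float(20.0 * np.log10(data_range / err))

reference_img = np.zeros((4, 4))
denoised_img = np.full((4, 4), 0.1)   # constant error of 0.1 on a [0, 1] scale
print(rmse(reference_img, denoised_img), psnr(reference_img, denoised_img))
```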

B. Parameter Selection

In our experiments, the Adam optimization algorithm was used for network training [56]. In the training phase, the mini-batch size was 64. The hyperparameter λ for the balance between the Wasserstein distance and the gradient penalty was set to 10, per the suggestion from the original paper [42]. The parameter β for the trade-off between the adversarial loss and the mixture loss was set to $10^{-3}$. The parameter τ was set to 0.89. The slope of the leaky ReLU activation function was set to 0.2. The networks were implemented in TensorFlow [57] on an NVIDIA Titan Xp GPU.

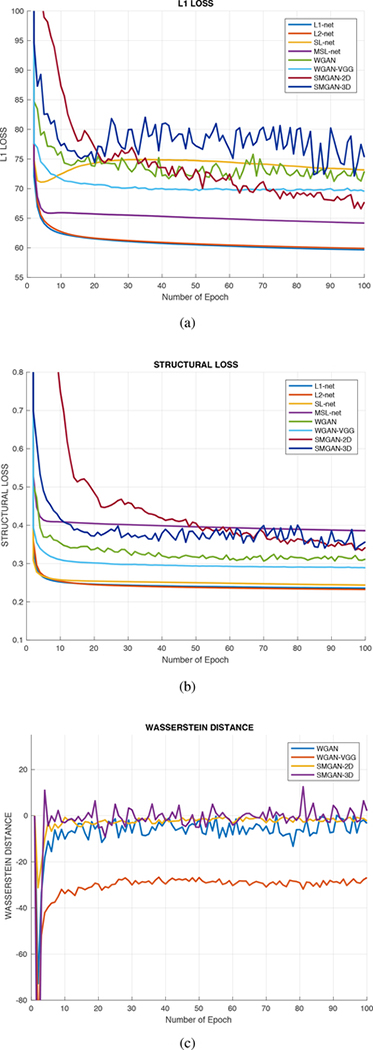

C. Network Convergence

To examine the robustness of different denoising algorithms, ten methods were separately trained in the same settings as those for SMGAN-3D and compared in terms of the L1 loss (L1), structural loss (SL), and Wasserstein distance. Note that the parameter settings of RED-CNN, WGAN-VGG, and BM3D followed the original papers [25], [35], [37]. In addition, the size of the input patches of the 2D network is 80 × 80, while our proposed 3D model uses training patches of size 80 × 80 × 11. We calculated the averaged loss value achieved by different methods versus the number of epochs as the measure of convergence in Fig. 2.

Fig. 2:

Comparison of loss function value versus the number of epochs with respect to different algorithms. (a) L1 Loss, (b) Structural Loss, and (c) Wasserstein Distance curves.

In Figs. 2a and 2b, in terms of L1 and SL, we observe that L1-net and L2-net achieved the fastest convergence and have similar convergence trends: all curves decreased initially and then smoothly converged, indicating that both mean-based algorithms converge quickly. Fig. 2a shows that they both converged around the 6th epoch. In contrast, Fig. 2a reveals differences between SL-based and mean-based methods. The convergence curve of SL-net decreases initially and then rises slightly around the 4th epoch. MSL-net also shows a small increase like SL-net in terms of L1. This observation indicates that SL-based and mean-based methods place different emphasis on the perceptually motivated similarity between real NDCT images and generated NDCT images. For WGAN-based methods, it can be clearly observed that the curves for WGAN, WGAN-VGG, SMGAN-2D, and SMGAN-3D oscillate slightly in the convergence process after the 5th epoch in Figs. 2a and 2b. The reason for such oscillatory behavior is as follows: G attempts to mimic the real NDCT distribution while D aims to differentiate between the real NDCT distribution and the denoised LDCT distribution. Since GAN is intrinsically a two-player game, the distributions of G and D are constantly changing, and this leads to the oscillatory behavior when converging to their optimal status.

Fig. 2c allows us to evaluate the convergence performance of the WGAN-based methods. It can be seen that our proposed SMGAN-2D has the mildest oscillatory behavior compared with the other three models and reaches a stable state after the 13th epoch. Moreover, SMGAN-3D oscillates in a relatively large range in the training process. This is because our proposed SMGAN-3D considers 3D structural information, which results in a relatively larger oscillation amplitude during training. However, the curve still oscillates close to the x-axis, indicating SMGAN-3D’s robustness in minimizing the Wasserstein distance between the generated samples and real samples.

D. Denoising Performance

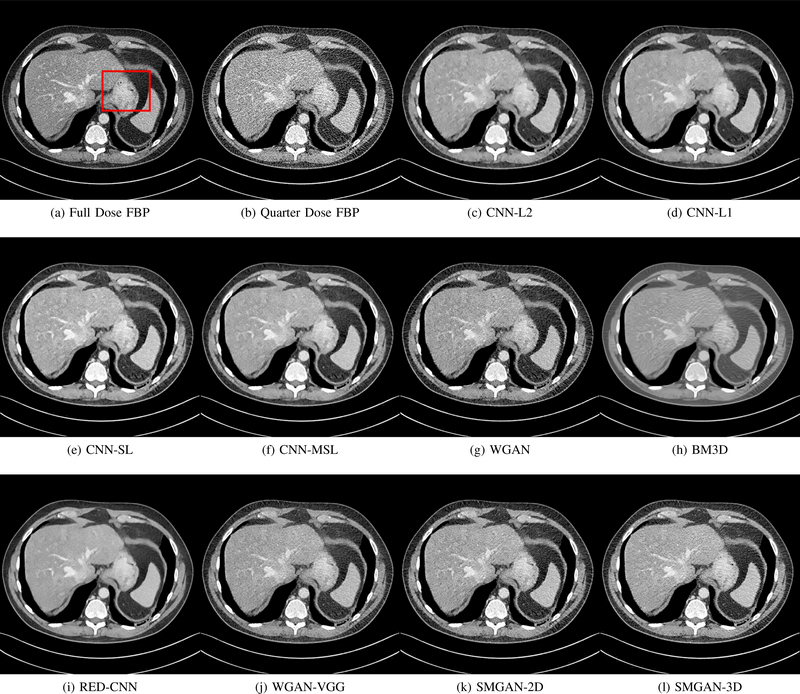

To demonstrate the effectiveness of the proposed network, we perform the qualitative comparisons over three representative abdominal images presented in Figs. 3, 5 and 7. For better evaluations of the image quality with different denoising models, zoomed regions-of-interest (ROIs) are marked by red rectangles and shown in Figs. 4, 6 and 8 respectively. Note that all results from different denoising models focus on two aspects: content restoration and noise-reduction. All CT images in axial view are displayed in the angiography window [-160, 240]HU.

Fig. 5:

Results from abdomen CT images. (a) NDCT, (b) LDCT, (c) CNN-L2, (d) CNN-L1, (e) SL-net, (f) MSL-net, (g) WGAN, (h) BM3D, (i) RED-CNN, (j) WGAN-VGG, (k) SMGAN-2D, and (l) SMGAN-3D. The red rectangle indicates the region zoomed in Fig. 6. The display window is [-160, 240] HU.

Fig. 7:

Results from abdomen CT images. (a) NDCT, (b) LDCT, (c) CNN-L2, (d) CNN-L1, (e) SL-net, (f) MSL-net, (g) WGAN, (h) BM3D, (i) RED-CNN, (j) WGAN-VGG, (k) SMGAN-2D, and (l) SMGAN-3D. The red rectangle indicates the region zoomed in Fig. 8. The display window is [-160, 240] HU.

Fig. 4:

Zoomed parts of the regions of interest (ROIs) marked by the red rectangle in Fig. 3. (a) NDCT, (b) LDCT, (c) CNN-L2, (d) CNN-L1, (e) SL-net, (f) MSL-net, (g) WGAN, (h) BM3D, (i) RED-CNN, (j) WGAN-VGG, (k) SMGAN-2D, and (l) SMGAN-3D. The red circle indicates the metastasis, and the green and blue arrows indicate two subtle structures. The display window is [-160, 240] HU.

Fig. 8:

Zoomed parts of the regions of interest (ROIs) marked by the red rectangle in Fig. 7. (a) NDCT, (b) LDCT, (c) CNN-L2, (d) CNN-L1, (e) SL-net, (f) MSL-net, (g) WGAN, (h) BM3D, (i) RED-CNN, (j) WGAN-VGG, (k) SMGAN-2D, and (l) SMGAN-3D. The red and green circles indicate subtle edges. The display window is [-160, 240] HU.

The real NDCT images and corresponding LDCT images are presented in Figs. 3a and 3b. As observed, there are distinctions between ground truth (NDCT) images and LDCT images. Figs. 3a and 7a show the lesions/metastasis. Fig. 5a presents focal fatty sparing/focal fat. In Figs. 4a, 6a and 8a, these lesions can be clearly observed in NDCT images; in contrast, from Figs. 4b, 6b, and 8b, it can be seen that the original LDCT image is noisy, and lacks structural features for task-based clinical diagnosis. All adopted denoising models suppress noise to some extent.

1). Comparison with CNN-based denoising methods:

To study the robustness of the adversarial learning framework in SMGAN-3D, we compared SMGAN-3D with the CNN-based methods, including CNN-L2, CNN-L1, RED-CNN [35], SL-net, and MSL-net. It is worth noting that CNN-L2, CNN-L1, and RED-CNN are mean-based denoising methods, and SL-net and MSL-net are SL-based denoising methods. All of the methods greatly reduce the noise compared with LDCT images. Our proposed method preserves more structural details, thereby yielding better image quality, compared with the other five methods.

Mean-based methods can effectively reduce noise, but the side effect is impaired image content. In Fig. 3c, L2-net greatly suppresses the noise, but blurs some crucial structural information in the porta hepatis region. Meanwhile, some waxy artifacts can still be observed in Fig. 6c. L2-net does not produce good visual quality because it assumes that the noise is independent of local characteristics of the images. Even though it retains high SNR, its results are not clinically preferable. Compared with L2-net, in Figs. 3d and 5d, it can be seen that L1-net produces less blurring and preserves more structural information. However, as observed in Fig. 4d, it still over-smooths some anatomical details. Meanwhile, in Fig. 6d, there are some blocky effects marked by the blue arrow. The results obtained by RED-CNN [35] deliver high SNR but blur the vessel details, as shown in Figs. 4i and 6i.

For SL-based methods, as observed in Figs. 3e and 5e, SL-net generates images with higher contrast resolution and preserves the texture of real NDCT images better than L2-net and L1-net. However, Figs. 4e and 6e show that SL-net does not preserve the structural features well, and small streak artifacts remain. In addition, in Figs. 4e and 4f, SL-net and MSL-net exhibit low-frequency image intensity variance because SSIM/MS-SSIM is insensitive to uniform biases [49], [51]. On the other hand, L1-net preserves the overall image intensity, but it does not preserve high contrast resolution as well as SL-net and MSL-net do.

From Figs. 7 and 8, we can see that mean-based and SL-based methods work well, with effective noise suppression and artifact removal. However, the illustrations in Fig. 8 show that these methods blur the local structural features. Our proposed SMGAN-based methods preserve edges better than the competing methods.

Overall, the observations above support the following statements. First, although the voxel-wise methods show good noise-reduction properties, to some extent they blur the contents and lead to the loss of structural details because they optimize the results in a voxel-wise manner. Second, SL-based methods preserve texture better than mean-based methods, but they cannot preserve overall image intensity. Third, the results produced by the proposed SMGAN-3D demonstrate the benefits of combining the two loss functions and the importance of adversarial training [41], [42].

2). Comparison with WGAN-based denoising methods:

To evaluate the effectiveness of our proposed objective function, we compare our method with existing WGAN-based networks, including WGAN and WGAN-VGG. Considering the importance of clinical image quality and specific structural features for medical diagnosis, we adopted the adversarial learning method [41], [42] in our experiments because WGAN could help to capture more structural information. Nevertheless, based on our prior experience, utilizing WGAN alone may yield stronger noise than other selected approaches, because it only maps the data distribution from LDCT to NDCT without consideration of local voxel intensity and structural correlations. The observations demonstrate that the noise texture is coarse in the images, as shown in Fig. 4g and Fig. 8g, which support our intuition.

Indeed, the images of WGAN-VGG [37], as shown in Fig. 3j, exhibit better visual quality with respect to more details and share structural details similar to NDCT images according to human perceptual evaluations. However, Figs. 4j (marked by the red circle) and 6j (marked by the green circle) suggest that it may severely distort the original structural information. A possible reason is that the VGG network [47] is a pre-trained deep CNN network based on natural images, and the structural information and contents of natural images are different from medical images.

Compared with WGAN and WGAN-VGG, our proposed SMGAN-3D, as shown in Figs. 4l (marked by the red circle) and 6l (marked by the green circle), can more clearly visualize the metastasis and better preserve the portal vein.

In Figs. 7 and 8, it can be found that the SMGAN-based methods can achieve better anatomical feature preservations and visual quality than other state-of-the-art methods.

The experimental results demonstrate that our proposed objective function is essential to capture more accurate anatomical details.

3). Comparison with Image space denoising:

To validate the robustness of DL-based methods, we compared our method with an image space denoising method. Figs. 4h and 6h show that BM3D blurs the low-contrast lesion marked by the red circle and smooths specific features marked by the blue arrow. In contrast, SMGAN-3D performs better on the low-contrast lesion and yields sharper features, as shown in Figs. 4l and 6l.

4). Comparison with 2D-based SMGAN network:

In order to evaluate the contribution of 3D structural information, we compared SMGAN-3D with SMGAN-2D. As shown in Fig. 4l, our proposed SMGAN-3D generated results with better subtle details than SMGAN-2D, and its statistical noise properties are closer to those of the corresponding NDCT images. The reasons why SMGAN-3D outperforms SMGAN-2D are as follows. First, SMGAN-3D incorporates 3D structural information to improve image quality. Second, SMGAN-2D takes input slice by slice, thus potentially leading to the loss of spatial correlation between adjacent slices.

Figs. 7 and 8 demonstrate that the SMGAN-3D can be used to provide improved anatomical feature preservation over other state-of-the-art methods.

In summary, we compared our proposed methods with existing methods, and it can be clearly observed that SMGAN-3D achieves robust performance in noise suppression, artifact removal, and texture preservation. Note that we recommend that the reader examine the ROIs (in Figs. 4 and 6) or zoom in to better evaluate our results. To further validate the generalization ability of our proposed model, we include more details in Appendix A.

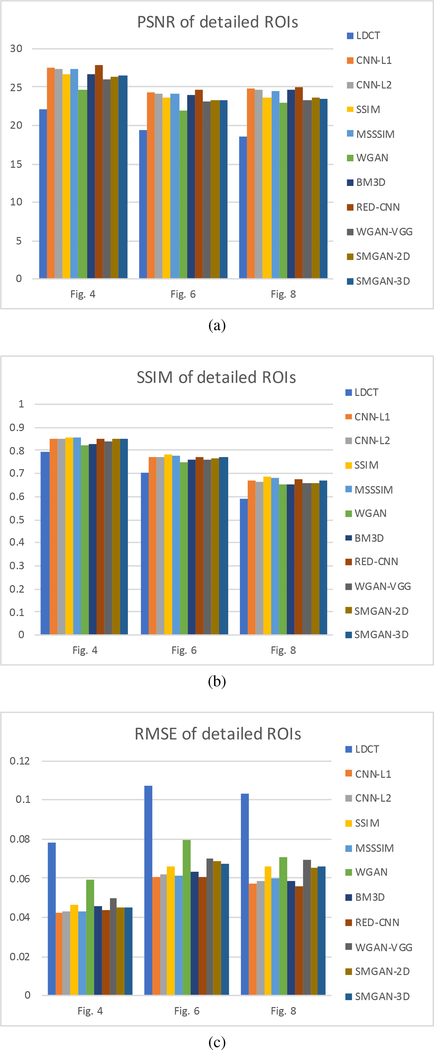

E. Quantitative analysis

We performed the quantitative analysis with respect to three selected metrics (PSNR, SSIM, and RMSE). Then, we investigated the statistical properties of the denoised images for each noise-reduction algorithm. Furthermore, we performed a blind reader study with three radiologists on 10 groups of images. Note that quantitative full-size measurements are in Table I and image quality assessments of ROIs are in Fig. 9. The NDCT images are chosen as the gold standard.
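For reference, RMSE and PSNR can be computed as in the following minimal sketch. It assumes images normalized to the range [0, 1], so that the peak value `data_range` is 1; this normalization is an assumption, although it is consistent with the tabulated values for the rows checked in the code. The function names are hypothetical, and SSIM is omitted because it requires windowed local statistics.

```python
import numpy as np

def rmse(reference, image):
    """Root-mean-square error between a reference (NDCT) and a test image."""
    return float(np.sqrt(np.mean((reference - image) ** 2)))

def psnr_from_rmse(rmse_value, data_range=1.0):
    """PSNR in dB for a given RMSE; data_range is the assumed peak intensity."""
    return float(20.0 * np.log10(data_range / rmse_value))

def psnr(reference, image, data_range=1.0):
    """PSNR in dB between a reference (NDCT) and a test image."""
    return psnr_from_rmse(rmse(reference, image), data_range)

# Consistency check against (RMSE, PSNR) pairs taken from the Fig. 3 columns of Table I.
table_i_fig3 = {
    "LDCT": (0.0723, 22.818),
    "CNN-L1": (0.0408, 27.791),
    "RED-CNN": (0.0385, 28.279),
}
for method, (rmse_value, reported_psnr) in table_i_fig3.items():
    print(f"{method:8s} reported {reported_psnr:.3f} dB, "
          f"from RMSE {psnr_from_rmse(rmse_value):.3f} dB")
```

Because PSNR is a monotonic function of RMSE under this definition, the two metrics always rank the methods identically; only SSIM provides an independent ordering.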

Table I:

| Method | Fig. 3 PSNR | Fig. 3 SSIM | Fig. 3 RMSE | Fig. 5 PSNR | Fig. 5 SSIM | Fig. 5 RMSE | Fig. 7 PSNR | Fig. 7 SSIM | Fig. 7 RMSE |
|---|---|---|---|---|---|---|---|---|---|
| LDCT | 22.818 | 0.761 | 0.0723 | 21.558 | 0.659 | 0.0836 | 24.169 | 0.737 | 0.0618 |
| CNN-L1 | 27.791 | 0.822 | 0.0408 | 26.794 | 0.738 | 0.0457 | 29.162 | 0.807 | 0.0348 |
| CNN-L2 | 27.592 | 0.819 | 0.0418 | 26.630 | 0.736 | 0.0466 | 28.992 | 0.806 | 0.0355 |
| SL-net | 26.864 | 0.831 | 0.0453 | 25.943 | 0.745 | 0.0504 | 28.069 | 0.813 | 0.0395 |
| MSL-net | 27.667 | 0.831 | 0.0414 | 26.685 | 0.744 | 0.0469 | 28.902 | 0.812 | 0.0359 |
| WGAN | 25.727 | 0.801 | 0.0517 | 24.655 | 0.711 | 0.0585 | 26.782 | 0.781 | 0.0458 |
| BM3D | 27.312 | 0.809 | 0.0431 | 26.525 | 0.728 | 0.0472 | 28.959 | 0.794 | 0.0356 |
| RED-CNN | 28.279 | 0.825 | 0.0385 | 27.243 | 0.743 | 0.0444 | 29.679 | 0.811 | 0.0328 |
| WGAN-VGG | 26.464 | 0.811 | 0.0475 | 25.300 | 0.722 | 0.0543 | 27.161 | 0.793 | 0.0419 |
| SMGAN-2D | 26.627 | 0.821 | 0.0466 | 25.507 | 0.732 | 0.0530 | 27.731 | 0.795 | 0.0406 |
| SMGAN-3D | 26.569 | 0.824 | 0.0473 | 25.372 | 0.739 | 0.0538 | 27.398 | 0.794 | 0.0411 |

Fig. 9:

Performance comparison of LDCT and ten algorithms over the ROIs marked by the red rectangles in Fig. 3a and Fig. 5a.

1). Image quality analysis:

As shown in Table I, RED-CNN scores the highest PSNR and the lowest RMSE, and ranks second in SSIM. Since PSNR and RMSE reward regression to the mean, it is expected that RED-CNN, a mean-based regression optimization, performs better than other feature-based models. For SL-net and MSL-net, it is not surprising that both models achieve the highest SSIM scores due to the adoption of the structural similarity loss. However, a good score measured by image quality metrics does not ensure the preservation of high-level feature information and structural details, and this explains why RED-CNN can have the best PSNR and RMSE despite over-smoothing the content. PSNR, SSIM and RMSE are not perfect, and they are subject to image blurring and blocky/waxy artifacts in the denoised images, as shown in Figs. 3–8. Hence, these metrics may not be sufficient for evaluating image quality and indicating diagnostic performance. Indeed, WGAN can provide better visual quality and achieve improved statistical properties. Compared with the CNN-based methods, the WGAN architecture can progressively preserve the consistency of the feature distributions between LDCT and NDCT images. By encouraging less blurring, however, WGAN alone could introduce more image noise and compromise diagnosis. To keep information in LDCT images, our novel loss function with a regularization term is structurally sensitive, enhancing clinical usability compared with the other methods.

Although mean-based approaches, such as L1-net and L2-net, enjoy high metric scores, they may over-smooth the overall image contents and lose feature characteristics, which does not satisfy our HVS requirements, because mean-based methods favor regression toward the mean. Meanwhile, WGAN-VGG satisfies HVS requirements, but gets the lowest scores in the three selected metrics. The reason for the lowest scores is that WGAN-VGG may suffer from loss of subtle structural information or noise features, which may severely affect the diagnostic accuracy. The proposed SMGAN-2D outperforms the feature-based method WGAN-VGG with reference to the three metrics, illustrating the robust denoising capability of our proposed loss function. Compared with the SMGAN-2D model, SMGAN-3D achieves higher scores in PSNR and SSIM since it incorporates 3D spatial information. To further validate the performance of each denoising model with respect to clinically significant local details, we performed the quantitative analysis over ROIs. The summary of the quantitative results from ROIs is shown in Fig. 9. It is worth noting that the quantitative results of the ROIs follow a similar trend to that of the full-size images.

2). Statistical analysis:

To quantitatively evaluate the statistical properties of the images processed by different denoising models, we calculate the mean CT number (Hounsfield Unit) and standard deviation (SD) of the ROIs, as shown in Table II. For each denoising model, the percent errors of the mean and SD values were calculated in comparison to those of the reference (NDCT) images. Lower percent errors correspond to more robust denoising models. As shown in Table II, L1-net, L2-net, SL-net, MSL-net, BM3D, RED-CNN, and WGAN-VGG generate high percent errors in SD with respect to the NDCT images. There are blocky and over-smoothing effects in the images, which match our visual inspections. Specifically, for Fig. 8, the absolute difference in SD between BM3D and NDCT is the largest among all of the denoising models, which indicates that BM3D has the most noticeable blurring effects. The standard deviation of BM3D supports our visual observations as shown in Figs. 4h, 6h, and 8h. The mean values of WGAN, WGAN-VGG, SL-net and SMGAN-2D deviate considerably from that of the NDCT image in Fig. 4. This indicates that WGAN, WGAN-VGG, and SMGAN-2D effectively reduce the noise level but compromise significant content information. Nevertheless, the SD value of SMGAN-2D is close to that of NDCT, which indicates that it supports HVS requirements. From the quantitative analysis in Table II, it can be observed that our proposed SMGAN-3D achieves the SD closest to that of the NDCT images among all methods. Overall, SMGAN-3D is a highly competitive denoising model for clinical use.
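The percent errors reported alongside the ROI statistics follow the usual relative-difference formula, which can be sketched as below. The helper names `roi_statistics` and `percent_error` are hypothetical, the use of the population standard deviation is an assumption, and the numerical values are copied from the Fig. 4 columns of Table II.

```python
import numpy as np

def roi_statistics(image_hu, rows, cols):
    """Mean and SD (in HU) of a rectangular ROI given as row/column slices."""
    roi = image_hu[rows, cols]
    return float(roi.mean()), float(roi.std())

def percent_error(value, reference):
    """Signed relative difference of a value with respect to the reference, in percent."""
    return 100.0 * (value - reference) / reference

# Mean and SD of the ROI in Fig. 4, as listed in Table II.
ndct_mean, ndct_sd = 115.282, 45.946
fig4_roi = {
    "LDCT": (114.955, 74.299),
    "CNN-L1": (115.809, 28.532),
    "WGAN": (105.461, 42.659),
}
for method, (mean_hu, sd_hu) in fig4_roi.items():
    print(f"{method:7s} mean error {percent_error(mean_hu, ndct_mean):+.3f}%  "
          f"SD error {percent_error(sd_hu, ndct_sd):+.3f}%")
```

A negative SD error indicates that the processed ROI is smoother than the NDCT reference, whereas a positive one indicates residual or added noise.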

Table II:

Statistical properties of the images in Figs. 4, 6 and 8. These are the ROIs indicated by the red rectangles in Figs. 3, 5 and 7. Note that the relative percentage difference of each model with respect to the NDCT values is added to aid the reader.

| Method | Fig. 4 Mean | Fig. 4 SD | Fig. 6 Mean | Fig. 6 SD | Fig. 8 Mean | Fig. 8 SD |
|---|---|---|---|---|---|---|
| NDCT | 115.282 | 45.946 | 56.903 | 58.512 | 51.225 | 73.297 |
| LDCT | 114.955 (−0.2837%) | 74.299 (61.709%) | 57.228 (0.571%) | 85.854 (46.729%) | 50.142 (−2.114%) | 89.346 (21.896%) |
| CNN-L1 | 115.809 (0.4571%) | 28.532 (−37.9010%) | 57.709 (1.416%) | 42.315 (−27.682%) | 50.917 (−0.6013%) | 66.359 (−9.466%) |
| CNN-L2 | 117.191 (1.656%) | 29.933 (−34.852%) | 58.956 (3.608%) | 43.411 (−25.808%) | 52.229 (1.960%) | 66.922 (−8.698%) |
| SL-net | 131.333 (13.923%) | 35.844 (−21.987%) | 68.471 (20.329%) | 50.789 (−13.199%) | 63.874 (24.693%) | 72.718 (−0.790%) |
| MSL-net | 118.395 (2.701%) | 32.548 (−29.160%) | 63.271 (11.191%) | 46.979 (−19.711%) | 57.052 (11.375%) | 69.519 (−5.154%) |
| WGAN | 105.461 (−8.519%) | 42.659 (−7.154%) | 48.432 (−14.887%) | 54.306 (−7.188%) | 42.417 (−17.195%) | 70.904 (−3.265%) |
| BM3D | 114.058 (−1.062%) | 31.515 (−31.409%) | 25.649 (−54.925%) | 69.411 (18.627%) | 15.183 (−70.360%) | 100.08 (36.540%) |
| RED-CNN | 116.642 (1.180%) | 27.194 (−40.813%) | 57.985 (1.902%) | 42.048 (−28.138%) | 51.272 (0.0918%) | 66.961 (−8.644%) |
| WGAN-VGG | 108.229 (−6.118%) | 36.721 (−20.078%) | 54.450 (−4.311%) | 48.660 (−16.838%) | 44.959 (−12.232%) | 67.059 (−8.511%) |
| SMGAN-2D | 108.758 (−5.659%) | 40.948 (−10.878%) | 51.243 (−9.947%) | 53.065 (−9.309%) | 48.230 (−5.847%) | 72.073 (−1.670%) |
| SMGAN-3D | 115.569 (0.749%) | 43.654 (−6.723%) | 54.356 (−4.476%) | 56.552 (−3.350%) | 55.378 (8.107%) | 73.303 (−0.00821%) |

3). Visual assessments:

To validate the clinical image quality of the processed results, three radiologists performed a visual assessment on 10 groups of images. Each group includes an original LDCT image with lesions, the corresponding reference NDCT image, and the images processed by different denoising methods. NDCT, considered as the gold standard, is the only labeled image in each group. All other images were evaluated on sharpness, noise suppression, diagnostic acceptability, and contrast retention using a five-point scale (5 = excellent and 1 = unacceptable). We invited three radiologists with a mean clinical experience of 12.3 years to join our study. Note that these results were evaluated independently, and the overall image quality score for each method was computed by averaging the scores of the four evaluation criteria. For each method, the final score is presented as mean±SD (average score of the three radiologists ± standard deviation). The final quantitative results are listed in Table III.
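The averaging step for the overall quality score can be sketched as follows. The per-criterion means are copied from Table III for three of the methods; the individual reader scores are not available here, so the sketch only reproduces the averaging of the four criterion means and leaves the SD untouched. The variable names are hypothetical.

```python
import numpy as np

criteria = ("sharpness", "noise suppression",
            "diagnostic acceptability", "contrast retention")

# Mean score per criterion (five-point scale), copied from Table III.
criterion_means = {
    "LDCT": (2.55, 1.55, 1.85, 1.75),
    "CNN-L1": (2.80, 3.30, 2.70, 2.75),
    "SMGAN-3D": (3.56, 3.59, 3.58, 3.61),
}

# Overall quality: unweighted average over the four criteria.
overall = {method: float(np.mean(scores))
           for method, scores in criterion_means.items()}

for method, score in overall.items():
    print(f"{method:8s} overall quality = {score:.4f}")
```

For these three rows, the averages agree with the tabulated overall quality scores to within rounding.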

Table III:

Visual assessment scores by three radiologist readers.

| Method | Sharpness | Noise Suppression | Diagnostic Acceptability | Contrast Retention | Overall Quality |
|---|---|---|---|---|---|
| LDCT | 2.55±1.43 | 1.55±0.80 | 1.85±0.96 | 1.75±0.83 | 1.93±1.01 |
| CNN-L1 | 2.80±0.81 | 3.30±0.71 | 2.70±0.78 | 2.75±0.77 | 2.89±0.77 |
| CNN-L2 | 2.12±0.42 | 3.98±0.58 | 1.93±0.78 | 2.07±0.83 | 2.53±0.55 |
| SL-net | 2.95±0.86 | 3.15±0.65 | 2.70±0.71 | 2.80±0.81 | 2.90±0.76 |
| MSL-net | 3.01±0.94 | 3.16±0.57 | 2.87±0.83 | 2.84±0.69 | 2.97±0.76 |
| WGAN | 3.30±0.56 | 2.80±0.81 | 3.15±0.91 | 3.45±1.02 | 3.09±0.66 |
| BM3D | 2.21±1.08 | 3.29±0.80 | 2.21±0.86 | 2.29±0.88 | 2.50±0.91 |
| RED-CNN | 3.29±0.88 | 3.79±0.70 | 3.51±0.70 | 3.46±1.12 | 3.51±0.85 |
| WGAN-VGG | 3.35±0.91 | 3.50±1.07 | 3.35±0.91 | 3.45±1.02 | 3.41±0.94 |
| SMGAN-2D | 3.25±0.65 | 3.48±0.66 | 3.32±0.58 | 3.21±0.78 | 3.32±0.67 |
| SMGAN-3D | 3.56±0.73 | 3.59±0.68 | 3.58±0.46 | 3.61±1.02 | 3.59±0.72 |

As observed, the original LDCT images have the lowest scores because of their severe image quality degradation. All denoising models improve the scores to some extent in this study. From Table III, RED-CNN obtains the highest score in noise suppression. Compared to all other methods, our proposed SMGAN-3D scores best with respect to sharpness, diagnostic acceptability, and contrast retention. Furthermore, voxel-wise optimization (CNN-L2) has the best visually assessed image noise suppression, but it suffers from relatively low scores in sharpness and diagnostic acceptability, indicating a loss of image details. The proposed SMGAN-3D model achieves a higher overall image quality score than the 2D model, which indicates that a 3D model can enhance CT image denoising performance by incorporating spatial information from adjacent slices.

In brief, the visual assessment demonstrates that SMGAN-3D has powerful capabilities in noise reduction, subtle image structure and edge preservation, and artifact removal. Most importantly, it satisfies the HVS requirements as shown in Figs. 3–6.

F. Computational Cost

In CT reconstruction, there is a trade-off between the computational cost and the image quality. In this respect, a DL-based algorithm has great advantages in computational efficiency. Although the training of DL-based methods is time-consuming, the trained model can rapidly perform the denoising tasks on reconstructed LDCT images. In our study, the proposed 2D method requires about 15 hours and the 3D model needs approximately 26 hours for training to converge. WGAN-VGG, which has the same number of layers, takes about 18 hours in the training phase. Compared with iterative reconstruction, any DL-based approach will require much less execution time, which facilitates the clinical workflow. In practice, our proposed SMGAN-2D and SMGAN-3D took 0.534 s and 4.864 s, respectively, in the validation phase on an NVIDIA Titan GPU. Compared with the results in [58], [59], our method took significantly less time. For example, soft threshold filtering (STF)-based TV minimization in the ordered-subset simultaneous algebraic reconstruction technique (OS-SART) framework took 45.1 s per iteration on the same computing platform. Hence, it is clear that once the model is trained, it requires far less computational overhead than an iterative reconstruction method, given that other conditions are equal.
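As a rough illustration of the reported timings, the ratio between one STF-based OS-SART iteration and one SMGAN inference can be computed as below. The comparison is only indicative: it assumes that the reported times refer to comparable amounts of data, which is not specified here, and it counts a single iteration of the iterative method even though several iterations are normally needed.

```python
# Reported execution times in seconds on the same computing platform.
inference_time_s = {"SMGAN-2D": 0.534, "SMGAN-3D": 4.864}
stf_os_sart_time_per_iteration_s = 45.1

# How many SMGAN inferences fit into one iteration of the iterative method.
ratio = {model: stf_os_sart_time_per_iteration_s / t
         for model, t in inference_time_s.items()}

for model, r in ratio.items():
    print(f"one OS-SART iteration takes about {r:.1f} times as long as {model} inference")
```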

IV. DISCUSSIONS

As mentioned before, different emphases on visual evaluation and traditional image quality metrics were extensively investigated. When training with only the mean-based losses (L1-net, L2-net, RED-CNN), the results can achieve high scores in quantitative metrics and yield promising results with substantial noise reduction. When training with the feature-based methods (WGAN-VGG), the results can meet HVS requirements for visualization since they preserve more structural details than mean-based methods. However, these methods suffer from the potential risk of content distortion since the perceptual loss is computed based on a network [47] trained on a natural image dataset. Practically and theoretically, even though adversarial learning can prevent smoothing in the image and capture structural characteristics, it may often result in severe loss of diagnostic information. To integrate the best characteristics of these loss functions, we have proposed a hybrid loss function to optimize the LDCT image quality.

Although our proposed network has achieved high-quality denoised LDCT images, there is still room for improvement. First and foremost, some feature edges in the processed results still look blurry. Also, some structural variations between NDCT and LDCT do not perfectly match. A possible way to enhance the correlation between NDCT and LDCT is to design a network with a better modeling capability, which is the work we have started. As far as our reader study is concerned, although visual assessment may be subject to intra- as well as inter-operator variability, on average such assessment can still evaluate different algorithms effectively, especially in a pilot study. In our follow-up study, we will invite more radiologists to rate the results, quantify inter-operator variability in a task-specific fashion, and also study intra-operator variability.

V. CONCLUSION

In conclusion, we have presented a 3D CNN-based method for LDCT noise reduction. As a follow-up to our previous work [37], a 3D convolutional neural network is utilized to improve the image quality in the 3D contextual setting. In addition, we have highlighted that the purpose of loss functions is to preserve high-resolution and critical features for diagnosis. Different from the state-of-the-art LDCT denoising method used in [36], an efficient structurally-sensitive loss has been included to capture informative structural features. Moreover, we have employed the Wasserstein distance to stabilize the training process for GAN. We have performed the quantitative and qualitative comparison of the image quality.

The assessments have demonstrated that SMGAN-3D can produce results with higher-level image quality for clinical usage compared with the existing denoising networks [34]–[37].

In the future, we will extend our model to other medical imaging modalities in a task-specific manner. Moreover, we plan to incorporate more advanced denoising models such as the networks mentioned in [60]–[62] for LDCT reconstruction. Finally, we are also interested in making our denoising software robust over different scanners.

ACKNOWLEDGMENT

The authors would like to thank NVIDIA Corporation for the donation of the Titan Xp GPU, which has been utilized for this study. The authors are grateful for helpful discussions with Dr. Mats Persson (Stanford University). This work was supported in part by the National Natural Science Foundation of China under Grant 61671312 and the Science and Technology Project of Sichuan Province of China under Grant 2018HH0070, and in part by the National Institutes of Health under Grants R21 EB019074, R01 EB016977, and U01 EB017140.

Biography

Chenyu You received the B.S. degree in Electrical Engineering with a minor in Mathematics from Rensselaer Polytechnic Institute, Troy, NY, USA in 2017 where he did undergraduate research under the supervision of Prof. G. Wang. He is currently working toward the M.S. degree in bioengineering and electrical engineering at Stanford University, Stanford, CA, USA. His research interests include X-ray computed tomography, magnetic resonance imaging, and deep learning.

Qingsong Yang received the B.S. degree from the Department of Engineering Physics, Tsinghua University, Beijing, China, in 2013. He is currently working toward the Ph.D. degree in biomedical engineering at Rensselaer Polytechnic Institute, Troy, NY, USA, under the supervision of Prof. G. Wang. His research interests include X-ray phase-contrast imaging and photon-counting CT technology.

Hongming Shan received the bachelor’s degree from Shandong University of Technology, China in 2011 and obtained the PhD degree from Fudan University, China in 2017. He is currently a postdoctoral scholar at the Rensselaer Polytechnic Institute. His research interests include machine/deep learning, computer vision, dimension reduction, and biomedical imaging.

Lars Gjesteby received the B.S. degree in biomedical engineering from the Rensselaer Polytechnic Institute, Troy, NY, USA in 2014, where he is currently pursuing the Ph.D. degree in biomedical engineering under the supervision of G. Wang. His current research interests include X-ray computed tomography, metal artifact reduction, deep learning, and the combination of CT and MRI for simultaneous imaging.

Guang Li received the B.S., M.S., and Ph.D. degrees from the Biomedical Engineering Department, Southwest University, Nanjing, China, in 2008, 2011, and 2016, respectively. Since 2016, he has been with the Department of Biomedical Engineering, Rensselaer Polytechnic Institute, Troy, NY, USA, as a Postdoctoral Researcher. His research interests include computed tomography reconstruction, image processing and cone-beam CT system development.

Shenghong Ju received the MD and Ph.D. degrees from the Medical School of Southeast University, Nanjing, China, in 2006. She is currently a professor and the chair of the Radiology Department in Zhongda Hospital, Southeast University. Her research is mainly in the field of molecular imaging, functional imaging and radiomics.

Zhuiyang Zhang received the B.S. and M.S. degrees from the Medical School of Soochow University, Suzhou, China, in 1986 and 1999, respectively. He is currently a chief radiologist in Wuxi No.2 People’s Hospital, Wuxi, China, mainly focused on computed tomography imaging.

Zhen Zhao received the MD and Ph.D. degrees from the Medical School of Southeast University, Nanjing, China, in 2017. He is currently a radiologist in Zhongda Hospital, Southeast University, mainly focused on abdominal imaging. His research interest is focused on molecular imaging.

Yi Zhang (S’11-M’12) received the B.S., M.S., and Ph.D. degrees from the College of Computer Science, Sichuan University, Chengdu, China, in 2005, 2008, and 2012, respectively. From 2014 to 2015, he was with the Department of Biomedical Engineering, Rensselaer Polytechnic Institute, Troy, NY, USA, as a Postdoctoral Researcher. He is currently an Associate Professor with the College of Computer Science, Sichuan University. His research interests include computed tomography, compressive sensing, and deep learning.

Wenxiang Cong received the B.S. degree in mathematics from Heilongjiang University, Harbin, China, in 1982, the M.S. degree in applied mathematics from Harbin Institute of Technology, Harbin, in 1988, and the Ph.D. degree in optical imaging from Beijing University of Science and Technology, Beijing, China, in 1998. He is currently a Research Scientist in the Department of Biomedical Engineering, Rensselaer Polytechnic Institute, Troy, NY, USA. His research interests include optical molecular tomographic imaging and X-ray phase-contrast/dark-field tomographic imaging.

Ge Wang (F’03) received the Ph.D. degree in ECE from the University at Buffalo, The State University of New York, Buffalo, NY, USA. He is Clark Crossan & Chair Professor and the Director of the Biomedical Imaging Center, RPI, USA. He authored the papers on the first spiral/helical cone-beam/multi-slice CT algorithm. Currently, there are over 100 million medical CT scans yearly, with a majority in the spiral cone-beam mode. He pioneered bioluminescence tomography. His group published the first papers on interior tomography and omni-tomography (“all-in-one”) to acquire diverse datasets simultaneously (“all-at-once”). His results were featured in Nature, Science, and PNAS, and recognized with awards. He has written more than 430 peer-reviewed journal publications. He is a Fellow of the IEEE, SPIE, OSA, AIMBE, AAPM, and AAAS.

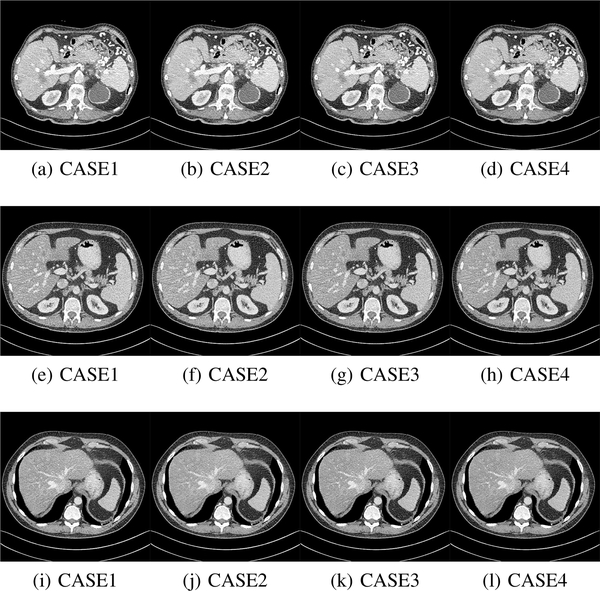

APPENDIX A

DIFFERENT TRAINING SETS FOR SMGAN-3D TRAINING

We randomly split the Mayo dataset [53] into four different training sets, each with 5,000 image patches of size 80 × 80 × 11 pixels. Then, the different training sets were used to validate the generalizability of our proposed 3D SMGAN model. The results are presented in Fig. 10 and Table IV.
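One way to draw 3D patches and divide them into disjoint training sets is sketched below. The sampling strategy (uniformly random patch positions), the axis order (rows, columns, slices), the synthetic volume, and the function names `sample_patches` and `split_into_sets` are all assumptions made for illustration; only the patch size of 80 × 80 × 11 and the number of sets are taken from the text.

```python
import numpy as np

def sample_patches(volume, n_patches, patch_shape=(80, 80, 11), rng=None):
    """Draw n_patches random 3D patches from a (rows, cols, slices) volume."""
    rng = np.random.default_rng() if rng is None else rng
    ph, pw, pd = patch_shape
    h, w, d = volume.shape
    patches = np.empty((n_patches, ph, pw, pd), dtype=volume.dtype)
    for i in range(n_patches):
        # Upper bounds are exclusive, so every patch lies fully inside the volume.
        r = rng.integers(0, h - ph + 1)
        c = rng.integers(0, w - pw + 1)
        s = rng.integers(0, d - pd + 1)
        patches[i] = volume[r:r + ph, c:c + pw, s:s + pd]
    return patches

def split_into_sets(patches, n_sets, rng):
    """Randomly partition the patches into n_sets disjoint groups."""
    order = rng.permutation(len(patches))
    return [patches[idx] for idx in np.array_split(order, n_sets)]

rng = np.random.default_rng(0)
volume = rng.normal(size=(128, 128, 16)).astype(np.float32)  # synthetic stand-in for a CT volume
patches = sample_patches(volume, n_patches=20, rng=rng)       # 5,000 per set in the study
training_sets = split_into_sets(patches, n_sets=4, rng=rng)

print([s.shape for s in training_sets])
```

Each 80 × 80 × 11 patch spans eleven adjacent slices, which is what allows a 3D model to exploit inter-slice correlation that a slice-by-slice 2D model does not see.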

Fig. 10:

Results from four different training sets for SMGAN-3D. (a)-(d) refer to Fig. 3, (e)-(h) refer to Fig. 5 and (i)-(l) refer to Fig. 7. The display window is [-160, 240] HU.

Table IV:

Quantitative results associated with different training sets for SMGAN-3D in Fig. 10.

| Training set | Figs. 10a-10d PSNR | Figs. 10a-10d SSIM | Figs. 10a-10d RMSE | Figs. 10e-10h PSNR | Figs. 10e-10h SSIM | Figs. 10e-10h RMSE | Figs. 10i-10l PSNR | Figs. 10i-10l SSIM | Figs. 10i-10l RMSE |
|---|---|---|---|---|---|---|---|---|---|
| Case1 | 26.678 | 0.811 | 0.0463 | 25.842 | 0.776 | 0.0510 | 26.538 | 0.812 | 0.0472 |
| Case2 | 26.759 | 0.814 | 0.0459 | 25.848 | 0.781 | 0.0510 | 26.544 | 0.814 | 0.0470 |
| Case3 | 26.589 | 0.807 | 0.0468 | 25.701 | 0.772 | 0.0519 | 26.455 | 0.806 | 0.0475 |
| Case4 | 26.903 | 0.815 | 0.0452 | 25.914 | 0.782 | 0.0506 | 26.662 | 0.816 | 0.0464 |

APPENDIX B

SUMMARY OF NOTATIONS

Contributor Information

Chenyu You, Departments of Bioengineering and Electrical Engineering, Stanford University, Stanford, CA, 94305.

Qingsong Yang, Department of Biomedical Engineering, Rensselaer Polytechnic Institute, Troy, NY, 12180.

Hongming Shan, Department of Biomedical Engineering, Rensselaer Polytechnic Institute, Troy, NY, 12180.

Lars Gjesteby, Department of Biomedical Engineering, Rensselaer Polytechnic Institute, Troy, NY, 12180.

Guang Li, Department of Biomedical Engineering, Rensselaer Polytechnic Institute, Troy, NY, 12180.

Shenghong Ju, Jiangsu Key Laboratory of Molecular and Functional Imaging, Department of Radiology, Zhongda Hospital, Medical School, Southeast University, Nanjing 210009, China.

Zhuiyang Zhang, Department of Radiology, Wuxi No.2 People’s Hospital, Wuxi, 214000, China.

Zhen Zhao, Jiangsu Key Laboratory of Molecular and Functional Imaging, Department of Radiology, Zhongda Hospital, Medical School, Southeast University, Nanjing 210009, China.

Yi Zhang, College of Computer Science, Sichuan University, Chengdu 610065, China.

Wenxiang Cong, Department of Biomedical Engineering, Rensselaer Polytechnic Institute, Troy, NY, 12180.

Ge Wang, Department of Biomedical Engineering, Rensselaer Polytechnic Institute, Troy, NY, 12180.

REFERENCES

- [1] Brenner DJ and Hall EJ, “Computed tomography - an increasing source of radiation exposure,” New Eng. J. Med, vol. 357, no. 22, pp. 2277–2284, 2007.

- [2] de González AB, Mahesh M, Kim K-P, Bhargavan M, Lewis R, Mettler F, and Land C, “Projected cancer risks from computed tomographic scans performed in the United States in 2007,” Arch. Intern. Med, vol. 169, no. 22, pp. 2071–2077, 2009.

- [3] Schauer DA and Linton OW, “National Council on Radiation Protection and Measurements report shows substantial medical exposure increase,” pp. 293–296, 2009.

- [4] Wang J, Lu H, Li T, and Liang Z, “Sinogram noise reduction for low-dose CT by statistics-based nonlinear filters,” in Proc. of SPIE, vol. 5747, 2005, p. 2059.

- [5] Wang J, Li T, Lu H, and Liang Z, “Penalized weighted least-squares approach to sinogram noise reduction and image reconstruction for low-dose X-ray computed tomography,” IEEE Trans. Med. Imaging, vol. 25, no. 10, pp. 1272–1283, 2006.

- [6] Balda M, Hornegger J, and Heismann B, “Ray contribution masks for structure adaptive sinogram filtering,” IEEE Trans. Med. Imaging, vol. 31, no. 6, pp. 1228–1239, 2012.

- [7] Yang G-Z, Burger P, Firmin DN, and Underwood S, “Structure adaptive anisotropic image filtering,” Proc. IEEE Int. Conf. Image Process. Applicat, vol. 14, no. 2, pp. 135–145, 1996.

- [8] Liu J, Ma J, Zhang Y, Chen Y, Yang J, Shu H, Luo L, Coatrieux G, Yang W, Feng Q et al., “Discriminative feature representation to improve projection data inconsistency for low dose CT imaging,” IEEE Trans. Med. Imaging, vol. 36, no. 12, pp. 2499–2509, 2017.

- [9] Chen Y, Ma J, Feng Q, Luo L, Shi P, and Chen W, “Nonlocal prior Bayesian tomographic reconstruction,” Journal of Mathematical Imaging and Vision, vol. 30, no. 2, pp. 133–146, 2008.

- [10] Manduca A, Yu L, Trzasko JD, Khaylova N, Kofler JM, McCollough CM, and Fletcher JG, “Projection space denoising with bilateral filtering and CT noise modeling for dose reduction in CT,” Med. Phys, vol. 36, no. 11, pp. 4911–4919, 2009.

- [11] Sidky EY, Duchin Y, Pan X, and Ullberg C, “A constrained, total-variation minimization algorithm for low-intensity x-ray CT,” Med. Phys, vol. 38, no. S1, 2011.

- [12] De Man B and Basu S, “Distance-driven projection and backprojection in three dimensions,” Phys. Med. Biol, vol. 49, no. 11, p. 2463, 2004.

- [13] Whiting BR, Massoumzadeh P, Earl OA, O’Sullivan JA, Snyder DL, and Williamson JF, “Properties of preprocessed sinogram data in x-ray computed tomography,” Med. Phys, vol. 33, no. 9, pp. 3290–3303, 2006.

- [14] Elbakri IA and Fessler JA, “Statistical image reconstruction for polyenergetic X-ray computed tomography,” IEEE Trans. Med. Imaging, vol. 21, no. 2, pp. 89–99, 2002.

- [15] Tian Z, Jia X, Yuan K, Pan T, and Jiang SB, “Low-dose CT reconstruction via edge-preserving total variation regularization,” Phys. Med. Biol, vol. 56, no. 18, p. 5949, 2011.

- [16] Liu Y, Ma J, Fan Y, and Liang Z, “Adaptive-weighted total variation minimization for sparse data toward low-dose x-ray computed tomography image reconstruction,” Phys. Med. Biol, vol. 57, no. 23, p. 7923, 2012.

- [17] Xu Q, Yu H, Mou X, Zhang L, Hsieh J, and Wang G, “Low-dose X-ray CT reconstruction via dictionary learning,” IEEE Trans. Med. Imaging, vol. 31, no. 9, pp. 1682–1697, 2012.

- [18] Zhang Y, Mou X, Wang G, and Yu H, “Tensor-based dictionary learning for spectral CT reconstruction,” IEEE Trans. Med. Imaging, vol. 36, no. 1, pp. 142–154, 2017.

- [19] Sidky EY and Pan X, “Image reconstruction in circular cone-beam computed tomography by constrained, total-variation minimization,” Phys. Med. Biol, vol. 53, no. 17, p. 4777, 2008.

- [20] Chen Y, Yin X, Shi L, Shu H, Luo L, Coatrieux J-L, and Toumoulin C, “Improving abdomen tumor low-dose CT images using a fast dictionary learning based processing,” Phys. Med. Biol, vol. 58, no. 16, p. 5803, 2013.

- [21] Ma J, Huang J, Feng Q, Zhang H, Lu H, Liang Z, and Chen W, “Low-dose computed tomography image restoration using previous normal-dose scan,” Med. Phys, vol. 38, no. 10, pp. 5713–5731, 2011.

- [22] Li Z, Yu L, Trzasko JD, Lake DS, Blezek DJ, Fletcher JG, McCollough CH, and Manduca A, “Adaptive nonlocal means filtering based on local noise level for CT denoising,” Med. Phys, vol. 41, no. 1, 2014.

- [23] Buades A, Coll B, and Morel J-M, “A review of image denoising algorithms, with a new one,” Multiscale Model. Simul, vol. 4, no. 2, pp. 490–530, 2005.

- [24] Cheddad A, Svensson C, Sharpe J, Georgsson F, and Ahlgren U, “Image processing assisted algorithms for optical projection tomography,” IEEE Trans. Med. Imaging, vol. 31, no. 1, pp. 1–15, 2012.

- [25] Feruglio PF, Vinegoni C, Gros J, Sbarbati A, and Weissleder R, “Block matching 3D random noise filtering for absorption optical projection tomography,” Phys. Med. Biol, vol. 55, no. 18, p. 5401, 2010.

- [26] Chen Y, Shi L, Feng Q, Yang J, Shu H, Luo L, Coatrieux J-L, and Chen W, “Artifact suppressed dictionary learning for low-dose CT image processing,” IEEE Trans. Med. Imaging, vol. 33, no. 12, pp. 2271–2292, 2014.

- [27] Liu J, Hu Y, Yang J, Chen Y, Shu H, Luo L, Feng Q, Gui Z, and Coatrieux G, “3D feature constrained reconstruction for low dose CT imaging,” IEEE Trans. on Circuits Syst. Video Technol, 2016.

- [28] Wang G, “A perspective on deep imaging,” IEEE Access, vol. 4, pp. 8914–8924, 2016.

- [29] Wang G, Kalra M, and Orton CG, “Machine learning will transform radiology significantly within the next 5 years,” Med. Phys, vol. 44, no. 6, pp. 2041–2044, 2017.

- [30] Zhang W, Li R, Deng H, Wang L, Lin W, Ji S, and Shen D, “Deep convolutional neural networks for multi-modality isointense infant brain image segmentation,” NeuroImage, vol. 108, pp. 214–224, 2015.

- [31] Wang S, Kim M, Wu G, and Shen D, “Scalable high performance image registration framework by unsupervised deep feature representations learning,” in Deep Learning for Medical Image Analysis. Elsevier, 2017, pp. 245–269.

- [32] Cao X, Yang J, Gao Y, Wang Q, and Shen D, “Region-adaptive deformable registration of CT/MRI pelvic images via learning-based image synthesis,” IEEE Trans. Image Process, 2018.

- [33] Cattell L, Platsch G, Pfeiffer R, Declerck J, Schnabel JA, Hutton C, Initiative ADN et al., “Classification of amyloid status using machine learning with histograms of oriented 3D gradients,” NeuroImage: Clinical, vol. 12, pp. 990–1003, 2016.

- [34] Chen H, Zhang Y, Zhang W, Liao P, Li K, Zhou J, and Wang G, “Low-dose CT via convolutional neural network,” Biomed. Opt. Express, vol. 8, no. 2, pp. 679–694, 2017.

- [35] Chen H, Zhang Y, Kalra MK, Lin F, Chen Y, Liao P, Zhou J, and Wang G, “Low-dose CT with a residual encoder-decoder convolutional neural network,” IEEE Trans. Med. Imaging, vol. 36, no. 12, pp. 2524–2535, 2017.

- [36] Wolterink JM, Leiner T, Viergever MA, and Išgum I, “Generative adversarial networks for noise reduction in low-dose CT,” IEEE Trans. Med. Imaging, vol. 36, no. 12, pp. 2536–2545, 2017.

- [37] Yang Q, Yan P, Zhang Y, Yu H, Shi Y, Mou X, Kalra MK, and Wang G, “Low dose CT image denoising using a generative adversarial network with Wasserstein distance and perceptual loss,” arXiv preprint arXiv:1708.00961, 2017.

- [38] Kang E, Min J, and Ye JC, “A deep convolutional neural network using directional wavelets for low-dose x-ray CT reconstruction,” arXiv preprint arXiv:1610.09736, 2016.

- [39] Shan H, Zhang Y, Yang Q, Kruger U, Cong W, and Wang G, “3D convolutional encoder-decoder network for low-dose CT via transfer learning from a 2D trained network,” arXiv preprint arXiv:1802.05656, 2018.

- [40] Yang W, Zhang H, Yang J, Wu J, Yin X, Chen Y, Shu H, Luo L, Coatrieux G, Gui Z et al., “Improving low-dose CT image using residual convolutional network,” IEEE Access, vol. 5, pp. 24698–24705, 2017.

- [41] Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, and Bengio Y, “Generative adversarial nets,” in Proc. Adv. Neural Inf. Process. Syst, 2014, pp. 2672–2680.

- [42] Arjovsky M, Chintala S, and Bottou L, “Wasserstein GAN,” arXiv preprint arXiv:1701.07875, 2017.

- [43] Zhao H, Gallo O, Frosio I, and Kautz J, “Loss functions for image restoration with neural networks,” IEEE Trans. Comput. Imaging, vol. 3, no. 1, pp. 47–57, 2017.

- [44] Fu L, Lee T-C, Kim SM, Alessio AM, Kinahan PE, Chang Z, Sauer K, Kalra MK, and De Man B, “Comparison between pre-log and post-log statistical models in ultra-low-dose CT reconstruction,” IEEE Trans. Med. Imaging, vol. 36, no. 3, pp. 707–720, 2017.

- [45] Calhoun PS, Kuszyk BS, Heath DG, Carley JC, and Fishman EK, “Three-dimensional volume rendering of spiral CT data: theory and method,” Radiographics, vol. 19, no. 3, pp. 745–764, 1999.

- [46] Nair V and Hinton GE, “Rectified linear units improve restricted Boltzmann machines,” in Proc. 27th Int. Conf. Machine Learning, 2010, pp. 807–814.

- [47] Simonyan K and Zisserman A, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

- [48] Gulrajani I, Ahmed F, Arjovsky M, Dumoulin V, and Courville AC, “Improved training of Wasserstein GANs,” in Proc. Advances Neural Information Processing Systems Conf, 2017, pp. 5769–5779.

- [49] Wang Z, Bovik AC, Sheikh HR, and Simoncelli EP, “Image quality assessment: from error visibility to structural similarity,” IEEE Trans. Image Process, vol. 13, no. 4, pp. 600–612, 2004.

- [50] Wang Z and Bovik AC, “Mean squared error: Love it or leave it? A new look at signal fidelity measures,” IEEE Signal Process. Mag, vol. 26, no. 1, pp. 98–117, 2009.

- [51] Wang Z, Simoncelli EP, and Bovik AC, “Multiscale structural similarity for image quality assessment,” in Proc. IEEE Asilomar Conf. Signals, Syst., Comput, vol. 2. IEEE, 2003, pp. 1398–1402.

- [52] LeCun Y, Bottou L, Bengio Y, and Haffner P, “Gradient-based learning applied to document recognition,” Proc. IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

- [53] AAPM, “Low dose CT grand challenge,” 2017. [Online]. Available: http://www.aapm.org/GrandChallenge/LowDoseCT/#

- [54] Xie J, Xu L, and Chen E, “Image denoising and inpainting with deep neural networks,” in Proc. Adv. Neural Inf. Process. Syst, 2012, pp. 341–349.

- [55] Dong C, Loy CC, He K, and Tang X, “Image super-resolution using deep convolutional networks,” IEEE Trans. Pattern Anal. Mach. Intell, vol. 38, no. 2, pp. 295–307, 2016.

- [56] Kingma DP and Ba J, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [57] Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, Corrado GS, Davis A, Dean J, Devin M et al., “TensorFlow: Large-scale machine learning on heterogeneous distributed systems,” arXiv preprint arXiv:1603.04467, 2016.

- [58] Liu R, Luo Y, and Yu H, “GPU-based acceleration for interior tomography,” IEEE Access, vol. 2, pp. 757–770, 2014.

- [59] Matenine D, Goussard Y, and Després P, “GPU-accelerated regularized iterative reconstruction for few-view cone beam CT,” Med. Phys, vol. 42, no. 4, pp. 1505–1517, 2015.

- [60] Dai J, Qi H, Xiong Y, Li Y, Zhang G, Hu H, and Wei Y, “Deformable convolutional networks,” CoRR, abs/1703.06211, vol. 1, no. 2, p. 3, 2017.

- [61] He K, Zhang X, Ren S, and Sun J, “Deep residual learning for image recognition,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn, 2016, pp. 770–778.

- [62] Sabour S, Frosst N, and Hinton GE, “Dynamic routing between capsules,” in Proc. Adv. Neural Inf. Process. Syst, 2017, pp. 3859–3869.