Abstract

The articulated laser sensor is a new kind of trans-scale, non-contact measurement instrument for regular-size spaces and industrial applications. It overcomes many deficiencies and application limitations of traditional measurement methods. The sensor consists of two articulated laser sensing modules, each made up of two rotary tables and one collimated laser. The three axes of each module form a non-orthogonal shaft architecture, so the calibration methods for the system parameters of traditional instruments are no longer suitable. A novel high-accuracy calibration method of an articulated laser sensor for trans-scale 3D measurement is proposed. The calibration of the laser beam, which is based on perspective projection models and image processing techniques, is the key innovative aspect of this study and is introduced in detail. The experimental results show a maximum distance error of 0.05 mm for the articulated laser sensor. These results demonstrate that the proposed calibration method is feasible and effective, particularly for the calibration of laser beams.

Keywords: articulated laser sensor, calibration, perspective projection model, image processing, laser beam

1. Introduction

With the rapid development of manufacturing, 3D measurement has been widely applied in complex surface measurement, dynamic scanning, and reverse engineering [1,2,3,4]. Traditional 3D measurement instruments include the laser tracker [5,6], total station [7], and theodolite [8]. Their measurement accuracy relies heavily on the orthogonality of their structure, which increases their cost. Structured light 3D scanners and laser vision sensors are also widely applied in 3D measurement [9]. However, the cameras of vision sensors are difficult to apply to trans-scale measurement, because their limited focus range leads to a dead measurement distance beyond which objects cannot be seen clearly.

For trans-scale and non-contact measurement in regular-size spaces and industrial applications, traditional measurement methods have many deficiencies and application limitations. With reference to the three-axis architecture of traditional instruments, an articulated laser sensor combined with adaptive focusing technology of laser alignment is proposed. However, the calibration methods for the system parameters of traditional instruments are no longer suitable for articulated laser sensors. Wu et al. proposed a non-orthogonal shaft laser theodolite (N-theodolite) [10]. Its intrinsic parameters were obtained by minimum-zone circle fitting and linear fitting, and its extrinsic parameters were calibrated by aiming at the targets on a scale bar placed at several different positions. However, this calibration method is suitable only for large-size measurement with low accuracy. The articulated laser sensor employs the N-theodolite’s non-orthogonal architecture. It is therefore essential to study a method of calibrating the spatial position of the laser beam, which represents the visualized measuring axis. The spatial position consists of the direction vector of the laser beam and a fixed point on the laser beam.

Bi et al. mounted a laser displacement sensor on the Z-axis of a coordinate measuring machine (CMM) to build an optical coordinate measuring system, and proposed a method based on a standard sphere to calibrate the laser beam direction. This method requires length information from the laser displacement sensor [11]. Sun et al. presented a vision measurement model of the laser displacement sensor, and achieved the calibration with a planar target mounted on a 2D moving platform. However, during the calibration, it was difficult to ensure that the planar target was perpendicular to the fixed plane of the moving platform [12]. Xie et al. established a multi-probe measurement system, and proposed a technique called the “coplanar calibration method” to calibrate the extrinsic parameters of the structured-light sensor. This method is not suitable for determining the initial spatial position of the laser beam [13]. Yang et al. proposed an inner diameter measuring device that uses several laser displacement sensors, with a method that calibrates the directions of three laser beams simultaneously [14]. The issue is that these laser beam calibration methods obtain only the direction vector of the laser beam [15,16,17], and the spatial position of the laser beam cannot be determined from the direction vector alone.

To meet the calibration requirements of the articulated laser sensor, a novel calibration method is proposed in this paper. The key innovative aspect of this paper is the proposed method to calibrate the spatial position of the laser beam.

The remainder of this paper is organized as follows. In Section 2, the principle of the articulated laser sensor is introduced. In Section 3, the calibration method of the articulated laser sensor is presented, and the calibration method of the laser beam in particular is introduced in detail. In Section 4, the image processing of the laser spot is presented. In Section 5, measurement experiments are performed, and the experimental data validate that the proposed method is effective. The paper ends with some concluding remarks in Section 6.

2. Principle of Articulated Laser Sensor

2.1. System Construction

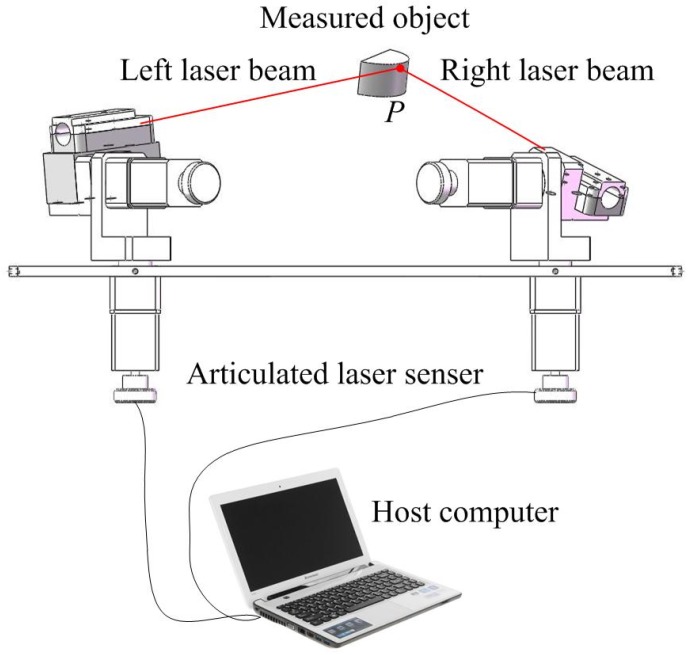

The trans-scale 3D coordinate measurement system mainly consists of two articulated laser sensing modules, as shown in Figure 1. Each module is made up of two one-dimensional rotary tables and one collimated laser. As with traditional orthogonal measurement instruments, there are three axes in the articulated laser sensing module. However, the three axes have no strict requirements for orthogonality or intersection: they are skew lines, and the angle between any two axes need not be 90°. The rotating axes of the two rotary tables are called the “vertical axis” and the “horizontal axis”, respectively, and the line along the collimated laser beam is called the “measuring axis”.

Figure 1. Structural diagram of articulated laser sensor.

2.2. Measurement Principle

Similar to determining 3D coordinates with traditional forward intersection measurement instruments, the measurement operation of the articulated laser sensor is based on the intersection of two laser beams on the measured object. With the help of a high-resolution digital CCD camera, the intersection can be achieved accurately. During measurement, the coincidence of the two laser beams on the measured object denotes the intersection of the visualized measuring axes. As shown in Figure 2, when the left laser beam coincides with the right laser beam at a point on the measured object, the 3D coordinates of the point can be calculated from the rotation angles provided by the rotary tables of the two articulated laser sensing modules and the mathematical measurement model. The mathematical measurement model is established based on the perspective projection model and the quaternion kinematic model.
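The geometric core of this intersection step can be illustrated with a minimal sketch. It is not the measurement model of this paper, which relies on the perspective projection and quaternion models; it only assumes that each laser beam is available as a point and a direction in a common coordinate system. The function names `rotate_about_axis` and `intersect_beams` are hypothetical. Because two calibrated beams rarely intersect exactly, the sketch returns the midpoint of their common perpendicular together with the remaining gap.

```python
import numpy as np

def rotate_about_axis(point, axis_point, axis_dir, angle_rad):
    """Rotate a 3D point about an arbitrary axis (Rodrigues' formula)."""
    origin = np.asarray(axis_point, dtype=float)
    p = np.asarray(point, dtype=float) - origin
    k = np.asarray(axis_dir, dtype=float)
    k = k / np.linalg.norm(k)
    rotated = (p * np.cos(angle_rad)
               + np.cross(k, p) * np.sin(angle_rad)
               + k * (k @ p) * (1.0 - np.cos(angle_rad)))
    return rotated + origin

def intersect_beams(p1, d1, p2, d2):
    """Midpoint of the common perpendicular of two 3D lines, and their gap."""
    p1, d1, p2, d2 = (np.asarray(v, dtype=float) for v in (p1, d1, p2, d2))
    d1 = d1 / np.linalg.norm(d1)
    d2 = d2 / np.linalg.norm(d2)
    w = p1 - p2
    b = d1 @ d2
    denom = 1.0 - b * b
    if denom < 1e-12:
        raise ValueError("beams are (nearly) parallel")
    # Line parameters of the two mutually closest points
    s = (b * (d2 @ w) - (d1 @ w)) / denom
    t = ((d2 @ w) - b * (d1 @ w)) / denom
    q1 = p1 + s * d1
    q2 = p2 + t * d2
    return 0.5 * (q1 + q2), float(np.linalg.norm(q1 - q2))
```

In such a sketch, the beam of each module in a given pose would be obtained by rotating its calibrated fixed point and direction about the horizontal and vertical axes with `rotate_about_axis` before calling `intersect_beams`; the order of the two rotations is an assumption that depends on the mechanical layout.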

Figure 2. Structural diagram of the measurement system.

3. Calibration Principle

Calibration of the system parameters of the articulated laser sensor is necessary to achieve high-accuracy measurement, as the sensor measurement accuracy is greatly affected by the calibration method [18,19]. The system parameters consist of intrinsic and extrinsic parameters. For determining 3D coordinates, it is necessary to obtain the relative positions of the three axes of the articulated laser sensing module, which are called the intrinsic parameters. The extrinsic parameters denote the relationship between the left module and the right module of the articulated laser sensor, and include a rotation matrix and a translation vector. The three axes of every articulated laser sensing module can be abstracted as three lines in 3D space, as shown in Figure 3. The system parameters of the articulated laser sensor and their physical meanings are listed in Table 1.

Figure 3. Schematic diagram of parameters calibration.

Table 1. The system parameters of the articulated laser sensor.

| Category | Parameter | Component | Physical Meaning |
|---|---|---|---|
| Intrinsic parameters | Vertical axis | Vector | Direction of vertical axis |
| | | Point | Fixed point of vertical axis |
| | Horizontal axis | Vector | Direction of horizontal axis |
| | | Point | Fixed point of horizontal axis |
| | Measuring axis | Vector | Direction of measuring axis |
| | | Point | Fixed point of measuring axis |
| Extrinsic parameters | Rotation–translation matrix | Rotation matrix | Rotation between the left and right modules |
| | | Translation vector | Translation between the left and right modules |

3.1. Calibration of the Vertical and Horizontal Axes

To make precise measurements, it is necessary to calibrate the parameters with a high-accuracy measurement instrument. A CMM is employed to calibrate the intrinsic and extrinsic parameters of the articulated laser sensor in the laboratory. The accuracy of the CMM is (2.1 + 2.8l/1000) μm, where l is the measurement distance. Two porcelain beads with a diameter of 7.935 mm and a machining accuracy of 0.25 μm are pasted onto the two articulated laser sensing modules, respectively. Then, the two articulated laser sensing modules are rotated about the vertical and horizontal axes in steps of ten degrees, and the center of the porcelain bead is measured at each position. Thirty-six measured data points are obtained, which together trace a complete ellipse. The least squares method is utilized to optimize the parameters. The direction vectors and fixed points of the three axes are needed for calibration. The parameters of the vertical and horizontal axes are obtained as follows:

(1) Based on the least squares method, a plane is fitted to the centers of the porcelain bead measured by the CMM.

(2) The distances from the measured points to the fitted plane are calculated. Points whose distance exceeds the threshold are eliminated, and the plane is fitted again.

(3) The normal vector of the fitted plane is recorded as the direction vector of the rotation axis.

(4) The remaining points are projected onto the fitted plane.

(5) Based on the least squares method, an ellipse is fitted to the projected points.

(6) The center of the fitted ellipse is recorded as the fixed point of the rotation axis.
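Steps (1)–(3) can be sketched as follows. This is an illustrative sketch rather than the implementation used in this work: it swaps in an orthogonal-distance (SVD) plane fit instead of an algebraic least squares fit, performs a single rejection pass, and the function names and the threshold value passed by the caller are assumptions.

```python
import numpy as np

def fit_plane(points):
    """Orthogonal least-squares plane: returns (centroid, unit normal)."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # The right singular vector of the smallest singular value is the normal
    _, _, vt = np.linalg.svd(pts - centroid)
    return centroid, vt[-1]

def fit_plane_robust(points, threshold):
    """Fit a plane, drop points farther than `threshold`, and fit again."""
    pts = np.asarray(points, dtype=float)
    centroid, normal = fit_plane(pts)
    distances = np.abs((pts - centroid) @ normal)
    kept = pts[distances <= threshold]
    if len(kept) < 3:
        raise ValueError("too few points left after outlier rejection")
    centroid, normal = fit_plane(kept)
    return centroid, normal, kept
```

The returned unit normal plays the role of the direction vector of the rotation axis; its sign is arbitrary and would need to be fixed by a convention, for example by the sense of rotation.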

In the measurement space, a plane can be expressed as [20]

| (1) |

where the vector contains the plane parameters and represents a point on the plane.

The four parameters of are redundant. Equation (1) can be simplified as

| (2) |

where .

Equation (2) takes the form of the matrix equation

| (3) |

where is obtained from measured data, denotes unknown parameter, and .

The number of measured data points exceeds the number of unknown parameters, so Equation (3) is overdetermined and is solved by the least squares method. The normal vector of the fitted plane is recorded as

| (4) |

The unit normal vector is defined as the direction vector of the rotation axis. The distances from the measured points to the fitted plane are expressed as

| (5) |

The point is eliminated if . The remaining points are projected onto the fitted plane, and the matrix equation is expressed as

| (6) |

These projected points are used for fitting the ellipse, and the center is defined as the fixed point of the rotation axis. An ellipse can be expressed as [21]

| (7) |

where the vector contains the ellipse parameters and is a point on the ellipse.

The six parameters of are redundant. Equation (7) can be simplified as

| (8) |

where .

Equation (8) takes the form of a matrix equation:

| (9) |

where is obtained from projection data, denotes unknown parameter, and . The center of ellipse is expressed as

| (10) |

In summary, the direction vector of the rotation axis is expressed as Equation (4), and the fixed point is expressed as Equation (10).
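The projection and ellipse-fitting steps can be sketched in the same spirit. The sketch assumes that a plane (a point `origin` on it and its `normal`) has already been fitted, and that `origin` lies inside the ellipse, as the centroid of evenly spaced bead positions does; the conic is normalized by fixing its constant term, which is one common way of removing the redundant parameter. All names are hypothetical.

```python
import numpy as np

def plane_basis(normal):
    """Two orthonormal in-plane vectors (u, v) for a given plane normal."""
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    # Pick a helper axis that is not nearly parallel to the normal
    helper = np.array([1.0, 0.0, 0.0]) if abs(n[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    u = np.cross(n, helper)
    u = u / np.linalg.norm(u)
    v = np.cross(n, u)
    return u, v

def ellipse_center_on_plane(points, origin, normal):
    """Project points onto the plane, fit a conic, return its 3D center."""
    pts = np.asarray(points, dtype=float)
    origin = np.asarray(origin, dtype=float)
    u, v = plane_basis(normal)
    rel = pts - origin
    # Keeping only the in-plane components is the orthogonal projection
    x, y = rel @ u, rel @ v
    # Conic a*x^2 + b*x*y + c*y^2 + d*x + e*y = 1 (constant term fixed)
    design = np.column_stack([x * x, x * y, y * y, x, y])
    a, b, c, d, e = np.linalg.lstsq(design, np.ones(len(x)), rcond=None)[0]
    # The center is where the gradient of the conic vanishes
    xc, yc = np.linalg.solve(np.array([[2 * a, b], [b, 2 * c]]),
                             np.array([-d, -e]))
    return origin + xc * u + yc * v
```

The returned point corresponds to the fixed point of the rotation axis. Fixing the constant term fails if the conic passes through `origin`, which is why the sketch requires `origin` to lie inside the ellipse.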

3.2. Calibration of the Laser Beam

In 3D space, the laser beam can be abstracted as a spatial line. In the world coordinate system, the equation of the laser beam can be expressed as

| (11) |

where and are the coordinates of the laser spots. Therefore, the key aspect is to obtain the coordinates of the laser spots in the world coordinate system.

The calibration principle diagram is shown in Figure 4. There are two parallel planes which are called the “image plane” and the “target plane”, respectively. The method used to ensure that the two planes are parallel is introduced in Section 4. Two porcelain beads are pasted to the target plane. The centers of the two porcelain beads are defined as and . The projection of and onto the target plane are defined as and . The projection of and onto the image plane are defined as and . The laser spot on the target plane is defined as , and the projection onto the image plane is defined as .

Figure 4. The calibration diagram of the laser beam.

Based on the principle of calibration, four coordinate systems are established:

-

(1)

The world coordinate system is defined as . The CMM’s measurement coordinate system is regarded as world coordinate system.

-

(2)

The viewpoint coordinate system is defined as . The axis is perpendicular to the target plane, and the and coordinates of origin are equal to the and coordinates of .

-

(3)

The actual coordinate system of pixels on CCD is defined as .

-

(4)

The image plane coordinate system is defined as . The axis is parallel to axis, and the and coordinates of origin are equal to the and coordinates of .

The equation of the target plane in the world coordinate system is expressed as

| (12) |

where is the unit normal vector of plane and is the coordinates of a point on the target plane in the world coordinate system.

The two porcelain beads centers of and are obtained by CMM. Several points on the target plane are measured by CMM, and the parameters of direction vector and fixed point are obtained by plane fitting. The coordinates of each point in the various coordinate systems are listed in Table 2.

Table 2. Coordinates of each point in the various coordinate systems.

| Point | ||||

|---|---|---|---|---|

A central aim of calibration is to be able to obtain the coordinate of in the world coordinate system.

and are the projection onto the target plane of and . The coordinates of and can be calculated by

| (13) |

| (14) |

where and can be calculated by

| (15) |

| (16) |
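As an illustration of this projection step, the orthogonal projection of a point onto a plane takes the following standard form. The notation is assumed here for the purpose of the sketch: $\mathbf{n}$ is the unit normal vector of the target plane, $Q$ is a point on the plane, $P_i$ is a bead center, and $P_i'$ is its projection.

```latex
d_i = \mathbf{n} \cdot (P_i - Q), \qquad
P_i' = P_i - d_i\,\mathbf{n}, \qquad i = 1, 2
```

Here $d_i$ is the signed distance from the bead center to the target plane. For a bead resting directly on the plane, its magnitude would be expected to be close to the bead radius.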

Based on the introduction of described above, the transformation from to is defined as

| (17) |

where is the unit normal vector of the rotation axis, and . The coordinates of and in can be calculated by

| (18) |

| (19) |

Because the axis is perpendicular to the target plane, the relationship of the axis coordinates of , and is obtained by

| (20) |

The vector is expressed as

| (21) |

In order to ensure that axis is parallel to the axis, the vector is expressed as

| (22) |
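One way to picture such a transformation is a rotation that aligns the world z-axis with the unit normal of the target plane, built from a rotation axis and a rotation angle. The sketch below uses Rodrigues' formula for this; it does not reproduce the additional constraint on the in-plane axes described above, and the names `rotation_aligning` and `world_to_view` are hypothetical.

```python
import numpy as np

def rotation_aligning(a, b):
    """Rotation matrix R such that R @ (a/|a|) equals b/|b|."""
    a = np.asarray(a, dtype=float) / np.linalg.norm(a)
    b = np.asarray(b, dtype=float) / np.linalg.norm(b)
    axis = np.cross(a, b)
    s = np.linalg.norm(axis)   # sine of the rotation angle
    c = a @ b                  # cosine of the rotation angle
    if s < 1e-12:
        if c > 0:
            return np.eye(3)
        # Antiparallel vectors: turn by 180 degrees about any axis normal to a
        helper = np.array([1.0, 0.0, 0.0]) if abs(a[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
        k = np.cross(a, helper)
        k = k / np.linalg.norm(k)
        return 2.0 * np.outer(k, k) - np.eye(3)
    k = axis / s
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    # Rodrigues' formula: R = I + sin(t) K + (1 - cos(t)) K^2
    return np.eye(3) + s * K + (1.0 - c) * (K @ K)

def world_to_view(point, origin, plane_normal):
    """Express a world point in a frame whose z-axis is the plane normal."""
    R = rotation_aligning([0.0, 0.0, 1.0], plane_normal)
    return R.T @ (np.asarray(point, dtype=float) - np.asarray(origin, dtype=float))
```

In the resulting frame, the z coordinate of a point is its signed distance from the plane through `origin`, which is what makes the z coordinates of points on the target plane equal.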

Similarly, the transformation from to is defined as

| (23) |

where . The method used to get the coordinates of , and in is introduced in Section 4. The coordinates of , and in can be calculated by

| (24) |

| (25) |

| (26) |

Because the image plane is parallel to the target plane, is obtained. According to perspective projection, the equation is given by

| (27) |

| (28) |

Therefore, can be calculated via Equations (27) and (28), and it can be submitted back to Equations (27) and (28) to calculate and . Thus, the coordinate can be obtained, and the coordinate of laser spot in can be calculated by

| (29) |
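The idea of locating the laser spot from two reference points can be illustrated with a simplified stand-in for the perspective projection relations. The sketch assumes that the rectified image plane is parallel to the target plane and that the image is not mirrored, so that image coordinates and target-plane coordinates are related by a 2D similarity (scale, rotation, translation). Two point correspondences, such as the two projected bead centers, determine this similarity. The function name and argument layout are hypothetical.

```python
def map_image_to_target(ref_img, ref_tgt, spot_img):
    """Map an image point to target-plane coordinates via a 2D similarity.

    ref_img, ref_tgt: two (x, y) reference points in image and target
    coordinates, in matching order. spot_img: (x, y) of the laser spot.
    """
    z1, z2 = complex(*ref_img[0]), complex(*ref_img[1])
    w1, w2 = complex(*ref_tgt[0]), complex(*ref_tgt[1])
    if z1 == z2:
        raise ValueError("reference image points must be distinct")
    # In complex form a similarity is w = a*z + b, with a = scale * rotation
    a = (w2 - w1) / (z2 - z1)
    b = w1 - a * z1
    w = a * complex(*spot_img) + b
    return w.real, w.imag
```

The in-plane coordinates obtained this way, together with the known plane coordinate along the normal, would then be transformed back to the world coordinate system with the inverse of the world-to-viewpoint transformation.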

4. Image Processing

4.1. Centroid Extraction

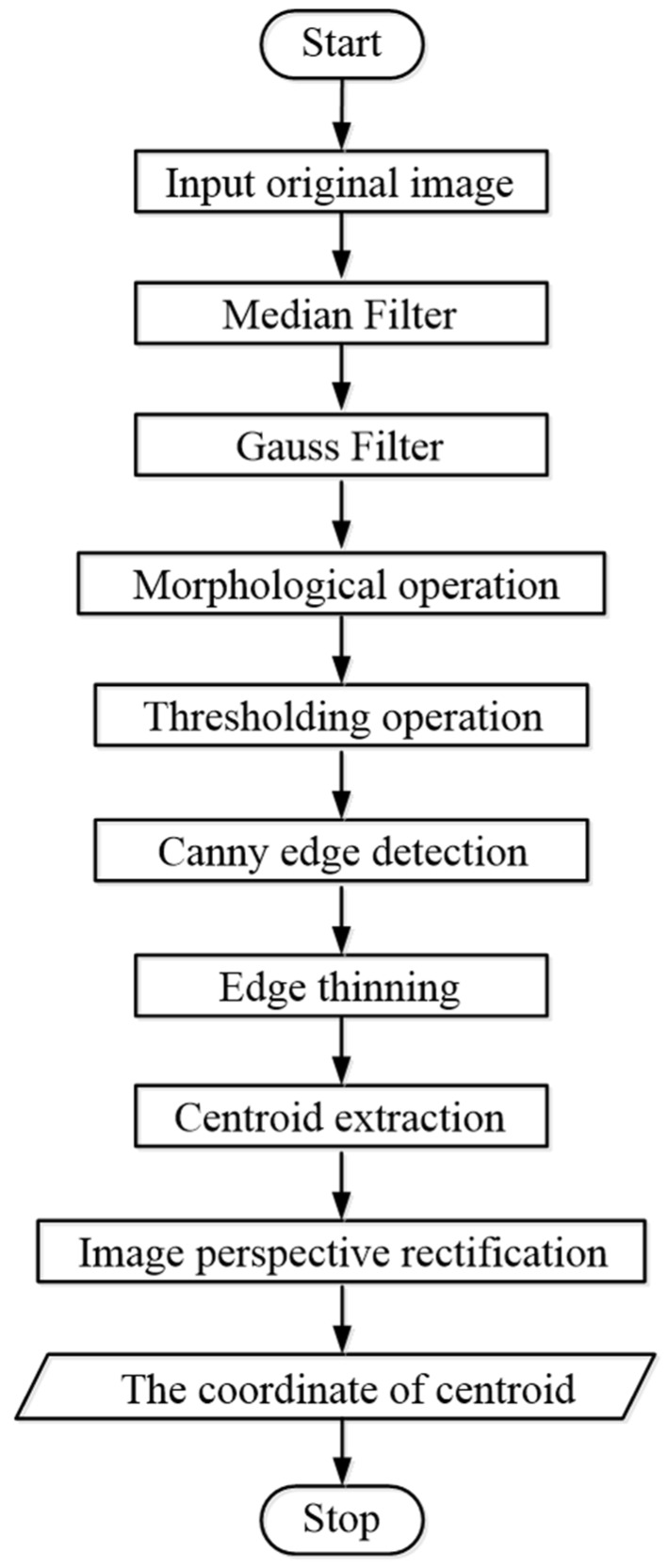

One original image including one laser spot and six white spots is shown in Figure 5. The four white spots in the center of the image are used to ensure the parallelism of the target plane and the image plane, and their centers are control points. The two white spots on the right side of the image are the images of the two porcelain beads pasted to the target plane. The flow chart of centroid extraction is shown in Figure 6. The centers of the laser spot and each white spot are obtained by the centroid method [22], as shown in Figure 7.

Figure 5. Example diagram of the laser spot and other white spots.

Figure 6. Flow chart of centroid extraction.

Figure 7. (a) Image of laser spot extraction; (b) image of white spots extraction.

Through the extraction method above, we can obtain the 2D coordinates of the centers of the laser spot and each white spot in the pixel coordinate system. Because the camera position is uncertain, the image plane is generally not parallel to the target plane. Therefore, further image processing is necessary.
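A minimal sketch of an intensity-weighted centroid is given here for illustration. It assumes a grayscale image region that contains a single spot and a simple global threshold; the preprocessing steps of the flow chart in Figure 6 are not reproduced, and the function name is hypothetical.

```python
import numpy as np

def weighted_centroid(image, threshold):
    """Sub-pixel centroid (u, v) of the pixels brighter than `threshold`."""
    img = np.asarray(image, dtype=float)
    # Background-subtracted weights; pixels at or below the threshold count as 0
    weights = np.where(img > threshold, img - threshold, 0.0)
    total = weights.sum()
    if total == 0:
        raise ValueError("no pixel exceeds the threshold")
    rows, cols = np.indices(img.shape)
    u = (cols * weights).sum() / total   # column (horizontal) coordinate
    v = (rows * weights).sum() / total   # row (vertical) coordinate
    return u, v
```

For an image with several spots, each spot would first have to be isolated, for example by connected-component labeling, before the centroid of each region is computed.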

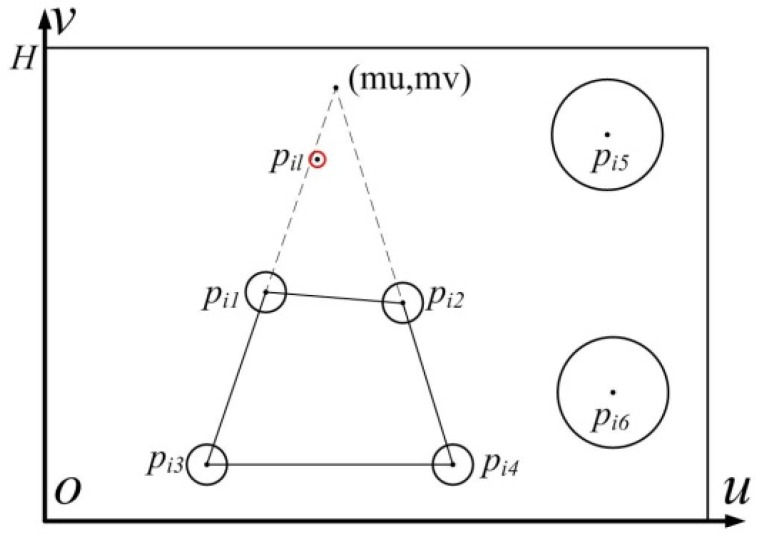

4.2. Image Perspective Rectification

In order to ensure that the image plane is parallel to the target plane, an image perspective rectification method based on double vanishing points is employed [23]. The four control points are arranged in a square on the target plane. Because the camera position is uncertain, they generally do not form a square in the image. The principle diagram of image rectification is shown in Figure 8.

Figure 8. Schematic diagram of image rectification.

By analyzing the location of the four control points, the workflow of image rectification is as follows:

-

(1)

The image is rotated to ensure that is parallel to the axis. According to the coordinates , , and after rotation, the vanishing point coordinate is obtained.

-

(2)The expression of the rectification in u-axis direction is

(30) -

(3)

where is the width of square,

-

(4)and the expression of the rectification in the axis direction is

(31) -

(5)

After the rectification in the axis and the axis direction, is parallel to , but is not parallel to . The image is rotated 90°, and the rectification in the axis and the axis direction is executed again.
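For comparison, the same goal of mapping the four control points back to a square can be reached with a standard four-point homography. The sketch below uses this substitute technique (a direct linear transform solved by SVD), not the double vanishing point method of [23]; the function names and the example coordinates in the usage note are assumptions.

```python
import numpy as np

def homography_from_points(src, dst):
    """3x3 homography H with dst ~ H @ src, from four or more point pairs."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the entries of H
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)
    # Normalize; assumes H[2, 2] is not zero, which holds for mild perspective
    return H / H[2, 2]

def apply_homography(H, points):
    """Apply H to an (N, 2) array of points and return an (N, 2) array."""
    pts = np.asarray(points, dtype=float)
    hom = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return hom[:, :2] / hom[:, 2:3]
```

With the four extracted control-point centers as `src` and the corners of a square of the known side length as `dst`, `apply_homography` can then be applied to the centers of the laser spot and the bead images to obtain their rectified coordinates.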

5. Experiment

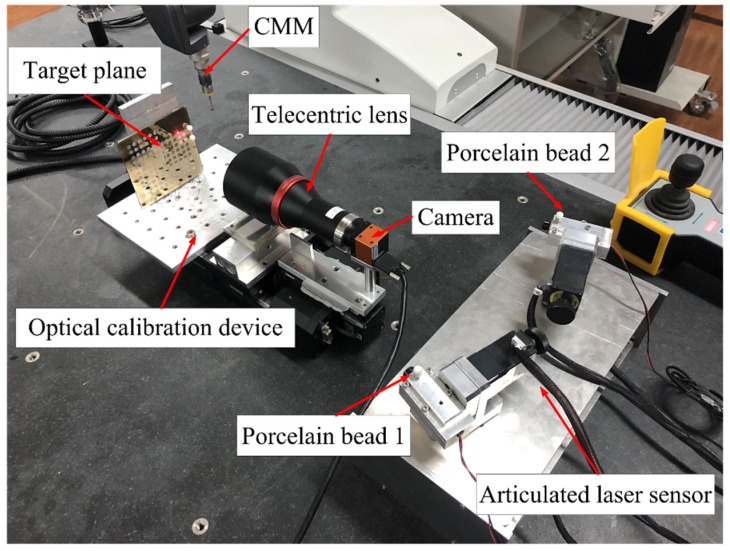

The calibration experiment site is shown in Figure 9. In the laboratory, a CMM is employed to calibrate these intrinsic and extrinsic parameters, and the CMM’s measurement coordinate system is defined as the world coordinate system.

Figure 9. Calibration experiment diagram.

5.1. Calibration of the Vertical and Horizontal Axes

As shown in Figure 9, there are two porcelain beads pasted onto the two articulated laser sensing modules, respectively. By measuring porcelain bead 1 and porcelain bead 2 rotating around the corresponding axes, the direction vectors and fixed points of the horizontal and vertical axes are obtained by plane and ellipse fitting. The intrinsic parameters under the CMM coordinate system are shown in Table 3.

Table 3. Intrinsic parameters of the vertical and horizontal axes (mm).

| Category | Axis | Fixed Point | Direction Vector |
|---|---|---|---|
| Left module | Horizontal axis | (160.919, 162.305, −622.648) | (0.954, −0.300, 0.005) |
| | Vertical axis | (208.213, 147.371, −604.198) | (0.006, −0.002, −0.999) |
| Right module | Horizontal axis | (416.534, 157.549, −622.284) | (0.973, 0.232, −0.004) |
| | Vertical axis | (368.818, 146.245, −603.301) | (−0.002, −0.003, −0.999) |

5.2. Calibration of the Laser Beam

As shown in Figure 9, the target plane and a camera are fixed on a platform. Two porcelain beads are pasted to the target plane. The perspective projection image of a ball is usually not a standard circle but an ellipse, and the geometric center of the ellipse does not coincide with the real image of the ball center [24]. To avoid this parallax error of traditional industrial lenses, a telecentric lens is employed.

According to the calibration principle, the calibration flow of the laser beam can be operated as follows:

(1) The articulated laser sensor is fixed on the operating platform of the CMM.

(2) The optical calibration device is adjusted to ensure that the laser beam projects onto the target plane.

(3) The centers of the two beads are measured by the CMM, and their coordinates are recorded.

(4) Nine points on the target plane are measured by the CMM and used to fit a plane. The parameters of the target plane are obtained, including the unit normal vector and a point on the plane.

(5) An image is collected by a camera with a telecentric lens.

(6) Steps (2)–(5) are repeated more than seven times.

(7) The collected images are processed as described in Section 4.

(8) Based on the least squares method, a spatial line is fitted to the coordinates of the laser spots in the CMM’s coordinate system.

(9) The parameters of the laser beam are obtained, including the direction vector and a fixed point on the laser beam, as shown in Table 4.

Table 4. Intrinsic parameters of the measuring axis (mm).

| Category | Axis | Fixed Point | Direction Vector |
|---|---|---|---|
| Left module | Measuring axis | (313.363, 702.058, −618.860) | (0.270, 0.963, 0.005) |
| Right module | Measuring axis | (252.987, 691.699, −619.093) | (−0.2934, 0.956, 0.003) |
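The spatial line fit of step (8) can be sketched as an orthogonal least squares fit through the laser spot coordinates. The sketch assumes that the spots are given as an (N, 3) array in the CMM coordinate system; the function name is hypothetical, and the centroid is only one possible choice of fixed point on the line.

```python
import numpy as np

def fit_line_3d(points):
    """Orthogonal least-squares 3D line: returns (fixed point, unit direction)."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # The dominant right singular vector is the direction of largest spread
    _, _, vt = np.linalg.svd(pts - centroid)
    direction = vt[0]
    # Resolve the sign ambiguity: point from the first spot toward the last
    if (pts[-1] - pts[0]) @ direction < 0:
        direction = -direction
    return centroid, direction
```

The perpendicular distances of the spots from the fitted line give a direct check of the quality of the calibration data.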

5.3. Calibration of Extrinsic Parameters

The above calibration results show that the intrinsic parameters of the three axes of each articulated laser sensing module are obtained in the CMM’s coordinate system. The extrinsic parameters are obtained as follows

5.4. Verification Experiment

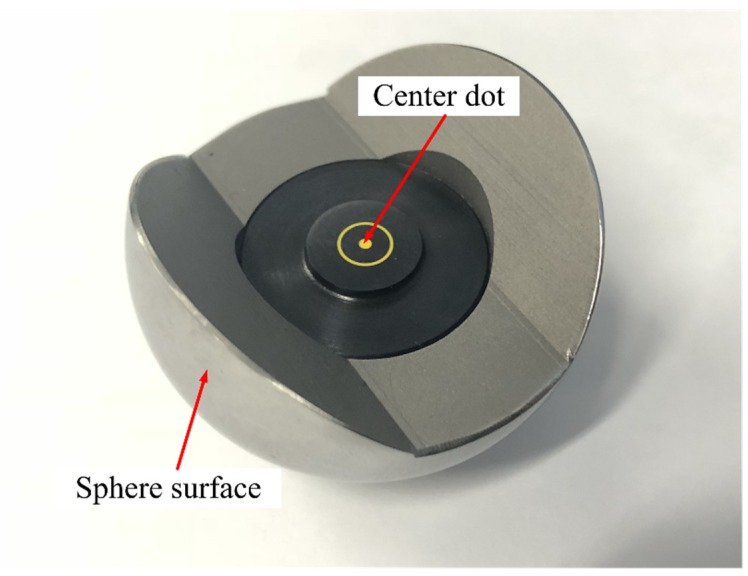

Further measurement experiments are needed to verify the accuracy of the proposed calibration method using the intrinsic and extrinsic parameters. A high-precision machined hemispherical target with a center dot, as shown in Figure 10, is employed to achieve high-accuracy measurement. The machining accuracy of the hemispherical target is 0.01 mm.

Figure 10. High-precision machined hemispherical target.

The hemispherical target is placed in different positions, and the distance between two positions is the measurand. Points on the sphere surface are measured by the CMM, and the coordinates of the center dot are obtained by sphere surface fitting, as shown in Figure 11. The intersection of the two laser beams is measured by the articulated laser sensor. The measurement results from the CMM are taken as the true values, and those from the articulated laser sensor are taken as the measured values, as shown in Table 5.
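The sphere surface fitting used to locate the center can be sketched with a linear least squares formulation. The sketch assumes CMM surface points given as an (N, 3) array and uses the algebraic form of the sphere equation, which is linear in the unknowns; the function name is hypothetical.

```python
import numpy as np

def fit_sphere(points):
    """Algebraic least-squares sphere fit: returns (center, radius)."""
    pts = np.asarray(points, dtype=float)
    # |p|^2 = 2 c.p + (r^2 - |c|^2) is linear in the center c and the constant
    design = np.column_stack([2.0 * pts, np.ones(len(pts))])
    rhs = (pts ** 2).sum(axis=1)
    solution = np.linalg.lstsq(design, rhs, rcond=None)[0]
    center = solution[:3]
    radius = np.sqrt(solution[3] + center @ center)
    return center, radius
```

The distance between two target positions then follows as the norm of the difference between the two fitted centers.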

Figure 11. Measurement experiment diagram.

Table 5. Comparison between the measured values and real values.

| Point No. | Left/Right Module | Horizontal Angle (°) | Vertical Angle (°) | Measured Length (mm) | Real Length (mm) | Deviation (mm) |
|---|---|---|---|---|---|---|
| 1 | Left | 0.000 | 0.000 | 91.747 | 91.752 | −0.005 |
| | Right | −15.775 | −0.108 | | | |
| 2 | Left | 14.986 | −0.057 | | | |
| | Right | −0.052 | 0.012 | | | |
| 3 | Left | 33.143 | −1.342 | 166.854 | 164.831 | 0.023 |
| | Right | 38.992 | −1.258 | | | |
| 4 | Left | 15.786 | −1.325 | | | |
| | Right | 17.184 | −1.277 | | | |
| 5 | Left | 12.152 | −1.475 | 172.134 | 172.141 | −0.007 |
| | Right | 12.206 | −1.447 | | | |
| 6 | Left | 9.647 | 19.920 | | | |
| | Right | 3.046 | 20.580 | | | |
| 7 | Left | −8.503 | 20.021 | 157.470 | 157.467 | 0.003 |
| | Right | −12.452 | 18.288 | | | |
| 8 | Left | −31.538 | 19.282 | | | |
| | Right | −29.141 | 15.717 | | | |
| 9 | Left | −0.848 | 14.621 | 128.730 | 128.753 | −0.023 |
| | Right | 4.000 | 14.582 | | | |
| 10 | Left | 11.500 | 13.578 | | | |
| | Right | 17.800 | 14.570 | | | |
| 11 | Left | 12.500 | −1.744 | 352.831 | 352.781 | 0.050 |
| | Right | −1.102 | −1.754 | | | |
| 12 | Left | 26.000 | 12.595 | | | |
| | Right | 35.000 | 14.606 | | | |

The comparison shows that the maximum distance error of the articulated laser sensor calibrated by the new method is 0.05 mm in the experiment.

6. Conclusions

A novel high-accuracy calibration method of an articulated laser sensor is proposed in this paper. The system parameters to be calibrated are the spatial positions of the three axes of the articulated laser sensing module. The calibration principles of the three axes are introduced in detail. In particular, the calibration of the laser beam, which is the key innovative aspect of the study, is elaborated. A novel optical calibration device is also presented to achieve high-accuracy operation, including a linear displacement guide, high-precision machined porcelain beads, and a camera with a telecentric lens. The calibration method of the laser beam combines a perspective projection model with image processing techniques. The image processing procedure is divided into two steps: centroid extraction and image perspective rectification. The experimental results show a maximum distance error of 0.05 mm for the articulated laser sensor. These encouraging results indicate that the proposed calibration method is suitable for articulated laser sensors, particularly for the calibration of the laser beam.

Author Contributions

Conceptualization, B.W. and J.K.; Methodology, J.K., X.D. and T.X.; System Structure, J.K. and X.D.; Software, J.K. and X.D.; Validation, J.K. and X.D.; Formal Analysis, J.K. and X.D.; Writing-Original Draft Preparation, J.K. and X.D.; Writing-Review & Editing, B.W. and J.K.; Funding Acquisition, B.W. and T.X.

Funding

This research was funded by the National Natural Science Foundation of China (Grant Nos. 61771336, 61671321, 51475328), and the Natural Science Foundation of Tianjin in China (No. 18JCZDJC38600).

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Lee R.T., Shiou F.J. Multi-beam laser probe for measuring position and orientation of freeform surface. Measurement. 2011;44:1–10. doi: 10.1016/j.measurement.2010.05.005.

- 2. Sun X., Zhang Q. Dynamic 3-D shape measurement method: A review. Opt. Lasers Eng. 2010;48:191–204.

- 3. Zhang Q., Sun X., Xiang L., Sun X. 3-D shape measurement based on complementary Gray-code light. Opt. Lasers Eng. 2012;50:574–579. doi: 10.1016/j.optlaseng.2011.06.024.

- 4. Feng D., Feng M., Ozer E., Fukuda Y. A Vision-Based Sensor for Noncontact Structural Displacement Measurement. Sensors. 2015;15:16557–16575. doi: 10.3390/s150716557.

- 5. Muralikrishnan B., Phillips S., Sawyer D. Laser trackers for large-scale dimensional metrology: A review. Precis. Eng. 2016;44:13–28. doi: 10.1016/j.precisioneng.2015.12.001.

- 6. Ouyang J.F., Liu W.L., Yan Y.G., Sun D.X. Angular error calibration of laser tracker system. SPIE. 2006;6344:6344–6348.

- 7. Scherer M., Lerma J. From the conventional total station to the prospective image assisted photogrammetric scanning total station: Comprehensive review. J. Surv. Eng. 2009;135:173–178. doi: 10.1061/(ASCE)0733-9453(2009)135:4(173).

- 8. Wu B., Wang B. Automatic Measurement in Large-Scale Space with the Laser Theodolite and Vision Guiding Technology. Adv. Mech. Eng. 2013;5:1–8. doi: 10.1155/2013/629385.

- 9. Zhou F., Peng B., Cui Y., Wang Y., Tan H. A novel laser vision sensor for omnidirectional 3D measurement. Opt. Laser Technol. 2013;45:1–12. doi: 10.1016/j.optlastec.2012.08.005.

- 10. Wu B., Yang F., Ding W., Xue T. A novel calibration method for non-orthogonal shaft laser theodolite measurement system. Rev. Sci. Instrum. 2016;87:035102. doi: 10.1063/1.4943017.

- 11. Bi C., Liu Y., Fang J.-G., Guo X., Lv L.-P., Dong P. Calibration of laser beam direction for optical coordinate measuring system. Measurement. 2015;73:191–199.

- 12. Sun J., Zhang J., Liu Z., Zhang G. A vision measurement model of laser displacement sensor and its calibration method. Opt. Lasers Eng. 2013;51:1344–1352. doi: 10.1016/j.optlaseng.2013.05.009.

- 13. Xie Z., Wang J., Zhang Q. Complete 3D measurement in reverse engineering using a multi-probe system. Mach. Tools Manuf. 2005;45:1474–1486.

- 14. Yang T., Wang Z., Wu Z., Li X., Wang L., Liu C. Calibration of Laser Beam Direction for Inner Diameter Measuring Device. Sensors. 2017;17:294. doi: 10.3390/s17020294.

- 15. Xie Z., Wang X., Chi S. Simultaneous calibration of the intrinsic and extrinsic parameters of structured-light sensors. Opt. Lasers Eng. 2014;58:9–18. doi: 10.1016/j.optlaseng.2014.01.001.

- 16. Yang K., Yu H.-Y., Yang C. Calibration of line structured-light vision measurement system based on free-target. J. Mech. Electr. Eng. 2016;33:1066–1070.

- 17. Smith K.B., Zheng Y.F. Point laser triangulation probe calibration for coordinate metrology. J. Manuf. Sci. Eng. 2000;122:582–593. doi: 10.1115/1.1286256.

- 18. Wu D., Chen T., Li A. A High Precision Approach to Calibrate a Structured Light Vision Sensor in a Robot-Based Three-Dimensional Measurement System. Sensors. 2016;16:1388. doi: 10.3390/s16091388.

- 19. Yin S., Ren Y., Guo Y., Zhu J., Yang S., Ye S. Development and calibration of an integrated 3D scanning system for high-accuracy large-scale metrology. Measurement. 2014;54:65–76. doi: 10.1016/j.measurement.2014.04.009.

- 20. Men Y., Zhang G., Men C., Li X., Ma N. A Stereo Matching Algorithm Based on Four-Moded Census and Relative Confidence Plane Fitting. Chin. J. Electron. 2015;24:807–812. doi: 10.1049/cje.2015.10.024.

- 21. Mulleti S., Seelamantula C.S. Ellipse Fitting Using the Finite Rate of Innovation Sampling Principle. IEEE Trans. Image Process. 2016;25:1451–1464. doi: 10.1109/TIP.2015.2511580.

- 22. Yang J., Zhang T., Song J., Liang B. High accuracy error compensation algorithm for star image sub-pixel subdivision location. Opt. Lasers Eng. 2010;18:1002–1010.

- 23. Luo X., Du Z. Method of Image Perspective Transform Based on Double Vanishing Point. Comput. Eng. 2009;35:212–214.

- 24. Gu F., Zhao H. Analysis and correction of projection error of camera calibration ball. Acta Opt. Sin. 2012;12:209–215.