Abstract

This paper studies the asymptotic properties of a sparse linear regression estimator, referred to as broken adaptive ridge (BAR) estimator, resulting from an L0-based iteratively reweighted L2 penalization algorithm using the ridge estimator as its initial value. We show that the BAR estimator is consistent for variable selection and has an oracle property for parameter estimation. Moreover, we show that the BAR estimator possesses a grouping effect: highly correlated covariates are naturally grouped together, which is a desirable property not known for other oracle variable selection methods. Lastly, we combine BAR with a sparsity-restricted least squares estimator and give conditions under which the resulting two-stage sparse regression method is selection and estimation consistent in addition to having the grouping property in high- or ultrahigh-dimensional settings. Numerical studies are conducted to investigate and illustrate the operating characteristics of the BAR method in comparison with other methods.

Keywords: Feature selection, Grouping effect, L0-penalized regression, Oracle estimator, Sparsity recovery

1. Introduction

Simultaneous variable selection and parameter estimation is an essential task in statistics and its applications. A natural approach to variable selection is L0-penalized regression, which directly penalizes the cardinality of a model through well-known information criteria such as Mallows’s Cp [35], Akaike’s information criterion (AIC) [1], the Bayesian information criterion (BIC) [9, 38], and the risk inflation criterion (RIC) [17]. It has also been shown to have an optimality property for variable selection and parameter estimation [40]. However, the L0-penalization problem is nonconvex, and finding its global optimum requires an exhaustive combinatorial best subset search, which is NP-hard and computationally infeasible even for data of moderate dimension. Moreover, it can be unstable for variable selection [6]. A popular alternative is L1-penalized regression, or Lasso [43], which is known to be consistent for variable selection [36, 48] although not consistent for parameter estimation [13, 51]. During the past two decades, much effort has been devoted to improving Lasso using various variants of the L1 penalty, which are consistent not only for variable selection but also for parameter estimation [13, 15, 24, 27, 47, 51]. Yet another approach is to approximate the L0 penalty using a surrogate L0 penalty function, which could lead to a sparser model with favorable numerical properties [11, 25, 40, 41].

This paper concerns a different L0-based approach to variable selection and parameter estimation which performs iteratively reweighted L2 penalized regressions in order to approximate an L0 penalized regression [19, 33]. Unlike a surrogate L0 penalization method such as the truncated Lasso penalization (TLP) method [41], the L0-based iteratively reweighted L2 penalization method can be viewed as performing a sequence of surrogate L0 penalizations, where each reweighted L2 penalty serves as an adaptive surrogate L0 penalty and the approximation of L0 penalization improves with each iteration. It is also computationally appealing since each iteration only involves a reweighted L2 penalization. The idea of iteratively reweighted penalization has its roots in the well-known Lawson algorithm [29] in classical approximation theory, which later found applications in Ld minimization for values of d ∈ (0, 1) [37], sparse signal reconstruction [21], compressive sensing [7, 8, 10, 20, 45], and variable selection [19, 33]. However, despite its increasing popularity in applications and favorable numerical performance, theoretical studies of the iteratively reweighted L2 penalization method have so far focused on its non-asymptotic numerical convergence properties [10, 19, 33]. Its statistical properties such as selection consistency and estimation consistency remain unexplored.

The primary purpose of this paper is to study rigorously the asymptotic properties of a specific version of the L0-based iteratively reweighted L2-penalization method for the linear model. We will refer to the resulting estimator as the broken adaptive ridge (BAR) estimator to reflect the fact that it is obtained by fitting adaptively reweighted ridge regressions, which eventually break part of the ridge as the corresponding weights diverge to infinity, thereby producing sparsity. The BAR algorithm uses an L2-penalized (or ridge) estimator as its initial estimator and updates the estimator iteratively using reweighted L2 penalizations, where the weight for each coefficient at each iteration step is the inverse of its squared estimate from the previous iteration, as defined later in Eqs. (2)–(3) of Section 2.

Our key theoretical contribution is to establish two large-sample properties for the BAR estimator. First, we will show that the BAR estimator possesses an oracle property: when the true model is sparse, with probability tending to 1, it estimates the zero coefficients as zeros and estimates the non-zero coefficients as well as if the true sub-model were known in advance. We stress that because the BAR estimator is the limit of an iterative penalization algorithm, commonly used techniques for deriving oracle properties of a single-step penalized regression method do not apply directly. As pointed out by a reviewer, the common strategies used in analyzing approximate solutions to nonconvex penalized methods such as SCAD and MCP usually rely on an initial estimator that is close to the optimum, and then show that some kind of iterative refinement of the initial estimator leads to a good local estimator [15, 34]. Our derivations of the asymptotic oracle properties for BAR are inspired by this idea, but the technical details are substantially different from those for other variable selection methods in the literature. A unique feature of the BAR algorithm is that at each reweighted L2 iteration, the resulting estimator is not sparse. We show that with a good initial ridge estimator, each BAR iteration shrinks the estimates of the zero coefficients towards zero and drives the estimates of the nonzero coefficients towards an oracle ridge estimator. Consequently, sparsity and selection consistency are achieved only in the limit of the BAR algorithm, and the estimation consistency of the ridge estimator for the nonzero coefficients is preserved. Second, we will show that the BAR estimator has a grouping effect: highly correlated variables are naturally grouped together with similar coefficients, which is a desirable property inherited from the ridge estimator. Therefore, our results show that the BAR estimator enjoys the best of the L0 and L2 penalized regressions, while avoiding some of their limitations. Lastly, we consider high- or ultrahigh-dimensional settings in which the dimension is larger or much larger than the sample size. Frommlet and Nuel [19] suggested using the mBIC penalty for the BAR method in high-dimensional settings, but its asymptotic consistency has yet to be studied rigorously. We propose a different extension of the BAR method to ultrahigh-dimensional settings by combining it with a sparsity-restricted least squares estimation method and show rigorously that the resulting two-stage estimator is asymptotically consistent for variable selection and parameter estimation, and that it retains the grouping property.

In Section 2, we review the BAR estimator. In Section 3, we state our main results on its oracle property and grouping property for the general non-orthogonal case; we also develop rigorously a two-stage BAR method for high- or ultrahigh-dimensional settings. Section 4 illustrates the finite-sample operating characteristics of BAR in comparison with some popular methods for variable selection, parameter estimation, prediction, and grouping. Section 5 presents illustrations involving a prostate cancer data set and a gut microbiota data set. Concluding remarks are given in Section 6. Proofs of the theoretical results are deferred to the Appendix.

2. Broken adaptive ridge estimator

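Consider the linear regression model

$$y = \sum_{j=1}^{p_n} \beta_j x_j + \varepsilon = X\beta + \varepsilon,$$

where $y = (y_1, \ldots, y_n)^\top$ is a response vector, $x_j = (x_{1j}, \ldots, x_{nj})^\top$, $j \in \{1, \ldots, p_n\}$, are predictors, and $\beta = (\beta_1, \ldots, \beta_{p_n})^\top$ is the vector of regression coefficients. Assume that β0 is the true parameter. Without loss of generality, we suppose that $X = (x_1, \ldots, x_{p_n}) = (w_1, \ldots, w_n)^\top$ and y are centered. In this paper, || ‧ || represents the Euclidean norm for a vector and the spectral norm for a matrix. Let

$$\hat\beta^{(0)} = (X^\top X + \xi_n I)^{-1} X^\top y \tag{1}$$

be the ridge estimator, where ξn ≥ 0 is a tuning parameter. For any integer k ≥ 1, define

$$\hat\beta^{(k)} = g(\hat\beta^{(k-1)}), \tag{2}$$

where

$$g(\tilde\beta) = \{X^\top X + \lambda_n D(\tilde\beta)\}^{-1} X^\top y \tag{3}$$

and $D(\tilde\beta) = \mathrm{diag}(\tilde\beta_1^{-2}, \ldots, \tilde\beta_{p_n}^{-2})$. The broken adaptive ridge (BAR) estimator is defined as

$$\hat\beta^{*} = \lim_{k \to \infty} \hat\beta^{(k)}.$$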

Remark 1. [Weight selection and arithmetic overflow] The initial ridge estimator and its subsequent updates do not yield any zero coefficient. Thus, the weights in each iteration are well defined. However, as the iterated values converge to their limit, the weight matrix will inevitably run into division by a very small nonzero value, which could cause an arithmetic overflow. A commonly used solution to this problem is to introduce a small perturbation, or more specifically, to replace $\tilde\beta_j^{-2}$ by $(\tilde\beta_j^{2} + \delta^{2})^{-1}$ for some δ > 0 to avoid numerical instability [10, 19]. Here we provide a different solution by rewriting formula (3) as

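$$g(\tilde\beta) = \Gamma(\tilde\beta)\{\Gamma(\tilde\beta) X^\top X \Gamma(\tilde\beta) + \lambda_n I\}^{-1} \Gamma(\tilde\beta) X^\top y, \tag{4}$$

where $\Gamma(\tilde\beta) = \mathrm{diag}(\tilde\beta_1, \ldots, \tilde\beta_{p_n})$, and $I$ is the identity matrix. Because the right-hand side of (4) involves only multiplication, instead of division, by the $\tilde\beta_j$, numerical stability is achieved without having to introduce a perturbation δ. We also note that the BAR algorithm based on (4) is equivalent to the algorithm of Liu and Li [33].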
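The iteration in Remark 1 can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: it assumes the division-free update takes the form Γ(ΓX⊤XΓ + λI)⁻¹ΓX⊤y with Γ the diagonal matrix of the previous iterate, and the function name `bar_fit`, the stopping rule, and the default values of `xi`, `lam`, `max_iter`, and `tol` are all hypothetical choices.

```python
import numpy as np

def bar_fit(X, y, xi=1.0, lam=1.0, max_iter=1000, tol=1e-10):
    """Sketch of BAR: ridge start, then division-free reweighted L2 updates."""
    p = X.shape[1]
    XtX = X.T @ X
    Xty = X.T @ y
    # Initial ridge estimator with tuning parameter xi.
    beta = np.linalg.solve(XtX + xi * np.eye(p), Xty)
    for _ in range(max_iter):
        # Gamma X'X Gamma + lam * I, where Gamma = diag(previous iterate).
        A = beta[:, None] * XtX * beta[None, :] + lam * np.eye(p)
        # New iterate: Gamma A^{-1} Gamma X'y (no division by beta anywhere).
        beta_new = beta * np.linalg.solve(A, beta * Xty)
        if np.max(np.abs(beta_new - beta)) < tol:
            return beta_new
        beta = beta_new
    return beta
```

Because no coordinate of the previous iterate is ever inverted, coefficients that shrink towards zero simply remain near zero in later iterations instead of triggering an overflow. In practice a small cutoff would still be needed to report such coefficients as exact zeros; that cutoff is not part of the sketch.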

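Remark 2. [Orthogonal case] To appreciate how the BAR method works, it is helpful to examine the orthogonal case, which admits a closed form for the BAR estimator and which has been previously studied in [19]. Assume that $X^\top X = n I$. Then, for each j ∈ {1, …, pn}, the jth component of $g(\tilde\beta)$ defined by (3) is equal to

$$g(\tilde\beta_j) = \frac{\tilde\beta_j^{2}}{\tilde\beta_j^{2} + \lambda_n/n}\, \hat\beta_j^{\mathrm{OLS}},$$

where $\hat\beta_j^{\mathrm{OLS}} = x_j^\top y / n$ is the ordinary least squares (OLS) estimate. Hence the BAR algorithm can be implemented component by component. First, we show the existence and uniqueness of the BAR estimator $\hat\beta_j^{*}$. Without loss of generality, assume $\hat\beta_j^{\mathrm{OLS}} > 0$, and then $\hat\beta_j^{(k)} > 0$ for every integer k. Also note that the map $g(x) = x^{2} \hat\beta_j^{\mathrm{OLS}} / (x^{2} + \lambda_n/n)$ is increasing in x on (0, ∞). Set ϕ(x) = g(x) − x. For fixed n and λn, the following facts can be verified easily.

(i) If $\hat\beta_j^{\mathrm{OLS}} < 2\sqrt{\lambda_n/n}$, then ϕ(x) < 0 for all x > 0. Therefore, $\hat\beta_j^{(k+1)} < \hat\beta_j^{(k)}$ for all k and $\hat\beta_j^{*} = \lim_{k \to \infty} \hat\beta_j^{(k)} = 0$.

(ii) If $\hat\beta_j^{\mathrm{OLS}} = 2\sqrt{\lambda_n/n}$, then ϕ(x) ≤ 0, with equality only at $x = \sqrt{\lambda_n/n}$. This leads to $\hat\beta_j^{(k+1)} \le \hat\beta_j^{(k)}$ for all k. Then,

$$\hat\beta_j^{*} = \begin{cases} \sqrt{\lambda_n/n} & \text{if } \hat\beta_j^{(0)} \ge \sqrt{\lambda_n/n}, \\ 0 & \text{otherwise.} \end{cases}$$

(iii) If $\hat\beta_j^{\mathrm{OLS}} > 2\sqrt{\lambda_n/n}$, then ϕ(x) ≥ 0 when x ∈ [x1, x2], where

$$x_1 = \frac{\hat\beta_j^{\mathrm{OLS}} - \sqrt{(\hat\beta_j^{\mathrm{OLS}})^{2} - 4\lambda_n/n}}{2} \quad \text{and} \quad x_2 = \frac{\hat\beta_j^{\mathrm{OLS}} + \sqrt{(\hat\beta_j^{\mathrm{OLS}})^{2} - 4\lambda_n/n}}{2},$$

and ϕ(x) < 0 otherwise. As a result,

$$\hat\beta_j^{*} = \begin{cases} x_2 & \text{if } \hat\beta_j^{(0)} > x_1, \\ x_1 & \text{if } \hat\beta_j^{(0)} = x_1, \\ 0 & \text{if } \hat\beta_j^{(0)} < x_1. \end{cases}$$

In conclusion, the BAR estimator always converges to a unique solution depending on the settings of the initial value. Since we choose the ridge estimator as the initial value, which would be close to the OLS estimator under mild conditions, the BAR algorithm for the jth component converges to the following limit:

$$\hat\beta_j^{*} = \begin{cases} 0 & \text{if } |\hat\beta_j^{\mathrm{OLS}}| < 2\sqrt{\lambda_n/n}, \\[4pt] \dfrac{1}{2}\left\{\hat\beta_j^{\mathrm{OLS}} + \mathrm{sign}(\hat\beta_j^{\mathrm{OLS}}) \sqrt{(\hat\beta_j^{\mathrm{OLS}})^{2} - 4\lambda_n/n}\right\} & \text{otherwise.} \end{cases} \tag{5}$$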

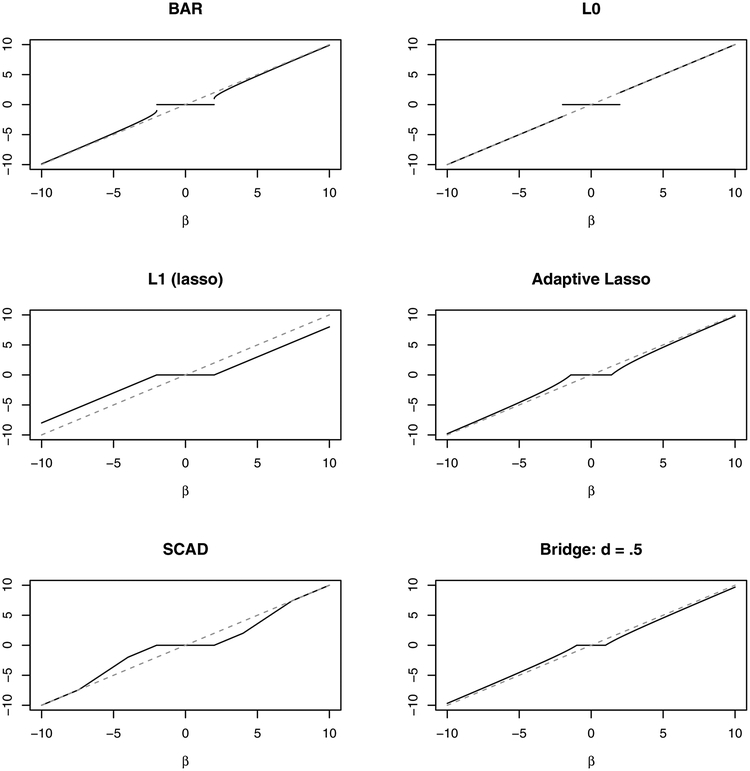

Hence, the BAR estimator shrinks the small values of $\hat\beta_j^{\mathrm{OLS}}$ to 0 and keeps its large values essentially unchanged provided that λn/n is small. Figure 1 depicts the BAR thresholding function (5), along with some well-known thresholding functions (L0, Lasso, Adaptive Lasso, and SCAD), suggesting that BAR gives a good approximation to an L0 penalized regression. The existence and uniqueness of the BAR estimator for X in a general form are nontrivial, although they have been verified empirically in our extensive numerical studies. A slightly weaker theoretical result is provided in Theorem 1(i) of Section 3. Therefore, BAR is expected to behave like an L0-penalized regression, but is more stable and easily scalable to high-dimensional settings.
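The thresholding behaviour in the orthogonal case can be checked numerically. The sketch below is illustrative only: it assumes the componentwise update x ↦ x² b / (x² + c) with b the OLS estimate and c = λn/n > 0, obtains the closed form by solving the fixed-point equation x² − bx + c = 0 for its larger root in absolute value, and starts the iteration at the OLS value as a stand-in for a ridge start close to OLS. The names `bar_threshold` and `bar_iterate` are hypothetical.

```python
import math

def bar_threshold(b_ols, lam_over_n):
    """Closed-form limit of the componentwise iteration (orthogonal design)."""
    disc = b_ols * b_ols - 4.0 * lam_over_n
    if disc < 0.0:
        # No nonzero fixed point exists, so the iteration collapses to zero.
        return 0.0
    # Larger-magnitude root of x^2 - b x + c = 0, carrying the sign of b.
    return 0.5 * (b_ols + math.copysign(math.sqrt(disc), b_ols))

def bar_iterate(b_ols, lam_over_n, n_iter=500):
    """Run the componentwise reweighted ridge update, starting at the OLS value."""
    x = b_ols
    for _ in range(n_iter):
        x = x * x / (x * x + lam_over_n) * b_ols
    return x

# Small estimates are driven to zero; large ones are nearly unchanged.
small = bar_iterate(0.1, 0.01)
large = bar_iterate(2.0, 0.01)
```

Comparing `bar_iterate` with `bar_threshold` over a grid of OLS values traces out a curve of the kind shown for BAR in Figure 1, with a jump at 2√(λn/n) rather than the continuous shrinkage of the Lasso.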

Figure 1:

Thresholding functions of BAR, L0, Lasso, Adaptive Lasso, SCAD, and L0.5.

3. Large-sample properties of BAR

3.1. Oracle property

We next develop the oracle properties of the BAR estimator for the general case without requiring the design matrix to be orthogonal.

Let be the true parameter value with dimension pn diverging to infinity but pn < n. For simplicity, write where and . Assume β01 ≠ 0 and β02 = 0. Let denote the BAR estimator of β0. Set , and .

The following conditions are needed to derive the asymptotic properties of the BAR estimator.

-

(C1)

The errors ε1, …, εn are independent and identically distributed random variables with mean zero and variance 0 < σ2 < ∞.

-

(C2)

There exists a constant C > 1 such that 0 < 1/C < λmin (Σn) ≤ λmax (Σn) < C < ∞ for every integer n.

-

(C3)

Let and . As , and

Theorem 1 (Oracle property). Assume the conditions (C1)–(C3) are satisfied. For any qn-vector bn satisfying ||bn|| ≤ 1, define Then, the fixed point of exists and is unique, where Moreover, with probability tending to 1,

-

(i)

the BAR estimator exists and is unique, where and is the unique fixed point of f (α);

-

(ii)

.

3.2. Grouping effect

When the true model has a natural group structure, it is desirable to have all coefficients within a group clustered together. For example, by virtue of gene network relationships, some of the genes will strongly correlate and are referred to as grouped genes [39]. The next theorem shows that the BAR method has a grouping property.

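Theorem 2. Assume that the columns of matrix X are standardized such that $x_{1j} + \cdots + x_{nj} = 0$ and $x_{1j}^{2} + \cdots + x_{nj}^{2} = 1$ for all j ∈ {1, …, pn}. Let $\hat\beta^{*}$ be the BAR estimator. Then, with probability tending to 1, for any i < j,

$$\left| \frac{1}{\hat\beta_i^{*}} - \frac{1}{\hat\beta_j^{*}} \right| \le \frac{1}{\lambda_n} \|y\| \sqrt{2(1 - \rho_{ij})},$$

provided that $\hat\beta_i^{*} \hat\beta_j^{*} \ne 0$, where $\rho_{ij} = x_i^\top x_j$ is the sample correlation of xi and xj.

The above grouping property of BAR is similar to that of Elastic Net. In particular, Zou and Hastie [52] showed that if $\hat\beta_i^{\mathrm{EN}} \hat\beta_j^{\mathrm{EN}} > 0$, then

$$\left| \hat\beta_i^{\mathrm{EN}} - \hat\beta_j^{\mathrm{EN}} \right| \le \frac{1}{\lambda} \|y\|_1 \sqrt{2(1 - \rho_{ij})},$$

where $\hat\beta_i^{\mathrm{EN}}$ is the Elastic Net estimate of βi and λ is a tuning parameter. As seen in the proof of Theorem 2, the reason for stating the grouping effect of the BAR estimator in terms of the reciprocals $1/\hat\beta_i^{*}$ is the weight used in the reweighted L2 penalization.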
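The role of the reciprocal coefficients can be seen from a short calculation. The following is a sketch under stated assumptions, not the proof given in the Appendix: it assumes that the BAR limit $\hat\beta^{*}$ satisfies the fixed-point equation of the reweighted ridge update on the coordinates where it is nonzero, that the weight on coordinate j is $1/\hat\beta_j^{*2}$, and that the columns of X are centered with unit Euclidean norm, so that $\rho_{ij} = x_i^\top x_j$.

```latex
\begin{align*}
x_j^\top X \hat\beta^{*} + \lambda_n \frac{\hat\beta_j^{*}}{\hat\beta_j^{*2}} = x_j^\top y
  &\;\Longrightarrow\;
  x_j^\top (y - X\hat\beta^{*}) = \frac{\lambda_n}{\hat\beta_j^{*}}
  \qquad (\hat\beta_j^{*} \neq 0), \\[4pt]
\left| \frac{1}{\hat\beta_i^{*}} - \frac{1}{\hat\beta_j^{*}} \right|
  &= \frac{1}{\lambda_n} \left| (x_i - x_j)^\top (y - X\hat\beta^{*}) \right|
  \le \frac{1}{\lambda_n}\, \| x_i - x_j \| \, \| y - X\hat\beta^{*} \|, \\[4pt]
\| x_i - x_j \|^{2} &= \|x_i\|^{2} + \|x_j\|^{2} - 2 x_i^\top x_j = 2(1 - \rho_{ij}), \\[4pt]
\left| \frac{1}{\hat\beta_i^{*}} - \frac{1}{\hat\beta_j^{*}} \right|
  &\le \frac{1}{\lambda_n}\, \| y \| \sqrt{2(1 - \rho_{ij})}.
\end{align*}
```

The last step uses $\|y - X\hat\beta^{*}\| \le \|y\|$, which is plausible because the fixed point minimizes a penalized least squares criterion whose value at β = 0 is $\|y\|^{2}$. When $\rho_{ij}$ is close to 1, the bound forces the two reciprocals, and hence the two coefficients, to be close.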

3.3. High-dimensional setting

Like most oracle variable selection methods, the oracle property of the BAR estimator in Theorem 1 is derived for pn < n. In high- or ultrahigh-dimensional problems where the number of variables pn can be much larger than the sample size n, it is not clear whether BAR would still enjoy the oracle property under similar conditions. A commonly used strategy for ultrahigh-dimensional problems is to add a screening step to reduce the dimension before performing variable selection and parameter estimation [14]. During the past decades, many variable screening procedures have been developed with the sure screening property that, with probability tending to 1, the screened model retains all important variables; see, e.g., [12, 14, 15, 22, 28, 30–32, 44, 46, 49, 50] and the references therein. Our results in Theorems 3 and 4 guarantee that when combined with a sure screening procedure, the BAR estimator has the oracle and grouping properties in ultrahigh-dimensional settings.

Inspired by Xu and Chen [46], who studied a sparse maximum likelihood estimation method for generalized linear models, we introduce below a sparsity-restricted least squares estimate for screening joint effects for the linear model with unspecified error distribution and establish its sure screening property. Specifically, for a given k, define the following sparsity-restricted least squares estimate (sLSE):

| (6) |

where Solving for can be computationally demanding even for moderately large k since it is equivalent to the best subset search. Here we adopt the iterative hard-thresholding (IHT) algorithm of Blumensath and Davies [4] to approximately solve the optimization problem (6). Define a local quadratic approximation of ℓ(β) by

where and u > 0 is a scaling parameter. The IHT algorithm solves (6) via the following iterative algorithm

| (7) |

where β(0) = 0. Note that without the sparsity constraint, (7) has an explicit solution

Adding the sparsity constraint, the solution of (7) is then given by

with r being the kth largest component of Therefore the update at each iteration of the IHT algorithm is straightforward and The following theorem shows that with an appropriate scaling parameter u, the sequence {β(t)}obtained from the above IHT algorithm decreases the objective function ℓ at every iteration and converges to a local solution of (6).

Theorem 3. Let {β(t)} be the sequence defined by (7). Assume that where denotes the largest eigenvalue of Then

-

(a)

ℓ{β(t)} ≥ ℓ{β(t+1)} for every integer t.

-

(b)

Assume that the parameter space is a compact subset of $\mathbb{R}^{p_n}$ and contains the true parameter value β0. If for all submodels s such that ||s||0 ≤ k, then {β(t)} converges to a local solution to (6).
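The iterative hard-thresholding update described above can be sketched as follows. This is a minimal illustration under explicit assumptions, not the authors' code: the objective is taken to be ℓ(β) = ||y − Xβ||²/2 (the scaling of ℓ is an assumption), the scaling parameter u is set to the largest eigenvalue of X⊤X, and the function name `iht_sparse_ls` and the fixed iteration count are hypothetical.

```python
import numpy as np

def iht_sparse_ls(X, y, k, n_iter=200):
    """IHT sketch for min 0.5 * ||y - X b||^2 subject to ||b||_0 <= k."""
    p = X.shape[1]
    # Largest eigenvalue of X'X equals the squared spectral norm of X.
    u = np.linalg.norm(X, 2) ** 2
    beta = np.zeros(p)  # beta^(0) = 0
    losses = []
    for _ in range(n_iter):
        losses.append(0.5 * np.sum((y - X @ beta) ** 2))
        # Unconstrained minimizer of the local quadratic approximation.
        gamma = beta + X.T @ (y - X @ beta) / u
        # Hard thresholding: keep the k largest entries in absolute value.
        keep = np.argpartition(np.abs(gamma), -k)[-k:]
        beta = np.zeros(p)
        beta[keep] = gamma[keep]
    return beta, losses
```

With this choice of u the quadratic approximation majorizes the objective, so the recorded losses should be non-increasing, in line with part (a) of Theorem 3. The indices of the nonzero entries of the returned vector give the screened submodel to which BAR is then applied.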

Let denote the true model and the screened model via the sparsity-restricted least squares estimate The following conditions are needed to establish the sure screening property of ŝsLSE.

-

(D1)

There exist ω1, ω2 > 0 and some nonnegative constants τ1, τ2 such that τ1 + τ2 < 1/2, and $q_n < k \le \omega_2 n^{\tau_2}$.

-

(D2)

$\ln(p_n) = O(n^{d})$ for some d ∈ [0, 1 − 2τ1 − 2τ2).

-

(D3)

There exist constants c1 > 0, δ1 > 0 such that for sufficiently large n, for and where λmin denotes the smallest eigenvalue of a matrix.

-

(D4)

Let There exist positive constants c2, c3, c4, σ such that and for sufficiently large n,

Theorem 4. (Sure Joint Screening Property) Under conditions (D1)–(D4), .

Finally, let be BAR estimator for the screened submodel

| (8) |

It then follows immediately from Theorems 1, 2 and 4 that under conditions (D1)–(D4), has the oracle properties for variable selection and parameter estimation and the grouping property for highly correlated covariates, provided that Model (8) satisfies conditions (C1)–(C3).

4. Simulation study

4.1. Variable selection, estimation, and prediction

We first present a simulation to illustrate the performance of BAR for variable selection, parameter estimation, and prediction in comparison with some L1-based variable selection methods (Lasso, adaptive Lasso, SCAD and MCP) and a surrogate L0 method (TLP) [40] under some low-dimensional settings when p ≤ n. Here we consider two linear models, viz.

where in both models, the p-dimensional covariate vector X is generated from a multivariate Gaussian distribution with mean 0 and variance-covariance matrix $\Sigma = (r^{|i-j|})$, and the error ε is Gaussian and independent of the covariates. Model 1 has sparse strong signals and Model 2 contains a mix of both strong and weak signals. Five-fold CV was used to select tuning parameters for each method. For BAR, we used ten grid points evenly spaced on the interval $[10^{-4}, 100]$ for ξn and ten equally spaced grid points on [1, 10] for λn. We observed from our limited experience that BAR is insensitive to the choice of ξn and that its solution path is essentially flat over a broad range of ξn, so the selection of λn is more relevant. In this simulation, we used only ten grid points to reduce the computational burden. In practice, however, a more refined grid for λn would generally be preferred. We used the R package glmnet [18] for Lasso and adaptive Lasso, and ncvreg [5] for SCAD and MCP in our simulations. The TLP method [40] requires specifying a resolution tuning parameter τ. To illustrate the impact of τ, we considered two τ values (0.15 and 0.5), although a data-driven τ would be used in practice as demonstrated in [40]. We considered a variety of scenarios by varying n, p, and r for each of the above two models, but present only partial results in Table 1 due to space limitations.
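The design and the selection metrics used in this comparison can be sketched as below. The sketch is illustrative only: it assumes covariates drawn from a Gaussian distribution with covariance entries r^|i−j| and counts false positives and false negatives by comparing the zero patterns of an estimate and the truth. The function names `ar1_design` and `selection_errors` are hypothetical, and no particular coefficient vector from the paper is reproduced.

```python
import numpy as np

def ar1_design(n, p, r, rng):
    """Draw n rows from N(0, Sigma) with Sigma[i, j] = r ** |i - j|."""
    idx = np.arange(p)
    sigma = r ** np.abs(idx[:, None] - idx[None, :])
    return rng.multivariate_normal(np.zeros(p), sigma, size=n)

def selection_errors(beta_hat, beta_true):
    """Return (false positives, false negatives, misclassifications)."""
    est = beta_hat != 0
    true = beta_true != 0
    fp = int(np.sum(est & ~true))   # zeros estimated as non-zeros
    fn = int(np.sum(~est & true))   # non-zeros estimated as zeros
    return fp, fn, fp + fn
```

Averaging the three counts over Monte Carlo replications gives quantities of the kind reported in the FP, FN, and Misclassification columns, and the fraction of replications with zero misclassifications corresponds to TM.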

Table 1:

(Low-dimensional settings: n = 100, p ∈ {10, 50, 100}) Comparison of BAR, Lasso, SCAD, MCP, Adaptive Lasso (ALasso), and TLP based on 100 Monte Carlo samples. (Misclassification = mean number of misclassified non-zeros and zeros; FP = mean number of false positives (non-zeros); FN = mean number of false negatives (zeros); TM = probability that the selected model is exactly the true model; MAB = mean absolute bias; MSPE = mean squared prediction error from five-fold CV.)

| Model | p | Method | Misclassification | FP | FN | TM | MAB | MSPE |
|---|---|---|---|---|---|---|---|---|
| 1 | 10 | BAR | 0.08 | 0.08 | 0 | 93% | 0.26 | 1.02 |
| 1 | 10 | Lasso | 3.28 | 3.28 | 0 | 6% | 0.56 | 1.08 |
| 1 | 10 | SCAD | 0.90 | 0.90 | 0 | 62% | 0.34 | 1.03 |
| 1 | 10 | MCP | 0.72 | 0.72 | 0 | 68% | 0.33 | 1.03 |
| 1 | 10 | ALasso | 0.26 | 0.26 | 0 | 89% | 0.29 | 1.03 |
| 1 | 10 | TLP(0.15) | 0.54 | 0.54 | 0 | 63% | 0.34 | 1.04 |
| 1 | 10 | TLP(0.5) | 0.64 | 0.64 | 0 | 77% | 0.31 | 1.04 |
| 1 | 50 | BAR | 0.26 | 0.26 | 0 | 86% | 0.32 | 1.04 |
| 1 | 50 | Lasso | 7.75 | 7.75 | 0 | 2% | 0.95 | 1.18 |
| 1 | 50 | SCAD | 1.81 | 1.81 | 0 | 65% | 0.37 | 1.05 |
| 1 | 50 | MCP | 0.97 | 0.97 | 0 | 73% | 0.34 | 1.05 |
| 1 | 50 | ALasso | 3.99 | 3.99 | 0 | 40% | 0.68 | 1.03 |
| 1 | 50 | TLP(0.15) | 0.98 | 0.98 | 0 | 60% | 0.45 | 1.05 |
| 1 | 50 | TLP(0.5) | 1.51 | 1.51 | 0 | 77% | 0.36 | 1.08 |
| 1 | 100 | BAR | 0.44 | 0.44 | 0 | 80% | 0.35 | 1.02 |
| 1 | 100 | Lasso | 10.38 | 10.38 | 0 | 2% | 1.08 | 1.2 |
| 1 | 100 | SCAD | 1.41 | 1.41 | 0 | 65% | 0.31 | 1.03 |
| 1 | 100 | MCP | 1.04 | 1.04 | 0 | 66% | 0.32 | 1.03 |
| 1 | 100 | ALasso | 1.51 | 1.51 | 0 | 32% | 0.42 | 1.08 |
| 1 | 100 | TLP(0.15) | 1.31 | 1.31 | 0 | 55% | 0.46 | 1.02 |
| 1 | 100 | TLP(0.5) | 2.21 | 2.21 | 0 | 70% | 0.38 | 1.05 |
| 2 | 10 | BAR | 1.14 | 0.12 | 1.02 | 30% | 0.67 | 1.11 |
| 2 | 10 | Lasso | 2.70 | 2.61 | 0.09 | 5% | 0.76 | 1.11 |
| 2 | 10 | SCAD | 1.87 | 1.58 | 0.29 | 14% | 0.74 | 1.1 |
| 2 | 10 | MCP | 1.69 | 1.27 | 0.42 | 19% | 0.74 | 1.1 |
| 2 | 10 | ALasso | 1.55 | 1.11 | 0.44 | 18% | 0.69 | 1.07 |
| 2 | 10 | TLP(0.15) | 1.02 | 0.40 | 0.62 | 33% | 0.66 | 1.07 |
| 2 | 10 | TLP(0.5) | 2.27 | 1.87 | 0.4 | 9% | 0.74 | 1.09 |
| 2 | 50 | BAR | 2.18 | 0.68 | 1.5 | 4% | 0.94 | 1.17 |
| 2 | 50 | Lasso | 10.56 | 10.09 | 0.47 | 0% | 1.51 | 1.29 |
| 2 | 50 | SCAD | 5.36 | 4.32 | 1.04 | 1% | 1.06 | 1.19 |
| 2 | 50 | MCP | 3.33 | 2.1 | 1.23 | 4% | 0.99 | 1.19 |
| 2 | 50 | ALasso | 8.22 | 7.38 | 0.84 | 0% | 1.41 | 1.1 |
| 2 | 50 | TLP(0.15) | 2.25 | 1.36 | 1.19 | 7% | 1.03 | 1.1 |
| 2 | 50 | TLP(0.5) | 6.07 | 5.13 | 0.94 | 3% | 1.09 | 1.2 |
| 2 | 100 | BAR | 2.67 | 0.94 | 1.73 | 2% | 1.05 | 1.17 |
| 2 | 100 | Lasso | 14.63 | 14.02 | 0.61 | 0% | 1.79 | 1.34 |
| 2 | 100 | SCAD | 4.24 | 3.08 | 1.16 | 1% | 1.01 | 1.21 |
| 2 | 100 | MCP | 3.34 | 1.99 | 1.35 | 3% | 0.96 | 1.2 |
| 2 | 100 | ALasso | 4.42 | 2.27 | 2.15 | 1% | 1.26 | 1.32 |
| 2 | 100 | TLP(0.15) | 3.14 | 1.88 | 1.26 | 2% | 1.03 | 1.08 |
| 2 | 100 | TLP(0.5) | 7.67 | 6.71 | 0.96 | 1% | 1.17 | 1.19 |

From Table 1, it appears that the BAR and TLP methods generally produce sparser models than the L1-based methods, with BAR having the lowest number of false positives (non-zeros). When the signals are strong (Model 1), BAR performs better in most categories, with (i) better variable selection performance (the lowest number of misclassifications (non-zeros and zeros), the lowest number of false positives, the highest probability of selecting the true model (TM), and an equally low number of false negatives (close to 0)); (ii) better estimation performance (the smallest estimation bias, MAB); and (iii) equally good prediction performance (similar mean squared prediction error, MSPE).

Similar trends are observed for Model 2, in which there are both strong and weak signals. BAR still produces the sparsest model, with the lowest number of false positives and generally the lowest number of misclassifications, but it tends to miss the small effects and thus has more false negatives. For estimation, BAR has comparable or smaller bias, but no method dominates the others across all scenarios. For prediction, all methods had comparable performance with similar MSPE.

Because some signals are weak and the sample size n = 100 is relatively small, it is challenging for all methods to select the exact correct model (TM close to zero), but for different reasons: BAR tends to miss some small signals with more false negatives (zeros) whereas the other methods tend to include some zeros as signals with more false positives. Moreover, as a surrogate L0 method, the TLP method generally produces a more sparse model than an L1-based method. In principle, the TLP method with a smaller τ value gives a closer approximation to L0 penalization and thus leads to a more sparse model as shown in Table A.1. In practice, however, τ cannot be too small since the TLP estimate obtained from Algorithm 1 in [40] would be no more sparse than its initial Lasso estimator.

In summary, BAR shows an advantage in more accurately selecting and estimating strong signals. On the other hand, if weak signals are also of interest and the sample size is relatively small, then an L1-based method with fewer false negatives might be preferred.

4.2. Grouping effect

We now present a simulation to illustrate the grouping effect of BAR for highly correlated covariates. Assume y = Xβ0 + ε with pn = 9 and β0 = (2, 2, 2, 0.4, 0.4, 0.4, 0, 0, 0), where and

where ei are independent . In this setting, x1, x2, and x3 form a group with latent factor z1, and x4, x5, and x6 form another group with latent factor z2. The within-group correlations are almost 1 and the between-group correlations are almost 0. Figure 2 plots the solution paths of BAR, Elastic Net, Lasso, Adaptive Lasso, SCAD, and MCP for a random sample of size n = 200. The estimated coefficients and cross-validation errors together with the corresponding optimal tuning parameters are provided in Table 2.

Figure 2:

Path plots of BAR, Elastic Net, Lasso, SCAD, Adaptive Lasso, and MCP for a linear model with two groups of highly correlated covariates with true coefficients βj = 2 for j = 1, 2, and 3; βj = 0.4 for j = 4, 5, and 6; and βj = 0 for j ≥ 7, as described in Section 4.2. For BAR, we fixed ξn = 100. For Elastic Net, we fixed λ2 = 0.001; for SCAD, γ = 3.7; for Adaptive Lasso, ξ = 18.68; and for MCP, γ = 3. The dashed vertical lines mark the optimal tuning parameters obtained by five-fold CV with the lowest CV error, and the corresponding estimated coefficients are provided in Table 2.

Table 2:

(Grouping effects) Estimated coefficients of BAR, Elastic Net, Lasso, SCAD, Adaptive Lasso, and MCP for a linear model with optimal tuning parameters selected by five-fold CV. The full solution paths are depicted in Figure 2.

| Parameter | BAR | Elastic Net | Lasso | SCAD | Adaptive Lasso | MCP |

|---|---|---|---|---|---|---|

| (Intercept) | −0.008 | −0.004 | −0.005 | 0.031 | −0.009 | 0.029 |

| β1 | 1.902 | 2.000 | 2.137 | 6.001 | 1.585 | 6.001 |

| β2 | 2.016 | 1.998 | 1.441 | 1.908 | ||

| β3 | 2.081 | 1.999 | 2.421 | 2.506 | ||

| β4 | 0.499 | 0.406 | 0.894 | 1.200 | 1.153 | 1.200 |

| β5 | 0.469 | 0.401 | 0.292 | 0.047 | ||

| β6 | 0.233 | 0.392 | 0.013 | |||

| β7 | −0.001 | |||||

| β8 | ||||||

| β9 | ||||||

| Tuning | ξn = 100 | λ1 = 0.017 | λ = 0.017 | γ = 3.7, | ξ = 18.68 | γ = 3, |

| parameters | λn = 0.043 | λ2 = 0.001 | λ = 0.044 | λ = 0.001 | λ = 10.861 | |

| CV Error | 0.038 | 0.045 | 0.331 | 0.048 | 0.043 | 0.330 |

It is seen from Figure 2 and Table 2 that both BAR and Elastic Net selected all three variables in each of the two non-zero groups with almost evenly distributed coefficients within each group, whereas Lasso, adaptive Lasso, SCAD, and MCP failed to reveal any grouping information with disorganized paths and erratic coefficient estimates.

4.3. High-dimensional settings (p ≫ n)

We finally present a simulation to illustrate the performance of BAR in comparison with some other variable selection methods when combined with the sparsity-restricted least squares estimate-based screening method in a high-dimensional setting. Specifically, we consider Models 1 and 2 in Section 4.1, but increase p to 2000. The simulation results for the two-stage estimators with n = 100 are presented in Table 3.

Table 3:

(High-dimensional settings: n = 100, p = 2000) Comparison of BAR, Lasso, SCAD, MCP, Adaptive Lasso (ALasso), and TLP Following the Sparsity-Restricted Least Squares Estimate (sLSE). (Misclassification = Mean Number of Misclassified Non-zeros and Zeros; FP = Mean Number of False Positives (Non-zeros); FN = Mean Number of False Negatives (Zeros); TM = Probability that the Selected Model is Exactly the True Model; MAB = Mean Absolute Bias; MSPE = Mean Squared Prediction Error from Five-Fold CV.)

| Model | Method | Misclassification | FP | FN | TM | MAB | MSPE |
|---|---|---|---|---|---|---|---|
| 1 | sLSE-BAR | 0.32 | 0.18 | 0.14 | 75.72% | 0.55 | 1.04 |
| 1 | sLSE-Lasso | 5.10 | 4.96 | 0.14 | 3.8% | 0.97 | 1.13 |
| 1 | sLSE-SCAD | 0.94 | 0.80 | 0.14 | 64.60% | 0.57 | 1.05 |
| 1 | sLSE-MCP | 0.67 | 0.53 | 0.14 | 68.32% | 0.57 | 1.05 |
| 1 | sLSE-ALasso | 0.92 | 0.78 | 0.14 | 63.88% | 0.61 | 1.05 |
| 1 | sLSE-TLP(0.15) | 0.74 | 0.60 | 0.14 | 56.56% | 0.64 | 1.06 |
| 1 | sLSE-TLP(0.5) | 0.89 | 0.75 | 0.14 | 67.72% | 0.57 | 1.06 |
| 2 | sLSE-BAR | 0.30 | 0.18 | 0.12 | 0% | 1.45 | 1.04 |
| 2 | sLSE-Lasso | 5.09 | 4.98 | 0.11 | 0% | 1.87 | 1.13 |
| 2 | sLSE-SCAD | 0.95 | 0.83 | 0.12 | 0% | 1.47 | 1.05 |
| 2 | sLSE-MCP | 0.66 | 0.54 | 0.12 | 0% | 1.47 | 1.05 |
| 2 | sLSE-ALasso | 0.93 | 0.81 | 0.12 | 0% | 1.51 | 1.05 |
| 2 | sLSE-TLP(0.15) | 0.72 | 0.60 | 0.12 | 0% | 1.53 | 1.06 |
| 2 | sLSE-TLP(0.5) | 0.91 | 0.79 | 0.12 | 0% | 1.47 | 1.07 |

It is seen that for both Models 1 and 2, sLSE-BAR outperformed other methods for variable selection and parameter estimation with a lower number of misclassifications, fewer false positives, and a smaller estimation bias (MAB). Consistent with the low-dimensional settings, BAR shows similar prediction performance to the other methods. Again it is challenging for all methods to select exactly the true model under Model 2 when there are both strong and weak signals, but our limited simulations (not presented here) showed that TM (the probability of selecting the correct model) will improve, especially for BAR, as n increases.

5. Data examples

5.1. Prostate cancer data

As an illustration, we analyze the prostate cancer data described in [42]. There are eight clinical predictors, namely ln(cancer volume) (lcavol), ln(prostate weight) (lweight), age, ln(amount of benign prostatic hyperplasia) (lbph), seminal vesicle invasion (svi), ln(capsular penetration) (lcp), Gleason score (gleason) and percentage of Gleason scores 4 or 5 (pgg45). We consider the problem of selecting important predictors for the logarithm of prostate-specific antigen (lpsa). We randomly split the data set into a training set of size 67 and a test set of size 30, and compare the results of six variable selection methods: BAR, Lasso, adaptive Lasso, SCAD, MCP and Elastic Net. For each method, nonzero features are selected based on the training data set, and the test error is computed as the mean squared prediction error on the test data set. We performed an analysis with p = 36 features consisting of all the main effects and two-way interactions. The five-fold CV method was used to select the optimal ξn and λn values for BAR, with 100 evenly spaced grid points on the interval $[10^{-4}, 100]$ for ξn, and 100 equally spaced grid points on [1, 100] for λn. The estimated coefficients are given in Table 4, together with a summary of the number of selected features and test errors for each method.

Table 4:

Coefficients of selected features and test mean squared prediction error for the prostate cancer data (with p = 36 main effects and two-way interactions)

| Variable | BAR | Lasso | SCAD | MCP | Adaptive Lasso | Elastic Net |

|---|---|---|---|---|---|---|

| lcavol | 0.573 | 0.560 | 0.637 | 0.627 | 0.594 | 0.264 |

| lweight | 0.020 | 0.056 | ||||

| Age | −0.028 | −0.073 | −0.149 | −0.077 | −0.053 | |

| lbph | 0.070 | |||||

| svi | 0.125 | 0.422 | 0.440 | 0.141 | 0.090 | |

| lcp | 0.001 | |||||

| gleason | 0.013 | |||||

| (lcavol)×(lweight) | 0.068 | |||||

| (lcavol)×(age) | 0.036 | |||||

| (lcavol)×(svi) | 0.341 | 0.216 | 0.233 | 0.150 | ||

| (lcavol)×(gleason) | 0.196 | |||||

| (lweight)×(gleason) | 0.315 | 0.238 | 0.243 | 0.305 | 0.259 | 0.171 |

| (age)×(lbph) | 0.184 | 0.277 | 0.282 | 0.194 | 0.098 | |

| (age)×(svi) | 0.045 | |||||

| (lbph)×(gleason) | 0.021 | |||||

| (svi)×(gleason) | 0.030 | 0.090 | ||||

| (lcp)×(pgg45) | −0.019 | −0.067 | ||||

| Tuning | ξn = 44.45 | λ = 0.08 | γ = 3.7 | γ = 3 | ξ = 1.16 | λ1 = 0.06 |

| Parameters | λn = 0.1 | λ = 0.08 | λ = 0.07 | λ = 0.21 | λ2 = 0.1 | |

| Number of Selected Features | 3 | 7 | 6 | 6 | 7 | 16 |

| Test Error | 0.461 | 0.484 | 0.587 | 0.583 | 0.513 | 0.498 |

BAR produces the sparsest model, with three nonzero features compared with at least six for each of the other methods. Its test error (0.461) is slightly lower than those of the other methods (0.484 to 0.587). This is consistent with the simulation results in Section 4.1.
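The two summaries reported at the bottom of Table 4, the number of selected features and the test error, can be computed from any fitted coefficient vector as in the sketch below. The function name `summarize_fit` and the toy numbers are hypothetical and serve only to illustrate the definitions.

```python
import numpy as np


def summarize_fit(beta, X_test, y_test, intercept=0.0):
    """Count nonzero coefficients and compute the test mean squared prediction error."""
    beta = np.asarray(beta, dtype=float)
    n_selected = int(np.count_nonzero(beta))
    residuals = y_test - (intercept + X_test @ beta)
    mspe = float(np.mean(residuals ** 2))
    return n_selected, mspe


# Toy example with made-up numbers (not the prostate data).
X_test = np.array([[1.0, 0.0, 2.0],
                   [0.0, 1.0, 1.0],
                   [1.0, 1.0, 0.0]])
y_test = np.array([1.0, 0.0, 2.0])
beta_hat = np.array([1.0, 0.0, 0.5])

n_selected, mspe = summarize_fit(beta_hat, X_test, y_test)
```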

5.2. Gut microbiota data

In this example, we illustrate our method on a metagenomic dataset on gut microbiota that was downloaded from dbGaP under study ID phs000258 [53]. After aligning the 16S rRNA sequences of gut microbiota to reference sequences and taxonomy databases, we had a total of 240 taxa at the genus level. Here we are interested in identifying BMI-associated taxa. Because taxa with few reads are unreliable and subject to noise and measurement error, we first dropped the taxa with average reads below 2, resulting in 62 features. For each taxon, we created a dummy variable 1(value of taxon > 25th percentile of the taxon). After removing subjects with missing values, 304 patients remained. As in the previous example, we randomly split the data set into a training set of size 228 to select nonzero features and a test set of size 76 to compute the mean squared prediction error (MSPE). Table 5 gives the selected taxa, together with the number of selected features and the test error for each of six methods: BAR, lasso, adaptive lasso, SCAD, MCP and Elastic Net.
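The preprocessing steps just described (filtering low-abundance taxa, dichotomizing each taxon at its 25th percentile, and splitting the subjects) can be sketched as below. The read counts are synthetic placeholders, so the number of retained taxa will not match the 62 reported here; all variable names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)

# Placeholder read counts: 304 subjects x 240 genus-level taxa (synthetic, not the dbGaP data).
mean_reads = rng.gamma(shape=0.5, scale=6.0, size=240)
counts = rng.poisson(lam=mean_reads, size=(304, 240))

# Drop taxa whose average read count is below 2.
keep = counts.mean(axis=0) >= 2
filtered = counts[:, keep]

# Dichotomize each remaining taxon at its own 25th percentile.
q25 = np.percentile(filtered, 25, axis=0)
Z = (filtered > q25).astype(int)

# Random split: 228 subjects for training, 76 for testing.
perm = rng.permutation(Z.shape[0])
Z_train, Z_test = Z[perm[:228]], Z[perm[228:]]
```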

Table 5: Coefficients of selected BMI-related features and test mean squared prediction error for the gut microbiota metagenomic data

| Taxon | BAR | Lasso | SCAD | MCP | Adaptive Lasso | Elastic Net |

|---|---|---|---|---|---|---|

| Acetanaerobacterium | −0.064 | |||||

| Acidaminococcus | −0.014 | |||||

| Akkermansia | 0.233 | |||||

| Anaerotruncus | −0.105 | |||||

| Asteroleplasma | −0.058 | |||||

| Bacteroides | −0.110 | |||||

| Barnesiella | 0.195 | |||||

| Butyricicoccus | −0.046 | |||||

| Butyrivibrio | 0.205 | |||||

| Catenibacterium | 0.198 | |||||

| Clostridium | −0.259 | |||||

| Collinsella | 1.066 | 0.669 | 0.689 | 0.867 | 0.908 | 0.708 |

| Coprobacillus | −0.165 | −0.133 | −0.064 | −0.216 | −0.295 | |

| Coprococcus | 0.014 | |||||

| Desulfovibrio | −0.082 | −0.049 | −0.122 | −0.308 | ||

| Escherichia Shigella | 0.081 | |||||

| Eubacterium | 0.021 | |||||

| Faecalibacterium | −0.610 | −0.389 | −0.352 | −0.352 | −0.584 | −0.591 |

| Hydrogenoanaerobacterium | 0.099 | 0.044 | 0.223 | 0.409 | ||

| Lachnobacterium | 0.294 | |||||

| Lactobacillus | 0.115 | |||||

| Megasphaera | 0.761 | 0.458 | 0.410 | 0.470 | 0.716 | 0.639 |

| Odoribacter | −0.024 | |||||

| Parabacteroides | −0.043 | |||||

| Prevotella | 0.335 | |||||

| Rikenella | −0.065 | |||||

| Robinsoniella | −0.113 | −0.088 | −0.050 | −0.124 | −0.344 | |

| Sporobacter | −0.102 | |||||

| Streptococcus | 0.050 | |||||

| Subdoligranulum | −0.018 | |||||

| Succiniclasticum | 0.119 | 0.080 | 0.211 | 0.344 | ||

| Succinivibrio | 0.238 | |||||

| Sutterella | −0.001 | −0.224 | ||||

| Treponema | −0.115 | |||||

| Turicibacter | −0.996 | −0.622 | −0.615 | −0.807 | −0.846 | −0.611 |

| Xylanibacter | 0.160 | |||||

| Tuning | ξn = 77.78 | λ = 0.54 | γ = 3.7 | γ=3 | ξ = 14.19 | λ1 = 0.19 |

| Parameters | λn = 38 | λ = 0.57 | λ = 0.66 | λ = 3.67 | λ2 = 0.26 | |

| Number of Selected Taxa | 4 | 10 | 9 | 6 | 9 | 36 |

| Test Error | 28.630 | 28.189 | 28.311 | 28.230 | 27.926 | 27.062 |

Consistent with the simulation results in Section 3, BAR yields the sparsest model (four nonzero features, compared with at least six for each of the other methods) with test errors similar to those of the other methods. Among the four taxa identified by BAR, Faecalibacterium is well known to be associated with obesity. For example, some recent studies showed that Faecalibacterium is significantly more abundant in lean people and has a negative association with BMI [2, 23]. The other three taxa (Collinsella, Megasphaera, and Turicibacter) have also been shown to be correlated with obesity and BMI in animal models and clinical trials [3, 16, 26].

6. Discussion

We have established an oracle property and a grouping property for the BAR estimator, a sparse regression estimator resulting from L0-based iteratively reweighted L2-penalized regression with a ridge estimator as the initial value. Hence, BAR enjoys the best of both L0- and L2-penalized regression while avoiding their pitfalls. For example, BAR shares the consistency properties of L0-penalized regression for variable selection and parameter estimation, avoids its instability, and is computationally more scalable to high-dimensional settings. BAR can also be viewed as a sparse version of the ridge estimator, obtained by shrinking the small ridge coefficients to zero via an approximate L0-penalized regression, and it consequently inherits the stability and grouping effect of the ridge estimator. In addition to the low-dimensional setting (p < n), we have extended the BAR method to high- or ultrahigh-dimensional settings, in which p is larger and potentially much larger than n, by combining it with a sparsity-restricted least squares estimate, and we have provided conditions under which the two-stage estimator possesses the oracle property and the grouping property.
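To make the iteratively reweighted ridge idea concrete, the following is a minimal sketch of a BAR-type fit on synthetic data. It is not the authors' implementation: the function name `bar_fit`, the stopping rule, the final cutoff of 1e-8, and the choice λ = ln n in the example are assumptions. The update is written in an algebraically equivalent form that avoids dividing by coefficients that have already shrunk to zero.

```python
import numpy as np


def bar_fit(X, y, xi=1.0, lam=1.0, max_iter=500, tol=1e-10):
    """Sketch of a broken adaptive ridge fit (illustrative, not the authors' code)."""
    p = X.shape[1]
    gram, xty = X.T @ X, X.T @ y

    # Initial value: ordinary ridge estimator with penalty xi.
    beta = np.linalg.solve(gram + xi * np.eye(p), xty)

    for _ in range(max_iter):
        # Reweighted ridge step with penalty lam * beta_j**2 / beta_prev_j**2.
        # With D = diag(beta_prev) and G = X'X, the solution equals
        # D (D G D + lam I)^{-1} D X'y, which avoids dividing by zero.
        d = beta
        inner = gram * np.outer(d, d) + lam * np.eye(p)
        beta_new = d * np.linalg.solve(inner, d * xty)
        converged = np.max(np.abs(beta_new - beta)) < tol
        beta = beta_new
        if converged:
            break

    beta[np.abs(beta) < 1e-8] = 0.0  # report numerically negligible values as zero
    return beta


# Synthetic example: three strong signals among ten independent covariates.
rng = np.random.default_rng(0)
n, p = 200, 10
beta_true = np.array([3.0, -2.0, 1.5] + [0.0] * (p - 3))
X = rng.normal(size=(n, p))
y = X @ beta_true + rng.normal(size=n)

beta_hat = bar_fit(X, y, xi=1.0, lam=np.log(n))
selected = np.flatnonzero(beta_hat)
```

In this sketch, coefficients whose previous iterate is small receive a very large ridge weight and are driven rapidly toward zero, while large coefficients are only lightly shrunk.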

We have conducted extensive simulations to study the operating characteristics of the L0-based BAR method in comparison with some popular L1-based variable selection methods. We observed that, as an L0-based method, BAR tends to produce a sparser model with a lower false positive rate and comparable prediction performance, and that it generally selects and estimates strong signals more accurately, with a lower misclassification rate and a smaller estimation bias. On the other hand, it is more likely to shrink weak signals to zero when the sample size is not very large. Therefore, in practice, one may choose between an L1-based method and an L0-based method such as BAR based on whether or not weak signals are of interest.

Our theoretical developments for the asymptotic properties of the L0-based BAR estimator can be easily modified to analyze the large-sample properties of an Ld-based BAR estimator, obtained by replacing the squared previous iterate in the penalty weight with its absolute value raised to the power 2 − d in Eq. (3), for any d ∈ [0, 1]. Our simulations (not reported in this version of the paper) suggest that there is a trade-off between the false positive rate and the false negative rate as d varies. Specifically, as d increases from 0 to 1, the Ld-based BAR estimator becomes less sparse, with an increased false positive rate but a decreased false negative rate.
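The Ld-based variant can be sketched by changing only the reweighting step. The code below assumes that the penalty weight for coefficient j is λ / |previous iterate|^(2 − d), so that d = 0 gives the L0-based iteration and d = 1 gives an iteratively reweighted approximation to an L1 penalty. The function name `bar_ld_fit`, the stopping rule, the cutoff of 1e-6, and the synthetic data are illustrative assumptions, not the authors' code.

```python
import numpy as np


def bar_ld_fit(X, y, d=0.0, xi=1.0, lam=1.0, max_iter=2000, tol=1e-10):
    """Illustrative L_d variant: weights lam / |beta_prev|**(2 - d) (assumed form)."""
    p = X.shape[1]
    gram, xty = X.T @ X, X.T @ y
    beta = np.linalg.solve(gram + xi * np.eye(p), xty)  # ridge initial value
    for _ in range(max_iter):
        # s_j = |beta_prev_j|**((2 - d) / 2); the reweighted ridge solution is
        # S (S G S + lam I)^{-1} S X'y with S = diag(s), stable when s_j = 0.
        s = np.abs(beta) ** ((2.0 - d) / 2.0)
        inner = gram * np.outer(s, s) + lam * np.eye(p)
        beta_new = s * np.linalg.solve(inner, s * xty)
        done = np.max(np.abs(beta_new - beta)) < tol
        beta = beta_new
        if done:
            break
    beta[np.abs(beta) < 1e-6] = 0.0  # treat numerically negligible values as zero
    return beta


# Synthetic example: three strong signals among ten independent covariates.
rng = np.random.default_rng(0)
n, p = 200, 10
beta_true = np.array([3.0, -2.0, 1.5] + [0.0] * (p - 3))
X = rng.normal(size=(n, p))
y = X @ beta_true + rng.normal(size=n)

fits = {d: bar_ld_fit(X, y, d=d, lam=np.log(n)) for d in (0.0, 0.5, 1.0)}
sizes = {d: int(np.count_nonzero(b)) for d, b in fits.items()}
```

Comparing `sizes` across values of d on simulated data is one simple way to examine the sparsity trade-off described above.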

Finally, this paper focuses on the linear model. Extensions to more complex models and applications such as generalized linear models and survival data are currently under investigation by our team.

Acknowledgments

The authors thank the Editor-in-Chief, an Associate Editor and two anonymous referees for their constructive and insightful comments and suggestions, which have led to significant improvements of the paper. The research of Gang Li was partly supported by National Institutes of Health Grants P30 CA-16042, UL1TR000124–02, and P50 CA211015.

Appendix A. Proofs of the theorems

To prove Theorems 1–4, we need to introduce some notation and lemmas. Write where α is a qn × 1 vector and γ is a (pn − qn) × 1 vector. Analogously, write and

| (A.1) |

For simplicity, we write α*(β) and γ* (β) as α* and γ* hereafter. We further partition as

where the A11 is a qn × qn matrix. Then, multiplying to Eq. (A.1) gives

| (A.2) |

where and .

Lemma 1. Let δn be a sequence of positive real numbers satisfying δn → ∞ and Define and . Assume conditions (C1)−(C3) hold. Then, with probability tending to 1, we have

(a) for some constant C0 > 1;

(b) g is a mapping from Hn to itself.

Proof. We first prove part (a). Because and , we have . By (C2), Hence, . It then follows from (A.2) that

| (A.3) |

Note that and By assumptions (C2) and (C3), we have

| (A.4) |

where the second inequality uses the fact , which follows from the inequality . Combining (A.3) and (A.4) gives

| (A.5) |

Note that A22 is positive definite and by the singular value decomposition, , where τ2i and u2i are eigenvalues and eigenvectors of A22. Then, since 1/C < τ2i < C, we have

This, together with (A.5) and (C2), implies that with probability tending to 1,

| (A.6) |

Let . Because we have

| (A.7) |

and

| (A.8) |

Combining (A.6), (A.7) and (A.8), we have that with probability tending to 1,

| (A.9) |

for some constant C0 > 1 provided that It is worth noting that , as n → ∞.

Furthermore, with probability tending to 1,

This proves part (a).

Next we prove part (b). First, it is easy to see from (A.8) and (A.9) that, as n → ∞,

| (A.10) |

Then, by (A.2), we have

| (A.11) |

Similar to (A.4), it is easy to verify that

| (A.12) |

Moreover, with probability tending to 1,

| (A.13) |

where the last step follows from (A.6), (A.10), and the fact that It follows from (A.11), (A.12) and (A.13) that with probability tending to 1,

| (A.14) |

Because we have, as n → ∞,

| (A.15) |

Combining (A.10) and (A.15) completes the proof of part (b).

Lemma 2. Assume that (C1)–(C3) hold. For any qn-vector bn satisfying ||bn|| ≤ 1, define as in Theorem 1. Define

| (A.16) |

Then, with probability tending to 1,

(a) f(α) is a contraction mapping from to itself;

(b) where is the unique fixed point of f(α) defined by

Proof. We first prove part (a). Note that (A.16) can be rewritten as

Thus,

| (A.17) |

Similar to (A.4), it can be shown that

| (A.18) |

It follows from (A.17) and (A.18) that

where δn is a sequence of real numbers satisfying δn → ∞ and . This implies that, as n → ∞,

| (A.19) |

In other words, f is a mapping from the region Bn to itself.

Rewriting (A.16) as and then differentiating it with respect to α, we have

where and This, together with the assumption , implies that

| (A.20) |

Note that Σn1 is positive definite. Write , where τ1i and u1i are eigenvalues and eigenvectors of Σn1. Then, by (C2), τ1i ∈ (1/C,C) for all i and

| (A.21) |

Therefore, it follows from α ∈ Bn, (A.21) and (C2) that

This, together with (A.20) and (C2), implies that

| (A.22) |

Finally, the conclusion in part (a) follows from (A.19) and (A.22).

Next we prove part (b). Write

| (A.23) |

By the first order resolvent expansion formula, viz. (H + Δ)−1 = H−1 − H−1 Δ (H + Δ)−1, the first term on the right-hand side of Eq. (A.23) can be written as

Hence, by the assumptions (C2) and (C3), we have

| (A.24) |

Furthermore, by writing and applying the first order resolvent expansion formula, it can be shown that

| (A.25) |

which converges in distribution to N(0, 1) by the Lindeberg–Feller Central Limit Theorem. Finally, combining (A.23), (A.24), and (A.25), we can conclude the proof of part (b).
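For completeness, the first-order resolvent expansion used in this proof follows from a one-line calculation, assuming that H and H + Δ are both invertible:

```latex
\begin{align*}
(H+\Delta)^{-1} - H^{-1}
  &= H^{-1}\bigl\{H - (H+\Delta)\bigr\}(H+\Delta)^{-1} \\
  &= -H^{-1}\,\Delta\,(H+\Delta)^{-1}.
\end{align*}
```

Rearranging gives (H + Δ)−1 = H−1 − H−1 Δ (H + Δ)−1, which is the form quoted above.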

Proof of Theorem 1. Note that for the initial ridge estimator defined by (1), we have

By the first order resolvent expansion formula and ,

It is easy to see that . Thus . This, combined with part (a) of Lemma 1, implies that

| (A.26) |

Hence, to prove part (i) of Theorem 1, it is sufficient to show that

| (A.27) |

where is the fixed point of f(α) defined in part (b) of Lemma 2.

Define γ* = 0 if γ = 0. It is easy to see from (A.2) that for any α ∈ Bn,

| (A.28) |

Combining (A.28) with the fact

we find that, for any α ∈ Bn,

| (A.29) |

Therefore, g is continuous and thus uniformly continuous on the compact set β ∈ Hn. This, together with (A.26) and (A.29), implies that, as k → ∞,

| (A.30) |

with probability tending to 1. Note that

where the last step follows from . Let , for every integer k ≥ 0. From (A.30), we can deduce that with probability tending to 1, for any ε > 0, there exists a positive integer N such that for every integer k > N, |ηk| < ε and

and the right-hand term tends to 0 as k → ∞. This proves (A.27).

Therefore, it follows immediately from (A.26) and (A.27) that with probability tending to 1, , which completes the proof of part (i). This, together with part (b) of Lemma 2, proves part (ii) of Theorem 1.

Proof of Theorem 2. Recall that and , where

If for ℓ ∈ {i, j}, then must satisfy the following normal equations for ℓ ∈ {i, j}

Thus, for ℓ ∈ {i, j},

| (A.31) |

where . Moreover, because

we have

| (A.32) |

Letting k → ∞ in (A.31) and (A.32), we have, for ℓ ∈ {i, j}, and where .

Therefore,

and the proof is complete.

Proof of Theorem 3. (a) It is easy to check that

| (A.33) |

where the last step follows from the assumption that .

(b) The proof of the convergence of {β(t)} to a local solution of (6) essentially mimics the proof of Theorem 1 of Xu and Chen [46] and is thus omitted here.

Proof of Theorem 4. Define and to be the collections of the over-fitted models and the under-fitted models, respectively. Let be the estimate of β based on the model s. Clearly the result holds if . Thus, it suffices to show that as n → ∞,

For any . Consider , and by the Taylor expansion and conditions (D1) and (D3), we have

Thus, upon setting , we have

| (A.34) |

Let such that . Under condition (D4), we have and for some positive constant σ. Then,

where the last step uses Lemma A.1 in [46] and c′ is a positive constant. Similarly,

| (A.35) |

Combining (A.34)–(A.35), we have . Hence,

where the last two steps follow from conditions (D1)–(D2). Because is convex, the above result holds for any such that .

For any , let be augmented with zeros corresponding to the elements in . By condition (D1), we have . Thus

This concludes the argument.

References

- [1] Akaike H, A new look at the statistical model identification, IEEE Trans. Automat. Contr. 19 (1974) 716–723.

- [2] Andoh A, Nishida A, Takahashi K, Inatomi O, Imaeda H, Bamba S, Kito K, Sugimoto M, Kobayashi T, Comparison of the gut microbial community between obese and lean peoples using 16S gene sequencing in a Japanese population, J. Clin. Biochem. Nutr. 59 (2016) 65–70.

- [3] Basso N, Soricelli E, Castagneto-Gissey L, Casella G, Albanese D, Fava F, Donati C, Tuohy K, Angelini G, La Neve F, Severino A, Kamvissi-Lorenz V, Birkenfeld AL, Bornstein S, Manco M, Mingrone G, Insulin resistance, microbiota, and fat distribution changes by a new model of vertical sleeve gastrectomy in obese rats, Diabetes 65 (2016) 2990–3001.

- [4] Blumensath T, Davies ME, Iterative hard thresholding for compressed sensing, Appl. Comput. Harmon. Anal. 27 (2009) 265–274.

- [5] Breheny P, Huang J, Coordinate descent algorithms for nonconvex penalized regression, with applications to biological feature selection, Ann. Appl. Statist. 5 (2011) 232–253.

- [6] Breiman L, Heuristics of instability and stabilization in model selection, Ann. Statist. 24 (1996) 2350–2383.

- [7] Candès EJ, Wakin MB, Boyd SP, Enhancing sparsity by reweighted ℓ1 minimization, J. Fourier Anal. Appl. 14 (2008) 877–905.

- [8] Chartrand R, Yin W, Iteratively reweighted algorithms for compressive sensing, In: Proceedings of Int. Conf. on Acoustics, Speech, Signal Processing (ICASSP) (2008) 3869–3872.

- [9] Chen J, Chen Z, Extended Bayesian information criteria for model selection with large model spaces, Biometrika 95 (2008) 759–771.

- [10] Daubechies I, DeVore R, Fornasier M, Güntürk CS, Iteratively reweighted least squares minimization for sparse recovery, Commun. Pure Appl. Math. 63 (2010) 1–38.

- [11] Dicker L, Huang B, Lin X, Variable selection and estimation with the seamless-ℓ0 penalty, Stat. Sinica 23 (2013) 929–962.

- [12] Fan J, Feng Y, Song R, Nonparametric independence screening in sparse ultra-high-dimensional additive models, J. Amer. Statist. Assoc. 106 (2011) 544–557.

- [13] Fan J, Li R, Variable selection via nonconcave penalized likelihood and its oracle properties, J. Amer. Statist. Assoc. 96 (2001) 1348–1360.

- [14] Fan J, Lv J, Sure independence screening for ultrahigh dimensional feature space, J. R. Stat. Soc. Ser. B Stat. Methodol. 70 (2008) 849–911.

- [15] Fan J, Xue L, Zou H, Strong oracle optimality of folded concave penalized estimation, Ann. Statist. 42 (2014) 819–849.

- [16] Federico A, Dallio M, Tolone S, Gravina AG, Patrone V, Romano M, Tuccillo C, Mozzillo AL, Amoroso V, Misso G, Morelli L, Docimo L, Loguercio C, Gastrointestinal hormones, intestinal microbiota and metabolic homeostasis in obese patients: Effect of bariatric surgery, In Vivo 30 (2016) 321–330.

- [17] Foster DP, George EI, The risk inflation criterion for multiple regression, Ann. Statist. 22 (1994) 1947–1975.

- [18] Friedman J, Hastie T, Tibshirani RJ, Regularization paths for generalized linear models via coordinate descent, J. Stat. Softw. 33 (2010) 1.

- [19] Frommlet F, Nuel G, An adaptive ridge procedure for ℓ0 regularization, PLoS ONE 11 (2016) e0148620.

- [20] Gasso G, Rakotomamonjy A, Canu S, Recovering sparse signals with a certain family of nonconvex penalties and DC programming, IEEE Trans. Signal Process. 57 (2009) 4686–4698.

- [21] Gorodnitsky IF, Rao BD, Sparse signal reconstruction from limited data using FOCUSS: A re-weighted minimum norm algorithm, IEEE Trans. Signal Process. 45 (1997) 600–616.

- [22] He X, Wang L, Hong HG, Quantile-adaptive model-free variable screening for high-dimensional heterogeneous data, Ann. Statist. 41 (2013) 342–369.

- [23] Hippe B, Remely M, Aumueller E, Pointner A, Magnet U, Haslberger A, Faecalibacterium prausnitzii phylotypes in type two diabetic, obese, and lean control subjects, Benef. Microbes 7 (2016) 511–517.

- [24] Huang J, Horowitz JL, Ma S, Asymptotic properties of bridge estimators in sparse high-dimensional regression models, Ann. Statist. 36 (2008) 587–613.

- [25] Johnson BA, Long Q, Huang Y, Chansky K, Redman M, Log-penalized least squares, iteratively reweighted lasso, and variable selection for censored lifetime medical cost, Department of Biostatistics and Bioinformatics, Technical Report, Emory University, Atlanta, GA, 2012.

- [26] Jung M-J, Lee J, Shin N-R, Kim M-S, Hyun D-W, Yun J-H, Kim PS, Whon TW, Bae J-W, Chronic repression of mTOR complex 2 induces changes in the gut microbiota of diet-induced obese mice, Sci. Rep. 6 (2016) 30887.

- [27] Knight K, Fu W, Asymptotics for lasso-type estimators, Ann. Statist. 28 (2000) 1356–1378.

- [28] Lai P, Liu Y, Liu Z, Wan Y, Model free feature screening for ultrahigh dimensional data with responses missing at random, Comput. Statist. Data Anal. 105 (2017) 201–216.

- [29] Lawson CL, Contribution to the theory of linear least maximum approximation, Doctoral dissertation, Univ. Calif. at Los Angeles, Los Angeles, CA, 1961.

- [30] Li G, Peng H, Zhang J, Zhu L, Robust rank correlation based screening, Ann. Statist. 40 (2012) 1846–1877.

- [31] Lin L, Sun J, Adaptive conditional feature screening, Comput. Statist. Data Anal. 94 (2016) 287–301.

- [32] Liu J, Li R, Wu R, Feature selection for varying coefficient models with ultrahigh-dimensional covariates, J. Amer. Statist. Assoc. 109 (2014) 266–274.

- [33] Liu Z, Li G, Efficient regularized regression with L0 penalty for variable selection and network construction, Comput. Math. Methods Med. 2016 (2016) ID 3456153.

- [34] Loh P-L, Wainwright MJ, Regularized M-estimators with nonconvexity: Statistical and algorithmic theory for local optima, J. Mach. Learn. Res. 16 (2015) 559–616.

- [35] Mallows CL, Some comments on Cp, Technometrics 15 (1973) 661–675.

- [36] Meinshausen N, Bühlmann P, High-dimensional graphs and variable selection with the lasso, Ann. Statist. 34 (2006) 1436–1462.

- [37] Osborne MR, Finite algorithms in optimization and data analysis, Wiley, 1985.

- [38] Schwarz G, Estimating the dimension of a model, Ann. Statist. 6 (1978) 461–464.

- [39] Segal MR, Dahlquist KD, Conklin BR, Regression approaches for microarray data analysis, J. Comput. Biol. 10 (2003) 961–980.

- [40] Shen X, Pan W, Zhu Y, Likelihood-based selection and sharp parameter estimation, J. Amer. Statist. Assoc. 107 (2012) 223–232.

- [41] Shen X, Pan W, Zhu Y, Zhou H, On constrained and regularized high-dimensional regression, Ann. Inst. Statist. Math. 65 (2013) 807–832.

- [42] Stamey TA, Kabalin JN, McNeal JE, Johnstone IM, Freiha F, Redwine EA, Yang N, Prostate specific antigen in the diagnosis and treatment of adenocarcinoma of the prostate. II: Radical prostatectomy treated patients, J. Urol. 141 (1989) 1076–1083.

- [43] Tibshirani RJ, Regression shrinkage and selection via the lasso, J. R. Stat. Soc. Ser. B Stat. Methodol. 58 (1996) 267–288.

- [44] Wang H, Forward regression for ultra-high dimensional variable screening, J. Amer. Statist. Assoc. 104 (2009) 1512–1524.

- [45] Wipf D, Nagarajan S, Iterative reweighted ℓ1 and ℓ2 methods for finding sparse solutions, IEEE J. Sel. Topics Signal Process. 4 (2) (2010) 317–329.

- [46] Xu C, Chen J, The sparse MLE for ultrahigh-dimensional feature screening, J. Amer. Statist. Assoc. 109 (2014) 1257–1269.

- [47] Zhang C-H, Nearly unbiased variable selection under minimax concave penalty, Ann. Statist. 38 (2010) 894–942.

- [48] Zhao P, Yu B, On model selection consistency of lasso, J. Mach. Learn. Res. 7 (2006) 2541–2563.

- [49] Zhong W, Zhu L, Li R, Cui H, Regularized quantile regression and robust feature screening for single index models, Stat. Sinica 26 (2016) 69–95.

- [50] Zhu L-P, Li L, Li R, Zhu L-X, Model-free feature screening for ultrahigh-dimensional data, J. Amer. Statist. Assoc. 106 (2011) 1464–1475.

- [51] Zou H, The adaptive lasso and its oracle properties, J. Amer. Statist. Assoc. 101 (2006) 1418–1429.

- [52] Zou H, Hastie T, Regularization and variable selection via the Elastic Net, J. R. Stat. Soc. Ser. B Stat. Methodol. 67 (2005) 301–320.

- [53] Zupancic ML, Cantarel BL, Liu Z, Drabek EF, Ryan KA, Cirimotich S, Jones C, Knight R, Walters WA, Knights D, Mongodin EF, Horenstein RB, Mitchell BD, Steinle N, Snitker S, Shuldiner AR, Fraser CM, Analysis of the gut microbiota in the Old Order Amish and its relation to the metabolic syndrome, PLoS ONE 7 (2012) e43052.