Abstract

Retinal microsurgery is technically demanding and requires high surgical skill with very little room for manipulation error. The introduction of robotic assistance has the potential to enhance and expand a surgeon’s manipulation capabilities during retinal surgery, i.e., improve precision, cancel physiological hand tremor, and provide sensing information. However, surgeon performance may also be negatively impacted by robotic assistance due to robot structural stiffness and nonintuitive controls. In complying with robotic constraints, the surgeon loses the dexterity of the human hand. In this paper, we present a preliminary experimental study to evaluate user behavior when affected by robotic assistance during mock retinal surgery. In these experiments, user behavior is characterized by measuring the forces applied by the user to the sclera, the tool insertion/retraction speed, the tool insertion depth relative to the scleral entry point, and the duration of surgery. The user behavior data are collected from four users during three mock retinal surgery tasks. Each task is conducted using both freehand and robot-assisted techniques. Both the univariate user behavior and the correlations between multiple parameters of user behavior are analyzed. The results show that robot assistance prolongs the duration of the surgery and increases the manipulation forces applied to the sclera, but reduces the insertion velocity and eliminates hand tremor.

I. INTRODUCTION

Retinal surgery continues to be one of the most challenging surgical tasks due to its high precision requirements, small and constrained workspace, and delicate eye tissue. Any small surgical error, e.g., a large manipulation force or an unintentional touch or tear of the retinal surface, may cause eye tissue damage and potentially vision loss. The surgeon’s performance during retinal surgery is subject to multiple factors: physiological hand tremor, fatigue, poor kinesthetic feedback, patient movement, and the absence of tactile feedback from the small (single millinewton scale) tool-tissue contact forces. Retinal surgery is carried out under a surgical microscope. Under magnification, the surgeon inserts small instruments (e.g., 23–27 Ga) through the sclerotomy ports (ϕ < 1 mm) located on the sclera (the white part of the eye), as shown in Fig. 1. One example of a very challenging retinal surgical task is retinal vein cannulation (RVC), which is a potential treatment for retinal vein occlusion. RVC has not been incorporated into routine clinical practice due to its rigorous manipulation requirements and as yet unproven efficacy. During RVC, the surgeon carefully inserts a needle or micro-pipette into the occluded retinal vein and slowly injects a clot-dissolving drug. During injection, the tool is continuously constrained by the sclerotomy port. Steady and precise motion is required, because the diameter of the retinal vein can be as small as 80–120 μm, while human hand tremor may exceed 100 μm on average [1]. Additionally, the tool-tissue interaction force is well below the human sensory threshold [2]. Thus, retinal surgical tasks demand advanced surgical skills that are near the limit of natural human capability.

Fig. 1.

Retinal microsurgery. (a) The environment of the retinal surgery. (b) The layout of the surgical instruments during the RVC.

Present limitations in retinal surgery can be relieved by resorting to advanced robotic assistive technology. Many robotic systems have been developed and investigated in the last few decades to enhance and expand the capabilities of surgeons during retinal surgery. These robotic assistants can be categorized as teleoperative manipulation systems [3]–[5], handheld robotic devices [6], [7], and flexible micro-manipulators [8]. With the assistance of robotic systems, manipulation precision is improved and hand tremor is filtered. Recently, two robot-assisted retinal surgeries, RVC and epiretinal membrane peeling (EMP), were successfully performed in humans [9], [10], demonstrating the clinical feasibility of robotic technology for retinal microsurgery. In prior work, we developed the Steady Hand Eye Robot (SHER) based on a cooperative control approach [11], [12]. SHER users directly hold the tool mounted on the SHER end-effector to perform tasks cooperatively with the robot. The manipulation forces applied to the tool handle are captured by the end-effector force sensor and used to control the robot as it follows the user’s motion. SHER damps user hand tremor via the robot’s stiff mechanical structure and provides transparent manipulation.

Smart or otherwise responsive instruments with force sensing capability are strategic and potentially useful for safe interaction between the robot and the patient. One approach to the development of smart instruments is to incorporate a micro sensor in the tool handle [13]. These prototypes were not, however, able to distinguish forces applied at the tool tip while the tool was inserted into the eye. Therefore, our group has designed and developed a family of sensorized tools by integrating Fiber Bragg Grating (FBG) sensors into the key contact segments of the tool [14]–[17]. Multi-function sensing is presented in [18] and is further developed in this work: we measure the contact forces between the tool tip and the retina and between the tool shaft and the sclerotomy ports, while also assessing the depth of tool insertion into the eye.

Robotic devices have the potential to significantly expand a surgeon’s capabilities and presumably improve surgical outcomes. However, the introduction of robotic assistance may also impact the surgeon’s tool manipulation experience. This may in part be due to the mechanical stiffness of the robot as well as nonintuitive control approaches in robot-assisted surgery. Because at present the surgeon needs to comply with the robot’s motion, he/she in turn loses the superior dexterity of the human hand. This is exchanged for the control imparted by the robot. Ultimately, surgeon acceptance will determine the admissibility of the robotic tool in the operating theater, and hence the control gained will need to exceed the control lost. The interaction between the user and the robotic system is therefore a point of considerable research interest. Tanaka et al. [19] have attempted to quantitatively compare manual and robotic manipulations during eye surgery, but their results to date are restricted to microcannulation. Moreover, their evaluation is largely limited to measures of the contact force between the tool tip and the retina surface, occurring near the end of the manipulation. The interactive forces during surgery, e.g., the tool shaft manipulation force exerted on the sclerotomy, and the manipulation velocity are not presented.

In the present work, we expand the quantitative parameters of interest as we examine the user’s behavior during robot-assisted retinal surgery maneuvers. The user’s behavior is characterized by multiple parameters including: scleral manipulation force (sclera force), tool insertion depth (insertion depth), tool insertion velocity (insertion velocity) and elapsed experimental time (duration). These parameters are collected by the novel multi-function sensing tool during three different mock retinal surgery tasks with four subjects. The experiments are carried out with the SHER. The user behavior is analyzed independently and then correlation analysis between multiple parameters is presented.

The goal of this work is to establish a user behavior database and safe manipulation assessment criteria for robot-assisted retinal surgery. The information has further utility in user operation segmentation, recognition [20], and prediction, as well as in robot multi-variable admittance control [18].

II. MATERIAL AND METHOD

Two user parameters, scleral force and insertion depth, are measured directly by the novel multi-function sensing tool, as shown in Fig. 2 (a). The insertion velocity is calculated from the difference of the insertion depth over time, i.e., $v = \Delta D / \Delta t$, where $D$ denotes the insertion depth. A positive velocity represents insertion of the tool, while a negative velocity represents retraction. The duration is measured by a logging program running at 200 Hz. Four subjects performed three mock surgical tasks under two experimental conditions, i.e., freehand and robot-assisted, for a total of 24 experimental groups. SHER is used as the robot assistant on the mock eye.
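As an illustration of the velocity computation described above, the following minimal sketch differentiates a logged insertion-depth trace with a backward difference. It assumes, hypothetically, that the depth samples are logged at the same 200 Hz rate as the duration; the function name and the sample values are illustrative and are not taken from the experiments.

```python
def insertion_velocity(depth_mm, sample_rate_hz=200.0):
    """Backward-difference estimate of insertion velocity in mm/s.

    depth_mm: insertion depth samples D (mm), uniformly sampled.
    sample_rate_hz: assumed logging rate (hypothetical default: 200 Hz).
    Positive values mean insertion, negative values mean retraction.
    """
    dt = 1.0 / sample_rate_hz
    return [(d1 - d0) / dt for d0, d1 in zip(depth_mm, depth_mm[1:])]


# Illustrative depth trace (mm): two insertion steps, then a small retraction.
depth = [10.00, 10.01, 10.03, 10.02]
velocity = insertion_velocity(depth)
```

A raw finite difference amplifies sensor noise, so in practice the depth signal would normally be smoothed before differentiation.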

Fig. 2.

Multi-function sensing tool. (a) The depiction of the sclera force and insertion depth. (b) The section view of the tool shaft with three fibers. (c) The tool dimension.

A. Multi-Function Sensing Tool

The multi-function tool is designed and fabricated by the methods described in our prior work [18]. As shown in Fig. 2, a 25-gauge nitinol needle is machined with three grooves at an angle of 90°, and three FBG sensors with a diameter of 0.11 mm are glued into the grooves. The FBG sensors are sensitive to small strains and respond to the scleral force exerted on the tool shaft. Based on the detected scleral force, the insertion depth can be calculated from the force moment. The tool is calibrated and validated using a precision scale with a resolution of 1 mg (Sartorius ED224S Extend Analytical Balance, Goettingen, Germany). The validation root mean square errors (RMSE) for scleral force and insertion depth are 1.2 mN and 0.5 mm, respectively.
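The relation between scleral force, moment, and insertion depth can be sketched with a simple lever-arm model. This is an assumption made for illustration, not the calibration procedure of [18]: the transverse scleral force F acts at the sclerotomy, the moment M is taken about a reference point on the shaft, and the distance between the two is |M| / |F|. The function name, the minimum-force threshold, and the numbers are hypothetical.

```python
import math


def insertion_depth(moment_mn_mm, force_mn, min_force_mn=1.0):
    """Lever-arm estimate of the distance (mm) from the moment reference
    point to the sclerotomy.

    moment_mn_mm: (Mx, My) transverse moment components in mN*mm.
    force_mn: (Fx, Fy) transverse scleral force components in mN.
    min_force_mn: hypothetical threshold; below it the ratio is
        ill-conditioned and None is returned.
    """
    force_norm = math.hypot(*force_mn)
    if force_norm < min_force_mn:
        return None
    return math.hypot(*moment_mn_mm) / force_norm


# Illustrative values: a 50 mN force producing a 1000 mN*mm moment.
depth = insertion_depth((600.0, 800.0), (30.0, 40.0))
too_small = insertion_depth((1.0, 1.0), (0.1, 0.2))
```

The guard on small forces reflects the fact that a ratio of two near-zero measurements is dominated by noise.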

B. Steady Hand Eye Robot

SHER has 5 degrees of freedom (DoF). It is made up of three linear stages, a rotation stage, and a custom-designed remote center of motion (RCM) mechanism, as shown in Fig. 3(a). SHER has high accuracy: its translation resolution is less than 3 μm and its rotation resolution is 0.0005°. SHER adopts a cooperative control method, which allows the user and the robot assistant to share control of the tool. The velocity of SHER follows the user manipulation force applied on the tool handle within its constraint limits. The user manipulation force is measured by a six-DoF force sensor (ATI Industrial Automation, Inc.), which is mounted behind the tool handle. The user’s manipulation force is applied on the robot handle and fed as an input into the control law, as shown in Eq. (1) and (2) [18]:

$$\dot{x}_{hh} = \alpha F_{hh} \tag{1}$$

$$\dot{x}_{rh} = Ad_{g_{rh}} \dot{x}_{hh} \tag{2}$$

where $\dot{x}_{hh}$ and $\dot{x}_{rh}$ are the desired robot handle velocities in the handle frame and in the robot frame, respectively, $F_{hh}$ is the user’s manipulation force input measured in the robot handle frame, $\alpha$ is the admittance gain tuned by the robot pedal, and $Ad_{g_{rh}}$ is the adjoint transformation associated with the coordinate frame transformation $g_{rh}$ between the handle and robot frames.
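The cooperative control law can be illustrated numerically. The sketch below assumes the usual proportional admittance form (handle-frame velocity equals the gain α times the handle-frame force) and the standard adjoint of a rigid transform with rotation R and translation p acting on a twist ordered as (linear, angular). The gain, the transform, and the applied force are hypothetical values, not SHER parameters.

```python
def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def cross(a, b):
    """Cross product of two 3-vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def handle_velocity(wrench_hh, alpha):
    """Admittance law: desired handle-frame velocity = alpha * force."""
    return [alpha * f for f in wrench_hh]


def to_robot_frame(twist_hh, rotation, translation):
    """Apply the adjoint of g = (R, p) to a twist (v, w):
    v_r = R v + p x (R w),  w_r = R w.
    """
    lin_h, ang_h = twist_hh[:3], twist_hh[3:]
    ang_r = mat_vec(rotation, ang_h)
    lin_r = [a + b for a, b in
             zip(mat_vec(rotation, lin_h), cross(translation, ang_r))]
    return lin_r + ang_r


# Hypothetical handle-to-robot transform: 90 degree rotation about z,
# with the handle origin offset 0.1 m along the robot z axis.
R = [[0.0, -1.0, 0.0],
     [1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0]]
p = [0.0, 0.0, 0.1]

# A 1 N push along the handle x axis with a hypothetical gain of 0.002.
twist_hh = handle_velocity([1.0, 0.0, 0.0, 0.0, 0.0, 0.0], alpha=0.002)
twist_rh = to_robot_frame(twist_hh, R, p)
```

With these values, a push along the handle x axis maps to motion along the robot y axis, which is the expected effect of the 90° rotation.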

Fig. 3.

Experimental setup of retinal surgery phantoms. (a) The robotic assistant. (b) The eye model for task TT and task TA; a printed paper with five colored points is glued to its inner surface. (c) The eye model for task VF; its inner surface has several colored curves representing the retinal vessels.

C. Experimental setup

The experimental setup includes SHER, the multi-function sensing tool, a microscope, an FBG interrogator, an eye phantom, and a monitor, as shown in Fig. 3 (a). The multi-function sensing tool is mounted on SHER with a quick release mechanism. The sm130-700 optical interrogator (Micron Optics, Atlanta, GA) is used to monitor the FBG sensors within the spectrum from 1525 nm to 1565 nm at a 2 kHz refresh rate. Two dry eye phantoms are fabricated for the different mock surgical tasks. They are made of silicone rubber and placed into a 3D-printed socket. The socket is lubricated with mineral oil to produce a realistic friction coefficient analogous to that of the eye. One eye phantom is lined with a five-colored target on its inner surface, as shown in Fig. 3 (b). The other eye phantom has several colored curves simulating retinal vessels, as shown in Fig. 3 (c). A microscope (ZEISS, Germany) provides the magnified view for users, and a Point Grey camera (FLIR Systems, Inc.) is attached to the microscope for recording.

D. Mock Retinal Surgery Task Design

To collect user behavior data in different environments, we designed three mock retinal surgery tasks, i.e., target touching (TT), target approaching (TA), and vessel following (VF). Task TT and task TA are carried out in the eye phantom with five target points as shown in Fig. 3 (b), and the task VF is conducted on the eye phantom with mock vessels as shown in Fig. 3 (c).

The TT procedure is designed as follows:

(1) preparation: insert the tool through the sclerotomy port, then adjust the eyeball’s position and orientation for best view under the microscope using the inserted tool;

(2) homing: move the tool tip position above the home point;

(3) target touching: use the tool to touch the four targets one by one, then start over at step 2 for the next trial.

The procedure of TA and VF is the same as TT except for step 3. For the TA, step 3 is replaced by the following:

(3) target approaching: use the tool to approach the four targets in sequence, and come as close as possible without target contact.

For the VF, step 3 is changed as follows:

(3) vessel following: move the tool to follow four simulated vessel curves consecutively without touching the retina surface; each vessel curve is tracked with one round trip. Vessel following starts from the home position, pauses at the end of the vessel curve, and then returns to the home position.

The complexity of the manipulations increases from TT to TA to VF. Four subjects are involved in the data collection, including two engineers (subjects 1 and 2) and two clinicians: one beginner surgeon (subject 3) and one retinal surgeon with more than 20 years of experience (subject 4). They repeat each of the tasks TT, TA, and VF 20 times. The first 10 trials are carried out freehand, and the last 10 trials are performed with robot assistance. It should be noted that in each trial, the sequence of target points or vessel curves to be touched or followed is randomly assigned.

III. RESULTS AND DISCUSSION

Univariate user behavior is evaluated quantitatively first, followed by a correlation analysis of the multiple parameters of user behavior.

A. Univariate Analysis

The scleral force, insertion velocity, and the duration of each experiment are analyzed independently, as shown in Table I. Each number in the table is the average value of the data from four subjects with 10 trials, i.e.,

$$\bar{x} = \frac{1}{4 \times 10} \sum_{i=1}^{4} \sum_{j=1}^{10} x_{ij}, \qquad x_{ij} = \frac{1}{n} \sum_{k=1}^{n} y_k$$

where $i = 1, 2, 3, 4$ denotes the four subjects, $j = 1, 2, \ldots, 10$ denotes the 10 trials, $x_{ij}$ is the average value of the data in one specific trial, $y_k$ represents the raw data in one trial, $k = 1, 2, \ldots, n$ is the data sequence, and $n$ is the number of raw data points. The raw data is smoothed using the RLOWESS method with a window size of 10. The t-test and one-way analysis of variance with a significance level of p < 0.05 are used for the statistical analysis.
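The two-level averaging described above (mean within each trial, then mean over all subjects and trials) can be sketched as follows. The function name and the tiny data set are hypothetical; the smoothing step is omitted.

```python
from statistics import mean


def table_entry(trials_by_subject):
    """Average of per-trial averages.

    trials_by_subject[i][j] is the list of raw samples y_k recorded for
    subject i in trial j. Each trial is first reduced to its mean x_ij,
    and the x_ij are then averaged over all subjects and trials.
    """
    per_trial = [mean(trial)
                 for subject in trials_by_subject
                 for trial in subject]
    return mean(per_trial)


# Hypothetical example: 2 subjects with 2 trials each.
data = [
    [[1, 2, 3], [4, 4]],   # subject 1: trial means 2 and 4
    [[10], [0, 2]],        # subject 2: trial means 10 and 1
]
entry = table_entry(data)
```

Averaging per trial first gives every trial the same weight regardless of its length, which differs from pooling all raw samples into a single mean.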

TABLE I.

UNIVARIATE ANALYSIS FOR USERS’ BEHAVIOR IN ALL EXPERIMENTS

| Task | Condition | Sclera force, maximum (mN) | Sclera force, most probable (mN) | Absolute insertion velocity, maximum (mm/s) | Absolute insertion velocity, average (mm/s) | Absolute insertion velocity, standard deviation (mm/s) | Duration, average |
|---|---|---|---|---|---|---|---|
| TT | Freehand | 210.0 | 45.8 | 55.5 | 4.8 | 5.0 | 9.7 |
| TT | Robot | 288.6 | 57.8 | 25.8 | 2.5 | 2.2 | 14.9 |
| TA | Freehand | 191.6 | 43.5 | 92.0 | 4.4 | 5.2 | 15.9 |
| TA | Robot | 271.9 | 70.8 | 37.3 | 2.4 | 4.3 | 22.7 |
| VF | Freehand | 252.8 | 54.5 | 91.5 | 7.5 | 9.7 | 22.1 |
| VF | Robot | 299.9 | 124.3 | 51.0 | 3.3 | 4.8 | 36.0 |

The maximum value and the highest probability value of the scleral force are calculated, where the highest probability value is the force that was recorded most often during the 10 trials. Both values are much larger during the robot-assisted trials than during the freehand trials. This holds for all tasks (p < 0.001). A possible reason is that the robot’s mechanical stiffness attenuates the user’s tactile ability and limits dexterity, resulting in additional manipulation force applied to the tool handle. In addition, under both robot-assisted (p < 0.001) and freehand (p < 0.001) conditions, the scleral force in the VF task is significantly higher than in the TA and TT tasks, whereas no difference is found between the TT and TA tasks. Thus, the manipulation force increases along with task complexity under all conditions. In the robot-assisted condition, the highest probability force value applied to the sclera by subject 4 (the expert retinal clinician) is 48 mN, 75 mN, and 155 mN in the TT, TA, and VF tasks, respectively. These force values might be regarded as a reasonable safe manipulation threshold for robot-assisted surgery.

For the insertion velocity analysis, the absolute value is adopted so that insertion and retraction are treated alike. The maximum value and the average value of the insertion velocity are calculated. The values under robot-assisted conditions are significantly lower than those under freehand conditions for all tasks (p < 0.001). The standard deviation of the insertion velocity under robot-assisted conditions is also lower than that during freehand testing. This is presumably the result of robot assistance producing sufficient manipulation resistance to suppress the manipulation velocity.

The average time required to complete the experimental tasks was longer with robot assistance than freehand for all three tasks (p < 0.001). The primary reason for the slower task performance is that robot assistance suppresses the manipulation velocity as well as the degrees of freedom of hand motion, thus significantly increasing the time required for task completion. The duration of the trial also increases with the complexity of the task for both freehand and robot-assisted performance.

The distribution of scleral forces applied over time for all experiments is depicted in Fig. 4. The most probable value of the scleral force, i.e., the force at which the most elapsed time occurred, is denoted as the red point in the figure. Its value is much larger when assisted by the robot in all subjects (p < 0.001) and for all tasks. In addition, all of the peak values of force variation increase with increasing complexity of the tasks performed.

Fig. 4.

The distribution of scleral force over elapsed time for all experiments in (a) task TT, (b) task TA, and (c) task VF. The elapsed time is counted for every 1 mN of sclera force. The highest probability sclera force is the force that was applied for the longest total elapsed time. The crest of each curve is denoted as a red point, and its value is displayed in the box at the peak.
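The "most probable" scleral force can be obtained by binning the force samples in 1 mN steps and taking the bin that accumulates the most time. The sketch below is one possible implementation under stated assumptions: the force is sampled uniformly (a hypothetical 200 Hz is used), each bin is identified by its lower edge, and ties are resolved in favor of the bin encountered first. The sample values are illustrative.

```python
import math
from collections import Counter


def most_probable_force(force_mn, sample_rate_hz=200.0, bin_mn=1.0):
    """Return (lower edge of the most populated force bin in mN,
    elapsed time spent in that bin in seconds)."""
    counts = Counter(math.floor(f / bin_mn) for f in force_mn)
    bin_index, n_samples = max(counts.items(), key=lambda kv: kv[1])
    return bin_index * bin_mn, n_samples / sample_rate_hz


# Illustrative force samples (mN): four of six fall in the 45-46 mN bin.
samples = [45.2, 45.7, 46.1, 45.9, 80.3, 45.1]
peak_force, peak_time = most_probable_force(samples)
```

Dividing the sample count by the sampling rate converts the histogram from counts to elapsed time, which matches the vertical axis described in the caption.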

B. Correlations of Multiple Users’ Behavior

To compare the relationship of multiple user parameters to user behavior, we analyzed correlations between scleral force and tool insertion depth. It should be noted that only the data for subject 4 (the expert retinal clinician) is presented here.

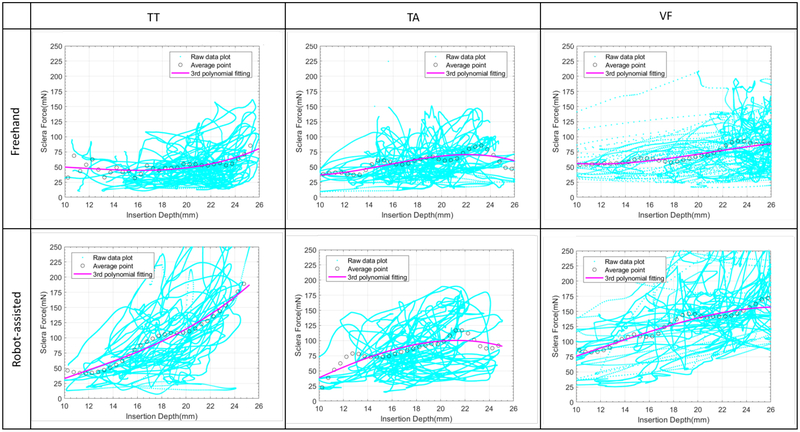

The average value of scleral force is calculated for each 0.5 mm of insertion depth and is depicted in Fig. 5. A third-order polynomial is fitted to the average values, and the fitting parameters are shown in Table II. For task TT, the fitted curve is concave for both the freehand and robot-assisted conditions. This indicates that the scleral force increases as the insertion depth reaches its maximum value. However, in task TA, the fitted curve is convex for both conditions, and the scleral force decreases as the insertion depth reaches its maximum value. These findings are plausible: in task TT the tool is moved to touch the eyeball surface, resulting in increasing sclera force, whereas in task TA the tool is supposed to approach the target point without touching the eyeball surface, so the scleral force should be suppressed as the tool approaches the target point. In task VF, the fitted curve has less curvature under both freehand and robot-assisted conditions. The scleral forces produced while following the targets yield this result.
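The binning and fitting procedure can be sketched as follows. This is an illustrative reconstruction, not the analysis code used for the figures: forces are averaged within 0.5 mm depth bins, and a cubic is fitted to the bin averages with `numpy.polyfit`. The polynomial form F(D) = a1·D³ + a2·D² + a3·D + b is an assumption about how the fitting coefficients are ordered, and the synthetic data are hypothetical.

```python
import numpy as np


def fit_force_vs_depth(depth_mm, force_mn, bin_mm=0.5, order=3):
    """Average the force within depth bins and fit a polynomial.

    Returns (bin centers, bin-averaged forces, coefficients), with the
    coefficients ordered from the highest power down to the constant.
    """
    depth = np.asarray(depth_mm, dtype=float)
    force = np.asarray(force_mn, dtype=float)
    bin_idx = np.floor(depth / bin_mm).astype(int)

    centers, means = [], []
    for b in np.unique(bin_idx):
        centers.append((b + 0.5) * bin_mm)         # center of the bin
        means.append(force[bin_idx == b].mean())   # mean force in the bin

    coeffs = np.polyfit(centers, means, order)
    return np.array(centers), np.array(means), coeffs


# Synthetic, noise-free data generated from a hypothetical cubic.
depth = np.arange(5.0, 20.0, 0.025)
force = 0.02 * depth**3 - 0.5 * depth**2 + 6.0 * depth + 10.0

centers, means, coeffs = fit_force_vs_depth(depth, force)
a1, a2, a3, b = coeffs
force_at_12mm = np.polyval(coeffs, 12.0)
```

Fitting the bin averages rather than the raw samples gives each depth interval equal weight, so depths where the tool dwells for a long time do not dominate the fit.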

Fig. 5.

The correlation between sclera force and insertion depth. The average value of scleral force for each 0.5 mm of insertion depth is calculated. A third-order polynomial is fitted to the average values.

TABLE II.

FITTING PARAMETERS FOR SCLERA FORCE VS. INSERTION DEPTH

| Task | Condition | Fitting coefficient a1 | Fitting coefficient a2 | Fitting coefficient a3 | Fitting coefficient b |
|---|---|---|---|---|---|
| TT | Freehand | 0.0278 | −1.153 | 15.162 | −16.5 |
| TT | Robot-assisted | 0.0702 | −3.201 | 55.326 | −276.2 |
| TA | Freehand | −0.024 | 1.0593 | −12.11 | 74.762 |
| TA | Robot-assisted | 0.0297 | −1.906 | 42.659 | −231.9 |
| VF | Freehand | −0.027 | 1.6053 | −27.72 | 203.36 |
| VF | Robot-assisted | −0.013 | 0.541 | −0.537 | 41.101 |

The correlations between insertion velocity and insertion depth, and between scleral force and insertion velocity, are also examined in Fig. 6 and Fig. 7, respectively. Only the data from task TA under the freehand condition is shown in the figures. A third-order polynomial is fitted to each of these two data sets, and the fitting parameters are shown in Table III. The fitted curves could be further used to design a control scheme for SHER that would robotically guide and assist novice users toward this or another desired pattern.

Fig. 6.

The correlation between insertion velocity and insertion depth. The data from task TA under freehand conditions is analyzed and illustrated. The average value of insertion velocity for every 0.5 mm of insertion depth is calculated. A third-order polynomial is fitted to the average values.

Fig. 7.

The correlation between scleral force and insertion velocity. The data from task TA under freehand conditions is analyzed and illustrated. The average values of scleral force and insertion velocity are extracted from Fig. 5 and Fig. 6, respectively. A third-order polynomial is fitted to the average values.

TABLE III.

FITTING PARAMETERS FOR THE OTHER CORRELATIONS

| Fitting coefficient a1 | Fitting coefficient a2 | Fitting coefficient a3 | Fitting coefficient b |
|---|---|---|---|
| −0.024 | 1.0593 | −12.11 | 74.762 |
| −0.006 | 0.3894 | −8.14 | 60.64 |

IV. CONCLUSION

Although the results presented above are preliminary, they represent a first attempt to evaluate user behavior in robot-assisted retinal surgery, as simulated by three different tasks, through the measurement of four quantifiable parameters. We used a novel multi-function sensing tool and SHER to collect the scleral force, insertion depth, insertion velocity, and task duration data. Univariate analysis was applied first, followed by correlation analysis of multiple parameters. The results suggest that it is feasible to build a user behavior database to support safe manipulation assessment criteria for robot-assisted retinal surgery.

ACKNOWLEDGMENT

This work was supported by the U.S. National Institutes of Health under grant numbers 1R01EB023943–01 and 2R01EB000526–01. The work of C. He was supported in part by the China Scholarship Council under Grant 201706020074. The work of PLG was supported in part by Research to Prevent Blindness, New York, USA, and gifts by the J. Willard and Alice S. Marriott Foundation, the Gale Trust, Mr. Herb Ehlers, Mr. Bill Wilbur, Mr. and Mrs. Rajandre Shaw, Ms. Helen Nassif, Ms. Mary Ellen Keck, and Mr. Ronald Stiff.

REFERENCES

- [1] Singh S and Riviere C, “Physiological tremor amplitude during retinal microsurgery,” in Bioengineering Conference, 2002. Proceedings of the IEEE 28th Annual Northeast. IEEE, 2002, pp. 171–172.

- [2] Gupta PK, Jensen PS, and de Juan E, “Surgical forces and tactile perception during retinal microsurgery,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 1999, pp. 1218–1225.

- [3] Jingjing X, Long H, Lijun S, and Yang Y, “Design and research of a robotic aided system for retinal vascular bypass surgery,” Journal of Medical Devices, vol. 8, no. 4, p. 044501, 2014.

- [4] Gijbels A, Wouters N, Stalmans P, Van Brussel H, Reynaerts D, and Vander Poorten E, “Design and realisation of a novel robotic manipulator for retinal surgery,” in Intelligent Robots and Systems (IROS), 2013 IEEE/RSJ International Conference on. IEEE, 2013, pp. 3598–3603.

- [5] de Smet MD, Meenink TC, Janssens T, Vanheukelom V, Naus GJ, Beelen MJ, Meers C, Jonckx B, and Stassen J-M, “Robotic assisted cannulation of occluded retinal veins,” PloS one, vol. 11, no. 9, p. e0162037, 2016.

- [6] MacLachlan RA, Becker BC, Tabarés JC, Podnar GW, Lobes LA Jr, and Riviere CN, “Micron: an actively stabilized handheld tool for microsurgery,” IEEE Transactions on Robotics, vol. 28, no. 1, pp. 195–212, 2012.

- [7] Gonenc B, Balicki MA, Handa J, Gehlbach P, Riviere CN, Taylor RH, and Iordachita I, “Preliminary evaluation of a micro-force sensing handheld robot for vitreoretinal surgery,” in Intelligent Robots and Systems (IROS), 2012 IEEE/RSJ International Conference on. IEEE, 2012, pp. 4125–4130.

- [8] He X, Van Geirt V, Gehlbach P, Taylor R, and Iordachita I, “Iris: Integrated robotic intraocular snake,” in Robotics and Automation (ICRA), 2015 IEEE International Conference on. IEEE, 2015, pp. 1764–1769.

- [9] Walsh F, Robot operates inside eye in world first, 2016. [Online]. Available: http://www.bbc.com/news/health-37246995

- [10] Donald T, “Robot assists with injections for rvo,” Retina Today, vol. April, pp. 59–61, 2017.

- [11] Üneri A, Balicki MA, Handa J, Gehlbach P, Taylor RH, and Iordachita I, “New steady-hand eye robot with micro-force sensing for vitreoretinal surgery,” in Biomedical Robotics and Biomechatronics (BioRob), 2010 3rd IEEE RAS and EMBS International Conference on. IEEE, 2010, pp. 814–819.

- [12] He X, Roppenecker D, Gierlach D, Balicki M, Olds K, Gehlbach P, Handa J, Taylor R, and Iordachita I, “Toward clinically applicable steady-hand eye robot for vitreoretinal surgery,” in ASME 2012 International Mechanical Engineering Congress and Exposition. American Society of Mechanical Engineers, 2012, pp. 145–153.

- [13] Menciassi A, Eisinberg A, Scalari G, Anticoli C, Carrozza M, and Dario P, “Force feedback-based microinstrument for measuring tissue properties and pulse in microsurgery,” in Robotics and Automation, 2001. Proceedings 2001 ICRA. IEEE International Conference on, vol. 1. IEEE, 2001, pp. 626–631.

- [14] Balicki M, Han J-H, Iordachita I, Gehlbach P, Handa J, Taylor R, and Kang J, “Single fiber optical coherence tomography microsurgical instruments for computer and robot-assisted retinal surgery,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2009, pp. 108–115.

- [15] Iordachita I, Sun Z, Balicki M, Kang JU, Phee SJ, Handa J, Gehlbach P, and Taylor R, “A sub-millimetric, 0.25 mN resolution fully integrated fiber-optic force-sensing tool for retinal microsurgery,” International journal of computer assisted radiology and surgery, vol. 4, no. 4, pp. 383–390, 2009.

- [16] He X, Handa J, Gehlbach P, Taylor R, and Iordachita I, “A submillimetric 3-dof force sensing instrument with integrated fiber Bragg grating for retinal microsurgery,” IEEE Transactions on Biomedical Engineering, vol. 61, no. 2, pp. 522–534, 2014.

- [17] Gonenc B, Gehlbach P, Taylor RH, and Iordachita I, “Safe tissue manipulation in retinal microsurgery via motorized instruments with force sensing,” in SENSORS, 2017 IEEE. IEEE, 2017, pp. 1–3.

- [18] He X, Balicki M, Gehlbach P, Handa J, Taylor R, and Iordachita I, “A multi-function force sensing instrument for variable admittance robot control in retinal microsurgery,” in Robotics and Automation (ICRA), 2014 IEEE International Conference on. IEEE, 2014, pp. 1411–1418.

- [19] Tanaka S, Harada K, Ida Y, Tomita K, Kato I, Arai F, Ueta T, Noda Y, Sugita N, and Mitsuishi M, “Quantitative assessment of manual and robotic microcannulation for eye surgery using new eye model,” The International Journal of Medical Robotics and Computer Assisted Surgery, vol. 11, no. 2, pp. 210–217, 2015.

- [20] Hundtofte CS, Hager GD, and Okamura AM, “Building a task language for segmentation and recognition of user input to cooperative manipulation systems,” in Haptic Interfaces for Virtual Environment and Teleoperator Systems, 2002. HAPTICS 2002. Proceedings. 10th Symposium on. IEEE, 2002, pp. 225–230.