Abstract

Background

Recruiting a hidden population, such as women at risk for alcohol-exposed pregnancy (AEP) because they binge drink and are at risk of an unintended pregnancy, is challenging because this population is not typically seeking help or part of an identifiable group. We sought to identify affordable and efficient methods for recruiting hidden populations.

Methods

We identified several popular online social media and advertising sites and targeted cities with high rates of binge drinking among women. We placed advertisements and study notices using Facebook, Twitter, Craigslist, university postings, and ClinicalTrials.gov.

Results

For this study, 75 women at risk for AEP were recruited from across the U.S. within 7 months. Online advertising on Craigslist accounted for the majority of enrolled participants (51, 68%). Although Craigslist advertising could be tailored to locations with high rates of binge drinking among women, it posed challenges, including automated deletion of ads that were posted repeatedly or mentioned sexual behavior or drinking, which required additional effort and resources from the study team. We developed several strategies to optimize advertising on Craigslist. Approximately 100 h of staff time, valued at $2500, was needed over the 7-month recruitment period.

Discussion

Despite challenges, the target sample of women at risk for AEP was recruited within the 7-month recruitment period using online advertising. We recommend that researchers consider online classified advertisements when recruiting from non-help-seeking populations. By using national data to target specific risk factors and by tailoring advertising efforts, it is possible to recruit a non-treatment-seeking sample efficiently and affordably.

Highlights

- We sought to identify affordable and efficient methods to recruit a non-help-seeking sample of women at risk for alcohol-exposed pregnancy.

- We placed free advertisements and study notices using Facebook, Twitter, Craigslist, traditional flyers and university website postings, and ClinicalTrials.gov.

- Free online advertising for study participants on Craigslist was the most successful recruitment method, resulting in enrollment of 51 (68%) of the 75 study participants.

- By using national data to target specific risk factors and by tailoring advertising efforts, it is possible to efficiently and affordably recruit a non-help-seeking sample.

1. Introduction

Recruiting community-based participants to research studies is often challenging, and the challenge is magnified when a study seeks participants who are not troubled by symptoms and are not seeking help. Traditional recruitment methods include, but are not limited to, snowball sampling, paper flyers, random digit dialing, direct mail, community fairs, and ads in magazines and newspapers. Although the effectiveness of traditional recruitment methods has rarely been examined (Head et al., 2016), some investigators have begun to compare traditional and online recruitment methods in terms of efficiency and cost (Juraschek et al., 2018; Leach et al., 2017). Others have compared different types of online research recruitment, primarily for health surveys that collect cross-sectional data (Dworkin et al., 2016; Lane et al., 2015; Musiat et al., 2016). A minority of investigators have reported on online methods to recruit participants for intervention studies (Bijker et al., 2016; Blake et al., 2016; Choi et al., 2017; Routledge et al., 2017), and even fewer have addressed the challenge of recruiting non-treatment-seeking samples for online studies (Ibarra et al., 2018; Wise et al., 2016). Ibarra and colleagues described several challenges of online recruitment: difficulty reaching “hidden” samples with advertising; hesitancy to report honestly about stigmatized behaviors such as smoking during pregnancy; the potential for fraudulent responses by bots, or for low-quality responses by humans who answer eligibility surveys carelessly or too rapidly on crowdsourcing platforms such as Amazon Mechanical Turk (MTurk); and the paucity of data on retention of online recruits in prospective studies.

Online research recruitment could supplement or supplant traditional recruitment methods for both in-person and online studies, given that most U.S. adults (over 80%) use the Internet (Perrin and Duggan, 2015). Free online recruitment methods have included posts on Facebook, Twitter, listservs, and websites (Head et al., 2016; Kayrouz et al., 2016; Ramo et al., 2010; Ramo and Prochaska, 2012; Valdez et al., 2014). Paid efforts include ads via Google AdWords or on Facebook, and micro-employment opportunities on Amazon Mechanical Turk (Buckingham et al., 2017; Dworkin et al., 2016; Juraschek et al., 2018). A few researchers have hired web-based marketing companies to recruit samples (Buckingham et al., 2017; Musiat et al., 2016). A growing number of studies have used free or paid online classified ad websites to recruit research participants (Antoun et al., 2016; Head et al., 2016; Worthen, 2014). This work has led to at least one review of the literature on online recruitment methods (Lane et al., 2015), along with a few critiques of the potential for fraud (Quach et al., 2013) and unrepresentative sampling (Sullivan et al., 2011) when relying on online recruitment.

For this study, we sought to recruit women whose binge drinking and ineffective contraception use put them at risk for unintended pregnancy. Combined, binge drinking and risk for unintended pregnancy create the risk of an alcohol-exposed pregnancy (AEP). Women at risk for AEP are not a help-seeking population: they do not tend to think of their drinking as a problem, and they often do not recognize that they are at risk for pregnancy. Even if they recognized their risk for AEP, women may perceive stigma in seeking help now that drinking during pregnancy is more widely recognized as risky. Finally, recent survey studies estimate that a wide range of women are at risk for AEP, with up to 1 in 30 women in the U.S. at risk (Cannon et al., 2015; Green et al., 2016). As a result, this group does not belong to a particular clinic or other identifiable group, which makes it difficult to target online ads based on shared characteristics or to find natural groups from which to recruit.

Two previous U.S. studies recruited women at risk of AEP to online interventions (Tenkku et al., 2011; Montag et al., 2015). In the St. Louis metropolitan area, investigators used traditional methods, such as ads in local newspapers and on TV, print ads in light rail cars and on grocery store receipts, and online advertising on Google websites, which drove interested candidates to call a 1–800 number or visit the project's website (Tenkku et al., 2011). The investigators reported that these methods were inefficient and resulted in slower than planned recruitment, requiring extension of the recruitment period to two years. In a study using an online brief intervention to target risky drinking or risk of AEP among American Indian/Alaska Native women in California, investigators recruited participants in person in the waiting areas of three clinics (Montag et al., 2015). Although that report does not specifically discuss recruitment challenges, we calculated that the investigators enrolled an average of 15.5 participants per month over 17 months. The purpose of this report is to add to the scant literature on online recruitment of hidden populations. In it, we describe how we recruited a non-help-seeking population at risk for AEP for an online intervention trial, analyze the relative yield and cost of online recruitment methods, and share lessons learned about recruiting non-help-seeking participants for an online intervention.

2. Methods

The Contraception and Alcohol Risk Reduction Internet Intervention (CARRII) is a fully automated, interactive, tailored, and personalized intervention that uses messaging consistent with the Motivational Interviewing counseling style to reduce the risk of alcohol-exposed pregnancy (AEP). The CARRII intervention was tested against a non-personalized patient education condition in a pilot randomized controlled trial (Ingersoll et al., 2018). The target population was women aged 18–44 years who 1) were able to become pregnant, 2) had had sexual intercourse with a man in the previous 90 days, and 3) reported absent, ineffective, or intermittent contraception use and binge drinking in the previous 90 days. Participants were also required to have access to a computer with Internet service and to live in the United States. The recruitment period ran for 7 months, from early January 2015 to early August 2015. The study was approved by the University of Virginia's Human Subjects Research Institutional Review Board.

The study team used online advertisements and traditional paper flyers as the primary recruitment methods. Because no public datasets reported the rate of women at risk for AEP by location, we selected target areas by reviewing federal statistics on binge drinking rates among women in American cities from the Behavioral Risk Factor Surveillance System (BRFSS; Li et al., 2011). At the time of study recruitment, the cities with the highest BRFSS binge drinking rates were Boston, Massachusetts; Baltimore, Maryland; Philadelphia, Pennsylvania; Milwaukee, Wisconsin; and Detroit, Michigan. These and other areas were then targeted with advertisements as described below.

IRB-approved online advertisements were posted to websites including Craigslist, Facebook, Twitter, the University of Virginia Clinical Trials page, and ClinicalTrials.gov. Craigslist, a free local classified advertising website with over 50 billion views per month, offers researchers a potentially cost-effective recruitment option (Antoun et al., 2016) and may reach a broad array of potential participants, given that use of online classified advertising websites by U.S. adults doubled from 22% in 2005 to 49% in 2009 (Jones and Fox, 2009). Sample advertisements are shown in Fig. 1.

Fig. 1.

Advertisement examples.

A total of 909 advertisements were posted over 210 days, 887 of which were online advertisements. Most online advertisements (867, 97.7%) were posted on Craigslist; 14 (1.6%) were posted on Facebook, and 6 (0.7%) were posted elsewhere, such as the university clinical trials page. Based on BRFSS binge drinking data, 28 advertisements were posted to the Boston, MA Craigslist page; 21 to Philadelphia, PA; 20 to Milwaukee, WI; and 45 to Detroit, MI (because Craigslist separates advertising in the Detroit area across 3 counties). The remaining 20 (3%) online advertisements were posted across U.S. locations.
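The venue shares reported above follow directly from the advertisement counts. As a minimal check, the Python sketch below recomputes each venue's percentage of the 887 online advertisements; the variable and function names are our own and are not part of the study's tooling.

```python
# Advertisement counts as reported in the Methods (909 total, of which 887 online).
TOTAL_ADS = 909
ONLINE_ADS = {"Craigslist": 867, "Facebook": 14, "Other online": 6}


def venue_shares(counts: dict) -> dict:
    """Return each venue's share of all online ads as a percentage rounded to 0.1."""
    total_online = sum(counts.values())
    return {venue: round(100 * n / total_online, 1) for venue, n in counts.items()}


if __name__ == "__main__":
    online_total = sum(ONLINE_ADS.values())
    print("online ads:", online_total)
    print("non-online postings:", TOTAL_ADS - online_total)
    for venue, pct in venue_shares(ONLINE_ADS).items():
        print(f"{venue}: {pct}%")
```

Percentages are taken over online ads only, so the 22 non-online postings are excluded from the denominator.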

Using a traditional recruitment approach, we also sent flyers to colleagues for potential distribution at their universities and to the University of Virginia Psychology Department. Additionally, paper flyers were placed around Charlottesville, Virginia, the local site. The advertisements directed interested candidates to the CARRII website, which included basic information about the study and a form for interested individuals to complete as the first step in determining eligibility for the trial.

Recruitment data included the number and location of postings, the number of interest forms received, the location from which each form came, and respondents' reports of how they heard about the study. Data from applicants' interest forms were used to characterize respondents' demographics and risk behaviors.

3. Results

3.1. Advertising responses

After we eliminated 29 submissions that were duplicates, came from men, or were otherwise inaccurate (including some that, on visual inspection of nonsensical data, appeared to have come from bots), 947 unique interest forms remained. Submissions came from all 50 states and the District of Columbia; Virginia (174, 18.4%), Pennsylvania (87, 9.2%), and Massachusetts (70, 7.4%) were represented most frequently, and Utah (2, 0.2%), South Dakota (1, 0.1%), and Wyoming (1, 0.1%) least frequently (see Table 1).

Table 1.

Applicants, eligibility and enrollment by state.

| State | Applicants | % of all applicants | Initially eligible | % of all initially eligible | Enrolled | % of all enrolled | % of state's applicants enrolled |
|---|---|---|---|---|---|---|---|
| Alabama | 6 | 0.63% | 1 | 0.39% | 0 | 0% | 0% |
| Alaska | 3 | 0.32% | 0 | 0% | 0 | 0% | 0% |
| Arizona | 15 | 1.58% | 4 | 1.57% | 0 | 0% | 0% |
| Arkansas | 3 | 0.32% | 1 | 0.39% | 0 | 0% | 0% |
| California | 36 | 3.80% | 11 | 4.33% | 4 | 5.33% | 11.11% |
| Colorado | 10 | 1.06% | 2 | 0.79% | 0 | 0% | 0% |
| Connecticut | 21 | 2.22% | 7 | 2.76% | 0 | 0% | 0% |
| DC | 18 | 1.90% | 6 | 2.36% | 3 | 4.00% | 16.67% |
| Delaware | 3 | 0.32% | 1 | 0.39% | 1 | 1.33% | 33.33% |
| Florida | 27 | 2.85% | 8 | 3.15% | 2 | 2.67% | 7.41% |
| Georgia | 15 | 1.58% | 0 | 0% | 0 | 0% | 0% |
| Hawaii | 4 | 0.42% | 2 | 0.79% | 0 | 0% | 0% |
| Idaho | 3 | 0.32% | 1 | 0.39% | 0 | 0% | 0% |
| Illinois | 9 | 0.95% | 6 | 2.36% | 1 | 1.33% | 11.11% |
| Indiana | 19 | 2.01% | 8 | 3.15% | 1 | 1.33% | 5.26% |
| Iowa | 7 | 0.74% | 2 | 0.79% | 0 | 0% | 0% |
| Kansas | 3 | 0.32% | 1 | 0.39% | 0 | 0% | 0% |
| Kentucky | 4 | 0.42% | 1 | 0.39% | 0 | 0% | 0% |
| Louisiana | 8 | 0.84% | 4 | 1.57% | 1 | 1.33% | 12.50% |
| Maine | 5 | 0.53% | 0 | 0% | 0 | 0% | 0% |
| Maryland | 41 | 4.33% | 15 | 5.91% | 3 | 4.00% | 7.32% |
| Massachusetts | 70 | 7.39% | 13 | 5.12% | 5 | 6.67% | 7.14% |
| Michigan | 30 | 3.17% | 9 | 3.54% | 2 | 2.67% | 6.67% |
| Minnesota | 20 | 2.11% | 6 | 2.36% | 1 | 1.33% | 5.00% |
| Mississippi | 3 | 0.32% | 2 | 0.79% | 0 | 0% | 0% |
| Missouri | 12 | 1.27% | 6 | 2.36% | 2 | 2.67% | 16.67% |
| Montana | 6 | 0.63% | 2 | 0.79% | 0 | 0% | 0% |
| Nebraska | 9 | 0.95% | 0 | 0% | 0 | 0% | 0% |
| Nevada | 6 | 0.63% | 2 | 0.79% | 0 | 0% | 0% |
| New Hampshire | 4 | 0.42% | 1 | 0.39% | 1 | 1.33% | 25.00% |
| New Jersey | 14 | 1.48% | 1 | 0.39% | 1 | 1.33% | 7.14% |
| New Mexico | 3 | 0.32% | 0 | 0% | 0 | 0% | 0% |
| New York | 39 | 4.12% | 12 | 4.72% | 2 | 2.67% | 5.13% |
| North Carolina | 23 | 2.43% | 8 | 3.15% | 2 | 2.67% | 8.70% |
| North Dakota | 2 | 0.21% | 0 | 0% | 0 | 0% | 0% |
| Ohio | 24 | 2.53% | 4 | 1.57% | 2 | 2.67% | 8.33% |
| Oklahoma | 7 | 0.74% | 0 | 0% | 0 | 0% | 0% |
| Oregon | 21 | 2.22% | 6 | 2.36% | 1 | 1.33% | 4.76% |
| Pennsylvania | 87 | 9.19% | 30 | 11.81% | 12 | 16.00% | 13.79% |
| Rhode Island | 15 | 1.58% | 6 | 2.36% | 2 | 2.67% | 13.33% |
| South Carolina | 6 | 0.63% | 2 | 0.79% | 1 | 1.33% | 16.67% |
| South Dakota | 1 | 0.11% | 0 | 0% | 0 | 0% | 0% |
| Tennessee | 12 | 1.27% | 5 | 1.97% | 0 | 0% | 0% |
| Texas | 23 | 2.43% | 4 | 1.57% | 2 | 2.67% | 8.70% |
| Utah | 2 | 0.21% | 0 | 0% | 0 | 0% | 0% |
| Vermont | 13 | 1.37% | 3 | 1.18% | 2 | 2.67% | 15.38% |
| Virginia | 174 | 18.37% | 34 | 13.39% | 15 | 20.00% | 8.62% |
| Washington | 11 | 1.16% | 2 | 0.79% | 1 | 1.33% | 9.09% |
| West Virginia | 7 | 0.74% | 3 | 1.18% | 0 | 0% | 0% |
| Wisconsin | 42 | 4.44% | 12 | 4.72% | 5 | 6.67% | 11.90% |
| Wyoming | 1 | 0.11% | 0 | 0% | 0 | 0% | 0% |
| Total | 947 | 100% | 254 | 100% | 75 | 100% | 7.92% |
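Each percentage column in Table 1 is a ratio of two counts. The sketch below, which uses only three rows of the table and helper names we chose for illustration, shows how the four percentage columns and the overall enrollment rate in the Total row are derived.

```python
# A few rows of Table 1: state -> (applicants, initially eligible, enrolled).
ROWS = {
    "Pennsylvania": (87, 30, 12),
    "Virginia": (174, 34, 15),
    "California": (36, 11, 4),
}
# Column totals from the Total row: (applicants, initially eligible, enrolled).
TOTALS = (947, 254, 75)


def pct(part: int, whole: int) -> float:
    """Percentage rounded to two decimals, matching the table's precision."""
    return round(100 * part / whole, 2)


def table_row(applicants: int, eligible: int, enrolled: int, totals=TOTALS) -> dict:
    """Derive the four percentage columns of Table 1 for one state."""
    total_app, total_elig, total_enr = totals
    return {
        "pct_of_applicants": pct(applicants, total_app),
        "pct_of_eligible": pct(eligible, total_elig),
        "pct_of_enrolled": pct(enrolled, total_enr),
        # Last column: share of the state's own applicants who enrolled.
        "pct_of_state_enrolled": pct(enrolled, applicants),
    }


if __name__ == "__main__":
    for state, counts in ROWS.items():
        print(state, table_row(*counts))
    print("overall enrollment rate:", pct(TOTALS[2], TOTALS[0]))
```

Note that the first three percentage columns use the column totals as denominators, whereas the last column uses the state's own applicant count.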

3.2. Advertisement method yield

As shown in Fig. 1, among the 947 interest forms, 828 applicants (87.4%) reported learning about the study online, 35 from flyers or other methods, and 84 through “word of mouth”. Of those who learned about the study online, 655 (79%) reported Craigslist as the source, 157 (19%) reported reading about the study elsewhere on the Internet, and 16 (2%) reported hearing about the study on Facebook. Of the 84 who reported learning about the study by word of mouth, 44 (52%) heard about it from the UVA psychology department and 3 (4%) from another study participant. Although we did not track exposure to each advertising type, we compared the yield of each online method by dividing the number of applicants who reported that method as the source of their interest by the number of ads placed using that method. When viewed in this manner, by “comparing apples to apples,” the ratio of applicants to ads was 75.5% for Craigslist, 114.3% for Facebook, and 2616.7% for other online venues.
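The per-ad yield comparison above is a ratio of two counts reported in this paper. The sketch below recomputes it, assuming (as the text does) that the 157 applicants who cited “elsewhere on the Internet” correspond to the 6 advertisements placed in other online venues. A ratio above 100% only means that each ad drew more than one applicant on average; because exposure was not tracked, these figures say nothing about how many people saw each ad.

```python
# Applicants citing each online source, and ads placed in the matching venue.
APPLICANTS_BY_SOURCE = {"Craigslist": 655, "Facebook": 16, "Other online": 157}
ADS_BY_VENUE = {"Craigslist": 867, "Facebook": 14, "Other online": 6}


def yield_per_ad(applicants: dict, ads: dict) -> dict:
    """Applicants citing a venue divided by ads placed there, as a percentage (0.1 precision)."""
    return {venue: round(100 * applicants[venue] / ads[venue], 1) for venue in ads}


if __name__ == "__main__":
    for venue, y in yield_per_ad(APPLICANTS_BY_SOURCE, ADS_BY_VENUE).items():
        print(f"{venue}: {y}% applicants per ad")
```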

3.3. Study applicant characteristics

The mean age of applicants was 27.63 years (SD = 7.3; range, 15–65 years). The racial distribution was 671 (70.9%) White, 125 (13.2%) Black, 56 (5.9%) Biracial, 53 (5.6%) Asian, 22 (2.3%) other, 17 (1.8%) American Indian, 2 (0.2%) Pacific Islander, and 1 (0.1%) Alaska Native. Ninety applicants (9.5%) reported Hispanic ethnicity.

3.4. Applicant eligibility

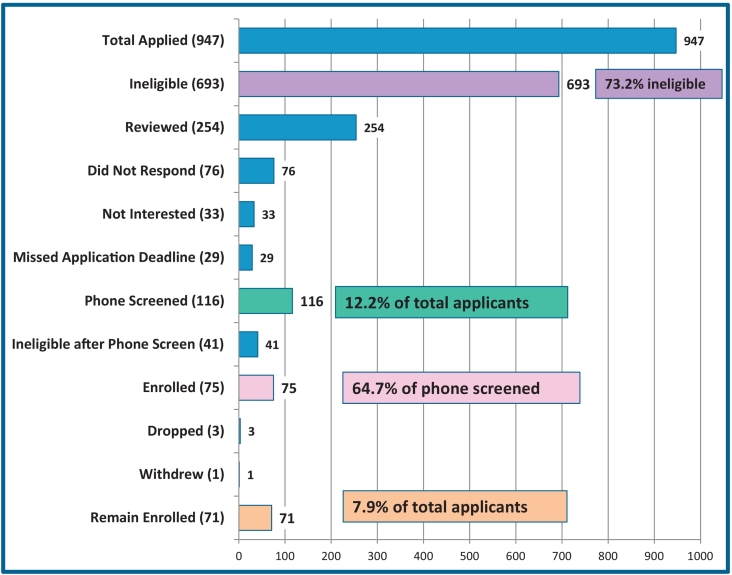

As shown in Fig. 2, of the 947 interest forms submitted, 693 applicants were ineligible because of low-risk drinking or low risk for pregnancy. The 254 who remained potentially eligible were contacted by email and offered telephone interviews to complete the eligibility determination process. Of these 254, 149 responded to the invitation email and 116 participated in telephone screenings. Of those screened, 75 were determined to be eligible, and all 75 enrolled. The final analytic sample was 74, because one enrolled participant was found during the analysis phase to have been ineligible at baseline; this was classified as a mistaken enrollment, and the participant was dropped from analysis.
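The participant flow above can be summarized as stage-to-stage conversion rates. The following sketch computes the percentage of each stage carried forward to the next; the stage labels are our paraphrase of the text, not the labels used in Fig. 2.

```python
# Stages of the participant flow and the number of applicants at each stage.
FUNNEL = [
    ("Interest forms", 947),
    ("Initially eligible", 254),
    ("Responded to email", 149),
    ("Phone screened", 116),
    ("Eligible and enrolled", 75),
    ("Retained for analysis", 74),
]


def stage_conversion(funnel: list) -> list:
    """Percentage of the previous stage that reached each subsequent stage (0.1 precision)."""
    rates = []
    for (_prev_name, prev_n), (name, n) in zip(funnel, funnel[1:]):
        rates.append((name, round(100 * n / prev_n, 1)))
    return rates


if __name__ == "__main__":
    for name, rate in stage_conversion(FUNNEL):
        print(f"{name}: {rate}% of previous stage")
```

Reporting each stage relative to the one before it makes it easier to see where a recruitment pipeline loses the most candidates, here at the initial eligibility screen.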

Fig. 2.

Participant flow.

3.5. Advertising challenges

When a free Craigslist ad is placed, it first appears at the top of the listings page and then moves down as newer ads are posted. To keep study advertisements at the top and most visible to users, we reposted ads daily in high-population target cities. Unfortunately, almost immediately after we posted the first few study advertisements to Craigslist, many posts were deleted and likely never appeared publicly. We suspect this was due to repeated postings and/or mention of sexual behavior or drinking, which caused the posts to be flagged for removal. To keep posts active in the target areas, we created 11 variations of the Craigslist advertisement and 15 individual Craigslist accounts, each of which had to be verified with a unique telephone number. Even after these changes, 253 of the 867 advertisements were deleted shortly after being posted and were likely never seen by potentially eligible women. The study team estimated that staff spent 100 h (at an estimated cost of $2500) on daily posting and reposting of Craigslist ads across different accounts and locations over the 7-month recruitment period. Commercial services are available for this process, but they are costly and were not included in the study budget.
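The Craigslist figures above imply a deletion rate and an hourly staff cost that may be useful when budgeting similar recruitment. The sketch below derives them from the reported counts; it assumes the $2500 estimate covers exactly the 100 h of posting work described.

```python
# Figures reported for the Craigslist campaign.
ADS_POSTED = 867
ADS_DELETED = 253
STAFF_HOURS = 100
STAFF_COST_USD = 2500


def surviving_ads(posted: int, deleted: int) -> int:
    """Ads that were not deleted shortly after posting."""
    return posted - deleted


def deletion_rate(posted: int, deleted: int) -> float:
    """Share of posted ads that were deleted, as a percentage (0.1 precision)."""
    return round(100 * deleted / posted, 1)


def hourly_rate(cost_usd: float, hours: float) -> float:
    """Implied staff cost per hour, assuming the cost estimate covers exactly these hours."""
    return cost_usd / hours


if __name__ == "__main__":
    print("surviving ads:", surviving_ads(ADS_POSTED, ADS_DELETED))
    print("deletion rate (%):", deletion_rate(ADS_POSTED, ADS_DELETED))
    print("implied hourly cost (USD):", hourly_rate(STAFF_COST_USD, STAFF_HOURS))
```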

3.6. Interest form challenges

We discovered that 2 screening questions in the online interest form used to determine initial eligibility were not clear enough to determine eligibility without further review. As a result, 118 applicants were classified as “potentially eligible” and required follow-up questions to confirm eligibility, which took additional staff time. Specifically, the answer choices to 2 questions about pregnancy intention and birth control pill use did not allow pregnancy risk to be determined, so these answer choices were modified early in the recruitment period.
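Initial screening sorted interest forms into ineligible, potentially eligible (needing follow-up), and initially eligible groups. A minimal sketch of that three-way logic is shown below. It assumes hypothetical field names and encodes an answer the form could not pin down as `None`. It covers only a subset of the criteria listed in the Methods (age 18–44, U.S. residence, ability to become pregnant, recent sex with a man, absent or ineffective contraception, and recent binge drinking). It does not reproduce the study's questionnaire wording, its binge drinking threshold, or the computer-access requirement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InterestForm:
    # Hypothetical fields; None marks an answer the form could not resolve.
    age: int
    lives_in_us: bool
    can_become_pregnant: Optional[bool]
    sex_with_man_past_90_days: Optional[bool]
    ineffective_contraception: Optional[bool]  # absent, ineffective, or intermittent use
    binge_drinking_past_90_days: Optional[bool]


def screen(form: InterestForm) -> str:
    """Return 'ineligible', 'needs follow-up', or 'initially eligible'."""
    if not (18 <= form.age <= 44) or not form.lives_in_us:
        return "ineligible"
    answers = [
        form.can_become_pregnant,
        form.sex_with_man_past_90_days,
        form.ineffective_contraception,
        form.binge_drinking_past_90_days,
    ]
    # Any clear "no" on a risk criterion rules the applicant out.
    if any(a is False for a in answers):
        return "ineligible"
    # Unclear answers require a follow-up question from staff.
    if any(a is None for a in answers):
        return "needs follow-up"
    return "initially eligible"
```

For example, `screen(InterestForm(30, True, True, True, None, True))` returns `"needs follow-up"`, mirroring how ambiguous contraception answers created extra follow-up work in this study.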

3.7. Phone screening participation

As previously detailed, of the 254 eligible or potentially eligible applicants, fewer than half (116) completed a phone screen. The remaining 138 did not, for reasons including 1) no longer being interested in participating (n = 33) and 2) not responding to researchers' contacts (n = 76). On average, an eligible applicant was contacted 10.31 times (SD = 6.52) before a successful phone screen. Each contact took 2.89 min (SD = 0.73), and total researcher time across contacts was estimated at 29.33 min (SD = 20.25) per participant.

3.8. Fraudulent applications

Fraud is a concern for researchers who collect data online because single individuals may complete multiple interest forms and potentially enter a study multiple times to earn money (Quach et al., 2013). We detected attempted fraud when interest forms were inconsistent or incoherent, for example, when the city did not match the state or country provided; such attempts were assumed to come from bots. Human applicants who completed more than one interest form may have been among those who failed to respond to our attempts to schedule a telephone interview. Requiring applicants to complete this interview, which collected final eligibility data, may have deterred some fraudulent applicants from pursuing study entry. Finally, most interviews (59.8%) were conducted by one research coordinator (RC). The remainder were conducted by several research assistants working closely with the RC, who either supervised or reviewed the results of each phone interview before the participant was enrolled. Using this oversight method, we did not detect any attempts at duplicate enrollment among those who completed the telephone interview. Although we have no evidence of fraudulent participation, some participants could have fabricated data and participated in the 6-month study. This concern is mitigated by the time and effort required to complete telephone assessments, questionnaires, and online diaries at each measurement point, and to review and complete the 6 Cores of intervention material. It seems unlikely that the compensation of $150 in online gift cards for completing all study requirements was high enough to make the study appealing to those without AEP risk.
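The study relied on visual inspection to spot incoherent forms. One way such checks could be partly automated is sketched below, assuming hypothetical form fields (`email`, `city`, `state`) and a toy city/state lookup; a real implementation would need a complete gazetteer, and flagged forms would still go to a person for review. The duplicate-email rule is our own illustrative addition, not a procedure reported by the study.

```python
from collections import Counter

# Toy lookup for illustration only; a real check would use a complete city/state gazetteer.
KNOWN_CITY_STATES = {
    ("charlottesville", "VA"),
    ("philadelphia", "PA"),
    ("boston", "MA"),
    ("milwaukee", "WI"),
    ("detroit", "MI"),
}


def normalize_email(email: str) -> str:
    """Lowercase and trim an email address so trivial variants compare equal."""
    return email.strip().lower()


def flag_forms(forms: list) -> list:
    """Return (index, reason) pairs for interest forms that need manual review."""
    flags = []
    email_counts = Counter(normalize_email(f["email"]) for f in forms)
    for i, f in enumerate(forms):
        city_state = (f["city"].strip().lower(), f["state"].strip().upper())
        if city_state not in KNOWN_CITY_STATES:
            flags.append((i, "city/state mismatch"))
        if email_counts[normalize_email(f["email"])] > 1:
            flags.append((i, "duplicate email"))
    return flags


if __name__ == "__main__":
    forms = [
        {"email": "a@example.com", "city": "Boston", "state": "MA"},
        {"email": "A@example.com ", "city": "Boston", "state": "TX"},
        {"email": "b@example.com", "city": "Detroit", "state": "MI"},
    ]
    print(flag_forms(forms))
```

Because any city missing from the lookup is flagged, a small lookup produces false positives; that is acceptable only when flags lead to human review rather than automatic rejection.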

4. Discussion

Despite challenges, the study team recruited the target sample of women at risk for AEP within the planned 7-month recruitment period, using mostly online advertising. Craigslist ads were cited as the source by the majority of study applicants, even though Facebook and other online methods showed a higher calculated yield per ad, given the smaller number of ads placed in those outlets. Unfortunately, we were unable to calculate yield by ad views because we did not collect these data during the recruitment period. Other researchers have reported that recruitment through online classified advertisement services such as Craigslist may be more efficient than other Internet recruitment methods (Antoun et al., 2016). For this non-help-seeking population, other online recruitment methods yielded fewer applicants. Because the population of women at risk for AEP is broad, there was no Facebook group of women most likely to be eligible, and they could be targeted only by age. Similarly, Twitter recruitment was likely limited because there are no identifiable at-risk communities, and reaching a large population typically requires many followers or a trending hashtag. Although the study team was based at a university, this did not help recruitment, because most college-aged applicants were ineligible due to their use of contraception. Reviewing each application took the study team 5–10 min, and 40 of the 44 applications from the university advertising source were ultimately ineligible.

Although Craigslist yielded the most applicants overall, it posed challenges. The most vexing was automatic deletion of advertisements, often immediately after they were posted. Craigslist attempts to eliminate spam and robot postings that do not follow its terms of use (Craigslist Terms of Use, n.d.). Repeated postings from one account across too many geographic locations may have registered as spam and been flagged for removal. It is also possible that, because the advertisements specifically mentioned sexual activity and drinking, they were viewed as sensitive or inappropriate and flagged for removal. Automated deletion has also been reported in other studies using Craigslist as a recruitment tool (Antoun et al., 2016; Worthen, 2014). Although we overcame this problem, doing so was costly in staff time, requiring about $2500 worth of effort. Compared with some studies reporting recruitment costs of over $50,000 (e.g., Watson et al., 2018), we consider this cost affordable.

It should be noted that the study protocol required 3 telephone interviews over the course of the study to verify eligibility and to collect calendar-based retrospective recall data on drinking and contraception use. Although the automated online assessments and intervention could have been completed entirely online, our team chose to require the telephone interviews for several reasons. Interviewers provided additional information and answered questions about the study, and they were friendly and nonjudgmental. We also anticipated a potential response bias toward reporting prosocial and healthy behaviors. To counter this, interviewers emphasized the privacy of data collection and storage procedures and the importance of each participant's honest and complete reporting of behaviors, so that the findings would have value to society and help women in the future. The interview thus functioned in part as a role induction procedure, in which participants came to believe that the value of the study depended on their honest reporting of their behaviors and experiences. As noted previously, some applicants who failed to respond to our interview requests may have been attempting to enroll fraudulently, and the requirement may have discouraged them from continuing, a potential benefit for study quality. It is also possible that some eligible women decided not to participate because of the “hassle factor,” a potential cost in terms of slower progress toward the enrollment goal.

The Internet allows researchers to reach a wide range of populations that were once nearly inaccessible. We recommend that researchers consider online classified advertisements when recruiting from non-treatment-seeking populations. Future studies should use advertising analytics to estimate yield by method more precisely. By using national data to target specific risk factors and by tailoring advertising efforts, we found that it is possible to recruit a non-treatment-seeking sample efficiently and affordably.

Conflict of interest declaration

Ms. MacDonnell, Ms. Cowen, Mr. Cunningham, and Dr. Ingersoll have no conflicts of interest to declare.

Dr. Ritterband has an interest in BeHealth, a company that disseminates eHealth interventions, but CARRII is not included in BeHealth.

Footnotes

Funder: The National Institutes of Health, National Institute on Alcohol Abuse and Alcoholism, Grant # R34 AA020853.

References

- Antoun C., Zhang C., Conrad F.G., Schober M.F. Comparisons of online recruitment strategies for convenience samples: Craigslist, Google AdWords, Facebook, and Amazon Mechanical Turk. Field Methods. 2016;28(3):231–246.

- Bijker L., Kleiboer A., Riper H., Cuijpers P., Donker T. E-care 4 caregivers - an online intervention for nonprofessional caregivers of patients with depression: study protocol for a pilot randomized controlled trial. Trials. 2016;17:6. doi: 10.1186/s13063-016-1320-6.

- Blake H., Quirk H., Leighton P., Randell T., Greening J., Guo B., Glazebrook C. Feasibility of an online intervention (STAK-D) to promote physical activity in children with type 1 diabetes: protocol for a randomised controlled trial. Trials. 2016;17(1). doi: 10.1186/s13063-016-1719-0.

- Buckingham L., Becher J., Voytek C.D., Fiore D., Dunbar D., Davis-Vogel A.…Frank I. Going social: success in online recruitment of men who have sex with men for prevention HIV vaccine research. Vaccine. 2017;35(27):3498–3505. doi: 10.1016/j.vaccine.2017.05.002.

- Cannon M.J., Guo J., Denny C.H., Green P.P., Miracle H., Sniezek J.E., Floyd R.L. Prevalence and characteristics of women at risk for an alcohol-exposed pregnancy (AEP) in the United States: estimates from the National Survey of Family Growth. Matern. Child Health J. 2015;19(4):776–782. doi: 10.1007/s10995-014-1563-3.

- Choi I., Milne D.N., Glozier N., Peters D., Harvey S.B., Calvo R.A. Using different Facebook advertisements to recruit men for an online mental health study: engagement and selection bias. Internet Interv. 2017;8:27–34. doi: 10.1016/j.invent.2017.02.002.

- Craigslist Terms of Use. (n.d.). Retrieved from https://www.craigslist.org/about/prohibited

- Dworkin J., Hessel H., Gliske K., Rudi J.H. A comparison of three online recruitment strategies for engaging parents. Fam. Relat. 2016;65(4):550–561. doi: 10.1111/fare.12206.

- Green P.P., McKnight-Eily L.R., Tan C.H., Mejia R., Denny C.H. Vital signs: alcohol-exposed pregnancies–United States, 2011–2013. MMWR Morb. Mortal. Wkly Rep. 2016;65(4):91–97. doi: 10.15585/mmwr.mm6504a6.

- Head B.F., Dean E., Flanigan T., Swicegood J., Keating M.D. Advertising for cognitive interviews: a comparison of Facebook, Craigslist, and Snowball recruiting. Soc. Sci. Comput. Rev. 2016;34(3):360–377.

- Ibarra J.L., Agas J.M., Lee M., Pan J.L., Buttenheim A.M. Comparison of online survey recruitment platforms for hard-to-reach pregnant smoking populations: feasibility study. JMIR Research Protocols. 2018;7(4):e101. doi: 10.2196/resprot.8071.

- Ingersoll K., Frederick C., MacDonnell K., Ritterband L., Lord H., Jones B., Truwit L. A pilot RCT of an internet intervention to reduce the risk of alcohol-exposed pregnancy. Alcohol. Clin. Exp. Res. 2018;42(6):1132–1144. doi: 10.1111/acer.13635.

- Jones S., Fox S. Generations Online in 2009. Pew Research Center; 2009. Retrieved from http://www.pewinternet.org/2009/01/28/generations-online-in-2009/

- Juraschek S.P., Plante T.B., Charleston J., Miller E.R., Yeh H.-C., Appel L.J.…Hermosilla M. Use of online recruitment strategies in a randomized trial of cancer survivors. Clinical Trials (London, England). 2018;15(2):130–138. doi: 10.1177/1740774517745829.

- Kayrouz R., Dear B.F., Karin E., Titov N. Facebook as an effective recruitment strategy for mental health research of hard to reach populations. Internet Interv. 2016;4:1–10. doi: 10.1016/j.invent.2016.01.001.

- Lane T.S., Armin J., Gordon J.S. Online recruitment methods for web-based and mobile health studies: a review of the literature. J. Med. Internet Res. 2015;17(7):e183. doi: 10.2196/jmir.4359.

- Leach L.S., Butterworth P., Poyser C., Batterham P.J., Farrer L.M. Online recruitment: feasibility, cost, and representativeness in a study of postpartum women. J. Med. Internet Res. 2017;19(3):e61. doi: 10.2196/jmir.5745.

- Li C., Balluz L.S., Okoro C.A., Strine T.W., Lin J.-M.S., Town M.…Valluru B. Surveillance of certain health behaviors and conditions among states and selected local areas—Behavioral Risk Factor Surveillance System, United States, 2009. Morb. Mortal. Wkly. Rep. Surveill. Summ. 2011;60(9):1–249.

- Montag A.C., Brodine S.K., Alcaraz J.E., Clapp J.D., Allison M.A., Calac D.J.…Chambers C.D. Preventing alcohol-exposed pregnancy among an American Indian/Alaska Native population: effect of a screening, brief intervention, and referral to treatment intervention. Alcohol. Clin. Exp. Res. 2015;39(1):126–135. doi: 10.1111/acer.12607.

- Musiat P., Winsall M., Orlowski S., Antezana G., Schrader G., Battersby M., Bidargaddi N. Paid and unpaid online recruitment for health interventions in young adults. J. Adolesc. Health. 2016;59(6):662–667. doi: 10.1016/j.jadohealth.2016.07.020.

- Perrin A., Duggan M. Americans' Internet Access: 2000–2015. Pew Research Center; 2015. Retrieved from http://www.pewresearch.org/wp-content/uploads/sites/9/2015/06/2015-06-26_internet-usage-across-demographics-discover_FINAL.pdf

- Quach S., Pereira J.A., Russell M.L., Wormsbecker A.E., Ramsay H., Crowe L.…Kwong J. The good, bad, and ugly of online recruitment of parents for health-related focus groups: lessons learned. J. Med. Internet Res. 2013;15(11):e250. doi: 10.2196/jmir.2829.

- Ramo D.E., Prochaska J.J. Broad reach and targeted recruitment using Facebook for an online survey of young adult substance use. J. Med. Internet Res. 2012;14(1). doi: 10.2196/jmir.1878.

- Ramo D.E., Hall S.M., Prochaska J.J. Reaching Young Adult Smokers Through the Internet: Comparison of Three Recruitment Mechanisms. 2010;12:768–775.

- Routledge F.S., Davis T.D., Dunbar S.B. Recruitment strategies and costs associated with enrolling people with insomnia and high blood pressure into an online behavioral sleep intervention: a single-site pilot study. J. Cardiovasc. Nurs. 2017;32(5):439–447. doi: 10.1097/JCN.0000000000000370.

- Sullivan P.S., Khosropour C.M., Luisi N., Amsden M., Coggia T., Wingood G.M., DiClemente R.J. Bias in online recruitment and retention of racial and ethnic minority men who have sex with men. J. Med. Internet Res. 2011;13(2). doi: 10.2196/jmir.1797.

- Tenkku L.E., Mengel M.B., Nicholson R.A., Hile M.G., Morris D.S., Salas J. A web-based intervention to reduce alcohol-exposed pregnancies in the community. Health Education & Behavior: The Official Publication of the Society for Public Health Education. 2011;38(6):563–573. doi: 10.1177/1090198110385773.

- Valdez R.S., Guterbock T.M., Thompson M.J., Reilly J.D., Menefee H.K., Bennici M.S.…Rexrode D.L. Beyond traditional advertisements: leveraging Facebook's social structures for research recruitment. J. Med. Internet Res. 2014;16(10). doi: 10.2196/jmir.3786.

- Watson N.L., Mull K.E., Heffner J.L., McClure J.B., Bricker J.B. Participant recruitment and retention in remote eHealth intervention trials: methods and lessons learned from a large randomized controlled trial of two web-based smoking interventions. J. Med. Internet Res. 2018;20(8). doi: 10.2196/10351.

- Wise T., Arnone D., Marwood L., Zahn R., Lythe K.E., Young A.H. Recruiting for research studies using online public advertisements: examples from research in affective disorders. Neuropsychiatr. Dis. Treat. 2016;12:279–285. doi: 10.2147/NDT.S90941.

- Worthen M.G. An invitation to use craigslist ads to recruit respondents from stigmatized groups for qualitative interviews. Qual. Res. 2014;14(3):371–383.