Abstract

Jumping to conclusions during probabilistic reasoning is a cognitive bias reliably observed in psychosis and linked to delusion formation. Although the reasons for this cognitive bias are unknown, one suggestion is that psychosis patients may view sampling information as more costly. However, previous computational modeling has provided evidence that patients with chronic schizophrenia jump to conclusions because of noisy decision-making. We developed a novel version of the classical beads task, systematically manipulating the cost of information gathering in four blocks. For 31 individuals with early symptoms of psychosis and 31 healthy volunteers, we examined the numbers of “draws to decision” when information sampling had no, a fixed, or an escalating cost. Computational modeling involved estimating a cost of information sampling parameter and a cognitive noise parameter. Overall, patients sampled less information than controls. However, group differences in numbers of draws became less prominent at higher cost trials, where less information was sampled. The attenuation of group difference was not due to floor effects, as in the most costly block, participants sampled more information than an ideal Bayesian agent. Computational modeling showed that, in the condition with no objective cost to information sampling, patients attributed higher costs to information sampling than controls did, Mann–Whitney U = 289, p = 0.007, with marginal evidence of differences in noise parameter estimates, t(60) = 1.86, p = 0.07. In patients, individual differences in severity of psychotic symptoms were statistically significantly associated with higher cost of information sampling, ρ = 0.6, p = 0.001, but not with more cognitive noise, ρ = 0.27, p = 0.14; in controls, cognitive noise predicted aspects of schizotypy (preoccupation and distress associated with delusion-like ideation on the Peters Delusion Inventory). Using a psychological manipulation and computational modeling, we provide evidence that early-psychosis patients jump to conclusions because of attributing higher costs to sampling information, not because of being primarily noisy decision makers.

Keywords: jumping to conclusions, schizophrenia, beads, fish, cognitive bias, computational psychiatry, At Risk Mental State

INTRODUCTION

A consistent psychological finding in schizophrenia research is that patients, especially those with delusions, gather less information before reaching a decision. This tendency to draw a conclusion on the basis of little evidence has been called a jumping-to-conclusions (JTC) bias (Garety & Freeman, 2013). According to cognitive theories of psychosis, a JTC bias is a trait representing liability to delusions. People who jump to conclusions easily accept implausible ideas, discounting alternative explanations and thus ensuring the persistence of delusions (Garety & Freeman, 1999; Garety, Bebbington, Fowler, Freeman, & Kuipers, 2007; Garety et al., 2013). Reviews and meta-analyses have confirmed the specificity, strength, and reliability of the association of JTC bias and psychotic symptoms (Dudley, Taylor, Wickham, & Hutton, 2016; Fine, Gardner, Craigie, & Gold, 2007; Garety & Freeman, 2013; Ross, McKay, Coltheart, & Langdon, 2015; So, Garety, Peters, & Kapur, 2010; So, Siu, Wong, Chan, & Garety, 2015).

The JTC bias is commonly measured in psychosis research using variants of the beads in the jar task (Huq, Garety, & Hemsley, 1988). A person is presented with beads drawn one at a time from one of two jars containing beads of two colors mixed in opposite ratios (Garety, Hemsley, & Wessely, 1991; Huq et al., 1988). JTC has been operationally defined in the beads tasks as making decisions after just one or two beads (Garety et al., 2005; Warman, Lysaker, Martin, Davis, & Haudenschield, 2007). The commonly used outcome in this task is the number of beads seen before choosing the jar, known as draws to decision (DTD). The task requires the participant to decide how much information to sample before making a final decision. This behavior can be compared to the behavior of an ideal Bayesian reasoning agent (Huq et al., 1988). However, when people experience evidence seeking as costly, it is thought that gathering less information could be seen as optimal, leading to monetary gains at the expense of accuracy (Furl & Averbeck, 2011).

Although the JTC bias has been well replicated, the neurocognitive mechanisms underlying it are unknown; many possible psychological explanations have been put forward, not all of which are mutually exclusive (Evans, Averbeck, & Furl, 2015). Motivational factors, such as intolerance of uncertainty (Bentall & Swarbrick, 2003; Broome et al., 2007), a “need for closure” (Colbert & Peters, 2002), a cost to self-esteem of seeming to need more information (Bentall & Swarbrick, 2003), or an abnormal “hypersalience of evidence” (Esslinger et al., 2013; Menon, Mizrahi, & Kapur, 2008; Speechley, Whitman, & Woodward, 2010), have been posited as potentially underlying the JTC bias. A common theme emerging from the intolerance of uncertainty, the need for closure, and the cost to self-esteem hypotheses is that patients experience an excessive cost of sampling information.

Computational models allow researchers to consider important latent factors influencing decisions. The costed Bayesian model (Moutoussis, Bentall, El-Deredy, & Dayan, 2011) incorporates a Bayesian consideration of future outcomes with the subjective benefits or penalty (cost) for gathering additional information on each trial and the noise during the decision-making; Moutoussis and colleagues (2011) applied this computational model to the information sampling behavior of a sample of chronic schizophrenia patients undertaking the beads task. They found, contrary to their expectations, that a higher perceived cost of the information gathering did not underpin the JTC bias. Therefore they concluded that differences in the noise of decision-making were more useful in explaining the differences between patients and controls than the perceived cost of the information sampling. However, here we reason that a rejection of the increased cost of information sampling account of the JTC bias based on this finding is premature, as patients with chronic schizophrenia may not be representative of all psychosis patients, especially not those at early stages of psychosis—a stage particularly relevant for understanding the formation of delusions. A variety of different cognitive factors may contribute to the JTC bias; the balance of contributory factors may differ in different patient populations, with noisy decision-making relating to executive cognitive impairments predominating in chronic but perhaps not in early stages of psychosis. Furthermore, Moutoussis and colleagues applied their model to an existing dataset (Corcoran et al., 2008) that used the classic beads task. In this task, no explicit value was assigned to getting an answer correct or incorrect, and no explicit cost was assigned to gathering information. The authors themselves concluded that their work required replication, including incorporation of experimental manipulation of rewards and penalties.

The current study investigated the hypothesis that patients with early psychosis attribute higher costs to information sampling using a novel version of the traditional beads task and computational modeling. Focusing on patients at early stages of psychosis allowed us to investigate the JTC bias before the onset of a potential neuropsychological decline seen in some patients with chronic schizophrenia and to study a largely unmedicated sample of psychosis patients. Specifically, we were interested in testing whether patients adapt their decision strategies when there is an explicit cost of information sampling. We therefore developed a variation of the beads task in which there were blocks with and without an explicit cost of information sampling, and we gave feedback for correct and incorrect answers. This manipulation allowed the comparison between groups on different cost schedules. However, it also allowed us to test the competing hypothesis, which is that psychosis patients jump to conclusions because of primarily noisy decision-making behavior. Under this account, patients should be insensitive to a cost manipulation in the novel setup of the paradigm and apply random decision-making.

This study presents a novel investigation of the processes that lead to reduced information sampling in psychosis. We hypothesized that (a) psychosis patients would gather less information than controls when gathering information is cheap and that (b) psychosis patients and controls would adjust their information sampling according to experimental cost manipulations and that the adjustments would mitigate, but not abolish, the difference between the groups. Finally, we hypothesized that (c) the costed Bayesian model applied to this paradigm and a largely unmedicated early-psychosis group would provide explanatory evidence for JTC bias in favor of less information sampling because of higher perceived costs rather than purely noisy decision-making.

METHODS

Study Participants

The study was approved by the Cambridgeshire 3 National Health Service research ethics committee. An early-psychosis group (N = 31) was recruited, consisting of individuals with first-episode psychotic illness (N = 14) or with at-risk mental states (ARMS; N = 17) from the Cambridge early intervention service in psychosis (CAMEO). Inclusion criteria were as follows: age 16–35 years and current psychotic symptoms meeting either ARMS or first episode of psychosis (FEP) criteria according to the Comprehensive Assessment of At-Risk Mental States (CAARMS; Morrison et al., 2012; Yung et al., 2005). Patients with FEP were required to meet ICD-10 criteria for a schizophrenia spectrum disorder (F20, F22, F23, F25, F28, F29) or affective psychosis (F30.2, F31.2, F32.3). Healthy volunteers (N = 31) without a history of psychiatric illness or brain injury were recruited as control subjects. None of the participants had drug or alcohol dependence. Healthy volunteers had not reported any personal or family history of neurological or psychotic illness and were matched with regard to age, gender, handedness, level of education, and maternal level of education. None of the patients with ARMS were taking antipsychotic medication, and four patients with FEP were on antipsychotic medication at the time of testing. All of the experiments were completed with the participants’ written informed consent.

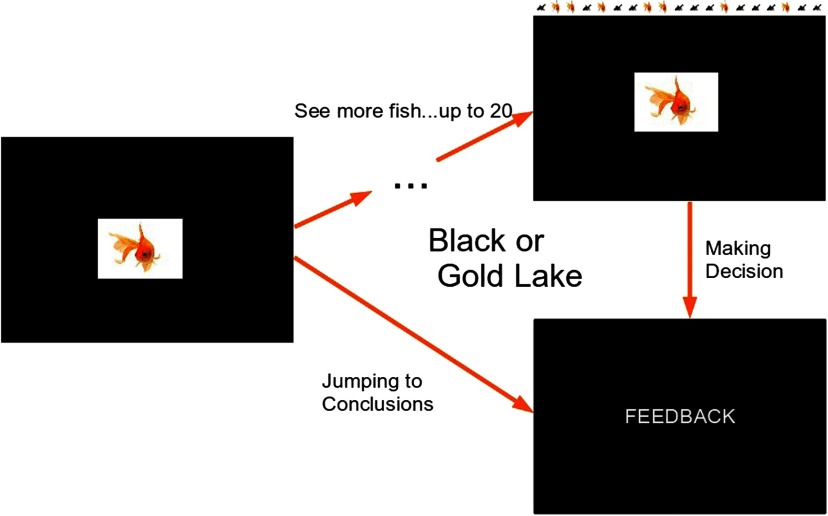

Behavioral JTC Task

This was a novel task (Figure 1), based on previously published tasks (Garety et al., 1991; Huq et al., 1988) of reasoning bias in psychosis, but amended in the light of decision-making theory, according to which the amount of evidence sought is inversely proportional to the costs of information sampling. These costs include the high subjective cost of uncertainty and the cost to self-esteem or other factors (Moutoussis et al., 2011). Participants were told that there are two lakes, each containing black and gold fish in two different ratios (60:40). The ratios were explicitly stated and displayed on the introductory slide. A series of fish was drawn from one of the lakes; all the previously “caught” fish were visible to reduce the working memory load. The participants were informed that fish were being “caught” randomly from either of the two lakes and then allowed to “swim away.” We used a pseudo-randomized order for each trial, which was the same for all participants. The lake from which the fish were drawn was also pseudo-randomized.

Figure 1. .

Experimental design of a single trial. In 50% of the trials, fish were coming from the mainly black lake, and in 50%, they were coming from the mainly gold lake. The order was pseudo-randomized so that the same sequences were used for all participants. Feedback, depending on the block, was either of the words “Correct” and “Incorrect” in Block 1 or the number of points won (or lost) during the trial in all subsequent blocks.

Participants could ask for a maximum of 20 fish to be shown. After each fish shown, they indicated whether the fish came from Lake G (mainly gold) or Lake B (mainly black) or asked to see another fish. The trial terminated when the subject chose the lake. There were four blocks, each with the 10 trials of the predetermined sequences to increase reliability. Block 1 was similar to the classical beads task and was included to provide a reference point. The only difference was that feedback (“correct” or “incorrect”) was provided after each trial. In Block 2, a win was assigned to a correct decision (100 points) and a loss (−100 points) to an incorrect decision. In Block 3, the cost of each extra fish after the first one was introduced (−5 points) was subtracted from the possible win or loss of 100 points for making a correct or incorrect decision, respectively. Block 4 was similar to Block 3, but the information sampling cost was incrementally increased: The first fish would cost 0 points, the second −5 points, the third −10 points, and so on. Thus a higher number of fish sampled led to more lost points. Subjects performed the task at their own pace. Whether Lake G or Lake B was correct was randomized. The task consisted of four blocks. Within each block were 10 trials of predetermined sequences of fish to increase reliability. All of the fish that were “caught” during one trial were visible on the screen to minimize the working memory load. Block order was not randomized because the task increased in complexity.

The main outcome variable was the number of fish sampled (DTD). Secondary outcomes were the accuracy of the decision, calculated according to Bayes’s theorem, based on the probability of the chosen lake given the color and number of fish seen (Everitt & Skrondal, 2010), and the dichotomous JTC variable, which is defined as making a decision after two or fewer pieces of information.

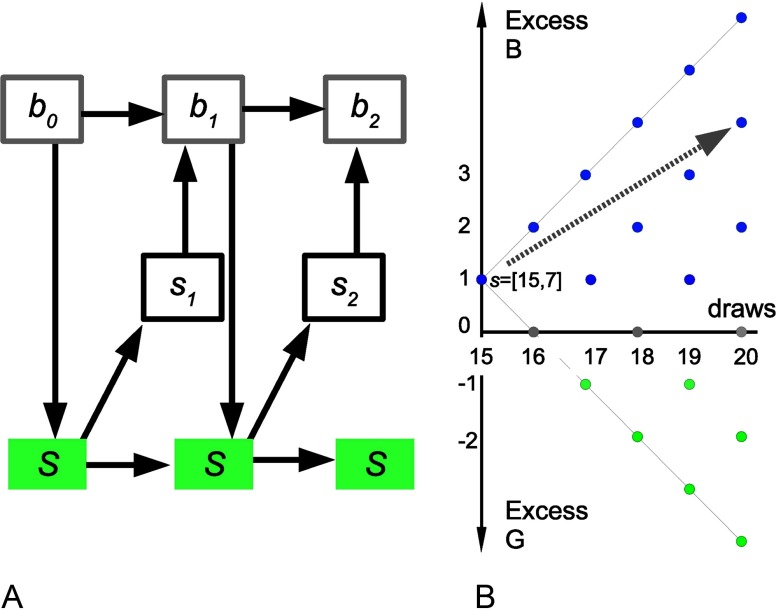

Partially Observable Markov Process Decision-Making

We consider a belief-based model of decision-making, formally a partially observable Markovian decision process, to model behavior in this task. The process is Markovian because we can concisely formulate the state in which the people find themselves upon observing nd draws, so that the state contains all information that can be extracted from observations thus far. As beads are drawn from one jar only at each trial, this can be simply defined as the number of g fishes seen so far and the total number seen: s = [nd, ng]. The agent is interested to infer upon the true state of the world, which is a B or G lake. This is not directly observable but partially observable. The agent maintains a belief component of their state, P(G|s). The Markov property still holds: future beliefs are independent of past beliefs given the current state (Figure 2A). As we will see, belief-state transitions can be calculated just by considering the evidence so far. Given the current belief state, the probability of the possible unfolding of the task into the future can be estimated and hence the expected returns for each possible future decision (Figure 2B). The value of the available choices can thus be estimated: choosing the B lake DB, the G lake DG, or sampling another piece of information DS. The agent chooses accordingly and either terminates the trial or gathers a new datum and repeats the process (Figure 2).

Figure 2. .

State space schematic. A) Markovian transitions in this task. Top: belief (probabilistic) component of states; middle: observable part of the state (data/feedback). Down arrows: actions (sample, declare). Bottom: true state. For example, let the cost of sampling be very high. Then b0 may be “equiprobable lakes,” Action 1 “sample,” s1 “B,” b1 “60% B,” Action 2 “declare B,” and s1 “Wrong.” B) In this example, sampling cost is very low. A person has drawn 15 fishes, 7 of them g, hence the position of 15 on the x-axis and +1 on the y-axis as there is a +1 excess of black fish so far. The visible states corresponding to all possible future draws are shown. Looking ahead (example: gray arrow), the agent finds the “sampling” action more valuable in that the current preference for the B lake is likely to be strengthened at very low cost.

We now formally specify the model. Let P(G|nd, ng) be the probability of the lake being gold after drawing nd fish and seeing ng gold fish. Using Bayes’s theorem, and assuming that a gold lake and a black lake are equally probable,

| (1) |

We then need to calculate the value of each action. For the “declare” choices, the action value is the expected reward for a correct answer minus the expected cost for a wrong answer. For example, if Q(DG; nd, ng) is the action value of declaring the lake gold, after drawing nd fish and seeing ng gold fish,

| (2) |

where RC is the reward for declaring the color of the correct lake and CW is the cost of declaring the color of the wrong lake. In our task, RC = CW, so

Similarly,

The action value of sampling again is the expectation over the value of the next state minus the cost of sampling CS(nd). If the value of the (possible) next state is V(s′), the action value for sampling again is the sum over the new possible states, weighted by their probabilities. The latter depends, in turn, on the identity of the true underlying lake, L:

| (3a) |

The possible outcomes for sampling again, and getting a black fish (going from (nd, ng) to (nd + 1, ng)) and getting a gold fish (going from (nd, ng) to (nd + 1, ng + 1)), are

| (3b) |

Agents will tend to prefer actions with the greatest value. An ideal, reward-maximizing agent will always choose the action with the maximum value and will thus endow the corresponding state with this value. Denoting q as the vector of action values,

| (4a) |

Real agents will choose probabilistically as a function of action values, so

| (4b) |

Agents cannot fill in the action values for sampling starting from their current state, as the next state value is not known. However, they can fill in all values by backward inference. At the very end, nd = 20, sampling is not an option, and the action values can be calculated directly from Equation 2 and the state value from Equation 4a. Once all possible state values for nd = 20 have been calculated, Equations 2, 3a, 3b, 4a, and 4b are used to calculate action and state values for nd = 19 and downward to nd = 1.

We now turn to the ideal, deterministically maximizing agent against which we can compare human performance. When given the same sequence of fish as human participants, on average, the ideal Bayesian agent samples 20, 20, 3.5, and 1 fish in each of the four blocks, respectively, achieving total winnings of 1,070 points (Table 3). For no cost (Blocks 1 and 2), an ideal Bayesian agent samples all the fish and has p = 0.835 of being correct (100% if we had infinite fish). For constant cost (5 points, Block 3), it samples until the difference between black and gold fish (Nb − Ng) is 2, with p = 0.692 probability of being correct. For increasing cost (Block 4), it guesses after the first fish, so there is 0.6 probability of being correct. To model real agents, the probabilistic action choice in Equation 4b took the softmax form:

| (5) |

with z a normalizing constant that is the same for all the actions at a specific state and T a decision temperature parameter, described later (Moutoussis et al., 2011).

Table 3. .

Information sampling in controls, patients, and an ideal Bayesian agent

| Group | Control (n = 31), Mean (SD) | Psychosis (n = 31), Mean (SD) | Ideal Bayesian agent |

|---|---|---|---|

| DTD 1 | 12.01 (5.42) | 8.14 (6.16) | 20 |

| DTD 2 | 12.69 (5.67) | 9.15 (6.31) | 20 |

| DTD 3 | 5.80 (4.23) | 4.14 (2.64) | Nb − Ng = ±2 |

| DTD 4 | 3.71 (3.12) | 3.03 (2.09) | 1 |

| P corr 1 | 0.72 (0.10) | 0.66 (0.10) | 0.835 |

| P corr 2 | 0.75 (0.09) | 0.69 (0.11) | 0.835 |

| P corr 3 | 0.65 (0.07) | 0.62 (0.08) | 0.692 |

| P corr 4 | 0.62 (0.09) | 0.59 (0.08) | 0.6 |

Note. DTD = draws to decision (i.e., number of fish seen by the participant before reaching a decision); Nb = number of black; Ng = number of gold; P corr = probability of being correct in Blocks 1–4.

Thus two parameters shape a participant’s behavior:

-

•

CS, the cost of sampling, that is, the subjective cost of each additional piece of information compared to the final reward. It may be greater or smaller than the costs imposed by the experimenter but is here taken to be constant (in a given block). High values of CS mean that decisions are made early.

-

•

T, the noise parameter. As this value increases, the probability of the participant following the ideal behavior (for their given value of CS) decreases and their actions become more uniformly random.

Model Parameter Estimation

Here we were interested in the most accurate possible estimates of the means and variances characterizing the psychosis and control groups. We therefore used what is known as a hierarchical model, a variant of the random effects approach. Here a participant’s model parameters are drawn from a group, or population, distribution. This procedure estimates the mean and variance for CS and for T for the whole group. For a group of, for example, 30 participants with 10 data points each, we use 300 data points to estimate 4 values (i.e., mean and variance for each of the two parameter distributions) rather than 2 different parameters for each of the 30 participants (i.e., 60 parameters in total). We assumed both parameters to be positive and a priori uncorrelated and therefore appropriately modeled by independent gamma distributions. The standard ways that mathematicians parameterize gamma distributions are in terms of a shape and scale or rate. However, these do not map intuitively to the quantities we are usually interested in clinical research, that is, a measure of center and a measure of spread. Thus we follow Moutoussis et al. (2011) in describing our parameters by mean and variance. We used the expectation-maximization (EM) technique (see Ermakova, Gileadi et al., 2019, Appendix; see also Moutoussis et al., 2011). In brief, EM proceeds by first assuming uninformative distributions at the group level; using these as uninformative priors, it derives probability distributions for the parameters of each participant based on each one’s data. This is expectation. Then, it reestimates the group-level distributions to maximize the likelihood of the (temporarily fixed) lower level. This is maximization. The group-level distributions then form empirical priors that are used in place of the uninformative ones. The process is repeated until all estimates are stable; we ran between 25 and 30 iterations of the EM algorithm (see Ermakova, Gileadi et al., 2019, Appendix). Runs took about 30 s per iteration per participant on a single core of an Intel Core2 Duo CPU and 4 GB of RAM.

Once the maximum likelihood parameters for a given group are estimated, they can be interpreted using the integrated Bayesian information criterion (iBIC; Huys et al., 2012, 2015), which uses the likelihood values of best-fit parameters for two different models to decide which model best represents the data. In the case of the ordinary BIC, to assess model fit, we calculate the maximum likelihood of the data of each participant given a particular model, and we penalize this in proportion to the number of parameters in the model. The underlying assumption is that a greater number of parameters represents a proportionate reduction of prior belief that the participant belongs to a given region of the parameter space and that all parameters are on the same footing for each participant. The volume of this parameter space scales to the power of its dimensionality, that is, the number of parameters, so the log of this, used in the BIC, is proportional to this number of parameters. Redundant parameters, which would result in overfitting, are thus penalized. However, this approximation can be refined. The study sample itself gives information about the prior probability that a particular parameter obtains at the micro-level of the individual. This is the empirical prior, which, in our case, is calculated by EM. Now the complexity penalty at the level of the individual is calculated by forming a mean, or integrated, likelihood weighed by this prior. This allows for the data to speak to some parameters being “more equal than others” in penalizing complexity. We can now account well for the penalty due to the prior at the level of the individual, but we have not considered the level of the group. Should we assume that, say, patient participants should be fitted with different empirical priors than healthy controls, or the same? To compare separate-fit and common-fit statistical models, we turn to the BIC approximation of complexity proportional to the number of parameters, but now at the level of the groups. Thus the integrated BIC, which is integrated in the sense that it contains weighted-mean likelihoods rather than maximum likelihoods, also contains a penalty term for models in proportion to the number of empirical priors, or groups, used. Therefore it can be used for hypothesis testing: If one model has all participant parameters coming from one distribution (four parameters) and a second model separates the control and unhealthy groups (eight parameters), a comparison of iBIC values can be used to decide if splitting the sample is justified. The interested reader is referred to Huys et al. (2012, 2015) for mathematical details.

In addition, we conducted analyses based on individual participants’ estimated model parameters. A reliable test of the hypothesis that the groups differ in the cost (or noise) parameters can be created by forcing the model to treat all participants as coming from one group, with a single group mean and variance, then using the model’s estimates of the single subject parameters to conduct a test of whether there are differences according to diagnostic group. This approach is overconservative but serves a purpose in subjecting the test of group differences to a stern challenge.

Rating Scales and Questionnaires

The participants underwent a general psychiatric interview and assessment (Tables 1 and 2) using the CAARMS (Morrison et al., 2012; Yung et al., 2005), the Positive and Negative Symptom Scale (PANSS; Kay, Fiszbein, & Opler, 1987), the Scale for the Assessment of Negative Symptoms (SANS; Andreasen, 1989), and the Global Assessment of Functioning (GAF; Hall, 1995). The Beck Depression Inventory (BDI; Beck, Steer, & Brown, 1996) was used to assess depressive symptoms during the last 2 weeks. IQ was estimated using the Culture Fair Intelligence Test (Cattell & Cattell, 1973). Schizotypy was measured with the 21-item Peters et al. Delusions Inventory (PDI-21; Peters, Joseph, Day, & Garety, 2004).

Table 1. .

Sample characteristics for healthy controls and patients with early psychosis

| Variable | Controls (n = 31) | Early psychosis (n = 31) | Statistics | ||

|---|---|---|---|---|---|

| Mean | SD | Mean | SD | ||

| Age (years) | 21.58 | 2.41 | 22.52 | 4.66 | t(60) = 0.993, p = 0.325 |

| Gender (male/female) | 18/13 | 18/13 | χ(1) = 0.0, p = 1 | ||

| IQ | 110.52 | 15.79 | 102.26 | 17.91 | t(60) = −1.926, p = 0.059 |

| Level of education | 2.35 | 0.79 | 2.00 | 1.17 | U(2) = 373.5, p = 0.117 |

| Mother’s level of education | 2.19 | 0.91 | 2.11 | 1.37 | U(2) = 440.0, p = 0.884 |

| Smoking (yes/no)a,* | 6/25 | 18/13 | χ(1) = 9.79, p = 0.004 | ||

| Alcohol | 2.42 | 0.85 | 1.78 | 1.37 | U(2) = 419.5, p = 0.368 |

| Cannabis | 0.90 | 0.79 | 1.26 | 1.23 | U(2) = 430.0, p = 0.459 |

| Other drugsb,* | 0.49 | 1.02 | 1.11 | 1.36 | U(2) = 355.5, p = 0.039 |

| PDI-21* | 4.45 | 2.34 | 7.83 | 4.58 | t(45) = 3.359, p = 0.002 |

| Distress* | 9.52 | 6.94 | 24.00 | 15.32 | t(45) = 4.430, p < 0.001 |

| Preoccupation* | 10.28 | 6.48 | 24.78 | 16.11 | t(45) = 4.341, p < 0.001 |

| Conviction* | 13.31 | 8.13 | 26.56 | 17.11 | t(45) = 3.58, p = 0.001 |

| BDI* | 3.40 | 3.90 | 25.34 | 14.12 | t(57) = 8.197, p < 0.001 |

| CAARMS summary scorec,* | 0.52 | 1.15 | 18.00 | 7.53 | t(60) = 12.776, p < 0.001 |

Note. Intelligence was measured with a Culture Fair Intelligence Test. BDI = Beck Depression Inventory; CAARMS = Comprehensive Assessment of At Risk Mental States; PDI = Peter’s Delusion Inventory.

0 = nonsmoker; 1 = smoker.

Substance use was measured on a 5-point scale ranging from 0 (never used) to 5 (daily user). Other drugs included hallucinogens, stimulants, or sedatives.

A summary score of Unusual Thought Content, Non-bizarre Ideas, and Perceptual Abnormalities intensity and frequency subscales (for individual subscales of CAARMS and other clinical assessment measures, see Table 2).

Significant differences.

Table 2. .

Clinical assessment measures for 31 patients with psychosis

| Mean | SD | |

|---|---|---|

| UTC | 2.44 | 2.19 |

| UTC frequency | 2.33 | 2.01 |

| NBI | 3.41 | 1.67 |

| NBI frequency | 3.52 | 1.48 |

| PA | 3.33 | 2.06 |

| PA frequency | 2.52 | 1.89 |

| DS | 0.59 | 1.28 |

| DS frequency | 0.96 | 1.99 |

| ADB | 1.81 | 1.82 |

| ADB frequency | 2.15 | 1.92 |

| SS | 1.59 | 1.48 |

| SS frequency | 1.30 | 1.44 |

| GAF score | 55.00 | 18.54 |

| SANS score | 0.35 | 0.75 |

| PANSS positive | 13.68 | 3.99 |

| PANSS negative | 9.87 | 4.88 |

Note. BDI = Beck Depression Inventory; GAF = Global Assessment of Functioning; SANS = Scale for Assessment of Negative Symptoms. Comprehensive Assessment of At Risk Mental States (CAARMS) subscales included Unusual Thought Content (UTC), Non-bizarre Ideas (NBI), Perceptual Abnormalities (PA), Disorganized Speech (DA), Aggression/Dangerous Behavior (ADB), Suicidality and Self-Harm (SS).

Statistical Analysis of the Behavioral Data

The effect of manipulations of wins, losses, and costs on the DTD and accuracy was assessed by repeated-measures analysis of variance (ANOVA) using SPSS 23. Although DTD was not normally distributed, repeated-measures ANOVA is robust to violations of normality and was therefore an appropriate test to run. IQ and depressive symptoms scores were initially included as covariates and dropped from the subsequent analysis where nonsignificant. We report two-tailed p-values, which were significant at p < 0.05. When the assumption of sphericity was violated, we applied Greenhouse–Geisser corrections. We also examined whether DTD was correlated with the severity of psychotic symptoms in the patient group (CAARMS positive symptoms) and with schizotypy scores on the PDI in controls using Spearman’s correlation coefficients. The intraclass correlation coefficients (ICCs) were used to estimate the consistency of decision-making. We calculated the ICCs of the mean number of choices in each block separately for patients and controls.

For completeness and to help relate our study to prior literature (or for future meta-analyses), we report data on the dichotomous variable JTC, defined as making a decision after receiving one or two pieces of information, and we compare the FEP and ARMS patient groups separately to controls on Block 1 DTD and estimated model parameters.

RESULTS

Demographical Characteristics of the Participants

In Tables 1 and 2, the sociodemographic and cognitive parameters as well as clinical measures of the participants are presented. Although healthy volunteers had higher IQ compared to the psychosis group, the difference was not significant, and the groups were matched with regard to level of maternal education and number of years of education. The control and patient groups did not differ with regard to gender or age. There were significantly more smokers in the patient group compared to the control group, and some subjects of the patient group used more recreational drugs. For all of the other measures (e.g., alcohol or cannabis), there were no significant group differences. As expected, there are significant differences between the healthy volunteers and the patients in all subscales of CAARMS (Table 2), on the self-reported depression questionnaire (BDI), and in self-reported schizotypy scores (PDI). In the patient group only, we performed additional clinical assessments. The mean (±SD) score for PANSS positive symptoms was 13.68 (±3.99); for PANSS negative symptoms 9.87 (±4.88); for SANS 0.35 (±0.75), and for GAF 55.00 (±18.54).

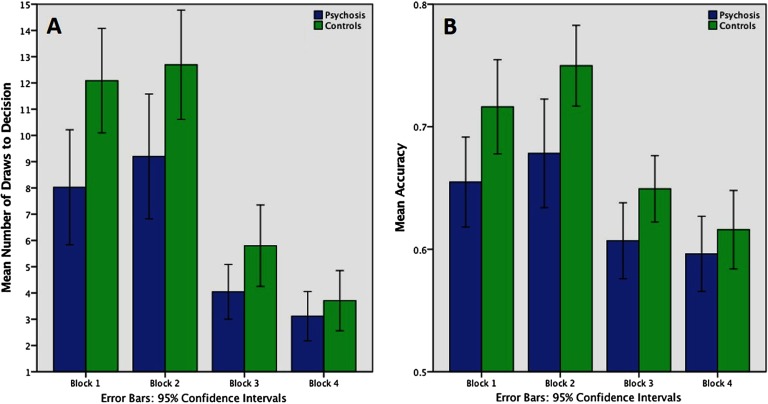

Group Differences in the Number of DTD and Points

Inspection of Figure 3A reveals that in all of the blocks, the controls took more DTDs than the patients. Mauchly’s test indicated that the assumption of sphericity was not violated, W(5) = 0.211, p < 0.001. On mixed-model ANOVA, there were significant main effects of block, F(3) = 94.49, p < 0.001, and of group, F(1) = 5.99, p = 0.017, on the number of DTD. The interaction between the group and the block was also significant, F(3) = 4.32, p = 0.006. This indicates that, depending on the group, block change had different effects on DTD. Group differences were statistically significant in the first two blocks (Block 1, p = 0.007; Block 2, p = 0.028), whereas in Blocks 3 and 4, the group differences were increasingly attenuated, as the cost of decision-making became increasingly high (Block 3, p = 0.059; Block 4, p = 0.419). Control behavior was more similar to the ideal Bayesian agent than patients on the first three blocks but not Block 4 (Table 3). Group differences in the probability of being correct were very similar to the results in the number of DTD (Figure 3B).

Figure 3. .

Performance: Draws to decision and accuracy according to block and group. A) Mean number of draws to decision in the four blocks of the task. On mixed-model ANOVA, there were significant main effects of block, F(3) = 94.49, p < 0.001, and of group, F(1) = 5.99, p = 0.017, and an interaction between group and block, F(3) = 4.32, p = 0.006, with group differences in Block 1, p = 0.007, and Block 2, p = 0.03. B) Probability of being correct (accuracy) at the time of making the decision in four task blocks. Here there was an effect for block, F(2) = 93.73, p < 0.001, a marginally significant interaction between Block ⋅ Group, F(2) = 2.52, p = 0.086 and a significant groups effect, F(1) = 4.14, p = 0.047.

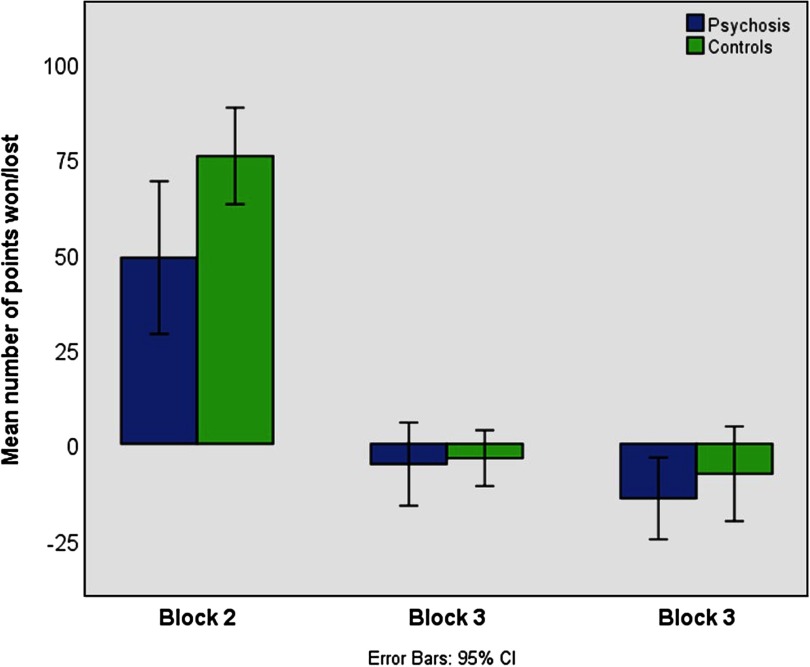

Analyzing the points won/lost in Blocks 2–4 (Figure 4), we identified four outliers in each group that significantly exceeded the ±2 standard deviation threshold. After excluding these subjects, the mixed-model ANOVA revealed a significant effect for block, F(2) = 93.73, p < 0.001, a marginally significant interaction between Block ⋅ Group, F(2) = 2.52, p = 0.086, and a significant groups effect, F(1) = 4.14, p = 0.047. Patients won significantly fewer points in Block 2, F(2) = 4.65, p = 0.035, but did not differ from controls in Blocks 3 and 4, both p > 0.3. Percentage and count of people displaying a JTC reasoning style are presented in Table 4.

Figure 4. .

Mean number of points won or lost in Blocks 2–3 across all 10 trials. Patients won significantly fewer points in Block 2, F(2) = 4.65, p = 0.035, but did not differ from controls in Block 3 and 4, both p > 0.3.

Table 4. .

Percentage and count of people who displayed JTC reasoning style

| Control (n = 31), n (%) | Psychosis (n = 31), n (%) | |

|---|---|---|

| No JTC | 18 (58.1) | 15 (48.4) |

| JTC Blocks 1/2 | 0 (0.0) | 1 (3.2) |

| JTC Blocks 3/4 | 11 (35.5) | 7 (22.6) |

| JTC all Blocks | 2 (6.5) | 8 (25.8) |

Note. JTC (jumping to conclusions) is defined as reaching a decision after being given just one or two pieces of information.

Intraclass Correlations of DTD and SD of the Mean DTD

ICCs of the mean number of DTD within each block were calculated separately for patients and controls. Within all blocks in both groups, the correlations were very high, indicating that behavior was consistent within each block (ICC values: Block 1, patients 0.965, controls 0.943; Block 2, patients 0.982, controls 0.973; Block 3, patients 0.972, controls 0.976; Block 4, patients 0.973, controls 0.978).

Correlation of Symptoms and IQ With DTD

We calculated two-tailed Spearman’s correlations with positive psychotic symptoms in patients and with PDI scores in controls. In the patient group, we used a summary score of the three CAARMS subscales that quantify positive psychotic symptoms, namely, Unusual Thought Content, Non-bizarre Ideas (mainly persecutory ideas), and Perceptual Aberrations. An additional advantage of the summary measure is that it provides one measure to reduce the number of correlations that need to be performed. We also ran correlation BDI scores because the groups differed on this measure. In the group with psychotic symptoms, the correlations with the overall CAARMS score were significant in the first three blocks (Block 1, ρ = −0.515, p = 0.003; Block 2, ρ = −0.489, p = 0.005; Block 3, ρ = −0.491, p = 0.005). There were no correlations in the psychotic symptoms group between DTD and BDI score, for all p > 0.1.

In controls, we found significant correlations of DTD in Block 1 with the Distress, ρ = −394, p = 0.035, and Preoccupation, ρ = −0.462, p = 0.012, subscales of the PDI. DTD in Block 2 correlated with the Preoccupation subscale of PDI, ρ = −0.387, p = 0.038. There were no significant correlations with BDI scores (e.g., for Block 1, ρ = −0.023, p = 0.905).

In the patients, we furthermore found a positive correlation between IQ and the first three blocks (Block 1, ρ = −0.481, p = 0.006; Block 2, ρ = −0.362, p = 0.045; Block 3, ρ = −0.364, p = 0.044). There was no such correlation in the controls.

Group Differences in the Probability of Being Correct (Accuracy)

Mauchly’s test indicated that the assumption of sphericity was not violated, W(5) = 0.667, p < 0.001. There was a significant main effect of block on the probability of being correct, F(3) = 53.502, p < 0.001, and a significant effect of group, F(1) = 5.514, p = 0.022. There was a significant interaction between the group and the block, F(1) = 2.791, p = 0.042, indicating that as the cost of decision-making increased, the group differences became attenuated.

Additional Analyses Having Excluded Participants on Medication or to Make Groups More Closely Matched on IQ

To demonstrate that the findings were unaffected by antipsychotic medication, we conducted repeat analyses having excluded the four patients taking antipsychotic medication. The results were similar to in the full sample. As before, on mixed-model ANOVA, there were significant main effects of block, F(3) = 88.59, p < 0.001, and of group, F(1) = 5.21, p = 0.026, on the number of DTD. The interaction between the group and the block was also significant, F(3) = 4.54, p = 0.004. Group differences were statistically significant in the first two blocks (Block 1, p = 0.013; Block 2, p = 0.023) but reduced in Blocks 3 and 4 (Block 3, p = 0.098; Block 4, p = 0.55).

When we excluded the three highest IQ controls and two lowest IQ patients to make groups more similar in IQ (resulting in control mean IQ 107 and patient mean 105), the results were similar: On mixed-model ANOVA, there were significant main effects of block, F(3) = 90.2, p < 0.001, and of group, F(1) = 5.25, p = 0.026, on the number of DTD. The interaction between the group and the block was also significant, F(3) = 3.50, p = 0.017. Group differences were statistically significant in the first two blocks (Block 1, p = 0.013; Block 2, p = 0.039) but reduced in Blocks 3 and 4 (Block 3, p = 0.062; Block 4, p = 0.45).

Computational Modeling Results: Analysis of Group Estimates of Model Parameters

In our computational analysis of Blocks 1–3, we found that patients with early psychosis assigned a higher cost to sampling more data than did healthy controls (Table 5). For example, in Block 1, where there was no explicit cost, the modeled mean cost of sampling in the controls was very low (1.9 ⋅ 10−3) compared to that of patients (1.7). The modeled variance was also higher for the patient group (13) compared to the controls (2.0 ⋅ 10−6). The estimated noise parameters were similar in both groups. For example, in Blocks 1 and 2, the respective group estimated mean noise parameters were 3.4 and 3.6 in controls and 4.2 and 2.9 in patients, respectively.

Table 5. .

Best-fit distribution parameters for all groups in all experiments

| Group | CSM | CSV | TM | TV | LL |

|---|---|---|---|---|---|

| Block 1: No cost | |||||

| Control | 1.9 ⋅ 10−3 | 2.0 ⋅ 10−6 | 3.4 | 13 | −943.747 |

| Psychosis | 1.7 | 13 | 4.2 | 13 | −762.993 |

| Combined | 0.7 | 2.2 | 3.6 | 13 | −1,735.65 |

| Block 2: No cost, but win or loss (±100) | |||||

| Control | 5.0 ⋅ 10−3 | 1.3 ⋅ 10−5 | 3.6 | 21 | −841.202 |

| Psychosis | 3.0 | 44 | 2.9 | 7.5 | −690.567 |

| Combined | 1.5 | 13 | 2.5 | 7.0 | −1,549.28 |

| Block 3: Fixed cost, plus win or loss (±100) | |||||

| Control | 1.6 | 9.9 | 4.1 | 2.2 | −707.604 |

| Psychosis | 5.2 | 100 | 4.4 | 2.2 | −545.704 |

| Combined | 3.1 | 38 | 4.2 | 1.0 | −1,260.45 |

Note. Best-fit parameters were calculated for each participant group separately and for the group formed by combining both participant groups. CSM = mean cost per sample for a particular group; CSV = variance of cost per sample within a particular group; TM is the mean noise parameter for a particular group; TV = variance of the noise parameter within a particular group; LL = log-likelihood of the data given the parameters.

In Block 3, where an explicit cost of 5 points per fish sampled was assigned, the model shows that patients very slightly overestimated the cost of sampling compared to the assigned cost (estimated mean cost of sample for patients 5.2), whereas the controls underestimated it (estimated mean cost of sample 1.6).

In Block 4, we explicitly told participants that the cost for additional information is not fixed but increases with amount of information requested. We did not fit the computational model to Block 4 because the model does not take into account the increasing cost structure set up.

To test the null hypothesis that both groups are drawn from the same distribution of behavior parameters, we used iBIC. Table 6 shows the results of that calculation, where the null hypothesis can be rejected with strong evidence. For example, in Block 1, despite the fact that the iBIC penalizes the use of extra parameters substantially, when fitting CSm, CSv, Tm, and Tv separately for each group, iBIC was 3,450.2, compared to an iBIC of 3,489.7 for fitting them as one combined group. Taken together, the iBIC can be used to compare the hypotheses about the two models of interest. In our case, those are the following two: (a) Unhealthy and healthy groups have independent CS and T parameter distributions, characterized by eight numbers (mean and variance for CS and for T, for each group), and (b) all participants come from the same pool of noise and cost parameters, characterized by four numbers. The iBIC suggests that Hypothesis 1 is better explained by the separate model in Blocks 1 and 2 and by the combined model in Block 3. Model fits were not changed substantially after exclusion for medication (Table 6).

Table 6. .

Integrated Bayesian information criterion values for the model where all participants are drawn from the same distribution, compared to the model where the healthy and unhealthy groups differ in their distributions

| Block | iBIC combined | iBIC separate | iBIC difference | iBIC difference after medication exclusions | iBIC difference after closer IQ matching |

|---|---|---|---|---|---|

| 1 (no cost) | 3,489.7 | 3,450.2 | 39.5 (very strong) | 43.9 | 36.1 |

| 2 (no cost, win or loss ±100) | 3,116.9 | 3,100.3 | 16.7 (very strong) | 16.6 | −12.9 |

| 3 (fixed cost, plus win or loss ±100) | 2,539.3 | 2,543.4 | −4.1 (preference for combined model) | −6.2 | −4.1 |

Note. iBIC values and differences are presented for analyses with all participants. iBIC differences are also shown for repeat analyses after exclusions for antipsychotic medication or closer IQ matching. Positive iBIC differences indicate the preference for separate groups, negative for a single combined group. iBIC = integrated Bayesian information criterion.

Computational Modeling Results: Analysis of Individual Participant-Level Cost and Noise Parameters

A very strong test of the hypothesis that the groups differ in the cost (or noise) parameters can be created by forcing the model to treat all participants as coming from one group, with a single group mean and variance, then using the model’s estimates of the single subject parameters to conduct a test of whether there are differences according to diagnostic group. Although we found (see earlier) that in Blocks 1 and 2, this assumption did not fit the data as well as modeling the groups separately, so the approach is overconservative, this procedure serves a purpose in subjecting the test of group differences to a stern challenge. The groups differed significantly on estimated cost parameters in this procedure (Block 1, controls mean = −0.24, median = −0.04, interquartile range = 0.07, psychosis mean = −1.1, median = −0.1, interquartile range 1.2, Mann–Whitney U = 289, p = 0.007; Block 2, controls mean = −0.8, median = −0.04, interquartile range = 0.08; psychosis mean = −2.2, median = −0.14, interquartile range = 4.9, U = 363, p = 0.038; Block 3, controls mean = −2.0, median = −0.17, interquartile range = 2.3, psychosis mean = −4.2, median = −0.48, interquartile range = 8.62, U = 338, p = 0.045) but generally were similar on estimated noise parameters (Block 1, controls mean = 2.96, SD = 2.08, patients mean = 4.14, SD = 2.86, t[60] = 1.86, p = 0.07; Block 2, controls mean = 2.27, SD = 1.61, patients mean = 2.81, SD = 1.95, t[60] = 1.2, p = 0.23; Block 3, controls mean = 4.1, SD = 0.74, patients mean = 4.31, SD = 0.41, t[60] = 1.2, p = 0.23).

The results were similar after exclusions for medication (Block 1, cost group difference Mann– Whitney U = 248, p = 0.008; noise group difference t[60] = 1.79, p = 0.08) or to equalized IQ (Block 1, cost group difference U = 246, p = 0.01; noise group difference t[60] = 1.5, p = 0.13).

Individually estimated greater cost parameters predicted higher psychotic symptom severity in patients, ρ = 0.58, p = 0.001. There was no significant association between estimated noise parameters and psychotic symptom severity, ρ = 0.27, p = 0.14. In controls, cost was associated with PDI preoccupation, ρ = 0.41, p = 0.03, and marginally with distress, ρ = 0.35, p = 0.06, and noise was associated with overall PDI score, ρ = 0.34, p = 0.07, distress, ρ = 0.42, p = 0.02, and preoccupation, ρ = 0.45, p = 0.01.

Cost parameter estimates were highly correlated across blocks (Blocks 1 and 2, ρ = 0.9; Blocks 1 and 3, ρ = 0.6; Blocks 2 and 3, ρ = 0.7). Noise parameters were also correlated across blocks (noise on the first two blocks, ρ = 0.8; noise on Blocks 1 and 3, ρ = 0.3; noise on Blocks 2 and 3, ρ = 0.4). However, despite associations across blocks, there were significant effects of block on both cost and noise (repeated-measures ANOVA effect of block on cost, F[2, 122] = 14, p = 0.000003; effect of block on noise, F[2, 122] = 22, p = 5 ⋅ 10−9). Cost and noise parameters were related to each other (e.g., on Block 1, ρ = 0.7) and, to a lesser extent, to IQ on some blocks (e.g., IQ vs. Block 1 cost, ρ = 0.3; Block 3, ρ = 0.3; Block 1 noise, ρ = 0.2; Block 3 noise, ρ = 0).

Subgroup Analysis

On Block 1 DTD, controls (mean DTD 12.1, SD = 5.4) gathered more evidence than FEP (mean 6.7, SD = 6.0), one-tailed t(44) = 3.0, p = 0.002, and ARMS (mean 9.2, SD = 5.9), one-tailed t(47) = 1.7, p = 0.045. On Block 1, cost parameter, controls (median 0.04, interquartile range 0.07) had lower values than FEP (median 2.5, interquartile range 4.1), Mann–Whitney U = 106, one-tailed p = 0.003, and ARMS (median = 0.06, interquartile range 0.7), Mann–Whitney U = 183, one-tailed p = 0.04. On Block 1, noise parameter, controls (mean 3.0, SD = 2.1) had lower values than ARMS (mean 4.2, SD = 3.1), t(45) = 1.7, one-tailed p = 0.045, and, marginally, than FEP (mean 4.0, SD = 2.7), t(44) = 1.5, one-tailed p = 0.075).

DISCUSSION

Our study shows that early-psychosis patients generally gather less information before coming to a conclusion compared to healthy controls. Both groups slightly increased their DTD when rewarded for a correct answer (Block 2) and significantly decreased their DTD when there was an explicit cost for the sampling of information (Blocks 3 and 4). The decrease was strongest in Block 4, including the incremental cost increase for each “extra fish.” These effects were especially strong in controls, as they gathered significantly more information during Blocks 1 and 2 compared to patients. Thus patients had a lower “baseline” against which to exhibit a change in DTD with increasing cost. However, we emphasize that an ideal Bayesian decision agent would still sample fewer information than the average control or patient in Blocks 3 and 4 (in Block 4, the ideal Bayesian agent decides after the first fish, whereas our human participants sampled more). This indicates that potential floor effects may not be responsible for the decreased reduction of DTD in patients compared to controls. Together with our modeling results, this finding supports the hypothesis that, independent of the objective cost value of information, patients with early psychosis experience information sampling as more costly than controls do.

In this novel version of the beads task using blocks with explicit costs, JTC can be an advantageous strategy, because sampling a large amount of very costly information would cancel out the potential gain due to correctly identifying the lake. Consistent with this, group differences were especially strong in the first two blocks, where information sampling was free. In Block 3, when there was only a small cost of information, both groups responded to that change by lowering the number of fish sampled. The difference between the groups was marginally significant on Block 3, where patients still applied fewer DTD, p = 0.06. However, further increasing the information sampling cost completely abolished the group differences. Our data furthermore show that patients were significantly worse in overall accuracy (i.e., probability of being correct at the time of making the decision) and total points won, indicating the application of an unsuccessful strategy. In general, these results show that healthy controls were more flexible in adapting their information sampling to the changed task blocks. Controls increased the number of fish sampled when there was a reward for the correct decision and decreased it when information sampling had a cost. Patients also decreased the number of DTD when information sampling became more costly, but not as much as the controls, suggesting that the patients view information sampling generally as costly, somewhat independent of the actual value and the feedback. The results on Blocks 1 and 2, furthermore, indicate that psychosis patients have difficulties integrating feedback appropriately to update their future decisions. This is similar to results we reported in a recent study on the win-stay/lose-shift behavior in a partially overlapping early-psychosis group (Ermakova, Knolle et al., 2018).

The variance in DTD across the two groups was similar, and the ICCs were all greater than 0.94, indicating consistent decision-making behavior across the 10 trials in each block and within each group. If patients were acting more randomly, they would apply noisier and more variable decision-making behavior, but we did not observe this, which is in contrast to Moutoussis et al. (2011). Concluding from their Bayesian modeling data, they proposed that patients had more noise in their responses, leading to the reduced number of DTD. When modeling our data in a similar way, we found a difference between the groups in the perceived cost of information sampling, but less evidence in differences in the noise of decision-making behavior. We suggest that the contrast between our findings and those of Moutoussis et al. (2011) may be due to the differences in the patient groups used in the two studies. Whereas Moutoussis et al. used chronic, mainly schizophrenic, patients with a potential neuropsychological decline and a lower IQ (of 92), our study used patients at early stages of psychosis with preserved cognitive functioning (IQ 102). Severity of psychotic symptoms was related to DTD and cost parameters in our sample; however, as all our participants were in the early stages of illness, we were not able to explore relationships with chronicity statistically. We did, however, conduct secondary analyses to examine subgroup differences within our patient sample. On Block 1 DTD and cost parameters, the patients with confirmed psychotic illness (FEP) had the most pronounced differences from controls, whereas regarding the noise parameter, there was little difference between the patient groups, and indeed, the ARMS group, with milder symptoms, had slightly higher noise parameters. Differences in task design may also contribute to differences in the results from Moutoussis et al. Block 1 was most similar to that previous study: It differed in the type of test (computerized fishing emulation test vs. the classic test using actual beads and actual jars) and in providing feedback (i.e., “correct” or “incorrect”) after each decision, as well as in showing the sequence of fish drawn in each trial. These changes in our task potentially assisted patients (e.g., if some patients had memory deficits), which might also contribute to why we did not observe robust differences in the noise parameter in Block 1. When analyzing the objective cost of information sampling in Block 3, we found that patients slightly overestimated the cost, while the controls underestimated it. Furthermore, key contributors to the best-fit parameters may be those who jump to conclusions most: participants who consistently made a decision after viewing only one fish. The proportion of those individuals was significantly higher among the patients.

The percentage of people with psychosis who demonstrated JTC reasoning style in our study was relatively low, at 26%, compared to previous studies that report 40% of individuals (Dudley et al., 2011) or half to two-thirds of the individuals with delusions (So et al., 2010). Likewise, our objective DTD values were slightly higher than those in most other studies. This might be due to the use of early-stage psychosis patients and the presence of feedback in our task compared to previous studies. Feedback has been shown to increase information sampling and accuracy both in patients with delusions and in controls (Lincoln, Ziegler, Mehl, & Rief, 2010).

Additionally, we found a correlation between IQ and DTD in the first three blocks in the patient group, so we cannot completely exclude the contribution of intelligence to the information-gathering bias. Some argue that impaired executive functions or working memory deficits contribute to the JTC bias (Falcone et al., 2015; Garety et al., 2013). A low IQ could lead to a low tolerance for uncertainty and an equivalent high cost of the information sampling as well as the inability to integrate feedback to update future decisions. If more information is unpleasant, because it exceeds one’s capacity to utilize it, it could be viewed as costly. However, the fact that the correlation between IQ and DTD does not appear in controls argues against this. The groups were well matched on maternal education level (a proxy for premorbid or potential IQ), but the patient group had lower current IQ than controls (as expected, given that schizophrenia spectrum disorders are robustly associated with reduced current IQ compared to the general population). When we excluded the three controls with the highest IQ and the two patients with the lowest IQ, the DTD results were broadly unchanged. Computational modeling in Block 1 was not changed by these exclusions, although in Block 2, there were some differences. After the exclusions, in Block 2, the BIC values did not suggest evidence that the participants from different diagnostic groups are drawn from different populations. However, when we examined the individual-level modeled parameters, even after the exclusions, there was evidence that patients had higher estimated sampling costs compared to controls. Taken together, the findings indicate that lower IQ associated with psychosis is likely to contribute to the JTC bias but is unlikely solely to explain its existence in psychosis. The results of the study were unchanged when we excluded four patients taking antipsychotic (dopamine receptor antagonist) medication, which is consistent with two prior studies in healthy volunteers, suggesting that dopaminergic manipulations do not have a large effect on information sampling (Andreou, Moritz, Veith, Veckenstedt, & Naber, 2013; Ermakova, Ramachandra, Corlett, Fletcher, & Murray, 2014).

When comparing our results to those of Moutoussis et al. (2011), the use of the same computational model, implemented in the same way, is advantageous. However, we note that there are limitations in the approach. For example, the use of a gamma distribution may not be optimal in the case of values near zero (Moutoussis et al., 2011). It could be hypothesized that the degree of cognitive noise should be a constant per individual and that thus it would be more parsimonious to apply the same noise parameters for a given subject across blocks. Our data suggest that this is not the case. Noise is highly correlated across blocks, just as cost is. However, the experimental manipulation of block had a highly significant effect on both cost (as intended by our paradigm design) and noise (an incidental effect). Noise parameters were reduced in Block 2, where a correct decision is explicitly rewarded, compared to Block 1, where it is not (indicating that information sampling is not immutable but can be adaptively altered by psychological manipulation). Noise parameters were greater in Block 3 than in Block 2, presumably because the decision is more difficult in Block 3 (where participants need to balance the stated benefits and costs of sampling). When decisions reach a certain level of difficulty, participants may appear more random in their decision-making because they can no longer effectively utilize the information available.

Rather than focusing on ICD-10 or DSM–V schizophrenia patients, we studied a group of patients early in their course of psychosis. All patients in our study suffered from current psychotic symptoms. Psychosis is often viewed as an upper part of the continuum, ranging from rare occurrences of delusions or hallucinations at one end through individuals with regular “schizotypal” traits (van Os, Linscott, Myin-Germeys, Delespaul, & Krabbendam, 2009). Consistent with this approach, and with the theory that a JTC-style cognitive bias contributes to psychotic symptom formation (Huq et al., 1988), we found that patients with more severe positive symptoms sampled less information and had higher estimated sampling cost parameters. To investigate the idea of the psychosis continuum further, we looked at the correlations between information sampling and schizotypy characteristics in healthy volunteers. In this group, we found a negative correlation between the number of DTD and the scores on the distress and preoccupation subscales of the PDI, indicating that less information sampling is associated with higher scores. This is consistent with studies by Colbert and Peters (2002) and Lee, Barrowclough, and Lobban (2011), as well as the recent meta-analysis by Ross et al. (2015), in keeping with a continuum model of psychosis. Regarding modeled parameters in controls, estimated noise was associated with total PDI score, PDI distress, and PDI preoccupation, and estimated sampling costs were associated with PDI preoccupation and, marginally, with distress. This hints at the intriguing possibility that hasty decision-making due to cognitive noise may be a more important contributory factor to delusion-like thinking in the healthy population than it is to psychotic symptoms in psychotic illness, where hasty decision-making due to higher information sampling costs appears to be more important.

Summary

In summary, we found that early-psychosis patients demonstrate a hasty decision-making style compared with healthy volunteers, sampling significantly less information. This decision-making style was correlated with delusion severity, consistent with the possibility that it may be a cognitive mechanism contributing to delusion formation. Our data are not consistent with the account that patients sample less information because they are in general more noisy decision makers. Rather, our data suggest that patients with psychosis sample less information before making a decision because they attribute a higher cost to information sampling. Although psychosis patients were less able to adapt to the changing demands of the task, they did alter their decision-making style in response to the changing explicit costs of information, indicating that an impulsive decision-making style is not completely fixed in psychosis. This finding is consistent with the possibility that information sampling may be a treatment target, for example, for psychotherapy (Moritz et al., 2014), and that patients with psychosis may benefit in this neuropsychological domain, as they have in other domains, from cognitive scaffolding approaches exemplified in cognitive remediation therapy (Cella & Wykes, 2017).

AUTHOR CONTRIBUTIONS

Author roles: AE conceptualization, methodology, formal analysis, writing (original draft preparation, review, and editing); NG methodology, formal analysis, writing (review and editing); FK (methodology, formal analysis, writing (review and editing); AJ methodology, investigation, project administration, writing (review and editing); RA methodology, formal analysis, writing, review, and editing; PCF conceptualization, methodology, supervision writing (review and editing); MM methodology, analysis supervision, writing (original draft preparation, review, and editing); GKM conceptualization, project administration, methodology, formal analysis, supervision, funding acquisition, writing (original draft preparation, review, and editing).

FUNDING INFORMATION

Supported by a MRC Clinician Scientist (G0701911) and an Isaac Newton Trust award to GKM; by the University of Cambridge Behavioural and Clinical Neuroscience Institute, funded by a joint award from the Medical Research Council (G1000183) and Wellcome Trust (093875/Z/ 10/Z); by awards from the Wellcome Trust (095692) and the Bernard Wolfe Health Neuroscience Fund to PCF; by the Cambridgeshire and Peterborough NHS Foundation Trust and Cambridge NIHR Biomedical Research Centre, and by the Max Planck–UCL Centre for Computational Psychiatry and Ageing, a joint initiative of the Max Planck Society and University College London. MM also receives support from the UCLH Biomedical Research Centre and is funded staff in the “Neuroscience in Psychiatry Network,” Wellcome Strategic Award (095844/7/11/Z).

ACKNOWLEDGMENTS

The authors are grateful to clinical staff in CAMEO, Cambridgeshire and Peterborough NHS Trust, for help with participant recruitment, and to the study participants.

DATA AND ANALYSIS CODE

Code and de-identified decision making data are available at https://github.com/gm285/Ermakova2018CompPsy.

Supplementary Material

Contributor Information

Anna O. Ermakova, Unit for Social and Community Psychiatry, WHO Collaborating Centre for Mental Health Services Development, East London NHS Foundation Trust, London, UK

Nimrod Gileadi, Department of Psychiatry, School of Clinical Medicine, University of Cambridge, Cambridge, UK.

Franziska Knolle, Department of Psychiatry, School of Clinical Medicine, University of Cambridge, Cambridge, UK; Behavioural and Clinical Neuroscience Institute, University of Cambridge, Cambridge, UK.

Azucena Justicia, Cambridgeshire and Peterborough NHS Foundation Trust, Cambridge, UK; Hospital del Mar Medical Research Institute, CIBERSAM, Barcelona, Spain.

Rachel Anderson, School of Clinical Medicine, University of Cambridge, Cambridge, UK.

Paul C. Fletcher, Department of Psychiatry, School of Clinical Medicine, University of Cambridge, Cambridge, UK Behavioural and Clinical Neuroscience Institute, University of Cambridge, Cambridge, UK; Cambridgeshire and Peterborough NHS Foundation Trust, Cambridge, UK; Institute of Metabolic Science, University of Cambridge, Cambridge, UK.

Michael Moutoussis, Wellcome Centre for Human Neuroimaging and Max Planck Centre for Computational Psychiatry and Ageing, University College London, London, UK.

Graham K. Murray, Department of Psychiatry, School of Clinical Medicine, University of Cambridge, Cambridge, UK; Behavioural and Clinical Neuroscience Institute, University of Cambridge, Cambridge, UK; Cambridgeshire and Peterborough NHS Foundation Trust, Cambridge, UK.

REFERENCES

- Andreasen N. C. (1989). Scale for the Assessment of Negative Symptoms (SANS). British Journal of Psychiatry Supplement, 7, 49–58. [PubMed] [Google Scholar]

- Andreou C., Moritz S., Veith K., Veckenstedt R., & Naber D. (2013). Dopaminergic modulation of probabilistic reasoning and overconfidence in errors: A double-blind study. Schizophrenia Bulletin, 40, 558–565. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beck A. T., Steer R. A., & Brown G. K. (1996). Manual for the Beck Depression Inventory–II. San Antonio, TX: Psychological Corporation. [Google Scholar]

- Bentall R. P., & Swarbrick R. (2003). The best laid schemas of paranoid patients: Autonomy, sociotropy and need for closure. Psychology and Psychotherapy, 76(Pt. 2), 163–171. [DOI] [PubMed] [Google Scholar]

- Broome M. R., Johns L. C., Valli I., Woolley J. B., Tabraham P., Brett C., … McGuire P. K. (2007). Delusion formation and reasoning biases in those at clinical high risk for psychosis. British Journal of Psychiatry, 191(S51), 38–42. [DOI] [PubMed] [Google Scholar]

- Cattell R. B., & Cattell A. K. S. (1973). Measuring intelligence with the culture fair tests. Champaign, Illinois: Institute for Personality and Ability Testing. [Google Scholar]

- Cella M., & Wykes T. (2017). The nuts and bolts of cognitive remediation: Exploring how different training components relate to cognitive and functional gains. Schizophrenia Research. Advance publication [DOI] [PubMed] [Google Scholar]

- Colbert S. M., & Peters E. R. (2002). Need for closure and jumping-to-conclusions in delusion-prone individuals. Journal of Nervous and Mental Disease, 190(1), 27–31. [DOI] [PubMed] [Google Scholar]

- Corcoran R., Rowse G., Moore R., Blackwood N., Kinderman P., Howard R., … Bentall R. P. (2008). A transdiagnostic investigation of “theory of mind” and “jumping to conclusions” in patients with persecutory delusions. Psychological Medicine, 38, 1577–1583. [DOI] [PubMed] [Google Scholar]

- Dudley R., Shaftoe D., Cavanagh K., Spencer H., Ormrod J., Turkington D., & Freeston M. (2011). “Jumping to conclusions” in first-episode psychosis. Early Intervention in Psychiatry, 5(1), 50–56. [DOI] [PubMed] [Google Scholar]

- Dudley R., Taylor P., Wickham S., & Hutton P. (2016). Psychosis, delusions and the “jumping to conclusions” reasoning bias: A systematic review and meta-analysis. Schizophrenia Bulletin, 42, 652–665. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ermakova A. O., Gileadi N., Knolle F., Justicia A., Anderson R., Fletcher P. C., … Murray G. K. (2019). Appendix: Expectation-Maximization. Supplement to “Cost evaluation during decision-making in patients at early stages of psychosis.” Computational Psychiatry, 3, 18–39. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ermakova A. O., Gileadi N., Knolle F., Justicia A., Anderson R., Fletcher P. C., … Murray G. K. (2018). Code and de-identified decision making data for “Cost evaluation during decision-making in patients at early stages of psychosis.” GitHub https://github.com/gm285/Ermakova2018CompPsy [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ermakova A. O., Knolle F., Justicia A., Bullmore E. T., Jones P. B., Robbins T. W., … Murray G. K. (2018). Abnormal reward prediction-error signalling in antipsychotic naive individuals with first-episode psychosis or clinical risk for psychosis. Neuropsychopharmacology, 43, 1691–1699. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ermakova A. O., Ramachandra P., Corlett P. R., Fletcher P. C., & Murray G. K. (2014). Effects of methamphetamine administration on information gathering during probabilistic reasoning in healthy humans. PLoS One, 9(7), e102683. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Esslinger C., Braun U., Schirmbeck F., Santos A., Meyer-Lindenberg A., Zink M., & Kirsch P. (2013). Activation of midbrain and ventral striatal regions implicates salience processing during a modified beads task. PLoS One, 8(3), 0058536 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Evans S. L., Averbeck B. B., & Furl N. (2015). Jumping to conclusions in schizophrenia. Neuropsychiatric Disease and Treatment, 2015, 1615–1624. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Everitt B. S., & Skrondal A. (2010). The Cambridge dictionary of statistics (4th ed.). Cambridge, England: Cambridge University Press. [Google Scholar]

- Falcone M. A., Murray R. M., O’Connor J. A., Hockey L. N., Gardner-Sood P., Di Forti M., … Jolley S. (2015). Jumping to conclusions and the persistence of delusional beliefs in first episode psychosis. Schizophrenia Research, 165, 243–246. [DOI] [PubMed] [Google Scholar]

- Fine C., Gardner M., Craigie J., & Gold I. (2007). Hopping, skipping or jumping to conclusions? Clarifying the role of the JTC bias in delusions. Cognitive Neuropsychiatry, 12(1), 46–77. [DOI] [PubMed] [Google Scholar]

- Furl N., & Averbeck B. B. (2011). Parietal cortex and insula relate to evidence seeking relevant to reward-related decisions. Journal of Neuroscience, 31, 17572–17582. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Garety P. A., Bebbington P., Fowler D., Freeman D., & Kuipers E. (2007). Implications for neurobiological research of cognitive models of psychosis: A theoretical paper. Psychological Medicine, 37, 1377–1391. [DOI] [PubMed] [Google Scholar]

- Garety P. A., & Freeman D. (1999). Cognitive approaches to delusions: A critical review of theories and evidence. British Journal of Clinical Psychology, 38(Pt. 2), 113–154. [DOI] [PubMed] [Google Scholar]

- Garety P. A., & Freeman D. (2013). The past and future of delusions research: From the inexplicable to the treatable. British Journal of Psychiatry, 203, 327–333. [DOI] [PubMed] [Google Scholar]

- Garety P. A., Freeman D., Jolley S., Dunn G., Bebbington P. E., Fowler D. G., … Dudley R. (2005). Reasoning, emotions, and delusional conviction in psychosis. Journal of Abnormal Psychology, 114, 373–384. [DOI] [PubMed] [Google Scholar]

- Garety P. A., Hemsley D. R., & Wessely S. (1991). Reasoning in deluded schizophrenic and paranoid patients. Journal of Nervous and Mental Disease, 179, 194–201. [DOI] [PubMed] [Google Scholar]

- Garety P., Joyce E., Jolley S., Emsley R., Waller H., Kuipers E., … Freeman D. (2013). Neuropsychological functioning and jumping to conclusions in delusions. Schizophrenia Research, 150, 570–574. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hall R. C. W. (1995). Global assessment of functioning: A modified scale. Psychosomatics, 36, 267–275. [DOI] [PubMed] [Google Scholar]

- Huq S. F., Garety P. A., & Hemsley D. R. (1988). Probabilistic judgements in deluded and non-deluded subjects. Quarterly Journal of Experimental Psychology, Section A, 40, 801–812. [DOI] [PubMed] [Google Scholar]

- Huys Q. J. M., Eshel N., O’Nions E., Sheridan L., Dayan P., & Roiser J. P. (2012). Bonsai trees in your head: How the Pavlovian system sculpts goal-directed choices by pruning decision trees. PLoS Computational Biology, 8(3), 1002410 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huys Q. J. M., Lally N., Faulkner P., Eshel N., Seifritz E., Gershman S. J., … Roiser J. P. (2015). Interplay of approximate planning strategies. Proceedings of the National Academy of Sciences, 112, 3098–3103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kay S. R., Fiszbein A., & Opler L. A. (1987). The Positive and Negative Syndrome Scale (PANSS) for schizophrenia. Schizophrenia Bulletin, 13, 261–276. [DOI] [PubMed] [Google Scholar]

- Lee G., Barrowclough C., & Lobban F. (2011). The influence of positive affect on jumping to conclusions in delusional thinking. Personality and Individual Differences, 50, 717–722. [Google Scholar]

- Lincoln T. M., Ziegler M., Mehl S., & Rief W. (2010). The jumping to conclusions bias in delusions: Specificity and changeability. Journal of Abnormal Psychology, 119, 40–49. [DOI] [PubMed] [Google Scholar]

- Menon M., Mizrahi R., & Kapur S. (2008). “Jumping to conclusions” and delusions in psychosis: Relationship and response to treatment. Schizophrenia Research, 98, 225–231. [DOI] [PubMed] [Google Scholar]

- Moritz S., Andreou C., Schneider B. C., Wittekind C. E., Menon M., Balzan R. P., & Woodward T. S. (2014). Sowing the seeds of doubt: A narrative review on metacognitive training in schizophrenia. Clinical Psychology Review, 34, 358–366. [DOI] [PubMed] [Google Scholar]

- Morrison A. P., French P., Stewart S. L. K., Birchwood M., Fowler D., Gumley A. I., … Dunn G. (2012). Early detection and intervention evaluation for people at risk of psychosis: Multisite randomised controlled trial. British Medical Journal, 344, e2233 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Moutoussis M., Bentall R. P., El-Deredy W., & Dayan P. (2011). Bayesian modelling of jumping-to-conclusions bias in delusional patients. Cognitive Neuropsychiatry, 16, 422–447. [DOI] [PubMed] [Google Scholar]

- Peters E., Joseph S., Day S., & Garety P. (2004). Measuring delusional ideation: The 21-item Peters et al. Delusions Inventory (PDI). Schizophrenia Bulletin, 30, 1005–1022. [DOI] [PubMed] [Google Scholar]

- Ross R. M., McKay R., Coltheart M., & Langdon R. (2015). Jumping to conclusions about the beads task? a meta-analysis of delusional ideation and data-gathering. Schizophrenia Bulletin, 41, 1183–1191. [DOI] [PMC free article] [PubMed] [Google Scholar]

- So S. H., Garety P. A., Peters E. R., & Kapur S. (2010). Do antipsychotics improve reasoning biases? A review. Psychosomatic Medicine, 72, 681–693. [DOI] [PubMed] [Google Scholar]

- So S. H.-W., Siu N. Y.-F., Wong H.-L., Chan W., & Garety P. A. (2015). “Jumping to conclusions” data-gathering bias in psychosis and other psychiatric disorders: Two meta-analyses of comparisons between patients and healthy individuals. Clinical Psychology Review. [DOI] [PubMed] [Google Scholar]

- Speechley W. J., Whitman J. C., & Woodward T. S. (2010). The contribution of hypersalience to the “jumping to conclusions” bias associated with delusions in schizophrenia. Journal of Psychiatry and Neuroscience, 35, 7–17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- van Os J., Linscott R. J., Myin-Germeys I., Delespaul P., & Krabbendam L. (2009). A systematic review and meta-analysis of the psychosis continuum: Evidence for a psychosis proneness–persistence–impairment model of psychotic disorder. Psychological Medicine, 39, 179–195. [DOI] [PubMed] [Google Scholar]

- Warman D. M., Lysaker P. H., Martin J. M., Davis L., & Haudenschield S. L. (2007). Jumping to conclusions and the continuum of delusional beliefs. Behaviour Research and Therapy, 45, 1255–1269. [DOI] [PubMed] [Google Scholar]

- Yung A. R., Yuen H. P., McGorry P. D., Phillips L. J., Kelly D., Dell’Olio M., … Buckby J. (2005). Mapping the onset of psychosis: The comprehensive assessment of at risk mental states. Schizophrenia Research, 39, 964–971. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.