Abstract

Importance

Surgeons are increasingly interested in using mobile and online applications with wound photography to monitor patients after surgery. Early work using remote care to diagnose surgical site infections (SSIs) demonstrated improved diagnostic accuracy using wound photographs to augment patients’ electronic reports of symptoms, but it is unclear whether these findings are reproducible in real-world practice.

Objective

To determine how wound photography affects surgeons’ abilities to diagnose SSIs in a pragmatic setting.

Design, Setting, and Participants

This prospective study compared surgeons’ paired assessments of case vignettes of patients who had undergone abdominal surgery, with vs without wound photography, for detection of SSIs. Data for case vignettes were collected prospectively from May 1, 2007, to January 31, 2009, at Erasmus University Medical Center, Rotterdam, the Netherlands, and from July 1, 2015, to February 29, 2016, at Vanderbilt University Medical Center, Nashville, Tennessee. The surgeons were identified through the American Medical Association Physician Masterfile on the basis of a self-designated specialty of general, abdominal, colorectal, oncologic, or vascular surgery, and they completed internet-based assessments from May 21 to June 10, 2016.

Intervention

Surgeons reviewed online clinical vignettes with or without wound photography.

Main Outcomes and Measures

Surgeons’ diagnostic accuracy, sensitivity, specificity, confidence, and proposed management with respect to SSIs.

Results

A total of 523 surgeons (113 women and 410 men; mean [SD] age, 53 [10] years) completed a mean of 2.9 clinical vignettes. For the diagnosis of SSIs, the addition of wound photography did not change accuracy (863 of 1512 [57.1%] without and 878 of 1512 [58.1%] with photographs). Photographs decreased sensitivity (from 0.58 to 0.50) but increased specificity (from 0.56 to 0.63). In 415 of 1512 cases (27.4%), the addition of wound photography changed the surgeons’ assessment (215 of 1512 [14.2%] changed from incorrect to correct and 200 of 1512 [13.2%] changed from correct to incorrect). Surgeons reported greater confidence when vignettes included a wound photograph than when they did not, regardless of whether they correctly identified an SSI (median, 8 [interquartile range, 7-9] with vs median, 8 [interquartile range, 6-9] without photographs; P < .001). However, they were also more likely to undertriage patients when vignettes included a wound photograph, again regardless of whether they correctly identified an SSI.

Conclusions and Relevance

In a practical simulation, wound photography increased specificity and surgeon confidence, but worsened sensitivity for detection of SSIs. Remote evaluation of patient-generated wound photographs may not accurately reflect the clinical state of surgical incisions. Effective widespread implementation of remote postoperative assessment with photography may require additional development of tools, participant training, and mechanisms to verify image quality.

This simulation study uses case vignettes to determine how wound photography affects surgeons’ abilities to diagnose surgical site infections in a pragmatic setting.

Key Points

Question

How does the addition of wound photography affect the ability of surgeons to diagnose surgical site infections using an online monitoring tool?

Findings

This simulation study found that wound photography increased specificity but worsened sensitivity and did not improve accuracy for the diagnosis of surgical site infections. Surgeons reported greater confidence but were more likely to undertriage patients when vignettes included a wound photograph, regardless of whether they correctly diagnosed a surgical site infection.

Meaning

Wound photography facilitates online postoperative wound assessment, but current practices need refinement to ensure that critical issues are not missed.

Introduction

Supported by the widespread availability of smartphones, telemedicine applications for perioperative care have proliferated.1 Alongside the development and testing of formal tools for remote care, many surgeons are also informally using electronic messaging and smartphone photography to evaluate surgical incisions.2,3,4,5 These strategies could increase patient access while improving surgeons’ abilities to survey and quickly detect developing complications at surgical sites, mitigating morbidity and hospital readmission.

When surgeons provide remote postoperative care, it is generally believed that the addition of wound photography helps them determine whether a patient’s symptoms indicate a surgical site infection (SSI) or another wound complication. In studies performed in controlled settings, the addition of wound photography alongside clinical data increases diagnostic accuracy, improves clinicians’ confidence in a diagnosis, and decreases overtreatment of SSIs.4,6,7

A limitation of prior work is that study contexts have differed both from the informal settings in which remote wound assessment is currently used and from the settings in which it would likely be used if it were widely adopted by surgical practices. Specifically, images in prior work have typically been obtained in a uniform fashion by trained photographers. Furthermore, evaluators have been highly skilled practitioners with experience with and specific interest in SSIs.5,6,7,8 Finally, existing studies examined the diagnosis of an SSI as the sole clinical outcome. Other postoperative wound conditions may also require in-person assessment and treatment; these have been categorized as surgical site occurrences (SSOs), which include SSIs as well as several non-SSI entities, such as wound seroma, hematoma, cellulitis, wound necrosis, stitch abscess, and superficial wound dehiscence.9

Given the controlled settings and narrow outcome measures in prior studies, it is not clear whether similar outcomes of remote postoperative assessment can be achieved in clinical practice. We aimed to evaluate the ability of surgeons who are untrained in remote assessment to diagnose SSIs and other wound events using online patient reports of symptoms, with or without the addition of wound photographs. In addition, to explore how variation in image quality affects the effectiveness of remote assessment, we compared surgeons’ diagnostic performance using data generated by patients with their performance using data generated by trained clinicians. We hypothesized that, in a pragmatic context, wound photography would improve the accuracy of surgeons’ diagnosis of an SSI and/or an SSO compared with symptom reports alone. We further hypothesized that, owing to variation in data quality, patient-generated data (symptom reports and wound photography) would be inferior to clinician-generated data for surgeons’ diagnosis of an SSI and/or an SSO.

Methods

Target Population and Survey Administration

We used the American Medical Association Physician Masterfile to identify surgeons practicing in the United States who manage postoperative abdominal wounds based on their designated specialty of abdominal surgery, colon and rectal surgery, general surgery, surgical oncology, or vascular surgery. Direct Medical Data housed the Physician Masterfile and managed electronic message invitations containing a link to the survey, which was administered via Vanderbilt REDCap (Research Electronic Data Capture).10 Study personnel did not have direct access to names or contact details for invited participants. Direct Medical Data provided data regarding the number of emails that were opened and the number of links that were accessed. The study was approved by the Vanderbilt University Medical Center institutional review board and was deemed exempt by the University of Washington given its use of an established, deidentified data set.

Collection of Patient Data

The survey consisted of deidentified case vignettes from actual postoperative patients. The vignettes were built from patient-generated data collected prospectively at Vanderbilt University Medical Center, Nashville, Tennessee, and from clinician-generated data initially collected in a prospective cohort study at Erasmus University Medical Center, Rotterdam, the Netherlands, and curated at the University of Washington, Seattle.8 The clinician-generated data included reports of patients’ symptoms and wound photographs obtained by clinician researchers during participants’ inpatient stays, which ranged from 2 to 21 postoperative days.8

Patient-generated data were obtained from postoperative patients presenting to an outpatient surgical clinic at Vanderbilt University Medical Center. Before their visit, patients completed an online assessment, following standardized instructions (eFigure 1 in the Supplement), that had been developed and tested in a previous pilot study.2 For each case, the clinical wound diagnosis (SSI, SSO, or normal) and treatment (eg, wound opening, antibiotic prescription, or no wound care) as documented by the treating clinician were considered the criterion standard when determining diagnostic accuracy, sensitivity, and specificity.

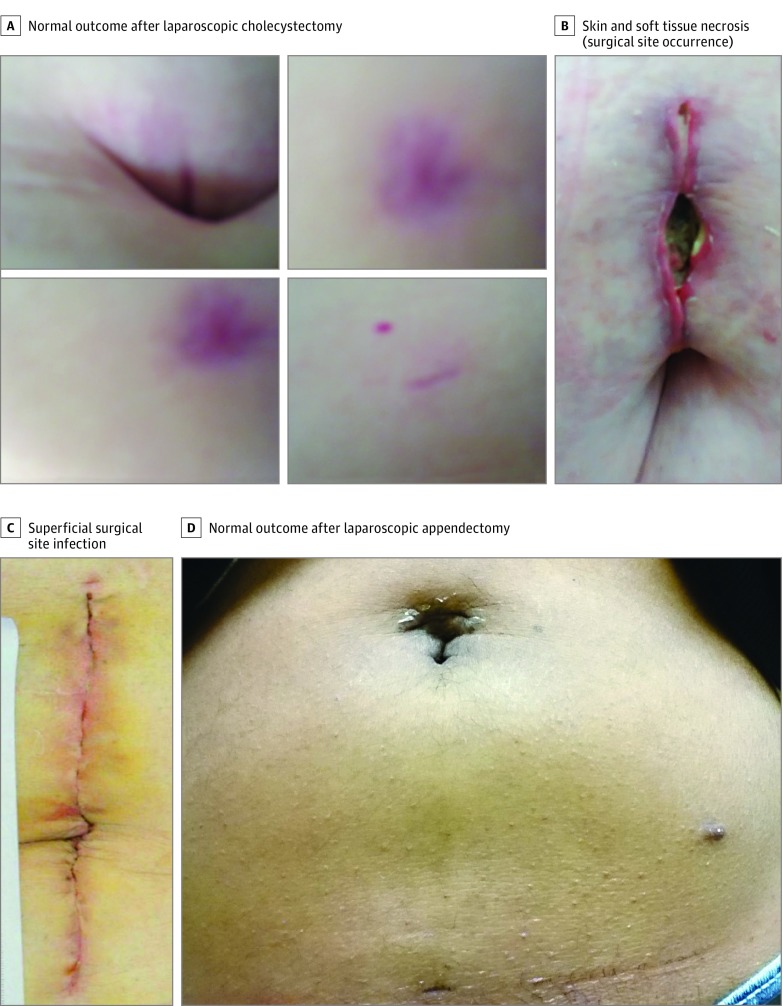

Development of Survey Using Patient Data

We created 8 unique surveys, each containing 3 different case vignettes. Included cases were selected by study personnel to reflect a broad range of postoperative wound abnormalities and operation types, as well as the observed variation in data quality (Figure 1 and eTable 1 in the Supplement). For each case, respondents initially received only the patient’s symptom report and categorized the patient as having an SSI (yes or no) and/or another wound abnormality that required further evaluation and/or treatment (yes or no); the latter question served as a lay definition of an SSO (eFigure 2 in the Supplement). Respondents rated their confidence in the diagnosis on a scale from 0 (least confident) to 10 (most confident). The National Surgical Quality Improvement Program definition of an SSI was included on each survey for reference (eTable 2 in the Supplement).11 Surgeons then identified their next step in management, with options ranging from “reassure patient” to “instruct patient to be seen in the emergency department as soon as possible.” Participants were then presented with the same case, this time including both the symptom report and a wound photograph, and responded to the same series of questions. Respondents could not go back and change prior answers based on the additional data. When tested on an internal sample of surgeons, the surveys took a mean of 10.5 minutes to complete.

Figure 1. Examples of Wound Photographs Included in Case Vignettes.

Outcomes and Statistical Analysis

Using McNemar χ² tests to account for intraphysician correlation, we compared the primary outcome, diagnostic accuracy (percentage correct), of surgeons’ assessments of SSIs made using symptom reports alone vs symptom reports plus wound photographs.12,13 Each surgeon’s assessment of each vignette was considered a unique, but conditionally dependent, test. We also determined the sensitivity and specificity of each remote diagnosis relative to the criterion standard of clinical diagnosis. A secondary outcome was the assessment of an SSO (eTable 3 and eFigure 3 in the Supplement).9 Because an SSI is included in the definition of an SSO, SSI cases were automatically coded as SSOs. Likewise, when surgeons responded that an SSI was present, this response was counted as an SSO.
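The paired comparison can be sketched in Python. This is a minimal illustration, not the study’s analysis code: it assumes the classic McNemar statistic without continuity correction (the authors do not state which variant they used), and the function name `mcnemar` is hypothetical. Only discordant pairs enter the statistic, so the inputs are the counts of assessments that changed from incorrect to correct and from correct to incorrect; the example plugs in the discordant counts reported in the Results, and the values it prints are a derived illustration rather than P values reported in this study.

```python
import math


def mcnemar(b: int, c: int) -> tuple[float, float]:
    """McNemar chi-square test on paired binary outcomes (1 df, no continuity correction).

    b: pairs that changed from incorrect to correct
    c: pairs that changed from correct to incorrect
    Concordant pairs (still correct / still incorrect) do not enter the statistic.
    """
    if b + c == 0:
        raise ValueError("McNemar test is undefined without discordant pairs")
    chi2 = (b - c) ** 2 / (b + c)
    # Upper tail of a chi-square distribution with 1 df: P(X > x) = erfc(sqrt(x / 2))
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Discordant counts reported in the Results (illustration only)
for label, b, c in [("SSI", 215, 200), ("SSO", 105, 324)]:
    chi2, p_value = mcnemar(b, c)
    print(f"{label}: chi2 = {chi2:.2f}, P = {p_value:.2g}")
```

Because only discordant pairs count, the near balance between 215 and 200 changed SSI assessments gives a small χ² (about 0.54), whereas the 105 vs 324 imbalance for SSOs gives a very large one.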

In addition, we calculated sensitivity, specificity, and accuracy of surgeons’ assessments using patient-generated vs clinician-generated data. Using Wilcoxon rank sum tests, we also compared surgeons’ confidence in assessments with vs without wound photographs. Surgeons’ reported next steps in management with and without wound photography were compared using McNemar χ² tests. We categorized surgeons’ reported next steps as an escalation of care (prescribe antibiotics or perform in-person assessment) vs none (reassure or continue electronic monitoring). All P values were from 2-sided tests and results were deemed statistically significant at P < .05.

Results

The survey was sent to 23 619 unique individuals via electronic message; 3927 of these individuals (16.6%) opened the email. A total of 662 individuals responded to the survey, of whom 523 (79.0%) completed at least 1 case and 139 (21.0%) discontinued before finishing the first case, for an overall participation rate of 2.2% and a participation rate of 13.3% among surgeons who opened the study email (eFigure 4 in the Supplement). Surgeons who completed at least 1 case (defined as completing both the symptom report alone and the symptom report with photographs) were included in the descriptive statistics and primary analyses. Of these, 486 of 523 surgeons (92.9%) completed all 3 cases; in total, respondents completed 1512 case assessments. Respondents had a mean (SD) age of 53 (10) years and had practiced surgery for a mean (SD) of 20 (10) years. Most of the respondents were white men practicing general surgery in a community setting (Table 1).

Table 1. Demographic Characteristics of Participating Surgeons.

| Characteristic | No. (%) (N = 523) |
|---|---|
| Age, mean (SD), y | 53 (10) |
| Female sex | 113 (21.6) |
| Time in practice, mean (SD), y | 20 (10) |
| Primary practice setting | |
| University hospital | 130 (24.9) |
| Community hospital | 234 (44.7) |
| Community hospital, university-affiliated | 123 (23.5) |
| Other^a | 30 (5.7) |
| Surgical specialty | |
| General surgery | 303 (57.9) |
| Vascular or wound surgery | 73 (14.0) |
| Surgical oncology | 51 (9.8) |
| Colorectal surgery | 33 (6.3) |
| Trauma surgery | 17 (3.3) |
| Transplant surgery | 10 (1.9) |
| Other^b | 35 (6.7) |
| Primarily elective practice | 463 (88.5) |
| English as primary language | 501 (95.8) |
| Race/ethnicity | |
| White | 429 (82.0) |
| Asian or Pacific Islander | 30 (5.7) |
| Hispanic, Latino/a, of Spanish origin | 29 (5.5) |
| Black | 13 (2.5) |
| American Indian or Alaska Native | 2 (0.4) |
| Prefer not to respond | 21 (4.0) |
| Time of first postoperative follow-up | |
| 1 wk | 215 (41.1) |
| 2 wk | 246 (47.0) |
| ≥3 wk | 60 (11.5) |
| No scheduled visits | 2 (0.4) |
| No. of surgical site infections seen per month, median (IQR) [absolute range] | 1 (1-2) [0-25] |
| No. of wound photographs seen per month, median (IQR) [absolute range] | 1 (0-3) [0-110] |

Abbreviation: IQR, interquartile range.

^a Veterans Affairs hospital (6); military hospital (1); academic public hospital (1), government hospital (1), or Indian Health Service (2); nonuniversity academic tertiary care (2); private office (3); retired (2); critical access hospital (1), rural hospital (1), nursing home or long-term acute care (1), large academic multispecialty clinic (1), wound care (1), or not specified (7).

^b Bariatric (4), breast (2), burn (1), cardiothoracic (3), chronic orofacial pain (1), endocrine (1), gynecologic oncology (6), obstetrics and gynecology (1), surgical critical care (4), oral and maxillofacial surgery (1), ophthalmology (1), orthopedic trauma (1), plastic and reconstructive surgery (2), urgent care (2), urology (1), wound care and vascular (1), wound care (2), pancreas or hepatobiliary (1), or pediatric surgery (1).

Overall Accuracy, Sensitivity, and Specificity

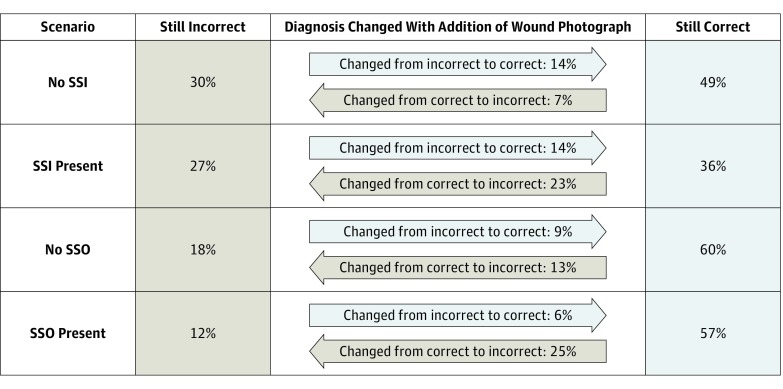

When diagnosing SSIs, surgeons were correct in 863 of 1512 cases (57.1%) with symptom reports alone and in 878 of 1512 cases (58.1%) with symptom reports plus wound photographs. Across the 24 case vignettes, accuracy ranged from 1.7% (1 correct of 59 responses) to 100% (66 correct of 66 responses). Specifically, for cases in which an SSI was present (n = 601), surgeons were correct in 351 of 601 cases with symptom reports alone (58.4%) and in 300 of 598 cases with symptom reports plus wound photographs (50.2%) (P < .001) (Table 2). For cases without an SSI (n = 911), surgeons were correct in 512 cases with symptom reports alone (56.2%) and in 578 cases with symptom reports plus wound photographs (63.4%) (P < .001). The addition of wound photographs resulted in a change in the surgeons’ assessment of SSIs in 415 of 1512 cases (27.4%; 215 of 1512 [14.2%] changed from incorrect to correct and 200 of 1512 [13.2%] changed from correct to incorrect) (Figure 2). Relative to the criterion standard of clinical diagnosis, sensitivity for a diagnosis of an SSI with symptom reports alone was 0.58, and specificity was 0.56. With the addition of wound photography, sensitivity was slightly worse (0.50) and specificity was slightly better (0.63) (Table 2).

Table 2. Sensitivity and Specificity of Symptom Reports Alone vs Symptom Reports Plus Wound Photograph(s) for Diagnosis of SSI and SSO Relative to Actual Clinical Diagnosis.

| Surgeon assessment | Clinical diagnosis: SSI | Clinical diagnosis: no SSI | Total No. of cases |
|---|---|---|---|
| SSI, symptom report alone | | | |
| SSI | 351 | 399 | 750 |
| No SSI | 250 | 512 | 762 |
| Total^a | 601 | 911 | 1512 |
| SSI, symptom report plus photograph(s) | | | |
| SSI | 300 | 333 | 633 |
| No SSI | 298 | 578 | 876 |
| Total^b | 598 | 911 | 1509 |
| | Clinical diagnosis: SSO | Clinical diagnosis: no SSO | |
| SSO, symptom report alone | | | |
| SSO | 914 | 105 | 1019 |
| No SSO | 209 | 284 | 493 |
| Total^c | 1123 | 389 | 1512 |
| SSO, symptom report plus photograph(s) | | | |
| SSO | 710 | 120 | 830 |
| No SSO | 410 | 269 | 679 |
| Total^d | 1120 | 389 | 1509 |

Abbreviations: SSI, surgical site infection; SSO, surgical site occurrence.

^a Sensitivity, 0.58; specificity, 0.56; 57% correct.

^b Sensitivity, 0.50; specificity, 0.63; 58% correct.

^c Sensitivity, 0.81; specificity, 0.73; 79% correct.

^d Sensitivity, 0.63; specificity, 0.69; 65% correct.
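Sensitivity, specificity, and accuracy follow directly from the counts in Table 2, with the treating clinician’s diagnosis as the criterion standard. The sketch below is a minimal illustration; the `TwoByTwo` class and its field names are hypothetical conveniences, not part of the study’s analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Surgeon's remote assessment (rows) vs clinical criterion standard (columns)."""
    tp: int  # surgeon says present, clinically present
    fp: int  # surgeon says present, clinically absent
    fn: int  # surgeon says absent, clinically present
    tn: int  # surgeon says absent, clinically absent

    @property
    def sensitivity(self) -> float:
        # Share of clinically present cases that the surgeon identified
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        # Share of clinically absent cases that the surgeon ruled out
        return self.tn / (self.tn + self.fp)

    @property
    def accuracy(self) -> float:
        # Share of all assessments that agreed with the clinical diagnosis
        return (self.tp + self.tn) / (self.tp + self.fp + self.fn + self.tn)


# Counts copied from Table 2
tables = {
    "SSI, symptom report alone": TwoByTwo(tp=351, fp=399, fn=250, tn=512),
    "SSI, plus photograph(s)": TwoByTwo(tp=300, fp=333, fn=298, tn=578),
    "SSO, symptom report alone": TwoByTwo(tp=914, fp=105, fn=209, tn=284),
    "SSO, plus photograph(s)": TwoByTwo(tp=710, fp=120, fn=410, tn=269),
}

for label, t in tables.items():
    print(f"{label}: sensitivity {t.sensitivity:.2f}, "
          f"specificity {t.specificity:.2f}, accuracy {t.accuracy:.0%}")
```

Running it reproduces the sensitivity, specificity, and percentage-correct values in the Table 2 footnotes; note that the photograph-based denominators are 1509 rather than 1512 assessments.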

Figure 2. Association of Wound Photograph With Correct Diagnosis.

“Still Incorrect” indicates that the response was incorrect using descriptions alone and remained incorrect with the addition of wound photograph(s); “Still Correct” indicates that the response was correct using descriptions alone and remained correct with the addition of wound photograph(s). SSI indicates surgical site infection; and SSO, surgical site occurrence.

For the diagnosis of an SSO (1123 cases with and 389 cases without an SSO), surgeons’ overall accuracy was 79.2% (1198 of 1512) with symptom reports alone (range by case, 1.7% [1 of 59 correct] to 100% [68 of 68 correct]) and 64.9% (979 of 1509) with symptom reports plus wound photograph(s) (range, 8.5% [5 of 59 correct] to 96.7% [58 of 60 correct]) (Table 2). For cases without an SSO (n = 389), surgeons were correct in 284 cases with symptom reports alone (73.0%) and in 269 cases with symptom reports plus wound photographs (69.2%). The addition of wound photographs changed surgeons’ assessment in 429 of 1512 cases (28.4%; 105 of 1512 [6.9%] changed from incorrect to correct and 324 of 1512 [21.4%] changed from correct to incorrect) (Figure 2). Symptom reports alone yielded an overall sensitivity of 0.81 and specificity of 0.73. The addition of wound photography decreased sensitivity to 0.63 and specificity to 0.69 for SSOs (Table 2).

Surgeon Confidence and Next Steps

Surgeons’ confidence in a diagnosis of SSI was generally high and increased with the addition of wound photography (without photography: median, 8; interquartile range [IQR], 6-9; with photography: median, 8; IQR, 7-9; P < .001). Likewise, for a diagnosis of an SSO, confidence was high and increased with the addition of wound photography (without photography: median, 7; IQR, 5-9; with photography: median, 8; IQR, 7-9; P < .001). Confidence increased with the addition of wound photography regardless of whether the surgeon’s assessment was correct (SSI: median, 8; IQR, 7-9 with photography vs 8; IQR, 6-9 without photography; P < .001; SSO: median, 8; IQR, 6-9 with photography vs 7; IQR, 5-9 without photography; P < .001) or incorrect (SSI: median, 9; IQR, 7-10 with photography vs 8; IQR, 6-9 without photography; P < .001; SSO: median, 8; IQR, 7-10 with photography vs 8; IQR, 6-9 without photography; P < .001).

Association of Wound Photography With Proposed Treatment

In the absence of an SSI, the addition of wound photography decreased the frequency of escalation of care from 629 of 911 cases (69.0%) to 538 of 911 cases (59.1%) (P < .001) (Table 3). When an SSI was present, the addition of wound photographs alongside symptom reports also decreased the percentage of assessments recommending escalation of care (from 86.2% [518 of 601 cases] to 75.9% [454 of 598 cases]; P < .001). In the presence of an SSO, surgeons were likewise more likely to recommend monitoring (reassurance or electronic monitoring) and less likely to escalate care with the addition of a wound photograph (26.0% [291 of 1120] monitoring with a photograph vs 12.6% [142 of 1123] monitoring without a photograph; P < .001). If there was no SSO, the addition of wound photography did not affect the reported next step (monitoring recommended in 57.3% [223 of 389] with symptom reports alone and 58.1% [226 of 389] with the addition of wound photographs; P = .77).

Table 3. Surgeon-Reported Next Steps in Management.

| Clinical diagnosis | Escalate, No. of cases^a | Monitor, No. of cases^a | Total No. of cases | Undertriage, % of cases | Overtriage, % of cases |
|---|---|---|---|---|---|
| Symptom report alone for SSIs | | | | | |
| SSI | 518 | 83 | 601 | 5 | 42 |
| No SSI | 629 | 282 | 911 | | |
| Total | 1147 | 365 | 1512 | | |
| Symptom report plus photograph(s) for SSIs | | | | | |
| SSI | 454 | 144 | 598 | 10 | 36 |
| No SSI | 538 | 373 | 911 | | |
| Total | 992 | 517 | 1509 | | |
| Symptom report alone for SSOs | | | | | |
| SSO | 981 | 142 | 1123 | 9 | 11 |
| No SSO | 166 | 223 | 389 | | |
| Total | 1147 | 365 | 1512 | | |
| Symptom report plus photograph(s) for SSOs | | | | | |
| SSO | 829 | 291 | 1120 | 19 | 11 |
| No SSO | 163 | 226 | 389 | | |
| Total | 992 | 517 | 1509 | | |

Abbreviations: SSI, surgical site infection; SSO, surgical site occurrence.

^a Escalate includes prescribe antibiotics, see in clinic, or send to the emergency department; monitor includes reassure, perform electronic monitoring of wound symptoms, or perform electronic monitoring of wound photographs.
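The undertriage and overtriage percentages in Table 3 are consistent with the following reading, which is inferred from the counts rather than stated in the table: undertriage is a recommendation to monitor when the condition was present, overtriage is a recommendation to escalate when it was absent, and each is expressed as a percentage of all cases in that section. The hypothetical helper `triage_rates` below checks this reading against the table.

```python
def triage_rates(escalate_present: int, monitor_present: int,
                 escalate_absent: int, monitor_absent: int) -> tuple[float, float]:
    """Return (undertriage %, overtriage %) as percentages of all cases in a section.

    Undertriage: monitoring recommended although the condition was present.
    Overtriage: escalation recommended although the condition was absent.
    """
    total = escalate_present + monitor_present + escalate_absent + monitor_absent
    undertriage = 100 * monitor_present / total
    overtriage = 100 * escalate_absent / total
    return undertriage, overtriage


# Counts copied from Table 3: (escalate, monitor) when present, then when absent
sections = {
    "SSI, symptom report alone": (518, 83, 629, 282),
    "SSI, plus photograph(s)": (454, 144, 538, 373),
    "SSO, symptom report alone": (981, 142, 166, 223),
    "SSO, plus photograph(s)": (829, 291, 163, 226),
}

for label, counts in sections.items():
    under, over = triage_rates(*counts)
    print(f"{label}: undertriage {under:.0f}%, overtriage {over:.0f}%")
```

Under this reading, each computed value rounds to the corresponding entry in Table 3; for example, undertriage of SSIs roughly doubles (5% to 10%) once photographs are added.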

Patient- vs Clinician-Generated Data

For a diagnosis of SSI, accuracy was better overall with surgeons’ assessments of clinician-generated data than with their assessments of patient-generated data, using both symptom reports alone (503 of 730 [68.9%] vs 360 of 782 [46.0%]) and symptom reports plus wound photographs (475 of 727 [65.3%] vs 403 of 782 [51.5%]). In addition, accuracy varied less by case with clinician-generated data. For example, using symptom reports plus wound photographs, the 25th to 75th percentile for accuracy was 40% to 90% with clinician-generated data vs 24% to 84% with patient-generated data. Using clinician-generated data, we found that the addition of wound photography decreased sensitivity from 0.69 to 0.54 but increased specificity from 0.68 to 0.86 for the diagnosis of an SSI (P < .001 for both). Using patient-generated data, we found that the addition of wound photography increased sensitivity from 0.18 to 0.36 (P < .001) but that there was no difference in specificity (0.52 vs 0.55; P = .10).

Discussion

In this pragmatic simulation of remote postoperative assessment using case vignettes with or without wound photography, surgeons using symptom reports alone correctly diagnosed SSIs in 57% of cases and SSOs in 79% of cases. The addition of wound photographs decreased the sensitivity of surgeons’ assessments for both SSIs and SSOs. The addition of wound photographs increased specificity for the diagnosis of SSIs but not for SSOs. Surgeons reported greater confidence in their assessments when wound photographs were included alongside symptom reports, regardless of whether they made the correct diagnosis. They were also more likely to recommend reassurance or continued electronic monitoring rather than prescribe antibiotics or perform an in-person assessment after viewing wound photographs, even when an SSI or an SSO was present. These findings raise concerns about surgeons’ increasing practice of using wound photographs, often taken by patients with smartphones, to evaluate patients’ surgical sites during the postoperative period. Furthermore, these findings suggest that image-based approaches for remote postoperative assessment will need refinement before widespread implementation.

Our intent in this study was to evaluate current informal practices and to examine a pragmatic approach to the widespread implementation of remote postoperative care. Minimally trained patients and surgeons used the devices of their choice for image capture and review, which we believe best replicates current surgeon practices and is a potentially scalable strategy for implementing remote wound assessment in a large target population using existing resources. This approach differs from prior studies of the remote assessment of wounds. For example, in a similar study, clinician-generated images from inpatients were evaluated by members of the Surgical Infection Society, a group with specialized interest and expertise in surgical infections.7,8 In other resource-intensive studies, patient-generated data were obtained using electronic devices after extensive in-person training and remote coaching, and data were reviewed on standardized platforms by dedicated researchers.5,6,14,15 A final key difference was the inclusion of noninfectious wound complications (ie, SSOs) as an outcome of interest to capture nearly all potential problems at surgical sites that require in-person evaluation and treatment.

In this study, the overall accuracy of surgeons was lower than in prior studies, presumably owing to the use of patient-generated data and the more general population of surgeons sampled. The aforementioned study of surgical infection experts demonstrated accurate SSI detection in 67% of cases without photographs and in 76% of cases with photographs7 (vs 57% of cases without photographs and 58% of cases with photographs in our study). A study of vascular surgeons evaluating postoperative wounds found 66% to 95% agreement between in-person and remote assessments.6 In another vascular surgery sample, agreement between in-person and remote raters ranged from 0.27 to 0.90 (κ coefficient; 1 = perfect agreement and 0 = no better than chance) for various postoperative wound characteristics.4
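The κ coefficient quoted above corrects raw agreement for the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of agreement and p_e is the proportion expected from the raters’ marginal totals. The sketch below is a minimal illustration of Cohen’s κ; the function name and the agreement counts are hypothetical and are not data from any of the cited studies.

```python
def cohens_kappa(table: list[list[int]]) -> float:
    """Cohen's kappa for a square agreement table.

    table[i][j] = number of wounds that rater A placed in category i
    and rater B placed in category j.
    """
    n = sum(sum(row) for row in table)
    k = len(table)
    # Observed agreement: the diagonal of the table
    observed = sum(table[i][i] for i in range(k)) / n
    # Chance agreement: product of the two raters' marginal proportions
    row_totals = [sum(table[i]) for i in range(k)]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical counts for illustration only (not data from any cited study):
# rows = in-person rater, columns = remote rater; categories = infected, not infected
hypothetical = [[18, 7],
                [6, 69]]
print(f"raw agreement = {(18 + 69) / 100:.2f}, kappa = {cohens_kappa(hypothetical):.2f}")
```

With these made-up counts, raw agreement is 0.87 but κ is about 0.65, because the marginal totals imply that 63% agreement would be expected by chance alone.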

However, the observed variation between in-person and remote assessments, herein labeled as inaccuracy because in-person findings were considered a criterion standard, has been similarly detected between different surgeons evaluating the same wound in person. In the prior vascular surgery studies, agreement among in-person surgeons was similar (64%-85%)6 or only slightly better than between in-person and remote surgeons (κ = 0.44-0.93).4 Interrater agreement between 2 surgeons both making remote assessments varies similarly, with 3 different European studies demonstrating poor (κ = 0.24) to moderate (κ = 0.45-0.68) agreement among remote surgeons, which did not improve with the provision of a standard definition of SSI.8,16,17 Beyond interrater variability, the additional decrease in accuracy in our study may be explained by variation in data quality, as evidenced by the substantial differences in accuracy between data from patients and data from trained clinician researchers.

Given the apparent importance of data quality, an opportunity exists to improve the validity of surgeons’ remote evaluations by standardizing patient photography techniques using tutorials, coaching, or incorporating image-quality verification tools at the point of image capture.13 In addition, clinicians may benefit from focused training to assess image quality alongside content. On a broad scale, implementing these technological improvements and coaching patients and surgeons would require a significant investment, and it remains to be seen whether remote postoperative assessments will prove to be cost-effective.

It was surprising that, regardless of whether surgeons’ assessments were correct, the addition of wound photography increased their confidence and the likelihood that they would provide reassurance rather than request an in-person assessment. Sanger et al7 similarly found increased confidence with the addition of wound photographs, and the addition of photographs effectively decreased the rate of overtreatment while maintaining a stable rate of undertreatment. Others have also found that, despite variation in diagnostic accuracy, treatment recommendations using remote data have been largely concordant with those made during in-person evaluations.4 In contrast, our finding that the addition of a wound photograph decreased the likelihood of escalation of care in the presence of an SSI or an SSO is concerning. It is possible that surgeons in our study felt more comfortable recommending continued monitoring, particularly for cases in which a wound abnormality was present without apparent infection, because they believed that they could perform serial remote assessments over subsequent days. Although the option to perform serial assessments was not explicitly offered in these vignettes, it has been trialed by other developers of remote assessment tools.14,15

Limitations

There are several limitations to this study. Although the participation rate was within the expected range for a study using email recruitment methods, it remains small and increases the potential for selection bias. Although the case vignettes were developed using content from actual clinical cases, the surgeons’ assessments were not performed in the context of providing actual clinical care. In practice, surgeons have detailed knowledge about their patients’ comorbidities and the operations performed, which may influence their wound assessment or management recommendations. We used standard definitions of SSI and SSO and specifically included the National Surgical Quality Improvement Program definition of an SSI as a reference in the survey; however, the respondents may have applied alternative understandings of these diagnoses, which could have affected their diagnostic accuracy. Our use of a broader secondary outcome, an SSO (which encompasses an SSI), likely minimized the influence of definitional discordance in this study; indeed, we found greater accuracy for the assessment of an SSO than for the assessment of an SSI.

The study design used same-case comparisons, in which the participating surgeons viewed case vignettes initially containing symptom reports only and then viewed the same cases with the addition of wound photography. This intuitive approach to isolating the influence of wound photography on remote assessment may have introduced bias owing to sequential assessments. Finally, our oversampling of abnormal wounds does not reflect their true incidence in clinical practice, so study findings cannot be used to describe the incidence of wound events or the positive or negative predictive values of specific types of remote assessment for diagnosing SSIs and SSOs.
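Why oversampling rules out predictive values follows from Bayes’ theorem: positive and negative predictive values depend on prevalence as well as on sensitivity and specificity. The sketch below is illustrative only; the function name is hypothetical, it borrows the SSI-with-photograph sensitivity (0.50) and specificity (0.63) from Table 2, uses 0.40 as roughly the share of SSI cases in the vignette set (601 of 1512), and uses 0.05 as a purely hypothetical clinical prevalence.

```python
def predictive_values(sensitivity: float, specificity: float,
                      prevalence: float) -> tuple[float, float]:
    """Positive and negative predictive values via Bayes' theorem."""
    tp = sensitivity * prevalence              # true positives per patient screened
    fp = (1 - specificity) * (1 - prevalence)  # false positives
    fn = (1 - sensitivity) * prevalence        # false negatives
    tn = specificity * (1 - prevalence)        # true negatives
    return tp / (tp + fp), tn / (tn + fn)


# Sensitivity and specificity for SSI with photographs (Table 2); the
# prevalence values are illustrative (0.05 is hypothetical).
for prevalence in (0.40, 0.05):
    ppv, npv = predictive_values(0.50, 0.63, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.2f}, NPV {npv:.2f}")
```

Under these assumptions, the same sensitivity and specificity give a PPV of about 0.47 at 40% prevalence but below 0.07 at 5%, while the NPV rises from about 0.65 to about 0.96, so values estimated from an enriched vignette set would not transfer to routine practice.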

Conclusions

This work comes at a critical time when surgeons are beginning to incorporate telemedicine into perioperative care in both formal and informal ways. Surgeons may intuitively use image-based assessments of patients’ wounds, which appear to increase their confidence in diagnosing problems at surgical sites. Although such a practice may be helpful in ruling out an SSI, it does not necessarily improve on the current standard of electronic message or telephone-based triage without photography for determining which patients require an escalation of care. Inability to remotely detect an SSI or an SSO could create a false sense of reassurance, could cause additional morbidity for patients, and could increase the surgeon’s liability. For this reason, ongoing efforts are needed to improve remote assessment programs for use in the triage and treatment of postoperative patients.

eFigure 1. Patient Instruction Sheet (Example)

eFigure 2. Online Assessment Tool

eFigure 3. Examples of Case Vignettes of SSO

eFigure 4. Cohort Flow

eTable 1. Postoperative Case Vignettes Used for Online Assessments of Surgical Site Infection (SSI) and Surgical Site Occurrence (SSO)

eTable 2. Definition of Surgical Site Infection

eTable 3. Definition of Surgical Site Occurrence

References

- 1. Gunter RL, Chouinard S, Fernandes-Taylor S, et al. Current use of telemedicine for post-discharge surgical care: a systematic review. J Am Coll Surg. 2016;222(5):915-927. doi: 10.1016/j.jamcollsurg.2016.01.062

- 2. Kummerow Broman K, Oyefule OO, Phillips SE, et al. Postoperative care using a secure online patient portal: changing the (inter)face of general surgery. J Am Coll Surg. 2015;221(6):1057-1066. doi: 10.1016/j.jamcollsurg.2015.08.429

- 3. Sanger PC, van Ramshorst GH, Mercan E, et al. A prognostic model of surgical site infection using daily clinical wound assessment. J Am Coll Surg. 2016;223(2):259-270.e2. doi: 10.1016/j.jamcollsurg.2016.04.046

- 4. Wiseman JT, Fernandes-Taylor S, Gunter R, et al. Inter-rater agreement and checklist validation for postoperative wound assessment using smartphone images in vascular surgery. J Vasc Surg Venous Lymphat Disord. 2016;4(3):320-328.e2. doi: 10.1016/j.jvsv.2016.02.001

- 5. Wiseman JT, Fernandes-Taylor S, Barnes ML, Tomsejova A, Saunders RS, Kent KC. Conceptualizing smartphone use in outpatient wound assessment: patients' and caregivers' willingness to use technology. J Surg Res. 2015;198(1):245-251. doi: 10.1016/j.jss.2015.05.011

- 6. Wirthlin DJ, Buradagunta S, Edwards RA, et al. Telemedicine in vascular surgery: feasibility of digital imaging for remote management of wounds. J Vasc Surg. 1998;27(6):1089-1099. doi: 10.1016/S0741-5214(98)70011-4

- 7. Sanger PC, Simianu VV, Gaskill CE, et al. Diagnosing surgical site infection using wound photography: a scenario-based study. J Am Coll Surg. 2017;224(1):8-15.e1. doi: 10.1016/j.jamcollsurg.2016.10.027

- 8. van Ramshorst GH, Vrijland W, van der Harst E, Hop WC, den Hartog D, Lange JF. Validity of diagnosis of superficial infection of laparotomy wounds using digital photography: inter and intra-observer agreement among surgeons. Wounds. 2010;22(2):38-43.

- 9. Kanters AE, Krpata DM, Blatnik JA, Novitsky YM, Rosen MJ. Modified hernia grading scale to stratify surgical site occurrence after open ventral hernia repairs. J Am Coll Surg. 2012;215(6):787-793. doi: 10.1016/j.jamcollsurg.2012.08.012

- 10. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381. doi: 10.1016/j.jbi.2008.08.010

- 11. American College of Surgeons National Surgical Quality Improvement Program operations manual: wound occurrences. Chicago, IL: American College of Surgeons; 2015:87-98.

- 12. Kim S, Lee W. Does McNemar's test compare the sensitivities and specificities of two diagnostic tests? Stat Methods Med Res. 2017;26(1):142-154. doi: 10.1177/0962280214541852

- 13. Trajman A, Luiz RR. McNemar χ2 test revisited: comparing sensitivity and specificity of diagnostic examinations. Scand J Clin Lab Invest. 2008;68(1):77-80. doi: 10.1080/00365510701666031

- 14. Fernandes-Taylor S, Gunter RL, Bennett KM, et al. Feasibility of implementing a patient-centered postoperative wound monitoring program using smartphone images: a pilot protocol. JMIR Res Protoc. 2017;6(2):e26. doi: 10.2196/resprot.6819

- 15. Gunter RL, Fernandes-Taylor S, Rahman S, et al. Feasibility of an image-based mobile health protocol for postoperative wound monitoring. J Am Coll Surg. 2018;226(3):277-286. doi: 10.1016/j.jamcollsurg.2017.12.013

- 16. Lepelletier D, Ravaud P, Baron G, Lucet JC. Agreement among health care professionals in diagnosing case vignette–based surgical site infections. PLoS One. 2012;7(4):e35131. doi: 10.1371/journal.pone.0035131

- 17. Birgand G, Lepelletier D, Baron G, et al. Agreement among healthcare professionals in ten European countries in diagnosing case-vignettes of surgical-site infections. PLoS One. 2013;8(7):e68618. doi: 10.1371/journal.pone.0068618
