Significance

Spectral graph clustering—clustering the vertices of a graph based on their spectral embedding—is of significant current interest, finding applications throughout the sciences. But as with clustering in general, what a particular methodology identifies as “clusters” is defined (explicitly, or, more often, implicitly) by the clustering algorithm itself. We provide a clear and concise demonstration of a “two-truths” phenomenon for spectral graph clustering in which the first step—spectral embedding—is either Laplacian spectral embedding, wherein one decomposes the normalized Laplacian of the adjacency matrix, or adjacency spectral embedding given by a decomposition of the adjacency matrix itself. The two resulting clustering methods identify fundamentally different (true and meaningful) structure.

Keywords: spectral embedding, spectral clustering, graph, network, connectome

Abstract

Clustering is concerned with coherently grouping observations without any explicit concept of true groupings. Spectral graph clustering—clustering the vertices of a graph based on their spectral embedding—is commonly approached via K-means (or, more generally, Gaussian mixture model) clustering composed with either Laplacian spectral embedding (LSE) or adjacency spectral embedding (ASE). Recent theoretical results provide deeper understanding of the problem and solutions and lead us to a “two-truths” LSE vs. ASE spectral graph clustering phenomenon convincingly illustrated here via a diffusion MRI connectome dataset: The different embedding methods yield different clustering results, with LSE capturing left hemisphere/right hemisphere affinity structure and ASE capturing gray matter/white matter core–periphery structure.

The purpose of this paper is to cogently present a “two-truths” phenomenon in spectral graph clustering, to understand this phenomenon from a theoretical and methodological perspective, and to demonstrate the phenomenon in a real-data case consisting of multiple graphs each with multiple categorical vertex class labels.

A graph or network consists of a collection of vertices or nodes representing entities together with edges or links representing the observed subset of the possible pairwise relationships between these entities. Graph clustering, often associated with the concept of “community detection,” is concerned with partitioning the vertices into coherent groups or clusters. By its very nature, such a partitioning must be based on connectivity patterns.

It is often the case that practitioners cluster the vertices of a graph (say, via K-means clustering composed with Laplacian spectral embedding) and pronounce the method as having performed either well or poorly based on whether the resulting clusters correspond well or poorly with some known or preconceived notion of “correct” clustering. Indeed, such a procedure may be used to compare two clustering methods and to pronounce that one works better (on the particular data under consideration). However, clustering is inherently ill-defined, as there may be multiple meaningful groupings, and two clustering methods that perform differently with respect to one notion of truth may in fact be identifying inherently different, but perhaps complementary, underlying structure. With respect to graph clustering, ref. 1 shows that there can be no algorithm that is optimal for all possible community detection tasks (Fig. 1).

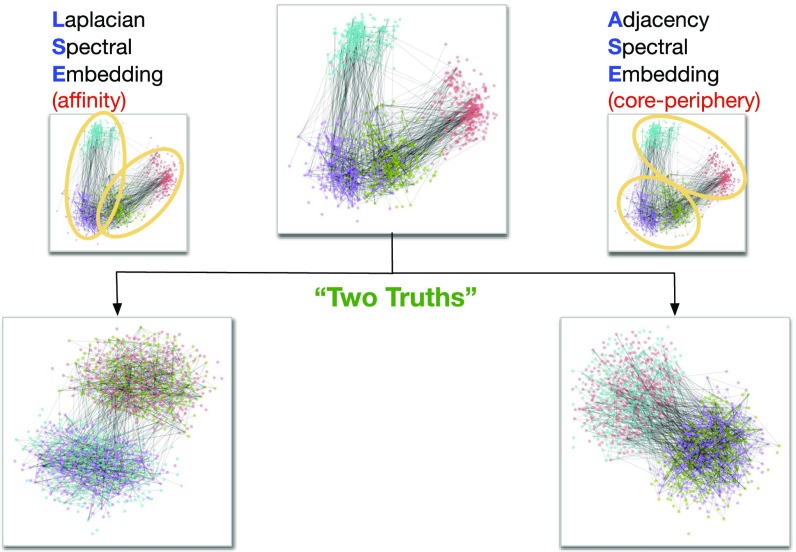

Fig. 1.

A two-truths graph (connectome) depicting connectivity structure such that one grouping of the vertices yields affinity structure (e.g., left hemisphere/right hemisphere) and the other grouping yields core–periphery structure (e.g., gray matter/white matter). (Top Center) The graph with four vertex colors. (Top Left and Top Right) LSE groups one way and ASE groups another way. (Bottom Left) The LSE truth is two densely connected groups, with sparse interconnectivity between them (affinity structure). (Bottom Right) The ASE truth is one densely connected group, with sparse interconnectivity between it and the other group and sparse interconnectivity within the other group (core–periphery structure). This paper demonstrates the two-truths phenomenon illustrated here—that LSE and ASE find fundamentally different but equally meaningful network structure—via theory, simulation, and real data analysis.

We compare and contrast Laplacian and adjacency spectral embedding as the first step in spectral graph clustering and demonstrate that the two methods, and the two resulting clusterings, identify different—but both meaningful—graph structure. We trust that this simple, clear explication will contribute to an awareness that connectivity-based structure discovery via spectral graph clustering should consider both Laplacian and adjacency spectral embedding and the development of new methodologies based on this awareness.

Spectral Graph Clustering

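Given a simple graph $G = (V, E)$ on $n$ vertices, consider the associated $n \times n$ adjacency matrix $A$ in which $A_{ij} = 0$ or $1$ encoding whether vertices $i$ and $j$ in $V$ share an edge $(i, j)$ in $E$. For our simple undirected, unweighted, loopless case, $A$ is binary with $A_{ij} \in \{0, 1\}$, symmetric with $A = A^\top$, and hollow with $\mathrm{diag}(A) = 0$.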

The first step of spectral graph clustering (2, 3) involves embedding the graph into Euclidean space via an eigendecomposition. We consider two options: Laplacian spectral embedding (LSE), wherein we decompose the normalized Laplacian of the adjacency matrix, and adjacency spectral embedding (ASE) given by a decomposition of the adjacency matrix itself. With target dimension $d$, either spectral embedding method produces $n$ points in $\mathbb{R}^d$, denoted by the $n \times d$ matrix $X$. ASE employs the eigendecomposition to represent the adjacency matrix via $A = U S U^\top$ and chooses the top $d$ eigenvalues by magnitude and their associated vectors to embed the graph via the scaled eigenvectors $X = U_d |S_d|^{1/2}$. Similarly, LSE embeds the graph via the top $d$ scaled eigenvectors of the normalized Laplacian $L(A) = D^{-1/2} A D^{-1/2}$, where $D$ is the diagonal matrix of vertex degrees. In either case, each vertex is mapped to the corresponding row of $X$.
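The two embeddings can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names `ase` and `lse` are hypothetical, the sketch uses a dense symmetric eigendecomposition (`numpy.linalg.eigh`), which is practical only for small graphs (graphs with tens of thousands of vertices would call for a sparse eigensolver), and it assumes every vertex has positive degree so that $D^{-1/2}$ exists.

```python
import numpy as np


def ase(A, d):
    """Adjacency spectral embedding: X = U_d |S_d|^{1/2}, using the d
    eigenvalues of the symmetric matrix A that are largest in magnitude."""
    evals, evecs = np.linalg.eigh(A)                    # A = U S U^T (symmetric input)
    top = np.argsort(np.abs(evals))[::-1][:d]           # indices of the top-d |eigenvalues|
    return evecs[:, top] * np.sqrt(np.abs(evals[top]))  # scale column j by |s_j|^{1/2}


def lse(A, d):
    """Laplacian spectral embedding: the same scaled-eigenvector construction
    applied to the normalized Laplacian L(A) = D^{-1/2} A D^{-1/2}."""
    A = np.asarray(A, dtype=float)
    deg = A.sum(axis=1)                                 # vertex degrees (diagonal of D)
    if np.any(deg == 0):
        raise ValueError("isolated vertex: D^{-1/2} is undefined")
    inv_sqrt = 1.0 / np.sqrt(deg)
    L = inv_sqrt[:, None] * A * inv_sqrt[None, :]       # D^{-1/2} A D^{-1/2}
    return ase(L, d)


# Each vertex i is mapped to row i of the n x d matrix X.
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]])
X_ase, X_lse = ase(A, 2), lse(A, 2)
```

Eigenvectors are determined only up to sign (and, for repeated eigenvalues, up to rotation), so embeddings from different runs or libraries may differ by such a transformation; the subsequent Gaussian mixture clustering is unaffected by this.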

Spectral graph clustering concludes via classical Euclidean clustering of the rows of $X$. As described below, central limit theorems for spectral embedding of the (sufficiently dense) stochastic block model via either LSE or ASE suggest Gaussian mixture modeling (GMM) for this clustering step. Thus, we consider spectral graph clustering to be GMM composed with LSE or ASE:

$$\mathrm{GMM} \circ \{\mathrm{LSE}, \mathrm{ASE}\}.$$

Stochastic Block Model

The random graph model we use to illustrate our phenomenon is the stochastic block model (SBM), introduced in ref. 4. This model is parameterized by (i) a block membership probability vector $\pi = [\pi_1, \ldots, \pi_K]^\top$ in the unit simplex and (ii) a symmetric $K \times K$ block connectivity probability matrix $B$ with entries in $[0, 1]$ governing the probability of an edge between vertices given their block memberships. Use of the SBM is ubiquitous in theoretical, methodological, and practical graph investigations, and SBMs have been shown to be universal approximators for exchangeable random graphs (5).

For sufficiently dense graphs, both LSE and ASE have a central limit theorem (6–8) demonstrating that, for large $n$, embedding via the top $d$ eigenvectors from a rank $d$ $K$-block SBM ($d \le K$) yields $n$ points in $\mathbb{R}^d$ behaving approximately as a random sample from a mixture of $K$ Gaussians. That is, given that the $i$th vertex belongs to block $k$, the $i$th row of $X$ will be approximately distributed as a multivariate normal with parameters specific to block $k$, $X_i \approx \mathcal{N}(\mu_k, \Sigma_k)$. Because these covariance matrices $\Sigma_k$ are in general neither spherical nor identical across blocks, the GMM is called for, as an appropriate generalization of K-means clustering. Therefore, GMM via maximum likelihood will produce mixture parameter estimates and associated asymptotically perfect clustering, using either LSE or ASE. For finite $n$, however, LSE and ASE yield different clustering performance, and neither one dominates the other.

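We make significant conceptual use of the positive definite two-block SBM ($K = 2$; positive definiteness means $a > 0$ and $ac > b^2$), with

$$B = \begin{bmatrix} a & b \\ b & c \end{bmatrix}, \qquad \pi = [\pi_1, \pi_2]^\top,$$

which henceforth we abbreviate as $B = [a, b, c]$ and $\pi = [\pi_1, \pi_2]$. In this simple setting, two general/generic cases present themselves: affinity and core–periphery.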

Affinity: $a, c \gg b$.

An SBM with $K = 2$ is said to exhibit affinity structure if each of the two blocks has a relatively high within-block connectivity probability compared with the between-block connectivity probability.

Core–periphery: $a \gg b, c$.

An SBM with $K = 2$ is said to exhibit core–periphery structure if one of the two blocks has a relatively high within-block connectivity probability compared with both the other block’s within-block connectivity probability and the between-block connectivity probability.
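To experiment with the two regimes, one can sample graphs from a two-block SBM directly. The NumPy sketch below is illustrative only: the helper names (`two_block`, `is_positive_definite`, `sample_sbm`) and the example parameter values are hypothetical, chosen merely to satisfy the affinity and core–periphery definitions. It draws block labels from $\pi$, then draws each edge $A_{ij}$, $i < j$, independently as Bernoulli($B_{\tau_i \tau_j}$), and symmetrizes, giving a simple, hollow adjacency matrix.

```python
import numpy as np


def two_block(a, b, c):
    """Expand the shorthand B = [a, b, c] into the 2 x 2 matrix [[a, b], [b, c]]."""
    return np.array([[a, b], [b, c]], dtype=float)


def is_positive_definite(a, b, c):
    """[[a, b], [b, c]] is positive definite iff a > 0 and ac - b^2 > 0."""
    return a > 0 and a * c - b * b > 0


def sample_sbm(n, B, pi, rng):
    """Sample (A, tau) from SBM(B, pi): tau_i ~ Categorical(pi), and
    A_ij ~ Bernoulli(B[tau_i, tau_j]) independently for i < j."""
    B = np.asarray(B, dtype=float)
    tau = rng.choice(len(pi), size=n, p=pi)        # block memberships
    P = B[np.ix_(tau, tau)]                        # n x n edge probabilities
    upper = np.triu(rng.random((n, n)) < P, k=1)   # draws for the strict upper triangle
    A = (upper | upper.T).astype(int)              # symmetric; diagonal stays 0
    return A, tau


rng = np.random.default_rng(0)
affinity = two_block(0.5, 0.1, 0.4)          # a, c >> b
core_periphery = two_block(0.5, 0.1, 0.05)   # a >> b, c
A_aff, tau_aff = sample_sbm(200, affinity, [0.5, 0.5], rng)
A_cp, tau_cp = sample_sbm(200, core_periphery, [0.5, 0.5], rng)
```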

The relative performance of LSE and ASE for these two cases provides the foundation for our analyses. Informally, LSE outperforms ASE for affinity, and ASE is the better choice for core–periphery. We make this clustering performance assessment analytically precise via Chernoff information, and we demonstrate this in practice via the adjusted Rand index.

Clustering Performance Assessment

We consider two approaches to assessing the performance of a given clustering $\mathcal{C}$, defined to be a partition of the vertex set $V$ into a disjoint union of $K$ partition cells or clusters. For our purposes (demonstrating a two-truths phenomenon in LSE vs. ASE spectral graph clustering), we consider the case in which there is a “true” or meaningful clustering of the vertices against which we can assess performance, but we emphasize that in practice such a truth is neither known nor necessarily unique.

Chernoff Information.

Comparing and contrasting the relative performance of LSE vs. ASE via the concept of Chernoff information (9, 10), in the context of their respective central limit theorems (CLTs), provides a limit theorem notion of superiority. Thus, in the SBM, we appeal to the GMM provided by the CLT for either LSE or ASE.

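The Chernoff information $C(F_1, F_2)$ between two distributions $F_1$ and $F_2$ is the exponential rate at which the decision-theoretic Bayes error decreases as a function of sample size. In the two-block SBM, with the true clustering of the vertices given by the block memberships, we are interested in the large-sample optimal error rate for recovering the underlying block memberships after the spectral embedding step has been carried out. Thus, we require $C(F_1, F_2)$ when $F_1$ and $F_2$ are multivariate normals. Letting $F_1 = \mathcal{N}(\mu_1, \Sigma_1)$, $F_2 = \mathcal{N}(\mu_2, \Sigma_2)$, and $\Sigma_t = t\Sigma_1 + (1 - t)\Sigma_2$, we have

$$C(F_1, F_2) = \sup_{t \in (0, 1)} \left[ \frac{t(1 - t)}{2} (\mu_2 - \mu_1)^\top \Sigma_t^{-1} (\mu_2 - \mu_1) + \frac{1}{2} \log \frac{|\Sigma_t|}{|\Sigma_1|^{t} \, |\Sigma_2|^{1 - t}} \right].$$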

This provides both $C_L = C(F_1^L, F_2^L)$ and $C_A = C(F_1^A, F_2^A)$ when using the large-sample GMM parameters $\{(\mu_k, \Sigma_k)\}_{k = 1, 2}$ obtained from the LSE and ASE embeddings, respectively, for a particular two-block SBM distribution (defined by its block membership probability vector $\pi$ and block connectivity probability matrix $B$). We make use of the Chernoff ratio $\rho = C_A / C_L$; $\rho > 1$ implies ASE is preferred while $\rho < 1$ implies LSE is preferred. (Recall that as the Chernoff information increases, the large-sample optimal error rate decreases.) Chernoff analysis in the two-block SBM demonstrates that, in general, LSE is preferred for affinity while ASE is preferred for core–periphery (7, 11).
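The Gaussian Chernoff information can be evaluated numerically by maximizing the bracketed objective over $t \in (0, 1)$ for $F_1 = \mathcal{N}(\mu_1, \Sigma_1)$ and $F_2 = \mathcal{N}(\mu_2, \Sigma_2)$. The NumPy sketch below does this with a uniform grid of $t$ values (a simple illustrative choice on our part; any scalar optimizer would do) and forms the ratio $\rho = C_A / C_L$. The function names are hypothetical, and obtaining the block means and covariances from the LSE and ASE limit theorems is not shown.

```python
import numpy as np


def chernoff_gaussian(mu1, S1, mu2, S2, grid=1001):
    """Chernoff information C(F1, F2) for F1 = N(mu1, S1), F2 = N(mu2, S2),
    maximizing over a uniform grid of t values in the open interval (0, 1)."""
    mu1 = np.atleast_1d(np.asarray(mu1, dtype=float))
    mu2 = np.atleast_1d(np.asarray(mu2, dtype=float))
    S1 = np.atleast_2d(np.asarray(S1, dtype=float))
    S2 = np.atleast_2d(np.asarray(S2, dtype=float))
    diff = mu2 - mu1
    logdet1 = np.linalg.slogdet(S1)[1]
    logdet2 = np.linalg.slogdet(S2)[1]
    best = -np.inf
    for t in np.linspace(0.0, 1.0, grid)[1:-1]:        # drop the endpoints 0 and 1
        St = t * S1 + (1 - t) * S2                     # Sigma_t
        quad = diff @ np.linalg.solve(St, diff)        # (mu2-mu1)^T Sigma_t^{-1} (mu2-mu1)
        logdet_t = np.linalg.slogdet(St)[1]
        # t(1-t)/2 * quad + 1/2 * log(|Sigma_t| / (|S1|^t |S2|^(1-t)))
        value = 0.5 * t * (1 - t) * quad + 0.5 * (
            logdet_t - t * logdet1 - (1 - t) * logdet2)
        best = max(best, value)
    return best


def chernoff_ratio(C_A, C_L):
    """rho = C_A / C_L: rho > 1 favors ASE, rho < 1 favors LSE."""
    return C_A / C_L
```

As a check, for two Gaussians with a common covariance the optimum is at $t = 1/2$ and the value reduces to $\frac{1}{8}(\mu_2 - \mu_1)^\top \Sigma^{-1} (\mu_2 - \mu_1)$; for example, `chernoff_gaussian([0.0], [[1.0]], [2.0], [[1.0]])` gives 0.5 up to floating-point rounding.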

Adjusted Rand Index.

In practice, we wish to empirically assess the performance of a particular clustering algorithm on a given graph. There are numerous cluster assessment criteria available in the literature: the Rand index (RI) (12), normalized mutual information (NMI) (13), variation of information (VI) (14), Jaccard (15), etc. These are typically used to compare either an empirical clustering against a “truth” or two separate empirical clusterings. For concreteness, we consider the well-known adjusted Rand index (ARI), popular in machine learning, which normalizes the RI so that expected chance performance is zero: The ARI is the adjusted-for-chance probability that two partitions of a collection of data points will agree for a randomly chosen pair of data points, putting the pair into the same partition cell in both clusterings or splitting the pair into different cells in both clusterings. (Our empirical connectome results are essentially unchanged when using other cluster assessment criteria.)
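For reference, the ARI can be computed from the contingency table of two partitions with the Hubert–Arabie formula (12). The pure-Python sketch below (the function name is ours) is meant only to make the adjusted-for-chance normalization concrete; established implementations such as scikit-learn's `sklearn.metrics.adjusted_rand_score` should be preferred in practice.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """Adjusted Rand index between two partitions of the same n items,
    given as equal-length sequences of cluster labels."""
    if len(labels_a) != len(labels_b):
        raise ValueError("partitions must cover the same items")
    n = len(labels_a)
    # Pairs placed together in both partitions (cells of the contingency table).
    together_both = sum(comb(v, 2) for v in Counter(zip(labels_a, labels_b)).values())
    # Pairs placed together in each partition separately (row and column margins).
    together_a = sum(comb(v, 2) for v in Counter(labels_a).values())
    together_b = sum(comb(v, 2) for v in Counter(labels_b).values())
    expected = together_a * together_b / comb(n, 2)   # agreement expected by chance
    maximum = 0.5 * (together_a + together_b)
    if maximum == expected:     # zero denominator, e.g. both partitions trivial
        return 1.0
    return (together_both - expected) / (maximum - expected)
```

Relabeling clusters does not change the ARI: `adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0])` is 1, while the “crossed” partition `[0, 1, 0, 1]` scores about -0.5 against `[0, 0, 1, 1]`, that is, worse than chance.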

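In the context of spectral clustering via $\mathrm{GMM} \circ \{\mathrm{LSE}, \mathrm{ASE}\}$, we consider $\mathcal{C}_{\mathrm{LSE}}$ and $\mathcal{C}_{\mathrm{ASE}}$ to be the two clusterings of the vertices of a given graph. Then ARI($\mathcal{C}_{\mathrm{LSE}}, \mathcal{C}_{\mathrm{ASE}}$) assesses their agreement: ARI($\mathcal{C}_{\mathrm{LSE}}, \mathcal{C}_{\mathrm{ASE}}$) $= 1$ implies that the two clusterings are identical; ARI($\mathcal{C}_{\mathrm{LSE}}, \mathcal{C}_{\mathrm{ASE}}$) $\approx 0$ implies that the two spectral embedding methods are “operationally orthogonal.” (Significance is assessed via permutation testing.)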

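In the context of two truths, we consider $\mathcal{C}_1$ and $\mathcal{C}_2$ to be two known true or meaningful clusterings of the vertices. Then, with $\mathcal{C}$ being either the LSE clustering $\mathcal{C}_{\mathrm{LSE}}$ or the ASE clustering $\mathcal{C}_{\mathrm{ASE}}$, ARI($\mathcal{C}, \mathcal{C}_1$) > ARI($\mathcal{C}, \mathcal{C}_2$) implies that the spectral embedding method under consideration is more adept at discovering truth $\mathcal{C}_1$ than truth $\mathcal{C}_2$. Analogous to the theoretical Chernoff analysis, ARI simulation studies in the two-block SBM demonstrate that, in general, LSE is preferred for affinity while ASE is preferred for core–periphery.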

Model Selection

To perform the spectral graph clustering in practice, we must address two inherent model selection problems: We must choose the embedding dimension ($d$) and the number of clusters ($K$).

SBM vs. Network Histogram.

If the SBM were actually true, then as $n \to \infty$ any reasonable procedure for estimating the singular value decomposition (SVD) rank would yield a consistent estimator $\widehat{d} \to \mathrm{rank}(B)$ and any reasonable procedure for estimating the number of clusters would yield a consistent estimator $\widehat{K} \to K$. Critically, the universal approximation result of ref. 5 shows that SBMs provide a principled “network histogram” model even without the assumption that the SBM with some fixed $K$ actually holds. Thus, practical model selection for spectral graph clustering is concerned with choosing $(\widehat{d}, \widehat{K})$ to provide a useful approximation.

The bias–variance tradeoff demonstrates that any quest for a universally optimal methodology for choosing the “best” dimension and number of clusters, in general, for finite $n$, is a losing proposition. Even for a low-rank model, subsequent inference may be optimized by choosing a dimension smaller than the true signal dimension, and even for a mixture of Gaussians, inference performance may be optimized by choosing a number of clusters smaller than the true cluster complexity. In the case of semiparametric SBM fitting, wherein low-rank and finite mixtures are used as a practical modeling convenience as opposed to a believed true model, and one presumes that both $d$ and $K$ will tend to infinity as $n \to \infty$, these bias–variance tradeoff considerations are exacerbated.

For $\widehat{d}$ and $\widehat{K}$ below, we make principled methodological choices for simplicity and concreteness, but make no claim that these are best in general or even for the connectome data considered herein. Nevertheless, one must choose an embedding dimension and a mixture complexity, and thus we proceed.

Choosing the Embedding Dimension $\widehat{d}$.

A ubiquitous and principled general methodology for choosing the number of dimensions in eigendecompositions and SVDs (e.g., principal components analysis, factor analysis, spectral embedding, etc.) is to examine the so-called scree plot and look for “elbows” defining the cutoff between the top (signal) dimensions and the noise dimensions. There is a plethora of variations for automating this singular value thresholding (SVT); section 2.8 of ref. 16 provides a comprehensive discussion in the context of principal components, and ref. 17 provides a theoretically justified (but perhaps practically suspect, for small $n$) universal SVT. We consider the profile-likelihood SVT method of ref. 18. Given $A$ (for either LSE or ASE), the singular values $\sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_n$ (of $L(A)$ or $A$, respectively) are used to choose the embedding dimension via

$$\widehat{d} = \arg\max_{d} \, \mathcal{P}_{\mathcal{L}}(d),$$

where $\mathcal{P}_{\mathcal{L}}(d)$ provides a definition for the magnitude of the “gap” after the first $d$ singular values.
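A minimal sketch of the profile-likelihood elbow criterion, assuming its standard form: for each candidate $d$, the sorted values are split into the first $d$ and the remainder, each group is modeled as normal with its own mean and a common pooled variance, and $\widehat{d}$ maximizes the resulting profile log-likelihood. The pooled-variance denominator $p - 2$ reflects our reading of ref. 18 and should be checked against it; the function name is hypothetical.

```python
import numpy as np


def profile_likelihood_dim(values):
    """Return d maximizing the profile log-likelihood of splitting the sorted
    values into a top group of size d and a bottom group of size p - d."""
    x = np.sort(np.asarray(values, dtype=float))[::-1]   # descending order
    p = len(x)
    if p < 3:
        raise ValueError("need at least three values")
    best_d, best_ll = 1, -np.inf
    for d in range(1, p):
        top, rest = x[:d], x[d:]
        ss = ((top - top.mean()) ** 2).sum() + ((rest - rest.mean()) ** 2).sum()
        var = max(ss / (p - 2), 1e-12)    # pooled variance, guarded against zero
        # Log-likelihood of all p values: top ~ N(mean_top, var), rest ~ N(mean_rest, var).
        ll = -0.5 * p * np.log(2 * np.pi * var) - ss / (2 * var)
        if ll > best_ll:
            best_d, best_ll = d, ll
    return best_d
```

In use, the input would be the singular values of $A$ (ASE) or $L(A)$ (LSE), for example from `numpy.linalg.svd(M, compute_uv=False)` for a dense matrix `M`; for the values `[10, 9.5, 9, 1, 0.9, 0.8, 0.7]` the sketch selects $\widehat{d} = 3$.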

Choosing the Number of Clusters $\widehat{K}$.

Choosing the number of clusters in Gaussian mixture models is most often addressed by maximizing a fitness criterion penalized by model complexity. Common approaches include the Akaike information criterion (AIC) (19), the Bayesian information criterion (BIC) (20), minimum description length (MDL) (21), etc. We consider penalized likelihood via the BIC (22). Given $n$ points in $\mathbb{R}^d$ represented by $X$ (obtained via either LSE or ASE) and letting $\theta_K$ represent the $K$-component GMM parameter vector, whose dimension $\dim(\theta_K)$ is a function of the data dimension $d$, the mixture complexity is chosen via

$$\widehat{K} = \arg\max_{K} \, \mathrm{BIC}(K),$$

where $\mathrm{BIC}(K) = 2 \log \mathcal{L}(\widehat{\theta}_K \mid X) - \dim(\theta_K) \log n$ is twice the log-likelihood of the data evaluated at the GMM with mixture parameter estimate $\widehat{\theta}_K$, penalized by $\dim(\theta_K) \log n$. For spectral clustering, we use the BIC for $K$ after spectral embedding, so $d = \widehat{d}$ with $\widehat{d}$ chosen as above.
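The BIC penalty depends on how the mixture covariances are parameterized; the mclust R package by the authors of ref. 22 compares several covariance structures. The sketch below assumes, for illustration only, $K$ components with full, unconstrained covariance matrices in $\mathbb{R}^d$, so that $\dim(\theta_K) = (K - 1) + Kd + Kd(d+1)/2$, and takes the maximized log-likelihoods as given (fitting them by EM is not shown). The helper names and the log-likelihood values in the example are hypothetical.

```python
from math import log


def gmm_num_params(K, d):
    """dim(theta_K) for a K-component GMM in R^d with full covariances:
    K - 1 free mixing weights, K mean vectors, K symmetric d x d covariances."""
    return (K - 1) + K * d + K * d * (d + 1) // 2


def bic(loglik, K, d, n):
    """BIC(K) = 2 * loglik - dim(theta_K) * log(n); larger is better."""
    return 2.0 * loglik - gmm_num_params(K, d) * log(n)


def choose_K(logliks, d, n):
    """Pick K maximizing BIC, given a dict {K: maximized log-likelihood}."""
    return max(logliks, key=lambda K: bic(logliks[K], K, d, n))


# Hypothetical maximized log-likelihoods for n = 500 points in R^2.
example = {1: -1000.0, 2: -900.0, 3: -899.0}
K_hat = choose_K(example, d=2, n=500)
```

Here a third component raises the log-likelihood by only 1 but costs six extra parameters, so $\widehat{K} = 2$. Note that scikit-learn's `GaussianMixture.bic` reports the negated quantity $-2\log\mathcal{L} + \dim(\theta)\log n$, which is to be minimized rather than maximized.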

Connectome Data

We consider for illustration a diffusion MRI dataset consisting of 114 connectomes (57 subjects, two scans each) with 72,783 vertices each and both left/right/other hemispheric and gray/white/other tissue attributes for each vertex. Graphs were estimated using NeuroData’s MR Graphs pipeline (23), with vertices representing subregions defined via spatial proximity and edges defined by tensor-based fiber streamlines connecting these regions (Fig. 2).

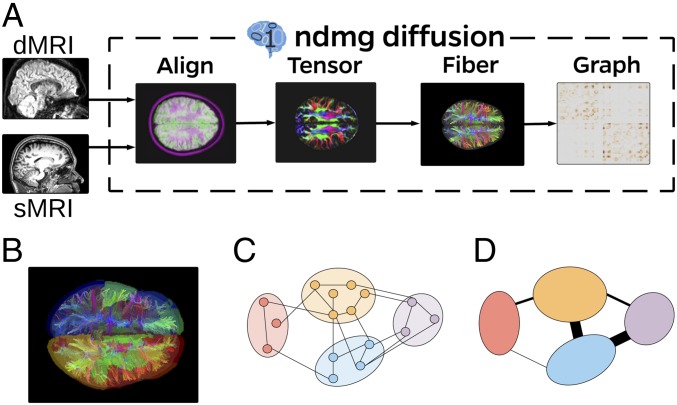

Fig. 2.

Connectome data generation. (A) The pipeline. (B) Voxels and regions in tractography map. (C) Voxels and edges. (D) Contraction yields vertices and edges. The output is diffusion MRI graphs on approximately 1 million vertices. Spatial vertex contraction yields graphs on approximately 70,000 vertices from which we extract largest connected components of approximately 40,000 vertices with {Left, Right} and {Gray, White} labels for each vertex. Fig. 1 depicts (a subsample from) one such graph.

The actual graphs we consider are the largest connected component (LCC) of the induced subgraph on the vertices labeled as both left or right and gray or white. This yields 114 connected graphs on approximately 40,000 vertices each. Additionally, for each graph every vertex has a {Left, Right} label and a {Gray, White} label, which we sometimes find convenient to consider as a single label in {LG, LW, RG, RW}.

Sparsity.

The only notions of sparsity relevant here are linear algebraic: whether there are enough edges in the graph to support spectral embedding and whether there are few enough to allow for sparse matrix computations. We have a collection of observed connectomes and we want to cluster the vertices in these graphs, as opposed to in an unobserved sequence with the number of vertices tending to infinity. Our connectomes have, on average, vertices and edges, for an average degree and a graph density .
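The two sparsity summaries named above are simple functions of the vertex and edge counts: for a simple undirected graph with $n$ vertices and $|E|$ edges, the average degree is $2|E|/n$ and the density is $|E| / \binom{n}{2}$. A small illustrative helper (hypothetical name; it drops self-loops and merges edges listed in both orientations) is:

```python
def degree_and_density(n, edges):
    """Average degree 2|E|/n and density |E| / (n choose 2) of a simple,
    undirected graph on vertices 0..n-1, given as a list of (i, j) pairs."""
    unique = {frozenset(e) for e in edges if e[0] != e[1]}  # drop loops, merge (i,j)/(j,i)
    m = len(unique)
    return 2 * m / n, m / (n * (n - 1) / 2)


triangle = [(0, 1), (1, 2), (2, 0)]
path = [(0, 1), (1, 2), (2, 3), (3, 2)]   # (3, 2) duplicates (2, 3)
```

A triangle has average degree 2 and density 1; the four-vertex path has average degree 1.5 and density 0.5.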

Synthetic Analysis.

We consider a synthetic data analysis via a priori projections onto the SBM, that is, block model estimates based on known or assumed block memberships. Averaging the collection of connectomes yields the composite (weighted) graph adjacency matrix $\bar{A}$. The projection of the binarized $\bar{A}$ onto the four-block SBM yields the block connectivity probability matrix $B$ presented in Fig. 3 and the block membership probability vector $\pi$. Limit theory demonstrates that spectral graph clustering of graphs drawn from this model will, for large $n$, correctly identify the four block memberships when using either LSE or ASE. Our interest is to compare and contrast the two spectral embedding methods for clustering into two clusters. We demonstrate that this synthetic case exhibits the two-truths phenomenon both theoretically and in simulation: the a priori projection of our composite connectome yields a four-block two-truths SBM.
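An a priori projection onto the SBM amounts to estimating $B$ and $\pi$ from known labels. The NumPy sketch below (hypothetical helper name) assumes an already binarized, symmetric, hollow adjacency matrix, since the binarization rule for $\bar{A}$ is not specified here. It takes $\widehat{B}_{kl}$ to be the observed fraction of possible edges between blocks $k$ and $l$ and $\widehat{\pi}_k$ to be the fraction of vertices in block $k$.

```python
import numpy as np


def a_priori_projection(A, labels):
    """Estimate (B_hat, pi_hat) of an SBM from a binary symmetric hollow
    adjacency matrix A and known vertex labels; blocks are np.unique(labels)."""
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    blocks = np.unique(labels)
    B_hat = np.zeros((len(blocks), len(blocks)))
    for i, k in enumerate(blocks):
        in_k = labels == k
        n_k = in_k.sum()
        for j, l in enumerate(blocks):
            in_l = labels == l
            n_l = in_l.sum()
            edge_count = A[np.ix_(in_k, in_l)].sum()
            # Within a block, A counts each edge twice over n_k (n_k - 1) ordered pairs;
            # between blocks, each edge once over n_k * n_l pairs.
            possible = n_k * (n_k - 1) if k == l else n_k * n_l
            B_hat[i, j] = edge_count / possible if possible > 0 else 0.0
    pi_hat = np.array([(labels == k).mean() for k in blocks])
    return blocks, B_hat, pi_hat


A = np.array([[0, 1, 1, 0],
              [1, 0, 0, 0],
              [1, 0, 0, 1],
              [0, 0, 1, 0]])
blocks, B_hat, pi_hat = a_priori_projection(A, ["L", "L", "R", "R"])
```

With the four labels {LG, LW, RG, RW} this would give a 4 × 4 estimate; passing only the {Left, Right} or only the {Gray, White} labels gives the corresponding two-block projections.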

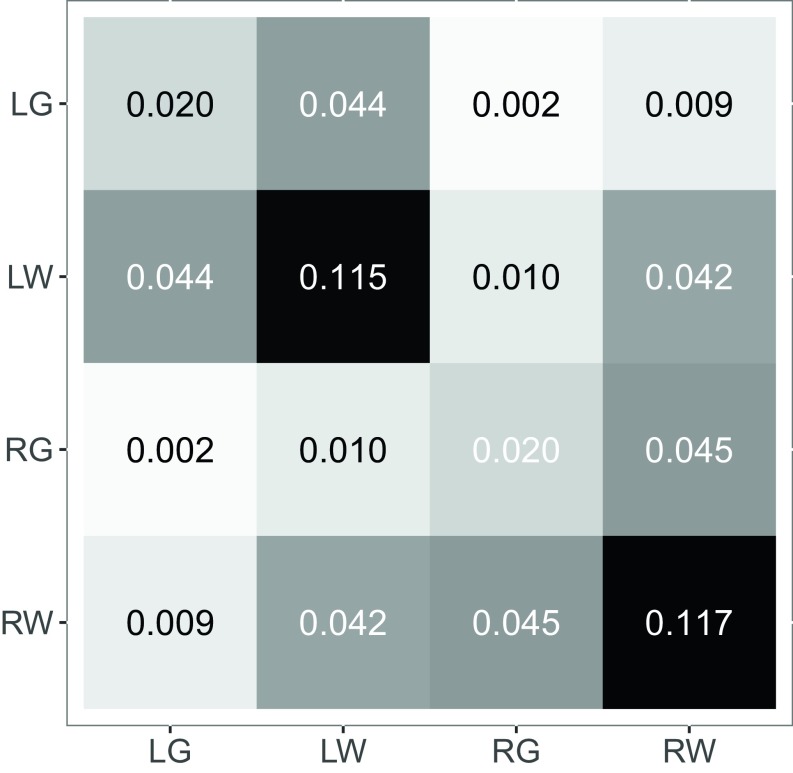

Fig. 3.

Block connectivity probability matrix for the a priori projection of the composite connectome onto the four-block SBM. The two two-block projections ({Left, Right} and {Gray, White}) are shown in Fig. 4. This synthetic SBM exhibits the two-truths phenomenon both theoretically (via Chernoff analysis) and in simulation (via Monte Carlo).

Two-Block Projections.

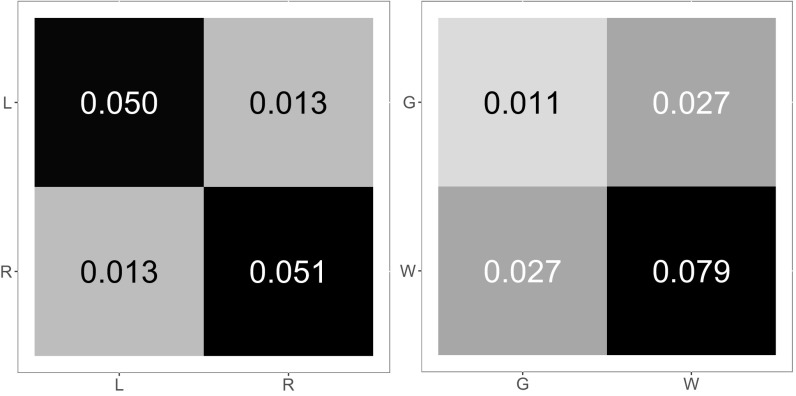

A priori projections onto the two-block SBM for {Left, Right} and {Gray, White} yield the two-block connectivity probability matrices shown in Fig. 4. It is apparent that the a priori {Left, Right} block connectivity probability matrix represents an affinity SBM with $\rho < 1$ and the a priori {Gray, White} projection yields a core–periphery SBM with $\rho > 1$. It remains to investigate the extent to which the Chernoff analysis from the two-block setting (LSE is preferred for affinity while ASE is preferred for core–periphery) extends to such a four-block two-truths case; we do so theoretically and in simulation using this synthetic model derived from the a priori projection of our composite connectome in Theoretical Results and Simulation Results and then empirically on the original connectomes in Connectome Results.

Fig. 4.

Block connectivity probability matrices for the a priori projection of the composite connectome onto the two-block SBM for {Left, Right} (Left) and {Gray, White} (Right). {Left, Right} exhibits affinity structure, with Chernoff ratio $\rho < 1$; {Gray, White} exhibits core–periphery structure, with Chernoff ratio $\rho > 1$.

Theoretical Results.

Analysis using the large-sample Gaussian mixture model approximations from the LSE and ASE CLTs shows that the 2D embedding of the four-block model, when clustered into two clusters, will yield { {LG,LW}, {RG,RW} } (i.e., {Left, Right}) when embedding via LSE and { {LG,RG}, {LW,RW} } (i.e., {Gray, White}) when using ASE. That is, using numerical integration for the LSE Gaussian mixture, the largest Kullback–Leibler divergence (as a surrogate for Chernoff information) among the 10 possible ways of grouping the four Gaussians into two clusters is for the { {LG,LW}, {RG,RW} } grouping, and the largest of these values for the ASE Gaussian mixture is for the { {LG,RG}, {LW,RW} } grouping.

Simulation Results.

We augment the Chernoff limit theory via Monte Carlo simulation, sampling graphs from the four-block model and running the $\mathrm{GMM} \circ \{\mathrm{LSE}, \mathrm{ASE}\}$ algorithm specifying $\widehat{d} = \widehat{K} = 2$. This results in LSE finding {Left, Right} (ARI > 0.95) with probability >0.95 and ASE finding {Gray, White} (ARI > 0.95) with probability >0.95.

Connectome Results.

Figs. 5–7 present empirical results for the connectome dataset, 114 graphs each on approximately 40,000 vertices. We note that these connectomes are most assuredly not four-block two-truths SBMs of the kind presented in Figs. 3 and 4, but they do have two truths ({Left, Right} and {Gray, White}) and, as we shall see, they do exhibit a real-data version of the synthetic results presented above, in the spirit of semiparametric SBM fitting.

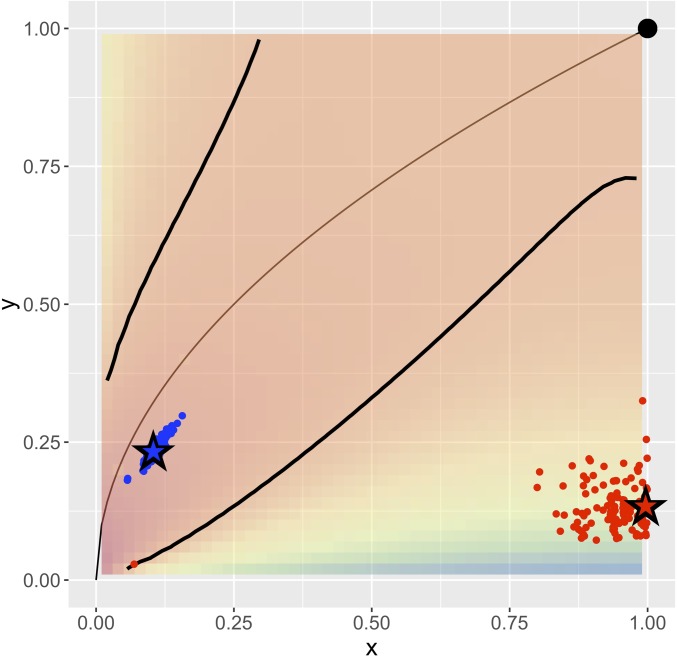

Fig. 5.

For each of our 114 connectomes, we plot the a priori two-block SBM projections for {Left, Right} in red and {Gray, White} in blue. The coordinates are given by $x = \min(a, c)/\max(a, c)$ and $y = b/\max(a, c)$, where $B = [a, b, c]$ is the observed block connectivity probability matrix. The thin black curve $y = \sqrt{x}$ represents the rank 1 submodel separating positive definite (lower right) from indefinite (upper left). The background color shading is Chernoff ratio $\rho$, and the thick black curves are $\rho = 1$, separating the region where ASE is preferred (between the curves) from where LSE is preferred. The point $(1, 1)$ represents Erdős–Rényi ($a = b = c$). The large stars are from the a priori composite connectome projections (Fig. 4). We see that the red projections are in the affinity region where $\rho < 1$ and LSE is preferred while the blue projections are in the core–periphery region where $\rho > 1$ and ASE is preferred. This analytical finding based on projections onto the SBM carries over to empirical spectral clustering results on the individual connectomes (Fig. 7).

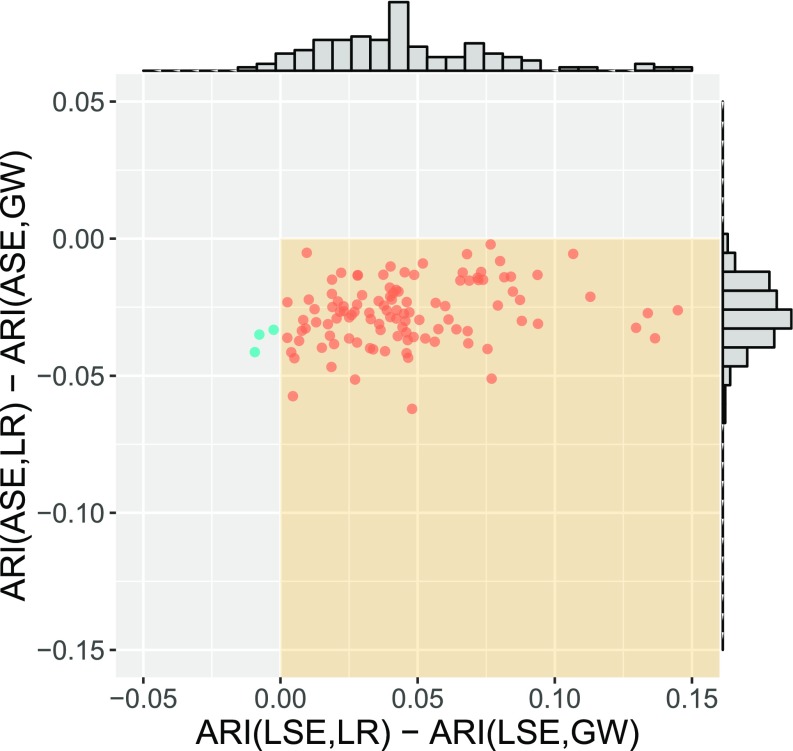

Fig. 7.

Spectral graph clustering assessment via ARI. For each of our 114 connectomes, we plot, for the clusterings produced by LSE and by ASE, the difference between the ARI against the {Left, Right} truth and the ARI against the {Gray, White} truth: $x$ = ARI(LSE,LR) – ARI(LSE,GW) vs. $y$ = ARI(ASE,LR) – ARI(ASE,GW). A point in the $(+, -)$ quadrant indicates that for that connectome the LSE clustering identified {Left, Right} better than {Gray, White} and ASE identified {Gray, White} better than {Left, Right}. Marginal histograms are provided. Our two-truths phenomenon is conclusively demonstrated: LSE identifies {Left, Right} (affinity) while ASE identifies {Gray, White} (core–periphery).

First, in Fig. 5, we consider a priori projections of the individual connectomes, analogous to the Fig. 4 projections of the composite connectome. Letting $B = [a, b, c]$ be the observed block connectivity probability matrix for the a priori two-block SBM projection ({Left, Right} or {Gray, White}) of a given individual connectome, the coordinates in Fig. 5 are given by $x = \min(a, c)/\max(a, c)$ and $y = b/\max(a, c)$. Each graph yields two points, one for each of {Left, Right} and {Gray, White}. We see that the {Left, Right} projections are in the affinity region (large $x$ and small $y$ imply $a, c \gg b$, where Chernoff ratio $\rho < 1$ and LSE is preferred) while the {Gray, White} projections are in the core–periphery region [small $x$ and small $y$ imply $\max(a, c) \gg b, \min(a, c)$, where $\rho > 1$ and ASE is preferred]. This exploratory data analysis finding indicates complex two-truths structure in our connectome dataset. [Of independent interest, we propose Fig. 5 as an illustrative two-truths exploratory data analysis (EDA) plot for a dataset of graphs with multiple categorical vertex labels.]
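Taking the Fig. 5 coordinates to be $x = \min(a, c)/\max(a, c)$ and $y = b/\max(a, c)$ for a two-block projection $B = [a, b, c]$, the map and the rank 1 boundary can be sketched as follows (hypothetical helper names; the example matrices are made up). Rank 1 means $ac = b^2$, which in these coordinates is the curve $y = \sqrt{x}$, and positive definite matrices ($ac > b^2$) lie strictly below it.

```python
import math


def fig5_coordinates(a, b, c):
    """Map a two-block SBM B = [a, b, c] to (min(a,c)/max(a,c), b/max(a,c))."""
    hi, lo = max(a, c), min(a, c)
    return lo / hi, b / hi


def below_rank1_curve(a, b, c):
    """True when (x, y) lies strictly below y = sqrt(x), i.e. when ac > b^2
    (the positive definite side, for positive a, c and nonnegative b)."""
    x, y = fig5_coordinates(a, b, c)
    return y < math.sqrt(x)


affinity_point = fig5_coordinates(0.5, 0.1, 0.4)          # large x, small y
core_periphery_point = fig5_coordinates(0.5, 0.1, 0.05)   # small x, small y
```

The affinity example lands at roughly $(0.8, 0.2)$ and the core–periphery example at roughly $(0.1, 0.2)$, matching the regions described for the {Left, Right} and {Gray, White} projections.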

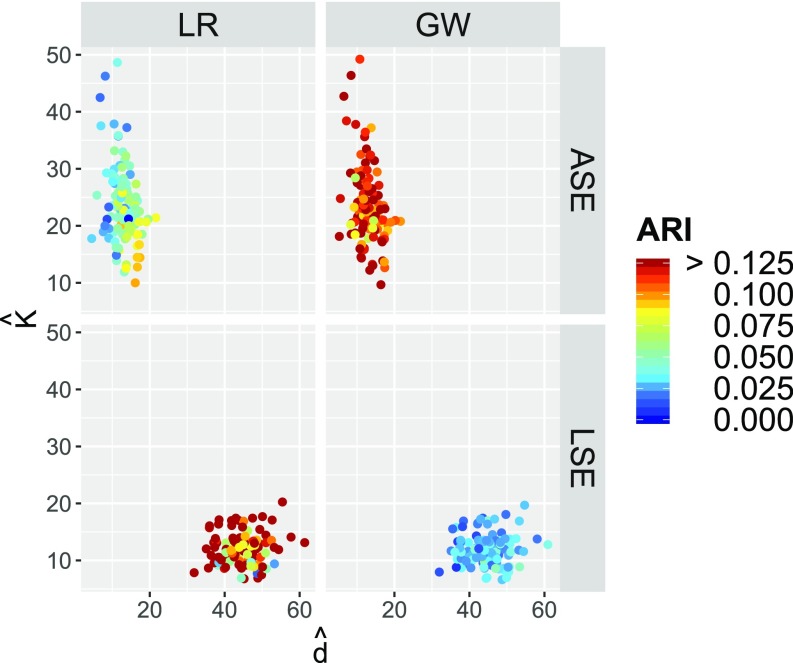

In Figs. 6 and 7 we present the results of 114 runs of the spectral clustering algorithm $\mathrm{GMM} \circ \{\mathrm{LSE}, \mathrm{ASE}\}$. We consider each of LSE and ASE, choosing $\widehat{d}$ and $\widehat{K}$ as described above. The resulting empirical clusterings are evaluated via the ARI against each of the {Left, Right} and {Gray, White} truths. In Fig. 6 we present the results of the $(\widehat{d}, \widehat{K})$ model selection, and we observe that ASE is choosing and LSE is choosing , while ASE is choosing and LSE is choosing . In Fig. 7, each graph is represented by a single point, plotting $x$ = ARI(LSE,LR) – ARI(LSE,GW) vs. $y$ = ARI(ASE,LR) – ARI(ASE,GW), where “LSE” (resp. “ASE”) represents the empirical clustering $\mathcal{C}_{\mathrm{LSE}}$ (resp. $\mathcal{C}_{\mathrm{ASE}}$) and “LR” (resp. “GW”) represents the true clustering $\mathcal{C}_{\{\mathrm{Left}, \mathrm{Right}\}}$ (resp. $\mathcal{C}_{\{\mathrm{Gray}, \mathrm{White}\}}$). We see that almost all of the points lie in the $(+, -)$ quadrant, indicating ARI(LSE,LR) > ARI(LSE,GW) and ARI(ASE,LR) < ARI(ASE,GW). That is, LSE finds the affinity {Left, Right} structure and ASE finds the core–periphery {Gray, White} structure. The two-truths structure in our connectome dataset illustrated in Fig. 5 leads to fundamentally different but equally meaningful LSE vs. ASE spectral clustering performance. This is our two-truths phenomenon in spectral graph clustering.

Fig. 6.

Results of the $(\widehat{d}, \widehat{K})$ model selection for spectral graph clustering for each of our 114 connectomes. For LSE we see and ; for ASE we see and . The color coding represents clustering performance in terms of ARI for each of LSE and ASE against each of the two truths {Left, Right} and {Gray, White} and shows that LSE clustering identifies {Left, Right} better than {Gray, White} and ASE identifies {Gray, White} better than {Left, Right}. Our two-truths phenomenon is conclusively demonstrated: LSE finds {Left, Right} (affinity) while ASE finds {Gray, White} (core–periphery).

Conclusion

The results presented herein demonstrate that practical spectral graph clustering exhibits a two-truths phenomenon with respect to Laplacian vs. adjacency spectral embedding. This phenomenon can be understood theoretically from the perspective of affinity vs. core–periphery stochastic block models and via consideration of the two a priori projections of a four-block two-truths SBM onto the two-block SBM. For connectomics, this phenomenon manifests itself via LSE better capturing the left hemisphere/right hemisphere affinity structure and ASE better capturing the gray matter/white matter core–periphery structure and suggests that a connectivity-based parcellation based on spectral clustering should consider both LSE and ASE, as the two spectral embedding approaches facilitate the identification of different and complementary connectivity-based clustering truths.

Acknowledgments

The authors thank the Isaac Newton Institute for Mathematical Sciences, Cambridge, United Kingdom, for support and hospitality during the program Theoretical Foundations for Statistical Network Analysis (Engineering and Physical Sciences Research Council Grant EP/K032208/1), where a portion of the work on this paper was undertaken, and the University of Haifa, where these ideas were conceived in June 2014. This work is partially supported by Defense Advanced Research Projects Agency (XDATA, GRAPHS, SIMPLEX, D3M), Johns Hopkins University Human Language Technology Center of Excellence, and the Acheson J. Duncan Fund for the Advancement of Research in Statistics.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

References

- 1. Peel L, Larremore DB, Clauset A. The ground truth about metadata and community detection in networks. Sci Adv. 2017;3:e1602548. doi: 10.1126/sciadv.1602548.

- 2. von Luxburg U. A tutorial on spectral clustering. Stat Comput. 2007;17:395–416.

- 3. Rohe K, Chatterjee S, Yu B. Spectral clustering and the high-dimensional stochastic blockmodel. Ann Stat. 2011;39:1878–1915.

- 4. Holland PW, Laskey KB, Leinhardt S. Stochastic blockmodels: First steps. Soc Networks. 1983;5:109–137.

- 5. Olhede SC, Wolfe PJ. Network histograms and universality of blockmodel approximation. Proc Natl Acad Sci USA. 2014;111:14722–14727. doi: 10.1073/pnas.1400374111.

- 6. Athreya A, et al. A limit theorem for scaled eigenvectors of random dot product graphs. Sankhya A. 2016;78:1–18.

- 7. Tang M, Priebe CE. Limit theorems for eigenvectors of the normalized Laplacian for random graphs. Ann Stat. 2018;46:2360–2415.

- 8. Rubin-Delanchy P, Priebe CE, Tang M, Cape J (2018) The generalised random dot product graph. Available at https://arxiv.org/abs/1709.05506. Preprint, posted July 29, 2018.

- 9. Chernoff H. A measure of asymptotic efficiency for tests of a hypothesis based on the sum of observations. Ann Math Stat. 1952;23:493–507.

- 10. Chernoff H. Large sample theory: Parametric case. Ann Math Stat. 1956;27:1–22.

- 11. Cape J, Tang M, Priebe CE. On spectral embedding performance and elucidating network structure in stochastic block model graphs. Network Science, in press.

- 12. Hubert L, Arabie P. Comparing partitions. J Classif. 1985;2:193–218.

- 13. Danon L, Díaz-Guilera A, Duch J, Arenas A. Comparing community structure identification. J Stat Mech Theory Exp. 2005;2005:P09008.

- 14. Meilă M. Comparing clusterings–an information based distance. J Multivar Anal. 2007;98:873–895.

- 15. Jaccard P. The distribution of the flora in the alpine zone. New Phytol. 1912;11:37–50.

- 16. Jackson JE. A User’s Guide to Principal Components. Wiley, Hoboken, NJ; 2004.

- 17. Chatterjee S. Matrix estimation by universal singular value thresholding. Ann Stat. 2015;43:177–214.

- 18. Zhu M, Ghodsi A. Automatic dimensionality selection from the scree plot via the use of profile likelihood. Comput Stat Data Anal. 2006;51:918–930.

- 19. Akaike H. A new look at the statistical model identification. IEEE Trans Autom Control. 1974;19:716–723.

- 20. Schwarz G. Estimating the dimension of a model. Ann Stat. 1978;6:461–464.

- 21. Rissanen J. Modeling by shortest data description. Automatica. 1978;14:465–471.

- 22. Fraley C, Raftery AE. Model-based clustering, discriminant analysis and density estimation. J Am Stat Assoc. 2002;97:611–631.

- 23. Kiar G, et al. (2018) A high-throughput pipeline identifies robust connectomes but troublesome variability. Available at https://www.biorxiv.org/node/94401. Preprint, posted April 24, 2018.