Abstract

The human ability to use different tools demonstrates our capability of forming and maintaining multiple, context-specific motor memories. Experimentally, this has been investigated in dual adaptation, where participants adjust their reaching movements to opposing visuomotor transformations. Adaptation in these paradigms occurs by distinct processes, such as strategies for each transformation or the implicit acquisition of distinct visuomotor mappings. Although distinct, transformation-dependent aftereffects have been interpreted as support for the latter, they could reflect adaptation of a single visuomotor map, which is locally adjusted in different regions of the workspace. Indeed, recent studies suggest that explicit aiming strategies direct where in the workspace implicit adaptation occurs, thus potentially serving as a cue to enable dual adaptation. Disentangling these possibilities is critical to understanding how humans acquire and maintain motor memories for different skills and tools. We therefore investigated generalization of explicit and implicit adaptation to untrained movement directions after participants practiced two opposing cursor rotations, which were associated with the visual display being presented in the left or right half of the screen. Whereas participants learned to compensate for opposing rotations by explicit strategies specific to this visual workspace cue, aftereffects were not cue sensitive. Instead, aftereffects displayed bimodal generalization patterns that appeared to reflect locally limited learning of both transformations. By varying target arrangements and instructions, we show that these patterns are consistent with implicit adaptation that generalizes locally around movement plans associated with opposing visuomotor transformations. Our findings show that strategies can shape implicit adaptation in a complex manner.

NEW & NOTEWORTHY Visuomotor dual adaptation experiments have identified contextual cues that enable learning of separate visuomotor mappings, but the underlying representations of learning are unclear. We report that visual workspace separation as a contextual cue enables the compensation of opposing cursor rotations by a combination of explicit and implicit processes: Learners developed context-dependent explicit aiming strategies, whereas an implicit visuomotor map represented dual adaptation independent from arbitrary context cues by local adaptation around the explicit movement plan.

Keywords: dual adaptation, explicit strategies, implicit learning, motor learning, sensorimotor

INTRODUCTION

Modern tools frequently require their users to operate under different visuomotor transformations. The most common example is a computer mouse, where hand movements are transformed to cursor movements on a screen. The fact that humans can switch between different devices, such as trackpads, phones, and tablets, without apparently having to relearn each transformation each time has been taken as evidence for separate memories of different visuomotor transformations that can be retrieved on the basis of context. This remarkable ability may have been fundamental to the advancement of our species (McDougle et al. 2016; Stout et al. 2008; Stout and Chaminade 2007).

Dual-adaptation paradigms have served as a useful tool to study this ability. In these paradigms, participants learn to compensate for opposing visuomotor transformations, such as visuomotor cursor rotations (Cunningham 1989) or force fields (Shadmehr and Mussa-Ivaldi 1994), in close temporal succession (Ayala et al. 2015; Bock et al. 2005; Galea and Miall 2006; Hegele and Heuer 2010; Thomas and Bock 2012; van Dam and Ernst 2015; Woolley et al. 2007, 2011). Whereas alternating exposure to opposing transformations leads to substantial interference in many situations (Donchin et al. 2003; Howard et al. 2013; Sheahan et al. 2016; Woolley et al. 2007), researchers have identified a limited set of contextual cues that can enable simultaneous learning of opposing transformations (Ayala et al. 2015; Heald et al. 2018; Hegele and Heuer 2010; Howard et al. 2012, 2013; Imamizu et al. 2003; Nozaki et al. 2016; Osu et al. 2004; Sarwary et al. 2015; Seidler et al. 2001; Sheahan et al. 2016).

When it occurs, dual adaptation has been explained as contextual cues establishing separate motor memories or visuomotor mappings (Ayala et al. 2015; Hirashima and Nozaki 2012; Imamizu et al. 2007; Osu et al. 2004) or as opposite learning within a single visuomotor map being enabled by local generalization around different kinematic properties of the movement, like its trajectory (Gonzalez Castro et al. 2011), the direction of a visual target (Woolley et al. 2007, 2011), or the movement plan (Hirashima and Nozaki 2012; Sheahan et al. 2016). Whereas the privileged role attributed to movement characteristics may be justified by the omnipresence of these physical cues in natural environments, the above views can be unified by thinking of the motor memory that results from learning as a multidimensional state space that can contain arbitrary psychological and physical cue dimensions (Howard et al. 2013). Under this view, whether or not a cue enables dual adaptation depends on whether different cue characteristics allow for a regional separation in the state space of memory that is sufficient to reduce the overlap between local generalization of multiple transformations and thereby attenuate interference between them.

A level of complexity is added to this by recent views that propose at least two qualitatively distinct learning mechanisms in visuomotor adaptation (Huberdeau et al. 2015; McDougle et al. 2016; Taylor and Ivry 2012, 2014): On one hand, there is an implicit process, which operates outside of awareness and learns from sensory-prediction errors (Mazzoni and Krakauer 2006; Morehead et al. 2017; Synofzik et al. 2008). This implicit learning is thought to reflect cerebellum-dependent adaptation of internal models (Taylor et al. 2010) and to dominantly contribute to aftereffects that persist in the absence of the novel transformation (Heuer and Hegele 2008). On the other hand, learners can develop conscious aiming strategies to augment reaching performance, a process referred to as explicit learning (Heuer and Hegele 2008; Taylor et al. 2014). This explicit learning appears to be remarkably flexible, is strongly biased by visual cues and verbal instruction, but does not lead to aftereffects (Bond and Taylor 2015, 2017; Taylor et al. 2014).

One way to think of explicit and implicit learning mechanisms, in relation to dual adaptation, is that they reflect the outcome of learning in two different state spaces, each with its own set of contextual cues. Evidence in favor of such a distinction comes from findings indicating that explicit and implicit learning differ with respect to their generalization properties (Heuer and Hegele 2011; McDougle et al. 2017) and with respect to the cue characteristics that enable dual adaptation in these two domains (Hegele and Heuer 2010; van Dam and Ernst 2015). Importantly, recent findings have also pointed toward an interaction of explicit and implicit learning mechanisms, suggesting that the peak of implicit generalization is centered around the direction of the explicit movement plan (Day et al. 2016; McDougle et al. 2017; Morehead et al. 2017). This provides a potential mechanism through which separate movement plans may attenuate interference in dual adaptation by directing local implicit learning to different regions in the state space. Translated to our framework, this suggests that the planned movement direction (i.e., the output of the explicit memory) constitutes a relevant dimension in the state space of implicit learning. The existence of such a link would be essential to our understanding of dual adaptation and the interplay of different learning mechanisms. However, this plan-based dual adaptation is in direct conflict with previous accounts that suggested that the visual target (Woolley et al. 2011) or the movement kinematics (Gonzalez Castro et al. 2011) constitute the relevant separating features.

These issues are central to our ability to use tools or different motor behaviors in different contexts. To gain more insight into the way explicit strategies and implicit learning interact in visuomotor dual adaptation, the present study sought to test whether the direction of explicit movement plans indeed enables local learning of opposing cursor rotations by separating generalization and to contrast this possibility with a previous view under which the direction of the visual target is the relevant cue (Woolley et al. 2011). We chose visual workspace as a contextual cue that has been shown to create separate explicit strategies but not implicit visuomotor maps (Hegele and Heuer 2010) and tested generalization of explicit and implicit learning to different directions after practice. By varying target locations, rotation directions, and verbal instruction of strategies, we tested whether generalization centered around the visual target (target-based generalization; Woolley et al. 2011) or the explicit strategy (plan-based generalization; Day et al. 2016; McDougle et al. 2017) limits interference within a single, implicit visuomotor map.

MATERIALS AND METHODS

A total of 94 participants gave written informed consent to participate in protocols approved by the local ethics committee of department 06 of Justus-Liebig-University, Giessen, Germany (LEK-FB06). Participants included in the analyses were right-handed and had not previously participated in a reaching adaptation experiment. Table 1 provides further information on participants and exclusions.

Table 1.

Overview of included and excluded participants and trials for all experiments

| Experiment | n Analyzed | Men | Age, yr [mean (min; max)] | n Excluded | No. of Exclusions (movement) | No. of Exclusions (angle) | Total No. of Movements |
|---|---|---|---|---|---|---|---|
| 1 | 22 | 7 | 25 (20; 30) | 1a + 1b | 462 (5.5%) | 12 (0.1%) | 8,360 |
| 2 | 20 | 4 | 21 (19; 30) | 0 | 353 (4.1%) | 56 (0.6%) | 8,680 |
| 3 | 19 | 7 | 24 (19; 29) | 1c | 401 (4.9%) | 152 (1.8%) | 8,246 |
| 4 | 21 | 9 | 24 (20; 29) | 1a + 1b + 7c | 320 (3.5%) | 12 (0.1%) | 9,114 |

Reasons for excluding participants: (a) did not finish testing (because of bug in experimental software or time constraints); (b) did not meet inclusion criteria (age, handedness, metal implants); (c) failure to follow instructions (revealed in postexperimental standardized questioning). Individual trials were excluded if no start could be detected or participants failed to reach target amplitude within the specified time (“movement”) or if the angular cursor error was >120° (“angle”).

Apparatus

Participants sat 1 m in front of a vertically mounted 22-in. LCD screen (Samsung 2233RZ) running at 120 Hz (Fig. 1A). Their index finger was strapped to a plastic sled (50 × 30-mm base, 6-mm height) that moved with low friction on a horizontal glass surface at table height. Sled position was tracked at 100 Hz by a trakSTAR sensor (model M800; Ascension Technology, Burlington, VT) mounted vertically above the fingertip. Hand vision was occluded by a black wood panel 25 cm above the surface. With their finger movement, participants controlled a cursor on the screen (cyan filled circle, 5.6-mm diameter) via a custom script written in MATLAB (RRID:SCR_001622) with the Psychophysics Toolbox (Brainard 1997; RRID:SCR_002881). On movement practice trials, participants were instructed to “shoot” the cursor from a visual start (red/green outline circle, 8-mm diameter) through a target (white filled circle, 4.8-mm diameter) at 80-mm distance by a fast, uncorrected movement of their right hand. Cursor feedback was provided concurrently but was frozen as soon as participants’ hand had passed the radial distance of the target. The cursor represented the unrotated hand position during all familiarization and baseline practice trials (phase explanations below). During rotation practice and maintenance (inserted between posttests), the cursor was rotated around the start relative to hand position. The direction of cursor rotation was cued by the location of display on the screen (see below). On movement test trials, the cursor disappeared upon leaving the start circle. If participants took longer than 300 ms from leaving the start circle to reaching target amplitude, the trial was aborted and an error message was displayed (“Zu langsam!”, i.e., “Too slow!”). After the end of the reaching movements, arrows at the side of the screen guided participants back to the start location without providing cursor feedback.

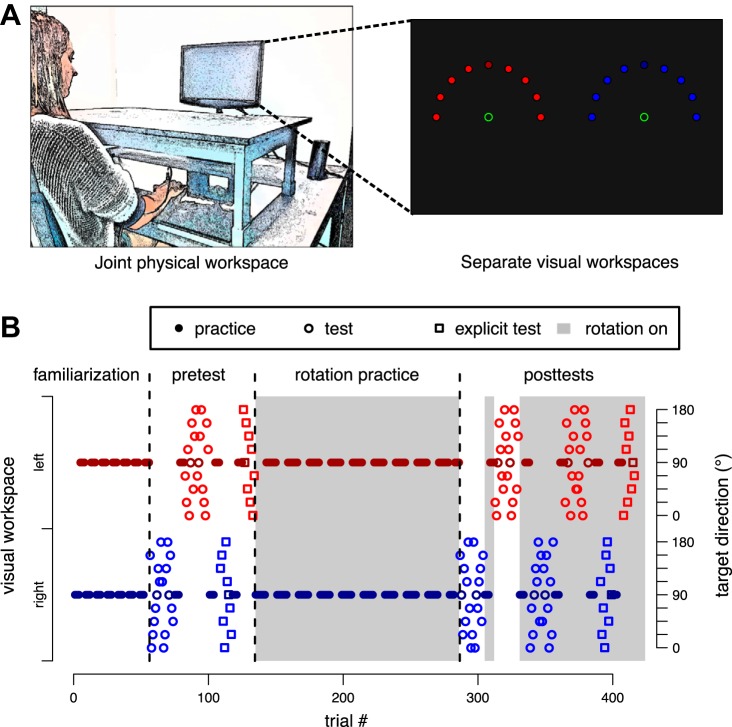

Fig. 1.

A: general setup and visual workspace. Only 1 start and 1 target location were shown on a given trial, but all generalization targets are displayed here for illustrative purposes. In addition, the actual targets were white. B: experimental protocol for an exemplary participant of experiment 1. The start location of the hand on the table was identical for both visual workspaces. The presence/absence of the rotation was cued by the color of the start circle and instructed for both trials with and without feedback. Alternation between visual workspaces was every 4 trials during familiarization and posttest practice and every 8 trials during rotation practice. Circles represent movement trials; squares are explicit judgment trials, where participants do not move their arm and verbally judge required movement direction instead.

Visual Workspace Cue

Throughout the experiment, the start locations of the reaching movement alternated between the left and right half of the screen (x-axis shift of ¼ screen width in respective direction). In phases with cursor rotation, these visual workspaces were associated with visual rotations in opposite directions. We chose this contextual cue because previous research had indicated that it successfully cues separate explicit strategies but not separate implicit visuomotor maps (Hegele and Heuer 2010). Importantly, participants’ actual movements were always conducted in a common physical workspace from a central start location on the table ~40 cm in front of them.

General Task Protocol

All experiments consisted of familiarization, baseline pretests, rotation practice, and posttests, with the general logic that posttests tested generalization of learning induced by rotation practice relative to baseline pretests. During familiarization, participants performed a total of 48 movement practice trials, with the visual workspace alternating between the left and right half of the screen every four trials. This was followed by pretests intermixed with additional practice trials: In pretests, we tested generalization of movements to nine different directions spanning the hemisphere around the practiced direction by movement test trials without visual feedback. These were performed in each visual workspace in an alternating, blocked fashion (Fig. 1B). Each test block contained two (experiment 1) or three (experiments 2–4) sets of one reaching movement per target direction. The sequence of targets was randomized within sets. Before each test block, participants performed four more practice movements with cursor feedback in each visual workspace to maintain reaching performance at a stable level.

At the end of pretests, we further probed participants’ explicit knowledge of the cursor rotations for one set of targets in each visual workspace. On these explicit judgment test trials, participants rested their hand on their thigh and provided perceptual judgments about the appropriate aiming direction to reach a specific visual target by verbally instructing the experimenter to rotate the orientation of a straight line originating on the start circle (Heuer and Hegele 2008). They were instructed that the orientation of the line should point in the aiming direction of their hand movement, which would be required to move the cursor from the start to the respective target location.

With the start of rotation practice, two oppositely signed cursor rotations were introduced and participants trained to counteract these rotations in alternating blocks of 8 trials for a total of 144 trials. Before this practice phase, participants were instructed that the mapping of hand to cursor movement would be changed, that the change would be tied to the visual workspace, and that its presence would be signaled by a red (instead of the already encountered green) start circle. No further information about the nature of the change was provided.
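The blocked alternation of visual workspaces described above can be illustrated with a minimal sketch. The function name `workspace_schedule` and the choice of starting with the left workspace are assumptions made for illustration (the starting workspace was counterbalanced across groups); this is not the authors' experiment code, which was written in MATLAB.

```python
def workspace_schedule(n_trials, block_len, first="left"):
    """Return the visual workspace ('left' or 'right') for each trial.

    The workspace switches every `block_len` trials, starting with `first`.
    """
    other = "right" if first == "left" else "left"
    return [first if (t // block_len) % 2 == 0 else other
            for t in range(n_trials)]


# Familiarization: 48 trials, alternating every 4 trials.
familiarization = workspace_schedule(48, 4)

# Rotation practice: 144 trials, alternating every 8 trials.
rotation_practice = workspace_schedule(144, 8)
```

Under this scheme, each workspace (and thus each rotation direction) receives the same number of practice trials.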

Posttests were arranged like pretests, with a few exceptions: We now repeated the movement tests twice, with the first repetition testing for generalization of implicit aftereffects in the absence of strategies. This was done by instructing participants before the test session that the cursor rotation would be removed and that this would be signaled by a green start circle. The second repetition then tested for generalization of total learning, which contains explicit and implicit components, by instructing participants that the cursor rotation was present again (as indicated by the red start circle) and they should move accordingly.

We conducted posttests for explicit judgments identically to the pretests for explicit judgments, with the exception that a red start circle (transformations present) was presented. If participants judged the rotation to be zero at the first explicit posttest trial, we repeated the instructions to them, emphasizing that they should consider the red start circle in their judgment, and asked whether they wanted to reconsider their explicit judgment. This reminder was provided only once; thereafter, explicit judgments were recorded as given without further questioning.

For counterbalancing, each experiment had two groups that differed in whether they began the experiment with the left or right visual workspace.

Experimental Protocol

Experiment 1.

The goal of experiment 1 was to examine the pattern of generalization to determine how explicit and implicit processes enable dual adaptation. Participants practiced reaching movements to a single target direction at 90° (with 0° corresponding to movements to the right). Cursor feedback was rotated by 45° around the start location with a clockwise (CW) rotation in the left and a counterclockwise (CCW) rotation in the right visual workspace (Fig. 2A). Movements thus had a common visual target direction, but the aiming strategies were separate. Each movement generalization test block contained two sets of trials to nine equally spaced generalization targets from 0° to 180°. Pretests thus contained 86 trials, including 36 movement test trials, 18 explicit test trials, and a total of 32 movement practice trials. Similarly, posttests contained 138 trials in total.
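To make the sign conventions concrete, the following sketch rotates a hand position around the start location and computes the hand direction that would fully compensate the rotation. It assumes angles in degrees with CCW positive (so the CW rotation in the left workspace is written as −45°); the function and variable names are hypothetical and not taken from the original software.

```python
import math

# Cursor rotation per visual workspace in experiment 1 (CCW positive, CW negative).
ROTATION_DEG = {"left": -45.0, "right": 45.0}


def rotate_about_start(hand_xy, start_xy, angle_deg):
    """Rotate the hand position about the start by angle_deg (CCW positive)."""
    a = math.radians(angle_deg)
    dx, dy = hand_xy[0] - start_xy[0], hand_xy[1] - start_xy[1]
    return (start_xy[0] + dx * math.cos(a) - dy * math.sin(a),
            start_xy[1] + dx * math.sin(a) + dy * math.cos(a))


def direction_deg(xy, start_xy):
    """Direction of a point relative to the start, in degrees (0 = rightward)."""
    return math.degrees(math.atan2(xy[1] - start_xy[1], xy[0] - start_xy[0]))


def full_compensation_aim(target_deg, workspace):
    """Hand direction that brings the rotated cursor onto the target."""
    return target_deg - ROTATION_DEG[workspace]


start = (0.0, 0.0)
aim_left = full_compensation_aim(90.0, "left")    # hand aims CCW of the target
aim_right = full_compensation_aim(90.0, "right")  # hand aims CW of the target
hand = (80.0 * math.cos(math.radians(aim_left)),
        80.0 * math.sin(math.radians(aim_left)))
cursor = rotate_about_start(hand, start, ROTATION_DEG["left"])
```

With these conventions, full compensation corresponds to a +45° change in hand direction in the left workspace and a −45° change in the right workspace, matching the signs used when reporting results.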

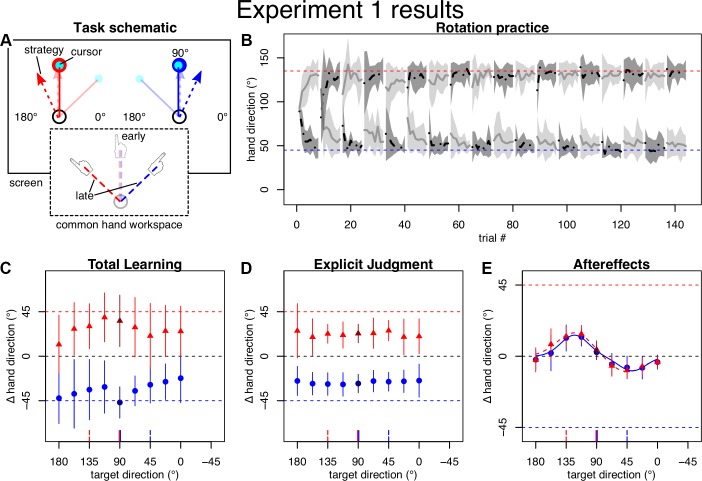

Fig. 2.

Task and results of experiment 1. All angles are in degrees, with 0° falling on the x-axis and positive direction counterclockwise. Error regions and bars represent SDs. A: schematic drawing of the practice targets and approximate predictions for cursor and strategy directions (top) and movement directions in the common hand workspace (bottom) for early (transparent) and late (solid) practice, for left (red) and right (blue) visual workspace. B: mean hand directions (not baseline corrected) during practice plotted separately by the groups starting with either with left (gray) or right (black) visual workspace. Horizontal dashed lines indicate ideal compensation of the cursor rotation. On average, participants compensated the rotation well within the first few blocks. C–E: baseline-corrected average hand directions for left (red) and right (blue) visual workspace on generalization posttests. Darker color indicates the practiced target direction. Horizontal dashed lines indicate full compensation of the cursor rotation for left (red) and right (blue) visual workspace, respectively. Vertical red and blue lines at x-axis indicate direction of target (solid) and full compensation (dashed). C: participants’ total learning approached full compensation, was specific to the visual workspace, and generalized broadly across target directions. D: when tested separately, explicit knowledge reflected this cue-dependent, broadly generalizing learning. E: aftereffects, on the other hand, appeared independent of the visual workspace cue and exhibited a generalization pattern that was well fit by a sum of 2 Gaussians (solid blue and dashed red line).

Experiment 2.

The goal of experiment 2 was to ensure that our findings from experiment 1 were not solely attributable to biomechanical or visual biases independent of learning (Ghilardi et al. 1995; Morehead and Ivry 2015). To test this possibility, the practice and generalization targets were moved by 45° CW (i.e., the practice target was at 45° and generalization targets spanned −45° to 135°; Fig. 3A). We predicted that if the generalization pattern was solely due to potential biases, then it would be unchanged. However, if the apparent generalization function was the result of learning, then it should be shifted by −45° (i.e., 45° CW) on the generalization direction axis. Apart from these changes, experiment 2 was like experiment 1, except that we increased the number of consecutive movement test sets for each visual workspace and test condition from two to three, thus increasing the number of pretest trials to 122 and posttest trials to 174.

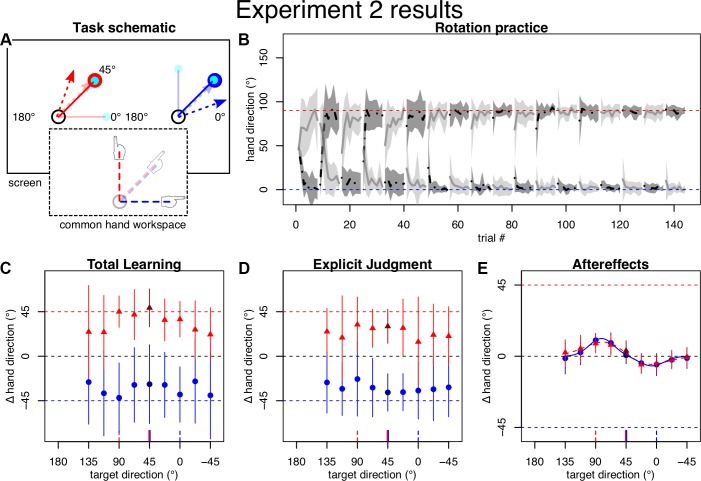

Fig. 3.

Task and results of experiment 2. A: practice direction was rotated by −45° relative to experiment 1. B–D: participants quickly learned to compensate the rotation and displayed appropriate total learning and explicit knowledge, as in experiment 1. E: generalization of aftereffects appeared shifted with the practice location, indicating that the bimodal pattern is predominantly an effect of learning, not biases.

Experiment 3.

To further contrast plan-based and target-based generalization, we designed a paradigm with separate visual target locations and cursor rotations, which were arranged in such a way that the resulting compensation strategy for each target should approximately point at the respective other target when projected to the common physical workspace. In this way, plan- and target-based generalization predict opposite generalization patterns. We therefore offset targets by 22.5° outward from the center (i.e., to 112.5° in the left and 67.5° in the right workspace). For rotations, we chose 60° CCW for the left and CW for the right workspace (Fig. 4A). Since implicit learning asymptotes at ~10–15° regardless of rotation magnitude (Bond and Taylor 2015; Morehead et al. 2017), we assumed that strategy magnitude should asymptote around the 45° intertarget distance, as intended. Otherwise, experiment 3 was identical to experiment 2.
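The intended geometry can be checked with a short calculation. As a simplifying assumption, take implicit adaptation to contribute about 15° (the upper end of the cited range) and explicit and implicit components to sum to full compensation of the 60° rotation, so that the explicit strategy is about 45°. The strategic aim directions in the common hand workspace are then

$$
\begin{aligned}
\text{left workspace (target } 112.5^\circ,\ 60^\circ \text{ CCW rotation):}\quad & 112.5^\circ - (60^\circ - 15^\circ) = 67.5^\circ \\
\text{right workspace (target } 67.5^\circ,\ 60^\circ \text{ CW rotation):}\quad & 67.5^\circ + (60^\circ - 15^\circ) = 112.5^\circ
\end{aligned}
$$

so that each strategy points approximately at the target of the other workspace. With a 10° implicit contribution, the aims would instead fall at about 62.5° and 117.5°, still close to the respective other target.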

Fig. 4.

Task and results of experiment 3. A: visual target directions were separated by 45° and the cursor rotated outward so that strategies should cross the midline and point approximately at the other target. The multiple dashed arrows emphasize strategies changing over the course of learning (compared with experiment 4). B: practice performance was more variable compared with experiment 1 (A). C and D: total learning and explicit judgments flipped signs in line with the reversed cursor rotations cued by the separate visual workspaces. They also appeared more variable but resembled experiment 1 when 5 outliers were removed (not shown). E: aftereffects appeared dominated by interference, which did not change without the 5 outliers (not shown). Note that the lines indicating full compensation are outside the y-axis limits.

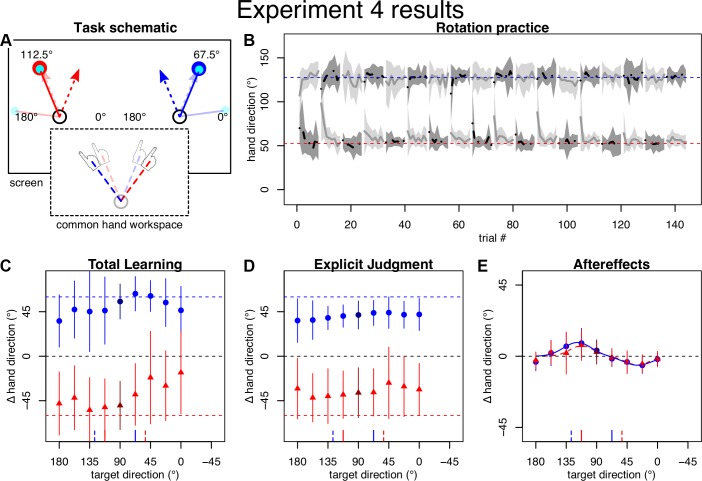

Experiment 4.

This experiment was intended to facilitate correct aiming strategies by verbally instructing participants, before practice of the rotations, about how to compensate the cursor rotation. Participants were informed that they would have to aim roughly toward 1 o’clock in the left workspace and 11 o’clock in the right workspace to hit the respective practice targets. They were also encouraged to fine-tune those strategies. We hypothesized that this instruction should strengthen the contrast we originally hypothesized in experiment 3 (Fig. 4A). To ensure that participants applied nonoverlapping strategies throughout practice, we asked participants after the experiment where they aimed during early, middle, and late practice in the left and right workspaces, respectively. On the basis of these postexperiment reports, we excluded seven participants who reported not using the clock analogy or aiming less than half an hour in the correct direction away from 12 o’clock for any of those time points (Table 1).

Data Analysis

Data were analyzed in MATLAB (RRID:SCR_001622), R (R Project for Statistical Computing, RRID:SCR_001905), and JASP (JASP, RRID:SCR_015823). Position data were low-pass filtered with MATLAB’s “filtfilt” command set to a fourth-order Butterworth filter with 10-Hz cutoff frequency. We calculated x- and y-velocity separately with a two-point central difference method and derived tangential velocity from the two components. Movement start was determined as the first frame where participants had left the start circle and tangential velocity exceeded 30 mm/s for at least three consecutive frames. For each trial, we extracted the angular end-point direction as the angle between the vector from start to target and the vector between the start and the position where the hand passed the target amplitude. We excluded trials in which no movement start could be detected or where participants failed to reach the target amplitude (see Table 1).
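The kinematic steps described above can be sketched as follows. This is an illustrative Python version under several assumptions, not the authors' MATLAB code: positions are taken to be already filtered and sampled at 100 Hz, the start circle radius is 4 mm (half the 8-mm diameter), edge velocities are padded with their neighbors, the three-frame criterion is read as "the candidate frame and the next two frames exceed threshold," and the end point is the first sample at or beyond 80 mm without interpolation. All names are hypothetical.

```python
import math

SAMPLE_RATE_HZ = 100.0       # tracker sampling rate
START_RADIUS_MM = 4.0        # assumed: half of the 8-mm start circle diameter
SPEED_THRESHOLD_MM_S = 30.0  # tangential velocity criterion
MIN_FRAMES = 3               # consecutive frames above threshold
TARGET_AMPLITUDE_MM = 80.0   # radial distance of the target


def tangential_speed(xs, ys, rate_hz=SAMPLE_RATE_HZ):
    """Two-point central difference per axis, combined into speed (mm/s)."""
    n = len(xs)
    speed = [0.0] * n
    for i in range(1, n - 1):
        vx = (xs[i + 1] - xs[i - 1]) * rate_hz / 2.0
        vy = (ys[i + 1] - ys[i - 1]) * rate_hz / 2.0
        speed[i] = math.hypot(vx, vy)
    if n > 2:
        speed[0], speed[-1] = speed[1], speed[-2]  # assumed edge padding
    return speed


def movement_start(xs, ys, start_xy):
    """Index of the first frame outside the start circle at which speed stays
    above threshold for MIN_FRAMES consecutive frames, or None."""
    speed = tangential_speed(xs, ys)
    for i in range(len(xs) - MIN_FRAMES + 1):
        outside = math.hypot(xs[i] - start_xy[0],
                             ys[i] - start_xy[1]) > START_RADIUS_MM
        fast = all(s > SPEED_THRESHOLD_MM_S for s in speed[i:i + MIN_FRAMES])
        if outside and fast:
            return i
    return None


def endpoint_angle(xs, ys, start_xy, target_xy):
    """Signed angle (deg, CCW positive) between start->target and start->hand
    at the first sample that reaches the target amplitude, or None."""
    tx, ty = target_xy[0] - start_xy[0], target_xy[1] - start_xy[1]
    for x, y in zip(xs, ys):
        hx, hy = x - start_xy[0], y - start_xy[1]
        if math.hypot(hx, hy) >= TARGET_AMPLITUDE_MM:
            return math.degrees(math.atan2(tx * hy - ty * hx,
                                           tx * hx + ty * hy))
    return None
```

A trial for which either function returns `None` would correspond to the “movement” exclusions listed in Table 1.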

We summarized pre- and posttests separately for each visual workspace, target direction, type of posttest, and participant by taking the median over the two (experiment 1) or three (experiments 2–4) repetitions of each test, respectively (where the explicit judgment tests always had only 1 repetition). From each of the posttest median values, we then subtracted its corresponding pretest median value on an individual participant level to correct for any potential kinematic biases. For further analyses, including model fits (more details below), we used the mean across participants separately for each visual workspace location, target direction, and test.
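The summary and baseline-correction steps can be illustrated with a small sketch for a single participant, workspace, target direction, and test type. The numbers are invented for illustration and the function names are hypothetical.

```python
from statistics import mean, median


def baseline_corrected(post_reps, pre_reps):
    """Median over posttest repetitions minus median over pretest repetitions."""
    return median(post_reps) - median(pre_reps)


def group_mean(per_participant_values):
    """Mean of the baseline-corrected values across participants."""
    return mean(per_participant_values)


# Invented hand directions (deg) for three repetitions of one test direction.
post = [12.0, 15.0, 30.0]
pre = [1.0, 2.0, 3.0]
corrected = baseline_corrected(post, pre)  # median(post) - median(pre)
```

Using the median within participants limits the influence of a single deviant repetition (here, the 30° trial) on the summary value.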

Our main variables of interest were generalization patterns of aftereffects, and we limited our analysis of explicit judgments and total learning to descriptive reporting. In our analysis of implicit learning, we used two candidate functions to represent our hypotheses for the shape of generalization. The first candidate was a single Gaussian:

$$y = a \, e^{-\frac{(x - b)^2}{2c^2}}$$

where y is the aftereffect at test direction x and the three free parameters are the gain a, the mean b, and the standard deviation c. We chose a Gaussian as approximation because it closely reflects the observed generalization function in visuomotor rotations (Krakauer et al. 2000) and has been related to underlying neural tuning functions (Tanaka et al. 2009; Thoroughman and Shadmehr 2000).

The second candidate was the sum of two Gaussians, henceforth referred to as “bimodal Gaussian”:

$$y = a_1 \, e^{-\frac{(x - b_1)^2}{2c^2}} + a_2 \, e^{-\frac{(x - b_2)^2}{2c^2}}$$

for which we assumed separate amplitudes a1 and a2 and means b1 and b2 but the same standard deviation c for the two modes.
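The two candidate functions can be written down directly. The sketch below assumes the conventional unnormalized Gaussian form with gain, mean, and standard deviation as described in the text; it is an illustration rather than the authors' implementation. The example parameter values are the left-workspace estimates for experiment 1 reported in RESULTS.

```python
import math


def single_gaussian(x, a, b, c):
    """Aftereffect at test direction x for gain a, mean b, and SD c (deg)."""
    return a * math.exp(-((x - b) ** 2) / (2.0 * c ** 2))


def bimodal_gaussian(x, a1, b1, a2, b2, c):
    """Sum of two Gaussians with separate gains and means but a shared SD."""
    return single_gaussian(x, a1, b1, c) + single_gaussian(x, a2, b2, c)


# Nine generalization directions of experiment 1 (0 deg to 180 deg).
directions = [22.5 * i for i in range(9)]

# Reported experiment 1, left-workspace estimates: a1, b1, a2, b2, c.
params = (14.9, 122.5, -9.9, 45.7, 36.9)
predicted = [bimodal_gaussian(x, *params) for x in directions]
```

Because the two modes have opposite signs and overlap around the practiced 90° direction, the predicted aftereffect there is smaller than at either peak.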

We reasoned that successful learning of separate visuomotor maps by visual workspace cues should result in separate generalization curves for the cue conditions, each of which should resemble a single Gaussian in a direction appropriate for counteracting the cued rotation. It should be noted that we did not expect such an outcome based on previous results (Hegele and Heuer 2010). If, on the other hand, the cues did not establish separate visuomotor maps, we predicted either of two patterns for the resulting, common generalization curves. Depending on whether the centers of local generalization were overlapping or separate, the pattern should be dominated by interference, which could be fit by either of the functions but with small amplitude parameters, or by a bimodal generalization pattern with opposing peaks whose centers and amplitudes would be in line with compensating for opposing cursor rotations. Which scenario would be the case depends on whether generalization is target based (Woolley et al. 2011) or plan based (Day et al. 2016; McDougle et al. 2017) as well as on the specific arrangements of plan and strategic solutions in the different experiments. We could further have included a single Gaussian with an offset parameter in our model comparison but decided against this option as our focus was to distinguish between types of dual adaptation rather than to infer its exact shape.

To test our hypotheses, we fit the two candidate models to the nine across-participant mean data points representing aftereffects to the nine generalization directions, separately for each visual workspace, using MATLAB’s “fmincon” to maximize the joint likelihood of the residuals. For this, we assumed independent, Gaussian likelihood functions centered on the predicted curve, whose variance we estimated by the mean of squared residuals. As this fitting procedure tended to run into local minima, we repeated each fit 100 times from different starting values selected uniformly from our constraint intervals (constraints were −180° to 180° on a; 0° to 180° on b parameters, or −45° to 135° for experiment 2; and 0° to 180° on c) and used only the fit with the highest joint likelihood.
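As a worked step under the stated assumptions (independent Gaussian errors with variance estimated by the mean of squared residuals), the joint log-likelihood takes a simple closed form. Writing $r_i = y_i - \hat{y}_i$ for the residual at the $i$th of $n$ generalization directions (notation introduced here for illustration) and $\hat{\sigma}^2 = \frac{1}{n}\sum_{i=1}^{n} r_i^2$:

$$
\ln(\mathit{lik}) = \sum_{i=1}^{n} \ln\!\left[\frac{1}{\sqrt{2\pi\hat{\sigma}^2}}\, e^{-\frac{r_i^2}{2\hat{\sigma}^2}}\right]
= -\frac{n}{2}\ln\!\left(2\pi\hat{\sigma}^2\right) - \frac{1}{2\hat{\sigma}^2}\sum_{i=1}^{n} r_i^2
= -\frac{n}{2}\left[\ln\!\left(2\pi\hat{\sigma}^2\right) + 1\right]
$$

Under these assumptions, the likelihood depends on the parameters only through $\hat{\sigma}^2$, so maximizing it is equivalent to minimizing the sum of squared residuals.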

To select the best model, we calculated the Bayesian information criterion (BIC) as

$$\mathrm{BIC} = \ln(n) \cdot k - 2 \cdot \ln(\mathit{lik})$$

where n is the number of data points, k is the number of free parameters of the model, and lik is the joint likelihood of the data under the best fit parameters.
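The model comparison can be sketched numerically as below. The sketch assumes the standard BIC definition and the Gaussian likelihood with variance set to the mean of squared residuals; the parameter counts of 3 (single Gaussian) and 5 (bimodal Gaussian) follow the model descriptions, whereas the residuals are invented for illustration and whether the authors counted the variance as an additional parameter is not stated.

```python
import math


def gaussian_log_likelihood(residuals):
    """Joint log-likelihood of residuals under independent zero-mean Gaussians
    whose variance is the mean of squared residuals (must be nonzero)."""
    n = len(residuals)
    variance = sum(r * r for r in residuals) / n
    return -0.5 * n * (math.log(2.0 * math.pi * variance) + 1.0)


def bic(log_lik, n_points, n_params):
    """Bayesian information criterion; lower values indicate a preferred model."""
    return math.log(n_points) * n_params - 2.0 * log_lik


# Invented residuals (deg) of each model at the nine generalization directions.
res_single = [4.0, -5.0, 6.0, -3.0, 4.5, -6.0, 5.0, -4.0, 3.5]
res_bimodal = [1.0, -0.5, 0.8, -1.2, 0.6, -0.9, 1.1, -0.7, 0.4]

bic_single = bic(gaussian_log_likelihood(res_single), 9, 3)
bic_bimodal = bic(gaussian_log_likelihood(res_bimodal), 9, 5)
delta_bic = bic_single - bic_bimodal  # positive values favor the bimodal model
```

With nine data points, the two additional parameters of the bimodal Gaussian cost 2·ln(9) ≈ 4.4 BIC units, which the improvement in fit has to outweigh.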

To compare model parameters, we created 10,000 bootstrap samples by selecting N out of our N single participant data sets randomly with replacement and taking the mean across participants for each selection. We then fit our candidate models to each of these means by the method described above, except that we avoided restarting from different values and used the best fit values from the original data set as starting values instead. Because the bimodal Gaussian has two identical equivalents for each solution, we sorted the resulting parameters so that b1 was always larger than b2. This procedure gave us a distribution for each parameter from which we calculated two-sided 95% confidence intervals by taking the 2.5th and 97.5th percentile values. We considered parameters significantly different from a hypothesized true mean if the latter was outside their 95% confidence interval. Similarly, we considered differences between two parameters significant if the 95% confidence interval of their differences within the bootstrap repeats did not include 0. Additionally, we used t-tests to compare aftereffect magnitudes (of the raw, nonbootstrapped data) for specific generalization directions against 0 or between groups. To protect ourselves from interpreting differences between experiments purely based on the absence of an effect in one and the presence in the other (Nieuwenhuis et al. 2011), we further performed an ANOVA on aftereffect posttests with the between-participant factor of experiment and the within-participant factors of visual workspace and target direction, where we added 45° to the target directions of experiment 2.
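The participant-level bootstrap can be sketched as follows. For brevity, the resampled statistic here is a plain mean of one aftereffect value per participant; in the analysis described above, the statistic would instead be a model fit to the across-participant means. The data, the helper names, and the linear-interpolation percentile are assumptions for illustration.

```python
import random
from statistics import mean


def percentile(sorted_vals, p):
    """Linear-interpolation percentile (p in 0..100) of pre-sorted values."""
    pos = (len(sorted_vals) - 1) * p / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def bootstrap_ci(participants, statistic, n_boot=10000, seed=0):
    """Two-sided 95% percentile interval of `statistic` over bootstrap samples
    that draw N of N participants with replacement."""
    rng = random.Random(seed)
    n = len(participants)
    estimates = sorted(
        statistic([participants[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_boot)
    )
    return percentile(estimates, 2.5), percentile(estimates, 97.5)


# Invented aftereffects (deg) at one test direction, one value per participant.
aftereffects = [2.0, 5.5, 3.0, 7.0, 4.0, 1.5, 6.0, 3.5, 4.5, 5.0]
ci_low, ci_high = bootstrap_ci(aftereffects, mean, n_boot=2000)
differs_from_zero = not (ci_low <= 0.0 <= ci_high)
```

The same interval logic applies to differences between two parameters: compute the difference within each bootstrap repeat and check whether the resulting interval includes 0.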

RESULTS

We report angles in degrees, with 0° corresponding to the positive x-axis and higher angles being CCW with respect to lower ones. Values are reported as means with standard deviation (SD) or with 95% confidence interval.

Experiment 1

Participants appeared overall able to compensate for the two opposing rotations after a few blocks of practice (Fig. 2B). Posttests for total learning also indicated that participants learned to compensate for the opposing rotations almost completely and specifically to the visual workspace cue (Fig. 2C): mean total learning toward the practiced target location fell somewhat short of the full 45° compensation in the left workspace [36° (SD 26)] and slightly exceeded the full −45° compensation in the right workspace [−47° (SD 16)]. Total learning generalized broadly, appearing relatively flat across generalization target directions. Explicit learning was also specific to the workspace, with mean explicit judgments at the practice target amounting to 23° (SD 9) relative to 45° full compensation for the left workspace and −28° (SD 9) relative to −45° full compensation for the right workspace, and tended to display broad generalization (Fig. 2D).

For aftereffects (Fig. 2E), target-based generalization predicted separate, single Gaussians with peak directions reflecting learning of the cued workspaces if the visual workspace cue enabled the formation of separate visuomotor maps; if the cues failed to enable global dual adaptation, then no clear peaks would be observed. Plan-based generalization, on the other hand, predicted a generalization function with two opposite peaks corresponding to participants’ strategic aims.

Model comparison preferred the bimodal Gaussian for characterizing the data, as indicated by differences in BIC >10 (∆BIC: 16 for left, 14 for right workspace cue) relative to the single Gaussian, which is considered “strong” evidence against the model that has the higher BIC (Kass and Raftery 1995). The amplitude parameters had opposing signs, and their confidence intervals did not include 0 (left: a1: 14.9° [12.8°; 17.4°], a2: −9.9° [−11.9°; −8.5°]; right: a1: 13.3° [11.2°; 46.8°], a2: −9.2° [−22.0°; −6.6°]). The corresponding means were located roughly where we would have expected aiming strategies to lie (left: b1: 122.5° [117.1°; 127.9°], b2: 45.7° [38.8°; 55.6°]; right: b1: 122.6° [117.0°; 126.3°], b2: 37.1° [25.7°; 53.4°]; c: left: 36.9° [31.1°; 44.3°]; right: 29.1° [15.9°; 39.3°]), although individual variability and the lack of separate time series for implicit and explicit learning confined us to qualitative analyses in this respect. Mean aftereffects at the practiced target were small, albeit significant [left: 4° (SD 6), P = 0.002, right: 3° (SD 5), P = 0.02], indicating that interference dominated here. This finding argues strongly against target-based generalization.
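To make the model comparison concrete, the following sketch writes out one plausible form of the two candidate functions and of the BIC difference. It assumes that the bimodal model is the sum of two Gaussians sharing one standard deviation c (consistent with the five reported parameters) and that BIC is computed from the residual sum of squares under Gaussian errors. Both are assumptions made for illustration, and the exact definitions are those given in the methods.

```python
import math

def gaussian(x, a, b, c):
    """Single Gaussian: amplitude a, center b, standard deviation c (degrees)."""
    return a * math.exp(-((x - b) ** 2) / (2.0 * c ** 2))

def bimodal_gaussian(x, a1, b1, a2, b2, c):
    """Sum of two Gaussians with a shared standard deviation (assumed form)."""
    return gaussian(x, a1, b1, c) + gaussian(x, a2, b2, c)

def bic(residuals, n_params):
    """BIC from residuals, assuming independent Gaussian errors."""
    n = len(residuals)
    rss = sum(r * r for r in residuals)
    return n * math.log(rss / n) + n_params * math.log(n)

def delta_bic(residuals_single, residuals_bimodal):
    """Positive values favor the bimodal model (3 vs. 5 free parameters)."""
    return bic(residuals_single, 3) - bic(residuals_bimodal, 5)

# Best-fit values reported for the left workspace in experiment 1.
left_fit = dict(a1=14.9, b1=122.5, a2=-9.9, b2=45.7, c=36.9)
# Predicted aftereffect at a grid of probe directions (the grid is hypothetical).
curve = [(d, bimodal_gaussian(d, **left_fit)) for d in range(0, 181, 15)]
```

Because the two components have opposite signs, the predicted curve crosses zero between the two centers, which is where interference between the opposing rotations would be strongest under this description.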

Importantly, the curves for the left and right workspaces were almost indistinguishable (Fig. 2) and the confidence intervals for differences between left and right workspace parameters all included 0° (∆a1: [−33.2°; 4.6°]; ∆b1: [−6.6°; 7.7°]; ∆a2: [−3.5°; 10.8°]; ∆b2: [−6.1°; 22.8°]; ∆c: [−4.3°; 26.1°]). It therefore appears that visual workspaces did not cue separate implicit visuomotor maps.

Overall, the observed generalization curves are well in line with dual adaptation expressed locally around the movement plan or trajectory but do not support local generalization around the visual target or separate visuomotor maps established based on visual workspace cues.

Experiment 2

For experiment 2, we predicted a two-peaked generalization pattern of aftereffects similar to the one we observed in experiment 1 but shifted by −45°. That is, if the pattern in experiment 1 merely reflected biases rather than learning, we would predict it to remain exactly the same in experiment 2, whereas if it were a result of learning, we would predict it to be shifted by −45° on the x-axis, reflecting the −45° shift of the practice targets.

Practice and posttests again indicated that participants learned to compensate for the cursor rotation specific to the visual workspace (Fig. 3, B and C). Total learning tested at the practice location was 49° (SD 19) in the left workspace and thus somewhat exceeded full compensation of the −45° cursor rotation. In the right visual workspace, it fell somewhat short of fully compensating the +45° cursor rotation, with average compensation at the practiced target amounting to −28° (SD 40). Explicit judgments were on average 30° (SD 17) relative to 45° full compensation in the left workspace and −37° (SD 19) relative to −45° full compensation in the right workspace (Fig. 3D).

Model comparison favored the bimodal Gaussian to characterize the generalization pattern of aftereffects (∆BIC: 17 for left, 19 for right workspace cue). Importantly, the generalization curves indeed appeared shifted on the x-axis, with complete interference occurring close to the practice direction (Fig. 3E). For the right workspace, this was reflected in the bootstrapped distributions of differences between experiment 1 and experiment 2 mean parameters including −45° (∆b1: [−66.6°; −34.3°], ∆b2: [−48.9°; 5.7°]) and the difference between amplitudes including 0 (∆a1: [−12.4°; 61.4°], ∆a2: [−45.4°; 9.9°]). For experiment 2 the right workspace parameters and confidence intervals were a1: 11.2° [9.4°; 75.6°]; b1: 81.3° [55.6°; 85.4°]; a2: −6.1° [−55.1°; −3.6°]; b2: 6.9° [−3.9°; 44.7°]; c: 25.8° [7.5°; 49.9°]. For the left visual workspace, the best fit was achieved by a solution containing two relatively close peaks with large amplitudes and standard deviations: a1: 162.9° [84.7°; 163.8°]; b1: 49.9° [42.9°; 64.3°]; a2: −159.8° [−161.0°; −83.2°]; b2: 47.4° [36.0°; 57.5°]; c: 49.9° [37.5°; 63.5°]. This fit produces the visual pattern mainly by interference. Although this solution was thus not easily comparable to the two largely separate peaks of experiment 1 and the right workspace, we note that both amplitudes were still significant in opposite directions and that the switch between peaks still appeared to be around the practiced target, with aftereffects at the practiced target amounting to 3° (SD 6) (P19 = 0.026) in the left and 1° (SD 5) (P19 = 0.59) in the right visual workspace.

Overall, we conclude from experiment 2 that whereas some additional biases may contribute to the results observed in experiment 1, the shape of the generalization curve first and foremost reflects learning.

Experiment 3

While experiments 1 and 2 already favored plan-based over target-based generalization, we designed experiment 3 to maximize the contrast between the two hypotheses (Fig. 4A). For this purpose, we had participants practice a 60° cursor rotation to a target at 112.5° in the left workspace and a −60° cursor rotation to a target at 67.5° in the right workspace. For this scenario, target-based generalization predicted a generalization function for aftereffects with a positive peak at 67.5° and a negative peak at 112.5°. Plan-based generalization, on the other hand, should create the opposite result, i.e., a negative peak close to the 67.5° target direction, reflecting compensation of the positive rotation experienced with the 112.5° target and an assumed positive compensation strategy, and a corresponding positive peak close to the 112.5° target direction.

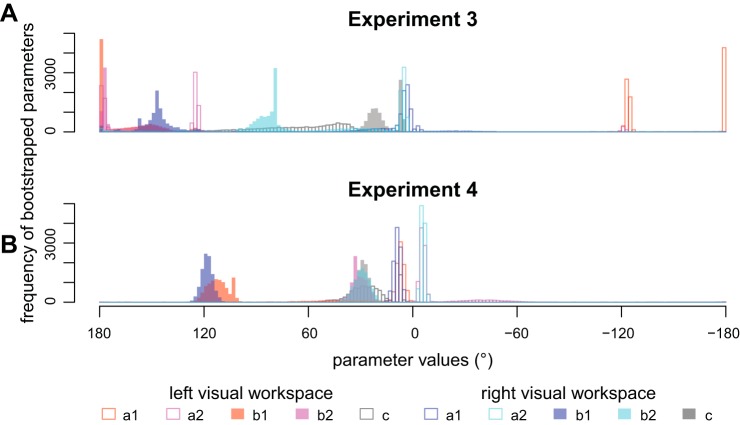

To our surprise, aftereffects no longer displayed a clear generalization pattern as in the previous experiments but a pattern that appeared dominated by complete interference across all directions between the opposing rotations (Fig. 4E). Accordingly, although mean aftereffects were still best described by bimodal Gaussians (∆BIC left: 5; right: 4), their peaks’ locations did not match either of the hypotheses and associated amplitudes were either positive and small (right workspace: a1: 3.1° [−29.1°; 38.4°]; b1: 147.3° [111.4°; 180.0°]; a2: 5.3° [3.3°; 175.0°]; b2: 82.6° [71.7°; 178.7°]; c: 19.5° [26.9°; 144.4°]) or excessively large (left workspace: a1: −125.7° [−180.0°; 124.9°]; b1: 179.5° [95.6°; 180.0°]; a2: −123.7° [−121.4°; 180.0°]; b2: 176.3° [93.7°; 178.7°]; c: 69.6° [26.9°; 144.4°]). (Note that these fits approached our bounds on a and b parameters and that the amplitude parameters differed significantly from 0 only for right a2.) Overall, the fit appeared unstable as shown by the histogram of bootstrapped parameter estimates (see Fig. 6A). The visual impression of aftereffects did not change even if we removed five participants (by visual selection) who appeared responsible for the less clearly separated patterns of explicit and total learning (Fig. 4, C and D; data with participants removed are not shown).

Fig. 6.

Histograms of the bootstrapped parameters for experiment 3 (A) and experiment 4 (B). The distributed and split peaks in A visualize the unstable fit of the bimodal Gaussian to the data of experiment 3. The more confined peaks in B show that the fit is more stable. The centers of generalization (b1, b2) prefer generalization centering on the movement plan rather than the visual target. a, Gain; b, mean; c, standard deviation.

How could this absence of a clear generalization pattern be explained? We hypothesized that the development of aiming strategies might not have been quick enough to allow local generalization to occur in sufficiently distinct directions, thereby creating a generalization pattern that was primarily governed by interference. An indication that this might be the case can be seen in Fig. 4B, where mean hand directions during practice initially fall short of the ideal hand directions, which indicates poorly developed strategies at this time. If participants made such strategy errors, then this would cause counteractive learning under the plan-based generalization hypothesis and therefore could explain interference in posttests. To test whether this was the reason for interference in experiment 3 aftereffects, we conducted experiment 4, where we provided participants with ideal aiming strategies at the onset of rotation practice. We hypothesized that more appropriate strategy application should alleviate interference and restore the predicted, plan-based generalization pattern if our reasoning was correct.

Experiment 4

Predictions for experiment 4 were the same as for experiment 3, but here we predicted more local, direction-specific implicit adaptation because participants should have had a more consistent strategy. Indeed, we observed more consistent performance during initial practice and the restitution of flat overall learning and explicit judgment patterns, indicating that participants were able to implement the provided strategy (Fig. 5, B–D). Consistent with our prediction, the resulting generalization pattern of implicit learning once again had opposite peaks (∆BIC left: 9; right: 9; Fig. 5E). Parameter histograms display more confined peaks compared with experiment 3 (Fig. 6), suggesting that the bimodal Gaussian was more appropriate here. Importantly, the signs of the amplitude parameters were in line with the predictions under plan-based generalization and the confidence intervals on associated amplitude parameters did not include 0 (left: a1: 6.6° [2.8°; 59°], b1: 112.0° [84.1°; 122.7°], a2: −5.5° [−58.2°; −2.9°], b2: 29.7° [21.8°; 58.1°], c: 25.0° [7.0°; 47.2°]; right: a1: 8.7° [5.1°; 13.0°], b1: 118.4° [111.6°; 124.8°], a2: −5.7° [−8.3°; −3.5°], b2: 29.1° [20.5°; 39.9°], c: 29.3° [22.3°; 37.6°]). This result is in direct contrast to target-based generalization. The confidence intervals for differences between left and right parameters once again included 0 (∆a1: [−5.2°; 49.2°]; ∆b1: [−31.0°; 6.5°]; ∆a2: [−52.7°; 3.3°]; ∆b2: [−9.8°; 28.9°]; ∆c: [−24.8°; 18.1°]), suggesting no significant influence of the contextual visual workspace cue.

Fig. 5.

Task and results of experiment 4. A: same scenario as experiment 3, but we provided strategies in advance. B: practice performance appeared less variable. C and D: all participants displayed good total learning and explicit knowledge. E: the bimodal pattern of aftereffects was restored.

In comparison with experiment 3, providing an aiming strategy at the onset of practice alleviated interference in implicit learning, which we interpret to reflect better expression of local generalization due to more appropriate application of spatially separate explicit strategies.

Across-Experiment Comparison

To ensure that the differences we inferred from generalization patterns across experiments were statistically justified, we performed an ANOVA on aftereffect posttests with the factors experiment, workspace cue, and target direction. Greenhouse-Geisser-corrected P values indicated a significant main effect of target [F(3.6, 207.4) = 40.2, P < 0.001] but no other significant main effects [experiment: F(3, 57) = 0.19, P = 0.91; workspace: F(1, 57) = 1.5, P = 0.23]. No two-way interaction involving workspace was significant [workspace × experiment: F(3, 57) = 1.1, P = 0.38; workspace × target: F(6.3, 360.9) = 0.93, P = 0.48], but the interaction between experiment and target direction was [F(10.9, 207.4) = 4.5, P < 0.001]. The three-way interaction approached significance [F(19.0, 360.9) = 1.5, P = 0.075]. Although admittedly post hoc, these numbers overall support our interpretation of differences in generalization to different targets across experiments and further lend some support to the absence of a relevant influence of visual workspace cue on aftereffects.

DISCUSSION

Visuomotor dual adaptation has served as a model paradigm to understand how the brain associates contextual cues with separate memories and representations (Imamizu et al. 2003; Wolpert and Kawato 1998). To broaden our understanding of the underlying constituents of visuomotor dual adaptation, we investigated generalization and interference of learning when separate visual workspaces cued alternating, opposing visuomotor cursor rotations. By varying rotation size, arrangement of visual targets, and instructions, we show that implicit dual adaptation is expressed as a local generalization pattern in this case. In experiment 1, separate visual workspaces cued separate aiming strategies but did not establish separate implicit visuomotor maps. Instead, opposing rotations were realized by local changes to a single visuomotor map. In experiment 2, we changed the practice locations to confirm that this was not a result of visuomotor biases. Experiment 3 showed that overlapping strategies lead to interference, even with separate visual target locations, and experiment 4 showed that this interference could be alleviated by providing explicit aiming strategies to participants from the onset of practice.

All in all, our findings corroborate previous suggestions based on cerebellar imaging that separate memories for different contexts rely on cognitive components (Imamizu et al. 2003). The pattern of implicit dual adaptation we observed can be explained by generalization occurring locally around the (explicit) movement plan. Specifically, we observed peak learning at the approximate locations and in the directions predicted if we assume that learning generalizes locally around the aiming strategy, in line with recent findings (Day et al. 2016; McDougle et al. 2017). Furthermore, interference occurred in a scenario where it could be explained by generalization centering on the movement plan but not the visual target (experiment 3).

Within the framework entertained in the introduction, the observed results strongly suggest that the planned movement direction, but not the visual workspace, is a relevant dimension in the implicit processes’ state space. Conversely, the flat pattern of explicit generalization would indicate that visual workspace, but not direction (whether plan, movement, or target), was a relevant dimension in the explicit processes’ state space. Given the high flexibility of human cognition and explicit learning, however, we would not expect the latter to be a general characteristic of explicit learning. It seems more plausible that explicit learning can account for contextual cues in arbitrary dimensions, provided that learners become aware of the relevant contingencies between cues and transformations. For implicit memory, on the other hand, a state space of relatively fixed low dimensionality would fit well with its overall simplicity, which makes it less flexible (Bond and Taylor 2015; Mazzoni and Krakauer 2006) but robust to constraints on cognitive processing (Fernandez-Ruiz et al. 2011; Haith et al. 2015). Which other cues, besides the movement plan, belong to the implicit state space and whether more extensive practice can introduce new context dimensions to it, e.g., by associative learning as suggested previously (Howard et al. 2013), are interesting topics for future research. A practical implication of this view would be that “A-B-A” paradigms where participants learn first one (A), then another (B), and then the first transformation (A) again may be more suited to infer preexisting context dimensions, as they minimize contrastive exposure to new contingencies that could be learned associatively. Specific investigation of the latter, on the other hand, might benefit from exploiting known characteristics of associative learning.

Plan or Target Based?

Our findings contradict conclusions from an earlier study that inferred the visual target to be the relevant center of local, implicit generalization to different directions (Woolley et al. 2011). We explain this contradiction by the fact that this earlier study only compared the two alternative hypotheses that learning centers on the visual target or the executed movement but did not consider the possibility that it centers on the movement plan. When reinterpreted in light of this new hypothesis, all results in that study can potentially be explained by plan-based generalization with separate visual targets cuing separate aiming strategies. Specifically, participants in that study learned to compensate opposing cursor rotations when visual targets were separate but ideal physical solutions overlapped. Alternatively to local generalization centering on the visual target, this can be explained by different aiming strategies becoming associated with the separate targets, each of which is less than the optimal, full rotation (Bond and Taylor 2015) and therefore does not overlap with the strategy for the opposing cursor rotation (in contrast to the physical solutions). Similarly, interference scaling inversely with the separation of visual targets (Woolley et al. 2011) may also be explained by the degree of overlap between aiming strategies.

It is worth noting that the lack of dual adaptation in an earlier experiment by Woolley and colleagues (Woolley et al. 2007) may be attributable to the saliency of the visual cues. In that study, they found that practice to the same visual target did not enable dual learning when opposing rotations were cued by screen background colors, but the task relevance of these cues may not have been noticed by the participants. If the participants did not associate an aiming strategy with the cues, then it would have not allowed plan-based and directionally dependent implicit adaptation to develop, thus leading to no dual adaptation.

Plan or Movement Based?

Alternatively to plan-based generalization, our findings could be explained by learning generalizing around the movement path, as has been found for force field adaptation (Gonzalez Castro et al. 2011). These two options are difficult to tease apart with our current methodology because the strategic movement plan deviates from the visual target in the same direction as the movement trajectory, resulting in qualitatively similar predictions for the two hypotheses. However, a number of more recent studies have provided convincing evidence in favor of plan-based generalization over movement-based generalization in visuomotor rotation tasks (Day et al. 2016; McDougle et al. 2017). Furthermore, a recent study showed that when participants plan to move two cursors to two separate targets by a hand movement toward the center between them aftereffects occur locally around both targets, but interference dominates when they plan to move to the central target (Parvin et al. 2018). In force fields, three recent studies showed that interference is reduced when similar trajectories are associated with different movement plans while practicing opposing force fields (Hirashima and Nozaki 2012; Sheahan et al. 2016, 2018). Similarly, opposing force fields were learned with the same trajectory when participants intended to control different points on a virtual object (Heald et al. 2018). Finally, irrespective of our reinterpretation above, Woolley and colleagues’ (Woolley et al. 2011) results show that local dual adaptation with similar physical movements is possible, providing further evidence against movement-based generalization in visuomotor rotation tasks.

Rather than a fixed center of generalization, one might expect that the brain adaptively exploits the task structure by linking memory separation to those cues that are sufficiently distinct. Our data for implicit learning do not support this possibility, as otherwise we would have expected aftereffects in experiment 3 to be shaped by learning around the separate visual targets rather than interference. However, it is still possible that such a shift in cue relevance may occur under different circumstances (e.g., longer practice). More generally, we may ask if local learning of multiple transformations evolves according to an underlying model that is specifically adapted to the practice scenario or if it is merely the sum of single transformation learning. Previous studies have considered a model-based approach and varied practiced target directions to distinguish between these possibilities (Bedford 1989; Pearson et al. 2010; Woolley et al. 2011). We note that this approach becomes more difficult under plan-based generalization, since the centers of single adaptation can no longer be taken to be fixed but depend on flexible cognitive strategies, thus complicating quantitative inference. As noted above, we would expect explicit strategies to be in principle highly adaptable to even complex task structures under the right circumstances, although some default preferences may exist (Bedford 1989; Redding and Wallace 2006; van Dam and Ernst 2015), whereas implicit learning is likely more stereotypical.

Relation to Force Field Learning

With respect to the relevance of our findings to visuomotor transformation learning in general, we need to consider the possibility that dual adaptation in force fields may differ from that in cursor rotations. Presumably because of the less transparent nature of the transformation, aiming strategies are harder to conceptualize in force field learning and may play less of a role (McDougle et al. 2015). Furthermore, it is possible that the state space representing implicit internal models for force compensation incorporates more and different dimensions than a visuomotor map representing cursor rotations. For example, movement velocity is theoretically relevant for compensating velocity-dependent force fields but not for velocity-independent cursor rotations.

These differences may reconcile diverging interpretations for the role of visual workspace separation as a cue in force fields and cursor rotations (Hegele and Heuer 2010; Howard et al. 2013). In force fields, the visual workspace may be part of the state-space representation of implicit learning, thus enabling learners to acquire opposing transformations locally in this state space when visual workspace locations are separate (Howard et al. 2013). In cursor rotations on the other hand, the role of the visual workspace appears confined to being a contextual cue for explicit strategies (Hegele and Heuer 2010), as corroborated by our present findings.

Despite these differences, the recent studies showing that opposing force fields can be learned when different plans are associated with identical trajectories (Hirashima and Nozaki 2012; Sheahan et al. 2016, 2018) indicate that principles similar to those we found in this study may apply across kinematic and dynamic transformations. It should be noted that our present results extend these findings by showing that the plan does not need to be tied to a visual target, in line with learning from sensory prediction errors being independent of visual target presence (Lee et al. 2018). The extent to which plan-dependent force compensation can be characterized as explicit or implicit remains to be clarified.

Relation to Models of Dual Adaptation

A number of models have been proposed to formalize the underlying computational principles of dual adaptation to opposing sensorimotor transformations (Ghahramani and Wolpert 1997; Howard and Franklin 2015, 2016; Lee and Schweighofer 2009; Lonini et al. 2009; McDougle et al. 2017; van Dam and Ernst 2015; Wolpert and Kawato 1998). Many of these models share the common feature that a set of independent bases encodes a visuomotor map and that these bases learn from errors depending on how close the movement was to their preferred direction in some relevant cue space. Indeed, it has been suggested that the shape of the neural tuning functions that give rise to generalization across different target directions may underlie the pattern of interference observed in dual-adaptation paradigms (Howard and Franklin 2015, 2016; Sarwary et al. 2015). Applied to our results, these models can in principle explain the local, implicit generalization phenomena we observed via limited local generalization if we use the explicit movement plan as the relevant cue. This implies a serial arrangement where the motor plan feeds into the implicit adaptation model. A previous model comparison study concluded that distinct motor learning processes are arranged in parallel (Lee and Schweighofer 2009). However, that study arrived at this conclusion by sequential comparison of different models whose underlying assumptions considered neither locally limited generalization nor specific properties associated with explicit planning. Therefore, its conclusion may not apply when these are taken into account.
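As a toy illustration of this class of models (not an implementation of any of the cited ones), the sketch below lets a single map, encoded by Gaussian-tuned bases over direction, learn two opposing corrections. Each basis is updated in proportion to its activation at the aimed direction, so the aim acts as the relevant cue. All numbers (tuning width, learning rate, the ±15° drive, the aim directions) are hypothetical values chosen for illustration.

```python
import math

def tuning(theta, center, width):
    """Gaussian activation of one basis unit for direction theta (degrees)."""
    return math.exp(-((theta - center) ** 2) / (2.0 * width ** 2))

def simulate_map(aim_a, aim_b, n_trials=400, rate=0.2, width=25.0, drive=15.0):
    """Alternate two opposing transformations; return the learned map.

    Transformation A pulls the map toward +drive at aim_a, transformation B
    toward -drive at aim_b (normalized delta-rule update, an assumption).
    """
    centers = [float(c) for c in range(0, 181, 5)]
    weights = [0.0] * len(centers)

    def readout(theta):
        # Implicit correction expressed when planning a movement toward theta.
        return sum(w * tuning(theta, c, width) for w, c in zip(weights, centers))

    for trial in range(n_trials):
        aim, goal = (aim_a, drive) if trial % 2 == 0 else (aim_b, -drive)
        error = goal - readout(aim)
        acts = [tuning(aim, c, width) for c in centers]
        norm = sum(a * a for a in acts)
        for i, a in enumerate(acts):
            # Bases tuned close to the aimed direction learn the most.
            weights[i] += rate * error * a / norm
    return readout

# Spatially separate aims: two local peaks of opposite sign in one map.
separate = simulate_map(aim_a=120.0, aim_b=45.0)
# Identical aims: the opposing updates largely cancel (interference).
overlap = simulate_map(aim_a=90.0, aim_b=90.0)
```

In this sketch, separate aims yield opposite-signed local adaptation within a single map, whereas overlapping aims leave only a small residual, qualitatively resembling the contrast between experiments 4 and 3. The sketch makes no claim about which of the cited models, if any, best describes the data.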

Implications for Experiments and Real-World Tasks

Irrespective of underlying computations or neural processes, our results have important consequences for behavioral experiments and their interpretation with respect to real-world behavior. Thus our results show that the implicit mechanism that underlies aftereffects can learn opposing visuomotor transformations locally for specific parts of the workspace without forming separate visuomotor maps, provided that contextual cues allow separate aiming strategies to be associated with opposing transformations. This finding suggests that observing dual/opposing aftereffects following training at the same target locations or even with overlapping hand paths still does not imply the formation of separate implicit visuomotor maps. Instead, posttests may be probing local dents within a single visuomotor map if participants’ aims resemble those during practice. Alternatively, when participants are instructed to aim toward the visual target, aftereffects may be absent because the generalization function is probed at the point of maximal interference, as in our experiments 1 and 2. This phenomenon is also likely to explain the absence of aftereffects in a previous study of ours (Hegele and Heuer 2010). Overall, researchers need to take into account the possibility that flexible movement plans form a complex generalization landscape, particularly when learners are practicing multiple sensorimotor transformations.

With respect to the introductory example, we would expect the use of different tools to be realized by distinct motor memories comprising different visuomotor mappings, given that successful tool use does not appear to be constrained to a small range of directions, except by biomechanical constraints. Given our present results and the fact that most studies on dual adaptation do not differentiate whether learning observed by them is explicit or implicit and if it occurs locally or in separate visuomotor maps, we do not currently see compelling evidence that the mechanism that underlies implicit aftereffects in visuomotor dual adaptation is indeed relevant for learning to use different tools by a priori context inference. Further research is needed to identify cues that may indeed support separate, implicit visuomotor maps by this mechanism. Other than identifying “preexisting” cues, an interesting question is whether such cues to separate implicit learning may be learned by associations over a longer timescale, as suggested previously (Howard et al. 2013). Alternatively, participants could learn these skills by explicit strategies that become automatized into implicit tendencies for action selection (Morehead et al. 2015), in line with canonical theories of motor skill learning (Fitts and Posner 1967). The role of the process that produces aftereffects, on the other hand, may be limited to calibrating the system to changes that are more biologically common, such as muscular fatigue. Such a division of responsibilities would be reminiscent of the classical distinction between learning of intrinsic (body) vs. extrinsic (tool) transformations (Heuer 1983). Our results therefore highlight the importance of distinguishing between different concepts of dual adaptation, i.e., local shaping of a single vs. the formation of separate visuomotor maps.

GRANTS

This research was supported by a grant within the Priority Program SPP 1772 from the German Research Foundation (Deutsche Forschungsgemeinschaft, DFG), Grant He7105/1.1.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

R.S. and M.H. conceived and designed research; R.S. analyzed data; R.S., J.A.T., and M.H. interpreted results of experiments; R.S. prepared figures; R.S. drafted manuscript; R.S., J.A.T., and M.H. edited and revised manuscript; R.S., J.A.T., and M.H. approved final version of manuscript.

ACKNOWLEDGMENTS

We thank Lisa M. Langsdorf, Simon Koch, Samuel Poggemann, Vanessa Walter, and Simon Rosental for data collection. We further thank Eugene Poh and Sam McDougle for helpful discussions on data analysis.

REFERENCES

- Ayala MN, ’t Hart BM, Henriques DY. Concurrent adaptation to opposing visuomotor rotations by varying hand and body postures. Exp Brain Res 233: 3433–3445, 2015. doi: 10.1007/s00221-015-4411-9. [DOI] [PubMed] [Google Scholar]

- Bedford FL. Constraints on learning new mappings between perceptual dimensions. J Exp Psychol Hum Percept Perform 15: 232–248, 1989. doi: 10.1037/0096-1523.15.2.232. [DOI] [Google Scholar]

- Bock O, Worringham C, Thomas M. Concurrent adaptations of left and right arms to opposite visual distortions. Exp Brain Res 162: 513–519, 2005. doi: 10.1007/s00221-005-2222-0. [DOI] [PubMed] [Google Scholar]

- Bond KM, Taylor JA. Flexible explicit but rigid implicit learning in a visuomotor adaptation task. J Neurophysiol 113: 3836–3849, 2015. doi: 10.1152/jn.00009.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bond KM, Taylor JA. Structural learning in a visuomotor adaptation task is explicitly accessible. eNeuro 4: e0122, 2017. doi: 10.1523/ENEURO.0122-17.2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brainard DH. The Psychophysics Toolbox. Spat Vis 10: 433–436, 1997. doi: 10.1163/156856897X00357. [DOI] [PubMed] [Google Scholar]

- Cunningham HA. Aiming error under transformed spatial mappings suggests a structure for visual-motor maps. J Exp Psychol Hum Percept Perform 15: 493–506, 1989. doi: 10.1037/0096-1523.15.3.493. [DOI] [PubMed] [Google Scholar]

- Day KA, Roemmich RT, Taylor JA, Bastian AJ. Visuomotor learning generalizes around the intended movement. eNeuro 3: 1–12, 2016. doi: 10.1523/ENEURO.0005-16.2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Donchin O, Francis JT, Shadmehr R. Quantifying generalization from trial-by-trial behavior of adaptive systems that learn with basis functions: theory and experiments in human motor control. J Neurosci 23: 9032–9045, 2003. doi: 10.1523/JNEUROSCI.23-27-09032.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fernandez-Ruiz J, Wong W, Armstrong IT, Flanagan JR. Relation between reaction time and reach errors during visuomotor adaptation. Behav Brain Res 219: 8–14, 2011. doi: 10.1016/j.bbr.2010.11.060. [DOI] [PubMed] [Google Scholar]

- Fitts PM, Posner MI. Human Performance. Belmont, CA: Brooks/Cole, 1967. [Google Scholar]

- Galea JM, Miall RC. Concurrent adaptation to opposing visual displacements during an alternating movement. Exp Brain Res 175: 676–688, 2006. doi: 10.1007/s00221-006-0585-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ghahramani Z, Wolpert DM. Modular decomposition in visuomotor learning. Nature 386: 392–395, 1997. doi: 10.1038/386392a0. [DOI] [PubMed] [Google Scholar]

- Ghilardi MF, Gordon J, Ghez C. Learning a visuomotor transformation in a local area of work space produces directional biases in other areas. J Neurophysiol 73: 2535–2539, 1995. doi: 10.1152/jn.1995.73.6.2535.

- Gonzalez Castro LN, Monsen CB, Smith MA. The binding of learning to action in motor adaptation. PLoS Comput Biol 7: e1002052, 2011. doi: 10.1371/journal.pcbi.1002052.

- Haith AM, Huberdeau DM, Krakauer JW. The influence of movement preparation time on the expression of visuomotor learning and savings. J Neurosci 35: 5109–5117, 2015. doi: 10.1523/JNEUROSCI.3869-14.2015.

- Heald JB, Ingram JN, Flanagan JR, Wolpert DM. Multiple motor memories are learned to control different points on a tool. Nat Hum Behav 2: 300–311, 2018. doi: 10.1038/s41562-018-0324-5.

- Hegele M, Heuer H. Implicit and explicit components of dual adaptation to visuomotor rotations. Conscious Cogn 19: 906–917, 2010. doi: 10.1016/j.concog.2010.05.005.

- Heuer H. Bewegungslernen. Stuttgart: Kohlhammer, 1983.

- Heuer H, Hegele M. Adaptation to visuomotor rotations in younger and older adults. Psychol Aging 23: 190–202, 2008. doi: 10.1037/0882-7974.23.1.190.

- Heuer H, Hegele M. Generalization of implicit and explicit adjustments to visuomotor rotations across the workspace in younger and older adults. J Neurophysiol 106: 2078–2085, 2011. doi: 10.1152/jn.00043.2011.

- Hirashima M, Nozaki D. Distinct motor plans form and retrieve distinct motor memories for physically identical movements. Curr Biol 22: 432–436, 2012. doi: 10.1016/j.cub.2012.01.042.

- Howard IS, Franklin DW. Neural tuning functions underlie both generalization and interference. PLoS One 10: e0131268, 2015. doi: 10.1371/journal.pone.0131268.

- Howard IS, Franklin DW. Adaptive tuning functions arise from visual observation of past movement. Sci Rep 6: 28416, 2016. doi: 10.1038/srep28416.

- Howard IS, Ingram JN, Franklin DW, Wolpert DM. Gone in 0.6 seconds: the encoding of motor memories depends on recent sensorimotor states. J Neurosci 32: 12756–12768, 2012. doi: 10.1523/JNEUROSCI.5909-11.2012.

- Howard IS, Wolpert DM, Franklin DW. The effect of contextual cues on the encoding of motor memories. J Neurophysiol 109: 2632–2644, 2013. doi: 10.1152/jn.00773.2012.

- Huberdeau DM, Krakauer JW, Haith AM. Dual-process decomposition in human sensorimotor adaptation. Curr Opin Neurobiol 33: 71–77, 2015. doi: 10.1016/j.conb.2015.03.003.

- Imamizu H, Kuroda T, Miyauchi S, Yoshioka T, Kawato M. Modular organization of internal models of tools in the human cerebellum. Proc Natl Acad Sci USA 100: 5461–5466, 2003. doi: 10.1073/pnas.0835746100.

- Imamizu H, Sugimoto N, Osu R, Tsutsui K, Sugiyama K, Wada Y, Kawato M. Explicit contextual information selectively contributes to predictive switching of internal models. Exp Brain Res 181: 395–408, 2007. doi: 10.1007/s00221-007-0940-1.

- Kass RE, Raftery AE. Bayes factors. J Am Stat Assoc 90: 773–795, 1995. doi: 10.1080/01621459.1995.10476572.

- Krakauer JW, Pine ZM, Ghilardi MF, Ghez C. Learning of visuomotor transformations for vectorial planning of reaching trajectories. J Neurosci 20: 8916–8924, 2000. doi: 10.1523/JNEUROSCI.20-23-08916.2000.

- Lee JY, Schweighofer N. Dual adaptation supports a parallel architecture of motor memory. J Neurosci 29: 10396–10404, 2009. doi: 10.1523/JNEUROSCI.1294-09.2009.

- Lee K, Oh Y, Izawa J, Schweighofer N. Sensory prediction errors, not performance errors, update memories in visuomotor adaptation. Sci Rep 8: 16483, 2018. doi: 10.1038/s41598-018-34598-y.

- Lonini L, Dipietro L, Zollo L, Guglielmelli E, Krebs HI. An internal model for acquisition and retention of motor learning during arm reaching. Neural Comput 21: 2009–2027, 2009. doi: 10.1162/neco.2009.03-08-721.

- Mazzoni P, Krakauer JW. An implicit plan overrides an explicit strategy during visuomotor adaptation. J Neurosci 26: 3642–3645, 2006. doi: 10.1523/JNEUROSCI.5317-05.2006.

- McDougle SD, Bond KM, Taylor JA. Explicit and implicit processes constitute the fast and slow processes of sensorimotor learning. J Neurosci 35: 9568–9579, 2015. doi: 10.1523/JNEUROSCI.5061-14.2015.

- McDougle SD, Bond KM, Taylor JA. Implications of plan-based generalization in sensorimotor adaptation. J Neurophysiol 118: 383–393, 2017. doi: 10.1152/jn.00974.2016.

- McDougle SD, Ivry RB, Taylor JA. Taking aim at the cognitive side of learning in sensorimotor adaptation tasks. Trends Cogn Sci 20: 535–544, 2016. doi: 10.1016/j.tics.2016.05.002.

- Morehead JR, Ivry RB. Intrinsic biases systematically affect visuomotor adaptation experiments. Society for Neural Control of Movement, 2015.

- Morehead JR, Qasim SE, Crossley MJ, Ivry R. Savings upon re-aiming in visuomotor adaptation. J Neurosci 35: 14386–14396, 2015. doi: 10.1523/JNEUROSCI.1046-15.2015.

- Morehead JR, Taylor JA, Parvin DE, Ivry RB. Characteristics of implicit sensorimotor adaptation revealed by task-irrelevant clamped feedback. J Cogn Neurosci 29: 1061–1074, 2017. doi: 10.1162/jocn_a_01108.

- Nieuwenhuis S, Forstmann BU, Wagenmakers EJ. Erroneous analyses of interactions in neuroscience: a problem of significance. Nat Neurosci 14: 1105–1107, 2011. doi: 10.1038/nn.2886.

- Nozaki D, Yokoi A, Kimura T, Hirashima M, Orban de Xivry JJ. Tagging motor memories with transcranial direct current stimulation allows later artificially-controlled retrieval. eLife 5: e15378, 2016. doi: 10.7554/eLife.15378.

- Osu R, Hirai S, Yoshioka T, Kawato M. Random presentation enables subjects to adapt to two opposing forces on the hand. Nat Neurosci 7: 111–112, 2004. [Erratum in Nat Neurosci 7: 314, 2004.] doi: 10.1038/nn1184.

- Parvin DE, Morehead JR, Stover AR, Dang KV, Ivry RB. Task dependent modulation of implicit visuomotor adaptation (Abstract). Society for Neural Control of Movement, 2018.

- Pearson TS, Krakauer JW, Mazzoni P. Learning not to generalize: modular adaptation of visuomotor gain. J Neurophysiol 103: 2938–2952, 2010. doi: 10.1152/jn.01089.2009.

- Redding GM, Wallace B. Generalization of prism adaptation. J Exp Psychol Hum Percept Perform 32: 1006–1022, 2006. doi: 10.1037/0096-1523.32.4.1006.

- Sarwary AM, Stegeman DF, Selen LP, Medendorp WP. Generalization and transfer of contextual cues in motor learning. J Neurophysiol 114: 1565–1576, 2015. doi: 10.1152/jn.00217.2015.

- Seidler RD, Bloomberg JJ, Stelmach GE. Context-dependent arm pointing adaptation. Behav Brain Res 119: 155–166, 2001. doi: 10.1016/S0166-4328(00)00347-8.

- Shadmehr R, Mussa-Ivaldi FA. Adaptive representation of dynamics during learning of a motor task. J Neurosci 14: 3208–3224, 1994. doi: 10.1523/JNEUROSCI.14-05-03208.1994.

- Sheahan HR, Franklin DW, Wolpert DM. Motor planning, not execution, separates motor memories. Neuron 92: 773–779, 2016. doi: 10.1016/j.neuron.2016.10.017.

- Sheahan HR, Ingram JN, Žalalytė GM, Wolpert DM. Imagery of movements immediately following performance allows learning of motor skills that interfere. Sci Rep 8: 14330, 2018. doi: 10.1038/s41598-018-32606-9.

- Stout D, Chaminade T. The evolutionary neuroscience of tool making. Neuropsychologia 45: 1091–1100, 2007. doi: 10.1016/j.neuropsychologia.2006.09.014.

- Stout D, Toth N, Schick K, Chaminade T. Neural correlates of Early Stone Age toolmaking: technology, language and cognition in human evolution. Philos Trans R Soc Lond B Biol Sci 363: 1939–1949, 2008. doi: 10.1098/rstb.2008.0001.

- Synofzik M, Lindner A, Thier P. The cerebellum updates predictions about the visual consequences of one’s behavior. Curr Biol 18: 814–818, 2008. doi: 10.1016/j.cub.2008.04.071.

- Tanaka H, Sejnowski TJ, Krakauer JW. Adaptation to visuomotor rotation through interaction between posterior parietal and motor cortical areas. J Neurophysiol 102: 2921–2932, 2009. doi: 10.1152/jn.90834.2008.

- Taylor JA, Ivry RB. The role of strategies in motor learning. Ann NY Acad Sci 1251: 1–12, 2012. doi: 10.1111/j.1749-6632.2011.06430.x.

- Taylor JA, Ivry RB. Cerebellar and prefrontal cortex contributions to adaptation, strategies, and reinforcement learning. Prog Brain Res 210: 217–253, 2014. doi: 10.1016/B978-0-444-63356-9.00009-1.

- Taylor JA, Klemfuss NM, Ivry RB. An explicit strategy prevails when the cerebellum fails to compute movement errors. Cerebellum 9: 580–586, 2010. doi: 10.1007/s12311-010-0201-x.

- Taylor JA, Krakauer JW, Ivry RB. Explicit and implicit contributions to learning in a sensorimotor adaptation task. J Neurosci 34: 3023–3032, 2014. doi: 10.1523/JNEUROSCI.3619-13.2014.

- Thomas M, Bock O. Concurrent adaptation to four different visual rotations. Exp Brain Res 221: 85–91, 2012. doi: 10.1007/s00221-012-3150-4.

- Thoroughman KA, Shadmehr R. Learning of action through adaptive combination of motor primitives. Nature 407: 742–747, 2000. doi: 10.1038/35037588.

- van Dam LC, Ernst MO. Mapping shape to visuomotor mapping: learning and generalisation of sensorimotor behaviour based on contextual information. PLoS Comput Biol 11: e1004172, 2015. doi: 10.1371/journal.pcbi.1004172.

- Wolpert DM, Kawato M. Multiple paired forward and inverse models for motor control. Neural Netw 11: 1317–1329, 1998. doi: 10.1016/S0893-6080(98)00066-5.

- Woolley DG, de Rugy A, Carson RG, Riek S. Visual target separation determines the extent of generalisation between opposing visuomotor rotations. Exp Brain Res 212: 213–224, 2011. doi: 10.1007/s00221-011-2720-1.

- Woolley DG, Tresilian JR, Carson RG, Riek S. Dual adaptation to two opposing visuomotor rotations when each is associated with different regions of workspace. Exp Brain Res 179: 155–165, 2007. doi: 10.1007/s00221-006-0778-y.