Abstract.

Multimodal imaging combining optoacoustic tomography (OAT) with magnetic resonance imaging (MRI) enables spatiotemporal resolution complementarity, improves accurate quantification, and thus yields more insights into physiology and pathophysiology. However, only manual landmark based coregistration of OAT-MRI has been used so far. We developed a toolbox (RegOA), which frames an automated registration pipeline to align OAT with high-field MR images based on mutual information. We assessed the performance of the registration method using images acquired on one phantom with fiducial markers and in vivo/ex vivo data of mouse heads/brain. The accuracy and robustness of the registration are improved using a two-step registration method with preprocessing of OAT and MRI data. The major advantages of our approach are minimal user input and quantitative assessment of the registration error. The registration with MR and standard reference atlas enables regional information extraction, facilitating the accurate, objective, and rapid analysis of large groups of rodent OAT and MR images.

Keywords: brain, image registration, magnetic resonance imaging, mutual information, optoacoustic tomography, preclinical imaging

1. Introduction

Photoacoustic or optoacoustic tomography (OAT) is an emerging imaging modality with applications in both preclinical and clinical settings.1–3 OAT is based on the photoacoustic effect: the energy absorbed by an endogenous chromophore in the tissue (for example, oxy/deoxy-hemoglobin) or by an exogenous probe following excitation by short-pulsed light is transformed in part into heat, leading to a transient thermoelastic expansion and the subsequent generation of broadband pressure waves, which can be detected by ultrasound transducers.4 Reconstruction algorithms, whether a straightforward backprojection or sophisticated model-based procedures, enable localization of photoabsorbing materials in deep tissue. OAT is particularly attractive for (neuro)imaging of rodents as it combines the sensitivity and tissue coverage of optical imaging with the high spatial resolution provided by ultrasound,5–9 enabling versatile applications. For example, OAT allows studying cerebral hemodynamics,3 neural activity based on calcium activity,10 activity of enzymes such as metalloproteinase,11 or blood brain barrier integrity12 in animal models in vivo. Similarly, the method yields phenotypic readouts of pathology, such as the deposition of amyloid beta in murine models of Alzheimer’s disease13 or hypoxia in experimental glioblastoma.14

Accurate quantitative analysis of OAT signals is essential for understanding physiology under normal conditions and in disease states.15,16 A prerequisite for quantitative analysis is the accurate allocation of OAT signals to anatomical structures. However, this is hampered by the inherently low soft tissue contrast of OAT for most tissues, which renders quantification challenging. Combining OAT with imaging modalities providing high anatomical definition, such as magnetic resonance imaging (MRI) or x-ray computed tomography (CT), compensates for this limitation of OAT and thus enables more precise quantification. Moreover, properly registered multimodal imaging data allow obtaining structural, physiological, and molecular information from the same animal, increasing the scientific value of the investigation and reducing the number of animals needed for a study. The methods for registering OAT-MRI reported so far were all based on landmarks in the images,11,17–19 and hence depend largely on manual input. Unbiased automated registration of OAT data with MRI or other modalities is still lacking.

Registration methodologies can be generally classified into feature- or intensity-based approaches.20 Automated feature-based registration requires extraction of features, such as edges, regions, and centroids, through gradient-based methods or segmentation.21,22 Feature extraction is time-consuming and the algorithm selected may differ from case to case.23 In contrast, intensity-based registration is a universal alternative not requiring feature identification. The principle of automated intensity-based registration is to seek a geometrical transformation in an iterative manner by minimizing (or maximizing) a similarity metric. Commonly used similarity metrics for mono- or multimodal registration include sum-of-square-difference, correlation coefficient, cross correlation, correlation ratio, mutual information (MI), and manifold-based measures.20,24 The MI-based registration tries to maximize the amount of shared information between two images by evaluating the Shannon entropy derived from the joint probability distribution of the image intensity.20 MI has been proven robust for registering multimodal images using MRI-positron emission tomography (PET) and CT-PET.24
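The MI principle described above can be illustrated with a minimal sketch. It assumes the two images have already been flattened into equally long lists of discrete intensity levels; the function names `entropy` and `mutual_information` are illustrative and are not taken from RegOA.

```python
import math
from collections import Counter

def entropy(counts, total):
    """Shannon entropy (in bits) of a histogram given as a mapping of counts."""
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def mutual_information(a, b):
    """MI(A, B) = H(A) + H(B) - H(A, B) for two equally long intensity lists."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(a)
    h_a = entropy(Counter(a), n)
    h_b = entropy(Counter(b), n)
    # Joint histogram: count how often each intensity pair occurs at the same voxel.
    h_ab = entropy(Counter(zip(a, b)), n)
    return h_a + h_b - h_ab

fixed = [0, 0, 1, 1]
aligned = [5, 5, 9, 9]    # different intensity scale, same structure
shuffled = [5, 9, 5, 9]   # same intensities, structure unrelated to `fixed`
print(mutual_information(fixed, aligned))   # 1.0
print(mutual_information(fixed, shuffled))  # 0.0
```

The toy example shows why MI suits multimodal data: the aligned pair shares one full bit of information although the intensity values differ, whereas the unrelated pair shares none.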

To address the challenges of coregistering rodent brain images acquired using OAT and MRI, we developed an automated OAT-MRI registration toolbox “RegOA” based on MI. The performance of the registration toolbox was assessed using a phantom with fiducial markers and, for mouse head/brain images, both in vivo and ex vivo. The accuracy of the algorithm was evaluated quantitatively by metrics, such as the target registration error (TRE) and the Euclidean effectiveness ratio (EER).25 The robustness of the registration algorithm was tested by rotating the OAT images against fixed MRI images. RegOA also supports exporting the files to other well-established image analysis/registration platforms, such as AFNI26 and SPM.27

2. Methods

2.1. Framework of Registration

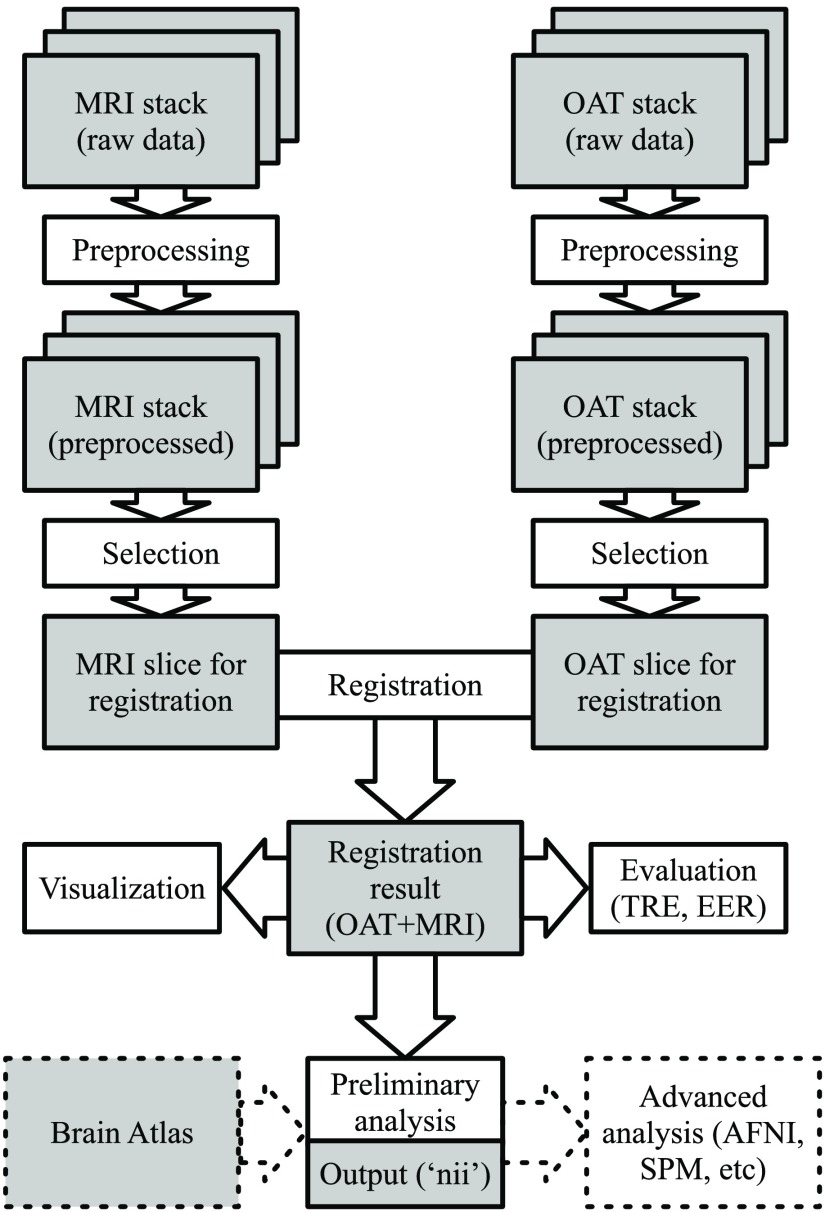

We have developed RegOA, an open-source software toolbox for OAT-MRI registration based on MATLAB (MathWorks, Massachusetts, version: R2018a). The framework of RegOA is shown in Fig. 1. Raw data containing two-dimensional (2-D) or three-dimensional (3-D) MRI and OAT image stacks were preprocessed, which included adaptive filtering and segmentation of the region of interest. The preprocessed images were then registered using a two-step strategy based on MI (or M3 method, explained in “registration algorithm”). The registration results can be visualized and evaluated with metrics such as TRE and EER.25 We have also implemented registration of OAT images to a standard MRI brain atlas for further analysis. Finally, RegOA allows exporting registered results in NIfTI format to other widely used image analysis toolboxes, such as SPM27 and AFNI,26 for volume-based morphometry and voxel-based analysis.

Fig. 1.

The workflow for automatic registration. The gray boxes indicate dataset generated at different steps in the workflow and the white boxes represent the corresponding image processing technique.

2.1.1. Preprocessing

Each slice of the MRI and OAT stacks was preprocessed to remove noise and to segment the subject from the background. Many sophisticated nonlinear filters have been developed for denoising MR images; here, we adopted a 2-D adaptive low-pass Wiener filter that estimates the local mean and variance around each pixel and removes the stochastic noise in MR images without losing important features of the subject.28–30 The MRI slices were then segmented using an active contour model or “snakes,” which evolves the segmentation result iteratively (details in supplementary material, Sec. 1 in Ref. 31). The initial contour is provided by the user by drawing a coarse contour around the object. The maximum number of iterations was set to 50. OAT data displayed ripple-shaped artifacts arising from limitations of the OAT instrumentation and reconstruction algorithm, as well as minor speckle noise. Therefore, a “Canny edge” detector (Gaussian filter, size ) instead of the low-pass Wiener filter was applied to OAT images to identify clean and well-defined edges of the object and discard fictitious edges from the image.32 Thereafter, segmentation using snakes identical to the one used in MRI preprocessing was applied (supplementary Fig. 2 in Ref. 33).
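As an illustration of the adaptive Wiener filtering step, the following minimal sketch follows the classic pixel-wise formulation built on local mean and variance estimates. The window size, the border handling, and the estimate of the noise power as the average local variance are assumptions made for this example; they are not settings reported for RegOA.

```python
def local_stats(img, half=1):
    """Local mean and variance in a (2*half+1)^2 window, clipped at the borders."""
    rows, cols = len(img), len(img[0])
    mean = [[0.0] * cols for _ in range(rows)]
    var = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            vals = [img[i][j]
                    for i in range(max(0, r - half), min(rows, r + half + 1))
                    for j in range(max(0, c - half), min(cols, c + half + 1))]
            m = sum(vals) / len(vals)
            mean[r][c] = m
            var[r][c] = sum((v - m) ** 2 for v in vals) / len(vals)
    return mean, var

def adaptive_wiener(img, half=1, noise=None):
    """Pull each pixel toward its local mean; pull harder where variance is low."""
    mean, var = local_stats(img, half)
    rows, cols = len(img), len(img[0])
    if noise is None:
        # Assumed noise estimate: average of all local variances.
        noise = sum(map(sum, var)) / (rows * cols)
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            denom = max(var[r][c], noise)
            gain = max(var[r][c] - noise, 0.0) / denom if denom > 0 else 0.0
            out[r][c] = mean[r][c] + gain * (img[r][c] - mean[r][c])
    return out

spike = [[0.0, 0.0, 0.0], [0.0, 9.0, 0.0], [0.0, 0.0, 0.0]]
print(adaptive_wiener(spike)[1][1])  # isolated spike is pulled to its local mean
```

In regions where the local variance exceeds the noise estimate (edges, fine structure) the gain approaches one and the pixel is largely preserved, which is the property the text refers to as denoising without losing important features.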

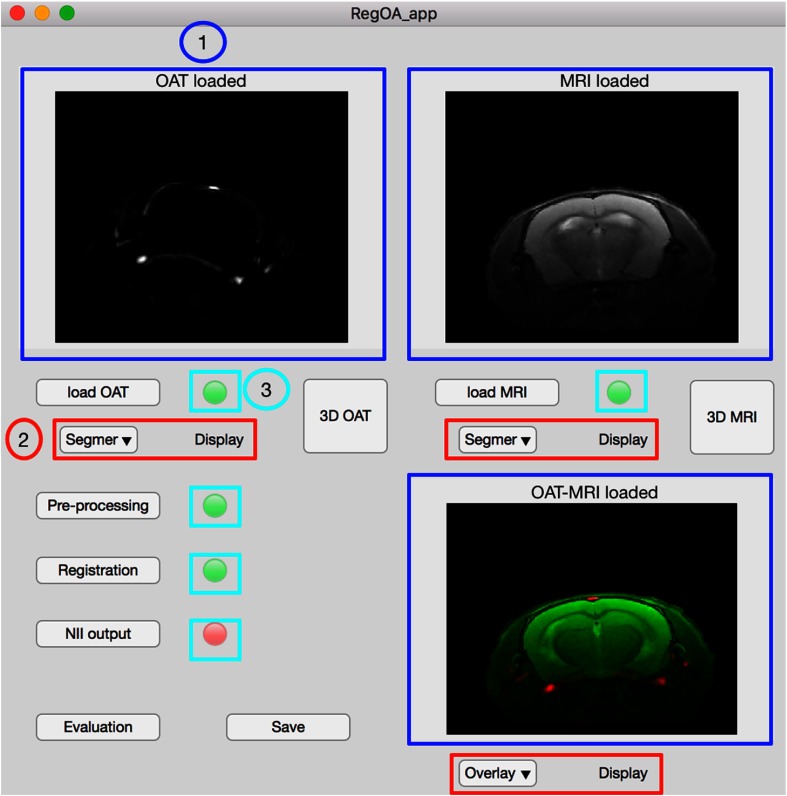

Fig. 2.

The GUI of registration toolbox RegOA. The blue panels (1) are displayed images, including loaded OAT and MRI data and the registration result. The drop-down button (2) controls different display options for (1). Status lamps (3) display the status of a certain function: green notifies accomplishment while red denotes an unfinished state. Normal buttons are for functions, including loading OAT and MRI datasets, preprocessing, registration, exporting files in NIfTI format, evaluation of registration quality, and image saving.

2.1.2. Registration algorithm

To implement automated intensity-based registration, we used MI, which is a commonly used similarity metric or cost function for the multimodal registration problem. MI-based registration measures the statistical dependence between the intensities of corresponding voxels in both images. It is assumed that for well-aligned images the value of MI becomes maximal. MI was calculated as

$$\mathrm{MI}(A,B) = H(A) + H(B) - H(A,B) \tag{1}$$

where $H(A)$ and $H(B)$ denote the entropies of images A and B (in our case, MRI and OAT images, respectively) and $H(A,B)$ is the joint entropy of both images.34 The MR image was set as the fixed image (reference) and the OAT image as the floating image during registration. The floating image underwent affine transformation, i.e., translation, rotation, and scaling. The application of affine transformation has been shown to yield robust results for the registration of rodent brain images acquired using OAT and MRI.11,13,18 In addition, a one-plus-one evolutionary optimization algorithm was applied to search for the transformation parameters. The final parameters for the transformation are generated by iteratively perturbing or mutating the parameters from the last iteration (the parent).35 For the evolutionary optimization, the growth factor, minimum size, and initial value of the search radius were set to 1.05, , and , respectively. The maximum number of iterations for optimization was set to 100. As MI-based methods have not yet been applied in the context of multimodal registration involving OAT, we evaluated three alternative methods:

- M1: direct MI-based registration method without preprocessing of MRI and OAT images.
- M2: direct MI-based registration method with preprocessed MRI and OAT images.
- M3: two-step MI-based registration method with preprocessed MRI and OAT images.

For M3, the first step was to obtain a primary transformation matrix using the masks (segmented binary images) generated in the preprocessing step for MRI and OAT images. The metric for the primary transformation was the mean squared difference.20 The second step was to apply MI-based registration to the result from the first step. The settings of the metric and optimization in the second step of M3 are identical to those used in M1 and M2.
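The one-plus-one evolutionary search used in both steps can be sketched as follows. This is a simplified illustration under stated assumptions, not the MATLAB optimizer itself: the growth factor of 1.05 and the 100-iteration default come from the text, whereas the shrink rule, the default radii, the Gaussian mutation, and the toy cost function are assumptions made for the example.

```python
import random

def one_plus_one_es(cost, start, initial_radius=1.0, min_radius=1e-6,
                    growth=1.05, max_iter=100, seed=0):
    """Minimize `cost` by mutating a single parent; returns (params, cost)."""
    rng = random.Random(seed)
    shrink = growth ** -0.25  # assumed shrink rule, not stated in the text
    parent, parent_cost = list(start), cost(start)
    radius = initial_radius
    for _ in range(max_iter):
        if radius < min_radius:
            break
        # Mutate every parameter of the parent within the current search radius.
        child = [p + rng.gauss(0.0, radius) for p in parent]
        child_cost = cost(child)
        if child_cost < parent_cost:
            parent, parent_cost = child, child_cost
            radius *= growth   # success: widen the search
        else:
            radius *= shrink   # failure: narrow the search
    return parent, parent_cost

def toy_cost(params):
    """Hypothetical stand-in for a registration metric: squared distance of a
    2-D translation from the 'true' shift (3, -1)."""
    return (params[0] - 3.0) ** 2 + (params[1] + 1.0) ** 2

best, best_cost = one_plus_one_es(toy_cost, [0.0, 0.0], max_iter=2000)
print(best, best_cost)
```

Because the optimizer minimizes, step 1 of a two-step scheme would pass the mean squared difference between the masks directly, while step 2 would pass the negative of MI, starting from the parameters returned by step 1.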

2.1.3. Graphic user interface

A user-friendly graphic user interface (GUI) was developed to facilitate the implementation of RegOA. The functions and features in the GUI were designed to map the registration process from loading of OAT and MRI datasets, preprocessing, registration, exporting files in NIfTI format, evaluation of registration quality, to image saving, and visualization at different stages (Fig. 2).

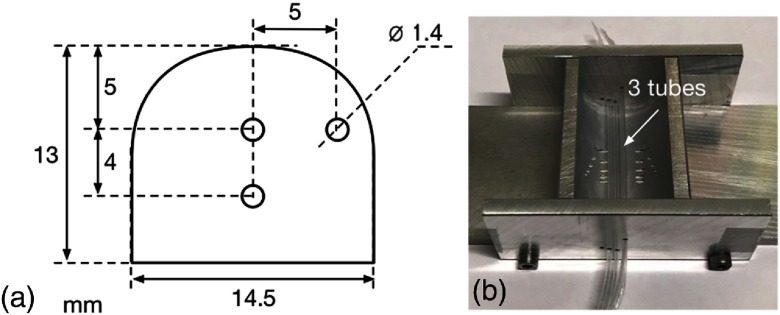

2.2. Phantom

To assess the performance of the OAT-MRI registration method, a phantom with fiducial markers was measured using OAT and MRI systems. The dimensions of the phantom were , with a curved top surface of 30-mm diameter mimicking a mouse head. A cross section of the phantom is shown in Fig. 3(a) with three parallel holes of 1.4-mm diameter for incorporating contrast agents. The phantom was made of 50 mL water with 3% agar and 3 mL intralipid (Sigma-Aldrich, Switzerland) using a home-designed mould [Fig. 3(b)]. Three polyester tubes (diameter: 1.2 mm) were inserted into the mould before pouring heated agar solution. A solution containing optoacoustic visible gold nanoparticle Ntracker DM50 (, Nanopartz, excitation peak: 775 nm) and MRI contrast agent gadolinium-DTPA (, Guerbet, France) was prepared in phosphate-buffered saline (PBS) and injected into polyester tubes after cooling.

Fig. 3.

Phantom design and fabrication: (a) the cross section of the phantom with three inclusions and (b) an aluminum mould used for fabricating the phantom. Three polyester tubes were inserted as the contrast inclusions.

2.3. Animal Model

All procedures conformed to the national guidelines of the Swiss Federal Act on animal protection and were approved by the Cantonal Veterinary Office Zurich (Permit No. 18-2014). Three C57BL/6J mice (Janvier, France), weighing 20 to 25 g and 8 to 10 weeks of age, were used. Animals were housed in ventilated cages inside a temperature-controlled room, under a 12-h dark/light cycle. Pelleted food (3437PXL15, CARGILL) and water were provided ad libitum.

2.4. Ex Vivo Mouse Brain

A whole mouse brain was imaged ex vivo with OAT after removal of the skull. The brain was embedded in agar 3% in PBS (pH 7.4). A tube for holding the mouse brain was made from agar using a mold consisting of a 20- and a 5-mL syringe that were aligned coaxially resulting in an agar tube of outer/inner diameter. The mouse brain was placed in coronal orientation inside the agar tube, which was filled with PBS for removing any residual air. The agar tube was removed from syringe prior to OAT and MR imaging.

2.5. Optoacoustic Tomography

For OAT imaging of both phantom and brain, slice-by-slice 2-D imaging setting was used. The in-plane resolution is 2. For imaging of the phantom with fiducial markers, laser excitation pulses of 9 ns were delivered at seven wavelengths (680, 715, 730, 775, 800, 850, and 900 nm), moving along horizontal direction, , 10 averages, and . For ex vivo mouse brain, the agar tube containing mouse brain was fixed into the supplied rigid phantom holder and placed into the imaging chamber of the OAT system. Laser excitation pulses of 9 ns were delivered at six wavelengths (680, 715, 730, 760, 800, and 850 nm) in coronal orientation, moving along horizontal direction, , resolution , 10 averages, and . For in vivo mouse brain, laser excitation pulses of 9 ns were delivered at five wavelengths (715, 730, 760, 800, and 850 nm) in coronal orientation, moving along horizontal direction, , 10 averages, and .

2.6. Magnetic Resonance Imaging

All MRI scans were performed on a 7/16 small animal MR Pharmascan (Bruker Biospin GmbH, Ettlingen, Germany) equipped with an actively shielded gradient capable of switching with a rise time and operated by a ParaVision 6.0 software platform (Bruker Biospin GmbH, Ettlingen, Germany). A circular polarized volume resonator was used for signal transmission, and an actively decoupled mouse brain quadrature surface coil with integrated combiner and preamplifier was used for signal receiving.

For imaging of phantom with fiducial marker, -weighted MR imaging was performed. A 3-D volume was acquired using fast low-angle shot sequence in combination with slice selective excitation of a 20-mm-thick volume. The imaging parameters were: , ; ; , , giving an isotropic spatial , , axial orientation, and 50 s.

Both the in vivo and ex vivo -weighted MR images of mouse brain/head were obtained using a 2-D spin echo sequence (Turbo rapid acquisition with refocused echoes) with imaging parameters: RARE , , , 6 averages, slice , no slice gap, , , giving an in-plane spatial , out-of-plane (slice thickness), within a 36 s. For ex vivo MRI, the whole mouse brain was placed in a 10-mL syringe filled with perfluoropolyether (Fomblin Y, LVAC 16/6, average molecular weight 2700, Sigma-Aldrich, Switzerland). For in vivo MRI, mice were anesthetized with an initial dose of 4% isoflurane (Abbott, Cham, Switzerland) in oxygen/air () mixture and were maintained at 1.5% isoflurane in oxygen/air (). Mice were next placed in prone position on a water-heated support to keep body temperature within , monitored with a rectal temperature probe.

2.7. Optoacoustic Tomography Reconstruction

OAT images were reconstructed using a model-based linear algorithm using MSOT Viewer (iThera Medical GmbH, Germany).36 For in vivo mouse imaging data, linear unmixing was applied to resolve signals from oxygenated and deoxygenated-hemoglobin. For phantom imaging data, linear unmixing was applied to resolve signal from gold nanoparticle. For ex vivo brain imaging data, background image was resolved.

2.8. Evaluation of Registration

We assessed the performance of the three registration methods (M1, M2, and M3) using OAT and MR images of one phantom and three mouse heads/brains. The three mouse brain registration tasks were (1) ex vivo OAT–ex vivo MRI, (2) ex vivo OAT–in vivo MRI, and (3) in vivo OAT–in vivo MRI. Task 2 tests whether RegOA has a certain degree of freedom in registering different brain regions of interest. The registration performance was evaluated by the metrics TRE and EER for each pair of landmarks. TRE is defined as the Euclidean distance between corresponding points (targets) in the two images after registration.25 EER, the fraction of Euclidean error remaining after registration, is defined as the TRE between two corresponding targets divided by their original distance before registration.25
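The two metrics can be written down in a few lines. The sketch below assumes that target coordinates are available as (x, y) tuples in a common coordinate frame and expresses EER as a percentage; the function name `tre_and_eer` is illustrative.

```python
import math

def tre_and_eer(fixed_pts, moving_before, moving_after):
    """Per-target (original distance, TRE, EER in %) for paired landmarks.

    fixed_pts     -- targets in the reference (MR) image
    moving_before -- corresponding targets in the floating (OAT) image before registration
    moving_after  -- the same targets after applying the estimated transformation
    """
    results = []
    for f, before, after in zip(fixed_pts, moving_before, moving_after):
        original = math.dist(f, before)   # misalignment before registration
        tre = math.dist(f, after)         # residual misalignment
        eer = 100.0 * tre / original if original > 0 else float("nan")
        results.append((original, tre, eer))
    return results

print(tre_and_eer([(0, 0)], [(3, 4)], [(0, 1)]))  # [(5.0, 1.0, 20.0)]
```

An EER below 100% means the registration reduced the distance between a target pair. Note that EER becomes unstable when the original distance is already small, since a small denominator inflates the ratio.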

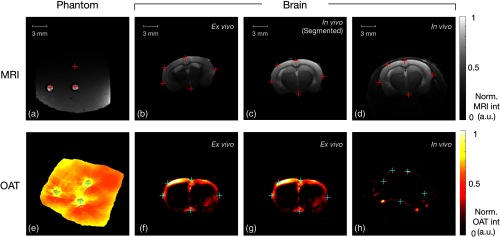

For phantom validation, the three inclusions were clearly visible in both OAT and MR images. The center of each inclusion served as the ground-truth position of the registration targets. For the mouse brain study, natural landmarks were manually selected as registration targets. Five anatomical landmarks were chosen (Fig. 4). We also tested the robustness of M3 by fixing the MR image and rotating the OAT image by an angle ranging from −90 deg to 90 deg, with an incremental step of 10 deg. Bilinear interpolation was applied for rotating the image. The original distance, TRE, and EER for five pairs of registration targets were calculated.
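The geometry of the rotation test can be sketched on landmark coordinates alone. The example below rotates the floating targets about an assumed center in 10-deg steps and reports the mean original distance to the fixed targets at each angle; it does not rotate or interpolate image data, and the angle range of −90 deg to 90 deg, the coordinates, and the function names are assumptions for illustration.

```python
import math

def rotate_point(p, angle_deg, center=(0.0, 0.0)):
    """Rotate a 2-D point counterclockwise about `center` by `angle_deg`."""
    t = math.radians(angle_deg)
    x, y = p[0] - center[0], p[1] - center[1]
    return (center[0] + x * math.cos(t) - y * math.sin(t),
            center[1] + x * math.sin(t) + y * math.cos(t))

def original_distance_sweep(fixed_pts, moving_pts, center, angles):
    """Mean distance between fixed targets and rotated moving targets per angle."""
    sweep = {}
    for a in angles:
        dists = [math.dist(f, rotate_point(m, a, center))
                 for f, m in zip(fixed_pts, moving_pts)]
        sweep[a] = sum(dists) / len(dists)
    return sweep

angles = range(-90, 91, 10)
sweep = original_distance_sweep([(1.0, 0.0)], [(1.0, 0.0)], (0.0, 0.0), angles)
for angle in angles:
    print(angle, round(sweep[angle], 3))
```

For a target at distance r from the rotation center that is otherwise perfectly aligned, the original distance equals 2r|sin(θ/2)|, so it depends smoothly on the rotation angle and vanishes at the angle of best initial alignment.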

Fig. 4.

Registration targets in four datasets for assessing the performance of different registration methods. Three inclusions served as registration targets for phantom study, and five natural landmarks were selected as targets based on the anatomy of in vivo and ex vivo mouse brain. The upper row shows the MR images of (a) phantom, (b) ex vivo brain, (c) segmented brain from in vivo case, and (d) in vivo head of mouse, with red crosses indicating the targets. The bottom row shows the paired OAT images of the upper row (e) phantom, (f) and (g) ex vivo brain, and (h) in vivo head of mouse, with blue crosses indicating the targets.

2.9. Exporting, Registration, and Comparison with AFNI

We exported the preprocessed OAT and MR images in NIfTI format and loaded them into the program “3dAllineate” from the AFNI software package (http://afni.nimh.nih.gov) to register in 2-D the OAT image to the corresponding MR image slice. Affine transformation was performed using two passes: a coarse pass with larger shifts followed by a fine pass, to avoid local minima. MI was selected as the similarity metric. Other parameters were left at their default settings.

2.10. Registration with a Brain Atlas

Spatial normalization of individual neuroimaging data by mapping to a standard reference atlas is commonly adopted when analyzing datasets across multiple subjects.37,38 Here, we tested the feasibility of registering the ex vivo OAT brain image to a widely used high-resolution volumetric atlas segmented into 62 structures based on the average MRI of 40 adult C57Bl/6J mice.37

3. Results

3.1. Evaluation of Registration Accuracy

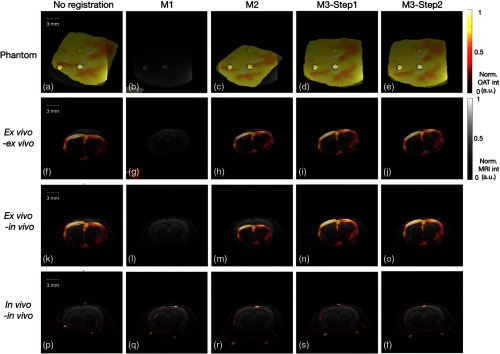

We have tested three different MI-based registration methods (M1 to M3) using phantom with fiducial markers, as well as ex vivo and in vivo mouse brain. Both M1 and M2 performed poorly; the transformed OAT images were either highly distorted in the corner [in Figs. 5(b), 5(g), and 5(l)] or misaligned to a false anatomical structure [in Figs. 5(m), 5(q), and 5(r)]. Compared with M1 and M2, the first step of M3 has achieved higher success rate of registration already based on visual evaluation. The selected target pairs, either the fiducials in the phantom [Fig. 5(d)] or the landmarks in the brain [Figs. 5(i), 5(n), and 5(s)], were matched accurately in OAT and MR images. The second step of M3 [Figs. 5(e), 5(j), 5(o), and 5(t)] led to further significant improvements of registration accuracy when the registered object contains heterogeneous inner structure. For example, in the case of in vivo brain registration, the lower boundary of brain was better aligned in M3-step 2 [Fig. 5(t)] than in M3-step 1 [Fig. 5(s)].

Fig. 5.

Comparison of different registration methods in different registration tasks. Rows (1) to (4) show the overlaid OAT (“HOT” scale) and MRI (gray) images of four tasks: (1) phantom, (2) ex vivo OAT–ex vivo MRI mouse brain, (3) ex vivo OAT–in vivo MR images of mouse brain, and (4) in vivo OAT–in vivo MRI mouse brain. The columns (left to right) display before registration and resulting images using M1, M2, and M3 registration, respectively. M1 and M2 resulted in misalignment due to the interference from the background noise and overdependence on the texture of inner structure obtained from different modalities, having different interpretation of intensity values.

To quantitatively assess the accuracy of the registration methods (M1 to M3), TRE and EER were computed for the four cases (Table 1). M1 resulted in high TRE and EER values (Fig. 5, second column), whereas M2 registration using preprocessed data yielded lower mean values of TRE and EER. However, the standard deviation of EER was still large compared with its mean value. For example, the M2 EER for phantom study was with a standard deviation 8 times larger than the mean value. This indicates that at least one of the registration pairs was significantly misaligned [as observed in Fig. 5(c)]. Both steps 1 and 2 of M3 performed better than M1 and M2, with decreased mean values and standard deviations of TRE and EER. M3-step 2 further decreased TRE and EER relative to M3-step 1. For instance, in the case of ex vivo brain registration, the standard deviation of TRE and EER decreased from 5.20 and 42.87 to 2.81 and 23.52, respectively, after step 2. The in vivo brain registration showed a decrease in both mean value and standard deviation for TRE and EER from steps 1 to 2 (TRE: to ; EER: to ).

Table 1.

Registration evaluation by measuring TRE and EER for different registration methods (M1, M2, and step 1 and step 2 of M3) and different cases (phantom, ex vivo brains, ex vivo/in vivo brains, and in vivo brains). The numbers of target pairs (T. pair) and the original distance for target pairs are given to better compare them to the TRE after registration. Data were presented as mean value ± standard deviation.

| | | Phantom | Brain ex vivo OAT–ex vivo MRI | Brain ex vivo OAT–in vivo MRI | Brain in vivo OAT–in vivo MRI |
|---|---|---|---|---|---|
| T. pair | Number | 3 | 5 | 5 | 5 |
| | Original distance | | | | |
| M1 | TRE | | | | |
| | EER (%) | | | | |
| M2 | TRE | | | | |
| | EER (%) | | | | |
| M3-step 1 | TRE | | | | |
| | EER (%) | | | | |
| M3-step 2 | TRE | | | | |
| | EER (%) | | | | |

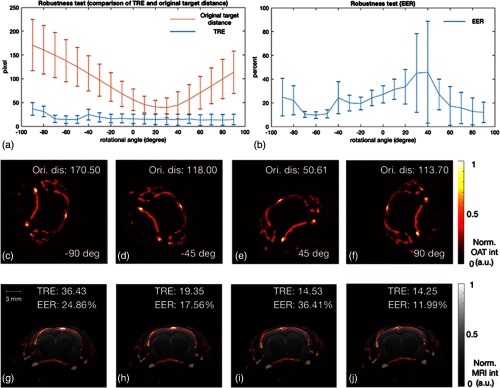

3.2. Evaluation of Registration Robustness

The robustness of the registration method M3 was evaluated by rotating the OAT image relative to the MR image by an angle ranging from −90 deg to 90 deg [Figs. 6(c)–6(f)]. Upon rotating the OAT relative to the MR images, the initial distance varies in a sinusoidal manner [Fig. 6(a)]. Nevertheless, following the two-step registration procedure, the dependence of TRE (blue) on the rotation angle is weak with values , showing a significant decrease compared with the original distance. The second metric, EER, displayed a stronger dependence on the rotation angle than TRE, especially for angles in the range of 20 deg to 50 deg. The lowest value of the initial distance was observed in this range of rotation angles, suggesting that OAT and MRI were already well matched before registration, which resulted in high values of EER. The mean value and standard deviation for TRE and EER over the 180 deg were and , respectively.

Fig. 6.

Robustness test of registration method M3 at different rotational angles using in vivo OAT–in vivo MRI mouse brain images. The OAT image was rotated by an angle ranging from −90 deg to 90 deg. (a) Variation of original distance and TRE between corresponding targets in OAT and MR images; (b) variation of EER; (c)–(f) exemplary OAT images at , , 45 deg, and 90 deg of rotation; (g)–(j) overlaid OAT/MR images after registration. Ori. Dis: original distance.

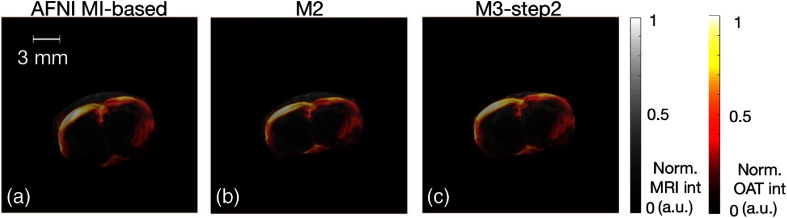

3.3. Comparison of RegOA and AFNI

We have compared the performance of RegOA with an established registration tool used for aligning MRI-based brain data, AFNI. Accordingly, we have exported preprocessed datasets of the in vivo mouse brain study to AFNI and performed AFNI inbuilt MI-based registration. MI-based registration by AFNI [Fig. 7(a)] yielded registration results comparable to M2 by RegOA [Fig. 7(b)]; the alignment of OAT image to the MRI reference image was unsatisfactory, which becomes obvious when comparing the cortical surfaces. In contrast, the two-step M3 registration by RegOA yielded superior results, i.e., the contour in OAT image was matched better with that of MR image [Fig. 7(c)].

Fig. 7.

Comparison of registration results using AFNI and RegOA for ex vivo OAT–ex vivo MRI mouse brain images. Images show the overlaid OAT (HOT scale) and MRI (gray) images after registration using (a) AFNI MI-based, (b) M2, and (c) M3 (after step 2).

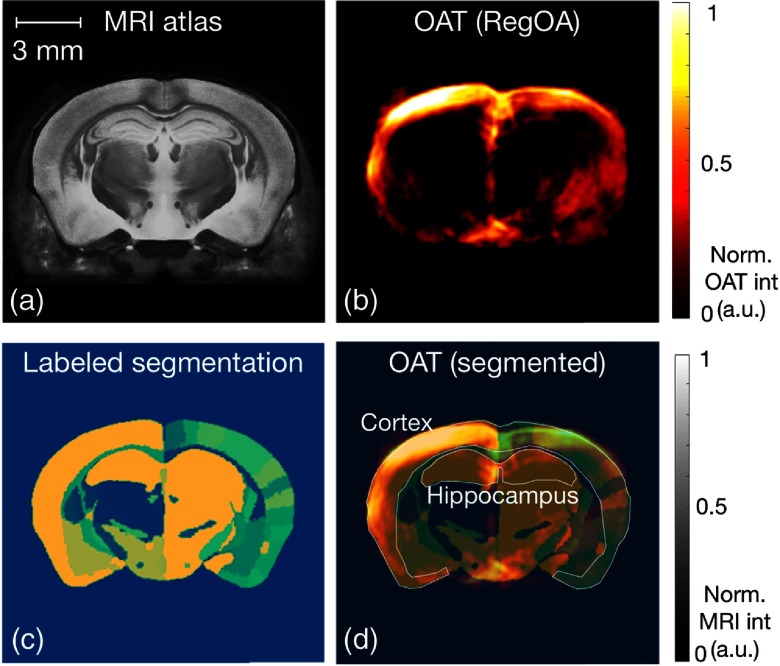

3.4. Registration with a Brain Atlas

Instead of mapping OAT mouse brain image to its own MR image, registering it to a standard anatomical atlas would be helpful for analyzing large data series and use of predefined regions of interest for quantitative analysis. Figure 8 shows the labeled regions in the brain atlas (indicated with different colors), MRI anatomical reference (Dorr’s), OAT image after M3 registration, and overlaid images.
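Once an OAT image is aligned with a labeled atlas, regional readouts reduce to averaging the signal over all pixels that share a label. The following minimal sketch assumes the registered OAT slice and the atlas label map are same-sized 2-D lists and that label 0 denotes background; the function name and the example values are hypothetical.

```python
from collections import defaultdict

def regional_means(signal, labels, background=0):
    """Mean signal per atlas label for a registered image and its label map."""
    sums, counts = defaultdict(float), defaultdict(int)
    for sig_row, lab_row in zip(signal, labels):
        for value, label in zip(sig_row, lab_row):
            if label != background:
                sums[label] += value
                counts[label] += 1
    return {label: sums[label] / counts[label] for label in sums}

oat_slice = [[1.0, 3.0],
             [5.0, 7.0]]
atlas_labels = [[1, 1],
                [2, 0]]   # e.g., 1 = cortex, 2 = hippocampus (illustrative labels)
print(regional_means(oat_slice, atlas_labels))  # {1: 2.0, 2: 5.0}
```

Because the labels are defined once in atlas space, the same function can be applied unchanged to every registered dataset in a study.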

Fig. 8.

Registration of OAT of mouse brain with MRI atlas and corresponding segmentation. (a) MRI atlas Dorr’s C57B6J mice, (b) overlaid OAT image, (c) labeled segmentation based on the (a), and (d) overlaid OAT image with labeled segmentation. The regions of cortex and hippocampus were indicated by the contour.

4. Discussion

The rapid deployment of OAT as a stand-alone technique or as a partner in multimodal imaging approaches in combination with modalities such as MRI,11,17,18,39 Raman imaging,40,41 and optical coherence tomography42 requires the development of an OAT-tailored, automated, and robust image registration method. Currently available comprehensive toolkits for automated registration focus on well-established imaging modalities, such as CT, MRI, PET, and single-photon emission computed tomography. Yet, tools such as AFNI and SPM cannot be translated in a straightforward manner to OAT due to method-specific issues in data reconstruction.43 To the best of our knowledge, the proposed RegOA is the first automated registration toolbox for OAT-MRI dataset alignment.

The benefits of combining OAT with MRI or CT are as follows. (1) MRI and CT provide structural information with higher soft tissue contrast than OAT. This is particularly useful for delineating brain regions and lesion areas in disease models and thus enables more accurate analysis of regional hemodynamic and molecular data. (2) MRI is very versatile and can deliver a variety of other readouts: measures of tissue integrity, such as magnetization transfer ratio and diffusion tensor imaging; susceptibility-weighted imaging for hemorrhagic transformation and lesions; and hemodynamic measures, e.g., arterial spin labeling for cerebral blood flow. These readouts cannot be attained by OAT in the same way and can be very useful in future experimental studies of brain disease models. Conversely, OAT can address the following limitations of MRI: (1) the temporal resolution of MRI is not ideal for measuring fast functional changes, such as stimulus-evoked responses and neuronal activity in the brain, whereas the temporal resolution of OAT is higher; (2) neither CT nor MRI is inherently very sensitive for detecting imaging probes, whereas OAT is.

An MI-based method was adopted for automated registration because it avoids the time-consuming feature extraction task and has proven robust in multimodal registration tasks. However, a pure MI-based registration has the following drawbacks: (1) applying the MI metric becomes problematic if the intensity distribution in the two datasets is very different, e.g., the zero values present in the subcortical region in OAT images do not correspond to the intensities of the same region in MR images; (2) it is highly sensitive to strong noise or artifacts (the M1 method uses the MI metric without preprocessing); (3) only the intensity values of corresponding individual pixels, but not the texture information, are taken into account (the M2 method uses the MI metric with preprocessed images). Thus, an optimal preprocessing step becomes critical for a robust MI-based registration. In the framework of RegOA, different denoising strategies, a Canny edge detector and a Wiener filter, were applied to OAT and MR images, respectively, to remove different types of noise. An iterative snakes method was applied to segment images from both OAT and MRI. The resulting masks serve as supportive information in the first step of the M3 registration method. The proposed two-step MI-based method (M3) overcame the interference of the noise present in OAT and MR images and used the spatial information obtained from the segmentation (mainly the boundary information, as OAT is highly sensitive to the object boundary). Low TRE and EER values revealed the advantages of the M3 procedure.

The performance of RegOA was evaluated using a phantom with fiducial markers and using ex vivo and in vivo mouse brains with anatomical landmarks. Fiducial markers containing nanoparticles provide contrast for various imaging modalities and have been used for multimodal registration between MRI-photoacoustic-Raman and PET-optical imaging.41,44 The robustness of the registration method was analyzed by rotating the OAT image relative to the MR image; the registration method was able to correctly map the OAT to the corresponding MR image for a wide range of starting angles. As the polyethylene tube we used here for placing the fiducial nanoprobe marker might cause artifacts, one alternative is to use an MR-compatible physical fiducial with proper dimensions.

In addition to the main functionality of automated registration, RegOA also supports simple visualization of registered datasets, exporting in NIfTI format, and mapping onto an anatomical brain atlas. Herein, we summarize several potential benefits of RegOA: (1) high-throughput OAT experiment analysis and data sharing. The proposed framework attempts to establish a standard OAT-MRI data processing pipeline, including preprocessing, registration, normalization,32 and further voxel/segment-based quantitative and correlation analysis. Such a pipeline has been well established in the MRI community using SPM or AFNI but is not yet available for OAT. For example, the oxy/deoxy-hemoglobin data could potentially be related to data from blood oxygen level-dependent functional MRI;45 (2) evaluation of new OAT reconstruction algorithms; (3) improvement of OAT reconstruction algorithms using a well-registered MR image as structural prior information;46 (4) accurate identification and delineation of brain regions, which is crucial for quantitative analysis of neuroimaging data. Hence, alignment of molecular and physiological data to a structural reference dataset is a prerequisite for extracting quantitative information. While individual alignment is attractive when analyzing pathological conditions, e.g., neurodegenerative processes and focal brain lesions, which show a subject-specific disease course, registration of OAT (and MRI) data to standard brain atlases will be attractive when analyzing responses across (large) groups of subjects. This would allow using predefined volumes-of-interest across the whole dataset, thereby enhancing statistical rigor.

This study has several limitations. (1) Automated 3-D registration is still suboptimal. The current two-step registration method has only been implemented and validated in 2-D. Selection of slices for registration depends on the initial alignment based on anatomical references (such as the eyes and nose) and on the thickness of each OAT/MRI slice. We also assume that the animal holder of the OAT system moves along the horizontal direction, which should be parallel to the central axis of the MR scanner bore.8 Involuntary movements of the animal head in other directions would lead to inaccurate registration; this is currently prevented by mechanical fixation of the animal’s head. A next step would be to apply the RegOA framework to 3-D data. Such an approach does not require the assumption that the central axes of the OAT and MRI data are parallel but is computationally more expensive. The computational cost of 3-D registration can be alleviated using parallel computing.47 (2) Deformable registration is necessary for more general cases of OAT-MRI registration, especially for whole-body imaging or certain flexible organs,20 whereas rigid transformation has been widely used and proven to be robust for brain registration.14 (3) Negative or truncated zero values of image intensity were observed in the current OAT dataset, which may influence the MI similarity metric. This has been partially addressed by incorporating a segmented mask in RegOA. A model-based image inversion algorithm, intensity correction for optical fluence variation,48,49 or a backprojection algorithm assisted by multiview Hilbert transformation50–53 can improve the image quality of OAT, minimize artifacts, especially bipolar pixel values, and further improve the registration result.
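
The effect of a segmented mask on the MI metric, mentioned in limitation (3), can be illustrated with a small sketch. The synthetic images, the circular mask, and the histogram-based `mutual_information` estimate are assumptions for illustration only and do not reproduce the RegOA code.

```python
import numpy as np

def mutual_information(a, b, bins=32):
    """Mutual information (in nats) estimated from the joint intensity histogram."""
    hist, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=bins)
    pxy = hist / hist.sum()
    px = pxy.sum(axis=1, keepdims=True)
    py = pxy.sum(axis=0, keepdims=True)
    nz = pxy > 0
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))

rng = np.random.default_rng(0)
yy, xx = np.mgrid[0:48, 0:48]
mask = (xx - 23.5) ** 2 + (yy - 23.5) ** 2 <= 18 ** 2  # hypothetical head mask

# Synthetic "MRI" and a nonlinearly related, noisy "OAT" image.
mri = rng.random((48, 48))
oat = mri ** 2 + 0.05 * rng.standard_normal((48, 48))

# Simulate strongly negative reconstruction artifacts outside the object.
oat_bipolar = oat.copy()
oat_bipolar[~mask] = -5.0 * rng.random(int((~mask).sum()))

# Restricting the joint histogram to the mask ignores the corrupted background.
mi_masked_clean = mutual_information(mri[mask], oat[mask])
mi_masked_bipolar = mutual_information(mri[mask], oat_bipolar[mask])

# Without the mask, the artifacts stretch the intensity range and alter the metric.
mi_full_clean = mutual_information(mri, oat)
mi_full_bipolar = mutual_information(mri, oat_bipolar)
print(mi_masked_clean, mi_masked_bipolar, mi_full_clean, mi_full_bipolar)
```

In this sketch the masked metric is unaffected by the background artifacts by construction; negative values inside the object would still require an improved reconstruction, as noted above.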

5. Conclusion

In conclusion, we developed an automated registration framework for OAT-MRI brain imaging data. The major advantages of our approach are minimal user interaction and automatic assessment of the registration error, which avoid the need for visual inspection of the results and facilitate the accurate, objective, and rapid analysis of large amounts of rodent OAT data.

Acknowledgments

The authors thank Dr. Mark Augath and Markus Kupfer at the Institute for Biomedical Engineering, ETH Zurich and University of Zurich for their technical support and Dr. David Cole for advice on SPM. The datasets generated and/or analyzed during the current study are available in the repository under DOI: 10.5281/zenodo.1303386. The registration code is available at https://github.com/RenW2018/RegOA. This work was funded by the University of Zurich Forschungskredit (No. FK-17-052) and Synapsis Foundation–Alzheimer Research Switzerland ARS (No. 2017-CDA03) to R.N. It was also supported by the Swiss National Science Foundation and Swiss Innovation Agency BRIDGE proof-of-concept fellowship (No. 178262) for W.R.

Biographies

Wuwei Ren received his BS degree from Zhejiang University, China, in 2010 and his MS degree from KTH, Sweden, in 2012. In 2018, he received his PhD from ETH Zurich, Switzerland. His research interests include the development of novel imaging techniques, including optical/photoacoustic imaging and hybrid imaging combining optics and MRI. He held a BRIDGE fellowship in 2017. In 2018, he joined the Biomedical Optics Research Laboratory at the University Hospital Zurich as a postdoctoral researcher.

Hlynur Skulason is an electrical and biomedical engineering student at ETH Zurich, graduating with a master’s degree later this year. He holds a bachelor’s degree in electrical and computer engineering from the University of Iceland. He has worked as a summer research assistant at both the University of Iceland and ETH Zurich. His areas of interest are machine learning, deep learning, and biomedical imaging.

Biographies of the other authors are not available.

Disclosures

The authors declared no competing interests.

References

- 1. Ovsepian S. V., et al., “Pushing the boundaries of neuroimaging with optoacoustics,” Neuron 96(5), 966–988 (2017). 10.1016/j.neuron.2017.10.022

- 2. Ntziachristos V., “Going deeper than microscopy: the optical imaging frontier in biology,” Nat. Methods 7(8), 603–614 (2010). 10.1038/nmeth.1483

- 3. Wang X., et al., “Noninvasive laser-induced photoacoustic tomography for structural and functional in vivo imaging of the brain,” Nat. Biotechnol. 21, 803–806 (2003). 10.1038/nbt839

- 4. Wang L. V., Hu S., “Photoacoustic tomography: in vivo imaging from organelles to organs,” Science 335(6075), 1458–1462 (2012). 10.1126/science.1216210

- 5. Dean-Ben X. L., et al., “Advanced optoacoustic methods for multiscale imaging of in vivo dynamics,” Chem. Soc. Rev. 46(8), 2158–2198 (2017). 10.1039/C6CS00765A

- 6. Taruttis A., Ntziachristos V., “Advances in real-time multispectral optoacoustic imaging and its applications,” Nat. Photonics 9(4), 219–227 (2015). 10.1038/nphoton.2015.29

- 7. Li L., et al., “Single-impulse panoramic photoacoustic computed tomography of small-animal whole-body dynamics at high spatiotemporal resolution,” Nat. Biomed. Eng. 1(5), 0071 (2017). 10.1038/s41551-017-0071

- 8. Nasiriavanaki M., et al., “High-resolution photoacoustic tomography of resting-state functional connectivity in the mouse brain,” Proc. Natl. Acad. Sci. U.S.A. 111(1), 21–26 (2014). 10.1073/pnas.1311868111

- 9. Li L., et al., “Label-free photoacoustic tomography of whole mouse brain structures ex vivo,” Neurophotonics 3(3), 035001 (2016). 10.1117/1.NPh.3.3.035001

- 10. Gottschalk S., et al., “Real-time volumetric mapping of calcium activity in living mice by functional optoacoustic neuro-tomography (Conference Presentation),” Proc. SPIE 10494, 104941I (2018). 10.1117/12.2289685

- 11. Ni R., et al., “Non-invasive detection of acute cerebral hypoxia and subsequent matrix-metalloproteinase activity in a mouse model of cerebral ischemia using multispectral-optoacoustic-tomography,” Neurophotonics 5(1), 015005 (2018). 10.1117/1.NPh.5.1.015005

- 12. Zhang H., et al., “Monitoring the opening and recovery of the blood-brain barrier with noninvasive molecular imaging by biodegradable ultrasmall nanoparticles,” Nano Lett. 18(8), 4985–4992 (2018). 10.1021/acs.nanolett.8b01818

- 13. Ni R., et al., “Quantification of amyloid deposits and oxygen extraction fraction in the brain with multispectral optoacoustic imaging in arcAβ mouse model of Alzheimer’s disease,” Proc. SPIE 10494, 104941G (2018). 10.1117/12.2286309

- 14. Burton N. C., et al., “Multispectral opto-acoustic tomography (MSOT) of the brain and glioblastoma characterization,” NeuroImage 65, 522–528 (2013). 10.1016/j.neuroimage.2012.09.053

- 15. Uludağ K., Roebroeck A., “General overview on the merits of multimodal neuroimaging data fusion,” NeuroImage 102, 3–10 (2014). 10.1016/j.neuroimage.2014.05.018

- 16. Yankeelov T. E., Abramson R. G., Quarles C. C., “Quantitative multimodality imaging in cancer research and therapy,” Nat. Rev. Clin. Oncol. 11, 670–680 (2014). 10.1038/nrclinonc.2014.134

- 17. Attia A. B., et al., “Multispectral optoacoustic and MRI coregistration for molecular imaging of orthotopic model of human glioblastoma,” J. Biophotonics 9(7), 701–708 (2016). 10.1002/jbio.v9.7

- 18. Ni R., Rudin M., Klohs J., “Cortical hypoperfusion and reduced cerebral metabolic rate of oxygen in the arcAβ mouse model of Alzheimer’s disease,” Photoacoustics 10, 38–47 (2018). 10.1016/j.pacs.2018.04.001

- 19. Park S., et al., “Real-time triple-modal photoacoustic, ultrasound, and magnetic resonance fusion imaging of humans,” IEEE Trans. Med. Imaging 36(9), 1912–1921 (2017). 10.1109/TMI.2017.2696038

- 20. Oliveira F. P., Tavares J. M., “Medical image registration: a review,” Comput. Methods Biomech. Biomed. Eng. 17(2), 73–93 (2014). 10.1080/10255842.2012.670855

- 21. Saeed N., “Magnetic resonance image segmentation using pattern recognition, and applied to image registration and quantitation,” NMR Biomed. 11(4–5), 157–167 (1998). 10.1002/(ISSN)1099-1492

- 22. Hsieh J.-W., et al., “Image registration using a new edge-based approach,” Comput. Vision Image Understanding 67(2), 112–130 (1997). 10.1006/cviu.1996.0517

- 23. Song H., Qiu P., “Intensity-based 3D local image registration,” Pattern Recognit. Lett. 94, 15–21 (2017). 10.1016/j.patrec.2017.04.021

- 24. Pascau J., et al., “Automated method for small-animal PET image registration with intrinsic validation,” Mol. Imaging Biol. 11(2), 107–113 (2009). 10.1007/s11307-008-0166-z

- 25. Lin J. S., et al., “Performance assessment for brain MR imaging registration methods,” AJNR Am. J. Neuroradiol. 38(5), 973–980 (2017). 10.3174/ajnr.A5122

- 26. Cox R. W., “AFNI: software for analysis and visualization of functional magnetic resonance neuroimages,” Comput. Biomed. Res. 29(3), 162–173 (1996). 10.1006/cbmr.1996.0014

- 27. Sawiak S. J., et al., “Voxel-based morphometry with templates and validation in a mouse model of Huntington’s disease,” Magn. Reson. Imaging 31(9), 1522–1531 (2013). 10.1016/j.mri.2013.06.001

- 28. Lim J. S., Two-Dimensional Signal and Image Processing, Prentice-Hall, Inc., Englewood Cliffs (1990).

- 29. Gudbjartsson H., Patz S., “The Rician distribution of noisy MRI data,” Magn. Reson. Med. 34(6), 910–914 (1995). 10.1002/(ISSN)1522-2594

- 30. Mohan J., Krishnaveni V., Guo Y., “A survey on the magnetic resonance image denoising methods,” Biomed. Signal Process. Control 9, 56–69 (2014). 10.1016/j.bspc.2013.10.007

- 31. Chan T. F., Vese L. A., “Active contours without edges,” IEEE Trans. Image Process. 10(2), 266–277 (2001). 10.1109/83.902291

- 32. Canny J., “A computational approach to edge detection,” IEEE Trans. Pattern Anal. Mach. Intell. PAMI-8(6), 679–698 (1986). 10.1109/TPAMI.1986.4767851

- 33. Rahunathan S., et al., “Image registration using rigid registration and maximization of mutual information,” in 13th Annu. Med. Meets Virtual Reality Conf., Long Beach, California (2005).

- 34. Maes F., et al., “Multimodality image registration by maximization of mutual information,” IEEE Trans. Med. Imaging 16(2), 187–198 (1997). 10.1109/42.563664

- 35. Styner M., et al., “Parametric estimate of intensity inhomogeneities applied to MRI,” IEEE Trans. Med. Imaging 19(3), 153–165 (2000). 10.1109/42.845174

- 36. Tzoumas S., et al., “Unmixing molecular agents from absorbing tissue in multispectral optoacoustic tomography,” IEEE Trans. Med. Imaging 33(1), 48–60 (2014). 10.1109/TMI.2013.2279994

- 37. Dorr A. E., et al., “High resolution three-dimensional brain atlas using an average magnetic resonance image of 40 adult C57Bl/6J mice,” NeuroImage 42(1), 60–69 (2008). 10.1016/j.neuroimage.2008.03.037

- 38. Jones A. R., Overly C. C., Sunkin S. M., “The Allen Brain Atlas: 5 years and beyond,” Nat. Rev. Neurosci. 10(11), 821–828 (2009). 10.1038/nrn2722

- 39. Hu D., et al., “Indocyanine green-loaded polydopamine-iron ions coordination nanoparticles for photoacoustic/magnetic resonance dual-modal imaging-guided cancer photothermal therapy,” Nanoscale 8(39), 17150–17158 (2016). 10.1039/C6NR05502H

- 40. Neuschmelting V., et al., “Dual-modality surface-enhanced resonance Raman scattering and multispectral optoacoustic tomography nanoparticle approach for brain tumor delineation,” Small 14(23), e1800740 (2018). 10.1002/smll.v14.23

- 41. Kircher M. F., et al., “A brain tumor molecular imaging strategy using a new triple-modality MRI-photoacoustic-Raman nanoparticle,” Nat. Med. 18, 829 (2012). 10.1038/nm.2721

- 42. Liu M., et al., “Combined multi-modal photoacoustic tomography, optical coherence tomography (OCT) and OCT angiography system with an articulated probe for in vivo human skin structure and vasculature imaging,” Biomed. Opt. Express 7(9), 3390–3402 (2016). 10.1364/BOE.7.003390

- 43. Lutzweiler C., Razansky D., “Optoacoustic imaging and tomography: reconstruction approaches and outstanding challenges in image performance and quantification,” Sensors 13(6), 7345–7384 (2013). 10.3390/s130607345

- 44. Maiorano G., et al., “Ultra-efficient, widely tunable gold nanoparticle-based fiducial markers for x-ray imaging,” Nanoscale 8(45), 18921–18927 (2016). 10.1039/C6NR07021C

- 45. Gagnon L., et al., “Quantifying the microvascular origin of BOLD-fMRI from first principles with two-photon microscopy and an oxygen-sensitive nanoprobe,” J. Neurosci. 35(8), 3663–3675 (2015). 10.1523/JNEUROSCI.3555-14.2015

- 46. Niedre M., Ntziachristos V., “Elucidating structure and function in vivo with hybrid fluorescence and magnetic resonance imaging,” Proc. IEEE 96(3), 382–396 (2008). 10.1109/JPROC.2007.913498

- 47. Shams R., et al., “A survey of medical image registration on multicore and the GPU,” IEEE Signal Process. Mag. 27(2), 50–60 (2010). 10.1109/MSP.2009.935387

- 48. Daoudi K., et al., “Correcting photoacoustic signals for fluence variations using acousto-optic modulation,” Opt. Express 20(13), 14117–14129 (2012). 10.1364/OE.20.014117

- 49. Ding L., Dean-Ben X. L., Razansky D., “Efficient 3-D model-based reconstruction scheme for arbitrary optoacoustic acquisition geometries,” IEEE Trans. Med. Imaging 36(9), 1858–1867 (2017). 10.1109/TMI.2017.2704019

- 50. Matthews T. P., et al., “Parameterized joint reconstruction of the initial pressure and sound speed distributions in photoacoustic computed tomography (Conference Presentation),” Proc. SPIE 10494, 104942V (2018). 10.1117/12.2291014

- 51. Li L., et al., “Multiview Hilbert transformation in full-ring transducer array-based photoacoustic computed tomography,” J. Biomed. Opt. 22(7), 076017 (2017). 10.1117/1.JBO.22.7.076017

- 52. Zhang P., et al., “High-resolution deep functional imaging of the whole mouse brain by photoacoustic computed tomography in vivo,” J. Biophotonics 11(1), e201700024 (2018). 10.1002/jbio.201700024

- 53. Li G., et al., “Multiview Hilbert transformation for full-view photoacoustic computed tomography using a linear array,” J. Biomed. Opt. 20(6), 066010 (2015). 10.1117/1.JBO.20.6.066010