Abstract

Background

Hidden Markov models of haplotype inheritance such as the Li and Stephens model allow for computationally tractable probability calculations using the forward algorithm as long as the representative reference panel used in the model is sufficiently small. Specifically, the monoploid Li and Stephens model and its variants are linear in reference panel size unless heuristic approximations are used. However, sequencing projects numbering in the thousands to hundreds of thousands of individuals are underway, and others numbering in the millions are anticipated.

Results

To make the forward algorithm for the haploid Li and Stephens model computationally tractable for these datasets, we have created a numerically exact version of the algorithm with observed average case sublinear runtime with respect to reference panel size k when tested against the 1000 Genomes dataset.

Conclusions

We show a forward algorithm which avoids any tradeoff between runtime and model complexity. Our algorithm makes use of two general strategies which might be applicable to improving the time complexity of other future sequence analysis algorithms: sparse dynamic programming matrices and lazy evaluation.

Keywords: Forward algorithm, Haplotype, Complexity, Sublinear algorithms

Background

Probabilistic models of haplotypes describe how variation is shared in a population. One application of these models is to calculate the probability P(o|H), defined as the probability of a haplotype o being observed, given the assumption that it is a member of a population represented by a reference panel of haplotypes H. This computation has been used in estimating recombination rates [1], a problem of interest in genetics and in medicine. It may also be used to detect errors in genotype calls.

Early approaches to haplotype modeling used coalescent [2] models which were accurate but computationally complex, especially when including recombination. Li and Stephens introduced the foundational computationally tractable haplotype model with recombination [1]. Under their model, the probability P(o|H) can be calculated using the forward algorithm for hidden Markov models (HMMs) and posterior sampling of genotype probabilities can be achieved using the forward–backward algorithm. Generalizations of their model have been used for haplotype phasing and genotype imputation [3–7].

The Li and Stephens model

Consider a reference panel H of k haplotypes sampled from some population. Each haplotype is a sequence of alleles at a contiguous sequence of genetic sites. Classically [1], the sites are biallelic, but the model extends to multiallelic sites [8].

Consider an observed sequence of alleles $o = o_1, \ldots, o_n$ representing another haplotype. The monoploid Li and Stephens model (LS) [1] specifies a probability that o is descended from the population represented by H. LS can be written as a hidden Markov model wherein the haplotype o is assembled by copying (with possible error) consecutive contiguous subsequences of haplotypes $h_j \in H$.

Definition 1

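(Li and Stephens HMM) Define $x_j(i)$ as the event that the allele at site i of the haplotype o was copied from the allele of haplotype $h_j \in H$. Take parameters

$$\rho^*_{i-1 \to i} = \text{the probability of any recombination between sites } i-1 \text{ and } i \tag{1}$$

$$\mu_i = \text{the probability of a mutation converting one allele into another at site } i \tag{2}$$

and from them define the transition and emission probabilities

$$p\big(x_j(i) \mid x_{j'}(i-1)\big) = \begin{cases} 1-(k-1)\rho_i & \text{if } j = j' \\ \rho_i & \text{if } j \neq j' \end{cases} \qquad \text{where } \rho_i = \frac{\rho^*_{i-1 \to i}}{k-1} \tag{3}$$

$$p\big(o_i \mid x_j(i)\big) = \begin{cases} 1-(A-1)\mu_i & \text{if } o_i = h_{j,i} \\ \mu_i & \text{if } o_i \neq h_{j,i} \end{cases} \qquad \text{where } A \text{ is the number of alleles at site } i \tag{4}$$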

We will write $\mu_i(j)$ as shorthand for $p(o_i \mid x_j(i))$. We will also define the initial probabilities $p(x_j(1), o_1 \mid H) = \frac{\mu_1(j)}{k}$, which follow from assuming that every haplotype has equal probability $\frac{1}{k}$ of being selected at the first site, with this probability then modified by the appropriate emission probability.

Let P(o|H) be the probability that haplotype o was produced from population H. The forward algorithm for hidden Markov models allows calculation of this probability in $O(nk^2)$ time using an $n \times k$ dynamic programming matrix of forward states

$$p_i[j] = P(x_j(i), o_1, \ldots, o_i \mid H) \tag{5}$$

The probability P(o|H) will be equal to the sum $\sum_{j} p_n[j]$ of all entries in the final column of the dynamic programming matrix. In practice, the Li and Stephens forward algorithm is $O(nk)$ (see "Efficient dynamic programming" section).
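For reference, the $O(nk)$ forward recurrence fits in a few lines. The following is a minimal Python sketch, not the authors' C++ implementation, assuming biallelic sites coded as the integers 0 and 1, a dense panel `H[j][i]`, and per-site parameter lists `rho[i]` (the per-haplotype switch probability $\rho_i$ of Eq. (3), with `rho[0]` unused) and `mu[i]` ($\mu_i$ of Eq. (4)); the function name `ls_forward_naive` is hypothetical. It relies on the sum over predecessor states collapsing to one term per haplotype plus a shared sum, as formalized in the "Efficient dynamic programming" section.

```python
from typing import Sequence


def ls_forward_naive(H: Sequence[Sequence[int]], o: Sequence[int],
                     rho: Sequence[float], mu: Sequence[float]) -> float:
    """O(nk) forward algorithm for the haploid Li and Stephens model (biallelic sketch).

    H[j][i]: allele (0/1) of haplotype j at site i; o[i]: observed allele.
    rho[i]: switch probability rho_i into site i (rho[0] is unused).
    mu[i]: mutation probability mu_i at site i.
    """
    k, n = len(H), len(o)

    def emit(j: int, i: int) -> float:
        # mu_i(j): 1 - mu_i on a match, mu_i on a mismatch (A = 2 alleles)
        return 1.0 - mu[i] if H[j][i] == o[i] else mu[i]

    # p_1[j] = mu_1(j) / k
    p = [emit(j, 0) / k for j in range(k)]
    for i in range(1, n):
        S_prev = sum(p)                      # S_{i-1}, shared by every haplotype
        stay = 1.0 - k * rho[i]              # coefficient (1 - k rho_i)
        p = [emit(j, i) * (stay * p[j] + rho[i] * S_prev) for j in range(k)]
    return sum(p)                            # P(o | H) = sum of the final column
```

For example, with `H = [[0, 0], [1, 0]]`, `o = [0, 0]`, `rho = [0.0, 0.1]` and `mu = [0.01, 0.01]`, both haplotypes match at the second site, so the result is approximately 0.99 × 0.5 = 0.495.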

Li and Stephens like algorithms for large populations

The $O(nk)$ time complexity of the forward algorithm is intractable for reference panels with large size k. The UK Biobank has amassed array samples. Whole genome sequencing projects, with a denser distribution of sites, are catching up. Major sequencing projects with 100,000 or more samples are nearing completion. Others numbering k in the millions have been announced. These large population datasets have significant potential benefits: they are statistically likely to represent population frequencies more accurately, and those employing genome sequencing can provide phasing information for rare variants.

To handle datasets even a fraction of these sizes, modern haplotype inference algorithms depend on models which are simpler than the Li and Stephens model or which sample subsets of the data. For example, the common tools Eagle-2, Beagle, HAPI-UR, and Shapeit-2 and -3 [3–7] either restrict where recombination can occur, fail to model mutation, model long-range phasing approximately, or sample subsets of the reference panel.

Lunter’s “fastLS” algorithm [8] demonstrated that haplotype models which include all k reference panel haplotypes could find the Viterbi maximum likelihood path in time sublinear in k, using preprocessing to reduce redundant information in the algorithm’s input. However, his techniques do not extend to the forward and forward–backward algorithms.

Our contributions

We have developed an arithmetically exact forward algorithm whose expected time complexity is a function of the expected allele distribution of the reference panel. This expected time complexity proves to be significantly sublinear in reference panel size. We have also developed a technique for succinctly representing large panels of haplotypes whose size also scales as a sublinear function of the expected allele distribution.

Our forward algorithm contains three optimizations, all of which might be generalized to other bioinformatics algorithms. In "Sparse representation of haplotypes" section, we rewrite the reference panel as a sparse matrix containing the minimum information necessary to directly infer all allele values. In "Efficient dynamic programming" section, we define recurrence relations which are numerically equivalent to the forward algorithm but use minimal arithmetic operations. In "Lazy evaluation of dynamic programming rows" section, we delay computation of forward states using a lazy evaluation algorithm which benefits from blocks of common sequence composed of runs of major alleles. Our methods apply to other models which share certain redundancy properties with the monoploid Li and Stephens model.

Sparse representation of haplotypes

The forward algorithm to calculate the probability P(o|H) takes as input a length n vector o and a $k \times n$ matrix of haplotypes H. In general, any algorithm which is sublinear in its input inherently requires some sort of preprocessing to identify and reduce redundancies in the data. However, the algorithm only becomes effectively sublinear if this preprocessing can be amortized over many iterations. In this case, we are able to preprocess H into a sparse representation which will on average contain far fewer than $O(nk)$ data points.

This is the first component of our strategy. We use a variant of column-sparse-row matrix encoding to allow fast traversal of our haplotype matrix H. This encoding has the dual benefit of also allowing reversible size compression of our data. We propose that this is one good general data representation on which to build other computational work using very large genotype or haplotype data. Indeed, extrapolating from our single-chromosome results, the 1000 Genomes Phase 3 haplotypes across all chromosomes should simultaneously fit uncompressed in 11 GB of memory.

We will show that we can evaluate the Li and Stephens forward algorithm without needing to uncompress this sparse matrix.

Sparse column representation of haplotype alleles

Consider a biallelic genetic site i with alleles $\{A, B\}$. Consider the vector $(h_{1,i}, h_{2,i}, \ldots, h_{k,i}) \in \{A, B\}^k$ of alleles of haplotypes j at site i. Label the allele A or B which occurs more frequently in this vector as the major allele 0, and the one which occurs less frequently as the minor allele 1. We then encode this vector by storing the value A or B of the major allele 0, and the indices of the haplotypes which take on allele value 1 at this site.

We will write $\phi_i = \{j : h_{j,i} = 1\}$ for the subvector of alleles of haplotypes consisting of those haplotypes which possess the minor allele 1 at site i. We will write $|\phi_i|$ for the multiplicity of the minor allele. We call this vector $\phi_i$ the information content of the haplotype cohort H at the site i.
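A minimal Python sketch of this column encoding follows. It assumes alleles are already coded as the integers 0 and 1 (standing in for A and B), breaks ties in favour of allele 0, and uses hypothetical names (`SparseSite`, `encode_panel`, `decode_allele`). The stored `major` field is the actual allele value at the site, so any entry of H is recovered as that value unless the haplotype index appears in the site's minor list $\phi_i$.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class SparseSite:
    major: int            # allele value (0/1) of the major allele at this site
    minor_idx: List[int]  # phi_i: indices j of haplotypes carrying the minor allele


def encode_panel(H: Sequence[Sequence[int]]) -> List[SparseSite]:
    """Column-wise sparse encoding of a 0/1 panel H[j][i] (k haplotypes, n sites)."""
    k, n = len(H), len(H[0])
    sites: List[SparseSite] = []
    for i in range(n):
        ones = sum(H[j][i] for j in range(k))
        major = 1 if ones > k - ones else 0   # tie: allele 0 is called major
        sites.append(SparseSite(major, [j for j in range(k) if H[j][i] != major]))
    return sites


def decode_allele(sites: List[SparseSite], j: int, i: int) -> int:
    """Recover H[j][i]; the membership test costs O(|phi_i|)."""
    s = sites[i]
    return 1 - s.major if j in s.minor_idx else s.major
```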

Relation to the allele frequency spectrum

Our sparse representation of the haplotype reference panel benefits from the recent finding [9] that the distribution over sites of minor allele frequencies is biased towards low frequencies.1

Clearly, the distribution of $|\phi_i|$ is precisely the allele frequency spectrum. More formally,

Lemma 1

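Let $E[\bar{f}](k)$ be the expected mean minor allele frequency for k genotypes, counted as the number of haplotypes carrying the minor allele. Then

$$E\left[\frac{1}{n}\sum_{i=1}^{n} |\phi_i|\right] = E[\bar{f}](k) \tag{6}$$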

Corollary 1

If $O\big(E[\bar{f}](k)\big) < O(k)$, then $O\left(\sum_{i=1}^{n} |\phi_i|\right) < O(nk)$ in expected value.
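The quantity in Lemma 1 is straightforward to measure on a concrete panel. The Python helper below (a hypothetical name, assuming 0/1-coded biallelic sites in a dense `H[j][i]`) returns the mean of $|\phi_i|$ over sites; dividing it by k gives the fraction of the nk panel entries that the sparse representation stores as indices.

```python
from typing import Sequence


def mean_minor_count(H: Sequence[Sequence[int]]) -> float:
    """Mean over sites of |phi_i|, the minor allele count, for a 0/1 panel H[j][i]."""
    k, n = len(H), len(H[0])
    total = 0
    for i in range(n):
        ones = sum(H[j][i] for j in range(k))
        total += min(ones, k - ones)   # |phi_i| at site i
    return total / n
```

For the 1000 Genomes chromosome 22 data described in the Results, the reported mean $|\phi_i|$ of 59.9 with k = 5008 corresponds to about 1.2% of the panel entries.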

Dynamic reference panels

Adding or rewriting a haplotype is constant time per site per haplotype unless this edit changes which allele is the most frequent. It can be achieved by addition or removal of single entries from the row-sparse-column representation, wherein, since our implementation does not require that the column indices be stored in order, these operations can be made O(1). This allows our algorithm to extend to uses of the Li and Stephens model where one might want to dynamically edit the reference panel. The exception occurs when $|\phi_i| = k/2$; here it is not absolutely necessary to keep the formalism that the indices stored actually be the minor allele.
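Constant-time edits follow from not keeping each minor-allele index list sorted. One common way to get O(1) insertion and deletion in an unordered index list is the swap-with-last ("swap-and-pop") technique sketched below in Python. The class `MinorIndexSet` is hypothetical and is not taken from the authors' library; it also does not handle an edit that makes the minor allele the majority.

```python
from typing import Dict, List


class MinorIndexSet:
    """Unordered set of haplotype indices with O(1) add/remove (illustrative sketch).

    A list holds the indices; a dict maps each index to its list position.
    Removal moves the last element into the vacated slot, so order is not kept.
    """

    def __init__(self) -> None:
        self.items: List[int] = []
        self.pos: Dict[int, int] = {}

    def add(self, j: int) -> None:
        if j not in self.pos:
            self.pos[j] = len(self.items)
            self.items.append(j)

    def remove(self, j: int) -> None:
        idx = self.pos.pop(j)
        last = self.items.pop()
        if last != j:               # fill the hole with the former last element
            self.items[idx] = last
            self.pos[last] = idx

    def __len__(self) -> int:
        return len(self.items)

    def __contains__(self, j: int) -> bool:
        return j in self.pos
```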

Implementation

For biallelic sites, we store our $\phi_i$'s using a length-n vector of length-$|\phi_i|$ vectors containing the indices j of the haplotypes $h_j \in \phi_i$, and a length-n vector listing the major allele at each site (see Fig. 1 panel iii). Random access by key i to iterators to the first elements of sets $\phi_i$ is O(1), and iteration across these $\phi_i$ is linear in the size of $\phi_i$. For multiallelic sites, the data structure uses slightly more space but has the same speed guarantees.

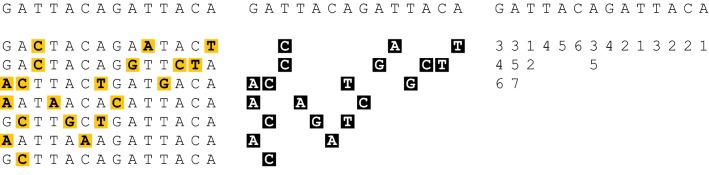

Fig. 1.

Information content of array of template haplotypes. (i) Reference panel with mismatches to haplotype o shown in yellow. (ii) Alleles at site i of elements of $\phi_i$ in black. (iii) Vectors to encode $\phi_i$ at each site

Generating these data structures takes $O(nk)$ time but is embarrassingly parallel in n. Our “*.slls” data structure doubles as a succinct haplotype index which could be distributed instead of a large vcf record (though genotype likelihood compression is not accounted for). A vcf-to-slls conversion tool is found in our github repository.

Efficient dynamic programming

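We begin with the recurrence relation of the classic forward algorithm applied to the Li and Stephens model [1]. To establish our notation, recall that we write $p_i[j] = P(x_j(i), o_1, \ldots, o_i \mid H)$, that we write $\mu_i(j)$ as shorthand for $p(o_i \mid x_j(i))$, and that we have initialized $p_1[j] = p(x_j(1), o_1 \mid H) = \frac{\mu_1(j)}{k}$. For $i > 1$, summing the transition probabilities (3) over the predecessor states gives $(1-(k-1)\rho_i)\,p_{i-1}[j] + \rho_i\left(S_{i-1} - p_{i-1}[j]\right)$, so we may write:

$$p_i[j] = \mu_i(j)\left((1 - k\rho_i)\, p_{i-1}[j] + \rho_i S_{i-1}\right) \tag{7}$$

$$S_i = \sum_{j=1}^{k} p_i[j] \tag{8}$$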

We will reduce the number of summands in (8) and reduce the number of indices j for which (7) is evaluated. This will use the information content defined in "Sparse column representation of haplotype alleles" section.

Lemma 2

The summation (8) is calculable using strictly fewer than k summands.

Proof

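Suppose first that $\mu_i(j) = \mu_i$ for all j. Then

$$S_i = \sum_{j=1}^{k} p_i[j] = \mu_i \sum_{j=1}^{k}\left((1-k\rho_i)\, p_{i-1}[j] + \rho_i S_{i-1}\right) \tag{9}$$

$$= \mu_i\left((1-k\rho_i)\, S_{i-1} + k\rho_i S_{i-1}\right) = \mu_i S_{i-1} \tag{10}$$

Now suppose that $\mu_i(j) = 1 - \mu_i$ for some set of j. We must then correct for these j. Since $p_i[j] = (1-\mu_i)\left((1-k\rho_i)\, p_{i-1}[j] + \rho_i S_{i-1}\right)$ for these j, this gives us

$$S_i = \mu_i S_{i-1} + \frac{(1-\mu_i) - \mu_i}{1-\mu_i} \sum_{j \,:\, \mu_i(j) = 1-\mu_i} p_i[j] \tag{11}$$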

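The same argument holds when we reverse the roles of $\mu_i$ and $1-\mu_i$. Therefore we can choose which calculation to perform based on which has fewer summands. Taking the correction set to be the minor allele carriers $\phi_i$, which by construction contain at most $k/2 < k$ haplotypes, gives us the following formula:

$$S_i = \alpha S_{i-1} + \beta \sum_{j \in \phi_i} p_i[j] \tag{12}$$

where

$$\alpha = \begin{cases} 1-\mu_i & \text{if the haplotypes in } \phi_i \text{ carry an allele } a \neq o_i \\ \mu_i & \text{otherwise} \end{cases} \tag{13}$$

$$\beta = \begin{cases} \dfrac{2\mu_i - 1}{\mu_i} & \text{if the haplotypes in } \phi_i \text{ carry an allele } a \neq o_i \\ \dfrac{1-2\mu_i}{1-\mu_i} & \text{otherwise} \end{cases} \tag{14}$$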
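Eqs. (12)–(14) can be checked numerically against the dense sum. The following Python sketch (hypothetical function name; biallelic sites, with `mu_i` the mismatch emission probability of Eq. (4)) updates $S_i$ from $S_{i-1}$ and the $|\phi_i|$ values $p_i[j]$, $j \in \phi_i$, choosing $\alpha$ and $\beta$ according to whether the minor allele carriers match $o_i$.

```python
from typing import Sequence


def sparse_sum_update(S_prev: float, p_minor: Sequence[float],
                      mu_i: float, minor_matches: bool) -> float:
    """Eq. (12): S_i = alpha * S_{i-1} + beta * sum_{j in phi_i} p_i[j].

    p_minor: the values p_i[j] for j in phi_i only.
    minor_matches: True if the minor allele at site i equals o_i.
    """
    if minor_matches:                         # phi_i carry o_i ("otherwise" case)
        alpha, beta = mu_i, (1 - 2 * mu_i) / (1 - mu_i)
    else:                                     # phi_i carry an allele a != o_i
        alpha, beta = 1 - mu_i, (2 * mu_i - 1) / mu_i
    return alpha * S_prev + beta * sum(p_minor)
```

Only $|\phi_i|$ values enter the sum, which is where the reduction below k summands in Lemma 2 comes from.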

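We note another redundancy in our calculations. For the proper choices of $\mu'_i, \mu''_i$ among $\{\mu_i, 1-\mu_i\}$, the recurrence relations (7) are linear maps $\mathbb{R} \to \mathbb{R}$

$$f_i : x \mapsto \mu'_i (1-k\rho_i)\, x + \mu'_i \rho_i S_{i-1} \tag{15}$$

$$g_i : x \mapsto \mu''_i (1-k\rho_i)\, x + \mu''_i \rho_i S_{i-1} \tag{16}$$

of which there are precisely two unique maps, $f_i$ corresponding to the recurrence relations for those $x_j(i)$ such that $j \notin \phi_i$, and $g_i$ to those such that $j \in \phi_i$.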
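Because each per-site map of Eqs. (15) and (16) has the form $x \mapsto ax + b$, any composition of such maps again has that form and can be stored as two numbers, however many sites it spans. This is what makes the lazy evaluation below possible: a forward value several sites later is obtained by applying one stored composed map. A minimal Python sketch with hypothetical names `Affine` and `site_map`:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Affine:
    """The map x -> a*x + b; the per-site maps of Eqs. (15)-(16) have this form."""
    a: float
    b: float

    def __call__(self, x: float) -> float:
        return self.a * x + self.b

    def after(self, g: "Affine") -> "Affine":
        """Return self o g, i.e. x -> self(g(x)), again a single affine map."""
        return Affine(self.a * g.a, self.a * g.b + self.b)


def site_map(emission: float, k: int, rho: float, S_prev: float) -> Affine:
    # x -> mu'(1 - k rho) x + mu' rho S_{i-1}, with mu' the emission probability
    return Affine(emission * (1 - k * rho), emission * rho * S_prev)
```

Composing maps site by site (`F = g.after(F)`) costs O(1) per site and yields the same value as applying the maps one after another.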

Lemma 3

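If $j \notin \phi_i$ and $j \notin \phi_{i-1}$, then $S_i$ can be calculated without knowing $p_{i-1}[j]$ and $p_i[j]$. If $j \notin \phi_{i-1}$ and $j' \neq j$, then $p_i[j']$ can be calculated without knowing $p_{i-1}[j]$.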

Proof

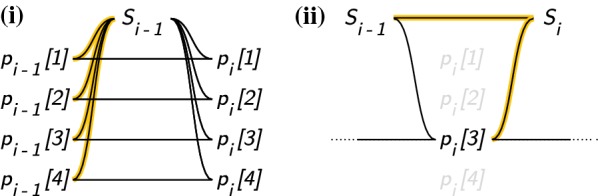

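Equation (12) lets us calculate $S_{i-1}$ without knowing $p_{i-1}[j]$ for any $j \notin \phi_{i-1}$. From $S_{i-1}$ we also have $f_i$ and $g_i$. Therefore, we can calculate $p_i[j'] = f_i(p_{i-1}[j'])$ or $g_i(p_{i-1}[j'])$ without knowing $p_{i-1}[j]$, provided that $j' \neq j$. This then shows us that we can calculate $p_i[j']$ for all $j' \in \phi_i$ without knowing $p_{i-1}[j]$ for any j such that $j \notin \phi_i$ and $j \notin \phi_{i-1}$. Finally, the first statement follows from another application of (12) (Fig. 2).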

Fig. 2.

Work done to calculate the sum of haplotype probabilities at a site for the conventional and our sublinear forward algorithm. Using an example site i, we illustrate the number of arithmetic operations used in (i) the conventional Li and Stephens HMM recurrence relations and (ii) our procedure specified in Eq. (12). Black lines correspond to arithmetic operations; operations which cannot be parallelized over j are colored yellow

Corollary 2

The recurrences (8) and the minimum set of recurrences (7) needed to compute (8) can be evaluated in $O(|\phi_i|)$ time, assuming that $p_{i-1}[j]$ for $j \in \phi_i$ have been computed.

We address the assumption on prior calculation of the necessary $p_{i-1}[j]$'s in "Lazy evaluation of dynamic programming rows" section.

Time complexity

Recall that we defined $E[\bar{f}](k)$ as the expected mean minor allele frequency in a sample of size k. Suppose that it is comparatively trivial to calculate the missing $p_{i-1}[j]$ values. Then by Corollary 2 the procedure in Eq. (12) has expected time complexity $O\left(\sum_i |\phi_i|\right) = O\left(n\, E[\bar{f}](k)\right)$.

Lazy evaluation of dynamic programming rows

Corollary 2 was conditioned on the assumption that specific forward probabilities had already been evaluated. We will describe a second algorithm which performs this task efficiently by avoiding performing any arithmetic which will prove unnecessary at future steps.2

Equivalence classes of longest major allele suffixes

Lemma 4

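Suppose that $j \notin \phi_a$ for $a \in \{\ell+1, \ldots, i-1\}$. Then the dynamic programming matrix entries $p_{\ell+1}[j], \ldots, p_{i-1}[j]$ need not be calculated in order to calculate $S_{\ell+1}, \ldots, S_{i-1}$.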

Proof

By repeated application of Lemma 3.

Corollary 3

Under the same assumption on j, $p_{\ell+1}[j], \ldots, p_{i-1}[j]$ need not be calculated in order to calculate $f_{\ell+1}, \ldots, f_i$. This is easily seen by definition of $f_i$.

Lemma 5

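Suppose that $p_\ell[j]$ is known, and $j \notin \phi_a$ for $a \in \{\ell+1, \ldots, i-1\}$. Then $p_{i-1}[j]$ can be calculated in the time which it takes to calculate $f_{i-1} \circ \cdots \circ f_{\ell+1}$.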

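Proof

Since $j \notin \phi_a$ for every intermediate site a, each step applies the map $f_a$, so $p_{i-1}[j] = f_{i-1} \circ \cdots \circ f_{\ell+1}(p_\ell[j])$.

It is immediately clear that calculating the $p_i[j]$ lends itself well to lazy evaluation. Specifically, the $p_{i-1}[j]$ with $j \notin \phi_i$ are data which need not be evaluated yet at step i. Therefore, if we can aggregate the work of calculating these data at a later iteration of the algorithm, and only if needed then, we can potentially save a considerable amount of computation.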

Definition 2

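(Longest major allele suffix classes) Define $\chi_\ell^{i-1} = \phi_\ell \cap \left(\bigcup_{a=\ell+1}^{i-1} \phi_a\right)^{c}$. That is, let $\chi_\ell^{i-1}$ be the class of all haplotypes whose sequence up to site $i-1$ shares the suffix from $\ell+1$ to $i-1$ inclusive consisting only of major alleles, but lacks any longer suffix composed only of major alleles.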

Remark 1

$\chi_\ell^{i-1}$ is the set of all $h_j$ where $p_\ell[j]$ was needed to calculate $S_\ell$ but no $p_a[j]$ with $\ell < a \le i-1$ has been needed to calculate $S_a$ since.

Note that for each i, the equivalence classes $\chi_\ell^{i-1}$ form a disjoint cover of the set of all haplotypes $h_j \in H$.

Remark 2

$\forall \ell < i$, $\chi_\ell^{i} = \chi_\ell^{i-1} \setminus \phi_i$
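The classes of Definition 2 can be read off by recording, for each haplotype, the most recent site at which it carried the minor allele. The Python sketch below uses 0-based site indices and passes the sets $\phi_0, \ldots, \phi_{\text{upto}}$ as `minor_sets`. As an assumption of the sketch (hypothetical name `suffix_classes`), haplotypes that have not yet carried any minor allele are placed in an extra class labelled -1, so that the classes always cover all k haplotypes.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Set


def suffix_classes(minor_sets: Sequence[Set[int]], k: int, upto: int) -> Dict[int, List[int]]:
    """Group haplotypes by the last site l <= upto at which they carried the minor allele.

    Haplotypes never in any minor set so far get l = -1 (a convention of this sketch).
    """
    last = [-1] * k
    for l in range(upto + 1):
        for j in minor_sets[l]:
            last[j] = l                 # later sites overwrite earlier ones
    classes: Dict[int, List[int]] = defaultdict(list)
    for j, l in enumerate(last):
        classes[l].append(j)
    return dict(classes)
```

Moving from `upto` to `upto + 1` only moves the haplotypes of the new minor set into a fresh class, in line with the set-difference relation above.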

Definition 3

Write $F_a^b$ as shorthand for $f_b \circ f_{b-1} \circ \cdots \circ f_{a+1}$, taking $F_a^a$ to be the identity map.

The lazy evaluation algorithm

Our algorithm will aim to:

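Never evaluate $p_{i-1}[j]$ explicitly unless $j \in \phi_{i-1}$ or $j \in \phi_i$.

Amortize the calculations of $F_\ell^{i-1}$ over all $h_j \in \chi_\ell^{i-1}$.

Share the work of calculating subsequences of compositions of maps $F_\ell^{i-1}$ with other compositions of maps $F_{\ell'}^{i'-1}$ where $\ell' \le \ell$ and $i' \ge i$.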

To accomplish these goals, at each iteration i, we maintain the following auxiliary data. The meaning of these are clarified by reference to Figs. 3, 4 and 5.

The partition of all haplotypes $h_j \in H$ into equivalence classes $\chi_\ell^{i-1}$ according to longest major allele suffix of the truncated haplotype at $i-1$. See Definition 2 and Fig. 3.

The tuples $(\chi_\ell^{i-1}, F_\ell^m, m)$ of equivalence classes stored with linear map prefixes $F_\ell^m$ of the map $F_\ell^{i-1}$ which would be necessary to fully calculate $p_{i-1}[j]$ for the j they contain, and the largest site index m covered by this prefix. See Fig. 5.

The ordered sequence, in reverse order, of all distinct indices m such that m is contained in some tuple. See Figs. 3, 5.

The maps $F_{m_r}^{m_{r-1}}$, where $m_1 > m_2 > \cdots$ are the stored indices and $m_0 = i-1$, which partition the longest prefix into disjoint submaps at the indices m. See Fig. 3. These are used to rapidly extend prefixes $F_\ell^m$ into prefixes $F_\ell^{i-1}$.

Finally, we will need the following ordering on tuples to describe our algorithm:

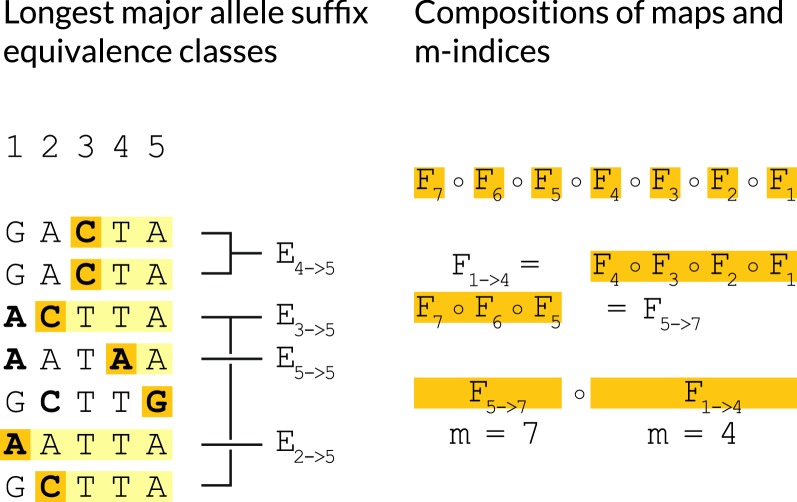

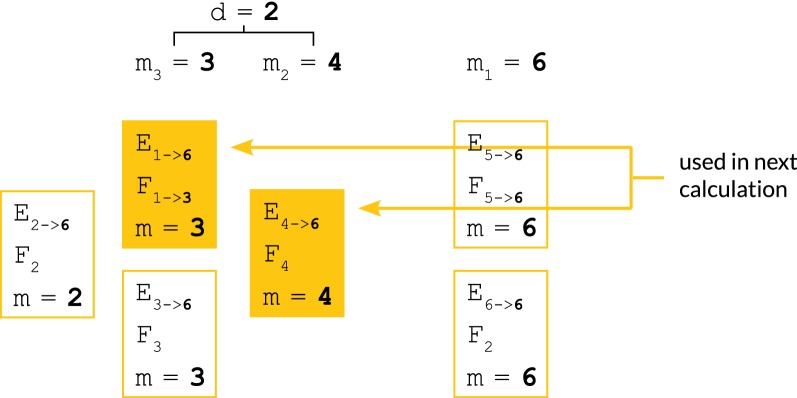

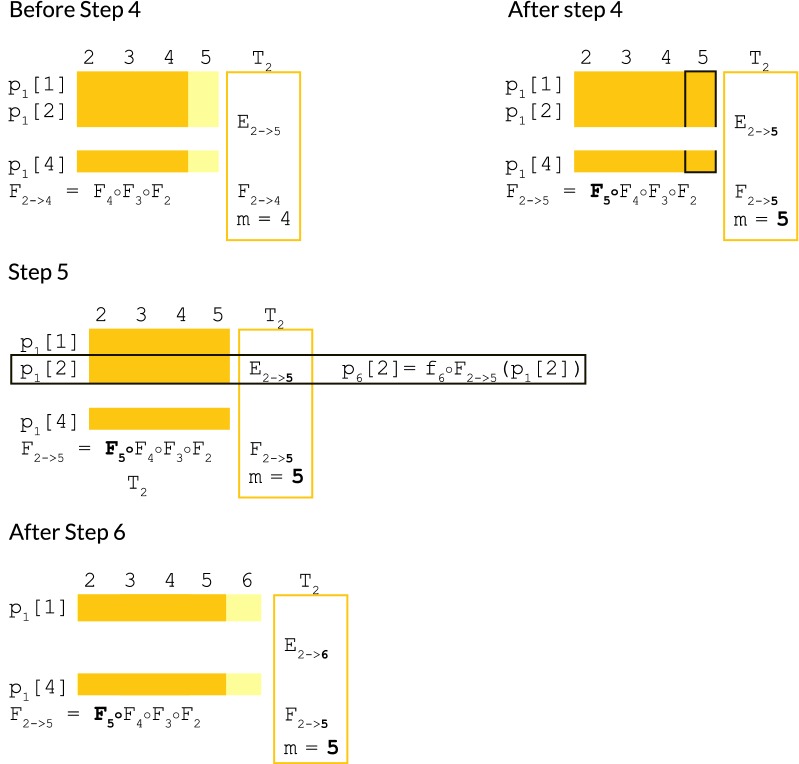

Fig. 3.

Longest major allele suffix classes, linear map compositions. Illustrations clarifying the meanings of the equivalence classes $\chi_\ell^{i-1}$ (left) and the maps $F_a^b$. Indices m are sites whose indices are b's in stored maps of the form $F_a^b$

Fig. 4.

Partial ordering of tuples of (equivalence class, linear map, index) used as state information in our algorithm. The ordering of the tuples $(\chi_\ell^{i-1}, F_\ell^m, m)$. Calculation of the depth d of an update which requires haplotypes contained in the equivalence classes defining the two tuples shown in solid yellow

Fig. 5.

Key steps involved in calculating $p_{i-1}[j]$ by delayed evaluation. An illustration of the manipulation of the tuple $(\chi_1^{i-1}, F_1^m, m)$ by the lazy evaluation algorithm, and how it is used to calculate $p_{i-1}[j]$ from $p_1[j]$ just-in-time. In this case, we wish to calculate $p_{i-1}[j]$ for a haplotype $h_j$ with $j \in \phi_i$. This haplotype is a member of the equivalence class $\chi_1^{i-1}$, since its forward probability hasn’t needed to be calculated since time 1. In step 4 of the algorithm, we therefore must update the whole tuple by post-composing the partially completed prefix $F_1^m$ of the map $F_1^{i-1}$ which we need using our already-calculated suffix map $F_m^{i-1}$. In step 5, we use $F_1^{i-1}$ to compute $p_{i-1}[j] = F_1^{i-1}(p_1[j])$. In step 6, we update the tuple to reflect its loss of $h_j$, which is now a member of $\chi_i^i$

Definition 4

Impose a partial ordering < on the tuples by $(\chi_\ell^{i-1}, F_\ell^m, m) < (\chi_{\ell'}^{i-1}, F_{\ell'}^{m'}, m')$ iff $m < m'$. See Fig. 4.

We are now ready to describe our lazy evaluation algorithm which evaluates $p_{i-1}[j]$ just-in-time while fulfilling the aims listed at the top of this section, by using the auxiliary state data specified above.

The algorithm is simple but requires keeping track of a number of intermediate indices. We suggest referring to Figs. 3, 4 and 5 as a visual aid. We state it in six steps as follows.

- Step 1:

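Identifying the tuples containing $h_j$, $j \in \phi_i$: time complexity $O(|\phi_i|)$

Identify the subset $\Phi$ of the tuples $(\chi_\ell^{i-1}, F_\ell^m, m)$ for which there exists some $h_j \in \chi_\ell^{i-1}$ such that $j \in \phi_i$.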

- Step 2:

Identifying the preparatory map suffix calculations to be performed: time complexity $O(|\phi_i|)$

Find the maximum depth d of any tuple in $\Phi$ with respect to the partial ordering above. Equivalently, find the minimum m such that $(\chi_\ell^{i-1}, F_\ell^m, m) \in \Phi$. See Fig. 4.

- Step 3:

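Performing preparatory map suffix calculations: time complexity $O(d)$

Step 3.1: Let $m_1 > m_2 > \cdots > m_d$ be the last d indices m in the reverse ordered list of indices, and set $m_0 = i-1$. By iteratively composing the maps $F_{m_r}^{m_{r-1}}$ which we have already stored, construct the telescoping suffixes $F_{m_r}^{i-1} = F_{m_{r-1}}^{i-1} \circ F_{m_r}^{m_{r-1}}$ needed to update the tuples $(\chi_\ell^{i-1}, F_\ell^{m_r}, m_r)$ to $(\chi_\ell^{i-1}, F_\ell^{i-1}, i-1)$.

Step 3.2: For each $m_r$, choose an arbitrary tuple $(\chi_\ell^{i-1}, F_\ell^{m_r}, m_r)$ and update it to $(\chi_\ell^{i-1}, F_\ell^{i-1}, i-1)$.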

- Step 4:

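Performing the deferred calculations for the tuples containing $h_j$, $j \in \phi_i$: time complexity $O(|\phi_i|)$

If not already done in Step 3.2, for every tuple $(\chi_\ell^{i-1}, F_\ell^m, m) \in \Phi$, extend its map element from $F_\ell^m$ to $F_\ell^{i-1} = F_m^{i-1} \circ F_\ell^m$ in O(1) time using the maps calculated in Step 3.1. See Fig. 5.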

- Step 5:

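Calculating $p_{i-1}[j]$ just-in-time: time complexity $O(|\phi_i|)$

Note: The calculation of interest is performed here.

Using the maps calculated in Step 3.2 or 4, finally evaluate the values $p_{i-1}[j] = F_\ell^{i-1}(p_\ell[j])$ for each $j \in \phi_i$, where $h_j \in \chi_\ell^{i-1}$. See Fig. 5.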

- Step 6:

Updating our equivalence class/update map prefix tuple auxiliary data structures: time complexity $O(|\phi_i|)$

Create the new tuple $(\chi_i^i, F_i^i, i)$, where $\chi_i^i = \phi_i$ and $F_i^i$ is the identity map.

Remove the $h_j$, $j \in \phi_i$, from their equivalence classes $\chi_\ell^{i-1}$ and place them in the new equivalence class $\chi_i^i$. If this empties the equivalence class in question, delete its tuple. To keep memory use bounded by the number of haplotypes, our implementation uses an object pool to store these tuples.

If an index m no longer has any corresponding tuple, delete it, and furthermore replace the stored maps $F_{m'}^{m}$ and $F_{m}^{m''}$, where $m' < m < m''$ are its neighbouring indices, with the single map $F_{m'}^{m''} = F_m^{m''} \circ F_{m'}^{m}$. This step is added to reduce the upper bound on the maximum possible number of compositions of maps which are performed in any given step.
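To make the interplay of Eq. (12), the per-site maps of Eqs. (15)–(16) and the suffix classes concrete, here is a deliberately simplified Python sketch that computes P(o|H) exactly while touching only the haplotypes in $\phi_i$ at each site. It keeps, per haplotype, only the last site ℓ at which its forward value was evaluated (its class) and stores every major-allele map (written $f_a$ here) as a coefficient pair. At each site it builds the telescoping compositions $f_{i-1} \circ \cdots \circ f_{\ell+1}$ once, shared by all classes it touches, visiting classes from the most recent downwards. Unlike the six steps above, it does not keep persistent tuples, an index list or merged maps, so it re-walks the suffix at every site and its per-site cost can grow with the distance back to the oldest class touched: it illustrates exactness, not the paper's runtime. The function name and the virtual start site (forward value 1/k, no transition into the first site) are assumptions of this sketch, and 0 < μ_i < 1 is assumed.

```python
from typing import Dict, List, Sequence, Set, Tuple


def ls_forward_sparse_lazy(minor_sets: Sequence[Set[int]], major: Sequence[int],
                           o: Sequence[int], rho: Sequence[float],
                           mu: Sequence[float], k: int) -> float:
    """Exact P(o|H) for a biallelic panel, evaluating only phi_i explicitly at site i.

    minor_sets[i] is phi_i, major[i] the major allele (0/1), o[i] the observed allele.
    rho[0] is ignored (no transition into the first site); assumes 0 < mu[i] < 1.
    """
    n = len(o)
    last = [-1] * k            # site of the last explicit evaluation of p[j]
    val = [1.0 / k] * k        # p_last[j]; site -1 is a virtual start with value 1/k
    maps: List[Tuple[float, float]] = []   # maps[a] = (A, B): f_a(x) = A*x + B
    S_prev = 1.0               # sum of the virtual start values
    for i in range(n):
        r = 0.0 if i == 0 else rho[i]
        stay = 1.0 - k * r
        e_major = 1.0 - mu[i] if o[i] == major[i] else mu[i]
        e_minor = mu[i] if o[i] == major[i] else 1.0 - mu[i]
        # Group phi_i by class: the last site l at which p[j] was evaluated.
        by_class: Dict[int, List[int]] = {}
        for j in minor_sets[i]:
            by_class.setdefault(last[j], []).append(j)
        # Invariant: (A, B) represents f_{i-1} o ... o f_{a+1} (identity when a = i-1).
        A, B, a = 1.0, 0.0, i - 1
        minor_sum = 0.0
        for l in sorted(by_class, reverse=True):
            while a > l:                       # extend: F <- F o f_a
                fa, fb = maps[a]
                A, B = A * fa, A * fb + B
                a -= 1
            for j in by_class[l]:
                p_prev = A * val[j] + B        # p_{i-1}[j], evaluated just-in-time
                p_new = e_minor * (stay * p_prev + r * S_prev)   # minor-allele map
                val[j], last[j] = p_new, i
                minor_sum += p_new
        # Eq. (12) with alpha = e_major and beta = (e_minor - e_major) / e_minor.
        S_cur = e_major * S_prev + (e_minor - e_major) / e_minor * minor_sum
        maps.append((e_major * stay, e_major * r * S_prev))      # store f_i
        S_prev = S_cur
    return S_prev
```

Its result matches the dense $O(nk)$ recurrence up to floating-point rounding; the stored maps grow with n, which the paper's merging of maps at deleted indices is designed to avoid.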

The following two trivial lemmas allow us to bound d by k, so that the aggregate time complexity of the lazy evaluation algorithm cannot exceed $O(nk)$. Due to the irregularity of the recursion pattern used by the algorithm, it is likely not possible to calculate a closed-form tight bound on $\sum_i d_i$; however, empirically it is asymptotically dominated by $\sum_i |\phi_i|$, as shown in the results which follow.

Lemma 6

The number of nonempty equivalence classes in existence at any iteration i of the algorithm is bounded by the number of haplotypes k.

Proof

Trivial but worth noting.

Lemma 7

The number of unique indices m in existence at any iteration i of the algorithm is bounded by the number of nonempty equivalence classes $\chi_\ell^{i-1}$.

Results

Implementation

Our algorithm was implemented as a C++ library located at https://github.com/yoheirosen/sublinear-Li-Stephens. Details of the lazy evaluation algorithm can be found there.

We also implemented the linear time forward algorithm for the haploid Li and Stephens model in C++ so as to evaluate both algorithms on an identical footing. Profiling was performed using a single Intel Xeon X7560 core running at 2.3 GHz on a shared memory machine. Our reference panels H were the phased haplotypes from the 1000 Genomes [10] phase 3 vcf records for chromosome 22 and subsamples thereof. Haplotypes o were randomly generated simulated descendants.

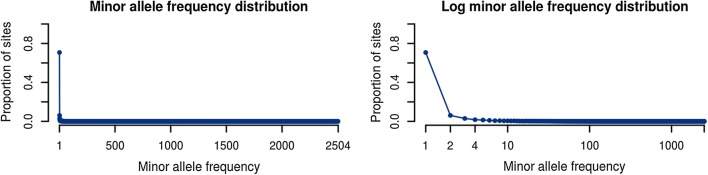

Minor allele frequency distribution for the 1000 Genomes dataset

We found it informative to determine the allele frequency spectrum for the 1000 Genomes dataset which we will use in our performance analyses. We simulated haplotypes o of 1,000,000 bp length on chromosome 22 and recorded the sizes of the sets $\phi_i$ for $1 \le i \le n$. These data produced a mean $|\phi_i|$ of 59.9, which is 1.2% of the size of k = 5008. We have plotted the distribution of $|\phi_i|$ which we observed from this experiment in Fig. 6. It is skewed toward low frequencies; the minor allele is unique at 71% of sites, and it is below 1% frequency at 92% of sites.

Fig. 6.

Biallelic site minor allele frequency distribution from 1000 Genomes chromosome 22. Note that the distribution is skewed away from the distribution classically theorized. The data used are the genotypes of the 1000 Genomes Phase 3 VCF, with minor alleles at multiallelic sites combined

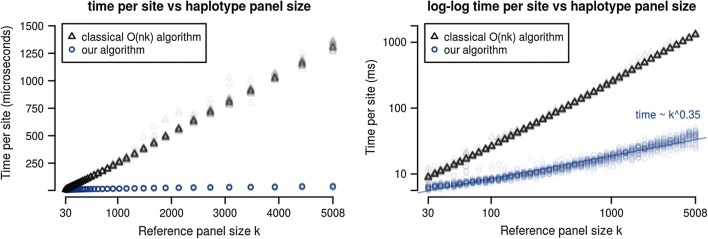

Comparison of our algorithm with the linear time forward algorithm

To compare the dependence of our algorithm’s runtime on haplotype panel size k against that of the standard linear LS forward algorithm, we measured the CPU time per genetic site of both across a range of haplotype panel sizes from 30 to 5008. This analysis was carried out as briefly described above. Haplotype panels spanning the range of sizes from 30 to 5008 haplotypes were subsampled from the 1000 Genomes phase 3 vcf records and loaded into memory in both uncompressed and our column-sparse-row format. Random sequences were sampled using a copying model with mutation and recombination, and the classical forward algorithm was run back to back with our algorithm for the same random sequence and same subsampled haplotype panel. Each set of runs was performed in triplicate to reduce stochastic error.

Figure 7 shows this comparison. We estimated the observed time complexity of our algorithm from the slope of the line of best fit to a log–log plot of time per site versus haplotype panel size.
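The exponent read off a log–log plot is the least-squares slope of $\log t$ against $\log k$. The Python sketch below is illustrative only: the function names are hypothetical, and the test inputs are synthetic power laws, not the measurements behind Fig. 7. The helper `extrapolate` applies a fitted power law, $t(k') = t(k)\,(k'/k)^c$, which is the kind of calculation behind extrapolating per-site runtimes to larger panels.

```python
import math
from typing import Sequence


def loglog_slope(ks: Sequence[float], times: Sequence[float]) -> float:
    """Least-squares slope c of log(time) versus log(k), i.e. time ~ k**c."""
    xs = [math.log(k) for k in ks]
    ys = [math.log(t) for t in times]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def extrapolate(t_ref: float, k_ref: float, k_new: float, c: float) -> float:
    """Apply the fitted power law: t(k_new) = t(k_ref) * (k_new / k_ref)**c."""
    return t_ref * (k_new / k_ref) ** c
```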

Fig. 7.

Runtime per site for conventional linear algorithm vs our sparse-lazy algorithm. Runtime per site as a function of haplotype reference panel size k for our algorithm (blue) as compared to the classical linear time algorithm (black). Both were implemented in C++ and benchmarked using datasets preloaded into memory. Forward probabilities are calculated for randomly generated haplotypes simulated by a recombination–mutation process, against random subsets of the 1000 genomes dataset

For data points where we used all 1000 Genomes project haplotypes (k = 5008), on average, time per site is 37 μs for our algorithm and 1308 μs for the linear LS algorithm. For the forthcoming 100,000 Genomes Project, these numbers can be extrapolated to 251 μs for our algorithm and 260,760 μs for the linear LS algorithm.

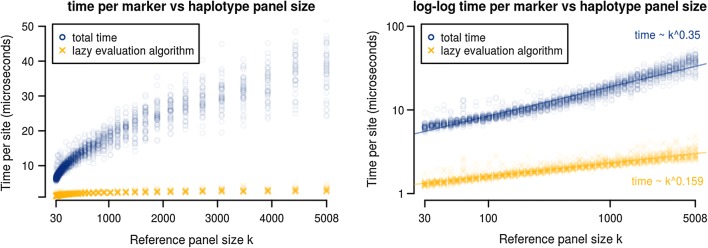

Lazy evaluation of dynamic programming rows

We also measured the time which our algorithm spent within the d-dependent portion of the lazy evaluation subalgorithm. In the average case, the time complexity of our lazy evaluation subalgorithm does not contribute to the overall algebraic time complexity of the algorithm (Fig. 8, right). The lazy evaluation runtime also contributes minimally to the total actual runtime of our algorithm (Fig. 8, left).

Fig. 8.

Runtime per site for the overall algorithm and for the recursion-depth dependent portion. Time per site for the lazy evaluation subalgorithm (yellow) vs. the full algorithm (blue). The experimental setup is the same as previously described, with the subalgorithm time determined by internally timing the recursion-depth d dependent portions of the lazy evaluation subalgorithm.

Sparse haplotype encoding

Generating our sparse vectors

We generated the haplotype panel data structures from "Sparse representation of haplotypes" section using the vcf-encoding tool vcf2slls which we provide. We built indices with multiallelic sites, which increases their time and memory profile relative to the results in "Minor allele frequency distribution for the 1000 Genomes dataset" section but allows direct comparison to vcf records. Encoding of chromosome 22 was completed in 38 min on a single CPU core. Because encoding is embarrassingly parallel across sites, using M CPU cores will reduce runtime by a factor proportional to M.

Size of sparse haplotype index

In uncompressed form, our *.slls index for chromosome 22 of the 1000 Genomes dataset, using uint16_t’s to encode haplotype ranks, was 285 MB in size versus 11 GB for the vcf record. When compressed with gzip, the same index was 67 MB in size versus 205 MB for the vcf record.

In the interest of speed (both for our algorithm and the $O(nk)$ algorithm), our experiments loaded entire chromosome sparse matrices into memory and stored haplotype indices as uint64_t’s. This requires on the order of 1 GB of memory for chromosome 22. For long chromosomes or larger reference panels on low-memory machines, the algorithm can operate by streaming sequential chunks of the reference panel.

Discussions and Conclusion

To the best of our knowledge, ours is the first forward algorithm for any haplotype model to attain sublinear time complexity with respect to reference panel size. Our algorithms could be incorporated into haplotype inference strategies by interfacing with our C++ library. This opens the potential for tools which are tractable on haplotype reference panels at the scale of current 100,000 to 1,000,000+ sample sequencing projects.

Applications which use individual forward probabilities

Our algorithm attains its runtime specifically for the problem of calculating the single overall probability and does not compute all nk forward probabilities. We can prove that if m many specific forward probabilities are also required as output, and if the time complexity of our algorithm is , then the time complexity of the algorithm which also returns the m forward probabilities is .

In general, haplotype phasing or genotype imputation tools use stochastic traceback or other similar sampling algorithms. The standard algorithm for stochastic traceback samples states from the full posterior distribution and therefore requires all forward probabilities. Its output therefore has size nk, which is also a lower bound on its runtime, giving $O(nk)$. The same is true for many applications of the forward–backward algorithm.

There are two possible approaches which might allow runtime sublinear in k for these applications. Using stochastic traceback as an example, the first is to devise a sampling algorithm which uses only a number of forward probabilities that grows sublinearly in k. The second is to succinctly represent forward probabilities such that nested sums of the nk forward probabilities can be queried from a sublinear amount of data. This should be possible, perhaps using the positional Burrows–Wheeler transform [11] as in [8], since we have already devised a forward algorithm with this property for a different model in [12].

Generalizability of algorithm

The optimizations which we have made are not strictly specific to the monoploid Li and Stephens algorithm. Necessary conditions for our reduction in the time complexity of the recurrence relations are

Condition 1

The number of distinct transition probabilities is constant with respect to number of states k.

Condition 2

The number of distinct emission probabilities is constant with respect to number of states k.

Favourable conditions for efficient time complexity of the lazy evaluation algorithm are

Condition 1

The number of unique update maps added per step is constant with respect to number of states k.

Condition 2

The update map extension operation is composition of functions of a class where composition is constant-time with respect to number of states k.

The reduction in time complexity of the recurrence relations depends on the Markov property; however, we hypothesize that the delayed evaluation needs only the semi-Markov property.

Other haplotype forward algorithms

Our optimizations are of immediate interest for other haplotype copying models. The following related algorithms have been explored without implementation.

Example 1

(Diploid Li and Stephens) We have yet to implement this model but expect average runtime at least subquadratic in reference panel size k. We build on the statement of the model and its optimizations in [13]. We have found the following recurrences which we believe will work when combined with a system of lazy evaluation algorithms:

Lemma 8

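The diploid Li and Stephens HMM may be expressed using recurrences of the form

$$p_i[j_1, j_2] = \alpha_p\, p_{i-1}[j_1, j_2] + \beta_p \left(S_{i-1}(j_1) + S_{i-1}(j_2)\right) + \gamma_p\, S_{i-1} \tag{17}$$

which use the intermediate sums defined as

$$S_{i-1}(j) = \sum_{j'=1}^{k} p_{i-1}[j, j'] \tag{18}$$

$$S_{i-1} = \sum_{j_1=1}^{k} \sum_{j_2=1}^{k} p_{i-1}[j_1, j_2] \tag{19}$$

where $\alpha_p, \beta_p, \gamma_p$ depend only on the diploid genotype $o_i$.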
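The form of Eq. (17) can be sanity-checked for the transition part alone. Assuming, for illustration, that each of the two copies switches template independently with the haploid probabilities of Eq. (3), the sum over all $k^2$ predecessor pairs collapses to $(1-k\rho_i)^2 p_{i-1}[j_1,j_2] + (1-k\rho_i)\rho_i$ times the row and column sums, plus $\rho_i^2 S_{i-1}$; multiplying by the emission probability of the pair gives one coefficient per term of Eq. (17). For a symmetric matrix the row and column sums coincide with the sums of Eq. (18). This Python sketch (hypothetical names, emission omitted) compares the $O(k^4)$ brute force with the $O(k^2)$ form.

```python
from typing import List

Matrix = List[List[float]]


def switch_prob(a: int, b: int, k: int, rho: float) -> float:
    """Haploid transition probability of Eq. (3) for a single copy."""
    return 1.0 - (k - 1) * rho if a == b else rho


def diploid_transition_bruteforce(p: Matrix, rho: float) -> Matrix:
    """O(k^4): sum over predecessor pairs of p[a][b] * T(a -> j1) * T(b -> j2)."""
    k = len(p)
    return [[sum(p[a][b] * switch_prob(a, j1, k, rho) * switch_prob(b, j2, k, rho)
                 for a in range(k) for b in range(k))
             for j2 in range(k)] for j1 in range(k)]


def diploid_transition_fast(p: Matrix, rho: float) -> Matrix:
    """O(k^2): the same quantity from per-haplotype sums and the total sum."""
    k = len(p)
    stay = 1.0 - k * rho
    row = [sum(p[j][b] for b in range(k)) for j in range(k)]  # sum over the 2nd copy
    col = [sum(p[a][j] for a in range(k)) for j in range(k)]  # sum over the 1st copy
    total = sum(row)                                          # S_{i-1}
    return [[stay * stay * p[j1][j2] + stay * rho * (row[j1] + col[j2]) + rho * rho * total
             for j2 in range(k)] for j1 in range(k)]
```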

Implementing and verifying the runtime of this extension of our algorithm will be among our next steps.

Example 2

(Multipopulation Li and Stephens) [14] We maintain separate sparse haplotype panel representations and separate lazy evaluation mechanisms for the two populations A and B. Expected runtime guarantees are similar.

This model, and versions for more than two populations, will be important in large sequencing cohorts (such as NHLBI TOPMed) where assuming a single related population is unrealistic.

Example 3

(More detailed mutation model) It may also be desirable to model distinct mutation probabilities for different pairs of alleles at multiallelic sites. Runtime is worse than the biallelic model but remains average case sublinear.

Example 4

(Sequence graph Li and Stephens analogue) In [12] we described a hidden Markov model for haplotype copying with recombination but not mutation in the context of sequence graphs. Assuming we can decompose our graph into nested sites, we can achieve a fast forward algorithm with mutation. An analogue of our row-sparse-column matrix compression for sequence graphs is being actively developed within our research group.

While a haplotype HMM forward algorithm alone might have niche applications in bioinformatics, we expect that our techniques are generalizable to speeding up other forward algorithm-type sequence analysis algorithms.

Authors' contributions

YR designed and prototyped the algorithm described in this article and performed its speed benchmarking. BP conceived of the theoretical need for such an algorithm and designed its integration into ongoing variant calling research. Both authors read and approved the final manuscript.

Acknowledgements

This work was supported by the National Human Genome Research Institute of the National Institutes of Health under Award Number 5U54HG007990, the National Heart, Lung, and Blood Institute of the National Institutes of Health under Award Number 1U01HL137183-01, and grants from the W.M. Keck foundation and the Simons Foundation. We would like to thank Jordan Eizenga for his helpful discussions throughout the development of this work.

Competing interests

The authors declare that they have no competing interests.

Availability of data and materials

The dataset used as template haplotypes for the performance profiling in the current study is available in the 1000 Genomes Phase 3 variant call release, ftp://ftp.1000genomes.ebi.ac.uk/vol1/ftp/release/20130502/. The runtime data produced during the current study are available from the corresponding author on reasonable request. The randomly generated subsampled haplotype cohorts and haplotypes used in these analyses persisted only in memory and were not saved to disk due to their immense aggregate size.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Footnotes

We observe similar results in our own analyses; see the section "Minor allele frequency distribution for the 1000 Genomes dataset".

This approach is known as lazy evaluation.

References

1. Li N, Stephens M. Modeling linkage disequilibrium and identifying recombination hotspots using single-nucleotide polymorphism data. Genetics. 2003;165(4):2213–2233. doi: 10.1093/genetics/165.4.2213.

2. Kingman JFC. The coalescent. Stoch Process Appl. 1982;13(3):235–248. doi: 10.1016/0304-4149(82)90011-4.

3. Loh P-R, Danecek P, Palamara PF, Fuchsberger C, Reshef YA, Finucane HK, Schoenherr S, Forer L, McCarthy S, Abecasis GR. Reference-based phasing using the haplotype reference consortium panel. Nat Genet. 2016;48(11):1443. doi: 10.1038/ng.3679.

4. Browning BL, Browning SR. A unified approach to genotype imputation and haplotype-phase inference for large data sets of trios and unrelated individuals. Am J Human Genet. 2009;84(2):210–223. doi: 10.1016/j.ajhg.2009.01.005.

5. Williams AL, Patterson N, Glessner J, Hakonarson H, Reich D. Phasing of many thousands of genotyped samples. Am J Human Genet. 2012;91(2):238–251. doi: 10.1016/j.ajhg.2012.06.013.

6. Delaneau O, Zagury J-F, Marchini J. Improved whole-chromosome phasing for disease and population genetic studies. Nat Methods. 2013;10(1):5. doi: 10.1038/nmeth.2307.

7. O’Connell J, Sharp K, Shrine N, Wain L, Hall I, Tobin M, Zagury J-F, Delaneau O, Marchini J. Haplotype estimation for biobank-scale data sets. Nat Genet. 2016;48(7):817. doi: 10.1038/ng.3583.

8. Lunter G. Fast haplotype matching in very large cohorts using the Li and Stephens model. bioRxiv. 2016. doi: 10.1101/048280. https://www.biorxiv.org/content/early/2016/04/12/048280.full.pdf.

9. Keinan A, Clark AG. Recent explosive human population growth has resulted in an excess of rare genetic variants. Science. 2012;336(6082):740–743. doi: 10.1126/science.1217283.

10. The 1000 Genomes Project Consortium. A global reference for human genetic variation. Nature. 2015;526(7571):68. doi: 10.1038/nature15393.

11. Durbin R. Efficient haplotype matching and storage using the positional Burrows–Wheeler transform (PBWT). Bioinformatics. 2014;30(9):1266–1272. doi: 10.1093/bioinformatics/btu014.

12. Rosen Y, Eizenga J, Paten B. Modelling haplotypes with respect to reference cohort variation graphs. Bioinformatics. 2017;33(14):118–123. doi: 10.1093/bioinformatics/btx236.

13. Li Y, Willer CJ, Ding J, Scheet P, Abecasis GR. MaCH: using sequence and genotype data to estimate haplotypes and unobserved genotypes. Genet Epidemiol. 2010;34(8):816–834. doi: 10.1002/gepi.20533.

14. Donnelly P, Leslie S. The coalescent and its descendants. 2010. arXiv preprint arXiv:1006.1514.
