Abstract.

Although on-site supervision programs are implemented in many countries to assess and improve the quality of care, few publications have described the use of electronic tools during health facility supervision. The President’s Malaria Initiative–funded MalariaCare project developed the MalariaCare Electronic Data System (EDS), a custom-built, open-source, Java-based, Android application that links to District Health Information Software 2 for data storage and visualization. The EDS was used during supervision visits at 4,951 health facilities across seven countries in Africa. The introduction of the EDS led to dramatic improvements in both completeness and timeliness of data on the quality of care provided for febrile patients. The EDS improved data completeness by 47 percentage points on average (from 42% to 89%) when compared with paper-based data collection. The average time from data submission to a final data analysis product dropped from over 5 months to 1 month. With more complete and timely data available, the Ministry of Health and the National Malaria Control Program (NMCP) staff could more effectively plan corrective actions and promptly allocate resources, ultimately leading to several improvements in the quality of malaria case management. Although government staff used supervision data during MalariaCare-supported lessons learned workshops to develop plans that led to improvements in quality of care, data use outside of these workshops has been limited. Additional efforts are required to institutionalize the use of supervision data within ministries of health and NMCPs.

INTRODUCTION

In recent years, the use of mobile health tools designed to support health workers and improve the quality of care has rapidly expanded.1–3 Within health worker capacity building, much of the evidence building has been in the design and implementation of electronic tools to support health workers at the point of care.4,5 By contrast, few publications have described the use of such tools by supervisors during on-site supportive supervision at health facilities.

At the same time, there is increasing interest in using routinely collected data to assess current practices, guide decision-making, and assess the impact of interventions designed to improve quality of care.6 To date, the main sources of information on health-care quality have typically come from periodic health facility surveys, such as the World Health Organization’s (WHO’s) service availability and readiness assessments tool, the Demographic Health Survey’s service provision assessments, and the World Bank’s service delivery indicators reports.7–9 Although valuable sources of information, these assessments are conducted infrequently and are expensive, and data collected may not be sufficient to inform localized programmatic decision-making because of national-level sampling strategies. With many countries implementing on-site supervision programs to assess and improve the quality of care, data collected during supervision visits could offer timely insight into key challenges that health facilities are facing. The use of electronic tools for health surveys and routine health register information data collection has improved data completeness and reduced time to when data can be reviewed.10 In its third year of implementation, the President’s Malaria Initiative–funded MalariaCare project developed the MalariaCare Electronic Data System (EDS), an electronic tool to guide on-site supportive supervision of malaria case management, which could enable ministries of health to take advantage of this underused data source by providing complete and timely quality assurance data for decision-making at multiple levels of the health system.

Between 2012 and 2017, MalariaCare worked in 17 countries to support national malaria control programs (NMCPs) in designing and implementing a case management quality assurance system to improve the diagnosis and treatment of malaria and other febrile illnesses. A key component of the quality assurance system was outreach training and supportive supervision (OTSS) to monitor and improve the performance of health facilities, which was implemented in selected facilities within nine of the 17 countries based on the needs and requests of NMCPs. During OTSS, a team of at least two government staff, usually clinical and laboratory supervisors, visited health facilities to observe and assess the quality of case management for febrile illnesses and to provide mentorship. At the end of each OTSS visit, which usually took 1 day or less, the supervision team gave health facility staff verbal or written feedback on their findings and worked with them to develop an action plan to improve the quality of care. A full description of the OTSS intervention can be found in Eliades et al.11 To help supervisors collect standardized information and better assess health facility performance in case management over time, MalariaCare introduced an OTSS checklist that supervisors completed during their visit and programmed the checklist into the EDS, which also contained additional features designed to guide supervisors in providing mentorship during the OTSS visit. In this analysis of programmatic data, we describe the process of implementing the EDS, its outcomes related to data quality and data use, and the comparative costs of using the paper checklist versus EDS for data entry.

MATERIALS AND METHODS

Program setting and population.

From September 2015 to June 2016, MalariaCare began EDS implementation in seven of the nine countries where MalariaCare supported NMCPs to conduct OTSS: Ghana, Kenya, Malawi, Mali, Mozambique, Tanzania, and Zambia. In May 2017, the Democratic Republic of the Congo began using the EDS, but only as a database for entry of data from paper-based checklists.11 Within each country, ministries of health selected both public and private facilities within regions or provinces agreed on by the ministry and United States Agency for International Development mission for OTSS visits.

Program description.

Before the introduction of the EDS, supervisors in each country used paper checklists when conducting OTSS visits. Following each set of visits to targeted facilities within a defined time period (or “round”), the completed paper checklists were sent to a central location for data entry.

Electronic data system application and content development.

MalariaCare’s EDS is a custom-built, open-source, Java-based, Android application that links to District Health Information Software 2 (DHIS2) for data storage and visualization. The EDS was adapted from Population Services International’s (PSI’s) Health Network Quality Improvement System, which is used to assess and improve the quality of health service provision in the private sector.12 The EDS application is compatible with Android versions 4.0.3 and up, and is licensed for open-source use.13 The interface is optimized for use on a 7-inch Android tablet, but has been used on phones and smaller screens with no reported loss of functionality. The supervisors completed assessments offline during their OTSS visit using the EDS application. Completed assessments were then automatically uploaded to a DHIS2 password-protected website designed specifically for the EDS once a network connection was established. The DHIS2 server was also configured as a Hypertext Transfer Protocol Secure site, which encrypts data during transmission between the EDS and the DHIS2 server.
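As a rough illustration of the upload step, the sketch below builds (without sending) an authenticated HTTPS request carrying one completed module. It is not the EDS source code: the server URL, credentials, and all UIDs are hypothetical, and the use of the DHIS2 Web API events endpoint (`/api/events`) with Basic authentication is an assumption, since the text does not say which endpoint or authentication scheme the application used.

```python
import base64
import json
import urllib.request


def build_event_request(base_url, username, password, program_uid,
                        org_unit_uid, event_date, data_values):
    """Build, but do not send, an HTTPS POST for one completed module."""
    # Refuse plain HTTP so credentials and data are encrypted in transit.
    if not base_url.startswith("https://"):
        raise ValueError("server URL must use HTTPS")
    payload = {
        "program": program_uid,      # hypothetical UID for one checklist module
        "orgUnit": org_unit_uid,     # hypothetical UID for the health facility
        "eventDate": event_date,
        "status": "COMPLETED",
        "dataValues": [
            {"dataElement": element, "value": str(value)}
            for element, value in data_values.items()
        ],
    }
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/events",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Basic " + token.decode("ascii"),
        },
        method="POST",
    )


# All values below are placeholders for illustration only.
request = build_event_request(
    "https://eds.example.org", "district_user", "secret",
    program_uid="PROGRAMUID01", org_unit_uid="FACILITYUID1",
    event_date="2017-03-14",
    data_values={"DATAELEMUID1": 1, "DATAELEMUID2": 0},
)
```

Building the request separately from sending it keeps the sketch testable offline, which mirrors the offline-first workflow described above.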

MalariaCare developed checklist questions based on current national malaria case management guidelines and existing national malaria supervision checklists, when available. Drafts of the paper checklists were reviewed by the NMCP staff and field-tested in each country, and minor country-specific modifications were made where necessary. The OTSS checklist was then programmed into the EDS application and a second round of field-testing was conducted in each country to solicit feedback from supervisors on the design of the application and to test its functionality.

The final content for MalariaCare’s EDS checklist includes six core modules: 1) microscopy observation, where supervisors observe laboratory staff preparing, staining, and reading microscopy slides; 2) malaria rapid diagnostic test (RDT) observation, where supervisors observe health workers conducting RDTs; 3) clinical observation, where supervisors observe health workers conducting consultations with febrile patients; 4) adherence, a review of health facility registers to assess adherence to testing and treatment protocols; 5) general OTSS, an assessment of human resources, commodities, and infrastructure; and 6) feedback and action plans, where supervisors record the top problems identified during the visit and action plans to address them.

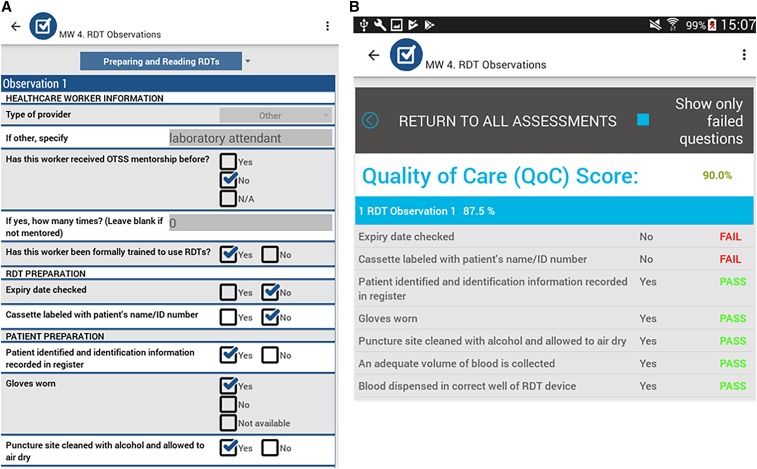

Content within the EDS application can be modified through the DHIS2 interface so that modules can be added or changed as needed to include other aspects of malaria case management or other diseases or topics entirely. In some countries, additional modules were developed during the program to address country-specific requests, including modules on pharmacy and logistics, health management information system (HMIS) data quality assessments, severe malaria, and malaria in pregnancy. The EDS is structured so that each module can be submitted to the EDS DHIS2 website independently, and supervision teams can work simultaneously, with each supervisor submitting her/his assigned module(s). Figure 1 provides screenshots of the EDS application; Figure 2 summarizes its key features.

Figure 1.

Screenshots of the Electronic Data System application. (A) Data entry screen for rapid diagnostic test (RDT) observation module. (B) Performance summary page. This figure appears in color at www.ajtmh.org.

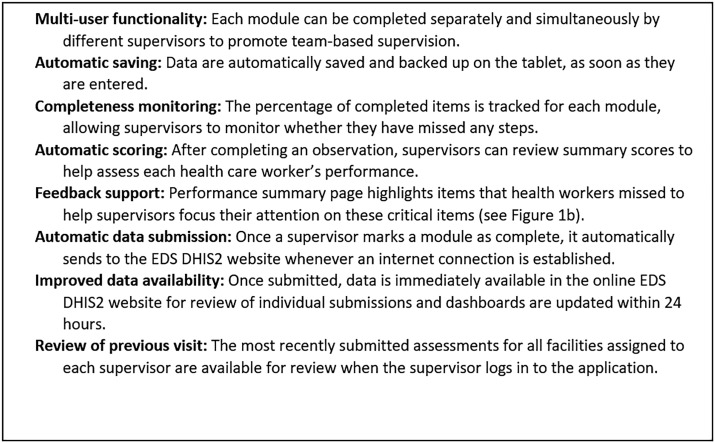

Figure 2.

Key features of the MalariaCare Electronic Data System (EDS). DHIS2 = District Health Information Software 2.

Electronic data system DHIS2 website.

The data collected in the EDS application were sent to the EDS DHIS2 website for data storage and visualization. DHIS2 is open-source software used for the HMIS in 60 countries at the time of publication; however, the EDS DHIS2 website is separate from national HMIS DHIS2 websites and has been configured for use with the EDS application.14 It was kept separate so as not to interfere with national HMIS DHIS2 websites during EDS testing and scaling, with the intention that EDS and HMIS data could later be integrated at the discretion of a country’s Ministry of Health.

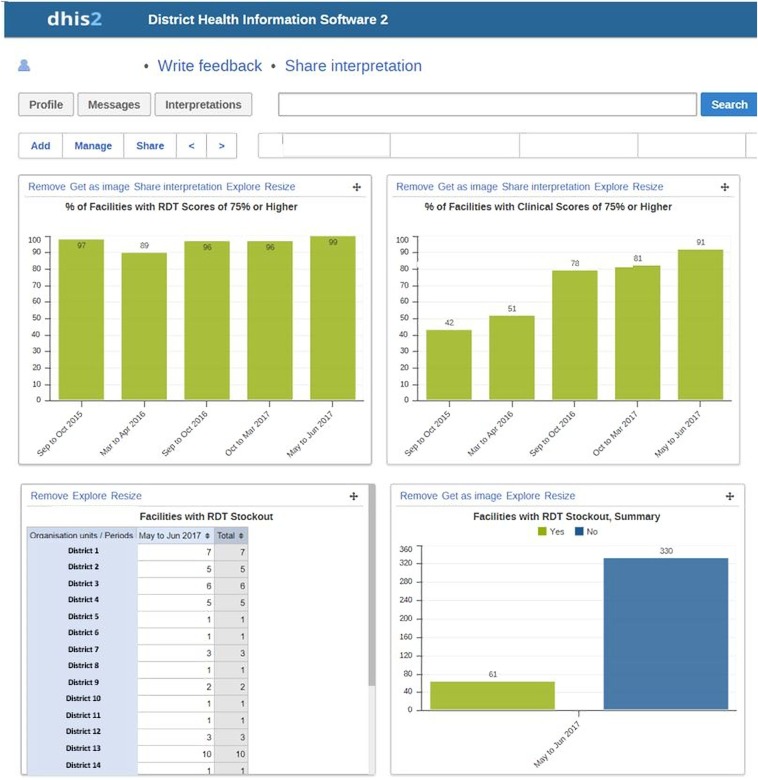

After a checklist was submitted to the EDS DHIS2 website, the individual checklist data were available for immediate review, whereas graphs and dashboards were automatically updated with the checklist content within 24 hours. From this website, government and program staff with access rights could review the data. Working with NMCPs, MalariaCare developed dashboards for the national, regional, and district levels to summarize and track key indicators. The EDS DHIS2 website allowed data users to modify visualizations, including graphs and tables, to drill down to identify specific districts or health facilities with poor performance, and to identify specific skills that need to be strengthened across health facilities. Using the dashboards, national, regional, and district malaria coordinators monitored the implementation of supervision, assessed progress, and identified key areas of focus—whether individual districts and health facilities or specific competency areas that needed to be addressed. With this information, NMCPs could then direct interventions and resources where they were needed most and cost-effectively improve the quality of malaria case management. Figure 3 provides an example of an EDS DHIS2 dashboard.

Figure 3.

Electronic Data System (EDS) District Health Information Software 2 (DHIS2) dashboard. RDT = rapid diagnostic test. This figure appears in color at www.ajtmh.org.

Training.

To support the implementation of the MalariaCare EDS, two training packages were developed. The EDS end-user training was a 3-day training package that trained supervisors to use an electronic tablet and the EDS application to complete the OTSS checklist and provide mentoring during an OTSS visit. The training included a health facility visit to give supervisors practical experience. The EDS data user training was a 3-day training package to train district-, regional-, and national-level decision-makers to create new graphs and dashboards within the EDS DHIS2 website, interpret the findings and track performance, share the results in a district or regional report, and use those data to guide action planning. Following this training, key government staff were coached in using the EDS data to guide action planning during lessons learned workshops (LLWs), which were regional forums held after OTSS rounds to discuss key trends and develop regional- and district-level quality assurance action plans.

Analysis of implementation data.

The purpose of introducing the EDS was to improve both supervision data quality and data use, and, ultimately, to improve the quality of case management of febrile illnesses. The combined effects of OTSS and EDS on the quality of malaria case management are presented in Eliades et al., Alombah et al., and Martin et al.15–17 The outcomes presented here focus on the effect of the EDS on data quality and data use.

We measured data quality by documenting data completeness and timeliness from the last paper-based visit, first EDS visit, and most recent EDS visit for each health facility. We defined completeness as the proportion of health facilities visited with sufficient data to calculate each of the project’s six key health facility performance indicators. Three of the health facility indicators (RDT, microscopy, and clinical observations) required at least one complete observation to calculate a score. The other three health facility performance indicators (testing before treatment, adherence to negative test results, and adherence to positive test results) required register reviews and data from at least half of the recommended sample (either five or 10 patient records depending on the indicator) to calculate a score. A health facility was considered “visited” if a paper checklist was submitted, or if at least one EDS module was submitted to the EDS DHIS2 website.
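The completeness definition above can be sketched in a few lines. This is an illustrative reading of the rule, not the project's analysis code: the indicator names, the data layout (one dictionary of counts per visited facility), and the recommended sample size passed in the example are assumptions.

```python
# Indicators scored from direct observation need one complete observation.
OBSERVATION_INDICATORS = {"rdt", "microscopy", "clinical"}


def indicator_is_complete(indicator, count, recommended_sample=None):
    """Return True if a facility has enough data to score the indicator."""
    if indicator in OBSERVATION_INDICATORS:
        return count >= 1
    # Register-review indicators need at least half the recommended sample.
    return count >= recommended_sample / 2


def completeness(visits, indicator, recommended_sample=None):
    """Proportion of visited facilities with a calculable score."""
    if not visits:
        return 0.0
    complete = sum(
        indicator_is_complete(indicator, visit.get(indicator, 0), recommended_sample)
        for visit in visits
    )
    return complete / len(visits)


# Hypothetical counts for four visited facilities.
visits = [
    {"rdt": 2, "adherence_negative": 6},
    {"rdt": 0, "adherence_negative": 4},
    {"rdt": 1, "adherence_negative": 5},
    {"adherence_negative": 10},
]
rdt_completeness = completeness(visits, "rdt")
register_completeness = completeness(visits, "adherence_negative", recommended_sample=10)
```

A missing key is treated as zero records, so a facility that submitted other modules still counts in the denominator, consistent with the definition of "visited".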

Timeliness was measured as the number of days between the last OTSS visit for a group of health facilities visited during a set of OTSS rounds and the date when the first analysis with cleaned data was produced. Timeliness was then further divided into timeliness for 1) data submission, the number of days between the last round of OTSS visits and the date when all available data were entered or appeared in the EDS DHIS2 website; and 2) data analysis, the number of days between data entry completion and the presentation of the first analysis with cleaned data.
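The two timeliness components reduce to date differences. The following minimal sketch uses hypothetical dates and function names to show how submission, analysis, and total days relate.

```python
from datetime import date


def timeliness(last_visit, data_complete, first_analysis):
    """Split the visit-to-analysis interval into its two components (days)."""
    submission_days = (data_complete - last_visit).days
    analysis_days = (first_analysis - data_complete).days
    return {
        "submission": submission_days,
        "analysis": analysis_days,
        "total": submission_days + analysis_days,
    }


# Hypothetical round: last visit 31 March, all data in by 17 April,
# first cleaned analysis presented 30 April.
example = timeliness(date(2017, 3, 31), date(2017, 4, 17), date(2017, 4, 30))
```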

The comparative cost of data entry using a paper checklist versus EDS was also analyzed. The costs of data entry per health facility visit for the last paper round were calculated from the total costs of printing the paper checklists and the costs of person-time for data entry divided by the total number of health facilities visited. Electronic Data System costs included the costs of the tablets and accessories (including allowances for 5% replacement per year for loss and breakage), airtime for sending data, and web hosting costs for the EDS DHIS2 (assuming a separate website for each country). For EDS, the one-time cost of purchasing the tablets was depreciated over the 4-year life expectancy of the tablet, by using the straight-line depreciation method. To estimate the average number of visits per tablet per round, the number of health facilities visited during the most recent round was divided by the total number of tablets used by supervisors. Airtime costs per tablet per round were divided by the number of visits per tablet per round to calculate the airtime costs per visit. The depreciated cost of the tablets per year and the recurring annual costs of airtime and web hosting were then divided by the number of health facility visits within a year (based on the total number of health facilities visited per round and the number of rounds per year). An informal assessment was carried out to collect specific examples of district, regional, and national staff using EDS data to improve the quality of malaria case management. We did not assess any differences in data use between the paper-based checklist and EDS.
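The cost calculation described above can be written out as a small model. This is a sketch under stated assumptions rather than the project's costing workbook: the function and parameter names are invented for illustration, the replacement allowance is assumed to be 5% of the purchase cost per year, and all input values in the example are hypothetical round numbers.

```python
def paper_cost_per_visit(printing_total, data_entry_total, facilities_visited):
    """Printing plus data-entry person-time, per facility visited."""
    return (printing_total + data_entry_total) / facilities_visited


def eds_cost_per_visit(n_tablets, tablet_unit_cost, facilities_per_round,
                       rounds_per_year, airtime_per_tablet_per_round,
                       hosting_per_year, tablet_life_years=4,
                       replacement_rate=0.05):
    """Break the EDS data entry cost per facility visit into components."""
    visits_per_year = facilities_per_round * rounds_per_year
    visits_per_tablet_per_round = facilities_per_round / n_tablets
    purchase = n_tablets * tablet_unit_cost
    # Straight-line depreciation: equal share of the purchase cost each year.
    depreciation = purchase / tablet_life_years / visits_per_year
    # Assumed handling of the loss-and-breakage allowance.
    replacement = purchase * replacement_rate / visits_per_year
    airtime = airtime_per_tablet_per_round / visits_per_tablet_per_round
    hosting = hosting_per_year / visits_per_year
    return {
        "tablet_depreciation": depreciation,
        "tablet_replacement": replacement,
        "airtime": airtime,
        "hosting": hosting,
        "total": depreciation + replacement + airtime + hosting,
    }


# Hypothetical programme: 100 tablets at $200, 500 facilities, 2 rounds a year,
# $5 airtime per tablet per round, $1,000 hosting per year.
example = eds_cost_per_visit(100, 200.0, 500, 2, 5.0, 1000.0)
paper_example = paper_cost_per_visit(1000.0, 2000.0, 500)
```

Returning the components separately makes it easy to see which driver dominates; in this hypothetical case the tablet purchase accounts for most of the per-visit cost.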

RESULTS

In the seven countries where the EDS was fully implemented, all supervisors who participated in the MalariaCare-supported OTSS visits were trained in the use of EDS for supervision as part of their supervisor training. From September 2015, when rollout of the EDS started, through September 2017, a total of 1,669 supervisors were trained (Table 1). The supervision teams ranged from two to four members, depending on the country, and each team visited between six and 11 facilities on average per round of visits. A total of 11,396 OTSS visits were conducted using the EDS at 4,951 health facilities.

Table 1.

Number of visits conducted using the EDS

| Country | Number of supervisors trained in EDS* | Number of unique health facilities visited | Number of visits conducted | Number of visit rounds with EDS | Average number of visits per facility using EDS |
|---|---|---|---|---|---|
| Country 1 | 685 | 1,973 | 4,524 | 4 | 2.29 |
| Country 2 | 178 | 935 | 2,314 | 4 | 2.47 |
| Country 3 | 315 | 1,227 | 1,875 | 5 | 1.53 |
| Country 4 | 113 | 413 | 1,418 | 5 | 3.43 |
| Country 5 | 121 | 144 | 431 | 4 | 1.86 |
| Country 6 | 68 | 102 | 418 | 8 | 4.10 |
| Country 7 | 187 | 157 | 416 | 3 | 2.65 |
| Total | 1,669 | 4,951 | 11,396 | 33 | 2.27 |

EDS = Electronic Data System.

* The number of supervisors trained in EDS includes supervisors MalariaCare trained for other partner organizations, whereas the number of health facilities and visits includes MalariaCare-supported facilities alone.

Data quality: completeness and timeliness.

Completeness.

For five of the seven EDS countries (Kenya, Mali, Mozambique, Tanzania, and Zambia), we compared data completeness for the last round using a paper checklist, the first round using EDS, and the most recent round using EDS. In Ghana and Malawi, the last round with a paper checklist had a different number of questions, and thus was not comparable.

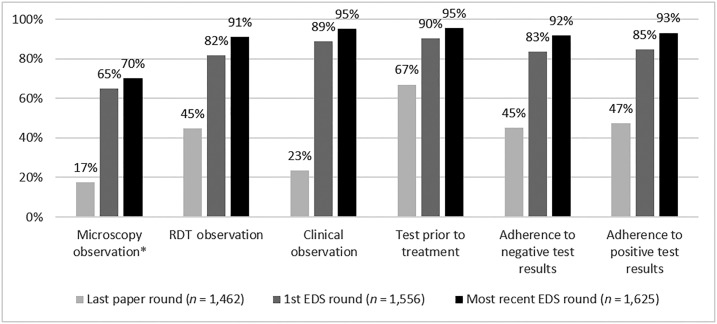

From the last round with a paper checklist to the first visit using the EDS, a dramatic improvement was observed in the percentage of facilities with complete scores, with an average improvement of 40 percentage points across the six areas, and five of the six competency areas demonstrating greater than 80% completeness (Figure 4). From the first EDS visit to the most recent EDS visit, completeness improved further, with an average increase of 7 percentage points and five of the six competency areas greater than 90% complete.

Figure 4.

Percentage of health facilities with complete scores, by competency area. EDS = Electronic Data System; RDT = rapid diagnostic test. *Not all health facilities perform malaria microscopy. Ns for microscopy are as follows: last paper round, n = 441; first EDS round, n = 595; most recent EDS round, n = 776.

Despite improvement, completeness for microscopy scores lagged behind, with only 70% of scores completed. In later visits, we added a question to the checklist asking supervisors to include the reason why they were unable to complete an observation. During the most recent visit, 11% of supervisors reported not being able to do a microscopy observation because of the lack of staff (6%), stock-outs of supplies (2%), a power outage (2%), or a microscopy test not being ordered (1%); 2% of RDT scores and 1% of clinical scores were missing for similar reasons.

Timeliness.

Table 2 reports the mean, median, and range of the number of days it took for program staff from each country to verify that supervisors had submitted data from all completed visits to the EDS DHIS2 website from one round of supervision, to clean and analyze the data following submission, and the total number of days from last OTSS visit to first data analysis. The number of days required for verifying data submissions decreased from an average of 84.4 days with the paper checklist to 16.9 in the most recent EDS round, an 80% decrease. With the paper checklists, the time to data submission was affected by the time required to transport and enter the paper checklists. During the use of the EDS, the time to data submission was affected by connectivity challenges and, early in the process, challenges in staff learning to use the system; for example, making sure that airtime was loaded, that the mobile data function in the tablets was turned on for submission, and that program staff were able to monitor the submissions on a daily or weekly basis and follow up with supervisors, as needed. The number of days required for cleaning the data and producing a final analysis decreased from an average of 73.1 days to 12.6, an 83% decrease. In total, the time from the last OTSS visit to data being available for decision-making dropped from an average of more than 5 months to less than 1 month.

Table 2.

Number of days from the end of outreach training and supportive supervision round until analysis shared

| Visit | Data submission, days: mean (median [range]) | Analysis, days: mean (median [range]) | Total, days: mean (median [range]) |
|---|---|---|---|
| Last paper visit (n = 2,380) | 84.4 (91.0 [15.0–166.0]) | 73.1 (54.0 [3.0–216.0]) | 157.6 (145.0 [74.0–364.0]) |
| First EDS visit (n = 3,333) | 27.5 (20.0 [0.0–121.0]) | 19.8 (16.0 [0.0–71.0]) | 47.3 (27.0 [20.0–146.0]) |
| Most recent EDS visit (n = 3,105) | 16.9 (11.5 [1.0–79.0]) | 12.6 (11.0 [1.0–30.0]) | 29.4 (28.0 [6.0–80.0]) |

EDS = Electronic Data System.
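The percentage decreases and month equivalents quoted for Table 2 follow directly from the reported means, as this short check shows. The conversion of 30.4 days per month is an assumption made here for illustration.

```python
def percent_decrease(before, after):
    """Relative reduction from `before` to `after`, in percent."""
    return (before - after) / before * 100


DAYS_PER_MONTH = 30.4  # assumed average month length

submission_drop = percent_decrease(84.4, 16.9)  # about 80
analysis_drop = percent_decrease(73.1, 12.6)    # about 83
paper_total_months = 157.6 / DAYS_PER_MONTH     # a little over 5 months
eds_total_months = 29.4 / DAYS_PER_MONTH        # just under 1 month
```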

As illustrated in Table 2, even with the EDS, clean, usable data were not automatically available in “real time.” Program staff still needed to follow up with supervisors to ensure that visits were being completed and that the findings of those visits were documented through data submissions. Moreover, some data cleaning was required because of supervisor errors in completing the checklist and duplicate submissions.

Electronic data system field implementation issues.

Over the course of the project, MalariaCare made several modifications to the EDS application and implementation processes to address key challenges faced by personnel in the field. Significant issues are summarized in the following paragraphs.

Sending completed checklist data.

The initial version of EDS required the supervisor to have an internet connection and press a button to send a module from the tablet. This proved frustrating, requiring the supervisor to repeatedly check for internet connectivity and/or wake up at inconvenient hours to try to send during times of lower network traffic. Supervisors would also press the send button repeatedly, which resulted in duplicate submissions to the EDS DHIS2 website. To address this problem, a subsequent version of the EDS application allowed the supervisor to simply mark a module as complete, and the tablet would automatically push the data to the server once it detected an available network signal.
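The change from a manual send button to automatic submission can be pictured as a small outbox. The sketch below is not the EDS implementation (which is a Java-based Android application); it is an illustration in Python, and the class, the callbacks, and the use of a unique submission ID to make retries harmless are all assumptions.

```python
import uuid


class Outbox:
    """Hold completed modules and push each one when a connection exists."""

    def __init__(self, send, is_online):
        self._send = send            # callable(submission_id, payload) -> bool
        self._is_online = is_online  # callable() -> bool
        self._pending = {}

    def mark_complete(self, payload):
        """Queue a finished module; the supervisor never presses 'send'."""
        submission_id = str(uuid.uuid4())
        self._pending[submission_id] = payload
        self.flush()
        return submission_id

    def flush(self):
        """Try to send everything queued; return how many were delivered."""
        if not self._is_online():
            return 0
        delivered = 0
        for submission_id, payload in list(self._pending.items()):
            if self._send(submission_id, payload):
                del self._pending[submission_id]
                delivered += 1
        return delivered

    @property
    def pending(self):
        return len(self._pending)


# Simulated server keyed by submission ID, so a retry cannot create a duplicate.
received = {}
network = {"up": False}


def fake_send(submission_id, payload):
    received[submission_id] = payload
    return True


outbox = Outbox(fake_send, lambda: network["up"])
outbox.mark_complete({"module": "rdt_observation"})
outbox.mark_complete({"module": "adherence"})
queued_while_offline = outbox.pending

network["up"] = True   # a network signal is detected
outbox.flush()
outbox.flush()         # a second attempt finds nothing left to send
```

In a real application `flush` would be triggered by a connectivity event rather than called by hand; the point of the sketch is that delivered items leave the queue, so repeated attempts do not resubmit them.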

User names and passwords.

To ensure that the appropriate checklists and health facilities appeared in the EDS application, supervisors were required to enter a user name and password. However, because OTSS visits were infrequent (every 3 months or less often), supervisors often forgot these. One solution instituted in some countries was to introduce just one user account per district, while adding a space for supervisors to write in their name within each module. Although this reduced the ability to track individual supervisor actions, ultimately this was seen by MalariaCare and NMCP staff as a more practical option, as supervisors who could not access their application would not be able to use the tool to guide mentoring or document their supervision visit.

Reviewing results.

Two EDS application improvements were made for reviewing data at the supervisor level. First, a performance summary page was added so that supervisors, after marking the module as complete, could easily identify the missed items and discuss with health facility staff (see Figure 1B). Second, a feature was added so that supervisors could review the previous visit’s results, even when conducted by a different supervisor using another tablet. This was particularly useful for the feedback and action plan module, which required supervisor teams to review the top issues during the previous visit.

Content and application updates.

Through its connection with DHIS2, each time the supervisors logged into the application, the content was updated to reflect the latest version of the checklist, ensuring that old checklist versions were removed from circulation before the next use. MalariaCare also uploaded the EDS application to the Google Play Store, which allows changes in the EDS application features to update when connected to the internet, rather than requiring the program staff and supervisors to uninstall the old version and install a new one. During the project, we disabled the auto-syncing of applications to reduce inadvertent data usage. When a new version of the EDS application was released, program staff would either update the tablets centrally or inform supervisors to update their tablets to the latest version.

Data use.

With EDS, dramatic improvements in data completeness and timeliness allowed for the timely use of OTSS data to inform decision-making during the MalariaCare project. The most critical opportunity for timely data use is at the health facility during OTSS visits, where supervisors can provide direct feedback to health workers immediately after observing the quality of care. Using the EDS, supervisors had rapidly available scores for each competency area, as well as a performance summary page which helped them to focus on key gaps and provide targeted mentorship. Supervisors reported that they found these aspects of the EDS to be a major advantage over the previous paper-based checklist, stating that it allowed them to better direct the feedback they provided to health-care workers and helped to guide their action planning sessions.

To further support data use by health managers at the district, regional, and national levels, data user training was conducted after at least one round of OTSS with the EDS. This training included key national malaria case management and monitoring and evaluation staff, and malaria focal persons and health information officers at the regional or district levels. In some cases, regional/district managers of health services were included for at least part of the training. A total of 535 staff were trained in data use, with the numbers trained ranging from 10 to 301 per country. On average, one to five government staff per region/district implementing OTSS participated in the data use training sessions. Following the data use trainings, MalariaCare supported trained staff to update their dashboards based on the most recent round of OTSS and develop presentations, which were then shared during the LLWs. These data provided the basis for developing regional- and district-wide action plans to address key gaps. In the following paragraphs, we present key examples of data use in MalariaCare-supported countries.

Targeting low-performing facilities for additional intervention.

Within the MalariaCare program, the EDS also enabled better targeting of program resources. For example, in Zambia, financial constraints required MalariaCare to select OTSS facilities where performance during the previous visit was low; this would not have been possible to analyze in time without the EDS. Similarly, in Malawi, facilities that scored low on the management of severe malaria were selected to participate in an additional clinical mentoring intervention designed to improve the management of severe inpatient cases.
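Targeting of this kind amounts to ranking facilities by their previous-round score. The sketch below is illustrative only: the facility names and scores are hypothetical, and the source does not state the exact selection rule used in Zambia or Malawi.

```python
def lowest_scoring(previous_scores, n):
    """Return the n facilities with the lowest previous-round scores."""
    ranked = sorted(previous_scores.items(), key=lambda item: item[1])
    return [facility for facility, _ in ranked[:n]]


# Hypothetical overall scores from the previous OTSS round.
previous_round = {
    "Facility A": 0.82,
    "Facility B": 0.41,
    "Facility C": 0.67,
    "Facility D": 0.35,
    "Facility E": 0.90,
}
selected = lowest_scoring(previous_round, n=2)
```

With a fixed visit budget `n`, this kind of ranking can only be produced in time if the previous round's scores are already cleaned and available, which is the constraint the EDS relaxed.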

Increasing assessments for severe disease.

During the LLW held after the first OTSS round using EDS in country four, district malaria focal persons and supervisors reviewed the results of the clinical management indicators and found that checking for signs of severe disease for febrile outpatients was only 49%. Supervisors then decided to visit health facilities in between official OTSS visits to further educate clinical providers; by the last visit during the project, the performance on this indicator rose to 82%.

Integrating OTSS and HMIS data to reduce RDT stock-outs and improve testing rates.

At an LLW in country three, a regional malaria focal person presented the HMIS data on the proportion of malaria cases confirmed along with the EDS data that indicated 34% of facilities reported a sustained RDT stock-out during OTSS. Using these data, the focal person was then able to garner the support of regional and district council leadership who followed up with district health staff. The malaria-focused technical teams from the region and district councils also met with each district to discuss the reasons for poor performance at certain health facilities, and then conducted additional problem-solving visits. Key strategies used were as follows: training health facility staff on completing RDT stock forms, redistributing RDT stock between health facilities within districts, and reinforcing the importance of testing all patients before treatment. By the last OTSS visit during the project, RDT stock-out rates dropped to 12%, whereas test confirmation rates increased from 89% to 97%.

Despite these positive examples, widespread and routine use of EDS dashboards by government staff remains a challenge. Review and use of data has largely occurred only with MalariaCare prompting and support, such as during LLWs. When asked why data were not used more often outside of the LLWs, several of those trained in data use said they forgot how to use the EDS DHIS2 website and that they did not have regular internet access.

Costs.

A common concern when replacing a paper-based system with an electronic system is the relative cost. To better understand the costs associated with implementing the EDS, data entry costs for the paper checklists and the EDS were compared. For the paper-based data entry, costs included printing and data entrant consultant fees. For the EDS, costs included a one-time purchase of tablets and accessories (cases and screen protectors), spread over a life expectancy of 4 years, and operating costs including annual server hosting and maintenance (assuming independent servers for each country) and airtime. Table 3 presents the average costs per visit for paper-based and EDS data entry. The total data entry cost per visit ranged from US$2.42 to $17.17 for the paper checklist and from US$7.86 to $31.29 for the EDS. Per visit, the EDS was usually more expensive than the paper checklist. Five of the seven countries were between US$0.16 and $28.04 more expensive. In two countries (countries three and six), the EDS was between US$1.15 and $2.99 less expensive. For the EDS, the one-time costs of tablet purchase ranged from 26% to 75% of the total data entry cost per visit, with the operating costs making up the remainder.

Table 3.

Average cost of data entry per facility visit, paper-based checklist vs. EDS

| | Country 1 | Country 2 | Country 3 | Country 4 | Country 5 | Country 6 | Country 7 | Average |
|---|---|---|---|---|---|---|---|---|
| Cost drivers | | | | | | | | |
| Number of health facilities visited, latest round | 1,181 | 782 | 480 | 402 | 144 | 72 | 120 | 454 |
| Number of rounds per year | 2 | 3 | 2 | 2 | 2 | 4 | 2 | 2.43 |
| Number of tablets | 448 | 150 | 129 | 98 | 38 | 16 | 48 | 132 |
| Number of tablets per supervision team | 4 | 2 | 2 | 2 | 2 | 2 | 2 | 2.28 |
| Average number of facilities visited per tablet per round | 2.64 | 5.21 | 3.72 | 4.10 | 3.79 | 4.50 | 2.50 | 3.78 |
| Cost per tablet, with accessories (US$) | $189 | $211 | $165 | $190 | $599 | $265 | $165 | $255 |
| Costs of data entry per facility visit (US$) | | | | | | | | |
| EDS | $11.98 | $7.18 | $8.90 | $10.42 | $31.29 | $14.17 | $23.19 | $15.31 |
| One-time tablet purchase, as a proportion of data entry costs | 75% | 47% | 62% | 56% | 63% | 26% | 36% | 52% |
| Paper checklist | $2.42 | $7.02 | $10.05 | $8.20 | $3.25 | $17.17 | $5.42 | $7.65 |
| Cost difference (EDS minus paper; parentheses indicate EDS less expensive) | $9.56 | $0.16 | ($1.15) | $2.23 | $28.04 | ($2.99) | $17.78 | $7.66 |

EDS = Electronic Data System.
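
The one-time tablet share reported in Table 3 can be approximated from the cost drivers alone. The following is a minimal sketch, not the project's own calculation: it assumes straight-line amortization of the tablet purchase over the stated 4-year life expectancy, and it assumes that annual visits equal the number of facilities in the latest round multiplied by the number of rounds per year. The function and variable names are illustrative. Under these assumptions, the computed shares round to the percentages shown in Table 3.

```python
# Illustrative sketch only: approximates the one-time tablet share in Table 3.
# Assumptions (not stated as the authors' method): straight-line amortization
# over 4 years; annual visits = facilities (latest round) x rounds per year.

TABLET_LIFE_YEARS = 4  # life expectancy given in the text

# Values copied from Table 3:
# country: (facilities, rounds per year, tablets, cost per tablet, EDS cost per visit)
COUNTRIES = {
    1: (1181, 2, 448, 189, 11.98),
    2: (782, 3, 150, 211, 7.18),
    3: (480, 2, 129, 165, 8.90),
    4: (402, 2, 98, 190, 10.42),
    5: (144, 2, 38, 599, 31.29),
    6: (72, 4, 16, 265, 14.17),
    7: (120, 2, 48, 165, 23.19),
}


def tablet_cost_per_visit(facilities, rounds, tablets, unit_cost,
                          life_years=TABLET_LIFE_YEARS):
    """Amortized annual tablet cost divided by the number of visits per year."""
    annual_tablet_cost = tablets * unit_cost / life_years
    annual_visits = facilities * rounds
    return annual_tablet_cost / annual_visits


for country, (facilities, rounds, tablets, unit_cost, eds_cost) in COUNTRIES.items():
    per_visit = tablet_cost_per_visit(facilities, rounds, tablets, unit_cost)
    share = per_visit / eds_cost  # one-time share of total EDS data entry cost
    print(f"Country {country}: tablet cost per visit US${per_visit:.2f} "
          f"({share:.0%} of EDS data entry cost)")
```

Because the amortized tablet cost is divided by the number of visits per year, the sketch makes the scale effect explicit: more visits per tablet or a lower unit price reduces the one-time component of the cost per visit.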

Electronic Data System costs per visit were lower when more health facilities were reached with fewer tablets. For example, in country two, where a total of 150 supervisors in teams of two visited 782 health facilities over three rounds per year (2,346 health facility visits), average EDS costs were only US$7.86 per visit. Although a similar number of health facility visits were performed per year in country one (2,362 visits conducted over two rounds involving 1,181 health facilities), supervisors there traveled in teams of four and each supervisor had a tablet. This required twice the number of tablets per visit and nearly doubled costs, to US$13.77 per visit. In country seven, costs were relatively high because of the smaller number of health facilities and the large number of active supervisors: eight regional supervisors visited five facilities each, whereas district-level supervisors visited two health facilities per district per round. However, in one country with a lower number of health facilities (country six), the EDS costs were lower than those of paper-based data entry. This was due to the comparatively higher cost paid for paper-based data entry, which was performed by program staff because of the low number of paper checklists; regular program staff compensation is higher than that of temporary data entry personnel with the qualifications required for this work. In countries with a higher volume of paper checklists for entry, lower-cost data entry consultants were used. Finally, in countries that conducted more visits per tablet per round (countries two and six), the one-time costs accounted for a lower proportion of the total data entry costs.

In four countries, tablets and accessories such as cases and screen protectors were bulk purchased from the United States and shipped, which kept unit costs between US$165 and US$211. In countries five and six, where tablets were purchased locally and in lower volume, the cost per tablet was much higher, at US$599 and US$265, respectively. The tablets in country five were also a more advanced model, which was recommended for purchase based on a reported lack of reliability in the lower-level models used for previous projects in-country. This, in addition to the lower number of facilities covered in country five, led to a significantly higher cost per visit for the EDS than in other countries. In country three, which combined the lowest tablet cost and a larger-scale program, the EDS was less expensive than paper-based data entry. In countries where more tablets were needed per facility visit (country one) or where tablets were more expensive (country five), the one-time costs accounted for a higher proportion of the total data entry costs.

DISCUSSION

The MalariaCare project experienced dramatic improvements in data completeness and timeliness immediately after the introduction of an electronic tool to guide on-site supportive supervision. Data completeness using the paper-based checklists varied by indicator but was low overall. To our knowledge, no other studies have evaluated data completeness and timeliness of national supportive supervision programs at this scale. Studies that evaluated completeness of national HMIS data during early DHIS2 implementation, which required paper-based data management at the facility level, found similar rates of completion, at 36% in Uganda and 26.5% in Kenya.10,18

Several features of the EDS are likely to have directly contributed to these improvements: automated remote data submission, which reduced time to submission and opportunities for data loss; remote monitoring of data submissions through the EDS DHIS2 dashboards, which allowed centrally based program managers to quickly follow up on missing submissions; and automated scoring and performance summaries, which increased immediate usability of the data and may have further motivated supervisors to complete the checklist.

With increased availability of scores, including during facility visits, and a significant reduction in the time needed to have a full, analyzed dataset available for use, data could more effectively be used for decision-making: supervisors were able to provide more targeted feedback during supervision visits, government and MalariaCare program staff could better use limited resources by targeting poorly performing facilities, and district, regional, and national staff began using EDS data to drive measurable improvements in the quality of care. Across country programs where OTSS was implemented using EDS, MalariaCare has observed improvements in each of the project’s six key indicators (RDT, microscopy and clinical performance, testing before treatment, and adherence to positive and negative test results).15–17

When only the operational costs for data entry were compared, the EDS tended to be more expensive per visit than the paper checklist, with cost differentials ranging from US$0.16 to US$28.04 per visit in five of the seven countries. The EDS operational costs were lower when it was used at scale: when there were a greater number of health facilities over a greater number of visits, when health facilities were visited by fewer supervisors, and when tablets were purchased in bulk. Given the demonstrated benefits of using the EDS, in terms of data timeliness and completeness and increased data use, which has led to improved case management, we believe that the EDS is worth the additional cost in countries where large-scale supportive supervision is planned. Further implementation research should be performed to compare the cost-effectiveness of electronic systems for supportive supervision, such as the EDS, with that of paper-based systems. If such systems enable better and more timely feedback and program modification, as described with the EDS, they may lead to greater improvements in case management practices and, ultimately, reduced morbidity.

The EDS could also reduce overall health-care costs by helping to systematically target facilities for supervision or allowing supervisors and managers to target specific areas of weakness. With average costs between US$44 and US$333 per OTSS visit, it is financially difficult for government programs to reach every facility for a supervision visit two to four times per year, as WHO and many governments presently recommend.11,19 The use of EDS data could help to better target follow-up visits to low-performing facilities, thus achieving higher quality at a lower cost for governments and donors. Using supervision data to target specific interventions, such as equipment purchases, stock distribution, or additional capacity building efforts, could also decrease costs to the health system while improving the quality of care. The EDS also supports the management of large-scale supervision programs through the ability to modify the content as guidelines are updated or country needs change. Automated updates pushed to the tablets ensure that old versions of checklists are quickly and easily removed from circulation.

Our cost analysis did not include EDS development costs or the costs of information technology support staff required to ensure smooth operation of the system. MalariaCare was able to build on existing software developed by PSI and leverage its position as a global project to develop one application that worked across eight countries. With its open-source status and the ability to adapt content for any topic or disease as needed, the EDS also has the potential to be used across multiple disease programs, which could further reduce costs across programs.

The EDS provides more localized and timely data than have been available through periodic national health facility surveys. With improved access to supervision data, district, regional, and national staff have better information to drive decision-making and can incorporate quality improvement into routine management systems. Government staff used supervision data during MalariaCare-supported events to develop plans that led to improvements in the quality of care. However, outside of these events, use of the EDS DHIS2 dashboards has been limited. This is not surprising, given that establishing habits for data use takes time and the OTSS data use efforts are still in their early stages. In most countries, only one to three OTSS visits had taken place since the EDS data use training was implemented.

The use of supportive supervision data beyond the supervisor level must be institutionalized within the NMCP and health management system. Steps should be taken to provide a strong operating environment for use and interpretation of EDS dashboards. Job descriptions for key personnel at the national, regional, and district levels and routine reporting templates should be revised to include the analysis and use of supportive supervision data. Supervision data should be analyzed and shared during existing district and regional health department meetings. Following these review meetings, accountability structures for ensuring the implementation of quality assurance action plans within district and regional health management teams need to be strengthened or established. Within these structures, OTSS and HMIS data, as well as other data sources such as routine stock data and/or community interventions, should be integrated to provide a full picture of malaria case management within each locality.

Although the organizational changes proposed above will help to create a sustainable culture of supervision data use, simple technical modifications to address barriers in the use of the EDS application and the EDS DHIS2 website could further improve data quality and data use and allow more time to focus on quality assurance efforts. For example, the EDS application could be improved by reducing the time required to log in and by blocking data submissions until all modules are complete. The somewhat complex DHIS2 visualization environment could also be simplified and tailored further for supervision data. Finally, internet access must be available at the time of data collection, analysis, and reporting, whether through the provision of airtime or mobile internet access devices, through NMCPs supplementing district internet allowances, or by shifting data analysis to HMIS officers, who tend to have more consistent access to a computer and the internet than malaria focal persons.

CONCLUSION

The introduction of an electronic tool for supervision led to dramatic improvements in both the completeness and timeliness of data on the quality of care provided for febrile patients and supported Ministry of Health and NMCP staff in planning corrective actions and promptly allocating resources. Additional efforts are required to institutionalize the use of supervision data within ministries of health and NMCPs.

Acknowledgments:

We acknowledge the contributions of government and project staff in all seven countries who were the principal actors implementing the project.

REFERENCES

- 1. Aranda-Jan CB, Mohutsiwa-Dibe N, Loukanova S, 2014. Systematic review on what works, what does not work and why of implementation of mobile health (mHealth) projects in Africa. BMC Public Health 14: 188.

- 2. Tomlinson M, Rotheram-Borus MJ, Swartz L, Tsai AC, 2013. Scaling up mHealth: where is the evidence? PLoS Med 10: e1001382.

- 3. Hall CS, Fottrell E, Wilkinson S, Byass P, 2014. Assessing the impact of mHealth interventions in low- and middle-income countries—what has been shown to work? Glob Health Action 7: 25606.

- 4. Amoakoh-Coleman M, Borgstein AB, Sondaal SF, Grobbee DE, Miltenburg AS, Verwijs M, Ansah EK, Browne JL, Klipstein-Grobusch K, 2016. Effectiveness of mHealth interventions targeting health care workers to improve pregnancy outcomes in low- and middle-income countries: a systematic review. J Med Internet Res 18: e226.

- 5. Agarwal S, Perry HB, Long LA, Labrique AB, 2015. Evidence on feasibility and effective use of mHealth strategies by frontline health workers in developing countries: systematic review. Trop Med Int Health 20: 1003–1014.

- 6. Ashton RA, Bennett A, Yukich J, Bhattarai A, Keating J, Eisele TP, 2017. Methodological considerations for use of routine health information system data to evaluate malaria program impact in an era of declining malaria transmission. Am J Trop Med Hyg 97 (Suppl 3): 46–57.

- 7. World Health Organization (WHO), 2015. Service Availability and Readiness Assessment (SARA): An Annual Monitoring System for Service Delivery: Reference Manual, Version 2.2. Geneva, Switzerland: WHO. Available at: https://www.who.int/healthinfo/systems/sara_reference_manual/en/. Accessed December 13, 2018.

- 8. ICF International. The DHS Program—Service Provision Assessments (SPA). Available at: https://dhsprogram.com/What-We-Do/Survey-Types/SPA.cfm. Accessed October 11, 2017.

- 9. World Bank, 2017. Service Delivery Indicators. World Bank. Available at: https://www.sdindicators.org/. Accessed December 13, 2018.

- 10. Kiberu VM, Matovu JK, Makumbi F, Kyozira C, Mukooyo E, Wanyenze RK, 2014. Strengthening district-based health reporting through the district health management information software system: the Ugandan experience. BMC Med Inform Decis Mak 14: 40.

- 11. Eliades MJ, et al. 2019. Perspectives on implementation considerations and costs of malaria case management supportive supervision. Am J Trop Med Hyg 100: 861–867.

- 12. Population Services International. Quality Improvement within Social Franchise Networks » DHIS in Action. Available at: https://mis.psi.org/where-is-hnqis/?lang=en. Accessed October 11, 2017.

- 13. EyeSeeTea, 2017. MalariaCare Electronic Data System (EDS) Application Source Code. Available at: https://github.com/EyeSeeTea/EDSApp. Accessed December 13, 2018.

- 14. University of Oslo. DHIS2 Overview | DHIS2. Available at: https://www.dhis2.org/overview. Accessed October 11, 2017.

- 15. Eliades MJ, Wun J, Burnett SM, Alombah F, Amoo-Sakyi F, Chirambo P, Tesha G, Davis KM, Hamilton P, 2019. Effect of supportive supervision on performance of malaria rapid diagnostic tests in sub-Saharan Africa. Am J Trop Med Hyg 100: 876–881.

- 16. Alombah F, Eliades MJ, Wun J, Burnett SM, Martin T, Kutumbakana S, Dena R, Saye R, Lim P, Hamilton P, 2019. Effect of supportive supervision on malaria microscopy competencies in sub-Saharan Africa. Am J Trop Med Hyg 100: 868–875.

- 17. Martin T, Eliades MJ, Wun J, Burnett SM, Alombah F, Ntumy R, Gondwe M, Onyando B, Guindo B, Hamilton P, 2019. Effect of supportive supervision on competency of febrile case management in sub-Saharan Africa. Am J Trop Med Hyg 100: 882–888.

- 18. Githinji S, Oyando R, Malinga J, Ejersa W, Soti D, Rono J, Snow RW, Buff AM, Noor AM, 2017. Completeness of malaria indicator data reporting via the District Health Information Software 2 in Kenya, 2011–2015. Malar J 16: 344. 10.1186/s12936-017-1973-y.

- 19. World Health Organization (WHO). Malaria Microscopy Quality Assurance Manual—Ver. 2. Geneva, Switzerland: WHO. Available at: https://www.who.int/malaria/publications/atoz/9789241549394/en/. Accessed December 13, 2018.