Abstract.

The U.S. President’s Malaria Initiative–funded MalariaCare project implemented an external quality assurance scheme to support malaria diagnostics and case management across a spectrum of health facilities in participating African countries. A component of this program was a 5-day malaria diagnostic competency assessment (MDCA) course for health facility laboratory staff conducting malaria microscopy. The MDCA course provided a method to quantify participant skill levels in microscopic examination of malaria across three major diagnosis areas: parasite detection, species identification, and parasite quantification. A total of 817 central-, regional-, and peripheral-level microscopists from 45 MDCA courses across nine African countries were included in the analysis. Day-to-day differences in mean scores were positive and statistically significant (P < 0.001) for each challenge type across all participants combined. From pretest to assessment day 4, mean scores for parasite detection, species identification, and parasite quantification increased by 19.1, 34.9, and 38.2 percentage points, respectively. In addition, sensitivity and specificity increased by 20.8 and 13.8 percentage points, respectively, by assessment day 4. Furthermore, the ability of MDCA participants to accurately report Plasmodium falciparum species when present increased from 44.5% at pretest to 67.1% by assessment day 4. The MDCA course rapidly improved the microscopy performance of participants. Because of its rigor, the MDCA course could serve as a mechanism for measuring laboratory staff performance against country-specific minimum competency standards and could easily be adapted to serve as a national certification course.

INTRODUCTION

Since 2010, the World Health Organization (WHO) has recommended parasite-based diagnostic testing by microscopy or rapid diagnostic test (RDT) for all patients suspected of having malaria before a treatment regimen is started.1 Quality-assured light microscopy serves as the reference standard for diagnosing malaria because it allows technicians to identify malaria species, conduct parasite quantification, and track response to treatment. It also has a role in identifying other parasitic diseases such as filariasis, leishmaniasis, and trypanosomiasis, as well as bacterial, fungal, and/or hematological abnormalities.2–6 Microscopy is a relatively simple and cost-effective method of detecting Plasmodium species in peripheral blood smears; however, accurate results require a high degree of expertise, particularly in diagnosing patients with low-level parasitemia.4,7,8 Prompt and accurate diagnosis of malaria is an essential component of effective case management. Misdiagnosis of malaria can lead to inappropriate treatment regimens and exacerbation of the underlying illness, resulting in increased clinic attendance, which further strains an already overburdened health system.9,10

The accuracy of microscopy results is dependent on a technician’s capacity to perform a specific set of actions in a quick and precise manner. However, poor specificity of laboratory diagnosis performed at peripheral levels of the health system is not uncommon, due in large part to poor blood film preparation and staining.11–13 In addition, the risk for false-negative results increases with decreasing parasite densities.14 A study conducted among older children and adults in Kenya showed that of 359 consultations, the sensitivity of routine malaria microscopy was 68.6%; its specificity, 61.5%; its positive predictive value, 21.6%; and its negative predictive value, 92.7%.12 The study concluded that the potential benefits of microscopy were not realized because of the poor quality of routine testing and irrational clinical practices. A separate study conducted across different levels of the health system in Dar es Salaam, Tanzania, determined that overdiagnosis of malaria via microscopy was substantial, showing that mean test positivity rates using routine microscopy were 43.0% in hospitals, 62.2% in health centers, and 57.7% in dispensaries, whereas mean positivity rates using routine RDTs were 5.5%, 6.5%, and 7.6%, respectively, in the same facilities during the same time period the following year.15 Indeed, multiple publications have called into question the skill sets of malaria microscopists, citing issues with accuracy and quality, and an overall lack of stringent standards.15–21

Factors contributing to poor microscopy are the lack of effective preservice training opportunities, the high workload many microscopists must manage on a daily basis, poor quality control for reagents and supplies, lack of high-quality equipment, and greater reliance on RDTs resulting in decreased practice of microscopy.4 There is also a lack of programs and funding to support continuous training and monitoring of staff competence levels, weak supervision programs that lack support for follow-up and remedial action, and an absence of national-level guidelines that include minimum competency standards for laboratory technicians performing malaria microscopy.4,14,22

Consequently, countries may lack an adequate number of qualified microscopists and malaria-specific internal quality control processes. This can result in poor diagnosis, leading to improper management of illness and low-quality morbidity data, which in turn can negatively impact sensible allocation of resources to areas of need.4,6,10,23 In addition, poor diagnostic performance may erode clinician confidence in test results, leading to reliance on clinical diagnosis of malaria (i.e., diagnosis in the absence of parasitological confirmation).24,25 However, clinical diagnosis is known to have very low specificity and promotes the indiscriminate use of antimalarials, which compromises the quality of care for non-malaria febrile patients, especially in malaria-endemic areas.24–29 Researchers studying admissions to Tanzanian hospitals found that less than half (46.1%) of the 4,474 patients who received a clinical diagnosis of severe malaria according to WHO criteria actually had presence of Plasmodium falciparum asexual parasites via confirmatory blood smear analysis.27 Another study conducted at a tertiary referral center in Kumasi, Ghana, revealed that 20.3% of children who had been given a WHO-defined clinical diagnosis of severe malaria were subsequently confirmed to have bacterial sepsis.30

A major goal of national malaria control programs in Africa is to provide universal access to high-quality diagnostic testing for all persons suspected of having malaria.1 Accordingly, countries are improving the quality of laboratory diagnostic testing by engaging in activities targeted at the competencies of diagnosticians.1 Carefully constructed in-service refresher training and competency assessment courses for malaria microscopists have been shown to have considerable impact on their skills and play an important role in malaria control in endemic areas.31

The MalariaCare project was a 5-year partnership (2012–2017) led by PATH and funded by the U.S. Agency for International Development (USAID) under the U.S. President’s Malaria Initiative (PMI). The goal was to scale up high-quality diagnosis and treatment services for malaria and other febrile illnesses. The project sought to improve and expand the accuracy of parasitological testing and promote the use of diagnostic test results, primarily in public health facilities across participating countries. A cornerstone of the project’s strategy to strengthen malaria diagnostics was the development and administration of malaria diagnostic competency assessment (MDCA) courses. The MalariaCare project adopted the course structure, content, and grading scheme from the WHO External Competency Assessment of Malaria Microscopists (ECAMM) to develop the MDCA course. The MDCA course differed in intent from the WHO ECAMM in that it placed particular emphasis on refresher training for the purpose of improving skills and monitoring the quality of routine microscopists of varying expertise, whereas the WHO ECAMM certifies highly skilled microscopists at internationally recognized standards. More specifically, the MDCA course structure de-emphasized assessment for certification and highlighted training opportunities while still maintaining a rigorous examination component to accurately describe the state of microscopy skills among participating microscopists. Given the short duration of the course and the learning objectives the program was expected to achieve, daily assessments were used as a mechanism to understand participant deficiencies and to engage in necessary remedial steps during daily learning modules and review sessions. Malaria diagnostic competency assessment courses were also conducted at the country level specifically for host-country nationals working at the central, regional, and peripheral levels of the health system. 
Through evaluation of aggregate daily assessment results, the MDCA course was able to identify pools of highly proficient microscopists who were often targeted for subsequent in-service training to maintain and further refine their skills, especially if they were involved in supervision or training for malaria microscopy. Malaria diagnostic competency assessment participants with outstanding performance were selectively sponsored to attend the WHO ECAMM.

Previous research has demonstrated the effectiveness of malaria microscopy refresher training on improving technician performance,13,31–37 although some studies have not been able to corroborate this effect.38–41 This study adds to the evidence base for malaria microscopy assessments by broadening the scale of outcome measures related to the intervention in exploring both slide- and person-level results. The primary outcome evaluates the competence of participating laboratory technicians through assessment of three major malaria microscopy diagnosis areas (i.e., parasite detection, species identification, and parasite quantification). In addition, this study makes recommendations on strategies to revise and improve the MDCA course based, in part, on participant results. Finally, a potential policy use for the MDCA course is described.

MATERIALS AND METHODS

Program setting and population.

The MalariaCare project sought to build the capacity of central, regional, and peripheral-level microscopists to improve malaria diagnosis across nine African countries (Democratic Republic of the Congo, Ghana, Kenya, Liberia, Madagascar, Malawi, Mali, Mozambique, and Tanzania). Participants generally comprised individuals involved in supportive supervision for malaria diagnostics and laboratory technicians who were actively practicing bench microscopy in health facilities with relatively high patient loads. Participants were predominantly selected by staff from each country’s national malaria control program with guidance from MalariaCare project field personnel.

Malaria diagnostic competency assessment course description.

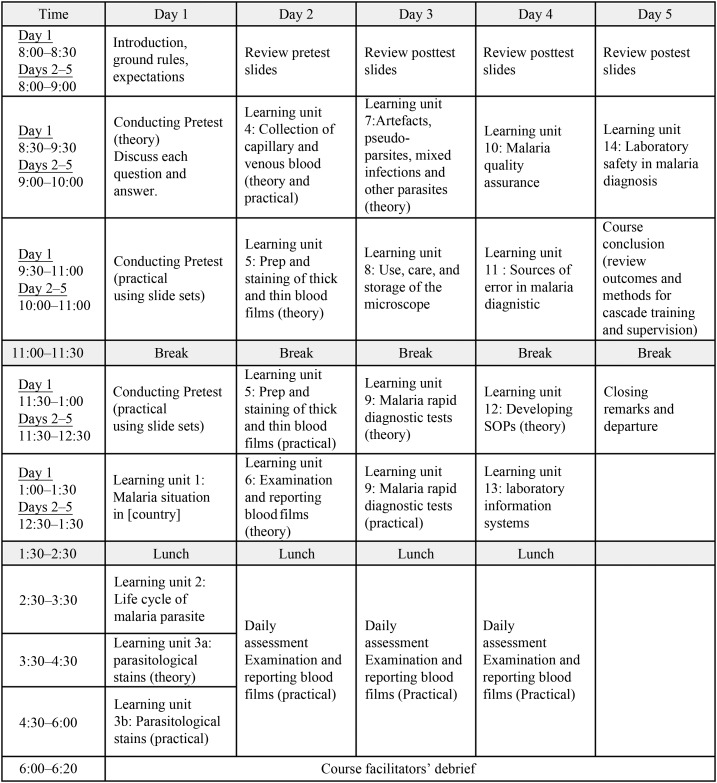

The MDCA courses were primarily conducted over 5 days by WHO-certified expert microscopists alongside local instructors (see Figure 1 for course timetable). All MDCA courses used similar training protocols ultimately assessing the competence of course participants using a standardized scoring and grading rubric. Instructors ran the course making use of malaria reference slides sourced from a number of well-established slide banks that were previously validated following WHO protocols. At the outset of the MDCA course, a theory-based pretest consisting of general malaria questions was administered, followed by extensive feedback on the results. Key areas tested related to disease pathogenesis, laboratory knowledge, and quality assurance. The primary purpose of this pretest was to identify knowledge gaps to be addressed during theoretical and practical units throughout the MDCA course; as such, these pretest results were not used in the calculation of final competence levels. On the first day of the course, participants also underwent a pretest competency assessment covering all targeted components of malaria microscopy, followed by 3–5 days of training with continued assessments. The pretest competency assessment was meant to gather information on the current state of participant skills to (de)emphasize components of subsequent learning modules. Malaria diagnostic competency assessment course instructors were also able to conduct real-time evaluation of participant performance following each posttest assessment. In this manner, instructors were able to continuously adjust particular components of review and learning modules by focusing on identified deficiencies. Malaria diagnostic competency assessment trainers usually reserved the final day of the course to discuss outcomes, provide instruction on how participants should conduct cascade training when they return to work, and lay out a framework for participating in supervisory activities.

Figure 1.

Timetable of malaria diagnostic competency assessment course activities. Course timetable adapted from the U.S. Agency for International Development President’s Malaria Initiative–funded Improving Malaria Diagnostics project.

The morning sessions of the MDCA courses included presentations on all aspects of malaria microscopic diagnosis, from specimen collection to reporting. There was particular emphasis on WHO recommendations and methods for parasite quantification. Afternoons were dedicated to malaria slide examination assessments across three key MDCA challenge types: detection of parasites, species identification, and parasite quantification. These daily assessments were performed under examination conditions, during which participants were given up to 10 minutes to read each slide. Participants were provided with a paper form at the beginning of each assessment day on which they recorded their responses for each slide examined. The numbers present on the physical slides corresponded to the numbers on their forms. Guidance for slide reading was provided on the form, which indicated if a slide should be analyzed for parasite detection and species identification or, alternatively, only for parasite quantification. Malaria diagnostic competency assessment course administrators transferred participant responses into an Excel spreadsheet designed to capture data for each participant across all assessment days.

The same slide sets were used for both pretest and posttest assessments during each MDCA course. The recommended slide set composition for each assessment day included 15 slides. Eleven were used for parasite detection and species identification: five negative slides; three P. falciparum slides; two non–P. falciparum species slides (Plasmodium vivax, Plasmodium ovale, and/or Plasmodium malariae); and one mixed-infection slide. An additional four P. falciparum slides (two low density, one medium density, and one high density) were included in the slide sets for parasite quantification. Each day began with a review of the slides examined from the previous day. The review sessions allowed participants to freely ask questions, further adding to the learning and consolidation aspects of the workshop. In line with WHO recommendations for external assessment of the competence of national microscopists,4 results from assessment days 2–4 were aggregated to calculate posttest scores for each MDCA challenge type to determine overall competence levels. Participants’ final scores were openly shared and discussed on the last day.
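The aggregation of assessment days 2–4 into a posttest score can be sketched as follows. This is a minimal illustration rather than the project's actual spreadsheet logic: it assumes that scores are pooled as the proportion of slides read correctly across the three posttest days, and the function name `posttest_scores`, the challenge labels, and the tuple layout are all hypothetical.

```python
from collections import defaultdict


def posttest_scores(readings, posttest_days=(2, 3, 4)):
    """Pooled percentage of slides read correctly, per challenge type.

    `readings` holds (assessment_day, challenge_type, read_correctly) tuples
    for one participant. Day 1 (pretest) readings are ignored by default.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for day, challenge, read_correctly in readings:
        if day in posttest_days:
            total[challenge] += 1
            correct[challenge] += int(read_correctly)
    return {challenge: 100.0 * correct[challenge] / total[challenge]
            for challenge in total}


# Hypothetical readings for a single participant (not study data).
example = [
    (1, "detection", False),       # pretest, excluded from the posttest score
    (2, "detection", True),
    (3, "detection", True),
    (4, "detection", False),
    (2, "quantification", True),
    (3, "quantification", False),
]
print(posttest_scores(example))
```

Pooling slides across days weights each slide equally; averaging the three daily percentages instead would weight each day equally, which gives a different result when the number of slides varies by day.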

The criteria used for assessing malaria microscopy competence levels for MDCA participants are presented in Table 1. Percentages correspond to the proportion of slides read correctly for each of the three MDCA challenge types (i.e., parasite detection, species identification, and parasite quantification) across assessment days 2–4. Competence levels were determined based on the lowest score achieved for any of the challenge types. For example, based on aggregate daily assessment results, an MDCA participant who performed below Level B in just one of the three challenge types could not be assigned a competence level higher than C.

Table 1.

World Health Organization competence levels for national certification1

| Competence level (based on lowest score achieved) | Parasite detection | Species identification | Parasite quantification* |
|---|---|---|---|
| Level A | ≥ 90% | ≥ 90% | ≥ 50% |
| Level B | 80% to < 90% | 80% to < 90% | 40% to < 50% |
| Level C | 70% to < 80% | 70% to < 80% | 30% to < 40% |
| Level D | < 70% | < 70% | < 30% |

* Within ± 25% of expert validated count.
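The grading rule in Table 1 can be expressed as a short sketch. The thresholds come from the table; the function names, the dictionary keys, and the choice to treat a count exactly 25% away from the expert count as correct are assumptions made for illustration only.

```python
# Lower bounds (percent) for Levels A, B, and C, taken from Table 1.
# Scores below the Level C bound fall into Level D.
LOWER_BOUNDS = {
    "parasite_detection": (90.0, 80.0, 70.0),
    "species_identification": (90.0, 80.0, 70.0),
    "parasite_quantification": (50.0, 40.0, 30.0),
}


def count_is_correct(participant_count, expert_count, tolerance=0.25):
    """True if a parasite count is within +/- 25% of the expert-validated count.

    Treating the boundary itself as correct is an assumption.
    """
    return abs(participant_count - expert_count) <= tolerance * expert_count


def level_for(challenge, score):
    """Competence level for a single challenge type."""
    for level, bound in zip("ABC", LOWER_BOUNDS[challenge]):
        if score >= bound:
            return level
    return "D"


def overall_level(scores):
    """Overall level, set by the weakest challenge type.

    "A" < "B" < "C" < "D" when compared as strings, so max() picks
    the lowest-ranked level among the three challenge types.
    """
    return max(level_for(challenge, score) for challenge, score in scores.items())


# Hypothetical participant: strong detection, weaker quantification.
scores = {
    "parasite_detection": 92.0,
    "species_identification": 85.0,
    "parasite_quantification": 35.0,
}
print(overall_level(scores))  # prints C
```

Here the participant reaches Level A in detection and Level B in species identification, but the Level C quantification score caps the overall result at C.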

Analysis of implementation data.

Malaria diagnostic competency assessment course administrators logged participant responses for each slide examination into course-specific Excel spreadsheets. These results were then used to conduct two separate analyses: a slide-level analysis, in which each slide read by each participant was a unit of analysis, and a person-level analysis, in which unique participants were the unit of analysis, with aggregate scores for each challenge type for each assessment day. Data were cleaned and analyzed using Stata 14 (StataCorp, 2015. Stata Statistical Software: Release 14. College Station, TX: StataCorp LP) and Excel 2013 (Microsoft Corporation, 2013. Excel: Release 2013. Redmond, WA: Microsoft Corporation). Plots were developed in R 1.0.143 using the ggplot2 package.42 For the slide-level analysis, cross-sectional univariate descriptive analyses were carried out to describe participant performance for parasite detection, species identification, and parasite quantification. Additional analyses were conducted to 1) describe the effect of specific slide characteristics (e.g., species type and parasite density) on a participant’s ability to accurately detect parasites, identify Plasmodium species, and conduct parasite quantification; and 2) compare the change in performance for each MDCA challenge type across assessment days via paired t-tests. For the person-level analysis, participants’ levels of performance in parasite detection, species identification, and parasite quantification were compared with the “gold standard,” as obtained from validated slide results confirmed by WHO-certified Level 1 microscopists and polymerase chain reaction. Overall proportions of slides read correctly by MDCA challenge type and assessment day were calculated for each participant to give a score. Means, standard deviations, proportion correct/incorrect, counts, and competency levels were calculated to assess participant performance in each of the three MDCA challenge types. 
Bivariate analysis was carried out to demonstrate the degree of significant gains in average participant scores across assessment days and for each MDCA challenge type. Typically, MDCA participants only examined slides for the first 4 days of the course; however, approximately 15% (n = 127) of participants attended extended MDCA courses. Wherever present, data from these supplemental assessment days were excluded. The overall curriculum for participants attending extended MDCA courses did not deviate from the standard 5-day course except for the inclusion of additional assessment days. This analysis includes data from MDCA courses conducted during a 39-month period from January 2013 to March 2016. All tables and figures in this article include data points from all years and countries.
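For readers unfamiliar with the paired t-test used to compare scores across assessment days, the statistic can be sketched with the Python standard library. The study itself used Stata; the function name `paired_t` and the example scores below are hypothetical, and the P value lookup from the t distribution is omitted.

```python
import math
import statistics


def paired_t(before, after):
    """Paired t statistic for per-participant score changes.

    Returns (mean difference, t statistic, degrees of freedom).
    `before` and `after` must list the same participants in the same order.
    """
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)          # sample SD of the differences
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    return mean_diff, t_stat, n - 1


# Hypothetical scores (%) for four participants, not study data.
pretest = [60.0, 70.0, 50.0, 80.0]
day4 = [70.0, 85.0, 65.0, 90.0]
mean_diff, t_stat, df = paired_t(pretest, day4)
print(f"mean difference = {mean_diff:.1f} points, t = {t_stat:.2f}, df = {df}")
```

Because each participant serves as their own control, the test is computed on the within-person differences rather than on the two group means separately.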

RESULTS

Slide level.

Participants included in the slide-level analysis examined a total of 1,430 malaria-positive slides, of which 981 were read 26,346 times for parasite detection and species identification and 571 were read 14,698 times for parasite quantification. In addition, 522 malaria-negative slides were read a total of 15,502 times for parasite detection and species identification. Of note, three countries were responsible for nearly 70.0% of all slide examinations as they comprised just over two-thirds of all participants (67.6%). Of the 981 malaria-positive slides evaluated for parasite detection and species identification, 568 density profiles were unrecoverable because slide identification numbers were re-accessioned upon slide set creation. The density profiles for these slides were not recorded at the time of slide set generation, which resulted in the loss of that information. As a result, only 413 malaria-positive slides could be characterized by density class; however, these 413 slides made up just more than two-thirds (67.5%, n = 17,771) of all slide examinations for parasite detection and species identification.

The administration of the 45 MDCA courses included for analysis relied on the use of different slide banks. Consequently, slide set composition was not equal among MDCA participants. As Table 2 stratifies slides used for parasite detection and species identification by density ranges, only those slides with complete density profiles could be included. On average, participants read 4.6 malaria-negative slides and 6.8 malaria-positive slides per day for assessment of parasite detection and species identification. About 4.4 slides per day were examined for parasite quantification, giving an overall average of 15.7 slides read per day. To determine the extent to which each slide type was evaluated at the person level, participant days with at least one slide reading were calculated. This value depicts the proportion of participants who read each slide type at least once on each of the four assessment days included for analysis.
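The "participant days with at least one slide reading" measure can be illustrated with a small sketch. The record layout and the function name `participant_day_coverage` are hypothetical; the denominator assumes every participant contributes one participant day per assessment day, which is consistent with 817 participants over four days giving N = 3,268.

```python
from collections import defaultdict


def participant_day_coverage(readings, n_participants, n_days=4):
    """Share of participant days on which each slide type was read at least once.

    `readings` holds (participant_id, assessment_day, slide_type) tuples.
    Returns {slide_type: (n, N, percent)}.
    """
    total = n_participants * n_days
    seen = defaultdict(set)
    for participant, day, slide_type in readings:
        # A set keeps each (participant, day) pair once, however many
        # slides of that type were read on that day.
        seen[slide_type].add((participant, day))
    return {slide_type: (len(pairs), total, 100.0 * len(pairs) / total)
            for slide_type, pairs in seen.items()}


# Hypothetical readings for two participants over two days (not study data).
example = [
    ("p1", 1, "negative"),
    ("p1", 1, "negative"),
    ("p1", 2, "negative"),
    ("p2", 1, "negative"),
    ("p1", 1, "Pf very low"),
]
print(participant_day_coverage(example, n_participants=2, n_days=2))
```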

Table 2.

Daily slide set composition for MDCA participants

Slide set composition for assessment of parasite detection and species identification skills

| Species | Density ranges | Density classes | Slides examined per day, mean | Slides examined per day, min | Slides examined per day, max | Participant days with at least one slide reading, n/N | Participant days with at least one slide reading, % |
|---|---|---|---|---|---|---|---|
| Negative | N/A | N/A | 4.59 | 1 | 9 | 3,268/3,268 | 100.0 |
| Pf | < 100 parasites/µL | Very low | 1.32 | 0 | 6 | 1,782/3,268 | 54.5 |
| Pf | 100–200 parasites/µL | Low | 0.20 | 0 | 5 | 415/3,268 | 12.7 |
| Pf | 201–500 parasites/µL | Medium–low | 0.08 | 0 | 4 | 213/3,268 | 6.5 |
| Pf | 501–2,000 parasites/µL | Medium | 0.34 | 0 | 2 | 1,041/3,268 | 31.9 |
| Pf | 2,001–50,000 parasites/µL | Medium–high | 0.84 | 0 | 6 | 1,314/3,268 | 40.2 |
| Pf | 50,001–100,000 parasites/µL | High | 0.05 | 0 | 2 | 119/3,268 | 3.6 |
| Pf | > 100,000 parasites/µL | Very high | 0.01 | 0 | 1 | 45/3,268 | 1.4 |
| Non-Pf | Variable | Variable | 2.42 | 0 | 8 | 3,159/3,268 | 96.7 |
| Mixed | Variable | Variable | 1.51 | 0 | 5 | 3,154/3,268 | 96.5 |

Slide set composition for assessment of parasite quantification skills

| Species* | Density ranges | Density classes | Slides examined per day, mean | Slides examined per day, min | Slides examined per day, max | Participant days with at least one slide reading, n/N | Participant days with at least one slide reading, % |
|---|---|---|---|---|---|---|---|
| Pf | < 100 parasites/µL | Very low | 1.1 | 0 | 4 | 1,770/3,268 | 54.2 |
| Pf | 100–200 parasites/µL | Low | 0.5 | 0 | 4 | 858/3,268 | 26.3 |
| Pf | 201–500 parasites/µL | Medium–low | 0.2 | 0 | 5 | 505/3,268 | 15.5 |
| Pf | 501–2,000 parasites/µL | Medium | 0.5 | 0 | 2 | 1,355/3,268 | 41.5 |
| Pf | 2,001–50,000 parasites/µL | Medium–high | 1.1 | 0 | 6 | 2,158/3,268 | 66.0 |
| Pf | 50,001–100,000 parasites/µL | High | 0.2 | 0 | 2 | 489/3,268 | 15.0 |
| Pf | > 100,000 parasites/µL | Very high | 0.9 | 0 | 4 | 2,299/3,268 | 70.3 |

Max = maximum; MDCA = malaria diagnostic competency assessment; Min = minimum; N/A = not applicable; Pf = Plasmodium falciparum.

* 0.02% of parasite quantification examinations were conducted on slides derived from non-Pf or Pf mixed infection donors. These slides were excluded from the table as their inclusion was not in line with MDCA protocol which was to use only slides from Pf mono-infected donors for parasite quantification. Their exclusion did not change the presented outcome measures.

Half (50.0%) of all malaria-positive slide examinations for parasite detection were conducted on slides from P. falciparum mono-infected donors; otherwise, slides from donors infected with P. ovale, P. malariae, and P. falciparum/P. ovale comprised 14.5%, 13.6%, and 11.1% of examinations, respectively. The remaining 10.8% of slide examinations were conducted on slides from P. falciparum/P. malariae–, P. falciparum/P. vivax–, or P. vivax–infected donors.

Parasite detection.

Across all assessment days, about four of every five (80.6%) malaria-positive slides examined for parasite detection were correctly read as positive. When stratified by density class, aggregate parasite detection scores tended to increase as parasite density increased, ranging from 59.5% at very low density to 74.7% at medium–low density to 91.5% at medium–high density. A sub-analysis by species type found that a consistently high proportion of slide examinations with available density profiles for P. malariae, P. ovale, and P. vivax mono-infections were read as negative by MDCA participants (19.0%, 16.6%, and 15.6%, respectively), despite their density class designations of medium or medium–high. In addition, just more than one-quarter (26.3%) of all examinations of P. falciparum mono-infected slides with available density profiles resulted in a negative diagnosis by course participants; however, 79.4% of these false negatives stemmed from examinations of very low density slides. Participant performance gains in parasite detection were relatively minimal for very low density slides, increasing by only 14.7 percentage points from pretest to assessment day 4. Over this same period of time, participants improved parasite detection scores for low and medium density slides by 32.2 and 31.5 percentage points, respectively. At the time of the pretest assessment, approximately one-third (32.5%) of negative slide examinations were read as false positives by MDCA participants, resulting in an overall specificity of 69.2%. By assessment day 4, however, specificity reached 83.1% (P < 0.001) with about one-sixth (16.8%) of negative slide examinations being read as false positives. Similarly, sensitivity (correctly identifying malaria parasites when present) increased from 67.4% at pretest to 88.1% (P < 0.001) by assessment day 4.
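Sensitivity and specificity as used above follow the standard definitions and can be computed from paired reference and participant results. The sketch below is illustrative only: the function name `sensitivity_specificity` and the example readings are hypothetical and are not drawn from the study dataset.

```python
def sensitivity_specificity(readings):
    """Sensitivity and specificity (percent) from slide examinations.

    `readings` holds (truly_positive, read_positive) boolean pairs, where
    `truly_positive` is the validated reference result for the slide.
    """
    tp = fn = tn = fp = 0
    for truly_positive, read_positive in readings:
        if truly_positive and read_positive:
            tp += 1          # parasites present and reported
        elif truly_positive:
            fn += 1          # parasites present but slide read as negative
        elif read_positive:
            fp += 1          # negative slide read as positive
        else:
            tn += 1          # negative slide read as negative
    sensitivity = 100.0 * tp / (tp + fn)
    specificity = 100.0 * tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical examinations: 4 positive slides and 5 negative slides.
example = ([(True, True)] * 3 + [(True, False)]
           + [(False, False)] * 4 + [(False, True)])
print(sensitivity_specificity(example))  # prints (75.0, 80.0)
```

Under these definitions the false-positive proportion among negative slides is the complement of specificity.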

Species identification.

Overall, and irrespective of density class, the most accurately identified species was P. falciparum (mono-infection), which was correctly identified 55.3% of the time, followed by P. malariae and P. ovale mono-infections, which were accurately identified in 49.1% and 45.3% of examinations, respectively. The slides with P. falciparum/P. malariae mixed infections were the most commonly misidentified slides, with only 27.1% being accurately identified. Slide examinations on P. falciparum/P. malariae, P. falciparum/P. ovale, and P. falciparum/P. vivax mixed infections were misidentified as P. falciparum mono-infections in 22.9%, 30.8%, and 32.0% of instances, respectively. Among these mixed infection slides, all had density class designations of medium, medium–high, or high. Participants also had difficulty identifying species in lower density slides. Only 39.9% of the 4,542 very low density slides, all of which were derived from P. falciparum mono-infected donors, resulted in accurate species identification. Of these slides, two-fifths (40.5%) were erroneously read as negative, and one-fifth (19.7%) were inaccurately reported as non-P. falciparum mono-infected slides. The most frequently and erroneously reported species type on negative slide examinations was P. falciparum (13.4%), followed by P. malariae (5.3%), and P. ovale (3.9%) mono-infections. The ability of MDCA participants to accurately report P. falciparum species when present increased from 44.5% at pretest to 67.1% by assessment day 4. Pretest levels of accuracy in identifying any Plasmodium species were quite variable by density class, fluctuating from 29.9% at very low density to 60.6% at medium–low density to 10.6% at very high density. Improving accuracy in species identification with rising parasite density was more evident by assessment day 4, increasing from 54.3% at very low density to 65.4% at medium–low density to 84.5% at high density.

Parasite quantification.

Nearly all (99.8%) parasite quantification examinations were conducted on slides derived from P. falciparum mono-infected donors. One of the most challenging density classes for MDCA participants included high-density slides; however, only one-quarter (24.6%) of all MDCA participants contributed to parasite quantification results for this density class, with 41.0% of slide examinations being performed by participants from one country. A clear pattern emerged during the pretest assessment of parasite quantification in that scores steadily increased as parasite densities increased, although, overall, only 15.5% of slide examinations were correctly quantified at this stage of the MDCA course. By assessment day 4, however, parasite quantification scores were the highest at density class extremes (67.0% at very low density and 69.4% at very high density). Overall, by assessment day 4, parasite quantification scores improved by nearly 40 percentage points, to 53.3%.

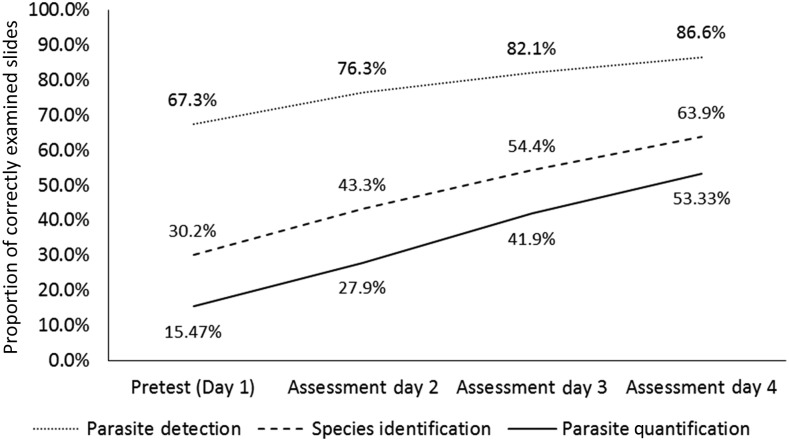

Irrespective of any performance limitations described previously, positive secular trends were evident for each MDCA challenge type (Figure 2), as well as diagnostic measures of performance (i.e., sensitivity, specificity, and accuracy of detecting P. falciparum when present). The proportion of slide examinations correctly read for parasite detection, species identification, and parasite quantification increased by 19.2, 33.7, and 37.9 percentage points, respectively, from pretest to assessment day 4.

Figure 2.

Proportion of slide examinations correctly evaluated, by challenge type and assessment day. Proportion of correct examinations from 981 malaria-positive slides, which were read a total of 26,346 times by 817 malaria diagnostic competency assessment participants, were used to graph day-by-day results for parasite detection and species identification; results from 571 slides, examined a total of 14,698 times, were used to graph outcomes for parasite quantification. In addition, 522 malaria-negative slides, which were read a total of 15,502 times, contributed to the calculation of parasite detection scores.

Person level.

The initial dataset included 851 MDCA participants from 45 MDCA courses administered during the evaluation period, covering nine African countries. The final person-level dataset was restricted to the 817 participants for whom scores could be calculated for each challenge type across each of the first four assessment days of the MDCA course. About one in 10 course participants (90/817; 11.0%) obtained level A or B standing in all three challenge types and, across all participants combined, differences in mean scores from pretest to assessment day 4 were positive and statistically significant (P < 0.001) overall and across each competence level within each challenge type (Table 3). Nevertheless, 23.0% (188/817) of all MDCA participants were unsuccessful at progressing beyond the lowest performance category (level D) for each challenge type by assessment day 4. The proportion of MDCA participants attaining level A standing specifically for parasite detection rapidly increased from 17.1% (140/817) at pretest to 57.9% (473/817) by assessment day 4. Similarly, the proportion of participants reaching this top-tier performance category for parasite quantification increased from 12.2% (100/817) at pretest to 63.4% (518/817) by assessment day 4. A more subdued trend was noted for the species identification component of the MDCA course, where participants attaining level A standing increased from near 0% (6/817; 0.7%) at pretest to 20.1% (164/817) by assessment day 4. Concurrently, the proportion of participants progressing beyond level D standing from pretest to assessment day 4 increased by 37.9, 45.4, and 56.3 percentage points for parasite detection, species identification, and parasite quantification, respectively.
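The level A proportions reported above, and the corresponding percentage-point changes, can be reproduced from the stated counts with a few lines of arithmetic. The counts are taken from the text; the helper names are hypothetical.

```python
def percentage(count, total):
    """Count expressed as a percentage of the total."""
    return 100.0 * count / total


def percentage_point_change(count_before, count_after, total):
    """Absolute change between two percentages sharing one denominator."""
    return percentage(count_after, total) - percentage(count_before, total)


N = 817  # participants in the final person-level dataset

# Participants at level A: (pretest, assessment day 4), as reported in the text.
level_a_counts = {
    "parasite detection": (140, 473),
    "species identification": (6, 164),
    "parasite quantification": (100, 518),
}

for challenge, (pretest, day4) in level_a_counts.items():
    change = percentage_point_change(pretest, day4, N)
    print(f"{challenge}: {percentage(pretest, N):.1f}% -> "
          f"{percentage(day4, N):.1f}% ({change:+.1f} percentage points)")
```

A percentage-point change is the simple difference between two percentages, which is distinct from the relative (proportional) change between them.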

Table 3.

Average participant scores by assessment day, competence level, and challenge type

| Challenge type | Pretest (day 1): mean (SD), % | Assessment day 2: mean (SD), % | Assessment day 3: mean (SD), % | Assessment day 4: mean (SD), % | Pretest vs. assessment day 4: difference in mean scores, % |
|---|---|---|---|---|---|
| All participants (N = 817) | | | | | |
| Parasite detection | 67.3 (19.3) | 76.7 (16.4)* | 82.2 (15.1)* | 86.5 (14.6)* | 19.1* |
| Species identification | 30.7 (21.9) | 43.7 (26.0)* | 56.0 (24.8)* | 65.6 (26.1)* | 34.9* |
| Parasite quantification | 15.5 (20.6) | 28.4 (27.1)* | 41.2 (29.0)* | 53.6 (31.7)* | 38.2* |
| Level A (n = 33) | | | | | |
| Parasite detection | 90.5 (9.2) | 97.5 (6.5)* | 99.4 (2.3) | 99.7 (1.6) | 9.2* |
| Species identification | 56.0 (19.9) | 91.8 (10.1)* | 98.5 (4.8)† | 98.4 (4.3) | 42.4* |
| Parasite quantification | 22.7 (21.1) | 60.9 (31.0)* | 68.3 (18.7) | 76.6 (22.9) | 53.8* |
| Level B (n = 57) | | | | | |
| Parasite detection | 81.0 (16.2) | 91.8 (10.9)* | 91.4 (9.1) | 96.4 (5.5)* | 15.4* |
| Species identification | 50.8 (22.2) | 80.5 (14.1)* | 86.1 (12.8) | 91.7 (9.1)† | 40.9* |
| Parasite quantification | 21.1 (22.2) | 44.9 (30.0)* | 58.8 (27.1)† | 78.8 (22.2)* | 57.7* |
| Level C (n = 92) | | | | | |
| Parasite detection | 74.3 (17.2) | 85.8 (13.6)* | 89.8 (10.7)‡ | 94.2 (8.5)* | 19.9* |
| Species identification | 38.5 (21.5) | 64.8 (18.1)* | 76.3 (15.1)* | 89.3 (11.4)* | 50.9* |
| Parasite quantification | 24.3 (20.4) | 46.4 (27.3)* | 52.6 (26.1) | 68.4 (25.4)* | 44.1* |
| Level D (n = 635) | | | | | |
| Parasite detection | 63.9 (18.5) | 73.0 (15.5)* | 79.4 (15.1)* | 83.7 (15.0)* | 19.8* |
| Species identification | 26.5 (19.8) | 34.8 (20.4)* | 48.1 (21.1)* | 58.1 (24.4)* | 31.6* |
| Parasite quantification | 13.3 (20.0) | 22.6 (23.4)* | 36.5 (28.1)* | 48.0 (31.4)* | 34.7* |

SD = standard deviation. Paired t-tests were conducted to characterize performance gains in mean participant scores from one assessment day to the next and from pretest to assessment day 4. Results of this analysis are denoted with asterisks across participant competence levels and malaria diagnostic competency assessment challenge type.

* P < 0.001.

† P < 0.01.

‡ P < 0.05.
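As an illustration of the paired comparison described in the Table 3 footnote, the following is a minimal sketch of a paired t statistic computed on per-participant score differences. The function name `paired_t` and the five pairs of scores are hypothetical and are not study data; the software used for the published analysis is not stated in this section. The sketch returns only the t statistic and degrees of freedom, and a P value would additionally require the t distribution.

```python
import math
import statistics


def paired_t(before, after):
    """Return (mean difference, t statistic, degrees of freedom) for paired scores."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    # Work on within-participant differences, which is what makes the test "paired".
    diffs = [a - b for b, a in zip(before, after)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD of the differences
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t_stat = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return mean_diff, t_stat, len(diffs) - 1


# Hypothetical scores (%) for five participants; NOT data from the study.
pretest = [60.0, 70.0, 55.0, 80.0, 65.0]
day4 = [85.0, 90.0, 70.0, 95.0, 80.0]

mean_diff, t_stat, df = paired_t(pretest, day4)
print(f"mean gain = {mean_diff:.1f} points, t = {t_stat:.2f}, df = {df}")
```

The same function could be applied to consecutive assessment days (for example, day 2 versus day 3) to mirror the day-to-day comparisons flagged in the table.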

Many participants who were ascribed overall course competencies at C or D levels actually exhibited performance at A or B standing with respect to parasite detection (516/727; 71.0%) and parasite quantification (474/727; 65.2%). However, 23.1% (189/817) of all participants were ascribed level C or D course competencies as a direct result of poor species identification scores, marking this assessment category as the most challenging for participants. Indeed, participants were 10.8 times more likely to have been ascribed level C or D course competencies solely because of poor species identification scores than solely because of poor parasite quantification scores. None of the 727 participants who were ultimately ascribed level C or D competencies for the MDCA course placed in these lower-tier performance categories solely because of poor parasite detection scores.

Table 3 shows further detail on participant mean scores for each challenge type across assessment days and ascribed participant competence levels. Generally speaking, steady gains across challenge types were more apparent among participants who were ascribed C- and D-level course competencies, as their relatively low performance at the outset of the course left more room for improvement than was available to their A- and B-level counterparts. Parasite detection and species identification scores were quick to increase and then slowed among participants who were ascribed A- and B-level course competencies. Parasite quantification scores, however, showed relatively steady gains for all participants. Despite the relatively similar starting points for parasite quantification across competence levels, participants with A- and B-level course competencies improved their scores for this challenge type by an average of 56.3 percentage points by assessment day 4 compared with an average gain of 35.9 percentage points among participants with C- and D-level course competencies. Furthermore, participants with A- and B-level course competencies showed rapid gains for each challenge type from pretest to assessment day 2, whereas their C- and D-level counterparts exhibited slower and steadier gains throughout the course. Table 3 also demonstrates, via paired t-tests, that differences in mean scores with respect to daily marginal performance were positive and statistically significant (P < 0.001) for each challenge type when considering the entire cohort of MDCA participants.
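The combined A/B and C/D gains in parasite quantification quoted above are consistent with a participant-weighted average of the level-specific gains in Table 3. The short sketch below reproduces that arithmetic; the helper name `pooled_mean` is illustrative, and the weighting scheme is an assumption, since the text does not state how the combined averages were derived.

```python
def pooled_mean(groups):
    """Participant-weighted mean of group-level values given (n, value) pairs."""
    total_n = sum(n for n, _ in groups)
    return sum(n * value for n, value in groups) / total_n


# (n, pretest-to-day-4 gain in parasite quantification), taken from Table 3.
levels_a_b = [(33, 53.8), (57, 57.7)]
levels_c_d = [(92, 44.1), (635, 34.7)]

print(round(pooled_mean(levels_a_b), 1))  # 56.3
print(round(pooled_mean(levels_c_d), 1))  # 35.9
```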

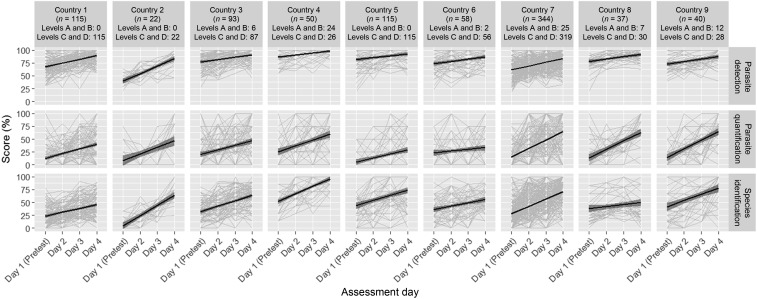

Figure 3 shows microscopy performance by participant, assessment day, MDCA challenge type, and anonymized country. A clear distinction can be made when comparing the person-level plots of parasite detection to the other two MDCA challenge types. Parasite detection scores tended to start at relatively high levels and showed consistent improvement over the course with relatively minimal day-to-day participant variation as evidenced by the tight 95% confidence intervals around the trend lines. Conversely, parasite quantification and species identification scores were quite variable across participants and assessment days.

Figure 3.

Malaria diagnostic competency assessment (MDCA) scores by participant, assessment day, challenge type, and country. Data from 45 MDCA courses, comprising 817 participants, show individual-level performance by country and MDCA challenge type. Trend lines with 95% confidence intervals were added to provide a country-level overview. Competence levels ascribed to country-specific MDCA participants are presented for A- and B-levels combined and C- and D-levels combined.

DISCUSSION

The use of microscopy for the detection, identification, and quantification of malaria species is considered the gold standard in parasitological diagnosis for malaria. Accurate slide examination, however, requires highly skilled technicians, especially when parasite densities are low. This course was able to demonstrate improved performance in sensitivity and specificity, which increased by 20.8 and 13.8 percentage points, respectively, from pretest to assessment day 4. Kiggundu et al.33 reported high levels of sensitivity and specificity (95% and 97%, respectively) after a 3-day training in Uganda, well above what participants in the MDCA courses were able to achieve by the end of the course (88.5% and 83.4%, respectively). However, the performance of MDCA participants exceeded that of microscopists participating in a 10-day microscopy refresher training based on guidance from the WHO basic microscopy training manual, where overall sensitivity and specificity reached 78.4% and 62.4%, respectively.35 Malaria diagnostic competency assessment–specific gains for these diagnostic measures of performance are encouraging because they show that by placing an emphasis on the assessment of competence, in-service training programs can rapidly bolster a technician’s capacity to truly distinguish between positive and negative malaria blood films.
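For readers less familiar with these two measures, the sketch below shows the standard definitions applied to slide readings: sensitivity is the share of truly positive slides reported as positive, and specificity is the share of truly negative slides reported as negative. The function name and the counts are hypothetical and do not correspond to any MDCA course.

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from counts of slide readings.

    tp: positive slides read as positive    fn: positive slides read as negative
    tn: negative slides read as negative    fp: negative slides read as positive
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative slide")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical counts for one participant; NOT study data.
sens, spec = sensitivity_specificity(tp=90, fn=10, tn=40, fp=10)
print(f"sensitivity = {sens:.1%}, specificity = {spec:.1%}")
```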

Similar to the results of other published works, species identification proved to be a major challenge with respect to participant progression in the course. Aiyenigba et al.35 reported that species identification scores increased by a margin of 19.4 percentage points by the end of the 10-day refresher training course and Olukosi et al.37 reported gains of 25.4–37.6 percentage points over 7 days, depending on the cadre of microscopists being assessed. The MDCA course showed that species identification performance could be significantly improved during a 5-day course, in some instances by 40–50 percentage points (overall average increase of 34.9 percentage points). However, accuracy in the identification of malaria species is arguably a less important factor than parasite detection, especially considering that the bulk of infections in the countries from which participants came is caused by P. falciparum. Of note, slides derived from P. falciparum mixed-infection donors proved to be the most difficult for MDCA participants to diagnose with respect to species identification; additional emphasis on this component is recommended during malaria microscopy refresher training in epidemiologically relevant settings.

Capacity to conduct parasite quantification is important to track parasitemia in response to antimalarial medications and has particular relevance for severe, hospitalized malaria cases, where monitoring is crucial. Similar to trends noted for species identification, parasite quantification scores were relatively low at the outset of the MDCA course as well as for courses described in other published works. Olukosi et al.37 reported that quantification test scores increased from 0% to 25%, whereas Aiyenigba et al.35 reported an increase in scores from 4.2% to 27.9%. However, the most impressive gains in MDCA course performance were noted for parasite quantification, with an average increase of 38.2 percentage points (up from 15.5% at pretest to 53.6% by assessment day 4), thus validating parasite counting as a teachable skill that can be greatly improved with intense, short-term training opportunities.

Initial performance gains for species identification and parasite quantification scores among participants who were ascribed A- or B-level course competencies were much steeper than for their C- and D-level counterparts. However, scores for each of the three challenge types among A- and B-level participants tended to taper off after assessment day 2, implying the application of latent knowledge. The rate of improvement across all three challenge types for C- and D-level participants was steady across each assessment day, suggesting ongoing learning rather than skills refreshment.

Despite the observed performance gains for most MDCA participants, only 11% (90/817) achieved A- or B-level course competencies. As competency levels are calculated by aggregating scores across assessment days 2–4, participants who exhibited strong performance early on in the MDCA course, and maintained or improved on that level of performance, were more likely to attain A- and B-level competencies than those who started with lower scores at the outset of the course but reached similar levels of proficiency by assessment day 4. In this manner, combined with other course aspects (e.g., effectiveness of course instructors, participant motivation, quality of course materials, etc.), the MDCA course was capable of identifying highly proficient microscopists benefitting from skills refreshment in addition to the primary intent of providing training opportunities for others. Malaria diagnostic competency assessment course outcomes allowed country programs to selectively recruit cadres of highly proficient microscopists to participate in external quality assurance activities specific to malaria diagnostics.

Further elucidating the major differences among MDCA participants with respect to their overall performance outcomes could have been achieved by collecting descriptive information. Information such as level of experience and education, previous microscopy-specific pre- and in-service training opportunities, post-training follow-up for remedial action, extent to which quality-controlled microscopy is used at the facility, and basic demographic data will be necessary to further understand how the MDCA course can be tailored to fit the needs of certain groups of microscopists. This could assist in setting future course expectations, pace, and intensity based on the general profiles of incoming participants.

There were other limitations to this analysis as well. Not having density profiles for about one-third of the slide examinations used for parasite detection and species identification likely affected the interpretation of density-dependent trends. In addition, the MDCA course assessment criteria did not systematically require preparation of blood films by participants, which is a major component of diagnostic accuracy although training was provided for this skill as part of a learning module. Thick and thin blood film preparation and staining for microscopy are crucial to proper reading and should be systematically incorporated into future courses as a competency component for all MDCA courses. Course participants were also given 10 minutes to read each blood slide to completely examine the requisite number of fields before declaring a slide negative. Although recommended for field practice, the allotted time may be at odds with actual field demands.

The inability of the project to use a single, standardized slide bank when constructing slide sets for MDCA participants makes interpretation of a pooled analysis difficult. Although it was an operational advantage for the MDCA courses to make use of multiple slide banks that were reliably vetted, it was not always possible to populate slide sets with exactly the same slide compositions. This affected the comparability of overall competence levels and day-over-day performance gains between participants. A potential solution to this issue might be to assign a difficulty level to each individual slide used during administration of the MDCA courses by aggregating participant responses over multiple readings. This would allow, at a minimum, post hoc analyses designed to examine the extent to which testing was carried out with slide sets of similar difficulty.
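The slide-difficulty idea proposed above could be sketched as follows: pool every reading of a slide, score difficulty as the share of incorrect readings, and then average that score over the slides in a set so that sets can be compared post hoc. The function names, slide identifiers, and readings are hypothetical, and the share of incorrect readings is only one possible difficulty measure.

```python
from collections import defaultdict


def slide_difficulty(readings):
    """Map slide id -> share of incorrect readings (0 = easiest, 1 = hardest).

    `readings` is an iterable of (slide_id, was_correct) pairs pooled over
    all participants who examined that slide.
    """
    counts = defaultdict(lambda: [0, 0])  # slide_id -> [incorrect, total]
    for slide_id, was_correct in readings:
        counts[slide_id][1] += 1
        if not was_correct:
            counts[slide_id][0] += 1
    return {sid: wrong / total for sid, (wrong, total) in counts.items()}


def mean_set_difficulty(slide_ids, difficulty):
    """Average difficulty of the slides that make up one slide set."""
    return sum(difficulty[s] for s in slide_ids) / len(slide_ids)


# Hypothetical pooled readings; NOT study data.
readings = [
    ("S1", True), ("S1", True), ("S1", False), ("S1", True),
    ("S2", False), ("S2", False), ("S2", True), ("S2", False),
    ("S3", True), ("S3", True),
]
difficulty = slide_difficulty(readings)
print(mean_set_difficulty(["S1", "S2"], difficulty))  # harder set
print(mean_set_difficulty(["S1", "S3"], difficulty))  # easier set
```

Comparing the mean difficulty of the sets used in different courses would give a rough check on whether participants were tested on material of similar difficulty.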

Project staff and course administrators were unable to examine the quality of each individual slide before they were deployed for use during the MDCA courses. Future courses might develop a mechanism by which course administrators and MDCA participants flag certain slides if they believe them to be of questionable quality due to partially dehemoglobinized thick film preparations, stain deposits, presence of artifacts and debris, or bubbles in mounting medium. Issues with slide quality could be reported to slide bank operators and even recorded in slide bank databases to selectively remove certain slides from random selection during slide set generation.

Certain countries exhibited strong performance with respect to the high competence levels ascribed to their participants, but most participants in other countries were not able to progress beyond the lowest competence level. A partial explanation for this may be that some country programs used the MDCA course as a targeted mechanism to develop a central cadre of highly competent microscopists or to screen microscopists for advancement to ECAMM whereas others used it to provide blanket training to as many practicing microscopists as possible. In addition, certain countries have more mature malaria programs that have focused on rigorous malaria diagnostics capacity building, including institutionalization and regulation of laboratory quality assurance measures. These observations taken together suggest that the MDCA course could be tailored to reflect the maturity of malaria programs and the types of diagnostic services being offered at different levels of the health system. For instance, participants from the peripheral level of the health system, where parasite detection alone is primarily used for malaria diagnosis, might attend in-service refresher training or competency assessment programs designed to bolster this particular skill. Participants from the reference level or those involved in research endeavors would attend courses focused on all three MDCA challenge types. Scoring systems might otherwise be altered to weigh certain challenge types over others based on overall participant profiles for the microscopists attending the course and could also be linked to diagnostic testing policy for each level of laboratory technicians.

Furthermore, scores for all three challenge types continued to improve throughout the MDCA course, without any evidence of a plateau. This suggests that MDCA courses designed to strengthen technician performance across all three challenge types should be at least 5 days. In settings necessitating the skill of high-performing microscopists (e.g., national-level microscopy trainers and technicians within national reference laboratories), participants may even benefit from an extended course period particularly for improving capacity in species identification and parasite quantification.

A significant gap in existing malaria microscopy policy is the general absence of country-level guidance on how best to manage the use of routine microscopy where performance levels are suboptimal. Establishing minimum competency standards to retain licensure for malaria microscopy as a component of existing policies for regulation of laboratory staff is needed as part of an overall scheme to monitor performance. Courses such as the one detailed in this article could serve as a mechanism for measuring laboratory staff performance against these minimum competency standards and can easily be adapted to serve as national certification courses. It is important to note, however, that this article did not attempt to link overall course outcomes with post-assessment performance; therefore, it is not possible to know the extent to which the MDCA course relates to subsequent on-the-job performance. It would be of interest to follow through with periodic monitoring of routine malaria microscopy performance for participants who attend MDCA courses.

CONCLUSION

The MDCA course has been shown to rapidly improve the microscopy performance of participants over a short period of time with respect to parasite detection, species identification, and parasite quantification skills. Although most participants had poor pretest performances in all three challenge types, they ultimately performed well at level B or higher in parasite detection and parasite quantification by assessment day 4. Species identification, while showing steady improvement across all participants, was the discriminating indicator with the large majority of participants scoring at level D. In epidemiologically relevant settings, this finding indicates an area of study that could be emphasized during future MDCA courses. Participants who were ascribed level A and B competencies achieved most of their performance gains by assessment day 2, which indicates a renewal of latent knowledge, whereas those ascribed C- and D-level competencies, who comprised the vast majority of participants, showed a steady rate of improvement across the duration of the course, a trend more consistent with progressive learning. Characterizing specific determinants of performance may be helpful to customize future MDCA courses to meet the needs of participants who come with varying degrees of experience and highly variable skill sets. In addition, because of its rigor and discerning nature in identifying truly skilled microscopists, this course is well structured to serve as a national accreditation program.

Acknowledgments:

We thank all of our U.S. President’s Malaria Initiative and MalariaCare colleagues who reviewed and contributed to this manuscript.

Footnotes

World Health Organization competence levels were created for use in ECAMM and national assessment programs. The MDCA courses do not provide certification, but they do make use of the same grading scale.

REFERENCES

- 1. World Health Organization, 2011. Universal Access to Malaria Diagnostic Testing—An Operational Manual. Geneva, Switzerland: WHO.

- 2. Molyneux M, Fox R, 1993. Diagnosis and treatment of malaria in Britain. BMJ 306: 1175–1180.

- 3. Hanscheid T, 1999. Diagnosis of malaria: a review of alternatives to conventional microscopy. Clin Lab Haematol 21: 235–245.

- 4. World Health Organization, 2016. Malaria Microscopy Quality Assurance Manual—Version 2. Geneva, Switzerland: WHO.

- 5. Ashraf S, Kao A, Hugo C, Christophel EM, Fatunmbi B, Luchavez J, Lilley K, Bell D, 2012. Developing standards for malaria microscopy: external competency assessment for malaria microscopists in the Asia-Pacific. Malar J 11: 352.

- 6. Bell D, Wongsrichanalai C, Barnwell JW, 2006. Ensuring quality and access for malaria diagnosis: how can it be achieved? Nat Rev Microbiol 4 (9 Suppl): S7–S20.

- 7. World Health Organization, 2009. WHO Malaria Rapid Diagnostic Test Performance—Results of WHO Product Testing of Malaria RDTs: Round 2. Available at: www.who.int/malaria/publications/atoz/9789241599467/en/index.html. Accessed May 5, 2017.

- 8. World Health Organization, 2015. Guidelines for the Treatment of Malaria, 3rd edition. Geneva, Switzerland: WHO.

- 9. The malERA Consultative Group on Diagnoses and Diagnostics, 2011. A research agenda for malaria eradication: diagnoses and diagnostics. PLoS Med 8: e1000396.

- 10. Amexo M, Tolhurst R, Barnish G, Bates I, 2004. Malaria misdiagnosis: effects on the poor and vulnerable. Lancet 364: 1896–1898.

- 11. Coleman RE, et al. 2006. Comparison of PCR and microscopy for the detection of asymptomatic malaria in a Plasmodium falciparum/vivax endemic area in Thailand. Malar J 5: 121.

- 12. Zurovac D, Midia B, Ochola SA, English M, Snow RW, 2006. Microscopy and outpatient malaria case management among older children and adults in Kenya. Trop Med Int Health 11: 432–440.

- 13. Ohrt C, Purnomo, Sutamihardja MA, Tang D, Kain KC, 2002. Impact of microscopy error on estimates of protective efficacy in malaria-prevention trials. J Infect Dis 186: 540–546.

- 14. Wongsrichanalai C, Barcus MJ, Muth S, Sutamihardja A, Wernsdorfer WH, 2007. A review of malaria diagnostic tools: microscopy and rapid diagnostic test (RDT). Am J Trop Med Hyg 77 (Suppl 6): 119–127.

- 15. Kahama-Maro J, D’Acremont V, Mtasiwa D, Genton B, Lengeler C, 2011. Low quality of routine microscopy for malaria at different levels of the health system in Dar es Salaam. Malar J 10: 332.

- 16. Kachur SP, Nicolas E, Jean-François V, Benitez A, Bloland PB, Saint Jean Y, Mount DL, Ruebush TK, 2nd, Nguyen-Dinh P, 1998. Prevalence of malaria parasitemia and accuracy of microscopic diagnosis in Haiti, October 1995. Rev Panam Salud Publica 3: 35–39.

- 17. Durrheim DN, Becker PJ, Billinghurst K, 1997. Diagnostic disagreement—the lessons learnt from malaria diagnosis in Mpumalanga. S Afr Med J 87: 1016.

- 18. Kain KC, Harrington MA, Tennyson S, Keystone JS, 1998. Imported malaria: prospective analysis of problems in diagnosis and management. Clin Infect Dis 27: 142–149.

- 19. Kilian AH, Metzger WG, Mutschelknauss EJ, Kabagambe G, Langi P, Korte R, von Sonnenburg F, 2000. Reliability of malaria microscopy in epidemiological studies: results of quality control. Trop Med Int Health 5: 3–8.

- 20. Coleman RE, Maneechai N, Rachaphaew N, Kumpitak C, Miller RS, Soyseng V, Thimasarn K, Sattabongkot J, 2002. Comparison of field and expert laboratory microscopy for active surveillance for asymptomatic Plasmodium falciparum and Plasmodium vivax in western Thailand. Am J Trop Med Hyg 67: 141–144.

- 21. O’Meara WP, McKenzie FE, Magill AJ, Forney JR, Permpanich B, Lucas C, Gasser RA, Jr., Wongsrichanalai C, 2005. Sources of variability in determining malaria parasite density by microscopy. Am J Trop Med Hyg 73: 593–598.

- 22. Gomes LT, et al. 2013. Low sensitivity of malaria rapid diagnostic tests stored at room temperature in the Brazilian Amazon region. J Infect Dev Ctries 7: 243–252.

- 23. Bell D, Perkins MD, 2008. Making malaria testing relevant: beyond test purchase. Trans R Soc Trop Med Hyg 102: 1064–1066.

- 24. Mwangi TW, Mohammed M, Dayo H, Snow RW, Marsh K, 2005. Clinical algorithms for malaria diagnosis lack utility among people of different age groups. Trop Med Int Health 10: 530–536.

- 25. Ochola LB, Vounatsou P, Smith T, Mabaso MLH, Newton C, 2006. The reliability of diagnostic techniques in the diagnosis and management of malaria in the absence of a gold standard. Lancet Infect Dis 6: 582–588.

- 26. Barat L, Chipipa J, Kolczak M, Sukwa T, 1999. Does the availability of blood slide microscopy for malaria at health centers improve the management of persons with fever in Zambia? Am J Trop Med Hyg 60: 1024–1030.

- 27. Reyburn H, et al. 2004. Overdiagnosis of malaria in patients with severe febrile illness in Tanzania: a prospective study. BMJ 329: 1212.

- 28. McMorrow ML, Masanja MI, Abdulla SM, Kahigwa E, Kachur SP, 2008. Challenges in routine implementation and quality control of rapid diagnostic tests for malaria—Rufiji District, Tanzania. Am J Trop Med Hyg 79: 385–390.

- 29. Perkins BA, et al. 1997. Evaluation of an algorithm for integrated management of childhood illness in an area of Kenya with high malaria transmission. Bull World Health Organ 75 (Suppl 1): 33–42.

- 30. Evans JA, Adusei A, Timmann C, May J, Mack D, Agbenyega T, Horstmann RD, Frimpong E, 2004. High mortality of infant bacteraemia clinically indistinguishable from severe malaria. QJM 97: 591–597.

- 31. Nateghpour M, Edrissian G, Raeisi A, Motevalli-Haghi A, Farivar L, Mohseni G, Rahimi-Froushani A, 2012. The role of malaria microscopy training and refresher training courses in malaria control program in Iran during 2001–2011. Iran J Parasitol 7: 104–109.

- 32. Namagembe A, et al. 2011. Improved clinical and laboratory skills after team-based, malaria case management training of health care professionals in Uganda. Malar J 11: 44.

- 33. Kiggundu M, Nsobya SL, Kamya MR, Filler S, Nasr S, Dorsey D, Yeka A, 2011. Evaluation of a comprehensive refresher training program in malaria microscopy covering four districts of Uganda. Am J Trop Med Hyg 84: 820–824.

- 34. Moura S, Fançony C, Mirante C, Neves M, Bernardino L, Fortes F, Sambo MR, Brito M, 2014. Impact of a training course on the quality of malaria diagnosis by microscopy in Angola. Malar J 13: 437.

- 35. Aiyenigba B, Ojo A, Aisiri A, Uzim J, Adeusi O, Mwenesi H, 2017. Immediate assessment of performance of medical laboratory scientists following a 10-day malaria microscopy training programme in Nigeria. Glob Health Res Policy 2: 32.

- 36. Odhiambo F, et al. 2017. Factors associated with malaria microscopy diagnostic performance following a pilot quality-assurance programme in health facilities in malaria low-transmission areas of Kenya, 2014. Malar J 16: 371.

- 37. Olukosi Y, et al. 2015. Assessment of competence of participants before and after 7-day intensive malaria microscopy training courses in Nigeria. MWJ 6: 6.

- 38. Bates I, Bekoe V, Asamoa-Adu A, 2004. Improving the accuracy of malaria-related laboratory tests in Ghana. Malar J 3: 38.

- 39. Ngasala B, Mubi M, Warsame M, Petzold MG, Massele AY, Gustafsson LL, Tomson G, Premji Z, Bjorkman A, 2008. Impact of training in clinical and microscopy diagnosis of childhood malaria on antimalarial drug prescription and health outcome at primary health care level in Tanzania: a randomized controlled trial. Malar J 7: 199.

- 40. Sarkinfada F, Aliyu Y, Chavasse C, Bates I, 2009. Impact of introducing integrated quality assessment for tuberculosis and malaria microscopy in Kano, Nigeria. J Infect Dev Ctries 3: 20–27.

- 41. Wanja E, et al. 2017. Evaluation of a laboratory quality assurance pilot programme for malaria diagnostics in low-transmission areas of Kenya, 2013. Malar J 16: 221.

- 42. Wickham H, 2016. ggplot2: Elegant Graphics for Data Analysis. New York, NY: Springer-Verlag.