Abstract

Background

Medical schools in low- and middle-income countries are facing a shortage of staff, limited infrastructure, and restricted access to fast and reliable internet. Offline digital education may be an alternative solution for these issues, allowing medical students to learn at their own time and pace, without the need for a network connection.

Objective

The primary objective of this systematic review was to assess the effectiveness of offline digital education compared with traditional learning or a different form of offline digital education such as CD-ROM or PowerPoint presentations in improving knowledge, skills, attitudes, and satisfaction of medical students. The secondary objective was to assess the cost-effectiveness of offline digital education, changes in its accessibility or availability, and its unintended/adverse effects on students.

Methods

We carried out a systematic review of the literature by following the Cochrane methodology. We searched seven major electronic databases from January 1990 to August 2017 for randomized controlled trials (RCTs) or cluster RCTs. Two authors independently screened studies, extracted data, and assessed the risk of bias. We assessed the quality of evidence using the Grading of Recommendations, Assessment, Development, and Evaluations criteria.

Results

We included 36 studies with 3325 medical students, of which 33 were RCTs and three were cluster RCTs. The interventions consisted of software programs, CD-ROMs, PowerPoint presentations, computer-based videos, and other computer-based interventions. The pooled estimate of 19 studies (1717 participants) showed no significant difference between offline digital education and traditional learning groups in terms of students’ postintervention knowledge scores (standardized mean difference=0.11, 95% CI –0.11 to 0.32; small effect size; low-quality evidence). Meta-analysis of four studies found that, compared with traditional learning, offline digital education improved medical students’ postintervention skills (standardized mean difference=1.05, 95% CI 0.15-1.95; large effect size; low-quality evidence). We are uncertain about the effects of offline digital education on students’ attitudes and satisfaction due to missing or incomplete outcome data. Only four studies estimated the costs of offline digital education, and none reported changes in the accessibility or availability of such education or any adverse effects. More than half of the included studies were judged to be at predominantly high risk of bias. The overall quality of the evidence was low (for knowledge, skills, attitudes, and satisfaction) due to study limitations and inconsistency across the studies.

Conclusions

Our findings suggest that offline digital education is as effective as traditional learning in terms of medical students’ knowledge and may be more effective than traditional learning in terms of medical students’ skills. However, there is a need to further investigate students’ attitudes and satisfaction with offline digital education as well as its cost-effectiveness, changes in its accessibility or availability, and any resulting unintended/adverse effects.

Keywords: medical education; systematic review; meta-analysis; randomized controlled trials; students, medical

Introduction

There is a global shortage of 2.6 million medical doctors according to the World Health Organization [1]. In low- and middle-income countries, this shortage is further exacerbated by migration, inadequate training programs, and poor infrastructure, including limited access to fast and reliable internet connections [2-6]. Additionally, the content, structure, and delivery mode of medical curricula in these countries are often inadequate to equip medical students with the knowledge, skills, and experience needed to meet their populations’ evolving health care needs [7]. To tackle these multifaceted and intertwined problems, complex measures need to be taken to increase not only the number of medical doctors but also the quality and relevance of their training [8]. Offline digital education offers a potential solution to these problems.

Offline digital education, herein also referred to as computer-based learning or computer-assisted instruction, was one of the first forms of digital education and was used before the internet became available on a global scale [9,10]. Unlike online digital education, offline digital education does not depend on the internet or a local area network connection. It can be delivered through CD-ROM, digital versatile disc (DVD)-ROM, external hard discs, universal serial bus (USB) memory sticks, or different software packages [11]. Offline digital education offers potential benefits over traditional modes of learning, including self-paced, self-directed learning, stimulation of various senses (eg, with visual and spatial components) [12,13], and the ability to represent content in a variety of media (eg, text, sound, and motion) [14]. The educational content is highly adaptable to learners’ needs and can be reviewed, repeated, and resumed at will. These interventions offer improved accessibility and flexibility and can transcend the geographical, temporal, and financial boundaries that medical students may face. By reducing the costs of transportation and classroom rental and by freeing up the time of medical curriculum providers [14-16], they may also offer substantial monetary savings.

A number of randomized controlled trials (RCTs) have evaluated the effectiveness of offline digital education in improving learning outcomes of medical students. Some of these trials were further evaluated in systematic reviews [13,17], but the findings were inconclusive. The primary objective of this systematic review was to evaluate the effectiveness of offline digital education compared with traditional learning or different forms of offline digital education in improving medical students’ knowledge, skills, attitudes, and satisfaction. The secondary objective was to assess the economic impact of offline digital education, changes in its accessibility or availability, and its unintended/adverse effects.

Methods

Protocol

For this systematic review, we adhered to the published protocol [18]. The methodology has been described in detail by the Digital Health Education Collaboration [19], a global initiative focused on evaluating the effectiveness of digital health professions education through a series of methodologically robust systematic reviews.

Search Strategy and Data Sources

Electronic Searches

We developed a comprehensive search strategy for MEDLINE (Ovid; see Multimedia Appendix 1 for the MEDLINE [Ovid] search strategy), Embase (Elsevier), Cochrane Central Register of Controlled Trials (CENTRAL) (Wiley), PsycINFO (Ovid), Education Resources Information Center (ERIC) (Ovid), Cumulative Index to Nursing and Allied Health Literature (EBSCO), and Web of Science Core Collection (Thomson Reuters). Databases were searched from January 1990 to August 2017. We selected 1990 as the starting year because, before then, the use of computers was limited to very basic tasks. There were no language restrictions. We searched the reference lists of all studies deemed eligible for inclusion in our review and of relevant systematic reviews. We also searched the International Clinical Trials Registry Platform Search Portal and the metaRegister of Controlled Trials to identify unpublished trials.

We developed a common, comprehensive search strategy for a series of systematic reviews focusing on different types of digital education (ie, offline digital education, online digital education, and mobile learning) for preregistration as well as postregistration health care professionals, and we initially retrieved 30,532 records from the bibliographic databases. This review includes only studies on the effectiveness of offline digital education in medical students’ education; findings on other types of digital education (such as virtual reality and mobile learning) within health professions education were reported separately [20-26].

For the purpose of this review, offline digital education is defined as offline, stand-alone computer-based or computer-assisted learning in which no internet or intranet connection is required for the learning activities. Traditional learning is defined as learning via traditional forms of education, such as paper- or textbook-based learning and didactic or face-to-face lectures. Blended digital education is defined as any intervention that combines offline digital education with traditional learning.

Inclusion Criteria

We included RCTs and cluster RCTs (cRCTs). Crossover trials were excluded due to a high likelihood of carry-over effect. We included studies with medical students enrolled in a preregistration, university degree program. Participants were not excluded based on age, gender, or any other sociodemographic characteristic.

We included studies in which offline digital education was used to deliver the learning content of the course. This included studies focused solely on offline digital education, or where offline digital education was part of a complex, multicomponent intervention. The main tasks of the learning activities were performed on a personal computer or laptop (with a hard keyboard). The delivery channel of the computer-based intervention was typically accessed via software programs, CD-ROM, DVD, hard disc, or USB memory stick. The focus was mainly on the learning activities that do not have to rely on any internet or online connection. Interventions where the internet connection was essential to provide learning content were excluded from this review.

Eligible control groups received traditional learning (eg, face-to-face lectures or discussions, or text- or textbook-based learning) or another form of offline digital education. We included studies that compared offline digital education or blended learning with traditional learning or with a different form of offline digital education, such as CD-ROM or PowerPoint presentations.

Learning outcomes were chosen based on the literature and relevance for medical students’ education [27]. Eligible studies had to report at least one of the specified primary or secondary outcomes. Primary outcomes (measured using any validated or nonvalidated instruments) were medical students’ knowledge scores (postintervention), medical students’ cognitive skills (postintervention), medical students’ postintervention attitudes toward the interventions or new clinical knowledge, and medical students’ postintervention satisfaction with the interventions. Secondary outcomes included the economic impact of offline digital education (eg, cost-effectiveness, implementation cost, and return on investment), changes in its accessibility or availability, and any resulting adverse effects.

Data Collection and Analysis

Selection of Studies

The search results from the different electronic databases were combined in a single EndNote X8.2 library, and duplicate records were removed [28]. Four review authors (BK, GD, MS, and UD) independently screened the titles and abstracts of all records to identify potentially eligible studies. We retrieved full-text copies of the articles deemed potentially relevant. Finally, two reviewers (BK and GD) independently assessed the full-text versions of the retrieved articles against the eligibility criteria. Any disagreements were resolved through discussion between the two reviewers, with a third review author (PP) acting as an arbiter when necessary.

Data Extraction and Management

Five reviewers (BK, GD, MS, UD, and VH) independently extracted relevant characteristics related to participants, intervention, comparators, outcome measures, and results from all the included studies using a standard data-collection form. Any disagreements between the reviewers were resolved by discussion. We contacted the study authors for any missing information, particularly information required to judge the risk of bias.

Assessment of Risk of Bias in Included Studies

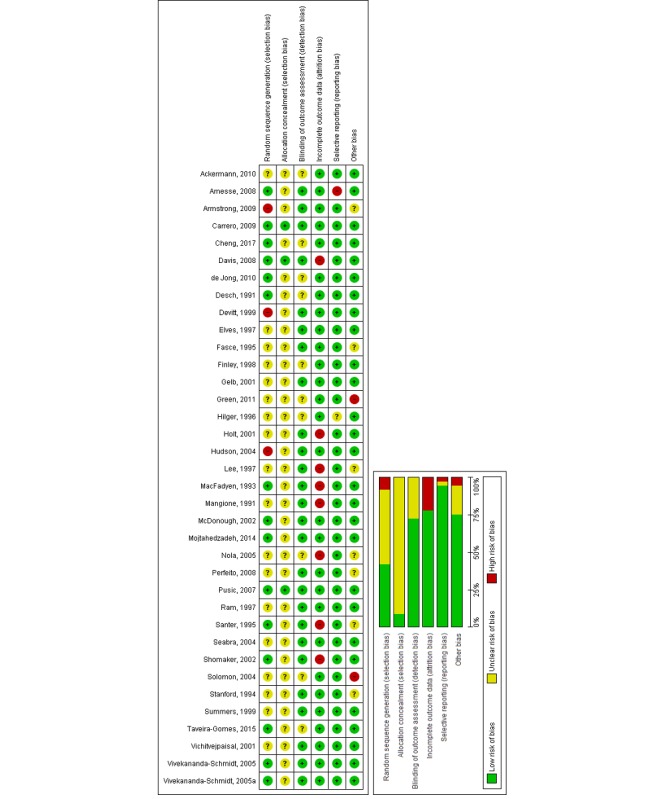

Four reviewers (BK, GD, MS, and UD) independently assessed the methodological risk of bias of included studies using the Cochrane methodology [29]. The following individual risk-of-bias domains were assessed in the included RCTs: random sequence generation, allocation concealment, blinding (outcome assessment), completeness of outcome data (attrition bias), selective outcome reporting (relevant outcomes reported), and other sources of bias (baseline imbalances).

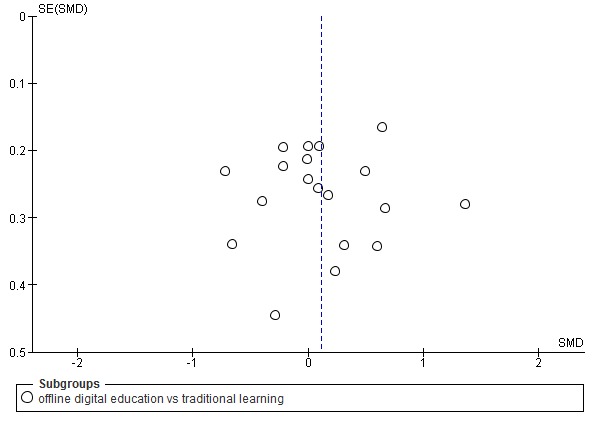

For cRCTs, we additionally assessed the following domains recommended by Puffer et al [30]: recruitment bias, baseline imbalance, loss of clusters, incorrect analysis, and comparability with individually randomized trials. Judgments concerning the risk of bias for each study were scored as high, low, or unclear. We incorporated the results of the risk-of-bias assessment into the review using a graph and a narrative summary. We also assessed publication bias using a funnel plot for comparisons with at least 10 studies.
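
The "incorrect analysis" domain concerns cRCTs analyzed as if individual students had been randomized, which overstates precision. One approximate correction described in the Cochrane Handbook divides each arm's sample size by the design effect, 1 + (m - 1) × ICC, where m is the average cluster size and ICC is the intracluster correlation coefficient. The Python sketch below illustrates only that arithmetic; the review does not state whether such an adjustment was applied, and the cluster size and ICC used in the example are hypothetical values.

```python
def design_effect(mean_cluster_size: float, icc: float) -> float:
    """Variance inflation factor for a cluster-randomized trial: 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc


def effective_sample_size(n: int, mean_cluster_size: float, icc: float) -> float:
    """Approximate unit-of-analysis correction: divide the arm's sample size by the design effect."""
    return n / design_effect(mean_cluster_size, icc)


# Hypothetical example: one trial arm of 120 students in clusters of about 20, ICC assumed to be 0.05
de = design_effect(20, 0.05)  # 1 + 19 * 0.05 = 1.95
n_eff = effective_sample_size(120, 20, 0.05)
print(f"design effect = {de:.2f}, effective n = {n_eff:.1f}")
```

The effective sample size (rather than the number of students) would then feed into the SMD and its variance, so a cRCT receives less weight in a pooled analysis than a naive individual-level analysis would give it.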

Measures of Treatment Effect

For continuous outcomes, we reported mean postintervention scores and SDs in each intervention group, along with the number of participants and P values. We reported mean postintervention outcome data to ensure consistency across the included studies, as this was the most commonly reported form of findings. We presented outcomes as postintervention standardized mean differences (SMDs) and interpreted effect sizes using the Cohen rule of thumb (ie, 0.2 representing a small effect, 0.5 a moderate effect, and 0.8 a large effect) [29,31]. For dichotomous outcomes, we calculated risk ratios and 95% CIs. If studies had multiple arms, we compared the most active intervention arm with the least active control arm and assessed the difference in postintervention outcomes. We used the standard method recommended by Higgins et al to convert the results [29].
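
As an illustration of these effect measures, the Python sketch below computes an SMD with its 95% CI from group means, SDs, and sample sizes, and a risk ratio with a log-scale 95% CI from event counts. It assumes the Hedges' adjusted g form of the SMD, which to our understanding is the form Review Manager reports; the function names and the example scores are hypothetical.

```python
import math

Z_95 = 1.96  # standard normal quantile for a two-sided 95% CI


def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """SMD (Hedges' adjusted g) of group 1 (intervention) vs group 2 (control), with its variance."""
    n = n1 + n2
    # Pooled within-group standard deviation
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n - 2))
    d = (m1 - m2) / sd_pooled
    g = d * (1 - 3 / (4 * n - 9))  # approximate small-sample bias correction
    var_g = n / (n1 * n2) + g ** 2 / (2 * (n - 3.94))
    return g, var_g


def ci95(estimate, variance):
    """Normal-approximation 95% CI for an estimate with the given variance."""
    half_width = Z_95 * math.sqrt(variance)
    return estimate - half_width, estimate + half_width


def risk_ratio(events1, n1, events2, n2):
    """Risk ratio of group 1 vs group 2 with a 95% CI computed on the log scale (needs nonzero events)."""
    rr = (events1 / n1) / (events2 / n2)
    se_log = math.sqrt(1 / events1 - 1 / n1 + 1 / events2 - 1 / n2)
    lo = math.exp(math.log(rr) - Z_95 * se_log)
    hi = math.exp(math.log(rr) + Z_95 * se_log)
    return rr, (lo, hi)


# Hypothetical postintervention scores: intervention 75 (SD 10, n=30) vs control 70 (SD 10, n=30)
g, var_g = hedges_g(75, 10, 30, 70, 10, 30)
print(round(g, 3), [round(x, 3) for x in ci95(g, var_g)])
```

A positive g favors the intervention group; a risk ratio below 1 means the outcome was less frequent in group 1. Studies with zero events in an arm would need a continuity correction, which this sketch does not implement.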

Data Synthesis

For meta-analysis, we used a random-effects model. For studies measuring the same continuous outcome on different scales, we estimated SMDs between groups, along with 95% CIs, using Review Manager 5.3 [32]. In the analysis of continuous outcomes and cRCTs, we used the inverse variance method. We displayed the results of the meta-analyses in forest plots showing effect estimates and 95% CIs for each individual study as well as a pooled effect estimate and 95% CI. For every step in the data analysis, we adhered to the statistical guidelines described by Higgins et al in 2011 [29].
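
The Python sketch below illustrates inverse-variance random-effects pooling of study SMDs, together with the I² statistic reported in the Results. It assumes the DerSimonian and Laird estimator of between-study variance (tau²), which to our understanding is the estimator Review Manager 5 uses for random-effects inverse-variance analyses; the function name and example inputs are hypothetical.

```python
import math


def random_effects_pool(effects, variances, z=1.96):
    """Inverse-variance random-effects pooling with the DerSimonian-Laird tau^2 estimator."""
    k = len(effects)
    if k == 0 or k != len(variances):
        raise ValueError("need one variance per effect estimate")
    w = [1 / v for v in variances]  # fixed-effect (inverse-variance) weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = k - 1
    if df > 0:
        c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
        tau2 = max(0.0, (q - df) / c)  # between-study variance, truncated at zero
    else:
        tau2 = 0.0
    w_re = [1 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    # I^2: percentage of total variability attributed to between-study heterogeneity
    i2 = 100 * max(0.0, (q - df) / q) if q > 0 else 0.0
    return {"pooled": pooled, "ci": (pooled - z * se, pooled + z * se), "tau2": tau2, "i2": i2}


# Hypothetical SMDs and their variances from three studies
result = random_effects_pool([0.2, 0.9, -0.1], [0.04, 0.05, 0.03])
lo, hi = result["ci"]
print(f"SMD {result['pooled']:.2f} (95% CI {lo:.2f} to {hi:.2f}), I2 = {result['i2']:.0f}%")
```

When studies disagree strongly (large Q relative to its degrees of freedom), tau² grows, the weights become more even across studies, and the pooled CI widens; this is why high I² values such as those reported below accompany wide CIs.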

We synthesized the findings from the included studies by the type of comparison: offline digital education (including PowerPoint and CD-ROM) versus traditional learning, offline digital education versus a different form of offline digital education, and blended learning versus traditional learning. Two authors (BK and GD) used the Grading of Recommendations, Assessment, Development, and Evaluations criteria to assess the quality of the evidence [33]. We considered the following criteria to evaluate the quality of the evidence, downgrading the quality where appropriate: limitations of studies (risk of bias), inconsistency of results (heterogeneity), indirectness of the evidence, imprecision (sample size and effect estimate), and publication bias. We prepared a summary of findings table [33] to present the results (Table 1). Where meta-analysis was not feasible, we presented the results in a narrative format similar to that used by Chan et al [34].
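
The downgrading logic can be illustrated with a simplified Python sketch: evidence from randomized trials starts at high certainty and is rated down by one or two levels for each serious concern in the five domains listed above. GRADE ratings are structured judgments rather than an arithmetic rule, so this is only an illustration; the function and the domain key names are hypothetical labels, not part of any GRADE tool.

```python
# Certainty levels in ascending order
LEVELS = ["very low", "low", "moderate", "high"]
# The five downgrading domains named in the text (key names are our own labels)
DOMAINS = {"risk_of_bias", "inconsistency", "indirectness", "imprecision", "publication_bias"}


def grade_certainty(downgrades: dict, start: str = "high") -> str:
    """Rate evidence down from `start` by the number of levels given per domain (0, 1, or 2)."""
    unknown = set(downgrades) - DOMAINS
    if unknown:
        raise ValueError(f"unknown GRADE domains: {sorted(unknown)}")
    if any(levels not in (0, 1, 2) for levels in downgrades.values()):
        raise ValueError("each domain can be rated down by 0, 1, or 2 levels")
    index = LEVELS.index(start) - sum(downgrades.values())
    return LEVELS[max(index, 0)]  # certainty cannot fall below "very low"


# As in the footnotes to Table 1: one level each for study limitations and inconsistency
print(grade_certainty({"risk_of_bias": 1, "inconsistency": 1}))
```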

Table 1.

Summary of findings table: Effects of offline digital education on knowledge, skills, attitudes, and satisfaction. Patient or population: medical students, Settings: university or hospital, Intervention: offline digital education, Comparison: offline digital education versus traditional learning.

| Outcomes | Illustrative comparative risks (95% CI) | Number of participants (number of studies) | Quality of the evidence (GRADEa) | Comments |
|---|---|---|---|---|
| Knowledge: Assessed with multiple-choice questions, questionnaires, essays, quizzes, and practical sections (from postintervention to 11-22 months of follow-up) | The mean knowledge score in the offline digital education groups was 0.11 SD higher (0.11 lower to 0.32 higher) | 1717 (19) | Lowb,c,d | The results from seven studies (689 participants) were not added to the meta-analysis due to incomplete or incomparable outcome data. These studies reported mixed findings: four studies (331 participants) favored the offline digital education group, two studies (289 participants) reported no difference, and one study (69 participants) favored the traditional learning group. |
| Skills: Assessed with checklists, Likert-type scales, and questionnaires (from postintervention to 1-10 months of follow-up) | The mean skills score in the offline digital education groups was 1.05 SD higher (0.15 higher to 1.95 higher) | 415 (4) | Lowb,c,d | The results of two studies (190 participants) were not added to the meta-analysis due to incomplete outcome data. One study (121 participants) favored the offline digital education group. The other study (69 participants) reported no difference between the groups immediately postintervention and favored the offline digital education group at 1 month of follow-up. |
| Attitude: Assessed with Likert scales, questionnaires, and surveys (from postintervention to 5 weeks of follow-up) | Not estimable | 493 (5) | Lowb,c,d | One study (54 participants) reported higher postintervention attitude scores with offline digital education than with traditional learning. We were uncertain about the effect in four studies (439 participants) due to incomplete outcome data. |
| Satisfaction: Assessed with Likert scales, questionnaires, and surveys (postintervention) | Not estimable | 1442 (15) | Lowb,c,d | Two studies (144 participants) favored traditional learning and two studies (103 participants) reported little or no difference between the groups. We were uncertain about the effect in 11 studies (1195 participants) due to incomplete outcome data. |

aGRADE: Grading of Recommendations, Assessment, Development, and Evaluations.

bLow quality: Further research is very likely to have an important impact on our confidence in the estimate of effect and is likely to change the estimate.

cRated down by one level for study limitations. The risk of bias was unclear for sequence generation and allocation concealment in the majority of the studies.

dRated down by one level for inconsistency. Heterogeneity was high, with large variations in effect and a lack of overlap among CIs.

Results

Results of the Search

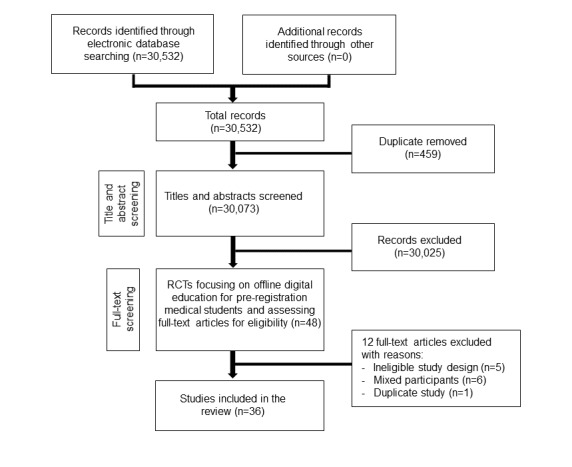

Our search strategy retrieved 30,532 unique references (Figure 1).

Figure 1.

Preferred Reporting Items for Systematic Reviews and Meta-Analyses flow diagram. RCT: randomized controlled trials.

Included Studies

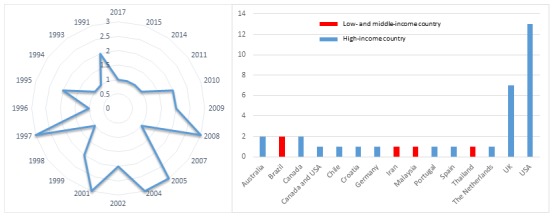

We included 36 studies from 35 reports involving 3325 participants [10,35-68] (Table 2). Of these, 33 studies were RCTs and the remaining three studies (from two reports) were cRCTs [56,68]. One report [68] described two cRCTs, and we analyzed their results separately. Thirty-two studies (89%) were published in or before 2010, and only four studies (11%) were published after 2010 (Figure 2) [39,48,55,66].

Table 2.

Characteristics of the included studies.

| Study, design, and country | Population, n (medical student year) | Field of study | Outcomes |
|---|---|---|---|
| Ackermann et al 2010 [35], RCTa, Germany | 20 (not specified) | Surgery (orthopedic surgery) | Skill |
| Amesse 2008 [36], RCT, United States | 36 (third year) | Radiology | Knowledge |
| Armstrong et al 2009 [37], RCT, United Kingdom | 21 (fourth year) | Arterial blood gas interpretation | Knowledge and satisfaction |
| Carrero et al 2009 [38], RCT, Spain | 68 (third year) | Basic life support algorithms | Knowledge |
| Cheng et al 2017 [39], RCT, United States | 41 (second, third, and fourth year) | Orthopedics | Skill |
| Davis et al 2008 [40], RCT, United Kingdom | 229 (first year) | Evidence-based medicine | Knowledge and attitude |
| de Jong et al 2010 [41], RCT, The Netherlands | 107 (third year) | Musculoskeletal problems | Knowledge and satisfaction |
| Desch et al 1991 [42], RCT, United States | 78 (third year) | Pediatrics (neonatal management) | Knowledge, satisfaction, and cost |
| Devitt and Palmer 1999 [43], RCT, Australia | 90 (second year) | Anatomy and physiology | Knowledge |
| Elves et al 1997 [44], RCT, United Kingdom | 26 (third year) | Urology | Knowledge and satisfaction |
| Fasce et al 1995 [45], RCT, Chile | 100 (fourth year) | Medicine (hypertension) | Knowledge, attitude, and satisfaction |
| Finley et al 1998 [46], RCT, Canada | 40 (second year) | Medicine (auscultation of heart) | Knowledge and satisfaction |
| Gelb 2001 [47], RCT, United States | 107 (not specified) | Anatomy | Knowledge and satisfaction |
| Green and Levi 2011 [48], RCT, United States | 121 (second year) | Advanced care planning | Knowledge, skill, and satisfaction |
| Hilger et al 1996 [49], RCT, United States | 77 (third year) | Medicine (pharyngitis) | Knowledge and attitude |
| Hudson 2004 [10], RCT, Australia | 100 (third year) | Neuroanatomy and neurophysiology | Knowledge |
| Holt et al 2001 [50], RCT, United Kingdom | 185 (first year) | Endocrinology | Knowledge, satisfaction, and cost |
| Lee et al 1997 [51], RCT, United States | 82 (second year) | Biochemistry/acid-base problem solving | Knowledge and satisfaction |
| MacFadyen et al 1993 [52], RCT, Canada | 54 (fourth year) | Clinical pharmacology | Knowledge and attitude |
| Mangione et al 1991 [53], RCT, United States | 35 (third year) | Auscultation of the heart | Knowledge and attitude |
| McDonough and Marks 2002 [54], RCT, United Kingdom | 37 (third year) | Psychiatry | Knowledge and satisfaction |
| Mojtahedzadeh et al 2014 [55], RCT, Iran | 61 (third year) | Physiology of hematology and oncology | Knowledge and satisfaction |
| Nola et al 2005 [56], cRCTb, Croatia | 225 (not specified) | Pathology | Knowledge |
| Perfeito et al 2008 [57], RCT, Brazil | 35 (fourth year) | Surgery | Knowledge and satisfaction |
| Pusic et al 2007 [58], RCT, Canada and United States | 152 (final year) | Radiology | Knowledge and satisfaction |
| Ram 1997 [59], RCT, Malaysia | 64 (final year) | Cardiology | Knowledge |
| Santer et al 1995 [60], RCT, United States | 179 (third and fourth year) | Pediatrics | Knowledge and satisfaction |
| Seabra et al 2004 [61], RCT, Brazil | 60 (second and third year) | Urology | Knowledge and satisfaction |
| Shomaker et al 2002 [62], RCT, United States | 94 (second year) | Parasitology | Knowledge and satisfaction |
| Solomon et al 2004 [63], RCT, United States | 29 (third year) | Learning concepts (digital and live lecture formats) | Knowledge |
| Stanford et al 1994 [64], RCT, United States | 175 (first year) | Anatomy (cardiac anatomy) | Knowledge and satisfaction |
| Summers et al 1999 [65], RCT, United States | 69 (first year) | Surgery | Knowledge and skill |
| Taveira-Gomes et al 2015 [66], RCT, Portugal | 96 (fourth and fifth year) | Cellular biology | Knowledge |
| Vichitvejpaisal et al 2001 [67], RCT, Thailand | 80 (third year) | Arterial blood gas interpretation | Knowledge |
| Vivekananda-Schmidt et al 2005 [68], cRCT, United Kingdom (Newcastle)c | 241 (third year) | Orthopedics (musculoskeletal examination skills) | Skill and cost |
| Vivekananda-Schmidt et al 2005 [68], cRCT, United Kingdom (London)c | 113 (third year) | Orthopedics (musculoskeletal examination skills) | Skill and cost |

aRCT: randomized controlled trial.

bcRCT: cluster randomized controlled trial.

cThis study reported its results from two separate cRCTs. We analyzed data from the two cRCTs separately.

Figure 2.

Number of publication(s) on offline digital education in relation to their year of publication and country of origin.

The number of participants across the studies varied from 20 [35] to 241 [68], and individual studies focused on different areas of medical education. For the intervention groups, 20 studies used software programs [10,42,43,45,48-51,53,54,56,58-62,64-67], nine used CD-ROMs [35,36,40,44,46,55,57,68], four used PowerPoint presentations [37,38,41,63], one used a computer-based video [39], and two did not specify the type of intervention [47,52]. The duration of the interventions ranged from 10 minutes [39] to 3 weeks [41]; four studies did not report the duration of the intervention [47,48,56,63]. The frequency of the intervention ranged from once [35,38-40,48,49,51,52,55,57-61,64,65,67,68] to six times [50], and the intensity ranged from 10 minutes [39] to 11.1 hours [53]. Nine studies provided instructions on how to use the software [39,42,44,46,50,53,65,68]. Eight studies reported security arrangements [36,37,43,45,49,50,53,58]. For the control groups, 30 studies used traditional methods of learning such as face-to-face lectures, paper- or textbook-based learning resources, laboratory courses, practical workshops, or small group tutorials [10,36-42,44,45,47-57,59-65,67,68]. Five studies used different forms of offline digital education as the controls [35,43,46,58,66]. One study compared blended learning (computer-assisted learning in addition to traditional learning) with traditional learning alone [44]. More information on the types of interventions is provided in Multimedia Appendix 2.

Knowledge

Overview

Knowledge was assessed as the primary outcome in 32 studies [10,36-38,40-67], and the majority of these studies (69%) used multiple-choice questions or questionnaires to measure the outcome. Twenty-nine studies assessed knowledge using nonvalidated instruments [36-38,40-49,51-57,59-67], while three studies [10,50,58] used validated instruments such as multiple-choice questions, questionnaires, and tests. Twenty-six studies assessed postintervention knowledge scores, while six studies assessed both short-term and long-term knowledge retention, ranging from 1 week to 22 months of follow-up [52,60,62,65-67] (Multimedia Appendix 3).

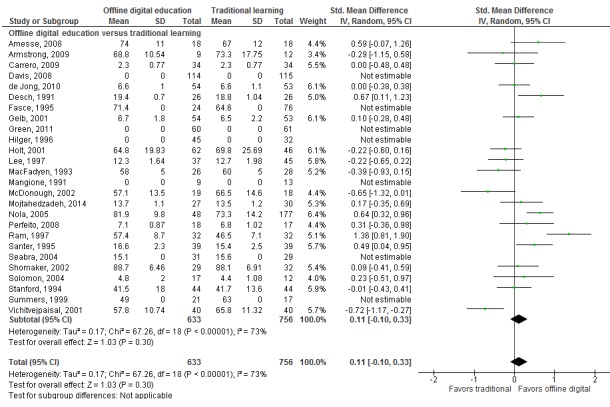

Offline Digital Education Versus Traditional Learning

Meta-analysis of 19 studies showed no significant difference between offline digital education and traditional learning in postintervention knowledge scores (SMD 0.11, 95% CI –0.11 to 0.32; 1717 participants; small effect size; low-quality evidence; Figure 3). There was substantial heterogeneity in the pooled analysis (I²=73%). The remaining seven studies were not pooled due to incomplete or incomparable outcome data [40,45,48,49,53,61,65] and reported mixed findings. Four studies (331 participants) reported a significant difference in postintervention knowledge scores in favor of offline digital education [45,48,49,53]. Two studies (289 participants) reported no significant difference between the interventions [40,61], and one study (69 participants) reported a significant difference in postintervention knowledge scores in favor of traditional learning [65]. Taken together, these findings suggest that offline digital education had effects on medical students’ postintervention knowledge scores similar to those of traditional learning.

Figure 3.

Forest plot of studies comparing offline digital education with traditional learning: postintervention knowledge outcome. IV: inverse variance; Random: random-effects model.

Blended Learning Versus Traditional Learning

One study (26 participants) assessed postintervention knowledge scores in blended learning (offline digital education plus traditional learning) versus traditional learning alone and reported a significant difference in favor of blended learning (SMD 0.81, 95% CI 0.01-1.62; large effect size) [44].

Offline Digital Education Versus Offline Digital Education

Five studies (478 participants) compared one form of offline digital education (eg, CD-ROM and software programs) to another form of offline digital education (eg, CD-ROM, software programs, or computer-assisted learning programs) [10,43,46,58,66].

Devitt and Palmer reported higher knowledge scores in the computer-based group than in the free-text entry program group (SMD 1.62, 95% CI 0.93-2.31; large effect size) at 2 weeks postintervention [43]. Taveira-Gomes et al also reported higher postintervention knowledge scores in the computer-based software group (ie, using flashcard-based learning materials on cellular structure) than with a computer-based method alone (ie, without flashcards; SMD 2.17, 95% CI 1.67-2.67; large effect size) [66]. Pusic et al reported that a simple linear computer program was as effective as a more interactive, branched version of the program in terms of postintervention knowledge scores (SMD 0, 95% CI –0.33 to 0.33; small effect size) [58]. The effects in two studies were uncertain due to incomplete outcome data [10,46]. Overall, the findings were mixed and inconclusive.

Skills

Overview

Six studies from five reports (605 participants) assessed skills as a primary outcome [35,39,48,65,68]. Three studies used validated instruments to measure the outcome such as an Objective Structured Clinical Examination [68] and a checklist rating form [65]. The remaining three studies used nonvalidated instruments such as questionnaires [35], a six-point checklist scale [39], and self-assessment [48]. Four studies [35,39,48,68] assessed postintervention skills scores, while two studies [65,68] assessed both short-term and long-term skill retention, ranging from 1 month to 10 months of follow-up (Multimedia Appendix 3).

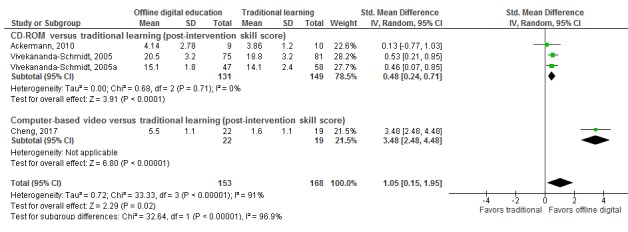

Offline Digital Education Versus Traditional Learning

Meta-analysis of four studies showed that, compared with traditional learning, offline digital education improved medical students’ postintervention skill scores (SMD 1.05, 95% CI 0.15-1.95; large effect size; low-quality evidence; Figure 4). There was, however, considerable heterogeneity in the pooled analysis (I²=91%).

Figure 4.

Forest plot of studies comparing offline digital education with traditional learning: postintervention skill outcome. IV: inverse variance; Random: random-effects model. Vivekananda-Schmidt, 2005 was conducted in Newcastle and Vivekananda-Schmidt, 2005a was conducted in London.

The results of two studies were not pooled due to incomplete outcome data [48,65]. These studies also reported higher postintervention skill scores with offline digital education than with traditional learning [48,65]. Taken together, these results suggest that offline digital education may improve postintervention skill scores compared to traditional learning.

Attitude

Five studies (493 participants) reported students’ postintervention attitudes toward the intervention or new clinical knowledge [40,45,49,52,53]. Attitudes were measured using Likert-based questionnaires [40,49,52], surveys [45], and the Computer Anxiety Index [53]. None of the studies used validated tools to measure the outcome. MacFadyen et al reported higher postintervention attitude scores in the offline digital education group than in the traditional learning group (SMD 2.71, 95% CI 1.96-3.47; large effect size) [52]. One study reported higher attitude scores in the intervention group than in the traditional learning group (89% vs 47%) [45]. Two studies did not report numerical data for either study group [40,49], and one study assessed postintervention attitudes in the intervention group only [53]. Because of missing or incomplete outcome data, we were unable to judge the effects of the interventions in four studies [40,45,49,53]. Overall, the effect of offline digital education on attitudes remains uncertain because most of the included studies lacked outcome data.

Satisfaction

Eighteen studies (1660 participants) assessed postintervention satisfaction [37,41,42,44-48,50,51,54,55,57,58,60-62,64]. Nine studies used Likert-type rating scales [42,46,48,51,54,55,58,61,62], eight studies used questionnaires [37,41,44,47,50,57,60,64], and one study used a survey [45] to assess participants’ postintervention satisfaction. None of the studies used validated tools to measure the outcome.

Fifteen studies comparing offline digital education with traditional learning assessed satisfaction. Two studies [41,54] reported higher postintervention satisfaction scores in the traditional learning group than in the offline digital education group (risk ratio=0.46, 95% CI 0.30-0.69; small effect size; SMD –1.33, 95% CI –2.05 to –0.61; large effect size). Two other studies reported no significant difference in students’ postintervention satisfaction between the groups [37,51]. The remaining 11 studies reported incomplete or incomparable outcome data [42,45,47,48,50,55,57,60-62,64]. Overall, we are uncertain about the effect of offline digital education on students’ satisfaction compared with traditional learning.
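A dichotomous satisfaction outcome, such as the one behind the risk ratio of 0.46 above, is summarized as a risk ratio. The sketch below computes a risk ratio with a Wald-type 95% CI on the log scale. A ratio below 1 means that the event was less frequent in the first group. The function name and the counts are hypothetical. The sketch assumes there are no zero cells; handling zero cells would require a continuity correction, which is not implemented here.

```python
import math
from typing import Tuple


def risk_ratio(
    events_1: int, total_1: int, events_2: int, total_2: int, z: float = 1.959964
) -> Tuple[float, float, float]:
    """Risk ratio of group 1 versus group 2 with a Wald CI on the log scale."""
    if not (0 < events_1 <= total_1 and 0 < events_2 <= total_2):
        raise ValueError("event counts must be positive and no larger than group sizes")
    rr = (events_1 / total_1) / (events_2 / total_2)
    # Approximate standard error of log(RR)
    se_log = math.sqrt(1 / events_1 - 1 / total_1 + 1 / events_2 - 1 / total_2)
    lower = math.exp(math.log(rr) - z * se_log)
    upper = math.exp(math.log(rr) + z * se_log)
    return rr, lower, upper


# Hypothetical counts: 12/30 satisfied with offline digital education vs 24/30 with traditional learning
rr, lower, upper = risk_ratio(12, 30, 24, 30)
print(f"RR {rr:.2f}, 95% CI {lower:.2f} to {upper:.2f}")
```

With these hypothetical counts, the risk ratio is 0.5 and its confidence interval lies entirely below 1.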

Three studies comparing different forms of offline digital education and blended learning to traditional learning also assessed satisfaction. However, we were unable to judge the overall effect of the intervention in the three studies due to missing or incomparable outcome data [44,46,58].

Secondary Outcomes

Four studies (617 participants) reported the costs of offline digital education [42,50,68]. However, none of the included studies compared costs between the intervention and control groups.

Desch et al reported that the authoring system (a computer-assisted instructional software program) cost US $600. The study also spent US $1500 to hire a student to develop the program [42]. The microcomputers used by the students in this study were located in a large shared microcomputer area in the medical library and served multiple purposes. Holt et al reported that the total cost of the equipment specifically needed to set up the computer-assisted learning course (including slide and document scanners, sound recording, a laptop, and software) was approximately £3000 (~US $4530) [50]. The two Vivekananda-Schmidt et al studies (conducted in Newcastle and London) reported that designing a virtual rheumatology CD cost £11,740 (US $22,045) [68].

No studies reported adverse or unintended effects of the interventions or changes in the accessibility or availability of offline digital education.

Risk of Bias in Included Studies

As shown in Figure 5, the risk of bias was unclear or high in most of the studies, largely because the included studies lacked relevant information. Fourteen studies (39%) were judged to be at low risk of bias, with a low risk in at least four of six domains [38,39,41,42,54,55,58,59,61,65-68]. Twenty-two studies (61%) were judged to be at high risk of bias because they had an unclear risk in at least three of six domains or a high risk in at least one domain [10,35-37,40,43-53,56,57,60,62-64]. For the knowledge outcome, the funnel plot of studies comparing offline digital education with traditional learning appeared symmetrical, suggesting a low risk of publication bias (Figure 6). The overall risk of bias for cRCTs was unclear because the included studies provided limited information (Multimedia Appendix 4).
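The overall classification rule described above can be written as a small decision function. This Python sketch assumes three possible judgements per domain (low, unclear, and high). As our own assumption, it applies the high-risk criteria first, because otherwise a study could meet both criteria (eg, four low-risk domains and one high-risk domain). With that precedence, the two criteria cover every combination of six judgements. A study with no high-risk domain and at most two unclear domains necessarily has at least four low-risk domains. The function name is hypothetical.

```python
from collections import Counter
from typing import Sequence

VALID = {"low", "unclear", "high"}


def overall_risk_of_bias(domains: Sequence[str]) -> str:
    """Classify a study from its six domain-level judgements."""
    if len(domains) != 6:
        raise ValueError("expected judgements for exactly six domains")
    counts = Counter(domains)
    if not set(counts) <= VALID:
        raise ValueError(f"unexpected judgement(s): {sorted(set(counts) - VALID)}")
    # Assumed precedence: the high-risk criteria are checked first
    if counts["high"] >= 1 or counts["unclear"] >= 3:
        return "high"
    # Otherwise no domain is high and at most two are unclear,
    # so at least four of the six domains are low
    return "low"


examples = [
    ["low"] * 4 + ["unclear"] * 2,
    ["low"] * 5 + ["high"],
    ["low"] * 3 + ["unclear"] * 3,
]
print([overall_risk_of_bias(e) for e in examples])  # ['low', 'high', 'high']
```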

Figure 5.

Risk of bias summary: review authors' judgements about each risk of bias item across all included studies.

Figure 6.

Funnel plot of studies comparing offline digital education with traditional learning, postintervention knowledge outcome. SMD: standardized mean difference.

Discussion

Principal Findings

Our findings suggest that offline digital education may be as effective as traditional learning in improving medical students’ postintervention knowledge and may be more effective in improving their skills, with effect sizes ranging from small (for knowledge) to large (for skills). We are uncertain about the effects on attitudes and satisfaction because of missing or incompletely reported data. None of the studies reported changes in the accessibility or availability of education or adverse effects of the interventions. Only four studies reported the cost of offline digital education interventions, and none provided cost estimates for the comparator groups.

Several limitations of the included literature need to be highlighted. First, the evidence was of low quality because of the predominantly high risk of bias (study limitations) and inconsistency (high heterogeneity in the pooled analyses). Furthermore, the included studies were highly heterogeneous in terms of student populations (years 1-5), comparator groups (traditional learning and different forms of offline digital education), outcomes and measurement tools (multiple-choice questionnaires, surveys, Likert-type scales, questionnaires, essays, quizzes, practical sections), study designs, settings (university or hospital), and interventions (learning content, delivery mode, duration, frequency, intensity, and security arrangements). The included studies encompass a reasonable range of interventions and content. However, the data come mostly from high-income countries, which limits the generalizability of our findings to other settings, including low- and middle-income countries.

We also found that reporting in the included studies was often poor. For example, only four studies (11%) reported the setup costs of the interventions, and none of them provided comparison data. Moreover, none of the studies reported a learning theory underpinning the development or application of the offline digital education. Most of the studies (92%) used nonvalidated instruments to measure outcomes, which undermines the reliability and credibility of digital education research. Furthermore, 20 studies (56%) used software or computer programs as the main mode of delivering the learning content, yet the technical aspects of these programs, such as their design or functions, were often not reported.

Offline digital education has the potential to play an important role in medical students’ education, especially in low- and middle-income countries. Implementing offline digital education in medical education may require much less investment and infrastructure than other forms of digital education (eg, virtual reality or online computer-based education). Because it is scalable, offline digital education could help address the shortage of medical doctors. As of 2016, more than 4 billion people still lacked internet access [6]. Offline digital education could therefore serve as a major mode of delivering education in medical schools in low- and middle-income countries and as an alternative mode in high-income countries.

To the best of our knowledge, only two other reviews have examined the effectiveness of offline digital education in similar populations [13,17]. One of these reviews, published in 2001, suggested that offline digital education (computer-assisted learning) could reduce the costs of education and increase the number of medical students [13]. However, it made no formal assessment of cost-related outcomes and recommended further research. A review by Rasmussen et al concluded that offline digital education was equivalent or possibly superior to traditional learning in improving the knowledge, skills, attitudes, and satisfaction of preregistration health professionals [17], which is largely in line with our findings. However, Rasmussen et al used a much narrower search timeframe and included all preregistration health care professionals (ie, students from medical, dental, nursing, and allied health care fields). As a result, their review could not provide specific recommendations for medical students’ education. Our review provides up-to-date evidence from a comprehensive search strategy, focuses on medical students’ education, and includes meta-analyses of studies on knowledge and skills.

Strengths

Strengths of this systematic review include comprehensive searches with no language limitations, as well as independent screening, data extraction, and risk-of-bias assessment. By including studies published from 1990 onward, the review provides comprehensive and up-to-date evidence on the effectiveness of different types of offline digital education for medical students’ education.

Limitations

Some limitations must be acknowledged when interpreting the results. First, we were unable to obtain missing information from the study authors despite multiple attempts. Second, we presented postintervention data rather than mean change scores, because most of the included studies (81%) reported postintervention data and only seven studies (19%) reported mean change scores. Third, we were unable to determine whether the study administrators received any incentives from the software or program developers, which could have introduced bias. Lastly, we were unable to carry out the prespecified subgroup analyses because there were too few studies for the respective outcomes and because of the considerable heterogeneity in populations, interventions, comparators, and outcome measures.

Implications for Research and Practice

We believe that offline digital education interventions can be practically introduced in medical students’ education for improving their knowledge and skills in places where internet connectivity is limited, which may be of most concern in low- and middle-income countries. However, when interpreting the findings of this systematic review, stakeholders need to consider other factors such as students’ geographical location or features of the intervention such as interactivity, duration, frequency, intensity, and delivery mode.

Future studies should evaluate the cost-effectiveness and sustainability of the interventions as well as their direct and indirect costs (eg, time to develop or implement the educational module). Future research should also report on potential or actual adverse effects of the interventions. Most of the studies assessed only the short-term effectiveness of the interventions, so knowledge and skill retention also needs to be evaluated over longer follow-up periods (eg, 6-12 months). Other aspects of the interventions, such as different levels of interactivity or feedback in low- and middle-income countries, also remain to be explored. Addressing these gaps in the evidence will help policy makers and curriculum planners allocate resources appropriately.

Conclusions

The findings from this review suggest that offline digital education is as effective as traditional learning in terms of medical students’ knowledge and may be more effective in improving their skills. However, the evidence on other outcomes is inconclusive or limited. Future research should evaluate the effectiveness of offline digital education interventions in low- and middle-income countries and report on outcomes such as attitudes, satisfaction, adverse effects, and economic impact.

Acknowledgments

This review was conducted in collaboration with the Health Workforce Department at the World Health Organization. We would also like to thank Mr Carl Gornitzki, Ms GunBrit Knutssön, and Mr Klas Moberg from the University Library, Karolinska Institutet, Sweden, for developing the search strategy, and the peer reviewers for their comments. We gratefully acknowledge funding from the Lee Kong Chian School of Medicine, Nanyang Technological University, Singapore (eLearning for health professionals’ education grant). Additionally, we would like to thank Ms Sabrina Andrea Spieck, Ms Maja Magdalena Olsson, and Mr Geronimo Jimenez for translating German and Spanish papers. We would also like to thank Dr Olena Zhabenko and Dr Ram Chandra Bajpai for their suggestions on data analysis.

Abbreviations

- DVD: digital versatile disc
- USB: universal serial bus
- RCT: randomized controlled trial
- cRCT: cluster randomized controlled trial
- SMD: standardized mean difference
- GRADE: Grading of Recommendations, Assessment, Development, and Evaluations

MEDLINE (Ovid) Search Strategy.

Characteristics of the included studies.

Results of the included studies.

Risk of bias for cluster randomized controlled trials.

Footnotes

Authors' Contributions: LC conceived the idea for the review. BK, PP, and GD wrote the review. LC, PP, and MS peer reviewed the review. LC provided methodological guidance on the review. PP, MS, UD, VH, and LTC provided comments on the review.

Conflicts of Interest: None declared.

References

1. World Health Organization. 2016. [2018-11-14]. High-Level Commission on Health Employment and Economic Growth Health workforce. http://www.who.int/hrh/com-heeg/en/

2. Anand S, Bärnighausen T. Human resources and health outcomes: cross-country econometric study. Lancet. 2004;364(9445):1603–9. doi: 10.1016/S0140-6736(04)17313-3.

3. Campbell J, Dussault G, Buchan J, Pozo-Martin F, Guerra AM, Leone C, Siyam A, Cometto G. World Health Organization. 2013. [2019-03-01]. A universal truth: no health without a workforce. https://www.who.int/workforcealliance/knowledge/resources/GHWA_AUniversalTruthReport.pdf

4. Chen LC. Striking the right balance: health workforce retention in remote and rural areas. Bull World Health Organ. 2010 May;88(5):323, A. doi: 10.2471/BLT.10.078477. http://europepmc.org/abstract/MED/20461215

5. Hongoro C, McPake B. How to bridge the gap in human resources for health. Lancet. 2004;364(9443):1451–6. doi: 10.1016/S0140-6736(04)17229-2.

6. Luxton E. World Economic Forum. [2019-02-27]. 4 billion people still don't have internet access. Here's how to connect them. https://www.weforum.org/agenda/2016/05/4-billion-people-still-don-t-have-internet-access-here-s-how-to-connect-them/

7. Frenk J, Chen L, Bhutta ZA, Cohen J, Crisp N, Evans T, Fineberg H, Garcia P, Ke Y, Kelley P, Kistnasamy B, Meleis A, Naylor D, Pablos-Mendez A, Reddy S, Scrimshaw S, Sepulveda J, Serwadda D, Zurayk H. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet. 2010 Dec 04;376(9756):1923–58. doi: 10.1016/S0140-6736(10)61854-5.

8. World Health Organization. 2015. [2018-11-14]. eLearning for undergraduate health professional education: a systematic review informing a radical transformation of health workforce development. http://www.who.int/hrh/documents/14126-eLearningReport.pdf

9. Carr MM, Reznick RK, Brown DH. Comparison of computer-assisted instruction and seminar instruction to acquire psychomotor and cognitive knowledge of epistaxis management. Otolaryngol Head Neck Surg. 1999 Oct;121(4):430–4. doi: 10.1016/S0194-5998(99)70233-0.

10. Hudson JN. Computer-aided learning in the real world of medical education: does the quality of interaction with the computer affect student learning? Med Educ. 2004 Aug;38(8):887–95. doi: 10.1111/j.1365-2929.2004.01892.x.

11. Crisp N, Gawanas B, Sharp I, Task Force for Scaling Up Education and Training for Health Workers. Training the health workforce: scaling up, saving lives. The Lancet. 2008 Feb;371(9613):689–691. doi: 10.1016/S0140-6736(08)60309-8.

12. Brandt MG, Davies ET. Visual-spatial ability, learning modality and surgical knot tying. Can J Surg. 2006 Dec;49(6):412–6. http://www.canjsurg.ca/vol49-issue6/49-6-412/

13. Greenhalgh T. Computer assisted learning in undergraduate medical education. BMJ. 2001 Jan 06;322(7277):40–4. doi: 10.1136/bmj.322.7277.40. http://europepmc.org/abstract/MED/11141156

14. Glicksman JT, Brandt MG, Moukarbel RV, Rotenberg B, Fung K. Computer-assisted teaching of epistaxis management: a Randomized Controlled Trial. Laryngoscope. 2009 Mar;119(3):466–72. doi: 10.1002/lary.20083.

15. Hunt CE, Kallenberg GA, Whitcomb ME. Medical students' education in the ambulatory care setting: background paper 1 of the Medical School Objectives Project. Acad Med. 1999 Mar;74(3):289–96. doi: 10.1097/00001888-199903000-00022.

16. Woo MK, Ng KH. A model for online interactive remote education for medical physics using the Internet. J Med Internet Res. 2003;5(1):e3. doi: 10.2196/jmir.5.1.e3. http://www.jmir.org/2003/1/e3/

17. Rasmussen K, Belisario JM, Wark PA, Molina JA, Loong SL, Cotic Z, Papachristou N, Riboli-Sasco E, Tudor Car L, Musulanov EM, Kunz H, Zhang Y, George PP, Heng BH, Wheeler EL, Al Shorbaji N, Svab I, Atun R, Majeed A, Car J. Offline eLearning for undergraduates in health professions: A systematic review of the impact on knowledge, skills, attitudes and satisfaction. J Glob Health. 2014 Jun;4(1):010405. doi: 10.7189/jogh.04.010405. http://europepmc.org/abstract/MED/24976964

18. Hervatis V, Kyaw B, Semwal M, Dunleavy G, Tudor CL, Zary N. PROSPERO CRD42016045679. 2016 Apr 15. [2019-02-18]. Offline and computer-based eLearning interventions for medical students' education [Cochrane Protocol]. http://www.crd.york.ac.uk/PROSPERO/display_record.php?ID=CRD42016045679

19. Car J, Carlstedt-Duke J, Tudor Car L, Posadzki P, Whiting P, Zary N, Atun R, Majeed A, Campbell J, Digital Health Education Collaboration. Digital Education in Health Professions: The Need for Overarching Evidence Synthesis. J Med Internet Res. 2019 Dec 14;21(2):e12913. doi: 10.2196/12913. http://www.jmir.org/2019/2/e12913/

20. Posadzki P, Bala M, Kyaw BM, Semwal M, Divakar U, Koperny M, Sliwka A, Car J. Offline digital education for post-registration health professions: a systematic review by the Digital Health Education collaboration. J Med Internet Res (forthcoming) 2018. doi: 10.2196/12968. https://preprints.jmir.org/preprint/12968

21. Kyaw BM, Saxena N, Posadzki P, Vseteckova J, Nikolaou CK, George PP, Divakar U, Masiello I, Kononowicz AA, Zary N, Tudor Car L. Virtual Reality for Health Professions Education: Systematic Review and Meta-Analysis by the Digital Health Education Collaboration. J Med Internet Res. 2019 Jan 22;21(1):e12959. doi: 10.2196/12959. http://www.jmir.org/2019/1/e12959/

22. Tudor Car L, Kyaw BM, Dunleavy G, Smart NA, Semwal M, Rotgans JI, Low-Beer N, Campbell J. Digital Problem-Based Learning in Health Professions: Systematic Review and Meta-Analysis by the Digital Health Education Collaboration. J Med Internet Res. 2019 Feb 28;21(2):e12945. doi: 10.2196/12945. http://www.jmir.org/2019/2/e12945/

23. Gentry SV, Gauthier A, Ehrstrom BL, Wortley D, Lilienthal A, Tudor Car L, Dauwels-Okutsu S, Nikolaou CK, Zary N, Campbell J, Car J. Serious gaming and gamification education in health professions: a systematic review by the Digital Health Education Collaboration. J Med Internet Res (forthcoming) 2018. doi: 10.2196/12994. https://preprints.jmir.org/preprint/12994

24. Dunleavy G, Nikolaou CK, Nifakos S, Atun R, Law GCY, Tudor Car L. Mobile Digital Education for Health Professions: Systematic Review and Meta-Analysis by the Digital Health Education Collaboration. J Med Internet Res. 2019 Feb 12;21(2):e12937. doi: 10.2196/12937. http://www.jmir.org/2019/2/e12937/

25. Semwal M, Whiting P, Bajpai R, Bajpai S, Kyaw BM, Tudor Car L. Digital Education for Health Professions on Smoking Cessation Management: Systematic Review by the Digital Health Education Collaboration. J Med Internet Res. 2019 Mar 04;21(3):e13000. doi: 10.2196/13000. http://www.jmir.org/2019/3/e13000/

26. Huang Z, Semwal M, Lee SY, Tee M, Ong W, Tan WS, Bajpai R, Tudor Car L. Digital Health Professions Education on Diabetes Management: Systematic Review by the Digital Health Education Collaboration. J Med Internet Res. 2019 Feb 21;21(2):e12997. doi: 10.2196/12997. http://www.jmir.org/2019/2/e12997/

27. Barr H, Freeth D, Hammick M, Koppel I, Reeves S. CAIPE: Centre for the Advancement of Interprofessional Education. 2000. [2019-03-07]. Evaluations of interprofessional education: a United Kingdom review for health and social care. https://www.caipe.org/resources/publications/barr-h-freethd-hammick-m-koppel-i-reeves-s-2000-evaluations-of-interprofessional-education

28. EndNote X8.2. Philadelphia, PA: Clarivate Analytics; 2018. [2019-03-01]. https://endnote.com/downloads/available-updates/

29. Higgins JPT, Green S. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. London: The Cochrane Collaboration; 2011. [2019-03-01]. http://crtha.iums.ac.ir/files/crtha/files/cochrane.pdf

30. Puffer S, Torgerson D, Watson J. Evidence for risk of bias in cluster randomised trials: review of recent trials published in three general medical journals. BMJ. 2003 Oct 04;327(7418):785–9. doi: 10.1136/bmj.327.7418.785. http://europepmc.org/abstract/MED/14525877

31. Cook DA, Hatala R, Brydges R, Zendejas B, Szostek JH, Wang AT, Erwin PJ, Hamstra SJ. Technology-enhanced simulation for health professions education: a systematic review and meta-analysis. JAMA. 2011 Sep 07;306(9):978–88. doi: 10.1001/jama.2011.1234.

32. RevMan. Review Manager 5.3. Copenhagen: The Nordic Cochrane Centre, The Cochrane Collaboration; 2014. [2019-03-01]. https://community.cochrane.org/help/tools-and-software/revman-5

33. Schünemann HJ, Oxman AD, Higgins JPT, Vist GE, Glasziou P, Guyatt GH. Cochrane Handbook for Systematic Reviews of Interventions. London: The Cochrane Collaboration; 2008. Presenting results and 'Summary of findings' tables (Chapter 11).

34. Chan RJ, Webster J, Marquart L. Information interventions for orienting patients and their carers to cancer care facilities. Cochrane Database Syst Rev. 2011 Dec 07;(12):CD008273. doi: 10.1002/14651858.CD008273.pub2.

35. Ackermann O, Siemann H, Schwarting T, Ruchholtz S. [Effective skill training by means of E-learning in orthopaedic surgery]. Z Orthop Unfall. 2010 May;148(3):348–52. doi: 10.1055/s-0029-1240549.

36. Amesse LS, Callendar E, Pfaff-Amesse T, Duke J, Herbert WN. Evaluation of Computer-aided Strategies for Teaching Medical Students Prenatal Ultrasound Diagnostic Skills. Med Educ Online. 2008 Sep 24;13:13. doi: 10.3885/meo.2008.Res00275. http://europepmc.org/abstract/MED/20165541

37. Armstrong P, Elliott T, Ronald J, Paterson B. Comparison of traditional and interactive teaching methods in a UK emergency department. Eur J Emerg Med. 2009 Dec;16(6):327–9. doi: 10.1097/MEJ.0b013e32832b6375.

38. Carrero E, Gomar C, Penzo W, Fábregas N, Valero R, Sánchez-Etayo G. Teaching basic life support algorithms by either multimedia presentations or case based discussion equally improves the level of cognitive skills of undergraduate medical students. Med Teach. 2009 May;31(5):e189–95. doi: 10.1080/01421590802512896.

39. Cheng Y, Liu DR, Wang VJ. Teaching Splinting Techniques Using a Just-in-Time Training Instructional Video. Pediatr Emerg Care. 2017 Mar;33(3):166–170. doi: 10.1097/PEC.0000000000000390.

40. Davis J, Crabb S, Rogers E, Zamora J, Khan K. Computer-based teaching is as good as face to face lecture-based teaching of evidence based medicine: a randomized controlled trial. Med Teach. 2008;30(3):302–7. doi: 10.1080/01421590701784349.

41. de Jong Z, van Nies JA, Peters SW, Vink S, Dekker FW, Scherpbier A. Interactive seminars or small group tutorials in preclinical medical education: results of a randomized controlled trial. BMC Med Educ. 2010 Nov 13;10:79. doi: 10.1186/1472-6920-10-79. https://bmcmededuc.biomedcentral.com/articles/10.1186/1472-6920-10-79

42. Desch LW, Esquivel MT, Anderson SK. Comparison of a computer tutorial with other methods for teaching well-newborn care. Am J Dis Child. 1991 Nov;145(11):1255–8. doi: 10.1001/archpedi.1991.02160110047018.

43. Devitt P, Palmer E. Computer-aided learning: an overvalued educational resource? Med Educ. 1999 Feb;33(2):136–9. doi: 10.1046/j.1365-2923.1999.00284.x.

44. Elves AW, Ahmed M, Abrams P. Computer-assisted learning; experience at the Bristol Urological Institute in the teaching of urology. Br J Urol. 1997 Nov;80 Suppl 3:59–62.

45. Fasce E, Ramírez L, Ibáñez P. [Evaluation of a computer-based independent study program applied to fourth year medical students]. Rev Med Chil. 1995 Jun;123(6):700–5.

46. Finley JP, Sharratt GP, Nanton MA, Chen RP, Roy DL, Paterson G. Auscultation of the heart: a trial of classroom teaching versus computer-based independent learning. Med Educ. 1998 Jul;32(4):357–61. doi: 10.1046/j.1365-2923.1998.00210.x.

47. Gelb DJ. Is newer necessarily better?: assessment of a computer tutorial on neuroanatomical localization. Neurology. 2001 Feb 13;56(3):421–2. doi: 10.1212/wnl.56.3.421.

48. Green MJ, Levi BH. Teaching advance care planning to medical students with a computer-based decision aid. J Cancer Educ. 2011 Mar;26(1):82–91. doi: 10.1007/s13187-010-0146-2. http://europepmc.org/abstract/MED/20632222

49. Hilger AE, Hamrick HJ, Denny FW Jr. Computer instruction in learning concepts of streptococcal pharyngitis. Arch Pediatr Adolesc Med. 1996 Jun;150(6):629–31. doi: 10.1001/archpedi.1996.02170310063011.

50. Holt RI, Miklaszewicz P, Cranston IC, Russell-Jones D, Rees PJ, Sönksen PH. Computer assisted learning is an effective way of teaching endocrinology. Clin Endocrinol (Oxf). 2001 Oct;55(4):537–42. doi: 10.1046/j.1365-2265.2001.01346.x.

51. Lee CSC, Rutecki GW, Whittier FC, Clarett MR, Jarjoura D. A comparison of interactive computerized medical education software with a more traditional teaching format. Teaching and Learning in Medicine. 1997 Jan;9(2):111–115. doi: 10.1080/10401339709539824.

52. MacFadyen JC, Brown JE, Schoenwald R, Feldman RD. The effectiveness of teaching clinical pharmacokinetics by computer. Clin Pharmacol Ther. 1993 Jun;53(6):617–21. doi: 10.1038/clpt.1993.81.

53. Mangione S, Nieman LZ, Greenspon LW, Margulies H. A comparison of computer-assisted instruction and small-group teaching of cardiac auscultation to medical students. Med Educ. 1991 Sep;25(5):389–95. doi: 10.1111/j.1365-2923.1991.tb00086.x.

54. McDonough M, Marks IM. Teaching medical students exposure therapy for phobia/panic - randomized, controlled comparison of face-to-face tutorial in small groups vs. solo computer instruction. Med Educ. 2002 May;36(5):412–7. doi: 10.1046/j.1365-2923.2002.01210.x.

55. Mojtahedzadeh R, Mohammadi A, Emami AH, Rahmani S. Comparing live lecture, internet-based & computer-based instruction: A randomized controlled trial. Med J Islam Repub Iran. 2014;28:136. http://europepmc.org/abstract/MED/25694994

56. Nola M, Morović A, Dotlić S, Dominis M, Jukić S, Damjanov I. Croatian implementation of a computer-based teaching program from the University of Kansas, USA. Croat Med J. 2005 Jun;46(3):343–7. http://www.cmj.hr/2005/46/3/15861510.pdf

57. Perfeito JA, Forte V, Giudici R, Succi JE, Lee JM, Sigulem D. [Development and assessment of a multimedia computer program to teach pleural drainage techniques]. J Bras Pneumol. 2008 Jul;34(7):437–44. doi: 10.1590/s1806-37132008000700002. http://www.scielo.br/scielo.php?script=sci_arttext&pid=S1806-37132008000700002&lng=en&nrm=iso&tlng=en

58. Pusic MV, Leblanc VR, Miller SZ. Linear versus web-style layout of computer tutorials for medical student learning of radiograph interpretation. Acad Radiol. 2007 Jul;14(7):877–89. doi: 10.1016/j.acra.2007.04.013.

59. Ram SP, Phua KK, Ang BS. The effectiveness of a computer-aided instruction courseware developed using interactive multimedia concepts for teaching Phase III MD students. Medical Teacher. 2009 Jul 03;19(1):51–52. doi: 10.3109/01421599709019348.

60. Santer DM, Michaelsen VE, Erkonen WE, Winter RJ, Woodhead JC, Gilmer JS, D'Alessandro MP, Galvin JR. A comparison of educational interventions. Multimedia textbook, standard lecture, and printed textbook. Arch Pediatr Adolesc Med. 1995 Mar;149(3):297–302. doi: 10.1001/archpedi.1995.02170150077014.

61. Seabra D, Srougi M, Baptista R, Nesrallah LJ, Ortiz V, Sigulem D. Computer aided learning versus standard lecture for undergraduate education in urology. J Urol. 2004 Mar;171(3):1220–2. doi: 10.1097/01.ju.0000114303.17198.37.

62. Shomaker TS, Ricks DJ, Hale DC. A prospective, randomized controlled study of computer-assisted learning in parasitology. Acad Med. 2002 May;77(5):446–9. doi: 10.1097/00001888-200205000-00022.

63. Solomon DJ, Ferenchick GS, Laird-Fick HS, Kavanaugh K. A randomized trial comparing digital and live lecture formats [ISRCTN40455708]. BMC Med Educ. 2004 Nov 29;4:27. doi: 10.1186/1472-6920-4-27. https://bmcmededuc.biomedcentral.com/articles/10.1186/1472-6920-4-27

64. Stanford W, Erkonen WE, Cassell MD, Moran BD, Easley G, Carris RL, Albanese MA. Evaluation of a computer-based program for teaching cardiac anatomy. Invest Radiol. 1994 Feb;29(2):248–52. doi: 10.1097/00004424-199402000-00022.

65. Summers A, Rinehart GC, Simpson D, Redlich PN. Acquisition of surgical skills: a randomized trial of didactic, videotape, and computer-based training. Surgery. 1999 Aug;126(2):330–6.

66. Taveira-Gomes T, Prado-Costa R, Severo M, Ferreira MA. Characterization of medical students recall of factual knowledge using learning objects and repeated testing in a novel e-learning system. BMC Med Educ. 2015 Jan 24;15:4. doi: 10.1186/s12909-014-0275-0. https://bmcmededuc.biomedcentral.com/articles/10.1186/s12909-014-0275-0

67. Vichitvejpaisal P, Sitthikongsak S, Preechakoon B, Kraiprasit K, Parakkamodom S, Manon C, Petcharatana S. Does computer-assisted instruction really help to improve the learning process? Med Educ. 2001 Oct;35(10):983–9.

68. Vivekananda-Schmidt P, Lewis M, Hassell AB, ARC Virtual Rheumatology CAL Research Group. Cluster randomized controlled trial of the impact of a computer-assisted learning package on the learning of musculoskeletal examination skills by undergraduate medical students. Arthritis Rheum. 2005 Oct 15;53(5):764–71. doi: 10.1002/art.21438.

69. Vivekananda-Schmidt P, Lewis M, Hassell AB, ARC Virtual Rheumatology CAL Research Group. Cluster randomized controlled trial of the impact of a computer-assisted learning package on the learning of musculoskeletal examination skills by undergraduate medical students. Arthritis Rheum. 2005 Oct 15;53(5):764–71. doi: 10.1002/art.21438.
