Abstract

Background

Referral letters sent from primary to secondary or tertiary care are a crucial element in the continuity of patient information transfer. Internationally, the need for improvement in this area has been recognised. The aim of this study is to review the current literature on interventions designed to improve referral letter quality.

Methods

A search strategy designed following a Problem, Intervention, Comparator, Outcome model was used to explore the PubMed and EMBASE databases for relevant literature. Inclusion and exclusion criteria were established and bibliographies were screened for relevant resources.

Results

A total of 18 publications were included in this study. Four types of interventions were described: electronic referrals were shown to have several advantages over paper referrals but were also found to impose new barriers; peer feedback increases letter quality and can decrease ‘inappropriate referrals’ by up to 50%; templates increase documentation and awareness of risk factors; mixed interventions combining different intervention types provide tangible improvements in content and appropriateness.

Conclusion

Several methodological considerations were identified in the studies reviewed, but our analysis demonstrates that a combination of interventions, introduced as part of a joint package and involving peer feedback, can improve referral letter quality.

Key words: communication, health systems, primary care, primary–secondary care interface, quality, referral letters

Introduction

In many healthcare systems, including those of Ireland and the United Kingdom, GPs are the first point of contact for patients with the health system, and the majority of medical problems are subsequently managed in primary care (O’Donnell, 2000). A key role of the GP is to act as a gatekeeper for access to secondary services, and one systematic review showed an inverse association between good-quality primary care and avoidable hospitalisation (Rosano et al., 2012). Good gatekeeping in general practice depends on a strong doctor–patient relationship, an understanding of the bio-psychosocial model, and effective diagnostic and referral-making skills (Mathers and Mitchell, 2010). Optimal communication at the primary–secondary care interface is necessary to prevent delays in care, patient frustration and inaccurate information (Sampson et al., 2015), and the importance of high-quality referral letters has been recognised (Ramanayake, 2013).

Previous studies of referral letters have found content deficits in the documentation of: medications (Toleman and Barras, 2007); prior investigations (Culshaw et al., 2008); presenting symptoms (Su et al., 2013); and appropriateness, particularly regarding the stated level of urgency (Blundell et al., 2010). One study reported that completeness of documentation could have an important impact on how and when the patient is managed by specialists (Jiwa et al., 2002). In recent qualitative research, patient participants reported that gaps in their care were often due to problems in the ‘coordination of management’ (Tarrant et al., 2015). Furthermore, hospital physicians in Norway considered only 15.6% of referrals from general practice to be of good quality (Martinussen, 2013). A report commissioned by the King’s Fund found that the quality of ‘a substantial minority’ of referral letters could be improved (Foot et al., 2010).

Attempts to improve referral letter quality have therefore been the subject of research for some years, but neither a Cochrane review (Akbari et al., 2008) nor a previous systematic review (Faulkner et al., 2003) found evidence of improvement from interventions. This study aims to review the current literature on interventions designed to improve referral letter quality.

Methods

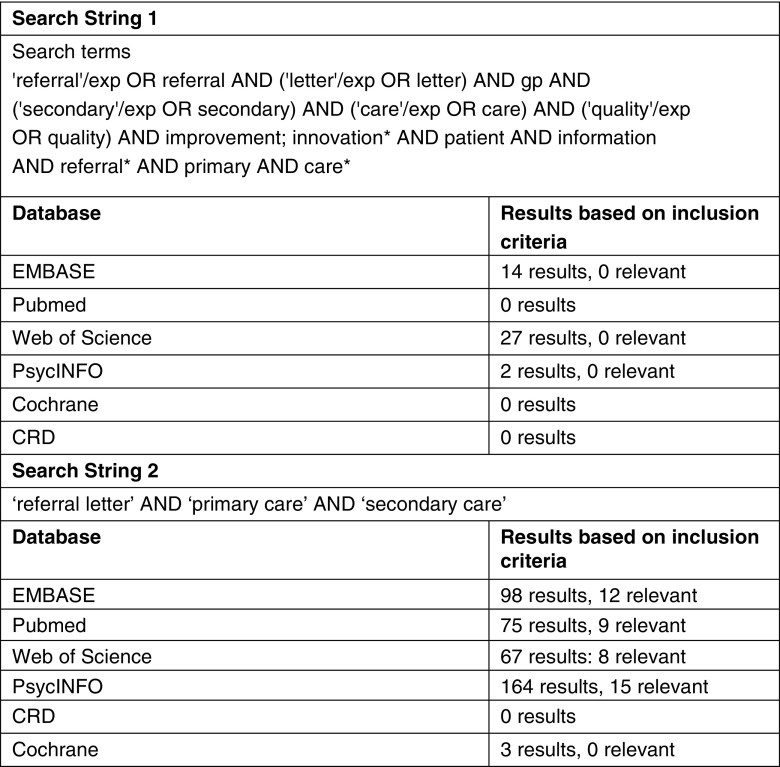

The authors believed that a narrative review would best facilitate a focussed analysis of the literature. A search strategy was designed using a Problem, Intervention, Comparator, Outcome (PICO) model (see Table 1). The databases searched were PubMed, EMBASE, Web of Science, PsycINFO, Cochrane and CRD. The search combined key words and MeSH terms, for example: ‘referral’/exp OR referral AND (‘letter’/exp OR letter) AND gp AND (‘secondary’/exp OR secondary) AND (‘care’/exp OR care) AND (‘quality’/exp OR quality) AND improvement; innovation* AND patient AND information AND referral* AND primary AND care*.

Table 1.

Problem, Intervention, Comparator, Outcome strategy

| PICO element | Key words |
|---|---|
| Problem | ‘referral’ OR ‘referrals’ NOT (‘discharge’ OR prescrib*) |
| Intervention | ‘template’ OR ‘templates’ OR ‘standard’ OR ‘standards’ OR ‘guide’ OR ‘guidelines’ OR ‘protocol’ OR ‘strategy’ OR ‘system’ OR ‘pathway’ |
| Comparator | ‘primary care’ OR ‘primary healthcare’ OR ‘primary health-care’ OR ‘primary health’ NOT (‘nurse’ OR ‘nursing’ OR ‘dentist’ OR ‘dentistry’ OR ‘pharmacist’ OR ‘pharmacy’ OR ‘physiotherapist’ OR ‘physiotherapy’) |
| Outcome | ‘quality’ OR ‘content’ OR ‘patient information’ OR ‘patient data’ OR ‘continuity of care’ OR ‘doctors knowledge’ OR ‘background information’ |

PCP=primary care provider.

Inclusion and exclusion criteria

Research papers published in peer-reviewed journals between January 2007 and 31 July 2017 and written in English were included in the search. The start date was chosen to overlap with the previous Cochrane review. Only papers that focused specifically on interventions to improve the quality of patient information conveyed in primary-to-secondary care referrals within health systems of developed countries were considered. Papers were excluded if they were not written in English, did not evaluate the effect of an intervention on letter quality, or studied referrals to destinations other than secondary care. Papers that focused on cost-effectiveness were excluded in order to keep the focus on referral letter quality rather than financial implications.

Screening

Literature was reviewed by two researchers using the inclusion and exclusion criteria outlined above, and queries about the suitability of individual studies were discussed and resolved by a third assessor. Bibliographies of selected publications were screened for further potentially relevant resources. Previous research in the fields of medical and inter-professional education (Frye and Hemmer, 2012; Kvan, 2013; Lewis et al., 2017) has applied Kirkpatrick’s levels as a model for evaluating learning and training outcomes (Kirkpatrick, 1967). The approach involves categorising the outcomes of an intervention into one of four levels: (1) attitude or reaction; (2) whether learning has occurred in terms of knowledge or skills; (3) the extent to which the skills or knowledge have been applied in practice; and (4) impact on the health system or patients (Lewis et al., 2017). Although the outcome measurements at each level are not hierarchical, they are considered a useful starting point for comprehensive evaluation (Lewis et al., 2017) and go beyond the level of learner satisfaction (Frye and Hemmer, 2012).

Results

The initial search yielded 291 papers after the removal of duplicates. Full details of the searches are included in Figure 1. Papers were screened first by title and then by abstract or full manuscript. A total of 18 studies were selected for review based on the set criteria. Papers were mainly excluded because they did not pertain to referral letters, were not directed to secondary care or did not evaluate an intervention to promote quality. The publications were assessed thematically, and their results are presented by intervention type.

Figure 1.

Search string. * indicates a truncation search term that matches any string and can be used anywhere in the search string.

Four of the studies demonstrated some degree of impact on the health system (Evans, 2009; Kim et al., 2009; Rokstad et al., 2013; Wright et al., 2015). Table 2 describes each of the studies and Table 3 outlines the main findings of each intervention.

Table 2.

Description of studies

| Study | Location | Description | Study rigour |
|---|---|---|---|
| Electronic referrals | | | |
| Shaw and de Berker (2007) | UK, Dermatology, one centre only | Retrospective study: 131 electronic referrals and 129 paper referrals reviewed for quality of demographic and clinical content | This was a small, descriptive study involving retrospective data analysis. Electronic referrals were superior at recording prescription lists and patients’ demographics compared with paper referrals, but difficulties cited with free text may reflect an inherent problem with the design of the proforma itself |
| Kim et al. (2009) | USA, PCPs from 24 clinics | Self-report survey of PCPs. The study population was a mixture of physicians, many of whom were specialists, and the study setting is not representative of typical general practice | This was a self-report design and, consequently, the results are subject to recall bias. The survey was conducted online, and participants who were more IT-savvy may have been more inclined to respond to the web-based questionnaire |
| Nash et al. (2016) | Australia, one emergency department | Retrospective study: 12 199 referrals reviewed for quality of documentation, legibility and whether they stated the level of urgency | This study had a single site and a retrospective design. Results may not be transferable to other settings |
| Hysong et al. (2011) | USA, PCPs and specialists at two tertiary centres | Qualitative study using focus groups designed to understand the electronic health records system | This qualitative study was limited to participants from a single health network, which may limit transferability to other health centres |
| Zuchowski et al. (2015) | USA, PCPs from one regional network | Mixed methods: cross-sectional survey of 191 PCPs and semi-structured interviews with 41 PCPs | This study was confined to one regional network, which limits the transferability of results. Only PCPs were interviewed; involving the specialists who received the referral letters would have been useful for triangulation |
| Peer feedback | | | |
| Evans (2009) | UK, local health board, three practices and one hospital | Review of a year-long scheme that provided protected time for GPs and hospital consultants to meet regularly to discuss referrals | This was a one-year pilot study limited to one region, and the authors suggest that the intervention may not be suited to other regions |
| Xiang et al. (2013) | UK, 41 practices in a primary care team | Review of referrals by triaging GPs, who gave feedback to referring GPs on deficiencies in referral letters | Both internal and external validity were strong, as the design involved a large number of referral letters from a setting with a diverse population. However, the hospital specialist was not involved in assessing referral letters, and there were only seven months between the baseline and assessment periods |
| Elwyn et al. (2007) | UK, three endoscopy units in two hospital trusts | An intervention that introduced referral assessment in order to change the proportion of referrals that adhered to accepted guidelines, and to assess the impact on demand for endoscopy and on the referral-to-procedure interval | This study covered a wider timeframe (five months pre-intervention and six months post-intervention) but did not include a control group. The authors stated that they received several letters of complaint from clinicians concerned that the system would erode clinical freedom |
| Templates | | | |
| Haley et al. (2015) | USA, nine PCP and five nephrology practices | Qualitative study in which pre- and post-implementation interviews, questionnaires, site visits and monthly teleconferences were used to ascertain practice patterns, perceptions and tool use, and to assess the level of communication and coordination between PCPs and nephrologists | Familiarity with interviewees may have introduced bias and skewed the results. The specific patient group attending PCP and nephrology practices in two locations is not reflective of the wider healthcare system. Practices were recruited on a voluntary basis, so volunteer bias was a factor in this study |
| Rokstad et al. (2013) | Norway, 210 GPs | Intervention study that investigated whether incorporating an electronic optional guideline tool in the standardised referral template used by GPs when referring patients to specialised care improves outpatient referral appropriateness. Follow-up interviews were conducted with the intervention group who used the tool | Both the GP and the hospital specialist were interviewed about the EGOT, which facilitates a wider range of perspectives. There were problems with implementation, as many GPs who agreed to use the template did not continue to do so, which may reflect a problem with its usability |
| Wahlberg et al. (2017) | Norway, 14 primary care surgeries | Intervention study in which referral templates were implemented for new referrals in four clinical areas: dyspepsia; suspected colorectal cancer; chest pain; and confirmed or suspected chronic obstructive pulmonary disease | A large number of assessors were involved in grading the quality of referrals, which may have implications for the reproducibility of the findings. The authors acknowledged that, because of the retrospective design, they could only assess recorded actions and that some actions may have been performed but not recorded |
| Wahlberg et al. (2016) | Norway, 14 primary care surgeries and one hospital | Intervention study in which referral templates were implemented for new referrals in four clinical areas: dyspepsia; suspected colorectal cancer; chest pain; and confirmed or suspected chronic obstructive pulmonary disease | This paper had a high response rate (82%), but the use of a short-form questionnaire limited the depth of data collected. The authors conceded that the study lacked a solid analytical framework |
| Wahlberg et al. (2015) | Norway, 14 primary care surgeries | A cluster randomised trial using referral templates for patients in four diagnostic groups: dyspepsia, suspected colorectal cancer, chest pain and chronic obstructive pulmonary disease | The cluster randomised design of this study led to a number of problems. First, more proactive GPs may have been more inclined to use the referral templates, thereby skewing results. Second, adherence to the referral template may have varied with workload and time constraints |
| Jiwa et al. (2014) | Australia, 102 GPs | Quantitative study using a single-blind, parallel-group, controlled design with 1:1 randomisation to evaluate whether specialists are more confident about scheduling appointments when they receive more information in referral letters | This paper took into account that there was no doctor–patient interaction, as actors were used to play the role of the patient. In phase one, GPs were shown vignettes of an actor-patient performing a monologue; in phase two, the intervention group used the referral software and the control group did not. GPs in both the control and intervention groups withdrew after phase one for unexplained reasons, which resulted in lower numbers in phase two |
| Jiwa and Dhaliwal (2012) | Australia, 10 GPs and hospital specialists | Quantitative study using interactive computerised referral-writer software to explore whether it increased the amount of relevant information relayed in referral letters from GPs to hospital specialists | Of the 10 GPs who commenced the study, only seven completed the intervention, which may reflect usability problems with the referral software. The mean number of patients per practice was given but not the total number of patients involved in the study |
| Eskeland et al. (2017) | Norway, 25 GPs | Randomised crossover vignette trial in which GPs were randomised to a control condition and then crossed over to the intervention. The intervention was a drop-down, diagnosis-specific checklist | Clinical vignettes were used instead of real-life consultations in order to standardise the setting, but the findings therefore do not reflect the interpersonal interactions that make up general practice consultations. The system did not record all aspects of the referral, which may affect the validity of the findings |
| Mixed interventions | | | |
| Wright et al. (2015) | UK, 13 GP practices | Mixed methods approach used to evaluate the effectiveness of a new referral management system | Practices were recruited on a voluntary basis, so volunteer bias was a factor in this study |
| Corwin and Bolter (2014) | New Zealand, 15 GPs and two nurses | Quantitative study using a nine-point checklist to investigate the quality of referrals in a group of GPs and nurses | The sample size was small, but quality was assessed at five months and again at 10 months after baseline. Referral quality was measured using a single tool, a nine-point checklist, with some letters scoring highly because they contained a lot of information despite being difficult to follow and sometimes incoherent |

Table 3.

Study outcomes

| Study | Kirkpatrick level | Outcome details |
|---|---|---|
| Electronic referrals | | |
| Shaw and de Berker (2007) | 3 | ERs showed that GPs’ communication of the patient’s problem was poor |
| Kim et al. (2009) | 4 | 72% believed that ERs improved overall clinical care of patients, but the study population was a mixture of physicians, many of whom were specialists, and the study setting is not representative of typical general practice |
| Nash et al. (2016) | 3 | ERs provided more clinical information than handwritten referrals but had no effect on patient or system outcomes |
| Hysong et al. (2011) | NA | Improvement in referral coordination by PCPs and subspecialists |
| Zuchowski et al. (2015) | NA | Improvement in referral communication |
| Peer feedback | | |
| Evans (2009) | 4 | Improvement in referral quality and reduction in inappropriate demand |
| Xiang et al. (2013) | 3 | Improvement in referral quality; decisions made expected to be more accurate |
| Elwyn et al. (2007) | 3 | Improvement in referral quality and reduced demand |
| Templates | | |
| Haley et al. (2015) | 3 | Improvement in documentation |
| Rokstad et al. (2013) | 4 | Improvement in referral quality and in time efficiency for the specialists reviewing the letters |
| Wahlberg et al. (2015) | 3 | Improvement in documentation |
| Wahlberg et al. (2016) | 3 | Sought to demonstrate an association with improved patient experience compared with control, but none was seen |
| Wahlberg et al. (2017) | 3 | Sought to demonstrate an association with improved quality of care, measured through quality indicators, but none was seen |
| Eskeland et al. (2017) | 3 | Improvement in referral quality |
| Jiwa et al. (2014) | 3 | Improvement in documentation of clinically relevant data. Referral times unchanged. Preference for free text |
| Jiwa and Dhaliwal (2012) | 3 | Improvement in referral quality as judged by specialists. No improvement in ability to identify high-risk patients |
| Mixed interventions | | |
| Wright et al. (2015) | 4 | Improvement in referral quality and reduced number of inappropriate referrals. Reduced number of referrals |
| Corwin and Bolter (2014) | 3 | Combination of peer feedback and electronic referrals. Improvement in referral quality was seen only with peer feedback |

ER=electronic referral; PCP=primary care provider.

Impact of electronic referrals (ERs)

Shaw and de Berker (2007) reviewed electronic and paper referrals and found that ERs were more effective than paper referrals at conveying demographic data but less effective at conveying the clinical information that would lead to a diagnosis. The authors cautioned against prioritising the ER process over the clinical context of the patients’ problems. This was a small, descriptive study involving retrospective data analysis. ERs were superior at recording prescription lists and patients’ demographics, but the difficulties cited with free text may reflect an inherent problem with the design of the proforma itself. Nash et al. (2016) found that ERs were of better quality than handwritten referrals, providing more information on medication and medical history. In a survey of 298 primary care providers (PCPs) (Kim et al., 2009), the majority believed that ERs promoted better quality of care. This was a self-report design and, consequently, the results are subject to recall bias. The survey was conducted online, and participants who were more IT-savvy may have been more inclined to respond to the web-based questionnaire.

In a qualitative study of the ER system (Hysong et al., 2011), primary and secondary care physicians agreed that ERs could enhance the referral system but that key systems coordination principles needed to be in place for an ER system to function. These included clarity of roles, standardisation of practices and adequate resourcing. This qualitative study was limited to participants from a single health network, which may limit transferability to other health centres. Zuchowski et al. (2015) found that the capability of the ER system to improve communication with secondary care specialists varied between specialties. A recurring theme in relation to ER systems was that of ‘rigid informational requirements’, with many GPs resorting to telephone and email to communicate with those specialists ‘with whom they had established relationships’. This study was confined to one regional network, which limits the transferability of results. Only PCPs were interviewed; involving the specialists who received the referral letters would have been useful for triangulation.

Impact of peer feedback

A year-long intervention (Evans, 2009) that provided GPs with protected and resourced time for peer review and regular meetings with hospital specialists reported substantial improvement in letter quality. Referrals were rated for their content, and in two of the three participating practices the content improved. This was a one-year pilot study limited to one region, and the authors suggest that the intervention may not be suited to other regions. Xiang et al. (2013) retrospectively analysed GP referrals before and after the introduction of a system that provided GPs with peer feedback for seven months. They found significant improvements in documentation of past medical history and prescribed medication; however, no significant increase in relevant clinical information or clarity of the reason for referral was detected. Both internal and external validity were strong in this study, as the design involved a large number of referral letters from a setting with a diverse population. However, the hospital specialist was not involved in assessing referral letters, and there were only seven months between the baseline and assessment periods. In an uncontrolled study of GP referrals to endoscopy units (Elwyn et al., 2007), referrals were analysed by two GPs to evaluate their adherence to NICE guidelines. Same-day written feedback outlining deficits was provided to those whose letters did not comply. Mean adherence to guidelines improved from 55% before the intervention to 75% afterwards. This study covered a wider timeframe (five months pre-intervention and six months post-intervention) but did not include a control group. The authors stated that they received several letters of complaint from clinicians concerned that the system would erode clinical freedom.

Impact of templates

A study of template-based referrals from nine primary care practices to nephrology clinics reported a significant increase in the documentation of relevant clinical information from pre- to post-intervention (Haley et al., 2015). Furthermore, in post-intervention interviews, PCPs said that the intervention helped to increase awareness of risk factors and management guidelines in chronic kidney disease. Familiarity with interviewees may have introduced bias and skewed the results. The specific patient group attending PCP and nephrology practices in two locations is not reflective of the wider healthcare system. Practices were recruited on a voluntary basis, so volunteer bias was a factor in this study. A study of referrals from general practice to lung specialists (Rokstad et al., 2013) investigated an optional electronic guideline incorporated in the practice software. Lung specialists, who were blinded as to whether referrers were using the intervention, scored the referrals using an evaluation form and reported improved referral quality and time savings. Both the GP and the hospital specialist were interviewed about the referral tool, which facilitates a wider range of perspectives. There were problems with implementation, as many GPs who agreed to use the template did not continue to do so, which may reflect a problem with its usability.

Wahlberg et al. (2015) conducted a cluster randomised trial using templates for four commonly encountered, potentially serious presenting complaints across 14 practices in Norway. Statistically significant improvements in referral letter quality were reported for three of the four templates. The cluster randomised design of this study led to a number of problems. First, more proactive GPs may have been more inclined to use the referral templates, thereby skewing results. Second, adherence to the referral template may have varied with workload and time constraints. A second analysis published one year later (Wahlberg et al., 2016) used self-report questionnaires to investigate the impact on patients’ experience of the care process and found no significant improvement. This paper had a high response rate (82%), but the use of a short-form questionnaire limited the depth of data collected. The authors conceded that the study lacked a solid analytical framework. A final analysis (Wahlberg et al., 2017) investigated the impact of the referral template on the quality of care received in hospital and, similarly, observed no significant improvement. A large number of assessors were involved in grading the quality of referrals, which may have implications for the reproducibility of the findings. The authors acknowledged that, because of the retrospective design, they could only assess recorded actions and that some actions may have been performed but not recorded.

Eskeland et al. (2017) asked GPs to read gastroenterology-related clinical vignettes and write referral letters based on the information. GPs were randomised to a control condition or an intervention, which was a set of diagnosis-specific checklists. A consistent improvement in referral quality was observed in the intervention group. Clinical vignettes were used instead of real-life consultations in order to standardise the setting, but the findings therefore do not reflect the interpersonal interactions that make up general practice consultations. The system did not record all aspects of the referral, which may affect the validity of the findings. Jiwa and Dhaliwal (2012) introduced templates for referring to six hospital disciplines. They compared 56 referral letters written by seven GPs before the intervention with 48 ERs written four months afterwards, and found that both the amount of referral information and the confidence of the receiving clinician in making a decision based on the referral increased. Of the 10 GPs who commenced the study, only seven completed the intervention, which may reflect usability problems with the referral software. The mean number of patients per practice was given but not the total number of patients involved in the study. In a non-randomised controlled trial, Jiwa et al. (2014) asked GPs in both the control and intervention groups to read clinical vignettes and make referral decisions based on what they had read. The quantity of clinical information in the letters improved, but this did not result in a significant change in appointment scheduling. In phase one, GPs were shown vignettes of an actor-patient performing a monologue; in phase two, the intervention group used the referral software and the control group did not. Because actors played the patients, the design did not capture a real doctor–patient interaction, and the referrals made by participating GPs are therefore likely to differ from those made in real-life situations. GPs in both groups withdrew after phase one for reasons that were not explained, which resulted in lower numbers in phase two.

Impact of mixed interventions

A pilot study of 13 practices in the United Kingdom (Wright et al., 2015) evaluated a service combining referral guidelines, templates and feedback from those who triage referrals. In the intervention group, fewer referrals were challenged for incompleteness or insufficient information, and the number of referrals decreased. Interviews with practice staff and patients found a high degree of satisfaction with the system. Practices were recruited on a voluntary basis, so volunteer bias was a factor in this study. In a small-scale study (Corwin and Bolter, 2014), GPs were first given written feedback on their letters from hospital colleagues, and letter quality was compared before and five months after this intervention. ERs were then introduced, and referrals were again compared before and five months after. Feedback improved referral quality, whereas ERs did not. The sample size was small, but quality was assessed at five months and again at 10 months after baseline. Referral quality was measured using a single tool, a nine-point checklist, and some letters scored highly because they contained a lot of information despite being difficult to follow and sometimes incoherent.

Discussion

Our results show that several interventions have had moderate success in improving referral letter quality. Some studies claim an additional impact on the health system and were initially categorised at Kirkpatrick level 4. However, a deeper analysis calls this categorisation into question. Kim et al. (2009) relied on the perceptions of physicians rather than on an objective measure of systems improvement. Rokstad et al. (2013) found that specialists could spend less time reviewing letters written using templates, but this time saving does not necessarily translate into a positive impact for the system or the patient. Both Evans (2009) and Wright et al. (2015) report a reduction in referrals as a result of their interventions, but the use of referral counts as a proxy for improvements in health systems has been contested (Foot et al., 2010). Higher or lower referral rates do not translate into good-quality practice or referral writing (Knottnerus et al., 1990).

In all, 12 of the interventions were rated at Kirkpatrick level 3, but the outcome-based focus of this system can give an impression of high impact while overlooking the processes involved and their associated intricacies. One such feature in the case of templates is that, in many instances, GPs preferred to use free text rather than the ‘tick-box’ approach provided by the template, which was interpreted as a preference among GPs for including the patient narrative (Jiwa et al., 2014). Similarly, Zuchowski et al. (2015) commented on the rigidity of ERs and noted that inter-clinician communication was an essential component of referrals. More robust methodology is also needed, including follow-up assessments at six and 12 months post-intervention, longer intervention periods and the involvement of GPs in the design of any intervention that involves them. We suggest that a needs assessment of GPs be conducted and described in any future study of such interventions.

Perceptions of quality differ between GPs and hospital specialists. In a large survey of American physicians (O’Malley and Reschovsky, 2011), 69.3% of GPs believed that they usually included relevant clinical details in referral letters, whereas only 34.8% of consultants said that they received those details. Our study has reviewed interventions designed to improve referral letter quality, but this question must be considered in the context of how quality is assessed. Furthermore, long-standing concerns have been expressed over a lack of consensus among practising GPs about what constitutes a good-quality referral letter (Jiwa and Burr, 2002).

The more favourable interventions reviewed in this paper combined peer feedback with a software intervention (Corwin and Bolter, 2014; Wright et al., 2015). A similar finding was reported by Bennett et al. (2001), who showed that ear, nose and throat referrals from primary care were improved by combining a basic template with an educational video. GPs have expressed a preference for learning how best to make a referral, and about various clinical conditions, through engagement with consultant colleagues (Eaton, 2008). Interestingly, a recent meta-analysis showed a role for ‘interactive communication’ in improving ‘the effectiveness of primary care-specialist collaboration’ (Foy et al., 2010). A prior review of healthcare communication called for more feedback between GPs and specialists to improve the quality of referral letters (Vermeir et al., 2015). Furthermore, a recent qualitative study of newly qualified GPs proposed integrating training across different specialties to help future GPs and consultants ‘work collaboratively across the organisational boundaries’ at the primary–secondary care interface (Sabey and Hardy, 2015).

Jiwa and Dadich (2013) systematically analysed the literature on communication and reported generally poor-quality communication leading to compromised patient outcomes. The question of how to improve quality has eluded previous systematic reviews. The difficulty is that quality is interlinked with several other factors: the health system; clinician capacity, attitudes and experiences; and the complexity of the clinical problem. A systematic review, restricted by its protocol, cannot peel away the layers of contextual variables. Indeed, Pawson et al. (2014), analysing why reviews of healthcare interventions have had limited success in guiding improvement, stated that ‘multiple lessons’ are often missed because of their failure to ‘address the wider scenario’. Previous research on peer feedback (Jiwa et al., 2014) concurs with studies included in this review (Evans, 2009; Haley et al., 2015) in showing that GPs welcome feedback but that, as a stand-alone measure, it does not significantly improve the quality of referrals.

Study methodologies varied: 12 studies were quantitative, four were qualitative and two used a mixed-methods approach. Study limitations included small sample sizes (Jiwa and Dhaliwal, 2012; Corwin and Bolter, 2014) and restriction to a single region or health service network (Shaw and de Berker, 2007; Evans, 2009; Hysong et al., 2011; Rokstad et al., 2013; Wahlberg et al., 2015; 2016; 2017; Zuchowski et al., 2015; Nash et al., 2016); consequently, the findings may not be generalisable or relevant to other health systems. Some of the studies involved only PCPs as participants, whereas the involvement of specialists would have been useful for triangulation (Xiang et al., 2013; Eskeland et al., 2017). Many of the quantitative studies analysed pre- and post-intervention data but had no longer-term follow-up beyond one year (Elwyn et al., 2007; Xiang et al., 2013; Haley et al., 2015). Some of the studies relied on voluntary participation, with associated volunteer bias (Haley et al., 2015; Wright et al., 2015), and one used a self-report design with the potential for recall bias (Kim et al., 2009). Therefore, the studies analysed are likely to lack sufficient rigour to support strong conclusions and recommendations.

Limitations: only papers published in English were reviewed, and relevant publications may have been missed. There is also a risk of publication bias, in that studies reporting negative findings from interventions may not have been published. Future research should include objective assessments of clinical care quality measures to investigate more rigorously whether improvements in referral letters improve the care that patients receive. Studies that evaluate the processes involved in referral, including the patient experience, are also needed, as are evaluations of the implementation of quality improvement interventions. Finally, research with long-term follow-up data is needed on the sustainability of ongoing peer feedback (between GPs) and of inter-professional communication involving the clinicians who write and receive referral letters.

Conclusion

This review has summarised and categorised interventions for quality improvement in GP referral letters over the past 10 years. Our analysis demonstrates that a combination of interventions, introduced as part of a joint package and involving peer feedback can improve both letter quality and, in a small number of instances, the healthcare system. Inter-clinician collaboration is most likely the single most important factor.

Acknowledgements

The authors would like to acknowledge the help of Mr. Fintan Bracken and Ms. Liz Dore, librarians at the University of Limerick.

Conflicts of Interest

None.

References

- Akbari A., Mayhew A., Al-Alawi M.A., Grimshaw J., Winkens R., Glidewell E., Pritchard C., Thomas R. and Fraser C. 2008: Interventions to improve outpatient referrals from primary care to secondary care. The Cochrane Database of Systematic Reviews 8, CD005471.

- Bennett K., Haggard M., Churchill R. and Wood S. 2001: Improving referrals for glue ear from primary care: are multiple interventions better than one alone? Journal of Health Services Research & Policy 6, 139–144.

- Blundell N., Clarke A. and Mays N. 2010: Interpretations of referral appropriateness by senior health managers in five PCT areas in England: a qualitative investigation. Quality and Safety in Health Care 19, 182–186.

- Corwin P. and Bolter T. 2014: The effects of audit and feedback and electronic referrals on the quality of primary care referral letters. Journal of Primary Health Care 6, 324–327.

- Culshaw D., Clafferty R. and Brown K. 2008: Let’s get physical! A study of general practitioner’s referral letters to general adult psychiatry – are physical examination and investigation results included? Scottish Medical Journal 53, 7–8.

- Eaton L. 2008: BMA backs GPs in their objections to financial incentives to limit hospital referrals. British Medical Journal 337, a2306.

- Elwyn G., Owen D., Roberts L., Wareham K., Duane P., Allison M. and Sykes A. 2007: Influencing referral practice using feedback of adherence to NICE guidelines: a quality improvement report for dyspepsia. Quality and Safety in Health Care 16, 67–70.

- Eskeland S.A., Brunborg C., Rueegg C.S., Aabakken L. and de Lange T. 2017: Assessment of the effect of an Interactive Dynamic Referral Interface (IDRI) on the quality of referral letters from general practitioners to gastroenterologists: a randomised crossover vignette trial. BMJ Open 7, e014636.

- Evans E. 2009: The Torfaen referral evaluation project. Quality in Primary Care 17, 423–429.

- Faulkner A., Mills N., Bainton D., Baxter K., Kinnersley P., Peters T.J. and Sharp D.A. 2003: Systematic review of the effect of primary care-based service innovations on quality and patterns of referral to specialist secondary care. British Journal of General Practice 53, 878–884.

- Foot C., Naylor C. and Imison C. 2010: The quality of GP diagnosis and referral. London: The King’s Fund. Retrieved 10 July 2017 from https://www.kingsfund.org.uk/sites/default/files/Diagnosis%20and%20referral.pdf

- Foy R., Hempel S., Rubenstein L., Suttorp M., Seelig M., Shanman R. and Shekelle P.G. 2010: Meta-analysis: effect of interactive communication between collaborating primary care physicians and specialists. Annals of Internal Medicine 152, 247–258.

- Frye A.W. and Hemmer P.A. 2012: Program evaluation models and related theories: AMEE Guide No. 67. Medical Teacher 34, 288–299.

- Haley W.E., Beckrich A.L., Sayre J., McNeil R., Fumo P., Rao V.M. and Lerma E.V. 2015: Improving care coordination between nephrology and primary care: a quality improvement initiative using the renal physicians association toolkit. American Journal of Kidney Diseases 65, 67–79.

- Hysong S.J., Esquivel A., Sittig D.S., Paul A.L., Espadas D., Singh S. and Singh H. 2011: Towards successful coordination of electronic health record based-referrals: a qualitative analysis. Implementation Science 6, 1–12.

- Jiwa M. and Burr J. 2002: GP letter writing in colorectal cancer: a qualitative study. Current Medical Research and Opinion 18, 342–346.

- Jiwa M. and Dadich A. 2013: Referral letter content: can it affect patient outcomes? British Journal of Healthcare Management 19, 140–146.

- Jiwa M. and Dhaliwal S. 2012: Referral writer: preliminary evidence for the value of comprehensive referral letters. Quality in Primary Care 20, 39–45.

- Jiwa M., Meng X., O’Shea C., Magin P., Dadich A. and Pillai V. 2014: Impact of referral letters on scheduling of hospital appointments: a randomised control trial. British Journal of General Practice 64, 419–425.

- Jiwa M., Walters S. and Cooper C. 2002: Quality of referrals to gynaecologists: towards consensus. The Journal of Clinical Governance 10, 177–181.

- Kim Y., Chen A.H., Keith E., Yee H.F. and Kushel M.B. 2009: Not perfect, but better: primary care providers’ experiences with electronic referrals in a safety net health system. Journal of General Internal Medicine 24, 614–619.

- Kirkpatrick D. 1967: Evaluation of training. In Craig, R., editor, Training and development handbook: a guide to human resource development. New York: McGraw-Hill, 87–122.

- Knottnerus J.A., Joosten J. and Daams J. 1990: Comparing the quality of referrals of general practitioners with high and average referral rates: an independent panel review. British Journal of General Practice 40, 178–181.

- Kvan T. 2013: Evaluating learning environments for interprofessional care. Journal of Interprofessional Care 27, 31–36.

- Lewis A., Edwards S., Whiting G. and Donnelly F. 2017: Evaluating student learning outcomes in oral health knowledge and skills. Journal of Clinical Nursing 1–12. 10.1111/jocn.14082 [Epub ahead of print].

- Martinussen P.E. 2013: Referral quality and the cooperation between hospital physicians and general practice: the role of physician and primary care factors. Scandinavian Journal of Public Health 41, 874–882.

- Mathers N. and Mitchell C. 2010: Are the gates to be thrown open? British Journal of General Practice 60, 317–318.

- Nash E., Hespe C. and Chalkley D. 2016: A retrospective audit of referral letter quality from general practice to an inner‐city emergency department. Emergency Medicine Australasia 28, 313–318.

- O’Donnell C.A. 2000: Variation in GP referral rates: what can we learn from the literature? Family Practice 17, 462–471.

- O’Malley A.S. and Reschovsky J.D. 2011: Referral and consultation communication between primary care and specialist physicians: finding common ground. Archives of Internal Medicine 171, 56–65.

- Pawson R., Greenhalgh J., Brennan C. and Glidewell E. 2014: Do reviews of healthcare interventions teach us how to improve healthcare systems? Social Science & Medicine 114, 129–137.

- Ramanayake R.P. 2013: Structured printed referral letter (form letter); saves time and improves communication. Journal of Family Medicine and Primary Care 2, 145–148.

- Rokstad I.S., Rokstad K.S., Holmen S., Lehmann S. and Assmus J. 2013: Electronic optional guidelines as a tool to improve the process of referring patients to specialized care: an intervention study. Scandinavian Journal of Primary Health Care 31, 166–171.

- Rosano A., Loha C.A., Falvo R., Van der Zee J., Ricciardi W., Guasticchi G. and De Belvis A.G. 2012: The relationship between avoidable hospitalization and accessibility to primary care: a systematic review. The European Journal of Public Health 23, 356–360.

- Sampson R., Cooper J., Barbour R., Polson R. and Wilson P. 2015: Patients’ perspectives on the medical primary-secondary care interface: systematic review and synthesis of qualitative research. BMJ Open 5, e008708.

- Sabey A. and Hardy H. 2015: Views of newly-qualified GPs about their training and preparedness. British Journal of General Practice 65, 270–277.

- Shaw L.J. and de Berker D.A. 2007: Strengths and weaknesses of electronic referral: comparison of data content and clinical value of electronic and paper referrals in dermatology. British Journal of General Practice 57, 223–224.

- Su N., Cheang P.P. and Khalil H. 2013: Do rhinology care pathways in primary care influence the quality of referrals to secondary care? Journal of Laryngology and Otology 127, 364–367.

- Tarrant C., Windridge K., Baker R., Freeman G. and Boulton M. 2015: Falling through gaps: primary care patients’ accounts of breakdowns in experienced continuity of care. Family Practice 32, 82–87.

- Toleman J. and Barras M. 2007: General practitioner referral letters: are we getting the full picture? Internal Medicine Journal 37, 510–511.

- Vermeir P., Vandijck D., Degroote S., Peleman R., Verhaeghe R., Mortier E., Hallaert G., Van Dael S., Buylaert W. and Vogelaers D. 2015: Communication in healthcare: a narrative review of the literature and practical recommendations. International Journal of Clinical Practice 69, 1257–1267.

- Wahlberg H., Braaten T. and Broderstad A.R. 2016: Impact of referral templates on patient experience of the referral and care process: a cluster randomised trial. BMJ Open 6, e011651.

- Wahlberg H., Valle P.C., Malm S. and Broderstad A.R. 2015: Impact of referral templates on the quality of referrals from primary to secondary care: a cluster randomised trial. BMC Health Services Research 15, 1–10.

- Wahlberg H., Valle P.C., Malm S., Hovde O. and Broderstad A.R. 2017: The effect of referral templates on outpatient quality of care in a hospital setting: a cluster randomized controlled trial. BMC Health Services Research 17, 1–13.

- Wright E., Hagmayer Y. and Grayson I. 2015: An evidence-based referral management system: insights from a pilot study. Primary Health Care Research & Development 16, 407–414.

- Xiang A., Smith H., Hine P., Mason K., Lanza S., Cave A., Sergeant J., Nicholson Z. and Devlin P. 2013: Impact of a referral management “gateway” on the quality of referral letters; a retrospective time series cross sectional review. BMC Health Services Research 13, 1–7.

- Zuchowski J.L., Rose D.E., Hamilton A.B., Stockdale S.E., Meredith L.S., Yano E.M., Rubenstein L.V. and Cordasco K.M. 2015: Challenges in referral communication between VHA primary care and specialty care. Journal of General Internal Medicine 30, 305–311.