Abstract

An increasing number of wearable devices performing eye gaze tracking have been released in recent years. Such devices can lead to unprecedented opportunities in many applications. However, keeping up with the continuous advances and gathering the technical features needed to choose the best device for a specific application is not trivial. The last eye gaze tracker overview was written more than 10 years ago, while more recent devices are substantially improved in both hardware and software. Thus, an overview of current eye gaze trackers is needed. This review fills the gap by providing an overview of the current level of advancement of both techniques and devices, leading finally to the analysis of 20 essential features in six commercially available head-mounted eye gaze trackers. The analyzed characteristics represent a useful selection providing an overview of the technology currently implemented. The results show that many technical advances have been made in this field since the last survey. Current wearable devices make it possible to capture and exploit visual information unobtrusively and in real time, leading to new applications in wearable technologies that can also be used to improve rehabilitation and enable a more active living for impaired persons.

Keywords: Eye tracking, gaze tracking, head-mounted, wearable robotics, rehabilitation, assistive technology

Introduction

For humans, sight and hearing are the most effective ways to perceive the surrounding reality. While the ears are passive organs, humans can move their eyes to better investigate the surrounding space, stopping on the most salient details. Eye tracking is the measurement of human eye movement, and it is performed by devices commonly called eye trackers. Eye trackers can measure the orientation of the eye in space or the position of the eye with respect to the subject’s head.1,2 This review focuses on eye gaze trackers3 that can resolve the point-of-regard, namely where the subject is looking in a scene.1,2 Thus, they make it possible to monitor where a person is focusing. Eye tracking devices that do not calculate the point-of-regard also exist but are not included in this review. Thanks to recent technical advances, lightweight head-mounted eye gaze tracking devices that work in many real-world scenarios are now commercially available. Often, they also provide visual scene information through a camera recording the scene in front of the subject.

The possible applications of eye gaze trackers depend substantially on their characteristics. To the best of our knowledge, the last overview in the field was written more than 10 years ago4 and the domain has substantially changed since then. Thus, the description provided in this paper will help the scientific community to optimally exploit current devices.

Wearable eye gaze trackers make it possible to monitor vision, which is among the most important senses for controlling grasping5 and locomotion.6 Thus, eye gaze trackers can be relevant in restoring, augmenting, and assisting both upper and lower limb functionality. Hand–eye coordination is the process by which humans use visual input to control and guide the movement of the hand. In grasping tasks, vision precedes the movement of the hand, providing information on how to reach and grasp the targeted object.5,7,8 Eye gaze tracking and object recognition can be used to identify the object that a person is aiming to grasp. Gaze information can also be used to detect and support the patients’ intention during rehabilitation.9 Thus, wearable head-mounted eye gaze trackers with a scene camera can help to improve hand prosthetics and orthosis systems.10–12

Vision is a main sensorial input used to control locomotion: it has an important role in trajectory planning, it regulates stability and it is relevant for fall prevention.13–15

Eye gaze tracking can be useful in many assistive applications16,17 and can also be used to improve rehabilitation and to enable and promote active living for impaired persons. Eye gaze tracking information can be used to assist during locomotion as well as to improve the control of assistive devices such as wheelchairs.18,19 In combination with other sensor data, such as electromyography (EMG), electroencephalography (EEG), and inertial measurement units (IMUs), eye gaze tracking allows persons with limited functional control of the limbs (such as people affected by multiple sclerosis, Parkinson’s disease, muscular dystrophy, and amyotrophic lateral sclerosis) to recover some independence. Tracking eye movements allows patients to control computers, browse the Internet, read e-books, type documents, send and receive e-mails and text messages, draw, and talk, thus augmenting their capabilities.20

The first attempts to objectively and automatically track eye motion date back to the late 19th century, with the studies made by Hering (1879), Lamare (1892), Javal (1879), Delabarre (1898), Huey (1898), and Dodge (1898).21 Early devices consisted of highly invasive mechanical setups (such as cups or contact lenses with mirrors).22,23 In the first years of the 20th century, eye movement recording was substantially improved by noninvasive corneal reflection photography, developed by Dodge and Cline.22,23 This method, often known as Dodge’s method, was improved until the 1960s, when the invasive techniques developed in the previous century found new applications.22 In these years, Robinson developed a new electromagnetic technique based on scleral coils24 and Yarbus investigated eye movements using suction devices (caps).23 In 1958, Mackworth and Mackworth developed a method based on television techniques to superimpose the gaze point onto a picture of the scene in front of the subject.25 A few years later, Mackworth and Thomas improved the head-mounted eye tracker originally invented by Hartridge and Thompson in 1948.23,26,27 In the same period, electro-oculography (EOG), originally applied to investigate eye movement by Schott, Meyers, and Jacobson, was also improved.2,23

In the 1970s, the eye-tracking field experienced unprecedented growth thanks to the technological improvements brought by the beginning of the digital era. In 1971, corneal reflection was recorded with a television camera and an image dissector.2 Two years later, Cornsweet and Crane separated eye rotations from translations using the multiple reflections of the eye (known as Purkinje images) and developed a very accurate eye tracker based on this technique.3,22,23,27,28 In 1974, the bright pupil (BP) method was successfully applied by Merchant et al.2,22,29 One year later, Russo revised the limbus reflection method, which had been developed by Torok, Guillemin, and Barnothy in 1951 and was based on the intensity of the infrared (IR) light reflected by the eye.2,30 In the 1970s, military and industry-related research groups advanced toward remote and automatic eye gaze tracking devices.27

During the 1980s and 1990s, eye gaze tracking devices were substantially improved thanks to advances in electronics and computer science, and they were applied for the first time to human–computer interaction (HCI). In 1994, Land and Lee developed a portable head-mounted eye gaze tracker equipped with a head-mounted camera. This device could record the eye movements and the scene in front of the subject simultaneously, thus allowing the gaze point to be superimposed onto the scene camera’s image in real settings.31,32

Since 2000, wearable, lightweight, portable, wireless, and real-time streaming eye gaze trackers have become increasingly available on the market and have been used for virtual and augmented reality HCI studies.33–35

In 1998, Rayner described eye movement research as composed of three eras: the first era (until approximately 1920) corresponds to the discovery of the basics of eye movement; the second era (from the 1920s to the mid-1970s) was mainly characterized by experimental psychology research; during the third era (starting in the mid-1970s), several improvements made eye tracking more accurate and easier to record.36 In 2002, Duchowski highlighted the beginning of a fourth era in eye tracking research, characterized by the increase of interactive applications.37 Duchowski categorized eye tracking systems as diagnostic or interactive. While diagnostic devices aim at measuring visual and attention processes, interactive devices use eye movement and gaze measurement to interact with the user.37 Interactive devices can be subdivided into selective and gaze-contingent devices: selective devices use gaze as a pointer, while gaze-contingent systems adapt their behavior to the information provided by gaze.37,38 The fourth era of eye tracking is currently ongoing, supported by the technological evolution in telecommunications, electronic miniaturization, and computing power. Eye gaze tracking devices are becoming increasingly precise, user-friendly, and affordable, and lightweight head-mounted eye gaze tracking devices working in real-world scenarios are now available.

To our knowledge, the last eye tracking device overview was written by the COmmunication by GAze INteraction (COGAIN) network in 2005.4 With this survey, the authors provided an overview of the technical progress in the eye tracking field at the time. The authors highlighted the technical evolution of the devices focusing on gaze tracking methods, physical interfaces, calibration methods, data archiving methods, archive sample types, data streaming methods, streaming sample types, and application programming interface (API) calibration and operation.

More than 10 years after the COGAIN survey, this overview highlights the recent advancements in the field. This work allows expert researchers to stay updated on the new features and capabilities of recent devices (which are continuously improved) and helps less experienced researchers to easily identify the most suitable acquisition setup for their experiments.

Eye and gaze-tracking techniques

This section briefly summarizes the functioning of the human visual system and presents the most common methods to measure the movements of the eye.

The human eye has the highest visual acuity in a small circular region of the retina called the fovea, which has the highest density of cone photoreceptors.1,39,40 For this reason, the eyes are moved to bring visual targets onto the center of the fovea (a behavior called the scan path of vision).39 The act of looking can roughly be divided into two main events: fixation and gaze shift. A fixation is the maintenance of the gaze on a single location, while gaze shifts correspond to eye movements.41

Currently, the main eye and gaze tracking methods can be grouped into seven techniques:4

EOG;

electromagnetic methods;

contact lenses;

limbus/iris-sclera boundary video-oculography;

pupil video-oculography;

pupil and corneal reflections video-oculography;

dual Purkinje image corneal reflection video-oculography.

EOG is based on the measurable difference of electrical potential between the cornea and the retina of the human eye.2,4,23,42–44 Electromagnetic methods measure the electric current induced in a scleral coil while the eye moves within a known magnetic field.4,22,42,43,45 Contact lens–based methods measure the light reflected by a contact lens.4

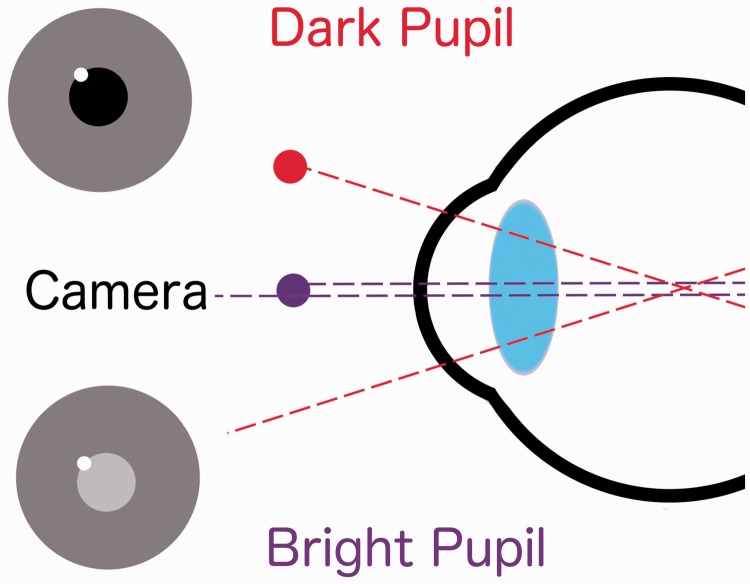

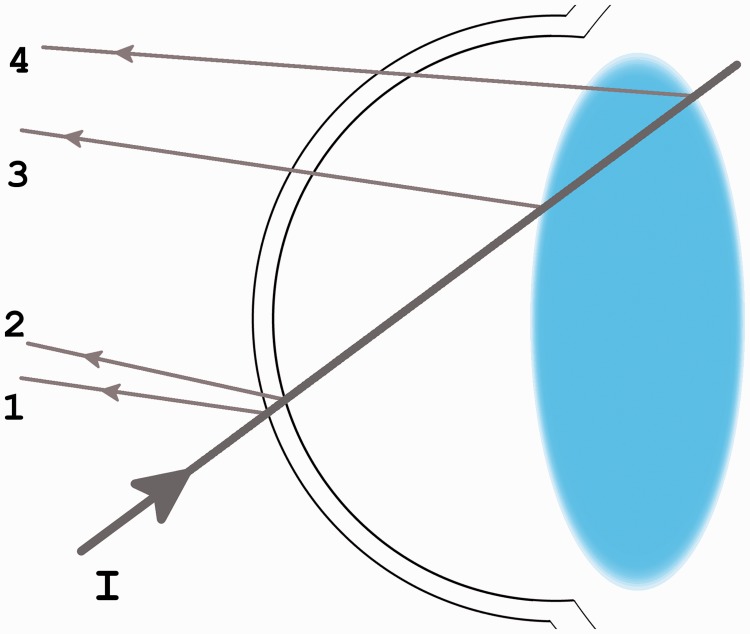

Video-oculography records the eye position with video cameras and can be performed with several techniques. It is probably the most frequently used method in nonclinical settings, and all the devices surveyed in this review are based on video-oculography techniques. Therefore, this method and the related approaches are described in more detail in the following paragraphs. Limbus/iris-sclera boundary video-oculography tracks the boundary between sclera and iris (called the limbus). This method is easy to implement but is substantially affected by eyelid covering. A method more robust to eyelid covering is the dark/bright pupil method, based on detection of the iris–pupil boundary. However, under normal lighting, the contrast between pupil and iris can be very low. Therefore, to increase the robustness of this method, light sources in the near-IR spectrum are often used following two approaches: BP and dark pupil (DP). The main difference between these approaches is the position of the IR source with respect to the optical axis of the camera. In the BP approach, the IR source is placed near the optical axis, while in the DP approach it is placed far from it. Thus, in the BP approach, the video camera records the IR beam reflected by the subject’s retina, making the pupil brighter than the iris, while in the DP approach the reflected IR beam is not recorded by the camera and the pupil appears darker than the iris. The two approaches are shown in Figure 1.

Other methods track the eye using the images of a bright light source reflected from the structures of the eye. These images are called Purkinje images (Figure 2). The DP and BP methods can be combined with one of these methods, called the corneal reflection method, which tracks the reflection of the light on the outer surface of the cornea (the first Purkinje image, often referred to as the glint). The reflection appears as a very bright spot on the eye surface, so it can be detected easily. Under some assumptions, the glint position depends only on head movement; thus, the gaze can be estimated from the relative position between the glint and the pupil center.4,22,27,28,43,44,46 A comprehensive survey of video-oculography techniques can be found in Hansen and Ji.38 The dual Purkinje method is based on tracking the first and the fourth Purkinje images. The fourth Purkinje image is formed by the light reflected from the rear surface of the crystalline lens and refracted by both the cornea and the lens itself. These two Purkinje images move together under pure translation, but when the eye rotates, their distance changes, giving a measure of the angular orientation of the eye.2–4,22,28,38
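The pupil and corneal reflection principle described above can be illustrated with a few lines of array code. This is a minimal sketch, not the method of any surveyed device: it assumes a grayscale dark-pupil IR eye image stored as a NumPy array, and fixed intensity thresholds (the function names and threshold values are hypothetical). Real trackers use far more robust detection, such as ellipse fitting, outlier rejection, and subpixel glint localization.

```python
import numpy as np

def _centroid(mask: np.ndarray) -> tuple[float, float]:
    """Return the (x, y) centroid of the True pixels of a boolean mask."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        # e.g. eye closed or feature occluded by the eyelid
        raise ValueError("feature not found")
    return float(xs.mean()), float(ys.mean())

def pupil_glint_vector(eye_img: np.ndarray,
                       pupil_thresh: int = 40,
                       glint_thresh: int = 240) -> tuple[float, float]:
    """Pupil center minus glint center, in eye-camera pixels.

    Assumes a dark-pupil IR image: the pupil is the darkest region and the
    corneal reflection (glint) is the brightest one.
    """
    px, py = _centroid(eye_img < pupil_thresh)   # dark pupil pixels
    gx, gy = _centroid(eye_img > glint_thresh)   # bright glint pixels
    return px - gx, py - gy

# Synthetic 100x100 eye image: gray background, dark pupil centered at (60, 50),
# and a 3x3 glint centered at (46, 50).
img = np.full((100, 100), 128, dtype=np.uint8)
yy, xx = np.mgrid[0:100, 0:100]
img[(xx - 60) ** 2 + (yy - 50) ** 2 <= 100] = 10
img[49:52, 45:48] = 255
print(pupil_glint_vector(img))  # (14.0, 0.0)
```

Subtracting the glint center is meant to remove the part of the pupil displacement caused by head movement, following the assumption stated above; a calibration step then maps this vector to a gaze point.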

Figure 1.

Dark and bright pupil techniques. The light beam provided by a light source close to the optical axis of the camera is reflected and recorded, resulting in a bright pupil effect (bottom). In the dark pupil technique, the light source is placed far away from the optical axis of the camera; therefore, the reflection from the subject’s retina is not recorded (top).

Figure 2.

Scheme of the Purkinje images. The anterior and posterior surfaces of both the cornea and the crystalline lens reflect the incoming light beam I. This results in four visible reflections, called the first (1), second (2), third (3), and fourth (4) Purkinje images.

Each of these methods has advantages and disadvantages. Methods involving direct contact with the eye (such as contact lenses or electromagnetic methods) usually provide high sampling rates and accuracy but are invasive.3,4 Methods based on dual Purkinje corneal reflection and on EOG usually provide high sampling rates and good accuracy.4 Unlike the other methods, EOG can also be applied when the eyes are closed,4,42 although gaze point tracking is not possible in that case. Limbus/iris-sclera boundary detection is easy to implement but has low accuracy and precision.4 Video-oculography with pupil and/or corneal reflections can achieve good accuracy and sampling rates, with better results when the two methods are combined.4

A few other methods for gaze tracking have also been developed, such as natural light47–50 and appearance-based methods.48,51,52 Appearance-based methods directly use the content of the image to define and extract the gaze direction. In 1993, Baluja and Pomerleau applied this technique using artificial neural networks (ANNs) to recognize the subject’s eye within an image.51 More recently, deep learning has been used to perform eye tracking on mobile devices with impressive results.53 However, video-oculography with pupil and corneal reflection tracking is currently the dominant method used to estimate the gaze point.1,40 Furthermore, devices based on this technique are considered the most practical for interactive applications and are widely used in many commercial products.1,54

More exhaustive reviews of eye tracking history, methods, and applications can be found in Morimoto and Mimica,3 Young and Sheena,2 Yarbus,23 Duchowski,37 Hansen and Ji,38 Holmqvist et al.,40 Chennamma and Yuan,43 Hammoud,54 and Wade.55

Feature analysis of head-mounted eye gaze trackers

Eye gaze tracking devices have diverse properties, advantages, and disadvantages, which makes it difficult to select a device for a specific application. Eye gaze tracking devices can be intrusive or nonintrusive (also called remote).3 Intrusive devices require physical contact with the user. They are usually more accurate than remote devices but are not recommended for prolonged use.3 Intrusive devices can have varying levels of invasiveness depending on the eye tracking technique.

The chosen device needs to match the application requirements. In some cases, devices that limit head movement can be too restrictive, and a head-mounted device is more appropriate. Wearable eye gaze tracking devices can be designed for screen-based use or for real-world scenarios: the former estimate the point of gaze on a computer monitor, while the latter use a scene camera to investigate human gaze behavior in the real world. Several features need to be taken into account when evaluating a head-mounted eye gaze tracker. This paper presents a selection of 20 features grouped into technical features (such as accuracy, precision, and sampling frequency), scene camera features (such as resolution and frame rate), software and operative features (e.g., availability of a software development kit (SDK) and real-time viewing), and additional features (such as price and possible use with corrective glasses). The considered features do not necessarily depend on a specific technique; rather, they aim to help researchers choose the best device for a specific experiment. Technical features (described in detail with examples in the following section) characterize the main quantitative parameters of an eye gaze tracker, so they can be particularly important in experiments measuring fixations, saccades, and microsaccades (for instance, in neurocognitive sciences). Scene camera features, on the other hand, can be more important for experiments involving computer vision, for instance in assistive technologies or robotics applications (e.g., Cognolato et al.,10 Lin et al.,18 Ktena et al.,19 and Giordaniello et al.56). Software and operative features are useful for experiments involving multimodal data (for instance, eye gaze tracking combined with EEG or EMG) or for assistive robotics applications.

The devices were chosen based on the following properties: being binocular and head-mounted, estimating the location of the point-of-regard, having a scene camera, and working in real-world scenarios. To collect the information, several eye tracking manufacturers were contacted and asked to fill in an information form with the specifications of their device(s). When a manufacturer provided several devices meeting the requirements, the most portable and suitable one was chosen. The devices investigated in this survey are: (1) Arrington Research BSU07—90/220/400; (2) Ergoneers Dikablis; (3) ISCAN OmniView; (4) SensoMotoric Instruments (SMI) Eye Tracking Glasses 2 Wireless; (5) SR Research EyeLink II with Scene Camera; and (6) Tobii Pro Glasses 2.

Thanks to the number and the variety of the considered devices, the information provided in this paper gives an overview of the technology currently implemented in head-mounted eye gaze tracking devices with scene camera working in many scenarios.

Technical features

Table 1 summarizes the technical features of the considered devices: eye tracking method(s), sampling rate, average accuracy in gaze direction, root mean square (RMS) precision, gaze tracking field of view, typical recovery tracking time (i.e., the time needed to retrack the eye after the signal has been lost, for instance due to eyelid occlusion), time required for calibration, head tracking, audio recording, and IMU recording. As previously described in Holmqvist et al.,57 accuracy and precision are defined, respectively, as “the spatial difference between the true and the measured gaze direction” and “how consistently calculated gaze points are when the true gaze direction is constant.” A common way to calculate precision is as the RMS of inter-sample distances in the data.40,57 Accuracy and precision are influenced by several factors, such as the user’s eye characteristics and the calibration procedure; therefore, the accuracy and precision of real data need to be evaluated for each participant.40,57 Accuracy describes the distance between the true and estimated gaze point and is an essential feature for all experiments investigating exactly where the subject is looking. For example, accuracy is a crucial parameter in applications involving small, nearby areas of interest (AOIs) and dwell time (the period during which the gaze stays within an AOI). With poor accuracy, gaze data can fall on a nearby AOI instead of the one the user is actually looking at, leading to wrong interactions. Poor accuracy also affects dwell time, which is often used as an input method in gaze-based communication technologies, for example, as a mouse click. Moreover, accuracy can vary across the scene and is often poorer at the edges. Therefore, the required level of accuracy substantially depends on the experiment. Holmqvist et al.40 note that accuracies ranging from 0.3° to around 2° are reported in the literature; however, this range is not limited to head-mounted eye gaze tracking devices.
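The accuracy and RMS precision definitions above can be written directly as code. The sketch below is illustrative only: it assumes gaze samples given as (horizontal, vertical) angles in degrees and treats the Euclidean distance in that angle plane as the angular error, which is a small-angle approximation; the function names are hypothetical. It also converts an angular error into a displacement at a given viewing distance, which shows why small, nearby AOIs are sensitive to accuracy.

```python
import math
import numpy as np

def accuracy_deg(samples, target):
    """Mean angular offset (deg) between measured samples (n, 2) and the true target (2,)."""
    d = np.asarray(samples, float) - np.asarray(target, float)
    return float(np.mean(np.hypot(d[:, 0], d[:, 1])))

def rms_s2s_precision_deg(samples):
    """RMS of inter-sample distances (deg), computed while the true gaze is constant."""
    steps = np.diff(np.asarray(samples, float), axis=0)
    return float(np.sqrt(np.mean(np.sum(steps ** 2, axis=1))))

def error_size_at_distance(error_deg, distance):
    """Displacement on a fronto-parallel surface at `distance` (same unit) for a target straight ahead."""
    return distance * math.tan(math.radians(error_deg))

# Example: a 0.5 deg error on a surface 600 mm away corresponds to about 5.2 mm.
print(round(error_size_at_distance(0.5, 600.0), 2))  # 5.24
```

Since accuracy and precision depend on the participant and on the calibration, such metrics are meant to be computed on each participant’s own validation data.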

Table 1.

Eye gaze tracking technical features.

| Manufacturer | Arrington Research | Ergoneers | ISCAN | SMI | SR Research | Tobii Pro |
|---|---|---|---|---|---|---|
| Product name | BSU07—90/220/400 | Ergoneers Dikablis | ISCAN OmniView | Eye Tracking Glasses 2 Wireless | EyeLink II with Scene Camera | Tobii Pro Glasses 2 |
| Eye tracking technique(s) | Dark pupil, Corneal reflection | Pupil | Pupil, Corneal reflection | Pupil, Corneal reflection | Pupil, Corneal reflection | Dark pupil, Corneal reflection |
| Sampling frequency (Hz) | 60, 90, 220, 400 | 60 | 60 | 30, 60, 120 | 250 | 50, 100 |
| Average accuracy in gaze direction, Hor., Ver. (deg) | 0.25, 1 | 0.25 | 0.5, 0.5 | 0.5, 0.5 | ±0.5 | 0.5 |
| Precision, RMS (deg) | 0.15 | 0.25 | <0.1 | 0.1 | <0.02 | 0.3 |
| Gaze-tracking field of view, Hor. × Ver. (deg) | ±44 × ±20 | 180 × 130 | 90 × 60 | 80 × 60 | ±20 × ±18 | 82 × 52 |
| Typical recovery tracking time (ms) | 17 | 0 | 16.7 | 0 | 4 | 20 |
| Calibration procedure duration (s) | 30, 180 | 15 | 20 | 5, 15, 30 | 180, 300, 540 | 2, 5 |
| Head tracking | No | Yes | No | No | No | No |
| IMU embedded | No | No | No | No | N/A | Yes |

SMI: SensoMotoric Instruments; RMS: root mean square; IMU: inertial measurement units.

The manufacturer and device name are reported at the top of the table. Commas separate multiple values, and the dot is used as the decimal mark. “Hor.” and “Ver.” mean horizontal and vertical, and “N/A” means that the information is not available.

While accuracy takes into account the difference between the estimated and true gaze point, precision measures the consistency of gaze point estimation: for a perfectly stable gaze point, precision quantifies the spatial scattering of the measurements. It is often assessed using an artificial eye. As shown in Holmqvist et al.,57 lower precision has a strong influence on the measured number and duration of fixations; in particular, fewer and longer fixations are detected as precision decreases. Precision is therefore a crucial feature in applications based on the number and duration of fixations and saccades. Holmqvist et al.40 report that high-end eye gaze trackers usually have an RMS precision below 0.10°, whereas values up to 1° are reported for poorer devices. For example, a precision below 0.05° is reported as necessary for very accurate fixation and saccade measurements.40 For experiments involving microsaccades or gaze-contingent applications, an RMS precision lower than around 0.03° is reported as a practical criterion.40

The sampling rate is the number of samples recorded per second, expressed in hertz (Hz). It is one of the most important parameters of an eye gaze tracking device and usually ranges from 25–30 Hz up to more than 1 kHz.40 The precision with which event onsets and offsets are identified is lower at low sampling frequencies. This can affect the measurement of fixation or saccade duration and latency (the time relative to an external event, e.g., a stimulus). The measurement of eye velocity and acceleration peaks is even more sensitive to the sampling frequency of the device.40,58 Therefore, the effect of the sampling frequency on the specific application should be evaluated carefully. For example, fixation duration uncertainty due to a low sampling frequency can become an annoying factor in highly interactive assistive devices based on a gaze interface.40,58
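To illustrate how velocity estimates and event durations depend on the sampling frequency, the sketch below implements a basic velocity-threshold classifier (I-VT, a standard textbook algorithm, not one attributed to any surveyed device). Velocity is estimated as the angular displacement between consecutive samples multiplied by the sampling frequency, so every derived duration is quantized to 1/fs. The 30 deg/s threshold is an assumed, commonly used default; gaze angles are assumed to be in degrees, and the function names are hypothetical.

```python
import numpy as np

def ivt_labels(angles_deg, fs_hz, vel_thresh_deg_s=30.0):
    """Label each inter-sample interval as fixation ("fix") or saccade ("sac")."""
    a = np.asarray(angles_deg, float)              # shape (n, 2): horizontal, vertical gaze angle
    steps = np.hypot(*np.diff(a, axis=0).T)        # angular displacement between samples (deg)
    velocity = steps * fs_hz                       # deg/s, since samples are 1/fs apart
    return np.where(velocity < vel_thresh_deg_s, "fix", "sac")

def fixation_durations_ms(labels, fs_hz):
    """Durations (ms) of consecutive runs of fixation intervals; resolution is 1000/fs ms."""
    durations, run = [], 0
    for label in labels:
        if label == "fix":
            run += 1
        elif run:
            durations.append(run * 1000.0 / fs_hz)
            run = 0
    if run:
        durations.append(run * 1000.0 / fs_hz)
    return durations

# 10 stable samples, a 15 deg saccade over 3 intervals, then 9 stable samples, at 100 Hz.
h = [0.0] * 10 + [5.0, 10.0, 15.0] + [15.0] * 9
gaze = np.column_stack([h, np.zeros(len(h))])
print(fixation_durations_ms(ivt_labels(gaze, 100), 100))  # [90.0, 90.0]
```

At 100 Hz every duration is a multiple of 10 ms; the same recording sampled at a lower rate would yield coarser durations and fewer samples within each saccade to estimate its peak velocity.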

All the considered devices are based on pupil video-oculography, and all except the Dikablis also use corneal reflections (Table 1). Compared with earlier devices, recent devices can track the eye using several cameras and multiple corneal reflections per eye. For instance, the Tobii Pro Glasses 2 record each eye using two cameras, whereas the SMI Eye Tracking Glasses 2 Wireless measure six corneal reflections. The optimal position of the camera that records the eye can be crucial and can depend on light conditions. To obtain consistently optimal recordings, devices such as the BSU07, Dikablis, and EyeLink II allow the position of the eye cameras to be adjusted.

The sampling rate of the considered devices ranges from 30 Hz to 400 Hz. Devices with a high sampling frequency, such as the BSU07—400 and the EyeLink II, can record at up to 400 Hz and 250 Hz, respectively, allowing the measurement of faster eye movements. In all the devices, the accuracy and the RMS precision do not exceed 1° and 0.3°, respectively; in particular, the EyeLink II has an RMS precision lower than 0.02°. In the survey presented by COGAIN in 2005,4 the average accuracy and temporal resolution of the devices using pupil and corneal reflection were approximately 0.75° of visual angle at 60 Hz. The noteworthy improvement in accuracy and precision can be attributed to technological advancements and highlights the progress made in this field during the last 10 years.

The human binocular visual field has a horizontal amplitude of approximately 200° and a vertical amplitude of approximately 135°.59 Eye gaze tracking devices usually have a smaller field of view within which the gaze point can be resolved. This feature can therefore limit the usability of a device in specific use cases, and it is a fundamental parameter in choosing an eye gaze tracker. Four of the six devices have comparable gaze tracking fields of view, averaging 85° horizontally and 53° vertically. The EyeLink II has the narrowest field of view (40° horizontal and 36° vertical), whereas the Ergoneers Dikablis can track the gaze over almost the entire human binocular visual field (180° horizontal and 130° vertical). This parameter is important since gaze estimation accuracy usually decreases in the periphery of the field of view.40
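The 85° × 53° average quoted above can be reproduced from Table 1. This short sketch assumes that a “±” entry denotes a symmetric range, so ±44 × ±20 corresponds to a total extent of 88° × 40°; the dictionary keys are only labels.

```python
# Total gaze-tracking field of view (horizontal, vertical) in degrees, from Table 1.
fov_deg = {
    "Arrington Research BSU07": (2 * 44, 2 * 20),   # listed as ±44 × ±20
    "ISCAN OmniView": (90, 60),
    "SMI Eye Tracking Glasses 2 Wireless": (80, 60),
    "Tobii Pro Glasses 2": (82, 52),
}

mean_h = sum(h for h, _ in fov_deg.values()) / len(fov_deg)
mean_v = sum(v for _, v in fov_deg.values()) / len(fov_deg)
print(mean_h, mean_v)  # 85.0 53.0
```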

Eye blinks can affect the performance of eye trackers. The normal human blink rate varies with the task performed, from an average of 4.5 blinks/min when reading to 17 blinks/min at rest.60 The average duration of a single blink is between 100 ms and 400 ms.61 Eye tracking data recorded with video-oculography suffer from eyelid occlusions; thus, a short recovery tracking time can make a device less prone to error. Most of the surveyed devices have a recovery tracking time on the order of one sample, that is, 20 ms or less. The manufacturers of two devices report a recovery tracking time of zero milliseconds. This should probably be interpreted as the sampling period of the device, meaning that the device can resume recording as soon as it re-detects the eye. In devices based on pupil and corneal reflection video-oculography, gaze estimation depends substantially on the precise identification of the pupil and glint centers. Therefore, when the eye is partially occluded by the eyelid, for example at the beginning and end of a blink, gaze point estimation accuracy might decrease.
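A rough estimate of how much data blinks remove follows from the figures above. This is a back-of-the-envelope sketch: it assumes that every blink fully occludes the eye for its whole duration and that tracking resumes after an additional recovery time (the `recovery_ms` parameter and the function name are illustrative assumptions); partial occlusions at blink onset and offset are ignored.

```python
def blink_loss_fraction(blinks_per_min, blink_ms, recovery_ms=0.0):
    """Fraction of recording time without valid gaze data due to blinks."""
    lost_ms_per_min = blinks_per_min * (blink_ms + recovery_ms)
    return lost_ms_per_min / 60_000.0   # one minute = 60 000 ms

# Blink rates and durations quoted above (reading vs. rest; 100 ms to 400 ms per blink).
for task, rate in (("reading", 4.5), ("rest", 17.0)):
    shortest, longest = (blink_loss_fraction(rate, d) for d in (100.0, 400.0))
    print(f"{task}: {100 * shortest:.2f}% to {100 * longest:.2f}% of the recording")
```

With these figures, roughly 0.75% to 3% of the samples are lost while reading and about 2.8% to 11.3% at rest, before adding any recovery time.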

Calibration is the procedure used to map the eye position to the actual gaze direction. Gaze measurement error can be influenced by several factors, among them the subject’s eye characteristics, such as size, shape, light refraction, droopy eyelids, and covering eyelashes. In most eye gaze trackers, a personal calibration is therefore required to ensure accurate and reliable gaze point estimation,40,54 which makes calibration very important in eye gaze tracking research. The accuracy of eye tracking data is best right after calibration, which is why many eye gaze trackers have built-in support for on-demand recalibration or drift correction.62 Each product provides specific calibration procedures. These usually include automated calibration methods based on the fixation of a set of points at a specific distance (for instance, 1 point for the Tobii Pro Glasses 2 and 4 points for the Dikablis, while several calibration types are available for the EyeLink II, with nine points as the default). Such a procedure can be performed on the fly when required during data acquisition. Some systems (including the Arrington Research, the Dikablis, the OmniView, and the EyeLink II) allow manual calibration by displaying the eye camera video and adjusting several calibration parameters, including the pupil location, the size of the box around it, and the pupil threshold.

Usually, the calibration targets are presented at one specific distance. As mentioned in Evans et al.,63 at other distances the scene video and the eye’s view are no longer aligned, so a parallax error can occur depending on the geometrical design of the device. To eliminate parallax errors completely, all experimental stimuli must appear in the depth plane of the calibration points. This can obviously be difficult outside the laboratory, where objects may appear at highly variable distances. However, most of the devices can automatically compensate for parallax error. The time needed to perform the calibration can be an important application requirement: devices with faster and simpler calibration procedures can be more suitable for everyday applications. This time ranges from 2 s to 540 s for the investigated devices. Considering the minimum time required to calibrate each device, all the devices can be calibrated in less than a minute except the EyeLink II, which requires at least 180 s. The time required for calibration can depend on several factors, such as the number of calibration points. Increasing the number of calibration points can increase gaze estimation accuracy and make the system more robust. However, this also depends on the type of calibration and on the algorithm used to map the eye position to the gaze point (e.g., eye model, polynomial mapping).64–67 Some eye gaze trackers can be reapplied without performing a new calibration, which can be convenient for applications requiring prolonged use or rest breaks. However, reapplying a head-mounted device, like sitting closer to or farther from the cameras of a remote eye gaze tracker, can alter the distance between the eye and the tracking camera. This alteration can affect the mapping between pixels and degrees, in some cases causing errors that influence the quality and stability of the data over time.
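The parallax error mentioned above can be estimated with a simplified planar model. This sketch assumes a pinhole scene camera displaced laterally by a baseline from the eye, both facing forward, a target straight ahead of the eye, and a gaze mapping that is exact at the calibration distance. The function name, the 30 mm baseline, and the distances in the example are illustrative values, not specifications of any surveyed device; real devices have more complex geometry and, as noted, many compensate for parallax automatically.

```python
import math

def parallax_error_deg(baseline_mm, calib_dist_mm, target_dist_mm):
    """Angular error in the scene image for a target outside the calibration plane.

    The scene camera sees a straight-ahead target at atan(b / d) off its axis,
    while the calibration maps that gaze direction to atan(b / d_c); the
    difference is the error (positive for targets nearer than the calibration plane).
    """
    seen = math.atan(baseline_mm / target_dist_mm)
    assumed = math.atan(baseline_mm / calib_dist_mm)
    return math.degrees(seen - assumed)

# Hypothetical 30 mm eye-to-camera offset, calibration at 1 m.
for d in (500.0, 1000.0, 4000.0):
    print(f"target at {d:.0f} mm: {parallax_error_deg(30.0, 1000.0, d):+.2f} deg")
```

In this model the error vanishes at the calibration distance and grows quickly for targets closer than it, which is consistent with the difficulty of working outside the laboratory noted above.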
Validating the calibration is recommended to evaluate data quality, in particular precision and accuracy: if, for example, the data are less accurate than required, a new calibration can be performed until the required accuracy is reached.40,57
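Accuracy and precision are commonly quantified as the mean angular offset between gaze samples and a validation target, and as the root mean square of sample-to-sample distances (RMS-S2S), respectively, as discussed by Holmqvist et al.57 The following minimal sketch assumes gaze samples already expressed as horizontal and vertical angles in degrees, with offsets small enough that Euclidean distance approximates angular distance. The 0.5° threshold in the example is a hypothetical application requirement, not a recommended value, and the function names are illustrative.

```python
import numpy as np

def accuracy_deg(gaze_deg, target_deg):
    """Mean angular offset (deg) between gaze samples (N, 2) and the target (2,)."""
    return float(np.linalg.norm(gaze_deg - target_deg, axis=1).mean())

def precision_rms_s2s_deg(gaze_deg):
    """Root mean square of successive sample-to-sample distances (deg)."""
    steps = np.linalg.norm(np.diff(gaze_deg, axis=0), axis=1)
    return float(np.sqrt(np.mean(steps**2)))

def needs_recalibration(gaze_deg, target_deg, max_error_deg):
    """True if the validation accuracy is worse than the required maximum error."""
    return accuracy_deg(gaze_deg, target_deg) > max_error_deg

# Hypothetical validation samples recorded while fixating a target at (10, 5) deg.
target = np.array([10.0, 5.0])
gaze = np.array([[10.3, 5.4], [10.4, 5.4], [10.3, 5.4], [10.4, 5.4]])
print(accuracy_deg(gaze, target), precision_rms_s2s_deg(gaze))
print("recalibrate:", needs_recalibration(gaze, target, max_error_deg=0.5))
```

Accuracy and precision are reported separately because a tracker can be precise (low sample-to-sample noise) and still inaccurate (a systematic offset), which is the case a recalibration is meant to correct.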

The last technical features investigated are the measurement of inertial data by an embedded IMU and the possibility of tracking the subject’s head. Only the Tobii Pro Glasses 2 have an embedded IMU (3D accelerometer and gyroscope). The Dikablis can track the subject’s head by combining external markers located in front of the subject with software techniques (the D-Lab Eye Tracking & Head Tracking Module). Head tracking can be useful when the exact position of the subject’s head in the environment is required, for instance in ergonomics, robotics, sports studies, or virtual reality applications.1,40
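When head orientation is available, for example from an IMU or a head tracker, gaze can be expressed in a world frame by rotating the eye-in-head gaze direction by the head orientation. This is a minimal sketch under several assumptions: a head-fixed frame with x to the right, y up, and z forward; a head rotation given as a 3 × 3 matrix (built here from yaw only, for the demo); and no translation between the eye and the head’s centre of rotation. All function names are hypothetical and not part of any manufacturer’s SDK.

```python
import numpy as np

def direction_from_angles(azimuth_deg, elevation_deg):
    """Unit gaze vector (x right, y up, z forward) from horizontal/vertical angles."""
    az, el = np.radians(azimuth_deg), np.radians(elevation_deg)
    return np.array([np.cos(el) * np.sin(az), np.sin(el), np.cos(el) * np.cos(az)])

def angles_from_direction(v):
    """Inverse of direction_from_angles for a unit vector."""
    return np.degrees(np.arctan2(v[0], v[2])), np.degrees(np.arcsin(v[1]))

def yaw_matrix(yaw_deg):
    """Rotation about the vertical (y) axis; positive yaw turns the head to the right."""
    c, s = np.cos(np.radians(yaw_deg)), np.sin(np.radians(yaw_deg))
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def gaze_in_world(head_rotation, gaze_in_head):
    """Rotate an eye-in-head gaze vector into the world frame."""
    return head_rotation @ gaze_in_head

# Hypothetical case: head turned 30 deg right, eyes 10 deg right and 5 deg up in the head.
world = gaze_in_world(yaw_matrix(30.0), direction_from_angles(10.0, 5.0))
print(angles_from_direction(world))
```

For a pure yaw rotation the horizontal angles simply add (30° + 10° = 40°), while the elevation is unchanged; a full head orientation (yaw, pitch, roll) would be supplied as a general rotation matrix in the same way.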

Scene camera features

The scene camera of an eye gaze tracker records the scene in front of the subject. Combined with the eye data, this allows the point-of-regard to be estimated and overlaid onto each video frame, showing where the subject is looking. Thanks to the hardware and software improvements made in the last 10 years, the scene cameras of the considered eye gaze trackers record at a resolution and frame rate sufficient for the video to be used as an additional data source.
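Overlaying the point-of-regard onto a video frame amounts to projecting a gaze direction into scene-camera pixel coordinates. The sketch below assumes an ideal pinhole camera with square pixels, the principal point at the image centre, no lens distortion, and a gaze direction already expressed in scene-camera coordinates; actual devices may instead map eye features directly to scene pixels during calibration. The 1920 × 1080 resolution with a 90° horizontal field of view is a hypothetical configuration, and `gaze_to_pixel` is an illustrative name.

```python
import math

def gaze_to_pixel(direction, width_px, height_px, hfov_deg):
    """Project a gaze direction onto the scene image with an ideal pinhole model.

    direction: (x, y, z) in scene-camera coordinates (x right, y down, z forward).
    Returns (u, v) in pixels, or None if the point is behind the camera or off-frame.
    """
    x, y, z = direction
    if z <= 0:
        return None
    focal_px = (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)
    u = width_px / 2 + focal_px * x / z
    v = height_px / 2 + focal_px * y / z
    if 0 <= u < width_px and 0 <= v < height_px:
        return u, v
    return None

# Hypothetical full-HD scene camera with a 90 deg horizontal field of view.
print(gaze_to_pixel((0.0, 0.0, 1.0), 1920, 1080, 90.0))   # straight ahead
print(gaze_to_pixel((0.5, -0.2, 1.0), 1920, 1080, 90.0))  # right of and above centre
```

Returning `None` for directions outside the frame reflects the fact that gaze outside the scene camera’s field of view cannot be overlaid on the video.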

Table 2 reports the main features of the scene cameras for the investigated devices: the video resolution, the frame rate, and the field of view. These features can be crucial for applications performing visual analysis (e.g., object and place recognition), such as improving robotic hand prosthesis control by using the gaze-overlaid video to identify the object that a hand amputee intends to grasp. On the other hand, a high recording resolution implies a large volume of data, which limits data transfer and analysis speed. The features of the scene cameras should therefore be evaluated against the application requirements.
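The trade-off between resolution and data volume can be made concrete with the uncompressed data rate, that is, width × height × bytes per pixel × frame rate. The sketch assumes 24-bit RGB (3 bytes per pixel) and uses resolution and frame-rate pairs from Table 2. Recorded video is normally compressed, so actual stored volumes are much lower; the figures only illustrate how the data volume scales.

```python
def raw_video_rate_bytes_per_s(width_px, height_px, fps, bytes_per_pixel=3):
    """Uncompressed video data rate; bytes_per_pixel=3 assumes 24-bit RGB."""
    return width_px * height_px * bytes_per_pixel * fps

# Resolution/frame-rate pairs from Table 2 (uncompressed, for comparison only).
configs = {
    "1920 x 1080 @ 25 FPS": (1920, 1080, 25),
    "1920 x 1080 @ 30 FPS": (1920, 1080, 30),
    "640 x 480 @ 60 FPS": (640, 480, 60),
}
for name, (w, h, fps) in configs.items():
    rate = raw_video_rate_bytes_per_s(w, h, fps)
    print(f"{name}: {rate / 1e6:.1f} MB/s, {rate * 3600 / 1e9:.0f} GB per hour")
```

Even at twice the frame rate, the 640 × 480 stream carries roughly a third of the raw data of a full HD stream at 25 FPS, which is why low-resolution, high-frame-rate cameras suit fast dynamics better than detail-oriented analysis.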

Table 2.

Scene camera features.

| Manufacturer | Arrington Research | Ergoneers | ISCAN | SMI | SR Research | Tobii Pro |
|---|---|---|---|---|---|---|
| Product name | BSU07–90/220/400 | Dikablis | OmniView | Eye Tracking Glasses 2 Wireless | EyeLink II with Scene Camera | Tobii Pro Glasses 2 |
| Scene camera’s video resolution (pixels) | 320 × 480; 640 × 480 | 1920 × 1080 | 640 × 480 | 1280 × 960; 960 × 720 | NTSC 525 lines | 1920 × 1080 |
| Scene camera’s video frequency (FPS) | 30; 60 | 30 | 60 | 24 (max. res.); 30 (min. res.) | 30 | 25 |
| Scene camera’s field of view, Hor × Ver (deg) | Lens options: 89, 78, 67, 44, 33, 23 | 80 × 45 | 100 × 60 | 60 × 46 | 95 × N/A | 90 (16:9 format) |

SMI: SensoMotoric Instruments; NTSC: National Television System Committee; FPS: frames per second.

N/A: information not available.

As shown in Table 2, the lowest scene camera resolution in our evaluation is 320 × 480 pixels, while the Ergoneers and Tobii devices have the highest resolution (1920 × 1080 pixels). In general, the scene cameras with high resolution have a lower frame rate. For instance, the Tobii and the Ergoneers devices have a full HD scene camera, but a frame rate of 25 and 30 frames per second (FPS), respectively. They are thus more suitable for applications focusing on image details (such as object and/or place recognition) than on fast dynamics. The BSU07–90/220/400 and the OmniView have the highest frame rate among the investigated devices (60 FPS); capturing more images per second, they can be better suited to recording fast movements. Most of the scene cameras of the considered eye trackers record at 30 FPS, while the SMI device at maximum resolution has the lowest frame rate (24 FPS).

The field of view of the scene camera can also be an important parameter, because it can limit the “effective field of view” of the eye gaze tracker, namely the field of view within which the point-of-regard can be resolved and superimposed onto the scene video frame. The effective field of view is the intersection of the gaze tracking range and the scene camera’s field of view: for example, if the scene camera’s field of view is narrower than the gaze tracking range, the point-of-regard can only be resolved within the former. Among the surveyed devices reporting both horizontal and vertical values, the SMI device has the narrowest field of view (60° × 46°, horizontal × vertical), while the ISCAN OmniView has the widest (100° × 60°). Two manufacturers (Arrington Research and Ergoneers) reported a scene camera with an adjustable field of view. In applications requiring the recording of close objects, the minimum focus distance of the scene camera can be another important parameter. However, in the authors’ direct experience with one of the devices, no focusing difficulties were noticed, even in tasks requiring interaction with objects close to the scene camera (e.g., drinking from a bottle or a can).
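The intersection rule for the effective field of view can be written per axis as the overlap of two angular intervals. This minimal sketch assumes rectangular, axis-aligned fields of view expressed in degrees around straight-ahead gaze and ignores lens distortion. The 80° × 60° gaze tracking range and the 10° downward tilt are hypothetical; the 60° × 46° scene camera value is the SMI figure from Table 2, used only for illustration.

```python
def intersect(a, b):
    """Overlap of two angular intervals (min_deg, max_deg), or None if disjoint."""
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo < hi else None

def centred(width_deg, offset_deg=0.0):
    """Interval of a given angular width centred on offset_deg (e.g., a camera tilt)."""
    return (offset_deg - width_deg / 2, offset_deg + width_deg / 2)

def effective_fov(gaze_h, gaze_v, scene_h, scene_v):
    """Per-axis intersection of the gaze tracking range and the scene camera FOV.

    Returns (horizontal_deg, vertical_deg), or None if the ranges do not overlap.
    """
    h = intersect(gaze_h, scene_h)
    v = intersect(gaze_v, scene_v)
    if h is None or v is None:
        return None
    return h[1] - h[0], v[1] - v[0]

# Hypothetical 80 x 60 deg gaze tracking range; 60 x 46 deg scene camera tilted 10 deg down.
print(effective_fov(centred(80), centred(60), centred(60), centred(46, -10.0)))
```

Here the horizontal extent is limited by the scene camera (60°), while the vertical extent (43°) is limited at the bottom by the gaze tracking range and at the top by the tilted scene camera, showing how an adjustable camera angle shifts the effective field of view.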

Software and operative features

The features of the software and the accessibility of the data can play an important role in the choice of an eye gaze tracker. The scalability of the device and the possibility of interconnecting it with other devices can be essential, for instance, when exploring multimodal systems, which are often useful for rehabilitation, active living, and assistive purposes. One example is the use of gaze and scene camera information to improve the control of robotic assistive or rehabilitative devices, such as arm prostheses or orthoses. These systems are often integrated with other sensors, such as EMG electrodes or IMUs.

Usually, the manufacturers provide built-in integration with the devices that are most frequently used with an eye gaze tracker (such as EEG or motion capture systems). The possibility to have a direct interface with the eye gaze tracker through a Software Development Kit (SDK) can facilitate and improve the integration with devices that are not natively supported. A few features describing software capabilities and scalability of the devices are reported in Table 3.

Table 3.

Software capabilities and device scalability.

| Manufacturer | Arrington Research | Ergoneers | ISCAN | SMI | SR Research | Tobii Pro |
|---|---|---|---|---|---|---|
| Product name | BSU07–90/220/400 | Dikablis | OmniView | Eye Tracking Glasses 2 Wireless | EyeLink II with Scene Camera | Tobii Pro Glasses 2 |
| Synchronization/integration with physiological monitoring devices | Yes | Yes | Yes | Yes | Yes | Yes |
| SDK | Yes | Yes | No | Yes | Yes | Yes |
| Gaze-scene overlapping | Auto | Auto | Auto | Auto and manual | Auto | Auto and manual |
| Real-time viewing | Yes | Yes | Yes | Yes | Yes | Yes |

SDK: Software Development Kit; SMI: SensoMotoric Instruments.

Each device considered in this survey can be synchronized and/or integrated with external instrumentation for monitoring physiological parameters. Moreover, the manufacturers usually provide a generic hardware synchronization (e.g., via a Transistor–Transistor Logic (TTL) signal) to facilitate synchronization with other devices. All manufacturers except ISCAN also provide an SDK (Table 3), containing at least an API, which is essential for creating custom applications.
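Once two devices have logged the same TTL pulses, each in its own clock, their time bases can be aligned with a linear fit that captures both the clock offset and the relative drift, after which one signal can be resampled at the other’s timestamps. The sketch below is a minimal illustration under assumptions: a hypothetical EMG system (echoing the multimodal example earlier in this section), three shared pulses, a synthetic 100 ppm drift, and a placeholder signal. It does not reproduce any manufacturer’s synchronization software, and `fit_clock_mapping` is an illustrative name.

```python
import numpy as np

def fit_clock_mapping(pulses_src_s, pulses_dst_s):
    """Fit dst_time = a * src_time + b from times at which both devices logged
    the same TTL pulses. Two or more pulses give the offset (b) and drift (a)."""
    a, b = np.polyfit(pulses_src_s, pulses_dst_s, 1)
    return a, b

# Hypothetical: three shared TTL pulses, logged by an EMG system and by the eye tracker.
emg_pulses = np.array([2.0, 62.006, 122.012])  # EMG clock (s), 100 ppm drift
eye_pulses = np.array([10.0, 70.0, 130.0])     # eye tracker clock (s)
a, b = fit_clock_mapping(emg_pulses, eye_pulses)

# Resample a synthetic EMG signal at the gaze sample times, on the eye tracker clock.
emg_t = np.linspace(0.0, 140.0, 14001)  # EMG sample times, EMG clock
emg_signal = np.sin(emg_t)              # placeholder signal
gaze_t = np.array([20.0, 50.0, 100.0])  # gaze sample times, eye tracker clock
emg_at_gaze = np.interp(gaze_t, a * emg_t + b, emg_signal)
print(a, b, emg_at_gaze)
```

A single pulse would only give the offset; using several pulses spread over the recording also corrects the slow drift between the two clocks, which accumulates over long acquisitions.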

The last features investigated within this group are the method used to superimpose the gaze point onto the scene images and the availability of real-time visualization. As reported in Table 3, every device superimposes the point-of-regard onto the video automatically and can display it in real time; the SMI and Tobii devices also allow manual overlapping.

Additional features

This section covers a set of characteristics that can improve the capabilities of an eye gaze tracker, such as audio recording, the possibility of use with corrective glasses, weight, special features, and price (see Table 4).

Table 4.

Additional features.

| Manufacturer | Arrington Research | Ergoneers | ISCAN | SMI | SR Research | Tobii Pro |
|---|---|---|---|---|---|---|
| Product name | BSU07–90/220/400 | Dikablis | OmniView | Eye Tracking Glasses 2 Wireless | EyeLink II with Scene Camera | Tobii Pro Glasses 2 |
| Audio embedded | Yes | Yes | Yes | Yes | N/A | Yes |
| Usage with corrective glasses | Yes | Yes | Yes | Yes | Yes | Yes |
| Weight (g) | Starting from less than 35 | 69 | 45–150 (depending on the setup) | 47 | 420 | 45 |
| Special features | Torsion; TTL; analog out | Automated glance analysis toward static or dynamic, freely definable AOIs; adjustable vertical angle and field of view of the scene camera; adjustable eye camera position | Binocular mobile system; recorder or transmitter version; automatic compensation for parallax between eyes and scene camera; works outdoors in full sunlight; headset weight 5.2 oz | Optional modules available: EEG Index of cognitive activity 3 module, 3D/6D, corrective lenses | Dual use for screen-based work or in scene camera mode | Four eye cameras; slippage compensation; pupil size measurement; automatic parallax compensation; interchangeable lenses; TTL signal sync port; IP class 20, CE, FPP, CCC |
| Price | From 13,998 to 22,998 ($) | Starting from 11,900 (€) | From 28,500 to 30,800 ($) | From 10,000 to 35,000 (€) | N/A | N/A |

AOI: areas of interest; TTL: Transistor–Transistor Logic; SMI: SensoMotoric Instruments; EEG: electroencephalography; N/A: information not available.

Most of the considered devices can record audio. Audio data can expand the capabilities of eye gaze trackers, also thanks to modern speech recognition techniques. In particular, audio information may be fused with the gaze data to improve the control of a Voice Command Device (VCD) or to trigger/label events during acquisition. All the reviewed devices allow the use of corrective glasses, often in the form of interchangeable corrective lenses mounted on the eye gaze tracker. This makes the devices easy to set up and adapt to the user’s needs. Interchangeable lenses also make it possible to fit dark lenses in outdoor applications, improving tracking robustness.

The last parameter reported in this evaluation is the price of the considered devices. The reported prices are approximate; exact prices are available on request, as they depend on several factors and in some cases can include academic discounts.

The features investigated in this survey represent a selection made by the authors and they cannot cover all the possible characteristics of an eye gaze tracker. Thus, in order to partially compensate for this, a field named “Special features” was included in the form that was sent to the manufacturers, asking them to mention any other important or special features of their device (Table 4).

Although not included in the tables, the portability of the device is another important feature that affects both its usability and its performance. Each surveyed device can be used in real-world scenarios; however, their degree of portability differs. The Tobii, SMI, and ISCAN devices are highly portable, as they are equipped with a lightweight portable recording and/or transmission unit. This solution can be more suitable for applications requiring prolonged use or highly dynamic activities. Devices tethered to a computer, such as the SR Research device, have low portability and are thus mostly suitable for static applications. Devices tethered to a laptop or tablet that can be carried in a backpack and powered by a battery pack (such as the Arrington Research and Ergoneers devices) offer an intermediate solution.

Finally, the design and comfort of the device can also be very important, especially in daily life applications. Eye gaze trackers designed to resemble normal eyeglasses can be more acceptable to users, in particular for prolonged use. Low weight and small volume are likewise important characteristics in everyday applications.

Conclusion

More than 10 years after the COGAIN survey,4 this review provides a detailed overview of modern head-mounted eye gaze trackers that work in real-world scenarios. In recent years, many advances have substantially improved the devices, in both hardware and software.

Vision is among the most important senses for controlling grasping and locomotion. Current devices make it possible to monitor vision unobtrusively and in real time, thus offering opportunities to restore, augment, and assist both upper and lower limb functionality. Eye gaze tracking devices have been applied in scientific research to a wide range of scenarios, including rehabilitation, active living, and assistive technologies. As described in the literature, applications of eye tracking in these fields include the control of hand prostheses, wheelchairs, computers, and communication systems to assist elderly or impaired people, amputees, and people affected by multiple sclerosis, Parkinson’s disease, muscular dystrophy, and amyotrophic lateral sclerosis.16–20

Comparing the survey made in 2005 by the COGAIN network with Tables 1–4 highlights the many improvements that have been made. Modern head-mounted devices make it possible to investigate human behavior in real environments, easily and in real time. It is currently possible to track human gaze unobtrusively and wirelessly in daily life scenarios, recording the scene in front of the subject and knowing exactly what the subject is looking at. Tables 1–4 show that modern devices have high precision and accuracy and offer a wide range of sampling frequencies and scene camera video resolutions. They can be easily interconnected with other devices and, if needed, controlled through an SDK. Most wearable eye gaze tracking devices are lightweight and comfortable, so they can be worn for a long time. The devices are now more affordable and do not include expensive technologies, which justifies testing them in real-life applications.

In 2002, Duchowski37 highlighted the beginning of the fourth era of eye tracking, characterized by interactive applications. The devices reviewed in this paper mark the beginning of this new era. Modern head-mounted eye gaze tracking devices can support a wider range of applications in daily life settings. They can help researchers better study behavior and help users better interact with the environment, including in rehabilitation and assistive applications.

Acknowledgements

The authors would like to thank William C. Schmidt at SR Research, Günter Fuhrmann and Christian Lange at Ergoneers Group, Rikki Razdan at ISCAN, Marka Neumann at Arrington Research, Gilbert van Cauwenberg at SensoMotoric Instruments (SMI), Mirko Rücker at Chronos Vision, Urs Zimmermann at Usability.ch, Rasmus Petersson at Tobii, and their teams for their kindness and helpfulness in providing the information used in this work.

Declaration of conflicting interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was partially supported by the Swiss National Science Foundation [Sinergia project # 160837 Megane Pro] and the Hasler Foundation [Elgar Pro project].

Guarantor

MC

Contributorship

MC analyzed the state of the art, contacted the manufacturers, and wrote the manuscript. MA supervised the literature research, contacted the manufacturers, and wrote the manuscript. HM supervised the literature research, reviewed, and edited the manuscript. All authors approved the final version of the manuscript.

References

1. Duchowski AT. Eye tracking methodology: theory and practice. London: Springer, 2007.

2. Young LR, Sheena D. Survey of eye movement recording methods. Behav Res Methods Instrum 1975; 7: 397–429.

3. Morimoto CH, Mimica MRM. Eye gaze tracking techniques for interactive applications. Comput Vis Image Underst 2005; 98: 4–24.

4. Bates R, Istance H, Oosthuizen L, et al. D2.1 survey of de-facto standards in eye tracking. Communication by Gaze Interaction (COGAIN). Deliverable 2.1, http://wiki.cogain.org/index.php/COGAIN_Reports (2005, accessed 25 April 2018).

5. Desanghere L, Marotta JJ. The influence of object shape and center of mass on grasp and gaze. Front Psychol; 6. DOI: 10.3389/fpsyg.2015.01537.

6. Patla AE. Understanding the roles of vision in the control of human locomotion. Gait Posture 1997; 5: 54–69.

7. Land M, Mennie N, Rusted J. The roles of vision and eye movements in the control of activities of daily living. Perception 1999; 28: 1311–1328.

8. Castellini C and Sandini G. Learning when to grasp. In: Invited paper at Concept Learning for Embodied Agents, a workshop of the IEEE International Conference on Robotics and Automation (ICRA), Rome, Italy, 10–14 April 2007.

9. Novak D and Riener R. Enhancing patient freedom in rehabilitation robotics using gaze-based intention detection. In: IEEE 13th International Conference on Rehabilitation Robotics (ICORR) 2013, 24–26 June 2013, pp. 1–6. Seattle, WA, USA: IEEE.

10. Cognolato M, Graziani M, Giordaniello F, et al. Semi-automatic training of an object recognition system in scene camera data using gaze tracking and accelerometers. In: Liu M, Chen H, Vincze M (eds) Computer vision systems. ICVS 2017. Lecture notes in computer science, vol. 10528. Cham: Springer, pp. 175–184.

11. Došen S, Cipriani C, Kostić M, et al. Cognitive vision system for control of dexterous prosthetic hands: experimental evaluation. J Neuroeng Rehabil; 7. DOI: 10.1186/1743-0003-7-42.

12. Noronha B, Dziemian S, Zito GA, et al. ‘Wink to grasp’ – comparing eye, voice & EMG gesture control of grasp with soft-robotic gloves. In: International Conference on Rehabilitation Robotics (ICORR) 2017, 17–20 July 2017, pp. 1043–1048. London, UK: IEEE.

13. Patla AE. Neurobiomechanical bases for the control of human locomotion. In: Bronstein AM, Brandt T, Woollacott MH, et al. (eds) Clinical Disorders of Balance, Posture and Gait. London: Arnold Publisher, 1995, pp. 19–40.

14. Raibert MH. Legged Robots That Balance. Cambridge, MA, USA: MIT Press. DOI: 10.1109/MEX.1986.4307016.

15. Rubenstein LZ. Falls in older people: epidemiology, risk factors and strategies for prevention. Age Ageing 2006; 35: 37–41.

16. Majaranta P, Aoki H, Donegan M, et al. Gaze Interaction and Applications of Eye Tracking: Advances in Assistive Technologies. Hershey, PA: Information Science Reference – Imprints: IGI Publishing, 2011.

17. Goto S, Nakamura M, Sugi T. Development of meal assistance orthosis for disabled persons using EOG signal and dish image. Int J Adv Mechatron Syst 2008; 1: 107–115.

18. Lin C-S, Ho C-W, Chen W-C, et al. Powered wheelchair controlled by eye-tracking system. Opt Appl 2006; 36: 401–412.

19. Ktena SI, Abbott W and Faisal AA. A virtual reality platform for safe evaluation and training of natural gaze-based wheelchair driving. In: 2015 7th International IEEE/EMBS Conference on Neural Engineering (NER), Montpellier, France, 22–24 April 2015, pp. 236–239. IEEE.

20. Blignaut P. Development of a gaze-controlled support system for a person in an advanced stage of multiple sclerosis: a case study. Univers Access Inf Soc 2017; 16: 1003–1016.

21. Wade NJ, Tatler BW. Did Javal measure eye movements during reading? J Eye Mov Res 2009; 2: 1–7.

22. Richardson D and Spivey M. Eye Tracking: Characteristics and Methods. In: Wnek GE and Bowlin GL (eds) Encyclopedia of Biomaterials and Biomedical Engineering, Second Edition – Four Volume Set. CRC Press, pp. 1028–1032.

23. Yarbus AL. Eye movements and vision. Neuropsychologia 1967; 6: 222.

24. Robinson DA. A method of measuring eye movement using a scleral search coil in a magnetic field. IEEE Trans Biomed Eng 1963; 10: 137–145.

25. Mackworth JF, Mackworth NH. Eye fixations recorded on changing visual scenes by the television eye-marker. J Opt Soc Am 1958; 48: 439–445.

26. Mackworth NH, Thomas EL. Head-mounted eye-marker camera. J Opt Soc Am 1962; 52: 713–716.

27. Jacob RJK and Karn KS. Commentary on Section 4 – Eye tracking in human–computer interaction and usability research: Ready to deliver the promises. In: Hyönä J, Radach R and Deubel H (eds) The Mind's Eye, pp. 573–605. Amsterdam: North-Holland.

28. Cornsweet TN, Crane HD. Accurate two-dimensional eye tracker using first and fourth Purkinje images. J Opt Soc Am 1973; 63: 921–928.

29. Merchant J, Morrissette R, Porterfield JL. Remote measurement of eye direction allowing subject motion over one cubic foot of space. IEEE Trans Biomed Eng 1974; 21: 309–317.

30. Russo JE. The limbus reflection method for measuring eye position. Behav Res Methods Instrum 1975; 7: 205–208.

31. Wade NJ, Tatler BW. The moving tablet of the eye: the origins of modern eye movement research. Oxford, UK: Oxford University Press, 2005.

32. Land MF, Lee DN. Where we look when we steer. Nature 1994; 369: 742–744.

33. Markovic M, Došen S, Cipriani C, et al. Stereovision and augmented reality for closed-loop control of grasping in hand prostheses. J Neural Eng 2014; 11: 46001.

34. Duchowski AT, Shivashankaraiah V, Rawls T, et al. Binocular eye tracking in virtual reality for inspection training. In: Duchowski AT (ed) Proceedings of the Eye Tracking Research & Application Symposium, ETRA '00, 6–8 November 2000, pp. 89–96. Palm Beach Gardens, Florida, USA: ACM Press.

35. McMullen DP, Hotson G, Katyal KD, et al. Demonstration of a semi-autonomous hybrid brain-machine interface using human intracranial EEG, eye tracking, and computer vision to control a robotic upper limb prosthetic. IEEE Trans Neural Syst Rehabil Eng 2014; 22: 784–796.

36. Rayner K. Eye movements in reading and information processing: 20 years of research. Psychol Bull 1998; 124: 372–422.

37. Duchowski AT. A breadth-first survey of eye tracking applications. Behav Res Methods Instrum Comput 2002; 1: 1–16.

38. Hansen DW, Ji Q. In the eye of the beholder: a survey of models for eyes and gaze. IEEE Trans Pattern Anal Mach Intell 2010; 32: 478–500.

39. Leigh RJ, Zee DS. The neurology of eye movements. Oxford, UK: Oxford University Press, 2015.

40. Holmqvist K, Nyström M, Andersson R, et al. Eye tracking: a comprehensive guide to methods and measures. Oxford, UK: Oxford University Press, 2011.

41. Kowler E. Eye movements: the past 25 years. Vision Res 2011; 51: 1457–1483.

42. Heide W, Koenig E, Trillenberg P, et al. Electrooculography: technical standards and applications. The International Federation of Clinical Neurophysiology. Electroencephalogr Clin Neurophysiol Suppl 1999; 52: 223–240.

43. Chennamma H, Yuan X. A survey on eye-gaze tracking techniques. Indian J Comput Sci Eng 2013; 4: 388–393.

44. Lupu RG, Ungureanu F. A survey of eye tracking methods and applications. Math Subj Classif 2013; LXIII: 72–86.

45. Kenyon RV. A soft contact lens search coil for measuring eye movements. Vision Res 1985; 25: 1629–1633.

46. Guestrin ED, Eizenman M. General theory of remote gaze estimation using the pupil center and corneal reflections. IEEE Trans Biomed Eng 2006; 53: 1124–1133.

47. Wang J-G and Sung E. Gaze Determination via Images of Irises. In: Mirmehdi M and Thomas BT (eds) Proceedings of The Eleventh British Machine Vision Conference (BMVC), 11–14 September 2000, pp. 1–10. Bristol, UK: British Machine Vision Association.

48. Williams O, Blake A and Cipolla R. Sparse and semi-supervised visual mapping with the S^3GP. In: 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), 17–22 June 2006, pp. 230–237. New York, NY, USA: IEEE Computer Society.

49. Chen JCJ and Ji QJQ. 3D gaze estimation with a single camera without IR illumination. In: 19th International Conference on Pattern Recognition (ICPR) 2008, 8–11 December 2008, pp. 1–4. Tampa, FL: IEEE Computer Society.

50. Sigut J, Sidha SA. Iris center corneal reflection method for gaze tracking using visible light. IEEE Trans Biomed Eng 2011; 58: 411–419.

51. Baluja S and Pomerleau D. Non-intrusive gaze tracking using artificial neural networks. In: Cowan DJ, Tesauro G, Alspector J (eds) Advances in Neural Information Processing Systems 6, 7th NIPS Conference, Denver, Colorado, USA, 1993, pp. 753–760. Denver, Colorado, USA: Morgan Kaufmann.

52. Xu L-Q, Machin D and Sheppard P. A Novel Approach to Real-time Non-intrusive Gaze Finding. In: Carter JN and Nixon MS (eds) Proceedings of the British Machine Vision Conference 1998 (BMVC), pp. 428–437. Southampton, UK: British Machine Vision Association.

53. Krafka K, Khosla A, Kellnhofer P, et al. Eye Tracking for Everyone. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 27–30 June 2016, pp. 2176–2184. Las Vegas, NV, USA: IEEE Computer Society.

54. Hammoud RI. Passive eye monitoring: algorithms, applications and experiments. Heidelberg, Berlin: Springer, 2008.

55. Wade NJ. Image, eye, and retina (invited review). J Opt Soc Am A Opt Image Sci Vis 2007; 24: 1229–1249.

56. Giordaniello F, Cognolato M, Graziani M, et al. Megane pro: myo-electricity, visual and gaze tracking data acquisitions to improve hand prosthetics. In: International Conference on Rehabilitation Robotics (ICORR), 17–20 July 2017, pp. 1148–1153. London, UK: IEEE.

57. Holmqvist K, Nyström M and Mulvey F. Eye tracker data quality: what it is and how to measure it. In: Morimoto CH, Istance H, Spencer SN, et al. (eds) Proceedings of the Symposium on Eye Tracking Research and Applications (ETRA) 2012, 28–30 March 2012, pp. 45–52. Santa Barbara, CA, USA: ACM.

58. Andersson R, Nyström M, Holmqvist K. Sampling frequency and eye-tracking measures: how speed affects durations, latencies, and more. J Eye Mov Res 2010; 3: 1–12.

59. Dagnelie G (ed) Visual prosthetics: physiology, bioengineering and rehabilitation. Boston, MA: Springer, 2011.

60. Bentivoglio AR, Bressman SB, Cassetta E, et al. Analysis of blink rate patterns in normal subjects. Mov Disord 1997; 12: 1028–1034.

61. Milo R, Jorgensen P, Moran U, et al. BioNumbers: the database of key numbers in molecular and cell biology. Nucleic Acids Res 2009; 38: 750–753.

62. Nyström M, Andersson R, Holmqvist K, et al. The influence of calibration method and eye physiology on eyetracking data quality. Behav Res Methods 2013; 45: 272–288.

63. Evans KM, Jacobs RA, Tarduno JA, et al. Collecting and analyzing eye tracking data in outdoor environments. J Eye Mov Res 2012; 5: 6.

64. Kasprowski P, Harȩżlak K and Stasch M. Guidelines for the eye tracker calibration using points of regard. In: Piȩtka E, Kawa J, Wieclawek W (eds) Information Technologies in Biomedicine, Vol 4, pp. 225–236. Cham: Springer International Publishing.

65. Harezlak K, Kasprowski P, Stasch M. Towards accurate eye tracker calibration – methods and procedures. Procedia Comput Sci 2014; 35: 1073–1081.

66. Villanueva A and Cabeza R. A novel gaze estimation system with one calibration point. IEEE Trans Syst Man Cybern Part B 2008; 38: 1123–1138.

67. Ramanauskas N. Calibration of video-oculographical eye-tracking system. Elektron ir Elektrotechnika 2006; 72: 65–68.