Abstract

The FASSEG repository is composed of four subsets containing face images useful for training and testing automatic face segmentation methods. Three subsets, namely frontal01, frontal02, and frontal03, are specifically built for frontal face segmentation. Frontal01 contains 70 original RGB images and the corresponding roughly labelled ground-truth masks. Frontal02 contains the same image data, with high-precision labelled ground-truth masks. Frontal03 consists of 150 annotated face masks of twins captured in various orientations, illumination conditions and facial expressions. The last subset, namely multipose01, contains more than 200 faces in multiple poses and the corresponding ground-truth masks. For all face images, ground-truth masks are labelled on six classes (mouth, nose, eyes, hair, skin, and background).

Specifications Table

| Item | Details |
|---|---|
| Subject area | Computer Science, Signal Processing |
| More specific subject area | Image Processing, Face Segmentation |
| Type of data | Images |
| How data was acquired | Original images are taken from existing public databases (MIT-CBCL, FEI, POINTING ’04, and SIBLINGS face databases). Ground-truth masks were produced manually with a commercial raster graphics editor. |
| Data format | RGB and JPG |
| Experimental factors | Camera illumination, background colour, subject variety |
| Experimental features | Facial features (mouth, nose, eyes, hair, skin) |
| Data source location | University of Brescia, Department of Information Engineering, Brescia, Italy (latitude 45.564664, longitude 10.231660) |
| Data accessibility | Public. Benini, Sergio; Khan, Khalil; Leonardi, Riccardo; Mauro, Massimo; Migliorati, Pierangelo (2019), “FASSEG: a FAce Semantic SEGmentation repository for face image analysis (v2019)”, Mendeley Data, v1, https://doi.org/10.17632/sv7ns5xv7f.1 |
| Related research article | Sergio Benini, Khalil Khan, Massimo Mauro, Riccardo Leonardi, Pierangelo Migliorati, “Face analysis through semantic face segmentation”, Signal Processing: Image Communication, vol. 74, pp. 21–31, May 2019 (available online since 18 January 2019), https://doi.org/10.1016/j.image.2019.01.005 |

Value of the data


1. Data

The FAce Semantic SEGmentation (FASSEG) repository contains more than 500 original face images and the related manually annotated segmentation masks on six classes, namely mouth, nose, eyes, hair, skin, and background. The repository is composed of four subsets containing face images useful for training and testing automatic face segmentation methods. Three subsets, namely frontal01, frontal02, and frontal03, are specifically built for frontal face segmentation. The fourth subset, namely multipose01, contains labelled faces in multiple poses.
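To use the masks for training, each mask pixel has to be turned into one of the six class indices. The following is a minimal sketch, assuming the classes are colour-coded in the mask images. The RGB values in `PALETTE` are hypothetical placeholders, not the actual FASSEG colour coding, which should be read from the masks themselves. Each pixel is assigned to the class with the nearest palette colour (squared Euclidean distance), so small colour deviations, for example from JPG compression, still map to a class. Masks are represented as plain lists of rows of `(R, G, B)` tuples to keep the example dependency-free.

```python
from collections import Counter

# Class order follows the text: mouth, nose, eyes, hair, skin, background.
CLASSES = ("mouth", "nose", "eyes", "hair", "skin", "background")

# HYPOTHETICAL colour coding: replace with the colours actually used in the masks.
PALETTE = {
    (0, 255, 0): 0,    # mouth
    (0, 255, 255): 1,  # nose
    (0, 0, 127): 2,    # eyes
    (127, 0, 0): 3,    # hair
    (255, 255, 0): 4,  # skin
    (0, 0, 255): 5,    # background
}


def nearest_class(pixel):
    """Return the class index whose palette colour is closest to `pixel`."""
    colour, index = min(
        PALETTE.items(),
        key=lambda item: sum((a - b) ** 2 for a, b in zip(item[0], pixel)),
    )
    return index


def mask_to_labels(mask):
    """Convert rows of (R, G, B) tuples into rows of class indices."""
    return [[nearest_class(px) for px in row] for row in mask]


def class_histogram(labels):
    """Count how many pixels fall into each named class."""
    counts = Counter(idx for row in labels for idx in row)
    return {name: counts.get(i, 0) for i, name in enumerate(CLASSES)}


if __name__ == "__main__":
    # A 2x2 toy mask with slightly perturbed colours.
    mask = [[(0, 0, 250), (250, 250, 5)], [(128, 2, 0), (0, 250, 0)]]
    labels = mask_to_labels(mask)
    print(labels)  # [[5, 4], [3, 0]]
    print(class_histogram(labels))
```

With real files, the pixel rows would come from an image library; only the palette lookup above is specific to the six-class labelling.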

The subset frontal01 contains 70 original RGB images and the corresponding roughly labelled ground-truth masks. Original faces are mainly taken from the MIT-CBCL [1] and FEI [2] datasets. Images are organized in two folders, train and test, matching the split adopted in our previous work [3], in which we used this subset.

The subset frontal02 contains the same original images as frontal01, but with higher-precision labelled ground-truth masks. Images are organized in two folders, train and test, matching the split adopted in our previous works [5], [6], in which we used this subset.

Fig. 1 shows an example subject from these first two subsets: (from left to right) the original image, the related roughly labelled mask in frontal01, and the high-precision mask in frontal02.

Fig. 1.

From left to right: the original image, the related roughly labelled mask in frontal01, and the high-precision mask in frontal02.

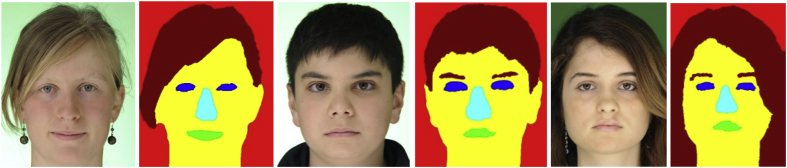

The third frontal subset, frontal03, contains 150 manually labelled ground-truth images. The original images (in JPG format), captured in various orientations, illumination conditions and facial expressions, are taken from the SIBLINGS database (HQ-f subset) [7]. Fig. 2 shows three subjects from the frontal03 subset. This subset has not been used in any prior work.

Fig. 2.

Examples of three original images and related segmentation masks taken from subset frontal03.

The last subset, multipose01, contains 294 manually labelled ground-truth images covering 13 different poses taken at steps of 15 degrees. Original faces are taken from the POINTING ’04 database [8]. We used this subset in our works [4], [6]. Images are organized in two folders, train and test, matching the split adopted in those papers. Fig. 3 shows one example from the multipose01 subset.
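As a quick check of the pose range, 13 poses at 15-degree steps span (13 - 1) × 15 = 180 degrees. Assuming the poses are horizontal (yaw) angles symmetric about the frontal view, they run from -90 to +90 degrees. The sketch below enumerates these angles and snaps an arbitrary angle to the nearest pose, as one might do when treating pose estimation as a 13-class problem; the function names `pose_angles` and `nearest_pose` are hypothetical.

```python
def pose_angles(n_poses=13, step_deg=15):
    """Yaw angles in degrees, assumed symmetric around the frontal pose (0)."""
    if n_poses % 2 == 0:
        raise ValueError("a set symmetric around 0 degrees needs an odd number of poses")
    half = n_poses // 2
    return [step_deg * k for k in range(-half, half + 1)]


def nearest_pose(angle_deg, poses):
    """Snap an arbitrary angle to the closest discrete pose (first one wins on ties)."""
    return min(poses, key=lambda p: abs(p - angle_deg))


if __name__ == "__main__":
    poses = pose_angles()
    print(poses)                     # [-90, -75, ..., 75, 90]
    print(nearest_pose(37, poses))   # 30
```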

Fig. 3.

Example of an original image and the related segmentation mask from the multipose01 subset.

2. Experimental design, materials, and methods

We collected all original images from already existing databases publicly available for research purposes: the MIT-CBCL [1], FEI [2], POINTING’04 [8], and SIBLINGS HQ-f [7] datasets.

These databases have previously been used for different face analysis tasks, such as face recognition, gender classification, head pose estimation, and facial expression recognition. Although all of them were captured indoors in controlled laboratory environments, image quality differs considerably across datasets because of the different cameras and acquisition conditions used.

Images from MIT-CBCL [1] and SIBLINGS HQ-f [7] are high-resolution, high-quality images.

All images in these datasets show neutral facial expressions (and no facial hair in the case of male subjects). The background of all images is flat and of a single colour. None of the subjects in these databases wears sunglasses or facial makeup.

POINTING’04 [8], previously used only for head pose estimation, is a more complex dataset than MIT-CBCL [1] and SIBLINGS HQ-f [7]. Its images are low-resolution and were captured in low-light indoor laboratory conditions. Approximately half of the subjects (7 out of 15) wear eyeglasses, and some subjects have facial hair such as beards or moustaches.

Finally, FEI [2] is a database previously used for gender recognition and facial expression recognition. The dataset is equally split between male and female subjects aged 19 to 40 years. The background of the images is flat white.

In a nutshell, our repository includes a large variety of both high- and low-resolution images, with different degrees of complexity in facial features, background, and illumination conditions.

All ground-truth masks for these images have been produced manually with a commercial raster graphics editor, without any automatic segmentation tool. Such segmentation depends on the subjective perception of the single annotator involved in the task. Hence it is very difficult to assign an accurate label to every pixel in the image, particularly in the boundary regions between different face parts. For example, distinguishing the nose region from the surrounding skin and drawing a boundary between the two is very difficult.
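Since annotation uncertainty concentrates at class boundaries, one way to compare two labellings of the same face (for instance a roughly labelled frontal01 mask and its frontal02 counterpart) is to measure pixel agreement both with and without boundary pixels. The sketch below only illustrates that idea; it is not the evaluation protocol used in [3], [4], [5], [6]. It assumes label maps are lists of rows of class indices and, as an illustrative choice, defines a boundary pixel as one with at least one 4-neighbour of a different class.

```python
def boundary_mask(labels):
    """Flag pixels that have at least one 4-neighbour with a different class."""
    h, w = len(labels), len(labels[0])
    out = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and labels[ny][nx] != labels[y][x]:
                    out[y][x] = True
                    break
    return out


def agreement(a, b, ignore=None):
    """Fraction of pixels where two label maps agree, skipping pixels flagged in `ignore`."""
    total = same = 0
    for y, (row_a, row_b) in enumerate(zip(a, b)):
        for x, (la, lb) in enumerate(zip(row_a, row_b)):
            if ignore is not None and ignore[y][x]:
                continue
            total += 1
            same += la == lb
    return same / total if total else float("nan")


if __name__ == "__main__":
    precise = [[0, 0, 1], [0, 0, 1], [0, 0, 1]]
    rough = [[0, 1, 1], [0, 0, 1], [0, 0, 1]]  # differs at one boundary pixel
    print(agreement(rough, precise))                                   # 8 of 9 pixels agree
    print(agreement(rough, precise, ignore=boundary_mask(precise)))    # 1.0
```

The gap between the two scores gives a rough indication of how much of the disagreement lies on class boundaries rather than inside face regions.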

Acknowledgments

None.

Footnotes

Transparency document associated with this article can be found in the online version at https://doi.org/10.1016/j.dib.2019.103881.

Contributor Information

Sergio Benini, Email: sergio.benini@unibs.it.

Khalil Khan, Email: khalil.khan@ajku.edu.pk.

Riccardo Leonardi, Email: riccardo.leonardi@unibs.it.

Massimo Mauro, Email: massimo.mauro@unibs.it.

Pierangelo Migliorati, Email: pierangelo.migliorati@unibs.it.


References

- 1. MIT Center for Biological and Computational Learning (CBCL). MIT-CBCL database. http://cbcl.mit.edu/software-datasets/FaceData2.html.

- 2. Centro Universitario da FEI. FEI database. http://www.fei.edu.br/~cet/facedatabase.html.

- 3. Khan Khalil, Mauro Massimo, Leonardi Riccardo. Multi-class semantic segmentation of faces. In: Proc. 2015 IEEE International Conference on Image Processing (ICIP 2015), 2015, pp. 827–831.

- 4. Khan Khalil, Mauro Massimo, Migliorati Pierangelo, Leonardi Riccardo. Head pose estimation through multi-class face segmentation. In: Proc. IEEE International Conference on Multimedia and Expo (ICME 2017), 2017, pp. 253–258.

- 5. Khan Khalil, Mauro Massimo, Migliorati Pierangelo, Leonardi Riccardo. Gender and expression analysis based on semantic face segmentation. In: Proc. Image Analysis and Processing (ICIAP 2017), Catania, Italy, September 11–15, 2017.

- 6. Benini Sergio, Khan Khalil, Mauro Massimo, Leonardi Riccardo, Migliorati Pierangelo. Face analysis through semantic face segmentation. Signal Process. Image Commun. 74 (May 2019) 21–31. (Related research article.)

- 7. Vieira Tiago F., Bottino Andrea, Laurentini Aldo, De Simone Matteo. Detecting siblings in image pairs. Vis. Comput. 30(12) (2014) 1333–1345.

- 8. Gourier Nicolas, Hall Daniela, Crowley James L. Estimating face orientation from robust detection of salient facial structures. In: FG Net Workshop on Visual Observation of Deictic Gestures, FGnet (IST–2000–26434), Cambridge, UK, 2004, pp. 1–9.
