Abstract

Intertemporal decision-making involves simultaneous evaluation of both the magnitude and delay to reward, which may require the integrated representation and comparison of these dimensions within working memory (WM). In the current study, neural activation associated with intertemporal decision-making was directly compared with WM load-related activation. During functional magnetic resonance imaging, participants performed an intermixed series of WM trials and intertemporal decision-making trials both varying in load, with the latter in terms of choice difficulty, via options tailored to each participant's subjective value function for delayed rewards. The right anterior prefrontal cortex (aPFC) and dorsolateral prefrontal cortex (dlPFC) showed activity modulation by choice difficulty within WM-related brain regions. In aPFC, these 2 effects (WM, choice difficulty) correlated across individuals. In dlPFC, activation increased with choice difficulty primarily in patient (self-controlled) individuals, and moreover was strongest when the delayed reward was chosen on the most difficult trials. Finally, the choice-difficulty effects in dlPFC and aPFC were correlated across individuals, suggesting a functional relationship between the 2 regions. Together, these results suggest a more precise account of the relationship between WM and intertemporal decision-making that is specifically tied to choice difficulty, and involves the coordinated activation of a lateral PFC circuit supporting successful self-control.

Keywords: cognitive control, fMRI, impulsivity, self-control, temporal discounting

Introduction

Everyday decision-making includes deciding among options that differ along multiple dimensions such as reward amount, probability of reward, and delay to reward (Keeney and Raiffa 1993; Green and Myerson 2004). Intertemporal decision-making (deciding between smaller-sooner and larger-later rewards) requires the simultaneous evaluation of reward-related information on 2 dimensions, the reward amount and delay (Mischel et al. 1989; Rachlin 1989; Frederick et al. 2002; Green and Myerson 2004; Ainslie 2005; Glimcher 2009). Because of the integrative nature of these valuations, intertemporal decision-making may depend upon actively maintained representations of choice dimensions (delay and amount) such that these can be appropriately combined and compared across options.

Integration and active maintenance of goal-relevant information are 2 functions typically ascribed to lateral frontoparietal brain regions. In particular, the anterior prefrontal cortex (aPFC) and dorsolateral prefrontal cortex (dlPFC) are widely thought to act in concert to enable the maintenance and integration of highly abstract goal-relevant information within working memory (WM), in the service of attaining behavioral goals (Goldman-Rakic 1987; Miller and Cohen 2001; Braver and Bongiolatti 2002; Ramnani and Owen 2004; Botvinick 2008; Sakai 2008; Badre et al. 2009). The prior literature has provided consistent support for the roles of aPFC and dlPFC in intertemporal decision-making (McClure et al. 2004, 2007; Shamosh et al. 2008; Carter et al. 2010). Nevertheless, there has been less agreement as to exactly how these 2 regions contribute to decision-making in this domain. In the current work, we test the specific hypothesis that aPFC and dlPFC are centrally important for integration of choice dimensions in intertemporal decision-making, and the specific prediction that their recruitment during difficult WM tasks anticipates intertemporal choice patterns, particularly when decisions are difficult (i.e. choice options are close in subjective value, SV).

Research on intertemporal decision-making has contrasted self-controlled and impulsive choices, as indexed by the degree to which a decision-maker discounts the value of a delayed reward, and has explored this contrast in a range of choice paradigms (Mischel and Metzner 1962; Mischel et al. 1989; Rachlin et al. 1991; Frederick et al. 2002; Ainslie 2005; Berns et al. 2007; Shamosh and Gray 2008; Monterosso and Luo 2010; Scheres et al. 2013). Individuals who steeply discount delayed rewards are characterized as impulsive because they tend to prefer smaller-sooner to larger-later rewards. By contrast, individuals who discount delayed rewards shallowly are characterized as patient, in that they tend to prefer larger-later rewards. Moreover, this patient form of decision-making is thought to depend upon self-control, which is needed to override the impulsive tendency to choose the sooner reward, particularly when it is available immediately (Kirby et al. 1999; Baker et al. 2003; Kable and Glimcher 2007).

A number of influential theoretical accounts have postulated a direct role for the lateral PFC in enabling self-control in intertemporal decision-making, though they differ in emphasis. In particular, one account posits that lateral frontoparietal regions provide a separate mechanism for the selective evaluation of delayed (as opposed to immediate) rewards, enabling evaluation with regard to long-term goals and future planning (McClure et al. 2004, 2007; van den Bos and McClure 2013). A second account posits a unified valuation system for both delayed and immediate rewards (Kable and Glimcher 2007), but suggests that lateral prefrontal control mechanisms can intervene to override an immediate reward bias in favor of a delayed reward, when the latter choice might satisfy a higher order goal (Hare et al. 2014). The third account has emphasized the WM demands of intertemporal decision-making, and posited a role in promoting preference stability, whereby lateral PFC regions are critical for integration and related control operations applied to actively maintained choice dimensions (Hinson et al. 2003; Shamosh et al. 2008; Bickel et al. 2011; Wesley and Bickel 2014).

To date, these different theoretical accounts of the functional role of lateral PFC regions during intertemporal decision-making have not been reconciled. The third account, emphasizing WM functions, is related to alternative accounts that emphasize the role of cognitive control (e.g. Figner et al. 2010), and thus generalize to a wider variety of decision-making contexts. However, the relationship between the role of lateral PFC in WM and intertemporal decision-making, although frequently invoked, is still poorly understood. In part, this is because the relationship has only been investigated indirectly (e.g. Shamosh et al. 2008; Aranovich et al. 2016). To our knowledge, no study has directly compared lateral PFC activity during WM tasks and intertemporal decision-making on a within-subject basis. A second issue is that lateral PFC regions may be important for intertemporal decision-making primarily under conditions in which the choice is a difficult one, such as when the 2 reward options are close in SV. For example, McClure et al. (2004) found greater activity in lateral PFC on difficult choice trials, whereas Figner et al. (2010) found that disruption of lateral PFC by transcranial magnetic stimulation reduced choice of delayed rewards, particularly when contrasted against a more tempting immediate (rather than a less delayed) reward. Thus, it is critical to experimentally manipulate choice difficulty during intertemporal decision-making, to determine the importance of this factor in moderating the relationship between lateral PFC activity and self-control.

Yet systematic manipulations, or even analyses, of choice-difficulty effects are rarely a central focus in studies of value-based decision-making (Monterosso et al. 2007; Pine et al. 2009; Kable and Glimcher 2010). However, in recent work, it has been observed that multiple cortical and subcortical regions, including dlPFC, are engaged by intertemporal choice situations in which the relative SV differences between choice options are small; moreover, dlPFC activity was increased on trials in which the delayed larger reward was chosen (Hare et al. 2014). Further, strong functional connectivity has been observed between dlPFC and the ventral striatum during intertemporal decision-making, particularly in self-controlled individuals (van den Bos et al. 2014). These results support the idea that the lateral PFC plays a functionally important role in intertemporal choice, in guiding patient (i.e. self-controlled) decisions (Figner et al. 2010).

In the current study, we utilized a novel experimental design that enabled direct, within-subject examination of the relationship of both WM and choice difficulty to lateral PFC activity during intertemporal decision-making. Specifically, participants performed independent and intermixed WM and intertemporal decision-making tasks, during which difficulty was manipulated for each condition (i.e. by load in the WM task, and by the proximity in SVs between alternatives in the decision-making task). Thus, we were able to directly identify lateral PFC regions engaged by intertemporal choice difficulty within the brain regions associated with WM load-related difficulty. Restricting our analyses to these regions meaningfully constrained inferences regarding shared mechanisms.

A second key design feature was to tailor intertemporal choice difficulty to individuals’ delay discounting profiles, estimated prior to functional magnetic resonance imaging (fMRI) scanning. This systematic manipulation provided an effective means of relating lateral PFC activity to difficulty-related WM/cognitive control processes, and to individual differences in self-control, while equating the choice-difficulty manipulation across participants. Specifically, for difficult trials, because options were close in SV, elaborated comparison was required, placing greater demands on relevant control processes that might be engaged under such conditions, such as integration of choice dimensions in WM, and attentional focusing/switching among choice options. Conversely, when options were farther apart, elaborated comparison was unnecessary. Our design thus allowed us to test the specific prediction that individual differences in self-control during intertemporal decision-making would interact with choice difficulty, such that individuals high in self-control would most effectively increase lateral PFC activity as difficulty increased.

Materials and Methods

Participants

The sample consisted of 41 participants (21 females; 20 males; mean age 23.2; age range 18–35). All were healthy, neurologically normal young adults, who fulfilled the MRI screening criteria and provided written, informed consent in accordance with Washington University Human Research Protection Office. Additionally, all had participated in a prior experiment (Jimura et al. 2013). Of the 41 participants who completed the study, 1 was excluded from analyses due to poor WM task performance, and 3 others were excluded because they did not exhibit any evidence of delay discounting (i.e. choice preference did not change depending on delay length).

Tasks

Intertemporal decision-making and WM tasks were performed in the same experimental session as a previously reported task involving intertemporal decision-making for liquid rewards (Jimura et al. 2013). We exclude data from the liquid task here. The 3 tasks were interleaved, with the WM and intertemporal decision-making trials presented pseudo-randomly during the intertrial interval (ITI) of the liquid task (Jimura et al. 2011, 2013). Each ITI involved 2 intertemporal decision-making trials and 2 WM trials, with the task precued by a visual instruction (“MONEY” or “MEMORY”; 2 s) followed by a fixation cross (1-s interstimulus interval).

Intertemporal decision-making task

On each trial, 2 alternatives were simultaneously presented on the screen: a larger reward available after a specified delay, and a smaller reward available immediately (Fig. 1A). Participants were instructed to think of the alternatives as if they were real and to choose the alternative they preferred. Alternatives were presented on the left and right sides of a central fixation point, and their positions varied randomly from trial to trial. Participants indicated their preference by pressing the corresponding button. Across trials, the delayed reward was varied in both amount and delay, and the immediate reward amount was varied systematically in relation to the delayed reward, as described further below.

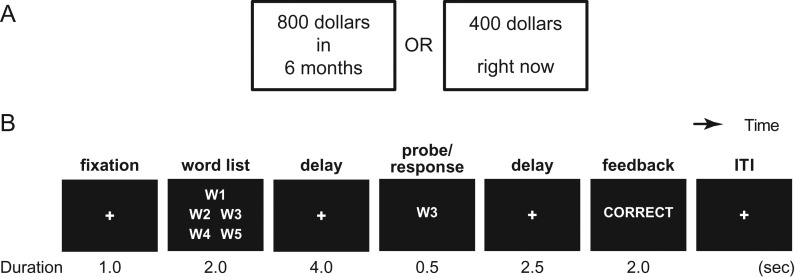

Figure 1.

Behavioral tasks. (A) Participants made intertemporal choices between delayed large and immediate small monetary rewards. (B) They also performed a Sternberg-type WM task.

WM task

Participants also performed a Sternberg-type WM task (Sternberg 1966; Beck et al. 2010; Jimura et al. 2010; Fig. 1B). At the beginning of each trial, a list of words was presented for memorization followed by a retention interval. After this interval, a probe word was presented, prompting participants to decide whether it appeared in the preceding list. Participants were instructed to respond as quickly and accurately as possible and received feedback following their response (correct, incorrect, and too slow). Across trials, the list length was either 2 (low-load) or 5 (high-load) words.

Word stimuli for the WM task were randomly selected from a list of 1100 emotionally neutral words from the English Lexicon Project at Washington University (Balota et al. 2007; http://elexicon.wustl.edu/). These words included nouns, adjectives, and verbs, but no adverbs or plural nouns. Each word consisted of 1 or 2 syllables [1.44 ± 0.81 (mean ± SD)], and 4–6 letters (5.0 ± 0.81). The word frequency in the corpus was 9.62 ± 1.04 (log-transformed; mean ± SD) based on the Hyperspace Analog to Language (Lund and Burgess 1996). No words were presented more than once through the 2 sessions.

Procedure

The study consisted of 2 experimental sessions, behavioral and fMRI scanning, administered at least 1 week apart. The tasks were identical in these 2 sessions, except in terms of trial timing, and for intertemporal decision-making, which delay durations were used and how immediate reward amounts were varied. The purpose of the first behavioral session was to characterize individual differences, by estimating each participant's monetary delay discounting rate. Prior work has demonstrated that discounting behavior in this task is stable across sessions, suggesting a potential trait-like characteristic (Kable and Glimcher 2007; Kirby 2009; Jimura et al. 2011). The results of the behavioral session were used to compute individual delay discounting to optimize the amount of immediate reward in the fMRI session. This allowed us to systematically manipulate choice difficulty during the intertemporal decision-making task performed during fMRI scanning (see below).

Behavioral Session

The behavioral procedure was identical to that of our prior experiments and is described in greater detail elsewhere (Jimura et al. 2009, 2011, 2013). Five different delay conditions (1 week, 1 month, 6 months, 1 year, and 3 years) were used, and participants completed a total of 30 decision-making trials (5 delays × 2 amounts × 3 choices). The order of the delay and amount conditions was pseudorandom, with the constraint that participants made their first choice at each of the 5 delays before going on to make their second choice at each delay.

On the first trial of each delay/amount condition, all participants chose between an immediate $400 reward and a delayed $800 reward in the large reward condition (or between a $20 immediate reward and a $40 delayed reward in the small reward condition). The reward amounts in subsequent trials were adjusted depending on the alternative the participant had selected. Specifically, on the second trial in a delay/amount condition, the immediate reward was increased or decreased by $200 in the large ($10 in the small) amount condition, and on the third trial by $100 in the large ($5 in the small) amount condition. For each delay, the SV of the delayed reward (i.e. the amount of immediate reward equal in value to the delayed reward) was estimated to be $50 in the large ($2.5 in the small) amount condition more than the amount of immediate reward available on the third trial if the delayed reward had been chosen on that trial, and $50 in the large ($2.5 in the small) amount condition less than the amount of immediate reward available on the third trial if the immediate reward had been chosen on that trial.
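The adjusting-amount procedure above can be sketched in a few lines. This is only an illustrative sketch, assuming three choices per delay/amount condition (the 5 × 2 × 3 design) and assuming that choosing the delayed reward raises the next immediate offer while choosing the immediate reward lowers it; the function name `estimate_subjective_value` and the choice labels are hypothetical.

```python
def estimate_subjective_value(choices, delayed_amount):
    """Estimate the SV of a delayed reward from successive choices.

    choices: iterable of "delayed" / "immediate", one entry per trial.
    delayed_amount: nominal delayed reward (e.g. 800 or 40 dollars).
    """
    immediate = delayed_amount / 2   # first offer: $400 vs $800, or $20 vs $40
    step = delayed_amount / 4        # first adjustment: $200 or $10
    for choice in choices:
        if choice == "delayed":
            immediate += step        # assumed direction: raise the immediate offer
        elif choice == "immediate":
            immediate -= step        # assumed direction: lower the immediate offer
        else:
            raise ValueError(f"unknown choice: {choice!r}")
        step /= 2                    # $200 -> $100 -> $50 (or $10 -> $5 -> $2.5)
    # After the last choice, the final half-step has been applied,
    # so `immediate` now holds the estimated SV.
    return immediate
```

For instance, a participant who always chose the delayed $800 reward would receive an estimated SV of $750 under these assumptions.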

WM task trials (both high = 5 and low = 2 loads) were also intermixed during this behavioral session, to allow participants to gain familiarity with the intermixed trial structure, which was also employed during the fMRI scanning session (see below). The WM task during the behavioral session was identical to that performed during the fMRI session, with the exception that 2 different word sets were used. Data from WM task performance in the behavioral session were not analyzed.

Imaging Session

During fMRI scanning of the intertemporal decision-making task, 3 delay conditions (1 month, 6 months, and 1 year), and 2 delayed amount conditions ($40 and $800) were used. Only the 3 middle duration delay conditions from the behavioral session were selected, because for the shortest and longest delay conditions (1 week and 3 years), the estimated SV of the delayed reward was either too small or too large on average to be easily included in the choice-difficulty manipulations described next.

The choice-difficulty manipulation was the most critical aspect of the task procedure during the imaging session. Choice difficulty was systematically manipulated across trials by adjusting the amount of immediate reward presented on each trial. The adjustment was based on the SV of the delayed reward for each participant, as estimated from the behavioral session. More specifically, the amount of immediate reward was set by adding 2.5%, 5.0%, or 10.0% to, or subtracting it from, the SV of the delayed reward. It was assumed that individuals would experience greater difficulty when choosing an alternative in trials where the choices were close in SV (i.e. within 2.5% of each other) relative to trials in which one option was considerably more lucrative (i.e. one is 10% greater than the other). Therefore, trials were labeled as easy (ESY; ±10.0%), medium (MED; ±5.0%), or difficult (DIF; ±2.5%), creating 3 difficulty conditions based on the difference in SV between the 2 alternatives. Critically, difficulty and immediate reward amount were crossed orthogonally, so that difficulty effects could be isolated from the affective consequences of reward amount, and therefore from whether a trial biased the participant toward the larger-later or the smaller-sooner reward (cf. Jimura et al. 2013). Each scanning run involved 12 decision-making trials, and a total of 3 or 4 scanning runs were administered, depending on satiety from the liquid reward consumed during the session (cf. Jimura et al. 2013); this yielded at least 2 trials for each of the 3 delay × 2 amount × 3 difficulty conditions. Each trial started after a 2-s warning cue and a 1-s cue-trial interval, and the choices were presented until the participant responded.
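As a rough illustration of this manipulation, the sketch below derives the pair of immediate offers for each difficulty level from a participant's estimated SV. It assumes the percentages are taken relative to the SV itself; the names `DIFFICULTY_OFFSETS` and `immediate_offers` are hypothetical and not part of the original procedure.

```python
# Proportional offsets from the SV for each difficulty condition.
DIFFICULTY_OFFSETS = {"ESY": 0.10, "MED": 0.05, "DIF": 0.025}


def immediate_offers(subjective_value):
    """Return {condition: (offer_below_sv, offer_above_sv)}.

    The lower offer should favor the delayed reward and the higher offer
    the immediate reward, so the sign of the offset is crossed with difficulty.
    """
    return {
        label: (subjective_value * (1 - offset), subjective_value * (1 + offset))
        for label, offset in DIFFICULTY_OFFSETS.items()
    }
```

With an SV of $400, for example, the difficult condition would offer roughly $390 or $410 immediately, whereas the easy condition would offer roughly $360 or $440.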

For the WM task, high- and low-load trials were randomly intermixed with equal frequency. The trial started after a 2-s warning cue and 1-s cue-trial interval, with each trial lasting 12-s and consisting of the following events: 2-s presentation of a word list, 4-s delay, 0.5-s probe presentation, 2.5-s probe-feedback interval, and 2.0-s feedback presentation (Fig. 1B). Each scanning run involved 12 WM trials (6 trials for each of the 2 load conditions).

fMRI Procedures

Scanning was conducted on a whole-body Siemens 3 T Trio System (Erlangen, Germany). A pillow and tape were used to minimize head movement in the head coil. Headphones dampened scanner noise and enabled communication with participants. Both anatomical and functional images were acquired from each participant. High-resolution anatomical images were acquired using an MP-RAGE T1-weighted sequence [repetition time (TR) = 9.7 ms; echo time (TE) = 4.0 ms; flip angle (FA) = 10°; slice thickness = 1 mm; in-plane resolution = 1 × 1 mm²]. Functional (BOLD, blood oxygen level dependent) images were acquired using an asymmetric spin-echo echo-planar imaging sequence (TR = 2.0 s; TE = 27 ms; FA = 90°; slice thickness = 4 mm; in-plane resolution = 4 × 4 mm²; 34 slices) parallel to the anterior–posterior commissure line, allowing complete brain coverage at a high signal-to-noise ratio. Each functional run involved 512 volume acquisitions.

Behavioral Analysis

Individual differences in delay discounting rates were quantified by the Area under the Curve (AuC) of the discounting function obtained for each participant (Myerson et al. 2001; Sellitto et al. 2010; Jimura et al. 2011, 2013), using the large reward amount condition. The AuC reflects the average SVs of reward, calculated across all delays for a given participant. More specifically, the AuC is calculated as the sum of the trapezoidal areas under the indifference points normalized by the amount and delay (Myerson et al. 2001).

The AuC was normalized by the amount of the delayed reward (standard amount) and the longest delay length. Theoretically, AuC values range between 1.0 (reflecting maximally shallow, i.e. no discounting) and 0.0 (reflecting maximally steep discounting; i.e. SV dropping to zero with any delay), though in practice, the latter value is almost never obtained. It has been argued that the AuC is the best measure of delay discounting to use for individual difference analyses because it is theoretically neutral (i.e. assumption free) and psychometrically reliable (Myerson et al. 2001). The AuC was calculated solely based on choices made during the behavioral session.
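The trapezoidal calculation can be sketched as follows. This is a minimal sketch, assuming that the curve is anchored at zero delay with a normalized value of 1.0 and that delays are expressed in a common unit (days here); the function name `area_under_curve` is hypothetical.

```python
def area_under_curve(delays, subjective_values, amount):
    """Normalized area under the discounting curve (0.0 to 1.0).

    delays: delay lengths in a common unit (e.g. days).
    subjective_values: estimated SV at each delay, in dollars.
    amount: nominal amount of the delayed reward, in dollars.
    """
    if len(delays) != len(subjective_values):
        raise ValueError("delays and subjective_values must have equal length")
    max_delay = max(delays)
    points = sorted(zip(delays, subjective_values))
    # Assumed anchor point: zero delay, undiscounted value.
    xs = [0.0] + [d / max_delay for d, _ in points]
    ys = [1.0] + [sv / amount for _, sv in points]
    area = 0.0
    for i in range(1, len(xs)):
        # Trapezoid between successive normalized delays.
        area += (xs[i] - xs[i - 1]) * (ys[i] + ys[i - 1]) / 2
    return area
```

Under these assumptions, a participant whose SV equals the nominal amount at every delay has an AuC of 1.0, and steeper discounting pulls the value toward 0.0.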

WM performance was assessed separately for the 2-item and 5-item load conditions, by calculating mean accuracy and reaction time for each participant.

Imaging Analysis

All functional images were first temporally aligned across the brain volume, corrected for movement using a rigid-body rotation and translation correction, and then registered to the participant's anatomical images in order to correct for movement between the anatomical and functional scans. The data were then intensity normalized to a fixed value, resampled into 3-mm isotropic voxels, and spatially smoothed with a 9-mm full width at half maximum Gaussian kernel. Participants’ anatomical images were transformed into standardized Talairach atlas space (Talairach and Tournoux 1988) using a 12-parameter affine transformation. The functional images were then registered to the reference brain using the alignment parameters derived for the anatomical scans.

A general-linear model (GLM) approach was used to estimate task events and parametric effects. For intertemporal decision-making trials, the trial event was time-locked to initiate with offer presentation and lasted until the participants’ response. Trial events were then convolved with a double-gamma hemodynamic response function. Parametric trial effects were also modeled, including 1) choice-difficulty (ESY, MED, and DIF); 2) SV of the delayed reward (estimated for each subject at each delay and amount from their behavioral session, as in our prior work; Jimura et al. 2011); and 3) relative adjusted amount of the immediate reward. The first regressor was encoded as the absolute adjusted amount, that is, difficulty, whereas the third regressor was encoded as the signed version of the same values, ensuring that these 2 regressors (first and third) are orthogonal. The current study focuses on the choice-difficulty effect. The intertemporal choice trials for liquid rewards were also coded in GLMs similar to our prior reports (Jimura et al. 2013) as a nuisance effect. In a separate GLM, the choice (delayed and immediate) was also encoded as a final regressor.
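The orthogonality of the first and third parametric regressors can be checked with a small worked example. The sketch below assumes a balanced design with one trial per signed offset; the variable and function names are illustrative only and do not reflect the actual GLM software.

```python
# Signed adjustment of the immediate reward, in percent of SV (third regressor).
signed_offsets = [-10.0, -5.0, -2.5, 2.5, 5.0, 10.0]
# Absolute adjustment, i.e. the difficulty coding (first regressor).
difficulty = [abs(x) for x in signed_offsets]


def center(values):
    """Subtract the mean, as is typical for parametric modulators."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def dot(a, b):
    """Inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


# In a balanced design the two mean-centered regressors have
# an inner product of (numerically) zero.
inner_product = dot(center(difficulty), center(signed_offsets))
```

If the design were unbalanced (for example, more positive than negative offsets at one difficulty level), the inner product would generally no longer vanish.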

For the WM task, trial-related activation was estimated with a finite impulse response approach (i.e. unassumed event-related hemodynamic response shape; Ollinger et al. 2001; Serences 2004) because of the more complex trial structure and long-duration (12 s). Trials were estimated as 28-s epochs starting at the instruction cue onset (i.e. “MEMORY”) and coded by 15 time points (event-related regressors; see also Beck et al. 2010 and Jimura et al. 2010 for a similar approach) in order to cover the WM-trial-related hemodynamic epoch (as well as any post-trial recovery period). These 15 time points that covered the trial-related epoch were statistically independent and individually estimated in the GLM. The 2 WM-load conditions were estimated with separate sets of regressors. The WM-load effect was then defined as the difference in averaged parameter estimates across the 7–9th time points (i.e. 9–13 s after the word onset), which roughly corresponded to peak BOLD responses for the delay and probe period of the WM trials (after accounting for the hemodynamic lag; Beck et al. 2010; Jimura et al. 2010), contrasting between the high (5 word) and low (2 word) conditions.
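The definition of the WM-load effect amounts to a simple windowed difference, sketched below for a single voxel or ROI. The function name `wm_load_effect` and the list-based inputs are assumptions made for illustration; time points are counted from 1, as in the text.

```python
def wm_load_effect(high_betas, low_betas, first=7, last=9):
    """Difference of mean FIR estimates (high minus low load).

    high_betas, low_betas: one parameter estimate per FIR time point.
    first, last: inclusive, 1-indexed window of time points to average.
    """
    window = slice(first - 1, last)   # convert to 0-indexed, end-exclusive
    high = high_betas[window]
    low = low_betas[window]
    return sum(high) / len(high) - sum(low) / len(low)
```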

The current experiment also included intertemporal choice trials for real liquid reward (Jimura et al. 2013). This condition was encoded as in the previous report. The ITI of the current WM and money discounting task was variable, and ranged from 8 to 20 s (Jimura et al. 2011) providing sufficient data to estimate a baseline state.

The difficulty effect of the intertemporal choice task and the load effect of the WM task were then submitted to voxel-wise random-effects models for group analyses. We hypothesized that WM-related regions would also be involved in intertemporal choice, particularly for more difficult decisions. In order to test this hypothesis, a whole-brain exploratory analysis was first performed to extract brain regions that revealed the WM-load effect. Whole-brain beta contrast maps (high WM vs. low WM) were collected from individual subjects’ single-level GLM estimation, and then statistical testing was performed based on nonparametric permutation testing (5000 permutations) (Eklund et al. 2016) implemented in “randomise” in the FSL suite (Winkler et al. 2014; https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/Randomise). Clusterwise statistical correction was performed for voxel clusters defined by a cluster-forming threshold (P < 0.001, uncorrected). Clusters significant at P < 0.05, corrected for multiple comparisons, were used as a functional mask associated with WM load in the subsequent analyses for the intertemporal choice data.
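The logic of a one-sample permutation test can be illustrated with a sign-flipping sketch on a single set of per-subject contrast values. This is a simplified, one-sided illustration under the assumption of symmetric errors; it is not the FSL implementation and omits the cluster-forming and clusterwise correction steps. The function name `sign_flip_p_value` and the fixed seed are hypothetical.

```python
import random


def sign_flip_p_value(values, n_permutations=5000, seed=0):
    """One-sided permutation P value for mean(values) > 0.

    Each permutation randomly flips the sign of every subject's
    contrast value and recomputes the group mean.
    """
    rng = random.Random(seed)
    observed = sum(values) / len(values)
    exceed = 0
    for _ in range(n_permutations):
        flipped = [v if rng.random() < 0.5 else -v for v in values]
        if sum(flipped) / len(flipped) >= observed:
            exceed += 1
    # Count the observed labeling as one permutation to avoid P = 0.
    return (exceed + 1) / (n_permutations + 1)
```

In a whole-brain analysis the same relabeling would be applied to every voxel simultaneously, and the maximum cluster statistic per permutation would form the null distribution.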

For the intertemporal decision-making task, voxel clusters showing a choice-difficulty effect (P < 0.01, uncorrected) were first identified within the WM region-of-interest (ROI) mask created above. The use of the functional mask restricted the identification of choice-difficulty effects to voxel clusters located within WM ROIs. Then, these voxel clusters were assessed for significance at a threshold of P < 0.05, corrected for multiple comparisons within the WM ROI mask based on nonparametric permutation testing (Winkler et al. 2014; Eklund et al. 2016).

A number of individual difference analyses (i.e. cross-subject correlations) were conducted. The first examined the correlation between the WM-load effect and the choice-difficulty effect in identified ROIs. A second analysis explored the correlation between individual delay discounting rates and the choice-difficulty effect within WM-related regions. For this analysis, voxel-wise correlation coefficients between the AuC parameter and choice-difficulty effect were computed across participants. The significance of this correlation was then assessed using a threshold P < 0.05 corrected for multiple comparisons based on nonparametric permutation testing (Winkler et al. 2014; Eklund et al. 2016) within WM-related brain regions across the whole brain. Because AuC was estimated in the behavioral session, biases due to individual differences in choice behavior during the scanning session were minimized.

Results

Behavioral Results

We first analyzed participants’ choice behavior during the intertemporal decision-making task in the scanning session. In order to examine choice-difficulty effects, choice biases were defined as the percentage deviation of choice probability from chance level toward the option with greater SV. Participants showed significant choice biases in all conditions (Fig. 2A) [ESY: t(36) = 13.4, P < 0.001; MED: t(36) = 9.15, P < 0.001; DIF: t(36) = 7.38, P < 0.001 (binomial test)], indicating that choices were all biased toward the alternative with the greater SV. More importantly, a simple regression analysis of difficulty and bias revealed a significant trend, such that the bias decreased during more difficult trials [t(36) = −7.39, P < 0.001 (binomial test)], indicating that participants chose the option with greater SV to a greater degree in easy trials. Conversely, as difficulty increased, subjects became more equivocal, exhibiting weaker choice preferences.
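The choice-bias measure can be sketched as below. The sketch assumes that the bias is expressed in percentage points above the 50% chance level; the function name `choice_bias` and the boolean trial coding are hypothetical.

```python
def choice_bias(chose_greater_sv):
    """Percentage-point deviation from chance toward the greater-SV option.

    chose_greater_sv: one boolean per trial, True when the participant
    chose the alternative with the greater subjective value.
    """
    if not chose_greater_sv:
        raise ValueError("at least one trial is required")
    proportion = sum(chose_greater_sv) / len(chose_greater_sv)
    return 100.0 * (proportion - 0.5)
```

Under this coding, a bias of 0 corresponds to chance-level choice and a bias of 50 to always choosing the option with the greater SV.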

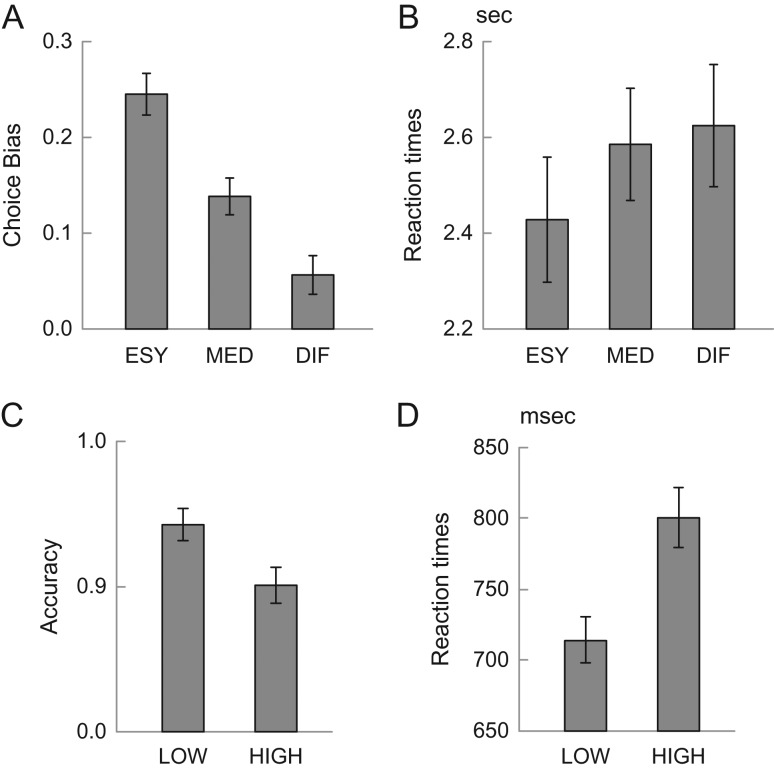

Figure 2.

Behavioral results. (A) Choice biases in the intertemporal decision task in the fMRI session. The choice biases are defined as the percentage deviation of choice probability from the chance level toward the choice with greater reward value. (B) Reaction times in intertemporal choice trials. ESY: easy; MED: middle; DIF: difficult. (C) Accuracy and (D) reaction times in WM trials. LOW: low load; HIGH: high load.

Accordingly, reaction times showed the opposite effect, with longer reaction times in more difficult trials (Fig. 2B). The slope of the increase in reaction times was also statistically significant [t(36) = 2.3, P < 0.05], indicating that participants were slower to make a choice in difficult trials; conversely, on easy trials, participants made their decision more quickly.

In WM trials, participants showed load effects in both accuracy (Fig. 2C) and reaction times (Fig. 2D), consistent with prior work (e.g. Sternberg 1966) [HIGH vs. LOW: accuracy: t(36) = −3.0, P < 0.01; reaction times: t(36) = 7.3, P < 0.001]. In this sample, WM behavioral effects did not significantly correlate with delay discounting, as indexed by AuC (accuracy: r = 0.11; RT: r = −0.17), although such effects have been observed in prior studies (e.g. Hinson et al. 2003; Shamosh et al. 2008).

Imaging Results

We first explored brain regions showing a WM-load effect (HIGH vs. LOW; see Methods for definition). The WM-load effect was observed bilaterally (Fig. 3A) in several broad areas including dorsolateral and aPFC, inferior frontal junctions, presupplementary motor area, anterior insula, temporo-parietal junction, and posterior parietal cortex, consistent with meta-analyses of fMRI WM studies (Owen et al. 2005; Rottschy et al. 2012; Nee et al. 2013).

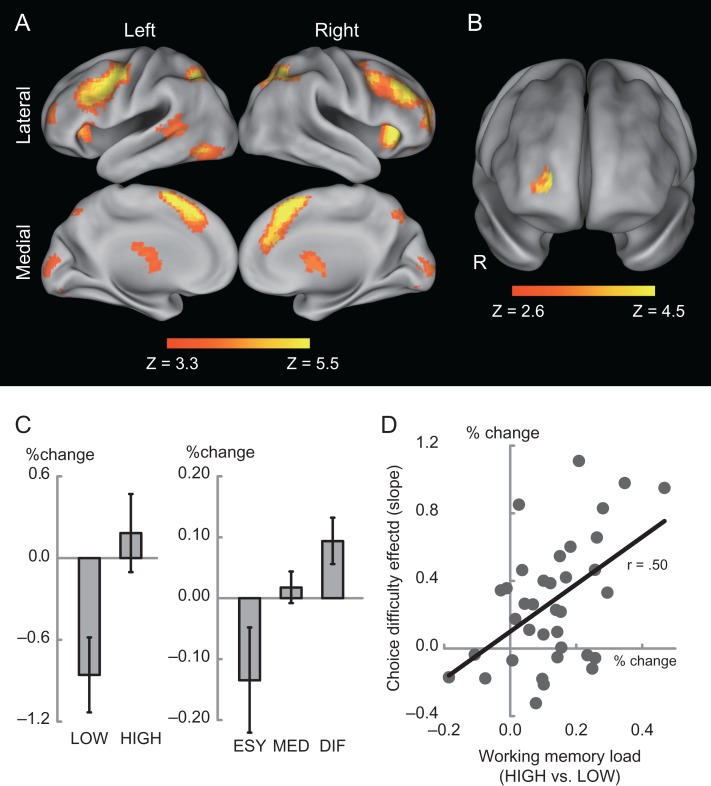

Figure 3.

(A) Statistical significance map of the contrast HIGH versus LOW in WM trials. Statistical maps were color coded and overlaid on the 3D standard anatomical template. Color indicates statistical level, as indicated in the color bar below. (B) Statistical significance map of the choice-difficulty effect in the intertemporal choice task. Format is similar to that in panel A. R, right. (C) Percentage signals in the aPFC for WM trials (left) and intertemporal choice trials (right). The horizontal and vertical axes indicate trial conditions and signal magnitude, respectively. Labels are identical to those in Figure 2. (D) Scatter plot of WM-load and choice-difficulty effects in aPFC. The vertical and horizontal axes indicate percentage signals for the WM-load effect and the choice-difficulty effect, respectively.

Next, these WM ROIs were explored to examine whether they were also involved in the monetary intertemporal decision-making task, in order to test the current hypothesis that WM-related regions are also related to choice difficulty. We first investigated whether choice difficulty would differentially recruit WM regions. A voxel cluster in the right aPFC showed greater activation in difficult trials [(25, 51, 6), 42 voxels, P < 0.05 corrected within WM ROIs across the whole brain; Fig. 3B], suggesting that this aPFC region is commonly involved in both high-WM load trials and more difficult intertemporal decision-making trials. The BOLD signal amplitude parameters demonstrate increased activation in both high-load WM trials and difficult intertemporal decision-making trials (Fig. 3C).

Importantly, individuals who exhibited greater choice-difficulty effects in the aPFC also demonstrated a higher WM-load effect in this region, as evidenced by a positive correlation between WM-load and choice-difficulty effects [r = 0.50, t(36) = 3.4, P < 0.01; Fig. 3D]. The WM-load effects and choice-difficulty effects are statistically independent, since they were estimated from separate tasks; as such, the between-subjects correlation is not biased by the identification procedure. Consequently, these results suggest a close functional relationship between intertemporal decision-making and WM, supporting the hypothesis that this aPFC region is engaged during both WM and intertemporal choice tasks to support the associated cognitive control processes linked to increased task difficulty in each condition.
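The link between a between-subjects correlation coefficient and its reported t statistic can be illustrated with a minimal Python sketch. It uses the standard conversion t = r·sqrt(df)/sqrt(1 − r²); the function name `t_from_r` is hypothetical and is not taken from the analysis pipeline. Because the reported r is rounded to two decimals, the reproduced t may differ slightly from the published value.

```python
import math


def t_from_r(r: float, df: int) -> float:
    """Convert a Pearson correlation r into a t statistic with df degrees of freedom.

    Standard textbook conversion, shown for illustration only; it is not
    claimed to be the code used for the reported analysis.
    """
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if df <= 0:
        raise ValueError("df must be positive")
    return r * math.sqrt(df) / math.sqrt(1.0 - r * r)


# Reported values for the aPFC correlation: r = 0.50 with 36 degrees of freedom.
# The input r is rounded, so the result is only approximate.
t_apfc = t_from_r(0.50, 36)
print(f"t = {t_apfc:.2f}")
```

A larger r, or more degrees of freedom at the same r, yields a larger t, which is why the same correlation strength can be more or less convincing depending on sample size.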

Next, we examined the relationship between individual differences in delay discounting and the choice-difficulty effect. Individual differences in delay discounting were indexed by AuC, a reliable measure of self-control in intertemporal decision-making (see Methods; Myerson et al. 2001; Shamosh et al. 2008; Sellitto et al. 2010; Jimura et al. 2011, 2013). Importantly, AuC was calculated on the basis of discounting behavior in the out-of-scanner behavioral session, and thus was independent of in-scanner behavior and brain activity. A between-subjects correlation was calculated between participants’ AuC and their average, voxel-wise choice-difficulty effects within the WM ROIs. A significant cluster was identified in the right dlPFC [(25, 30, 21), 58 voxels, P < 0.05 corrected within the WM-related ROIs across the whole brain; Fig. 4A]. A scatter plot demonstrates this correlation (Fig. 4B). The positive correlation indicates that self-controlled individuals activate dlPFC to a greater degree in difficult versus easy intertemporal choice trials.
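As a rough illustration of the AuC index, the sketch below follows the trapezoid approach described by Myerson et al. (2001): delays and subjective values are normalized, and the areas of successive trapezoids are summed. The function name, the inclusion of a zero-delay point with undiscounted value, and the example numbers are all assumptions made for illustration; the exact procedure used in this study is given in the Methods.

```python
def area_under_curve(delays, indifference_points, amount):
    """Normalized area under an empirical discounting curve (trapezoid rule).

    delays              -- delays to the larger reward (any consistent unit)
    indifference_points -- subjective value of the delayed reward at each delay
    amount              -- nominal (undiscounted) amount of the delayed reward

    Returns a value between 0 and 1; larger values indicate shallower
    discounting, that is, greater self-control.
    """
    if not delays or len(delays) != len(indifference_points):
        raise ValueError("delays and indifference_points must be non-empty and equal in length")
    pairs = sorted(zip(delays, indifference_points))
    max_delay = pairs[-1][0]
    # Assumption: the curve starts at zero delay with undiscounted value.
    xs = [0.0] + [d / max_delay for d, _ in pairs]
    ys = [1.0] + [v / amount for _, v in pairs]
    area = 0.0
    for i in range(1, len(xs)):
        area += (xs[i] - xs[i - 1]) * (ys[i - 1] + ys[i]) / 2.0
    return area


# Hypothetical participant: $100 delayed reward valued at $80, $60, and $40
# after delays of 1, 2, and 4 weeks.
auc = area_under_curve([1, 2, 4], [80, 60, 40], 100)
print(f"AuC = {auc:.2f}")
```

Under this definition a participant who never discounts obtains an AuC of 1, so higher AuC corresponds to the "patient" end of the individual-difference dimension used in the correlation analysis.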

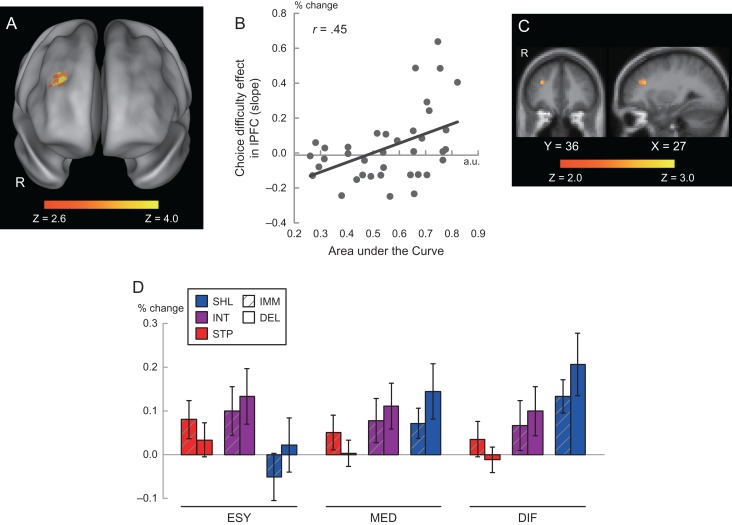

Figure 4.

(A) Statistical activation map for correlation between choice-difficulty effect and delay discounting (AuC). Formats are similar to those in Figures 2 and 3. (B) Scatter plot of AuC against percentage signals for choice-difficulty effect in dlPFC. (C) Statistical activation map for correlation between choice and delay discounting. Statistical maps were color coded and overlaid on the 2D slices of standard anatomical template, as indicated by the X and Y levels below. (D) Percent signals during intertemporal choice trials. Red, purple, and blue bars indicate steep (STP), intermediate (INT), shallow (SHL) discounting groups, respectively. Open bars and bars with slant lines indicate trials where the delayed (DEL) and immediate (IMM) choices were chosen, respectively. ESY: easy; MED: middle; DIF: difficult.

Prior work has demonstrated that increased dlPFC activity predicts delayed reward selection in intertemporal decision-making (Tanaka et al. 2004; McClure et al. 2004, 2007; Hare et al. 2014). Building on these findings, we examined whether the dlPFC region identified here also showed choice-related activation in a separate voxel-wise GLM that additionally coded participants’ choices during individual trials [DEL: delayed; IMM: immediate]. The main effect of the choice (DEL vs. IMM) failed to reveal significance in the dlPFC region. However, analysis of the correlation between the choice effect and AuC identified a voxel subcluster within the dlPFC region showing a positive correlation, indicating greater activity in high-AuC individuals during DEL trials. The size of the subcluster was found to be statistically significant [(29, 30, 25); 7 voxels based on a threshold P < 0.05 uncorrected; P < 0.05 corrected for multiple comparisons within a small volume in the dlPFC region; Fig. 4C], confirming a reliable correlation in the dlPFC subregion. Note that choice behavior (percentage of choice for delayed/immediate reward) in the scanning session did not correlate with AuC (|r|s < 0.10), ensuring that the choice-related correlation with AuC in imaging data was not contaminated by the pattern of behavioral choices.

These collective findings in the dlPFC indicate that individuals with higher AuC showed increased dlPFC activity during difficult trials, particularly when choosing the delayed reward. To visualize this relationship, participants were divided into 3 groups based on delay discounting: shallow (SHL: less discounting; N = 12), steep (STP: greater discounting; N = 12), and intermediate (INT: between the two; N = 13) (see Jimura et al. 2013 for a similar approach). Then, activation magnitudes for each group were calculated for each level of difficulty (ESY, MID, and DIF) and participants’ choice (DEL and IMM) for the dlPFC subcluster. As shown in Figure 4D, this pattern reveals how individual differences in delay discounting interact with both choice difficulty and choice outcome, with shallow discounters (SHL) showing a tendency toward greater dlPFC activation (relative to steep [STP] discounters), but primarily during difficult choice trials (DIF) and when the delayed reward was selected (DEL).
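One way such a three-way split could be implemented is sketched below in Python. The rank-based rule, the function name `split_by_discounting`, and the synthetic AuC values are assumptions for illustration only; the text reports the group sizes (12, 13, 12) but not the exact assignment procedure.

```python
def split_by_discounting(auc_by_subject, n_steep=12, n_shallow=12):
    """Assign each participant to a discounting group by AuC rank.

    Lowest AuC values   -> "STP" (steep discounting)
    Highest AuC values  -> "SHL" (shallow discounting)
    Everything between  -> "INT" (intermediate)
    """
    if n_steep + n_shallow > len(auc_by_subject):
        raise ValueError("group sizes exceed the number of participants")
    ranked = sorted(auc_by_subject, key=auc_by_subject.get)
    groups = {}
    for rank, subject in enumerate(ranked):
        if rank < n_steep:
            groups[subject] = "STP"
        elif rank >= len(ranked) - n_shallow:
            groups[subject] = "SHL"
        else:
            groups[subject] = "INT"
    return groups


# Synthetic AuC values for 37 hypothetical participants (not study data).
synthetic_auc = {f"s{i:02d}": i / 36 for i in range(37)}
groups = split_by_discounting(synthetic_auc)
sizes = {label: list(groups.values()).count(label) for label in ("STP", "INT", "SHL")}
print(sizes)
```

Because the split is only used for visualization, the grouping itself carries no inferential weight; the statistical tests rely on the continuous AuC measure.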

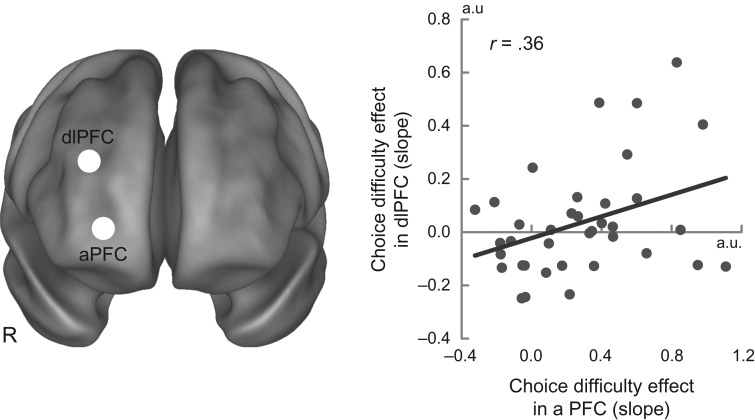

Together, the results reveal 2 prefrontal areas within WM-related brain regions that yielded 2 types of choice-related effects: an aPFC region showing an overall choice-difficulty effect, and a dlPFC region showing a positive correlation between AuC and the difficulty effect (i.e. an AuC × choice-difficulty interaction). The fact that these 2 regions show similar task profiles despite being anatomically separated (25.8 mm) suggests that they may exhibit functional relationships during intertemporal choice. To test this, we calculated the task-related cross-subject functional correlation of choice-difficulty effect between these 2 regions. As shown in Figure 5, the correlation was significant [r = 0.36, t(35) = 2.3, P < 0.05], supporting the hypothesis that the aPFC and dlPFC regions are functionally related, in terms of choice difficulty and individual differences patterns.

Figure 5.

Individual difference analysis in aPFC and dlPFC. Choice-difficulty effect in aPFC is plotted against that in dlPFC. The areas are indicated by white circles on the 3D standard brain template. R, right.
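The 25.8 mm separation quoted for the aPFC and dlPFC regions can be checked from the reported peak coordinates. The short Python sketch below assumes that both peaks, (25, 51, 6) and (25, 30, 21), are expressed in millimeters in the same standard space and that the separation is a straight-line (Euclidean) distance; the variable names are illustrative.

```python
import math

# Peak coordinates as reported in the text (assumed to be in mm, same space).
apfc_peak = (25, 51, 6)
dlpfc_peak = (25, 30, 21)

# Straight-line distance between the two peaks.
distance_mm = math.dist(apfc_peak, dlpfc_peak)
print(round(distance_mm, 1))  # 25.8
```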

Discussion

The current study explored potential self-control mechanisms involved in intertemporal decision-making. The aPFC and dlPFC showed choice-difficulty effects within the same regions, and in the same individuals, that exhibited WM-load effects. In the aPFC, the 2 effects were further found to be functionally associated across participants, such that individuals with greater aPFC WM-related activation also showed greater choice-difficulty effects. In the dlPFC, greater choice-difficulty effects were primarily associated with self-control, such that they were strongest in shallow discounters. Moreover, dlPFC activation in shallow discounters was primarily enhanced when they chose a larger, delayed reward under difficult choice conditions, consistent with the hypothesis that the dlPFC plays a role in promoting self-control. Finally, the 2 anatomically separated regions were functionally associated in terms of choice difficulty and individual difference effects. We next discuss the functional implications of these findings.

The findings of the current study extend prior work suggesting that aPFC mediates the relationship between WM-related activity and delay discounting behavior (Shamosh et al. 2008). In this prior work, the mediating role of aPFC activity in both WM and delay discounting was suggested, but not directly tested in both domains. Here, we use both within-subject activity conjunction as well as between-subjects correlation to support this hypothesis. Moreover, by showing that the aPFC co-activation patterns occur in relationship to choice-difficulty effects, the current results more specifically link this region to intertemporal decision-making conditions in which self-control demands are high.

The increased activation of aPFC with high WM load is expected from the prior literature, as meta-analyses have identified aPFC regions to be consistently engaged during WM task performance (Owen et al. 2005; Rottschy et al. 2012; Nee et al. 2013). However, this region has also been associated with the integration of task-relevant information and goal–subgoal coordination in a broader range of cognitive contexts (Koechlin et al. 1999, 2003; Braver et al. 2003; Sakai and Passingham 2003; Bunge et al. 2005; de Pisapia et al. 2007; Sakai 2008). Likewise, increased aPFC activity has not only been associated with delay discounting in intertemporal decision-making, but also with general fluid intelligence (Shamosh et al. 2008). Although specific functional interpretations of aPFC engagement during intertemporal decision-making remain speculative, the existing data suggest an integrative role that may be preferentially engaged during difficult choice trials, such as the direct integration and comparison of choice item information (i.e. reward magnitude and delay) when this is critical for successful decisions (i.e. the choices are close in SV). Similarly, this region might be involved in broader goal–subgoal coordinative activities (e.g. coordinating higher order decision goals with attentional focusing/switching operations to enable more elaborated and extended comparisons among options).

A subregion of the dlPFC was also found to exhibit both WM-load and intertemporal choice-difficulty effects. This region further predicted self-controlled (larger-later rather than smaller-sooner) choices for high-AuC participants, replicating a number of reports of greater dlPFC recruitment predicting greater self-control in intertemporal choice (Tanaka et al. 2004; McClure et al. 2004, 2007; Kable and Glimcher 2007; Peters and Buchel 2010; Jimura et al. 2013; Aranovich et al. 2016). Likewise, more recent studies have suggested a specific modulatory role for dlPFC in regulating the engagement of value-related ventromedial prefrontal cortex and striatum regions (Hare et al. 2014; van den Bos et al. 2014). Our results are consistent with these findings, but also suggest a specific form of modulation: dlPFC “may” boost self-controlled choices, but its primary role is to enable integration among, and elaborated comparisons between, choice features during decision-making. These processes can promote self-controlled choices when those choices reflect the decision-maker's goals. This conclusion is drawn from the fact that, like the aPFC ROI, the dlPFC subregion was identified in terms of showing both WM-load and choice-difficulty effects and thus fits the profile for decision feature integration and elaborated comparison. Furthermore, the choice effect was only apparent among the most self-controlled participants (those with higher AuC), and either absent, or potentially even trending in the opposite direction, for the least self-controlled participants (those with the lowest AuC; see Fig. 4D). Although strong inference about individual differences is limited by the fact that we did not independently assess self-control, it is possible that self-controlled participants selectively recruited the dlPFC under difficult choice situations so as to more accurately estimate and compare the relative SV of the delayed reward to the immediate one.

This latter interpretation of dlPFC activity points to a fact about cognitive control that is underappreciated in theoretical accounts of intertemporal decision-making. Though cognitive control is typically assumed to promote delayed choices, it can be used flexibly to support any behavioral outcome, including those that involve selection of immediate rewards. Indeed, in shallow discounters, dlPFC activity was increased on difficult trials (relative to easier ones) not only when the delayed reward was selected, but also when the immediate reward was chosen. This pattern indicates that higher dlPFC activity on a trial does not automatically translate into patient (i.e. self-controlled) decision-making.

It is worth noting that such patterns of dlPFC activity were not observed when analyzing the discounting trials involving real liquid rewards (which were interleaved in the current task and reported in a prior publication; Jimura et al. 2013). This is partly because, in the liquid discounting trials, choice difficulty (i.e. difference in SV between choices) was strongly correlated with SV of immediate reward, as the SV of the immediate reward was always lower than that of delayed reward. Consequently, this feature of the design (which was only present for the liquid trials) may compromise analyses of the choice-difficulty effect. Another possibility is that the 2 tasks differ in their discounting mechanisms, as we previously reported that individual differences in delay discounting in the 2 tasks were relatively independent (Jimura et al. 2011).

The observation of joint activation in aPFC and dlPFC during WM and intertemporal choice, along with functional associations between the 2 tasks and the 2 regions in terms of individual differences, supports the suggestion that the WM-load and choice-difficulty contrasts might be revealing common functional processes engaged by the 2 tasks. Indeed, the suggestion of functional overlap between WM and intertemporal decision-making is not new (Hinson et al. 2003; Shamosh et al. 2008), and is supported by a recent meta-analysis (Wesley and Bickel 2014). However, it does raise the question of what these common WM and decision-making processes might be.

Although interpretations must be speculative, it does not seem likely that simple active maintenance describes the commonalities between the 2 tasks, for a number of reasons. First, in the intertemporal decision-making task, the explicit WM demands are low, given that all information is continuously displayed; further, this does not change across choice-difficulty conditions. Conversely, in the WM task, activation is revealed via a high (5-item) versus low (2-item) load contrast during the delay and probe periods, which reveals not just active maintenance processes, but also additional control processes that enable successful target decisions to present probe items. Indeed, current WM theorizing suggests that under high-load (>3–4 items) conditions, additional control operations are needed for goal-directed target decisions, such as to enable attentional switching and refreshing among items that cannot be directly accessed within the severely capacity-limited focus of attention (Nee and Jonides 2011; Cowan et al. 2012; LaRocque et al. 2014; Oberauer 2013).

Our preferred interpretation is thus that the common functional processes isolated by the WM-load and choice-difficulty contrasts relate primarily to cognitive control, and in particular to hierarchical goal–subgoal coordinative processes that enable integration and comparison among items maintained in WM. In high-load WM conditions, these processes may drive attentional control operations that support successful target decisions, whereas in difficult choice intertemporal decision-making conditions, the processes may support decision feature integration and elaborated comparisons among choice options. On easy trials, such decision feature integration and elaborated comparison may not be needed, as option evaluation and selection could potentially proceed via rapid attribute-wise comparison (e.g. Dai and Busemeyer 2014; Scholten et al. 2014). In this respect, our interpretation is consistent with other recent work suggesting that self-control during intertemporal decision-making could be a special case of cognitive control operations that support goal-directed behavior (Rudorf and Hare 2014). Further consistent with such work, we suggest that these operations might be implemented in the lateral PFC, more specifically via functional interactions between aPFC and dlPFC subregions.

The aPFC and dlPFC subregions identified in our analyses showed functional association with respect to choice-difficulty effects. Specifically, participants with a larger aPFC choice-difficulty effect also showed a larger dlPFC choice-difficulty effect. The coordinative interaction of aPFC and dlPFC during intertemporal decision-making is consistent with the hypothesis of hierarchical anterior-to-posterior mapping of contextual information with higher order goals maintained in anterior regions (i.e. compare SVs), in order to support subgoals (i.e. choice selection) maintained in posterior prefrontal regions (Braver and Bongiolatti 2002; Koechlin et al. 2003; Nee and Brown 2012; Kriete et al. 2013; Chatham and Badre 2015).

Anatomically, the location of the identified aPFC and dlPFC regions is partially, but not fully, consistent with the prior literature. For example, the aPFC and dlPFC regions are relatively closely located (within 16 mm) to foci identified in some WM meta-analyses (Owen et al. 2005; Rottschy et al. 2012; Nee et al. 2013). On the other hand, the lateral PFC regions involved in meta-analyses of intertemporal choice (Carter et al. 2010) are somewhat separated in anatomical location from the ones reported here (>20 mm). Likewise, a recent meta-analysis examining the conjunction of WM and delay discounting effects identified both midlateral and anterior PFC regions (Wesley and Bickel 2014). However, these were selectively in the left hemisphere. Nevertheless, these meta-analyses of intertemporal decision-making have not tended to focus on contrasts of choice-difficulty effects, which was the primary focus of the current study. Indeed, one of the few other papers that did report a high > low difficulty contrast (Monterosso et al. 2007) also observed right-hemisphere aPFC activity, consistent with the current findings. Likewise, a recent study found that engaging in a high-load WM condition (4-back load of the N-back task) selectively decreased right, but not left, dlPFC activity in a subsequent delay discounting block (Aranovich et al. 2016). Thus, further work is needed to better understand the anatomic specificity and hemispheric pattern of common WM and intertemporal choice PFC activation.

One limitation regarding interpretations of the current findings relates to the specific analytic approach we employed. This analysis strategy aligned well with our theoretical interests, by identifying regions related to choice difficulty that were constrained to be located within regions previously defined as showing WM-load effects. Nevertheless, this approach leaves open the possibility that other brain regions unrelated to WM load may play important roles in choice difficulty and intertemporal decision-making. Another limitation relates to statistical power, which was limited by the relatively small number of choice-difficulty trials collected in the current study, as these were constrained by the demands of intermixing intertemporal decision-making trials with WM trials. This constraint may have also contributed to a failure to detect potentially important regions associated with choice difficulty. As an example of this, we did not observe significant intertemporal choice effects in the dorsal anterior cingulate cortex (although WM-load effects were present in this region), a brain region that has also been frequently invoked as responding particularly to decision difficulty (Pine et al. 2009; Shenhav et al. 2014).

When taken together, the observed findings provide a potential resolution between different theoretical accounts regarding the role of lateral PFC in intertemporal decision-making. In particular, our results support an account in which the dlPFC (along with aPFC) is involved in the integration and elaborated comparison of choice features during decision-making, and may, but does not necessarily, promote preference for delayed (over immediate) alternatives. We suggest that both dlPFC and aPFC might be jointly engaged to support cognitive control operations during the decision process, rather than directly biasing choice of the delayed reward per se. Our data suggest that such operations occur preferentially when options are close in SV, such that more accurate internal representation is required for evaluation. Further, the results suggest that it is primarily the self-controlled individuals who recruit dlPFC in such a manner, which may provide these individuals with a more accurate basis on which to make intertemporal choices. Thus, our data also provide a more precise account of the particular relationship between WM and intertemporal decision-making than prior work, by suggesting that this relationship might be specifically tied to choice difficulty, and preferentially observed in self-controlled individuals.

Notes

We also thank Bruna Martins, Carol Cox, Joseph Hilgard, Dionne Clarke, Ayaka Misonou, and Kaho Tsumura for administrative and technical assistance. Conflict of Interest: None declared.

Funding

National Institutes of Health R01 (AG043461 to T.S.B.); Research Fellowship and a Research Grant from the Uehara Memorial Foundation; Grants-in-Aid for Scientific Research from Ministry of Education, Culture, Sports, Science and Technology of Japan (26350986, 26120711 to K.J.).

References

- Ainslie G. 2005. Precis of breakdown of will. Behav Brain Sci. 28:635–650. [DOI] [PubMed] [Google Scholar]

- Aranovich GJ, McClure SM, Fryer S, Mathalon DH. 2016. The effect of cognitive challenge on delay discounting. NeuroImage. 124:733–739. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Badre D, Hoffman J, Cooney JW, D'Esposito M. 2009. Hierarchical cognitive control deficits following damage to the human frontal lobe. Nat Neurosci. 12:515–522. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Balota DA, Yap MJ, Cortese MJ, Hutchison KA, Kessler B, Loftis B, Neely JH, Nelson DL, Simpson GB, Treiman R. 2007. The English lexicon project. Behav Res Methods. 39:445–459. [DOI] [PubMed] [Google Scholar]

- Baker F, Johnson MW, Bickel WK. 2003. Delay discounting in current and never-before cigarette smokers: similarities and differences across commodity, sign, and magnitude. J Ab Psychol. 112:382–392. [DOI] [PubMed] [Google Scholar]

- Beck SM, Locke HS, Savine AC, Jimura K, Braver TS. 2010. Primary and secondary rewards differentially modulate neural activity dynamics during working memory. PLoS One. 5:e9251. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berns GS, Laibson D, Loewenstein G. 2007. Intertemporal choice—toward an integrative framework. Trends Cogn Sci. 11:482–488. [DOI] [PubMed] [Google Scholar]

- Bickel WK, Yi R, Landes RD, Hill PF, Baxter C. 2011. Remember the future: working memory training decreases delay discounting among stimulant addicts. Biol Psychiat. 69:260–265. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Botvinick MM. 2008. Hierarchical models of behavior and prefrontal function. Trends Cogn. Sci. 12:201–208. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Braver TS, Bongiolatti SR. 2002. The role of frontopolar cortex in subgoal processing during working memory. NeuroImage. 115:523–536. [DOI] [PubMed] [Google Scholar]

- Braver TS, Reynolds JR, Donaldson DI. 2003. Neural mechanisms of transient and sustained cognitive control during task switching. Neuron. 39:713–726. [DOI] [PubMed] [Google Scholar]

- Bunge SA, Wendelken C, Badre D, Wagner AD. 2005. Analogical reasoning and prefrontal cortex: evidence for separable retrieval and integration mechanisms. Cereb Cortex. 15:239–249. [DOI] [PubMed] [Google Scholar]

- Carter RM, Meyer JR, Huettel SA. 2010. Functional neuroimaging of intertemporal choice models: a review. J Neurosci Psychol Econ. 1:27–45. [Google Scholar]

- Chatham CH, Badre D. 2015. Multiple gates on working memory. Curr Opin Behav Sci. 1:23–31. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cowan N, Rouder JN, Blume CL, Saults JS. 2012. Models of verbal working memory capacity: what does it take to make them work? Psychol Rev. 119:480–499. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dai J, Busemeyer JR. 2014. A probabilistic, dynamic, and attribute-wise model of intertemporal choice. J Exp Psychol Gen. 143:1489–1514. [DOI] [PMC free article] [PubMed] [Google Scholar]

- de Pisapia N, Slomski JA, Braver TS. 2007. Functional specializations in lateral prefrontal cortex associated with the integration and segregation of information in working memory. Cereb Cortex. 17:993–1006. [DOI] [PubMed] [Google Scholar]

- Eklund A, Nichols TA, Knutsson H. 2016. Cluster failure: why fMRI inferences for spatial extent have inflated false-positive rates. Proc Natl Acad Sci USA. 113:7900–7905. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Figner B, Knoch D, Johnson EJ, Krosch AR, Kisanby SH, Fehr E, Weber EU. 2010. Lateral prefrontal cortex and self-control in intertemporal choice. Nat Neurosci. 13:538–539. [DOI] [PubMed] [Google Scholar]

- Frederic S, Loewenstein G, O'Donoghue T. 2002. Time discounting and time preference: a critical review. J Econ Lit. 40:351–401. [Google Scholar]

- Goldman-Rakic PS. 1987. Circuitry of primate prefrontal cortex and regulation of behavior by representational memory In: Pulm F, Mountcastle V, editors. Handbook of physiology, Section 1, The nervous system, higher functions of the brain. Vol. V Bethesda: American Physiological Society; p. 373–417. [Google Scholar]

- Glimcher PW. 2009. Decision and the brain In: Glimcher PW, et al., editors. Neuroeconomics. New York: Academic Press; p. 503–522. [Google Scholar]

- Green L, Myerson J. 2004. A discounting framework for choice with delayed and probabilistic rewards. Psychol Bull. 130:769–792. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hare TA, Hakimi S, Rangel A. 2014. Activity in dlPFC and its effective connectivity to vmPFC are associated with temporal discounting. Front Neurosci. 8:50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hinson JM, Whitney P, Holben H, Wirick AK. 2003. Impulsive decision making and working memory. J Exp Psychol Learn Mem Cong. 29:298–306. [DOI] [PubMed] [Google Scholar]

- Jimura K, Myerson J, Hilgard J, Braver TS, Green L. 2009. Are people really more patient than other animals? Evidence from human discounting of real liquid rewards. Psychon Bull Rev. 16:1071–1075. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jimura K, Locke HS, Braver TS. 2010. Prefrontal cortex mediation of cognitive enhancement in rewarding motivational contexts. Proc Natl Acad Sci USA. 107:8871–8876. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jimura K, Myerson J, Hilgard J, Keighley J, Braver TS, Green L. 2011. Domain independence and stability in young and older adults’ discounting of delayed rewards. Behav Proc. 87:253–259. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jimura K, Chushak MS, Braver TS. 2013. Impulsivity and self-control during intertemporal decision making linked to the neural dynamics of reward value representation. J Neurosci. 33:344–357. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kable JW, Glimcher PW. 2007. The neural correlates of subjective value during intertemporal choice. Nat Neurosci. 10:1625–1633. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kable JW, Glimcher PW. 2010. An “as soon as possible” effect in human intertemporal decision making: behavioral evidence and neural mechanisms. J Neurophysiol. 103:2513–2531. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Keeney RL, Raiffa H. 1993. Decisions with multiple objectives: preferences and value tradeoffs. New York: Wiley. [Google Scholar]

- Kirby KN, Petry NM, Bickel WK. 1999. Heroin addicts have higher discount rates for delayed rewards than non-drug-using controls. J Exp Psychol Gen. 128:78–87. [DOI] [PubMed] [Google Scholar]

- Kirby KN. 2009. One-year temporal stability of delay-discount rates. Psychon Bull Rev. 16:457–462. [DOI] [PubMed] [Google Scholar]

- Koechlin E, Basso G, Pietrini P, Panzer S, Grafman J. 1999. The role of the anterior prefrontal cortex in human cognition. Nature. 399:148–151. [DOI] [PubMed] [Google Scholar]

- Koechlin E, Ody C, Kouneiher F. 2003. The architecture of cognitive control in the human prefrontal cortex. Science. 302:1181–1185. [DOI] [PubMed] [Google Scholar]

- Kriete T, Noelle DC, Cohen JD, O'Reilly RC. 2013. Indirection and symbol-like processing in the prefrontal cortex and basal ganglia. Proc Natl Acad Sci USA. 110:16390–16395. [DOI] [PMC free article] [PubMed] [Google Scholar]

- LaRocque JJ, Lewis-Peacock JA, Postle BR. 2014. Multiple neural states of representation in short-term memory? It's a matter of attention. Front Hum Neurosci. 8:5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lund K, Burgess C. 1996. Producing high-dimensional semantic spaces from lexical cooccurrence. Behav Res Methods. 28:203–208. [Google Scholar]

- McClure SM, Laibson DI, Loewenstein G, Cohen JD. 2004. Separate neural systems value immediate and delayed monetary rewards. Science. 306:503–507. [DOI] [PubMed] [Google Scholar]

- McClure SM, Ericson KM, Laibson DI, Loewenstein G, Cohen JD. 2007. Time discounting of primary rewards. J Neurosci. 27:5796–5804. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Miller EK, Cohen JD. 2001. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 24:167–202. [DOI] [PubMed] [Google Scholar]

- Mischel W, Metzner R. 1962. Preference for delayed reward as a function of age, intelligence, and length of delay interval. J Abnormal Soc Psychol. 64:425–431. [DOI] [PubMed] [Google Scholar]

- Mischel W, Shoda Y, Rodriguetz ML. 1989. Delay of gratification in children. Science. 244:933–938. [DOI] [PubMed] [Google Scholar]

- Monterosso JR, Ainslie G, Xu J, Cordova X, Domier CP, London ED. 2007. Frontoparietal cortical activity of methamphetamine-dependent and comparison subjects performing a delay discounting task. Hum Brain Mapp. 28:383–93. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Monterosso JR, Luo S. 2010. An argument against dual valuation system competition: cognitive capacities supporting future orientation mediate rather than compete with visceral motivations. J Neurosci Psychol Econ. 1:1–14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Myerson J, Green L, Warusawitharana M. 2001. Area under the curve as a measure of discounting. J Exp Anal Behav. 76:235–243. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nee DE, Brown JW. 2012. Rostral-caudal gradients of abstraction revealed by multi-variate pattern analysis of working memory. NeuroImage. 63:1285–1294. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nee DE, Jonides J. 2011. Dissociable contributions of prefrontal cortex and the hippocampus to short-term memory: evidence for a 3-state model of memory. NeuroImage. 54:1540–1548. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nee DV, Brown JW, Askren MK, Berman MC, Demiralp E, Krawitz A, Jonides J. 2013. A meta-analysis of executive components of working memory. Cereb Cortex. 23:264–282. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oberauer K. 2013. The focus of attention in working memory-from metaphors to mechanisms. Front Hum Neurosci. 7:673. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ollinger JM, Shulman GL, Corbetta M. 2001. Separating processes within a trial in event-related functional MRI. NeuroImage. 13:210–217. [DOI] [PubMed] [Google Scholar]

- Owen AM, McMillan KM, Laird AR, Bullmore E. 2005. N-back working memory paradigm: a meta-analysis of normative functional neuroimaging studies. Hum Brain Mapp. 25:46–59. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Peters J, Buchel C. 2010. Episodic future thinking reduces reward delay discounting through an enhancement of prefrontal-mediotemporal interactions. Neuron. 66:138–148. [DOI] [PubMed] [Google Scholar]

- Pine A, Syemour B, Roiser JP, Bossaerts P, Friston KJ, Curran HV, Dolan RJ. 2009. Encoding of marginal utility across time in the human brain. J Neurosci. 29:9575–9581. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rachlin H. 1989. Judgment, decision, and choice: a cognitive/behavioral synthesis. New York: Freeman.

- Rachlin H, Raineri A, Cross D. 1991. Subjective probability and delay. J Exp Anal Behav. 55:233–244.

- Ramnani N, Owen AM. 2004. Anterior prefrontal cortex: insight into function from anatomy and neuroimaging. Nat Rev Neurosci. 5:184–194.

- Rottschy C, Langner R, Dogan I, Reetz K, Laird AR, Schulz JB, Fox PT, Eickhoff SB. 2012. Modelling neural correlates of working memory: a coordinate-based meta-analysis. NeuroImage. 60:830–846.

- Rudorf S, Hare TA. 2014. Interactions between dorsolateral and ventromedial prefrontal cortex underlie context-dependent stimulus valuation in goal-directed choice. J Neurosci. 34:15988–15996.

- Sakai K. 2008. Task set and prefrontal cortex. Annu Rev Neurosci. 31:219–245.

- Sakai K, Passingham RE. 2003. Prefrontal interactions reflect future task operations. Nat Neurosci. 6:75–81.

- Scheres A, de Water E, Mies GW. 2013. The neural correlates of temporal reward discounting. WIREs Cogn Sci. 4:523–545.

- Scholten M, Read D, Sanborn A. 2014. Weighing outcomes by time or against time? Evaluation rules in intertemporal choice. Cogn Sci. 38:399–438.

- Sellitto M, Ciaramelli E, di Pellegrino G. 2010. Myopic discounting of future rewards after medial orbitofrontal damage in humans. J Neurosci. 30:16429–16436.

- Serences JT. 2004. A comparison of methods for characterizing the event-related BOLD timeseries in rapid fMRI. NeuroImage. 21:1690–1700.

- Shamosh NA, DeYoung CG, Green AE, Reis DL, Johnson MR, Conway AR, Engle RW, Braver TS, Gray JR. 2008. Individual differences in delay discounting: relation to intelligence, working memory, and anterior prefrontal cortex. Psychol Sci. 19:904–911.

- Shamosh NA, Gray JR. 2008. Delay discounting and intelligence: a meta-analysis. Intelligence. 36:280–305.

- Shenhav A, Straccia MA, Cohen JD, Botvinick MM. 2014. Anterior cingulate engagement in a foraging context reflects choice difficulty, not foraging value. Nat Neurosci. 17:1249–1254.

- Sternberg S. 1966. High-speed scanning in human memory. Science. 153:652–655.

- Talairach J, Tournoux P. 1988. Co-planar stereotaxic atlas of the human brain. New York: Thieme.

- Tanaka SC, Doya K, Okada G, Ueda K, Okamoto Y, Yamawaki S. 2004. Prediction of immediate and future rewards differentially recruits cortico-basal ganglia loops. Nat Neurosci. 7:887–893.

- van den Bos W, McClure SM. 2013. Towards a general model of temporal discounting. J Exp Anal Behav. 99:58–73.

- van den Bos W, Rodriguez CA, Schweitzer JB, McClure SM. 2014. Connectivity strength of dissociable striatal tracts predict individual differences in temporal discounting. J Neurosci. 34:10298–10310.

- Wesley MJ, Bickel WK. 2014. Remember the future II: meta-analyses and functional overlap of working memory and delay discounting. Biol Psychiatry. 75:435–448.

- Winkler AM, Ridgway GR, Webster MA, Smith SM, Nichols TE. 2014. Permutation inference for the general linear model. NeuroImage. 92:381–397.