Abstract

The demand for advanced practice providers (APPs) is increasing across the United States to meet provider staffing requirements, including in intensive care settings. Participation in formal postgraduate training programs (residencies) is currently not required for APP clinical practice, so most APPs enter the workforce immediately after completing their graduate-level training. As a result, they undergo a supervised training period until they develop the competencies needed to practice more autonomously. Educational programs that support specialty competency development may ease the transition of APPs into clinical practice and allow them to be credentialed to perform essential procedures as quickly as possible. The goals of this boot camp were to train APPs in common high-risk critical care procedures and to develop their leadership of high-risk cases, thereby expediting their orientation. This manuscript describes our experience with the development, implementation, and short-term evaluation of this training program.

Keywords: advanced practice provider, boot camp, critical care, physician assistant, nurse practitioner

Introduction

The demand for advanced practice providers (APPs) is increasing across the United States to meet provider staffing requirements, including in intensive care settings.1 APPs typically include nurse practitioners and physician assistants. They are usually trained at the master’s level and care for patients in collaboration with a physician, although in some settings they may practice independently. Participation in formal postgraduate training programs (residencies) is currently not required for APP clinical practice, so most APPs enter the workforce immediately after completing their graduate-level training.1,2 As a result, they undergo a supervised training period until they develop the competencies needed to practice more autonomously. Educational programs that support specialty competency development may ease the transition of APPs into clinical practice and allow them to be credentialed to perform essential procedures as quickly as possible.

An estimated 10 000 APPs practice in critical care across the United States, helping to fill vital gaps in care that physicians would otherwise have to cover.1,3 In addition to managing common critical care conditions, APPs have been reported to perform a wide variety of highly specialized procedures, such as chest tube placement, intubation, and lumbar puncture.1,3,4 Given the lack of standardized training for APPs practicing in critical care, employers are responsible for ensuring that APPs without prior experience in crisis resource management and critical care procedures receive adequate training before undergoing specialty credentialing.

Some practice sites have developed their own education and training programs to prepare APPs for both leadership responsibilities and hands-on procedures. Frequently, this training takes place with real patients under the direct supervision of experienced, credentialed clinicians. Our institution developed such a training program for APPs without prior critical care experience: a simulation-based boot camp focused on procedures relevant to our intensive care unit (ICU). The goals of this boot camp were to train APPs in common high-risk critical care procedures and to develop their leadership of high-risk cases, thereby expediting their orientation. This manuscript describes our experience with the development, implementation, and evaluation of this training program.

Methods

Setting

This program was held in the simulation lab of a tertiary care, university-affiliated center over a 10-hour day. The instructors included an emergency medicine attending physician with fellowship training in medical simulation, 2 simulation fellows, 2 third-year emergency medicine residents, a respiratory therapist, and a paramedic. Summa Health System deemed this study a quality assurance project exempt from institutional review board review.

Boot-camp curriculum and design

Kern’s model of curriculum development guided the establishment and evaluation of this educational program.5

Needs assessment

The educational needs of the APPs were determined by intensivists and experienced APP staff practicing in the critical care setting, based on the clinical responsibilities they expected the APPs to assume.

Goals and objectives

The goals of this APP critical care boot camp were to provide intensive training in common high-risk critical care procedures and formal leadership training for high-risk cases, thereby expediting the orientation process (Figure 1). The objectives were to build knowledge, enhance skills, and increase self-efficacy and teamwork among a group of APPs staffing the critical care department of an urban academic tertiary care center in collaboration with experienced clinicians. The common procedures that APPs were expected to perform in the institution’s critical care department included arterial line placement, intraosseous (IO) access, endotracheal intubation, central venous access, and defibrillator use.

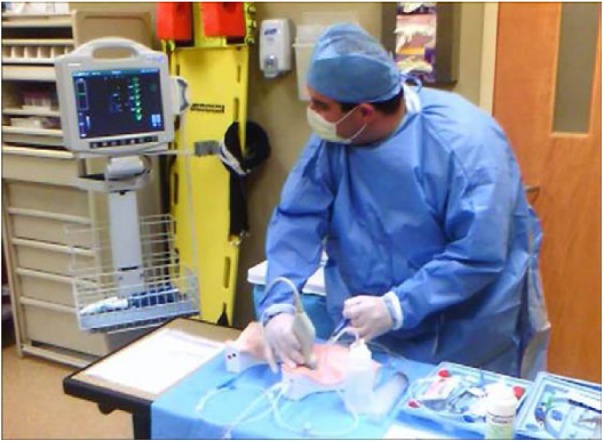

Figure 1.

Participants engaged in a high-fidelity simulation case.

Learning methods, implementation, and participants

The curriculum consisted of approximately 8 hours of asynchronous procedural education, followed by a 10-hour boot-camp day and then 2 hours of individual hands-on testing on task trainers, for a total experience of about 20 hours. Developing the boot camp required approximately 15 to 20 hours of simulation faculty time, including writing goals and objectives, setting up equipment, and reviewing simulation cases. Portions of the curriculum were adapted from previous intern boot camps for first-year residents in emergency medicine, surgery, and trauma.

A total of 9 individuals participated: 8 APPs without prior critical care experience who were newly employed in the practice setting, and 1 APP student in her final year of training. Of the 8 APPs, 4 had been hired without prior experience and 4 had 1 to 12 years of prior non-critical care experience. All 8 APPs had completed advanced cardiac life support training.

Pre-boot-camp curriculum

To prepare for the simulation boot camp, all participants were required to review a collection of educational materials previously identified for the orientation of new residents at the facility. These materials were completed asynchronously and included reading articles, watching procedure demonstration videos (endotracheal intubation, IO access, radial arterial line cannulation, and central venous access via the internal jugular, subclavian, and femoral approaches), and reviewing internally developed checklists for each boot-camp procedure (see, for example, the checklist in Supplemental Appendix 1). Review of these materials was estimated to take 6 to 8 hours. Participants then completed a multiple-choice examination on each procedure and had to score 80% or higher before attending the boot camp.

Simulation boot camp

Simulation training was selected as the core teaching method of this program because simulation offers several advantages over traditional approaches to skill development in health care.6,7 Simulation also provides an integrative learning environment in which providers can practice freely and make mistakes without risk of adverse patient outcomes.8 It has also been shown to improve patient outcomes, for example by reducing central line–associated bloodstream infections.8 Furthermore, simulation has been used in APP training to improve knowledge and clinical judgment.9

The morning consisted of 4 hours of procedure training, followed by a half-hour break and a half-hour orientation to the simulation lab environment. During the procedure training, APPs rotated through 4 stations: airway management; defibrillation and cardiac monitoring; central line placement; and a combined station for IO access, arterial line placement, and needle chest decompression. At each station, a member of the instructor team provided low-stress, hands-on instruction with immediate constructive feedback, following the principles of deliberate practice.10 The afternoon consisted of simulated patient cases over a 5-hour period. After orientation to the simulation mannequins and clinical equipment, the APPs were divided into 2 groups of 4 for the first 4 cases, with the student joining one of the groups. The groups alternated, with one group leading a case while the other observed, and a team leader was designated for each case. The cases included unstable ventricular tachycardia in a cardiac patient, a decompensating intubated ICU patient, cardiogenic shock, and a massive upper gastrointestinal bleed. The final scenario was a multi-trauma simulation with 2 simultaneous patients that required all learners to participate. Each simulation concluded with a formal debriefing led by the simulation faculty and fellows.

Participant assessment

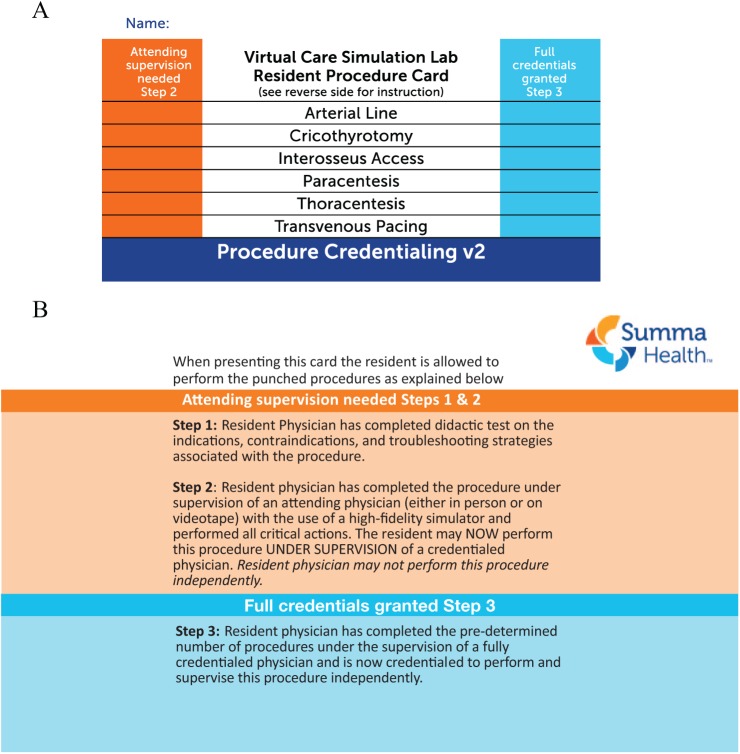

Two weeks after the boot camp, participants returned to the simulation center for assessment of their skills. Each APP had to demonstrate competence individually on procedural task trainers or high-fidelity simulators; to pass the simulated portion of the test, the APP had to perform at least 80% of the checklist items correctly, as assessed by faculty, without missing a single critical action (see Figure 2). Testing typically required 2 hours for all procedures. This process aligns with our internal procedural credentialing policy, which requires all residents and APPs to complete a 3-step process (Table 1) before being granted full autonomy to perform procedures. Credentialed procedures are displayed on system badges so that nursing staff can quickly identify which procedures a provider is credentialed to perform (Figure 3(A) and (B)).

Figure 2.

Participants performing simulation-based procedural test for internal jugular venous access.
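The Step 2 pass rule described above combines two conditions: a percentage threshold and an automatic fail for any missed critical action. The Python sketch below is a hypothetical illustration, not the authors’ scoring tool; the `ChecklistItem` structure, the `passes_simulated_test` function, and the example checklist items are assumptions made for illustration.

```python
from dataclasses import dataclass

# Pass threshold taken from the text: at least 80% of checklist items.
PASS_THRESHOLD = 0.80


@dataclass
class ChecklistItem:
    """One step on a (hypothetical) procedure checklist."""
    description: str
    performed: bool
    critical: bool = False  # True if missing this step is an automatic fail


def passes_simulated_test(items: list[ChecklistItem]) -> bool:
    """Return True only if >= 80% of items were performed and no critical item was missed."""
    if not items:
        raise ValueError("checklist must contain at least one item")
    fraction_done = sum(item.performed for item in items) / len(items)
    missed_critical = any(item.critical and not item.performed for item in items)
    return fraction_done >= PASS_THRESHOLD and not missed_critical


if __name__ == "__main__":
    # Hypothetical 10-item checklist: 9 steps done, but the one missed step is critical.
    items = [ChecklistItem(f"step {i}", performed=True) for i in range(1, 10)]
    items.append(ChecklistItem("confirm tube placement", performed=False, critical=True))
    print(passes_simulated_test(items))  # False: 90% done, but a critical action was missed
```

Treating a missed critical action as an automatic fail, regardless of the overall percentage, is what allows a learner to score well on a checklist and still fail the test.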

Table 1.

Three-step procedure credentialing policy.

| Step | Requirement |
|---|---|
| Step 1 | Pass a written multiple-choice test with a score of 80% or higher. |
| Step 2 | Pass a formal hands-on simulated procedure test. All learners must obtain formal hands-on training by an instructor for each individual procedure. The learner is tasked to memorize the provided checklist for each individual procedure and perform at least 80% of the steps correctly without missing a single critical action. Learners typically schedule this test 1-10 days after their formal training. Once passed, this grants them permission to perform the individual procedure under supervision. All subsequent successfully performed procedures must be logged. |
| Step 3 | Provide documentation of the predetermined minimum number of successfully completed procedures, with signatures from all supervising providers. Once completed, the learner can perform that procedure independently and supervise other Step 2 level providers performing it. The minimum numbers required for autonomous practice are listed below. |

| Procedure | Minimum number of successfully performed procedures required for autonomous practice |
|---|---|
| Lumbar puncture | 5 |
| Endotracheal intubation | 10 |
| Central venous access: internal jugular | 8 |
| Central venous access: subclavian | 8 |
| Central venous access: femoral | 5 |
| Chest tube (tube thoracostomy) | 10 |
| Arterial line | 5 |
| Cricothyrotomy | 5 |
| Intraosseous (IO) access | 5 |
| Paracentesis | 5 |
| Thoracentesis | 5 |
| Transvenous pacing | 5 |
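The three steps in Table 1 can be read as a simple progression for each procedure. The sketch below is a hypothetical Python model, not an institutional system: the `Level` names and the `credential_level` function are illustrative, and it assumes logged procedures have already been verified with the supervising providers’ signatures (signature checking is not modeled). The minimum numbers are copied from Table 1.

```python
from enum import Enum

# Minimum successful procedures for autonomous practice (from Table 1).
MINIMUM_FOR_AUTONOMY = {
    "lumbar puncture": 5,
    "endotracheal intubation": 10,
    "central venous access, internal jugular": 8,
    "central venous access, subclavian": 8,
    "central venous access, femoral": 5,
    "chest tube": 10,
    "arterial line": 5,
    "cricothyrotomy": 5,
    "intraosseous access": 5,
    "paracentesis": 5,
    "thoracentesis": 5,
    "transvenous pacing": 5,
}


class Level(Enum):
    NOT_CREDENTIALED = "may not perform the procedure"
    SUPERVISED = "may perform under supervision (Step 2 passed)"
    AUTONOMOUS = "may perform independently and supervise Step 2 providers"


def credential_level(procedure: str, written_score: float,
                     passed_simulation: bool, logged_successes: int) -> Level:
    """Apply the three steps in order for one procedure."""
    minimum = MINIMUM_FOR_AUTONOMY[procedure]  # raises KeyError for unlisted procedures
    if written_score < 80:          # Step 1: written test, 80% or higher
        return Level.NOT_CREDENTIALED
    if not passed_simulation:       # Step 2: hands-on simulated test
        return Level.NOT_CREDENTIALED
    if logged_successes < minimum:  # Step 3: documented minimum number
        return Level.SUPERVISED
    return Level.AUTONOMOUS


if __name__ == "__main__":
    print(credential_level("endotracheal intubation", 84, True, 9).name)   # SUPERVISED
    print(credential_level("endotracheal intubation", 84, True, 10).name)  # AUTONOMOUS
```

Passing Step 1 alone does not permit performing a procedure, which is why it maps to the same level as not having started.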

Figure 3.

(A) Front and (B) back of the procedure credentialing card, which the patient’s nurse checks before a procedure is performed.

APP self-efficacy was measured with pre- and post-boot-camp surveys. The survey consisted of 15 items assessing APPs’ confidence in emergent patient situations, procedural competency, and ability to function as team leader. Items were rated on a 5-point Likert-type scale from 1 (strongly disagree) to 5 (strongly agree). A unique, anonymous identifier was used to pair each APP’s pre- and post-boot-camp responses. Changes in total and individual item scores were reported using descriptive statistics.
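As a sketch of the paired analysis described above, the Python snippet below sums each participant’s 15 responses into a total (possible range 15 to 75), matches pre- and post-boot-camp surveys on the anonymous identifier, and summarizes the change in totals. The function names and the two participants in the example are hypothetical; only participants who completed both surveys are paired.

```python
from statistics import mean

N_ITEMS = 15  # survey items, each rated 1 (strongly disagree) to 5 (strongly agree)


def total_score(responses: list[int]) -> int:
    """Sum one survey; totals range from 15 to 75."""
    if len(responses) != N_ITEMS or not all(1 <= r <= 5 for r in responses):
        raise ValueError("expected 15 responses on a 1-5 scale")
    return sum(responses)


def paired_changes(pre: dict[str, list[int]], post: dict[str, list[int]]) -> dict[str, int]:
    """Change in total score per anonymous ID, for IDs present in both surveys."""
    return {pid: total_score(post[pid]) - total_score(pre[pid])
            for pid in pre.keys() & post.keys()}


def describe(changes: dict[str, int]) -> dict[str, float]:
    """Descriptive statistics for the paired changes."""
    values = list(changes.values())
    return {
        "mean_total_change": mean(values),
        "mean_item_change": mean(values) / N_ITEMS,
        "min_change": min(values),
        "max_change": max(values),
    }


if __name__ == "__main__":
    # Two hypothetical participants keyed by anonymous identifier.
    pre = {"A7": [3] * 15, "B2": [3] * 14 + [4]}
    post = {"A7": [4] * 15, "B2": [3] * 13 + [4, 4]}
    changes = paired_changes(pre, post)
    print(sorted(changes.items()))  # [('A7', 15), ('B2', 1)]
    print(describe(changes))
```

Dividing the mean change in totals by 15 gives the per-item change on the original 1-5 scale.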

Program evaluation

A mixed-methods approach was used to evaluate the program. The Debriefing Assessment for Simulation in Healthcare (DASH) tool was used after simulation scenarios to evaluate faculty debriefing.11 Immediately after the boot camp, each participant was asked to complete 2 surveys about the experience: a quantitative survey of 6 Likert-type items rated from 1 (extremely ineffective) to 7 (extremely effective), and a qualitative survey with 6 open-ended questions. Quantitative results were analyzed using descriptive statistics, and qualitative responses were reviewed to identify program strengths and opportunities for improvement.

Results

Participant outcomes

Pre-boot-camp medical knowledge assessment

The average score across all written procedure tests taken before the simulation boot camp was 84%. All tests were passed on the first attempt, except for one learner who failed the internal jugular written test and passed it on the next attempt.

Simulation-based assessment

The average score across all simulation-based procedural tests (6 procedures) was 88%, and 7 of 8 learners passed all hands-on simulated tests on the first attempt. One learner failed the first intubation attempt by missing a single critical action; the APP was retested and passed on the second attempt.

Self-efficacy

The mean total score on the pre-boot-camp self-efficacy assessment was 50 (maximum 75), a mean item score of 3.3 on the 1-5 scale. The areas in which participants initially rated themselves lowest (<3.0) were being adequately prepared to lead a code blue resuscitation, intubate during a code blue resuscitation, use difficult airway equipment, emergently evaluate and treat a tension pneumothorax, and place an IO line.

The mean total score on the post-boot-camp self-efficacy assessment was 62 (mean item score 4.1), a mean increase of 0.8 per item. Individual participants’ total scores improved by 1 to 19 points. The largest pre- to post-assessment increases were for the items “I feel adequately prepared to”: intubate during a code blue resuscitation, use difficult airway equipment, emergently evaluate and treat a tension pneumothorax, place an IO line, and troubleshoot a chest tube and pleurovac system placed by another provider (see Table 2 for results).

Table 2.

Participant self-efficacy assessment (1-5 scale).

| Item | Pre-boot camp | Post-boot camp | Mean change |
|---|---|---|---|
| I understand how an effective code blue resuscitation should be run | 4.2 | 4.6 | 0.4 |
| I feel adequately prepared to lead a code blue resuscitation | 2.9 | 3.4 | 0.5 |
| I feel adequately prepared to function as a key team member (non-leader) during a code blue resuscitation | 4.4 | 4.8 | 0.4 |
| I feel adequately prepared to provide orders in a closed-loop fashion | 3.8 | 4.6 | 0.8 |
| I feel adequately prepared to intubate during a code blue resuscitation | 2.1 | 3.6 | 1.5 |
| I feel adequately prepared to use difficult airway equipment | 2.1 | 3.2 | 1.1 |
| I feel adequately prepared to emergently evaluate and treat a tension pneumothorax | 2.1 | 3.3 | 1.2 |
| I feel adequately prepared to perform CPR | 4.7 | 4.9 | 0.2 |
| I feel adequately prepared to use a cardiac defibrillator/monitor | 4.3 | 4.6 | 0.3 |
| I feel adequately prepared to pace a third-degree heart block | 3.4 | 4.3 | 0.9 |
| I feel adequately prepared to place an intraosseous line | 2.4 | 4.6 | 2.2 |
| I feel adequately prepared to troubleshoot a chest tube and pleurovac system placed by another provider | 3.2 | 4.3 | 1.1 |
| I feel adequately prepared to place a central venous catheter | 3.2 | 3.7 | 0.5 |
| I feel adequately prepared to interpret EKGs and identify critical or unstable rhythms | 4.0 | 4.6 | 0.6 |
| I feel adequately prepared to troubleshoot basic mechanical ventilation alarms | 3.3 | 3.4 | 0.1 |

Abbreviations: CPR, cardiopulmonary resuscitation; EKG, electrocardiogram.
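The reported totals can be checked against Table 2. Assuming every participant answered all 15 items, the mean total score equals the sum of the item means, so summing the pre- and post-boot-camp columns should reproduce totals of about 50 and 62. The Python snippet below is a simple arithmetic check on the published item means, not part of the original analysis.

```python
# Item means copied from Table 2, in table order.
pre_means = [4.2, 2.9, 4.4, 3.8, 2.1, 2.1, 2.1, 4.7, 4.3, 3.4, 2.4, 3.2, 3.2, 4.0, 3.3]
post_means = [4.6, 3.4, 4.8, 4.6, 3.6, 3.2, 3.3, 4.9, 4.6, 4.3, 4.6, 4.3, 3.7, 4.6, 3.4]

n_items = len(pre_means)  # 15
pre_total = sum(pre_means)
post_total = sum(post_means)
mean_change = (post_total - pre_total) / n_items

print(f"pre total {pre_total:.1f}, mean item {pre_total / n_items:.2f}")    # pre total 50.1, mean item 3.34
print(f"post total {post_total:.1f}, mean item {post_total / n_items:.2f}")  # post total 61.9, mean item 4.13
print(f"mean change per item {mean_change:.2f}")                            # mean change per item 0.79
```

These values agree with the reported totals of 50 and 62 and the mean increase of 0.8 per item, and correspond to mean item scores of about 3.3 before and 4.1 after the boot camp.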

Boot-camp evaluation

The mean faculty debriefing score was 41 (out of 42) on the 6-item DASH evaluation survey. All items were rated as either consistently effective (6) or extremely effective (7) (see Table 3 for item results). Participants provided limited qualitative feedback. Reported strengths of the boot camp included realistic scenarios, debriefing, constructive feedback, the instructors, the opportunity for self-reflection, the combination of didactic and “hands-on” learning, and the pacing (“not being rushed”). Opportunities for improvement included additional time for all participants to function as team leader and the opportunity to perform additional procedures. Several participants said they wanted to continue simulation training throughout the year and recommended it for APP students in their didactic and clinical rotation phases and for newly hired APPs before they begin working in the ICU.

Table 3.

Evaluation of DASH elements and dimensions (1-7 scale).

| The instructor | Mean score |
|---|---|
| Set the stage for an engaging learning experience | 6.8 |
| Maintained an engaging context for learning | 7 |
| Structured the debriefing in an organized way | 6.8 |
| Provoked in-depth discussions that led me to reflect on my performance | 6.9 |
| Identified what I did well or poorly and why | 6.8 |
| Helped me see how to improve or how to sustain good performance | 6.8 |

Abbreviation: DASH, Debriefing Assessment for Simulation in Healthcare.
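The overall DASH score reported in the boot-camp evaluation is the sum of the six element means in Table 3. A short Python check (illustrative only, using the published means) confirms the total and that every element mean falls in the top two scale points.

```python
# Element means from Table 3 (DASH, 1-7 scale).
dash_means = {
    "Set the stage for an engaging learning experience": 6.8,
    "Maintained an engaging context for learning": 7.0,
    "Structured the debriefing in an organized way": 6.8,
    "Provoked in-depth discussions that led me to reflect on my performance": 6.9,
    "Identified what I did well or poorly and why": 6.8,
    "Helped me see how to improve or how to sustain good performance": 6.8,
}

total = sum(dash_means.values())
maximum = 7 * len(dash_means)  # 42 for six elements
print(f"{total:.1f} / {maximum}")  # 41.1 / 42
print(all(score >= 6 for score in dash_means.values()))  # True
```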

Discussion

Overall, participants and instructors perceived this training program as a valuable educational experience. Participants performed well on both the knowledge and skills assessments, and most reported increased self-efficacy in performing these complex, high-risk procedures. Informal feedback from APPs indicated that the critical care boot camp was the most valuable component of their onboarding at the institution. The curriculum was highly interactive, with little if any lecture. This design drew on the 4 stages of Kolb’s experiential learning theory, beginning with concrete experience in the procedural lab training and culminating in the active experimentation stage of the final simulations, where learners “put it all together.”

Several elements of the program’s development and implementation were perceived to contribute to its success. First, engaging multidisciplinary content experts and clinicians in developing the program ensured that participants’ educational needs would be addressed. Next, the involvement of a simulation expert along with simulation fellows and staff was essential to delivering high-fidelity cases. This expertise also supported high-quality debriefing, reflected in the learners’ very high ratings of faculty debriefing. Finally, the involvement of an interprofessional team including physicians, a respiratory therapist, and a paramedic was perceived as key to providing a realistic training environment.

We have not yet evaluated the long-term impact of the boot-camp program on how quickly APPs are credentialed for all procedures compared with traditional onboarding. However, instructors and participants had the impression that the program should facilitate procedural skills development under direct supervision and ultimately shorten the time to credentialing and autonomous practice in the ICU. Furthermore, although we did not evaluate the program’s impact on leadership and crisis resource management competencies, in our experience team-based simulation training may improve leadership and closed-loop communication and enhance the sense of teamwork in ways that can transfer to authentic clinical settings. In future iterations of this curriculum, we will allot more time to high-fidelity simulation cases and evaluate each participant as team leader using validated teamwork checklists.12,13 This work is more a quality assurance evaluation than curricular research, yet it provides a template for other programs that may wish to develop similar curricula for their APPs. Notably, this simulation-based program can be resource intensive, requiring several task trainers, high-fidelity full-body simulators, dedicated space, and faculty time. Especially with larger cohorts, careful attention to logistics and resource allocation, including faculty, is critical.

The learners’ primary suggestions for improving the curriculum were more opportunities to serve as team leader and to perform additional procedures. This feedback further supports our overall assessment that the program was well received and that additional educational initiatives for APPs should include high-fidelity simulation when feasible. It is also consistent with other authors’ experience with similar boot-camp curricula.14,15 Most participants felt the curriculum devoted the right amount of time to procedural training, leadership training, and discussion of relevant pathology. Several participants suggested adding formal training on ventilator alarm management to future iterations of the boot camp. Limitations of this single educational initiative include the small number of participants, its application to a single clinical environment, and the lack of assessment of its impact on actual patient care. Nonetheless, we hope that our experience implementing, delivering, and evaluating this simulation-based boot camp will be of value to other educational leaders seeking to provide skills training to APPs new to disciplines that involve invasive procedures and high-risk patient presentations.

Supplemental Material

Supplemental material, VCSL_Lumbar_Puncture_-_STEP_2_(1)_xyz143403a25ec14_(1) for Advanced Practice Provider Critical Care Boot Camp: A Simulation-Based Curriculum by Rami A Ahmed, Alex Botsch, Derek Ballas, Alma Benner, Jared Hammond, Tim Schnick, Ahmad Khobrani, Richard George and Maura Polansky in Journal of Medical Education and Curricular Development

Footnotes

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

Declaration of conflicting interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Author Contributions: All authors contributed to the conception and design of the manuscript, acquisition and analysis of data. All authors participated in the drafting of the article and revisions. All authors gave final approval of submitted version.

Supplemental Material: Supplemental material for this article is available online.

ORCID iD: Ahmad Khobrani https://orcid.org/0000-0001-9966-3987

References

- 1. Grabenkort WR, Meissen HM, Gregg SR, Coopersmith CM. Acute care nurse practitioners and physician assistants in critical care: transforming education and practice. Crit Care Med. 2017;45:1111–1114.

- 2. Polansky M, Garver GSH, Wilson LN, Pugh M, Hilton G. Postgraduate clinical education of physician assistants. J Physician Assist Educ. 2012;23:39–45.

- 3. Kleinpell RM, Ely EW, Grabenkort R. Nurse practitioners and physician assistants in the intensive care unit: an evidence-based review. Crit Care Med. 2008;36:2888–2897.

- 4. Lucklanow GM, Piper GL, Kaplan LJ. Bridging the gap between training and advance practice provider critical care competency. JAAPA. 2015;28:1–5.

- 5. Thomas PA, Kern DE, Hughes MT, Chen BY. Curriculum Development for Medical Education: A Six-Step Approach. 3rd ed. New York: Springer Publishing Company; 2015.

- 6. Alluri RK, Tsing P, Lee E, Napolitano J. A randomized controlled trial of high-fidelity simulation versus lecture-based education in preclinical medical students. Med Teach. 2016;38:404–409.

- 7. Barsuk JH, Cohen ER, McGaghie WC, Wayne DB. Long-term retention of central venous catheter insertion skills after simulation-based mastery learning. Acad Med. 2010;85:S9–S12.

- 8. Barsuk JH, Cohen ER, Potts S, et al. Dissemination of a simulation-based mastery learning intervention reduces central line-associated bloodstream infections. BMJ Qual Saf. 2014;23:749–756.

- 9. Spychalla MT, Heathman JH, Pearson KA, Herber AJ, Newman JS. Nurse practitioners and physician assistants: preparing new providers for hospital medicine at the Mayo Clinic. Ochsner J. 2014;14:545–550.

- 10. McGaghie WC. Research opportunities in simulation-based medical education using deliberate practice. Acad Emerg Med. 2008;15:995–1001.

- 11. Brett-Fleegler M, Rudolph J, Eppich W, et al. Debriefing assessment for simulation in healthcare: development and psychometric properties. Simul Healthc. 2012;7:288–294.

- 12. Kim J, Neilipovitz D, Cardinal P, Chiu M, Clinch J. A pilot study using high-fidelity simulation to formally evaluate performance in the resuscitation of critically ill patients: the University of Ottawa Critical Care Medicine, High-Fidelity Simulation, and Crisis Resource Management I Study. Crit Care Med. 2006;34:2167–2174.

- 13. Boet S, Etherington N, Larrigan S, et al. Measuring the teamwork performance of teams in crisis situations: a systematic review of assessment tools and their measurement properties [published online ahead of print October 1, 2018]. BMJ Qual Saf. doi: 10.1136/bmjqs-2018-008260.

- 14. Ataya R, Dasgupta R, Blanda R, Moftakhar Y, Hughes PG, Ahmed R. Emergency medicine residency boot camp curriculum: a pilot study. West J Emerg Med. 2015;16:356–361.

- 15. Yee J, Fuenning C, George R, et al. Mechanical ventilation boot camp: a simulation-based pilot study. Crit Care Res Pract. 2016;2016:4670672.
