Abstract

Background

Rapid and accurate detection of stroke by paramedics or other emergency clinicians at the time of first contact is crucial for timely initiation of appropriate treatment. Several stroke recognition scales have been developed to support the initial triage. However, their accuracy remains uncertain and there is no agreement on which of the scales performs better.

Objectives

To systematically identify and review the evidence pertaining to the test accuracy of validated stroke recognition scales, as used in a prehospital or emergency room (ER) setting to screen people suspected of having stroke.

Search methods

We searched CENTRAL, MEDLINE (Ovid), Embase (Ovid) and the Science Citation Index to 30 January 2018. We handsearched the reference lists of all included studies and other relevant publications and contacted experts in the field to identify additional studies or unpublished data.

Selection criteria

We included studies evaluating the accuracy of stroke recognition scales used in a prehospital or ER setting to identify stroke and transient ischemic attack (TIA) in people suspected of stroke. The scales had to be applied to actual people and the results compared to a final diagnosis of stroke or TIA. We excluded studies that applied scales to patient records, enrolled only screen‐positive participants, or did not report complete 2 × 2 data.

Data collection and analysis

Two review authors independently conducted a two‐stage screening of all publications identified by the searches, extracted data and assessed the methodologic quality of the included studies using a tailored version of QUADAS‐2. A third review author acted as an arbiter. We recalculated study‐level sensitivity and specificity with 95% confidence intervals (CI), and presented them in forest plots and in the receiver operating characteristics (ROC) space. When a sufficient number of studies reported the accuracy of the test in the same setting (prehospital or ER) and the level of heterogeneity was relatively low, we pooled the results using the bivariate random‐effects model. We plotted the results in the summary ROC (SROC) space presenting an estimate point (mean sensitivity and specificity) with 95% CI and prediction regions. Because of the small number of studies, we did not conduct meta‐regression to investigate between‐study heterogeneity and the relative accuracy of the scales. Instead, we summarized the results in tables and diagrams, and presented our findings narratively.
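As an illustration of the study‐level recalculation described above, the following minimal sketch derives sensitivity and specificity with 95% CIs from a 2 × 2 table. The review does not state which interval method was used; the Wilson score interval here is an assumption, and the function names and example counts are hypothetical.

```python
import math


def wilson_ci(successes, total, z=1.959964):
    """Wilson score interval for a binomial proportion (assumed method)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return centre - half, centre + half


def accuracy_from_2x2(tp, fp, fn, tn):
    """Return (estimate, lower, upper) for sensitivity and specificity."""
    sensitivity = tp / (tp + fn)  # proportion of stroke/TIA cases that screen positive
    specificity = tn / (tn + fp)  # proportion of non-cases that screen negative
    return {
        "sensitivity": (sensitivity, *wilson_ci(tp, tp + fn)),
        "specificity": (specificity, *wilson_ci(tn, tn + fp)),
    }


# Hypothetical counts, not taken from any included study.
result = accuracy_from_2x2(tp=88, fp=20, fn=12, tn=80)
for name, (estimate, lower, upper) in result.items():
    print(f"{name}: {estimate:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

A study without complete 2 × 2 data cannot be processed this way, which is why such studies were excluded.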

Main results

We selected 23 studies for inclusion (22 journal articles and one conference abstract). We evaluated the following scales: Cincinnati Prehospital Stroke Scale (CPSS; 11 studies), Recognition of Stroke in the Emergency Room (ROSIER; eight studies), Face Arm Speech Time (FAST; five studies), Los Angeles Prehospital Stroke Scale (LAPSS; five studies), Melbourne Ambulance Stroke Scale (MASS; three studies), Ontario Prehospital Stroke Screening Tool (OPSST; one study), Medic Prehospital Assessment for Code Stroke (MedPACS; one study) and PreHospital Ambulance Stroke Test (PreHAST; one study). Nine studies compared the accuracy of two or more scales. We considered 12 studies at high risk of bias and one with applicability concerns in the patient selection domain; 14 at unclear risk of bias and one with applicability concerns in the reference standard domain; and the risk of bias in the flow and timing domain was high in one study and unclear in another 16.

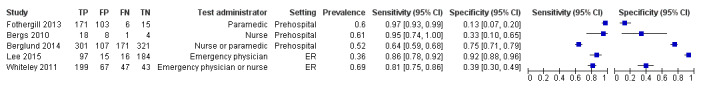

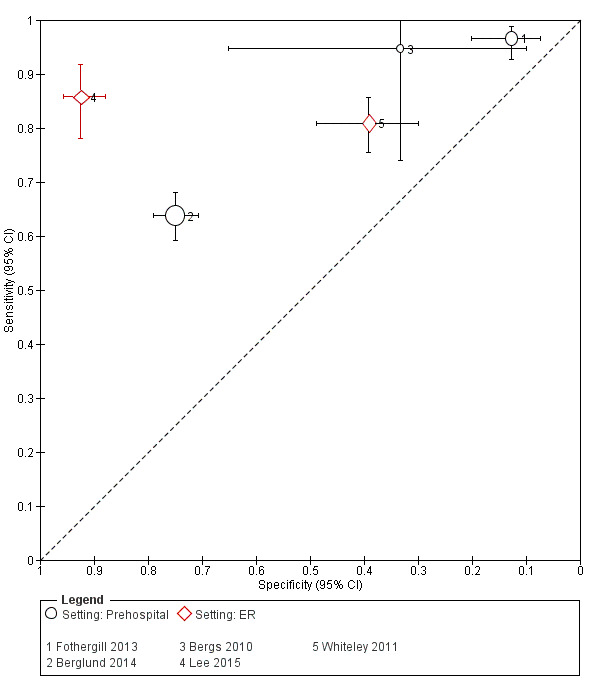

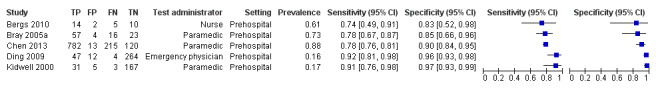

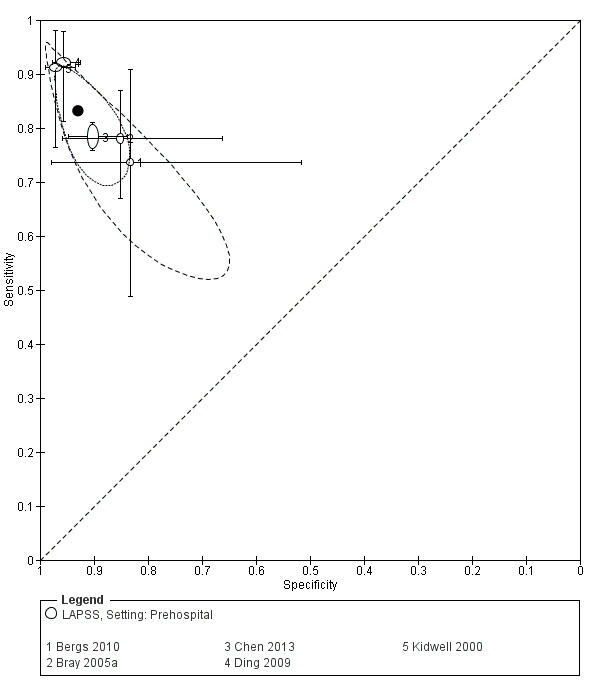

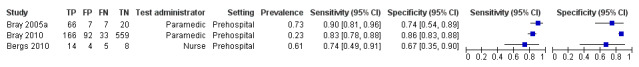

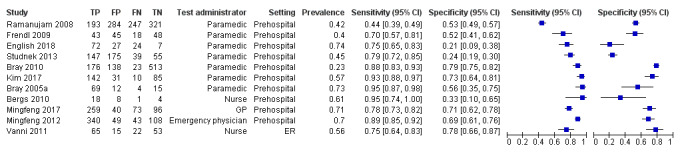

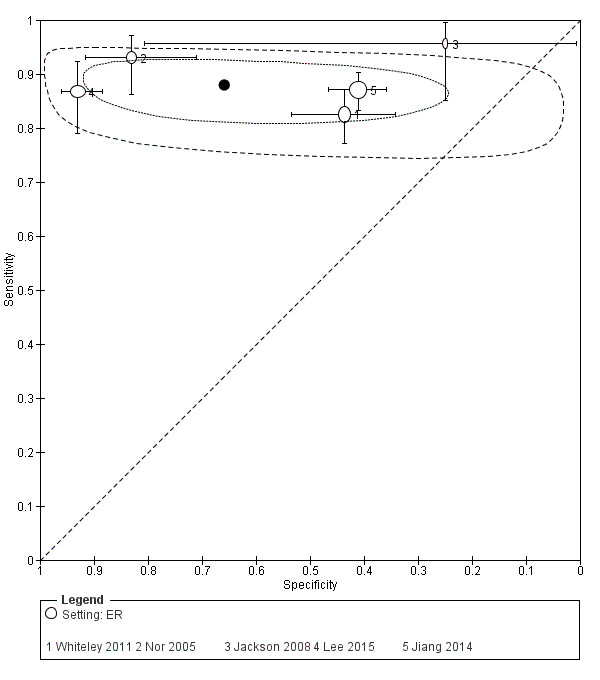

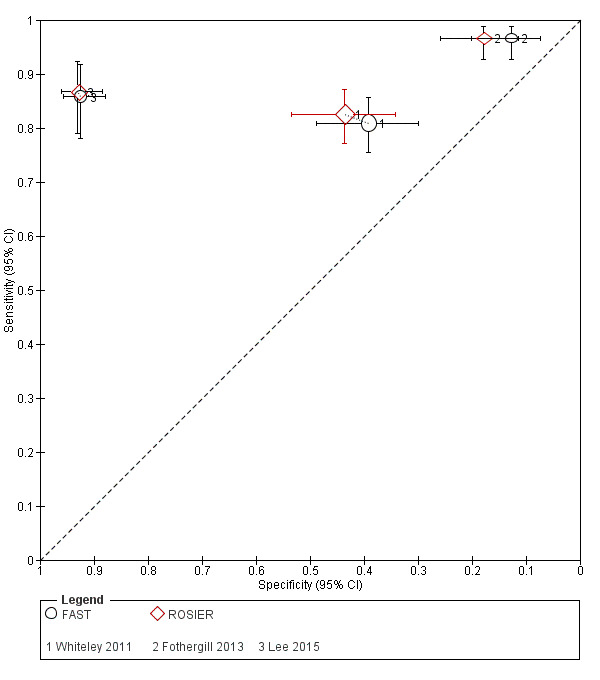

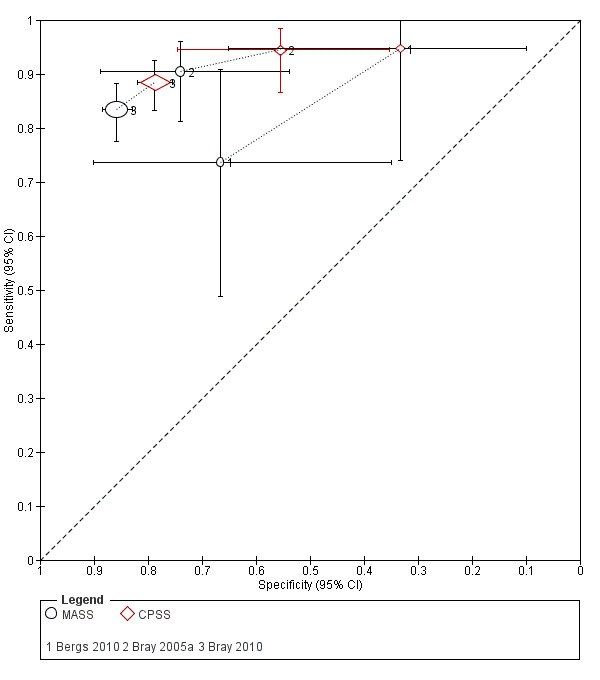

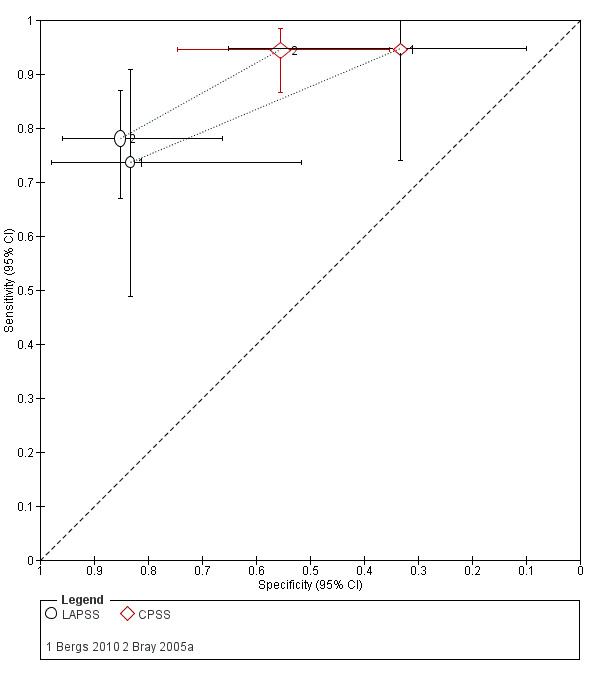

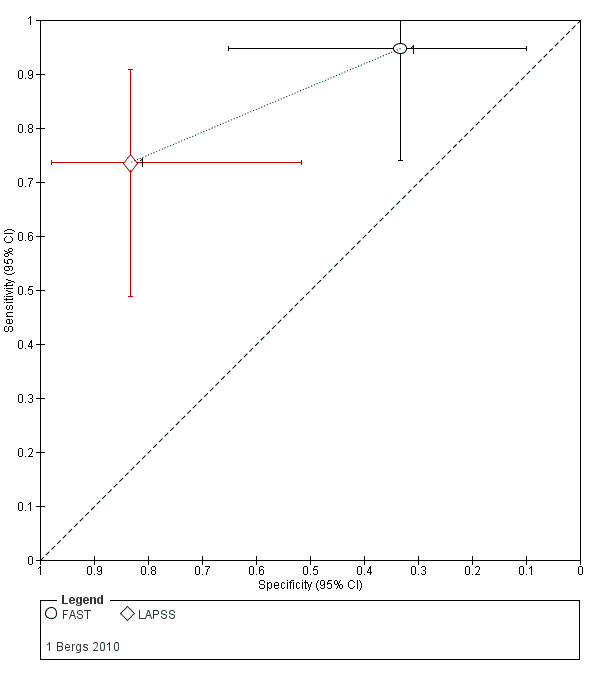

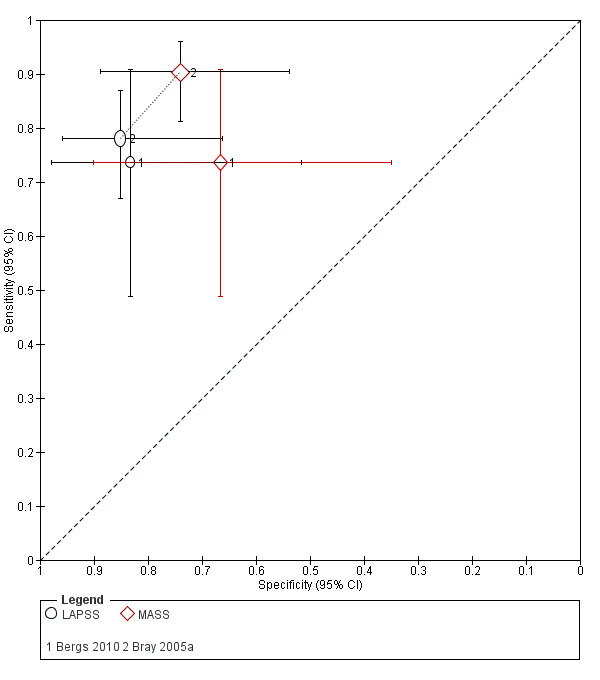

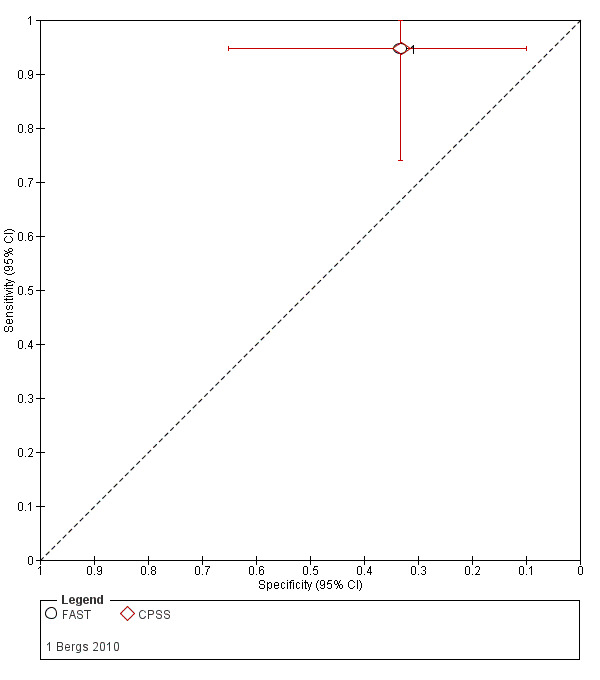

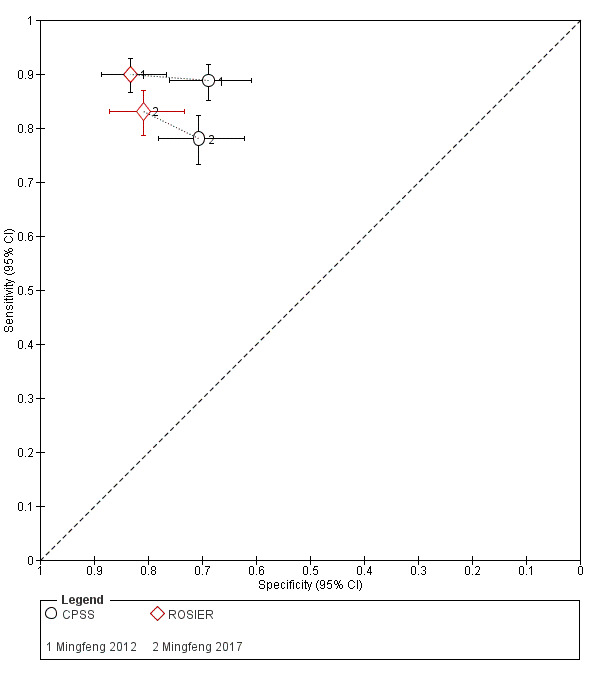

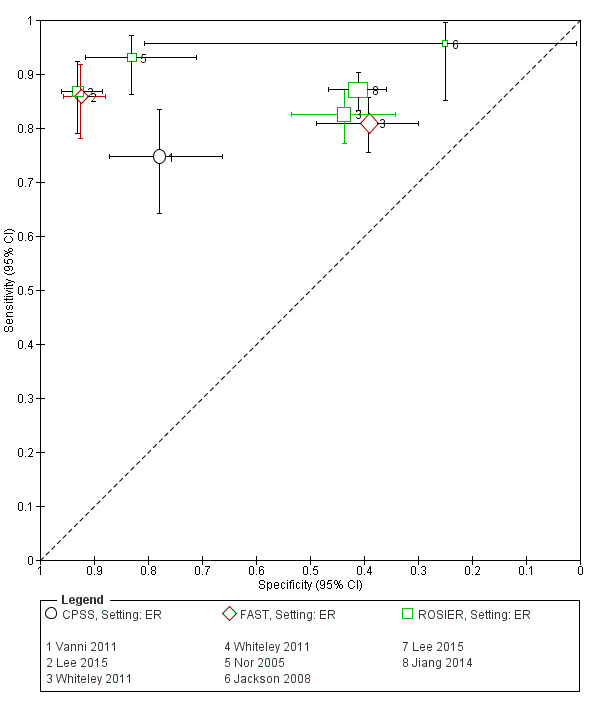

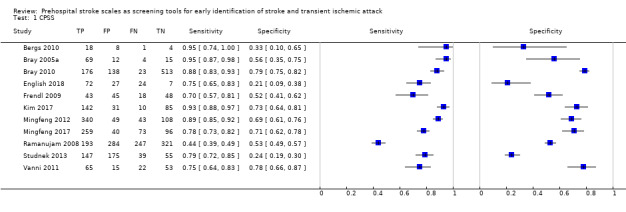

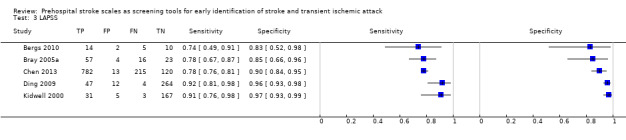

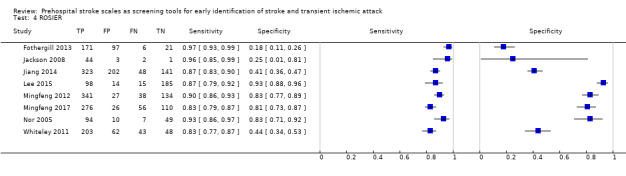

We pooled the results from five studies evaluating ROSIER in the ER and five studies evaluating LAPSS in a prehospital setting. The studies included in the meta‐analysis of ROSIER were of relatively good methodologic quality and produced a summary sensitivity of 0.88 (95% CI 0.84 to 0.91), with the prediction interval ranging from approximately 0.75 to 0.95. This means that the test will miss on average 12% of people with stroke/TIA which, depending on the circumstances, could range from 5% to 25%. We could not obtain a reliable summary estimate of specificity due to extreme heterogeneity in study‐level results. The summary sensitivity of LAPSS was 0.83 (95% CI 0.75 to 0.89) and summary specificity 0.93 (95% CI 0.88 to 0.96). However, we were uncertain about the validity of these results as four of the studies were at high and one at unclear risk of bias. We did not report summary estimates for the rest of the scales, as the number of studies per test per setting was small, the risk of bias was high or unclear, the results were highly heterogeneous, or a combination of these.

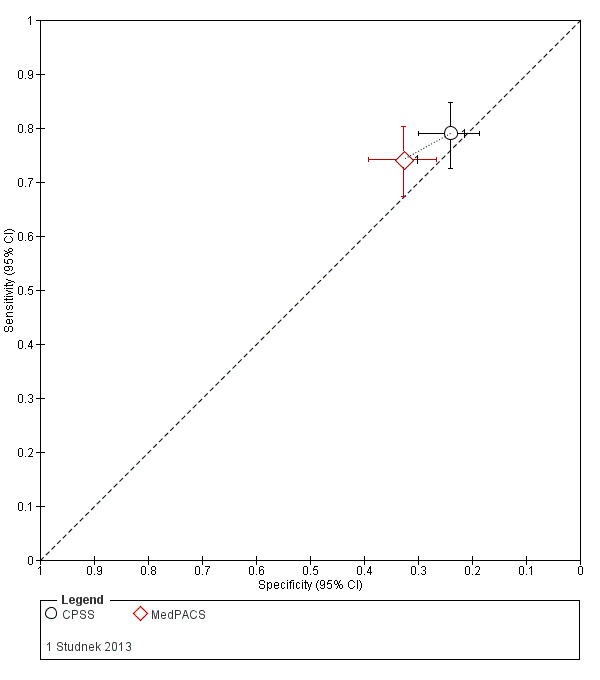

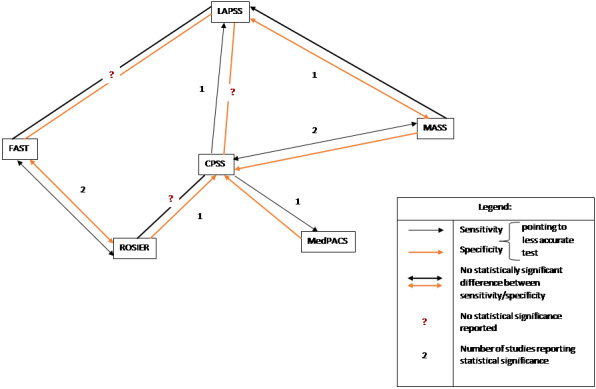

Studies comparing two or more scales in the same participants reported that ROSIER and FAST had similar accuracy when used in the ER. In the field, CPSS was more sensitive than MedPACS and LAPSS, but had similar sensitivity to that of MASS; and MASS was more sensitive than LAPSS. In contrast, MASS, ROSIER and MedPACS were more specific than CPSS; and the difference in the specificities of MASS and LAPSS was not statistically significant.

Authors' conclusions

In the field, CPSS consistently had the highest sensitivity and, therefore, should be preferred to other scales. Further evidence is needed to determine its absolute accuracy and whether alternative scales, such as MASS and ROSIER, which might have comparable sensitivity but higher specificity, should be used instead, to achieve better overall accuracy. In the ER, ROSIER should be the test of choice, as it was evaluated in more studies than FAST and showed consistently high sensitivity. In a cohort of 100 people of whom 62 have stroke/TIA, the test will miss on average seven people with stroke/TIA (ranging from three to 16). We were unable to obtain an estimate of its summary specificity. Because of the small number of studies per test per setting, high risk of bias, substantial differences in study characteristics and large between‐study heterogeneity, these findings should be treated as provisional hypotheses that need further verification in better‐designed studies.
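The cohort figures quoted above follow from the ROSIER summary sensitivity (0.88) and the approximate bounds of its prediction interval (0.75 to 0.95). The sketch below reproduces that arithmetic; the function name is hypothetical and rounding half up to whole people is an assumption about how the figures were rounded.

```python
import math


def missed_cases(n_with_condition, sensitivity):
    """Expected number of people with stroke/TIA who screen negative,
    rounded half up to whole people (assumed rounding rule)."""
    expected = n_with_condition * (1 - sensitivity)
    return math.floor(expected + 0.5)


n_with_stroke_or_tia = 62  # of a cohort of 100 people

average = missed_cases(n_with_stroke_or_tia, 0.88)  # summary sensitivity
fewest = missed_cases(n_with_stroke_or_tia, 0.95)   # upper prediction bound
most = missed_cases(n_with_stroke_or_tia, 0.75)     # lower prediction bound

print(f"missed on average: {average} (range {fewest} to {most})")
```

Because no summary specificity could be estimated, the same calculation cannot be made for the 38 people without stroke/TIA.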

Plain language summary

Accuracy of prehospital stroke scales to identify people with stroke or transient ischemic attack (TIA)

Background

Stroke is a life‐threatening medical condition in which brain tissue is damaged. This could be caused by a clot blocking the blood supply to part of the brain or bleeding in the brain. If symptoms resolve within 24 hours without lasting consequences, the condition is called TIA (mini stroke). Effective treatment depends on early identification of stroke and any delays may result in brain damage or death.

Emergency medical services are the first point of contact for people experiencing symptoms suggestive of stroke. Medical responders could identify people with stroke more accurately if they use checklists called stroke recognition scales. Such scales include symptoms and other readily‐available information. A positive result on the scale indicates high risk of stroke and the need for urgent specialist assessment. The scales do not differentiate between stroke and TIA; this is done in hospital by a neurologist or stroke physician.

Our objective was to review the research evidence on how accurately stroke recognition scales can detect stroke or TIA when used by paramedics or other prehospital clinicians, who are the first point of contact for people suspected of stroke.

Study characteristics

The evidence is current to 30 January 2018. We included studies assessing the accuracy of stroke recognition scales when applied to adults suspected of stroke out of hospital.

We included 23 studies evaluating the following scales: Cincinnati Prehospital Stroke Scale (CPSS; 11 studies), Recognition of Stroke in the Emergency Room (ROSIER; eight studies), Face Arm Speech Time (FAST; five studies), Los Angeles Prehospital Stroke Scale (LAPSS; five studies), Melbourne Ambulance Stroke Scale (MASS; three studies), Ontario Prehospital Stroke Screening Tool (OPSST; one study), Medic Prehospital Assessment for Code Stroke (MedPACS; one study) and PreHospital Ambulance Stroke Test (PreHAST; one study). Nine studies compared two or more scales in the same people. The results from five studies were combined to estimate the accuracy of ROSIER in the emergency room (ER) and five studies to estimate the accuracy of LAPSS when used by ambulance clinicians.

Quality of the evidence

Many of the studies were of poor or unclear quality and we could not be sure that their results were valid.

Key results of the accuracy of the evaluated prehospital stroke scales

Studies differed considerably in terms of included participants and other characteristics. As a consequence, studies evaluating the same scale reported variable results.

We combined five studies evaluating ROSIER in the ER and obtained an average sensitivity of 88% (88 out of 100 people with stroke/TIA will test positive on ROSIER). We were unable to obtain an estimate of specificity (how many people without stroke/TIA will test negative).

We also combined the results for LAPSS, but the included studies were of poor quality and the results may not be valid. The rest of the scales were evaluated in a smaller number of studies or the results were too variable to be combined statistically.

A small number of studies compared two or more scales when applied to the same participants. Such studies are more likely to produce valid results as the scales are used in the same circumstances. They reported that in the ER, ROSIER and FAST had similar accuracy, but ROSIER was evaluated in more studies. When used by ambulance staff, CPSS identified more people with stroke/TIA in all studies, but also more people without stroke/TIA tested positive.

Conclusion

Current evidence suggests that CPSS should be used by ambulance clinicians in the field. Further research is needed to estimate the proportion of wrong results and whether alternative scales, such as MASS and ROSIER, which might have comparable sensitivity but higher specificity, should be used instead to achieve better overall accuracy. In the ER, ROSIER should be the test of choice. In a group of 100 people of whom 62 have stroke/TIA, the test will miss on average seven people with stroke/TIA (ranging from three to 16). Because of the small number of studies evaluating the tests in a specific setting, poor quality, substantial differences in study characteristics and variability in results, these findings should be treated with caution and need further verification in better‐designed studies.

Summary of findings

Summary of findings: prehospital stroke scales as screening tools for early identification of stroke and transient ischemic attack.

OBJECTIVES AND METHODS

Review question: what is the absolute and relative (comparative) accuracy of stroke recognition scales used in a prehospital or ER setting to identify people with stroke and TIA?

Inclusion criteria: primary studies evaluating the test accuracy of stroke recognition scales in a prehospital or ER setting. The scales were used to identify stroke and TIA in people suspected of stroke, and the results were compared to a final diagnosis of stroke or TIA made by a neurologist or stroke physician (reference standard). Only studies reporting sufficient data to reconstruct the full 2 × 2 table were included. Studies in which the scales were applied to patient records, or which included only scale‐positive patients, were excluded.

Databases searched: CENTRAL, MEDLINE, Embase, Science Citation Index, plus hand‐searches of reference lists

Search date: from earliest date possible to 30 January 2018

Methodologic quality assessment: QUADAS‐2

Statistical analysis: if appropriate, the bivariate random‐effects model was used to pool results

RESULTS

Number of studies included: 23 studies including 9230 participants, range 31–1130 participants, median 312 (IQR 154 to 554)

Number of scales evaluated: 8 scales, CPSS (11 studies), ROSIER (8 studies), FAST (5 studies), LAPSS (5 studies), MASS (3 studies), OPSST (1 study), MedPACS (1 study), PreHAST (1 study)

Setting: 6 studies evaluated the scales in the ER and 17 in a prehospital setting (16 evaluated the tests in the field and 1 in primary care)

Studies comparing scales in the same participants: 9 studies compared ≥ 2 scales in the same patients (3 studies each compared FAST vs ROSIER and CPSS vs MASS, 2 studies each compared ROSIER vs CPSS, LAPSS vs CPSS and LAPSS vs MASS, and 1 study each compared some of the remaining pairs)

Methodologic quality: 12 studies were at high risk of bias and 1 with applicability concerns in the patient selection domain; 14 at unclear risk of bias and 1 with applicability concerns in the reference standard domain; and 1 at high risk of bias and another 16 at unclear risk of bias in the flow and timing domain

CONCLUSIONS

CPSS should be preferred in the field as it had consistently high sensitivity in direct comparisons; further evidence is needed to determine its absolute accuracy and whether alternative scales, such as MASS and ROSIER, which might have comparable sensitivity but higher specificity, should be used instead to achieve better overall accuracy. In the ER, ROSIER should be the test of choice. In a cohort of 100 people of whom 62 have stroke/TIA, the test will miss on average 7 people with stroke/TIA (range 3–16). We were unable to obtain an estimate of its summary specificity. Because of the small number of studies per test per setting, high risk of bias, substantial differences in study characteristics and large between‐study heterogeneity, these findings should be treated as provisional hypotheses that need further verification in better‐designed studies.

RESULTS: relative (comparative) accuracy

Considering only the results for which the statistical significance was reported or could be determined from the non‐overlapping CIs of the accuracy estimates, the results of the comparative studies could be summarized as follows.

In the ER:

In the field:

Additional data from Purrucker 2015 (excluded from the main analysis) contradicted some of these results.

| RESULTS: absolute accuracy | ||||

| Index test | Number of studies |

Number of studies at high risk of bias or applicability concerns |

Results | Comments |

| ROSIER | 8 (2 in the field, 1 in primary care and 5 in ER) | 2 (1 in patient selection and 1 in flow and timing) |

Mean summary sensitivity 0.88 (95% CI 0.84 to 0.91), prediction region 0.75 to 0.95 Specificity (study‐level, range) 0.18 to 0.93 |

We report only a mean summary estimate for sensitivity, based on 5 studies of relatively good methodologic quality conducted in the ER. It means that in this setting the test will miss on average 12/100 people with stroke/TIA, but this could range from 5 to 25 people. Study‐level specificities were extremely heterogeneous and the statistical uncertainty in the summary estimate was too great to allow meaningful clinical interpretation. Across the studies, between 7/100 and 82/100 people without stroke/TIA tested positive. |

| CPSS | 11 (9 in the field, 1 in primary care and 1 in ER) | 9 (8 in patient selection and 1 in the applicability of the reference standard) | Sensitivity 0.44 to 0.95 Specificity 0.21 to 0.79 |

High level of heterogeneity even when analysis restricted to use of CPSS in a prehospital setting by paramedics (7 studies). Across all studies, between 5/100 and 55/100 people with stroke/TIA were missed and between 21/100 and 79/100 without stroke/TIA tested positive |

| LAPSS | 5 studies (prehospital) | 4 (patient selection) | Summary sensitivity 0.83 (95% CI 0.75 to 0.89) Summary specificity 0.93 (95% CI 0.88 to 0.96) |

According to the obtained summary estimates, the test will miss 17/100 people with stroke/TIA and 7/100 without stroke/TIA will test positive. However, these results should be treated with caution as 4/5 studies were at high risk of selection bias and for most the level of bias in the reference standard and the flow and timing domain could not be fully assessed. |

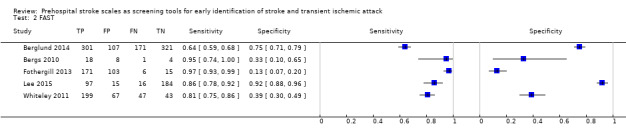

| FAST | 5 studies (3 in prehospital and 2 in ER) | 3 (2 in patient selection and 1 in flow and timing) | Sensitivity 0.64 to 0.97 Specificity 0.13 to 0.92 |

Heterogeneous results even when results analyzed separately by setting. Across studies the test missed between 3/100 and 36/100 people with stroke/TIA and between 8/100 and 87/100 people without stroke/TIA tested positive. |

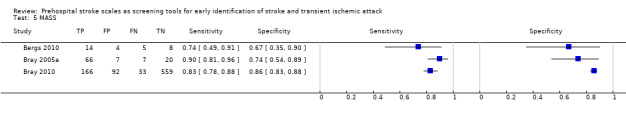

| MASS | 3 studies (prehospital) | 3 (patient selection) | Sensitivity 0.74 to 0.90 Specificity 0.67 to 0.86 |

Heterogeneous results from studies at high risk of bias. Across studies, the test missed between 10/100 and 26/100 people with stroke/TIA and between 14/100 and 33/100 people without stroke/TIA tested positive. |

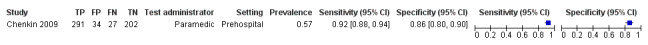

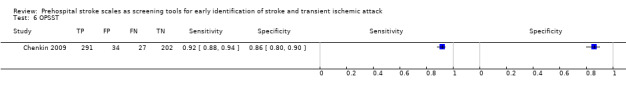

| OPSST | 1 study | 1 (patient selection) | Sensitivity 0.92

(95% CI 0.88 to 0.94) Specificity 0.86 (95% CI 0.80 to 0.90) |

High risk of selection bias; the focus was on the positive predictive value which was 0.90 (95% CI 0.86 to 0.93). This means that 90/100 people with a positive test had stroke/TIA. |

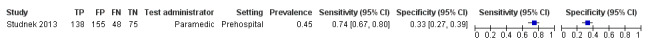

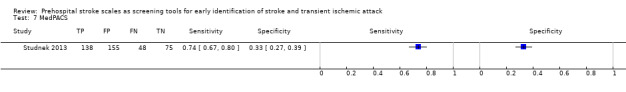

| MedPACS | 1 study | 1 (patient selection) | Sensitivity 0.74 (95% CI 0.67 to 0.80) Specificity 0.33 (95% CI 0.27 to 0.39) |

Retrospective data collection. The test missed 26/100 people with stroke/TIA and 67/100 people without stroke/TIA tested positive. |

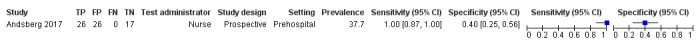

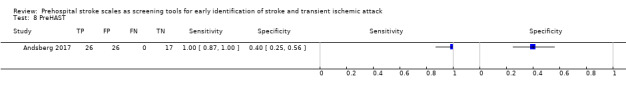

| PreHAST | 1 study | No quality issues | Sensitivity 1.00 (95% CI 0.87 to 1.00) Specificity 0.40 (95% CI 0.25 to 0.56) |

PreHAST was designed for both recognition and severity assessment of stroke in the field; this was a pilot study focusing mainly on the accuracy of the scale to identify people with stroke/TIA. The test missed 0 people with stroke/TIA, but 60/100 people without stroke/TIA tested positive. |

CI: confidence interval; CPSS: Cincinnati Prehospital Stroke Scale; ER: emergency room; FAST: Face Arm Speech Time; IQR: interquartile range; LAPSS: Los Angeles Prehospital Stroke Scale; MASS: Melbourne Ambulance Stroke Scale; MedPACS: Medic Prehospital Assessment for Code Stroke; OPSST: Ontario Prehospital Stroke Screening Tool; PreHAST: PreHospital Ambulance Stroke Test; ROSIER: Recognition of Stroke in the Emergency Room; TIA: transient ischemic attack.

Background

Worldwide, stroke is the second leading cause of death. By 2020, 19 million out of 25 million annual stroke deaths will occur in low‐ to middle‐income countries. Some 88% of these events are ischemic strokes, with the remainder being hemorrhagic strokes. Stroke is also a leading cause of disability, with 30% of stroke survivors requiring life‐long assistance with their activities of daily living, 20% requiring assistance with ambulation and 16% requiring institutional levels of care (Daroff 2012). Ischemic stroke is caused by blockage of blood flow by thrombi, which are blood clots made of platelets, lipids, clotting factors and fibrin. Fibrin is the particular substrate of the thrombolytic, tissue plasminogen activator (tPA), which is a standard of care treatment for certain people with stroke. Failure to restore blood flow in a timely fashion results in an ischemic stroke, and infarction of brain tissue.

A transient ischemic attack (TIA) is an episode of neurologic deficit that reverses without any clinical evidence of neuronal damage. TIAs are prognosticators for future strokes and also require rapid identification, so that physicians can confirm whether or not the symptoms have resolved and then work towards early risk stratification, which has been shown to decrease recurrence (Amarenco 2008). The reference standard for diagnosis of stroke and TIA is the evaluation by a neurologist or stroke physician upon review of history, physical exam and a non‐contrast brain computed tomography (CT) scan.

Intravenous tPA was the only approved treatment of acute ischemic stroke up until 2015. Utilization of intravenous tPA is limited by its time sensitivity and this medication can only be provided within a window of 0 to 4.5 hours after onset of symptoms. Data from multicenter randomized controlled trials (RCTs) of intravenous tPA treatment in acute stroke have shown that the odds of a favorable outcome at three months increased as onset‐to‐treatment time decreased (Hacke 2008; NINDS 1995; Sandercock 2012). Pooled analysis supported these findings (Wardlaw 2009).

In an effort to deliver thrombolytics at the earliest time point possible, various streamlined 'stroke code' systems have been developed to decrease the door‐to‐treatment time. The current American Heart Association guideline recommends that the target door‐to‐treatment time be less than 60 minutes. However, this is rarely achieved (Lyden 1994; Marler 2000; O'Connor 1999; Saver 2013). In addition to tPA, the American Heart Association/American Stroke Association (Powers 2018), and the European Stroke Organisation (ESO 2018), now recommend that for selected people with acute ischemic stroke, mechanical thrombectomy be considered up to 24 hours after onset of symptoms.

An ideal system for rapid thrombolytic delivery and, now, consideration for revascularization, begins with rapid and accurate stroke detection at the time of first contact with medical personnel (in most cases paramedics). Stroke pathways that include prehospital notification have been demonstrated to reduce door‐to‐treatment time and improve outcomes. Furthermore, hemorrhagic strokes require rapid assessment and it is believed that early identification and intervention are associated with a trend toward smaller final hemorrhage volume (Anderson 2008). Optimal time of intervention and specific therapy including blood pressure agents and targets (Butcher 2013; Hill 2013), and use of clotting factors (Flaherty 2014), are currently under investigation.

Notably, all studies are focused on initiating therapies as soon as possible. However, the diagnostic accuracy of paramedics' diagnosis of stroke based on unstructured clinical assessment is poor (Harbison 1999). Better results could be achieved if validated stroke recognition tools are used to support the initial triage. Several such instruments have been developed and implemented in different countries worldwide, but the question about their absolute and relative accuracy remains unanswered.

Target condition being diagnosed

We included all suspected acute strokes (ischemic, hemorrhagic or TIAs) in people assessed by prehospital or emergency room (ER) staff including paramedics, emergency medical technicians (EMTs), nurses, emergency physicians or general practitioners (GPs). Trauma must not be a primary disease mechanism, but we considered eligible studies including people with secondary trauma (a fall due to stroke). In the case of TIA, it is impossible to know if the neurologic deficit has resolved until the person has been assessed by a neurologist or stroke physician and has had adequate imaging. TIA and stroke present on a single spectrum of disease that cannot be separated into categories at the time of first contact by the responding healthcare staff.

We included TIA in the target condition as the scales are not intended to differentiate between stroke and TIA. Therefore, if a person with relevant symptoms at presentation and a final diagnosis of TIA tests positive on the scale, the result will be treated as true positive rather than false positive. However, we appreciate that a lack of clear guidance on whether or not to apply the scales on people who are no longer symptomatic at the time of first contact is likely to introduce variation in the spectrum of included participants and, as a result, in test accuracy. We took this into consideration when interpreting the results from the included studies.

Index test(s)

The index tests are prehospital scales used to determine whether the person is having a stroke. They are based on the National Institutes of Health Stroke Scale (NIHSS) and the first such tools, Cincinnati Prehospital Stroke Scale (CPSS) and Los Angeles Prehospital Stroke Scale (LAPSS), were developed and introduced in the USA in the mid‐1990s (Nor 2004). The use of prehospital stroke scales by emergency medical responders is recommended by the American Heart Association/American Stroke Association (Powers 2018), the European Academy of Neurology and the European Stroke Organisation (Kobayashi 2018). However, they make no recommendations about the use of specific instruments. The scales are in wide circulation worldwide and emergency medical responders receive training on how to use them as part of their professional education.

The scales are screening tools intended for use by prehospital and ER staff, and are not meant for diagnosis of any neurologic condition. Furthermore, they are not for determining the severity of stroke (unless they have a dual purpose) or the type of stroke (ischemic versus hemorrhagic versus TIA, or any subtypes). Due to the urgency to act on any type of stroke, the prehospital environment is not the appropriate setting in the decision tree to separate ischemic from hemorrhagic stroke or stroke from TIA. This is done by the attending neurologist or stroke physician.

The following stroke recognition scales were evaluated in the studies eligible for inclusion in the current review.

Cincinnati Prehospital Stroke Scale (CPSS; Kothari 1999).

Los Angeles Prehospital Stroke Scale (LAPSS; Kidwell 2000).

Melbourne Ambulance Stroke Scale (MASS; Bray 2005a).

Ontario Prehospital Stroke Screening Tool (OPSST; Chenkin 2009).

Face Arm Speech Time (FAST; Harbison 2003).

Recognition of Stroke in the Emergency Room (ROSIER; Nor 2005).

Medic Prehospital Assessment for Code Stroke (MedPACS; Studnek 2013).

PreHospital Ambulance Stroke Test (PreHAST; Andsberg 2017).

We summarized the characteristics of the evaluated scales in Table 2. Each scale consists of a list of checkbox items from the patient's history of presenting illness, past medical history, physical exam and basic laboratory values. The presence of any of the symptoms listed on the scale indicates high probability of stroke and should trigger an emergency stroke protocol. If none of the listed symptoms are present, diagnosis of stroke is less likely but not completely ruled out. Each symptom is scored '+1' when present and '0' when absent. Because the number of symptoms that could be scored '+1' is different for different scales, the total score varies. However, for all scales included in our review, a total score '+1 or greater' indicates high probability of stroke and warrants a referral for specialist assessment.
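The scoring rule described above can be sketched in a few lines. This is a minimal illustration using the three CPSS screen items (facial palsy, speech disturbance, arm drift/weakness); the dictionary keys and function names are hypothetical, and eligibility criteria, which some scales apply before scoring, are not modelled.

```python
# Screen items for CPSS; key names are illustrative only.
CPSS_ITEMS = ("facial_palsy", "speech_disturbance", "arm_drift")


def total_score(findings, items=CPSS_ITEMS):
    """Add 1 for each listed symptom that is present, 0 otherwise."""
    return sum(1 for item in items if findings.get(item, False))


def screen_positive(findings, items=CPSS_ITEMS, threshold=1):
    """A total score of +1 or greater triggers referral for specialist assessment."""
    return total_score(findings, items) >= threshold


patient = {"facial_palsy": False, "speech_disturbance": True, "arm_drift": False}
print(total_score(patient), screen_positive(patient))
```

A negative screen makes stroke less likely but, as noted above, does not rule it out.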

1. Characteristics of the evaluated stroke identification scales.

| Characteristic | CPSS | FAST | LAPSS | MASS | ROSIER | MedPACS | PreHAST | OPSST |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Eligibility criteria (exclusion criteria for OPSST) | — | — | Age > 45 years; history of seizures or epilepsy absent; symptom duration < 24 hours; at baseline, patient not wheelchair bound or bedridden; blood glucose 60–400 mg/dL (3.3–22.2 mmol/L) | Age > 45 years; history of seizures or epilepsy absent; at baseline, patient not wheelchair bound or bedridden; blood glucose 2.8–22.2 mmol/L | — | History of seizures or epilepsy absent; symptom duration ≤ 25 hours; blood glucose 60–400 mg/dL (3.3–22.2 mmol/L) | Age > 18 years; intended for use only in conscious people, i.e. alert or aroused by minor stimulation | CTAS level 1 or uncorrected airway, breathing or circulatory problem (or both); symptoms of the stroke have resolved; blood sugar < 4 mmol/L; seizure at onset of symptoms or observed by paramedic; GCS < 10; terminally ill or palliative care patient; could not arrive to a stroke center within 2 hours of a clearly determined time of symptom onset or the time the patient was "last seen in a usual state of health" |
| Screen items | | | | | | | | |
| Facial palsy | +1 | +1 | +1 | +1 | +1 | +1 | +1 | +1 |
| Gaze preference | — | — | — | — | — | +1 | +2 | — |
| Vision | — | — | — | — | +1 | — | +2 | — |
| Speech disturbance | +1 | +1 | — | +1 | +1 | +1 | 0–2 | +1 |
| Hand grip | — | — | +1 | +1 | — | — | — | — |
| Arm drift/weakness | +1 | +1 | +1 | +1 | +1 | +1 | 0–2 | +1 |
| Leg drift/weakness | — | — | — | — | +1 | +1 | 0–2 | +1 |
| No seizure at onset | — | — | — | — | –1 | — | — | — |
| Blood glucose > 3.5 mmol/L | — | — | — | — | –1 | — | — | — |
| Other | — | — | — | — | — | — | Verbal instructions +2; sensory (pain) 0–2 | — |
| Score range | 0–3 | 0–3 | 0–3 | 0–4 | –2 to 5 | 0–5 | 0–19 | 0–4 |
| Positivity threshold | ≥ 1 | ≥ 1 | ≥ 1 | ≥ 1 | ≥ 1 | ≥ 1 | ≥ 1 | ≥ 1 |

CPSS: Cincinnati Prehospital Stroke Scale; Kothari 1997 and Kothari 1999.

CTAS: Canadian Triage and Acuity Scale.

FAST: Face Arm Speech Time; Kleindorfer 2007.

GCS: Glasgow Coma Scale.

LAPSS: Los Angeles Prehospital Stroke Scale. This refers to the LAPSS criteria published in Kidwell 2000, which differ slightly from an earlier version of the scale published in Kidwell 1998. In LAPSS 2000 an eligibility criterion is considered met even when the answer to the question is unknown (e.g. the person will be considered > 45 years old when this information is not available). In LAPSS 1998 when the answer to an eligibility question is unknown, the criterion is considered unmet and the person is not eligible for assessment with LAPSS; in addition, in the earlier version the symptom duration was 12 (and not 24) hours. Only LAPSS 2000 criteria are presented in the above table as no studies using LAPSS 1998 were included.

MASS: Melbourne Ambulance Stroke Scale; Bray 2005a.

MedPACS: Medic Prehospital Assessment for Code Stroke; Studnek 2013.

OPSST: Ontario Prehospital Stroke Screening Tool; Chenkin 2009. The authors point out that "The addition of these exclusion criteria may be helpful for reducing the unnecessary triage of patients with stroke mimics and patients who are ineligible for fibrinolysis" (p. 154).

PreHAST: PreHospital Ambulance Stroke Test; Andsberg 2017. Designed to screen for common stroke symptoms and grade severity, similarly to the National Institutes of Health Stroke Scale (NIHSS); simultaneous testing of right and left side for visual field and sensory items; only verbal instructions allowed, so it tests indirectly for sensory (Wernicke's) aphasia.

ROSIER: Recognition of Stroke in the Emergency Room; Nor 2005.

Some of the scales include additional criteria which determine whether the person is eligible for assessment with the respective scale. These criteria have been added to improve specificity by excluding people with common stroke mimics. However, the eligibility criteria of OPSST aim not only to reduce unnecessary triage of people with stroke mimics, but also of people who would be ineligible for fibrinolysis, regardless of whether stroke is present or not (e.g. people who could not be transported on time to an acute stroke care center) (Chenkin 2009).

ROSIER and PreHAST use slightly different scoring systems. ROSIER comprises five physical symptoms, each scored '+1', and two additional items, seizure activity and abnormal blood sugar, each scored '‐1'. The presence of either of these additional items makes the diagnosis of stroke less likely even when some of the five listed symptoms are present. The total score could range from '‐2' (none of the five physical symptoms is present but both additional items are) to '+5' (all physical symptoms are present and neither of the two additional items is). The positivity threshold is the same as for the other scales: '+1 or greater'.
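To make the ROSIER arithmetic concrete, here is a minimal sketch that follows the description in the paragraph above: five symptoms scored +1 and two additional items scored ‐1. The key names and function names are hypothetical, and the sketch is not a clinical implementation of the published scale.

```python
# Item names are illustrative; the scoring follows the paragraph above.
ROSIER_SYMPTOMS = (
    "facial_palsy",
    "visual_disturbance",
    "speech_disturbance",
    "arm_weakness",
    "leg_weakness",
)
ROSIER_NEGATIVE_ITEMS = ("seizure_activity", "abnormal_blood_sugar")


def rosier_score(findings):
    """Each symptom present adds 1; each additional item present subtracts 1."""
    plus = sum(1 for item in ROSIER_SYMPTOMS if findings.get(item, False))
    minus = sum(1 for item in ROSIER_NEGATIVE_ITEMS if findings.get(item, False))
    return plus - minus


def rosier_positive(findings, threshold=1):
    """Positive screen when the total score is +1 or greater."""
    return rosier_score(findings) >= threshold


# One symptom plus seizure activity nets to 0, so the screen is negative.
example = {"arm_weakness": True, "seizure_activity": True}
print(rosier_score(example), rosier_positive(example))
```

The example shows how an additional item can cancel a physical symptom and bring the total below the positivity threshold.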

PreHAST is a tool "designed to screen for common stroke symptoms and grade severity, similarly to the NIHSS." (p. 2) It includes stroke symptoms that could predict main arterial vessel occlusion in addition to recognizing people with stroke in the field (Andsberg 2017). It comprises eight items that are scored differently (e.g. 0 or 1; 0, 1 or 2; 0 or 2), with 0 indicating absence of the symptom and 1 and 2, different levels of severity of a present symptom. The total score ranges from 0 to 19 points and '+1 or greater' is used as a positivity threshold to identify potential stroke. One study eligible for inclusion in this review evaluated PreHAST as the only prehospital stroke scale combining recognition of stroke and assessment of severity. We identified studies evaluating similar 'dual purpose' scales, but none of them met our inclusion criteria, mainly because the scales were applied to patient records (e.g. Purrucker 2015; Purrucker 2017).

Clinical pathway

The clinical pathway is simple. When the paramedic, ambulance worker or medical attendant who is first on the scene suspects that the person may be having a stroke, they apply a stroke scale as part of their evaluation of the person. Thus, the point of first contact between emergency medical responders and the person is where the index tests are to be implemented. The people are then brought to an ER for further evaluation and clinical workup. The triage of people who present directly to the ER and are suspected of stroke could also involve a stroke recognition scale.

Alternative test(s)

As discussed earlier, in addition to PreHAST we identified other prehospital stroke scales that combine stroke identification and severity assessment. Most of them were repurposed stroke severity scales (e.g. Kurashiki Prehospital Stroke Scale (KPSS), Los Angeles Motor Scale (LAMS), eight‐item National Institutes of Health Stroke Scale (sNIHSS‐8) and five‐item National Institutes of Health Stroke Scale (sNIHSS‐5)) initially designed to identify people with large vessel occlusion (LVO), who might be candidates for thrombectomy. They were evaluated in a small number of studies none of which met our inclusion criteria. In addition, one of the included studies compared CPSS to a panel of blood biomarkers but, as far as we are aware, these are not routinely used in clinical practice and have not been recommended for prehospital triage of people suspected of stroke (Vanni 2011).

Rationale

Despite the fact that prehospital stroke recognition scales are widely used in clinical practice, there has been little effort to systematically identify and review the evidence pertaining to their accuracy. Two non‐Cochrane systematic reviews with objectives similar to ours have been published (Brandler 2014; Rudd 2016). The first review, Brandler 2014, included only studies in which the scales were used by paramedics, in agreement with the usual practice in the USA emergency medical services (EMS). The authors noted the heterogeneity in test accuracy estimates and concluded that "LAPSS and CPSS had similar diagnostic capabilities" (p. 1). This was questioned by the authors of the second review, Rudd 2016, which had a broader scope and concluded that "Available data do not allow a strong recommendation to be made about the superiority of a stroke recognition instrument." (p. 1). Given the contradicting outcomes from these two investigations, we decided to review the evidence pertaining to the absolute and relative accuracy of prehospital stroke recognition scales using well‐defined inclusion criteria and established Cochrane Review methods, in order to make recommendations for future research and, if appropriate, for clinical practice.

Objectives

To systematically identify and review the evidence pertaining to the test accuracy of validated stroke recognition scales used in a prehospital or emergency room (ER) setting to screen people suspected of having stroke.

Secondary objectives

To investigate the effect of potential sources of heterogeneity on test accuracy estimates.

Methods

Criteria for considering studies for this review

Types of studies

We considered all primary test accuracy studies if they evaluated a stroke recognition scale (index test) used in a prehospital or ER setting, against a final diagnosis of stroke/TIA. We included only those studies reporting sufficient data to determine test accuracy parameters (2 × 2 table). We included retrospective studies using stroke and EMS registry data, if the scales had been applied directly, face‐to‐face, to eligible patients. We excluded studies in which the scales were applied to patient records rather than to actual patients. We also excluded studies that enrolled only screen‐positive patients.

Participants

We defined the target population as non‐comatose, non‐trauma patients suspected of stroke, with symptom duration under 24 hours at the time of presentation. Participants had to be over 18 years of age as this is a criterion for thrombolytic use. We included studies that had a subpopulation of people with previous history of stroke. The stroke recognition scales had to be applied in a prehospital or emergency setting.

We defined comatose patients as people who presented in the field with a Glasgow Coma Scale (GCS) score less than 8 and, therefore, required intubation and life‐saving airway management. We included studies on people with a depressed level of consciousness who were protecting their airway, as their exam was not confounded by medications used for the induction and maintenance of an artificial airway.

Index tests

The index tests were prehospital scales used to determine whether or not the person was having a stroke. We included all such scales if they were evaluated in eligible studies. The index tests could have been administered by a paramedic, an emergency medical responder, a nurse, an emergency physician or a general practitioner (GP). There were no limitations on the amount of training the scale administrator had received with the particular stroke scale. However, we acknowledge that differences in knowledge, experience and training could contribute to heterogeneity in test accuracy results. Here we used 'prehospital' as an umbrella term referring to the use of the scales in any prehospital setting including in the field (i.e. people attended by the ambulance), the ER or primary care. We specified setting (prehospital versus ER versus primary care) when discussing the use of specific scales in the studies.

Target conditions

The target condition was stroke, regardless of its type or severity, including ischemic stroke, hemorrhagic stroke or TIA. We defined ischemic stroke as irreversible neurologic damage due to obstruction of a blood vessel, corresponding to the parenchymal territory responsible for the neurologic function that was lost. We defined intracerebral hemorrhage as a stroke due to a bleed within the brain parenchyma. TIA is, by definition, transient and the neurologic deficit reverses without any clinical evidence of neuronal damage. To be included, studies could have used either the tissue‐based definition of TIA (a negative diffusion‐weighted imaging study) or the time‐based definition of TIA (resolution of symptoms in less than 24 hours, although diffusion‐weighted imaging may be positive).

Reference standards

There is no single, 'gold standard' diagnostic test to determine stroke. Therefore, we used the following criteria to define an acceptable reference standard.

The initial inhospital diagnosis of stroke must have been done by a physician (neurologist, stroke physician, internist, emergency physician) who performed the history, physical exam and interpretation of the non‐contrast CT head scan and any other imaging. It could alternatively be done by an internist, family physician or an emergency physician with the assistance of a consulting radiologist, neurologist, stroke physician, or a combination of these, available in person or by telephone.

The person must have a documented discharge diagnosis of stroke or 'other', where 'other' could have been a neurologic or non‐neurologic diagnosis. 'Other' could have been any medical condition that was determined by a physician, where the symptoms that mimicked stroke were accounted for.

The person's chart must have been reviewed by a neurologist or stroke physician and the final diagnosis signed off by a neurologist or stroke physician, once the evolution of the person's condition had occurred to the point where they were discharged. For the purpose of the review, we considered a neurologist and a stroke physician equivalent. Non‐neurologic discharge diagnoses made by non‐neurologists were considered valid.

Every participant who was assessed with the index test by prehospital staff/emergency responders was then to be assessed by a neurologist or stroke physician at some point prior to having a neurologic discharge diagnosis. This applied even to people with a 'negative' score on the index test. The path by which they arrived at a non‐stroke diagnosis was beyond the scope of this review.

Search methods for identification of studies

We searched relevant computerized databases (listed below) from the earliest year possible to 30 January 2018. We applied no restrictions on language of publication.

Electronic searches

We searched the following electronic bibliographic databases:

Cochrane Central Register of Controlled Trials (CENTRAL; 2018, Issue 1) in the Cochrane Library (searched 30 January 2018; Appendix 1);

MEDLINE (Ovid) (1946 to 30 January 2018; Appendix 2);

Embase (Ovid) (1974 to 30 January 2018; Appendix 3);

Science Citation Index Cited Reference Search for forward tracking of important articles (up to 30 January 2018).

We developed the MEDLINE search strategy with the help of the Cochrane Stroke Group Information Specialist and adapted it for the other databases (Appendix 2).

Searching other resources

We searched the reference lists of all included studies and other relevant publications to identify additional studies. We contacted authors of the known prehospital stroke scales and asked them to provide information regarding unpublished studies.

Data collection and analysis

Selection of studies

Due to the large volume of initial titles produced by our database searches, we divided the references into two groups. We screened each title twice independently; ZZ and NH screened half of all titles, and GW and JF the other half. We retrieved the full texts of potentially relevant papers and JF and GW assessed their eligibility against the inclusion criteria. We resolved discrepancies by discussion or arbitration by a third review author (ZZ). We coded the studies excluded at full‐text screening with a particular reason for exclusion.

Data extraction and management

To collect data from studies, we used a prespecified data extraction form, which included information on study characteristics, participant population and relevant outcomes (Appendix 4). Two review authors (NH and JF) independently extracted the data to ensure adequate reliability and quality, and a third review author (ZZ) adjudicated any disagreements. If reported in the paper, we extracted 2 × 2 data directly (true positives, false positives, true negatives and false negatives) for each index test. Alternatively, we reconstructed 2 × 2 tables by entering data on sensitivity, specificity, total number of participants and the proportion of diseased participants in the Review Manager 5 diagnostic accuracy calculator (Review Manager 2014). We sent data requests to study authors before excluding a study due to insufficient data.
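The reconstruction of a 2 × 2 table from summary statistics can be illustrated with the short sketch below. It is not the Review Manager 5 calculator itself, whose internal rounding rules we do not reproduce here; the function name and the example figures are hypothetical.

```python
def reconstruct_2x2(sensitivity: float, specificity: float,
                    n_total: int, prevalence: float) -> dict:
    """Back-calculate TP, FP, FN and TN from reported summary statistics.

    Counts are rounded to whole participants, so the result is an
    approximation whenever the reported figures were themselves rounded.
    """
    for name, value in (("sensitivity", sensitivity),
                        ("specificity", specificity),
                        ("prevalence", prevalence)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be a proportion between 0 and 1")

    diseased = round(n_total * prevalence)      # participants with stroke/TIA
    non_diseased = n_total - diseased           # participants with a mimic

    tp = round(sensitivity * diseased)
    fn = diseased - tp
    tn = round(specificity * non_diseased)
    fp = non_diseased - tn
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn}


# Hypothetical study: 200 participants, 50% with stroke,
# reported sensitivity 80% and specificity 60%.
table = reconstruct_2x2(0.80, 0.60, 200, 0.50)
print(table)
```

Because the four cells are forced to sum to the reported sample size, a useful check is to recompute sensitivity and specificity from the reconstructed table and compare them with the published values.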

Assessment of methodological quality

We assessed the methodologic quality of each study using the Quality Assessment of Diagnostic Accuracy Studies version 2 (QUADAS‐2) tool (Whiting 2011). The tool consists of four domains: patient selection, index test, reference standard, and flow and timing. The first three domains are assessed in terms of risk of bias and concerns regarding applicability, and rated as 'high', 'low' or 'unclear'. The fourth domain, flow and timing, is assessed only in terms of risk of bias using the same rating categories. The tailored version of the tool including a set of operational definitions is provided in Appendix 5.

We added to the patient selection domain an additional signaling question to check if data were collected prospectively or retrieved from EMS and stroke registries. Retrospective data are prone to selective and incomplete recording, and matching patient records across different databases is not always possible. Therefore, we considered all studies using retrospective data collection to be at high risk of bias. We included only studies that applied the scales to actual patients and not to patient records, regardless of whether the patients were enrolled prospectively (prospective design) or data were retrieved from registries (retrospective design).

In the current review, the index tests were prehospital scales used to screen people suspected of having stroke at the first point of contact. The reference standard was a combination of tests performed once the person had already been admitted to hospital. Therefore, the question "Were the index test results interpreted without knowledge of the results of the reference standard?" would always be answered 'Yes'. This question was initially removed from the checklist, but included again during the editorial process upon advice from the Diagnostic Test Accuracy Editorial team.

It is unlikely that awareness of the index test results will affect the final diagnosis of stroke, if made by a neurologist/stroke physician using the results from imaging and other objective tests. However, such awareness could affect the diagnosis of TIA, which is based on the patient's presenting symptoms and assessment of their resolution within 24 hours. To capture this, we included in the reference standard domain a signaling question asking whether the clinicians making the final diagnosis were blinded to the results from the index test. However, the presenting symptoms were both part of the index test and the reference standard for TIA and, therefore, complete independence was not possible. Clinicians making the final diagnosis of TIA will always have access to this information, regardless of whether the results from the stroke scale are available to them or not. Therefore, we acknowledge that there could be risk of incorporation bias even in studies in which stroke adjudicators were blinded to the index test results.

Two review authors (GW, SY) independently assessed the methodologic quality of the studies and resolved any disagreements through discussion or arbitration by a third review author (ZZ or NH). If any of the signaling questions in the domain was rated 'high risk of bias', the overall domain was also categorized as 'high risk of bias'.
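The domain-level rule stated above can be written as a small function. Only the first branch ('high' if any signaling question is rated high) comes from the text; how the remaining combinations of 'low' and 'unclear' are handled is an assumption made for this sketch, as is the function name.

```python
def domain_risk_of_bias(question_ratings: list) -> str:
    """Combine signaling-question ratings into one domain-level rating."""
    ratings = [r.strip().lower() for r in question_ratings]
    if not ratings:
        raise ValueError("at least one signaling question rating is required")
    unknown = set(ratings) - {"high", "low", "unclear"}
    if unknown:
        raise ValueError(f"unexpected ratings: {sorted(unknown)}")

    # Rule stated in the text: any 'high' makes the whole domain 'high'.
    if "high" in ratings:
        return "high"
    # Assumption for this sketch: any remaining 'unclear' makes the domain 'unclear'.
    if "unclear" in ratings:
        return "unclear"
    return "low"


print(domain_risk_of_bias(["low", "high", "unclear"]))
print(domain_risk_of_bias(["low", "low"]))
```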

Statistical analysis and data synthesis

The index tests being reviewed are each made up of a set of criteria that are individually assessed and then combined to assign each participant a particular score. All scales use the same positivity threshold, '1 or greater', which indicates that the person may have been having a stroke. For each index test, we generated a diagnostic 2 × 2 table (true positives, false positives, true negatives and false negatives) from which we calculated sensitivity and specificity with 95% confidence intervals (95% CI). We also created forest plots and receiver operating characteristics (ROC) plots to show the variation in test accuracy estimates across studies.

When at least four studies evaluating the same index test were conducted in the same setting and reported consistent test accuracy estimates, we pooled sensitivity and specificity using the bivariate random‐effects method. This method is recommended for studies using the same positivity threshold; it preserves the two‐dimensional nature of the data; accounts for between‐study variability by using a random‐effects approach, and allows for the possibility of a negative correlation that may exist between sensitivity and specificity across studies (Reitsma 2005). We presented the summary estimates with a 95% confidence ellipse (i.e. a bivariate CI) and a 95% prediction region in the summary ROC space.
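For orientation, the between-study part of the bivariate model can be written as follows. This is a sketch in our own notation, based on the general description in Reitsma 2005, and the symbols are assumptions introduced here: for study $i$, the logit-transformed true sensitivity and specificity are assumed to follow a bivariate normal distribution.

```latex
\begin{pmatrix}
  \operatorname{logit}(\mathrm{sens}_i) \\
  \operatorname{logit}(\mathrm{spec}_i)
\end{pmatrix}
\sim
N\!\left(
  \begin{pmatrix} \mu_A \\ \mu_B \end{pmatrix},
  \begin{pmatrix}
    \sigma_A^2 & \sigma_{AB} \\
    \sigma_{AB} & \sigma_B^2
  \end{pmatrix}
\right),
\qquad
\operatorname{logit}(p) = \ln\frac{p}{1-p}
```

Here $\mu_A$ and $\mu_B$ are the mean logit sensitivity and specificity, $\sigma_A^2$ and $\sigma_B^2$ capture between-study variability, and a negative $\sigma_{AB}$ corresponds to the negative correlation between sensitivity and specificity mentioned above. The summary sensitivity and specificity are obtained by back-transforming $\mu_A$ and $\mu_B$ with the inverse logit.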

When only a small number of studies are included in a meta‐analysis, the prediction regions generated by Review Manager 5 are excessively conservative (Review Manager 2014). They may appear inconsistent with the estimated CIs, as they depend on the number of included studies as well as on the standard errors and the covariance of the estimated mean logit sensitivity and specificity. To mitigate this, we followed the practice suggested in Gurusamy 2015. The authors recommend that when fewer than 10 studies are included in a meta‐analysis, the number of studies entered into the Review Manager's analysis panel should be 10 (rather than the actual number of pooled studies). According to the authors, this provides a better approximation of the prediction region than using the actual (smaller) number of studies.

We calculated positive and negative likelihood ratios from the summary sensitivity and specificity, and plotted the results from comparative studies in the ROC plane to illustrate the relative accuracy of the tests. All statistical analyses were carried out using the analysis functions of Review Manager 5 (Review Manager 2014) and STATA statistical software version 12 (StataCorp 2011).
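The likelihood ratios follow directly from sensitivity and specificity: LR+ = sensitivity / (1 − specificity) and LR− = (1 − sensitivity) / specificity. A minimal sketch, with a hypothetical function name and hypothetical input values:

```python
import math


def likelihood_ratios(sensitivity: float, specificity: float) -> tuple:
    """Return (LR+, LR-) computed from sensitivity and specificity."""
    if not 0.0 <= sensitivity <= 1.0:
        raise ValueError("sensitivity must be between 0 and 1")
    if not 0.0 < specificity < 1.0:
        # LR+ is undefined at specificity 1 and LR- at specificity 0.
        raise ValueError("specificity must be strictly between 0 and 1")
    lr_positive = sensitivity / (1.0 - specificity)
    lr_negative = (1.0 - sensitivity) / specificity
    return lr_positive, lr_negative


# Hypothetical summary estimates: sensitivity 0.80, specificity 0.60.
lr_pos, lr_neg = likelihood_ratios(0.80, 0.60)
print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.2f}")
```

An LR+ well above 1 means a positive screen raises the probability of stroke; an LR− close to 0 means a negative screen lowers it substantially.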

Investigations of heterogeneity

In the protocol, we listed the following variables as potential contributors to between‐study variation in test accuracy estimates:

participant demographics (e.g. age, gender);

proportion of different types of stroke (ischemic, hemorrhagic or TIA);

level of training;

methodologic quality of included studies.

While working on the review, we identified additional potential sources of variation, the most important of which were:

different triggers for applying the tool (prespecified criteria versus general suspicion of stroke);

different procedures to obtain test scores, when more than one stroke scale was performed (e.g. consecutive application of both scales versus deriving the score of the simpler scale from the more complex scale);

differences in the reference standard (e.g. hospital discharge diagnosis versus independent panel of clinicians).

Statistical investigation of the influence of the above sources of heterogeneity was not feasible, because of the small number of studies per test conducted in the same setting. Instead, we conducted a visual inspection of the ROC and forest plots, and provided a narrative description of the observed heterogeneity.

Sensitivity analyses

The small number of studies included in the meta‐analyses precluded quantitative sensitivity analysis based on the methodologic quality of included studies. However, when reporting the results, we considered the methodologic quality of studies evaluating specific tests and highlighted the results reported by better‐quality studies.

Assessment of reporting bias

Following the recommendations of the Cochrane Handbook for Systematic Reviews of Diagnostic Test Accuracy, we did not investigate publication bias because of the low power of the recommended test for funnel plot asymmetry, when there is heterogeneity in the diagnostic odds ratios and, more generally, because of the limited research in this area (Macaskill 2010). However, publication bias might be present and might affect the results from the review. In order to mitigate this, we conducted comprehensive searches of the published literature and contacted experts in the field to identify any additional or unpublished studies. We also interpreted our results with caution, acknowledging the possibility of publication bias.

Results

Results of the search

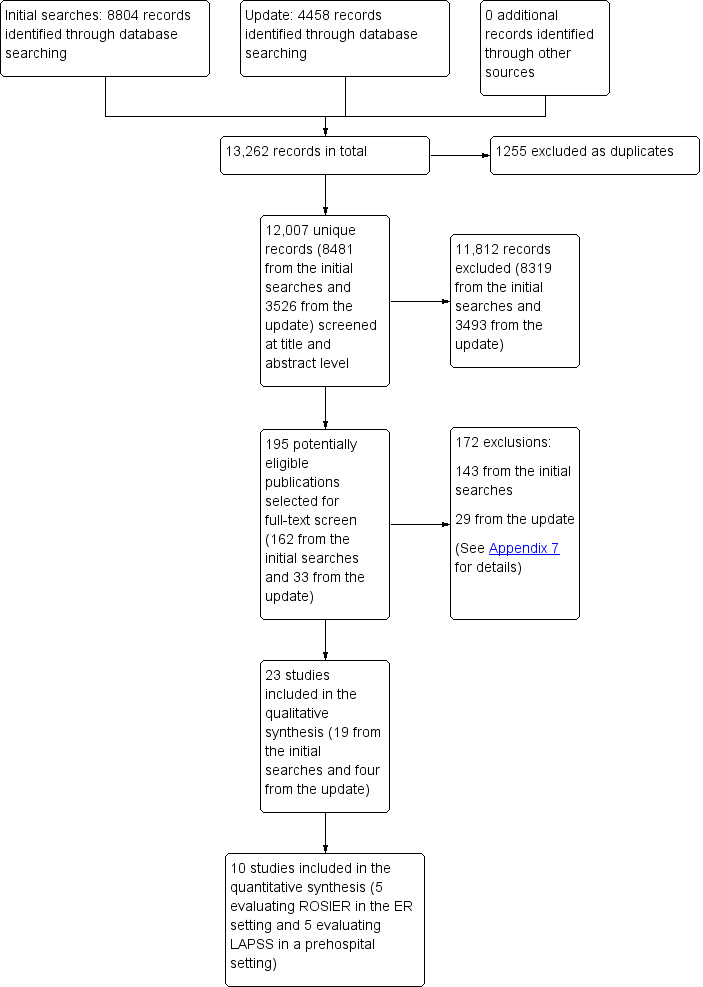

Figure 1 illustrates the selection process and Appendix 6 shows the number of records per database. From the initial electronic searches conducted in January 2015, we identified 8481 unique references. After screening titles and abstracts, we selected 162 publications for full‐text assessment. Of those, we excluded 71 conference abstracts that did not report sufficient data and for which there were no full‐text articles or additional data. Two review authors (JF, GW or SY) independently assessed the eligibility of the remaining 91 titles and selected 19 studies for inclusion in the review. We last updated the searches on 30 January 2018 and identified 3526 additional unique references. We screened 33 at full‐text level and considered four of them to be inclusions. We also searched the reference lists of all included studies and other relevant publications, but found no additional inclusions. The final number of studies included in the review was 23.

1. Study flow diagram. ER: emergency room; LAPSS: Los Angeles Prehospital Stroke Scale; ROSIER: Recognition of Stroke in the Emergency Room.

Characteristics of the included studies

The characteristics of the included studies are summarized in Table 3, Table 4, and Table 5. Twenty‐two studies were journal articles and one was a conference abstract (Kim 2017). They were published between 2000 and 2017. Five studies were conducted in China (Chen 2013; Ding 2009; Jiang 2014; Mingfeng 2012; Mingfeng 2017); five in the USA (English 2018; Frendl 2009; Kidwell 2000; Ramanujam 2008; Studnek 2013); four in the UK (Fothergill 2013; Jackson 2008; Nor 2005; Whiteley 2011); two each in Australia (Bray 2005a; Bray 2010); Sweden (Andsberg 2017; Berglund 2014); and the Republic of Korea (Kim 2017; Lee 2015); and one each in Belgium (Bergs 2010), Canada (Chenkin 2009), and Italy (Vanni 2011).

2. Inclusion criteria of the studies included in the review.

| Study ID | Country | Inclusion criteria |

| Prehospital setting | ||

| Andsberg 2017 | Sweden | Suspected stroke defined as sudden onset of focal neurologic symptoms/signs, in conscious people > 18 years of age. |

| Berglund 2014 | Sweden | Suspected stroke with symptom onset within 6 hours; ages 18–85 years; previous independence in activities of daily living; and no other acute condition requiring a priority level 1. |

| Bergs 2010 | Belgium | Acute neurologic event without clear origin, altered level of consciousness, convulsions, syncope, headache, and symptoms of weakness, dizziness or decreased well‐being, aphasia, visual impairment, weakness in arms or legs (or both) and facial paralysis. People age < 18 years, trauma, unconsciousness (GCS ≤ 8), and people transported to another hospital were excluded. |

| Bray 2005a | Australia | Paramedics were instructed to complete a MASS assessment sheet on all designated EMS dispatches for 'stroke' that were symptomatic, conscious and to be transported to Box Hill Hospital. Paramedics were also asked to complete a MASS sheet for other people suspected of stroke where a focal neurologic deficit (i.e. unilateral limb weakness, speech disturbance) was noted during an initial exam. |

| Bray 2010 | Australia | People transported by EMS with documented MASS assessments of hand grip, speech, and facial weakness; and people with a discharge diagnosis of stroke or TIA included in the stroke/TIA registry. People who were unconscious or asymptomatic at the time of paramedic assessment were excluded. |

| Chen 2013 | China | Baseline screen criteria for the 'target stroke' population were referred from the original LAPSS study including age ≥ 18 years; neurologically relevant complaints; absence of coma and non‐traumatic. The neurologically relevant complaints were identified with 6 categories, including altered level of consciousness; local neurologic signs; seizure; syncope; head pain and the cluster category of weak/dizzy/sick. |

| Chenkin 2009 | Canada | People screened as positive by paramedics using OPSST and transported directly to a predesignated stroke center based on the person's current geographic location. Also all people with suspected stroke arriving by ambulance who did not have a positive screen were examined. |

| Ding 2009 | China | People with acute neurologic problems and non‐traumatic, non‐comatose, non‐obstetrics presentation transported to 3 local hospitals. |

| English 2018 | USA | People identified by EMS dispatchers as potential stroke/TIA cases were included. Those who met any of the following inclusion criteria were selected: positive CPSS in field; EMS impression of cerebrovascular accident or TIA; acute stroke pager activation in the ER; discharge diagnosis of cerebrovascular accident or TIA. People were excluded if they met any of the following: hospital arrival via helicopter, outside hospital transfer, direct admission without ER evaluation or last known well time > 6 hours. |

| Fothergill 2013 | UK | People aged > 18 years if they presented with symptoms of stroke, were assessed by participating ambulance clinicians using the ROSIER, and conveyed to the Royal London Hospital. Those who were ages < 18 years, not assessed using the ROSIER or transferred to another hospital were excluded. |

| Frendl 2009 | USA | All people transported to the Duke University Medical Center and coded by EMS as having a possible stroke or TIA were identified retrospectively by review of computerized and paper‐based paramedic records for the year before and after training, regardless of whether or not an abnormality was noted for a CPSS item. These records were then compared with the hospital's prospective stroke registry for the same period. The stroke registry includes all patients admitted to the study hospital with a discharge diagnosis of stroke or TIA. |

| Kidwell 2000 | USA | A 'target stroke' population was predefined as non‐comatose, non‐trauma patients with symptom duration < 24 hours with ischemic stroke, intracerebral hemorrhage, or TIA if the person was still symptomatic at the time of initial paramedic examination. These people constituted the population the LAPSS was designed to identify. |

| Kim 2017 | Republic of Korea | People suspected of stroke and transported to a single hospital by EMS paramedics and people with true stroke without stroke recognition by EMS (retrospective sample), for a period of 12 months (data extracted from EMS records, including CPSS score). |

| Mingfeng 2012 | China | All people > 18 years with suspected stroke or TIA with symptoms or signs seen by an emergency physician in the prehospital setting were included. According to the ASA guidelines, people who got ≥ 1 of these suggestive clinical elements as follows were defined as people with suspect acute stroke or TIA. The suggestive clinical elements included sudden weakness or numbness of the face, arm or leg, especially on 1 side of the body; sudden confusion, trouble speaking or understanding; sudden trouble seeing in 1 or both eyes; sudden trouble walking, dizziness, loss of balance or co‐ordination; or sudden severe headache with unknown cause. |

| Mingfeng 2017 | China | All people > 18 years with suspected stroke or TIA who presented to 2 primary care centers during the recruitment period. The following clinical signs were considered suggestive of stroke: numbness or weakness in the face, arms or legs (especially on 1 side of the body); confusion, difficulty in speaking or understanding speech; vision disturbance in 1 or both eyes; dizziness, walking difficulties, loss of balance or co‐ordination; severe headache without known cause. Patients were excluded if they had head trauma or surgery in recent months; previous stroke with neurologic deficits or incomplete medical testing. |

| Ramanujam 2008 | USA | People age ≥ 18 years identified as having stroke in the prehospital phase using the MPDS Stroke protocol by emergency medical dispatchers or by use of CPSS by paramedics. People taken to other acute care hospitals not participating in the study, people with a dispatch determinant of stroke who were not transported by City EMS agency (SDMSE) to participating hospitals, people in the stroke registry not transported by SDMSE or people with no final outcome data were excluded from the study. |

| Studnek 2013 | USA | People were included if they received a prehospital MedPACS screen and were transported to 1 of 7 local hospitals. The EMS agency protocols stipulated that a MedPACS screen be performed on all people who had signs or symptoms of acute stroke or TIA. People with no documented MedPACS screen, who nevertheless ended up with a hospital diagnosis of stroke were excluded from the primary analysis. People were also excluded if they were < 18 years, if they were transported to any medical facility other than those in the inclusion criteria or if they were secondary transports from a regional facility. |

| ER setting | ||

| Jackson 2008 | UK | Consecutive participants admitted to a single ER identified on routine initial triage as having possible or suspected stroke. |

| Jiang 2014 | China | Consecutive participants ≥ 18 years old, presenting to the ER with symptoms or signs suggestive of stroke or TIA. The following people were excluded: traumatic brain injury with an external cause such as motor vehicle crashes and falls; incomplete medical records; people who did not present first to the ER (e.g. direct admission to a ward); and in accordance with the criteria for the original ROSIER scale, people with subarachnoid hemorrhage, subdural hematoma and TIA without symptoms and signs during this period. |

| Lee 2015 | Korea | People with suspected acute stroke who were admitted to the ER. |

| Nor 2005 | UK | People age > 18 years with suspected stroke or TIA with symptoms or signs seen by ER physicians in the ER were included. |

| Vanni 2011 | Italy | Consecutive adults with suspected stroke who presented to the ERs of 3 hospitals. Inclusion criteria were the presence at triage of acute focal neurologic deficit (including also signs of posterior circulation ischemia: vertigo, double vision, visual field defects or disorders of perception, balance, and co‐ordination) or a 118 (local EMS) dispatch of suspected stroke. Exclusion criteria were major trauma and coma (GCS score ≤ 8). People with terminal illnesses (life expectancy < 3 months) were also excluded. |

| Whiteley 2011 | UK | Consecutive participants with suspected acute stroke who presented to the ER of the Western General Hospital, Edinburgh, while the study neurologist was available. Acute stroke was suspected in people: whose symptoms began < 24 hours before admission; who were still symptomatic at the time of assessment; and in whom a general practitioner, a paramedic or a member of the emergency‐room staff had made a diagnosis of 'suspected stroke'. |

ASA: American Stroke Association; CPSS: Cincinnati Prehospital Stroke Scale; EMS: emergency medical services; ER: emergency room; GCS: Glasgow Coma Scale; LAPSS: Los Angeles Prehospital Stroke Scale; MASS: Melbourne Ambulance Stroke Scale; MPDS: medical priority dispatch system; OPSST: Ontario Prehospital Stroke Screening Tool; ROSIER: Recognition of Stroke in the Emergency Room; SDMSE: San Diego Medical Services Enterprise; TIA: transient ischemic attack.

3. Characteristics of study cohorts.

| Study ID | Country | Sample size | Prevalence (%) | Ischemic stroke (%) | Hemorrhagic stroke (%) | TIA (%) | Mean age (years) | Sex (% women) | Eligible participants out of all EMS runs or ER presentations (%) |

| Prehospital setting | |||||||||

| Andsberg 2017 | Sweden | 69 | 38 | 69 | 4 | 27 | n/a | n/a | n/a |

| Berglund 2014 | Sweden | 900 | 52 | 64 | 9 | 27 | 71 | 44 | n/a |

| Bergs 2010 | Belgium | 31 | 61 | 79 | 16 | 5 | 77 | 39 | 7.6 |

| Bray 2005a | Australia | 100 | 73 | 68 | 13 | 23 | 76 | n/a | 2.1 |

| Bray 2010 | Australia | 850 | 23 | n/a | n/a | n/a | n/a | n/a | 19 |

| Chen 2013 | China | 1130 | 88 | 61 | 25 | 3 | 72 | 39 | 3.1 |

| Chenkin 2009 | Canada | 554 | 57 | 58 | 21 | 11 | 74 | 31 | n/a |

| Ding 2009 | China | 327 | 16 | 44 | 36 | 20 | 58 | 48 | 16.1 |

| English 2018 | USA | 130 | 74 | 64 | 15 | 21 | 72–77 | 50–52 | 34.5 |

| Fothergill 2013 | UK | 295 | 60 | 71 | 23 | 6 | 64 | 47 | n/a |

| Frendl 2009 | USA | 154 | 40 | n/a | n/a | n/a | 67 | 56 | n/a |

| Kidwell 2000 | USA | 206 | 17 | n/a | n/a | n/a | 63 | 48 | 34.4 |

| Kim 2017 | Korea | 268 | 57 | n/a | n/a | 3 | n/a | n/a | n/a |

| Mingfeng 2012 | China | 540 | 70 | n/a | 41 | n/a | 63 | 32 | n/a |

| Mingfeng 2017 | China | 468 | 71 | n/a | 28 | n/a | 71 | 51 | n/a |

| Ramanujam 2008 | USA | 1045 | 42 | n/a | n/a | n/a | n/a | n/a | 1.3 |

| Studnek 2013 | USA | 416 | 45 | 82 | n/a | n/a | 67 | 54 | n/a |

| ER setting | | | | | | | | | |

| Jackson 2008 | UK | 50 | 92 | n/a | n/a | n/a | 73 | 52 | n/a |

| Jiang 2014 | China | 714 | 52 | 81 | 12 | 7 | 72 | 47 | n/a |

| Lee 2015 | Korea | 312 | 36 | 57 | 31 | 12 | 60 | 55 | n/a |

| Nor 2005 | UK | 160 | 63 | 76 | 14 | 10 | 70 | 59 | n/a |

| Vanni 2011 | Italy | 155 | 56 | 89 | 11 | n/a | 72 | 41 | 6.8 |

| Whiteley 2011 | UK | 356 | 69 | 80 | 5 | 15 | 72 | 51 | n/a |

EMS: emergency medical service; ER: emergency room; n/a: not applicable; TIA: transient ischemic attack.

4. Index test and reference standard.

| Study ID | Setting | Index tests | Test administrator | Training | Reference standard |

| Andsberg 2017 | Prehospital | PreHAST | Nurse | 4‐hour educational program covering basic stroke knowledge and the assessment and grading of stroke symptoms according to PreHAST; it included practical PreHAST training in pairs, in which each ambulance nurse performed the PreHAST items under supervision to ensure proper execution. During the study, an instruction video for PreHAST was available on YouTube. | 2 stroke physicians, blinded to the PreHAST scores, independently reviewed the medical records of the participants, including evaluation of history, clinical and radiologic findings. In case of disagreement, a third evaluator adjudicated the final diagnosis. |

| Berglund 2014 | Prehospital | FAST | Nurse or paramedic | 1 lecture about stroke and the FAST test, prior to start of the study. | CT brain scan and in some cases CTA or MRI, neurologic examination, if necessary, EEG (differential diagnosis), laboratory tests. All participants received a final diagnosis by a neurologist or stroke specialist. |

| Bergs 2010 | Prehospital | CPSS, FAST, LAPSS, MASS | Nurse | All nurses were briefed on the purpose of the study, the stroke scales and the guidelines. | Diagnosis at ER discharge (unspecified). |

| Bray 2005a | Prehospital | CPSS, LAPSS, MASS | Paramedic | 1‐hour educational session on stroke and use of the prehospital stroke scale. | Standard criteria for diagnosis of stroke or TIA (Warlow 2001); review of discharge diagnosis (no further details). |

| Bray 2010 | Prehospital | CPSS, MASS | Paramedic | 1‐hour educational program and instruction on use of the MASS. | Discharge diagnosis based on hospital stroke registry. |

| Chen 2013 | Prehospital | LAPSS | Paramedic | 3‐hour LAPSS‐based stroke training session with 3 experts from the study team. | 2 blinded neurologists reviewed the ER charts, recorded final ER discharge diagnoses, and verified absence or presence of potential stroke symptoms. The medical documents and neuroimaging records were reviewed before the final diagnoses were verified. |

| Chenkin 2009 | Prehospital | OPSST | Paramedic | 90‐minute training session on the stroke screening tool prior to implementation. | Hospital discharge diagnosis (no further details). |

| Ding 2009 | Prehospital | LAPSS | Emergency physician | Not reported. | Hospital final diagnosis made by a specialist group including a neurologist, a radiologist and a generalist. |

| English 2018 | Prehospital | CPSS | Paramedic | 1‐hour online module annually on stroke recognition and assessment in the field as part of their required job training. | Hospital discharge diagnosis (no further details). |

| Fothergill 2013 | Prehospital | FAST, ROSIER | Paramedic | 1‐hour stroke educational program, scenario‐based demonstration of ROSIER, 15‐minute educational DVD. | Final diagnosis made by a stroke consultant or other senior medical physician caring for the person within 72 hours of the person's admission to hospital, based on CT and MRI scans. The final diagnosis was confirmed by a senior stroke consultant. |

| Frendl 2009 | Prehospital | CPSS | Paramedic | 1‐hour interactive educational presentation on stroke recognition and use of the CPSS. | Hospital discharge diagnosis based on the results of routine clinical, laboratory and radiographic evaluations. |

| Kidwell 2000 | Prehospital | LAPSS | Paramedic | Video vignettes of paramedics performing the LAPSS examination on 3 people with stroke, 1 person with a stroke mimic, and 1 healthy person. Following a LAPSS‐focused education session, trainees had to pass an exam which, if failed, was followed by further training. | For all runs, 1 blinded author reviewed ER charts, recorded final ER discharge diagnoses and confirmed absence or presence of potential stroke symptoms. On all potential target stroke runs, 1 blinded author additionally examined all inpatient medical records to confirm hospital discharge diagnoses of stroke/TIA by review of reports from imaging studies and attending physician notes. For people with the diagnosis of TIA, a consensus on final diagnosis was reached after complete medical record review and case discussion with a second stroke neurologist. In all people with cerebral infarct and intracerebral hemorrhage, the diagnosis of the blinded reviewer agreed with the charted diagnosis of the attending neurologist. |

| Kim 2017 | Prehospital | CPSS | Paramedic | Not reported. | Hospital medical records. |

| Mingfeng 2012 | Prehospital | CPSS, ROSIER | Emergency physician | 6‐hour course on ROSIER and CPSS. | The final discharge diagnosis of stroke/TIA made by neurologists and based on CT or MRI. |

| Mingfeng 2017 | Prehospital | CPSS, ROSIER | GP | Trained by emergency physicians on the use of the ROSIER scale and CPSS for 10 hours before the study. | Final discharge diagnosis of stroke or TIA made by neurologists reviewing all diagnostic information including CT scan of the brain (immediately after transfer), blood tests and 12‐lead ECG conducted in the ER; comprehensive neurologic assessment including additional tests, such as continuous ECG monitoring, 24‐hour Holter ECG, duplex carotid and cardiac ultrasound, TCD, MRI or MRA, and conventional cerebral angiography were performed as requested by the neurologists once the person was transferred to the neurology ward. The neurologists who made the final diagnosis were blinded to the results from the ROSIER and CPSS. |

| Ramanujam 2008 | Prehospital | CPSS | Paramedic | Annual 1‐hour education session on recognizing stroke. | Discharge diagnosis for people in stroke registry. |

| Studnek 2013 | Prehospital | CPSS, MedPACS | Paramedic | 2‐hour continuing education lecture regarding neurologic emergencies. | Discharge diagnosis of stroke/TIA. |

| Jackson 2008 | ER | ROSIER | Emergency physician | No training reported. | Patients' records were later followed up to determine accuracy of initial diagnosis; stroke confirmed on investigation (no further details reported). |

| Jiang 2014 | ER | ROSIER | Emergency physician or nurse | The research staff received specific training from a stroke nurse and completed a module/exam provided by the NIHSS website. | The final diagnoses were made after people suspected of stroke were reviewed by the stroke team and after review of clinical symptoms and the acute neuroimaging (CT and MRI). |

| Lee 2015 | ER | FAST, ROSIER | Emergency physician | 3 hours of training on theory of stroke and the acute stroke registration system from an emergency medicine specialist. | Ischemic stroke and bleeding were determined in accordance with brain CT and MRI results. The final diagnosis was confirmed at the time through the electronic medical record. |

| Nor 2005 | ER | ROSIER | Emergency physician | Regular educational program on the use of the instrument with twice monthly updates given to small groups of ER staff. | Final diagnosis made by the consultant stroke physician, after assessment and review of clinical symptomatology and brain imaging findings. |

| Vanni 2011 | ER | CPSS | Nurse | No training reported. | TIA was excluded from the target condition. Stroke diagnosis established by a consensus of 3 experts, blinded to the index test results, after reviewing all clinical data and brain imaging results. |

| Whiteley 2011 | ER | FAST, ROSIER | Emergency physician or nurse | No training reported. | Diagnosis made by a panel of experts, who had access to the clinical findings, imaging results and the person's subsequent clinical course. |

CPSS: Cincinnati Prehospital Stroke Scale; CT: computed tomography; CTA: computed tomography angiography; ECG: electrocardiogram; ER: emergency room; EEG: electroencephalogram; FAST: Face Arm Speech Time; LAPSS: Los Angeles Prehospital Stroke Scale; MASS: Melbourne Ambulance Stroke Scale; MRA: magnetic resonance angiography; MRI: magnetic resonance imaging; NIHSS: National Institutes of Health Stroke Scale; OPSST: Ontario Prehospital Stroke Screening Tool; PreHAST: PreHospital Ambulance Stroke Test; ROSIER: Recognition of Stroke in the Emergency Room; TCD: transcranial Doppler; TIA: transient ischemic attack.

Twenty‐one studies were published in English, one in Korean (Lee 2015), and one in Chinese (Ding 2009). The data extraction and methodologic quality assessment of the two non‐English language studies were done by stroke neurologists fluent in the respective language: the translation from Chinese was done by a member of our team (SY); and the translation from Korean was done by Dr Sang Min Sung from the Pusan National University Hospital in South Korea. Additional data or answers to specific queries, or both, were very kindly provided by the authors of the following included papers: Berglund 2014, Jiang 2014, and Lee 2015.

The studies evaluated eight prehospital stroke scales:

CPSS (11 studies; Bergs 2010; Bray 2005a; Bray 2010; English 2018; Frendl 2009; Kim 2017; Mingfeng 2012; Mingfeng 2017; Ramanujam 2008; Studnek 2013; Vanni 2011);

ROSIER (eight studies; Fothergill 2013; Jackson 2008; Jiang 2014; Lee 2015; Mingfeng 2012; Mingfeng 2017; Nor 2005; Whiteley 2011);

FAST (five studies; Berglund 2014; Bergs 2010; Fothergill 2013; Lee 2015; Whiteley 2011);

LAPSS (five studies; Bergs 2010; Bray 2005a; Chen 2013; Ding 2009; Kidwell 2000);

MASS (three studies; Bergs 2010; Bray 2005a; Bray 2010);

OPSST (one study; Chenkin 2009);

MedPACS (one study; Studnek 2013);

PreHAST (one study; Andsberg 2017).

Nine of the included studies (39%) evaluated more than one stroke scale in the same participants (Bergs 2010; Bray 2005a; Bray 2010; Fothergill 2013; Mingfeng 2012; Mingfeng 2017; Lee 2015; Studnek 2013; Whiteley 2011), and one study compared CPSS to a panel of blood biomarkers used to identify people with stroke in the ER (Vanni 2011). All studies obtained the scores directly, by face‐to‐face application of the scales to people suspected of stroke.
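The per‐scale study counts and the share of studies that evaluated more than one scale can be re‐derived from the index tests listed in Table 4. The following is a minimal illustrative sketch, not part of the review's own analysis; the dictionary is transcribed by hand from Table 4, and the variable names are hypothetical.

```python
from collections import Counter

# Index tests per included study, transcribed from Table 4 (illustrative only).
INDEX_TESTS = {
    "Andsberg 2017": ["PreHAST"],
    "Berglund 2014": ["FAST"],
    "Bergs 2010": ["CPSS", "FAST", "LAPSS", "MASS"],
    "Bray 2005a": ["CPSS", "LAPSS", "MASS"],
    "Bray 2010": ["CPSS", "MASS"],
    "Chen 2013": ["LAPSS"],
    "Chenkin 2009": ["OPSST"],
    "Ding 2009": ["LAPSS"],
    "English 2018": ["CPSS"],
    "Fothergill 2013": ["FAST", "ROSIER"],
    "Frendl 2009": ["CPSS"],
    "Kidwell 2000": ["LAPSS"],
    "Kim 2017": ["CPSS"],
    "Mingfeng 2012": ["CPSS", "ROSIER"],
    "Mingfeng 2017": ["CPSS", "ROSIER"],
    "Ramanujam 2008": ["CPSS"],
    "Studnek 2013": ["CPSS", "MedPACS"],
    "Jackson 2008": ["ROSIER"],
    "Jiang 2014": ["ROSIER"],
    "Lee 2015": ["FAST", "ROSIER"],
    "Nor 2005": ["ROSIER"],
    "Vanni 2011": ["CPSS"],
    "Whiteley 2011": ["FAST", "ROSIER"],
}

# Number of studies that evaluated each scale.
scale_counts = Counter(
    scale for scales in INDEX_TESTS.values() for scale in scales
)

# Studies that applied more than one scale to the same participants.
multi_scale = sorted(
    study for study, scales in INDEX_TESTS.items() if len(scales) > 1
)
share = 100 * len(multi_scale) / len(INDEX_TESTS)

for scale, n in scale_counts.most_common():
    print(f"{scale}: {n} studies")
print(
    f"{len(multi_scale)} of {len(INDEX_TESTS)} studies "
    f"({share:.0f}%) evaluated more than one scale"
)
```

Under this transcription, the tallies agree with the counts given in the list above (for example, 11 studies for CPSS and eight for ROSIER) and with the nine multi‐scale studies.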

Nor 2005 also compared the accuracy of ROSIER with that of FAST, CPSS and LAPSS, but the scores for the latter three scales were derived post hoc from neurologist‐recorded signs. An additional analysis from the same study compared the accuracy of ROSIER (completed by ER physicians) with that of FAST (completed by paramedics) in a subgroup of 49 participants. We included the data for ROSIER, which was the main focus of the study, but excluded the two comparative data sets: the first because the scores for FAST, CPSS and LAPSS were derived from patient records, and the second because it was a post‐hoc analysis of a small convenience sample in which the tests were performed in different settings by different clinicians.

The included studies enrolled a total of 9230 participants; study sample sizes ranged from 31 (Bergs 2010) to 1130 (Chen 2013), with a median of 312 (interquartile range (IQR) 154 to 554). The prevalence of the target condition (stroke and TIA) ranged from 16% (Ding 2009) to 92% (Jackson 2008), with a mean of 54% (standard deviation (SD) 20%). The index tests were used in an ER setting in six studies (Jackson 2008; Jiang 2014; Lee 2015; Nor 2005; Vanni 2011; Whiteley 2011); they were applied by ER physicians in three of these studies, by ER physicians or nurses in two, and by nurses in one. The remaining studies were conducted in a prehospital setting, where the scales were applied by paramedics, with the exception of Ding 2009 and Mingfeng 2012 (ER physicians as part of an ambulance crew), Andsberg 2017 and Bergs 2010 (nurses), Berglund 2014 (nurses or paramedics), and Mingfeng 2017 (GPs).
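These summary figures can be checked against the sample sizes and prevalences reported in Table 3. The sketch below is illustrative only and makes two assumptions that the review does not state: the quartiles are computed with the default ("exclusive") method of Python's `statistics.quantiles`, and the mean and SD of prevalence are unweighted and based on the rounded percentages in the table.

```python
import statistics

# (sample size, prevalence %) per study, transcribed from Table 3.
COHORTS = {
    "Andsberg 2017": (69, 38),
    "Berglund 2014": (900, 52),
    "Bergs 2010": (31, 61),
    "Bray 2005a": (100, 73),
    "Bray 2010": (850, 23),
    "Chen 2013": (1130, 88),
    "Chenkin 2009": (554, 57),
    "Ding 2009": (327, 16),
    "English 2018": (130, 74),
    "Fothergill 2013": (295, 60),
    "Frendl 2009": (154, 40),
    "Kidwell 2000": (206, 17),
    "Kim 2017": (268, 57),
    "Mingfeng 2012": (540, 70),
    "Mingfeng 2017": (468, 71),
    "Ramanujam 2008": (1045, 42),
    "Studnek 2013": (416, 45),
    "Jackson 2008": (50, 92),
    "Jiang 2014": (714, 52),
    "Lee 2015": (312, 36),
    "Nor 2005": (160, 63),
    "Vanni 2011": (155, 56),
    "Whiteley 2011": (356, 69),
}

sizes = [n for n, _ in COHORTS.values()]
prevalences = [p for _, p in COHORTS.values()]

total = sum(sizes)
median_size = statistics.median(sizes)
# Assumption: default "exclusive" quartile method; other conventions may differ.
q1, _, q3 = statistics.quantiles(sizes, n=4)
mean_prev = statistics.mean(prevalences)   # unweighted mean across studies
sd_prev = statistics.stdev(prevalences)    # sample SD (n - 1 denominator)

print(f"Total participants: {total}")
print(f"Sample size: min {min(sizes)}, max {max(sizes)}, "
      f"median {median_size}, IQR {q1:.0f} to {q3:.0f}")
print(f"Prevalence: min {min(prevalences)}%, max {max(prevalences)}%, "
      f"mean {mean_prev:.0f}%, SD {sd_prev:.0f}%")
```

With these inputs the recomputed values match those reported in the text; a different quartile convention could shift the IQR bounds slightly.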

The amount of training and the trigger for applying the scales also varied across studies. Some studies applied the stroke recognition tool to all participants suspected of stroke by the attending clinician (Fothergill 2013; Frendl 2009; Nor 2005; Studnek 2013). Other studies required the participants to meet specific eligibility criteria to be tested (Table 3). This most likely led to differences between study cohorts and contributed to the observed between‐study heterogeneity.

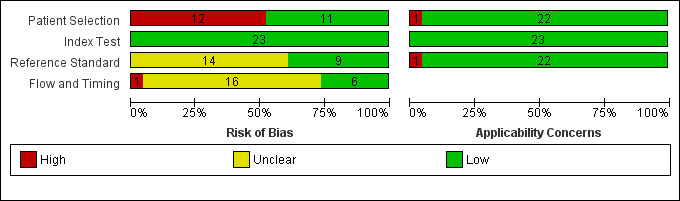

Methodological quality of included studies

The methodologic quality of the included studies is summarized in Figure 2 and Figure 3. We considered 12 studies (52%) at high risk of bias in the patient selection domain: seven because they were retrospective analyses of stroke registry data (Bray 2010; Chenkin 2009; English 2018; Frendl 2009; Kim 2017; Ramanujam 2008; Studnek 2013), and five because they were prospective studies that failed to include all eligible consecutive participants (Bergs 2010; Bray 2005a; Chen 2013; Fothergill 2013; Kidwell 2000). Retrospective studies depend on routinely collected data, which are susceptible to selective and incomplete recording. For instance, Bray 2010 and Studnek 2013 excluded over 10% of all eligible patient records because they were missing relevant data. Therefore, we considered all retrospective studies at high risk of selection bias.

2. Risk of bias and applicability concerns graph: review authors' judgments about each domain presented as percentages across included studies.

3.

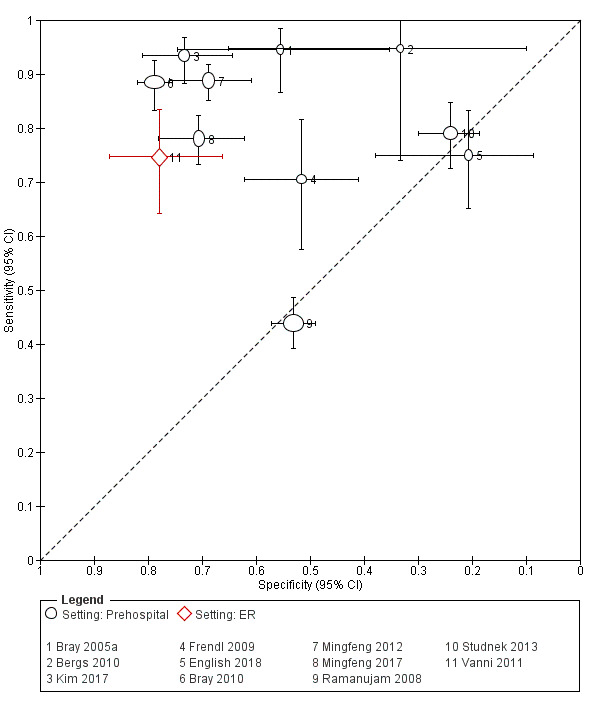

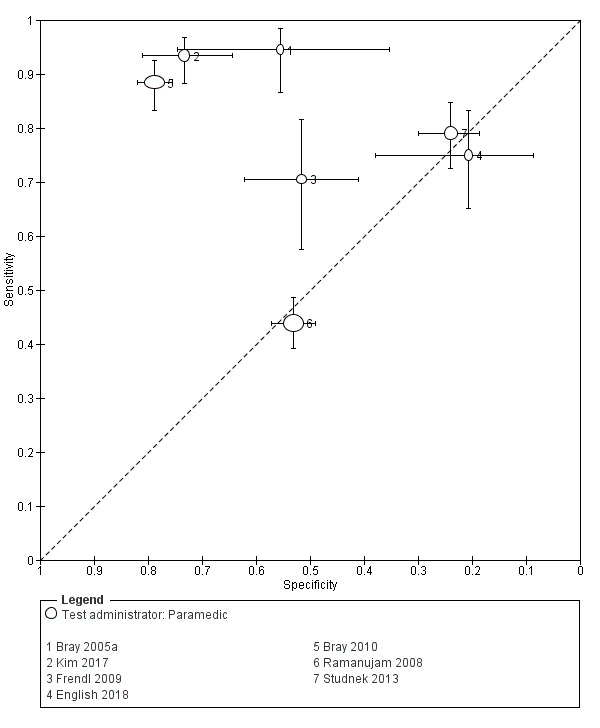

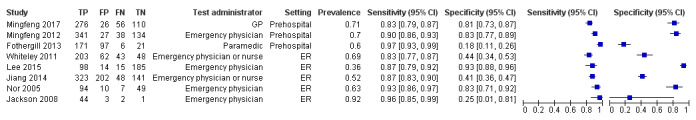

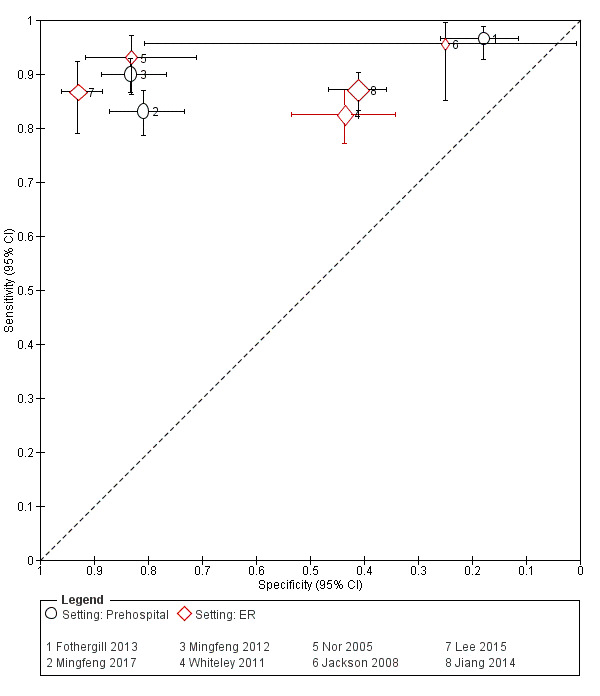

Risk of bias and applicability concerns summary: review authors' judgments about each domain for each included study.