O1 User testing of Test-Treatment Pathway derivation to help formulating focused diagnostic questions

Gowri Gopalakrishna1, Miranda Langendam1, Rob Scholten2, Patrick Bossuyt1, Mariska Leeflang1

1Department of Clinical Epidemiology, Biostatistics & Bioinformatics, Academic Medical Center, University of Amsterdam, Amsterdam, The Netherlands; 2Cochrane Netherlands, Julius Center for Health Sciences and Primary Care, University Medical Center Utrecht, Utrecht, Netherlands

Correspondence: Mariska Leeflang (m.m.leeflang@amc.uva.nl)

Background: The Test-Treatment Pathway has been proposed as a method to link test accuracy to downstream outcomes. By describing the clinical actions before and after testing, it illustrates how a test is positioned in the pathway, relative to other tests and diagnostics, and how the introduction of a new test may change the current diagnostics pathway. However, there is limited practical guidance on how to model such Test-Treatment Pathways.

Methods: We selected the Patient - Index test- Comparator - Outcome (PICO) format, as also used elsewhere in evidence-based medicine, as a starting point for building the Test-Treatment Pathways. From there we developed a structured set of triggering questions. We defined these questions based on several brainstorm sessions and iteratively made changes to this basic structure after three rounds of user testing. During the user testing meetings, a pathway was drawn for each specific application. All sessions were recorded both on audio and video.

Results: We present examples of four different Test-Treatment Pathways. User testing revealed that all users found the process of drawing the pathway very useful, but they also felt that this is just the first step in a process. The steps from pathway derivation to key questions remains difficult. Challenges in deriving the pathway were that interviewee(s) may wander off topic and that some problems cannot be captured in only one pathway. Further training was deemed desirable. Users would also like to see an electronic tool. They had no clear preference when offered a choice between a more open interviewing approach versus a more closed checklist approach.

Discussion: Modelling Test-Treatment pathways is a useful step in synthesizing the evidence about medical tests and developing recommendations about them, but further technical development and training are needed to facilitate their use in evidence-based medicine.

O2 Using machine learning and crowdsourcing for the identification of diagnostic test accuracy

Anna Noel-Storr1, James Thomas2, Iain Marshall3, Byron Wallace4

1Cochrane Dementia and Cognitive Improvement Group, University of Oxford, Oxford, UK; 2EPPI-Centre, Department of Social Science, University College London, London, UK; 3Division of Health and Social Care Research, King’s College London, London, UK; 4College of Computer and Information Science, Northeastern University, Boston, USA

Correspondence: Anna Noel-Storr (anna.noel-storr@rdm.ox.ac.uk)

Identifying studies of diagnostic test accuracy (DTA) is challenging. Poor reporting and inconsistent indexing hampers retrieval, and the lack of validated filters means that sensitive searches often yield tens of thousands of results which require further manual assessment. Machine learning (ML) and crowdsourcing have shown to be highly effective at identifying reports of randomized trials, with Cochrane’s Embase screening project accurately identifying over 20,000 reports using a crowd model. Additionally, the project generated a large data set that could be used to train ML systems. The new workflow for RCT identification will combine automated and human screening to optimize system efficiency.

Aims and objectives

This study set out to evaluate the application of these two innovative approaches to DTA identification.

Methods

A gold standard data set (n = 1120) was created, composed of known DTA studies and realistic non-DTA reports. This data set was made available to both machine and crowd. Two ML strategies were evaluated: 1. An ‘active learning’ simulation, in which the abstracts presented for manual assessment were prioritized as a function of their predicted probability of relevance; 2. A binary classifier, which was evaluated via cross-validation. Outcomes of interest were machine and crowd recall and precision.

Results

At the time of writing, the experiments are ongoing. The active learning approach achieved 95% recall at a cost of 30% being manually screened, increasing to 100% after 77% screened. The binary classifier retrieved DTA articles with 95% recall, and 40% precision; 100% recall was possible, but with an associated precision of 13%.

Discussion

The gold standard used for this study was small but had the advantage of not being generated through the relative recall method. If the crowd can do this successfully, as has been shown in the case of Cochrane’s Embase project, then we will be in a position to create a vast human-generated gold standard dataset (across all relevant healthcare areas) that can be used to further improve machine learning accuracy.

This work could also be used to inform methodological filter development and refinement.

O3 Developing plain language summaries for diagnostic test accuracy (DTA) reviews

Penny Whiting1, Clare Davenport2, Mariska Leeflang3, Gowri GopalaKrishna3, Isabel de Salis4

1University Hospitals Bristol NHS Foundation Trust, School of Social and Community Medicine, Bristol, UK; 2Institute of Applied Health Research, University of Birmingham, Birmingham, UK; 3Department of Clinical Epidemiology, Biostatistics & Bioinformatics, Academic Medical Center, University of Amsterdam, Amsterdam, The Netherlands; 4School of Social and Community Medicine, University of Bristol, Bristol, UK

Correspondence: Penny Whiting (Penny.whiting@bristol.ac.uk)

A plain language summary (PLS) is a stand-alone summary of a Cochrane systematic review and should provide rapid access to its content. A clear PLS is essential to ensure that systematic reviews are useful to users who are not familiar with the more technical content of the review. Explaining the results of a Diagnostic Test Accuracy (DTA) review in plain language is challenging. The review methodology and results are less familiar than reviews of interventions and the two dimensional nature of the measure of a test’s accuracy (sensitivity and specificity) introduces further complexity. Additionally, DTA reviews are characterized by a large degree of heterogeneity in results across studies. The reason for this variation is not always clear and explaining this to readers, especially lay readers, is difficult. A further challenge is providing information about the downstream consequences of testing. Challenges in the interpretation of DTA reviews may be different for different target user groups, but this is something that has yet to be established. Ideally, a PLS should be accessible to all potential target audiences (patients, clinicians, policy makers).

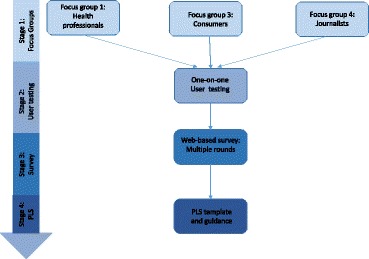

The overall aim of this project is to develop a template and guidance for PLS for Cochrane DTA reviews. We are using a four staged approach to develop this: qualitative focus groups, one-on-one user testing, web-based survey, and producing a template and guidance for PLS for DTA reviews based on the findings from the first three stages (Fig. 1). This presentation will provide a summary of the results from the focus groups, user testing and first rounds of the web-based survey. We will present the current version of the proposed PLS based on an example review of the IQCODE for diagnosing dementia. We will then invite the audience to provide feedback on various aspects of the proposed example PLS using interactive turning point voting software. Feedback from the presentation will then be incorporated into the next version of the PLS.

Fig. 1 (abstract O3).

See text for description

O4 Prediction model study risk of bias assessment tool (PROBAST)

Sue Mallett1, Robert Wolff2, Penny Whiting3, Richard Riley4, Marie Westwood2, Jos Kleinen2, Gary Collins5, Hans Reitsma6,7, Karel Moons6

1Institute of Applied Health Research, University of Birmingham, Birmingham, UK; 2Kleijnen Systematic Reviews Ltd, York, UK; 3University Hospitals Bristol NHS Foundation Trust, School of Social and Community Medicine, Bristol, UK; 4Research Institute for Primary Care and Health Sciences, Keele University, Keele, UK; 5Centre for Statistics in Medicine, Nuffield Department of Orthopaedics, Rheumatology and Musculoskeletal Sciences, University of Oxford, Oxford, UK; 6Julius Center for Health Sciences and Primary Care, University Medical Center Utrecht, Utrecht, The Netherlands; 7Cochrane Netherlands, University Medical Center Utrecht, Utrecht, The Netherlands

Correspondence: Sue Mallett (s.mallett@bham.ac.uk)

Background: Quality assessment of included studies is a crucial step in any systematic review. Review and synthesis of prediction modelling studies is a relatively new and evolving area and a tool facilitating quality assessment for prognostic and diagnostic prediction modelling studies is needed.

Objectives: To introduce PROBAST, a tool for assessing the risk of bias and applicability of prediction modelling studies.

Methods: A Delphi process, involving 42 experts in the field of prediction research, was used until agreement on the content of the final tool. Existing initiatives in the field of prediction research such as the REMARK (Reporting Recommendations for Tumor Marker Prognostic Studies) guidelines and the TRIPOD prediction model reporting guidelines formed part of the evidence base for the tool development. The scope of PROBAST was determined with consideration of existing tools, such as QUIPS and QUADAS.

Results: After seven rounds of the Delphi procedure, a final tool has been developed which utilises a domain-based structure supported by signalling questions similar to QUADAS-2, which assesses risk of bias and applicability of diagnostic accuracy studies. PROBAST assesses the risk of bias and applicability of prediction modelling studies. Risk of bias refers to the likelihood that a prediction model leads to distorted predictive performance for its intended use and targeted individuals. The predictive performance is typically evaluated using calibration, discrimination, and (re)classification. Applicability refers to the extent to which the prediction model from the primary study matches your systematic review question, for example in terms of the population or outcomes of interest.

PROBAST comprises five domains (participant selection, outcome, predictors, sample size and flow, and analysis) and 24 signalling questions grouped within these domains.

Conclusions: PROBAST can be used for the quality assessment of prediction modelling studies. The presentation will give an overview of the development process and the final version of the tool (including the addressed domains and signalling questions).

O5 Nonparametric meta-analysis for diagnostic accuracy studies

Antonia Zapf1, Annika Hoyer2, Katharina Kramer1, Oliver Kuss2

1Department of Medical Statistics, University Medical Center Göttingen, Göttingen, Germany; 2Institute for Biometry and Epidemiology, German Diabetes Center, Leibniz Institute for Diabetes Research at Heinrich Heine University, Düsseldorf, Germany

Correspondence: Antonia Zapf (Antonia.Zapf@med.uni-goettingen.de)

Background

Summarizing the information of many studies using a meta-analysis becomes more and more important, also in the field of diagnostic studies. The special challenge in meta-analysis of diagnostic accuracy studies is that in general sensitivity and specificity are co-primary endpoints. Across the studies, both endpoints are correlated, and this correlation has to be considered in the analysis.

Methods

The standard approach for such a meta-analysis is the bivariate logistic random effects model. An alternative, more flexible approach is to use marginal beta-binomial distributions for the true positives and the true negatives, linked by copula distributions. However, both approaches can lead to convergence problems. We developed a new, nonparametric approach of analysis, which has greater flexibility with respect to the correlation structure. Furthermore, the nonparametric approach avoids convergence problems.

Results

In a simulation study, it became apparent that the empirical coverage of all three approaches is in general below the nominal level. Regarding bias, empirical coverage, and mean squared error the nonparametric model is often superior to the standard model, and comparable with the copula model. I will also show the application of the three approaches for two example meta-analyses: one with very high specificities and low variability, and one with an outlier study.

Conclusion

In summary, the nonparametric model as compared with the standard model and the copula model has better or comparable statistical properties, no restrictions on the correlations structure and always converges. Subject of further research is the consideration of multiple thresholds per study.

Reference

[1] Zapf A, Hoyer A, Kramer K, Kuss O (2015). Nonparametric meta‐analysis for diagnostic accuracy studies. Statistics in Medicine, 34(29):3831–41.

O6 Meta-analysis of test accuracy studies using imputation for partial reporting of multiple thresholds

J. Ensor1, J. J. Deeks2, E. C. Martin3, R. D. Riley1

1Research Institute for Primary Care and Health Sciences, Keele University, Keele, UK; 2Institute of Applied Health Research, University of Birmingham, Birmingham, UK; 3Manchester Pharmacy School, University of Manchester, Manchester, UK

Correspondence: J. Ensor (j.ensor@keele.ac.uk)

Introduction: For continuous tests, primary studies usually report test accuracy results at multiple thresholds, but the set of thresholds used often differs. This creates missing data when performing a meta-analysis at each threshold. A standard meta-analysis (NI: No Imputation) ignores such missing data. A Single Imputation (SI) approach was recently proposed to recover missing threshold results using a simple piecewise linear interpolation. Here, we propose a new method (MIDC) that performs Multiple Imputation of the missing threshold results using Discrete Combinations, and compare the approaches via simulation.

Methods: The new MIDC method imputes missing threshold results (two by two tables) by randomly selecting from the set of all possible discrete combinations which lie between the results for two known bounding thresholds. Imputed and observed results are then synthesised in a bivariate meta-analysis at each threshold separately. This is repeated M times, and the M pooled results at each threshold are combined using Rubin’s rules to give final estimates.

Results: Compared to the standard NI approach, our simulations suggest both SI and MIDC approaches give more precise pooled sensitivity and specificity estimates, due to the increase in data. Coverage of 95% confidence intervals was also closer to 95%, with the MIDC method generally performing best, especially when the prevalence was low. This is primarily due to improved estimation of the between-study variances. In situations where the linearity assumption was valid in logit ROC space, and there was selective reporting of thresholds, the imputation methods also reduced bias in the summary ROC curve.

Conclusions: The MIDC method is a new option for dealing with missing threshold results in meta-analysis of test accuracy studies, and generally performs better than the current method in terms of coverage, precision and, in some situations, bias. A real example will be used to illustrate the method.

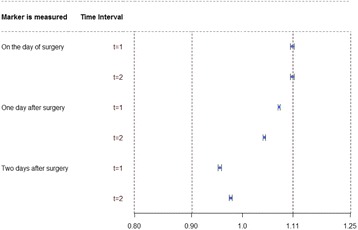

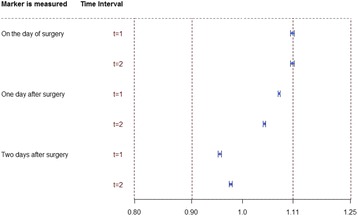

O7 Modelling multiple biomarker thresholds in meta-analysis of diagnostic test accuracy studies

Gerta Rücker1, Susanne Steinhauser2, Martin Schumacher1

1Institute for Medical Biometry and Statistics, Faculty of Medicine and Medical Center – University of Freiburg, Stefan-Meier-Str. 26, 79104 Freiburg, Germany; 2Institute of Medical Statistics, Informatics and Epidemiology, University of Cologne, Kerpener Str. 62, 50937 Cologne, Germany

Correspondence: Gerta Rücker (ruecker@imbi.uni-freiburg.de)

Background

In meta-analyses of diagnostic test accuracy, routinely only one pair of sensitivity and specificity per study is used. However, for tests based on a biomarker often more than one threshold and the corresponding values of sensitivity and specificity are known.

Methods

We present a new meta-analysis approach using this additional information. It is based on the idea of estimating the distribution functions of the underlying biomarker within the non-diseased and diseased individuals. Assuming a normal or logistic distribution, we estimate the distribution parameters in both groups applying a linear mixed effects model to the transformed data. The model accounts for both the within-study dependence of sensitivity and specificity and between-study heterogeneity.

Results

We obtain a summary receiver operating characteristic (SROC) curve as well as the pooled sensitivity and specificity at every specific threshold. Furthermore, the determination of an optimal threshold across studies is possible through maximization of the Youden index. The approach is demonstrated on a meta-analysis on the accuracy of Fractional Exhaled Nitric Oxide (FENO) for diagnosing asthma.

Conclusion

Our approach uses all the available information and results in an estimation not only of the performance of the biomarker but also of the threshold at which the optimal performance can be expected.

O8 Summarising and validating test accuracy results across multiple studies for use in clinical practice

Richard Riley1, Joie Ensor1, Kym Snell2, Brian Willis3, Thomas Debray4, Karel Moons5, Jon Deeks3, Gary Collins6

1Research Institute for Primary Care and Health Sciences, Keele University, Keele, UK; 2Keele University, Keele, UK; 3Institute of Applied Health Research, University of Birmingham, Birmingham, UK; 4University of Utrecht, Utrecht, Netherlands; 5Julius Center for Health Sciences and Primary Care, University Medical Center Utrecht, 3584 CG Utrecht, Netherlands; 6Centre for Statistics in Medicine, Nuffield Department of Orthopaedics, Rheumatology and Musculoskeletal Sciences, University of Oxford, Oxford, UK

Correspondence: Richard Riley (r.riley@keele.ac.uk)

Following a meta-analysis of test accuracy studies, the translation of summary results into clinical practice is potentially problematic. The sensitivity, specificity, and positive (PPV) and negative (NPV) predictive values of a test may differ substantially from the average meta-analysis findings, due to heterogeneity. Clinicians thus need more guidance: given the meta-analysis, is a test likely to be useful in new populations and, if so, how should test results inform the probability of existing disease (for a diagnostic test) or future adverse outcome (for a prognostic test)? In this presentation, we propose ways to address this [1].

Firstly, following a meta-analysis we suggest deriving prediction intervals and probability statements about the potential accuracy of a test in a new population. Secondly, we suggest strategies for how clinicians should derive post-test probabilities (PPV and NPV) in a new population based on existing meta-analysis results, and propose a cross-validation approach for examining and comparing their calibration performance. Application is made to two clinical examples. In the first, the joint probability that both sensitivity and specificity will be > 80% in a new population is just 0.19, due to a low sensitivity. However, the summary PPV of 0.97 is high and calibrates well in new populations, with a probability of 0.78 that the true PPV will be at least 0.95. In the second example, post-test probabilities calibrate better when tailored to the prevalence in the new population, with cross-validation revealing a probability of 0.97 that the observed NPV will be within 10% of the predicted NPV. We recommend that meta-analysts should go beyond presenting just summary sensitivity and specificity results, by also evaluating and, if necessary, tailoring their meta-analysis results for clinical practice [2].

We conclude with brief extension to the risk prediction modelling field, where similar issues occur: in particular, the distribution of model performance (e.g. in terms of calibration, discrimination and net-benefit) should be evaluated across multiple settings, as focusing only on summary performance can mask serious deficiencies [3].

References

1. Riley RD, Ahmed I, Debray TP, et al. Summarising and validating test accuracy results across multiple studies for use in clinical practice. Stat Med 2015; 34: 2081–2103.

2. Willis BH, Hyde CJ. Estimating a test’s accuracy using tailored meta-analysis: How setting-specific data may aid study selection. J Clin Epidemiol 2014; 67: 538–546.

3. Snell KI, Hua H, Debray TP, Ensor J, Look MP, Moons KG, Riley RD. Multivariate meta-analysis of individual participant data helped externally validate the performance and implementation of a prediction model. J Clin Epidemiol 2016; 69: 40–50

O9 Barriers to blinding: an analysis of the feasibility of blinding in test-treatment RCTs

Lavinia Ferrante di Ruffano1, Brian Willis1, Clare Davenport1, Sue Mallett1, Sian Taylor-Phillips2, Chris Hyde3, Jon Deeks1

1Institute of Applied Health Research, University of Birmingham, Birmingham, UK; 2Division of Health Sciences, Warwick Medical School, The University of Warwick, Coventry, UK; 3Exeter Test Group, University of Exeter Medical School, Exeter, UK

Correspondence: Lavinia Ferrante di Ruffano (ferrantl@bham.ac.uk)

Background: Test-treatment strategies are complex interventions involving four main ingredients: 1) testing, 2) diagnostic decision–making, 3) therapeutic decision–making, 4) subsequent treatment. Methodologists have argued that it may be impossible to control for performance bias when evaluating these strategies using RCTs, since test results must be used by clinicians to plan patient management whilst patients are often actively involved in testing processes and treatment selection. Analysis of complex therapeutic interventions has shown blinding is not always feasible, however claims regarding the ability to blind in test-treatment trials have not been evaluated.

Aim: This methodological review analysed a systematically–derived cohort of 103 test-treatment trials to determine the frequency of blinding, and feasibility of blinding care–providers, patients and outcome assessors.

Methods: Judgments of feasibility were based on subjective assessments following previously published methods1. Extraction and judgements were completed in duplicate, with final judgement decisions made as a group consensus consisting of methodologists and clinicians.

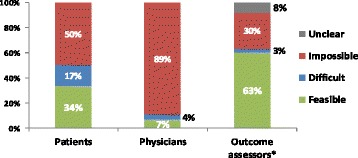

Provisional results: Care–providers, patients and outcome assessors were masked by 4%, 5% and 22% of trials, and could have been masked by a total of 11%, 50% and 66% respectively (Fig. 2). Scarcity of attempts to blind reflected the practical and ethical difficulties in performing sham diagnostic procedures, or masking real test results from patients and clinicians. Feasibility hinged on: the types of tests, nature of their comparison, type of information produced and circumstances surrounding their administration.

Fig. 2 (abstract O9).

The feasibility of blinding patients, care-providers and outcome assessors in test-treatment RCTs

Conclusions: These findings present worrying implications for the validity of test-treatment RCTs. Unexpectedly we found that in some circumstances blinding may alter or eliminate the desired test–treat effect, and recommend further investigation to determine the true impact of masking in these highly complicated trials.

Reference

1. Following method of Boutron et al. J Clin Epidemiol 2004;57:543–550

O10 Measuring the impact of diagnostic tests on patient management decisions within three clinical trials

Sue Mallett1, Stuart A. Taylor2, Gauraang Batnagar2, STREAMLINE COLON Investigators2, STREAMLINE LUNG Investigators2, METRIC Investigators2

1Institute of Applied Health Research, University of Birmingham, Birmingham, UK; 2Centre for Medical Imaging, University College London, London, UK

Correspondence: Sue Mallett (s.mallett@bham.ac.uk)

Background: Standard studies comparing diagnostic tests measure diagnostic test accuracy. Some trials also provide information on additional outcomes such as time to diagnosis and differences between the number of additional tests in patient pathway. Ideally diagnostic tests would be compared as interventions in randomised controlled trials (RCTs). However RCTs for comparison of diagnostic tests as interventions can be problematic to design and run. Problems include long time periods required for studies following patient outcomes during which either test or treatment pathways change, high numbers of patients required, high costs, ethical issues about randomising to receive tests, difficulty to understand role of diagnostic test as complex intervention, plus other barriers. However for some tests it may be possible to measure how tests affect patient management decisions within current diagnostic accuracy trials.

Aims: To describe three ongoing clinical trials measuring the impact of diagnostic tests on patient management.

Methods: Three trials, each comparing alternative diagnostic tests or diagnostic test pathways against a reference standard of normal clinical practice have been designed to collect patient management decisions. In each patient management decisions based on the alternative pathways are reported based on eight or ten alternative management options. STREAMLINE COLON and LUNG compare whole body MRI to current NICE recommended pathways for detection of metastases at diagnosis of colon and lung cancer respectively. METRIC compares ultrasound and MRI for diagnosing the extent and activity of Crohn’s disease in newly diagnosed and relapsed patients.

Discussion of bias and applicability: Including patient management decision into diagnostic accuracy studies increases understanding when comparing the role of diagnostic tests. Including patient management decision making can be onerous to collect in terms of clinical and trialist time. Reduction of bias through blinding of test results and patient management decisions between test pathways being compared, may only be achieved when patient management decisions are made outside of normal clinical pathways. However the most applicable decisions of patient management will be made by normal treating clinicians within normal clinical pathways, when blinding of both test results to each other is less feasible. Constraints of timing and personnel mean trialists may be choosing between trial designs at risk of bias with high applicability or at low risk of bias but high risk of clinical applicability. More methodology work on including patient management decisions based on diagnostic tests is required to understand best ways to design studies and to understand robustness and realism of different methods.

O11 Comparison of international evidence review processes for evaluating changes to the newborn blood spot test

Sian Taylor-Phillips1, Lavinia Ferrante Di Ruffano2, Farah Seedat3, Aileen Clarke3, Jon Deeks2

1Division of Health Sciences, Warwick Medical School, The University of Warwick, Coventry, UK; 2Institute of Applied Health Research, University of Birmingham, Birmingham, UK; 3University of Warwick, Coventry, UK

Correspondence: Sian Taylor-Phillips (s.taylor-phillips@warwick.ac.uk)

Background

Newborn blood spot screening involves taking a spot of blood from a baby’s heel in the first 7 days of life, and testing for a range of rare disorders using Tandem Mass Spectrometry. It is not possible to conduct randomised controlled trials of screening for these rare diseases, so decisions about which disorders to include must be made in the absence of such evidence. In this study we evaluated how the evidence is used to make national policy decisions about which diseases to include in the newborn blood spot test.

Methods

In the absence of RCT evidence, the evidence can be linked together to understand probable patient outcomes. We developed a framework of pathways to patient outcomes building on the work of Raffle and Gray, Harris et al., and Adriaensen et al. in screening, and di Ruffano et al. in test evaluation. We systematically reviewed the literature to identify national screening decision making organisations, their criteria and processes of decision making, and all policy and review documents related to the Newborn blood spot test with no time limits. For each country we analysed how the evidence for each patient pathway and outcome had been considered in practice.

Results

There was large variation between countries, the median number of disorders included in the newborn blood spot test was 19, ranging from 5 in Finland to 54 in the US. Methods of deciding which disorders to include involved expert panel consensus without formal evidence review (Netherlands), systematic review with meta-analysis and economic modelling (UK), and using recommendations and reviews from other countries (Italy). Key elements of pathways to patient outcomes included test accuracy, treatment benefit of early detection, and overdiagnosis. While 8/15 countries considered potential overdiagnosis in at least one review, only 1/15 (the UK) attempted to quantify the numbers overdiagnosed, and this used a comparison of prevalence between countries with and without screening which is subject to significant bias. Complete results by country by disease for pathways to patient outcomes covered, evidence review methods, and association between these and policy decisions will be available in time for the conference.

O12 Reviewing the quantity and quality of evidence available to inform NICE diagnostic guidance. Initial results focusing on end-to-end studies

Sarah Byron1, Frances Nixon1, Rebecca Albrow1, Thomas Walker1, Carla Deakin1, Chris Hyde2, Zhivko Zhelev2, Harriet Hunt2, Lavinia Ferrante di Ruffano3

1National Institute for Health and Care Excellence, Diagnostic Assessment Programme, Manchester, UK; 2Exeter Test Group, University of Exeter Medical School, Exeter, UK; 3Institute of Applied Health Research, University of Birmingham, Birmingham, UK

Correspondence: Chris Hyde (c.j.hyde@exeter.ac.uk)

Background

NICE has been producing guidance on medical diagnostic technologies since 2011. This has so far resulted in 24 pieces of guidance on wide-ranging topics. As part of the process of reviewing its methods, the pieces of guidance and the underpinning evidence are being examined to inform thinking on potential future developments. The expectation in diagnostics assessments is that end-to-end (E2E) studies, directly linking test use to patient outcome, such as comparative outcome studies like RCTs, are rarely available and so there will be a greater reliance on economic modelling as the main tool to assess whether the diagnostic technology is effective and cost-effective. This study reports findings on the availability, nature and impact of any E2E studies informing the guidance so far.

Objectives

To identify how many pieces of NICE diagnostics guidance were informed by E2E studies

Where E2E studies were found, to describe their nature

To describe how the E2E studies informed committee discussions and the final guidance

Methods

The approach was a document analysis of all pieces of published diagnostics guidance and the underpinning evidence. A data extraction form was developed and piloted on one of the pieces of diagnostics guidance and its underpinning evidence. Extraction was performed by one researcher and checked by a second. Data was tabulated and conclusions derived from the tables produced.

Main results

Although identifiable, the number of E2E studies could often not be quickly located in either the under-pinning reports or the guidance. 11/24, 46% (95% CI 26, 66) of guidance had any E2E studies, but in three of these the numbers were very small. The E2E studies were mostly RCTs. Where the test in the guidance was used for diagnosis, there was a mean of 1.9 E2E studies and where used for monitoring there was a mean of 12.2 E2E studies. The difference was unlikely to have occurred by chance alone (Wilcoxon Mann-Whitney Test U = 20 p < 0.05). In the guidance where there were substantial numbers of RCTs, clear account was taken of them as evidenced by the amount of space devoted to them in the “Outcomes” and “Considerations” sections

Authors’ conclusions

End-to-end studies are already an important part of the evidence base in the assessment of diagnostic technologies. HTA methods need to anticipate the likely continuing growth of these study types.

O13 Use of decision modelling in economic evaluations of diagnostic tests: an appraisal of Health Technology Assessments in the UK since 2009

Yaling Yang1, Lucy Abel1, James Buchanan1, Thomas Fanshawe1, Bethany Shinkins2

1Nuffield Department of Primary Health Care Sciences, University of Oxford, Oxford, UK; 2Academic Unit of Health Economics, Leeds Institute of Health Sciences, University of Leeds, Leeds, UK

Correspondence: Yaling Yang (yaling.yang@phc.ox.ac.uk)

Background: Diagnostic tests play an important role in the clinical decision-making process by providing information that enables patients to be stratified to the most appropriate treatment and management strategies. Timely and accurate diagnosis is therefore crucial for improving patient outcomes. By synthesising evidence from multiple sources, decision analytic modelling can be used to evaluate the cost-effectiveness of diagnostic tests in a comprehensive and transparent way.

Objectives: This study critically assesses the methods currently used to model the cost-effectiveness of diagnostic tests in Health Technology Assessment (HTA) reports published in the UK, and highlights areas in need of methodological development.

Methods: HTA reports published from 2009 onwards were screened to identify those reporting an economic evaluation of a diagnostic test using decision modelling. Existing decision modelling checklists were identified in the literature and reviewed. Based on this review a modified checklist was developed and piloted. This checklist covered 11 domains of good practice criteria, including:

whether the decision problem is clearly defined and the analytical perspective specified

whether the comparators are appropriate given the scope

whether the model structure is justified and reflects the natural progress of the condition and available treatment options

whether the inputs are consistent with the stated perspective

whether sources of parameter values are systematically identified, clearly referenced, and appropriately synthesised

whether model assumptions are discussed

whether appropriate sensitivity analyses are performed

A scoring system was then applied, with marks of ‘0, 0.5 and 1’ indicating that criteria were ‘not met, partially met, and met’, respectively. The results were analysed and summarised to demonstrate to what extent the HTA reports meet the quality criteria, and identify any outstanding challenges.

Results and conclusions: A total of 484 HTA reports have been published since 2009, of which 38 met the inclusion criteria. The reports covered a variety of conditions including cancers, chronic diseases, acute diseases and mental health conditions. The diagnostic tests included lab-based, genetic and point-of-care tests, imaging, clinical risk prediction scores, and quality of life measures. In general, the models were of high quality with a clearly defined decision problem and analytical perspective. The model structure was usually consistent with the health condition and care pathway. However, the inherent complexity of the models was rarely handled appropriately: limited justification was provided for selection of comparators and few models fully accounted for uncertainty in treatment effects. The analysis is ongoing and full results will be presented in the paper.

O14 Clinical utility of prediction models for ovarian tumor diagnosis: a decision curve analysis

Laure Wynants1,2, Jan Verbakel3, Sabine Van Huffel1,2, Dirk Timmerman4, Ben Van Calster4,5

1KU Leuven Department of Electrical Engineering (ESAT), STADIUS Center for Dynamical Systems, Signal Processing and Data Analytics, Leuven, Belgium; 2KU Leuven iMinds Department Medical Information Technologies, Leuven, Belgium; 3University of Oxford, Nuffield Department of Primarcy Care Health Sciences, Oxford, UK; 4KU Leuven Department of Development and Regeneration, Leuven, Belgium; 5Department of Public Health, Erasmus MC, Rotterdam, Netherlands

Correspondence: Laure Wynants (laure.wynants@esat.kuleuven.be)

Purpose: To evaluate the clinical utility of prediction models to diagnose ovarian tumors as benign versus malignant using decision curves.

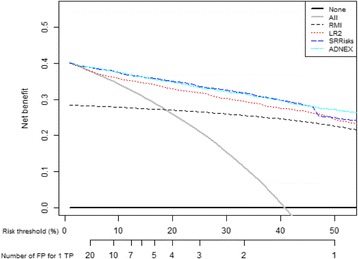

Methods: We evaluated the widely used RMI scoring system using a cut-off of 200, and the following risk models: ROMA and three models from the International Ovarian Tumour Analysis (IOTA) consortium (LR2, SRrisks, and ADNEX). We used a multicenter dataset of 2403 patients collected by IOTA between 2009 and 2012 to compare RMI, LR2, SRrisks, and ADNEX. Additionally, we used a dataset of 360 patients collected between 2005 and 2009 at the KU Leuven to compare RMI, ROMA, and LR2. The clinical utility was examined in all patients, as well as in several relevant subgroups (pre- versus postmenopausal, oncology versus non-oncology centers).

We quantified clinical utility through the Net Benefit (NB). NB corrects the number of true positives for the number of false positives using a harm-to-benefit ratio. This ratio is the odds of the risk of malignancy threshold at which one would suggest treatment for ovarian cancer (e.g. surgery by an experienced gynaecological oncologist). A threshold of 20% (odds 1:4) implies that up to 4 false positives are accepted per true positive. Using NB, a model can be compared to competing models or to default strategies of treating all or treating none. We expressed the difference between models as gain in ‘net specificity (i.e., sensitivity for a constant specificity, ΔNB/prevalence). 95% confidence intervals were obtained by bootstrapping.

Results: Thresholds between 5% (odds 1:19) and 50% (odds 1:1) were considered reasonable. RMI performed worst and was harmful, i.e. worse than treat all, at thresholds <20%. ADNEX and SRrisks consistently showed best performance (see Fig. 3). At the 10% threshold, SRrisks’ net sensitivity was 4% (95% CI 3% to 6%) higher than that of LR2, but similar to the net sensitivity of ADNEX (difference 0%, 95% CI -1% to 1%). Subgroup results showed similar patterns. On the second dataset, results for RMI were similar. In addition, LR2 performed best for the entire range of thresholds, and was the only model with clinical utility at a risk threshold of 10%.

Fig. 3 (abstract O14).

See text for description

Conclusions: NB supersedes discrimination and calibration to quantify the clinical utility of prediction models. Our data suggest superior utility of IOTA models compared to RMI and ROMA.

O15 Adjusting for indirectness in comparative test accuracy meta-analyses

Mariska Leeflang, Aeliko Zwinderman, Patrick Bossuyt

Department of Clinical Epidemiology, Biostatistics & Bioinformatics, Academic Medical Center, University of Amsterdam, Amsterdam, The Netherlands

Correspondence: Mariska Leeflang (m.m.leeflang@amc.uva.nl)

Background: The accuracy of a diagnostic test should be compared to the accuracy of its alternatives. Direct comparisons of tests, in the same patients and against the same reference standard, offer the most valid study design, but are not always available. Comparative systematic reviews are therefore bound to rely on indirect comparisons. As the results from these comparisons can be biased, we investigated ways to correct for indirectness.

Methods: From a large systematic review about the accuracy of D-Dimer testing for venous thromboembolism, we selected those comparisons between two assays that contained three or more direct comparisons and four or more indirect comparisons or single assay studies. Each comparison was analyzed using the bivariate random effects meta-regression model with assay-type, directness and interaction between the two as covariates in the model. In comparisons with a significant effect of the interaction term on sensitivity or specificity, we included the following study features to correct for these differences: referral filter, consecutive enrolment, time-interval, one or more reference standards, verification and year of publication.

Results: Seventeen comparisons were eligible for our analyses. In nine of these, the direct comparisons showed a significant difference between test A and test B while the indirect comparisons did not; or vice versa. However, the interaction term between assay and indirectness showed a significant (P < 0.05) effect on logit-sensitivity and/or logit-specificity in only four of them. Addition of study features as covariates removed the significant effect of the interaction term in two meta-analyses. In the first one, the interaction term was significant for sensitivity (P = 0.006), but after addition of the covariate ‘time-interval between index test and reference standard’ and after addition of the covariate ‘year of publication’, the P-value became 0.086 and 0.096 respectively. In the other analysis, the interaction term was significant for specificity (P = 0.039), but after addition of the covariate ‘all results verified’ and after addition of the covariate ‘only one reference standard used’, the P-value became 0.160 and 0.083 respectively.

Conclusions: Adjusting the effect of directness for study features seems to be possible in some instances, but no systematic effects were found. Study characteristics that may be influential in one comparison, may have no influence at all in another comparisons.

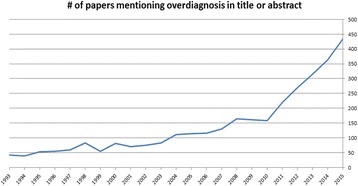

O16 Estimates of excess cancer incidence and cancer deaths avoided in Great Britain from 1980 -2012: the potential for overdiagnosis

Jason Oke, Jack O’Sullivan, Rafael Perera, Brian Nicholson

Nuffield Department of Primary Care Health Sciences, University of Oxford, Oxford, UK

Correspondence: Jason Oke (Jason.oke@phc.ox.ac.uk)

Introduction: Overdiagnosis is often described as the detection of disease that will not progress to cause symptoms or premature death. No consensus exists on the most appropriate method to estimate overdiagnosis. At a population level it can be estimated using incidence and mortality data with sufficient length of observation to account for lead time. We examined incidence and mortality patterns over the last 30 years for the most common cancers in Great Britain, with the aim of developing a method to identify potential overdiagnosis.

Methods: Mortality data were available since 1950 while incidence data were obtained from 1979. We used log-linear regression to model the long-term trend in age-standardised cancer-specific mortality rates for the “pre-diagnostic era” (1950–78) and used these results to predict both mortality and incidence rate in the “diagnostic era” (1980–2012). We used current (“diagnostic era”) incidence and mortality data from Cancer Research UK to calculate excess incidence and deaths avoided by subtracting the observed rates from the predicted rates in ten cancers types for men and women separately. We used the ratio of excess incidence to deaths avoided to summarise our findings.

Results: Simple straight-line models accounted for between 50 and 92% of variation seen in mortality rates in the pre-diagnostic era. Mortality in the diagnostic era closely followed the predicted trends except for breast cancer. In contrast, observed incidence was generally greater by several orders of magnitude to that predicted by the model. Cumulative excess incidence ranged from between 16 cases per 100,000 for thyroid cancer to 1763 per 100,000 for cervical cancer. The model estimated the number of cumulative deaths avoided as zero for the following cancers: oral (both men and women), prostate (men), bowel (men) and kidney (women). For the cancers where the ratio of excess incidence to deaths avoided could be estimated, these ratios varied from 1:1 (non-Hodgkin’s Lymphoma (NHL) in women) to 107:1 (Uterine in women).

Conclusions: The use of long-term mortality data may be useful for identifying and quantifying overdiagnosis by ecological analysis. Our results show that the incidence of many of the most common cancers in Great Britain has increased significantly in the last three decades but this has not necessarily prevented cancer deaths. We suggest that much of the increased detection represents the overdiagnosis of cancer.

O17 Identifying the utility and disutility associated with the over-diagnosis of early breast cancers for use in the economic evaluation of breast screening programmes

Hannah L. Bromley1, Tracy E. Roberts1, Adele Francis2, Denniis Petrie3, G. Bruce Mann4

1Department of Health Economics, University of Birmingham, Birmingham, UK; 2Department of Breast Surgery, Nuffield House, University Hospital Birmingham, Birmingham, UK; 3Melbourne School of Population and Global Health, University of Melbourne, Parkville, Australia; 4Department of Breast Surgery, Royal Women’s Hospital, Melbourne, Australia

Correspondence: Tracy E Roberts (T.E.ROBERTS@bham.ac.uk)

Background: Misplaced policy decisions about screening programmes may exist unless the decision process explicitly accommodates the disutility of screening and treating individuals subject to over-diagnosis. In breast cancer screening, radical surgery or radiotherapy for a woman with an over-diagnosed result would impose a serious unnecessary harm on that woman. At the individual level the harm may not actually be realised because the woman may never know that she had her breast removed unnecessarily. However, at a societal level these collective harms, if quantified, could be included in the analysis and might serve to outweigh the benefits of the screening programme. Recent evidence suggests the benefits of screening programmes have been overstated but the extent and duration of the loss of quality of life as a result of over-diagnosis has been under-researched.

Objectives: To explore the hypothesis that the explicit inclusion of potential disutility associated with the treatment of over-diagnosed early breast cancers will change the relative cost-effectiveness of the current recommended breast screening strategy.

Methods: Preliminary literature searches have shown that although multiple utility health states exist for early and metastatic breast cancers, there is significant heterogeneity between values and limited research on quantifying values associated with the breast screening programme itself. Little has been done to address the problem of over-diagnosis in breast cancer screening or attempts made to quantify associated losses in quality of life. A systematic review of utility and disutility values associated with breast screening is carried out to inform the design of a pilot study devised to capture the disutilities associated with over-diagnosis in screening mammography.

Results: The review results and protocol for the primary work will be completed by June. It is anticipated that women will report a loss in utility associated with the screening process, in particular with false positive mammograms, but limited numbers of such studies may render pooling of these states problematic. Very few economic evaluations of screening mammography explicitly include over-diagnosis in their analysis.

Discussion: This study highlights the challenges of estimating and incorporating the disutility of over-diagnosis in evaluations of screening programmes. The results from the review and the pilot study will be incorporated into a model based economic evaluation of the breast screening programme to estimate losses in quality of life associated with unnecessary treatment as a result of over-diagnosis.

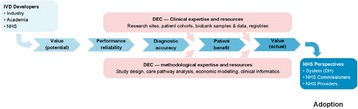

O18 Systematic review of frameworks for staged evaluation of predictive biomarkers

Kinga Malottki1, Holly Smith1, Jon Deeks2, Lucinda Billingham1

1Cancer Research UK Clinical Trials Unit (CRCTU), Institute of Cancer and Genomic Sciences, University of Birmingham, Birmingham, UK; 2Institute of Applied Health Research, University of Birmingham, Birmingham, UK

Correspondence: Kinga Malottki (k.malottki@bham.ac.uk)

Background: Stratified medicine has been defined as using predictive biomarkers to identify cohorts of patients more likely to benefit from a treatment (or less likely to experience a serious adverse event). There are numerous successful predictive biomarkers that have changed clinical practice. There are also examples where potential predictive biomarkers failed at a late stage of development (ERCC1 expression – platinum-based chemotherapy), or there is uncertainty about their utility in spite of being introduced into clinical practice (EGFR expression - erlotinib). These examples, together with the need to optimise the use of resources by prioritising research activities, suggest a structured approach to biomarker development may be necessary. There is a well-established model for phased evaluation of drugs, however no such model is in place for predictive biomarkers. There have been various publications on this topic both by research groups and institutions (such as the FDA). However there is no accepted model and it remains unclear whether there is consensus in the literature on the best approach to staged evaluation of predictive biomarkers.

Aim: To identify existing frameworks for staged evaluation of predictive biomarkers and the stages these propose. For the identified stages, to explore the outcomes, relevant study designs and requirements for the entry into and completion of each stage. To compare and contrast the different frameworks and therefore identify requirements for development of a predictive biomarker.

Methods: We have undertaken a systematic review of papers suggesting a framework for staged evaluation of predictive biomarkers. These were identified through broad searches of MEDLINE, EMBASE and additional internet searches. The identified frameworks were compared and grouped based on the context in which the development of a predictive biomarker was discussed (for example development of a biomarker predicting treatment safety) and the stages proposed.

Findings: We identified 22 papers describing a framework for staged evaluation of predictive biomarkers. These were grouped into four models: (1) general predictive biomarker development, (2) integrated into phased drug development, (3) development of a multi-marker classifier and (4) development of marker predicting treatment safety. It appeared that the most complete model was (1) general, which comprised stages: pre-discovery, discovery, analytical validation, clinical validation, clinical utility, implementation. The remaining models mostly contained stages corresponding to these, however models (2) and (3) did not contain analytical validation and model (4) clinical validation. The stages in models (2-4) corresponding to those in model (1) were occasionally merged or divided into multiple stages. Different terminology was also used to describe similar concepts. Relevant study designs were described for all stages, however there seemed to be consensus mainly for the clinical utility stage, where generally RCTs designed to evaluate the biomarker were suggested (including enrichment, stratified and biomarker-strategy designs).

Conclusions: The identified models suggest the need to consider the context in which the biomarker is developed. There was a large overlap between the four models, suggesting consensus on at least some of the research steps that may be necessary prior to predictive biomarker implementation into clinical practice.

O20 Biological variability studies: design, analysis and reporting

Alice Sitch, Sue Mallett, Jon Deeks

Institute of Applied Health Research, University of Birmingham, UK

Correspondence: Alice Sitch (a.j.sitch@bham.ac.uk)

Introduction: To use a test to the best effect when monitoring disease progression or recurrence of patients it is vital to have accurate information regarding the variability of the test, including sources and estimation of measurement error. There are many sources of variability when testing a population. There is variability in the results for a single patient even when in a stable disease state, this fluctuation in results is within-individual variability, and there is also variability in results from person-to-person which is known as between-individual variability. When undertaking a test, there is pre-analytical variability which occurs before the test is analysed, including within-individual and between-individual variations e.g. timing of measurement. Analytical variation is the variability in test results during the process of obtaining the result, such as when a sample is assayed in a laboratory test. Where interpretation of tests can be subjective, there are intra-inter reader studies to assess variability, comparing interpretations from multiple observers.

Objectives: To review the current state of variability studies for tests to identify best methods and where studies could be improved. Our research focusses on design, sample size, methods of analysis and quality of studies.

Methods: To understand the scope of studies evaluating biological variability, the design, methods for analysis, reporting and overall quality, a review of studies of biological variation was conducted and, whilst conducting this review, the key methodological papers influencing these studies were identified. The searches used to identify papers to be included in the review were: key word search (bio* AND vari*) for the period 1st November 2013 to 31st October 2014; all articles published in the journals Clinical Biochemistry, Radiology and Clinical Chemistry during the period 1st January 2014 to 31st December 2014; papers included in the Westgard QC database published from 1st January 2000 onwards; and, detailed searches for three different test types (imaging, laboratory and physiological) in specific clinical areas: ultrasound imaging to assess bladder wall thickness in patients with incontinence; creatinine and Cystatin C measurements to estimate glomerular filtration rate (GFR) in patients with chronic kidney disease (CKD); and, spirometry to measure forced expiratory volume (FEV) in patients with chronic obstructive pulmonary disorder (COPD). In addition to the papers identified by these searches, published articles identified by previous and concurrent work meeting the criteria were included to enrich the sample.

Key information regarding the design, analysis and results reported was extracted from each paper. In addition, analyses of data from a biological variability study were conducted to demonstrate the current framework for design and analysis and investigate the impact of various components within this.

Results: The review identified 106 studies for assessment allowing the current state of the field with regard to design, analysis and reporting to be evaluated. We will present our findings on typical designs including examples of patient recruitment, sample sizes, analysis methods and sources of variability addressed.

Conclusions: This work will identify the current state of the methodology in this area to help identify where future work to improve the design, analysis and reporting of biological variability studies is needed.

O21 Intra- and interrater agreement with quantitative positron emission tomography measures using variance component analysis

Oke Gerke1, Mie Holm-Vilstrup1, Eivind Antonsen Segtnan1, Ulrich Halekoh2, Poul Flemming Høilund-Carlsen3

1Department of Nuclear Medicine, Odense University Hospital, Odense, Denmark; 2Epidemiology, Biostatistics and Biodemography, University of Southern Denmark, Odense, Denmark; 3Department of Nuclear Medicine, Odense University Hospital & Department of Clinical Research, University of Southern Denmark, Odense, Denmark

Correspondence: Oke Gerke (oke.gerke@rsyd.dk)

Purpose: Any quantitative measurement procedure needs to be both accurate and reliable in order to justify its use in clinical practice. Reliability concerns the ability of a test to distinguish patients from each other, despite measurement errors, and is usually assessed by intraclass correlation coefficients (ICC). Agreement, on the other hand, focuses on the measurement error itself, and various parameters are used in practice, comprising proportions of agreement, standard errors of measurement, coefficients of variations, and Bland-Altman plots. We revive variance component analysis (VCA) in order to decompose the observed variance attributable to different sources of variation (e.g. rater, scanner, time point), to derive relevant repeatability coefficients (RC), and to show the connection to Bland-Altman plots in a test-retest setting. Moreover, we propose a sequential sample size strategy when assuming differences in a test-retest setting to follow approximately a Normal distribution.

Methods: Variants of the commonly used standard uptake value (SUV) in cancer imaging from two studies at our institution were used. In study 1, thirty patients were scanned once pre-operatively for the assessment of ovarian cancer. These 30 images were assessed two times by the same rater two months apart. In study 2, fourteen patients with a confirmed diagnosis of glioma were scanned up to 5 times before and during treatment, and the resulting 50 images were assessed by three raters. Studies 1 and 2 served as examples for intra- and interrater variability assessment, respectively. In study 1, we treated ‘reading’ (1st vs. 2nd) as fixed factor and ‘patient’ as random factor. In study 2, both ‘rater’ and ‘time point’ were considered fixed effects, whereas ‘patient’ and ‘scanner’ were treated as random effects. The sequential sample size strategy, post hoc applied to data from study 1, was based on a hypothesis test on the population variance of the differences between measurements, assuming that the differences follow a Normal distribution. An overall recruitment plan of 15 + 15 + 20 patients was assumed, and the adjustment for multiple testing was done by applying a α-spending function according to Kim, DeMets (Biometrika 1987).

Results: In study 1, the within-subject standard deviation times 2.77 resulted in a RC of 2.46, which is equal to the half width of the Bland-Altman band. The RC is the limit within which 95% of differences will lie. In Study 2, the RC for identical conditions (same patient, same rater, same time point, same scanner) was 2392, allowing for different scanners resulted in a RC of 2543. The differences between raters were, though, negligible compared to the other factors: estimated difference between reader 1 and 2: -10, 95% CI: -352 to 332; reader 1 vs. 3: 28, 95% CI: -313 to 370. The adjusted significance levels for the tests conducted with 15 and 30 patients, respectively, were 0.015 and 0.03. Investigating a range of hypothetical population variance values of 0.25, 0.5, …, 4 resulted in rejecting the one-sided null for 3 at both stages.

Conclusion: VCA seems to be an obvious, yet intriguing approach to agreement studies which often are tackled with simple measures only. VCA is, indeed, built upon various model assumptions, but that had been neither obstacle to the wide use of ICCs in reliability analysis. The ice is getting even thinner when basing the sample size strategy on population variance tests (and the normality assumption), which, though, could be applied in an adaptive manner in the same way as it is often done in therapeutic trials.

O22 Robust novel tolerance intervals and correlated-errors-in-variables regressions for the equivalence and validation of new clinical measurement methods

Bernard G. Francq (bernard.francq@glasgow.ac.uk)

Robertson Centre for Biostatistics, University of Glasgow, Glasgow, UK

The need of laboratories to quickly assess the quality of samples leads to the development of new methods, and improvement of existing methods. It is hoped that these will be more accurate than the reference method. To be validated, these alternative methods should lead to results comparable (equivalent) with those obtained by a standard method.

Two main methodologies for assessing equivalence in method-comparison studies are presented in the literature. The first one is the well-known and widely applied Bland–Altman approach with its agreement intervals, where two methods are considered interchangeable if their differences are not clinically significant. The second approach is based on errors-in-variables regression in a classical (X,Y) plot and focuses on confidence intervals, whereby two methods are considered equivalent when providing similar measures notwithstanding the random measurement errors. This research reconciles these two methodologies and shows their similarities and differences using both real data and simulations. New consistent correlated-errors-in-variables regressions are introduced as the errors are shown to be correlated in the Bland–Altman plot. Indeed, the coverage probabilities collapse and the biases soar when this correlation is ignored. Robust novel tolerance intervals are compared with agreement intervals, and novel predictive intervals are introduced with excellent coverage probabilities.

We conclude that the (correlated)-errors-in-variables regressions should not be avoided in method comparison studies, although the Bland–Altman approach is usually applied to avert their complexity. We argue that tolerance or predictive intervals are better than agreement intervals. It will be shown that tolerance intervals are easier to calculate and easier to interpret. Guidelines for practitioners regarding method comparison studies will be discussed.

Reference

1. Francq, B. G., and Govaerts, B. (2016) How to regress and predict in a Bland–Altman plot? Review and contribution based on tolerance intervals and correlated-errors-in-variables models. Statist. Med., doi: 10.1002/sim.6872

O23 Validation of using early modelling to predict the performance of a monitoring test – the use of the ELF biomarker in liver disease modelling and the ELUCIDATE trial

Jon Deeks1, Alice Sitch1, Jac Dinnes1, Julie Parkes2, Walter Gregory3, Jenny Hewison3, Doug Altman4, William Rosenberg5, Peter Selby3

1Institute of Applied Health Research, University of Birmingham, Birmingham, UK; 2University of Southampton, Southampton, UK; 3University of Leeds, Leeds, UK; 4Centre for Statistics in Medicine, Nuffield Department of Orthopaedics, Rheumatology and Musculoskeletal Sciences, University of Oxford, Oxford, UK; 5University College London, London, UK

Correspondence: Jon Deeks (j.deeks@bham.ac.uk)

Background: Monitoring tests can be used to identify disease recurrence or progression. Monitoring strategies are complex interventions, involving specification of a test, a schedule, a threshold or decision rule based on test results, and subsequent diagnostic or therapeutic action. Before undertaking an RCT of a monitoring strategy all four of these components of the monitoring intervention need to be defined. Excess false positives and potentially unnecessary interventions can be caused by using a poorly discriminating or imprecise test, monitoring too frequently, or choosing to act at too low a threshold.

Aim: The study focused on evaluating monitoring strategies using the ELF test to detect progression of fibrosis to decompensated cirrhosis in patients with severe liver disease. The study had two aims: (1) To use early modelling to predict performance of the ELF test for different monitoring schedules and thresholds; (2) To validate the modelling by comparison with results from an RCT of the monitoring strategy.

Methods: A simulation model was constructed using evidence from the literature, existing data sources and expert opinion to inform disease progression, relationship of the marker with the disease state, and measurement error in the marker. The test schedule and decision rule were varied to identify optimal strategies. The ELUCIDATE RCT randomized 878 patients to an ELF based monitoring strategy or usual care, and was undertaken at the same time as the modelling. Data from the RCT are now available on process of care outcomes, disease based outcomes will be available in the future. Comparisons are made between predictions from the simulation model and results of the trial.

Results: Identifying data to build the simulation model was challenging, particularly concerning test characteristics. The simulation model demonstrated that the performance of the monitoring strategy was most influenced by estimates of disease progression, measurement error of the test and the test threshold. The test strategy as used in the ELUCIDATE trial was predicted to lead to high rates of early intervention, which was then observed in the trial in terms of numbers of patients being referred for further investigation in the monitoring arm than in usual care.

Conclusion: Early modelling of monitoring strategies is recommended prior to undertaking RCTs of monitoring strategies to assist in determining optimal test thresholds and frequencies. The study highlights the importance of obtaining valid data on both the performance of the test and the progression of disease before planning trials.

O24 Diagnostic accuracy in the presence of an imperfect reference standard: challenges in evaluating latent class models specifications (a Campylobacter infection case)

Julien Asselineau1, Paul Perez1, Aïssatou Paye1, Emilie Bessede2, Cécile Proust-Lima3

1Bordeaux University Hospital, Public Health Department, Clinical Epidemiology Unit and CIC 1401 EC, Bordeaux, France; 2French National Reference Center for Campylobacter and Helicobacter, Bordeaux, France; 3INSERM U1219, Bordeaux Population Health Research Center, Bordeaux, France; Univ. Bordeaux, ISPED, Bordeaux, France

Correspondence: Julien Asselineau (julien.asselineau@isped.u-bordeaux2.fr)

Introduction: Usual methods to estimate diagnostic accuracy of index tests in the presence of an imperfect reference standard result in biased accuracy and prevalence estimates. The latent class model (LCM) methodology deals with imperfect reference standard by statistically defining the true disease status and possibly assuming residual dependences between diagnostic tests conditionally on this status. Different dependence specifications can lead to inconsistent accuracy estimates, therefore thorough evaluation of models should be systematically undertook although this is rarely done in practice. We use the study of new campylobacter detection tests in which bacteriological culture is an imperfect reference standard to illustrate the complexity of the implementation of a LCM methodology to assess the diagnostic accuracy of detection tests.

Methods: Five tests of campylobacter infection (bacteriological culture, one molecular test and three immunoenzymatic tests) were applied to stool samples of 623 symptomatic patients at Bordeaux and Lyon University Hospital in 2009. Their sensitivity and specificity were estimated with LCMs using maximum likelihood method after probit or logit transformations. Conditional independence hypothesis between tests was relaxed by specifying alternative dependence structures based on random effects. Performances of the models were compared using information criteria, goodness-of-fit statistics with asymptotic or empirical distributions (to tackle many rare or missing profiles) and bivariate residual statistics. Two main functions implementing LCMs were used: NLMIXED procedure in SAS® and randomLCA package in R.

Results: Among the 25 = 32 theoretical profiles of test responses, 17 were observed including 10 with 3 patients or more. The model under conditional independence hypothesis presented the worst Akaike information criterion (AIC) and was highly rejected by all statistics. Introducing a random effect common to all diagnostic tests improved the AIC but the model was still rejected by nearly all statistics. Among the other dependence structures evaluated, the model assuming a residual dependence between the three immunoenzymatic tests showed the best AIC. Statistics using empirical distributions were just above the significance level (p > 0.05) except for the total bivariate residual statistics (p = 0.03). With this model, prevalence of campylobacter infection was 0.11. As expected, culture presented the lowest sensitivity (82.1% vs 85.2%–98.5% for other tests) and the highest specificity (99.6% vs 95.8%–98.4% for other tests). When evaluated by simulations, performances of NLMIXED procedure for the random effect shared by all diagnostic tests showed low coverage rates while randomLCA package provided correct inferences.

Conclusion: LCM methodology allowed estimating the diagnostic accuracy of new campylobacter detection tests and of culture which is an imperfect reference standard as confirmed by our results. Model assessment steps are crucial to select the best specification in LCM and get valid accuracy estimates. However, their interpretation is tricky because of discordant conclusions depending on the statistics used. Rare or missing profiles are frequent when diagnostic tests present high accuracy making use of asymptotic distributions inadequate. Statistics using empirical distributions therefore need to be specifically implemented. Lastly, usual softwares can have limitations due to their unreliability (NLMIXED) or lack of flexibility (randomLCA).

O26 Measures to reduce the impact of missing data on the reference standard data when designing diagnostic test accuracy studies

Christiana Naaktgeboren1, Joris de Groot1, Anne Rutjes2,3, Patrick Bossuyt4, Johannes Reitsma1, Karel Moons1

1Julius Center for Health Sciences and Primary Care, University Medical Center Utrecht, 3584 CG Utrecht, Netherlands; 2CTU Bern, Department of Clinical Research, University of Bern, Bern, Switzerland; 3Institute of Social and Preventive Medicine, University of Bern, Bern, Switzerland; 4Department of Clinical Epidemiology and Biostatistics, Academic Medical Center, University of Amsterdam, Amsterdam, Netherlands

Correspondence: Christiana Naaktgeboren (c.naaktgeboren@umcutrecht.nl)

Despite efforts to determine the presence or absence of the condition of interest in all participants in a diagnostic accuracy study, missing reference standard results (i.e. missing outcomes) are often inevitable and should be anticipated in any prospective diagnostic accuracy study.

Analyses that include only the participants in whom the reference standard was performed are likely to produce biased estimates of the accuracy of the index tests. Several analytical solutions for dealing with missing outcomes are available; however, these solutions require knowledge about the pattern of missing data, and they are no substitute for complete data.

In this presentation we aim to provide an overview of the different patterns of missing data on the reference standard (i.e. incidental missing data, data missing by research design, data missing due to clinical practice, data missing due to infeasibility), the recommended corresponding solutions (i.e. analytical correction methods or including a second reference standard), and the specific measures that can be taken before and during a prospective diagnostic study to enhance the validity and interpretation of these solutions. In the presentation various examples will be discussed.

Researchers should anticipate the mechanisms that generate missing reference standard results before the start of a study, so that measures and actions can explicitly be taken to reduce the potential for biased estimates of the accuracy of the tests, markers, or models under study, as well as to facilitate correction in the analysis phase. In all cases, researchers should include in their study report how missing data on the index test and reference standard were handled, as invited by the STARD reporting guideline.

O27 Quantifying the impact of different approaches for handling continuous predictors on the performance of a prognostic model

Gary Collins1, Emmanuel Ogundimu1, Jonathan Cook1, Yannick Le Manach2, Doug Altman1

1Centre for Statistics in Medicine, Nuffield Department of Orthopaedics, Rheumatology and Musculoskeletal Sciences, University of Oxford, Oxford, UK; 2Departments of Anesthesia & Clinical Epidemiology and Biostatistics, Michael DeGroote School of Medicine, Faculty of Health Sciences, McMaster University and the Perioperative Research Group, Population Health Research Institute, Hamilton, Canada

Correspondence: Gary Collins (gary.collins@csm.ox.ac.uk)

Background: Continuous predictors are routinely encountered when developing a prognostic model. Categorising continuous measurements into two or more categories has been widely discredited. However, it is still frequently done when developing a prognostic model due to its simplicity, investigator ignorance of the potential impact and of suitable alternatives, or to facilitate model uptake.

Methods: A resampling study was performed to examine three broad approaches for handling continuous predictors on the performance of a prognostic model: 1. Multiple methods of categorising predictors (including dichotomizing at the median; categorising into 3, 4, 5, equal size groups; categorising age only into 5 and 10-year age groups), 2. modelling a linear relationship between the predictors and outcome, and 3. modelling a nonlinear relationship using fractional polynomials or restricted cubic splines. Using the THIN dataset, we used primary care general practice data (from England) to develop models using Cox regression to predict a) the 10-year risk of cardiovascular disease (n = 1.8 million) and b) 10-year risk of hip fracture (n = 1 million). We also examine the impact of sample in developing the prognostic models on model performance (using data sets with 25, 50, 100 and 2000 outcome events). We compare the performance (measured by the c-index, calibration and net benefit) of prognostic models built using each approach, evaluating them using separate data from Scotland.

Results: Our results show that categorising continuous predictors produces models with poor predictive performance (calibration and discrimination) leading to limited clinical usefulness (net benefit). A large difference of between the mean c-index produced by the approaches (as large as 0.1 for the hip fracture model) that did not categorise the continuous predictors and the approach that dichotomised the continuous predictors at the median. The calibration of the models was poor for all methods that used categorisation, which was further exacerbated when the models were developed on small sample sizes. Over a range of clinically relevant probability thresholds, an additional net 5 to 10 cardiovascular disease cases per 1,000 were found during validation if models that implemented fractional polynomials or restricted cubic splines were used, rather than models that dichotomised all of the continuous predictors at the median without conducting any unnecessary treatment. The models that used fractional polynomials or restricted cubic splines (no difference between the two nonlinear approaches), or that assumed a linear relationship between the predictor and outcome all showed a higher net benefit, over a range of thresholds, than the categorising approaches

Conclusions: Categorising continuous predictors is unnecessary, biologically implausible and inefficient and should not be used in prognostic model development.

O28 Does ignoring clustering in multicenter data influence the performance of prediction models? A simulation study

Laure Wynants1,2, Yvonne Vergouwe3, Sabine Van Huffel1,2, Dirk Timmerman4, Ben Van Calster4,5

1KU Leuven Department of Electrical Engineering (ESAT), STADIUS Center for Dynamical Systems, Signal Processing and Data Analytics, Leuven, Belgium; 2KU Leuven iMinds Department Medical Information Technologies, Leuven, Belgium; 3Center for Medical Decision Sciences, Department of Public Health, Erasmus MC, Rotterdam, the Netherlands; 4KU Leuven Department of Development and Regeneration, Leuven, Belgium; 5Department of Public Health, Erasmus MC, Rotterdam, Netherlands

Correspondence: Laure Wynants (laure.wynants@esat.kuleuven.be)

Background: Clinical risk prediction models are increasingly being developed and validated on multicenter datasets. We investigate how the choice of modeling technique affects the predictive performance of the model, and whether this effect depends on the level of validation.

Method: We present a comprehensive framework for the evaluation of the predictive performance of prediction models at both the center level and the population level, considering population-averaged predictions, center-specific predictions and predictions assuming average center effects. We sampled large (100 events per variable) datasets from simulated source populations (n = 20,000, 20 centers) with strong clustering (intraclass correlation 20%). A random intercept (RI) model and a standard logistic regression (LR) model were built in each sample. The agreement between predicted and observed risks was evaluated in the remainder of the source population using the calibration slope, which ideally equals 1, the calibration intercept, which ideally equals 0, and the c-statistic.