Abstract

Causal discovery is the problem of learning the structure of a graphical causal model that approximates the true generating process that gave rise to observed data. In practical problems, including in causal discovery problems, missing data is a very common issue. In such cases, learning the true causal graph entails estimating the full data distribution, samples from which are not directly available. Attempting to instead apply existing structure learning algorithms to samples drawn from the observed data distribution, containing systematically missing entries, may well result in incorrect inferences due to selection bias.

In this paper we discuss adjustments that must be made to existing structure learning algorithms to properly account for missing data. We first give an algorithm for the simpler setting where the underlying graph is unknown, but the missing data model is known. We then discuss approaches to the much more difficult case where only the observed data is given with no other additional information on the missingness model known. We validate our approach by simulations, showing that it outperforms standard structure learning algorithms in all of these settings.

Keywords: Structure learning, Missing data, Causal Discovery

1. Introduction

Causal discovery is an unsupervised learning problem where the goal is to recover as much structure of a causal graphical model as possible from data generated from a process well-approximated by such a model. A large literature on this problem exists, with causal discovery algorithms falling into three general types. Constraint-based algorithms, such as the PC algorithm and the FCI algorithm (Spirtes et al., 2001), attempt to rule out graphs inconsistent with constraints found in the data, and return the set of remaining graphs. Score-based algorithms, such as GES (Chickering, 2002), rank models with a score that rewards model fit and penalizes model complexity, and find high-ranking models using exhaustive or local search. Finally, parametric methods such as LiNGAM exploit parametric assumptions to infer causal structure (Shimizu et al., 2006).

Using causal discovery algorithms in applications entails dealing with practical data analysis issues, including missing data. If available data has systematically missing entries, using only fully observed rows (complete cases) forms a biased view of the true underlying data distribution, which can severely affect the performance of causal discovery algorithms, as we later show.

In this paper, we consider the problem of causal discovery under missing data. We make use of a statistical technique called Inverse Probability Weighting (IPW) – first developed in Horvitz and Thompson (1952) and then improved and generalized in Robins et al. (1994) and Scharfstein et al. (1999) – which weights datapoints by their sampling probability to alleviate biases due to imbalances in population classes. IPW has numerous applications when missing data is present, such as improvements to M-estimation (Wooldridge, 2007) and estimating biased population statistics (Vansteelandt et al., 2010), such as the population mean. Our methods also make use of IPW, but do so in a way that takes into account information pertaining to the data generation process and causes of missingness in order to improve causal structure learning.

Via simulations, we show that in settings where the underlying causal graph is unknown, but the missing data model is known, our adjustments based on IPW result in high quality inferences about the underlying graph. We also consider more complicated versions of the problem where neither the underlying graph nor the missing data model is known. In this setting, we give evidence that an algorithm that considers, by brute force, all possible sequences of reweightings of the observed data, will yield the sparsest output graph if it uses reweightings corresponding to the true missing data model. Moreover, this sparsest output will be closer to the true graph than outputs that use reweightings not licensed by the true model. The brute force reweighting algorithm and the IPW-adjusted algorithms can be viewed as using identification results for the full data distribution in missing data problems (Mohan et al., 2013; Shpitser et al., 2015) to improve performance of structure learning when missing data is present.

For simplicity, we restrict our attention to learning directed acyclic graph (DAG) models using the PC algorithm (Spirtes et al., 2001), the standard constraint-based approach for DAGs. Although we do not pursue this here in the interests of space, our approach generalizes in a straightforward way to learning ancestral graphs (Richardson and Spirtes, 2002) using the FCI algorithm, and to learning nested Markov models (Richardson et al., 2017) using scoring approaches.

Our paper is organized as follows. Section 2 reviews notation and basic preliminaries, Section 3 discusses relevant material for causal discovery under missing data with our novel contributions beginning in subsection 3.3 onward, Section 4 contains our simulation results, and Section 5 contains our conclusions.

2. Notation and Preliminaries

We denote variables (or vertices in a graph) by capital letters V, and sets of variables (or vertices) by bold capital letters V. Values are denoted by lowercase letters v, and sets of values by bold lowercase letters v. For values v of V, and W ⊆ V, denote vW to be a subset of values in v for variables in W. A directed acyclic graph (DAG) is a graph with directed edges with no directed cycles. Given a DAG 𝒢 with a vertex set V, denote the sets of parents, children, ancestors, and descendants of V as pa𝒢(V), ch𝒢(V), an𝒢(V), and de𝒢(V), respectively. By convention, for any V, an𝒢(V) ∩ de𝒢(V) = {V}. For any V, define nd𝒢(V) ≡ V \ de𝒢(V). Given A ⊆ V, define the induced subgraph 𝒢A to be a DAG containing vertices A and only edges in 𝒢 between elements in A.

2.1. Statistical Models, Causal Models, and Causal Discovery of DAGs

A statistical model of a DAG 𝒢 with a vertex set V is the set of all distributions p(V) of the form

$$
p(\mathbf{V}) = \prod_{V \in \mathbf{V}} p\big(V \mid \mathrm{pa}_{\mathcal{G}}(V)\big). \tag{1}
$$

For disjoint subsets A,B,C of V, we say that A is independent of B given C in p(V), written as a shorthand as (A ⫫ B | C)p if p(A | B ∪ C) = p(A | C).

Conditional independences in a distribution that factorizes as (1) can be read off by the d-separation criterion (Pearl, 1988). We use (A ⊥ B | C)𝒢 as a shorthand for A being d-separated from B given C in a DAG 𝒢. In any distribution p(V) that factorizes as (1) according to a DAG 𝒢, the following global Markov property holds. For any disjoint subsets A,B,C of V,

$$
(\mathbf{A} \perp \mathbf{B} \mid \mathbf{C})_{\mathcal{G}} \;\Rightarrow\; (\mathbf{A} \perp\!\!\!\perp \mathbf{B} \mid \mathbf{C})_{p}. \tag{2}
$$
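The d-separation check can be sketched in a few lines. The snippet below is only an illustration, using the standard moralization test (restrict to the ancestors of the three sets, marry co-parents, drop directions, delete the conditioning set, and test connectivity). The dictionary encoding `parents` and the function names are assumptions made for this sketch; the example graph is the one described for Fig. 1 (d), with A → B → C and RB a child of A and C.

```python
from itertools import combinations

def ancestors(parents, nodes):
    """Return `nodes` together with all of their ancestors in the DAG."""
    seen, stack = set(nodes), list(nodes)
    while stack:
        v = stack.pop()
        for p in parents.get(v, ()):
            if p not in seen:
                seen.add(p)
                stack.append(p)
    return seen

def d_separated(parents, a, b, c):
    """True if sets a and b are d-separated given c (a, b, c assumed disjoint)."""
    a, b, c = set(a), set(b), set(c)
    keep = ancestors(parents, a | b | c)
    # Moralize the ancestral subgraph: undirected parent-child links,
    # plus a link between every pair of parents sharing a child.
    nbrs = {v: set() for v in keep}
    for v in keep:
        ps = list(parents.get(v, ()))
        for p in ps:
            nbrs[v].add(p)
            nbrs[p].add(v)
        for p, q in combinations(ps, 2):
            nbrs[p].add(q)
            nbrs[q].add(p)
    # Search from a without ever entering the conditioning set c.
    seen, stack = set(a), list(a)
    while stack:
        v = stack.pop()
        if v in b:
            return False
        for w in nbrs[v]:
            if w not in seen and w not in c:
                seen.add(w)
                stack.append(w)
    return True

# A -> B -> C, with the missingness indicator RB a child of A and C.
dag = {"A": [], "B": ["A"], "C": ["B"], "RB": ["A", "C"]}
sep_given_b = d_separated(dag, {"A"}, {"C"}, {"B"})
sep_given_b_rb = d_separated(dag, {"A"}, {"C"}, {"B", "RB"})
```

Here `sep_given_b` is true while `sep_given_b_rb` is false: conditioning on the common child RB opens a path between A and C.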

The notion of causality we consider in this paper is based on the intervention operation do(a) (Pearl, 2009). This operation can be viewed as altering a system’s normal state from the outside, in the same way the value of a variable in a normally operating computer program can be altered artificially by a debugger. Given a distribution p(V), a subset A of V, and a value set a, the variation in V \ A after the operation do(a) is performed is called an interventional distribution and is denoted by p(V\A | do(a)). Just as statistical models can be viewed as sets of distributions defined by restrictions, causal models can be viewed as sets of interventional distributions defined by restrictions. In this paper, we use the weakest causal model of a DAG 𝒢, which postulates that for any A ⊂ V, and any values a of A,

$$
p(\mathbf{V} \setminus \mathbf{A} \mid do(\mathbf{a})) = \prod_{V \in \mathbf{V} \setminus \mathbf{A}} p\big(V \mid \mathrm{pa}_{\mathcal{G}}(V)\big)\Big|_{\mathbf{A} = \mathbf{a}}.
$$
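To make the difference between intervening and conditioning concrete, the following sketch evaluates both on a small hypothetical DAG with binary variables C → A, C → Y, A → Y. The graph and all probability tables are invented for illustration. The interventional quantity uses the standard truncated factorization (Pearl, 2009): the factor for the intervened variable is dropped and its value is fixed.

```python
from itertools import product

# Hypothetical conditional probability tables (all variables binary).
p_c = {0: 0.5, 1: 0.5}                      # p(C = c)
p_a1_given_c = {0: 0.2, 1: 0.8}             # p(A = 1 | C = c)
p_y1_given_ac = {(0, 0): 0.1, (0, 1): 0.5,  # p(Y = 1 | A = a, C = c)
                 (1, 0): 0.4, (1, 1): 0.9}

def joint(c, a, y):
    """Observational joint p(c, a, y) from the DAG factorization."""
    pa = p_a1_given_c[c] if a == 1 else 1 - p_a1_given_c[c]
    py = p_y1_given_ac[(a, c)] if y == 1 else 1 - p_y1_given_ac[(a, c)]
    return p_c[c] * pa * py

def p_y1_do(a):
    """p(Y = 1 | do(A = a)): drop the factor p(A | C) and fix A = a."""
    return sum(p_c[c] * p_y1_given_ac[(a, c)] for c in (0, 1))

def p_y1_given(a):
    """p(Y = 1 | A = a): ordinary conditioning in the observational joint."""
    num = sum(joint(c, a, 1) for c in (0, 1))
    den = sum(joint(c, a, y) for c, y in product((0, 1), repeat=2))
    return num / den

do_value = p_y1_do(1)       # 0.5 * 0.4 + 0.5 * 0.9 = 0.65
cond_value = p_y1_given(1)  # 0.40 / 0.50 = 0.80
```

The two numbers differ because C confounds A and Y; the intervention removes the dependence of A on C, whereas conditioning does not.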

The task of causal discovery algorithms is recovering 𝒢 given a dataset corresponding to p(V) which factorizes according to 𝒢. In general, recovering the complete DAG is impossible due to observational equivalence, where two distinct DAGs 𝒢1, 𝒢2 yield the same statistical model. Because of observational equivalence, a causal discovery algorithm in general cannot distinguish distinct but observationally equivalent DAGs. For this reason, the goal of causal discovery algorithms for DAGs is recovering the true DAG up to its equivalence class.
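A well-known characterization (Verma and Pearl, 1990) is that two DAGs over the same vertices are observationally equivalent exactly when they share the same skeleton and the same unshielded colliders. The sketch below checks that condition; the dictionary encoding and function names are assumptions made for illustration.

```python
from itertools import combinations

def skeleton(parents):
    """Undirected adjacencies of a DAG given as {child: [parents]}."""
    return {frozenset((v, p)) for v, ps in parents.items() for p in ps}

def unshielded_colliders(parents):
    """Triples (p, v, q) with p -> v <- q and p, q non-adjacent."""
    skel = skeleton(parents)
    found = set()
    for v, ps in parents.items():
        for p, q in combinations(sorted(ps), 2):
            if frozenset((p, q)) not in skel:
                found.add((p, v, q))
    return found

def markov_equivalent(g1, g2):
    """Same skeleton and same unshielded colliders (same vertex set assumed)."""
    return (skeleton(g1) == skeleton(g2)
            and unshielded_colliders(g1) == unshielded_colliders(g2))

chain = {"A": [], "B": ["A"], "C": ["B"]}          # A -> B -> C
reversed_chain = {"C": [], "B": ["C"], "A": ["B"]}  # A <- B <- C
collider = {"A": [], "C": [], "B": ["A", "C"]}      # A -> B <- C

same_class = markov_equivalent(chain, reversed_chain)
different_class = markov_equivalent(chain, collider)
```

The two chains are indistinguishable from data alone, while the collider belongs to a different equivalence class.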

Regardless of the type of causal discovery algorithm used, assumptions are necessary for inferring causal structure from observational data. The standard assumption in the literature is that any conditional independence that holds in the data corresponds to some structural features of the underlying 𝒢. This assumption is called faithfulness. A distribution p(V) is said to be faithful for a DAG 𝒢 if for any disjoint subsets A,B,C of V,

$$
(\mathbf{A} \perp\!\!\!\perp \mathbf{B} \mid \mathbf{C})_{p} \;\Rightarrow\; (\mathbf{A} \perp \mathbf{B} \mid \mathbf{C})_{\mathcal{G}}. \tag{3}
$$

2.2. Missing Data and Missingness Graphs

In missing data problems, samples from the full data distribution p(V) are not available. Instead, a subset L ⊆ V is sometimes observed in the data and sometimes missing, while O ≡ V\L is always observed. For each Li ∈ L there exists an observability indicator Ri, such that Li is observed if Ri = 1, and Li is missing if Ri = 0. We define a set of indicators as R ≡ {Ri | Li ∈ L}. We represent this situation as follows. The variables in L are never observed themselves. However, for each Li ∈ L there exists a proxy variable Li* such that Li* = Li if Ri = 1, and Li* is equal to a special value “?” if Ri = 0. Writing L* ≡ {Li* | Li ∈ L}, the set of observed variables is Z ≡ O ∪ L* ∪ R.

The full data distribution p(V) and the observed data distribution p(Z) are related by the following identity: p(O,L*,R = 1) = p(V,R = 1) = p(R = 1 | V)p(V). This implies that if p(R = 1 | V) can be shown to be a function gR|V(p(Z)) of the observed data distribution, then, via IPW, the full data distribution p(V) is identified as p(V) = p(O,L*,R = 1) / gR|V(p(Z)).
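The identity can be checked numerically on a toy example. The sketch below assumes a hypothetical pair of binary variables, O (always observed) and L (sometimes missing), with a missingness probability that depends only on O; all numbers are invented. Dividing the observed quantity p(O, L*, R = 1) by p(R = 1 | V) returns the full data law exactly, while renormalizing the complete cases does not.

```python
# Hypothetical full data law p(O = o, L = l) over two binary variables.
p_full = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.2, (1, 1): 0.3}

# Assumed missingness mechanism: p(R = 1 | O, L) depends on O only.
p_r1_given_o = {0: 0.9, 1: 0.3}

# What the observed data identify: p(O = o, L* = l, R = 1).
p_obs = {(o, l): p_r1_given_o[o] * p for (o, l), p in p_full.items()}

# Complete-case analysis: renormalize the rows with R = 1.
total = sum(p_obs.values())
p_complete_case = {k: v / total for k, v in p_obs.items()}

# Inverse probability weighting: divide by p(R = 1 | V).
p_ipw = {(o, l): v / p_r1_given_o[o] for (o, l), v in p_obs.items()}

# Marginal of L under each reconstruction.
l1_full = p_full[(0, 1)] + p_full[(1, 1)]
l1_cc = p_complete_case[(0, 1)] + p_complete_case[(1, 1)]
l1_ipw = p_ipw[(0, 1)] + p_ipw[(1, 1)]
```

In this example the complete-case marginal p(L = 1 | R = 1) is 0.3, whereas both the full data law and the IPW reconstruction give 0.4.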

A number of missingness models exist which permit identification of the full data distribution. In this paper, we will use graphical models of missingness, introduced in Mohan et al. (2013). A missingness graph is a directed acyclic graph 𝒢 with vertices corresponding to variables O,L,L*,R with the property that for every Li* ∈ L*, pa𝒢(Li*) = {Li, Ri}, and for every Ri ∈ R, de𝒢(Ri) ∩ (O ∪ L) = ∅.

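Just as with regular DAGs, we associate distributions p(O,L,R,L*) with a missingness DAG 𝒢 with a vertex set O ∪ L ∪ R ∪ L* if it factorizes as (1) with respect to 𝒢:

$$
p(\mathbf{O}, \mathbf{L}, \mathbf{R}, \mathbf{L}^*) = \prod_{V \in \mathbf{O} \cup \mathbf{L} \cup \mathbf{R} \cup \mathbf{L}^*} p\big(V \mid \mathrm{pa}_{\mathcal{G}}(V)\big). \tag{4}
$$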

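Missingness graphs can also be viewed as causal models, with elements in R being particularly useful to subject to the intervention operation, since

$$
p(\mathbf{V}) = p(\mathbf{L}^*, \mathbf{O} \mid do(\mathbf{R} = \mathbf{1})) = \left. \frac{p(\mathbf{L}^*, \mathbf{O}, \mathbf{R} = \mathbf{1})}{\prod_{R_i \in \mathbf{R}} p\big(R_i = 1 \mid \mathrm{pa}_{\mathcal{G}}(R_i)\big)} \right|_{\mathbf{R} = \mathbf{1}}. \tag{5}
$$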

See Mohan et al. (2013) and Shpitser et al. (2015) for further details. Even though (5) is a version of the g-formula (in ratio form) for missing data problems, it cannot be applied directly to identify p(V), since elements of pa𝒢(Ri) for some Ri ∈ R may intersect L, and thus may not always be observed.

A general algorithm for identifying the full data distribution in missingness graphs was given in Shpitser et al. (2015). This algorithm adapted causal inference ideas for identifying interventional distributions of the form involved in defining controlled direct effects for the task of identifying p(Ri | pa𝒢(Ri)) evaluated at R = 1 for each Ri ∈ R, and then applied (5).

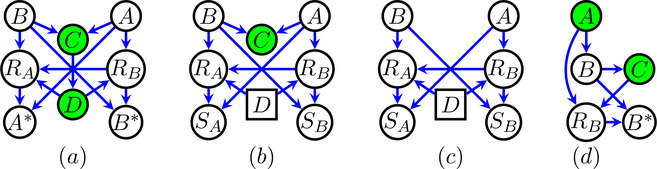

As an example, consider the graph in Fig. 1 (a), where O = {C,D}, L = {A,B}. The application of the algorithm in Shpitser et al. (2015), with certain subproblems arising in the operation of the algorithm shown in Fig. 1 (b),(c), yields , where

| (6) |

and

Figure 1:

Vertices in green correspond to variables that are always observed. (a) An example where the full data distribution p(A,B,C,D) is identifiable from the observed data distribution p(RA,RB,A∗,B∗,C,D). (b),(c) Graphs corresponding to subproblems considered by the identification algorithm. (d) A simple missingness graph, where causal discovery from the observed data distribution leads to recovering an erroneous graph.

3. Causal Discovery Under Missing Data

Having given the necessary preliminaries, we now consider the problem of causal discovery from missing data. We assume the observed data was generated from the observed data distribution p(Z) that was generated from a true missingness graph 𝒢 such that the distribution p(O,L,L*,R) factorizes according to 𝒢 as in (4).

A simple approach to causal discovery might be to ignore missing data entirely, and use the observed cases in the data as input to a causal discovery algorithm. The problem with this approach is illustrated by Fig. 1 (d), where A and C are always observed, and B is potentially missing, based on RB which is a function of A and C. Here, the true DAG has a single conditional independence (A ⫫ C | B), which would be easily recoverable if the algorithm were given samples from the full data distribution p(A,B,C). However, in the context of missing data, samples from this distribution are not available. If only samples from p(A,B*,C,RB) are available, and we use fully observed data rows, then the algorithm only sees samples from p(A,B,C | RB = 1). This introduces a form of selection bias known as Berkson’s bias in the statistics literature or “explaining away” in the artificial intelligence literature. In this particular case, the result is that A is no longer independent of C given B if we also insist on conditioning on RB, as easily verified by d-separation in Fig. 1 (d). This version of Berkson’s bias misleads the PC algorithm into returning the complete graph rather than the equivalence class corresponding to A → B → C.¹
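This bias can be verified exactly on a small discrete version of the example. The sketch below assumes binary variables, a chain A → B → C, and a noisy-or mechanism for RB with parents A and C; every numerical parameter is hypothetical. It computes the odds ratio between A and C within each stratum of B, which equals 1 under conditional independence.

```python
from itertools import product

def bern(p, x):
    """Probability that a Bernoulli(p) variable takes value x."""
    return p if x == 1 else 1.0 - p

def p_full(a, b, c):
    """Full data law for the chain A -> B -> C (hypothetical parameters)."""
    return bern(0.5, a) * bern(0.2 + 0.6 * a, b) * bern(0.2 + 0.6 * b, c)

def p_rb1(a, c):
    """Noisy-or missingness mechanism p(RB = 1 | A = a, C = c)."""
    return 1.0 - 0.9 * (0.3 ** a) * (0.3 ** c)

def p_selected(a, b, c):
    """Unnormalized law of the complete cases, p(a, b, c, RB = 1)."""
    return p_full(a, b, c) * p_rb1(a, c)

def odds_ratio(table, b):
    """Odds ratio between A and C within the stratum B = b."""
    t = {(a, c): table(a, b, c) for a, c in product((0, 1), repeat=2)}
    return t[(1, 1)] * t[(0, 0)] / (t[(1, 0)] * t[(0, 1)])

or_full = [odds_ratio(p_full, b) for b in (0, 1)]
or_complete_case = [odds_ratio(p_selected, b) for b in (0, 1)]
```

In the full data the odds ratio is 1 in both strata, so (A ⫫ C | B) holds. Among complete cases it equals 0.0919 / 0.5329, roughly 0.17, so a conditional independence test on complete cases would keep a spurious A, C edge.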

A more sophisticated alternative would attempt to approximate the full data distribution first, before applying causal discovery algorithms. The difficulty is that generally in order to identify the full data distribution, we need to know the missingness graph. However, in causal discovery settings the true graph is unknown. In addition, in order to recover the true graph in missing data contexts, we need to introduce an extension of the faithfulness assumption. Before doing so, we introduce necessary conditional graphs, and kernels, which correspond to intermediate worlds after IPW is applied, and the fixing operation, which corresponds to a single application of IPW.

3.1. Conditional Acyclic Directed Mixed Graphs, Kernels and Fixing

A conditional acyclic directed mixed graph (CADMG) 𝒢(V,W) is a graph containing directed (→) and bidirected (↔) edges with no directed cycles such that no edge with an arrowhead into W exists (vertices W are drawn as squares, and meant to denote constants, while V are drawn as circles and meant to denote random variables). A CADMG may contain both a → and ↔ edge between the same pair of vertices. Given a CADMG 𝒢(V,W), and a subset O ⊆ V, define the latent projection (Pearl, 2009) onto O, written 𝒢(O,W), as a CADMG with a vertex set O ∪ W, all edges in 𝒢 between elements in O ∪ W, and the following additional sets of edges. First, a directed edge from V1 to V2, for V1,V2 ∈ O ∪ W, is added if there exists a directed path from V1 to V2 with all intermediate elements not in O ∪ W. Second, a bidirected edge between V1,V2 ∈ O ∪ W is added if there exists a path of the form V1 ← ◦…◦ → V2 with no collider triples, with all intermediate elements not in O ∪ W. The latent projection can be viewed as a graphical analogue of marginalization in distributions, hence the suggestive notation. Note that a latent projection is not a simple graph, e.g. it may include both → and ↔ connecting the same pair of vertices.

Given a CADMG 𝒢(V,W), we say a vertex V ∈ V is fixable in 𝒢 if no Z ∈ V \ {V} exists such that V ∈ an𝒢(Z), and V ↔ ∘ ↔ ... ↔ ∘ ↔ Z exists in 𝒢. For any vertex V fixable in 𝒢, define a fixing operator ϕV(𝒢) that produces a new graph 𝒢(V \ {V}, W ∪ {V}) that removes all arrows with arrowheads into V, and moves V from V to W in 𝒢. For {V1, …, Vk} ⊆ V, a sequence ⟨V1, …, Vk⟩ is fixable in 𝒢 if V1 is fixable in 𝒢, V2 is fixable in ϕV1(𝒢), etc. For such a fixable sequence, define ϕ⟨V1,…,Vk⟩(𝒢) as the generalization of 𝜙V defined via function composition.
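The graphical side of fixing can be sketched as follows. The tuple encoding of a CADMG (random vertices, fixed vertices, directed edges, bidirected edges) and the function names are assumptions made for illustration. A vertex is treated as fixable when no other vertex is both one of its descendants and connected to it by a bidirected path.

```python
def descendants(directed, v):
    """Vertices reachable from v along directed edges, including v."""
    seen, stack = {v}, [v]
    while stack:
        x = stack.pop()
        for s, t in directed:
            if s == x and t not in seen:
                seen.add(t)
                stack.append(t)
    return seen

def district(bidirected, v):
    """Vertices connected to v by a path of bidirected edges, including v."""
    seen, stack = {v}, [v]
    while stack:
        x = stack.pop()
        for edge in bidirected:
            if x in edge:
                for y in edge - {x}:
                    if y not in seen:
                        seen.add(y)
                        stack.append(y)
    return seen

def fixable(graph, v):
    random_vs, fixed_vs, directed, bidirected = graph
    if v not in random_vs:
        return False
    return descendants(directed, v) & district(bidirected, v) == {v}

def fix(graph, v):
    """Remove every edge with an arrowhead into v and move v to the fixed set."""
    random_vs, fixed_vs, directed, bidirected = graph
    if not fixable(graph, v):
        raise ValueError(f"{v} is not fixable")
    return (random_vs - {v}, fixed_vs | {v},
            {(s, t) for s, t in directed if t != v},
            {e for e in bidirected if v not in e})

# Hypothetical example: A -> B -> C with A <-> C.
g = ({"A", "B", "C"}, set(), {("A", "B"), ("B", "C")}, {frozenset(("A", "C"))})
g_after_b = fix(g, "B")
```

In this example A is not fixable at first, because C is both a descendant of A and in its district. After fixing B the edge A → B is removed, and A becomes fixable, so ⟨B, A⟩ is a fixable sequence.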

Define a kernel q(V | W) as any mapping from values of W to a normalized density over V. Define marginalization and conditioning in kernels in the natural way. Given a kernel q(V | W) and a CADMG 𝒢(V,W), and V fixable in 𝒢, define a fixing operator ϕV(q; 𝒢) that produces a new object q(V \ {V} | W ∪ {V}), obtained by dividing q(V | W) by a conditional distribution of V derived from q (see Richardson et al., 2017, for the exact form). It is not difficult to show this object is always a kernel. For a fixable sequence above, define ϕ⟨V1,…,Vk⟩(q; 𝒢) as the natural generalization of ϕV(q; 𝒢) defined via function composition.

Given a kernel q(V | W), and any A ⊆ V, B,C disjoint subsets of V ∪ W such that W ⊆ B ∪ C, we say (A ⫫ B | C)q if q(A | B,C) is only a function of A ∪ C. Given a CADMG 𝒢(V,W), and a kernel q(V | W), we say that q is Markov relative to 𝒢 if for any A ⊆ V, B,C disjoint subsets of V ∪ W such that W ⊆ B ∪ C, if A is m-separated from B given C in 𝒢 then (A ⫫ B | C)q, where m-separation is a natural generalization of d-separation (Richardson and Spirtes, 2002).

If a CADMG/kernel pair is obtained by applying ϕ to a DAG 𝒢 and a distribution p(V) that factorizes according to 𝒢, then the kernel is Markov relative to the CADMG (Richardson et al., 2017). An important result in that reference is that any two fixable sequences in 𝒢 on the same set Z ⊆ V applied to a CADMG 𝒢 and kernel q Markov relative to 𝒢, if they are ultimately derived from hidden variable DAGs and their distributions in an appropriate way, lead to the same CADMG and kernel. For this reason, we can redefine fixing operators to apply to sets rather than sequences: ϕZ(𝒢) and ϕZ(q; 𝒢). A causal way to think about a CADMG and kernel q(V | W) obtained from p(V ∪ W) via ϕW is that they represent an interventional distribution p(V | do(w)) (identified from p(V) in a particular way represented by ϕW), and a graph representing conditional independences in this distribution.

3.2. Faithfulness in Missing Data Causal Discovery Problems

The standard faithfulness assumption (3) implies all conditional independences are “structural.” For our problem we also need to consider generalized independence constraints, sometimes known as “Verma constraints” (Verma and Pearl, 1990). A simple example of a Verma constraint occurs in Fig. 1 (a). Here, in order to apply missing at random (MAR) approaches, it would be desirable to have RA be conditionally independent of A given the set of observed variables {C,D} or its subset. It is easy to verify by d-separation in Fig. 1 (a) that this is not true for any such set. Nevertheless, it is possible to show that in any distribution p(A,B,C,D,RA,RB,A*,B*) that factorizes as in Fig. 1 (a), RA is independent of A conditionally on D,RB in the kernel ϕD(p(A*,B*,C,D,RA,RB); 𝒢(A*,B*,C,D,RA,RB)). In the case of Fig. 1 (a), it is these types of generalized independences that result in identifiability of the full data distribution. The type of faithfulness assumption we need states, informally, that generalized conditional independences themselves are also all structural.

Definition 1 (weak generalized faithfulness)

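Given a missingness graph 𝒢 with the observed variables Z ≡ O ∪ L* ∪ R, its latent projection 𝒢(Z) onto Z, and the corresponding distribution p(R,V), p is weakly faithful to 𝒢 if for any set W ⊆ Z fixable in 𝒢(Z), and any A ⊆ Z\W, and B,C disjoint subsets of Z\(W∪A) such that W ⊆ B ∪ C, (A ⫫ B | C) holds in the kernel ϕW(p(Z); 𝒢(Z)) if and only if A is m-separated from B given C in ϕW(𝒢(Z)).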

This assumption is stated on fixing sequences applied to the latent projection onto observed variables Z in a missingness graph 𝒢. All variables involved in fixing operations are also always observed.

3.3. Causal Discovery with Graph-Agnostic Identifiable Models

Aside from generalized faithfulness described above, the simplest additional assumption we can make to make causal inference in the presence of missing data possible is that sufficient information on p(R | V) is available such that (i) p(V) is identified by some function f(p(Z)), and (ii) this function is not sensitive to the structure of 𝒢. We call this type of missingness model a graph-agnostic identifiable model. A simple example of such a model assumes that for every Ri ∈ R, , and . In this model, results in Mohan et al. (2013) show that the full data distribution p(V) is identified via IPW as , regardless of what the structure of the edges among V vertices is.

An alternative commonly used missingness model is the sequential monotone missing at random (MAR) model. In this model, variables in V are assumed to be under a total ordering ≺, which induces a total ordering on elements of R, where Ri ≺ Rj if Li ≺ Lj. For every Li, define pre≺(Li) to be the set of variables in V earlier in the ordering than Li. Similarly, for every Ri, define pre≺(Ri) to be the set of variables in R earlier in the ordering than Ri. Monotonicity here means once a variable Li is missing, then for every Lj such that Li ≺ Lj, Lj is also missing. We further assume every Li is independent of Ri = 1, given pre≺(Ri) ∪ pre≺(Li). Under these assumptions, it is well known that regardless of graph structure on V, the full data distribution p(V) is identified as .

Causal discovery in graph-agnostic identifiable missingness models can be addressed by the following modification of the PC algorithm. First, use the structure of the (known) missingness model to obtain an estimator g(D) for p(1R | V), using an identified functional f(p(Z)) = p(1R | V). Then, replace the ordinary conditional independence tests in the PC algorithm with IPW-weighted tests, and apply the resulting modified PC algorithm to the observed data. As pseudo-code, we have:

Algorithm 1. CBR-PC algorithm

Input: Observed dataset D with corresponding variable sets V, O, L, L*, and R; a known estimator g(D) of p(1R | V).

Output: An equivalence class of DAGs over V.

    procedure CBR-PC
        Construct an IPW-weighted conditional independence algorithm tester(A,B,C; D) for any disjoint subsets A, B, C of V using D and g(D).
        return PC-alg(D, tester(A,B,C; D)).
    end procedure

⊳ PC-alg denotes the PC algorithm from Spirtes et al. (2001), taking as input a dataset D and an algorithm tester(.) for performing any conditional independence hypothesis test (A ⫫ B | C)p using a dataset D drawn from p.
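To show how a tester plugs into a PC-style search, here is a minimal sketch of the adjacency (skeleton) phase only, written against an abstract `tester(x, y, cond)` callable. It is not the full PC algorithm of Spirtes et al. (2001): edge orientation is omitted, and the function names and the hard-coded oracle are assumptions made for illustration. In CBR-PC the oracle would be replaced by an IPW-weighted test.

```python
from itertools import combinations

def pc_skeleton(nodes, tester):
    """Adjacency phase of a PC-style search.

    `tester(x, y, cond)` returns True when x and y are judged
    conditionally independent given the set `cond`.
    """
    adj = {v: set(nodes) - {v} for v in nodes}
    sepset = {}
    size = 0
    # Grow the conditioning-set size until no adjacent pair has enough neighbours.
    while any(len(adj[x] - {y}) >= size for x in nodes for y in adj[x]):
        for x in nodes:
            for y in list(adj[x]):
                for cond in combinations(sorted(adj[x] - {y}), size):
                    if tester(x, y, set(cond)):
                        adj[x].discard(y)
                        adj[y].discard(x)
                        sepset[frozenset((x, y))] = set(cond)
                        break
        size += 1
    edges = {frozenset((x, y)) for x in nodes for y in adj[x]}
    return edges, sepset

def chain_oracle(x, y, cond):
    """Independence oracle for A -> B -> C: only (A independent of C given B) holds."""
    return {x, y} == {"A", "C"} and "B" in cond

edges, sepset = pc_skeleton(["A", "B", "C"], chain_oracle)
```

With the oracle for the chain, the search removes only the A, C adjacency and records {B} as its separating set.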

For example, the finite sample version of the PC algorithm which uses hypothesis tests based on the correlation matrix can be augmented by instead computing an appropriately weighted correlation matrix, while versions which use non-parametric independence tests may augment each test with the appropriate weights. For simplicity, in our simulations we restrict our attention to using weighted correlation matrices. We call this version of PC the correlation-based reweighted PC algorithm (CBR-PC). The following result is straightforward, and generalizes to other versions of the PC algorithm that use IPW with weights given by f(p(Z)) = p(1R | V).
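The effect of reweighting the correlation matrix can be illustrated with a small simulation. The sketch below is not the authors' implementation. It assumes a linear Gaussian chain A → B → C, a hypothetical known missingness mechanism for B that depends on A and C, and a plain weighted Pearson correlation; all parameters and function names are invented for illustration.

```python
import math
import random

def weighted_corr(x, y, w):
    """Pearson correlation of x and y under observation weights w."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    cxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    cxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    cyy = sum(wi * (yi - my) ** 2 for wi, yi in zip(w, y))
    return cxy / math.sqrt(cxx * cyy)

def partial_corr(x, y, z, w):
    """Weighted partial correlation of x and y given a single variable z."""
    r_xy = weighted_corr(x, y, w)
    r_xz = weighted_corr(x, z, w)
    r_yz = weighted_corr(y, z, w)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

def p_r1(a, c):
    """Hypothetical known mechanism p(RB = 1 | A = a, C = c)."""
    return 0.9 if a + c > 0 else 0.1

rng = random.Random(0)
rows = []
for _ in range(40000):
    a = rng.gauss(0, 1)
    b = 0.8 * a + rng.gauss(0, 1)
    c = 0.8 * b + rng.gauss(0, 1)
    if rng.random() < p_r1(a, c):  # keep only rows where B is observed
        rows.append((a, b, c))

A, B, C = zip(*rows)
uniform = [1.0] * len(rows)
ipw = [1.0 / p_r1(a, c) for a, c in zip(A, C)]

rho_complete_case = partial_corr(A, C, B, uniform)
rho_ipw = partial_corr(A, C, B, ipw)
```

Under these assumptions the unweighted complete-case partial correlation of A and C given B is clearly negative, while the IPW-weighted version is close to zero, matching the independence (A ⫫ C | B) in the full data. A full tester would turn the weighted partial correlation into a hypothesis test, which this sketch leaves out.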

Theorem 1 The CBR-PC algorithm is asymptotically consistent.

Proof: This follows from asymptotic consistency of the ordinary PC algorithm (Spirtes et al., 2001), and the consistency of IPW estimators for any functional of the full data law, if p(R = 1 | V) is identified (Tsiatis, 2006).

3.4. Causal Discovery with Unknown Identifiable Missingness Models

A much more difficult setting assumes a true underlying missingness graph 𝒢 where p(V) is identifiable, but where no part of 𝒢 is known, including the parts related to the missingness model. There are a number of difficulties in this setting. First, the appropriate (strong) version of weak generalized faithfulness needed in this setting cannot yet be stated precisely. This is because without knowing 𝒢, we cannot be sure attempted divisions by conditional distributions are valid and interpretable causally as was the case with the operator ϕ. In such cases it is an open question whether graphs exist which properly capture independence structure of kernels obtained by such “invalid” operations, and if so what such graphs might look like. Without a characterization of these graphs, it is impossible to state a one to one correspondence between some notion of path separation in such graphs and independence in corresponding kernels formed by “invalid” operations. Second, the space of possible sequences of “invalid” fixing operations is very large. It is not currently known how to minimize the number of hypothesis tests performed, in the way the PC algorithm does, when fixing operations are involved. Third, given that an independence after fixing was found, it is not currently known what the full implications of this are for graphical structure.

Nevertheless, a simple algorithm for learning the graph of an unknown identifiable missingness model, based on a conjecture by James M. Robins, is as follows. Given a set of variables, try all possible fixing sequences, apply the PC algorithm to the resulting (possibly “non-causal”) kernel, and output the pattern with the fewest edges. As shown in the following section, the sparsest graph found in this way appears to be closest to the true graph, and corresponds to a sequence fixable under the true graph. However, the general validity of this type of algorithm is an open problem due to issues we discuss above.
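The outer loop of this brute-force search can be sketched as below. The sketch simplifies a fixing sequence to an ordered subset of variable names, and it takes the reweighting and PC steps as a single user-supplied callable; `all_sequences`, `sparsest_pattern`, and `toy_learner` are hypothetical names, and the toy learner returns canned edge sets rather than running PC.

```python
from itertools import permutations

def all_sequences(variables):
    """Every ordered subset of `variables`, including the empty sequence."""
    for k in range(len(variables) + 1):
        yield from permutations(variables, k)

def sparsest_pattern(variables, reweight_and_learn):
    """Return the (sequence, edges) pair whose learned pattern has fewest edges."""
    best = None
    for seq in all_sequences(variables):
        edges = reweight_and_learn(seq)
        if best is None or len(edges) < len(best[1]):
            best = (seq, edges)
    return best

def toy_learner(seq):
    """Stand-in for 'reweight by seq, then run PC'; returns canned edge sets."""
    dense = {("A", "B"), ("B", "C"), ("A", "C")}
    sparse = {("A", "B"), ("B", "C")}
    return sparse if seq == ("C",) else dense

n_sequences = len(list(all_sequences(["A", "B", "C"])))
best_seq, best_edges = sparsest_pattern(["A", "B", "C"], toy_learner)
```

Even with three variables there are 16 candidate sequences, which illustrates why the search space grows quickly with the number of variables.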

We now outline in more detail how to implement this algorithm, conjectured to be valid for finding correct structure up to equivalence in unknown identifiable missingness models. Recall that this algorithm applies PC to a kernel obtained by an arbitrary (and not necessarily fixable relative to the true graph) fixing sequence from the observed data distribution p(Z). Since we only have observed data as an empirical approximation of p(Z), we proceed via an iterative resampling scheme suggested by Robins. Assume we are interested in a fixing sequence where we divide by ⟨p(H1 | T1), … ,p(Hk | Tk)⟩. We first learn a model of p(H1 | T1;α1) via maximum likelihood (in our experiments we used a random forest method, but we believe any flexible strategy would be appropriate). Then, we use a weighted bootstrap approach with IPW to select, from the original dataset 𝒟 of size n, another dataset 𝒟1 of size n. Specifically, we select rows of 𝒟 with replacement, such that each row i has a selection probability proportional to 1/p(H1 = h1i | T1 = t1i; α1), normalized over all rows of 𝒟. After obtaining 𝒟1, we then fit a model p(H2 | T2; α2) by maximum likelihood now using 𝒟1, and use this model to select a dataset 𝒟2 of size n from 𝒟1, where rows are selected with replacement with probability similar to above, except the learned H1 model is replaced with the learned H2 model. We iteratively proceed in this way until we obtain 𝒟k. In addition, if a fixing operation p(Hj | Tj) is such that Tj contains all variables, then we ignore the operation (as it corresponds to a marginalization and can be safely skipped without affecting subsequent hypothesis tests). The dataset 𝒟k is used as an empirical approximation of the kernel resulting from applying the above fixing sequence to p(Z).
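A single step of the weighted bootstrap can be sketched as follows. The sketch swaps the random forest for an empirical conditional frequency table, which is the maximum likelihood fit for a saturated discrete model, and the variable names H and T, the parameters, and the helper names are hypothetical. Rows are drawn with replacement with probability proportional to 1/p(H | T).

```python
import random
from collections import Counter

def fit_conditional(rows, h, t):
    """Empirical estimate of p(H = h | T = t) from a list of dict rows."""
    joint = Counter((tuple(r[k] for k in t), r[h]) for r in rows)
    marginal = Counter(tuple(r[k] for k in t) for r in rows)

    def model(r):
        key = tuple(r[k] for k in t)
        return joint[(key, r[h])] / marginal[key]

    return model

def weighted_bootstrap(rows, model, rng):
    """Resample len(rows) rows with probability proportional to 1 / model(row)."""
    weights = [1.0 / model(r) for r in rows]
    return rng.choices(rows, weights=weights, k=len(rows))

def freq_h1(rows, t_value):
    """Fraction of rows with H = 1 among rows with T = t_value."""
    sub = [r["H"] for r in rows if r["T"] == t_value]
    return sum(sub) / len(sub)

rng = random.Random(0)
data = []
for _ in range(20000):
    t = int(rng.random() < 0.5)
    h = int(rng.random() < 0.2 + 0.6 * t)  # H depends on T in the original data
    data.append({"T": t, "H": h})

model = fit_conditional(data, "H", ["T"])
resampled = weighted_bootstrap(data, model, rng)
```

In the original data the frequency of H = 1 is about 0.2 when T = 0 and about 0.8 when T = 1. In the resampled data it is about 0.5 in both strata: dividing by p(H | T) removes the dependence of H on T, which is what the fixing operation is meant to achieve. Repeating the step with the next model in the sequence, on the resampled data, gives the iterative scheme described above.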

As an example of the scheme, assume we are interested in applying (6) to p(Z) given by variables in Fig. 1 (a). For , we first learn a model p(D | C), then use weighted bootstrap with weights 1/p(D | C) to generate a dataset from which a model for is learned (skipping the step of fixing C, since it corresponds to a marginalization). Similarly, for we use weighted bootstrap and IPW with weights 1/p(D | C) to generate a dataset , which is used to learn a model for p(RA | D,RB). We then use weighted bootstrap and IPW on again, with weights 1/p(RA | D,RB), to generate a dataset , which is used to learn a model for . Finally, both q weights are used to reweight observed cases, with the resulting weighted correlation matrix given as input to the PC algorithm.

The weighted bootstrap scheme can be viewed as mimicking the fixing operations of the identification algorithm for needed weights for R in Fig. 1 (a), but ignoring all fixing operations that correspond to marginalization. This is due to the fact that marginalizations do not affect independence statements, and all fixing operations can be reordered so marginalizations are computed last, see also Theorem 7 in Shpitser et al. (2009).

4. Simulations

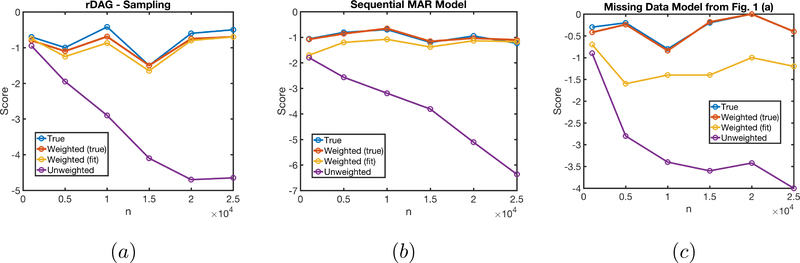

To illustrate the advantages of the CBR-PC algorithm, we focused on cases where the regular PC algorithm performs well given the full data distribution but performs poorly given observed cases only. The CBR-PC algorithm closes most of this performance gap, learning causal structure given samples from the observed data distribution nearly as well as the PC algorithm that is given access to samples from the full data distribution.

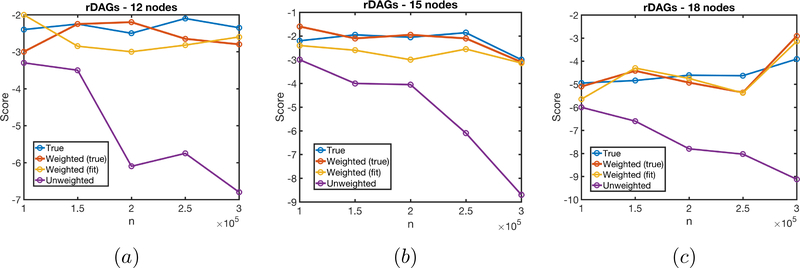

We generated random sparse DAGs of size 9, 12, 15 and 18 of degree 2. The DAGs were parameterized as multivariate normals via the standard parameterization given by the LDL decomposition of the precision matrix (Lauritzen, 1996). For all DAGs, we considered the model with disconnected R variables. For DAGs of size 9, we also considered the sequential monotone MAR model. In all cases, we generated R using a noisy-or model, in order to generate strong Berkson’s bias due to only observing cases where R = 1. For all cases, we generated 10 trials for 20 random DAGs, at sample sizes ranging from 1000 to 30000.

We note that the CBR-PC performance is not significantly affected by the percentage of missingness up to around 90%, at which point the small sample size of observed cases starts to negatively affect performance. This is in contrast to the original PC algorithm, which performs progressively worse as the percentage of missingness increases. For all simulations, we set the percentage of missing rows at around 60% ± 8% for each trial in order to clearly illustrate the negative effects of missing data the original PC algorithm may plausibly face in practical scenarios.

We compared four versions of the PC algorithm: the algorithm that used the true full data distribution, the algorithm that used the observed cases only, and the algorithms that reweighted observed cases using either the true model p(R | V), or the model p(R | V) learned using the random forest method described in Chipman et al. (2010). We scored the output of the algorithms by subtracting one point for every skeleton edge or collider that differed between the output and the true equivalence class, so that the best score, corresponding to recovering the true equivalence class, is 0.
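The scoring rule can be sketched as a symmetric difference count. This is one possible reading of the rule, not the authors' code: it assumes that "different" means present in exactly one of the two graphs, and that colliders are stored as (parent, child, parent) triples with the two parents in sorted order. The function names are hypothetical.

```python
def undirected(edges):
    """Represent skeleton edges without regard to orientation."""
    return {frozenset(e) for e in edges}

def score(true_edges, true_colliders, est_edges, est_colliders):
    """0 is best; one point is subtracted per differing edge or collider."""
    edge_diff = undirected(true_edges) ^ undirected(est_edges)
    collider_diff = set(true_colliders) ^ set(est_colliders)
    return -(len(edge_diff) + len(collider_diff))

true_edges = [("A", "B"), ("B", "C")]        # skeleton of A -> B -> C
complete = [("A", "B"), ("B", "C"), ("A", "C")]

perfect = score(true_edges, [], true_edges, [])
extra_edge = score(true_edges, [], complete, [])
wrong_collider = score(true_edges, [], true_edges, [("A", "B", "C")])
```

Recovering the true class scores 0, while returning the complete graph for a chain, as in the Berkson's bias example, scores -1.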

The results are shown in Fig. 2 (a), (b), and Fig. 3 (a), (b), (c). They show that CBR-PC generally does as well as the PC algorithm run on the full data distribution, while the PC algorithm using the observed data distribution does poorly in general, and more poorly with larger sample sizes. We believe this is due to the fact that some false edges introduced due to Berkson’s bias are “weak,” and thus cannot be detected at low sample sizes.

Figure 2:

A comparison of four versions of the PC algorithm for learning sparse DAGs from observations with missing data. (a) Under the “disconnected R” model with random DAGs (b) Under the sequential MAR model with random DAGs. (c) For the model in Fig. 1 (a). Here the algorithm that learned p(R | V) used weighted bootstrap for reweighting.

Figure 3:

Additional simulations of the “disconnected R” model with random DAGs. (a) 12-node graphs (b) 15-node graphs (c) 18-node graphs

Next, we illustrate how the harder version of the problem, where no information about 𝒢 is known, might be approached by learning the graph in Fig. 1 (a). First, we verified that even in cases where p(R | V) is identified via a complex function of the observed data, such as (6) for Fig. 1 (a), learning this function correctly and applying CBR-PC to the observed data yields performance that is not much worse than that obtained by running PC on the true data. See Fig. 2 (c) for the summary of our experiments.

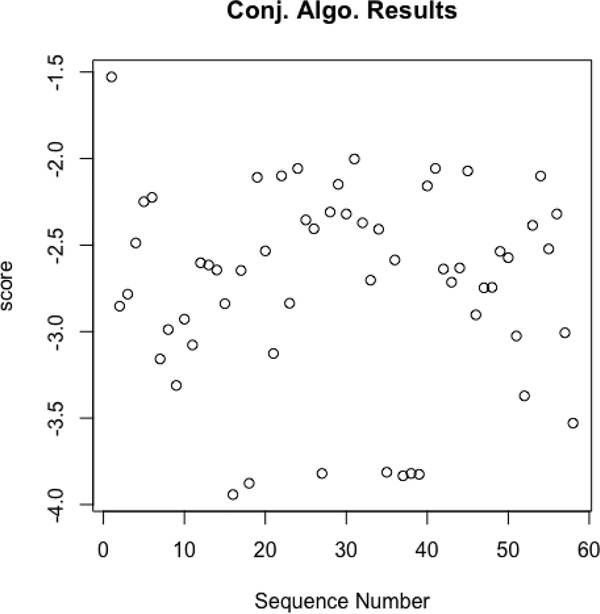

Lastly, we approach the even harder version of the problem where no causal structure is known at all. We applied the pseudo-algorithm outlined in Section 3.4 to data generated from the graph in Fig. 1 (a), by trying a random subset of 60 reweighting sequences, including the true sequence licensed by the model. The scores of the resulting graphs are shown in Fig. 4, with the score for the true sequence shown first. Among the sequences we tried, the average score of the true sequence is better than all others. We emphasize that while the algorithm we suggest has promising performance in our experiments, its formal validity for learning the true structure of a DAG under unknown identifiable missingness models remains an open problem.

Figure 4:

The weighted bootstrap approach applied to a number of fixing sequences on a single dataset size of 10000. The left-most score corresponds to the correct fixing sequence.

5. Conclusions

In this paper we consider the problem of causal discovery from datasets with missing entries. In short, the CBR-PC algorithm outperforms standard structure learning algorithms significantly in all scenarios considered, properly accounting for missing data. Lastly, in addition to considering the problem of causal discovery in missing data problems, we believe this paper is the first time constraint-based causal discovery procedures were adapted to take advantage of generalized independence (or Verma) constraints (Verma and Pearl, 1990; Richardson et al., 2017).

Footnotes

¹ The authors thank Peter Spirtes for pointing this out.

References

- Chickering DM. Learning equivalence classes of Bayesian network structures. Journal of Machine Learning Research, 2:445–498, 2002.

- Chipman HA, George EI, and McCulloch RE. BART: Bayesian additive regression trees. Annals of Applied Statistics, 4(1):266–298, 2010.

- Horvitz DG and Thompson DJ. A generalization of sampling without replacement from a finite universe. Journal of the American Statistical Association, 47(260):663–685, 1952.

- Lauritzen SL. Graphical Models. Oxford, U.K.: Clarendon, 1996.

- Mohan K, Pearl J, and Tian J. Graphical models for inference with missing data. In Burges C, Bottou L, Welling M, Ghahramani Z, and Weinberger K, editors, Advances in Neural Information Processing Systems 26, pages 1277–1285. Curran Associates, Inc., 2013.

- Pearl J. Probabilistic Reasoning in Intelligent Systems. Morgan Kaufmann, San Mateo, 1988.

- Pearl J. Causality: Models, Reasoning, and Inference. Cambridge University Press, 2nd edition, 2009. ISBN 978–0521895606.

- Richardson T and Spirtes P. Ancestral graph Markov models. Annals of Statistics, 30:962–1030, 2002.

- Richardson TS, Evans RJ, Robins JM, and Shpitser I. Nested Markov properties for acyclic directed mixed graphs. https://arxiv.org/abs/1701.06686, 2017. Working paper.

- Robins JM, Rotnitzky A, and Zhao LP. Estimation of regression coefficients when some regressors are not always observed. Journal of the American Statistical Association, 89(427):846–866, 1994.

- Scharfstein DO, Rotnitzky A, and Robins JM. Adjusting for nonignorable drop-out using semiparametric nonresponse models. Journal of the American Statistical Association, 94(448):1096–1120, 1999.

- Shimizu S, Hoyer PO, Hyvärinen A, and Kerminen AJ. A linear non-Gaussian acyclic model for causal discovery. Journal of Machine Learning Research, 7:2003–2030, 2006.

- Shpitser I, Richardson TS, and Robins JM. Testing edges by truncations. In International Joint Conference on Artificial Intelligence, volume 21, pages 1957–1963, 2009.

- Shpitser I, Mohan K, and Pearl J. Missing data as a causal and probabilistic problem. In Proceedings of the Thirty First Conference on Uncertainty in Artificial Intelligence (UAI-15), pages 802–811. AUAI Press, 2015.

- Spirtes P, Glymour C, and Scheines R. Causation, Prediction, and Search. Springer Verlag, New York, 2nd edition, 2001. ISBN 978–0262194402.

- Tsiatis A. Semiparametric Theory and Missing Data. Springer-Verlag, New York, 1st edition, 2006.

- Vansteelandt S, Carpenter J, and Kenward MG. Analysis of incomplete data using inverse probability weighting and doubly robust estimators. Methodology, 2010.

- Verma TS and Pearl J. Equivalence and synthesis of causal models. Technical Report R-150, Department of Computer Science, University of California, Los Angeles, 1990.

- Wooldridge JM. Inverse probability weighted estimation for general missing data problems. Journal of Econometrics, 141(2):1281–1301, 2007.