Abstract

Primary Objective:

Research studies and clinical observations of individuals with traumatic brain injury (TBI) indicate marked deficits in mentalizing—perceiving social information and integrating it into judgements about the affective and mental states of others. The current study investigates social-cognitive mechanisms that underlie mentalizing ability to advance our understanding of social consequences of TBI and inform the development of more effective clinical interventions.

Research Design:

The study followed a mixed-design experiment, manipulating the presence of a mentalizing gaze cue across trials and participant population (TBI vs. healthy comparisons).

Methods and Procedures:

Participants, 153 adults, 74 with moderate-severe TBI and 79 demographically matched healthy comparison peers, were asked to judge a humanoid robot’s mental state based on precisely controlled gaze cues presented by the robot and apply those judgements to respond accurately on the experimental task.

Main Outcomes and Results:

Results showed that, contrary to our hypothesis, the social cues improved task performance in the TBI group but not the healthy comparison group.

Conclusions:

Results provide evidence that, in specific contexts, individuals with TBI can perceive, correctly recognize, and integrate dynamic gaze cues and motivate further research to understand why this ability may not translate to day-to-day social interactions.

Overview

Mentalizing is defined as the process through which individuals rely on social information to explain or predict the beliefs, actions, and intentions of others (1, 2) and has been characterized as a cornerstone of successful social functioning (3). Research studies and clinical observations of individuals with traumatic brain injury (TBI) indicate marked deficits in mentalizing ability in this clinical group (4), which may have significant implications for occupational and social-integration outcomes (5). Current evidence about mentalizing in TBI is derived from studies using written or visual, story-based “third-person” paradigms. These third-person paradigms may not capture mentalizing as it occurs in dynamic, first-person situations. We view first-person mentalizing as a dynamic multi-stage process, involving the situated perception of social stimuli and the integration of perceived information into attributions of mental state (6). Consistent with this view, we tested mentalizing in adults with TBI using a simulated social interaction paradigm developed by Mutlu and colleagues (7), in which study participants are asked to guess the mental states of a humanlike robot that produces dynamic social cues that signal its mental states. Our aim was to determine if impaired ability to attribute mental states to characters in videos or stories was similarly evident in moment-to-moment analysis of face-to-face, task-based interactions.

Background

Mentalizing, also referred to as Theory of Mind (ToM), refers to the ability to attribute mental states including purpose, intention, knowledge, belief, thinking, doubt, guessing, pretending, liking, and so on, to self and others (1, 2). Prior literature on the social consequences of TBI provides overwhelming evidence for deficits in mentalizing ability following moderate-severe TBI (for a meta-analytic review of 26 studies, see Martín-Rodríguez and León-Carrión (4)). Studies contributing to this literature show deficits in a wide range of processes that underlie mentalizing ability, including recognition of emotions in others’ speech and facial expressions (8–10), the ability to make first- and second-order inferences about others’ mental states (8, 11, 12), executive functioning (13), social problem-solving (14), and the ability to infer attitudes from communication functions such as sarcasm (15) and irony (16). Deficits have been observed early and in the chronic stages after injury (17), indicating direct and sustained effects of TBI on mentalizing ability.

Investigations of mentalizing in adults with TBI have employed a number of well-established and validated experimental tasks, such as the False Belief Task (18), Faux Pas Test (19), Cartoon Test (20), Reading the Mind in the Eyes Test (21), Character Intention Task (22), Awareness of Social Inference Test (23), Video Social Inference Test (11), Mentalistic Interpretation Task (14), and Social Problem Resolution and Fluency Tasks (14). These tasks primarily follow a paradigm in which participants are presented with a scenario, usually depicted as written stories (e.g., in the Mentalistic Interpretation Task) or visual storyboards (e.g., in the Character Intention Task), and asked to make inferences about the prospective actions or retrospective intentions of characters involved in the scenario. These “third-person” paradigms for studying social cognition (24) assess the ability of individuals with TBI to attribute mental states to or predict future actions of characters in abstract scenarios, with no real-life implications for participants. Participants’ ability to correctly interpret the mental states of these characters, therefore, may not generalize to real-life scenarios in which they are active participants and which have consequences for them.

A growing body of literature has characterized mentalizing as a dynamic process distributed across multiple agents, time, and the physical environment (6) and proposed the study of this process using “first-” and “second-person” paradigms that involve realistic interaction scenarios and assess functional outcomes (24). The paradigm used in the current study involves study participants playing a version of the 20-Questions guessing game with a humanlike robot and assesses the effects of the robot’s gaze cues to the correct object (7). This protocol includes several important characteristics of real-life social interactions: participants engage in social-cue perception in a situated scenario; they integrate social information into mental-state judgments; and there are real-life consequences of poor mentalizing (e.g., more guesses and thus a lower score for the task). Prior administration of this paradigm with a healthy Japanese college-student population showed that when the robot “leaked” gaze cues, participants had faster task performance, required fewer guesses, and had more accurate responses.

This approach builds on an experimental paradigm, widely used in behavioral research, that involves simulating social cues in humanlike representations, such as animated on-screen characters and humanlike robots, and creating experimental scenarios in which participants interact with these representations (24–28). This paradigm enables the creation of precisely controlled, reliable, and ecologically valid social stimuli and the study of “online” social perception and communication in interactive situations. It offers higher experimental control than the use of human confederates because it allows for greater control over the verbal and nonverbal cues of the confederate and achieves minimal variability in how these cues are presented across experimental trials. This level of control is critical for behaviors such as eye-gaze, which are highly proceduralized and difficult to control in humans. This paradigm also offers higher ecological validity than image- and video-based studies since it allows us to present social cues to participants in dynamic, interactive situations and capture their responses using objective and behavioral measures.

The high level of control achieved through the use of the humanlike robot, the face-to-face, real-life social-interaction setting, and performance-based outcomes of the interaction make this paradigm a unique and powerful research tool to study mentalizing in adults with TBI.

Hypotheses

The current work seeks to investigate whether deficits in social perception and mentalizing demonstrated in prior work also are revealed in dynamic real-time interactions. The study hypothesis was that healthy comparison peers would use robot social gaze cues during an in-person interaction to successfully infer the mental state of their robot partner, while individuals with TBI would not.

Materials and Methods

Participants

Participants were 149 adults: 72 individuals with moderate-severe TBI (32 females) and 77 demographically matched healthy comparison (HC) participants (36 females). The participants with TBI were 43 years and 5 months old, on average (SD = 15;00), and had an average of 14 years and 10 months of education (SD = 2;03). The HC participants were 44 years and 6 months old, on average (SD = 16;11), and had 15 years and 1 month of education, on average (SD = 1;08). There were no significant differences between the two groups in age, F(1,147) = 0.174, p = .677, or years of education, F(1,147) = 0.446, p = .506. Participants were recruited from two sites in the Midwestern United States (Madison, Wisconsin, and Iowa City, Iowa) as part of a larger study on social communication and perception. The relevant institutional review boards approved all procedures.

Inclusion criteria were self-identification as a native English speaker and no self-reported history of a diagnosis of language or learning disability or neurological disorder affecting the brain (pre-injury, for the TBI group). Exclusion criteria were failing a pure-tone audiometric screening test at 20 dB HL at 500, 1000, 2000, and 4000 Hz; failing standard screenings for far and near vision; or testing in the aphasic range on the Western Aphasia Battery Bedside Screening Test (29).

Injury severity for the TBI group was assessed using the Mayo Classification System (30). Participants were considered moderate-severe if at least one of the following criteria was met: (1) Glasgow Coma Scale (GCS) < 13 (i.e., moderate or severe according to the GCS), (2) positive acute CT findings or lesions visible on a chronic MRI, (3) loss of consciousness (LOC) > 30 minutes or post-traumatic amnesia (PTA) > 24 hours, and (4) retrograde amnesia > 24 hours. All participants were more than six months post injury, with an average time of 9.16 years (Min = 0.50, Max = 44.92, Median = 5.21, SD = 10.26 years) post injury, and out of post-traumatic amnesia. Injury-related information was collected using a combination of medical records and a semi-structured interview with participants.
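The inclusion rule above is a simple "at least one criterion" decision, which can be sketched in code. The following is a minimal illustration under stated assumptions, not the screening procedure used in the study: the record type, its field names, and the handling of missing values (treated as not meeting a criterion) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InjuryRecord:
    """Hypothetical record of injury indicators; field names are illustrative."""
    gcs: Optional[int] = None                  # Glasgow Coma Scale score, if known
    positive_imaging: bool = False             # positive acute CT or lesion on chronic MRI
    loc_minutes: Optional[float] = None        # loss of consciousness, in minutes
    pta_hours: Optional[float] = None          # post-traumatic amnesia, in hours
    retrograde_amnesia_hours: Optional[float] = None


def is_moderate_severe(rec: InjuryRecord) -> bool:
    """Return True if at least one of the four criteria listed above is met.

    Assumption: a missing value (None) counts as "criterion not met".
    """
    criteria = [
        rec.gcs is not None and rec.gcs < 13,
        rec.positive_imaging,
        (rec.loc_minutes is not None and rec.loc_minutes > 30)
        or (rec.pta_hours is not None and rec.pta_hours > 24),
        rec.retrograde_amnesia_hours is not None
        and rec.retrograde_amnesia_hours > 24,
    ]
    return any(criteria)
```

For example, `is_moderate_severe(InjuryRecord(gcs=12))` evaluates to `True`, whereas a record with a GCS of 13 and no other indicators does not meet the rule.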

To compare the present study to previous publications, participants completed a series of tasks recommended by the Common Data Elements Committee for TBI research (31): the California Verbal Learning Test (CVLT) (32), Wechsler Adult Intelligence Scales Processing Speed Index tests (WAIS-PSI) (33), and Trailmaking Tests A and B (34). Results for TBI and HC groups are shown in Table 1. Analysis of variance (ANOVA) revealed a significant between-groups difference on all neuropsychological measures (see Table 1), no significant sex-based differences on any measure (all p-values > .05), and no significant interaction of group and sex (all p-values > .05). Additionally, a subset of the participants whose data was included in the current paper has shown impaired mentalizing in other tasks, such as identifying speaker preferences expressed in text-based messages (35) and identifying emotion in facial affect recognition tasks (36). These impairments, coupled with the group differences we have identified in neuropsychological measures, suggest that the population that is included in the current study will have impaired mentalizing ability and, thus, not use robot social gaze cues to successfully infer the mental state of their robot, as predicted by our study hypothesis.

Table 1.

Participant characteristics.

| | HC Group (n = 77) | TBI Group (n = 72) | Between-Groups Comparison |
|---|---|---|---|
| Mean Age | 44;6 (16;11) | 43;5 (15;00) | F(1,147) = 0.174, p = .677 |
| Age Range | 18;0–81;83 | 19;33–72;33 | N/A |
| Time post-injury | N/A | 9;2 (10;3) | N/A |
| Years of Education | 15;01 (1;08) | 14;10 (2;03) | F(1,147) = 0.446, p = .506 |
| Trails A | 0.69 (0.87) | −0.45 (1.46) | F(1,147) = 34.14, p < .001 |
| Trails B | 0.33 (2.16) | −1.20 (3.51) | F(1,143) = 10.26, p = .002 |
| WAIS-PSI | 108.04 (17.02) | 93.76 (16.64) | F(1,146) = 26.57, p < .001 |
| CVLT 5 Trials | 58.32 (9.29) | 47.69 (13.83) | F(1,146) = 30.57, p < .001 |

HC = Healthy Comparison group; TBI = Traumatic Brain Injury group; CVLT = California Verbal Learning Test (32); Trails A = Trailmaking Test Part A; Trails B = Trailmaking Test Part B (34); WAIS-PSI = Wechsler Adult Intelligence Scale (33) Processing Speed Index. Age, time post-injury, and education are years;months, rounded to the next full month. Trails B scores are z-scores; CVLT and WAIS-PSI scores are scaled scores.

Task

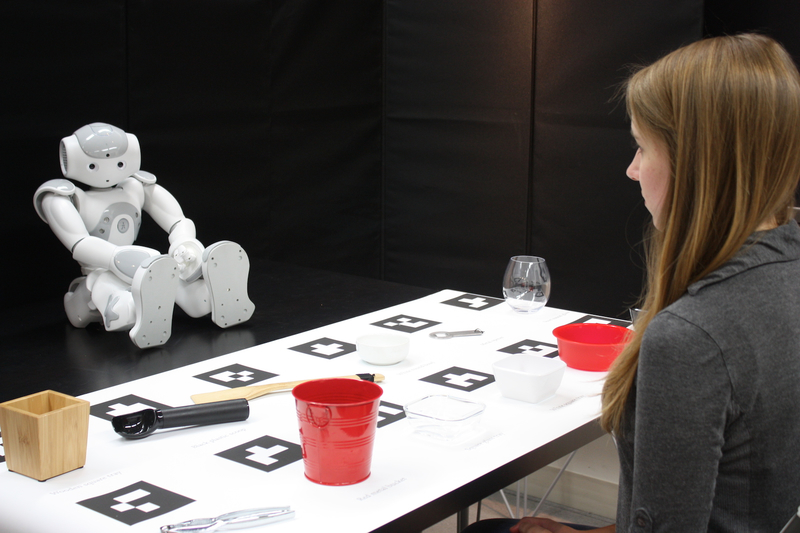

Participants were asked to complete the Nonverbal Leakage Task, in which they played a 20-Questions guessing game with a humanlike robot (7, 24) (Figure 1). The game involved the robot mentally choosing an object from an array, and the participant asking questions in order to identify the robot’s target. Participants were allowed to ask only questions that could be answered with “Yes” or “No.” When speaking with the participant, the robot established and maintained eye contact with the participant. At all other times, the robot fixated its gaze on the midpoint of the task space.

Figure 1.

The Nonverbal Leakage Task (7) required participants to make judgements about the mental states of a humanlike robot using information ‘leaked’ from its gaze.

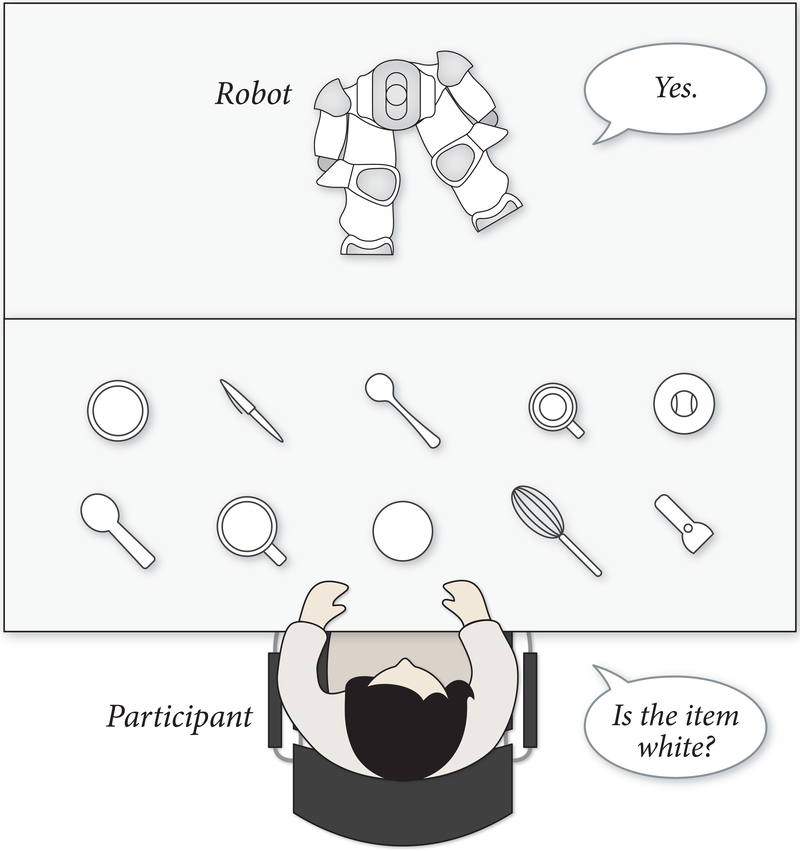

Participants sat across from the robot, which was placed on a table in a seated posture. An additional table was placed between the participant and the robot. On the table, 12 common household items were placed in two horizontal rows. These items were chosen to have clear distinguishing characteristics in material, color, and shape in order to minimize ambiguity. For example, only one item would fit the description “clear, square, and plastic.” To further minimize pragmatic ambiguity, labels were placed in front of each item and fully described the item (e.g., “wooden square tray”). The number of items and their distinguishing characteristics were chosen to enable participants to correctly identify the robot’s pick in an average of 4–5 guesses. Participants provided guesses until they correctly identified the robot’s pick. When a guess was incorrect, the robot stated that the guess was not correct and prompted the participant to try again. Figure 2 illustrates the physical setup of the study.

Figure 2.

The physical setup of the Nonverbal Leakage Task; participants sat across from the humanlike robot, which was seated on a table, and another table with task items was placed between the participant and the robot.

Technology.

A NAO humanlike robot, developed by SoftBank Robotics, served as the robot platform. Using the platform’s application programming interface (API), the robot was programmed to perform the task in a semi-autonomous fashion. Using an overhead camera, it detected the locations of the objects with the assistance of Augmented Reality (AR) tags placed behind the objects and directed its gaze toward them based on the detected locations.

The robot’s responses to the questions were prompted by an experimenter who was seated behind a divider and was aided by experiment software that provided a video stream of the participant and the task space from the robot’s perspective and controls for each of the robot’s responses. A human experimenter controlled the robot’s responses, as opposed to autonomous operation by the robot, to minimize any potential data loss and interaction breakdowns that could be caused by errors in speech recognition. Upon participant questions such as “Is the item red?” or “Is your pick the white round bowl?”, the experimenter pressed buttons labeled “Yes” and “No” on a computer interface developed for the study, which triggered the robot to provide these responses to the participant. When the participant correctly identified the robot’s pick, the experimenter pressed a button labeled “Correct,” which triggered the robot to utter this response and initiated either the transition to the next round of the game or the script for ending the interaction. If the experimenter needed the participant to reword a question, a button labeled “Don’t Understand” prompted the robot to ask the participant to repeat the question.

The robot’s non-verbal behaviors, including the precise direction and timing of its gaze cues toward the participants and task-relevant objects, were controlled autonomously by an algorithm.

Manipulation.

A social gaze cue was introduced in some rounds of the game. In four of the nine trials, the robot glanced toward the target object prior to answering one of the participant’s questions. To ensure that acclimation effects did not interfere with perception of the gaze cue, the first round of the game was always cue-free. In the remaining eight trials, four randomly selected trials included the gaze cue. To minimize demand characteristics, the cue was introduced either on the second or the third question, with the order randomized.
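The randomization described above can be sketched as a small schedule generator. This is a minimal sketch under stated assumptions rather than the software used in the study: the function and constant names are hypothetical, and the question position of the cue is assumed to be drawn independently for each cued round.

```python
import random

N_ROUNDS = 9            # rounds per participant, as described above
N_CUED = 4              # rounds that include the gaze cue
CUE_QUESTIONS = (2, 3)  # the cue appears on the second or third question


def make_schedule(rng: random.Random) -> list:
    """Return one dict per round: whether it is cued and, if so, on which question."""
    # Round 1 is always cue-free; the cued rounds are sampled from rounds 2..9.
    cued_rounds = set(rng.sample(range(2, N_ROUNDS + 1), N_CUED))
    schedule = []
    for round_no in range(1, N_ROUNDS + 1):
        cued = round_no in cued_rounds
        schedule.append({
            "round": round_no,
            "cued": cued,
            # Assumption: position drawn independently for each cued round.
            "cue_question": rng.choice(CUE_QUESTIONS) if cued else None,
        })
    return schedule
```

Calling `make_schedule(random.Random(seed))` with a per-participant seed would yield a reproducible schedule with five uncued and four cued rounds.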

The gaze cue followed the behavioral characteristics identified in prior work, although temporal modifications were made to these characteristics to accommodate the motor limitations of the robot platform used. The prior work described this “leakage” cue as a sequence of gaze shifts and fixations. The sequence involved starting with a gaze fixation toward the middle of the task space, shifting gaze toward the object of interest, fixating on the object of interest, shifting gaze toward the participant’s face (establishing eye contact), and fixating on the participant until the robot’s speech ended. In our implementation of the cue, while the length of the gaze shifts depended on object location, the fixation at the object of interest was approximately 400 ms. This timing was chosen based on prior implementations of the experimental paradigm (7, 37), which built on measurements of human gaze cues and the motor limitations of robot platforms. When the cue was absent, the robot shifted its gaze between the midpoint of the task space and the participant’s face in congruency with its dialogue with the participant.
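The cue can be read as an ordered list of gaze shifts and fixations. The sketch below only encodes the sequence described above; it is not the robot's control code. The type and function names are hypothetical, and durations are left as `None` wherever the text gives no fixed value.

```python
from typing import List, NamedTuple, Optional


class GazeStep(NamedTuple):
    kind: str                    # "fixate" or "shift"
    target: str                  # "midpoint", "object", or "participant"
    duration_ms: Optional[int]   # None where the text gives no fixed value


OBJECT_FIXATION_MS = 400  # approximate fixation on the object of interest


def gaze_sequence(cued: bool) -> List[GazeStep]:
    """List the gaze steps that accompany one robot answer."""
    steps = [GazeStep("fixate", "midpoint", None)]
    if cued:
        # Leakage cue: a brief glance at the target object before eye contact.
        steps += [
            GazeStep("shift", "object", None),  # length depends on object location
            GazeStep("fixate", "object", OBJECT_FIXATION_MS),
        ]
    steps += [
        GazeStep("shift", "participant", None),
        GazeStep("fixate", "participant", None),  # held until the robot's speech ends
    ]
    return steps
```

The uncued sequence differs from the cued one only in omitting the two object-directed steps, which mirrors the single-factor manipulation.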

Procedure

The experiment was administered as a part of a battery of tasks conducted under the Social Building Blocks Study. The study protocol was approved by the Education and Social/Behavioral Science Institutional Review Board at the University of Wisconsin–Madison and the Behavioural/Social Science Institutional Review Board at the University of Iowa. The experimenter was a research assistant trained in study procedures.

The procedure began with the experimenter meeting the participant in a waiting area outside the experiment room, then escorting the participant to the experiment room and asking the participant to take a seat at the table with the household items. The experimenter then introduced the participant to the robot, gave detailed instructions on the task, and answered any questions that the participant had about the study task. He or she remained available during the first round of the game to answer any additional questions that might arise after the participant had some experience with the task, and stood behind a divider, out of sight of the participant, for the remainder of the experiment. When nine rounds of the game were completed, project staff re-entered the room and escorted the participant to another room to complete the remainder of tasks included in the study.

Measurement and Analyses

Measurement.

The dependent variable was the number of guesses participants made until they correctly identified the robot’s pick. We expected this measure to be robust to individual differences but sensitive to group differences, thus unearthing any systemic effects that might result from mechanistic deficiencies in social cognition.

Data Inclusion Criteria.

Data from 153 participants, including nine trials from each participant (five uncued, four cued), yielded 1,377 data points.

Analyses.

Data were analyzed using split-plot and repeated-measures analyses of covariance (ANCOVA), with social cue as a within-participants variable, population as a between-groups variable, and site of data collection as a covariate. Alpha levels 0.05 and 0.10 are used to report on significant and marginal effects, respectively.
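To make the structure of the design concrete, the sketch below arranges trials in long format and computes the descriptive group-by-cue cell means that such an analysis compares. It is not the ANCOVA itself and does not adjust for site; the records are hypothetical toy values, not study data, and the function names are illustrative.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical toy records (participant, group, site, cued, guesses); not study data.
trials = [
    ("p01", "TBI", 1, False, 5), ("p01", "TBI", 1, True, 4),
    ("p01", "TBI", 1, False, 4), ("p01", "TBI", 1, True, 3),
    ("p02", "HC", 2, False, 4), ("p02", "HC", 2, True, 4),
    ("p02", "HC", 2, False, 3), ("p02", "HC", 2, True, 3),
]


def participant_means(rows):
    """Average guesses per participant within each cue condition."""
    cells = defaultdict(list)
    for pid, group, site, cued, guesses in rows:
        cells[(pid, group, site, cued)].append(guesses)
    return {key: mean(vals) for key, vals in cells.items()}


def group_cue_means(rows):
    """Average the participant-level means within each group-by-cue cell."""
    cells = defaultdict(list)
    for (pid, group, site, cued), m in participant_means(rows).items():
        cells[(group, cued)].append(m)
    return {key: mean(vals) for key, vals in cells.items()}
```

Averaging within participants first keeps each participant's contribution equal across cells, which matches the within-participants treatment of the cue factor.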

Results

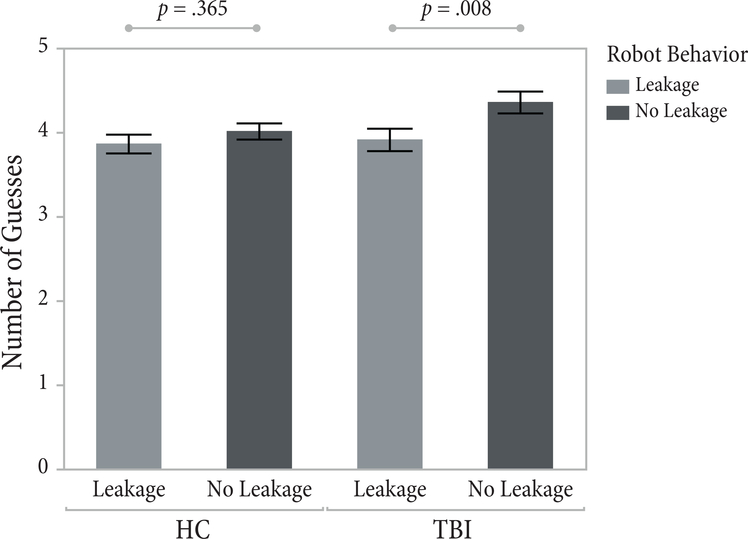

Results are shown in Figure 3. The ANCOVA revealed a significant main effect of gaze cue, F(1,145.1) = 6.52, p = .012, for all participants. Participants correctly identified the robot’s pick on an average of 3.89 questions (SD = 2.08) when the robot displayed the gaze cue and 4.19 (SD = 2.17) when it did not. A priori contrast tests within each group showed that the TBI group used significantly fewer questions in the presence of the gaze cue (M = 3.92, SD = 2.24) than when the cue was not present (M = 4.36, SD = 2.45), F(1,143.2) = 7.22, p = .008. HC participants showed a similar trend, asking fewer questions in the presence of the social cue (M = 3.87, SD = 1.91) than in its absence (M = 4.02, SD = 1.85), although the difference was not significant for this group, F(1,147) = 0.83, p = .365. There was no interaction between the gaze-cue manipulation and group, F(1,145.1) = 1.63, p = .203.

Figure 3.

Data from the number-of-guesses measure. A priori comparisons indicated that individuals with TBI showed stronger mentalizing in the presence of the social cue, while the cueing had no significant effect on healthy comparisons.

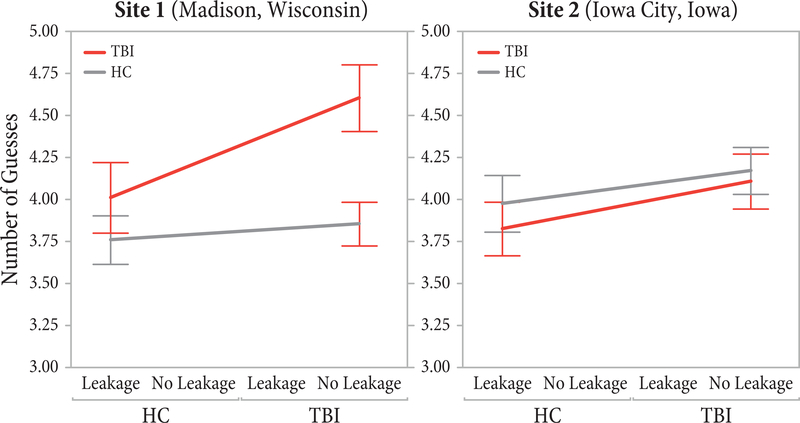

Our analysis also showed an interaction effect between data-collection site and population, F(1, 148.9) = 4.53, p = .035, and thus data-collection site was retained as a covariate in the model. We observed a large difference in the performance of the TBI group (M = 4.34, SD = 2.64) and the healthy controls (M = 3.81, SD = 1.71) in the sample from Site 1 (Madison, Wisconsin) and a very small difference in the opposite direction in the sample from Site 2 (Iowa City, Iowa) (M = 3.98, SD = 2.02 for TBI; M = 4.08, SD = 2.01 for HC). We discuss the potential explanations and implications of these differences in the Discussion Section.

Discussion

We have conceptualized mentalizing as a multi-stage process involving multiple cognitive mechanisms, including the perception of social information and integration of this information into the context of the interaction, in order to attribute mental states to others. We argued that the study of mentalizing requires the use of “first-” and “second-person” paradigms that engage these cognitive mechanisms in dynamic, physically situated interaction scenarios. Based on the second- and third-person mentalizing literature, we hypothesized that individuals with TBI would display an impaired ability to mentalize due to challenges in either social perception or integration of social information into the context of a dynamic interaction. We tested this hypothesis using a simulated social interaction task in which participants played a guessing game with a humanlike robot. Our data did not provide support for the hypothesis; to the contrary, individuals with TBI did not differ significantly from peers in mentalizing and showed a significant benefit from social gaze cues where their peers did not.

These results suggest that, in some contexts, individuals with TBI can successfully mentalize based on available social information. These findings challenge existing beliefs that social deficits in adults with TBI are due to underlying impairments in social perception or mentalizing. Performance of adults with TBI may have resulted from social skills training or increased effort by individuals with TBI to perform well on a social task, but these explanations are unlikely. Current social skills training models are explicit and didactic (e.g., rehearsing appropriate greetings) and do not address implicit perceptual processes such as those tested here; and if individuals with TBI could achieve peer-typical performance solely by increasing effort, they would not have their known social challenges. A more likely explanation is that mentalizing was tested here in a laboratory experiment that isolated it from the other social information and interpersonal processes that individuals with TBI might find overwhelming. Given the evidence that individuals with TBI can successfully mentalize in this context, in contrast to their known deficits in everyday life, we argue that everyday deficits do not result from impairments in cognitive mechanisms that underlie social perception or mentalizing, but instead relate to challenges in rapidly integrating and contextualizing perceived social information and making social inferences in complex day-to-day social interactions. Such interactions can involve the presence of multiple cues that increase social-perception demand, conflicting cues (e.g., in the case of sarcasm where verbal and nonverbal cues can mismatch), or additional cognitive or interactional demands (e.g., performing under time pressure or interacting with multiple or unskilled partners). While further research is needed to substantiate this claim, for example, by assessing mentalizing ability in situations with increasing complexity, the findings of the current study redirect our attention from studying deficits at the mechanism level to understanding challenges in integrating social inference in real-life situations.

Despite predictions based on known social deficits, performance of participants with TBI improved in the presence of social gaze cues that were designed to facilitate mentalizing, from which we conclude that adults with TBI successfully perceived those cues and used them to attribute mental states. By contrast, performance of healthy peers did not differ significantly in the presence of cues, although differences between cued and uncued conditions were similar between groups. The failure to replicate earlier findings by Mutlu and colleagues (7) may have been due to methodological differences between the two studies, such as use of an older sample of participants compared to prior research (average age for our HC group was 44.49, and those for the three studies reported by Mutlu (37) were 21.86, 20.4, and 20.77). The most relevant difference, however, may have been that the robot in our study was less “human-like” than that used in prior work. Mutlu and colleagues (7) used two humanlike robots and found that, when effects were analyzed for each robot, mentalizing ability significantly improved with the highly humanlike robot but not with the less humanlike robot that was similar to the robot used in our study. Thus, we speculate that the social information provided by the robot was not sufficiently “life-like” to significantly improve mentalizing. Finally, the changes that we made to the task as presented by Mutlu and colleagues (7), specifically the modifications to task items and their characteristics (name, shape, color, and material) to make them more easily distinguishable by participants with TBI, might have made the task too simple for the healthy comparisons, who then relied on information other than the mentalizing cues to perform the task.

Our analysis found a significant interaction between data-collection site and population, indicating that the performance of individuals with TBI and their healthy peers differed between the two data collection sites. A close examination of this effect shows that the differences between the individuals with TBI and healthy controls are much more pronounced in the data from Site 1 than those in the data from Site 2, particularly when the robot did not produce gaze cues. As shown in Figure 4, the negative effect of the absence of the gaze cues on the performance of individuals with TBI is more pronounced in the data from Site 1 than in the data from Site 2, while the effects of the gaze cues on healthy controls across the two sites appear similar. We argue that this difference among individuals with TBI between the two sites results from differences in population and injury characteristics. A comparison of the two groups in demographic and injury-related measures shows that individuals with TBI from Site 1 are significantly younger, F(1,70) = 9.10, p = .004, have marginally fewer years of education, F(1,70) = 2.93, p = .091, had their injuries significantly more recently, F(1,70) = 9.10, p = .004, and score lower on the CVLT, F(1,69) = 12.62, p = .001, and WAIS-PSI, F(1,69) = 9.65, p = .003, tests than those from Site 2. These differences suggest that younger individuals with more recent injuries, who also performed more poorly on standard measures of social functioning, show poorer mentalizing ability than their older peers with more chronic injuries. Therefore, we conclude that mentalizing ability among individuals with TBI may be mediated by age and time post injury, although further research is needed to more conclusively establish this effect.

Figure 4.

The effects of gaze manipulation and population on the number of guesses, broken down for each data-collection site. Only values between 3 and 5 are included in the Y axis to improve the visibility of differences across sites.

An alternative explanation of the results of the current study is that the healthy participants were able to use an alternative strategy to identify the robot’s pick, and the mentalizing cues provided only a marginal benefit for this group. Participants with TBI, on the other hand, were not able to rely on such a strategy and relied primarily on the leakage cues to mentalize. This explanation is consistent with the impairments in integrative cognitive processing and executive function in individuals with TBI (38), abilities that the complex decision-making involved in identifying the robot’s pick would require. Although a more conclusive discussion of this explanation would require further research, it provides support for the conclusion that the TBI group was able to demonstrate mentalizing ability based on the social cues of a robot in an isolated context.

A final outcome of the current study is demonstration of a simulated social interaction paradigm for studying mentalizing in dynamic, physically situated interactions and for a more precise and ecologically valid understanding of the functioning of mentalizing mechanisms. The use of this paradigm simultaneously enables precise control and isolation of specific behaviors and mechanisms and their contextualization in an interactive, first-person scenario. Coupled with the high level of generalizability of findings between human interactions and interactions with interactive computers suggested by prior work (39, 40), these advantages highlight a promising methodological avenue for research into social functioning and deficits.

Limitations

The presented study has some limitations that future research might further explore or clarify. A limitation of the presented results is that, despite the statistically significant effects of the gaze-cue manipulation in the TBI group, participants asked, on average, only 0.42 fewer questions in the presence of the gaze cue, and thus the practical significance of this effect is unknown. Another limitation of the current study is that although many social mechanisms in human-robot interaction function in the same way as they do in human-human interaction, as demonstrated by a large body of literature on human-robot interaction (41), there might be fundamental differences in people’s perceptions of robot and human social behaviors, particularly for individuals with TBI. For example, participants with TBI might have perceived the robot’s gaze shifts as task-relevant deictic cues in service of pragmatic communication, while their healthy peers might have perceived them as leakage cues that are byproducts of a complex cognitive process with little informational value. Clarifying such potential differences requires further research into individual differences in social perception in human-robot interaction.

Conclusion

In this paper, we investigated how reported deficits in social perception and mentalizing in adults with TBI manifest themselves in moment-by-moment judgements in dynamic interactions. Based on the overwhelming research and clinical evidence for the presence of deficits in mentalizing, we hypothesized that, unlike healthy comparison peers, individuals with TBI would fail to make correct judgements about mental states of a partner based on social cues specifically designed to elicit mentalizing. Participants were asked to make inferences about the mental states of a humanlike robot in the absence and presence of precisely controlled robot behaviors designed to cue its mental states. Contrary to our hypothesis, cueing improved mentalizing in the TBI group, but not among healthy comparisons, suggesting a heightened sensitivity to social information among individuals with TBI. Our results provide evidence that adults with TBI can successfully engage in mentalizing in dynamic, face-to-face interactions in isolated settings, suggesting that deficits in mentalizing highlighted by prior research may not result from impairments in social-cognitive mechanisms but may result from other factors that impair the ability to utilize these mechanisms in the context of complex, dynamic interactions.

Acknowledgments

This work was supported by NIH NICHD/NCMRR award number R01 HD071089 and NIH/NIGMS award number R25GM083252. The authors thank Dr. Erica Richmond and staff and students in the Mutlu, Turkstra, and Duff labs for work on data collection and management. The authors have no conflicts of interest to report.

References

- 1. Premack D, Woodruff G. Does the chimpanzee have a theory of mind? Behavioral and brain sciences. 1978;1(4):515–26.

- 2. Frith U, Morton J, Leslie AM. The cognitive basis of a biological disorder: Autism. Trends in neurosciences. 1991;14(10):433–8.

- 3. Baron-Cohen S. Mindblindness: An essay on autism and theory of mind: MIT press; 1997.

- 4. Martín-Rodríguez JF, León-Carrión J. Theory of mind deficits in patients with acquired brain injury: A quantitative review. Neuropsychologia. 2010;48(5):1181–91.

- 5. Struchen MA, Clark AN, Sander AM, Mills MR, Evans G, Kurtz D. Relation of executive functioning and social communication measures to functional outcomes following traumatic brain injury. NeuroRehabilitation. 2008;23(2):185–98.

- 6. Duff MC, Mutlu B, Byom L, Turkstra LS, editors. Beyond utterances: Distributed cognition as a framework for studying discourse in adults with acquired brain injury. Seminars in speech and language; 2012: NIH Public Access.

- 7. Mutlu B, Yamaoka F, Kanda T, Ishiguro H, Hagita N, editors. Nonverbal leakage in robots: communication of intentions through seemingly unintentional behavior. Proceedings of the 4th ACM/IEEE international conference on Human robot interaction; 2009: ACM.

- 8. McDonald S, Flanagan S. Social perception deficits after traumatic brain injury: interaction between emotion recognition, mentalizing ability, and social communication. Neuropsychology. 2004;18(3):572.

- 9. Bibby H, McDonald S. Theory of mind after traumatic brain injury. Neuropsychologia. 2005;43(1):99–114.

- 10. Milders M, Ietswaart M, Crawford JR, Currie D. Social behavior following traumatic brain injury and its association with emotion recognition, understanding of intentions, and cognitive flexibility. Journal of the International Neuropsychological Society. 2008;14(2):318–26.

- 11. Turkstra LS. Conversation-based assessment of social cognition in adults with traumatic brain injury. Brain Injury. 2008;22(5):397–409.

- 12. Muller F, Simion A, Reviriego E, Galera C, Mazaux J-M, Barat M, et al. Exploring theory of mind after severe traumatic brain injury. Cortex. 2010;46(9):1088–99.

- 13. Henry JD, Phillips LH, Crawford JR, Ietswaart M, Summers F. Theory of mind following traumatic brain injury: The role of emotion recognition and executive dysfunction. Neuropsychologia. 2006;44(10):1623–8.

- 14. Channon S, Crawford S. Mentalising and social problem-solving after brain injury. Neuropsychological Rehabilitation. 2010;20(5):739–59.

- 15. Channon S, Pellijeff A, Rule A. Social cognition after head injury: Sarcasm and theory of mind. Brain and Language. 2005;93(2):123–34.

- 16. Angeleri R, Bosco FM, Zettin M, Sacco K, Colle L, Bara BG. Communicative impairment in traumatic brain injury: A complete pragmatic assessment. Brain and language. 2008;107(3):229–45.

- 17. Ietswaart M, Milders M, Crawford JR, Currie D, Scott CL. Longitudinal aspects of emotion recognition in patients with traumatic brain injury. Neuropsychologia. 2008;46(1):148–59.

- 18. Wimmer H, Perner J. Beliefs about beliefs: Representation and constraining function of wrong beliefs in young children’s understanding of deception. Cognition. 1983;13(1):103–28.

- 19. Stone VE, Baron-Cohen S, Knight RT. Frontal lobe contributions to theory of mind. Journal of cognitive neuroscience. 1998;10(5):640–56.

- 20. Happé F. Autism: cognitive deficit or cognitive style? Trends in cognitive sciences. 1999;3(6):216–22.

- 21. Baron-Cohen S, Jolliffe T, Mortimore C, Robertson M. Another advanced test of theory of mind: Evidence from very high functioning adults with autism or Asperger syndrome. Journal of Child psychology and Psychiatry. 1997;38(7):813–22.

- 22. Brunet E, Sarfati Y, Hardy-Baylé M-C, Decety J. A PET investigation of the attribution of intentions with a nonverbal task. Neuroimage. 2000;11(2):157–66.

- 23. McDonald S, Bornhofen C, Shum D, Long E, Saunders C, Neulinger K. Reliability and validity of The Awareness of Social Inference Test (TASIT): a clinical test of social perception. Disability and rehabilitation. 2006;28(24):1529–42.

- 24. Byom LJ, Mutlu B. Theory of mind: Mechanisms, methods, and new directions. Frontiers in human neuroscience. 2013;7:413.

- 25. Blascovich J, Loomis J, Beall AC, Swinth KR, Hoyt CL, Bailenson JN. Immersive virtual environment technology as a methodological tool for social psychology. Psychological Inquiry. 2002;13(2):103–24.

- 26. Loomis JM, Blascovich JJ, Beall AC. Immersive virtual environment technology as a basic research tool in psychology. Behavior research methods, instruments, & computers. 1999;31(4):557–64.

- 27. Breazeal C, Scassellati B. Robots that imitate humans. Trends in cognitive sciences. 2002;6(11):481–7.

- 28. Scassellati B. Using social robots to study abnormal social development. 2005.

- 29. Kertesz A. Western Aphasia Battery. Revised ed. San Antonio, TX: Pearson Assessment; 2006.

- 30. Malec JF, Brown AW, Leibson CL, Flaada JT, Mandrekar JN, Diehl NN, et al. The mayo classification system for traumatic brain injury severity. Journal of neurotrauma. 2007;24(9):1417–24.

- 31. Wilde EA, Whiteneck GG, Bogner J, Bushnik T, Cifu DX, Dikmen S, et al. Recommendations for the use of common outcome measures in traumatic brain injury research. Arch Phys Med Rehabil. 2010;91(11):1650–60.

- 32. Delis DC, Kramer JH, Kaplan E, Ober BA. California Verbal Learning Test - Adult version (CVLT-II). Second ed. Austin, TX: The Psychological Corporation; 2000.

- 33. Wechsler D. Wechsler Adult Intelligence Scale. Fourth ed. San Antonio, TX: Pearson; 2008.

- 34. Tombaugh TN. Trail Making Test A and B: normative data stratified by age and education. Archives of clinical neuropsychology: the official journal of the National Academy of Neuropsychologists. 2004;19(2):203–14.

- 35. Turkstra LS, Duff MC, Politis AM, Mutlu B. Detection of text-based social cues in adults with traumatic brain injury. Neuropsychological rehabilitation. 2017:1–15.

- 36. Rigon A, Turkstra L, Mutlu B, Duff M. The female advantage: sex as a possible protective factor against emotion recognition impairment following traumatic brain injury. Cognitive, Affective, & Behavioral Neuroscience. 2016;16(5):866–75.

- 37. Mutlu B. Designing gaze behavior for humanlike robots. Unpublished doctoral dissertation, Pittsburgh, PA, USA: (AAI3367045). 2009.

- 38. Kinnunen KM, Greenwood R, Powell JH, Leech R, Hawkins PC, Bonnelle V, et al. White matter damage and cognitive impairment after traumatic brain injury. Brain. 2010;134(2):449–63.

- 39. Reeves B, Nass CI. The media equation: How people treat computers, television, and new media like real people and places: Cambridge university press; 1996.

- 40. Nass C, Moon Y. Machines and mindlessness: Social responses to computers. Journal of social issues. 2000;56(1):81–103.

- 41. Lee KM, Peng W, Jin S-A, Yan C. Can robots manifest personality?: An empirical test of personality recognition, social responses, and social presence in human–robot interaction. Journal of communication. 2006;56(4):754–72.