Abstract

Background:

The Fundamentals of Laparoscopic Surgery (FLS) trainer box, now established as a standard for evaluating minimally invasive surgical skills, consists of five tasks: peg transfer, pattern cutting, ligation, and intracorporeal and extracorporeal suturing. Virtual simulators of these tasks have been developed and validated as part of the Virtual Basic Laparoscopic Skill Trainer (VBLaST) [1][2][3][4]. The virtual task trainers have many advantages, including automatic real-time objective scoring, reduced costs, and no need for human proctors. In this paper, we extend VBLaST by adding two camera navigation tasks: (a) pattern matching and (b) path tracing.

Methods:

We designed and developed a comprehensive camera navigation simulator with two virtual tasks, a simplified and cheaper hardware interface (compared with the prior version of VBLaST), a graphical user interface, and automated metrics. The face validity of the system was tested with medical students and residents from the University at Buffalo's medical school.

Results:

The subjects rated the simulator highly (above 3 on a 5-point scale) in all aspects but one. The highest ratings were for its usefulness in teaching target centering and sizing skills. The quality of the force feedback scored the lowest, at 2.62.

Keywords: Camera navigation, Laparoscopy, Virtual reality, Surgical training, Face validity

1. Introduction

Minimally invasive surgery (MIS) has revolutionized general surgery in recent decades in the treatment of both malignant and benign diseases. Laparoscopic surgery is performed through trocars placed in small incisions (usually 0.5–1.5 cm) rather than through the single large incision needed for open surgery. Patients benefit from reduced postoperative pain, shorter hospital stays, improved cosmetic results, and faster recovery [5]. Because of these improved patient outcomes, demonstrated cost-effectiveness, and patient demand, laparoscopic surgery has been widely accepted by both patients and surgeons over the last two decades. Dramatic advances in instrumentation, camera video technology, and laparoscopic equipment have accelerated the development of laparoscopy. Many procedures that were traditionally performed through open laparotomy are now performed laparoscopically.

Laparoscopy is performed with slender instruments through small incisions. Compared with open surgery, it therefore provides less tactile feedback, restricts movement, and offers limited visibility beyond the camera field of view. One of the main drawbacks of the laparoscopic approach is that the surgeon loses the ability to palpate organs, vessels, and tumors during the procedure, especially when they are not visible on the surfaces of organs or structures. The surgeon has only a restricted view through the laparoscopic camera inside the body cavity, and any information beyond the surface of the organs in view is lost. Surgeons must therefore rely on limited visual cues from the video monitor, rather than on a combination of visual and tactile feedback, to achieve outcomes comparable to or better than those reported for laparotomy. Because laparoscopic video provides only this limited surface information, a high level of skill in manipulating the camera is essential. There is therefore a need for high-fidelity trainers that give surgeons a risk-free environment in which to practice and train for these procedures.

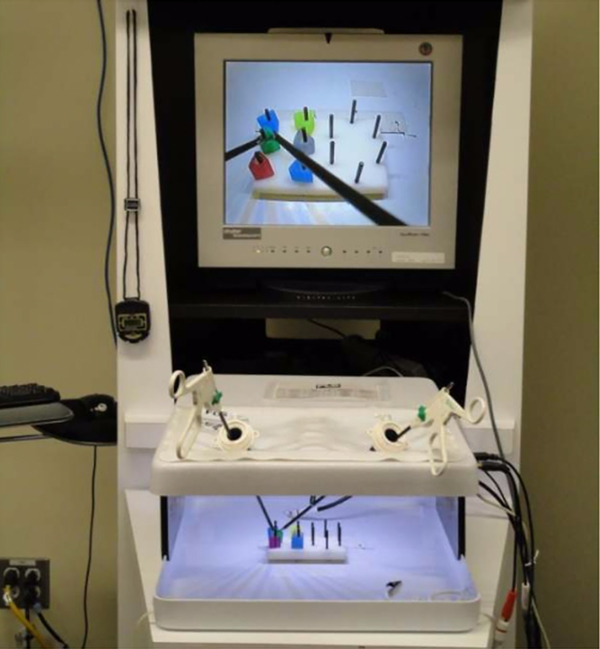

In this vein, the Society of American Gastrointestinal and Endoscopic Surgeons (SAGES) introduced the Fundamentals of Laparoscopic Surgery (FLS) training box [6] to train and certify laparoscopic surgeons. The FLS training box was based on the MISTELS program [7] developed and validated by Vassiliou et al. [8]. The box is covered by a membrane in which two 12 mm trocars are placed on either side (separated by 6 mm) of a 10 mm zero-degree laparoscope connected to a video monitor (Figure 1). Five pre-manufactured tasks can be performed inside the box: peg transfer, pattern cutting, ligating loop, and intracorporeal and extracorporeal suturing. The physical box has several drawbacks. The materials and instruments must be replaced constantly after being cut or sutured. Skill assessment requires significant manual labor. The designated metrics are not evaluated objectively.

Figure 1:

FLS training station showing the peg transfer setup

Virtual surgical task simulators are powerful tools for advanced training in complex procedures and for objective skills assessment [9], [10]. Simulation-based basic laparoscopic training can be used both for training and for rehearsing a procedure [11]. Simulators accelerate the training of residents without exposing patients to morbidity and mortality. They also improve skills in areas where patient outcomes clearly correlate with surgical experience, and they result in significantly fewer errors and shorter surgical times [12]. Double-blind validation studies have shown that surgical residents who received simulator-based training outperformed residents without such training in complex procedures such as laparoscopic cholecystectomy [13], [14], [8].

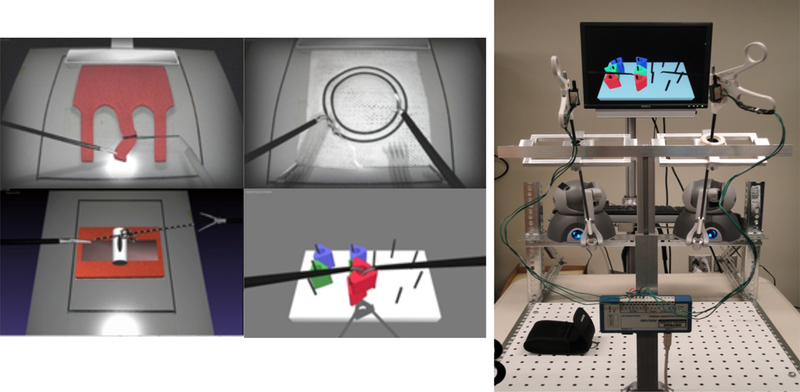

To overcome the drawbacks of the physical FLS trainer box, the Virtual Basic Laparoscopic Skill Trainer (VBLaST) was developed [15] at Rensselaer Polytechnic Institute (RPI). It replicates the FLS tasks using advanced physics-based simulation techniques on standard PCs and laptops with inexpensive haptic interface devices (Figure 2). VBLaST provides the same five tasks (peg transfer, pattern cutting, ligating loop, intracorporeal suturing, and extracorporeal suturing) using advanced virtual reality technology, including realistic visual and haptic feedback. The test materials used in the FLS are replaced with virtually simulated objects displayed on the monitor. A user performs the tasks with actual laparoscopic instruments interfaced with haptic devices, which are in turn driven by the VBLaST software. The hardware interface measures the motions of the instruments and provides haptic feedback. Physics-based algorithms accurately simulate the interaction between the instruments and the virtual objects in real time and render a realistic visual scene and haptic sensation. However, VBLaST does not include camera navigation tasks, which are the focus of this work.

Figure 2:

VBLaST tasks (left): pattern cutting, peg transfer, ligation loop, suturing. VBLaST hardware interface that mimics the FLS box trainer (right)

Camera navigation is a complicated task that requires significant hand-eye coordination. Virtual trainers and porcine model simulators are powerful tools for teaching it. These tools can help improve the surgeon's skills and can also effectively shorten the learning curve of assistants in the operating room. Other researchers have developed physical simulation trainers for camera navigation. Stefanidis et al. conducted a validation study comparing a virtual camera navigation simulator (EndoTower™) with a video-based trainer. They concluded that both demonstrated construct validity and had similar usability [16]. Nilsson et al. [17] reported that simulation-based training improves the technical skills required for camera navigation, regardless of whether trainees practice camera navigation itself or the full procedure. Porcine model simulators have also been investigated for camera navigation and showed benefits compared with no training [18], [19]. Similar benefits were recorded in simulation-based camera navigation training studies by Bennett et al. [20] and Lee et al. [21]. Mori et al. [22] and others reported that inexperience in laparoscopic surgery tasks leads to longer operating times. Franzeck et al. [23] demonstrated that camera navigation skills learned through simulation-based training transfer to the operating room (OR). Such training was as effective as traditional hands-on training but more time-efficient. Roch et al. [24] reported the impact of visual–spatial ability on learning laparoscopic camera navigation. However, there is a lack of validated, high-fidelity virtual task trainers for camera navigation. Such trainers would train and evaluate surgeons in the correct and precise use of angulation, spatial awareness, scene navigation, tracking accuracy, and steady holding of the camera during laparoscopic surgery.

2. Materials and Methods

2.1. Virtual Camera Navigation Simulation Software

The virtual camera navigation simulator aims to test the user's ability to manipulate an endoscopic camera precisely, hold a steady image, maintain a horizontal axis, move instruments smoothly, and remain spatially aware in the context of laparoscopic surgery. The virtual camera navigation system in the current work consists of two tasks, pattern matching and path tracing, which test various aspects of these skills.

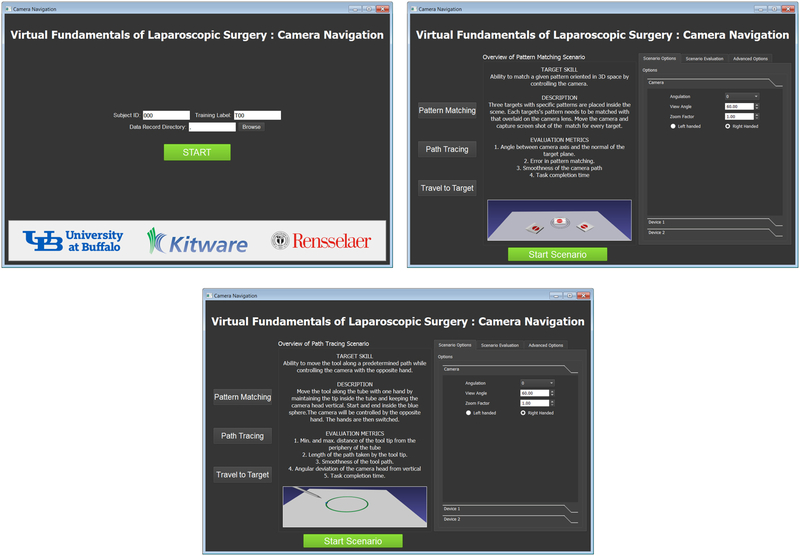

The virtual camera navigation software was implemented using the Interactive Medical Simulation Toolkit (iMSTK) [25]. iMSTK is a free and open-source C++ toolkit that aids rapid prototyping of interactive multimodal surgical simulations. It provides an easy-to-use, highly modular framework that can be extended and interfaced with third-party libraries to develop medical simulators without restrictive licenses. We also developed a Qt-based user interface (Figure 10). It provides access to subject management and the two tasks, and it displays the performance metrics. The two tasks that make up the trainer are described below.

Figure 10:

Screenshots of the user interface of the camera navigation simulator.

2.1.1. Pattern Matching

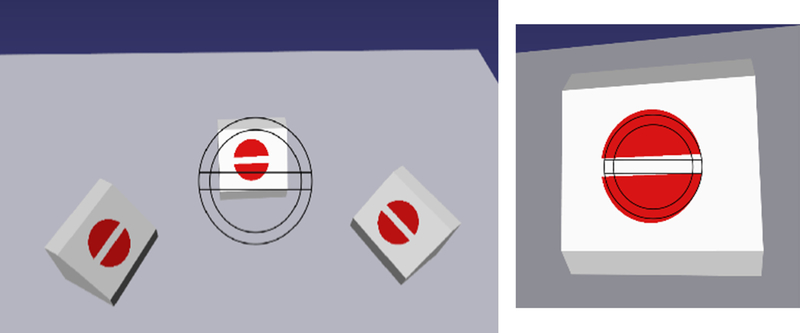

The virtual pattern matching task consists of three virtual blocks with a target (shown in red in Figure 3) on a plane inclined to the horizontal. A matching target outline is overlaid on the virtual lens. The user must match the target in 3D space with the outline overlaid on the lens. The user also adjusts the camera angulation so that the horizontal lines of the two remain accurately matched. This task tests the user's ability to position the scope accurately and to reach and match the given target in both size and angulation, in a timely fashion.

Figure 3:

Screenshot of the pattern matching task, in which a wireframe representation of the target pattern must be matched with the target on the block

Metrics:

To assess matching accuracy, we measure two quantities. The first is the in-plane angular deviation between the target in 3D and the target on the camera lens. The second is the distance between the camera position and the optimal position, that is, the position at which the two targets match in size. In addition, the camera position is tracked continuously in 3D space. From this trajectory a smoothness metric based on jerk (the third time derivative of position) is computed, and the total task completion time is recorded.
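The three pattern-matching metrics can be sketched in Python as follows. This is a minimal illustration, not the simulator's implementation (which is built in C++ on iMSTK). It assumes the following. The in-plane angle is taken between 2D image-plane directions of the horizontal line of the imaged target and of the lens overlay. Camera positions are sampled at a fixed interval `dt`. Smoothness is summarized as the mean squared jerk magnitude, which is one common choice among several. All function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def in_plane_angle_deg(target_dir: Vec2, overlay_dir: Vec2) -> float:
    """Signed in-plane angle (degrees) from the lens overlay's horizontal
    direction to the imaged target's horizontal direction, wrapped to [-180, 180)."""
    diff = math.atan2(target_dir[1], target_dir[0]) - math.atan2(overlay_dir[1], overlay_dir[0])
    return (math.degrees(diff) + 180.0) % 360.0 - 180.0


def distance_to_optimal(camera_pos: Vec3, optimal_pos: Vec3) -> float:
    """Euclidean distance from the camera to the position at which the
    overlay and the target would match in size."""
    return math.dist(camera_pos, optimal_pos)


def mean_squared_jerk(positions: Sequence[Vec3], dt: float) -> float:
    """Mean squared jerk magnitude of a uniformly sampled 3D trajectory,
    estimated with third-order forward finite differences."""
    if len(positions) < 4:
        raise ValueError("need at least 4 samples to estimate jerk")
    n = len(positions) - 3
    total = 0.0
    for i in range(n):
        p0, p1, p2, p3 = positions[i:i + 4]
        # Per axis: x''' ~ (x[i+3] - 3 x[i+2] + 3 x[i+1] - x[i]) / dt^3
        jerk = [(p3[k] - 3.0 * p2[k] + 3.0 * p1[k] - p0[k]) / dt ** 3 for k in range(3)]
        total += sum(c * c for c in jerk)
    return total / n
```

A camera moving at constant velocity yields zero jerk, so lower values indicate smoother camera motion. Because the raw value depends on units and sampling rate, a dimensionless normalization may be preferable when comparing trials of different lengths.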

2.1.2. Path Tracing

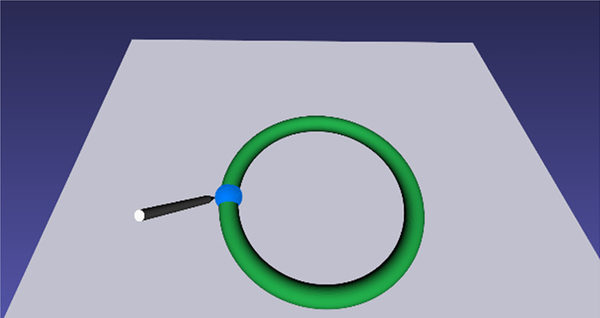

The ability to hold the camera steady, in view, and in focus while the opposite hand performs a task is not currently tested by existing physical or VR-based camera navigation simulators. We have therefore added a path tracing task (Figure 4), in which one hand holds the camera steady while the other hand traces a predetermined path with a laparoscopic tool. In the virtual scene, a green torus is placed horizontally (Figure 4). The user traces the circle that forms the centerline of the torus, as closely and as quickly as possible, with a virtual pointer controlled by the tracking device.

Figure 4:

Screenshot of the path tracing task. The user is asked to trace the center of the ring using a pointer controlled by a laparoscopic tool interface.

Metrics:

To assess path tracing accuracy, the distance from the tip of the virtual pointer to the circle (the centerline of the torus) is measured at each sample, and the maximum deviation is reported. In addition, the position of the virtual pointer is tracked continuously in 3D space, and a jerk-based smoothness metric (jerk being the third time derivative of position) is computed from it. The positional deviation of the camera and the total task completion time are also recorded.
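The distance from the pointer tip to the torus centerline has a closed form, sketched below in Python. The circle is described by a center, a plane normal, and a radius. For a point at height h above the circle's plane and at radial distance r from its axis, the distance to the circle is √((r − R)² + h²). The function names are hypothetical. The camera-drift function is only an illustrative assumption of how "positional deviation of the camera" might be measured, not the simulator's definition.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def distance_to_circle(p: Vec3, center: Vec3, normal: Vec3, radius: float) -> float:
    """Shortest distance from point p to a circle in 3D (e.g. a torus centerline)."""
    n_len = math.sqrt(_dot(normal, normal))
    n = (normal[0] / n_len, normal[1] / n_len, normal[2] / n_len)
    d = _sub(p, center)
    h = _dot(d, n)  # signed height above the circle's plane
    in_plane = (d[0] - h * n[0], d[1] - h * n[1], d[2] - h * n[2])
    r = math.sqrt(_dot(in_plane, in_plane))  # radial distance within the plane
    return math.hypot(r - radius, h)


def max_deviation(tip_samples: Sequence[Vec3], center: Vec3, normal: Vec3, radius: float) -> float:
    """Maximum deviation of the pointer tip from the circle over a recorded trace."""
    return max(distance_to_circle(p, center, normal, radius) for p in tip_samples)


def max_camera_drift(camera_samples: Sequence[Vec3]) -> float:
    """Hypothetical steadiness measure: largest displacement of the camera
    from where it was at the start of the trace."""
    start = camera_samples[0]
    return max(math.dist(start, c) for c in camera_samples)
```

When the tip lies on the torus axis (r = 0), every point of the circle is equally far away, and the formula correctly reduces to √(R² + h²).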

2.2. Virtual Camera Navigation Hardware Interface

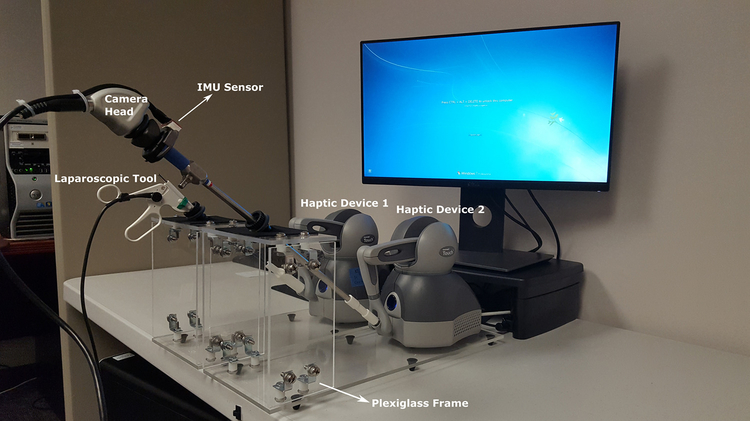

We have designed a hardware interface that mimics the endoscope and the laparoscopic tools used in the OR. It consists of one endoscope and one laparoscopic grasper. Each is connected to a haptic device that provides six-degree-of-freedom (6-DOF) tracking and forces along the three Cartesian axes of the virtual scene. The tools are attached using 3D-printed connectors (Figure 5), which provide a rigid but detachable connection between each tool and its haptic device. The tools and haptic devices are mounted on plexiglass frames that mimic the FLS box trainer. The fully assembled system is shown in Figure 5.

Figure 5:

Virtual FLS Camera Navigation hardware setup.

Two tool designs are used in the camera navigation virtual trainer. The first is a simple pointer tool that is implemented by attaching a laparoscopic tool handle to the stylus arm (Figure 5). This provides sufficient tracking to render the tool in the virtual environment. The second tool is an endoscopic camera, consisting of a scope and camera head. The scope is attached to the stylus arm and is tracked in the same manner as the pointer tool. The camera head attached to the scope adds an additional level of complexity, as its rotation must be tracked independently to correctly render the view of the virtual camera (Figure 5).

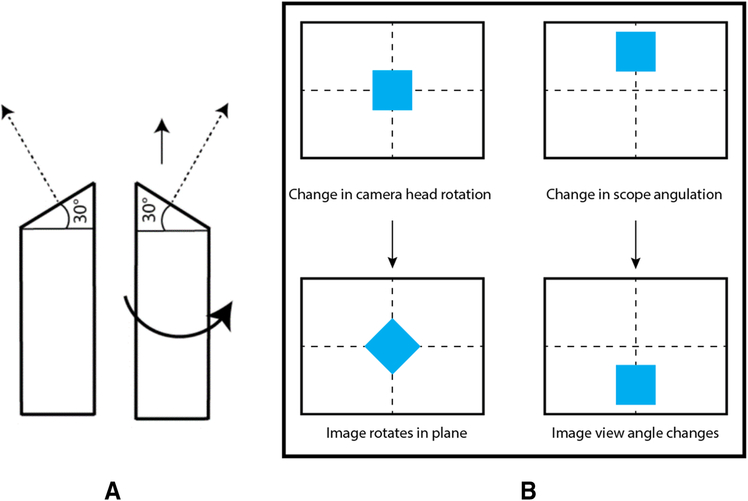

This separate angle tracking is required to correctly represent the angled scopes often used in laparoscopic procedures. An angled scope has a beveled tip. As a result, the camera view vector changes as the scope shaft is rotated, allowing the scope to look in different directions without lateral movement (Figure 6(a)). This is independent of the rotation of the camera head attached to the scope, which changes the in-plane rotation of the viewed image but not the direction of view; Figure 6(b) illustrates the difference.
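The decoupling between shaft rotation and camera-head rotation can be sketched in Python as follows. This is a minimal geometric illustration under assumed conventions, not the trainer's rendering code. It uses a scope-local frame in which the shaft points along +z. Rotating the shaft swings the view vector around the shaft axis on a cone whose half-angle is the scope angle. The camera-head roll, by contrast, only rotates the rendered image in its own plane, and the sign of that rotation is a chosen convention. The function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def view_direction(bevel_deg: float, shaft_roll_deg: float) -> Vec3:
    """Unit view vector of an angled scope in a scope-local frame (shaft along +z).

    bevel_deg is the scope angle (0, 30, 45, ...); shaft_roll_deg is the shaft
    rotation about its own axis, as tracked by the haptic device."""
    t = math.radians(bevel_deg)
    p = math.radians(shaft_roll_deg)
    return (math.sin(t) * math.cos(p), math.sin(t) * math.sin(p), math.cos(t))


def rotate_image_point(x: float, y: float, head_roll_deg: float) -> Tuple[float, float]:
    """Apply the camera-head roll (e.g. from the IMU) as a pure 2D rotation of
    image coordinates; it does not change where the scope is looking."""
    r = math.radians(head_roll_deg)
    return (x * math.cos(r) - y * math.sin(r), x * math.sin(r) + y * math.cos(r))
```

With a 0-degree scope the view vector stays on the shaft axis for any shaft rotation. With a 30-degree scope, a 180-degree shaft rotation points the view to the opposite side of the axis, which is the behavior shown in Figure 6(a).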

Figure 6:

(a) Rotating the shaft of an angled scope (shown here as a 30 degree scope) allows the camera to look in different directions without lateral movement. (b)

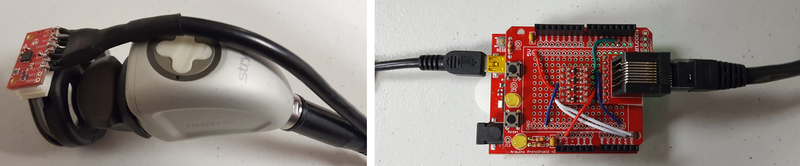

Because the Geomagic Touch device tracks only the rotation of the scope shaft, additional instrumentation is needed to track the rotation of the camera head. For this purpose, an inertial measurement unit (IMU) chip is attached to the camera head (Figure 7). The sensor tracks the rotation of the head on the scope with respect to gravity (i.e., the roll angle). An Arduino microcontroller reports this rotation to the software over a serial (COM) port (Figure 7).
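On the host side, reading the roll angle from the microcontroller could look like the Python sketch below. The wire format is an assumption, not the documented firmware protocol: each reading is taken to be one ASCII line holding an angle in degrees. The sketch uses the third-party pyserial package (`serial.Serial`, `readline`). The port name and baud rate are placeholders, and the function names are hypothetical.

```python
from typing import Iterator, Optional


def parse_roll_line(line: bytes) -> Optional[float]:
    """Parse one newline-terminated ASCII line into a roll angle in degrees.

    Assumed format: a single decimal number per line. Malformed lines
    return None so that a corrupted sample is skipped rather than crashing."""
    try:
        return float(line.decode("ascii").strip())
    except (UnicodeDecodeError, ValueError):
        return None


def read_roll_angles(port: str, baudrate: int = 9600) -> Iterator[float]:
    """Yield roll angles streamed by the microcontroller over a serial port."""
    import serial  # pyserial; imported lazily so parse_roll_line works without it

    with serial.Serial(port, baudrate=baudrate, timeout=1.0) as ser:
        while True:
            line = ser.readline()  # returns b"" when the timeout expires
            if not line:
                continue
            angle = parse_roll_line(line)
            if angle is not None:
                yield angle
```

The raw angles produced this way would still need the IMU calibration described in Section 2.2.1 before they are used to render the camera view.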

Figure 7.

Endoscopic camera with rotation tracking chip attached (left). Arduino microcontroller board programmed to collect data from the IMU (right). The left USB

2.2.1. Device calibration:

Haptic device:

Calibration of the Geomagic Touch devices is performed using the diagnostic application provided by the vendor. The tools are removed and the stylus arms are placed in their “inkwell” positions to calibrate the joint angles.

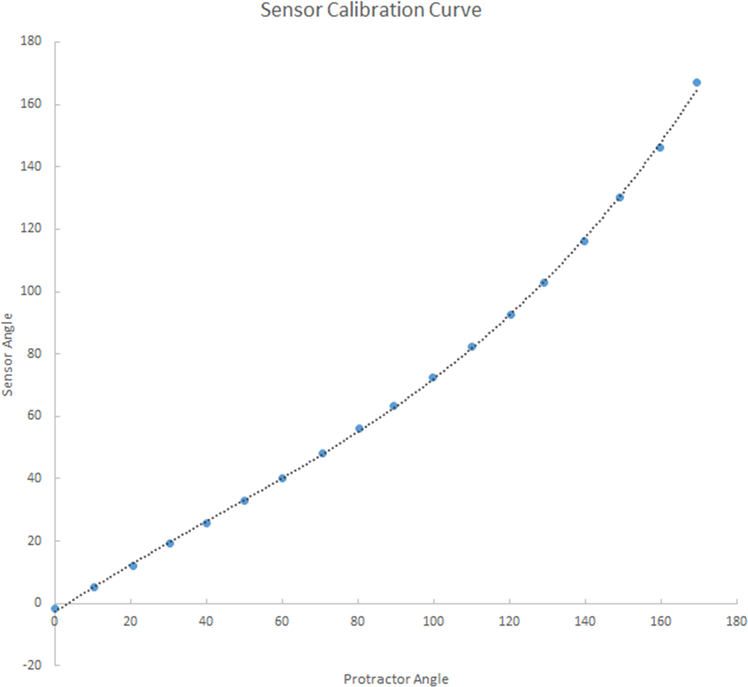

IMU sensor:

We implemented our own calibration for the IMU that tracks the camera head. The first calibration stage addresses the nonlinearity between the raw angle reported by the IMU chip and the true angle. Angles close to horizontal are accurate, but the error grows as the chip is rotated toward vertical. We quantified the errors by attaching a digital protractor to the chip and recording the chip output at known angles. The error was well approximated by a third-degree polynomial (Figure 8), whose coefficients are computed and stored for each IMU chip. At run time, the raw sensor reading is passed through the polynomial to obtain an angle close to the true angle (as reported by the digital protractor). The second calibration stage corrects any offset angle introduced by mounting the chip on the camera head. The camera head, with the chip mounted, is held level while data points are collected from the chip. The average angle reported by the chip over 5 seconds is stored in a configuration file. The virtual trainer software uses this value to interpret a level camera as a zero-degree angle.
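The two calibration stages can be sketched in Python as follows. This is a minimal, dependency-free illustration, not the authors' calibration tool. The cubic is fitted by ordinary least squares via the normal equations, an assumed choice since the text does not state the fitting method. The second-stage offset is assumed to be the mean of the polynomial-corrected readings taken while the head is level. All function names are hypothetical.

```python
from typing import List, Sequence


def fit_cubic(raw: Sequence[float], true: Sequence[float]) -> List[float]:
    """Least-squares fit of true ~ c0 + c1*x + c2*x**2 + c3*x**3 (x = raw reading).

    Builds the 4x4 normal equations and solves them by Gaussian elimination
    with partial pivoting. Returns [c0, c1, c2, c3]."""
    if len(raw) != len(true) or len(raw) < 4:
        raise ValueError("need at least 4 paired (raw, true) samples")
    m = [[0.0] * 5 for _ in range(4)]  # augmented matrix [A^T A | A^T y]
    for x, y in zip(raw, true):
        powers = [x ** k for k in range(4)]
        for r in range(4):
            for c in range(4):
                m[r][c] += powers[r] * powers[c]
            m[r][4] += powers[r] * y
    for col in range(4):
        pivot = max(range(col, 4), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 4):
            f = m[r][col] / m[col][col]
            for c in range(col, 5):
                m[r][c] -= f * m[col][c]
    coeffs = [0.0] * 4
    for r in range(3, -1, -1):  # back substitution
        s = m[r][4] - sum(m[r][c] * coeffs[c] for c in range(r + 1, 4))
        coeffs[r] = s / m[r][r]
    return coeffs


def polyval(coeffs: Sequence[float], x: float) -> float:
    """Evaluate c0 + c1*x + c2*x**2 + c3*x**3."""
    return sum(c * x ** k for k, c in enumerate(coeffs))


def mounting_offset(level_raw: Sequence[float], coeffs: Sequence[float]) -> float:
    """Stage 2: mean corrected angle while the camera head is held level."""
    return sum(polyval(coeffs, x) for x in level_raw) / len(level_raw)


def calibrated_angle(raw: float, coeffs: Sequence[float], offset: float) -> float:
    """Run-time correction: apply the polynomial, then remove the mounting offset."""
    return polyval(coeffs, raw) - offset
```

For raw readings spanning tens of degrees, rescaling the inputs (for example to radians) before fitting keeps the normal equations well conditioned. If NumPy is available, `numpy.polyfit(raw, true, 3)` performs an equivalent fit but returns the coefficients highest degree first.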

Figure 8:

Calibration for the IMU sensor. The computed curve is used to correct the output of the Arduino.

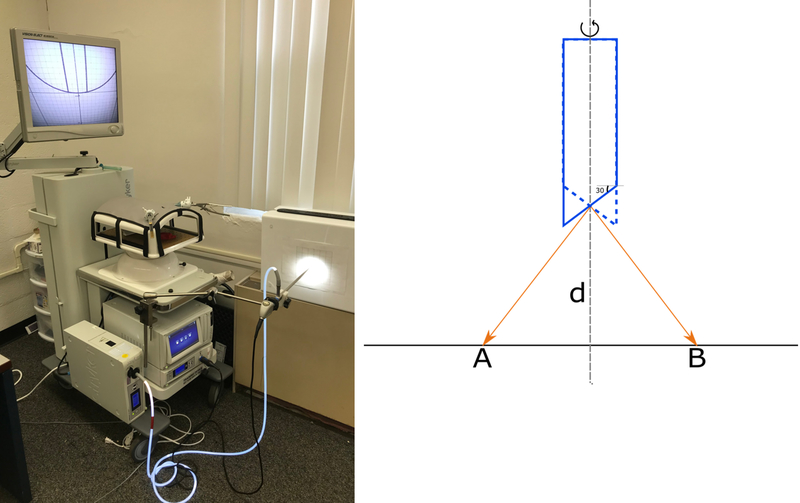

2.2.2. Verification

One of the most important aspects of the endoscopic camera is the angulation described above. The virtual scope therefore needs to be verified against a real scope so that it accurately represents the operating room experience of camera navigation. We designed an experimental setup to perform this verification.

The setup consists of a scope placed perpendicular to a plane, with the center of the lens at a distance d from the plane. The plane contains a uniform grid (see Figure 9) with lines spaced 1/10 inch apart. The scope is then rotated 180 degrees about its axis so that the view sweeps from left to right across the plane. The focal point of the image thus moves from point A to point B in the illustration in Figure 9(b). For the virtual scope to match the real one, the distance AB swept by the camera focal point must be the same for both. We verified this for the 30-degree scope shown in Figure 9.
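The expected length of AB follows from an idealized geometric model. This model is an assumption: it takes the view vector to originate at the lens center on the shaft axis, tilted from the shaft by the scope angle θ, and it ignores lens distortion. Rotating the shaft sweeps the view vector around a cone of half-angle θ. The cone intersects the grid plane in a circle of radius d tan θ, and a 180-degree rotation carries the focal point across a diameter of that circle:

```latex
\overline{AB} = 2\,d\tan\theta,
\qquad
\theta = 30^\circ \;\Rightarrow\; \overline{AB} = 2\,d\tan 30^\circ = \frac{2d}{\sqrt{3}} \approx 1.155\,d
```

For a given d, the predicted AB can then be compared against the count of 1/10-inch grid lines swept by both the real and the virtual scope.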

Figure 9:

Setup employing the real scope (from Stryker Corporation) used for verification of the angulation of the virtual scope (left). Illustration of the action of scope

2.3. Graphical User Interface

At the start of each task, the user is presented with a user interface (UI) for changing experiment settings such as the scope angle and the type of task (Figure 10). The UI lets the user configure the experiment, select tasks, manage users, and visualize the metrics after a task is complete. The user interface was built using Qt.

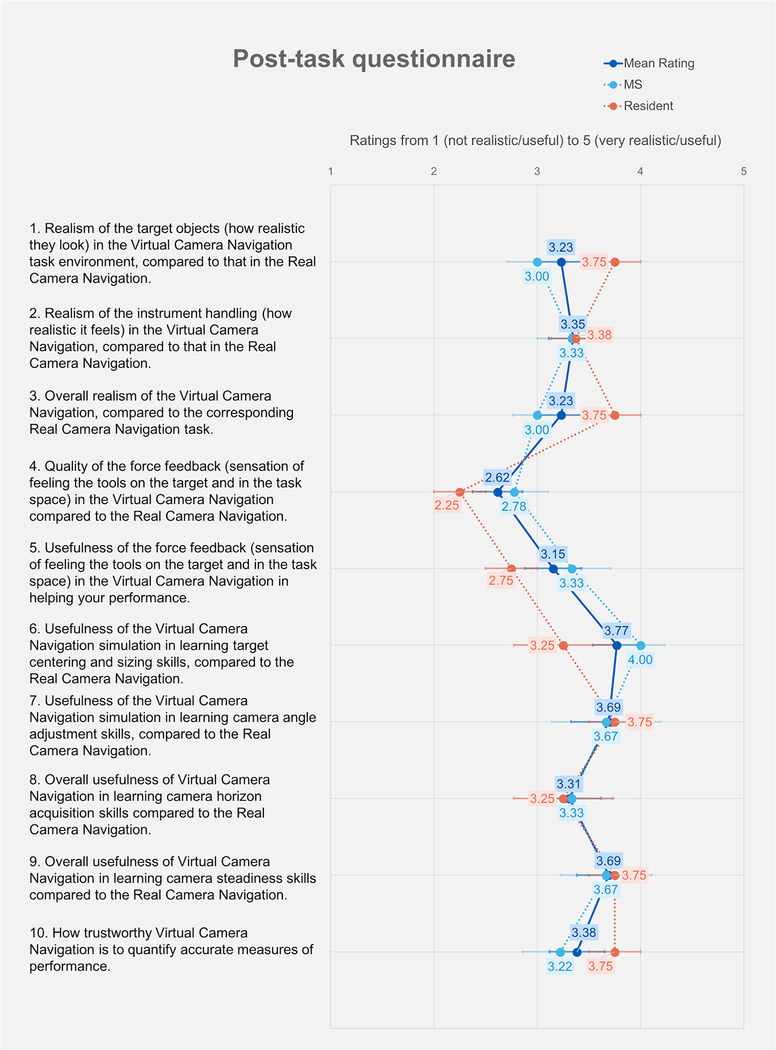

2.4. Validation

We conducted a face validation study of the camera navigation tasks with medical students and residents at the University at Buffalo, under a protocol approved by the Institutional Review Board. Participants completed one session lasting approximately 30 minutes. At the beginning of the session, participants were informed about the study and provided informed consent. They then completed a questionnaire on demographic characteristics and on surgical, simulator, and video game experience. Medical students who had never used a real camera navigation system were first given the Stryker camera system to become familiar with camera manipulation. Participants then watched video instructions and performed each of the tasks described above with three different scope angles (0°, 30°, 45°). For each angle, they performed each task twice, for a total of 12 task trials.

After performing the tasks, participants rated the face validity of the simulator on a 10-item questionnaire, using a scale from 1 (not realistic/useful) to 5 (very realistic/useful).

3. Results

3.1. Demographics

Nine medical students and four residents participated in this study (N = 13). Their demographic data are shown in Table 1.

Table 1.

Demographic data of the subjects who participated in the study

| Characteristic | Value |
|---|---|
| Age (years), average (range) | 25.3 (22–31) |
| Sex, female:male | 3:10 |
| Hand dominance, left:right | 1:12 |
| Corrected vision, yes:no | 6:7 |
| Current position, medical student:resident | 9:4 |
| Surgical experience (observed), yes:no | 7:6 |
| Surgical experience (assisted/performed), yes:no | 6:7 |
| Simulator experience, yes:no | 12:1 |
| Video game experience, yes:no | 4:9 |

3.2. Face Validation

Figure 11 shows the results of the post-task questionnaire. Nine of the ten questions (90%) received ratings above 3. The highest-rated questions concerned three kinds of usefulness of the virtual camera navigation simulation: in learning target centering and sizing skills (3.77), in learning camera angle adjustment skills (3.69), and overall in learning camera steadiness skills (3.69). The lowest-rated questions were the quality of the force feedback (2.62) and the usefulness of the force feedback in helping improve performance (3.15).

Figure 11:

Results from post-task questionnaire

4. Discussion and Conclusions

With the improved computing power of desktop computers, virtual simulators have become reliable alternatives to physical trainers. Simulation-based trainers can be designed for targeted knowledge acquisition, procedural training, and skill acquisition. They can also provide objective assessment of proficiency and can be cost-effective [26]. The VBLaST system was developed as a virtual counterpart of the FLS system. It aims to improve the basic skills required of laparoscopic surgeons, such as hand-eye coordination, bimanual dexterity, speed, precision, and camera navigation. In addition to the four tasks currently part of the VBLaST system, members of SAGES have considered adding two tasks, camera navigation and cannulation, to FLS [27]. Watanabe et al. recently refined the initial metrics for these tasks and validated them [28]. In this work, we report the development and face validation of a camera navigation simulator as part of VBLaST. The simulator has fully functional hardware and software components [29] and can readily be used to train residents at skills centers in teaching hospitals.

Commercial simulators such as LAP Mentor (Simbionix USA, Cleveland, OH) [30], Lap-X (Epona Medical, Rotterdam, the Netherlands) [31], and LapVR™ (Immersion Medical, Gaithersburg, MD) [32] have tasks similar to those in FLS. However, they are either not validated (Lap-X) or expensive, and they lack the evaluation metrics implemented in FLS. The commercial virtual trainers currently deployed in the skills centers of US teaching hospitals are expensive (more than $100K) and often ship with proprietary hardware. VBLaST, by contrast, aims to replicate the FLS box trainer, whose tasks are extensively validated and currently adopted by SAGES for certification. In doing so, it is desirable to also replicate the hardware interface of the FLS box while keeping the components highly reliable and inexpensive. In this work, we have simplified the hardware interface of the previous VBLaST system (compare Figure 2 with Figure 5) by using cheaper and lighter materials. We have also redesigned the base frame to match the size of the FLS box. The hardware interface (including the camera head and the IMU sensor) costs about $4,700, most of which ($4,000) is for the two haptic devices. The hardware setup is inexpensive and reliable, with no malfunctions reported during testing or the study. The sensors can also be swapped and calibrated with ease, which makes the system easy to adopt and maintain.

We conducted face validation of the camera navigation system at the University at Buffalo. Participants rated the system highly (above 3 on a 5-point scale) in all aspects except the quality of the force feedback. There appears to be a high degree of agreement among the subjects on aspects such as (i) how closely the hardware instrumentation resembles the real scope, (ii) the usefulness of the camera navigation simulator in teaching camera adjustment skills, and (iii) the overall usefulness of the system in teaching skillful camera use in the operating room. Prior validation studies of the VBLaST tasks have shown similar trends. For pattern cutting and ligation, for example, subjects identified the force feedback (and instrument handling) as the aspects of the simulation most in need of improvement [33], while rating the realism of the system and its usefulness in training residents highly. The force feedback was, however, rated highly for peg transfer [34]. Note also that force feedback matters far less in camera navigation than in peg transfer, pattern cutting, ligating loop, and suturing, because obstacles are typically avoided based on visual cues.

One advantage of VR-based trainers is that they can record additional data during the task, such as the position and velocity profiles of the tools. Such data can be analyzed to derive metrics such as path length and jerk, which have been shown to be valid discriminators of proficiency level [35], [34]. The camera navigation simulator records the position and velocity signatures of the users. In future work, we will use these motor performance metrics to establish the construct validity of the simulator. We would also like to incorporate surgery-specific spatial navigation tasks that help residents develop spatial awareness and navigation skills, among others. To allow remote storage and retrieval of data, we plan to move subject data management online. Furthermore, we plan to develop additional metrics that analyze the motion (3D translation and rotation) of the camera scope and other laparoscopic tools.
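Path length, one of the motion metrics mentioned above, is simply the sum of distances between consecutive recorded positions. A minimal Python sketch follows. It assumes positions are sampled at a fixed interval `dt`, and the function names are hypothetical rather than part of the simulator.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def path_length(positions: Sequence[Vec3]) -> float:
    """Total distance travelled by a tool tip (or the camera) over a trial."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


def mean_speed(positions: Sequence[Vec3], dt: float) -> float:
    """Average speed over the trial, assuming a fixed sampling interval dt."""
    if len(positions) < 2:
        raise ValueError("need at least 2 samples")
    return path_length(positions) / ((len(positions) - 1) * dt)
```

Shorter path lengths for the same task generally indicate more economical motion. In practice such a metric would be reported together with completion time and errors rather than on its own.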

Acknowledgments:

Research reported in this publication was supported by the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health under Award Number R44EB019802. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Funding: National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health under Award Number R44EB019802.

Footnotes

Disclosures: Venkata Arikatla, Sam Horvath, Yaoyu Fu, Lora Cavuoto, Suvranu De, Steve Schwaitzberg, and Andinet Enquobahrie have no conflict of interests or financial ties to disclose.

References

- [1] Arikatla VS, Ahn W, Sankaranarayanan G, and De S, “Towards virtual FLS: development of a peg transfer simulator,” Int. J. Med. Robot. Comput. Assist. Surg, Jun. 2013.

- [2] Zhang L et al., “Characterizing the learning curve of the VBLaST-PT© (Virtual Basic Laparoscopic Skill Trainer),” Surg. Endosc, vol. 27, no. 10, pp. 3603–3615, Oct. 2013.

- [3] Sankaranarayanan G et al., “Preliminary face and construct validation study of a virtual basic laparoscopic skill trainer,” J. Laparoendosc. Adv. Surg. Tech, vol. 20, no. 2, 2010.

- [4] Qi D, Panneerselvam K, Ahn W, Arikatla V, Enquobahrie A, and De S, “Virtual interactive suturing for the Fundamentals of Laparoscopic Surgery (FLS),” J. Biomed. Inform, vol. 75, 2017.

- [5] Vanounou T et al., “Comparing the Clinical and Economic Impact of Laparoscopic Versus Open Liver Resection,” Ann. Surg. Oncol, vol. 17, no. 4, pp. 998–1009, Apr. 2010.

- [6] Peters JH et al., “Development and validation of a comprehensive program of education and assessment of the basic fundamentals of laparoscopic surgery,” Surgery, vol. 135, no. 1, pp. 21–27, Jan. 2004.

- [7] Fraser SA, Klassen DR, Feldman LS, Ghitulescu GA, Stanbridge D, and Fried GM, “Evaluating laparoscopic skills: setting the pass/fail score for the MISTELS system,” Surg. Endosc, vol. 17, no. 6, pp. 964–967, May 2003.

- [8] Vassiliou MC et al., “The MISTELS program to measure technical skill in laparoscopic surgery,” Surg. Endosc, vol. 20, no. 5, pp. 744–747, May 2006.

- [9] Liu A, Tendick F, Cleary K, and Kaufmann C, “A Survey of Surgical Simulation: Applications, Technology, and Education,” Presence Teleoperators Virtual Environ, vol. 12, no. 6, pp. 599–614, Dec. 2003.

- [10] Satava RM, “Historical Review of Surgical Simulation—A Personal Perspective,” World J. Surg, vol. 32, no. 2, pp. 141–148, May 2007.

- [11] Larsen CR, Oestergaard J, Ottesen BS, and Soerensen JL, “The efficacy of virtual reality simulation training in laparoscopy: a systematic review of randomized trials,” Acta Obstet. Gynecol. Scand, vol. 91, no. 9, pp. 1015–1028, Sep. 2012.

- [12] Ahlberg G et al., “Proficiency-based virtual reality training significantly reduces the error rate for residents during their first 10 laparoscopic cholecystectomies,” Am. J. Surg, vol. 193, no. 6, pp. 797–804, Jun. 2007.

- [13] Jordan JA, Gallagher AG, McGuigan J, and McClure N, “Virtual reality training leads to faster adaptation to the novel psychomotor restrictions encountered by laparoscopic surgeons,” Surg. Endosc, vol. 15, no. 10, pp. 1080–1084, Oct. 2001.

- [14] Seymour NE et al., “Virtual Reality Training Improves Operating Room Performance,” Ann. Surg, vol. 236, no. 4, pp. 458–464, Oct. 2002.

- [15] Maciel A, Liu Y, Ahn W, Singh TP, Dunnican W, and De S, “Development of the VBLaST™: a virtual basic laparoscopic skill trainer,” Int. J. Med. Robot. Comput. Assist. Surg, vol. 4, no. 2, pp. 131–138, 2008.

- [16] Stefanidis D et al., “Construct and face validity and task workload for laparoscopic camera navigation: virtual reality versus videotrainer systems at the SAGES Learning Center,” Surg. Endosc, vol. 21, no. 7, pp. 1158–1164, Jun. 2007.

- [17] Nilsson C et al., “Simulation-based camera navigation training in laparoscopy—a randomized trial,” Surg. Endosc, vol. 31, no. 5, pp. 2131–2139, May 2017.

- [18] Ganai S, Donroe JA, St. Louis MR, Lewis GM, and Seymour NE, “Virtual-reality training improves angled telescope skills in novice laparoscopists,” Am. J. Surg, vol. 193, no. 2, pp. 260–265, Feb. 2007.

- [19] Korndorffer JR et al., “Development and transferability of a cost-effective laparoscopic camera navigation simulator,” Surg. Endosc, vol. 19, no. 2, pp. 161–167, Feb. 2005.

- [20] Bennett A, Birch DW, Menzes C, Vizhul A, and Karmali S, “Assessment of medical student laparoscopic camera skills and the impact of formal camera training,” Am. J. Surg, vol. 201, no. 5, pp. 655–659, May 2011.

- [21] Richstone L, Schwartz MJ, Seideman C, Cadeddu J, Marshall S, and Kavoussi LR, “Eye Metrics as an Objective Assessment of Surgical Skill,” Ann. Surg, vol. 252, no. 1, pp. 177–182, May 2010.

- [22] Mori M, Liao A, Hagopian TM, Perez SD, Pettitt BJ, and Sweeney JF, “Medical students impact laparoscopic surgery case time,” J. Surg. Res, vol. 197, no. 2, pp. 277–282, Aug. 2015.

- [23] Franzeck FM et al., “Prospective randomized controlled trial of simulator-based versus traditional in-surgery laparoscopic camera navigation training,” Surg. Endosc, vol. 26, no. 1, pp. 235–241, Jan. 2012.

- [24] Roch PJ et al., “Impact of visual–spatial ability on laparoscopic camera navigation training,” Surg. Endosc, vol. 32, no. 3, pp. 1174–1183, Mar. 2018.

- [25] “Interactive Medical Simulation Toolkit (iMSTK).” [Online]. Available: http://www.imstk.org/. [Accessed: 07-May-2018].

- [26] Dawson SL and Kaufman JA, “The imperative for medical simulation,” Proc. IEEE, vol. 86, no. 3, pp. 479–483, 1998.

- [27] Fried GM et al., “Proving the value of simulation in laparoscopic surgery,” Ann. Surg, vol. 240, no. 3, pp. 518–525; discussion 525–528, Sep. 2004.

- [28] Watanabe Y et al., “Camera navigation and cannulation: validity evidence for new educational tasks to complement the Fundamentals of Laparoscopic Surgery Program,” Surg. Endosc, vol. 29, no. 3, pp. 552–557, Mar. 2015.

- [29] Kothari LG, Shah K, and Barach P, “Simulation based medical education in graduate medical education training and assessment programs,” Prog. Pediatr. Cardiol, vol. 44, pp. 33–42, Mar. 2017.

- [30] Zhang A, Hünerbein M, Dai Y, Schlag P, and Beller S, “Construct validity testing of a laparoscopic surgery simulator (Lap Mentor®),” Surg. Endosc, vol. 22, no. 6, pp. 1440–1444, May 2008.

- [31] “Epona Medical | LAP-X.” 13-May-2012.

- [32] Iwata N et al., “Construct validity of the LapVR virtual-reality surgical simulator,” Surg. Endosc, vol. 25, no. 2, pp. 423–428, May 2011.

- [33] Chellali A et al., “Preliminary evaluation of the pattern cutting and the ligating loop virtual laparoscopic trainers,” Surg. Endosc, vol. 29, no. 4, pp. 815–821, Apr. 2015.

- [34] Arikatla VS et al., “Face and construct validation of a virtual peg transfer simulator,” Surg. Endosc. Other Interv. Tech, vol. 27, no. 5, 2013.

- [35] Hwang H et al., “Correlating motor performance with surgical error in laparoscopic cholecystectomy,” Surg. Endosc, vol. 20, pp. 651–655, Dec. 2005.