Abstract

Some scenes are more memorable than others: they cement in minds with consistencies across observers and time scales. While memory mechanisms are traditionally associated with the end stages of perception, recent behavioral studies suggest that the features driving these memorability effects are extracted early on, and in an automatic fashion. This raises the question: is the neural signal of memorability detectable during early perceptual encoding phases of visual processing? Using the high temporal resolution of magnetoencephalography (MEG), during a rapid serial visual presentation (RSVP) task, we traced the neural temporal signature of memorability across the brain. We found an early and prolonged memorability related signal under a challenging ultra-rapid viewing condition, across a network of regions in both dorsal and ventral streams. This enhanced encoding could be the key to successful storage and recognition.

Introduction

Every day, we are bombarded by a mass of images in newspapers, on billboards, and on social media, among others. While most of these visual representations are ignored or forgotten, a select few will be remembered. Recent studies have shown that these highly memorable images are consistent across observers and time scales, demonstrating that memorability is a stimulus driven effect1–5. This is punctuated by the fact that observers have poor insight into what makes an image memorable. For instance, features such as interestingness, attractiveness and subjective memorability judgments (what the observer thinks they will remember) do not explain the phenomenon2,6.

Investigations into the neural basis of memorability using fMRI have revealed greater contributions in brain regions associated with high-level perception along the ventral visual stream, rather than prefrontal regions associated with episodic memory7,8. These greater perceptual correlates indicate a potential processing advantage of memorable images, suggestive of a stronger perceptual representation. In a related vein, using an RSVP paradigm mixing images with different levels of memorability, Broers et al. (2017) found that memorable images were recognized significantly better than non-memorable images with extremely brief display durations9 (13 ms), suggesting that features underlying image memorability may be accessible early on in the perceptual process. The early response of the memorability effect is also supported by work tracking pupillary response and blink rates for memorable and non-memorable images, concluding that memorable images have both an immediate10 and long-lasting5 effect on recognition performance.

Taken together, this work suggests that memorable images are encoded more fluently, and this perceptual processing advantage correlates with better long-term storage. Here, we trace the temporal dynamics of memorable images in order to reveal the time course of neural events that influence future memory behavior. We employed the high temporal resolution of magnetoencephalography (MEG) during a rapid serial visual presentation (RSVP) task to isolate the perceptual signature of memorability across the brain.

In order to focus our investigation on purely perceptual aspects of memorability, we isolated the neural signal of memorable images from the influence of higher cognitive processes such as the top-down influence of memory. This approach requires the consideration of two basic principles: First, we acknowledge that image masking procedures, such as those found in traditional RSVP tasks, inhibit neural representations of non-target images from reaching deep memory encoding11–16. Second, we assume that memorability scores from the LaMem dataset17 (normative memory scores collected from thousands of observers) can function as a proxy for individual memory in the current study (i.e. an image with a high memorability score would be very likely to be remembered by an observer in our study had the information not been interrupted through masking, whereas an image with a low score would not)2–5.

Results revealed an early and prolonged memorability related signal recruiting a network of regions in both dorsal and ventral streams, detected outside of the formation of subjective memory. The enhanced perceptual encoding shown here could be the key to improving storage and recognition.

Results

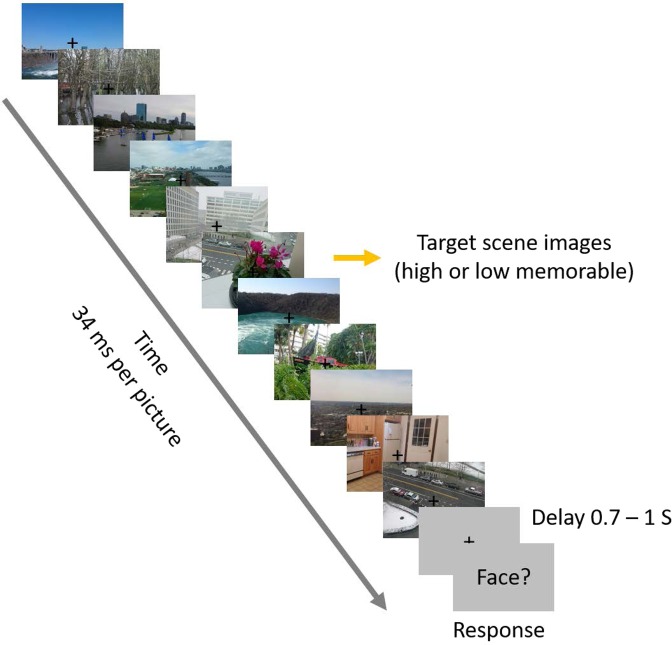

During an ultra-rapid serial visual presentation (RSVP) paradigm18, observers performed a two-alternative forced-choice face detection task (Fig. 1), while MEG data were collected. In each RSVP sequence of 11 images (34 ms per stimulus), 15 participants were instructed to determine whether the middle image, or target, was a face or non-face (50% chance), a task they could perform successfully (d′ = 3.72, two-sided signed-rank test; p < 10^−4). Importantly, in the face-absent trials the middle image was replaced by a scene image (the non-face target) sampled randomly from 30 images, half with a high-memorability score of 0.88 ± 0.06 (mean ± std) and half with a low-memorability score of 0.59 ± 0.07 (mean ± std). The remaining images (distractors) in the sequence were sampled from mid-level memorability scores of 0.74 ± 0.01 (mean ± std). Target scenes and distractor stimuli came from the LaMem dataset, with pre-acquired memorability scores17. The rationale for choosing the middle image as the target in the experiment design was to provide effective RSVP forward and backward masking, without making the task extremely difficult by varying the target position19.

Figure 1.

Paradigm design. RSVP paradigm, known to greatly reduce image visibility, and examples of high and low memorable target scenes. Each RSVP trial includes presentation of 11 images with the speed of 34 ms per picture (without inter-stimulus interval). In half of the trials a face image was embedded in the middle of the sequence and participants were asked to detect the face trials (a two-alternative forced choice task). In the non-face trials, the middle image was drawn randomly from a set of 30 scene images, half high memorable and half low memorable (target scenes). The presented images in this figure are not examples of the stimulus set due to copyright. The stimulus set used in the experiment can be found at https://memorabilityrsvp.github.io.

Following the MEG experiment, observers performed an unanticipated old-new memory test, with all targets and novel images shown one at a time. Participants were presented with the RSVP target images mixed randomly with 60 novel images matched on high and low level features, and instructed to indicate whether they had seen the image at any point during the RSVP task. Results show that while the goal-directed target stimuli (faces) were detected and remembered well above chance (d′ = 0.59, two-sided signed-rank test; p < 0.01), memory for target scenes was at chance level (d′ = −0.13, two-sided signed-rank test; p = 0.1), corroborating an absence of explicit memory trace. The unanticipated memory tests confirmed that despite 30 repetitions (see Methods) of each target scene, participants failed to recall having seen these images. This provides the ideal circumstances to evaluate the purely perceptual basis of memorability. All subsequently described analyses therefore focused only on the perceptual dynamics of the target scene stimuli, and the subjectively perceived face-target trials were excluded from further analyses.
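As a point of reference for the sensitivity values reported above, the following minimal sketch computes d′ from old-new response counts. The counts are hypothetical, and the log-linear correction (adding 0.5 to each cell) is an assumption of this sketch; the correction actually used in the study is not stated in the text.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    A log-linear correction (0.5 added to each cell) keeps both rates
    away from 0 and 1. This correction is an assumption of the sketch.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for one observer: 30 old images and 30 novel images.
chance_observer = d_prime(hits=12, misses=18, false_alarms=12, correct_rejections=18)
good_observer = d_prime(hits=27, misses=3, false_alarms=4, correct_rejections=26)
```

An observer who says "old" equally often to old and novel images yields d′ = 0, which is the pattern the scene targets approached in the memory test.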

Temporal trace of memorable images

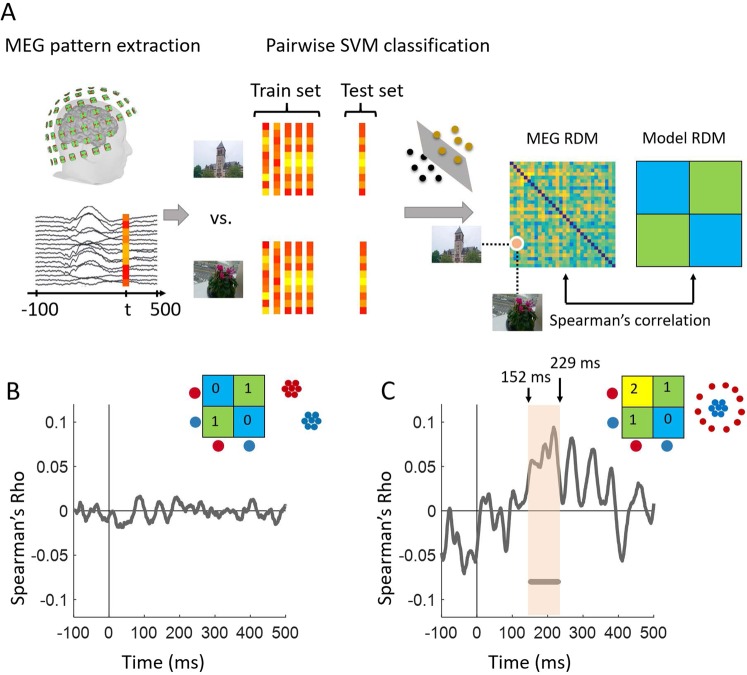

After extracting the MEG time series from −100 to 500 ms relative to target scene onset, we performed multivariate pattern analysis in a time-resolved manner. For each time point (1 ms step), we measured the performance of an SVM classifier to discriminate between pairs of scene images using leave-one-out cross-validation, resulting in a 30 × 30 decoding matrix, also known as a representational dissimilarity matrix (RDM), at each time point (Fig. 2A). We then used the representational similarity analysis (RSA) framework20–23 to characterize the representational geometry of the memorability effect in MEG data. In this framework, hypothesized model RDMs can be compared against time resolved RDMs created from MEG data by computing their correlations (Fig. 2A). Here we considered two hypotheses: a linearly separable representation between our two conditions, such as a categorical clustering geometry (see the model RDM and its 2D multidimensional scaling (MDS) visualization in Fig. 2B), and a nonlinear entropy based representation where one condition is dispersed while the other is tightly clustered (see the model RDM and its 2D MDS visualization in Fig. 2C). The comparison of these two candidate model RDMs with the time resolved MEG RDMs yielded the correlation time series presented in Fig. 2B,C. As depicted, while no significant correlations were found between the MEG RDMs and the linearly separable model in Fig. 2B, the model assuming a more entropy based geometrical representation for high memorable images explained MEG RDM patterns with significant correlations starting at ~150 ms after target image onset.
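The comparison between model and MEG RDMs can be sketched as follows. This is an illustrative reconstruction, not the authors' code: the image ordering (15 high memorable images first), the value 0.5 assigned to between-condition cells of the dispersion model, and the synthetic MEG RDM are all assumptions made for the example.

```python
import numpy as np
from scipy.stats import spearmanr

N_HIGH, N_LOW = 15, 15  # 15 high and 15 low memorable scenes


def categorical_model(n_high=N_HIGH, n_low=N_LOW):
    """Model RDM: dissimilar (1) between conditions, similar (0) within."""
    labels = np.r_[np.ones(n_high), np.zeros(n_low)]
    return (labels[:, None] != labels[None, :]).astype(float)


def dispersion_model(n_high=N_HIGH, n_low=N_LOW):
    """Model RDM: high memorable images dispersed (1), low memorable
    images tightly clustered (0). The intermediate value 0.5 for
    between-condition cells is an assumption of this sketch."""
    n = n_high + n_low
    rdm = np.full((n, n), 0.5)
    rdm[:n_high, :n_high] = 1.0
    rdm[n_high:, n_high:] = 0.0
    np.fill_diagonal(rdm, 0.0)
    return rdm


def rdm_correlation(meg_rdm, model_rdm):
    """Spearman's rho over the upper triangle (diagonal excluded)."""
    iu = np.triu_indices_from(meg_rdm, k=1)
    rho, _ = spearmanr(meg_rdm[iu], model_rdm[iu])
    return rho


# Synthetic MEG RDM at one time point that follows the dispersion geometry.
rng = np.random.default_rng(0)
noise = rng.normal(scale=0.05, size=(30, 30))
noise = (noise + noise.T) / 2  # keep the matrix symmetric
fake_meg_rdm = 0.5 + 0.1 * dispersion_model() + noise

rho_dispersion = rdm_correlation(fake_meg_rdm, dispersion_model())
rho_categorical = rdm_correlation(fake_meg_rdm, categorical_model())
```

Repeating `rdm_correlation` at every time point would give one correlation time series per model, analogous to the gray curves in Fig. 2B,C.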

Figure 2.

Multivariate pattern analysis and geometrical representation of memorability across time. (A) Using MEG pattern vectors at each time point t, a support vector machine (SVM) classifier was trained to discriminate pairs of target scene images. The performance of the SVM classifier in pairwise decoding of target images populated a 30 × 30 decoding matrix at each time point t. This process resulted in time resolved representational dissimilarity matrices for MEG data which can then be compared with candidate model RDMs by computing their Spearman’s rho correlations. (B,C) Two possible representational geometries of memorability and their comparison with MEG data. The RDM and MDS plot in panel B show a categorical representation in which high and low memorable images are linearly separable. The RDM and MDS plot in panel C illustrate a representational geometry where high memorable images are more dispersed than low memorable ones. The gray curves in (B) and (C) depict the time course of MEG and model RDM correlations. The line below the curve in panel C indicates significant time points when the correlation is above zero. Statistical tests used a cluster-size permutation procedure with cluster defining threshold P < 0.05, and corrected significance level P < 0.05 (n = 15).

The lack of categorical separability in our representational geometry implies that the classical between-categorical decoding analysis is not well suited to describe the distinction between these two conditions. Instead, we averaged the decoding values (dissimilarities) within high and low memorable scene pairs separately. Figure 3 shows the two curves for high and low memorable scenes across time in red and blue, respectively. Decoding accuracy was near identical for high and low memorable scenes until 149 ms, at which point the two curves diverged significantly, revealing the onset of a memorability-specific signal, which lasted until 228 ms consistent with the results in Fig. 2C.
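The within-condition averaging described here amounts to taking the mean of the upper-triangle entries of each diagonal block of the RDM. The sketch below assumes that the first 15 rows and columns correspond to high memorable images; this ordering, and the toy values, are chosen only for illustration.

```python
import numpy as np


def within_condition_means(rdm, n_high=15):
    """Average pairwise decoding within high and within low memorable scenes.

    Assumes rows/columns 0..n_high-1 are high memorable images and the
    remaining ones are low memorable (an ordering chosen for this sketch).
    """
    high = rdm[:n_high, :n_high]
    low = rdm[n_high:, n_high:]
    high_mean = high[np.triu_indices_from(high, k=1)].mean()
    low_mean = low[np.triu_indices_from(low, k=1)].mean()
    return high_mean, low_mean


# Toy 30 x 30 decoding matrix (accuracies in %) at a single time point.
rdm = np.full((30, 30), 55.0)
rdm[:15, :15] = 60.0   # pairs of high memorable scenes
rdm[15:, 15:] = 52.0   # pairs of low memorable scenes
np.fill_diagonal(rdm, 0.0)

high_mean, low_mean = within_condition_means(rdm)
```

Each block contributes 15 × 14 / 2 = 105 image pairs, so each curve in Fig. 3 is an average over 105 pairwise decoding values per time point.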

Figure 3.

Time course of image decoding for high versus low memorable images. The pairwise decoding values were averaged within high and low memorable images separately. The color coded red and blue lines at the bottom of curves show significant time points where the decoding is above the chance level of 50%. The orange line indicates significant time points for the difference between high and low memorability. Statistical tests used a cluster-size permutation procedure with cluster defining threshold P < 0.05, and corrected significance level P < 0.05 (n = 15).

Together, our analysis demonstrates that the two categories of high and low memorable images are not linearly separable, but that high memorable images show a more distributed geometrical representation than low memorable images. This suggests that memorable stimuli are associated with higher differentiability and unique information, as illustrated by higher averaged decodings within high memorable scene pairs than low memorable scene pairs (Fig. 3).

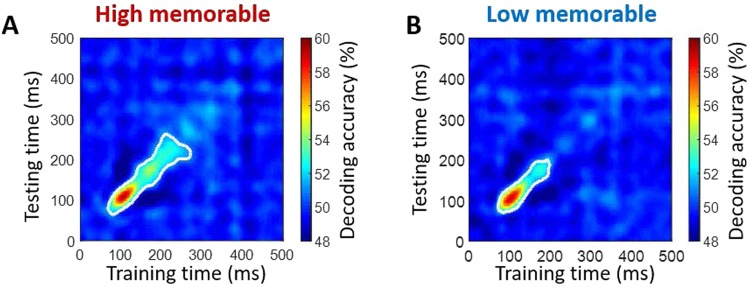

Temporal generalization reveals evolutionary dynamics for memorable images

The significant persistence of memorable images from 149–228 ms (cluster defining threshold p < 0.05; corrected significance level p < 0.05) suggests this class of stimuli benefited from prolonged temporal processing. This extended processing could manifest as either a stable representation sustained over time (i.e., a form of neural maintenance) or a series of distinct representations dynamically evolving over time. To investigate, we applied a temporal generalization approach24 which uses the trained SVM classifier on MEG data at a given time point t (training time) to test on data at all other time points t’ (testing time). Intuitively, if neural representations are sustained across time, the classifier should generalize well across other time points. The resulting matrices (Fig. 4A,B), in which each row corresponds to the time (in ms) at which the classifier was trained and each column to the time at which it was tested, reveal that both conditions show a diagonally extended sequence of activation patterns starting at ~70 ms. This shape of significant time points suggests that the representations of both conditions dynamically evolved over time. Importantly, the greater diagonal reach of the high memorable condition suggests further processing during this evolutionary chain.

Figure 4.

Temporal generalization. (A,B) Generalization of image decoding across time for high and low memorable images. The trained SVM classifier on MEG data at a given time point t (training time) was tested on data at all other time points t’ (testing time). The resulting decoding matrices were averaged within high or low memorable scene images and over all subjects. White contour indicates significant decoding values. Statistical tests used a cluster-size permutation procedure using cluster defining threshold P < 0.05, and corrected significance level P < 0.05 (n = 15).

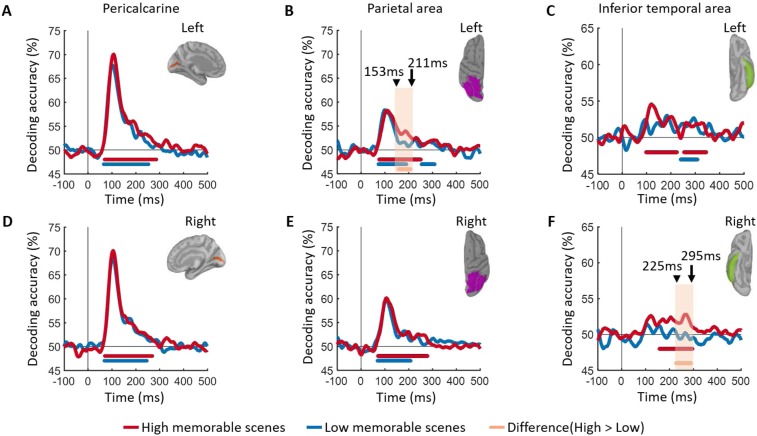

Cortical source of image memorability

How do the changing patterns of brain activity map onto brain regions? Using MEG source localization, we investigated where the memorability-specific effects manifested in the visual stream. We broadly targeted three anatomically defined regions-of-interest (ROI) known to be involved in building visual image representation. Based on Freesurfer automatic segmentation25, we selected the pericalcarine area for early visual processing, the inferior temporal area for ventral stream processing and a parietal area for dorsal stream processing. We successfully localized the MEG signals in the selected regions (Fig. S1). Within these ROIs we performed the same pairwise decoding analysis as for the sensor data but now using cortical source time series within these regions. Decoding results suggest that memorability recruited distinct brain regions evolving over time: as expected, no significant differences were seen in the pericalcarine (Fig. 5A,D), while significant differences between high- and low-memorable images were localized in the left parietal area starting at 153 ms (Fig. 5B) and then later in the right inferior-temporal area around 225 ms (Fig. 5F). A conventional evoked response analysis is shown in Fig. S2 of Supplementary Information. While conventional evoked response analysis fails to pick up the subtle memorability effect, multivariate pattern analysis proves to be a successful method for analyzing and interpreting MEG data.

Figure 5.

Spatial localization. (A–F) Time course of image decoding in cortical sources. RDM matrices were extracted at each time point t using MEG source localization in pericalcarine, parietal area and inferior temporal, separately for left and right. The color coded red and blue lines at the bottom of curves show the significant time points where the decoding is above chance level of 50%. The orange line indicates significant time points for the difference between high and low memorability. All significant statistical tests are with permutation tests using cluster defining threshold P < 0.05, and corrected significance level P < 0.05 (n = 15).

Discussion

In the current study, we examined the temporal processing signature of visual information that is likely to be remembered compared with information that is likely to be forgotten. We tested this effect using high-temporal resolution MEG during an RSVP task to suppress the effects of top-down influences of memory. Our results revealed the dynamic neural blueprint of the perceptual memorability effect, with highly memorable images showing significantly better decoding accuracies between ~150–230 msec. Despite the extremely rapid viewing conditions, this signal persisted and evolved over multiple brain regions and timescales associated with high-level visual processing (e.g. semantic category or identity information).

A variety of neuroimaging and recording techniques have demonstrated that the cortical timescale of visual perception begins with low level features in early visual cortex at ~40–100 msec24–29 and reaches the highest stages of processing in the inferior temporal cortex within 200 msec after stimulus onset22,23,30–38. Despite memorability being a perceptual phenomenon, previous work has revealed that low-level image features, as well as non-semantic object statistics, do not correlate strongly with memorability scores2, thus these features were equalized between conditions here, resulting in the overlapping curves within the first 150 msec after image onset (Fig. 3) and the lack of any memorability effects in the pericalcarine ROI (Fig. 5A,D).

Event-related potentials (ERPs) have linked high-level properties of the visual stimulus, such as its identity or category, with a timescale roughly 150 msec after stimulus onset32. More recently, MEG work has demonstrated a similar pattern of results22,23,39,40. While the current image set also controlled for high-level semantics between conditions (for every high memorable stimulus, there was a matching low memorable stimulus of the same semantic category), the memorability effect persisted during this period such that the decoding rate for memorable images did not drop as drastically, but instead persisted for an additional ~100 msec (see Fig. 3). This suggests that the timescale of the memorability effect (~150–230 msec) is reflective of a processing advantage for high level perceptual features.

How does this persistent high-level signal reflect the processing advantages of memorable images? To address this question, we examined the temporal generalization of the MEG data to reveal how the sequence of the memorability signals manifests over time. While the task-irrelevance and rapid masking in the current design inhibited the stimulus representations from reaching deep levels of encoding and manifesting into memory, recent temporal generalization work suggests that unseen stimuli are still actively maintained in neuronal activity over time, with an early signal representing the evolution of perception across the visual hierarchy (diagonal pattern) and a later signal generalizing over time (square pattern) as a function of the subjective visibility of the stimulus41.

Given that high memorable images are quickly perceived and understood9, we might have expected the memorable representations to stabilize more rapidly in the brain (as evidenced by a square generalization matrix), potentially reflecting a more durable maintenance of information across time. However, in both the high and low memorable conditions the generalization matrices were dominated by a diagonal pattern, commonly associated with a long sequence of neural responses reflecting the hierarchical processing stages of perception24. Importantly, the high memorable representations demonstrated a significant diagonal extension over the low memorable condition (Fig. 4), suggesting greater evolution of perception during this processing chain. Thus, the prolonged diagonal shape suggests that rather than manifest into memories under the current rapid viewing conditions, the representations of these memorable images were strong enough to take one step further down the perceptual processing pathway, perhaps readying themselves for a reliable transition into long term storage.

The evolution of signal observed in the diagonal processing chain raises the question of which brain regions are responsible for this perceptual advantage. We performed an ROI based cortical source localization to evaluate the contributions of several regions previously associated with perceptual processing. Results revealed that memorability was localized to several high-level brain regions evolving over time, with the left parietal region recruited first, followed by the right IT cortex and, as predicted, no significant difference observed in the pericalcarine. While hemispheric laterality effects may be a reflection of low signal-to-noise ratio, our results implicate high-level visual cortex in both the dorsal and ventral streams, and not early visual areas, in memorability effects.

The significantly better decoding rate of high-memorable images in these brain regions indicates that their cortical representations reflect a more concrete embodiment of the stimulus compared to those of low-memorability. However, this strong representation is still evolving over time and space as it moves from one high-level perceptual region to another. Both the inferior-temporal region and areas encompassed by the parietal cortex have been previously associated with the perception of shape, objects, faces and scenes and other high-level visual features42–48. Thus, when these regions are recruited during natural viewing, memorable images seem to carry a hidden advantage in the form of a kind of processing fluency49.

While the memorability of a stimulus plays a key role in influencing future memories, we acknowledge that it cannot be fully explained by image attributes2,4 or attentional conditions50. Yet this effect is quite strong. Even explicitly attempting to avoid forming a memory still results in memorability effects on later retrieval8. The design of the current experiment emphasized memorability as a perceptual phenomenon and thus limits our ability to explicitly draw memory related conclusions. Future work should examine the full timeline of memorability in the brain; from encoding, to storage to recognition. Only then will we have the full picture of the influence of memorability on memory formation.

While we acknowledge the coarse spatial resolution of MEG data, previous works51–55 have frequently used MEG source localization in the regions studied here to localize cognitive effects. While we successfully localized memorability with MEG in IT and parietal cortex, given the limited spatial resolution of MEG, future work should also focus on linking higher spatial resolution brain data to the current timescale. The ability to reliably trace the memorability signal over space and time has many practical advantages, such as the early detection of perception or memory impairments in clinical populations (weaker or slower representations)56 and the design and establishment of more memorable educational tools for improved implicit visual literacy.

As the medium of knowledge communication continues to evolve, visual literacy (the skill to interpret, negotiate, and make meaning from information presented in the form of an image) has become increasingly important. The ability of some images to be quickly understood and stick in our minds provides a powerful tool in the study of neural processing advantages leading to superior visual understanding. The high temporal-resolution results reported here provide new insights into the enduring strength of perceptual representations, pinpointing a high-level perceptual property that is quickly encoded.

Methods

Participants

Fifteen healthy right-handed human subjects (12 female; age mean ± s.d. 23.8 ± 5.7 years) with normal or corrected to normal vision participated in this experiment after signing an informed written consent form. They all received payment as a compensation for their participation. The study was approved by the Institutional Review Board of the Massachusetts Institute of Technology and conducted in agreement with the principles of the Declaration of Helsinki.

Experimental Design and Stimulus set

Stimulus set

The stimulus set comprised 60 target images (30 faces and 30 scenes) and 150 distractor images of scenes. The scene images (task irrelevant targets and distractors) were selected from a large memorability image dataset called LaMem17. The 30 scenes comprised 15 high memorable and 15 low memorable images controlled for low level features (color, luminance, brightness, and spatial frequency) using the natural image statistical toolbox57,58 (see Supplementary Information). The face target images were selected from the 10 K USA Adult Faces3.

RSVP paradigm

Participants viewed RSVP sequences of 11 images, each presented for 34 ms without inter stimulus interval, in separate trials (Fig. 1). The middle image and the 10 distractor images were randomly sampled from the set of 60 target images and the set of 150 distractor images, respectively. The image sequence was presented at the center of the screen on a gray background with 2.9° of visual angle. Each trial started with a 500 ms baseline time followed by the RSVP sequence and then a blank screen which was presented for 700–1000 ms with uniform distribution. The blank screen aimed to delay the response and thus prevent motor artifacts in the data. At the end of each trial, the subjects were prompted with a question to report whether they had seen a face image in the sequence or not, and they responded with their right thumb using an MEG-compatible response button box. The experiment included 30 trials for each of the target images, and trials were randomly ordered and presented in 12 blocks of 150 trials. In order to prevent eye movement artifacts, participants were instructed to fixate on a black cross at the screen center and only blink when pressing the button to respond. The subjects did not see the target images or distractors before the experiment.
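The trial structure above can be checked with a short sketch that assembles the full trial list. The integer image identifiers are placeholders, and drawing the 10 distractors without replacement within a sequence is an assumption of this sketch; the text only states that distractors were randomly sampled from the set of 150.

```python
import random

N_TARGETS = 60            # 30 faces + 30 scenes
N_DISTRACTORS = 150
REPEATS_PER_TARGET = 30
SEQ_LEN = 11              # images per RSVP sequence
FRAME_MS = 34             # presentation time per image
N_BLOCKS = 12


def build_trials(seed=0):
    """Return a shuffled list of RSVP sequences with the target in the middle."""
    rng = random.Random(seed)
    targets = [t for t in range(N_TARGETS) for _ in range(REPEATS_PER_TARGET)]
    rng.shuffle(targets)
    trials = []
    for target in targets:
        # Assumption: distractors are unique within one sequence.
        distractors = rng.sample(range(N_DISTRACTORS), SEQ_LEN - 1)
        sequence = [("distractor", d) for d in distractors]
        sequence.insert(SEQ_LEN // 2, ("target", target))
        trials.append(sequence)
    return trials


trials = build_trials()
trials_per_block = len(trials) // N_BLOCKS       # 1800 / 12 = 150
sequence_ms = SEQ_LEN * FRAME_MS                 # 11 * 34 = 374 ms
target_onset_ms = (SEQ_LEN // 2) * FRAME_MS      # 5 * 34 = 170 ms into the sequence
```

With 60 targets repeated 30 times each, the design yields 1800 trials, which matches 12 blocks of 150 trials; each target is preceded and followed by five masking images.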

Subsequent memory test

To determine whether the target images (middle images in each sequence) were encoded in memory, we asked the subjects to perform an unanticipated memory test after the MEG experiment. They were presented with 120 images, the 60 RSVP target images randomly mixed with 60 novel images (30 faces and 30 scenes matched in terms of low level features and semantics with the target images), and were asked to report whether they had seen each image during the experiment on a 4-level scale, with 1 being a confidently novel image and 4 being a confidently seen image.

MEG acquisition and preprocessing

MEG data was collected using a 306-channel Elekta Triux system with the sampling rate of 1000 Hz and a band-pass filter with cut-off frequencies of 0.03 and 330 Hz. We measured the head position prior to the MEG recording using 5 head position indicator coils attached to the subjects’ head. The head position was also recorded continuously during the experiment.

Maxfilter software was applied to the acquired MEG data for head movement compensation and denoising using spatiotemporal filters59,60. Then Brainstorm software61 was used to extract trials from 100 ms before to 500 ms after target image onset and preprocess the data. We removed the baseline mean of each sensor and smoothed the data with a low-pass filter of 20 Hz. Trials with amplitude greater than 6000 fT (or fT/cm) were marked as bad trials. Eye blink/movement artifacts were detected using frontal sensor MEG data and then principal component analysis was applied to remove these artifacts from the MEG data. The participants were asked to withhold their eye blinks and only blink when they provided delayed button press responses. Furthermore, a fixation cross was constantly displayed at the center of the screen to prevent eye movements. Given this experimental design, we lost less than 5% of trials per subject due to these artifacts.
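The epoch-level steps (baseline removal, 20 Hz low-pass filtering and amplitude-based rejection) can be sketched with NumPy and SciPy as below. This is not the Brainstorm pipeline used in the study: the zero-phase fourth-order Butterworth filter, the use of the maximum absolute amplitude as the rejection criterion, and the application of rejection after filtering are assumptions of this sketch, and the data are simulated.

```python
import numpy as np
from scipy.signal import butter, filtfilt

FS = 1000  # sampling rate in Hz, as reported for the acquisition


def preprocess_epoch(epoch_ft, times_ms, lowpass_hz=20.0, reject_ft=6000.0):
    """Baseline-correct, low-pass filter and flag one epoch.

    epoch_ft: (n_sensors, n_times) array in fT.
    Returns the filtered epoch and a flag marking it as a bad trial.
    """
    # Remove the mean of the pre-stimulus baseline for each sensor.
    baseline = epoch_ft[:, times_ms < 0].mean(axis=1, keepdims=True)
    demeaned = epoch_ft - baseline
    # Assumption: 4th-order Butterworth filter applied forward and backward.
    b, a = butter(4, lowpass_hz, btype="low", fs=FS)
    smoothed = filtfilt(b, a, demeaned, axis=1)
    # Assumption: a trial is bad if any sample exceeds the threshold.
    is_bad = bool(np.abs(smoothed).max() > reject_ft)
    return smoothed, is_bad


# Simulated epochs from -100 to 499 ms for 306 sensors.
times_ms = np.arange(-100, 500)
rng = np.random.default_rng(0)
clean = 200.0 + rng.normal(scale=50.0, size=(306, times_ms.size))
noisy = clean.copy()
noisy[0, 300:] += 20000.0  # large jump on one sensor

clean_out, clean_bad = preprocess_epoch(clean, times_ms)
_, noisy_bad = preprocess_epoch(noisy, times_ms)
```

In this simulation the clean epoch is kept and the epoch containing the sensor jump is flagged as a bad trial.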

MEG multivariate pattern analysis

Sensor space

We analyzed MEG data using multivariate pattern analysis. To decode information about the task irrelevant target stimuli, a linear support vector machine (SVM, libsvm implementation62) was used as a classifier. In order to reduce computational load, the MEG trials of each condition were sub-averaged in groups of 5 with random assignment, resulting in N = 6 trials per condition. At each time point t of each trial, the MEG data was arranged in a vector of 306 elements. Then, for each pair of high or low memorability scene images (middle scenes in the RSVP sequence) and at each time point, the accuracy of the SVM classifier was calculated using a leave-one-out procedure. The procedure of sub-averaging followed by cross-validation was repeated 100 times. The classifier accuracies were averaged over the repetitions separately for pairs of high or low memorability scene images.
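A minimal sketch of the pairwise decoding at a single time point is given below, using scikit-learn's `SVC`, which is built on libsvm. It is an illustration under stated assumptions rather than the authors' implementation: one sub-averaged trial per image is held out on each fold, the regularization parameter is left at its default, and the data are simulated.

```python
import numpy as np
from sklearn.svm import SVC


def sub_average(trials, group_size, rng):
    """Randomly assign trials to groups of `group_size` and average each group."""
    order = rng.permutation(len(trials))
    n_groups = len(trials) // group_size
    groups = order[: n_groups * group_size].reshape(n_groups, group_size)
    return trials[groups].mean(axis=1)  # (n_groups, n_sensors)


def pairwise_decoding(trials_a, trials_b, n_repeats=100, group_size=5, seed=0):
    """Leave-one-out accuracy (%) for two images at one time point.

    trials_a, trials_b: (n_trials, n_sensors) sensor patterns per image.
    """
    rng = np.random.default_rng(seed)
    accuracies = []
    for _ in range(n_repeats):
        a = sub_average(trials_a, group_size, rng)
        b = sub_average(trials_b, group_size, rng)
        n = min(len(a), len(b))
        correct = 0
        for held_out in range(n):
            # Assumption: hold out one sub-averaged trial per image.
            keep = np.arange(n) != held_out
            X_train = np.vstack([a[:n][keep], b[:n][keep]])
            y_train = np.r_[np.zeros(n - 1), np.ones(n - 1)]
            clf = SVC(kernel="linear").fit(X_train, y_train)
            X_test = np.vstack([a[held_out], b[held_out]])
            correct += int((clf.predict(X_test) == np.array([0, 1])).sum())
        accuracies.append(100.0 * correct / (2 * n))
    return float(np.mean(accuracies))


# Simulated data: 30 trials x 306 sensors per image.
rng = np.random.default_rng(1)
image_a = rng.normal(size=(30, 306))
image_b = rng.normal(size=(30, 306)) + 0.5   # distinct sensor pattern
same_as_a = rng.normal(size=(30, 306))       # no systematic difference

acc_different = pairwise_decoding(image_a, image_b, n_repeats=10)
acc_same = pairwise_decoding(image_a, same_as_a, n_repeats=10)
```

With 30 trials per image and groups of 5, each repetition yields 6 sub-averaged trials per image, as in the text. Running this for every image pair and time point would populate the time-resolved 30 × 30 decoding matrices.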

Source space

To localize representational information on regions of interest (ROIs), we mapped MEG signals on cortical sources (based on Freesurfer automatic segmentation25 using default anatomy63) and performed multivariate pattern analysis on each ROI. We computed the forward model using an overlapping spheres model64 and then using a dynamic statistical parametric mapping approach (dSPM) MEG signals were mapped on the cortex65. Time series from cortical sources within three regions of interest, namely, pericalcarine, inferior temporal and parietal area (concatenating inferior parietal and superior parietal) were derived66. We chose pericalcarine as a control region which processes low-level features. Because we controlled our stimulus set for these features (Tables S1 and S2), we expected no significant difference in decoding of MEG sources in this region. Since our stimuli are scene images containing objects of various categories (see Supplementary Information), we investigated parietal cortex, known to be involved in scene perception47,48 and inferior temporal cortex, known to be involved in object category perception22,36,51. In each cortical region of interest, pattern vectors were created by concatenating ROI-specific source activation values, and then a similar multivariate pattern analysis was applied to the patterns of each ROI.

Temporal generalization with multivariate pattern analysis

To compare the stability of neural dynamics of high and low memorable images, we studied the temporal generalization of their representations22–24,67–69 by extending the SVM classification procedure. The SVM classifier trained at a given time point t was tested on data at all other time points. The classifier performance in discriminating signals can be generalized to time points with shared representations. This temporal generalization analysis was performed on every pair of images and for each subject. Then averaging within high (or low) memorable images and across subjects resulted in a 2D matrix where the x-axis corresponded to training time and y-axis to testing time.
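The temporal generalization procedure can be sketched as a double loop over training and testing times. The simulated data, the use of independent training and testing sets in place of the cross-validation scheme, and the convention that rows index training time are assumptions of this sketch.

```python
import numpy as np
from sklearn.svm import SVC


def temporal_generalization(X_train, y_train, X_test, y_test):
    """Train at each time t and test at every time t'.

    X_*: (n_trials, n_sensors, n_times). Returns an (n_times, n_times)
    accuracy matrix in %, with rows = training time, columns = testing time.
    """
    n_times = X_train.shape[2]
    acc = np.zeros((n_times, n_times))
    for t_train in range(n_times):
        clf = SVC(kernel="linear").fit(X_train[:, :, t_train], y_train)
        for t_test in range(n_times):
            acc[t_train, t_test] = 100.0 * clf.score(X_test[:, :, t_test], y_test)
    return acc


# Simulation: the class difference lives on sensors 0-9 at early time points
# and on sensors 10-19 at late time points (an evolving representation).
rng = np.random.default_rng(0)
n_trials, n_sensors, n_times = 40, 20, 10
labels = np.repeat([0, 1], n_trials // 2)


def simulate():
    X = rng.normal(scale=0.2, size=(n_trials, n_sensors, n_times))
    X[labels == 1, :10, :5] += 1.0   # early pattern
    X[labels == 1, 10:, 5:] += 1.0   # late pattern
    return X


gen = temporal_generalization(simulate(), labels, simulate(), labels)
early_to_early = gen[:5, :5].mean()
early_to_late = gen[:5, 5:].mean()
```

In this simulation a classifier trained on the early pattern generalizes within the early window but falls to about chance in the late window. A representation that changed at every time point would restrict high accuracy to the diagonal, whereas a sustained representation would fill a square region.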

Statistical inference

We used nonparametric statistical tests, which do not assume any distribution of the data70,71 and have been widely used for MEG data statistical tests65,68,72–74. Our statistical inference on decoding time series and temporal generalization matrices was performed by permutation-based cluster-size inference (1000 permutations, 0.05 cluster definition threshold and 0.05 cluster threshold) with a null hypothesis of 50% chance level. For the difference of decoding time series we used 0 as chance level. We performed bootstrap tests to assess statistics for the peak latency of time series. Specifically, we bootstrapped subject-specific time series 1000 times; each time we averaged the time series and found its peak latency, and finally we used the empirical distribution of peak latencies to assess the 95% confidence intervals.
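A cluster-size permutation test on a decoding time series can be sketched as follows. Several details are assumptions of this sketch and are not specified in the text: the null distribution is built by randomly flipping the sign of each subject's chance-centered time series, a one-sided t-statistic defines the clusters, and the data are simulated.

```python
import numpy as np
from scipy import stats


def true_runs(mask):
    """Return (start, stop) index pairs for each run of True values in a 1D mask."""
    padded = np.r_[False, mask, False].astype(int)
    edges = np.diff(padded)
    return list(zip(np.flatnonzero(edges == 1), np.flatnonzero(edges == -1)))


def cluster_permutation_test(data, chance=50.0, n_perm=1000,
                             cluster_def_p=0.05, cluster_p=0.05, seed=0):
    """One-sided cluster-size test of data > chance.

    data: (n_subjects, n_times) decoding accuracies.
    Returns a boolean mask of significant time points.
    """
    rng = np.random.default_rng(seed)
    centered = data - chance
    n_sub = centered.shape[0]
    # Cluster-defining threshold expressed as a critical t value (assumption).
    t_crit = stats.t.ppf(1 - cluster_def_p, df=n_sub - 1)

    def t_values(x):
        return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(n_sub))

    def max_cluster(x):
        runs = true_runs(t_values(x) > t_crit)
        return max((stop - start for start, stop in runs), default=0)

    # Null distribution of the maximum cluster size under random sign flips.
    null = np.array([
        max_cluster(centered * rng.choice([-1.0, 1.0], size=(n_sub, 1)))
        for _ in range(n_perm)
    ])
    size_threshold = np.quantile(null, 1 - cluster_p)

    significant = np.zeros(centered.shape[1], dtype=bool)
    for start, stop in true_runs(t_values(centered) > t_crit):
        if stop - start > size_threshold:
            significant[start:stop] = True
    return significant


# Simulated decoding accuracies for 15 subjects with an effect at samples 80-119.
rng = np.random.default_rng(42)
data = 50.0 + rng.normal(scale=2.0, size=(15, 200))
data[:, 80:120] += 4.0
sig = cluster_permutation_test(data, n_perm=200)
```

For the difference between high and low memorable decoding curves, the same function could be called on the per-subject difference time series with `chance=0.0`.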

Acknowledgements

This work was funded by NSF award 1532591 in Neural and Cognitive Systems (to D.P. and A.O.) and the Vannevar Bush Faculty Fellowship program by the ONR to A.O. (N00014-16-1-3116). The study was conducted at the Athinoula A. Martinos Imaging Center at the McGovern Institute for Brain Research, Massachusetts Institute of Technology.

Author Contributions

Y.M., A.O. and D.P. designed the experiment. Y.M. conducted the experiment and analyzed the data. Y.M., C.M., A.O. and D.P. interpreted the results and wrote the manuscript.

Data Availability

The data and analysis tools used in the current study are available from the corresponding authors upon request.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Yalda Mohsenzadeh, Email: yalda@mit.edu.

Dimitrios Pantazis, Email: pantazis@mit.edu.

Supplementary information

Supplementary information accompanies this paper at 10.1038/s41598-019-42429-x.

References

- 1. Standing L. Learning 10000 pictures. Quarterly Journal of Experimental Psychology. 1973;25:207–222. doi: 10.1080/14640747308400340.

- 2. Isola P, Parikh D, Torralba A, Oliva A. Understanding the Intrinsic Memorability of Images. Advances in Neural Information Processing Systems. 2011;24:2429–2437.

- 3. Bainbridge WA, Isola P, Oliva A. The intrinsic memorability of face photographs. Journal of Experimental Psychology: General. 2013;142:1323–1334. doi: 10.1037/a0033872.

- 4. Bylinskii Z, Isola P, Bainbridge C, Torralba A, Oliva A. Intrinsic and extrinsic effects on image memorability. Vision Research. 2015;116:165–178. doi: 10.1016/j.visres.2015.03.005.

- 5. Goetschalckx L, Moors P, Wagemans J. Image memorability across longer time intervals. Memory. 2018;26:581–588. doi: 10.1080/09658211.2017.1383435.

- 6. Isola P, Xiao J, Parikh D, Torralba A, Oliva A. What Makes a Photograph Memorable? IEEE Transactions on Pattern Analysis and Machine Intelligence. 2014;36:1469–1482. doi: 10.1109/TPAMI.2013.200.

- 7. Bainbridge WA, Dilks DD, Oliva A. Memorability: A stimulus-driven perceptual neural signature distinctive from memory. NeuroImage. 2017;149:141–152. doi: 10.1016/j.neuroimage.2017.01.063.

- 8. Bainbridge WA, Rissman J. Dissociating neural markers of stimulus memorability and subjective recognition during episodic retrieval. Scientific Reports. 2018;8:1–11. doi: 10.1038/s41598-018-26467-5.

- 9. Broers N, Potter MC, Nieuwenstein MR. Enhanced recognition of memorable pictures in ultra-fast RSVP. Psychonomic Bulletin & Review. 2018;25:1080–1086. doi: 10.3758/s13423-017-1295-7.

- 10. Le-Hoa Võ, M., Bylinskii, Z. & Oliva, A. Image Memorability In The Eye Of The Beholder: Tracking The Decay Of Visual Scene Representations. doi: 10.1101/141044 (2017).

- 11. Hupé JM, et al. Cortical feedback improves discrimination between figure and background by V1, V2 and V3 neurons. Nature. 1998;394:784–787. doi: 10.1038/29537.

- 12. Lamme VA, Supèr H, Spekreijse H. Feedforward, horizontal, and feedback processing in the visual cortex. Current Opinion in Neurobiology. 1998;8:529–535. doi: 10.1016/S0959-4388(98)80042-1.

- 13. Bullier J. Feedback connections and conscious vision. Trends in Cognitive Sciences. 2001;5:369–370. doi: 10.1016/S1364-6613(00)01730-7.

- 14. Lamme VAF, Roelfsema PR. The distinct modes of vision offered by feedforward and recurrent processing. Trends in Neurosciences. 2000;23:571–579. doi: 10.1016/S0166-2236(00)01657-X.

- 15. Pascual-Leone A, Walsh V. Fast Backprojections from the Motion to the Primary Visual Area Necessary for Visual Awareness. Science. 2001;292:510–512. doi: 10.1126/science.1057099.

- 16. Keysers C, Perrett DI. Visual masking and RSVP reveal neural competition. Trends in Cognitive Sciences. 2002;6:120–125. doi: 10.1016/S1364-6613(00)01852-0.

- 17. Khosla, A., Raju, A. S., Torralba, A. & Oliva, A. Understanding and Predicting Image Memorability at a Large Scale. In 2015 IEEE International Conference on Computer Vision (ICCV) 2390–2398, doi: 10.1109/ICCV.2015.275 (IEEE, 2015).

- 18. Potter MC. Short-Term Conceptual Memory for Pictures. Journal of Experimental Psychology: Human Learning and Memory. 1976;2:509–522.

- 19. Potter MC, Wyble B, Hagmann CE, McCourt ES. Detecting meaning in RSVP at 13 ms per picture. Attention, Perception, & Psychophysics. 2014;76:270–279. doi: 10.3758/s13414-013-0605-z.

- 20. Kriegeskorte N, et al. Matching Categorical Object Representations in Inferior Temporal Cortex of Man and Monkey. Neuron. 2008;60:1126–1141. doi: 10.1016/j.neuron.2008.10.043.

- 21. Kriegeskorte, N. Representational similarity analysis – connecting the branches of systems neuroscience. Frontiers in Systems Neuroscience, doi: 10.3389/neuro.06.004.2008 (2008).

- 22. Cichy RM, Pantazis D, Oliva A. Resolving human object recognition in space and time. Nature Neuroscience. 2014;17:455–462. doi: 10.1038/nn.3635.

- 23. Mohsenzadeh Y, Qin S, Cichy RM, Pantazis D. Ultra-Rapid serial visual presentation reveals dynamics of feedforward and feedback processes in the ventral visual pathway. eLife. 2018;7:1–23. doi: 10.7554/eLife.36329.

- 24. King J-R, Dehaene S. Characterizing the dynamics of mental representations: the temporal generalization method. Trends in Cognitive Sciences. 2014;18:203–210. doi: 10.1016/j.tics.2014.01.002.

- 25. Fischl B, et al. Sequence-independent segmentation of magnetic resonance images. NeuroImage. 2004;23:S69–S84. doi: 10.1016/j.neuroimage.2004.07.016.

- 26. Jeffreys, D. A. & Axford, J. G. Source locations of pattern-specific components of human visual evoked potentials. I. Component of striate cortical origin. Experimental Brain Research 16 (1972).

- 27. Estevez, O. & Spekreijse, H. Relationship between pattern appearance-disappearance and pattern reversal responses. Experimental Brain Research 19 (1974).

- 28. Clark VP, Hillyard SA. Spatial Selective Attention Affects Early Extrastriate But Not Striate Components of the Visual Evoked Potential. Journal of Cognitive Neuroscience. 1996;8:387–402. doi: 10.1162/jocn.1996.8.5.387.

- 29. Woodman GF. A brief introduction to the use of event-related potentials in studies of perception and attention. Attention, Perception & Psychophysics. 2010;72:2031–2046. doi: 10.3758/BF03196680.

- 30. Wyatte, D., Jilk, D. J. & O’Reilly, R. C. Early recurrent feedback facilitates visual object recognition under challenging conditions. Frontiers in Psychology 5 (2014).

- 31. Thorpe, S., Fize, D. & Marlot, C. Speed of processing in the human visual system. Nature 520–522 (1996).

- 32. VanRullen R, Thorpe SJ. The Time Course of Visual Processing: From Early Perception to Decision-Making. Journal of Cognitive Neuroscience. 2001;13:454–461. doi: 10.1162/08989290152001880.

- 33. Hung CP. Fast Readout of Object Identity from Macaque Inferior Temporal Cortex. Science. 2005;310:863–866. doi: 10.1126/science.1117593.

- 34. Mormann F, et al. Latency and Selectivity of Single Neurons Indicate Hierarchical Processing in the Human Medial Temporal Lobe. Journal of Neuroscience. 2008;28:8865–8872. doi: 10.1523/JNEUROSCI.1640-08.2008.

- 35. Liu H, Agam Y, Madsen JR, Kreiman G. Timing, Timing, Timing: Fast Decoding of Object Information from Intracranial Field Potentials in Human Visual Cortex. Neuron. 2009;62:281–290. doi: 10.1016/j.neuron.2009.02.025.

- 36. DiCarlo JJ, Zoccolan D, Rust NC. How Does the Brain Solve Visual Object Recognition? Neuron. 2012;73:415–434. doi: 10.1016/j.neuron.2012.01.010.

- 37. Dima DC, Perry G, Singh KD. Spatial frequency supports the emergence of categorical representations in visual cortex during natural scene perception. NeuroImage. 2018;179:102–116. doi: 10.1016/j.neuroimage.2018.06.033.

- 38. Cichy RM, Pantazis D, Oliva A. Similarity-Based Fusion of MEG and fMRI Reveals Spatio-Temporal Dynamics in Human Cortex During Visual Object Recognition. Cerebral Cortex. 2016;26:3563–3579. doi: 10.1093/cercor/bhw135.

- 39. Mohsenzadeh, Y., Mullin, C., Lahner, B., Cichy, R. M. & Oliva, A. Reliability and Generalizability of Similarity-Based Fusion of MEG and fMRI Data in Human Ventral and Dorsal Visual Streams. BioRxiv 1–21, doi: 10.1101/451526 (2018).

- 40. Halgren E. Cognitive Response Profile of the Human Fusiform Face Area as Determined by MEG. Cerebral Cortex. 2000;10:69–81. doi: 10.1093/cercor/10.1.69.

- 41. King J-R, Pescetelli N, Dehaene S. Brain Mechanisms Underlying the Brief Maintenance of Seen and Unseen Sensory Information. Neuron. 2016;92:1122–1134. doi: 10.1016/j.neuron.2016.10.051.

- 42. Kanwisher N, McDermott J, Chun MM. The Fusiform Face Area: A Module in Human Extrastriate Cortex Specialized for Face Perception. The Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- 43. Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402.

- 44. Epstein R, Harris A, Stanley D, Kanwisher N. The Parahippocampal Place Area: Recognition, Navigation, or Encoding? Neuron. 1999;23:115–125. doi: 10.1016/S0896-6273(00)80758-8.

- 45. Rossion B, et al. The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. Neuroreport. 2000;11:69–74. doi: 10.1097/00001756-200001170-00014.

- 46. Grill-Spector K, Kourtzi Z, Kanwisher N. The lateral occipital complex and its role in object recognition. Vision Research. 2001;41:1409–1422. doi: 10.1016/S0042-6989(01)00073-6.

- 47. Dilks DD, Julian JB, Paunov AM, Kanwisher N. The Occipital Place Area Is Causally and Selectively Involved in Scene Perception. Journal of Neuroscience. 2013;33:1331–1336. doi: 10.1523/JNEUROSCI.4081-12.2013.

- 48. Ganaden RE, Mullin CR, Steeves JKE. Transcranial Magnetic Stimulation to the Transverse Occipital Sulcus Affects Scene but Not Object Processing. Journal of Cognitive Neuroscience. 2013;25:961–968. doi: 10.1162/jocn_a_00372.

- 49. Andrew Leynes P, Zish K. Event-related potential (ERP) evidence for fluency-based recognition memory. Neuropsychologia. 2012;50:3240–3249. doi: 10.1016/j.neuropsychologia.2012.10.004.

- 50. Bainbridge, W. A. The Resiliency of Memorability: A Predictor of Memory Separate from Attention and Priming. arXiv:1703.07738v1, 37 (2017).

- 51. Clarke A, Devereux BJ, Randall B, Tyler LK. Predicting the Time Course of Individual Objects with MEG. Cerebral Cortex. 2015;25:3602–3612. doi: 10.1093/cercor/bhu203.

- 52. McDonald CR, et al. Distributed source modeling of language with magnetoencephalography: Application to patients with intractable epilepsy. Epilepsia. 2009;50:2256–2266. doi: 10.1111/j.1528-1167.2009.02172.x.

- 53. Salti M, et al. Distinct cortical codes and temporal dynamics for conscious and unconscious percepts. eLife. 2015;4:1–19. doi: 10.7554/eLife.05652.

- 54. Krishnaswamy P, et al. Sparsity enables estimation of both subcortical and cortical activity from MEG and EEG. Proceedings of the National Academy of Sciences. 2017;114:E10465–E10474. doi: 10.1073/pnas.1705414114.

- 55. Mamashli F, et al. Maturational trajectories of local and long-range functional connectivity in autism during face processing. Human Brain Mapping. 2018;39:4094–4104. doi: 10.1002/hbm.24234.

- 56. Bainbridge WA, et al. What is Memorable is Conserved Across Healthy Aging, Early Alzheimer’s Disease, and Neural Networks. Alzheimer’s & Dementia. 2017;13:P287–P288. doi: 10.1016/j.jalz.2017.06.189.

- 57. Bainbridge WA, Oliva A. A toolbox and sample object perception data for equalization of natural images. Data in Brief. 2015;5:846–851. doi: 10.1016/j.dib.2015.10.030.

- 58. Torralba A, Oliva A. Statistics of natural image categories. Network: Computation in Neural Systems. 2003;14:391–412. doi: 10.1088/0954-898X_14_3_302.

- 59. Taulu S, Kajola M, Simola J. Suppression of Interference and Artifacts by the Signal Space Separation Method. Brain Topography. 2003;16:269–275. doi: 10.1023/B:BRAT.0000032864.93890.f9.

- 60. Taulu S, Simola J. Spatiotemporal signal space separation method for rejecting nearby interference in MEG measurements. Physics in Medicine and Biology. 2006;51:1759–1768. doi: 10.1088/0031-9155/51/7/008.

- 61. Tadel F, Baillet S, Mosher JC, Pantazis D, Leahy RM. Brainstorm: A User-Friendly Application for MEG/EEG Analysis. Computational Intelligence and Neuroscience. 2011;2011:1–13. doi: 10.1155/2011/879716.

- 62. Chang C-C, Lin C-J. LIBSVM: A library for support vector machines. ACM Transactions on Intelligent Systems and Technology. 2011;2:1–27. doi: 10.1145/1961189.1961199.

- 63. Holmes CJ, et al. Enhancement of MR Images Using Registration for Signal Averaging. Journal of Computer Assisted Tomography. 1998;22:324–333. doi: 10.1097/00004728-199803000-00032.

- 64. Huang MX, Mosher JC, Leahy RM. A sensor-weighted overlapping-sphere head model and exhaustive head model comparison for MEG. Physics in Medicine and Biology. 1999;44:423–440. doi: 10.1088/0031-9155/44/2/010.

- 65. Dale AM, et al. Dynamic Statistical Parametric Mapping: Combining fMRI and MEG for High-Resolution Imaging of Cortical Activity. Neuron. 2000;26:55–67. doi: 10.1016/S0896-6273(00)81138-1.

- 66. Desikan RS, et al. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. NeuroImage. 2006;31:968–980. doi: 10.1016/j.neuroimage.2006.01.021.

- 67. Carlson T, Tovar DA, Alink A, Kriegeskorte N. Representational dynamics of object vision: The first 1000 ms. Journal of Vision. 2013;13:1–1. doi: 10.1167/13.10.1.

- 68. Isik L, Meyers EM, Leibo JZ, Poggio T. The dynamics of invariant object recognition in the human visual system. Journal of Neurophysiology. 2014;111:91–102. doi: 10.1152/jn.00394.2013.

- 69. Pantazis D, et al. Decoding the orientation of contrast edges from MEG evoked and induced responses. NeuroImage. 2018;180:267–279. doi: 10.1016/j.neuroimage.2017.07.022.

- 70. Pantazis D, Nichols TE, Baillet S, Leahy RM. A comparison of random field theory and permutation methods for the statistical analysis of MEG data. NeuroImage. 2005;25:383–394. doi: 10.1016/j.neuroimage.2004.09.040.

- 71. Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods. 2007;164:177–190. doi: 10.1016/j.jneumeth.2007.03.024.

- 72. Khan S, et al. Local and long-range functional connectivity is reduced in concert in autism spectrum disorders. Proceedings of the National Academy of Sciences. 2013;110:3107–3112. doi: 10.1073/pnas.1214533110.

- 73. Hebart MN, Bankson BB, Harel A, Baker CI, Cichy RM. The representational dynamics of task and object processing in humans. eLife. 2018;7:1–22. doi: 10.7554/eLife.32816.

- 74. Dobs K, Isik L, Pantazis D, Kanwisher N. How face perception unfolds over time. BioRxiv. 2018. doi: 10.1101/442194.
