Abstract

Network theory provides an intuitively appealing framework for studying relationships among interconnected brain mechanisms and their relevance to behavior. As the space of its applications grows, so does the diversity of meanings of the term “network model.” This diversity can cause confusion, complicate efforts to assess model validity and efficacy, and hamper interdisciplinary collaboration. Here we review the field of network neuroscience, focusing on organizing principles that can help overcome these challenges. First, we describe the fundamental goals in constructing network models. Second, we review the most common forms of network models, which can be described parsimoniously along three primary dimensions: from data representations to first-principles theory, from biophysical realism to functional phenomenology, and from elementary descriptions to coarse-grained approximations. We identify areas of focus and neglect in this space. Third, we draw on biology, philosophy, and other disciplines to establish validation principles for these models. We close with a discussion of opportunities to bridge model types and point to exciting frontiers for future pursuits.

WHAT IS A NETWORK MODEL?

Over a century ago, Camillo Golgi used blocks of brain tissue soaked in a silver-nitrate solution to obtain some of the earliest and most detailed views of the intricate morphology of nerve cells. Santiago Ramón y Cajal then combined this technique with light microscopy and an artist’s eye to draw stunning pictures that helped to establish that these nerve cells do not constitute a single reticulum. Instead, they are individual, anatomically defined units with complex interconnections. This idea, known as the Neuron Doctrine, soon came to encompass not just the structure but also the function of the nervous system, and it provides the foundation for modern neuroscience [1].

It is upon this foundation that modern network neuroscience thrives. This field uses tools developed in physics and other disciplines but has a natural relationship to the study of the brain. As in the Neuron Doctrine, network neuroscience seeks to understand systems defined by individual, functional units, often called nodes, and their relations, interactions, or connections, often referred to as edges. Together, the units and interactions, as well as any associated dynamics, form a network model that can be used to describe, explain, or predict the behavior of the real, physical network that it represents (Fig. 1). Many network models draw direct associations between these conceptual quantities and groups of anatomically defined, interconnected neurons in real brains [2–7]. These network models can then be analyzed using a host of quantitative techniques. For example, pairwise interactions can be described using an N × N adjacency matrix A, where N is the number of nodes in the network and each element A_ij gives the strength of the connection between node i and node j [8]. The architecture of the adjacency matrix can then be characterized using a set of mathematical approaches known collectively as graph theory, which was not developed explicitly to understand the brain but has a natural synergy with network neuroscience [9]. Many other approaches, including generalizations of graphs such as hypergraphs (in which an edge can link any number of vertices) and simplicial complexes (in which higher-order interactions among sets of nodes become fundamental units), along with non-graph-based techniques, can likewise be used to analyze the networks of neurons that constitute biological nervous systems [10–12].
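To make the adjacency-matrix description concrete, here is a minimal sketch in plain Python, assuming an undirected, weighted network stored as a list of lists. The edge list and the helper names (`adjacency_matrix`, `degree`, `strength`) are hypothetical illustrations, not part of any published toolbox.

```python
# Sketch: an undirected, weighted network stored as an N x N adjacency
# matrix (a list of lists), where A[i][j] is the connection strength
# between node i and node j. The edge list below is hypothetical.

def adjacency_matrix(n_nodes, weighted_edges):
    """Build a symmetric N x N adjacency matrix from (i, j, weight) tuples."""
    A = [[0.0] * n_nodes for _ in range(n_nodes)]
    for i, j, w in weighted_edges:
        A[i][j] = w
        A[j][i] = w  # undirected edge: keep the matrix symmetric
    return A


def degree(A):
    """Number of nonzero edges incident on each node."""
    return [sum(1 for w in row if w != 0) for row in A]


def strength(A):
    """Sum of the edge weights incident on each node (weighted degree)."""
    return [sum(row) for row in A]


edges = [(0, 1, 0.8), (0, 2, 0.5), (1, 2, 0.3), (2, 3, 0.9)]
A = adjacency_matrix(4, edges)
print(degree(A))  # [2, 2, 3, 1]
print(strength(A))
```

Degree counts edges and ignores their weights, whereas strength sums the weights; for a binary network the two coincide.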

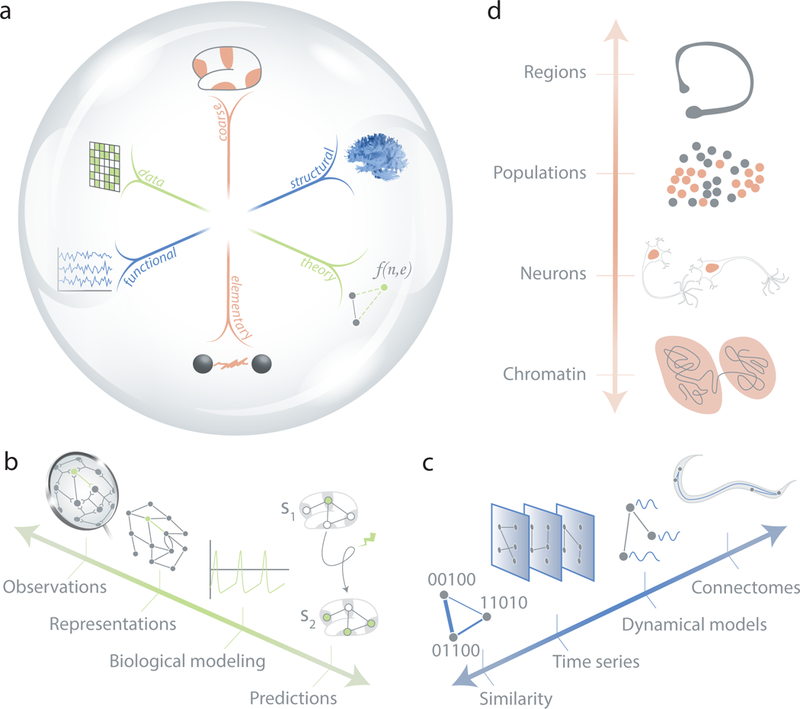

FIG. 1. Schematic of network models used in neuroscience.

(Left) The simplest and most commonly used network model for neural systems is one that represents the pattern of connections (edges) between neural units (nodes). More sophisticated network models can be constructed by adding edge weights and node values, or explicit functional forms for their dynamics. Multilayer networks can be used to represent interconnected sets of networks, and dynamic networks can be used to understand the reconfiguration of network systems over time. (Right) Common measures of interest include: degree, which is the number of edges emanating from a node; clustering, which is related to the prevalence of triangles; cavities, which describe the absence of edges; hubness, which is related to a node’s influence; paths, which determine the potential for information transmission; communities, or local groups of densely interconnected nodes; shortcuts, which are one possible marker of global efficiency of information transmission; and core-periphery structure, which facilitates local integration of information gathered from or sent to more sparsely connected areas.

These approaches can also be applied to network models of the brain other than those made up of interconnected neurons in vivo. For example, network models can be constructed across spatial scales that encompass pieces of chromatin, neuroprogenitor or neuronal cell cultures, cerebral organoids, cortical slices, and small volumes of human or non-human tissue [13–19]. Nodes can be chosen to reflect anatomical or functional units such as cell bodies at the microscale, cytoarchitectonically or functionally distinct volumes at the macroscale, or more arbitrary delineations like voxels [20–30]. Edges can be chosen to reflect anatomical connections based on identified synapses, white matter tracts, structural covariance, or physical proximity, or functional relations such as statistical similarities in activity time series [31–36].

Even more sophisticated network models can be constructed to include heterogeneous and dynamic elements. For example, multilayer and multiplex network models can be constructed using multiple types of nodes and edges, such as those obtained from distinct neuroimaging modalities [37–39]. Annotated networks can be used to assign properties to nodes, such as cerebral glucose metabolism estimates from fluorodeoxyglucose (FDG)-positron emission tomography (PET), blood-oxygen-level-dependent (BOLD) contrast imaging, magnetoencephalographic (MEG) or electroencephalographic (EEG) power, gray matter volume or cortical thickness, cytoarchitectonic properties, measurements of oxidative metabolism, or estimates of coding capability, and to understand how these properties relate to other nodal properties and to internodal connectivity [40, 41]. Temporal dynamics can be applied to both nodes and edges, enabling studies of networks as reconfigurable dynamical systems that evolve over time [42–46].
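One simple way to represent a temporal network is as an ordered list of adjacency-matrix snapshots, one per time window. The sketch below assumes binary, undirected snapshots with hypothetical data and quantifies reconfiguration as the fraction of edges that change between consecutive windows (one minus the Jaccard similarity of their edge sets). This "edge turnover" is an illustrative choice, not the specific measure used in the cited studies.

```python
# Sketch (hypothetical data): a temporal network as a list of adjacency
# snapshots, one per time window, and a simple measure of how much the
# edge set reconfigures between consecutive windows.

def edge_set(A):
    """Undirected edges (i < j) present in one snapshot."""
    n = len(A)
    return {(i, j) for i in range(n) for j in range(i + 1, n) if A[i][j] != 0}


def jaccard(e1, e2):
    """Fraction of edges shared by two edge sets (1.0 if both are empty)."""
    union = e1 | e2
    return len(e1 & e2) / len(union) if union else 1.0


def edge_turnover(snapshots):
    """1 - Jaccard similarity between each pair of consecutive snapshots."""
    sets = [edge_set(A) for A in snapshots]
    return [1.0 - jaccard(a, b) for a, b in zip(sets, sets[1:])]


s0 = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]  # edges (0,1) and (1,2)
s1 = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]  # unchanged
s2 = [[0, 1, 1], [1, 0, 0], [1, 0, 0]]  # edge (1,2) replaced by (0,2)
print(edge_turnover([s0, s1, s2]))  # approximately [0.0, 0.667]
```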

Once constructed, these network models can be used to obtain deeper insights into how patterns of relationships between units support emergent function and behavior. For example, clustered connections can be used to identify locally efficient subgraphs or larger scale modules that can subserve specialized functions [47]. The conspicuous absence of connections can indicate holes or cavities in the architecture that could be conducive to information segregation [48, 49]. Topological shortcuts may support unusually long-distance interactions and generally efficient large-scale information transmission [50, 51]. At a more local level, nodes in a network that are more connected than the average are called hubs, which can be either localized or connected globally with one another to form a rich-club or network core [52, 53]. Important questions then follow regarding how the specific pattern of edges between nodes supports or hinders critical neurophysiological processes related to synchrony, communication, coding, and information transmission [54–57].
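Several of the measures named above can be computed directly from a binary adjacency matrix. The following pure-Python sketch assumes an undirected, unweighted graph and implements textbook forms of local clustering, global efficiency (the mean inverse shortest-path length, via breadth-first search), hubs defined as nodes with above-average degree, and an unnormalized rich-club coefficient. In practice the rich-club coefficient is usually compared against degree-preserving random networks; that step is omitted here. The example graph is hypothetical.

```python
from collections import deque


def neighbors(A, i):
    """Nodes linked to node i (ignoring self-loops)."""
    return [j for j, w in enumerate(A[i]) if w != 0 and j != i]


def clustering(A, i):
    """Fraction of pairs of i's neighbors that are themselves linked (closed triangles)."""
    nbrs = neighbors(A, i)
    k = len(nbrs)
    if k < 2:
        return 0.0
    linked = sum(1 for a in range(k) for b in range(a + 1, k) if A[nbrs[a]][nbrs[b]] != 0)
    return linked / (k * (k - 1) / 2)


def hop_distances(A, source):
    """Breadth-first search: shortest path length (in edges) to each reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in neighbors(A, u):
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def global_efficiency(A):
    """Mean inverse shortest path length over ordered node pairs (unreachable pairs add 0)."""
    n = len(A)
    total = 0.0
    for s in range(n):
        total += sum(1.0 / d for t, d in hop_distances(A, s).items() if t != s)
    return total / (n * (n - 1))


def hubs(A):
    """Nodes whose degree exceeds the mean degree."""
    deg = [len(neighbors(A, i)) for i in range(len(A))]
    mean = sum(deg) / len(deg)
    return [i for i, d in enumerate(deg) if d > mean]


def rich_club(A, k):
    """Density of links among nodes with degree > k (not normalized against null models)."""
    rich = [i for i in range(len(A)) if len(neighbors(A, i)) > k]
    r = len(rich)
    if r < 2:
        return float("nan")
    links = sum(1 for a in range(r) for b in range(a + 1, r) if A[rich[a]][rich[b]] != 0)
    return 2.0 * links / (r * (r - 1))


# Hypothetical graph: two linked hubs (0 and 1), each with three leaves,
# plus one triangle (0, 2, 3).
edge_list = [(0, 1), (0, 2), (0, 3), (0, 4), (1, 5), (1, 6), (1, 7), (2, 3)]
A = [[0] * 8 for _ in range(8)]
for i, j in edge_list:
    A[i][j] = A[j][i] = 1

print(hubs(A))  # [0, 1]
print(rich_club(A, 2))  # 1.0
print(clustering(A, 0), clustering(A, 2))
print(global_efficiency(A))
```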

However, despite these clear benefits of using the ever-growing suite of network-based approaches to better understand the brain, the rapid growth of this field also brings major challenges. The sheer number of different kinds of network models used continues to grow, and their relationship to the kinds of relatively simple networks of interconnected neurons seen under Cajal’s microscope becomes increasingly distant. Consequently, different investigators use the term “network model” with different sets of assumptions about what the term means, which systems are most amenable to these kinds of analyses, and what sorts of conclusions can be drawn from the results (Box 1). These different assumptions can hamper communication, collaboration, and discovery. We therefore aim here to synthesize a set of organizing principles that govern how to define, validate, and interpret the different kinds of models encompassed by modern network neuroscience.

BOX 1: MODEL THEORY - A PHILOSOPHICAL PERSPECTIVE.

Recent model theory aims both to distinguish unique types of models from one another and to identify structural elements of models that are generalizable across scientific subfields. A model is a functional representation of a feature of the world. That is, it depicts what something is in terms of some of its measurable aspects to yield insights into how it works. There are at least four basic sorts of models [202, 203]. Some models are scalar, illuminating a feature by reducing or expanding it. Some are idealized, creating simplified, abstract representations. Other models are analogical, generating comparative structures at either the material or formal level. And still others are phenomenological, depicting merely observable elements. Insofar as all models are representations, they face the difficult task of defining the relation they have to the feature they model. What makes them heuristic devices? How is their accuracy or truth determined? Are functional models less true, given their distance from material substructures? Or are physical models less accurate, given their inability to account for higher level system dynamics?

All models represent reality in some sense and fail to represent reality in another sense. They are, therefore, akin to literary fiction. Models mobilize a creative approximation of some world-feature for an identifiable end. As such, they are perhaps best interpreted as imaginative objects and evaluated pragmatically. Theorizing models as fictions involves reframing them as imagined entities that aid in the narrativizing of a physical system [204–208]. As with any fiction, the community or discipline in question provides the principles that define how the model is generated and used. The model thus explores possible interpretations that are of value and interest to the community that uses it to gain insights that will move the field forward [209, 210]. Moreover, insofar as different models represent different features of a system, they may be used conjunctively to construct complementary narrative schemas. Whether taken together or separately, models clarify the anatomy and the architecture of a thing, precisely through the exercise and further provocation of scientific imagination.

WHAT KINDS OF MODELS ARE USED IN NETWORK NEUROSCIENCE?

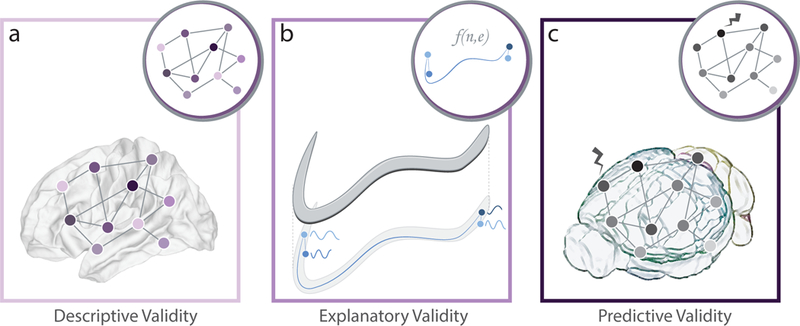

We begin by reviewing efforts to understand mechanisms of brain structure, function, development, and evolution in network neuroscience. We organize these efforts in terms of three key dimensions of model types that define the state-of-the-art in the field (Fig. 2). The first dimension is a continuum that extends from data representation to pure (or “first-principles”) theory. The second dimension is a continuum that extends from biophysical realism to phenomenological estimates of functional capacity (“functional phenomenology”). The third dimension is a continuum that extends from elementary descriptions to coarse-grained approximations. For each dimension, we discuss the rationale for its construction, several common forms, and major contributions. We then use this classification scheme to provide a vision for how future work might bridge network model types and provide integrative explanations for the diverse parts, processes, and principles of neural systems.

FIG. 2. Three dimensions of network model types.

(a) We posit that efforts to understand mechanisms of brain structure, function, development, and evolution in network neuroscience can be organized along three key dimensions of model types. (b) The first dimension extends from data representation to first-principles theory. (c) The second dimension extends from biophysical realism to functional phenomenology. (d) The third dimension extends from elementary descriptions to coarse-grained approximations.

From data representation to first-principles theory

Perhaps the first and most fundamental question that one can ask about a network model is whether it is a simple representation of data or a theory of how the system behind the data might work [58]. A straightforward way to operationalize the difference between these types is that a pure data representation cannot make a prediction about how the system came to be or what the system will become, whereas a first-principles theory explicitly sets out to do so. Mathematically, a data representation can be stored as a simple, temporal, multilayer, or annotated graph. In contrast, a theory-based model must combine a graph with a difference or differential equation specifying dynamics, evolution, or function of the nodes, edges, or both. This distinction is important because data-driven network models may have more biological realism but are less amenable to claims regarding mechanism or dynamics than theory-based models.
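The distinction can be illustrated with a toy example. An adjacency matrix on its own is a data representation; pairing it with a difference equation yields a (very simple) theory-based model that predicts future states. As an illustrative assumption, the sketch below uses linear diffusion on the graph, x(t+1) = x(t) − α L x(t), where L = D − A is the graph Laplacian and D is the diagonal matrix of node strengths. The step size α = 0.1 is an assumption chosen small enough for the iteration to be stable on this graph.

```python
# Sketch of a minimal theory-based model: a graph (the data representation)
# paired with a difference equation. As an illustrative assumption, node
# states follow linear diffusion, x(t+1) = x(t) - alpha * L x(t), L = D - A.

def diffusion_step(A, x, alpha):
    """One update of x(t+1) = x(t) - alpha * (D - A) x(t)."""
    n = len(A)
    deg = [sum(row) for row in A]
    return [
        x[i] - alpha * (deg[i] * x[i] - sum(A[i][j] * x[j] for j in range(n)))
        for i in range(n)
    ]


def simulate(A, x0, alpha=0.1, steps=200):
    """Return the trajectory [x(0), x(1), ..., x(steps)]."""
    trajectory = [list(x0)]
    for _ in range(steps):
        trajectory.append(diffusion_step(A, trajectory[-1], alpha))
    return trajectory


# Path graph 0 - 1 - 2 with all activity initially at node 0.
A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
traj = simulate(A, [1.0, 0.0, 0.0])
print([round(v, 4) for v in traj[-1]])  # [0.3333, 0.3333, 0.3333]
```

Because A is symmetric, the total activity is conserved and the model predicts relaxation to a uniform state; a pure data representation of the same graph makes no such prediction.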

Data-based network models were first built using the detailed descriptions of brain structure by Cajal and his successors. For example, a synthesis of extensive prior work in the macaque suggested that the pattern of 305 structural connections among 32 visual and visual-association areas is consistent with a distributed hierarchy of information processing [59]. Complementary work in the mammalian cortex, especially that of the cat, distinguished between central and peripheral brain areas in relation to their roles in sensory processing [60–62]. As tools from the mathematical study of graphs became more commonly used to formalize the study of connectivity patterns in neural systems, efforts expanded across species from C. elegans to human, from macroscopic to microscopic scales, and from structural to functional data [16, 63–70]. These data-based network models were initially simple graphs that lacked any formal specification of dynamics. Later work extended these efforts to include other sorts of temporal, multilayer, multiplex, and annotated graphs. These models continue to provide new insights into how structural and functional connectivity are conserved and vary across species, depend on the spatial scale of measurement, and relate to cognition and disease [43, 71–77].

Theory-based models combine a network architecture with a model of dynamics that occurs at each node, at each edge, or across both nodes and edges. The most common forms of such models are those that define dynamics for each node. At the microscale, relevant dynamics include Hodgkin-Huxley, Izhikevich, and Rulkov map neuronal dynamics [78–81]. For neuronal ensembles, phenomenological models span FitzHugh-Nagumo, Hindmarsh-Rose, and Kuramoto oscillators [82–84]. For larger volumes of tissue, neural mass models have been used, for example in combination with coupling patterns derived from white-matter tractography to provide insights into neural underpinnings of synchronization, plasticity, and neurological disease [46, 85–89]. Another type of theory-driven model predicts how exogenous input to a neural system impacts its dynamics in a network-dependent manner, a form of network control [90–92]. Less common, but potentially useful, theory-based models define edge dynamics, for example as generative network models [93]. These models could be profitably extended by recently developed dynamic extensions of the classic random graph, including the configuration model, the stochastic block model, and the block-point process model [94, 95].
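As an example of node dynamics defined on a network, the sketch below integrates Kuramoto phase oscillators coupled through an adjacency matrix, dθ_i/dt = ω_i + (K/N) Σ_j A_ij sin(θ_j − θ_i), with a forward-Euler scheme. The all-to-all coupling, identical natural frequencies, coupling strength K, and step size are illustrative assumptions; the order parameter r summarizes global phase synchrony (r = 1 for perfect synchrony).

```python
import cmath
import math


def kuramoto_step(theta, omega, A, K, dt):
    """One forward-Euler step of d(theta_i)/dt = omega_i + (K/N) sum_j A_ij sin(theta_j - theta_i)."""
    n = len(theta)
    return [
        theta[i]
        + dt * (omega[i] + (K / n) * sum(A[i][j] * math.sin(theta[j] - theta[i]) for j in range(n)))
        for i in range(n)
    ]


def order_parameter(theta):
    """Kuramoto order parameter r in [0, 1]; r = 1 means perfect phase synchrony."""
    return abs(sum(cmath.exp(1j * t) for t in theta)) / len(theta)


def simulate(A, omega, theta0, K, dt=0.01, steps=5000):
    """Integrate the coupled oscillators and return the final phases."""
    theta = list(theta0)
    for _ in range(steps):
        theta = kuramoto_step(theta, omega, A, K, dt)
    return theta


n = 5
A = [[0 if i == j else 1 for j in range(n)] for i in range(n)]  # all-to-all coupling (assumption)
omega = [1.0] * n  # identical natural frequencies (assumption)
theta0 = [0.0, 0.5, 1.0, 1.5, 2.0]
theta = simulate(A, omega, theta0, K=5.0)
print(order_parameter(theta0), order_parameter(theta))
```

Replacing the all-to-all matrix with an empirical connectivity matrix is what makes such a model network-dependent: the same oscillators can synchronize or fail to do so depending on the coupling pattern.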

Network models at the two extremes of this dimension have both advantages and disadvantages. Data representations have the benefit of tracking closely with experimental findings but cannot alone be used to make formal, model-based predictions about a neural system’s growth, evolution, or dynamics. In contrast, theory-based models are explicitly built to make such predictions but typically must abstract away many neurophysiological and neuroanatomical details to ensure that the mathematics is manageable and the results are interpretable [96]. Intermediate models along this dimension try to combine the advantages of the extremes, often by defining theories whose parameters or functions are specified by data. For example, an intermediate model could specify first-principles dynamics on units that are linked by data-based estimates of large-scale patterns of connectivity. In general, models distributed along this dimension are synergistic, because data representations inform theories, and theories produce predictions that can be tested in new data representations [97].

From biophysical realism to functional phenomenology

A second dimension that is useful for differentiating network models spans from structural, biophysical realism to functional phenomenology. Network models on the extreme end of structural, biophysical realism include physically true elements, such as neurons, represented as nodes; physically true axonal projection patterns, represented as edges; and, should dynamics be included, biophysically accurate descriptions of, for example, developmental, regenerative, or experience-dependent changes in neuronal morphology and projections [98]. In contrast, network models on the extreme end of functional phenomenology include nodes and edges that do not necessarily have exact physical counterparts, such as noise correlations in cellular neuroscience or functional connectivity in human neuroimaging, and, if dynamics are included, laws of network evolution that capture an observed phenomenon in a more abstract or conceptual sense. In the context of network neuroscience, this distinction is important because it often determines whether a particular model can be used to infer the functional capacities of realistic, physical structures or the structural demands of certain functions.

Perhaps the quintessential biophysical network model of a neural system is the C. elegans structural connectome, a data representation in which nodes represent neurons and edges represent chemical or electrical synapses [2, 33, 99]. Such models have recently been extended to first-principles theories of network control using a simplified noise-free, linear, discrete-time, time-invariant model of dynamics [92, 100–102]. At larger spatial scales, the structural connectomes of the mouse, macaque, and human brain are also biophysical network models providing a data representation of the white matter tracts (edges) linking volumes of tissue (nodes) [3, 64, 70]. Network models with biophysically realistic components can also be constructed according to theoretical principles. For example, simple theories of network control have been studied using network models composed of biophysically realistic white-matter tracts [103]. Likewise, complex theories of long-distance coordination have been studied using theory-driven oscillatory cortical-circuit models that use biophysically realistic components including synaptically coupled excitatory and inhibitory neurons [45, 104]. Other efforts to include more biophysical realism in network models have focused on morphological diversity, arborization patterns, chemical gradients and physical barriers, cell-type specific connectivity rules, synaptic plasticity, and cytoarchitecture [5, 105–109].
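The noise-free, linear, discrete-time, time-invariant model mentioned above is commonly written x(t+1) = A x(t) + B u(t), where A is the connectivity matrix, x the node states, and u an input delivered through the nodes selected by B. One summary used in this literature is the trace of the controllability Gramian, often called average controllability. The sketch below computes a finite-horizon version for input at a single node. The normalization (dividing A by one plus its largest absolute row sum, which bounds the spectral radius below 1) and the 50-step horizon are our assumptions; published analyses use their own normalization and horizon choices.

```python
def normalize(A):
    """Scale A so that x(t+1) = A x(t) is stable (assumption: divide by 1 + max absolute row sum)."""
    c = 1.0 + max(sum(abs(w) for w in row) for row in A)
    return [[w / c for w in row] for row in A]


def matvec(A, v):
    """Matrix-vector product A v."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]


def average_controllability(A, node, horizon=50):
    """Trace of the finite-horizon controllability Gramian for input at one node.

    With B = e_node, trace(W) = sum_{t < horizon} ||A^t e_node||^2.
    """
    An = normalize(A)
    v = [0.0] * len(A)
    v[node] = 1.0
    total = 0.0
    for _ in range(horizon):
        total += sum(x * x for x in v)  # ||A^t e_node||^2
        v = matvec(An, v)
    return total


# Hypothetical star graph: node 0 is a hub linked to four leaves.
n = 5
A = [[0] * n for _ in range(n)]
for leaf in range(1, n):
    A[0][leaf] = A[leaf][0] = 1
print(average_controllability(A, 0) > average_controllability(A, 1))  # True
```

In this toy graph, input injected at the hub spreads to more nodes than input at a leaf, so the hub has the larger Gramian trace.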

Conversely, network models of functional phenomenology have edges that are defined by measures of functional connectivity or statistical similarities in node time series [36]. Functional edges have informational rather than physical meanings, including synchronization, phase locking, coherence, and correlation [110, 111]. Such edges also provide information about the properties of one time series that can be inferred or predicted from another [112]. Commonly, these kinds of functional edges are combined with nodes corresponding to exact physical volumes and locations to produce functional network models at the level of cells or large-scale brain regions [16, 67]. It is also possible to construct network models where the nodes are defined by non-physical rules. For example, at the cellular level, a node can be defined as an entity that produces a time series of spiking that is statistically different from those of other entities; e.g., by a principal components analysis [113]. At a larger scale, a node can be defined by the output of a sensor that is sensitive to brain activity from a distributed area with ill-defined physical boundaries because of artifacts of volume conduction through tissue and bone with complex morphologies, as in EEG, MEG, or electrocorticography (ECoG) [18, 114–116]. There also exist network models of functional phenomenology that incorporate explicit formulations of dynamics, such as: (i) the packet-switching model of information transmission in the structural connectome, which draws inspiration from queuing theory applied to the Internet [117]; and (ii) the pairwise maximum-entropy model of collective behavior across neurons or large-scale brain areas, which draws inspiration from statistical mechanics [118–121]. Studies of this type are often more focused on understanding the brain as an information-processing system than on understanding its specific physical instantiation.
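A functional network of the kind described above can be sketched in a few lines: compute the Pearson correlation between every pair of node time series, then optionally threshold the resulting matrix to obtain binary edges. The synthetic time series (two nodes sharing a common signal, one independent node) and the threshold of 0.5 are hypothetical choices made for illustration only.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def functional_connectivity(series):
    """N x N matrix of pairwise correlations between node time series."""
    n = len(series)
    return [[1.0 if i == j else pearson(series[i], series[j]) for j in range(n)] for i in range(n)]


def threshold(F, r_min):
    """Binary functional network: edge wherever |r| >= r_min (no self-loops)."""
    n = len(F)
    return [[1 if i != j and abs(F[i][j]) >= r_min else 0 for j in range(n)] for i in range(n)]


# Hypothetical data: nodes 0 and 1 share a common signal; node 2 is independent.
rng = random.Random(0)
T = 1000
shared = [rng.gauss(0, 1) for _ in range(T)]
series = [
    [s + 0.3 * rng.gauss(0, 1) for s in shared],
    [s + 0.3 * rng.gauss(0, 1) for s in shared],
    [rng.gauss(0, 1) for _ in range(T)],
]
F = functional_connectivity(series)
print(threshold(F, 0.5))  # [[0, 1, 0], [1, 0, 0], [0, 0, 0]]
```

Note that the resulting edge between nodes 0 and 1 reflects statistical similarity, not a physical connection: in this synthetic example the two nodes are not linked to each other at all, but are driven by a common source.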

Network models at the extremes of this dimension have both advantages and disadvantages. Biophysically realistic models incorporate rich empirical observations regarding the physical nature of the brain. They are concrete but can be computationally expensive and are sometimes difficult to interpret because of a plethora of parameters needed to describe the network structure. In contrast, network models that emphasize functional phenomenology incorporate rich empirical observations regarding the informational nature of the brain but are less easily mapped onto brain structure. They are not concrete in the physical sense but can provide simplicity, interpretability, and formal links to non-physical theories such as information theory (Box 2). These fundamental differences are particularly important to keep in mind when network models at these two extremes have apparently similar properties. For example, in some imaging modalities edge strength as measured by structural connectivity is correlated with edge strength as measured by functional connectivity [121–124]. However, a structural connection inferred from a physical measurement should be interpreted differently than a functional connection inferred from statistical similarities in time-series data [120, 125–127]. Accordingly, it is often useful to generate intermediate models along this dimension that combine approaches from the extremes. For example, an intermediate model could estimate functional interactions between units that are linked by biophysical estimates of inter-neuronal (synaptic) connectivity.

BOX 2: FUNCTIONAL PHENOMENOLOGY AND INFORMATION THEORY.

Common network models of functional phenomenology are those whose edges are defined by measures of statistical similarity between the activity time series of node i and the activity time series of node j [36]. Across cellular and systems neuroscience, the use of a simple correlation as the measure of statistical similarity has proven useful in decoding uncertainty; probing the optimality of computations in the face of limited inputs; and predicting a subject’s identity, age, and response to interventions such as deep brain stimulation [211–215]. However, many other measures of statistical similarity exist, including those that assess non-linear relations, mutual information, and other types of conditional dependencies between time series [16, 216, 217]. Important open questions in building network models are whether insight into neural function is best obtained by considering multiple measures of statistical similarity, and how to quantify their relative capacity to capture neural information [218].
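The limitation of simple correlation for non-linear relations can be shown with a toy case: if y = x² and x is symmetric about zero, the Pearson correlation is essentially zero even though y is fully determined by x. The sketch below contrasts correlation with a plug-in mutual information estimate computed from equal-width histograms. The bin count of 8 is an assumption, and plug-in estimators of this kind are biased for small samples.

```python
import math
from collections import Counter


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def discretize(values, n_bins):
    """Assign each value to one of n_bins equal-width bins."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


def mutual_information(x, y, n_bins=8):
    """Plug-in estimate of I(X; Y) in bits from equal-width histograms."""
    bx, by = discretize(x, n_bins), discretize(y, n_bins)
    n = len(x)
    joint = Counter(zip(bx, by))
    px, py = Counter(bx), Counter(by)
    return sum(
        (c / n) * math.log2((c / n) / ((px[a] / n) * (py[b] / n)))
        for (a, b), c in joint.items()
    )


x = [-1.0 + i / 1000 for i in range(2001)]  # symmetric grid on [-1, 1]
y = [v * v for v in x]  # deterministic but non-monotonic dependence
print(pearson(x, y))  # close to 0: correlation misses the relation
print(mutual_information(x, y) > 0.5)  # True: mutual information detects it
```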

While far from a panacea, information theory (the mathematical study of the coding and transmission of information) provides useful tools for statistical inference that could prove increasingly important for network neuroscience [219, 220]. Although commonly used statistical metrics probe the information shared between two processes, the theory also provides principles such as maximum entropy, as well as metrics that probe the time-asymmetric transfer of information between two or more processes, such as transfer entropy [119, 221, 222]. In calcium-imaging measurements at the cellular level, transfer entropy can be used to distinguish causal influences between neurons from non-causal correlations due to light-scattering artifacts [223]. Similar efforts for larger-scale network models have used transfer entropy to demonstrate that diverse states of information routing can be induced on the same structural network model simply by minor modulations of background activity [224]. Important open questions include how to quantify non-pairwise causal interactions, and how to think about causality in dynamic networks characterized by higher-order, non-pairwise interactions occurring over multiple spatiotemporal scales [56].
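Transfer entropy from a source X to a target Y measures how much the past of X reduces uncertainty about the next value of Y beyond what Y's own past already provides: TE(X→Y) = Σ p(y_{t+1}, y_t, x_t) log₂[p(y_{t+1} | y_t, x_t) / p(y_{t+1} | y_t)]. The sketch below is a plug-in estimator for discrete series with a history length of one step, applied to hypothetical binary spike trains in which Y copies X with a one-step delay. It is a didactic simplification, not the estimator used in the cited studies, which typically involve longer histories, bias correction, and significance testing.

```python
import math
import random
from collections import Counter


def transfer_entropy(source, target):
    """Plug-in transfer entropy (bits) from source to target, history length 1."""
    y_next, y_now, x_now = target[1:], target[:-1], source[:-1]
    n = len(y_next)
    c_full = Counter(zip(y_next, y_now, x_now))  # counts of (y_{t+1}, y_t, x_t)
    c_past = Counter(zip(y_now, x_now))  # counts of (y_t, x_t)
    c_self = Counter(zip(y_next, y_now))  # counts of (y_{t+1}, y_t)
    c_y = Counter(y_now)  # counts of y_t
    te = 0.0
    for (yn, yc, xc), count in c_full.items():
        p_given_both = count / c_past[(yc, xc)]  # p(y_{t+1} | y_t, x_t)
        p_given_self = c_self[(yn, yc)] / c_y[yc]  # p(y_{t+1} | y_t)
        te += (count / n) * math.log2(p_given_both / p_given_self)
    return te


rng = random.Random(1)
x = [rng.randint(0, 1) for _ in range(5000)]  # hypothetical binary spike train
y = [0] + x[:-1]  # y copies x with a one-step delay
print(transfer_entropy(x, y))  # close to 1 bit
print(transfer_entropy(y, x))  # close to 0 bits
```

Unlike correlation, the measure is asymmetric: information flows from X to Y here, but not from Y to X.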

From elementary descriptions to coarse-grained approximations

The third and final dimension is from elementary descriptions to coarse-grained approximations. Over the past decade, it has been argued constructively that the foundations of network science rely on accurately identifying well-defined, discrete, non-overlapping units that become nodes in the network, and the organic, irreducible relations that become edges in the network [128]. For some scientific questions, these units and their relations take on natural elementary forms. For other questions, the units and relations are coarse-grained. Consider the canonical physicist’s cow: an elementary description might begin with quarks, whereas the coarse-grained description might begin with a spherical mass. The former model is useful for questions related to quantum mechanics, and the latter is more useful for questions related to classical mechanics. In the context of network neuroscience, this distinction is important because models based on elementary descriptions seek to understand how relationships between structure and function emerge directly from those descriptions, whereas models based on coarse-grained approximations focus on emergent network properties that may be best understood without explicitly considering the system’s elementary building blocks.

Many common network models are based on the elemental units of the Neuron Doctrine: individual nerve cells and their synaptic connections [73]. Such models can be either data representations or first-principles theories and can either include extensive biophysical realism or explain functional phenomenology [80, 108, 118, 129, 130]. Network models at this scale can be used to ask questions about cellular mechanisms of growth and development or transitions from neural progenitor cells to neurons [131, 132]. Recent work has extended these approaches to even smaller scales, for example to investigate subcellular structures and processes such as neurite outgrowth [133]. Ongoing efforts are also beginning to extend to the molecular scale to study the network architecture of chromatin folding within the cell nucleus, which changes as cells transition from pluripotent stem cells to neural progenitor cells and is altered in neurodevelopmental disorders [134].

In contrast, coarse-grained models are based on simplified descriptions of ensembles of smaller units and finer-scale processes. One example of such a simplification scheme, which is common in physics, is a continuum or mean-field theory that models the dynamics of a collection of different units as equivalent to the dynamics of an average unit [135]. This kind of coarse-graining is frequently used in simulations of molecular dynamics, cellular biology, and ecology [136–139]. In the context of the brain, it has been used to bridge spiking neurons to collective neural dynamics, to better understand synaptic plasticity, and to predict the effects of exogenous input [140–143]. Another example is neural mass models, which are explicit, coarse-grained approximations of neural ensemble dynamics [46]. Implicit coarse-graining also occurs in practically all studies of large-scale brain network models, in which each node’s properties are a coarse approximation of the properties of smaller units that are typically not directly accessible to measurement methodologies. A commonly encountered example is neuroimaging data, for which network-specific coarse-graining approaches are being developed to better understand and control how data are aggregated when forming network models [13, 144, 145].
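The implicit coarse-graining described above can be sketched as an aggregation step: given a fine-scale adjacency matrix and an assignment of each fine node to a coarse unit (for example, neurons to regions), sum the edge weights between units, and replace each unit's node states by their average in the spirit of a mean-field description. The labels and data are hypothetical, and summation is only one of several possible aggregation rules (averaging or density normalization are common alternatives).

```python
def coarse_grain(A, labels):
    """Aggregate fine-scale edge weights into a K x K matrix of coarse units.

    labels[i] is the coarse unit of fine node i. Diagonal entries hold
    within-unit weight, with each undirected edge counted twice.
    """
    k = max(labels) + 1
    C = [[0.0] * k for _ in range(k)]
    for i, row in enumerate(A):
        for j, w in enumerate(row):
            C[labels[i]][labels[j]] += w
    return C


def mean_field(x, labels):
    """Replace the states of each coarse unit's members by their average."""
    k = max(labels) + 1
    totals = [0.0] * k
    counts = [0] * k
    for value, lab in zip(x, labels):
        totals[lab] += value
        counts[lab] += 1
    return [t / c for t, c in zip(totals, counts)]


# Hypothetical fine-scale network: edges 0-1, 1-2, 2-3; nodes {0, 1} form
# coarse unit 0 and nodes {2, 3} form coarse unit 1.
A = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
labels = [0, 0, 1, 1]
print(coarse_grain(A, labels))  # [[2.0, 1.0], [1.0, 2.0]]
print(mean_field([1.0, 3.0, 2.0, 6.0], labels))  # [2.0, 4.0]
```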

Network models at the extremes of this dimension have both advantages and disadvantages. For example, elementary models are often most useful for understanding neural codes and network function at the cellular level. In contrast, coarse-grained approximations are often most useful for understanding population and ensemble codes and network function at the ensemble level and beyond. A current focus of interest is the development of network models that cross these scales, using data and theory to better understand how the structure and function at one scale can be used to make inferences about structure and function at another scale [6, 146, 147].

Density of study in this three-dimensional space

We consider the dimensions described above to be relatively independent, such that, for example, a data-driven model can be structurally or functionally realistic, or fine- or coarse-grained. These dimensions thus describe a three-dimensional space in which many different kinds of network models can be placed. It is interesting to consider whether there are particular parts of this volume that have been more or less well-studied. The answer is intertwined with the history of how the field of network neuroscience emerged, as well as with changes in the landscape of technology that have quickened the pace of data acquisition and broadened the extent of computational modeling. Network neuroscience largely began with observations of structural connectivity at the large scale represented as graphs or networks devoid of any explicit dynamics [51, 65, 148]. This early focus has continued, with more extensive efforts in data representations than first-principles theories, more biophysical realism than functional phenomenology, and more coarse-grained approximations than elementary descriptions. Therefore, the least well-studied extreme in this cube represents first-principles theories of functional phenomenology at the elementary level of description.

Looking ahead, we emphasize two features of this space that are likely to promote new advances. The first is the increasing accessibility of the region of convergence at the center of our three-dimensional scheme. The growth of technology and data science has increased efforts that constrain or parameterize first-principles theories with real data representations [149], filling out the middle of the first dimension [97]. Efforts linking biophysical realism with functional phenomenology seek to understand structure-function relations in the brain facilitated by both its physical and informational nature, filling out the middle of the second dimension [49]. Computational methods and novel data-acquisition methods have also increased efforts to bridge elementary descriptions with coarse-grained approximations in explicit, multiscale models, filling out the middle of the third dimension. These integrative efforts, along with those that work across these dimensions, will help bridge levels of understanding of brain structure and function.

The second important feature of this space is the usefulness of its full volume, which comprises network models that seek to explain different parts, processes, or principles of the nervous system. In general, it is not necessary for any one model to provide the same insights as any other model. For example, a biophysical model of synaptic transmission in the dentate gyrus is not likely to provide direct insight into the functional phenomenology of the default-mode network. Similarly, a map of the whole structural connectome of the human brain at a resolution of 1-mm voxels is not likely to provide insight into the transmission of spontaneous or task-induced action potentials in auditory cortex. Therefore, in addition to integration at the center of this space, we hope for continued exploration at its underdeveloped frontiers.

HOW CAN WE ASSESS THE VALIDITY AND EFFECTIVENESS OF MODELS IN NETWORK NEUROSCIENCE?

Assessing the validity of a particular network model typically involves standard statistical model-selection methods. These methods balance goodness-of-fit to the data with penalties for complexity to avoid overfitting and provide parsimonious and generalizable conclusions. However, it can be challenging to achieve this balance for network models because of their diversity of goals and uses. For example, a close fit to known anatomical connectivity patterns is likely to be a more valid approach for understanding principles that govern interactions between elementary, biophysically realistic components of the nervous system than for network models of abstract computations. Here we propose a classification system for validating network models based on different goals and domains of inquiry. This system is based on principles developed in the context of both statistical model selection and the evaluation of the efficacy of animal models for psychiatric disorders [150–153]. The categories, which are not necessarily mutually exclusive in terms of their relevance to assessing a particular network model, are: descriptive, explanatory, and predictive (see Fig. 3). Below we consider each in detail.

FIG. 3. Illustrations of different categories of model assessment.

(a) Descriptive validity addresses the question of whether the model resembles in some key way(s) the system it is constructed to model. For network models, descriptive validity naturally aligns with questions about how well the specific patterns of nodes and edges (circular inset) match the anatomical and/or functional data that the model represents (main panel). (b) Explanatory validity focuses on a theoretical construct that is ultimately used to develop statistical tests and support conclusions drawn from the use of the model. A network model can be considered to have explanatory validity if its architecture can be justified in terms of brain data and if it can then be used to test for causal relationships to dynamics or behavior based on that architecture. Here we show a formal model of network node dynamics (circular inset) that can be used to test for causal relationships with dynamics in the true system (main panel). (c) Predictive validity occurs when there is an organism-model correlation in response to a perturbation, such as a drug, electrical or chemical stimulation, neurofeedback, or training. Here we show the model’s response to perturbation (circular inset) matching the organism’s response to perturbation (main panel).

Descriptive validity

The first category is descriptive and is related to the concept of “face validity” that is commonly discussed in the context of animal models. It addresses the question of whether the model resembles in some key way(s) the system it is constructed to model. In considering animal models, face validity is often assessed by comparing symptoms of, for example, an animal model of depression to those listed in the Diagnostic and Statistical Manual of Mental Disorders [154]. For network models in neuroscience, descriptive validity naturally aligns with questions about how well the structure of the model (i.e., the specific patterns of nodes and edges) matches the anatomical and/or functional data that it represents. This match can be assessed using standard model-selection procedures with an emphasis on the goodness-of-fit to the measured data, but it also can reflect choices about how to construct the model in light of potentially relevant neurobiological features. For example, network models in which nodes represent brain areas that obey cytoarchitectonic or functional boundaries have more descriptive validity than those in which nodes represent randomly chosen volumes of tissue [22]. Similarly, network models in which edges are assigned continuous weights representing the strengths of anatomical connections or functional relations have more descriptive validity than those in which edges are assigned binary weights [51, 155].
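To make the contrast between weighted and binary edges concrete, the following sketch (our own illustration using NumPy, with a hypothetical 4-node weight matrix and an arbitrary threshold of 0.05) binarizes a weighted network and compares node degree, the number of connections, with node strength, the sum of a node's edge weights.

```python
import numpy as np

def binarize(W: np.ndarray, threshold: float) -> np.ndarray:
    """Binary adjacency: 1 where the weight exceeds `threshold`, else 0."""
    return (W > threshold).astype(int)

def degree(A: np.ndarray) -> np.ndarray:
    """Number of nonzero connections of each node."""
    return (A != 0).sum(axis=1)

def strength(W: np.ndarray) -> np.ndarray:
    """Sum of connection weights of each node."""
    return W.sum(axis=1)

# Hypothetical symmetric weight matrix (e.g., normalized tract densities).
W = np.array([
    [0.0, 0.9, 0.8, 0.0],
    [0.9, 0.0, 0.0, 0.1],
    [0.8, 0.0, 0.0, 0.2],
    [0.0, 0.1, 0.2, 0.0],
])

A = binarize(W, threshold=0.05)
print("degree:  ", degree(A))
print("strength:", strength(W))
```

After binarization every node has degree 2, whereas strengths range from 0.3 (node 3) to 1.7 (node 0), a distinction the binary graph cannot express.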

Establishing the descriptive validity of network models faces two major challenges. One is that the neural architecture and functional dynamics being modeled are highly complex, with nontrivial structure and function present across multiple spatial and temporal scales [13, 156]. Thus, identifying the appropriate level of complexity that should be modeled can be difficult, both in principle and in practice [157]. In principle, problems arise because there is almost always uncertainty about which spatial and temporal scales in the brain are relevant for a particular anatomical architecture, neurophysiological process, cognitive task, or behavior [77]. In practice, problems arise because the available imaging, physiological, anatomical, and other data used to build the model typically provide particular spatial and temporal resolutions that are constrained primarily by the measurement methodology and not by any consideration of the scales that are most appropriate for the model [158]. One way to address this challenge is to improve the descriptive validity of node definitions, for example by detecting node boundaries using structural or functional connectivity or using clustering methods to agglomerate smaller regions into larger regions [159–161]. Another useful approach is to integrate information from different sets of measurements, including anatomical and functional data, micro- and macro-scale network mapping, and neurotransmitter function and chemoarchitecture with functional magnetic resonance imaging (fMRI) [6, 122, 124, 146, 162–167].
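The clustering route to node definition can be sketched as follows. This is a minimal illustration, not the procedure of [159–161]: it assumes regional time series are available as a NumPy array and greedily merges the pair of clusters with the highest average inter-regional correlation (average linkage) until a chosen number of parcels, `n_parcels`, remains. The function name `agglomerate_regions` and the simulated data are hypothetical.

```python
import numpy as np

def agglomerate_regions(ts, n_parcels):
    """Greedy average-linkage agglomeration of regions by time-series correlation.

    ts: array of shape (n_timepoints, n_regions).
    Returns a sorted list of parcels, each a sorted list of region indices.
    """
    C = np.corrcoef(ts, rowvar=False)              # region-by-region correlation
    clusters = [[i] for i in range(C.shape[0])]    # start with one region per parcel
    while len(clusters) > n_parcels:
        best_pair, best_sim = None, -np.inf
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Average correlation between all members of the two clusters.
                sim = C[np.ix_(clusters[a], clusters[b])].mean()
                if sim > best_sim:
                    best_pair, best_sim = (a, b), sim
        a, b = best_pair
        clusters[a] = sorted(clusters[a] + clusters[b])  # merge b into a
        del clusters[b]                                  # b > a, so index a is unaffected
    return sorted(clusters)

# Simulated data: regions 0-2 share one latent signal, regions 3-5 another.
rng = np.random.default_rng(0)
latent = rng.standard_normal((200, 2))
ts = np.repeat(latent, 3, axis=1) + 0.3 * rng.standard_normal((200, 6))
print(agglomerate_regions(ts, n_parcels=2))
```

With this degree of separation, the two recovered parcels are {0, 1, 2} and {3, 4, 5}; real parcellation methods add spatial-contiguity constraints and principled stopping rules that this sketch omits.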

The second major challenge for establishing the descriptive validity of network models is extensive individual variability. Individual variability exacerbates problems of underfitting and overfitting, because of uncertainty associated with whether idiosyncratic network features are relevant to a particular model or are just noise and should be ignored. In general, little is known about the principles that govern individual differences in neural connectivity patterns in organisms with central nervous systems. However, network architecture and function show differences across people that are associated with cognitive functions and symptom severity for certain diseases, and these differences may be modulated or constrained by the environment, socio-economic status, and genetics [75, 168–175]. Network dynamics also can show appreciable individual differences that are reproducible across iterative measurements and can be separated into state and trait components [176, 177]. Efforts to overcome the challenges posed by these kinds of individual differences should include schemes to test for both general principles that are robust to individual variability and specific markers of behaviorally meaningful between-subject differences that are robust to measurement noise. Ideally, future first-principles models could use these tests to construct a general form for population-level features, as well as variable parameters to account for individual variability.
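One common way to quantify whether a network statistic is reproducible across repeated measurements, and hence to gauge its trait-like component, is the intraclass correlation coefficient (ICC). The sketch below computes the one-way random-effects ICC(1,1) with NumPy; the function name `icc_oneway`, the simulated trait and state components, and their variances are our own assumptions rather than values from [176, 177].

```python
import numpy as np

def icc_oneway(X: np.ndarray) -> float:
    """ICC(1,1): one-way random-effects intraclass correlation.

    X: array of shape (n_subjects, n_sessions) holding one network statistic
    (e.g., global efficiency) measured repeatedly in each subject.
    """
    n, k = X.shape
    subject_means = X.mean(axis=1)
    grand_mean = X.mean()
    # Between-subject and within-subject mean squares from a one-way ANOVA.
    ms_between = k * np.sum((subject_means - grand_mean) ** 2) / (n - 1)
    ms_within = np.sum((X - subject_means[:, None]) ** 2) / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)

rng = np.random.default_rng(0)
trait = rng.normal(0.0, 1.0, size=(50, 1))   # stable, subject-specific component
state = rng.normal(0.0, 0.5, size=(50, 3))   # session-specific fluctuations
X = trait + state                            # 50 subjects x 3 sessions
print(round(icc_oneway(X), 2))
```

With trait variance 1 and state variance 0.25, the population ICC is 1 / (1 + 0.25) = 0.8, so the estimate should land near that value; a statistic dominated by state fluctuations would score much lower.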

Explanatory validity

The second category in our proposed classification system for validating network models is explanatory. This category is related to the concept of “construct validity” that is commonly discussed in the context of animal models. It focuses on a theoretical construct that is ultimately used to develop statistical tests and support conclusions drawn from the use of the model. Thus, for example, an animal model of depression is considered to have construct validity based on arguments about not just how it relates to what is known about depression in humans, but also how the model is used to probe causal questions about mechanisms, symptoms, and/or treatments that cannot be easily tested in humans. Likewise, a network model of the brain can be considered to have construct or explanatory validity if its architecture can be justified in terms of brain data and if it can then be used to test for causal relationships based on that architecture [77].

Explanatory validity thus requires an assessment of both a model’s architecture (i.e., a form of descriptive validity) and its ability to test for causal relationships. This dual assessment can be accomplished using statistical model-selection approaches, with goodness-of-fit metrics applied to both structural and functional data balanced by penalties for the complexity of particular instantiations of the model [178–181]. Model-selection approaches have played an important role in both graphical modeling and neuroimaging [182–184]. They also have been applied to model selection at several scales, ranging from the small scale of a network subgraph (e.g., dynamic causal modeling) to the large scale of a statistic or statistical distribution estimated from the entire network (e.g., the degree distribution or Rentian scaling [99, 185, 186]). Formal frameworks for building and evaluating such models include exponential random graph models, which create a class of graphs with fixed values for topological statistics, and generative network models, which create a class of graphs from a wiring rule [93, 149, 187, 188].
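As a minimal sketch of penalized model selection for networks (using NumPy; the graph, its parameters, and the choice of models are hypothetical), the code below compares an Erdős–Rényi model, which has a single edge probability, with a two-block stochastic block model whose block labels are taken as known, using the Bayesian information criterion (BIC). Neither model is an exponential random graph model or a generative wiring rule of the kind cited above; they are simply a small pair of nested graph models that illustrate the trade-off between goodness-of-fit and complexity.

```python
import numpy as np

def bernoulli_ll(edges, pairs):
    """Maximized log-likelihood of `edges` present among `pairs` possible edges."""
    if edges == 0 or edges == pairs:
        return 0.0
    p = edges / pairs
    return edges * np.log(p) + (pairs - edges) * np.log(1 - p)

def er_fit(A):
    """Erdos-Renyi model: one edge probability for all node pairs."""
    n = A.shape[0]
    return bernoulli_ll(np.triu(A, 1).sum(), n * (n - 1) / 2), 1

def sbm_fit(A, labels):
    """Stochastic block model with known labels: one probability per block pair."""
    ll, n_params = 0.0, 0
    blocks = np.unique(labels)
    for i, r in enumerate(blocks):
        for s in blocks[i:]:
            rows, cols = np.flatnonzero(labels == r), np.flatnonzero(labels == s)
            sub = A[np.ix_(rows, cols)]
            if r == s:
                edges, pairs = np.triu(sub, 1).sum(), len(rows) * (len(rows) - 1) / 2
            else:
                edges, pairs = sub.sum(), len(rows) * len(cols)
            ll += bernoulli_ll(edges, pairs)
            n_params += 1
    return ll, n_params

def bic(ll, n_params, n_obs):
    """Bayesian information criterion; lower is better."""
    return n_params * np.log(n_obs) - 2 * ll

# Synthetic two-community graph (hypothetical parameters).
rng = np.random.default_rng(1)
n = 40
labels = np.repeat([0, 1], n // 2)
P = np.where(labels[:, None] == labels[None, :], 0.5, 0.05)
upper = np.triu(rng.random((n, n)) < P, 1)
A = (upper | upper.T).astype(int)
n_pairs = n * (n - 1) / 2

print("ER  BIC:", round(bic(*er_fit(A), n_pairs), 1))
print("SBM BIC:", round(bic(*sbm_fit(A, labels), n_pairs), 1))
```

Because the labels are supplied rather than inferred, the count of three parameters for the block model understates its true flexibility, which is one instance of the hidden degrees of freedom that make model complexity hard to quantify.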

Model-selection procedures to maximize explanatory validity involve two particular challenges. One is that model complexity, often quantified in terms of the number of free parameters, may involve other degrees of freedom in network models that are harder to quantify and account for, particularly when considering both structure and function. That is, the effective dimensionality of a particular network model may be difficult to define. For example, many network features can have non-trivial dependencies, making the number of parameters in a model ill-defined [189]. These dependencies can arise from known mathematical relations, in which case they hold across all types of networks and are relatively easy to account for, or from unknown constraints on (or drivers of) network topology, in which case they require different approaches across different network ensembles or classes [190–192]. The second challenge is that the complexity of a neural network model may be defined or constrained by the desire to maintain biological realism in the model’s architecture (descriptive validity) beyond that which is necessary for the model’s function. Under these conditions, it can be challenging to identify the statistically simplest explanation consistent with those constraints. Efforts to meet this challenge include model-selection procedures that aim to identify a reduced structural subsystem or slow functional manifold that captures the system’s effective architecture and dynamics in the simplest way possible [193].
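The dependency problem can be illustrated with a small ensemble. In the sketch below (our own construction; the ensemble of random graphs, the four statistics, and the use of the participation ratio of the feature-correlation spectrum as a proxy for effective dimensionality are all assumptions), three of the four statistics are exact functions of the edge count once the number of nodes is fixed, and the fourth, maximum degree, is strongly correlated with it.

```python
import numpy as np

def er_graph(n, p, rng):
    """Undirected Erdos-Renyi graph as a symmetric 0/1 matrix."""
    upper = np.triu(rng.random((n, n)) < p, 1)
    return (upper | upper.T).astype(int)

def features(A):
    """Four nominally distinct summary statistics of a graph."""
    n = A.shape[0]
    deg = A.sum(axis=1)
    m = deg.sum() / 2                                        # edge count
    return np.array([m, deg.mean(), m / (n * (n - 1) / 2), deg.max()])

def participation_ratio(F):
    """Effective number of dimensions spanned by the columns of F (samples x features)."""
    eigvals = np.linalg.eigvalsh(np.corrcoef(F, rowvar=False))
    eigvals = np.clip(eigvals, 0.0, None)  # discard tiny negative round-off
    return eigvals.sum() ** 2 / np.sum(eigvals ** 2)

rng = np.random.default_rng(2)
F = np.array([features(er_graph(30, p, rng)) for p in rng.uniform(0.1, 0.5, size=200)])
print("nominal features:", F.shape[1])
print("participation ratio:", round(participation_ratio(F), 2))
```

The participation ratio, (Σλ)² / Σλ², would equal the number of features if they were uncorrelated and approaches 1 when a single direction dominates; here it stays close to 1 despite four nominal features.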

Predictive validity

The third category in our proposed classification system for validating network models is predictive. In the context of animal models, a model has predictive validity if there is a human-animal correlation of therapeutic outcomes. That is, a treatment, such as a particular drug, should affect a condition in an animal in a manner that corresponds to, and thus is predictive of, how that treatment affects that condition in a human [152]. Likewise, a network model has predictive validity if there is an organism-model correlation in response to perturbation. That is, a perturbation to the network, such as the addition, removal, strengthening, or weakening of a node or edge, or the alteration of the function of that node or edge via drug, stimulation, neurofeedback, or training, should affect the network in a model or simulation in a manner that corresponds to, and thus is predictive of, how that perturbation affects the network in the organism. These models, like explanatory models, often combine both structural and functional features. Accordingly, procedures for assessing and selecting models with predictive validity face many of the same challenges as those for explanatory validity, concerning both model complexity and constraints imposed by a desire also to provide descriptive validity.
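Predictive validity in this sense can be sketched with a linear network model. In the hypothetical setup below, a simulated network stands in for the organism, a noisy copy of its weights serves as the model, and each node is ablated in turn; the organism-model correlation is then the Pearson correlation between the two sets of ablation effects. The linear dynamics x(t+1) = A x(t) + b, the constant drive b, and the noise level are assumptions chosen for brevity, not features of any cited study.

```python
import numpy as np

def steady_state(A, b):
    """Fixed point x* of x(t+1) = A x(t) + b, i.e. x* = (I - A)^{-1} b."""
    return np.linalg.solve(np.eye(len(b)) - A, b)

def ablation_effects(A, b):
    """Drop in total steady-state activity when each node is removed in turn."""
    baseline = steady_state(A, b).sum()
    effects = np.empty(len(b))
    for k in range(len(b)):
        keep = np.delete(np.arange(len(b)), k)
        effects[k] = baseline - steady_state(A[np.ix_(keep, keep)], b[keep]).sum()
    return effects

rng = np.random.default_rng(3)
n = 12
# Simulated stand-in for the organism: a sparse, stable, nonnegative network.
A_true = rng.random((n, n)) * (rng.random((n, n)) < 0.3)
np.fill_diagonal(A_true, 0.0)
A_true *= 0.8 / np.max(np.abs(np.linalg.eigvals(A_true)))  # spectral radius 0.8
# The model: an imperfect estimate of the same network.
A_model = A_true + 0.01 * rng.standard_normal((n, n))
b = np.ones(n)  # constant drive to every node

observed = ablation_effects(A_true, b)
predicted = ablation_effects(A_model, b)
print("organism-model correlation:", round(np.corrcoef(observed, predicted)[0, 1], 3))
```

Other perturbations listed above, such as weakening a single edge, can be evaluated the same way by editing one entry of the weight matrix instead of deleting a row and column.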

Opportunities for predictive validity often occur later in a field’s lifetime than opportunities for descriptive validity, as each discipline moves from developing a language for the subject matter, to developing tools and techniques relevant to the subject matter, and finally to using the language, tools, and techniques to answer novel questions and test novel predictions [194]. Thus, building models with predictive validity is a relatively new endeavor in network neuroscience, although several prominent examples exist. For models of large-scale network control, exogenous activation of strong modal controllers, which move the system to distant states on the intrinsic energy landscape, was predicted to induce task switching. This theoretical prediction was supported by observations of strong modal controllers in human brain areas that activate during task switching [90]. For models of smaller-scale network control, a theoretical prediction about the control function of a node inside the network model was confirmed by ablation studies in C. elegans [92]. For models of complex, nonlinear, high-frequency dynamics, a theoretical prediction of synchronization patterns produced by seizure dynamics at the onset zone, which propagated along human white matter network architecture, matched observed seizure dynamics in patients with medically refractory epilepsy [195]. For models of network dynamics, an observation that network flexibility was an intermediate phenotype for schizophrenia motivated the hypothesis that it was causally driven by the brain’s state of excitatory/inhibitory balance, thought to underlie the disease. This hypothesis was tested and validated in a separate set of healthy subjects undergoing a pharmacological challenge with an NMDA-receptor antagonist (dextromethorphan) [166]. These kinds of predictive models will need to play a more prominent role in network neuroscience for the field to grow beyond its current boundaries and affect other basic and translational studies of the brain.
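For readers unfamiliar with the control-theoretic quantity behind “modal controllers,” the following sketch computes modal controllability for a symmetric network using the formulation common in the network-control literature, φ_i = Σ_j (1 − λ_j²) v_ij², where λ_j and v_j are the eigenvalues and eigenvectors of the scaled adjacency matrix A. The scaling by one plus the largest singular value, the function name `modal_controllability`, and the toy star graph are our assumptions; [90] should be consulted for the exact procedure used there.

```python
import numpy as np

def modal_controllability(W):
    """Modal controllability of each node of a symmetric, weighted network W."""
    # Scale so that the discrete-time system x(t+1) = A x(t) is stable.
    A = W / (1.0 + np.linalg.svd(W, compute_uv=False)[0])
    eigvals, eigvecs = np.linalg.eigh(A)  # columns of eigvecs are eigenvectors
    # phi_i = sum_j (1 - lambda_j^2) * v_ij^2
    return (eigvecs ** 2) @ (1.0 - eigvals ** 2)

# Star graph: node 0 is a hub linked to three leaves.
W = np.zeros((4, 4))
W[0, 1:] = W[1:, 0] = 1.0
print(np.round(modal_controllability(W), 3))
```

In this star graph the hub scores about 0.60 and each leaf about 0.87, so the weakly connected nodes have the higher modal controllability.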

A complementary but distinct line of work capitalizes on emerging methods from machine learning with the goal of developing network models that can inform other learning algorithms. For example, in translational research it is often of considerable interest to distinguish between patients and controls, to foreshadow conversion from one clinical phenotype to another, to distinguish between one pathology and another pathology leading to the same abnormal behavioral phenotype, or to predict the outcome of electroconvulsive therapy in major depression or of resection surgery in temporal lobe epilepsy [169, 196–200]. In these cases, summary statistics of network models have been used as features in machine-learning algorithms that make predictions [201]. A key challenge hampering the field is that it is not always known a priori which network statistics should be used. One useful benchmark could be to assess directly the predictive validity of the network model itself. An important area for future growth will be to use such approaches to infer fundamental and generalizable models that accurately predict the response of the brain to a perturbation or pathology.
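The feature-based pipeline can be sketched as follows, using synthetic data only. The three network statistics, the simulated “patient” group with uniformly weaker weights, and the leave-one-out nearest-centroid classifier are illustrative choices of ours (a deliberately simple stand-in for the machine-learning algorithms used in [201]); in practice, which statistics to use is exactly the open question raised above.

```python
import numpy as np

def network_features(W):
    """Summary statistics of one subject's weighted, symmetric network."""
    strength = W.sum(axis=1)
    return np.array([
        strength.mean(),            # mean node strength
        strength.std(),             # heterogeneity of node strength
        np.linalg.eigvalsh(W)[-1],  # leading eigenvalue
    ])

def loo_nearest_centroid(X, y):
    """Leave-one-out accuracy of a nearest-centroid classifier on z-scored features."""
    correct = 0
    for i in range(len(y)):
        train = np.arange(len(y)) != i
        mu, sd = X[train].mean(axis=0), X[train].std(axis=0) + 1e-12
        Z, z = (X[train] - mu) / sd, (X[i] - mu) / sd  # scale with training data only
        centroids = {c: Z[y[train] == c].mean(axis=0) for c in np.unique(y[train])}
        pred = min(centroids, key=lambda c: np.linalg.norm(z - centroids[c]))
        correct += int(pred == y[i])
    return correct / len(y)

def random_network(n, scale, rng):
    """Hypothetical subject network: symmetric uniform weights times `scale`."""
    W = np.triu(scale * rng.random((n, n)), 1)
    return W + W.T

rng = np.random.default_rng(4)
scales = [1.0] * 20 + [0.8] * 20  # synthetic "controls", then "patients"
X = np.array([network_features(random_network(30, s, rng)) for s in scales])
y = np.array([0] * 20 + [1] * 20)
print("leave-one-out accuracy:", loo_nearest_centroid(X, y))
```

Standardizing features inside each leave-one-out fold, rather than once on the full data set, keeps the held-out subject from leaking into the training statistics.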

FUTURE OUTLOOK

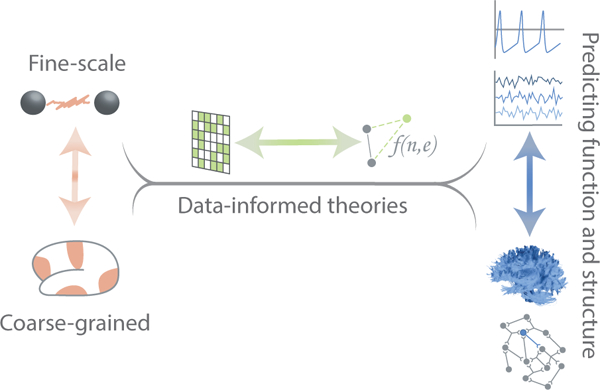

In the face of the multiplicity of model and validity types, it is imperative that investigators clearly specify their study’s goal(s). Insights that can be drawn reasonably from one type of model can be quite different from insights that can be drawn reasonably from another type of model. What is truth for one may be speculation for another. Furthermore, it is well established, although not always acknowledged in practice, that the different goals that motivate model construction can affect model fitting and selection in dramatic ways. For example, a model that provides the best explanation for how certain factors causally influence measured network dynamics might not, because of overfitting, be the best model for generating predictions of how the network will respond in the future to those factors. Conversely, a particular model that does not provide as effective explanations as another might be better suited to prediction problems. It is also possible to construct models that span types and dimensions, thereby bridging inferences and overcoming the limitations of one model with the advantages of another (see Fig. 4 and Box 3).

FIG. 4. Bridging model types in network neuroscience.

There are many ways to bridge model types. One natural path begins with fine-scale and coarse-grained information drawn from real systems (left) to create network models as data representations, which in turn are used to inform first-principles theories (middle). These theories then predict observed patterns of functional or structural connectivity (right).

BOX 3: BRIDGING MODEL TYPES.

The three model dimensions we considered (Fig. 2) are relatively independent of each other, but each encompasses principles that have been of great value to the field. It therefore is useful to consider ways to bridge two or more model types within and across these dimensions. The concept of bridging models raises the possibility of overcoming the limitations of one model type with the strengths of another. This benefit is particularly relevant when bridging models across two ends of a single dimension. For example, by informing first-principles theories with data, it is possible to overcome a common limitation of the former (impoverished empiricism) with a common strength of the latter (enriched empiricism) [96]. These bridges can also go in either direction: just as data can inform theories, so too can theories inform data-driven approaches [225]. A salient example of this latter approach is Latent Factor Analysis via Dynamical Systems (LFADS), a model of latent neural dynamics informed by an explicit dynamical-systems hypothesis regarding the generation of the observed neural spiking data [226].

The potential to link model types with one another, by either directed or undirected links, opens the possibility of building multiscale network models, where one type of model informs another type of model in a scale either above or below it. Conceptually, such multiscale models become meta-network models in which nodes represent models, and edges represent inter-model relations such as how one model predicts, informs, or is combined with another model (Fig. 4). For example, a network representation of fine-grained data (node) can inform (edge) a first-principles theory (node), as can a network representation of coarse-grained data (node). In turn, the first-principles theory (node) can predict (edge) patterns of functional connectivity (node) or patterns of structural connectivity (node). When one considers building such a meta-network model, the same challenges arise as with building a simple network: how to accurately identify well-defined, discrete, non-overlapping model units that become nodes in the network, and the organic, irreducible relations between models that become edges in the network [128]. Care must also be taken to consider the relative advantages and disadvantages of model complexity, biological realism, and interpretational simplicity.
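The meta-network idea can be expressed with an ordinary graph data structure. The sketch below (hypothetical node names following the path in Fig. 4) stores models as nodes and typed, directed inter-model relations as edges, and uses a depth-first traversal to list every model that a given model ultimately informs or predicts.

```python
from collections import defaultdict

# Hypothetical meta-network following the path sketched in Fig. 4:
# nodes are models, edges are typed relations (source, relation, target).
RELATIONS = [
    ("fine-scale data representation", "informs", "first-principles theory"),
    ("coarse-grained data representation", "informs", "first-principles theory"),
    ("first-principles theory", "predicts", "functional connectivity"),
    ("first-principles theory", "predicts", "structural connectivity"),
]

def build_meta_network(relations):
    """Adjacency list mapping each model to its outgoing (relation, target) edges."""
    graph = defaultdict(list)
    for source, relation, target in relations:
        graph[source].append((relation, target))
    return graph

def downstream(graph, start):
    """Every model reachable from `start` by following directed relations."""
    seen, stack = set(), [start]
    while stack:
        for _, target in graph.get(stack.pop(), []):
            if target not in seen:
                seen.add(target)
                stack.append(target)
    return seen

meta = build_meta_network(RELATIONS)
print(sorted(downstream(meta, "fine-scale data representation")))
```

Keeping the relation type on each edge leaves room for the distinctions drawn above (predicts, informs, is combined with), although the traversal here ignores it.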

A primary strength of network neuroscience is its natural descriptive validity. A key goal should be to use models that build on this strength and press forward towards better explanations and predictions [77]. However, care must be taken to assess the validity of these efforts using appropriate statistical approaches, which can differ for explanation versus prediction [150]. Models designed to explain are assessed according to goodness-of-fit, with various kinds of penalties assigned to model complexity. In general, these procedures minimize bias to obtain accurate representations, which tends to emphasize more complex models that can overfit the data. In contrast, models designed to predict are often assessed according to their ability to generalize, which can be tested by holding out subsets of data during fitting to then compare to predictions from the best-fitting model. These procedures typically minimize both bias and variance (overfitting to noise), which tends to emphasize simpler models. Finding the appropriate balance in these procedures is particularly challenging for network models because the models themselves, and the data to which they are applied, are often highly complex. Moreover, there is often considerable uncertainty about how to match the complexity of the model to the complexity of the data in some reasonable and appropriate way. Thus, new tools must be developed to measure and compare the complexity of network models and to make principled decisions that balance the need for biological realism, which may demand complex physical network models, with the desire for computational simplicity.
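Assessment by generalization can be sketched for network models as well. In the code below (our own construction with NumPy; the synthetic graph, the 20% hold-out fraction, and Laplace smoothing of the estimated edge probabilities are assumptions), edge densities are estimated only from training node pairs, and each model is scored by the log-likelihood it assigns to the held-out pairs, so no explicit complexity penalty is needed. The models are an Erdős–Rényi model and a stochastic block model whose block labels are treated as known.

```python
import numpy as np

def heldout_loglik(A, labels, test_mask, use_blocks):
    """Fit edge probabilities on training pairs; return log-likelihood of held-out pairs.

    A: symmetric 0/1 adjacency matrix.
    test_mask: boolean (n, n) mask, upper triangle only, marking held-out pairs.
    use_blocks: False fits one density (Erdos-Renyi); True fits one density
    per pair of (known) blocks (stochastic block model).
    """
    n = A.shape[0]
    train_mask = np.triu(np.ones((n, n), dtype=bool), 1) & ~test_mask
    if use_blocks:
        k = labels.max() + 1
        # Unique integer code for each unordered pair of blocks.
        group = np.minimum.outer(labels, labels) * k + np.maximum.outer(labels, labels)
    else:
        group = np.zeros((n, n), dtype=int)
    ll = 0.0
    for g in np.unique(group[test_mask]):
        tr, te = train_mask & (group == g), test_mask & (group == g)
        p = (A[tr].sum() + 1) / (tr.sum() + 2)  # Laplace smoothing avoids log(0)
        hits = A[te].sum()
        ll += hits * np.log(p) + (te.sum() - hits) * np.log(1 - p)
    return ll

rng = np.random.default_rng(5)
n = 60
labels = np.repeat([0, 1], n // 2)
P = np.where(labels[:, None] == labels[None, :], 0.4, 0.05)
upper = np.triu(rng.random((n, n)) < P, 1)
A = (upper | upper.T).astype(int)
test_mask = np.triu(rng.random((n, n)) < 0.2, 1)  # hold out about 20% of pairs

print("ER  held-out log-likelihood:", round(heldout_loglik(A, labels, test_mask, False), 1))
print("SBM held-out log-likelihood:", round(heldout_loglik(A, labels, test_mask, True), 1))
```

A model that memorized every training pair would fit perfectly yet have no basis for scoring unseen pairs, which is the kind of overfitting that held-out evaluation is designed to expose.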

CONCLUSION

Because of the recent explosion of work in the emerging field of network neuroscience, the current usage of the term “network model” is broad and multifaceted, encompassing multiple dimensions of scientific inquiry. Many practitioners are unaware of complementary work being done by others in distinct regions of the three-dimensional space that we define. The accompanying potential for myopia increases domain-specific usage and interpretation of terms. Because of this multiplicity of meanings, we suggest that practitioners provide explicit definitions corresponding to their particular usage when appropriate, so as to avoid confusion and facilitate interdisciplinary collaboration. While such care is critical in all areas of science, it is particularly worth raising in the collective awareness when a field is young and expanding. We suggest that concerted efforts to extend current frontiers in evaluating and assessing model types, as well as bridging model types, will be necessary in order to gain a fuller understanding of how networked brain structure and function give rise to our mental capacities.

ACKNOWLEDGEMENTS

We thank Blevmore Labs for efforts in graphic design. We also thank David Lydon-Staley, Ann E. Sizemore, Eli Cornblath, and Dale Zhou for helpful comments on an earlier version of this manuscript. DSB acknowledges support from the John D. and Catherine T. MacArthur Foundation, the Alfred P. Sloan Foundation, the Army Research Laboratory and the Army Research Office through contract numbers W911NF-10-2-0022 and W911NF-14-1-0679, the National Institute of Mental Health (2-R01-DC-009209-11), the National Institute of Child Health and Human Development (1R01HD086888-01), the Office of Naval Research, and the National Science Foundation (BCS-1441502, BCS-1430087, NSF PHY-1554488, and BCS-1631550). The content is solely the responsibility of the authors and does not necessarily represent the official views of any of the funding agencies.

Footnotes

COMPETING INTERESTS

The authors declare no competing interests.

References

- [1].Jones EG Colgi, cajal and the neuron doctrine. J Hist Neurosci 8, 170–178 (1999). [DOI] [PubMed] [Google Scholar]

- [2].Nicosia V, Vertes PE, Schafer WR, Latora V Bullmore ET Phase transition in the economically modeled growth of a cellular nervous system. Proceedings of the National Academy of Sciences USA 110, 7880–7885 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Oh SW et al. A mesoscale connectome of the mouse brain. Nature 508, 207–214 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Harris NG et al. Disconnection and hyperconnectivity underlie reorganization after TBI: A rodent functional connectomic analysis. Exp Neurol 277, 124–138 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Beul SF, Grant S & Hilgetag CC A predictive model of the cat cortical connectome based on cytoar-chitecture and distance. Brain Struct Funct 220, 3167–3184 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Scholtens LH, Schmidt R, de Reus MA & van den Heuvel MP Linking macroscale graph analytical organization to microscale neuroarchitectonics in the macaque connectome. J Neurosci 34, 12192–12205 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [7].Hagmann P et al. Mapping the structural core of human cerebral cortex. PLoS Biol 6, e159 (2008). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [8].Bollobas B Graph Theory: An Introductory Course (Springer-Verlag, 1979). [Google Scholar]

- [9].Albert E & Barabasi A-L Statistical mechanics of complex networks. Reviews of Modern Physics 74 (2002). [Google Scholar]

- [10].Bassett DS, Wymbs NF, Porter MA, Mucha PJ & Grafton ST Cross-linked structure of network evolution. Chaos 24, 013112 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [11].Giusti C, Ghrist R & Bassett DS Two’s company, three (or more) is a simplex: Algebraic-topological tools for understanding higher-order structure in neural data. J Comput Neurosci 41, 1–14 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [12].Calhoun VD, Miller R, Pearlson G & Adali T The chronnectome: time-varying connectivity networks as the next frontier in fMRI data discovery. Neuron 84, 262–274 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [13].Betzel RF & Bassett DS Multi-scale brain networks. Neuroimage 160, 73–83 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [14].Norton HK et al. Detecting hierarchical 3-D genome domain reconfiguration with network modularity. Nature Methods In Press (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [15].Malmersjo S et al. Neural progenitors organize in small-world networks to promote cell proliferation. Proc Natl Acad Sci U S A 110, E1524–1532 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [16].Bettencourt LM, Stephens GJ, Ham MI & Gross GW Functional structure of cortical neuronal networks grown in vitro. Phys Rev E 75, 021915 (2007). [DOI] [PubMed] [Google Scholar]

- [17].Carmeli C, Bonifazi P, Robinson HP & Small M Quantifying network properties in multi-electrode recordings: spatiotemporal characterization and intertrial variation of evoked gamma oscillations in mouse somatosensory cortex in vitro. Front Comput Neurosci 7 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [18].Burns SP et al. Network dynamics of the brain and influence of the epileptic seizure onset zone. Proc Natl Acad Sci U S A 111, E5321–E5330 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [19].Muldoon SF, Soltesz I & Cossart R Spatially clustered neuronal assemblies comprise the microstructure of synchrony in chronically epileptic networks. Proc Natl Acad Sci USA 110, 3567–3572 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [20].Brodmann K Vergleichende Lokalisationslehre der Grosshirnrinde (Johann Ambrosius Barth, 1909). [Google Scholar]

- [21].von Economo CF & Koskinas GN Die Cytoar- chitektonik der Hirnrinde des erwachsenen Menschen (Springer, 1925). [Google Scholar]

- [22].Glasser MF et al. A multi-modal parcellation of human cerebral cortex. Nature 536, 171–178 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [23].Zalesky A et al. Whole-brain anatomical networks: does the choice of nodes matter? Neuroimage 50, 970–983 (2010). [DOI] [PubMed] [Google Scholar]

- [24].Buckner RL et al. Cortical hubs revealed by intrinsic functional connectivity: mapping, assessment of stability, and relation to Alzheimer’s disease. J Neurosci 29, 1860–1873 (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [25].Yu Q et al. Comparing brain graphs in which nodes are regions of interest or independent components: A simulation study. J Neurosci Methods 291, 61–68 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [26].Craddock RC, James GA, Holtzheimer P. E. r., Hu XP & Mayberg HS A whole brain fMRI atlas generated via spatially constrained spectral clustering. Hum Brain Mapp 33, 1914–1928 (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [27].Eickhoff SB, Thirion B, Varoquaux G & Bzdok D Connectivity-based parcellation: Critique and implications. Hum Brain Mapp 36, 4771–4792 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [28].Wig GS, Laumann TO & Petersen SE An approach for parcellating human cortical areas using resting-state correlations. Neuroimage 93, 276–291 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [29].Wang D et al. Parcellating cortical functional networks in individuals. Nat Neurosci 18, 1853–1860 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [30].Chong M et al. Individual parcellation of resting fMRI with a group functional connectivity prior. Neuroimage 156, 87–100 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [31].Bassett DS et al. Hierarchical organization of human cortical networks in health and schizophrenia. J Neurosci 28, 9239–9248 (2008). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [32].He Y, Chen ZJ & Evans AC Small-world anatomical networks in the human brain revealed by cortical thickness from MRI. Cereb Cortex 17, 2407–2419 (2007). [DOI] [PubMed] [Google Scholar]

- [33].Varshney LR, Chen BL, Paniagua E, Hall DH & Chklovskii DB Structural properties of the Caenorhabditis elegans neuronal network. PLoS Com- put Biol 7, e1001066 (2011). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [34].Bassett DS, Brown JA, Deshpande V, Carlson JM & Grafton ST Conserved and variable architecture of human white matter connectivity. Neuroimage 54, 1262–1279 (2011). [DOI] [PubMed] [Google Scholar]

- [35].Betzel RF et al. The modular organization of human anatomical brain networks: Accounting for the cost of wiring. Network Neuroscience 1, 42–68 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [36].Friston KJ Functional and effective connectivity: a review. Brain Connect 1, 13–36 (2011). [DOI] [PubMed] [Google Scholar]

- [37].Kivel M et al. Multilayer networks. J. Complex Netw. 2, 203–271 (2014). [Google Scholar]

- [38].Tewarie P et al. Structural degree predicts functional network connectivity: a multimodal resting-state fMRI and MEG study. Neuroimage 97, 296–307 (2014). [DOI] [PubMed] [Google Scholar]

- [39].Yu Q et al. Building an EEG-fMRI multi-modal brain graph: A concurrent EEG-fMRI study. Front Hum Neurosci 10, 476 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [40].Newman MEJ & Clauset A Structure and inference in annotated networks. Nature Communications 7, 11863 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [41].Murphy AC et al. Explicitly linking regional activation and function connectivity: Community structure of weighted networks with continuous annotation. arXiv 1611, 07962 (2016). [Google Scholar]

- [42].Holme P & Saramaki J Temporal networks. Phys. Rep. 519, 97–125 (2012). [Google Scholar]

- [43].Khambhati AN, Sizemore AE, Betzel RF & Bassett DS Modeling and interpreting mesoscale network dynamics. Neuroimage S1053–8119, 30500–1 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [44].Sizemore AE & Bassett DS Dynamic graph metrics: Tutorial, toolbox, and tale. Neuroimage S1053– 8119, 30564–5 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [45].Kopell NJ, Gritton HJ, Whittington MA & Kramer MA Beyond the connectome: the dynome. Neuron 83, 1319–1328 (2014).

- [46].Breakspear M Dynamic models of large-scale brain activity. Nat Neurosci 20, 340–352 (2017).

- [47].Sporns O & Betzel RF Modular brain networks. Annu Rev Psychol 67 (2016).

- [48].Sizemore AE et al. Cliques and cavities in the human connectome. J Comput Neurosci Epub Ahead of Print (2017).

- [49].Reimann MW et al. Cliques of neurons bound into cavities provide a missing link between structure and function. Front Comput Neurosci 11, 48 (2017).

- [50].Betzel RF & Bassett DS The specificity and robustness of long-distance connections in weighted, interareal connectomes. Proc Natl Acad Sci U S A In Press (2018).

- [51].Bassett DS & Bullmore ET Small-world brain networks revisited. Neuroscientist September 21, 1073858416667720 (2016).

- [52].van den Heuvel MP, Kahn RS, Goni J & Sporns O High-cost, high-capacity backbone for global brain communication. Proc Natl Acad Sci U S A 109, 11372–11377 (2012).

- [53].Bassett DS et al. Task-based core-periphery organization of human brain dynamics. PLoS Comput Biol 9, e1003171 (2013).

- [54].Borgers C & Kopell N Synchronization in networks of excitatory and inhibitory neurons with sparse, random connectivity. Neural Comput 15, 509–538 (2003).

- [55].Fries P Rhythms for cognition: Communication through coherence. Neuron 88, 220–235 (2015).

- [56].Ganmor E, Segev R & Schneidman E Sparse low-order interaction network underlies a highly correlated and learnable neural population code. Proc Natl Acad Sci U S A 108, 9679–9684 (2011).

- [57].Avena-Koenigsberger A, Misic B & Sporns O Communication dynamics in complex brain networks. Nat Rev Neurosci 19, 17–33 (2017).

- [58].Abbott LF Theoretical neuroscience rising. Neuron 60, 489–495 (2008).

- [59].Felleman DJ & Van Essen DC Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex 1, 1–47 (1991).

- [60].Scannell JW, Blakemore C & Young MP Analysis of connectivity in the cat cerebral cortex. J Neurosci 15, 1463–1483 (1995).

- [61].Young MP, Scannell JW, Burns GA & Blakemore C Analysis of connectivity: neural systems in the cerebral cortex. Rev Neurosci 5, 227–250 (1994).

- [62].Hilgetag CC, O’Neill MA & Young MP Hierarchical organization of macaque and cat cortical sensory systems explored with a novel network processor. Philos Trans R Soc Lond B Biol Sci 355, 71–89 (2000).

- [63].Watts DJ & Strogatz SH Collective dynamics of ‘small-world’ networks. Nature 393, 440–442 (1998).

- [64].Kaiser M & Hilgetag CC Nonoptimal component placement, but short processing paths, due to long-distance projections in neural systems. PLoS Comput Biol 2, e95 (2006).

- [65].Bassett DS & Bullmore E Small-world brain networks. Neuroscientist 12, 512–523 (2006).

- [66].Stam CJ Functional connectivity patterns of human magnetoencephalographic recordings: a ‘small-world’ network? Neurosci Lett 355, 25–28 (2004).

- [67].Achard S, Salvador R, Whitcher B, Suckling J & Bullmore E A resilient, low-frequency, small-world human brain functional network with highly connected association cortical hubs. J Neurosci 26, 63–72 (2006).

- [68].De Vico Fallani F et al. Brain connectivity structure in spinal cord injured: evaluation by graph analysis. Conf Proc IEEE Eng Med Biol Soc 1, 988–991 (2006).

- [69].Micheloyannis S et al. Small-world networks and disturbed functional connectivity in schizophrenia. Schizophr Res 87, 60–66 (2006).

- [70].Sporns O, Tononi G & Kotter R The human connectome: a structural description of the human brain. PLoS Comput Biol 1, e42 (2005).

- [71].Bullmore E & Sporns O Complex brain networks: graph theoretical analysis of structural and functional systems. Nat Rev Neurosci 10, 186–198 (2009).

- [72].van den Heuvel MP, Bullmore ET & Sporns O Comparative connectomics. Trends Cogn Sci 20, 345–361 (2016).

- [73].Feldt S, Bonifazi P & Cossart R Dissecting functional connectivity of neuronal microcircuits: experimental and theoretical insights. Trends Neurosci 34, 225–236 (2011).

- [74].Sporns O Contributions and challenges for network models in cognitive neuroscience. Nat Neurosci 17, 652–660 (2014).

- [75].Medaglia JD, Lynall ME & Bassett DS Cognitive network neuroscience. J Cogn Neurosci 27, 1471–1491 (2015).

- [76].Stam CJ Modern network science of neurological disorders. Nat Rev Neurosci 15, 683–695 (2014).

- [77].Braun U et al. From maps to multi-dimensional network mechanisms of mental disorders. Neuron 97, 14–31 (2018).

- [78].Lago-Fernandez LF, Huerta R, Corbacho F & Siguenza JA Fast response and temporal coherent oscillations in small-world networks. Phys Rev Lett 84, 2758–2761 (2000).

- [79].Wu Y, Gong Y & Wang Q Autaptic activity-induced synchronization transitions in Newman-Watts network of Hodgkin-Huxley neurons. Chaos 25, 043113 (2015).

- [80].Kim SY & Lim W Fast sparsely synchronized brain rhythms in a scale-free neural network. Phys Rev E 92, 022717 (2015).

- [81].Zhu J, Chen Z & Liu X Effects of distance-dependent delay on small-world neuronal networks. Phys Rev E 93, 042417 (2016).

- [82].Fortuna L, Frasca M, La Rosa M & Spata A Dynamics of neuron populations in noisy environments. Chaos 15, 14102 (2005).

- [83].Stefanescu RA & Jirsa VK A low dimensional description of globally coupled heterogeneous neural networks of excitatory and inhibitory neurons. PLoS Comput Biol 4, e1000219 (2008).

- [84].Breakspear M, Heitmann S & Daffertshofer A Generative models of cortical oscillations: neurobiological implications of the Kuramoto model. Front Hum Neurosci 4, 190 (2010).

- [85].Melozzi F, Woodman MM, Jirsa VK & Bernard C The virtual mouse brain: A computational neuroinformatics platform to study whole mouse brain dynamics. eNeuro 4, ENEURO.0111-17.2017 (2017).

- [86].Bezgin G, Solodkin A, Bakker R, Ritter P & McIntosh AR Mapping complementary features of cross-species structural connectivity to construct realistic “Virtual Brains”. Hum Brain Mapp 38, 2080–2093 (2017).

- [87].Ritter P, Schirner M, McIntosh AR & Jirsa VK The virtual brain integrates computational modeling and multimodal neuroimaging. Brain Connect 3, 121–145 (2013).

- [88].Roy D et al. Using the virtual brain to reveal the role of oscillations and plasticity in shaping brain’s dynamical landscape. Brain Connect 4, 791–811 (2014).

- [89].Falcon MI, Jirsa V & Solodkin A A new neuroinformatics approach to personalized medicine in neurology: The Virtual Brain. Curr Opin Neurol 29, 429–436 (2016).

- [90].Gu S et al. Controllability of structural brain networks. Nat Commun 6, 8414 (2015).

- [91].Kim JZ et al. Role of graph architecture in controlling dynamical networks with applications to neural systems. Nat Phys Epub Ahead of Print (2018).

- [92].Yan G et al. Network control principles predict neuron function in the Caenorhabditis elegans connectome. Nature 550, 519–523 (2017).

- [93].Betzel RF & Bassett DS Generative models for network neuroscience: prospects and promise. J R Soc Interface 14, 20170623 (2017).

- [94].Zhang X, Moore C & Newman MEJ Random graph models for dynamic networks. Eur Phys J B 90, 200 (2017).

- [95].Junuthula RR, Haghdan M, Xu KS & Devabhaktuni VK The block point process model for continuous-time event-based dynamic networks. arXiv:1711.10967 (2017).

- [96].Gerstner W, Sprekeler H & Deco G Theory and simulation in neuroscience. Science 338, 60–65 (2012).

- [97].Gao P & Ganguli S On simplicity and complexity in the brave new world of large-scale neuroscience. Curr Opin Neurobiol 32, 148–155 (2015).

- [98].Eliasmith C & Trujillo O The use and abuse of large-scale brain models. Curr Opin Neurobiol 25, 1–6 (2014).

- [99].Bassett DS et al. Efficient physical embedding of topologically complex information processing networks in brains and computer circuits. PLoS Comput Biol 6, e1000748 (2010).

- [100].Kailath T Linear Systems (Prentice-Hall, Inc., 1980).

- [101].Liu Y-Y, Slotine J-J & Barabasi A-L Controllability of complex networks. Nature 473, 167–173 (2011).

- [102].Taylor PN et al. Optimal control based seizure abatement using patient derived connectivity. Front Neurosci 9, 202 (2015).

- [103].Tang E & Bassett DS Control of dynamics in brain networks. arXiv:1701.01531 (2017).

- [104].Pinto DJ, Jones SR, Kaper TJ & Kopell N Analysis of state-dependent transitions in frequency and long-distance coordination in a model oscillatory cortical circuit. J Comput Neurosci 15, 283–298 (2003).

- [105].Reimann MW, Horlemann AL, Ramaswamy S, Muller EB & Markram H Morphological diversity strongly constrains synaptic connectivity and plasticity. Cereb Cortex 27, 4570–4585 (2017).

- [106].Cherniak C Local optimization of neuron arbors. Biol Cybern 66, 503–510 (1992).

- [107].Borisyuk R, Al Azad AK, Conte D, Roberts A & Soffe SR A developmental approach to predicting neuronal connectivity from small biological datasets: a gradient-based neuron growth model. PLoS One 9, e89461 (2014).

- [108].Sautois B, Soffe SR, Li WC & Roberts A Role of type-specific neuron properties in a spinal cord motor network. J Comput Neurosci 23, 59–77 (2007).

- [109].Cortes JM et al. Short-term synaptic plasticity in the deterministic Tsodyks-Markram model leads to unpredictable network dynamics. Proc Natl Acad Sci U S A 110, 16610–16615 (2013).

- [110].Brody CD Correlations without synchrony. Neural Comput 11, 1537–1551 (1999).

- [111].Brody CD Disambiguating different covariation types. Neural Comput 11, 1527–1535 (1999).

- [112].Gabrieli JD, Ghosh SS & Whitfield-Gabrieli S Prediction as a humanitarian and pragmatic contribution from human cognitive neuroscience. Neuron 85, 11–26 (2015).

- [113].Chung JE et al. A fully automated approach to spike sorting. Neuron 95, 1381–1394.e6 (2017).

- [114].van Diessen E et al. Opportunities and methodological challenges in EEG and MEG resting state functional brain network research. Clin Neurophysiol 126, 1468–1481 (2015).

- [115].Chu CJ et al. Emergence of stable functional networks in long-term human electroencephalography. J Neurosci 32, 2703–2713 (2012).

- [116].Khambhati AN, Davis KA, Lucas TH, Litt B & Bassett DS Virtual cortical resection reveals push-pull network control preceding seizure evolution. Neuron 91, 1170–1182 (2016).

- [117].Graham D & Rockmore D The packet switching brain. J Cogn Neurosci 23, 267–276 (2011).

- [118].Tang A et al. A maximum entropy model applied to spatial and temporal correlations from cortical networks in vitro. J Neurosci 28, 505–518 (2008).

- [119].Granot-Atedgi E, Tkacik G, Segev R & Schneidman E Stimulus-dependent maximum entropy models of neural population codes. PLoS Comput Biol 9, e1002922 (2013).

- [120].Watanabe T et al. A pairwise maximum entropy model accurately describes resting-state human brain networks. Nat Commun 4, 1370 (2013).

- [121].Gu S et al. The energy landscape of neurophysiological activity implicit in brain network structure. Sci Rep 8, 2507 (2018).

- [122].Honey C et al. Predicting human resting-state functional connectivity from structural connectivity. Proc Natl Acad Sci U S A 106, 2035–2040 (2009).

- [123].Hermundstad AM et al. Structural foundations of resting-state and task-based functional connectivity in the human brain. Proc Natl Acad Sci U S A 110, 6169–6174 (2013).