Abstract

Based on the electrohysterogram (EHG), this paper designed a new method using wavelet-based nonlinear features and a stacked sparse autoencoder for preterm birth detection. For each sample, a three-level wavelet decomposition of each time series was performed. Approximation coefficients at level 3 and detail coefficients at levels 1, 2 and 3 were extracted, and the sample entropy of each of these four coefficient sets was computed as a feature. The classifier was constructed based on a stacked sparse autoencoder, which was further compared with an extreme learning machine and a support vector machine in terms of EHG classification performance. The experimental results reveal that the classifier based on the stacked sparse autoencoder performed better than the other two classifiers, with an accuracy of 90%, a sensitivity of 92% and a specificity of 88%. The results indicate that the proposed method could be effective for detecting preterm birth from the electrohysterogram and that the framework designed in this work presents higher discriminability than the other techniques.

Introduction

Preterm birth is defined as birth before 37 weeks of pregnancy. The World Health Organization has reported that about 15 million newborns are preterm every year, which accounts for more than 10% of all babies born around the world [1]. Preterm birth is a dominant cause of morbidity and mortality during both the perinatal and early neonatal periods. In addition, the complications of preterm birth, such as significant neurological, mental, behavioral and pulmonary problems, have a considerable adverse impact on families and the economy [2]. Preterm birth is therefore both a major medical and economic challenge. One of the keys to reducing the incidence of preterm birth would be its detection or prediction using effective methods.

Continuous efforts have been made for many years toward diagnosing the onset of early labor in order to detect or predict preterm birth. As a result, various clinical techniques have been designed to monitor labor, such as index detection based on biochemical indicators, infection immunity indices and biophysical indicators. To prevent spontaneous preterm birth in asymptomatic high-risk women, Crane and Hutchens [3] investigated the predictive ability of cervical length measured by transvaginal ultrasonography. In order to predict the onset of preterm birth, Hudić et al. [4] studied the maternal serum concentration of progesterone-induced blocking factor. Compared with those methods, recording and analyzing electrohysterogram (EHG) signals is a newer noninvasive technique for diagnosing preterm birth, which has been shown to be of interest for better monitoring of the course of pregnancy [5, 6].

In EHG signal processing, extracting features from EHGs is an important step that captures information about uterine contractility. Among the EHG analysis approaches, linear methods in both the time and frequency domains were the first to be adopted for feature extraction. Maner et al. [7] studied the ability of the power density spectrum peak frequency to classify EHG signals recorded on pregnant women who were 48, 24, 12 and 8 hours from term delivery, and 6, 4, 2 and 1 day(s) from preterm delivery. Some time-frequency analysis methods were also adopted to characterize EHG signals. Arora et al. [8] extracted the relative wavelet energy from each detail based on a 4-level decomposition. Hassan et al. [9] employed wavelet coherence to detect the synchronization of uterine electrical activity in labor. In an attempt to better characterize the EHG, nonlinear characteristics have also been explored. Radomski et al. [10] adopted sample entropy to assess regularity in EHGs. Diab et al. [11] explored the performance of four nonlinear methods (time reversibility, sample entropy, Lyapunov exponent and delay vector variance) for detecting preterm birth. It is known that the uterus is composed of a huge number of intricately interconnected cells, which makes the uterus a complex, non-stationary and non-linear dynamic system [12]. Therefore, time-frequency analysis methods combined with non-linear signal processing techniques may achieve better results in analyzing EHG signals. Among these methods, wavelets can capture the subtle changes in transient signals, and entropy can quantify signal complexity [13–15].

To classify and recognize EHG signals successfully, a reliable classification method must also be chosen carefully. Recently, many recognition algorithms, including artificial neural networks, decision trees, Bayesian classifiers, support vector machines, extreme learning machines and deep learning, have been proposed for pattern recognition. Maner and Garfield [2] applied an artificial neural network to EHG data to distinguish human term and preterm labor and achieved a classification accuracy of 80%. Lu et al. [16] used a multilayer perceptron to distinguish between 11 preterm signals and 28 term signals and reported a classification accuracy of 64.1%. Moslem et al. [17] used a competitive neural network to classify EHG signals recorded on 32 women into a pregnancy group and a labor group, achieving a classification accuracy of 78.2%. Moslem et al. [18] applied a support vector machine to discriminate between 137 pregnancy contraction signals and 76 labor signals and obtained an overall classification accuracy of 83.4%. However, most of these recognition technologies are supervised learning methods, which need millions of labeled samples for training [19]. In addition, a neural network can easily become trapped in local optima, which leads to poor generalization. The choice of recognition model therefore remains a big challenge for this complex classification problem. The sparse autoencoder (SAE), a newer unsupervised feature learning method, possesses strong representation ability and can reveal high-level representations from complex data. The stacked sparse autoencoder (SSAE), a kind of deep neural network consisting of multiple layers of SAE, has achieved remarkable results in many fields [20–24].

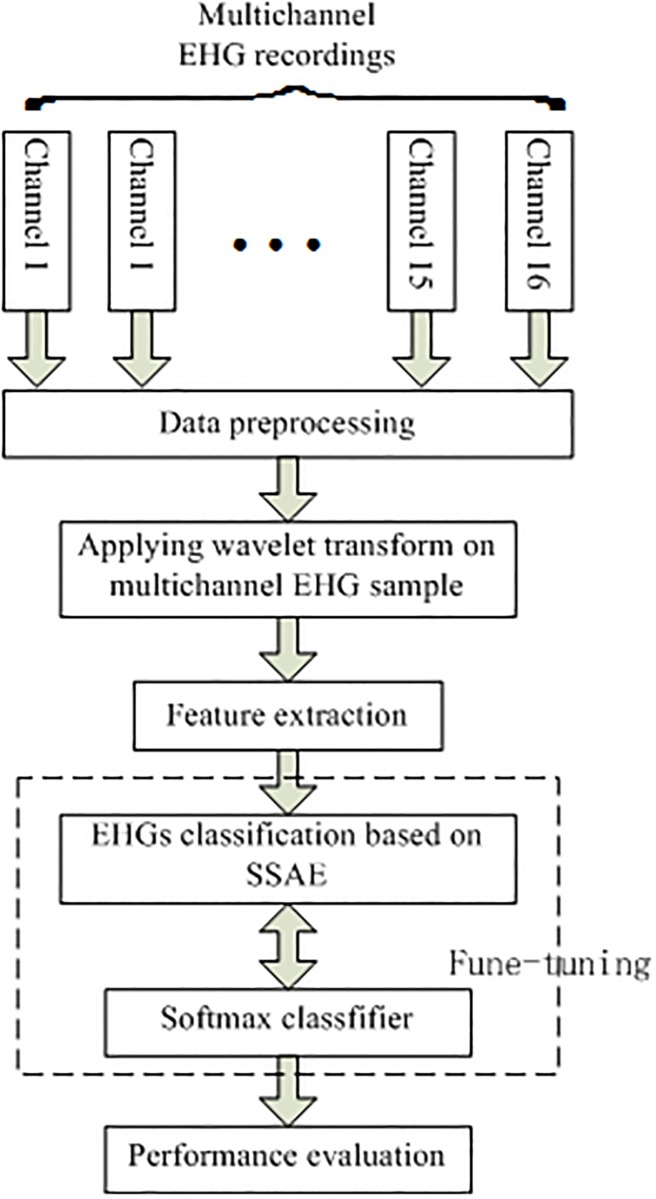

In the present work, a novel method is proposed for classifying pregnancy EHG signals and labor EHG signals based on wavelet transform, sample entropy and a stacked sparse autoencoder. The rest of this paper is organized as follows. The dataset used in this work and the methods of wavelet transform, sample entropy and stacked sparse autoencoder are described in Section 2. The experimental procedure and the results obtained are discussed in Section 3. Section 4 concludes this paper. The block diagram of the designed method is presented in Fig 1.

Fig 1. The generalized block diagram of the proposed method.

Materials and methods

Data description

The EHG recordings employed in this work derive from the Icelandic 16-electrode Electrohysterogram Database of PhysioNet [25, 26]. This dataset comprises 112 pregnancy EHG recordings and 10 labor EHG recordings. All 122 EHG recordings were taken from 45 participants using a 4-by-4 electrode matrix positioned on the woman's abdomen. Pregnancy recordings were performed on participants who were in the third trimester and not suspected to be in labor. The measurements were made at Akureyri Primary Health Care Centre and Landspitali University Hospital. Labor recordings were performed on participants who were suspected to be in labor within 24 hours. The duration of the pregnancy recordings was 19–86 minutes and the duration of the labor recordings was 8–64 minutes. The signal sampling rate was 200 Hz. The recording device used an anti-aliasing filter with a cut-off frequency of 100 Hz. The tocographic signals were obtained simultaneously during the recordings with a tocodynamometer attached to the woman's abdomen. The protocol was approved by the National Bioethics Committee in Iceland (VSN 02-006-V4).

Wavelet transform and entropy calculation

Wavelet transform, a powerful signal processing technique, was first proposed to overcome the shortcomings of the Fourier transform. It is a fast multi-resolution decomposition algorithm that decomposes a time series into a set of components by using orthogonal wavelet bases [27]. It is equivalent to recursively decomposing the time series using a high-pass and low-pass filter pair. The bandwidth of the high-pass filter is equal to that of the low-pass filter. Therefore, after each level of decomposition, the sampling frequency is halved. The low-frequency component is then recursively decomposed to produce the components of the next stage [28].

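Let $\psi \in L^2(\mathbb{R})$ be a mother wavelet satisfying the admissibility condition $\int_{\mathbb{R}} \psi(t)\,dt = 0$. The wavelet family can be expressed as:

$$\psi_{s,c}(t) = \frac{1}{\sqrt{|s|}}\,\psi\!\left(\frac{t-c}{s}\right) \tag{1}$$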

In formula (1), s is the scale parameter and c is the translation parameter.

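Then the continuous wavelet transform W(s, c) of x(t) can be expressed as:

$$W(s,c) = \frac{1}{\sqrt{|s|}} \int_{\mathbb{R}} x(t)\,\psi^{*}\!\left(\frac{t-c}{s}\right) dt \tag{2}$$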

In formula (2), * indicates complex conjugation.

Then the discrete wavelet transform of the time series x(n) (n = 1, …, N) can be obtained from the continuous wavelet transform by restricting s and c to a discrete lattice ($s = 2^{-j}$, $c = 2^{-j}k$):

$$cA_{j,k} = \sum_{n} l(n-2k)\,cA_{j-1,n}, \qquad cD_{j,k} = \sum_{n} h(n-2k)\,cA_{j-1,n} \tag{3}$$

In formula (3), j refers to the wavelet scale and k refers to the translation factor. l(n) denotes the low-pass filter and h(n) denotes the high-pass filter. $cA_{j,k}$ and $cD_{j,k}$ represent the coefficients of the approximation components and detail components respectively, with $cA_{0,n} = x(n)$.

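The frequency band ranges contained in the components $D_j(k)$ and $A_J(k)$, which are obtained by reconstruction, are as follows.

$$\begin{cases} D_j(k):\ \left[2^{-(j+1)} f_s,\ 2^{-j} f_s\right] \\ A_J(k):\ \left[0,\ 2^{-(J+1)} f_s\right] \end{cases} \qquad j = 1, 2, \ldots, J \tag{4}$$

In formula (4), $f_s$ is the sampling frequency and J refers to the maximal scale.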
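As a worked instance of these band ranges, the short Python sketch below assumes the idealised dyadic split in which $D_j$ spans $[f_s/2^{j+1}, f_s/2^{j}]$ and $A_J$ spans $[0, f_s/2^{J+1}]$, and evaluates it for the 200 Hz sampling rate and 3 decomposition levels used in this work. The function name `dwt_bands` is hypothetical, and real wavelet filters overlap somewhat across these nominal boundaries.

```python
def dwt_bands(fs, levels):
    """Nominal frequency bands (Hz) of an idealised dyadic wavelet decomposition."""
    bands = {}
    for j in range(1, levels + 1):
        # Detail component D_j: [fs / 2^(j+1), fs / 2^j]
        bands[f"D{j}"] = (fs / 2 ** (j + 1), fs / 2 ** j)
    # Approximation component A_J: [0, fs / 2^(J+1)]
    bands[f"A{levels}"] = (0.0, fs / 2 ** (levels + 1))
    return bands


bands = dwt_bands(fs=200.0, levels=3)
for name, (low, high) in bands.items():
    print(f"{name}: {low:g} to {high:g} Hz")
```

With these inputs the nominal bands are 50 to 100 Hz for D1, 25 to 50 Hz for D2, 12.5 to 25 Hz for D3 and 0 to 12.5 Hz for A3.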

The time series x(n) can be expressed as the sum of all components as follows.

$$x(n) = D_1(n) + A_1(n) = D_1(n) + D_2(n) + A_2(n) = \sum_{j=1}^{J} D_j(n) + A_J(n) \tag{5}$$
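The decomposition can be illustrated with a minimal pure-Python sketch of a 3-level Haar (db1) transform. It is not the implementation used in this work: the function names are hypothetical, the input is a synthetic sine wave, and sign and boundary conventions may differ from those of a wavelet toolbox. The sketch shows that the coefficient sets halve in length at each level and that the original series is recovered exactly from them.

```python
import math


def haar_step(x):
    """One level of the orthonormal Haar DWT: returns (approximation, detail)."""
    s = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / s for i in range(0, len(x) - 1, 2)]
    detail = [(x[i] - x[i + 1]) / s for i in range(0, len(x) - 1, 2)]
    return approx, detail


def haar_wavedec(x, levels):
    """Return [cA_J, cD_J, ..., cD_1]; len(x) must be divisible by 2**levels."""
    if len(x) % (2 ** levels) != 0:
        raise ValueError("signal length must be divisible by 2**levels")
    approx, details = list(x), []
    for _ in range(levels):
        approx, detail = haar_step(approx)
        details.append(detail)
    return [approx] + details[::-1]


def haar_waverec(coeffs):
    """Invert haar_wavedec, starting from the coarsest level."""
    s = math.sqrt(2.0)
    approx = coeffs[0]
    for detail in coeffs[1:]:
        rebuilt = []
        for a, d in zip(approx, detail):
            rebuilt.extend([(a + d) / s, (a - d) / s])
        approx = rebuilt
    return approx


# Synthetic 0.5 Hz sine sampled at 200 Hz, 4096 points (illustrative only).
x = [math.sin(2 * math.pi * 0.5 * n / 200.0) for n in range(4096)]
coeffs = haar_wavedec(x, levels=3)
print([len(c) for c in coeffs])  # lengths of cA3, cD3, cD2, cD1
max_error = max(abs(a - b) for a, b in zip(x, haar_waverec(coeffs)))
print(max_error)
```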

In this work, sample entropy is used because it is an effective measure of signal complexity. A detailed description of the sample entropy method can be found in Ref. [29]. The definition of sample entropy is as follows.

$$\mathrm{SampEn}(m, r) = -\ln\frac{B^{m+1}(r)}{B^{m}(r)} \tag{6}$$

In formula (6), m is the embedding dimension and r is the vector comparison threshold. $B^{m}(r)$ stands for the probability of two sequences matching for m points, and $B^{m+1}(r)$ is the probability of two sequences matching for m + 1 points.
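A direct, unoptimised way to evaluate this definition is sketched below in Python. The defaults m = 2 and r = 0.2 times the standard deviation are common choices in the literature and are assumptions here, since the values used in this work are not stated; the function name is hypothetical. Matches are counted with the Chebyshev distance and self-matches are excluded.

```python
import math
import random


def sample_entropy(x, m=2, r=None):
    """Sample entropy -ln(A / B) of a sequence x (O(N^2) reference version)."""
    n = len(x)
    if r is None:
        mean = sum(x) / n
        sd = math.sqrt(sum((v - mean) ** 2 for v in x) / n)
        r = 0.2 * sd  # assumed tolerance: 20% of the standard deviation

    def count_matches(length):
        # Count template pairs (i < j) whose points all differ by at most r.
        count = 0
        for i in range(n - m):
            for j in range(i + 1, n - m):
                if all(abs(x[i + k] - x[j + k]) <= r for k in range(length)):
                    count += 1
        return count

    b = count_matches(m)      # matches for m points
    a = count_matches(m + 1)  # matches for m + 1 points
    if a == 0 or b == 0:
        return float("inf")   # undefined when no matches are found
    return -math.log(a / b)


# A regular signal should score lower than an irregular one.
periodic = [math.sin(2 * math.pi * n / 20.0) for n in range(200)]
rng = random.Random(0)
noise = [rng.random() for _ in range(200)]
se_periodic = sample_entropy(periodic)
se_noise = sample_entropy(noise)
print(se_periodic, se_noise)
```

The quadratic running time is acceptable for short coefficient sequences; a vectorised or tree-based variant would be preferable for long recordings.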

Sparse autoencoder

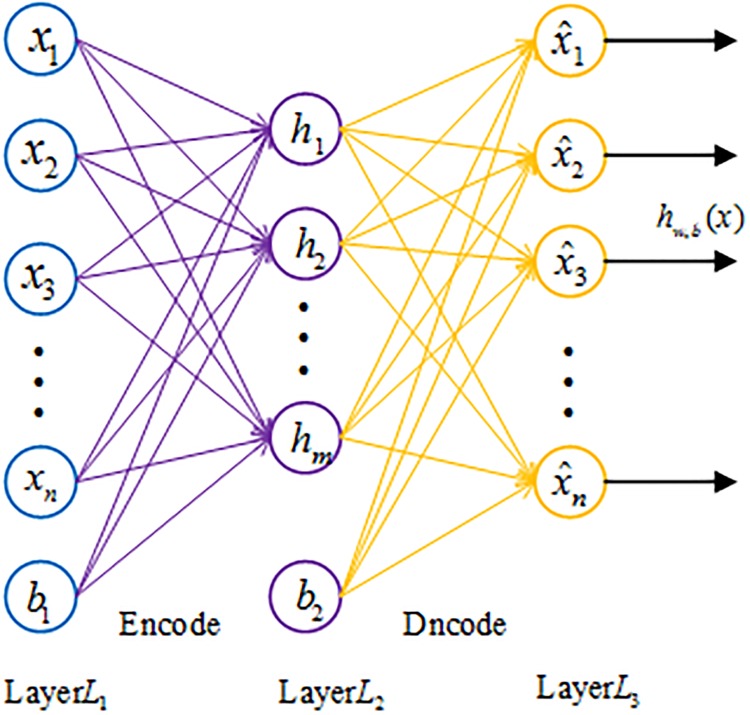

As an unsupervised learning method, the autoencoder is a multi-layer feedforward neural network that is trained with backpropagation to reproduce its input. The autoencoder aims to learn a high-level representation from the input data with minimum reconstruction loss. The basic structure of a three-layer autoencoder is shown in Fig 2. A sparse autoencoder is an extension of the autoencoder that achieves a specific representation ability by introducing a sparsity constraint on the hidden layer [30].

Fig 2. The structure of an autoencoder.

The algorithm steps for the sparse autoencoder are given as follows.

- Step 1: Given a dataset $X = \{x^{(1)}, x^{(2)}, \ldots, x^{(i)}, \ldots, x^{(N)}\}$ with $x^{(i)} \in \mathbb{R}^{M}$, each $x^{(i)}$ is first mapped to the hidden layer by means of a nonlinear activation function:

  $$Z = f(W_1 x + b_1) \tag{7}$$

  In formula (7), f represents the encoder activation function, and $W_1$ and $b_1$ represent the weight matrix and the bias of the encoder, respectively.

  In the decoding process, the hidden representation Z is mapped back to a reconstructed vector $h_{W,b}(x)$ in the input space by the activation function between the hidden and output layers:

  $$h_{W,b}(x) = f(W_2 Z + b_2) \tag{8}$$

  In formula (8), f indicates the decoder activation function, and $W_2$ and $b_2$ signify the weight matrix and the bias of the decoder, respectively. In formulas (7) and (8), N is the number of data samples and M is the length of each data sample.

- Step 2: In order to reconstruct the input data from the hidden representation, the parameter set $\{W_1, b_1, W_2, b_2\}$ is optimized by minimizing the error between the input data and the reconstructed data. In addition, for the SAE, a sparsity constraint is introduced on the hidden layer. Therefore, the cost function of the SAE can be given as:

  $$J(W,b) = \frac{1}{N}\sum_{i=1}^{N}\frac{1}{2}\left\|h_{W,b}(x^{(i)}) - x^{(i)}\right\|^{2} + \frac{\lambda}{2}\sum_{l=1}^{2}\sum_{i=1}^{s_l}\sum_{j=1}^{s_{l+1}}\left(W_{ji}^{(l)}\right)^{2} + \beta\sum_{j=1}^{s_2}\mathrm{KL}\left(\rho \parallel \hat{\rho}_j\right) \tag{9}$$

  The meanings of the notations in formula (9) are shown in Table 1. $\mathrm{KL}(\rho \parallel \hat{\rho}_j)$ can be defined as:

  $$\mathrm{KL}\left(\rho \parallel \hat{\rho}_j\right) = \rho\log\frac{\rho}{\hat{\rho}_j} + (1-\rho)\log\frac{1-\rho}{1-\hat{\rho}_j} \tag{10}$$

- Step 3: During training, the parameters W and b need to be updated to their optimal values, which is realized by minimizing J(W, b). This can be solved with the backpropagation algorithm, where stochastic gradient descent is adopted for training. The parameters W and b can be updated in each iteration as follows:

  $$W_{ji}^{(l)} := W_{ji}^{(l)} - \varepsilon\,\frac{\partial J(W,b)}{\partial W_{ji}^{(l)}} \tag{11}$$

  $$b_{j}^{(l)} := b_{j}^{(l)} - \varepsilon\,\frac{\partial J(W,b)}{\partial b_{j}^{(l)}} \tag{12}$$

  In formulas (11) and (12), l represents the lth layer of the network; the parameter ε indicates the learning rate; i and j denote the ith and jth neurons of two neighboring layers, respectively.

Table 1. The notations utilized in the cost function.

| Notation | Meaning |
|---|---|
| $N$ | Number of data samples |
| $\lambda$ | Weight decay parameter |
| $\beta$ | The weight of the sparsity penalty term |
| $\rho$ | Sparsity parameter defining the sparsity level |
| $\hat{\rho}_j$ | The average activation of hidden neuron j |
| $s_l$ | Number of neurons in layer l |
| $x^{(i)}$ | Input feature vector |
| $h_{W,b}(x^{(i)})$ | Output feature vector |
| $\mathrm{KL}(\rho \parallel \hat{\rho}_j)$ | Kullback-Leibler divergence between $\rho$ and $\hat{\rho}_j$ |
| $W_{ji}^{(l)}$ | The weight on the connection between neuron j in layer l + 1 and neuron i in layer l |

In general, the forward pass calculates the average activation to obtain the sparsity error, and the parameters are then updated using the backpropagation algorithm. After that, effective high-level representations can be obtained by the SAE [19].
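To make the forward pass and the sparsity error concrete, the following Python sketch evaluates a cost of the kind described in Step 2 and Table 1 for a tiny autoencoder: mean reconstruction error, plus weight decay, plus a Kullback-Leibler sparsity penalty on the average hidden activations. It is a minimal sketch under stated assumptions, not the implementation used in this work: a sigmoid is assumed for both encoder and decoder, all function names are hypothetical, and mapping the Table 2 settings (0.05, 8, 0.001) onto ρ, β and λ is an assumption.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def layer(W, b, x):
    """f(Wx + b) with a sigmoid activation; W is a list of rows."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bi)
            for row, bi in zip(W, b)]


def kl(rho, rho_hat):
    """Kullback-Leibler divergence between Bernoulli(rho) and Bernoulli(rho_hat)."""
    return (rho * math.log(rho / rho_hat)
            + (1 - rho) * math.log((1 - rho) / (1 - rho_hat)))


def sae_cost(X, W1, b1, W2, b2, lam, beta, rho):
    n = len(X)
    hidden = [layer(W1, b1, x) for x in X]        # encoder output Z
    recon = [layer(W2, b2, z) for z in hidden]    # decoder output h(x)
    error = sum(0.5 * sum((h - xi) ** 2 for h, xi in zip(r, x))
                for r, x in zip(recon, X)) / n
    decay = 0.5 * lam * sum(w * w for W in (W1, W2) for row in W for w in row)
    # Average activation of each hidden neuron over the dataset.
    rho_hat = [sum(z[j] for z in hidden) / n for j in range(len(b1))]
    sparsity = beta * sum(kl(rho, rh) for rh in rho_hat)
    return error + decay + sparsity


# Toy check: 2 inputs, 3 hidden neurons, all-zero parameters.
X = [[0.5, 0.5], [0.5, 0.5]]
W1, b1 = [[0.0, 0.0] for _ in range(3)], [0.0, 0.0, 0.0]
W2, b2 = [[0.0, 0.0, 0.0] for _ in range(2)], [0.0, 0.0]
cost = sae_cost(X, W1, b1, W2, b2, lam=0.001, beta=8.0, rho=0.05)
print(cost)
```

With all-zero parameters every activation equals 0.5, so the reconstruction and weight decay terms vanish and the cost reduces to the sparsity penalty alone, which makes the role of that term easy to inspect.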

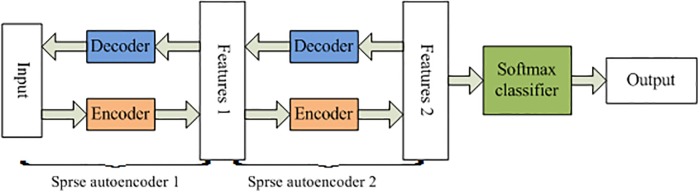

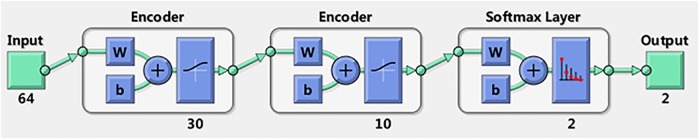

Stacked sparse autoencoder

The SSAE is a deep neural network composed of multiple layers of SAE. It adopts an unsupervised greedy layer-wise algorithm to adjust the weights and uses the backpropagation algorithm to fine-tune the entire deep neural network [31]. A detailed description of the SSAE can be found in Ref. [32]. Fig 3 shows the SSAE structure combined with a softmax classifier.

Fig 3. The SSAE used in this work with a softmax classifier.

Results and discussion

Data preprocessing

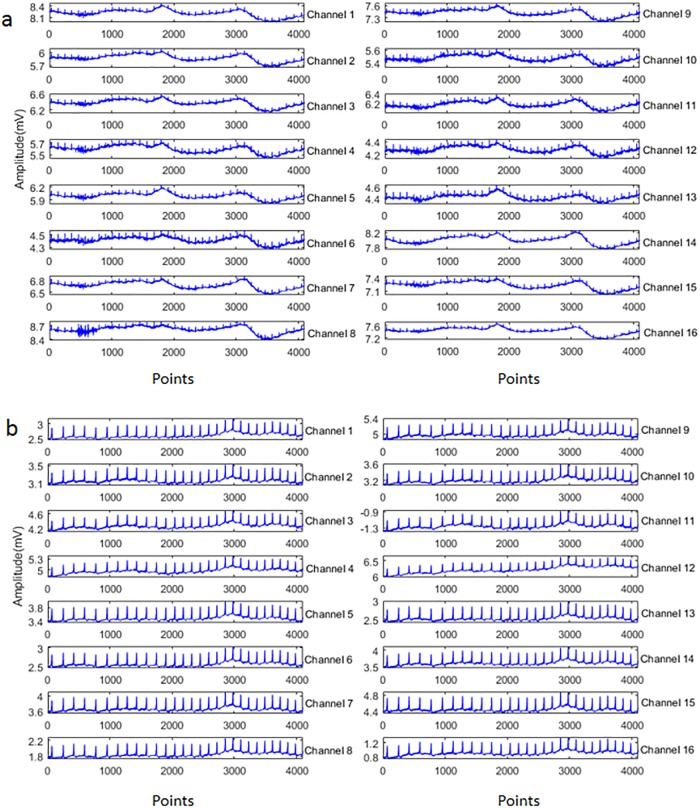

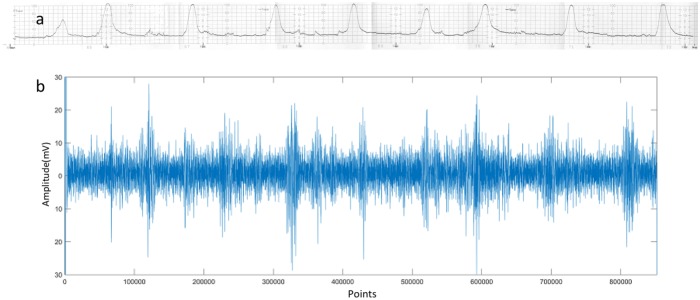

It is known that the most useful frequency content of the EHG lies between 0.1 and 3 Hz. The obtained EHG recordings often contain irrelevant information such as the maternal electrocardiogram, movement artifacts and baseline drift [33]. Fig 4 presents two original EHG instances from the pregnancy state and the labor state, respectively. Therefore, before feature extraction, the following steps were applied to the raw EHG recordings. First, all raw EHG recordings were filtered between 0.1 and 3 Hz using a Butterworth filter. Then, the bursts reflecting uterine contractile activity were manually segmented from each recording based on the tocographic signals. Finally, 150 pregnancy EHG samples and 150 labor EHG samples were segmented from these bursts. In this study, all 16 time series in the EHG recordings were used. The length of each time series in each sample is 4096 points, which corresponds to about 20.5 s at the 200 Hz sampling rate. Fig 5 shows the tocographic signal and the corresponding preprocessed time series of one channel.

Fig 4. Samples of raw 16-channel EHGs.

(a) Pregnancy EHG instance (b) Labor EHG instance.

Fig 5. The tocographic signal and the corresponding preprocessed time series of one channel.

(a) The tocographic signal obtained by tocographic measurement (each small square is 30 seconds). (b) One simultaneously recorded channel after filtering between 0.1 and 3 Hz.

Features extraction

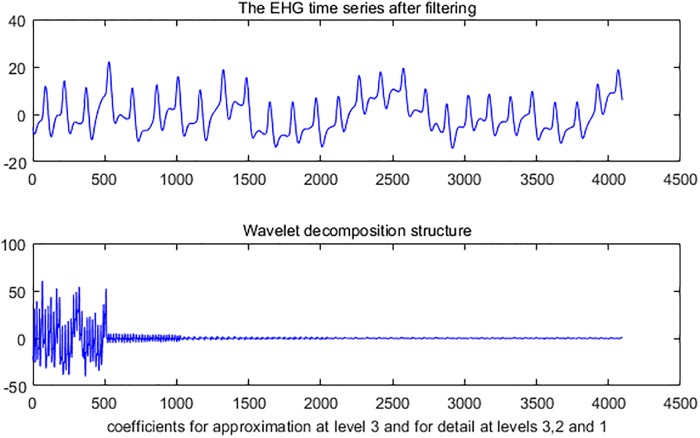

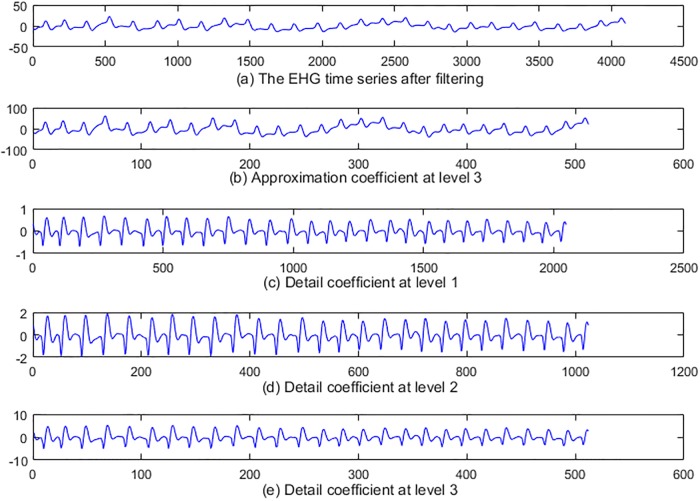

The steps for feature extraction are as follows. First, a 3-level wavelet decomposition of each time series was performed using the db1 wavelet. Fig 6 illustrates the result of the 3-level wavelet decomposition of one time series. Second, the level-3 approximation coefficients of the time series were computed; Fig 7(b) shows the level-3 approximation coefficients. The detail coefficients at levels 1, 2 and 3 were then extracted from the wavelet decomposition structure; Fig 7(c), 7(d) and 7(e) illustrate the detail coefficients at levels 1, 2 and 3, respectively. Finally, the sample entropy of the detail coefficients at levels 1, 2 and 3 and of the level-3 approximation coefficients was computed. Each time series thus yields 4 sample entropy values, so a sample with 16 time series gives a feature vector of 16 × 4 = 64 parameters.
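The layout of the resulting feature vector can be sketched as follows in Python. The function `build_feature_vector` and the ordering of features (channel by channel, approximation first) are assumptions made for illustration. To keep the sketch short, the wavelet decomposition and the entropy measure are passed in as functions, and the two `toy_` helpers are placeholders only, not a real 3-level DWT or sample entropy.

```python
def build_feature_vector(channels, decompose, entropy):
    """Concatenate one entropy value per coefficient set per channel."""
    features = []
    for series in channels:
        # decompose() is expected to return four sets: cA3, cD3, cD2, cD1.
        for coefficient_set in decompose(series):
            features.append(entropy(coefficient_set))
    return features


def toy_decompose(series):
    """Placeholder for a 3-level DWT: splits the series into four chunks."""
    q = len(series) // 4
    return [series[0:q], series[q:2 * q], series[2 * q:3 * q], series[3 * q:]]


def toy_entropy(values):
    """Placeholder for sample entropy: returns the range of the values."""
    return max(values) - min(values)


# 16 synthetic channels of 32 points each (illustrative data only).
sample = [[float(((c + 1) * n) % 7) for n in range(32)] for c in range(16)]
vector = build_feature_vector(sample, toy_decompose, toy_entropy)
print(len(vector))  # 16 channels x 4 coefficient sets = 64
```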

Fig 6. The decomposition of one EHG time series after filtering.

Fig 7. Approximation signal at level 3 and detail signals at levels 1, 2 and 3.

SSAE classification results

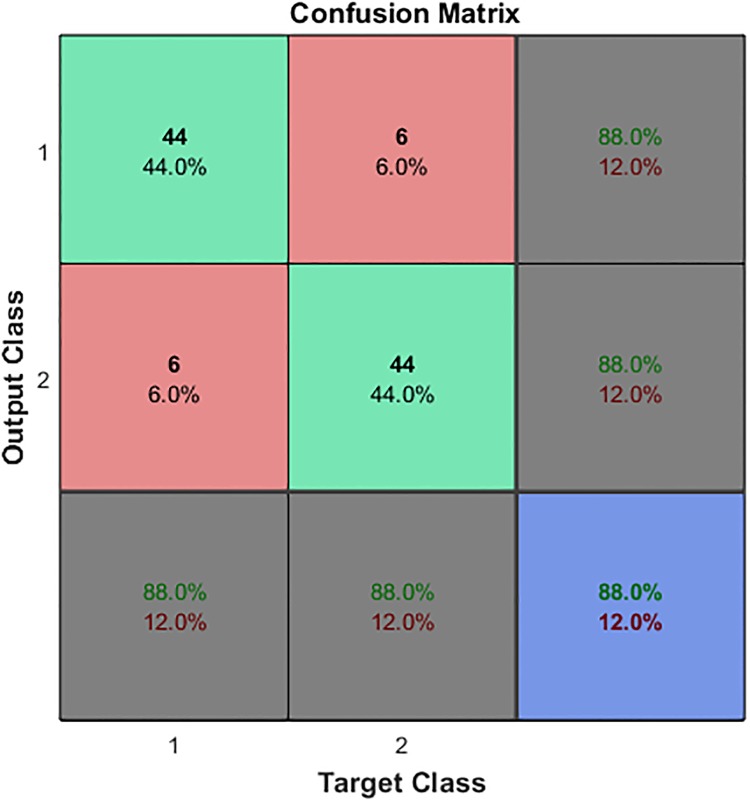

In this work, a 2-hidden-layer SSAE with a softmax classifier was constructed to classify EHG signals automatically. As mentioned previously, 150 feature vectors for pregnancy EHG and 150 feature vectors for labor EHG were collected. For each class, 100 feature vectors were randomly chosen for training and the remaining 50 feature vectors were used for testing. Once the training set and test set were built, all values were normalized to the range between 0 and 1. The parameter settings for this 2-hidden-layer SSAE with a softmax classifier are given in Table 2. The architecture of the classification model used in this work is shown in Fig 8. After the classification model was established, the test set was used to verify the identification performance of the model. The confusion matrix for the proposed method is presented in Fig 9. In Fig 9, the rows correspond to the predicted class (Output Class) and the columns correspond to the true class (Target Class). The first two diagonal cells indicate the number and percentage of correct classifications by the trained network. Out of 50 pregnancy cases, 44 cases are correctly classified as pregnancy, which corresponds to 88.0% of all pregnancy cases; 6 cases are incorrectly classified as labor, which corresponds to 12.0% of all pregnancy cases. Out of 50 labor cases, 44 cases are correctly classified as labor, which corresponds to 88.0% of all labor cases; 6 cases are incorrectly classified as pregnancy, which corresponds to 12.0% of all labor cases. Overall, 88.0% of all predictions are correct and 12.0% are incorrect.
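The random split and normalization described above can be sketched as follows in Python. This is an illustration under assumptions rather than the procedure used in this work: the function names are hypothetical, the feature vectors are synthetic, the scaling limits are taken from the pooled training set only, and test values falling outside those limits are clipped to [0, 1].

```python
import random


def split_class(vectors, n_train, rng):
    """Randomly split one class into training and test vectors."""
    order = list(range(len(vectors)))
    rng.shuffle(order)
    return ([vectors[i] for i in order[:n_train]],
            [vectors[i] for i in order[n_train:]])


def fit_minmax(train):
    """Per-feature minimum and maximum over the training vectors."""
    n_features = len(train[0])
    low = [min(v[k] for v in train) for k in range(n_features)]
    high = [max(v[k] for v in train) for k in range(n_features)]
    return low, high


def apply_minmax(vectors, low, high):
    """Scale each feature to [0, 1], clipping values outside the fitted range."""
    scaled = []
    for v in vectors:
        row = []
        for value, lo, hi in zip(v, low, high):
            span = hi - lo
            z = (value - lo) / span if span > 0 else 0.0
            row.append(min(1.0, max(0.0, z)))
        scaled.append(row)
    return scaled


rng = random.Random(0)
# Synthetic stand-ins for 150 pregnancy and 150 labor feature vectors (64 values each).
pregnancy = [[rng.gauss(0.0, 1.0) for _ in range(64)] for _ in range(150)]
labor = [[rng.gauss(0.5, 1.0) for _ in range(64)] for _ in range(150)]

train_p, test_p = split_class(pregnancy, 100, rng)
train_l, test_l = split_class(labor, 100, rng)
low, high = fit_minmax(train_p + train_l)
train = apply_minmax(train_p + train_l, low, high)
test = apply_minmax(test_p + test_l, low, high)
print(len(train), len(test))
```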

Table 2. Parameter settings for modeling process.

| Layer | Parameter | Value |
|---|---|---|
| Layer 1 | Input layer size | 64 |
| | Output layer size | 30 |
| | Sparsity proportion | 0.05 |
| | Sparsity regularization for loss function | 8 |
| | Weight regularization for loss function | 0.001 |
| Layer 2 | Input layer size | 30 |
| | Output layer size | 10 |
| | Sparsity proportion | 0.05 |
| | Sparsity regularization for loss function | 8 |
| | Weight regularization for loss function | 0.001 |
| Softmax classifier | Input layer size | 10 |
| | Output layer size | 2 |
| | Sparsity proportion | 0.05 |

Fig 8. The architecture of the classification model used in this work.

Fig 9. The classification result of test set by using the obtained model.
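The data flow through the network of Table 2 (64 inputs, hidden layers of 30 and 10 neurons, and a 2-class softmax output) can be sketched in Python as below. The weights here are random and untrained, so the output probabilities are meaningless; the sketch only illustrates the layer shapes. Sigmoid hidden activations, the initialisation range and all function names are assumptions, and the greedy layer-wise pretraining and fine-tuning steps are not shown.

```python
import math
import random

LAYER_SIZES = [64, 30, 10, 2]  # input, hidden layer 1, hidden layer 2, softmax output


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (placeholder for trained values)."""
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    b = [0.0] * n_out
    return W, b


def affine(W, b, x):
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def softmax(z):
    peak = max(z)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def forward(params, x):
    """Two sigmoid encoder layers followed by a softmax classifier."""
    h = x
    for W, b in params[:-1]:
        h = [sigmoid(v) for v in affine(W, b, h)]
    W, b = params[-1]
    return softmax(affine(W, b, h))


rng = random.Random(0)
params = [init_layer(n_in, n_out, rng)
          for n_in, n_out in zip(LAYER_SIZES[:-1], LAYER_SIZES[1:])]
x = [rng.random() for _ in range(64)]  # one normalized 64-value feature vector
probs = forward(params, x)
print(probs)
```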

Performance comparison with ELM and SVM

In order to verify the discriminability between pregnancy and labor EHG signals in this experiment, the SSAE algorithm was further compared with two widely used classification methods, namely the extreme learning machine (ELM) and the support vector machine (SVM). For the ELM classifier, the number of hidden neurons was set to 20 and the sigmoid function was chosen as the transfer function. For the SVM classifier, the radial basis function was adopted as the kernel function and the optimal parameters were obtained in the training step of every trial.

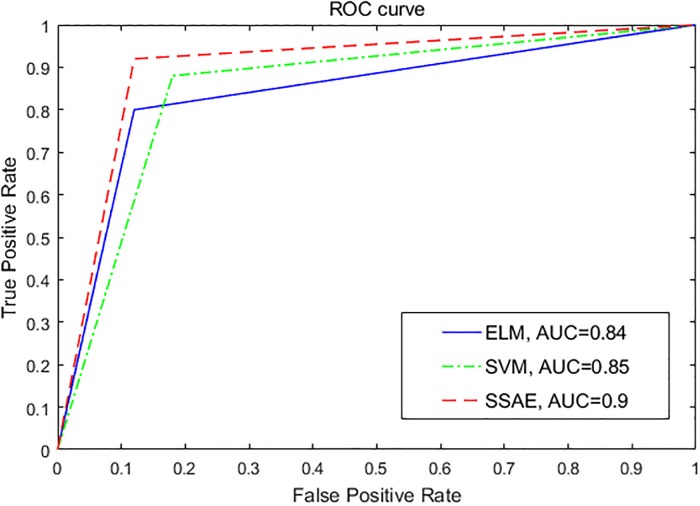

Sensitivity, specificity and classification accuracy are used as indices for evaluating the classification performance of the three classifiers. In addition, the classification performance of the three classifiers can be further evaluated by receiver operating characteristic (ROC) analysis, which gives an intuitive view of the entire spectrum of sensitivities [34]. The area under the ROC curve (AUC) provides an effective way of comparing the performance of classification models. The larger the area under the ROC curve, the better the discrimination capability of the classification model.
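One way to compute the AUC without plotting the curve is sketched below in Python. It relies on the known equivalence between the area under the empirical ROC curve and the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. The function name and the scores are hypothetical and are not results from this work.

```python
def auc(scores_pos, scores_neg):
    """AUC as the fraction of positive/negative pairs ranked correctly."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical classifier scores for labor (positive) and pregnancy (negative) samples.
labor_scores = [0.9, 0.8, 0.4]
pregnancy_scores = [0.7, 0.3, 0.2]
value = auc(labor_scores, pregnancy_scores)
print(value)
```

In this toy example 8 of the 9 positive/negative pairs are ranked correctly, so the AUC is 8/9.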

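Sensitivity, specificity and classification accuracy are defined as:

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \tag{13}$$

$$\mathrm{Specificity} = \frac{TN}{TN + FP} \tag{14}$$

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{15}$$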

In formula (13), (14) and (15), true positives (TP) denotes the number of labor samples predicted correctly; false negatives (FN) denotes the number of labor samples predicted incorrectly; true negatives (TN) denotes the number of pregnancy samples predicted correctly; false positives (FP) denotes the number of pregnancy samples predicted incorrectly.
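These three indices follow directly from the four counts, as the short Python sketch below shows. The function name is hypothetical; the example counts are the ones quoted in the description of the confusion matrix above (44 of 50 samples correct in each class), with labor taken as the positive class.

```python
def classification_metrics(tp, fn, tn, fp):
    """Return (sensitivity, specificity, accuracy) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)              # labor samples predicted correctly
    specificity = tn / (tn + fp)              # pregnancy samples predicted correctly
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


sensitivity, specificity, accuracy = classification_metrics(tp=44, fn=6, tn=44, fp=6)
print(sensitivity, specificity, accuracy)
```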

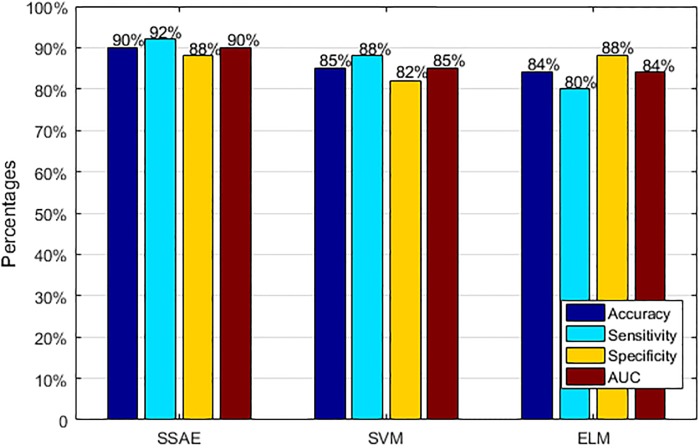

The performance of the three classifiers evaluated by ROC analysis is illustrated in Fig 10. The AUC achieved by SSAE is larger than those of the other two classifiers, which indicates that the classification model proposed in this work has better classification performance. Fig 11 presents the performance comparison for the three classifiers using four indices. In terms of accuracy, SSAE presents the highest value (90%). In terms of sensitivity, SSAE presents the highest value (92%). In terms of specificity, SSAE and ELM present the same value (88%). In terms of AUC, SSAE presents the highest value (90%).

Fig 10. The ROC curve for the three classifiers.

Fig 11. The performance comparison results for the three different classifiers using four indexes.

Conclusion

This work considered the application of the wavelet transform and the stacked sparse autoencoder to the automatic classification of pregnancy EHG and labor EHG. Experimental results indicate that the presented method performed better than the compared classifiers, with an accuracy of 90%, a sensitivity of 92%, a specificity of 88% and an AUC of 90%. The results also demonstrate the effectiveness of the feature extraction method. It was further shown that applying SSAE can improve not only the classification results but also the generalization of the classifier. The experimental results therefore suggest that the proposed method achieves high accuracy in preterm birth detection and could serve as a useful clinical aid in the diagnosis of preterm birth.

In further developments of this work, the accuracy and specificity of the method need to be enhanced. The classification model used in this paper has produced acceptable results, but it still cannot be used confidently in clinics, where an accuracy of >95% is considered necessary. In theory, a classifier based on SSAE will perform better as the number of samples increases. In this paper, the experimental samples came from the PhysioNet database, which contains only 45 subjects. In the future, more subjects will be used to train the SSAE-based classifier, and experiments will be performed with the new dataset in order to improve the method in terms of specificity and accuracy.

Data Availability

Data are available from PhysioNet: doi:10.13026/C2159J.

Funding Statement

This research was supported by the Foundation and Frontier Research Project of Chongqing (No. cstc2016jcyjA0526), the Science and Technology Research Project of Chongqing Municipal Education Committee (No. KJ1600519), the Technology Innovation Project of Social Undertakings and Livelihood Security of Chongqing (No. cstc2017shmsA30016), the Postgraduate Science and Technology Innovation Project of Chongqing (CYS17203, CYS17215). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. March of Dimes, PMNCH, Save the Children, WHO. Born Too Soon: The Global Action Report on Preterm Birth. Howson C, Kinney M, Lawn J, editors. Geneva: World Health Organization; 2012.

- 2. Maner WL, Garfield RE. Identification of Human Term and Preterm Labor using Artificial Neural Networks on Uterine Electromyography Data. Ann Biomed Eng. 2007;35(3):465–73. doi:10.1007/s10439-006-9248-8

- 3. Crane JMG, Hutchens D. Transvaginal sonographic measurement of cervical length to predict preterm birth in asymptomatic women at increased risk: a systematic review. Ultrasound in Obstetrics & Gynecology. 2008;31(5):579–87.

- 4. Hudić I, Stray-Pedersen B, Szekeres-Bartho J, Fatušić Z, Dizdarević-Hudić L, Tomić V, et al. Maternal serum progesterone-induced blocking factor (PIBF) in the prediction of preterm birth. J Reprod Immunol. 2015;109:36–40. doi:10.1016/j.jri.2015.02.006

- 5. Garfield RE, Maner WL. Physiology and electrical activity of uterine contractions. Semin Cell Dev Biol. 2007;18(3):289–95. doi:10.1016/j.semcdb.2007.05.004

- 6. Xia D, Langan DA, Solomon SB, Zhang Z, Chen B, Lai H, et al. Optimization-based image reconstruction with artifact reduction in C-arm CBCT. Phys Med Biol. 2016;61(20):7300.

- 7. Maner WL, Garfield RE, Maul H, Olson G, Saade G. Predicting term and preterm delivery with transabdominal uterine electromyography. Obstet Gynecol. 2003;101(6):1254–60.

- 8. Arora S, Garg G. A Novel Scheme to Classify EHG Signal for Term and Pre-term Pregnancy Analysis. International Journal of Computer Applications. 2012;51(18):37–41.

- 9. Hassan M, Terrien J, Karlsson B, Marque C. Application of wavelet coherence to the detection of uterine electrical activity synchronization in labor. IRBM. 2010;31(3):182–7.

- 10. Radomski D, Grzanka A, Graczyk S, Przelaskowski A. Assessment of Uterine Contractile Activity during a Pregnancy Based on a Nonlinear Analysis of the Uterine Electromyographic Signal. In: Pietka E, Kawa J, editors. Information Technologies in Biomedicine; Advances in Soft Computing. Berlin, Heidelberg: Springer; 2008. p. 325–31.

- 11. Diab A, Hassan M, Marque C, Karlsson B. Performance analysis of four nonlinearity analysis methods using a model with variable complexity and application to uterine EMG signals. Med Eng Phys. 2014;36(6):761–7.

- 12. Fele-Žorž G, Kavšek G, Novak-Antolič Ž, Jager F. A comparison of various linear and non-linear signal processing techniques to separate uterine EMG records of term and pre-term delivery groups. Med Biol Eng Comput. 2008;46(9):911–22. doi:10.1007/s11517-008-0350-y

- 13. Cek ME, Ozgoren M, Savaci FA. Continuous time wavelet entropy of auditory evoked potentials. Comput Biol Med. 2010;40(1):90. doi:10.1016/j.compbiomed.2009.11.005

- 14. He Z, Gao S, Chen X, Zhang J, Bo Z, Qian Q. Study of a new method for power system transients classification based on wavelet entropy and neural network. Int J Elec Power. 2011;33(3):402–10.

- 15. Kusnoto B, Kaur P, Salem A, Zhang Z, Galang-Boquiren MT, Viana G, et al. Implementation of ultra-low-dose CBCT for routine 2D orthodontic diagnostic radiographs: Cephalometric landmark identification and image quality assessment. Semin Orthod. 2015;21(4):233–47.

- 16. Lu N, Wang J, McDermott I, Thornton S, Vatish M, Randeva H. Uterine electromyography signal feature extraction and classification. International Journal of Modelling Identification & Control. 2008;6(2):136–46.

- 17. Moslem B, Diab MO, Marque C, Khalil M, editors. Classification of multichannel uterine EMG signals. Signal Processing Systems; 2011.

- 18. Moslem B, Khalil M, Diab MO, Chkeir A, editors. A multisensor data fusion approach for improving the classification accuracy of uterine EMG signals. IEEE International Conference on Electronics, Circuits and Systems; 2011.

- 19. Sun W, Shao S, Zhao R, Yan R, Zhang X, Chen X. A sparse auto-encoder-based deep neural network approach for induction motor faults classification. Measurement. 2016;89:171–8.

- 20. Krizhevsky A, Sutskever I, Hinton GE, editors. ImageNet classification with deep convolutional neural networks. International Conference on Neural Information Processing Systems; 2012.

- 21. Hinton G, Deng L, Yu D, Dahl GE, Mohamed A, Jaitly N, et al. Deep Neural Networks for Acoustic Modeling in Speech Recognition: The Shared Views of Four Research Groups. IEEE Signal Proc Mag. 2012;29(6):82–97.

- 22. Shin HC, Orton MR, Collins DJ, Doran SJ, Leach MO. Stacked autoencoders for unsupervised feature learning and multiple organ detection in a pilot study using 4D patient data. IEEE T Pattern Anal. 2013;35(8):1930–43.

- 23. Droniou A, Ivaldi S, Sigaud O. Deep unsupervised network for multimodal perception, representation and classification. Robot Auton Syst. 2015;71(3):83–98.

- 24. Zhang Z, Han X, Pearson E, Pelizzari C, Sidky EY, Pan X. Artifact reduction in short-scan CBCT by use of optimization-based reconstruction. Phys Med Biol. 2016;61(9):3387–406.

- 25. Alexandersson A, Steingrimsdottir T, Terrien J, Marque C, Karlsson B. The Icelandic 16-electrode electrohysterogram database. Sci Data. 2015;2:150017. doi:10.1038/sdata.2015.17

- 26. Goldberger AL, Amaral LAN, Glass L, Hausdorff JM, et al. PhysioBank, PhysioToolkit, and PhysioNet: Components of a New Research Resource for Complex Physiologic Signals. Circulation. 2000;101(23):e215–e220.

- 27. El-Zonkoly AM, Desouki H. Wavelet entropy based algorithm for fault detection and classification in FACTS compensated transmission line. Int J Elec Power. 2011;3(8):34–42.

- 28. He ZY, Chen X, Luo G, editors. Wavelet Entropy Measure Definition and Its Application for Transmission Line Fault Detection and Identification (Part I: Definition and Methodology). International Conference on Power System Technology, 2006 Powercon; 2006.

- 29. Zhang N, Lin A, Ma H, Shang P, Yang P. Weighted multivariate composite multiscale sample entropy analysis for the complexity of nonlinear times series. Physica A: Statistical Mechanics and Its Applications. 2018;508:595–607. doi:10.1016/j.physa.2018.05.085

- 30. Liu W, Ma T, Tao D, You J. HSAE: A Hessian regularized sparse auto-encoders. Neurocomputing. 2015;187:59–65.

- 31. Bengio Y. Learning Deep Architectures for AI. Foundations & Trends in Machine Learning. 2009;2(1):1–127.

- 32. Liu H, Li L, Ma J. Rolling Bearing Fault Diagnosis Based on STFT-Deep Learning and Sound Signals. Shock Vib. 2016;2016(2):12.

- 33. Chen L, Hao Y. Feature Extraction and Classification of EHG between Pregnancy and Labour Group Using Hilbert-Huang Transform and Extreme Learning Machine. Comput Math Method M. 2017;2017:7949507.

- 34. Fu K, Qu J, Chai Y, Dong Y. Classification of seizure based on the time-frequency image of EEG signals using HHT and SVM. Biomed Signal Proces. 2014;13(5):15–22.
