Abstract

The ability to choose among options that differ in their rewards and costs (value-based decision making) has long been a topic of interest for neuroscientists, psychologists and economists alike. This is likely because it is a cognitive process in which all animals (including humans) engage on a daily basis, whether the decision is routine (which road to take to work) or consequential (which graduate school to attend). Studies of value-based decision making (particularly at the preclinical level) often treat it as a uniform process. The results of such studies have been invaluable for our understanding of the brain substrates and neurochemical systems that contribute to decision making involving a range of different rewards and costs. Value-based decision making is not a unitary process, however, but instead comprises distinct cognitive operations that work in concert to guide choice behavior. Within this conceptual framework, it is important to consider that the known neural substrates supporting decision making may contribute to temporally distinct and dissociable components of the decision process. This review will describe this approach to investigating decision making, drawing from published studies that have used techniques allowing temporal dissection of the decision process, with an emphasis on the literature in animal models. The review will conclude with a discussion of the implications of this work for understanding pathological conditions that are characterized by impaired decision making.

“Life is a sum of all your choices.”

–Albert Camus

As Camus succinctly notes, our lives are built on, and formed by, the multitude of decisions we have made and continue to make on a daily basis. This decision-making ability is not unique to humans, but can be observed across species, from a worm determining whether to approach or avoid a chemical toxin to nonhuman primates deciding with whom to mate. Further, as may be evident from these examples, decision making can range from the mundane (deciding whether to have a second cup of coffee) to the consequential (having an alcoholic beverage after sustained abstinence). One notable feature common to all forms of decision making, however, is that it involves a value calculus that takes into account the costs and benefits associated with possible options in order to guide subsequent choice behavior. This type of value-based decision making has been a major focus for neuroscientists, psychologists and economists alike, all of whom aim to better understand how decisions are made and how they may go awry under pathological conditions.

Studies of value-based decision making (particularly at the preclinical level) often do not treat it as a dissociable process. Arguably, however, value-based decision making can be viewed as a set of distinct cognitive operations that work in concert to guide choice behavior (Fobbs & Mizumori, 2017; Rangel, Camerer, & Montague, 2008). Using a conceptual framework reminiscent of that described in the neuroeconomics literature (Camerer, 2008; Kable & Glimcher, 2009; Padoa-Schioppa, 2007, 2011; Sanfey, Loewenstein, McClure, & Cohen, 2006), decision making can be broken into four phases that form a loop that feeds back on itself to alter future behavior (Figure 1). The first phase of decision making can be defined as deliberation, which itself can be further divided into two subcomponents: decision representation and option valuation. During decision representation, the available options are identified, as are the costs and benefits associated with each option. Knowledge of the possible outcomes of each choice, gained through previous experience, is also integrated into the decision representation. Before acting on this knowledge, however, each option is also assessed in terms of its subjective value at the moment of the decision (option valuation). For example, if you are deciding whether to have a second cup of coffee, you may be more likely to choose to do so if you are making that decision while tired than if you are well-rested. Only after information from the decision representation and option valuation subcomponents is integrated is an action selected (e.g., pouring another cup of coffee); this second phase is referred to as action selection.

Figure 1. Conceptual schematic of the decision-making process.

The decision process can be compartmentalized into distinct phases: deliberation, action selection, outcome evaluation and feedback. Deliberation can be further subdivided into decision representation (identifying the available choices and their possible outcomes) and option valuation (assessing the subjective value of each option at the moment of decision making). While this conceptualization of decision making is not novel (Fobbs & Mizumori, 2017; Rangel et al., 2008), the ability to use sophisticated neuroscience techniques such as optogenetics to make causal inferences during each phase is a relatively new approach to studying the neurobiology of decision making.

The third phase of decision making, which directly follows action selection, can be considered an outcome evaluation period. During this phase, the value of the outcome of a choice is compared to the expected value of that outcome (i.e., was the actual value better than, worse than, or equivalent to what was predicted?). Outcome evaluation is not equivalent to reward prediction error (RPE) signaling, although RPE may be part of the overall evaluative process. In addition to potentially signaling RPE, this process entails readjusting and updating the values of available options given the outcomes of recent choices. To continue the coffee example, the second cup of coffee may boost energy levels more than expected; however, it might also cause nausea due to an overload of caffeine, which may decrease the value of an afternoon cup of coffee. This evaluative process is necessary to inform future choices, and thus the final phase of decision making can be considered the feedback period, which involves broadcasting the updated representations and values so that they can be used to refine future choice behavior. Importantly, this phase feeds updated information not only to the decision representation subcomponent of deliberation, but also to the option valuation subcomponent and to the action selection phase. Collectively, this dynamic decision-making loop allows the individual to adjust and optimize the quality of future decisions.
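To make the loop concrete, the following Python sketch walks one trial through the four phases using the coffee example. It is a toy illustration under stated assumptions, not a model proposed in the cited work: the function names, the multiplicative "state gain" standing in for option valuation, the softmax choice rule, and the delta-rule update standing in for feedback are all hypothetical choices made for clarity.

```python
import math
import random


def option_valuation(stored_value, state_gain):
    """Option valuation: scale a learned value by the current internal state.
    The multiplicative state_gain (e.g., >1 when tired) is a hypothetical
    stand-in for state-dependent subjective value."""
    return stored_value * state_gain


def select_action(subjective_values, rng, temperature=0.1):
    """Action selection: softmax choice over subjective values."""
    options = list(subjective_values)
    weights = [math.exp(subjective_values[o] / temperature) for o in options]
    return rng.choices(options, weights=weights, k=1)[0]


def evaluate_outcome(expected, obtained):
    """Outcome evaluation: signed difference between obtained and expected
    value (an RPE-like term, which is only one part of this phase)."""
    return obtained - expected


def feedback(values, option, error, learning_rate=0.2):
    """Feedback: return updated values for the next pass through the loop
    (simple delta rule; only the chosen option is updated)."""
    updated = dict(values)
    updated[option] += learning_rate * error
    return updated


def run_trial(values, state_gains, outcome_fn, rng):
    """One pass: deliberation -> action selection -> outcome evaluation -> feedback."""
    # Deliberation: decision representation (learned values) combined
    # with option valuation (current state).
    subjective = {o: option_valuation(v, state_gains.get(o, 1.0))
                  for o, v in values.items()}
    choice = select_action(subjective, rng)
    error = evaluate_outcome(values[choice], outcome_fn(choice))
    return choice, feedback(values, choice, error)


# Coffee example with made-up numbers: tiredness boosts the value of a
# second cup, but the obtained outcome (nausea) is worse than expected.
coffee_values = {"second_coffee": 0.5, "skip": 0.4}
tired = {"second_coffee": 2.0}
outcomes = {"second_coffee": 0.2, "skip": 0.4}
choice, coffee_values = run_trial(coffee_values, tired, outcomes.get, random.Random(0))
```

With these made-up numbers the tired agent almost always takes the second cup; because the obtained outcome (0.2) is worse than expected (0.5), feedback lowers the stored value to 0.44, which is what the next round of deliberation starts from.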

Over the last decade, significant strides have been made in delineating the neurobiology underlying value-based decision making in animal (particularly rodent) models (Orsini, Moorman, Young, Setlow, & Floresco, 2015; Winstanley & Floresco, 2016). This research has shown that various forms of value-based decision making, such as risky and intertemporal decision making, are mediated by an interconnected network of brain regions within the mesocorticolimbic circuit, including the basolateral amygdala (BLA), medial prefrontal cortex (mPFC) and nucleus accumbens (NAc; Floresco, St Onge, Ghods-Sharifi, & Winstanley, 2008; Orsini, Moorman, et al., 2015). Although these studies have provided foundational knowledge about the neural substrates underlying decision making, they have predominantly employed pharmacological approaches and/or selective lesions, techniques that preclude the ability to isolate how such systems mediate temporally distinct phases of the decision process. This limitation becomes even more apparent when considering that in vivo electrophysiology studies, which allow neural activity to be monitored in real time, have demonstrated that neural activity in multiple brain regions varies across temporally discrete phases of choice tasks. For example, neural activity in the NAc tracks multiple components of decision making, including cues predictive of outcomes, action selection, and reward delivery (Day, Jones, & Carelli, 2011; Owesson-White et al., 2016; Roesch, Singh, Brown, Mullins, & Schoenbaum, 2009; Roitman, Wheeler, & Carelli, 2005; Setlow, Schoenbaum, & Gallagher, 2003). Electrophysiological recording studies are vital for determining the types of information encoded by activity in various brain regions across phases of the decision process; however, they are limited in that they do not address whether such encoding plays a causal role in the decision process.
Fortunately, the advent of optogenetics has made it possible to address such questions, as it allows for manipulation of neural activity at millisecond timescales within specific cell populations, anatomically defined circuits, or entire brain regions (Kim, Adhikari, & Deisseroth, 2017). Given its temporal precision, this technique is well-suited to test how specific brain regions and/or cell populations are involved in temporally distinct phases of decision making.

The overall goal of this review is to describe what has been learned from the small but growing number of preclinical studies that have used optogenetics, or similar techniques that allow for precise temporal control of neural activity, to probe the neurobiology of value-based decision making. Rather than being organized by the phases of decision making outlined above, the review is structured according to the brain regions or circuits that have been investigated in ways that afford the ability to isolate their roles in distinct decision phases. The review will conclude with a discussion of the implications of this framework for understanding pathological conditions characterized by impaired decision making. Because decision making is complex and inherent to all animals, we believe that the discussions herein will appeal not only to behavioral neuroscientists, but also to computational neuroscientists, behavioral economists and experimental psychologists. Ultimately, we hope that this review will help shape the way in which others view decision making and approach research questions related to this topic.

Neurobiology of value-based decision making

In the last two decades, scientists have carefully constructed a neurobiological model of decision making spanning the frontal cortex, limbic system and midbrain. Human imaging and lesion studies have demonstrated the importance of the basolateral amygdala (BLA) and the ventrolateral, orbital and anterior cingulate subregions of the prefrontal cortex in decision making (Bechara, Damasio, Damasio, & Anderson, 1994; Bechara, Damasio, Damasio, & Lee, 1999; Ernst et al., 2002; Fellows & Farah, 2005; N. S. Lawrence, Jollant, O’Daly, Zelaya, & Phillips, 2009). These findings have been corroborated in rodent studies investigating the contribution of the BLA and mPFC [thought to be homologous to the human cingulate cortex (Uylings & van Eden, 1990), but see Laubach, Amarante, Swanson, & White, 2018 for an excellent commentary on this topic] to various forms of decision making (Ghods-Sharifi, St Onge, & Floresco, 2009; Orsini et al., 2018; Orsini, Trotta, Bizon, & Setlow, 2015; St Onge & Floresco, 2010; Zeeb, Baarendse, Vanderschuren, & Winstanley, 2015; Zeeb & Winstanley, 2011). Through the use of animal models, others have also revealed the role of dopamine signaling, particularly within the striatum, in encoding both positive and negative values associated with cues and outcomes during various reward-related behavioral tasks (Cohen, Haesler, Vong, Lowell, & Uchida, 2012; Matsumoto & Hikosaka, 2009b; Setlow et al., 2003). More recently, the lateral habenula and midbrain regions have come under scrutiny due to their ability to bias choice behavior away from options associated with negative consequences (Bromberg-Martin, Matsumoto, & Hikosaka, 2010; Matsumoto & Hikosaka, 2007; Stamatakis & Stuber, 2012; Stopper, Tse, Montes, Wiedman, & Floresco, 2014). Given the complexity of value-based decision making, it is not surprising that this cognitive process is mediated by such a distributed neural network.
Below, we review recent research that has provided a more precise understanding of how specific brain regions and circuits contribute to the distinct cognitive operations involved in decision making. Although decision making recruits multiple brain systems, this review will specifically focus on studies that examined the roles of dopaminergic signaling in the striatum, the basolateral amygdala, the lateral habenula and its connections with the midbrain, and the prefrontal cortex.

Striatum and dopamine

Dopamine is best known for its modulatory influence on the activity of medium spiny neurons (MSNs) in the dorsal and ventral striatum, which receive excitatory input from regions such as the mPFC and BLA (Surmeier, Ding, Day, Wang, & Shen, 2007; Tritsch & Sabatini, 2012). Neuromodulation of striatal MSN processing is mediated by dopamine’s interaction with a family of G-protein coupled receptors (GPCRs). Dopamine receptors are canonically divided into two subgroups based on signaling and pharmacological properties (Beaulieu & Gainetdinov, 2011; Gerfen & Surmeier, 2011; Surmeier et al., 2007). Dopamine D1-like receptors (D1Rs) consist of D1 and D5 receptors and, through their coupling to the G proteins Gαs and Gαolf, are thought to excite MSNs in the striatum. In contrast, dopamine D2-like receptors (D2Rs) include D2, D3 and D4 receptors and are thought to inhibit MSNs in the striatum via their coupling to the G proteins Gαi and Gαo. Importantly, both D1Rs and D2Rs in the striatum have been shown to be involved in regulation of value-based decision making (Boschen, Wietzikoski, Winn, & Da Cunha, 2011; Mai, Sommer, & Hauber, 2015; Mitchell et al., 2014; Porter-Stransky, Seiler, Day, & Aragona, 2013; Simon et al., 2013; Simon et al., 2011; Stopper, Khayambashi, & Floresco, 2013; Yates & Bardo, 2017). For example, direct administration of a D2/3R agonist into the ventral striatum decreases choice of large rewards associated with risk of punishment (Mitchell et al., 2014). In a different probabilistic risk-based decision-making task, administration of either a D1R antagonist or a mixed D1/D2 antagonist into the ventral striatum decreases choice of large, but increasingly uncertain, rewards (Mai et al., 2015; Stopper et al., 2013). Levels of D1R and D2R mRNA expression in the dorsal striatum and ventral striatum, respectively, have also been shown to be correlated with the degree of preference for large, risky rewards (Simon et al., 2011).
Although these studies, among many others (Boschen et al., 2011; Porter-Stransky et al., 2013; Simon et al., 2013; Yates & Bardo, 2017), clearly demonstrate the importance of dopamine receptor signaling in the striatum in value-based decision making, they do not provide insight into how this signaling contributes to distinct phases of decision making and whether D1R and D2R neuromodulation differs across phases of the decision process.

Recent work has begun to answer such questions using optogenetic approaches. For example, Zalocusky et al. (2016) examined the contribution of D2R-expressing neurons in the NAc to risky decision making in rats. Their decision-making task consisted of choices between a “safe” lever, which yielded a moderate-sized sucrose reward, and a “risky” lever, which yielded a small sucrose reward 75% of the time and a large sucrose reward 25% of the time (Zalocusky et al., 2016). Thus, in this task, rats chose between two options that always resulted in delivery of sucrose reward but differed in the magnitude and frequency with which the reward was delivered. In a series of elegant experiments, these authors demonstrated that rats displayed stable risk preferences that were sensitive to dopamine D2/3R agonists administered into the NAc, and that activity within NAc D2R-expressing neurons was specifically tied to the deliberation phase. Specifically, activity of these neurons during deliberation (prior to a choice) was significantly greater before a rat made a safe choice (vs. a risky choice). In the same neurons, there was also increased activity during deliberation following trials in which the risky lever had been selected but the smaller reward delivered (a “loss”), a pattern of activity that was absent during deliberation following trials in which the risky lever had been selected and the large sucrose reward delivered (a “win”) or following trials in which the “safe” sucrose reward was delivered. The authors interpreted these activity patterns in D2R-expressing neurons as encoding rats’ sensitivity to losses, which may serve as a bias signal to shift choices away from risky options.
To specifically test the causal role of NAc D2R-expressing neuron activity in signaling loss sensitivity during deliberation, the excitatory opsin channelrhodopsin (ChR2; Yizhar, Fenno, Davidson, Mogri, & Deisseroth, 2011) was selectively expressed in NAc D2R-expressing neurons using a D2R-specific promoter. Consistent with the authors’ hypothesis that activity in these neurons represents loss sensitivity, optogenetically driving their activity during the deliberation period decreased choice of the “risky” lever. Interestingly, this effect was only observed in rats that consistently preferred the “risky” lever (risk-seeking), as optogenetic excitation of these neurons had no effect on choice in risk-averse rats. When D2R-expressing neurons were stimulated during reward outcome periods, there were no effects on risk preference. This suggests that although driving activity in D2R-expressing neurons during outcome evaluation (e.g., of losses) does not alter choice, this activity may be important during deliberation for integrating negative feedback with other information, in order to bias choices away from unfavorable options and toward safer options.
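The trial-history logic in this study (conditioning deliberation on whether the preceding trial produced a safe outcome, a risky “win” or a risky “loss”) can be sketched as follows. This is a hypothetical illustration, not the authors’ analysis code: the trial encoding, the labels and the tiny session are invented, and the sketch computes only a behavioral analogue (the probability of a risky choice given the previous outcome) rather than neural activity.

```python
from collections import Counter


def classify_outcome(choice, reward):
    """Label a trial: 'safe', 'risky_win' (large reward), or 'risky_loss'
    (risky lever chosen but the small reward delivered)."""
    if choice == "safe":
        return "safe"
    return "risky_win" if reward == "large" else "risky_loss"


def p_risky_given_previous(trials):
    """P(risky choice on trial t | outcome label of trial t-1).

    trials: list of (choice, reward) tuples in session order, where choice
    is 'safe' or 'risky' and reward is 'medium', 'small', or 'large'.
    """
    totals, risky_next = Counter(), Counter()
    for previous, current in zip(trials, trials[1:]):
        label = classify_outcome(*previous)
        totals[label] += 1
        risky_next[label] += int(current[0] == "risky")
    return {label: risky_next[label] / totals[label] for label in totals}


# Invented session for illustration only.
session = [("risky", "small"), ("safe", "medium"), ("risky", "large"),
           ("risky", "small"), ("safe", "medium"), ("safe", "medium"),
           ("risky", "large"), ("risky", "large")]
result = p_risky_given_previous(session)
```

In this made-up session the simulated rat never chooses the risky lever immediately after a loss, which is the kind of loss-driven shift that a deliberation-period loss signal was proposed to support.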

Optogenetics has also been used to identify causal roles for D1R- and D2R-expressing neurons in the dorsal striatum (DS) in choice strategy (Tai, Lee, Benavidez, Bonci, & Wilbrecht, 2012). In the DS, these receptors are expressed in largely separate MSN populations that are thought to exert opposing influences on action selection (Gerfen & Surmeier, 2011; Surmeier et al., 2007; Tritsch & Sabatini, 2012). MSNs in the direct striatal pathway express D1Rs and act to promote and facilitate ongoing behavior, whereas MSNs in the indirect striatal pathway express D2Rs and act to inhibit or suppress ongoing behavior. Tai et al. (2012) used transgenic mice to selectively target striatal direct (D1R) or indirect (D2R) pathway MSNs for optogenetic manipulation to determine how these separate striatal populations contribute to reward-based decision making. In a probabilistic switching task, mice chose between a left and right reward delivery port, one of which was considered “correct” and yielded a water reward on 75% of responses. The left/right position of the rewarded port was intermittently switched across the task (every 7–23 trials), however, forcing mice to rely on the outcomes of their most recent choices to guide their next choice. Mice exhibited stable “win-stay” and “lose-shift” strategies in this task, whereby water delivery on a trial prompted mice to stay at the rewarded port on the next trial, and the absence of water delivery prompted mice to shift to the alternative port. To selectively manipulate D1R- versus D2R-expressing neurons in the DS, ChR2 was expressed in a Cre-dependent manner in mice expressing Cre recombinase under Drd1 or Drd2 promoters. These striatal cell populations were then stimulated during the first 500 ms of the deliberation phase, the onset of which was indicated by a cue light.
Notably, because previous work showed that unilateral manipulations of the DS can affect lateralized body movements (Kravitz et al., 2010; Schwarting & Huston, 1996), D1R- or D2R-expressing neurons were unilaterally stimulated during test sessions, and choice bias was assessed as a function of the side of stimulation (i.e., choice of the port ipsilateral vs. contralateral to the stimulated hemisphere). Stimulation of D1R-expressing neurons resulted in a strong bias for the port contralateral to the stimulated hemisphere, but only when mice had experienced unrewarded trials within the past two trials. In contrast, stimulation of D2R-expressing neurons induced a strong bias for the port ipsilateral to the stimulated hemisphere; as with D1R-expressing neurons, this effect was greatest after mice had experienced unrewarded trials. Using similar stimulation parameters, Tai et al. confirmed that these effects were specific to deliberation, as the stimulation-induced choice bias diminished as the delay between the cue light and stimulation increased. Further, the authors ensured that these effects were not merely due to altered motor function by assessing the effects of stimulation (using the same stimulation patterns as in the choice task) in a distinct enclosed environment. The authors then used the choice data to build a quantitative model to determine whether these effects of stimulation could be explained by a change in the value of each port based on previous reward history. Based on this model, when D1R-expressing neurons were stimulated, the value of the port contralateral to the stimulated hemisphere increased. In contrast, when D2R-expressing neurons were stimulated, the value of the port contralateral to the stimulated hemisphere decreased, whereas the value of the port ipsilateral to the stimulated hemisphere increased.
Collectively, these data suggest that after several losses (i.e., unrewarded trials), activity of DS D1R-expressing neurons during subsequent deliberation reflects the increasing value of the alternative option, resulting in a shift in choice bias. In contrast, activity of DS D2R-expressing neurons during deliberation following losses appears to be important for the continued vigorous pursuit of the current (recently unrewarded) option. Interestingly, this is consistent with previous work suggesting that the striatal indirect pathway is recruited to inhibit competing alternative actions (i.e., switching to the contralateral port; Ferguson et al., 2011; Freeze, Kravitz, Hammack, Berke, & Kreitzer, 2013; Gerfen & Surmeier, 2011). Ultimately, these data suggest that optimal choice flexibility depends on the balance of activity between DS D1R- and D2R-expressing neurons. This study further reveals that this balance is critical during the initial moments of the deliberation phase following losses for adjusting future behavior. Notably, the authors did not explicitly test the effects of stimulating these cell populations during the outcome phase of the trials, leaving open the possibility that activity of D1R- and D2R-expressing neurons is also involved in outcome evaluation. Future optogenetic studies could test this hypothesis using a similar experimental approach.
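The win-stay and lose-shift measures used to characterize behavior in the probabilistic switching task can be computed directly from choice and reward sequences. The sketch below pairs that computation with a toy version of the task, using the 75% reward probability and 7–23-trial blocks described above. The simulated agent follows a pure win-stay/lose-shift rule, a deliberate and hypothetical simplification (real mice are not this deterministic), and the sketch does not reproduce the value model that Tai et al. fit to their data.

```python
import random


def win_stay_lose_shift(choices, rewards):
    """Return (P(stay | rewarded), P(shift | unrewarded)) over consecutive trials."""
    wins = stays = losses = shifts = 0
    for t in range(len(choices) - 1):
        stayed = choices[t + 1] == choices[t]
        if rewards[t]:
            wins += 1
            stays += int(stayed)
        else:
            losses += 1
            shifts += int(not stayed)
    p_win_stay = stays / wins if wins else float("nan")
    p_lose_shift = shifts / losses if losses else float("nan")
    return p_win_stay, p_lose_shift


def other(port):
    """The opposite reward port."""
    return "R" if port == "L" else "L"


def simulate_switching_task(n_trials, rng, p_reward=0.75, block=(7, 23)):
    """Toy task: the 'correct' port pays off with probability p_reward and
    switches sides after a random 7-23 trials. The agent is a hypothetical
    pure win-stay/lose-shift chooser."""
    correct = rng.choice("LR")
    trials_left = rng.randint(*block)
    choice = rng.choice("LR")
    choices, rewards = [], []
    for _ in range(n_trials):
        rewarded = choice == correct and rng.random() < p_reward
        choices.append(choice)
        rewards.append(rewarded)
        if not rewarded:
            choice = other(choice)  # lose-shift; after a win the choice is kept
        trials_left -= 1
        if trials_left == 0:
            correct = other(correct)
            trials_left = rng.randint(*block)
    return choices, rewards


choices, rewards = simulate_switching_task(200, random.Random(42))
p_ws, p_ls = win_stay_lose_shift(choices, rewards)
```

Because the toy agent follows the rule exactly, both probabilities come out at 1.0; real behavioral data fall below that, which is what makes these history-dependent measures informative.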

Considered together, these two studies demonstrate that D2R-expressing neurons in both the dorsal and ventral striatum are involved in deliberation during value-based decision making. At first, the findings might seem to contradict one another, in that Zalocusky et al. (2016) showed that activation of NAc D2R-expressing neurons biases choice away from riskier options, whereas Tai et al. (2012) showed that activation of DS D2R-expressing neurons promotes continued choice of options associated with potential loss. Aside from the difference in the targeted brain region, another possible reason for this difference is that the structure and design of the decision-making tasks were different; whereas Zalocusky et al. used a task in which both outcomes entailed receipt of reward, albeit at different magnitudes, at a consistent rate across the task, Tai et al. used a task in which only one of the two options yielded a reward and the identity of this option varied across the task. Thus, if rewards are guaranteed but vary only by magnitude or frequency, activity in D2R-expressing neurons may bias choice toward options that are consistent and certain, even if they are less rewarding overall. If, however, the choice is between the possibility of a reward and no reward at all, with these contingencies constantly changing over time, activity in D2R-expressing neurons may promote the continued pursuit of rewards that have recently and unexpectedly been omitted. It therefore follows that the ability to shift preference to more optimal options after recent losses may actually require a reduction in activity in D2R-expressing neurons. This supposition is consistent with previous work demonstrating that when an expected reward is omitted, D2R activation in the NAc prevents a shift in choice preference to an alternate rewarded option (Porter-Stransky et al., 2013).
Critically, if the reward only decreases in magnitude (as opposed to being omitted entirely), D2R activation has no effect. Hence, the contribution of striatal D2R-expressing neurons to deliberation in decision making may differ depending on the availability of possible rewards and the consistency with which they are delivered. Notably, this hypothesis assumes that D2R-expressing neurons are intrinsically similar across both striatal regions, an assumption that is not without precedent, as many studies that have focused on dopamine receptors and signaling do not distinguish between the dorsal and ventral striatum (Andre et al., 2010; Carter, Soler-Llavina, & Sabatini, 2007; Cepeda et al., 2008; Kreitzer, 2009).

Recent work, however, has shown that there are indeed differences in D2R sensitivity and signaling across the dorsoventral gradient of the striatum. For example, a recent study revealed that D2Rs on MSNs in the NAc have slower activation kinetics and are more sensitive to dopamine than those in the DS (Marcott et al., 2018). Thus, these signaling differences between the dorsal and ventral regions may account for the discrepant results of the Tai et al. and Zalocusky et al. studies: activation of D2R-expressing MSNs in the striatum during deliberation may affect choice behavior differently due to subregional differences in D2R sensitivity and in the timing of signaling in response to dopamine release. Interestingly, cocaine exposure selectively diminishes the sensitivity of D2Rs on MSNs in the NAc (Marcott et al., 2018). Consequently, under these conditions, deliberation may be predominantly mediated by D2Rs on MSNs in the DS, resulting in continued choice of options associated with losses (Tai et al., 2012). This choice profile is not unlike that of individuals with substance use disorders (SUDs), who often make risky choices despite awareness of the possibility of negative consequences.

Basolateral amygdala

The basolateral amygdala (BLA) is embedded within the mesocorticolimbic circuit and is well-positioned to receive projections from, and send projections to, many of the other brain regions within this circuit (Janak & Tye, 2015). Consequently, it is involved in various behaviors in which affect plays a central role, from fear learning and extinction to value-based decision making (Orsini & Maren, 2012; Orsini, Moorman, et al., 2015). Indeed, the BLA is particularly important for integrating reward-related information with associated costs to guide behavior (Balleine & Killcross, 2006; Wassum & Izquierdo, 2015). Evidence for this comes from studies in which the BLA was either pharmacologically inactivated or lesioned in rats trained to perform various decision-making tasks (Ghods-Sharifi, Cornfield, & Floresco, 2008; Ghods-Sharifi et al., 2009; Orsini, Trotta, et al., 2015; Winstanley, Theobald, Cardinal, & Robbins, 2004; Zeeb & Winstanley, 2011). For example, our laboratory used excitotoxic lesions to assess the role of the BLA in decision making involving risk of explicit punishment (Orsini, Trotta, et al., 2015). In this “Risky Decision-making Task” (RDT), rats choose between two options, one of which yields a small “safe” food reward and the other of which yields a large reward that is accompanied by variable probabilities of mild footshock (Simon, Gilbert, Mayse, Bizon, & Setlow, 2009). Rats trained in the RDT exhibit stable decision-making behavior such that as the probability of punishment increases, choice of the large, risky option decreases. Rats with permanent BLA lesions display increased choice of the large, risky reward (increased risk taking) relative to sham control rats (Orsini, Trotta, et al., 2015). Control experiments revealed that this increased risk taking was not due to insensitivity to punishment, as rats were able to discriminate between punished and unpunished options with equal reward magnitudes.
These data, in conjunction with other work (Ghods-Sharifi et al., 2008; Ghods-Sharifi et al., 2009; Winstanley & Floresco, 2016; Winstanley et al., 2004), indicate that the ability to integrate reward- and cost-related information heavily depends upon the integrity of the BLA.
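The RDT’s main dependent measure, percentage choice of the large, risky reward at each punishment probability, is a simple grouping operation. The sketch below assumes each free-choice trial is recorded as a (punishment probability, chose-large) pair; this encoding, the helper names and the example values are hypothetical and are not taken from the cited studies.

```python
from collections import defaultdict


def percent_large_by_punishment(trials):
    """Percentage of choices of the large, risky reward at each punishment
    probability.

    trials: iterable of (p_punishment, chose_large) pairs.
    Returns a dict ordered by increasing punishment probability.
    """
    n_trials = defaultdict(int)
    n_large = defaultdict(int)
    for p_punishment, chose_large in trials:
        n_trials[p_punishment] += 1
        n_large[p_punishment] += int(chose_large)
    return {p: 100.0 * n_large[p] / n_trials[p] for p in sorted(n_trials)}


def is_nonincreasing(curve):
    """True if risky choice never rises as punishment probability rises."""
    values = list(curve.values())
    return all(later <= earlier for earlier, later in zip(values, values[1:]))


# Made-up free-choice trials from one session.
session = [(0.0, True), (0.0, True), (0.25, True), (0.25, False),
           (0.5, False), (0.5, False)]
curve = percent_large_by_punishment(session)
```

Comparing such curves between groups (for example, lesioned vs. sham rats) is how a shift toward greater risk taking appears in the data.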

The use of lesions or pharmacological inactivation, however, precludes the ability to isolate the role of the BLA during distinct phases of decision making. To circumvent this issue, we conducted a follow-up study using an optogenetic approach to probe the role of the BLA in decision making involving risk of punishment (Orsini et al., 2017). To do this, the inhibitory opsin halorhodopsin (eNpHR3.0; Yizhar et al., 2011) was expressed in glutamatergic neurons in the BLA, which allowed for rapid (and rapidly reversible) BLA inactivation during discrete phases of decision-making trials. Using this approach, we showed that BLA optogenetic inactivation specifically during delivery of the large, punished reward caused an increase in risk taking. There were no changes in choice behavior when the BLA was inactivated during delivery of the large, unpunished reward or the small, safe reward, indicating that during outcome evaluation the BLA is not critical simply for discriminating between rewards of different magnitudes. These results recapitulated the effects of BLA lesions on risk taking in our prior work (Orsini, Trotta, et al., 2015); however, the question remained as to whether the BLA functioned in a similar manner before rats even made a choice (i.e., during deliberation). Unexpectedly, when the BLA was inactivated during deliberation, rats decreased their choice of the large, risky reward, an effect opposite to that observed when the BLA was inactivated during delivery of the large, punished reward (Orsini et al., 2017).
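The phase-specific logic of these experiments (light delivered only during deliberation, or only during delivery of a particular outcome) can be expressed as a gating rule over trial events. The Python sketch below is illustrative only: the `Trial` fields, event names and outcome labels are hypothetical, and the windows are computed offline, whereas real experiments gate the laser in real time through the behavioral control system. The optional `max_duration` cap shows how a fixed window, such as the first 500 ms of deliberation used by Tai et al. (2012), fits the same scheme.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    """Event times (s) for one trial; field names are hypothetical."""
    deliberation_start: float  # e.g., trial-start cue
    choice_time: float         # lever press that ends deliberation
    outcome_start: float       # reward (and any shock) delivery begins
    outcome_end: float
    outcome_type: str          # e.g., "large_punished", "small_safe"


def light_windows(trials, phase, outcome_type=None, max_duration=None):
    """Return (start, end) windows in which the light should be on.

    phase: "deliberation" or "outcome".
    outcome_type: if given, gate only outcome windows of this type.
    max_duration: optionally cap each window length (e.g., 0.5 s).
    """
    windows = []
    for trial in trials:
        if phase == "deliberation":
            start, end = trial.deliberation_start, trial.choice_time
        elif phase == "outcome":
            if outcome_type is not None and trial.outcome_type != outcome_type:
                continue
            start, end = trial.outcome_start, trial.outcome_end
        else:
            raise ValueError(f"unknown phase: {phase!r}")
        if max_duration is not None:
            end = min(end, start + max_duration)
        windows.append((start, end))
    return windows


def light_on(t, windows):
    """True if time t falls inside any light window."""
    return any(start <= t < end for start, end in windows)


trials = [Trial(0.0, 2.0, 2.5, 4.0, "large_punished"),
          Trial(10.0, 11.0, 11.5, 13.0, "small_safe")]
punished_only = light_windows(trials, "outcome", outcome_type="large_punished")
early_deliberation = light_windows(trials, "deliberation", max_duration=0.5)
```

Keeping the gating rule separate from the event log makes it easy to run the same session design with light in different phases, which is the core comparison in these studies.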

These findings were the first to show that BLA activity during different phases of the decision-making process plays distinct causal roles in directing choice behavior. They are supported by additional data from our lab showing that optogenetic inactivation of the BLA has different effects on choice behavior in a delay discounting task depending on the phase in which the BLA is inactivated (Hernandez et al., 2017). In this task, rats choose between a small food reward delivered immediately and a large food reward delivered after varying delays (Cardinal, Pennicott, Sugathapala, Robbins, & Everitt, 2001; Evenden & Ryan, 1996; Mendez, Gilbert, Bizon, & Setlow, 2012). Rats typically decrease their choice of the large, delayed reward as the delay to its delivery increases. The effects of optogenetic BLA inactivation depended on the decision phase. BLA inactivation during outcome delivery decreased choice of the large, delayed reward (increased impulsive choice), but only when inactivation occurred during delivery of the small, immediate reward. In contrast, BLA inactivation during deliberation increased choice of the large, delayed reward (decreased impulsive choice). Thus, across two different value-based decision-making tasks, the BLA appears to contribute to the decision process in a fundamentally different manner depending on the decision phase. Although the two tasks involve different costs (risk of punishment vs. delay to reward delivery), the effects of BLA inactivation on choice behavior are similar: inactivation during deliberation decreases both risky and impulsive choice, whereas inactivation during outcome delivery increases both. This raises the question of what information the BLA processes during these different phases.
One hypothesis is that during deliberation, BLA activity may be important for tagging the various options in terms of their incentive salience, or how much they are “wanted” at that moment, with the larger (albeit riskier) option or the more immediate option being the more salient because of its rewarding properties. In contrast, during outcome evaluation, the BLA may be important for integrating information about the value of both the reward and the cost to guide behavior toward better options in the future. In essence, BLA activity may signal whether the previous choice was really worth the cost involved, ultimately promoting less risky and less impulsive choices in subsequent decisions. How the BLA mediates these dissociable functions remains an open question, but they may arise in part from segregated populations of neurons that have distinct projections to other brain regions. For example, BLA neurons that project to the NAc may be predominantly involved in the outcome evaluation process, whereas BLA neurons that project to the orbitofrontal cortex may be predominantly involved in deliberation (Orsini, Trotta, et al., 2015). Optogenetics can readily address these possibilities by manipulating BLA terminals in the NAc or orbitofrontal cortex during outcome evaluation or deliberation, respectively.

Midbrain circuitry

A major component of the decision-making process is the ability to inhibit selection of certain options, particularly those associated with costly or unfavorable outcomes. Put another way, it is critical to learn about choices associated with negative consequences, so that this information can be used to avoid them in the future. In the last decade, the lateral habenula (LHb) has emerged as a candidate brain region in the neural circuitry supporting this process (Baker et al., 2016). Neurons in the LHb are activated by punishment (footshock) and reward omission and are inhibited by delivery of unexpected rewards (Matsumoto & Hikosaka, 2007, 2009a; Stamatakis & Stuber, 2012). This activation and inhibition have been causally linked to inhibition and activation, respectively, of dopamine (DA) neurons in the ventral tegmental area (VTA; Ji & Shepard, 2007; Stopper et al., 2014), a major source of dopaminergic afferents to brain regions in the mesocorticolimbic circuit (e.g., NAc, BLA) (Oades & Halliday, 1987; Swanson, 1982). The LHb exerts this control over DA activity in the VTA through glutamatergic projections to GABAergic neurons in the rostromedial tegmental area (RMTg), which in turn project to DA neurons in the VTA (Hong, Jhou, Smith, Saleem, & Hikosaka, 2011; Jhou, Fields, Baxter, Saper, & Holland, 2009). Interestingly, others have shown that the LHb-RMTg circuit is critical for promoting active and conditioned avoidance behavior (Stamatakis & Stuber, 2012). For example, optogenetic activation of this pathway causes mice to reduce responding for a previously positive reinforcer, as though it had become aversive (Stamatakis & Stuber, 2012). Thus, the influence of the LHb→RMTg pathway on VTA DA activity may be one mechanism that biases choices away from options associated with negative consequences and toward those that are safer.
Until recently, however, the contribution of this circuit and its regulation of VTA DA activity in decision making had not been investigated.

In one of the first studies to explore this question, Stopper et al. (2014) used electrical stimulation of the LHb, RMTg or VTA during distinct decision phases to determine how activation of these structures affects choice performance in a probability discounting task. In this task, rats choose between two levers, one that yields a small reward 100% of the time (the “safe” option) and one that yields a large reward, but at varying probabilities (the “risky” option). Electrical stimulation of either the LHb or RMTg during delivery of the large reward following a “risky” choice (i.e., wins) decreased choice of this option (decreased risky choice), such that rats shifted their preference to the small, certain option on subsequent trials. In contrast, when the LHb was stimulated during delivery of the small, certain reward, rats increased their choice of the risky option (increased risky choice). Together, these data suggest that stimulation of the LHb or RMTg during reward delivery caused rats to act as though that reward were aversive, resulting in a shift in choices to the alternative option, irrespective of the actual identity of the reward (or its associated costs). Interestingly, a similar decrease in risky choice was observed when the LHb was stimulated during deliberation, particularly in rats that preferred risky options. There were, however, no effects on choice behavior when the LHb was stimulated during intertrial intervals (the periods between the end of one trial and the beginning of the next). Finally, Stopper et al. stimulated the VTA during risky losses (omissions of the large reward following selection of the risky option) to determine whether this could override aversion-related signals from the LHb-RMTg circuit and promote risky choice. Indeed, this manipulation did increase risky choice, suggesting that VTA stimulation increased activity of DA neurons to a level that superseded the inhibitory influence of the LHb and RMTg.
In the absence of this inhibitory regulation of the VTA, rats would be less sensitive to reward omissions (losses) and would consequently increase their choice of the risky option.

A more recent study extended this work by probing the role of RMTg projections to the VTA in decision making involving explicit punishment (Vento, Burnham, Rowley, & Jhou, 2017). Rather than using electrical stimulation, Vento and colleagues (2017) optogenetically inhibited RMTg terminals in the VTA during a task in which rats pressed levers to obtain a food reward that was immediately followed by a footshock that increased in intensity across trials within a test session. The aim of these experiments was to determine how inhibition of the RMTg-VTA pathway affected the ability of punishment to suppress reward seeking (shock breakpoint). Given previous work by Stopper et al. and others (Baker et al., 2016; Hong et al., 2011; Jhou et al., 2009), it was perhaps not surprising that inhibition of RMTg projections to the VTA increased shock breakpoint, that is, impaired the ability of the punishment to inhibit reward seeking. This resistance to punishment occurred when the RMTg-VTA pathway was inhibited during either the deliberation period or footshock delivery (each of which was assessed in separate test sessions), but not when the pathway was inhibited immediately before or after the footshock. This suggests that RMTg input to the VTA is important not only during deliberation, to avoid actions associated with negative outcomes, but also for providing a negative feedback signal (i.e., outcome evaluation) that can be fed into subsequent deliberation phases. Collectively, these two studies (Stopper et al., 2014; Vento et al., 2017) elegantly delineate a circuit that is important for signaling cost-related information. In particular, this body of work shows that this circuit, and the individual structures within it, are engaged during both the deliberation and outcome evaluation phases. Such a dual purpose is not surprising, given that some costs associated with rewards could very well be harmful.
It is likely adaptive for the brain to broadcast this information as widely as possible (i.e., across multiple phases of decision making).

There are several routes by which information about costs could be conveyed from the VTA to other regions in the mesocorticolimbic circuit to modulate value-based decision making. Recent work from Saddoris et al. (2015) suggests that one such route may be the dopaminergic projections from the VTA to the NAc. Using a delay discounting task similar to that used by Hernandez et al. (2017), Saddoris et al. first used voltammetry to demonstrate that DA release in the NAc scaled with rats’ choice behavior: peak DA release in the NAc during presentation of reward-predictive cues was greater when those cues were followed by the rats’ choice of their preferred option (e.g., large reward with no delay or large reward with short delay vs. large reward with long delay or small, immediate reward). Interestingly, this predictive scaling of DA release was significantly lower during cue presentation prior to delivery of the large reward with the longest delay than during cue presentation prior to delivery of the small, immediate reward, indicating that DA signaling during decision making shifted as a function of the delay cost. These authors then used optogenetics to evaluate the causal role of DA release in biasing choice preference. Virally packaged channelrhodopsin-2 (ChR2) was delivered to the VTA in transgenic rats expressing Cre recombinase in tyrosine hydroxylase-positive (TH+) neurons in order to selectively activate dopaminergic projections from the VTA to the NAc during two different modified versions of the delay discounting task. In one version (delay test), rats chose between an immediate food reward (1 food pellet, 0 sec) and a delayed food reward (1 pellet, 10 sec). In the second version (reward magnitude test), rats chose between 1 and 2 food pellets, both of which were delivered immediately (i.e., no delay). This task design was used to isolate each feature of decision making (delay vs. reward magnitude) independently of the other.
During each test, dopaminergic projections from the VTA to the NAc were stimulated during cue presentations for the less-valuable option (i.e., delayed reward in the delay test, small reward in the reward magnitude test), but only during forced choice trials in which only one lever was available. The rationale for this design was that if DA release signals the preferred option during cue presentation, leading to the selection of that option, enhancing DA through activation of the VTA-NAc circuit during cue presentation for the less valuable option should be sufficient to bias choice toward that option. Because stimulation of the circuit occurred only during forced choice trials, choice behavior during subsequent free choice trials served as the behavioral index for learned choice preference. As predicted, when the VTA-NAc circuit was stimulated during the cue for the delayed reward in the delay test, rats increased their choice of this option during subsequent free choice trials. When this circuit was activated during the cue for the small reward in the reward magnitude test, however, there were no changes in choice preference. Thus, enhancing VTA-mediated DA release in the NAc was indeed sufficient to alter value-based decision making, but only when the reward options varied in their relative cost, and not in magnitude. These data are significant in that they suggest that, rather than broadly encoding reward preference, VTA-mediated DA release in the NAc has a selective role in encoding the costs (or at least delay costs) associated with the available options. As noted above, this causal manipulation of DA signaling only occurred during cue presentations on forced choice trials and thus cannot be considered part of the deliberation phase as there was only one option from which to select. Nevertheless, it is clear that boosting DA release during the period prior to action selection affected how rats subsequently made decisions. 
This is supported by the fact that peak DA release during cue presentations on free choice trials was highest when followed by choice of the preferred option (Saddoris et al., 2015).

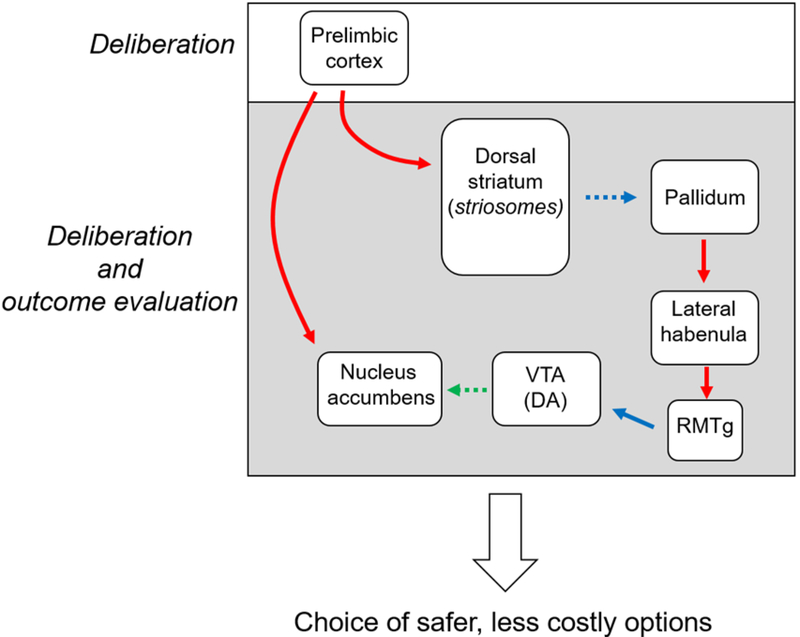

Considered together, these studies support a model (Figure 2) whereby during deliberation, glutamatergic projections from the LHb to GABAergic neurons in the RMTg are activated (Stopper et al., 2014). Excitation of these GABAergic neurons results in the inhibition of VTA dopaminergic activity (Vento et al., 2017), thereby decreasing DA release in the NAc. Under normal conditions, this pattern of activity within the circuit would result in a bias toward safer, but perhaps less preferable, options. If, however, the VTA became disinhibited, due to LHb inhibition for example, it could promote release of DA in the NAc, which, as Saddoris et al. (2015) demonstrated, biases choice toward the more preferred option, even if accompanied by a small cost (e.g., a short delay). Indeed, overriding LHb/RMTg regulation of the VTA with VTA electrical stimulation results in an increase in choice of larger, albeit uncertain, rewards (Stopper et al., 2014). The studies reviewed above also show that interactions between the LHb, RMTg and VTA are involved during outcome evaluation, but it remains to be determined whether this also applies to the regulation of the VTA-NAc circuit. Notwithstanding, knowledge about the temporal dynamics of these circuits during deliberation provides a foundation from which hypotheses can be generated about how processing within this circuit may become compromised in psychiatric conditions (e.g., in substance use disorders; Orsini, Moorman, et al., 2015).

Figure 2. Hypothetical circuit model of prefrontal regulation of decision making.

Synthesis of recent studies using optogenetics or electrical stimulation suggests that value-based decision making is mediated by interactions between the prefrontal cortex, midbrain and limbic regions. It should be noted that, although not represented in the schematic, the prelimbic cortex also projects to the basolateral amygdala (which in turn projects to other structures in the diagram). Although some of these circuits have been shown to contribute to value-based decision making (St Onge et al., 2012), it is unknown how they are involved in temporally distinct phases of the decision process. Red lines represent glutamatergic projections. Blue lines represent GABAergic projections. Green lines represent dopaminergic projections. Solid lines indicate activation of the projection and dashed lines indicate inhibition of the projection under normal conditions that require a bias toward safer, less costly options. Abbreviations: VTA, ventral tegmental area; RMTg, rostromedial tegmental area; DA, dopamine.

Prefrontal control of limbic and midbrain regions

The prefrontal cortex is another critical component of the decision-making circuit and is thought to monitor variations in outcomes and refine decision making to ultimately promote optimal behavior (Orsini, Moorman, et al., 2015). The mPFC is one subregion of the prefrontal cortex that is involved in various cognitive processes that contribute to decision making, such as behavioral flexibility and attention. Previous work has shown that pharmacological inactivation of the prelimbic cortex (PL) of the mPFC impairs the ability to flexibly shift choice preference as risks increase or decrease within test sessions (St Onge & Floresco, 2010). This indicates that the PL is particularly important for updating reward value representations as reward and risk contingencies change over time. The PL exerts its effects on choice performance through its projections to limbic and midbrain regions, including the BLA, dorsal striatum, NAc and VTA (Orsini, Moorman, et al., 2015). For example, descending projections from the PL to the BLA mediate the flexibility necessary to adjust choice behavior as cost and reward contingencies change during decision making (St Onge, Stopper, Zahm, & Floresco, 2012). In addition, interactions between dopamine D1 receptors in the PL and the NAc are important for promoting choice of larger, riskier options and reducing sensitivity to losses (Jenni, Larkin, & Floresco, 2017). More recently, new data have emerged from studies using optogenetic manipulations of PL circuits that more precisely delineate how specific prefrontal circuits mediate distinct phases of decision making under conflict or punishment.

In the first of these studies, Friedman and colleagues (2015) demonstrated an important role in value-based decision making for PL projections to striosomes, distinct microzones within the dorsal striatum. Striosomes are distinguished from the surrounding striatal tissue, or matrix, on the basis of their neurochemistry and their patterns of inputs and outputs (Fujiyama et al., 2011; Graybiel, 1990; Graybiel & Ragsdale, 1978). Of particular relevance to decision making, striosomes receive input not only from the PL, but also from other regions implicated in value-based decision making, such as the amygdala (Graybiel, 1990). Further, in contrast to the matrix, striosomes send direct or indirect projections to dopamine-rich midbrain regions and to structures such as the lateral habenula (Fujiyama et al., 2011; Graybiel, 1990; Prensa & Parent, 2001; Stephenson-Jones, Kardamakis, Robertson, & Grillner, 2013; Watabe-Uchida, Zhu, Ogawa, Vamanrao, & Uchida, 2012) that are known to contribute to decision making (Orsini, Moorman, et al., 2015). To test the contribution of PL communication with striosomes, Friedman et al. (2015) optogenetically inhibited PL terminals in striosomes while rats performed a cost/benefit decision-making task that required them to choose between two arms of a T-maze. The ends of the arms were baited with options that differed in their costs (varying intensities of bright light) and rewards (varying dilutions of chocolate milk). Rats learned to approach or avoid arms based on the concentration of the chocolate milk relative to its associated cost. When the PL-striosome pathway was inhibited during the period between trial initiation and reward receipt, rats were more willing to select the high-cost, high-reward option.
This effect was not observed in other versions of the task in which either the rewards or costs were held constant, indicating that it was selective to decisions that involved weighing the relative rewards and costs of the different options. As further confirmation, excitation of this pathway caused the opposite effect, such that rats biased their choice toward the low-cost, low-reward option. Based on a series of additional experiments, Friedman et al. suggested that activity in this pathway may contribute to decision making under conflict by engaging a network of striatal interneurons that suppress activity in striosomes. Interestingly, it has been shown in lampreys that striosomes inhibit pallidal neurons that have glutamatergic projections to the LHb (Stephenson-Jones et al., 2013). In rodents, optogenetic stimulation of these excitatory projections from the pallidum to the LHb is aversive, resulting in avoidance behavior (Shabel, Proulx, Trias, Murphy, & Malinow, 2012). Considered together, suppression of striosomes could effectively release LHb-projecting pallidal neurons from inhibition, allowing pallidal neurons to drive LHb activity, which would bias behavior toward low-cost, low-reward choices (Figure 2). Support for this hypothesis is twofold. First, others have shown in primates that, like LHb neurons (Bromberg-Martin & Hikosaka, 2011; Matsumoto & Hikosaka, 2007), pallidal neurons that project to the LHb are activated by aversive events such as reward omission (Bromberg-Martin, Matsumoto, Hong, & Hikosaka, 2010; Hong & Hikosaka, 2008). Second, as reviewed above, activation of the LHb promotes avoidance-like behavior (Stamatakis & Stuber, 2012) and, of particular relevance, when LHb activation occurs during deliberation, rats decrease their choice of risky options (Stopper et al., 2014). It is important to note, however, that although the optogenetic manipulation of the PL-striosome pathway in the Friedman et al.
study was selective to the period prior to outcome evaluation (i.e., the entire duration from trial initiation to reward receipt at the end of the maze arm), it did not discriminate between deliberation and action selection phases (Friedman et al., 2015). It would be useful to further dissect the role of this pathway during the period preceding the rats’ arrival at the end of the goal arm, particularly in light of work (reviewed above) showing that neural activity in the dorsal striatum during deliberation influences subsequent choices (Tai et al., 2012).

In addition to its projections to the DS, the PL sends robust projections to the NAc (Groenewegen, Wright, Beijer, & Voorn, 1999; Sesack, Deutch, Roth, & Bunney, 1989), which contribute not only to reward seeking for both drug and natural rewards, but also to risk-based decision making (Bossert et al., 2012; Jenni et al., 2017; McGlinchey, James, Mahler, Pantazis, & Aston-Jones, 2016). Recent work has extended this knowledge by showing that a subset of NAc-projecting neurons in the PL influences reward seeking in the face of punishment, specifically during the period before a choice is made (i.e., deliberation; Kim, Ye, et al., 2017). In this study, mice were trained in a task in which 70% of lever presses yielded a chocolate milk reward, but the remaining 30% resulted in a footshock without reward delivery. Using calcium imaging to visualize activity in NAc-projecting neurons in the PL, Kim et al. (2017) observed that activity in these neurons was suppressed immediately before pressing the lever when it was associated with risk of punishment (in contrast to control sessions in which lever presses resulted only in reward, with no possibility of punishment). Unexpectedly, however, optogenetic activation of this pathway during this deliberation phase had no effect on lever pressing. Subsequent experiments revealed that this was likely due to the diversity of responses in NAc-projecting PL neurons, with some encoding punishment (“shock” cells) and some encoding reward. When only the NAc-projecting shock cells in the PL were activated during deliberation, mice did show greater suppression of reward seeking when there was a risk of punishment associated with the lever.
Although the involvement of this pathway was not tested during other phases of the task (e.g., delivery of punishment, delivery of reward with no punishment), each optogenetic stimulation session included trials in which no stimulation occurred, allowing for a within-session comparison of the effects of circuit activation during deliberation. Finally, this activation-induced suppression of reward seeking in the face of known risk of punishment appeared to be specific to the PL-NAc pathway, as the same manipulations of PL projections to the VTA were without effect. Again, however, because these circuits were not tested during outcome delivery, it is possible that VTA-projecting PL neurons are involved in other phases of the decision process. Collectively, these data demonstrate that recruitment of NAc-projecting neurons in the PL during deliberation appears to bias choices away from options with known risks of adverse consequences. By this view, diminished activity within this circuit during deliberation may contribute to the impaired decision making symptomatic of many psychiatric conditions, such as substance use disorders, pathological gambling, and eating disorders.

Together, these studies suggest that the PL interacts with the NAc and DS during the deliberation phase of value-based decision making (Figure 2), and that activation of either of these pathways results in a decrease in preference for costly options (Friedman et al., 2015; Kim, Ye, et al., 2017). This choice bias may be mediated at least in part by a subset of NAc-projecting neurons in the PL that selectively encode the costs associated with possible options. The engagement of PL projections to specific cell populations in the DS (striosomes) may be another mechanism governing risk-averse behavior. Activation of this pathway may disinhibit the pallidum, and thus activate the LHb via glutamatergic projections (Hong & Hikosaka, 2008). The LHb could then regulate DA activity in the VTA, and in turn downstream NAc function, via the RMTg (Bromberg-Martin, Matsumoto, Hong, et al., 2010; Hong et al., 2011; Stopper et al., 2014). Based on the recent work reviewed herein, interactions between the LHb and midbrain circuitry appear to be involved in both deliberation and outcome evaluation, although the distinction between the different phases was not entirely clear in the respective studies. Thus, it will be critical to continue to employ techniques that afford temporal control of neuronal activity to gain a better appreciation for how these circuits contribute to distinct phases of the decision process.
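The sign logic of the hypothetical chain summarized above can be traced with a short qualitative sketch. It is illustrative only. It assumes the chain is strictly linear and that each projection is either excitatory (+1) or inhibitory (-1). It ignores timing, magnitude, decision phase and parallel routes such as direct striosomal projections to the midbrain. The interneuron step follows the mechanism suggested by Friedman et al. (2015) and is an assumption here. The node labels, the `Projection` type and the `propagate` and `predicted_bias` functions are hypothetical names introduced only for this illustration.

```python
from dataclasses import dataclass
from typing import Dict, List

EXCITATORY = +1  # e.g., glutamatergic or dopaminergic drive onto the target
INHIBITORY = -1  # e.g., GABAergic suppression of the target


@dataclass(frozen=True)
class Projection:
    source: str
    target: str
    sign: int  # EXCITATORY or INHIBITORY


# Hypothetical, purely qualitative chain assembled from the model discussed in the text.
# The interneuron step reflects the mechanism suggested by Friedman et al. (2015);
# it is an assumption of this sketch, not an established wiring diagram.
CHAIN: List[Projection] = [
    Projection("PL", "striatal interneurons", EXCITATORY),
    Projection("striatal interneurons", "striosomes", INHIBITORY),
    Projection("striosomes", "LHb-projecting pallidum", INHIBITORY),
    Projection("LHb-projecting pallidum", "LHb", EXCITATORY),
    Projection("LHb", "RMTg", EXCITATORY),
    Projection("RMTg", "VTA DA neurons", INHIBITORY),
    Projection("VTA DA neurons", "NAc DA release", EXCITATORY),
]


def propagate(chain: List[Projection], start: str, perturbation: int) -> Dict[str, int]:
    """Propagate the sign of a perturbation (+1 activate, -1 inhibit) from `start`
    down a linear chain, returning the predicted direction of change at each node."""
    sources = [p.source for p in chain]
    index = sources.index(start)  # raises ValueError if `start` is not a source node
    effects = {start: perturbation}
    current = perturbation
    for projection in chain[index:]:
        current *= projection.sign  # an inhibitory link flips the direction of change
        effects[projection.target] = current
    return effects


def predicted_bias(nac_da_change: int) -> str:
    """Map the sign of the NAc DA change onto the qualitative choice bias in the model."""
    if nac_da_change > 0:
        return "toward preferred, costlier options"
    return "toward safer, less costly options"


if __name__ == "__main__":
    for start, perturbation in [("PL", +1), ("LHb", +1), ("LHb", -1)]:
        da = propagate(CHAIN, start, perturbation)["NAc DA release"]
        label = "activation" if perturbation > 0 else "inhibition"
        print(f"{start} {label}: NAc DA {da:+d}, bias {predicted_bias(da)}")
```

Under these assumptions, the sketch reproduces the qualitative predictions in the text. Activating the PL pathway or the LHb lowers NAc DA release and biases choice toward safer options. Inhibiting the LHb disinhibits the VTA, raises NAc DA release and biases choice toward preferred, costlier options. Because the sketch only multiplies signs, it says nothing about which decision phase a given effect belongs to.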

Clinical implications

While the majority of individuals are able to make adaptive decisions, individuals who suffer from various psychiatric diseases are impaired in this ability (Crowley et al., 2010; Fishbein et al., 2005; Gowin, Mackey, & Paulus, 2013; Kaye, Wierenga, Bailer, Simmons, & Bischoff-Grethe, 2013). For example, individuals with substance use disorders (SUDs) tend to display exaggerated risk taking and impulsive choice (Bechara et al., 2001; Gowin et al., 2013; Leland & Paulus, 2005), which can contribute to continued drug seeking and/or relapse after periods of abstinence (Goto, Takahashi, Nishimura, & Ida, 2009; Sheffer et al., 2014). Other pathological conditions that are associated with impaired decision making include attention-deficit hyperactivity disorder (Ernst et al., 2003), eating disorders (Danner, Ouwehand, van Haastert, Hornsveld, & de Ridder, 2012; Kaye et al., 2013), pathological gambling (Brevers, Bechara, Cleeremans, & Noel, 2013), and schizophrenia (Heerey, Bell-Warren, & Gold, 2008; Heerey, Robinson, McMahon, & Gold, 2007; Lee, 2013). Not surprisingly, both preclinical and human studies have reported that dysfunction within many of the brain regions involved in decision making (including those discussed in this review) is associated with these psychiatric diseases. For years, scientists have worked toward treating the primary manifestations of these conditions, such as drug seeking or craving in the case of SUDs. If, however, aberrant decision making is a significant contributing factor to SUD persistence, an alternative approach could be to treat the impaired decision making itself, which might attenuate the severity and persistence of pathology.
Further, in line with the main aim of this review, each phase of the decision process should be considered separately, as one phase may be preferentially impaired over others (e.g., chronic substance use might selectively impair cognitive operations during the deliberation phase, while leaving the outcome evaluation phase relatively intact). Whether this is in fact the case for decision-making impairments in psychiatric disease remains unclear, although there is evidence that, when tested in probabilistic learning tasks (or tasks that require switching choices based on prior experience), individuals with schizophrenia are impaired in their ability to use feedback from previous experiences to adjust their subsequent behavior (Strauss et al., 2011; Waltz, Frank, Robinson, & Gold, 2007). This does not preclude additional deficits in the deliberative process, but, considered together with research from animal models, it could suggest that poor decision making in schizophrenia is attributable to impairments in outcome evaluation. Such a distinction could inform future treatment strategies; for example, cognitive behavioral therapy could focus on how to approach decisions and pre-emptively evaluate options, rather than on reflecting on the outcomes of past decisions.

The research reviewed herein shows that certain brain regions or circuits may be engaged differently in decision making depending on the decision phase (summarized in Tables 1 and 2). For example, in decision making involving risk of punishment, the BLA appears to be engaged differently during deliberation versus outcome evaluation (Orsini et al., 2017). If a certain decision phase is selectively affected, as may be the case with deliberation in SUDs, this could suggest that BLA dysfunction is an underlying cause of the impaired decision making. Indeed, both preclinical and human imaging studies show that chronic substance use can alter BLA activity (Beveridge, Smith, Daunais, Nader, & Porrino, 2006; Calipari, Beveridge, Jones, & Porrino, 2013; Mackey & Paulus, 2013; Stalnaker et al., 2007; Zuo et al., 2012). With this knowledge, it might be possible to manipulate the BLA to attenuate impaired decision making. As an example, chronic cocaine-induced increases in risk taking (Mitchell et al., 2014) could potentially be attenuated by selective inhibition of the BLA during deliberation, which decreases risk taking in drug-naïve rats (Orsini et al., 2017). Although optogenetic approaches in the human brain are not yet feasible, scientists and clinicians have begun to explore the use of deep brain stimulation (DBS) as a means to reduce drug seeking and related behavior (Creed, 2018). The effects of DBS on decision making itself have been reported in the context of Parkinson’s disease, in which DBS serves as an alternative form of treatment (Charles et al., 2012; Gubellini, Salin, Kerkerian-Le Goff, & Baunez, 2009), because standard dopamine replacement medications can increase risk taking and decrease impulse control (Evans & Lees, 2004; A. D. Lawrence, Evans, & Lees, 2003; Voon et al., 2011).
In many of these studies, DBS has targeted the subthalamic nucleus (STN) and has been effective in decreasing the negative effects of dopamine replacement medications (Follett et al., 2010; Odekerken et al., 2013), although it has also been shown to disrupt executive functions, including decision making (Frank, 2006; Rogers et al., 2011). In a recent study in rats, however, DBS of the STN improved decision making specifically in subjects that demonstrated a high degree of risk preference at baseline (Adams et al., 2017). Interestingly, the same research group showed that such “risk-preferring” rats were also uniquely affected by cocaine self-administration, which increased their risky choice relative to rats that chose more optimally at baseline (Ferland & Winstanley, 2017). This raises the possibility that DBS, at least in the STN, could effectively decrease the elevated risky choice (i.e., mitigate the impaired decision making) associated with SUDs. Importantly, however, DBS of the STN in this study was not specific to particular decision phases (i.e., it occurred during the entire task). It would therefore be valuable to restrict DBS to distinct decision phases, not only to narrow down how and when a particular brain region (such as the STN) is involved in the decision process, but also to determine whether DBS during a specific phase can restore or improve decision making under pathological conditions (e.g., after chronic cocaine self-administration).

Table 1. Deliberation.

Summary of studies implicating specific neural substrates involved in the deliberation phase of value-based decision making using optogenetic or electrical stimulation techniques. Abbreviations: DS, dorsal striatum; NAc, nucleus accumbens; BLA, basolateral amygdala; LHb, lateral habenula; RMTg, rostromedial tegmental area; VTA, ventral tegmental area; PL, prelimbic cortex.

| Region/circuit | Manipulation | Results | Reference |
|---|---|---|---|
| Dopamine D2 receptor-expressing neurons in NAc shell | Optogenetic excitation | Decreased risky choice in risk-preferring rats | Zalocusky et al. (2016) |
| Dopamine D1 or D2 receptor-expressing neurons in DS | Optogenetic excitation | D1 stimulation biased choice toward contralateral port; D2 stimulation biased choice toward ipsilateral port | Tai et al. (2012) |
| BLA | Optogenetic inhibition | Inhibition during deliberation decreased risky choice | Orsini et al. (2017) |
| BLA | Optogenetic inhibition | Inhibition during deliberation decreased impulsive choice | Hernandez et al. (2017) |
| LHb | Electrical stimulation | Stimulation during deliberation decreased risky choice | Stopper et al. (2014) |
| RMTg projections to the VTA | Optogenetic inhibition | Inhibition during deliberation increased shock breakpoint | Vento et al. (2017) |
| VTA terminals in the NAc | Optogenetic excitation | Excitation during cue presentation for the delayed reward in forced choice trials increased choice of this option in free choice trials | Saddoris et al. (2015) |
| PL projections to striosomes in DS | Optogenetic excitation or inhibition | Excitation decreased choice of high-cost, high-benefit option; inhibition increased choice of high-cost, high-benefit option | Friedman et al. (2015) |
| “Shock”-representing cells in the PL that project to the NAc | Optogenetic excitation | Decreased reward seeking when reward associated with risk of punishment (compared to reward with no risk of punishment) | Kim et al. (2017) |

Table 2. Outcome evaluation.

Summary of studies implicating specific neural substrates involved in the outcome evaluation phase of value-based decision making using optogenetic or electrical stimulation techniques. Abbreviations: BLA, basolateral amygdala; LHb, lateral habenula; RMTg, rostromedial tegmental nucleus; VTA, ventral tegmental area.

| Region/circuit | Manipulation | Results | Reference |
|---|---|---|---|
| BLA | Optogenetic inhibition | Inhibition during delivery of large, punished reward increased risky choice | Orsini et al. (2017) |
| BLA | Optogenetic inhibition | Inhibition during delivery of small, immediate reward increased impulsive choice | Hernandez et al. (2017) |
| LHb | Electrical stimulation | Stimulation during risky wins decreased risky choice; stimulation during delivery of small reward increased risky choice | Stopper et al. (2014) |
| VTA | Electrical stimulation | Stimulation during risky losses increased risky choice | Stopper et al. (2014) |
| RMTg projections to the VTA | Optogenetic inhibition | Inhibition during delivery of footshock (outcome) increased shock breakpoint | Vento et al. (2017) |

Closing remarks

The aim of this review was to conceptualize decision making as a process composed of multiple cognitive elements that work in concert to guide value-based choice. This perspective is relatively novel in neurobiological research on decision making, largely because of the experimental challenge of isolating a causal role for brain activity to a specific phase of the decision process. The use of optogenetics (and other techniques that allow temporally specific manipulation of neural circuits) now allows scientists to overcome this barrier and gain a more precise understanding of how the brain mediates this complex cognitive process, from cell type-specific contributions to circuit-level interactions. It is hoped that further use of these techniques will enable a more complete mapping of the circuitry mediating distinct decision components.

It is worth noting that throughout this review, no clear distinction was drawn between the different types of costs (e.g., delay, footshock, reward omission) assessed in decision-making tasks. Rather, any penalty or loss incurred during a value-based decision-making task was discussed as though it were represented and processed similarly in the brain. Although this may be the case at some levels of information processing, there is also evidence that the type of cost can determine how some neural systems are engaged in decision making. For example, the effects of dopaminergic manipulations on risky decision making differ depending on whether the cost involves risk of reward omission or risk of punishment: systemic administration of the indirect DA agonist amphetamine or of direct D2R agonists increases risky choice in the former case (St Onge & Floresco, 2009) but decreases risky choice in the latter (Orsini, Trotta, et al., 2015; Orsini, Willis, Gilbert, Bizon, & Setlow, 2016; Simon et al., 2011). Although this pattern may not generalize to other neural systems, it underscores the importance of considering the nature of the cost when assessing how the BLA, for example, evaluates outcomes and uses that information to guide future choices. Interestingly, work from Orsini et al. (2017) and Hernandez et al. (2017) suggests that the BLA may operate similarly during deliberation and outcome evaluation in decision making involving risk of punishment and delay to reward, respectively. Thus, certain brain regions within the decision-making circuitry may use the same calculus across different types of costs. Equipped with both complex preclinical models of decision making and modern neuroscience tools, scientists are well positioned to address such questions.

Relatedly, all of the studies described herein assume that performance in the decision-making tasks is based on model-based learning, or the ability to update already-acquired knowledge (“mental models”) about actions and their outcomes with new information from the environment in order to flexibly adjust future behavior (Dayan & Niv, 2008; Doll, Simon, & Daw, 2012; Sutton & Barto, 1998). This contrasts with “model-free” learning, also referred to as habit learning, in which the values of different actions and their outcomes are based solely on previous direct experience. Although these two forms of learning are conceptually distinct, they appear to be mediated by overlapping brain regions and circuits (Doll et al., 2012; Lee, 2013). Nevertheless, given how different the underlying evaluative processes are, it is reasonable to hypothesize that the recruitment of certain brain regions during distinct phases of decision making, such as outcome evaluation, may differ depending on the type of internal representation the decision maker uses. As with the comparison of costs across decision-making tasks, tools that allow temporal dissociation of distinct decision phases provide the means to test this hypothesis and to advance our understanding of the neurobiology of decision making.
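To make the model-based/model-free distinction concrete, the following is a toy Python sketch that is not drawn from any of the studies reviewed here. It assumes a hypothetical risky option whose reward (4 arbitrary units) is sometimes paired with a punishment costing 8 units, and it uses a simple delta-rule (Rescorla-Wagner-style) update as one common formalization of model-free learning; the names `RiskyOption`, `model_based_value`, `model_free_update` and `train_model_free`, the magnitudes, and the learning rate are all illustrative assumptions. When the probability of punishment rises, the model-based value (computed from an internal model that is assumed to already reflect the new probability) changes immediately, whereas the model-free cached value changes only as the new outcomes are directly experienced.

```python
from dataclasses import dataclass
from itertools import cycle, islice


@dataclass
class RiskyOption:
    """Hypothetical risky option: a reward that is sometimes accompanied by punishment."""
    reward: float       # reward magnitude (arbitrary units)
    p_punish: float     # probability that choosing the option is punished
    punish_cost: float  # subjective cost of the punishment (same units as reward)


def model_based_value(opt: RiskyOption) -> float:
    """Compute value on demand from an internal model of the option's outcomes."""
    return opt.reward - opt.p_punish * opt.punish_cost


def model_free_update(q: float, outcome: float, alpha: float = 0.1) -> float:
    """Delta-rule update: nudge a cached value toward the outcome just experienced."""
    return q + alpha * (outcome - q)


def train_model_free(q: float, outcomes, n_trials: int, alpha: float = 0.1) -> float:
    """Update the cached value over n_trials drawn cyclically from a fixed outcome schedule."""
    for outcome in islice(cycle(outcomes), n_trials):
        q = model_free_update(q, outcome, alpha)
    return q


if __name__ == "__main__":
    option = RiskyOption(reward=4.0, p_punish=0.25, punish_cost=8.0)
    # Deterministic stand-in for p_punish = 0.25: every 4th choice is punished.
    old_schedule = [4.0, 4.0, 4.0, 4.0 - 8.0]
    q_cached = train_model_free(0.0, old_schedule, n_trials=200)

    # The environment changes: punishment now follows 3 of every 4 choices.
    # Assumption: the model-based agent's internal model already reflects this.
    option.p_punish = 0.75
    print(f"model-based value after change: {model_based_value(option):+.2f}")
    print(f"model-free value before re-experience: {q_cached:+.2f}")

    # Model-free learning only catches up by experiencing the new outcomes.
    new_schedule = [4.0, -4.0, -4.0, -4.0]
    q_relearned = train_model_free(q_cached, new_schedule, n_trials=200)
    print(f"model-free value after re-experience: {q_relearned:+.2f}")
```

The fixed outcome schedules stand in for random sampling so that the run is reproducible; a fuller treatment would sample outcomes stochastically and have the model-based agent learn the punishment probability from experience as well.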

Finally, although great strides have been made in understanding the neurobiological complexity of value-based decision making, most of this work has been done in a vacuum, and future research should begin to focus on the context in which decisions are made. All of the work reviewed herein has investigated the neurobiology of value-based decision making in limited and carefully controlled environments. Although experimentally tractable, such environments do not reflect the complexity of real-world decision making, which occurs amidst a constantly changing background. It is easy to imagine that choice behavior will differ depending on a variety of exogenous influences. For example, the decision to imbibe alcohol may differ depending on whether one is surrounded by peers at a party (drink more!) or is in a private setting (one glass is enough). Similarly, choice behavior could vary based on familial relationships, such that more risk-averse strategies might be engaged for one’s own children, but riskier strategies might be tolerated for more distant relatives. Thus, decision making, and specifically its constituent subprocesses, may differ depending on the context in which the decision occurs. It follows that activity in the brain regions selectively involved in these subprocesses may differ accordingly. Consideration of such environmental factors would lend greater ethological validity to our understanding of decision making and its underlying neurobiology, and thus might enhance its translational application.

Acknowledgments

This work was supported by the National Institutes of Health (DA041493 to C.A.O., AG029421 to J.L.B., and DA036534 to B.S.), the McKnight Brain Research Foundation (J.L.B.), and a McKnight Predoctoral Fellowship and the Pat Tillman Foundation (C.M.H.).

References

- Adams WK, Vonder Haar C, Tremblay M, Cocker PJ, Silveira MM, Kaur S, … Winstanley CA (2017). Deep-Brain Stimulation of the Subthalamic Nucleus Selectively Decreases Risky Choice in Risk-Preferring Rats. eNeuro, 4(4). doi: 10.1523/ENEURO.0094-17.2017

- Andre VM, Cepeda C, Cummings DM, Jocoy EL, Fisher YE, William Yang X, & Levine MS (2010). Dopamine modulation of excitatory currents in the striatum is dictated by the expression of D1 or D2 receptors and modified by endocannabinoids. Eur J Neurosci, 31(1), 14–28. doi: 10.1111/j.1460-9568.2009.07047.x

- Baker PM, Jhou T, Li B, Matsumoto M, Mizumori SJ, Stephenson-Jones M, & Vicentic A (2016). The Lateral Habenula Circuitry: Reward Processing and Cognitive Control. J Neurosci, 36(45), 11482–11488. doi: 10.1523/JNEUROSCI.2350-16.2016

- Balleine BW, & Killcross S (2006). Parallel incentive processing: an integrated view of amygdala function. Trends Neurosci, 29(5), 272–279. doi: 10.1016/j.tins.2006.03.002

- Beaulieu JM, & Gainetdinov RR (2011). The physiology, signaling, and pharmacology of dopamine receptors. Pharmacol Rev, 63(1), 182–217. doi: 10.1124/pr.110.002642

- Bechara A, Damasio AR, Damasio H, & Anderson SW (1994). Insensitivity to future consequences following damage to human prefrontal cortex. Cognition, 50(1–3), 7–15.

- Bechara A, Damasio H, Damasio AR, & Lee GP (1999). Different contributions of the human amygdala and ventromedial prefrontal cortex to decision-making. J Neurosci, 19(13), 5473–5481.

- Bechara A, Dolan S, Denburg N, Hindes A, Anderson SW, & Nathan PE (2001). Decision-making deficits, linked to a dysfunctional ventromedial prefrontal cortex, revealed in alcohol and stimulant abusers. Neuropsychologia, 39(4), 376–389.

- Beveridge TJ, Smith HR, Daunais JB, Nader MA, & Porrino LJ (2006). Chronic cocaine self-administration is associated with altered functional activity in the temporal lobes of non human primates. Eur J Neurosci, 23(11), 3109–3118. doi: 10.1111/j.1460-9568.2006.04788.x

- Boschen SL, Wietzikoski EC, Winn P, & Da Cunha C (2011). The role of nucleus accumbens and dorsolateral striatal D2 receptors in active avoidance conditioning. Neurobiol Learn Mem, 96(2), 254–262. doi: 10.1016/j.nlm.2011.05.002

- Bossert JM, Stern AL, Theberge FR, Marchant NJ, Wang HL, Morales M, & Shaham Y (2012). Role of projections from ventral medial prefrontal cortex to nucleus accumbens shell in context-induced reinstatement of heroin seeking. J Neurosci, 32(14), 4982–4991. doi: 10.1523/JNEUROSCI.0005-12.2012

- Brevers D, Bechara A, Cleeremans A, & Noel X (2013). Iowa Gambling Task (IGT): twenty years after - gambling disorder and IGT. Front Psychol, 4, 665. doi: 10.3389/fpsyg.2013.00665

- Bromberg-Martin ES, & Hikosaka O (2011). Lateral habenula neurons signal errors in the prediction of reward information. Nat Neurosci, 14(9), 1209–1216. doi: 10.1038/nn.2902

- Bromberg-Martin ES, Matsumoto M, & Hikosaka O (2010). Dopamine in motivational control: rewarding, aversive, and alerting. Neuron, 68(5), 815–834. doi: 10.1016/j.neuron.2010.11.022

- Bromberg-Martin ES, Matsumoto M, Hong S, & Hikosaka O (2010). A pallidus-habenula-dopamine pathway signals inferred stimulus values. J Neurophysiol, 104(2), 1068–1076. doi: 10.1152/jn.00158.2010

- Calipari ES, Beveridge TJ, Jones SR, & Porrino LJ (2013). Withdrawal from extended-access cocaine self-administration results in dysregulated functional activity and altered locomotor activity in rats. Eur J Neurosci. doi: 10.1111/ejn.12381

- Camerer CF (2008). Neuroeconomics: opening the gray box. Neuron, 60(3), 416–419. doi: 10.1016/j.neuron.2008.10.027

- Cardinal RN, Pennicott DR, Sugathapala CL, Robbins TW, & Everitt BJ (2001). Impulsive choice induced in rats by lesions of the nucleus accumbens core. Science, 292(5526), 2499–2501. doi: 10.1126/science.1060818

- Carter AG, Soler-Llavina GJ, & Sabatini BL (2007). Timing and location of synaptic inputs determine modes of subthreshold integration in striatal medium spiny neurons. J Neurosci, 27(33), 8967–8977. doi: 10.1523/JNEUROSCI.2798-07.2007

- Cepeda C, Andre VM, Yamazaki I, Wu N, Kleiman-Weiner M, & Levine MS (2008). Differential electrophysiological properties of dopamine D1 and D2 receptor-containing striatal medium-sized spiny neurons. Eur J Neurosci, 27(3), 671–682. doi: 10.1111/j.1460-9568.2008.06038.x

- Charles PD, Dolhun RM, Gill CE, Davis TL, Bliton MJ, Tramontana MG, … Konrad PE (2012). Deep brain stimulation in early Parkinson’s disease: enrollment experience from a pilot trial. Parkinsonism Relat Disord, 18(3), 268–273. doi: 10.1016/j.parkreldis.2011.11.001

- Cohen JY, Haesler S, Vong L, Lowell BB, & Uchida N (2012). Neuron-type-specific signals for reward and punishment in the ventral tegmental area. Nature, 482(7383), 85–88. doi: 10.1038/nature10754

- Creed M (2018). Current and emerging neuromodulation therapies for addiction: insight from pre-clinical studies. Curr Opin Neurobiol, 49, 168–174. doi: 10.1016/j.conb.2018.02.015

- Crowley TJ, Dalwani MS, Mikulich-Gilbertson SK, Du YP, Lejuez CW, Raymond KM, & Banich MT (2010). Risky decisions and their consequences: neural processing by boys with Antisocial Substance Disorder. PLoS One, 5(9), e12835. doi: 10.1371/journal.pone.0012835

- Danner UN, Ouwehand C, van Haastert NL, Hornsveld H, & de Ridder DT (2012). Decision-making impairments in women with binge eating disorder in comparison with obese and normal weight women. Eur Eat Disord Rev, 20(1), e56–62. doi: 10.1002/erv.1098

- Day JJ, Jones JL, & Carelli RM (2011). Nucleus accumbens neurons encode predicted and ongoing reward costs in rats. Eur J Neurosci, 33(2), 308–321. doi: 10.1111/j.1460-9568.2010.07531.x

- Dayan P, & Niv Y (2008). Reinforcement learning: the good, the bad and the ugly. Curr Opin Neurobiol, 18(2), 185–196. doi: 10.1016/j.conb.2008.08.003