Abstract

Introduction

Achieving effective integration of healthcare across primary, secondary and tertiary care is a key goal of the New Zealand (NZ) Health Strategy. NZ’s regional District Health Board (DHB) groupings are fundamental to delivering integration, bringing the country’s 20 DHBs together into four groups to collaboratively plan, fund and deliver health services within their defined geographical regions. This research aims to examine how, for whom and in what circumstances the regional DHB groupings work to improve health service integration, healthcare quality, health outcomes and health equity, particularly for Māori and Pacific peoples.

Methods and analysis

This research uses a mixed methods realist evaluation design. It comprises three linked studies: (1) formulating initial programme theory (IPT) through developing programme logic models to describe regional DHB working; (2) empirically testing the IPT through a qualitative process evaluation of regional DHB working using a case study design; and (3) empirically testing the IPT through a quantitative analysis of the impact that DHB regional groupings may have on service integration, health outcomes, health equity and costs. The findings of these three studies will allow refinement of the IPT and should lead to a programme theory which will explain how, for whom and in what circumstances regional DHB groupings improve service integration, health outcomes and health equity in NZ.

Ethics and dissemination

The University of Otago Human Ethics Committee has approved this study. The embedding of a clinician researcher within a participating regional DHB grouping has facilitated research coproduction: the research has been jointly conceived and designed and will be jointly evaluated and disseminated by researchers and practitioners. Uptake of the research findings by other key groups including policymakers, Māori providers and communities and Pacific providers and communities will be supported through key strategic relationships and dissemination activities. Academic dissemination will occur through publication and conference presentations.

Keywords: organisation of health services, realist evaluation, health policy

Strengths and limitations of this study

New Zealand’s regional District Health Board (DHB) groupings are a complex health system intervention and can be appropriately investigated using a realist evaluation design.

Realist evaluation allows the questions how, for whom and in what circumstances regional DHB groupings improve service integration, health outcomes and health equity to be addressed.

The use of mixed methods (qualitative and quantitative) allows investigation of how best to measure the impacts of regional DHB group working and an understanding of the contexts and mechanisms that lead to these outcomes.

A novel feature of this research, used specifically to promote change orientation, end user engagement and knowledge transfer, is having a clinician with a senior leadership role in one of the regional DHB groupings occupy a dual service/researcher role.

It may be difficult to attribute variation in quality measure attainment, in particular health outcome measures, to DHB regional working.

Introduction

Achieving effective integration of healthcare across primary, secondary and tertiary care is a key goal of the New Zealand (NZ) Health Strategy.1 NZ’s four regional District Health Board (DHB) groupings (the DHB regions) are fundamental to delivering integration. They provide a platform to bring the country’s 20 DHBs together to plan, fund and deliver health services within four geographical regions.2

Predominantly publicly funded, NZ’s health system, like many others, is faced with growing pressure on health resources and challenges surrounding ongoing sustainability.3 While overall strategic direction and oversight is provided by a central Ministry of Health (MOH), responsibility for planning, funding and delivering healthcare services is devolved to 20 geographically defined DHBs charged with managing health services in their district. DHBs are expected to monitor the health needs of their populations and reduce health disparities, in particular among Māori and Pacific populations.4 In the main, hospital and specialist services are provided by DHB-owned and operated provider arms. In addition, 31 primary health organisations (PHOs) receive funding from DHBs and are responsible for providing primary health services to their enrolled populations.3

The DHB regions were established by the MOH in 2011 following the recommendations of a Ministerial Review Group and legislative change to promote integration and address health sector fragmentation, duplication and vulnerability.2 5 The country’s 20 DHBs were instructed to plan services and work together within one of four DHB regions. These were three North Island regions (Northern,6 Midland7 and Central8) and one South Island region,9 each region serving a population of between 850 000 and 1.76 million people. The regional services planning process was designed to reduce service vulnerability, reduce cost and improve quality of care in the four DHB regions.10

All four DHB regions have broadly similar operational objectives: healthcare integration; improving quality of regional services (eg, cancer services, major trauma services, health of older people); improving clinical information systems; and developing the health workforce. They differ, however, in the way they have chosen to work with their respective DHBs to achieve these objectives. The South Island regional grouping (known as the South Island Alliance: SIA) has chosen to use an alliancing approach to pursue transformational change within the complex health system comprising the five South Island DHBs.9 Derived from the construction industry, alliancing aims to more effectively deliver complex services by working collaboratively with common outcomes and shared accountability.11 12 The other three DHB regions have also taken collaborative approaches to achieve principle-based consensus decision-making: the Northern Regional Alliance uses elements of the alliancing approach and both Midlands Region and Central Region use separate company structures established for their regional work (HealthShare and Technical Advisory Services, respectively).

Currently there is no set of outcome measures that directly reflects the activities of the DHB regions. Consequently, we do not know if the four DHB regions have improved health service integration, the quality of regional services, health outcomes and health equity, particularly for Māori and Pacific peoples, as expected. Further, in spite of anecdotal feedback from the DHB regions that regional working is delivering regional benefits, the organisational features that explain this success are unclear. A review by the NZ Auditor General in 2013 indicated that while there were some signs of improved processes since the establishment of the DHB regions, there had not been the progress that was hoped for, and evidence of improved outcomes was largely lacking.10 Given that DHB regions were established as a key enabler of health service integration and sustainability, there is an important need to determine whether routinely collected healthcare quality measures can assess if the DHB regions deliver better outcomes against the NZ ‘Triple Aim’ of improving population health and equity, patient experience and value for money.13 14 Gaining an understanding of the regional context and mechanisms by which these outcomes are generated is also important to inform future regional DHB working strategies.15

Recent national NZ developments to measure and monitor health system performance include the Health Quality and Safety Commission’s (HQSC) national health quality and patient safety indicators, which have been gradually introduced over the last decade, and the MOH’s System Level Measures (SLM) introduced in 2016.16 The HQSC’s indicators include the Atlas of Healthcare Variation, and Quality and Safety markers, which show variations in selected quality measures across the country at a DHB level.17 The MOH’s SLMs are ‘whole-of-system’ measures intended to capture the combined contribution of different service providers across the spectrum of care to a desired performance outcome.16 The SLMs focus on children, youth and vulnerable populations and are supplemented with contributory measures chosen at DHB-PHO district alliance level.18 19 The DHB regions also have outcome measures they are tracking over time, such as the measures developed within the SIA’s outcomes framework,9 and the Trendly Monitoring and Reporting Tool that tracks Māori compared with non-Māori outcomes, developed within the Midlands Region.7 These measures, along with other indicator sources, such as the NZ Health Survey,20 provide potential quality measures to assess how differing models of regional DHB working may have impacted on improving healthcare quality, including health service integration, health outcomes and health equity. 
In common with other health systems, the NZ approach is to use a combination of healthcare process measures (which are more sensitive to differences in healthcare quality) and health outcome measures (which are of greater overall importance but which may be more difficult to attribute to the activities of health sector organisations).18 21 22 This approach to measuring the performance of the NZ health system is in line with international developments to promote accountable care, in which healthcare organisations are ‘held jointly accountable for achieving a set of outcomes for a defined population over a period of time and for an agreed cost.’23

Realist evaluation

Realist evaluation24 is a theory-driven evaluation approach25 used to understand how, for whom and in what circumstances an intervention ‘works’ to produce intended outcomes. Thus, its focus is not just on whether a programme such as an NZ regional DHB grouping ‘works’ but on how, for whom and in what contexts it ‘works’. Realist evaluation addresses these questions by developing context-mechanism-outcome (CMO) configurations. These configurations or explanatory pathways describe how various contexts (C) work with underlying mechanisms (M) to produce particular outcomes (O).24 An important objective is to identify those theories that describe the explanatory pathways. These working theories or hypotheses are termed a programme theory. They are first formulated as an initial programme theory (IPT), which is iteratively refined during the evaluation. The IPT is empirically tested with the evaluation data and a final programme theory is developed to explain how, in which conditions and for whom the intervention works.24 26

Guidance on the components of a realist evaluation is that it should have an explanatory focus, explore CMO configurations and use multiple/mixed methods of data collection.24 27 28

Study aims and objectives

Aims

Describe the structures, activities and strategies NZ’s regional DHB groupings use to improve service integration, how they use them, with whom, for whom and how effective these are in particular contexts.

Understand how regional DHB groupings work to improve population health outcomes and achieve health equity in their populations.

Determine the impact of the regional DHB groupings on service integration, health outcomes, health equity and costs.

Objectives

To develop an IPT of how regional DHB groupings work to improve service integration, health outcomes and health equity.

To iteratively refine the IPT through a series of case studies to produce a programme theory explaining how, for whom and in what circumstances regional DHB groupings improve service integration, health outcomes and health equity.

Methods and analysis

This research uses a mixed methods realist evaluation design. Realist evaluation24 is increasingly used to evaluate complex health system interventions15 both internationally26 27 and in NZ.29 We chose this evaluation approach because of its specific focus on exploring how, for whom and in what circumstances a complex health system intervention such as regional DHB groupings delivers defined outcomes. We have used the RAMESES II reporting standards for realist evaluations28 to structure our reporting of the study methods and analysis (see online supplementary file 1).

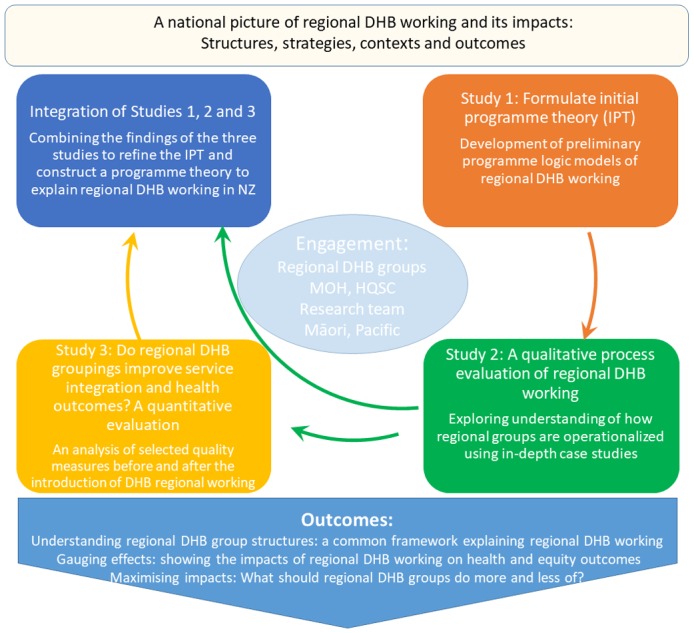

In order to evaluate the operation of regional DHB groupings and their impact on health system integration, health outcomes and equity, we will carry out three inter-related studies. First, drawing on the four regional case study sites, we will develop our IPT of how regional DHB groupings work. To do this we will develop preliminary programme logic models which link outcomes (O) with the context (C) and mechanisms (M) underpinning regional DHB working in each site (study 1). Second, informed by the preliminary programme logic models, we will gather qualitative data through interviews with key informants (providers and planners of healthcare) within each of the case study sites and other external key stakeholders to explore how regional DHB groupings work in practice (study 2). Third, building on studies 1 and 2, we will analyse routinely collected data to assess the impact of regional DHB groupings on health system integration, outcomes, equity and costs (study 3). Lastly, we will integrate the findings of studies 1–3 to refine our preliminary logic models and attendant CMO configurations. This will allow us to develop a programme theory of how regional DHB groupings work to improve service integration, health outcomes and health equity. Figure 1 presents the phases of the proposed evaluation. The duration of the research project is 24 months.

Figure 1.

Realist evaluation phases. DHB, District Health Board; HQSC, Health Quality and Safety Commission; MOH, Ministry of Health; NZ, New Zealand.

Study 1: Formulating IPT: developing programme logic models to describe regional DHB working (Aims 1 and 2, Objective 1)

We will formulate our IPT through the development of preliminary programme logic models of regional DHB working in each of the four regional DHB groupings. A programme logic model can be defined as a picture or visual representation of how an organisation such as a DHB regional grouping does its work.30 Programme logic models are associated with realist evaluation approaches24 and are widely used in evaluations of complex health system interventions as they link outcomes with programme activities/processes and the theoretical assumptions/principles of the programme.15 30

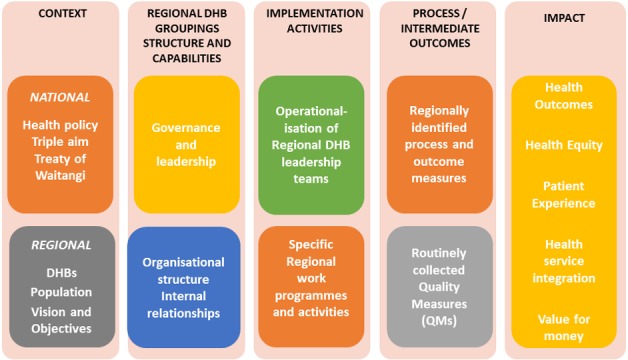

Programme logic model development, before embarking on studies 2 and 3, ensures that the process evaluation (study 2) and outcome evaluation (study 3) are informed by a clear description of how DHB regions are intended to operate, what their implementation activities/operational objectives are (eg, regional approach to low-volume, high-complexity services), what changes in healthcare processes are intended to occur (eg, reduced service vulnerability) and how they are expected to deliver the outcomes of the NZ ‘Triple Aim’.14 In order to better illustrate the utility of a logic model approach we have developed a draft preliminary model using recent regional DHB reports6–9 which will form the starting point for study 1 (figure 2). This draft model will be further developed and refined as described below.

Figure 2.

Draft preliminary logic model of regional District Health Board (DHB) working.

Design

Documentary analysis31 will be used to review publicly available central government, regional DHB and individual DHB documentation (eg, strategic plans, regional services plans and annual reports) as it relates to the current structure, capabilities and implementation activities of the four DHB regions. Members of the regional DHB governance groups and coordinating offices will be asked to advise whether the identified documentation best describes the organisational context and mechanisms for each DHB region and to identify and provide supplementary material as required. In terms of available quality measures, all DHB regions will be reporting on SLMs18 through their constituent DHBs. We will also determine what other quality measures are being reported and used at regional levels to assess performance across the domains of the NZ ‘Triple Aim’,14 such as the HQSC’s quality and safety indicators17 and any regionally developed outcome measures.

Data analysis

The draft programme logic model (figure 2) will be further developed to organise the findings of the documentary analysis into preliminary logic models that describe the current structure, capabilities, implementation activities (operational objectives, eg, healthcare integration) and impact (health outcomes; equity) of the four regional DHB groupings. These logic models will allow us to refine our IPT of how regional DHB groupings work.

Study 2: Qualitative process evaluation of regional DHB working: detailed case studies of all four DHB regions (Aims 1 and 2, Objective 2)

We will empirically test our IPT through an analysis of the implementation activities (operational objectives) and mechanisms by which these activities lead to defined health outcomes (impact) as established by our logic models of each regional DHB grouping (study 1 and figure 2). Our approach is informed by complex intervention methodology.15 For each of the four DHB regions we will use the approaches described below.

Study participants

We will conduct semistructured interviews with key informants (providers and planners of healthcare) from across each DHB region, and external key stakeholders. Interviewees will be sampled purposively in order to construct a maximum variation sample that will include members of regional DHB governance groups and coordinating offices, DHB senior leadership, Māori and Pacific members of DHB executives and Māori and Pacific funders and planners of services as relevant, and lead clinicians for regional workstreams. In addition, key personnel from other stakeholder organisations will be interviewed, including from the MOH and HQSC. We estimate that a maximum of 130 interviews in total (up to 30 per DHB region, plus up to 10 with MOH and HQSC) will be conducted.

Design

We will develop a topic guide to explore the operationalisation of regional DHB groupings as identified in the programme logic models (study 1). The interviews will be based on our IPT and will focus on the contexts, structures and strategies behind regional DHB groupings as well as experiences with regional DHB working. Areas explored will include: (A) the key areas of regional focus and the issues that informants consider have enabled progress within their DHB region towards regional DHB working; (B) barriers/challenges that have been encountered during the process and how these have been addressed; (C) how the DHB region is monitoring progress towards improved regional outcomes; and (D) to what extent the informants consider changes in health outcomes in their DHB region (and constituent DHBs) can be attributed to actions taken through a regional approach to service planning and delivery (structure/capability and implementation activities as per figure 2). The topic guide will be used flexibly to allow participants to construct their accounts in their own terms, and will be revised and refined throughout the interviewing process to reflect themes emerging from the concurrent data analysis. All interviews will be digitally recorded (with consent) and transcribed.

Data analysis

We will conduct a thematic analysis32 of interview data, informed by the Consolidated Framework for Implementation Research (CFIR).33 CFIR is an implementation science framework that is widely used internationally. It provides an overarching typology of implementation and allows researchers to identify the variables that are most relevant to a particular intervention or healthcare programme.33–36 Data obtained from the interviews will be used to update and further develop the IPT and preliminary programme logic models for each of the four regional DHB groupings developed in study 1.

Study 3: Do regional DHB groupings improve service integration and health outcomes? A quantitative analysis of the impact that DHB regional groups may have on service integration, health outcomes, health equity and costs (Aim 3, Objective 2)

In study 3 we will explore the strengths and weaknesses of routinely collected data in evaluating the outcomes of DHB regional work, and aim to propose a suite of quality measures that could reflect changes in outcomes related to DHB regional working. We use the term ‘quality measure’ here as defined by the US Centers for Medicare and Medicaid Services37: ‘quality measures are tools that help us measure or quantify healthcare processes, outcomes, patient perceptions, and organizational structure and/or systems that are associated with the ability to provide high-quality health care and/or that relate to one or more quality goals for health care.’ Healthcare quality measures, encompassing equity and outcome measures currently being used by the DHB regions as identified in study 2, will be considered alongside other routinely collected data as potential measures to capture the value of regional working. We see this as an exploratory analysis as we are aware that it may be difficult to attribute variation in quality measure attainment, in particular outcome measures,21 to DHB regional working. We note that this view is consistent with feedback received from DHB regions that the nature of their work (eg, regional workforce planning) may not be easily captured by existing healthcare quality measures.

We will conduct a before-and-after analysis of selected process and outcome measures (ie, quality measures) for all DHB regions. The selected quality measures will be limited to those with routinely collected data predating the introduction of the DHB regions, and will be tested as to their ability to assess the impact of regional DHB working (figure 2).

Selection of quality measures

Existing routinely collected quality measures (QMs) (2008–2017) used by DHB regions as identified in studies 1 and 2 will be assessed, alongside SLMs and HQSC’s quality and safety indicators17–19 and other potential indicators identified in study 1. There are currently six SLMs being collected17: acute hospital bed-days; childhood ambulatory sensitive hospitalisations; amenable mortality; babies living in smoke-free homes; patient experience of care; and youth access to and utilisation of youth-appropriate health services, although not all of these were collected prior to 2016. The HQSC’s Atlas of Healthcare Variation38 39 provides information on 18 domains across a broad health-related spectrum, with data going back to 2008 in some domains.

A structured consensus process40–43 involving the research team and representatives from the four DHB regions, MOH and HQSC will be used to select the QMs to analyse. Potential measures will be assessed in terms of their type (healthcare process or health outcome measures), evidence base and relevant QM dimensions. The QM dimensions have two separate aspects: first, the extent to which QM attainment can be attributed to regional DHB activities; second, their potential for (A) significant patient benefit—quality, safety and experience of care; (B) population benefit—improved health and equity; (C) health system benefit—efficiency, value for money; and (D) scope for improvement on current levels of attainment. Ten to 15 QMs will be selected for data analysis that meet these three criteria: (A) being available in 2008–2010 period, as well as 2015–2017; (B) being available at DHB level (to allow aggregation to regional level); and (C) being available by ethnicity.
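The three availability criteria (A) to (C) lend themselves to a simple screening step ahead of the consensus process. The following is a minimal sketch only: the measure names and their metadata are hypothetical, and it assumes that "available" means having data at the four analysis time points (2008, 2010, 2015 and 2017).

```python
from dataclasses import dataclass

# Assumption: a measure counts as "available" only if it has data at
# all four analysis time points.
REQUIRED_YEARS = frozenset({2008, 2010, 2015, 2017})


@dataclass(frozen=True)
class CandidateMeasure:
    name: str                    # hypothetical label, not a real QM
    years_available: frozenset   # years with routinely collected data
    dhb_level: bool              # reported for individual DHBs
    by_ethnicity: bool           # reported separately by ethnicity


def is_eligible(measure):
    """Apply availability criteria (A) to (C) to one candidate measure."""
    return (
        REQUIRED_YEARS <= measure.years_available  # (A) both periods covered
        and measure.dhb_level                      # (B) DHB-level data
        and measure.by_ethnicity                   # (C) ethnicity breakdown
    )


# Hypothetical candidates used purely for illustration.
candidates = [
    CandidateMeasure("hypothetical_measure_1", frozenset(range(2008, 2018)), True, True),
    CandidateMeasure("hypothetical_measure_2", frozenset(range(2016, 2018)), True, True),
    CandidateMeasure("hypothetical_measure_3", frozenset(range(2008, 2018)), False, True),
]

eligible = [m.name for m in candidates if is_eligible(m)]
print(eligible)
```

Such a screen would only narrow the candidate list; the judgements about attribution, benefit and scope for improvement remain with the consensus panel.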

Data collection

Changes in selected QMs will be evaluated using data collected at the DHB and region level by ethnicity at two time points before the roll-out of DHB regions across NZ (2008 and 2010) and two time points after their establishment (2015 and 2017).

Data analysis

The complex clustering of data with three levels (four longitudinal measurements [two before establishment and two after] within each of the 20 individual DHBs, which are in turn grouped within the four DHB regions, with four to six DHBs per region) presents challenges for statistical analyses using conventional parametric and non-parametric approaches.

To address these challenges, permutation tests will be used to compare changes from before (using 2008 and 2010 values) with after (using 2015 and 2017 values) establishment, both within DHB regions and for all DHB regions together, with appropriate test statistics based on mean changes for scores following establishment. This will be done separately for each QM. This approach will also allow comparisons of differences in changes between DHB regions (to explore associations between any such differences in changes and the information gathered in study 2). The permutations will be restricted to reflect the hierarchy described above (eg, to assess overall changes within a DHB region following establishment, before and after values for each QM will be permuted within the individual DHBs to allow calculating permuted change scores, and when comparing changes between DHB regions, individual DHBs will be permuted between DHB regions).
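As an illustration of the within-DHB restriction, the sketch below runs a Monte Carlo permutation test for one QM in one hypothetical DHB region. It is a simplified example under stated assumptions, not the study's analysis code: the DHB labels and values are invented, the change score is taken as the mean of the two "after" values minus the mean of the two "before" values, and the function names are our own.

```python
import random
from statistics import mean


def change_score(values):
    """Mean of the two 'after' values minus the mean of the two 'before' values."""
    before, after = values[:2], values[2:]
    return mean(after) - mean(before)


def within_dhb_permutation_test(dhb_values, n_perm=2000, seed=0):
    """Two-sided Monte Carlo permutation test of the mean change across DHBs.

    dhb_values maps a DHB label to four values ordered
    [2008, 2010, 2015, 2017]. Values are shuffled only within each DHB,
    so each DHB acts as its own control.
    """
    rng = random.Random(seed)
    observed = mean(change_score(v) for v in dhb_values.values())
    extreme = 0
    for _ in range(n_perm):
        permuted_changes = []
        for values in dhb_values.values():
            shuffled = list(values)
            rng.shuffle(shuffled)  # permute time labels within this DHB only
            permuted_changes.append(change_score(shuffled))
        # Small tolerance guards against floating-point ties.
        if abs(mean(permuted_changes)) >= abs(observed) - 1e-12:
            extreme += 1
    # Add-one correction keeps the estimated p value strictly above zero.
    p_value = (extreme + 1) / (n_perm + 1)
    return observed, p_value


# Synthetic values for a hypothetical five-DHB region.
region = {
    "DHB_A": [10.0, 11.0, 14.0, 15.0],
    "DHB_B": [8.0, 9.0, 12.0, 12.5],
    "DHB_C": [12.0, 12.5, 15.0, 16.0],
    "DHB_D": [9.0, 10.0, 13.0, 13.0],
    "DHB_E": [11.0, 10.0, 14.0, 15.5],
}

observed, p_value = within_dhb_permutation_test(region)
print(f"mean change = {observed:.2f}, permutation p = {p_value:.4f}")
```

Comparing changes between DHB regions would use the other restriction described above, reassigning whole DHBs (with their change scores intact) to regions rather than shuffling values within a DHB.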

This approach has been piloted on synthetic data and will be able to assess evidence of changes over time with the key outcome being changes following the establishment of the DHB regions. Differences in DHB populations will be respected by this approach (as data will remain clustered within DHBs, so each DHB acts as its own control), although changes in DHB populations over time will not be modelled beyond the definitions of the QMs themselves (eg, a QM that directly measured changes for Māori would remain valid under changes in the ethnic composition within DHBs). The Holm-Bonferroni method will be used to control the familywise error rate irrespective of the number of QMs investigated and statistical significance will be determined by two-sided p<0.05.
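The Holm-Bonferroni step-down procedure can be sketched as follows. This is an illustrative implementation only: the QM labels and p values are hypothetical, and the function name is our own.

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Return a dict of QM label -> True if its null hypothesis is rejected.

    p_values maps a QM label to its (unadjusted) two-sided p value.
    """
    ordered = sorted(p_values, key=p_values.get)  # labels by ascending p value
    m = len(ordered)
    rejected = {label: False for label in p_values}
    for rank, label in enumerate(ordered):
        # The k-th smallest p value is compared with alpha / (m - k + 1).
        if p_values[label] <= alpha / (m - rank):
            rejected[label] = True
        else:
            break  # step-down: once one test fails, all larger p values fail
    return rejected


# Hypothetical p values, one per QM, for illustration only.
example_p = {"QM_1": 0.001, "QM_2": 0.04, "QM_3": 0.03, "QM_4": 0.2}
decisions = holm_bonferroni(example_p)
print(decisions)
```

In this example only QM_1 is rejected: its p value falls below 0.05/4, whereas the next smallest (0.03) exceeds 0.05/3, so the procedure stops there.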

Exploratory health economic analysis

The exploratory health economic analysis will be conducted using the QMs identified in study 3 as having the ability to detect changes attributable to regional DHB working. The identified QMs that capture direct costs to DHBs (eg, bed-days), or can be used to estimate expenditure, will be compared over time for each region. Where possible, we will aim to evaluate measures that assess all dimensions of quality (eg, patient experience, improved health, equity and value for money).

As a result of the analysis, QMs that indicate an ability to detect change that could be attributed to regional DHB working will be identified.

Integration of the findings of studies 1, 2 and 3: a programme theory of regional DHB working (Objective 2)

This research has been structured using an iterative, realist evaluation approach, which recognises the complex, context-rich environments in which regional DHB groupings operate (figure 1). Through using this iterative approach, we will be able to build an in-depth understanding of how regional DHB groupings work in four different contexts, allowing us to articulate the structures and strategies that support the four regional DHB groupings, the mechanisms and activities they use to drive improvements, the barriers and challenges to regional DHB working (studies 1 and 2) and their impacts across a range of outcomes (study 3). By combining the findings of these three studies, we will be able to refine our IPT and construct a programme theory which will explain regional DHB working in NZ. We intend to present this as an overarching framework that will visually articulate key structural mechanisms and contextual features which will show how regional DHB groupings achieve their stated health system goals. It will explain how regional DHB groupings can enhance their impact by identifying structural changes and strategies that they should do more and/or less of to maximise health system integration, population health outcomes and equity.

Patient and public involvement

We have not directly involved patients or members of the general public in the design or conduct of this study. Māori are the indigenous population of NZ and experience health inequities across the majority of health outcomes.44 The NZ government has obligations under the Treaty of Waitangi to improve Māori health and reduce inequities45 and these are embedded in the NZ health and disability system’s statutory framework.4 Accordingly, we have adopted an equity-based approach to ensure Māori involvement in the design and conduct of this study.45 46 This has resulted in our project aiming to examine how, for whom and in what circumstances the regional DHB groupings work to improve health service integration and healthcare quality, health outcomes and health equity specifically for Māori. This equity-based approach also guides our partnership with Pacific providers and communities.

Ethics and dissemination

Ethical approval

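The University of Otago Human Ethics Committee has approved this study (D18/393, D18/394 and HD18/100). The study commenced in October 2018 and is planned to continue until September 2020.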

Dissemination of findings

A novel feature of this research, used specifically to promote change orientation, end user engagement and knowledge transfer, is to have a clinician (general practitioner) with a senior leadership role in one of the regional DHB groupings (SIA) occupy a dual service/researcher role (CA). The embedding of a clinician researcher within a key health service organisation allows full coproduction of this health delivery research: the research has been jointly conceived and designed and will be jointly evaluated and disseminated by researchers and practitioners.47 Regular information sharing with and between the four DHB regions during the project will allow emerging themes to be tested by the DHB regional teams as the research progresses. Learnings and improvements in service delivery approaches will be able to be instituted when the information becomes available, rather than after the full research cycle has been completed. Such an approach will make policy and practice-relevant research immediately available to end users, accelerating the use of evidence in decision-making of health and other services.47 The uptake of our research findings by other key groups including policymakers, Māori providers and communities and Pacific providers and communities will be supported through key strategic relationships and dissemination activities throughout the course of the research. Academic dissemination will occur through publication and conference presentations.

Discussion

NZ’s four regional DHB groupings were established in 2011 and are fundamental to delivering health system integration as they plan, fund and deliver health services in their defined geographical regions.2 We do not, however, know if they have delivered the anticipated improved healthcare quality, health outcomes and health equity, particularly for Māori and for Pacific peoples. In this paper we have presented a study protocol for a mixed methods realist evaluation of these regional DHB groupings. We anticipate that, through our chosen realist evaluation methodology, we will be able to build an overarching framework which will explain regional DHB working in NZ. This framework will visually articulate key structural mechanisms and contextual features which will show how regional DHB groupings achieve their stated health system goals.

Acknowledgments

The study sponsor is the University of Otago, Dunedin, Otago, NZ. We also thank Peter Jones of the NZ MOH and Richard Hamblin of the HQSC for agreeing to act as expert advisors.

Footnotes

Contributors: CA, RG and TSt jointly conceived the study. TSt, CA, RG, EP, LR, ARG, FD-N, EW, RR and TSu developed the study protocol. TSt led the writing of the manuscript with input from EP and LR. ARG wrote the statistical methods section and TSu the economic evaluation component for study 3. All authors reviewed and critiqued the manuscript and approved the final published version.

Funding: This study is funded by the New Zealand Health Research Council (HRC Project Grant 18/138).

Competing interests: None declared.

Ethics approval: University of Otago Human Ethics Committee (D18/393, D18/394 and HD18/100).

Provenance and peer review: Not commissioned; peer reviewed for ethical and funding approval prior to submission.

Patient consent for publication: Not required.

References

- 1. Ministry of Health. Better, Sooner, More Convenient Health Care in the Community. Wellington: Ministry of Health, 2011.

- 2. Ministry of Health. Regional Services Planning: How district health boards are working together to deliver better health services. Wellington: Ministry of Health, 2012.

- 3. Gauld R. The New Zealand Health Care System. 2015. Available: http://international.commonwealthfund.org/countries/new_zealand/

- 4. NZ Government. The New Zealand Health and Disability System: Handbook of Organisations and Responsibilities. Wellington, 2017.

- 5. Ministry of Health. Improving the Health System: Legislative Amendments to Support District Health Board Collaboration. 2010. Available: https://www.health.govt.nz/about-ministry/legislation-and-regulation/regulatory-impact-statements/improving-health-system-legislative-amendments-support-district-health-board-collaboration

- 6. Northern Regional Alliance. Northern Region Health Plan 2016/17. Auckland: Northern Regional Alliance, 2016.

- 7. Midland District Health Boards. Regional Service Plan Initiatives and Activities 2016-2019. Hamilton: Midland District Health Boards, 2016.

- 8. Central Region District Health Boards. Central Region Regional Service Plan 2014-2017. Wellington: Central TAS, 2014.

- 9. South Island Alliance. Te Wai Pounamu South Island Health Service Plan 2016-2019. Christchurch, NZ: South Island Alliance, 2016.

- 10. Office of the Auditor-General. Regional Services Planning in the Health Sector. Wellington: Office of the Auditor-General, 2013.

- 11. Timmins N, Ham C. The quest for integrated health and social care. A case study in Canterbury, New Zealand. London, 2013.

- 12. Gauld R. What should governance for integrated care look like? New Zealand’s alliances provide some pointers. Med J Aust 2014;201(3):67–8. doi:10.5694/mja14.00658

- 13. Berwick DM, Nolan TW, Whittington J. The triple aim: care, health, and cost. Health Aff 2008;27:759–69. doi:10.1377/hlthaff.27.3.759

- 14. Shuker C, Bohm G, Bramley D, et al. The Health Quality and Safety Commission: making good health care better. N Z Med J 2015;128:97–109.

- 15. Moore GF, Audrey S, Barker M, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ 2015;350:h1258. doi:10.1136/bmj.h1258

- 16. Ministry of Health. System Level Measures Framework. Wellington: Ministry of Health, 2017. Available: http://www.health.govt.nz/new-zealand-health-system/system-level-measures-framework

- 17. Health Quality Measures. Health Quality Measures NZ: the sector wide library of measures used in the NZ Health System. 2017. Available: https://www.hqmnz.org.nz/library/Health_Quality_Measures_NZ

- 18. Chalmers LM, Ashton T, Tenbensel T. Measuring and managing health system performance: an update from New Zealand. Health Policy 2017;121:831–5. doi:10.1016/j.healthpol.2017.05.012

- 19. Doolan-Noble F, Lyndon M, Hau S, et al. How well does your healthcare system perform? Tracking progress toward the triple aim using system level measures. N Z Med J 2015;128:44–50.

- 20. Ministry of Health. Indicator Interpretation Guide 2015/16: New Zealand Health Survey. Wellington: Ministry of Health, 2016.

- 21. Mant J. Process versus outcome indicators in the assessment of quality of health care. Int J Qual Health Care 2001;13:475–80. doi:10.1093/intqhc/13.6.475

- 22. Gauld R. Public Hospital Governance in New Zealand: A Case Study on the New Zealand District Health Board. In: Huntington D, Hort K, eds. Public Hospital Governance in Asia and the Pacific. Geneva: World Health Organisation, 2015.

- 23. McClellan M, Udayakumar K, Thoumi A, et al. Improving care and lowering costs: evidence and lessons from a global analysis of accountable care reforms. Health Aff 2017;36:1920–7. doi:10.1377/hlthaff.2017.0535

- 24. Pawson R, Tilley N. Realistic Evaluation. London: SAGE, 1997.

- 25. Weiss CH. Theory-based evaluation: past, present, and future. New Dir Eval 1997;1997:41–55. doi:10.1002/ev.1086

- 26. Marchal B, van Belle S, van Olmen J, et al. Is realist evaluation keeping its promise? A review of published empirical studies in the field of health systems research. Evaluation 2012;18:192–212. doi:10.1177/1356389012442444

- 27. Salter KL, Kothari A. Using realist evaluation to open the black box of knowledge translation: a state-of-the-art review. Implement Sci 2014;9:115. doi:10.1186/s13012-014-0115-y

- 28. Wong G, Westhorp G, Manzano A, et al. RAMESES II reporting standards for realist evaluations. BMC Med 2016;14:96. doi:10.1186/s12916-016-0643-1

- 29. Naccarella L, Greenstock L, Wraight B. An evaluation of New Zealand’s iterative Workforce Service Reviews: a new way of thinking about health workforce planning. Aust Health Rev 2013;37:251–5. doi:10.1071/AH12183

- 30. W.K. Kellogg Foundation. Logic Model Development Guide. Michigan: W.K. Kellogg Foundation, 2004.

- 31. Bowen GA. Document analysis as a qualitative research method. Qual Res 2009;9:27–40. doi:10.3316/QRJ0902027

- 32. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol 2006;3:77–101. doi:10.1191/1478088706qp063oa

- 33. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci 2009;4:50. doi:10.1186/1748-5908-4-50

- 34. Kirk MA, Kelley C, Yankey N, et al. A systematic review of the use of the Consolidated Framework for Implementation Research. Implement Sci 2016;11:72. doi:10.1186/s13012-016-0437-z

- 35. Lovelock K, Martin G, Gauld R, et al. Better, Sooner, More Convenient? The reality of pursuing greater integration between primary and secondary healthcare providers in New Zealand. SAGE Open Med 2017;5:205031211770105. doi:10.1177/2050312117701052

- 36. Stokes T, Tumilty E, Doolan-Noble F, et al. HealthPathways implementation in a New Zealand health region: a qualitative study using the Consolidated Framework for Implementation Research. BMJ Open 2018;8:e025094. doi:10.1136/bmjopen-2018-025094

- 37. Centers for Medicare and Medicaid Services. Quality Measures: Centers for Medicare and Medicaid Services. 2017. Available: https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/QualityMeasures/index.html

- 38. Hamblin R, Bohm G, Gerard C, et al. The measurement of New Zealand health care. N Z Med J 2015;128:50–64.

- 39. Health Care Quality and Safety Commission New Zealand. Atlas of Healthcare Variation. Wellington: HQSC, 2017.

- 40. Stelfox HT, Straus SE. Measuring quality of care: considering conceptual approaches to quality indicator development and evaluation. J Clin Epidemiol 2013;66:1328–37. doi:10.1016/j.jclinepi.2013.05.017

- 41. Stelfox HT, Straus SE. Measuring quality of care: considering measurement frameworks and needs assessment to guide quality indicator development. J Clin Epidemiol 2013;66:1320–7. doi:10.1016/j.jclinepi.2013.05.018

- 42. Rushforth B, Stokes T, Andrews E, et al. Developing 'high impact' guideline-based quality indicators for UK primary care: a multi-stage consensus process. BMC Fam Pract 2015;16:156. doi:10.1186/s12875-015-0350-6

- 43. Willis TA, West R, Rushforth B, et al. Variations in achievement of evidence-based, high-impact quality indicators in general practice: An observational study. PLoS One 2017;12:e0177949. doi:10.1371/journal.pone.0177949

- 44. Reid P, Robson B. Understanding health inequities. In: Robson B, Harris R, eds. Hauora: Māori Standards of Health IV. A study of the years 2000-2005. Wellington: Te Rōpū Rangahau Hauora a Eru Pōmare, 2007.

- 45. Reid P, Paine SJ, Curtis E, et al. Achieving health equity in Aotearoa: strengthening responsiveness to Māori in health research. N Z Med J 2017;130:96–103.

- 46. Wyeth EH, Derrett S, Hokowhitu B, et al. Rangatiratanga and Oritetanga: responses to the Treaty of Waitangi in a New Zealand study. Ethn Health 2010;15:303–16. doi:10.1080/13557851003721194

- 47. Wolfenden L, Yoong SL, Williams CM, et al. Embedding researchers in health service organizations improves research translation and health service performance: the Australian Hunter New England Population Health example. J Clin Epidemiol 2017;85:3–11. doi:10.1016/j.jclinepi.2017.03.007

Supplementary Materials

bmjopen-2019-030076supp001.pdf (305.8KB, pdf)