SUMMARY

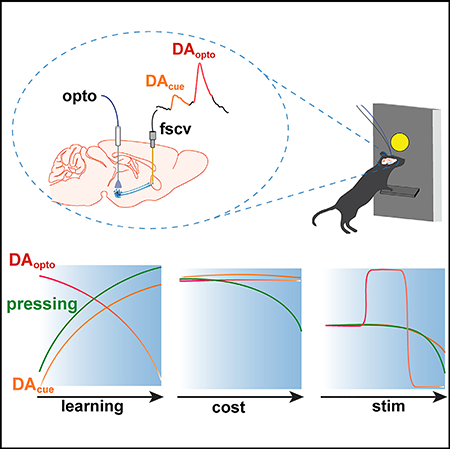

Dopamine (DA) transmission in the nucleus accumbens (NAc) facilitates cue-reward associations and appetitive action. Reward-related accumbal DA release dynamics are traditionally ascribed to ventral tegmental area (VTA) DA neurons. Activation of VTA to NAc DA signaling is thought to reinforce action and transfer reward-related information to predictive cues, allowing cues to guide behavior and elicit dopaminergic activity. Here, we use optogenetics to control DA neuron activity and voltammetry to simultaneously record accumbal DA release in order to quantify how reinforcer-evoked dopaminergic activity shapes conditioned mesolimbic DA transmission. We find that cues predicting access to DA neuron self-stimulation elicit conditioned responding and NAc DA release. However, cue-evoked DA release does not reflect the cost or magnitude of DA neuron activation. Accordingly, conditioned accumbal DA release selectively tracks the expected availability of DA-neuron-mediated reinforcement. This work provides insight into how mesolimbic DA transmission drives and encodes appetitive action.

In Brief

To understand how mesolimbic dopamine signaling drives and encodes reward learning and seeking, Covey and Cheer recorded nucleus accumbens dopamine release while mice performed optogenetic self-stimulation of dopamine neurons. Cues that motivated self-stimulation elicited dopamine release, which reflected the availability, but not the expected cost or magnitude, of dopamine neuron activation.

Graphical Abstract

INTRODUCTION

The efficient pursuit of rewards is crucial for survival and relies on environmental cues directing and energizing goal-oriented behavior. Mesolimbic dopamine (DA) neuron projections from the ventral tegmental area (VTA) to the nucleus accumbens (NAc) contribute to the selection and invigoration of appetitive behaviors driven by outcome-predictive cues (Berridge, 2007; McClure et al., 2003; Nicola, 2010; Salamone and Correa, 2012). This is supported by evidence that VTA DA neuron firing and NAc DA release phasically increase following better than expected events and, as learning proceeds, the DA signal transfers along with action initiation to cues predicting access to the instigating stimulus (Cohen et al., 2012; Day et al., 2007; Flagel et al., 2011; Schultz et al., 1997). Phasic DA transmission is proposed to signal the utility of goal-directed action by scaling in magnitude according to reward value, such that larger or more probable rewards evoke greater dopaminergic activity that is subsequently reflected in the cue-evoked DA response (McClure et al., 2003; Schultz et al., 2017). Thus, phasic activation of mesolimbic DA neurons is thought to reinforce appetitive action, signal value, and transfer this information to antecedent cues. Accordingly, the ability to elicit dopaminergic activity may allow rewards such as food or addictive drugs to function as goals and for cues to drive goal seeking (Covey et al., 2014; Hyman et al., 2006; Keiflin and Janak, 2015; Redish, 2004).

Recent work supports this notion, demonstrating that DA neuron manipulations at the time of reward retrieval sufficiently modify cue-directed reward seeking (Chang et al., 2016; Eshel et al., 2015; Sharpe et al., 2017; Steinberg et al., 2013), indicating that the degree to which DA neurons are phasically excited (or inhibited) proportionally endows predictive cues with an expectation of reward value. However, the degree to which cue-evoked mesolimbic DA signaling and behavior reflect reward-evoked DA release is poorly understood. Here, we used optogenetics to control DA neuron function and behavior while simultaneously monitoring its effect on NAc DA release using voltammetry in mice performing intracranial self-stimulation (ICSS) for optogenetic excitation of VTA DA neurons. This approach allowed us to precisely quantify how NAc DA release tracks DA-neuron-mediated reinforcement and identify what information about phasic DA neuron activation is incorporated into the cue-evoked DA response and behavior. We found that cues predicting access to optical ICSS elicited NAc DA release as mice learned the cue-reinforcer contingency and declined when the reinforcer was withheld, indicating that VTA DA neuron activation sufficiently endows cues with conditioned reinforcing properties. However, cue-evoked NAc DA release was not modified by the magnitude or cost of DA neuron activation. Thus, NAc DA release tracks predicted DA-neuron-mediated reinforcement but does not necessarily incorporate information about the expected utility or magnitude of DA neuron activation.

RESULTS

Controlling and Monitoring DA Function and Behavior

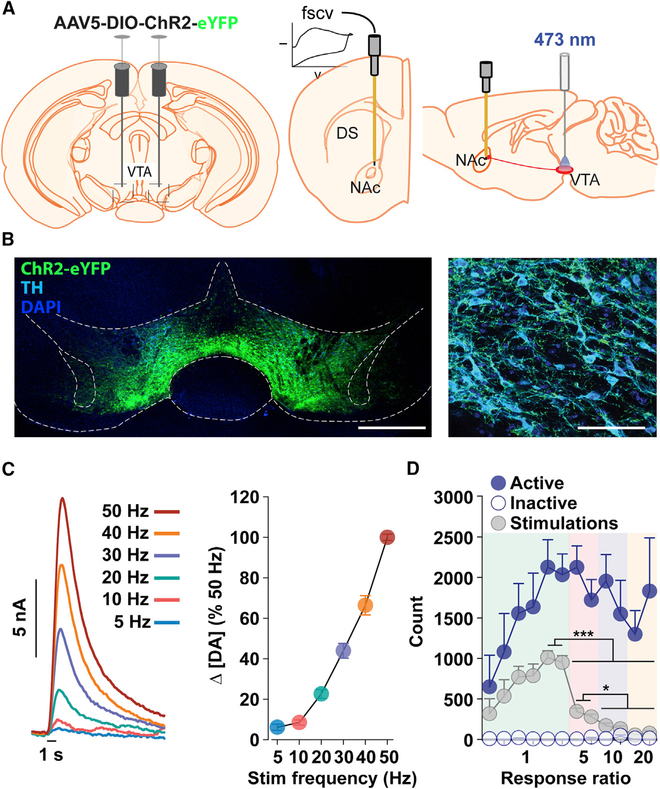

An adeno-associated virus (AAV) vector was used to express the excitable opsin ChR2 in VTA DA neurons of DAT::Cre+/− mice, an optical fiber was placed above the injection site to conduct light stimulation, and a carbon fiber microelectrode was implanted in the NAc to record DA concentration changes using fast-scan cyclic voltammetry (FSCV) (Figures 1A–1C). Laser stimulation (473 nm, 1 s, 30 Hz) maintained robust ICSS on a fixed ratio (FR) 1 continuous reinforcement (CRF) schedule (Figure 1D). Mice continued to seek self-stimulation at high rates despite an increase in response requirement (lever presses per stimulation) and a decrease in stimulations earned. To assess whether DA release accompanies behavior driven by activation of DA neurons, we monitored NAc DA release during ICSS on an FR1 CRF schedule (n = 20 sessions, 9 mice). Behavioral and FSCV measures from representative mice are shown in Figures 2A–2D and S1.

Figure 1. Controlling and Monitoring DA Function.

(A) Schematic showing viral transduction of VTA DA neurons (left), FSCV electrode placement in the NAc (middle), and optical fiber placement in the VTA (right).

(B) Confocal images showing staining for ChR2-eYFP, anti-tyrosine hydroxylase (TH), and anti-DAPI in the VTA. Scale bars represent 500 μm (left) and 50 μm (right).

(C) Frequency-dependent DA release during optical stimulation in awake mice (n = 4). Mean DA concentration change (Δ[DA]) during each stimulation (left) and mean (± SEM) maximal Δ[DA] relative to the 50 Hz stimulation (right). Stimulation frequency increased while duration remained constant at 1 s.

(D) Acquisition and expression of VTA DA neuron intracranial self-stimulation (ICSS). As the response ratio increases, mean (± SEM) total number of lever presses does not significantly change (sessions 5–12, FR5-FR20; one-way RM ANOVA: F(7,35) = 1.92, p = 0.10), while the mean (± SEM) number of stimulations earned decreases (one-way RM ANOVA: F(7,35) = 148.0, p < 0.001; ***p < 0.001, *p < 0.05) across consecutive 30-min ICSS sessions and increasing response ratios (n = 6).

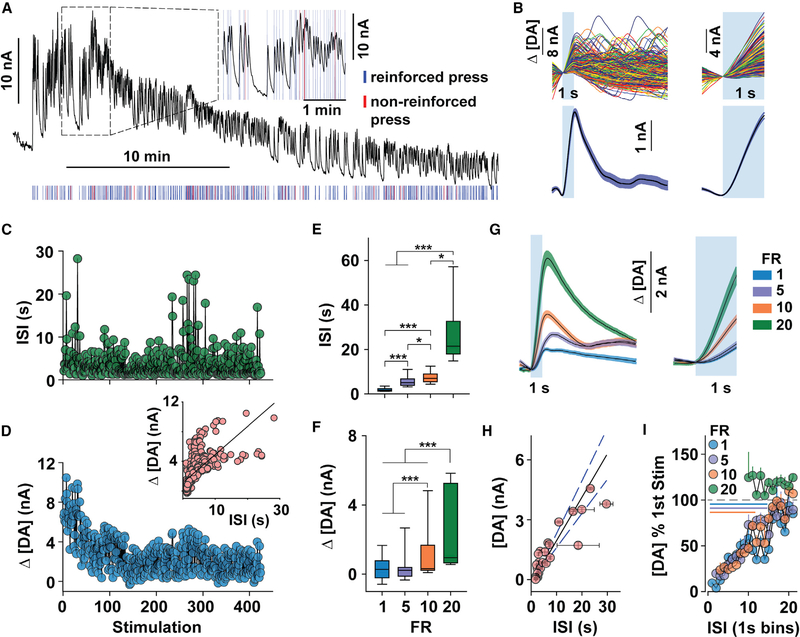

Figure 2. NAc DA Release during Optogenetic ICSS.

(A-D) Representative measures from a 30-min, FR1 ICSS session.

(A) The FSCV trace (black) fluctuated during reinforced presses (30 Hz, 1 s; blue tic marks). Red tic marks indicate active presses during an ongoing stimulation (non-reinforced).

(B) Individual (top) and mean (± SEM; bottom) Δ[DA] during each 10-s (bottom left) and 1-s (bottom right) period time-locked to laser stimulation (blue box).

(C and D) Inter-stimulation interval (ISI) (C) and peak Δ[DA] (D) during each stimulation (inset: correlation between ISI and peak Δ[DA]; R2 = 0.63, p < 0.001).

(E and F) Median (±interquartile range and 5th–95th percentile) ISI (E) and Δ[DA] (F) across increasing fixed ratios (FR; Kruskal-Wallis test: Δ[DA], H = 317, df = 3, p < 0.001; ISI, H = 3,474, df = 3, p < 0.001). ***p < 0.001; *p < 0.05.

(G) Mean (± SEM) Δ[DA] during the 10-s (left) and 1-s (right) period following stimulation across all mice and FR requirements.

(H) Correlation between mean (± SEM) Δ[DA] and ISI from each ICSS session (R2 = 0.90, p < 0.001).

(I) Mean (+ SEM) Δ[DA] as a percentage of the first stimulation of the session, separated into 1-s ISI bins for each FR requirement. Lines indicate bins that are significantly different than the first stimulated [DA] of the ICSS session; paired t tests with a Bonferroni correction were used to adjust for multiple comparisons. See also Figures S1–S5.

Initiation of lever pressing evoked an immediate increase in the FSCV signal that diminished (Figure 2A) or plateaued (Figure S1) as responding continued. We compared the amplitude of individual optically evoked DA release events (Figures 2B, S1B, and S2) to the inter-stimulation interval (ISI; Figure 2C) across the session and found that ICSS-evoked DA release (Figure 2D) covaried with the ISI (Figure 2D, inset), such that less DA release was associated with more vigorous responding (i.e., lower ISI). To assess whether changes in response vigor or time between stimulations differentially associated with DA release, a subset of mice (n = 4) were exposed to increasing fixed ratio requirements during separate ICSS sessions, which necessarily increased the ISI (Figure 2E) without significantly altering the response rate (Figure 1D). The evoked DA concentration also increased along with the response requirement (Figures 2F, 2G, and S3–S5) and was highly correlated with the ISI across all FR schedules (Figure 2H), indicating that the time between consecutive stimulations, rather than effortful investment, restrains optically evoked NAc DA release. By comparing the amplitude of the evoked DA signal to the first stimulation of the session, we identified 15 s as a minimal interval for obtaining consistent release events (Figure 2I).
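The ISI comparison described above can be illustrated with a minimal sketch. It assumes that stimulation onset times and one peak Δ[DA] value per stimulation are already available as arrays; the function names and the example numbers are hypothetical and are not taken from the recorded data or from the analysis software used in this study.

```python
import numpy as np

def inter_stimulation_intervals(stim_times_s):
    """Time (s) elapsed since the previous stimulation, for every stimulation after the first."""
    stim_times_s = np.asarray(stim_times_s, dtype=float)
    return np.diff(stim_times_s)

def r_squared(x, y):
    """Squared Pearson correlation between two equal-length sequences."""
    r = np.corrcoef(np.asarray(x, dtype=float), np.asarray(y, dtype=float))[0, 1]
    return float(r ** 2)

# Hypothetical example values (arbitrary units), not recorded data.
stim_times = [0.0, 2.0, 5.0, 9.0, 14.0, 20.0]    # stimulation onsets (s)
peak_da = [1.00, 0.30, 0.42, 0.55, 0.63, 0.78]   # peak signal per stimulation

isi = inter_stimulation_intervals(stim_times)
# Pair each ISI with the stimulation that ends it; the first stimulation has no ISI.
r2 = r_squared(isi, peak_da[1:])
```

In this toy example, longer intervals are paired with larger evoked peaks, which is the direction of the relationship reported in Figures 2D and 2H.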

DA Release during ICSS Seeking

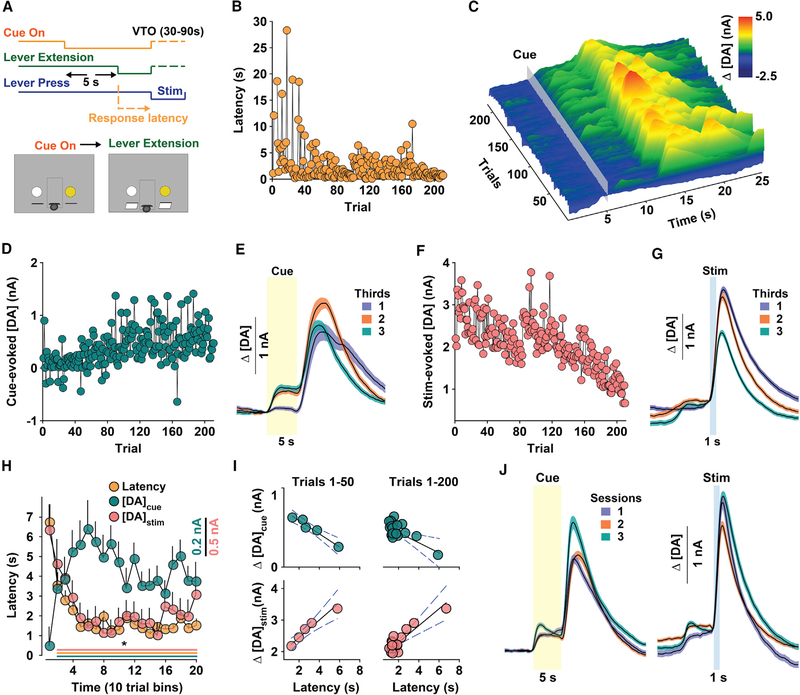

To assess whether phasically activating DA neurons is indeed sufficient to promote conditioned, appetitive encoding of incentive cues, mice were trained to seek optogenetic ICSS during 120-min sessions on a variable timeout (VTO; 30–90 s) schedule in response to a cue indicating forthcoming access to laser stimulation (Figure 3A) (Oleson et al., 2012; Owesson-White et al., 2008). The latency to press the active lever following cue presentation serves as a proxy of the cue’s learned association with forthcoming reinforcement. Behavior and DA measures from a representative session are shown in Figures 3B–3G. Latency to respond decreased over time and reached an asymptote at a low level during later trials (Figure 3B). DA concentration changes plotted relative to cue onset and across consecutive trials (Figure 3C) were inversely associated with latency, such that the peak DA concentration during cue presentation (5–10 s) gradually increased and persisted at a higher level during later trials (Figures 3D and 3E). In contrast, and despite constant stimulation parameters and a sufficient ISI for evoking a consistent DA signal (Figure 2I), stimulated release progressively declined during the session (Figures 3F and 3G). On average, cue-evoked and stimulation-evoked DA release tracked behavior in the opposite direction; more vigorous responding (i.e., lower response latency) was associated with increased and decreased DA release to the cue and stimulation, respectively (Figure 3H). The relationship between behavior and NAc DA release was clearest during the first 50 trials, before latency reached an asymptote (i.e., during learning), and persisted across the first 200 trials (Figure 3I). DA release to the cue and stimulation were stable across 3 consecutive sessions (Figure 3J), indicating that changes in the DA response over time are not likely due to declining electrode performance. 
Collectively, these findings show that phasic activation of VTA DA neurons sufficiently supports dopaminergic encoding of predictive cues.
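As a rough illustration of the trial-binned comparison between latency and DA release, the sketch below averages per-trial values into 10-trial bins and correlates the binned means. The helper names and the simulated learning curves are assumptions made for illustration only; they do not reproduce the recorded data.

```python
import numpy as np

def bin_means(values, bin_size=10):
    """Mean of consecutive, non-overlapping bins; trailing trials that do not fill a bin are dropped."""
    values = np.asarray(values, dtype=float)
    n_bins = len(values) // bin_size
    return values[: n_bins * bin_size].reshape(n_bins, bin_size).mean(axis=1)

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    return float(np.corrcoef(x, y)[0, 1])

# Simulated (hypothetical) session: latency falls while the cue response rises
# and the stimulation response falls across the first 50 trials.
trials = np.arange(50)
decay = np.exp(-trials / 15.0)
latency = 10.0 * decay + 1.0   # s
da_cue = 1.0 - decay           # arbitrary units
da_stim = 0.5 + decay          # arbitrary units

lat_b = bin_means(latency)
r_cue = pearson_r(lat_b, bin_means(da_cue))    # negative: shorter latency, larger cue response
r_stim = pearson_r(lat_b, bin_means(da_stim))  # positive: shorter latency, smaller stim response
```

The opposite signs of the two coefficients mirror the opposing relationships shown in Figure 3I.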

Figure 3. NAc DA Release Tracks ICSS Seeking.

(A) Schematic of behavioral sequence.

(B-G) Representative metrics from the same session. Consecutive response latencies (B) and Δ[DA] (z axis) time-locked to cue-onset (gray box) over time (x axis) and across trials (y axis) (C), peak Δ[DA] during the cue (Δ[DA]cue) (D), mean (± SEM) Δ[DA]cue (yellow box) divided into thirds of the session (E), peak Δ[DA] during stimulation (Δ[DA]stim) (F), and mean (± SEM) Δ[DA]stim (blue box) divided into thirds of the entire session (G).

(H) Mean (± SEM) latency and Δ[DA]cue and Δ[DA]stim during the first 200 trials across all mice (n = 9). Scale bars represent 0.2 nA for Δ[DA]cue and 0.5 nA for Δ[DA]stim. One-way RM ANOVA: latency, F(19,1470) = 13.58, p < 0.001; Δ[DA]cue, F(19,1563) = 3.58, p < 0.001; Δ[DA]stim, F(19,1470) = 12.56, p < 0.001; *p < 0.05 versus the first bin. Significant differences at each time point are indicated by a line (bottom) color-coded according to the symbol for each metric.

(I) Relationship between mean latency (x axis) during the first 50 trials (left) and Δ[DA]cue (top; R2 = −0.98; p < 0.001) and Δ[DA]stim (bottom; R2 = 0.98; p < 0.001) across the first 200 trials (top, right: Δ[DA]cue, R2 = −0.70; p < 0.001; bottom, right: Δ[DA]stim, R2 = 0.89; p < 0.001). Each circle represents the mean from 10 trials.

(J) Mean (± SEM) Δ[DA]cue (left; yellow, transparent box) and Δ[DA]stim (right; blue, transparent box) over 3 consecutive sessions (n = 6).

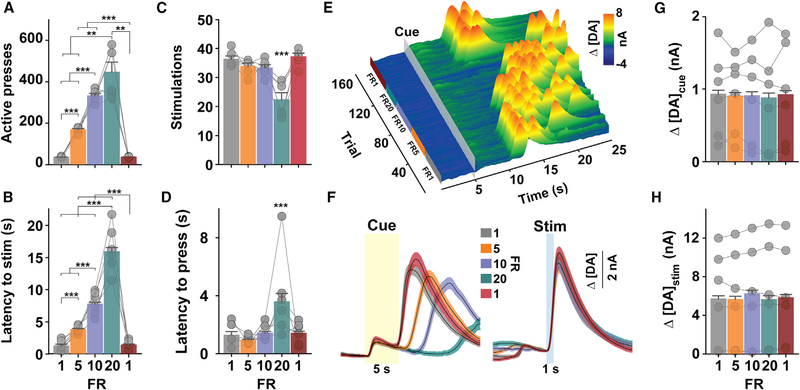

DA Release and Expected ICSS Cost

To assess whether information associated with phasic DA neuron activation is concomitantly reflected by changes in cue-evoked behavior and NAc DA release, we first manipulated the cost of VTA DA neuron ICSS (Figure 4). The task structure on each trial is the same as in Figure 3A, but the response requirement changed (FR1, 5, 10, 20, 1) across consecutive 30-min epochs. The additional FR1 period at the end of the session served as an internal control to assess whether behavior and DA function or its detection changed over time, independent of response requirement.

Figure 4. NAc DA Release Does Not Track Response Cost.

(A–D) Active lever presses (one-way RM ANOVA: F(4,20) = 66.87, p < 0.001) (A), latency to earn stimulation (stim; one-way RM ANOVA: F(4,749) = 344.65, p < 0.001) (B), number of stimulations earned (one-way RM ANOVA: F(4,20) = 16.77, p < 0.001) (C), and latency to initiate lever pressing (one-way RM ANOVA: F(4,761) = 14.58, p < 0.001) (D) during consecutive 30-min periods at each fixed ratio (FR) requirement. ***p < 0.001.

(E) Representative Δ[DA] (z axis) time-locked to cue onset over time (x axis) and across trials (y axis).

(F–H) Mean (± SEM) Δ[DA]cue (F, left; yellow transparent box) and Δ[DA]stim (F, right; blue transparent box), peak Δ[DA]cue (G; one-way RM ANOVA: F(4,772) = 0.289, p = 0.89), and Δ[DA]stim (H; one-way RM ANOVA: F(4,749) = 2.48, p = 0.04).

Symbols in (A) and (B) refer to total count for each mouse (n = 6), and symbols in (C) and (F) refer to mean for all trials (latency, n = 975; Δ[DA]cue, n = 1,000; Δ[DA]stim, n = 975) during each FR epoch for each mouse. Bars refer to mean (+ SEM) across all trials. See also Figure S6.

Mice proportionally increased responding (Figure 4A) and required more time (Figure 4B) to earn a similar number of stimulations across all response requirements, except FR20 (Figure 4C). The 20-press response requirement was sufficient to hinder responding, as evidenced by a significant increase in the time to initiate lever pressing (Figure 4D), number of trial omissions (one-way repeated measures [RM] ANOVA: F(4,20) = 3.80, p = 0.02; p < 0.05, FR20 versus all epochs, data not shown), and inactive lever presses (one-way RM ANOVA: F(4,20) = 4.12, p = 0.014; p < 0.05, FR20 versus all epochs; data not shown). DA concentration changes from a representative session are plotted relative to cue onset and across consecutive trials in Figure 4E. Surprisingly, the amount of DA released during cue presentation or by laser stimulation (Figures 4F–4H and S6) did not significantly differ as a function of the response requirement. DA concentrations aligned to the initiation of lever pressing (Figures S6A–S6D) and stimulation (Figures S6E–S6H) were also not affected by violations of expectations during the transitions between FR requirements, as might be expected due to reward-prediction errors. Accumbal DA release has previously been found to encode economic information via distinct temporal dynamics rather than just the peak response (Hollon et al., 2014). We therefore also compared the area under the curve (AUC) across the entire 5-s cue and found similar results across FR epochs (one-way RM ANOVA: F(4,772) = 0.494, p = 0.74; data not shown). Since increasing response requirements did not extinguish ICSS responding (also see Figure 1D), we used a concurrent-choice paradigm to confirm that the imposed response requirement indeed reflected a comparatively less valued circumstance (Figure S7A). Comparisons were made between FR1 and FR20, as behavioral decrements were only observed at this latter ratio.
While mice continued to respond when the 20-press requirement was the only available option (Figure S7B), they displayed a clear preference for the lower cost when given a choice (Figures S7C and S7D). Thus, cue- and stimulation-evoked NAc DA release does not necessarily track an expected or experienced decrease in the perceived utility of DA-neuron-mediated reinforcement due to an increase in the effortful (lever presses per reinforcer) or temporal (time to earn stimulation) cost. Moreover, cue-evoked NAc DA release does not predict the latency to respond for a learned action under these specific experimental conditions.
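The difference between the peak and AUC measures of the cue response can be sketched as follows. The 5-s cue window comes from the text; the 10 Hz sampling rate, the function name, and the two triangular traces are assumptions chosen only to show that signals with the same peak can differ in AUC.

```python
import numpy as np

def cue_peak_and_auc(t_s, da, cue_on_s=0.0, cue_dur_s=5.0):
    """Peak and trapezoidal area under the curve of a trace within the cue window."""
    t_s = np.asarray(t_s, dtype=float)
    da = np.asarray(da, dtype=float)
    in_cue = (t_s >= cue_on_s) & (t_s <= cue_on_s + cue_dur_s)
    t, y = t_s[in_cue], da[in_cue]
    peak = float(y.max())
    # Trapezoidal rule written out explicitly so it does not depend on the NumPy version.
    auc = float(np.sum((y[1:] + y[:-1]) / 2.0 * np.diff(t)))
    return peak, auc

# Hypothetical traces sampled at an assumed 10 Hz, with cue onset at t = 0 s.
t = np.arange(-20, 81) / 10.0                             # -2.0 s to 8.0 s
brief = np.clip(1.0 - np.abs(t - 1.0), 0.0, None)         # transient lasting 2 s
broad = np.clip(1.0 - np.abs(t - 2.0) / 2.0, 0.0, None)   # transient lasting 4 s

peak_brief, auc_brief = cue_peak_and_auc(t, brief)
peak_broad, auc_broad = cue_peak_and_auc(t, broad)
```

Both traces reach the same peak, but the broader transient has twice the area, which is why the AUC was examined in addition to the peak response.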

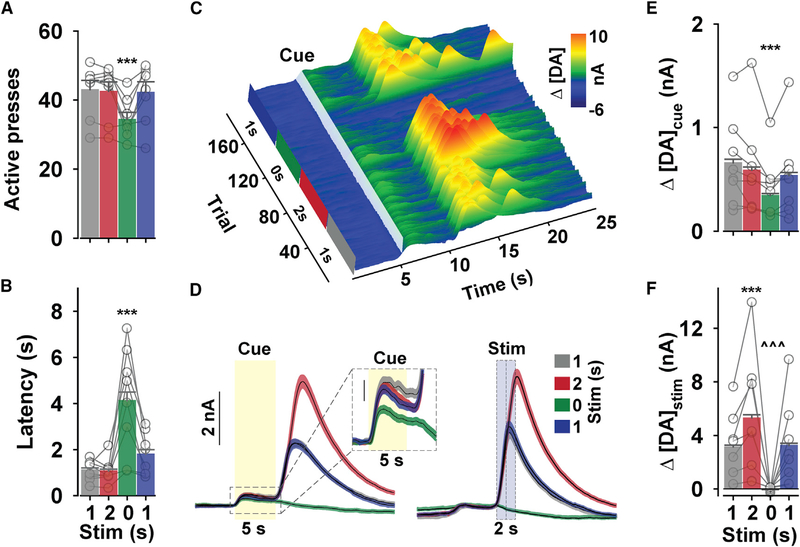

DA Release and Expected Magnitude of DA Neuron Activation

Stable cue-evoked DA release across increasing response costs could arise because there was no change in reinforcer-evoked dopaminergic activity that could be transferred to the cue, as demonstrated by the stable stimulus-evoked increase in NAc DA release. Notably, several studies indicate that phasically increasing or decreasing reward-evoked DA neuron activity modifies cue-evoked behavior (Chang et al., 2016; Eshel et al., 2015; Sharpe et al., 2017; Steinberg et al., 2013). We therefore assessed whether the cue-evoked signal reflects the expected magnitude of dopaminergic activity by manipulating the duration of VTA DA neuron stimulation earned on an FR1-VTO schedule (Figure 5). Eliminating reinforcement (0-s duration), but not increasing stimulation duration (2 s), affected behavior by decreasing active lever pressing (Figure 5A) and increasing latency to respond (Figure 5B), inactive lever pressing (F(3,24) = 4.0, p = 0.019), and trial omissions (F(3,24) = 13.01, p < 0.001). DA concentration changes from a representative session are plotted relative to cue-onset and across consecutive trials in Figure 5C. Despite greater DA release during the 2-s stimulation, cue-evoked DA release was only affected by elimination of reinforcement (Figures 5D–5F and S6). We also found comparable results when analyzing the AUC during the cue (one-way RM ANOVA: F(3,988) = 20.27, p < 0.001; all p < 0.001, 0 s versus all other stimulation epochs; data not shown). Stimulation-evoked DA release remained stable within each stimulation epoch (Figure S8A), and cue-evoked DA release slowly declined but quickly returned to baseline following the elimination and reintroduction of the reinforcer, respectively (Figure S8B). The conditioned DA release event declined across trials when stimulation was withheld (Figure S8B) at a rate that is strikingly similar to that seen during extinction of cocaine reinforcement (Stuber et al., 2005).
Importantly, mice did not display a preference for the 1-s or 2-s stimulation (Figures S7E–S7G). Thus, we specifically isolated a direct comparison between the amount of reinforcer-evoked and cue-evoked DA release that cannot be explained by distinct effects on behavior. Our findings indicate that DA release and behavior track the availability, but not the expected magnitude, of DA neuron activation.

Figure 5. NAc DA Release during Changing Stimulation Magnitudes.

(A and B) Mean (+ SEM) active lever presses (A; one-way RM ANOVA: F(3,24) = 15.76, p < 0.001) and latency to press (B; one-way RM ANOVA: F(3,950) = 44.39, p < 0.001) during consecutive 30-min periods at each stimulation.

(C) Representative Δ[DA] (z axis) time-locked to cue onset over time (x axis) and across trials (y axis).

(D) Mean (± SEM) Δ[DA]cue (left, yellow transparent box) and Δ[DA]stim (right, blue transparent box).

(E and F) Mean (+ SEM) peak Δ[DA]cue (E; one-way RM ANOVA: F(3,988) = 17.19, p < 0.001) and Δ[DA]stim (F; one-way RM ANOVA: F(3,950) = 240.62, p < 0.001). Symbols in (A) refer to total count for each mouse (n = 8), and symbols in (B), (E), and (F) refer to mean for all trials (latency, n = 1,297; Δ[DA]cue, n = 1,335; Δ[DA]stim, n = 1,297) during each stimulation epoch. ***p < 0.001 versus all other stimulation epochs. ^^^p < 0.001 versus both 1 s stimulation epochs. See also Figure S8.

DISCUSSION

Accumbal DA Release Tracks DA-Neuron-Mediated Reinforcement

VTA to NAc DA transmission allows reward-predictive cues to promote appetitive behavioral responses. This is evidenced by pharmacological (Nicola et al., 2005; Wyvell and Berridge, 2001; Yun et al., 2004), lesion (Parkinson et al., 2002; Taylor and Robbins, 1986), and optogenetic (Chang et al., 2016; Eshel et al., 2015; Sharpe et al., 2017; Steinberg et al., 2013) studies demonstrating that increasing or decreasing VTA activity or DA signaling in the NAc increases or decreases cue-motivated reward seeking. But what information about reward is relayed to the cue remains an open question. Neural recordings of DA function indicate that phasic activation of DA neurons operates as a teaching signal that encodes reward prediction errors (Bayer and Glimcher, 2005; Hart et al., 2014) or the difference between actual and expected outcomes. As rewards become increasingly expected, the DA response to the reward and cue progressively decreases and increases, respectively, and the transfer of this signal from reward to cue conforms to temporal-difference reinforcement learning models (Montague et al., 1996). Violations of expectations, such as a change in the magnitude or probability of reward, are reflected in reward- and cue-evoked DA signals (Beyene et al., 2010; Day et al., 2010; Fiorillo et al., 2003; Gan et al., 2010; Tobler et al., 2005). By encoding economic parameters of predicted rewards in their activity patterns, DA neurons are thought to ascribe incentive-motivational value to reward-predicting cues, allowing them to direct and invigorate reward pursuit (McClure et al., 2003). In line with this hypothesis, cues predicting access to forthcoming food (Roitman et al., 2004; Syed et al., 2016), cocaine (Phillips et al., 2003), or brain stimulation reward (Beyene et al., 2010; Oleson et al., 2012; Owesson-White et al., 2008) elicit transient elevations in NAc DA release that accompany action initiation. 
However, the precise type of information that is transferred by DA neuron activation to antecedent cues has been unclear.

Here, we found that during optogenetic ICSS, DA release gradually increased in response to the cue while DA release evoked by the stimulation declined as learning progressed (Figure 3). Thus, phasic optogenetic activation of DA neurons reinforces behavior and confers cues with the ability to predict future reinforcement and elicit NAc DA release. The decline in reinforcer-evoked DA release as it becomes increasingly expected (Figures 3F–3I) conforms with the role of DA neurons in encoding reward-prediction errors. However, this finding is somewhat surprising given that the reinforcer in this context was direct DA neuron depolarization and sufficient time was allotted between stimulations to maintain a consistent signal (Figure 2I). This effect has been reported previously in FSCV studies using electrical ICSS (Oleson et al., 2012; Owesson-White et al., 2008) and is proposed to arise from the increased cue-evoked DA signal removing potential DA content from a restricted releasable pool. However, this explanation is not supported by our findings. Stimulated DA release was not altered by greater time between the cue and stimulation (Figure 4) and predictably increased according to stimulus duration, despite consistent cue-evoked DA release preceding the stimulation (Figure 5), and did not differ if it was preceded by cue-evoked DA release (Figures S6E–S6H). One explanation is that the stimulation-evoked DA signal declines to a stable level as the outcome becomes increasingly expected. In support of this notion, during the FR1-VTO task (Figure 3), the stimulation-evoked signal declines as learning occurs and the behavioral task remains constant but is not altered when action-outcome contingencies are changing (Figures 4 and 5).

Although phasic DA neuron activation allows cues to predict future reinforcement and elicit NAc DA release, changes in the magnitude or cost of DA neuron activation are not reflected in the cue-evoked DA response (Figures 4 and 5). Because greater reward-evoked DA neuron activity is associated with a subsequently greater cue-evoked DA response (Tobler et al., 2005; Cohen et al., 2012) and causes new learning about predictive cues (Steinberg et al., 2013), we predicted that an increase in the duration of DA neuron activation and, in turn, the amount of DA released in the NAc would transfer to the cue. However, the cue-evoked DA signal was only affected by elimination of reinforcement (Figure 5). Insensitivity to the larger stimulation may be due to its equivalent reinforcing capacity (Figures S7E–S7G). However, the cue-evoked signal was also insensitive to an increase in effortful demand and delay to reinforcement, which was sufficient to hinder appetitive responding (Figures 4, S7C, and S7D). If cue-evoked release was relatively greater for certain conditions, an increase in signal would have been detected, as demonstrated by the relatively larger DA signal evoked by stimulation on each trial. Thus, it is not necessarily the expected utility of DA neuron activation but its imminent occurrence that modifies cue-evoked DA release during ICSS. One possibility is that this lack of value-associated change in DA function during ICSS arises from the absence of external sensory input or physiological need (e.g., during food reinforcement), which likely recruits additional neural mechanisms that sculpt DA neurotransmission and behavior. However, our finding that cue-evoked DA release is insensitive to effort-based cost is generally in line with DA recordings during food or liquid reinforcement, as recently reviewed (Walton and Bouret, 2019).
Prior FSCV measures show that cue-evoked DA release does not reflect forthcoming cost (Wanat et al., 2010), decreases strictly when the high-cost choice is the only available option (Day et al., 2010), increases only in response to an unexpected reduction in cost (Gan et al., 2010), or preferentially encodes reward size irrespective of cost (Hollon et al., 2014). It should be noted, however, that recent work has found effortful cost encoding by accumbal DA release using a behavioral economics paradigm (Schelp et al., 2017), highlighting that additional work is needed to understand the precise relationship between effort and phasic DA. Furthermore, electrophysiology recordings generally agree with FSCV measures, demonstrating that phasic DA neuron firing to predictive cues preferentially reflects expected reward size, delay, and probability (Cohen et al., 2012; Eshel et al., 2015; Fiorillo et al., 2003; Pasquereau and Turner, 2013; Roesch et al., 2007; Tobler et al., 2005) rather than expected effortful cost (Pasquereau and Turner, 2013).

Association between Terminal DA Release and Cell Body Activation

ICSS is used to understand the minimal neural elements of reinforcement by identifying the neuronal targets and activity patterns that are sufficient to drive appetitive behavior (Olds and Milner, 1954). The precise role of mesolimbic DA signaling in brain reinforcement mechanisms has long been debated based on ICSS studies using electricity to activate neuronal targets. Pharmacological and anatomical assessments have generally implicated the mesolimbic DA system as a critical neural substrate mediating ICSS of midbrain regions. For instance, self-stimulation response rates are proportional to the density of dopaminergic neurons surrounding the electrode tip (Corbett and Wise, 1980), and DA receptor antagonists (Mogenson et al., 1979) or lesions of dopaminergic projections (Fibiger et al., 1987) in the NAc reduce ICSS performance. Alternatively, psychometric measures indicate that small, myelinated fibers rather than large, unmyelinated DA axons are the principal targets of typical electrical ICSS parameters (Bielajew and Shizgal, 1986; Yeomans et al., 1988). Moreover, early FSCV measures indicated that NAc DA release does not occur during ongoing ICSS (Garris et al., 1999; Kilpatrick et al., 2000; Kruk et al., 1998). These findings led to the conclusion that NAc DA release may be important for signaling the presence or predictability of rewards but is dissociable from ongoing reinforcement.

However, recent optogenetic manipulations confirm that VTA DA neuron activation is sufficient to sustain reinforcement (Ilango et al., 2014; Witten et al., 2011), which is suppressed by infusing DA receptor antagonists into the NAc (Steinberg et al., 2014). As is generally the case in optogenetic experiments, whether terminal neurotransmitter release accompanies behavior driven by neuronal activation was not known. It is feasible that NAc DA release may simply accompany the acquisition or initiation of ICSS and thereafter facilitate motor patterns required for continued responding. Furthermore, based on the estimated rate at which releasable DA pools replenish and autoreceptor-mediated inhibition of DA release operates (Kita et al., 2007; Montague et al., 2004; Yavich and MacDonald, 2000), along with rapid terminal DA reuptake, which should further increase during periods of repeated depolarization (Calipari et al., 2017), it is reasonable to expect that DA concentrations in the NAc become dissociated from robust neuronal activation during ICSS. However, we find that although DA release time-locked to each stimulation declines during ongoing ICSS, it is apparent throughout the session and tightly associates with behavior (Figures 2, S1, and S3–S5). Notably, alternative neural monitoring techniques, such as fluorescent calcium imaging, may not detect changes in neurotransmitter release if releasable pools are depleted and the association between vesicular release and intracellular calcium signaling fluctuates.

Conclusions

By monitoring DA release during ICSS, we characterized a dynamic association between optogenetic manipulations at cell bodies and terminal neurotransmitter release. Stimulation-evoked NAc DA release inversely tracked the reinforcing capacity of ICSS, such that more frequent stimulations (Figure 2) or a reduced latency to respond (Figure 3) corresponded with less DA release. Moreover, DA released to cues predicting access to self-stimulation increased as the latency to respond decreased (Figure 3), but cue-evoked DA release did not change according to the cost (Figure 4) or magnitude (Figure 5) of DA neuron activation. Hence, additional work is required to identify neural mechanisms that drive value-based changes in cue-evoked DA signaling. The ability of optogenetic ICSS to elicit learning and conditioned NAc DA release conforms with prior hypotheses related to the mechanisms by which addictive drugs hijack reward circuits (Covey et al., 2014; Hyman et al., 2006; Keiflin and Janak, 2015; Redish, 2004). Similar to optogenetic stimulation, addictive drugs bypass normal sensory processes to directly activate DA neurons. We show here that this action is sufficient to reinforce behavior, drive learning, and promote dopaminergic encoding of antecedent cues.

STAR⋆METHODS

CONTACT FOR REAGENT AND RESOURCE SHARING

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Joseph F. Cheer (jcheer@som.umaryland.edu).

EXPERIMENTAL MODEL AND SUBJECT DETAILS

For all experiments, we used both male and female mice (3–6 months old) expressing a heterozygous knock-in of Cre recombinase under the control of the regulatory elements of the DA transporter gene (DAT::Cre+/− mice). Sex and number of mice for each experiment are indicated in the ‘Behavior’ section. Animals were housed in a temperature-controlled room maintained on a reverse 12 h light/dark cycle (07:00–19:00 h). Following surgery, mice were housed individually and allowed ad libitum access to water and food. All experiments were conducted in the light cycle. Animal care and experimental procedures conformed to the National Institutes of Health Guide for the Care and Use of Laboratory Animals and were approved by the Institutional Animal Use and Care Committee at the University of Maryland, Baltimore.

METHOD DETAILS

Surgery

Surgical procedures are depicted in Figure S1. Mice were anesthetized with isoflurane in O2 (4% induction and 1% maintenance, 2 L/min) and Cre-dependent channelrhodopsin (ChR2) virus (AAV5-EF1a-DIO-ChR2(H134R)-eYFP) produced at the University of North Carolina (Vector Core Facility) was injected in the VTA (−3.3 AP, +0.5 ML, −4.0 DV, mm relative to bregma). Viral injections (0.5 μl, 0.1 μl/min) used graduated pipettes (Drummond Scientific Company), broken back to a tip diameter of ~20 μm. An optical fiber (105 μm core diameter, 0.22 NA, Thorlabs, NJ) was then implanted unilaterally above the injection site at −3.8 mm DV. For FSCV recordings, a chronic voltammetry electrode (Clark et al., 2010) was then also implanted ipsilateral to the optical fiber in the NAc core (+1.2 AP, +1.1 ML, −3.7 DV, mm relative to bregma) and a Ag/AgCl reference electrode in the contralateral superficial cortex, as described previously (Covey et al., 2016, 2018). All components were permanently affixed with dental cement (Metabond, Parkell, Inc). Mice were allowed 4 weeks to recover from surgery and to permit viral expression.

Behavior

Apparatus

Mice were tested in operant chambers (21.6 × 17.6 × 14 cm; Med Associates) housed within sound-attenuating enclosures. Each chamber was equipped with two retractable levers (located 2 cm above the floor) and one LED stimulus light located above each lever (4.6 cm above the lever). A houselight and a white-noise speaker (80 dB, masking noise background) were located on the opposite wall. Light was delivered by a diode-pumped solid-state laser (473 nm, 150 mW, company) coupled to a 62.5 μm core, 0.22 NA optical fiber (Thorlabs). Light output was ~10–20 mW at the tip of the ferrule. In all cases, laser stimulation consisted of 4 ms pulses.
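To make the stimulation parameters concrete, the following Python sketch lays out the pulse train for a single reinforcer, assuming the 30 Hz, 1 s train used for ICSS in this study and the 4 ms pulse width stated above. The function names, and the assumption that pulses are evenly spaced starting at time zero, are illustrative and are not taken from the control software used in the experiments.

```python
def pulse_train(freq_hz=30.0, train_s=1.0, pulse_ms=4.0):
    """Return (onset_ms, offset_ms) pairs for one laser stimulation train.

    Defaults follow the text (30 Hz for 1 s, 4 ms pulses). Evenly spaced
    pulses starting at t = 0 are an assumption of this sketch.
    """
    n_pulses = round(freq_hz * train_s)
    period_ms = 1000.0 / freq_hz
    return [(i * period_ms, i * period_ms + pulse_ms) for i in range(n_pulses)]


def duty_cycle(freq_hz=30.0, pulse_ms=4.0):
    """Fraction of the train during which the laser is on."""
    return freq_hz * pulse_ms / 1000.0


train = pulse_train()
# Total time the laser is on during one train, in milliseconds.
light_on_ms = sum(off - on for on, off in train)
```

Under these assumptions, a 1 s train contains 30 pulses and delivers 120 ms of light (a 12% duty cycle). Calling `pulse_train(train_s=2.0)` gives the 60-pulse train for a 2 s stimulation.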

ICSS

To first confirm the reinforcing capacity of DA neuron ICSS, a group of mice that only received the viral infusion and optical fiber implantation (n = 6; 3 male, 3 female) were trained for 6 consecutive sessions to lever press on a fixed ratio-1 (FR1) continuous reinforcement (CRF) schedule for laser stimulation (30 Hz, 1 s), followed by 2 sessions each on FR5, FR10, and FR20 (Figure 1D). During 30-minute sessions, both levers remained extended and no other cues were presented. Presses on one lever produced immediate laser stimulation once the response requirement was met (active), while presses on the other lever (inactive) or presses on the active lever during an ongoing stimulation (non-reinforced) were recorded but had no programmed consequence. A separate group of mice that were also implanted with FSCV recording electrodes (n = 9; 5 male, 4 female) were initially trained for a minimum of 3 sessions on an FR1 CRF schedule and then on separate, consecutive sessions on an FR5, FR10, and FR20 reinforcement schedule (Figures 1, 2, S1, and S3–S5). Mice were then trained on a variable time-out (VTO) schedule (Figures 3, 4, 5, S6, and S8), similar to that used in prior work (Oleson et al., 2012; Owesson-White et al., 2008). In this task, the cue light was illuminated 5 s prior to lever extension. Presses on the active lever delivered immediate laser stimulation if the response requirement was met within 60 s following lever extension. If the response requirement was not met within this time frame, both levers retracted, the cue light was turned off, and the trial was counted as an omission. Presses on the inactive lever were recorded but had no programmed consequence.
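The trial contingencies of the VTO task can be summarized in a short Python sketch. It assumes that press times are measured from cue onset and that a press landing exactly at the 60 s limit still counts; the function name and input format are hypothetical and do not reproduce the Med-PC program used in the study.

```python
CUE_LEAD_S = 5.0          # cue light precedes lever extension by 5 s (from the text)
RESPONSE_WINDOW_S = 60.0  # time allowed after lever extension (from the text)


def score_trial(active_press_times_s, response_requirement):
    """Classify one VTO trial.

    active_press_times_s: active-lever press times in seconds, measured
        from cue onset (hypothetical input format).
    response_requirement: presses needed, e.g. 1 for FR1 or 20 for FR20.
    Returns ("reinforced", stimulation_time_s) or ("omission", None).
    """
    lever_out = CUE_LEAD_S
    deadline = lever_out + RESPONSE_WINDOW_S
    # Only presses made while the lever is extended can count.
    valid = sorted(t for t in active_press_times_s if lever_out <= t <= deadline)
    if len(valid) >= response_requirement:
        # Stimulation follows immediately on the press that completes the ratio.
        return "reinforced", valid[response_requirement - 1]
    return "omission", None
```

For example, `score_trial([6.2], 1)` is scored as a reinforced FR1 trial with stimulation at 6.2 s after cue onset, whereas three presses under an FR5 requirement are scored as an omission.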

To test whether different reinforcement contexts affected subjective utility, we used a concurrent choice task to assess whether mice prefer the 1 s versus 2 s stimulation or the FR1 versus FR20 response requirement (Figure S7). Mice (n = 7; 4 male, 3 female) were first trained to press on both levers to receive optical reinforcement (30 Hz) using a CRF schedule as described above. For initial training, the active lever was randomly assigned for each mouse as the left or right lever for the first three sessions, and then reversed for two additional sessions. Importantly, active presses did not differ between the two levers on session 3 (mean: 1,032.86 ± 198.45) versus session 5 (mean: 1,154.00 ± 211.91; paired t test; t(6) = −.060, p = 0.569). Mice then completed two sessions of concurrent choice tasks for 1 s versus 2 s stimulations and for FR1 versus FR20 response requirements. Each session consisted of three 20-minute blocks. The first two blocks were forced choice trials, during which a single cue light was illuminated above a single extended lever, followed by a free choice block during which both cue lights and levers were presented on each trial. The stimulus duration and response ratio were randomly assigned to the left or right lever for each session, and lever assignment remained constant for each mouse across the entire session. During each block, the first lever press on a trial was followed by retraction of both levers, and completion of the response requirement resulted in immediate delivery of optical stimulation and dimming of the cue lights for the duration of stimulation. Thus, once mice chose the FR20 option, they were committed to this decision.

FSCV Analysis

FSCV was used to monitor DA concentration changes by applying a triangular waveform (−0.4 V to +1.3 V at 400 V/s) at 10 Hz to implanted carbon fiber microelectrodes. FSCV is able to extract changes in faradaic current due to redox reactions at the carbon fiber surface, and this change in current is proportional to the concentration of electroactive analytes. Here, we report all FSCV measures in current (nA) as this is the unit of measurement that serves as an estimate of the change in concentration. Principal component regression (PCR) analysis was used to statistically extract the DA component from the voltammetric recording (Heien et al., 2005). Training sets were created using non-contingent optogenetically evoked DA signals obtained following a recording session and a standard set of five basic pH shift voltammograms. For continuous ICSS experiments (Figures 2, S1, and S3–S5), FSCV signals were compared to the residual (Q) values obtained from the PCR analysis during 10 s windows normalized to stimulation onset and were included in the analysis when residuals across the 10 s trace fell below the 95% confidence interval (i.e., Qα; Figure S2). Analysis of signal amplitude during CRF ICSS is confined to the 1 s stimulation period because another stimulation may occur 100 ms following stimulation offset. For VTO tasks (Figures 3, 4, 5, S6, and S8), FSCV signals were compared to Q values during 25 s windows normalized to either cue or stimulation onset.
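The timing implied by the waveform and the residual-based inclusion rule can be sketched in Python. The sketch assumes that each scan is a symmetric ramp from −0.4 V to +1.3 V and back, that the retained principal components are supplied as orthonormal vectors, and that the Qα threshold has already been computed elsewhere. The function names and any numbers passed to them are hypothetical, and the code does not reproduce the software used for the published analysis.

```python
def scan_duration_ms(v_low=-0.4, v_high=1.3, rate_v_per_s=400.0):
    """Duration of one triangular scan (up and back), in milliseconds."""
    return 2.0 * (v_high - v_low) / rate_v_per_s * 1000.0


def scans_in_window(window_s, scan_rate_hz=10.0):
    """Number of voltammograms collected in an analysis window."""
    return round(window_s * scan_rate_hz)


def q_residual(voltammogram, components):
    """Sum of squared current left unexplained by the retained components.

    components: orthonormal vectors (assumed), each the same length as the
    voltammogram.
    """
    residual = list(voltammogram)
    for pc in components:
        # Projection of the voltammogram onto this component.
        score = sum(x * p for x, p in zip(voltammogram, pc))
        residual = [r - score * p for r, p in zip(residual, pc)]
    return sum(r * r for r in residual)


def include_trace(voltammograms, components, q_alpha):
    """Keep a trace only if every scan in the window stays below Q-alpha."""
    return all(q_residual(v, components) < q_alpha for v in voltammograms)
```

Under these assumptions, one scan lasts 8.5 ms of each 100 ms cycle, and the 10 s and 25 s analysis windows contain 100 and 250 voltammograms, respectively. A trace is excluded as soon as a single scan in its window exceeds the threshold.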

Histology

Mice were anesthetized with isoflurane (5%) and transcardially perfused with 4% paraformaldehyde (PFA) in 0.1 M sodium phosphate buffer (PB; pH 7.4). Brains were post-fixed overnight in the same PFA solution at 4°C. 40 μm-thick coronal slices were cut with a vibratome (Leica). For immunohistochemistry, slices were incubated for 30 minutes in PB containing 0.2% Triton X-100 (Sigma-Aldrich) and 3% normal donkey serum (Jackson 017–000-121) and then overnight in primary antibodies (mouse monoclonal anti-tyrosine hydroxylase, TH; ImmunoStar, Catalog# 22941; 1:1000 and chicken anti-GFP; Aves Labs, Inc., Tigard, OR, USA; 1:4000). The next day, sections were incubated for 2 hours in secondary antibodies (donkey anti-mouse; Alexa 647; 1:000; Jackson 715–605-151 and donkey anti-chicken; Alexa 488; 1:1000; Jackson 703–545-155), followed by 30 minutes with 4′,6-diamidino-2-phenylindole (DAPI; 1:50,000). Images were visualized under a confocal microscope (Olympus Fluoview, Shinjuku, Tokyo, Japan).

QUANTIFICATION AND STATISTICAL ANALYSIS

Behavior and voltammetric measures were analyzed using Kruskal-Wallis ANOVA on ranks, one-way repeated-measures (RM) ANOVA, or unpaired t tests. Significance was set at p < 0.05 and Tukey’s post hoc test or Bonferroni corrections were used to correct for multiple comparisons when appropriate. Statistical analyses were performed in Prism (Version 6, GraphPad). Statistical details for each experiment are presented in figure legends. The n for each experiment is presented in the figure legends or Method Details section above.

Supplementary Material

KEY RESOURCES TABLE.

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Antibodies | | |
| mouse monoclonal anti-tyrosine hydroxylase | ImmunoStar | Catalog# 22941; RRID: AB_572268 |
| chicken anti-GFP | Aves Labs, Inc., Tigard, OR, USA | Catalog# GFP-1020; RRID: AB_10000240 |
| donkey anti-mouse; Alexa 647 | Jackson ImmunoResearch Laboratories, Inc. | Jackson 715–605-151; RRID: AB_2340863 |
| donkey anti-chicken; Alexa 488 | Jackson ImmunoResearch Laboratories, Inc. | Jackson 703–545-155; RRID: AB_2340375 |
| Bacterial and Virus Strains | | |
| Cre-inducible channelrhodopsin-2 | University of North Carolina (Vector Core Facility) | AAV5-EF1a-DIO-ChR2(H134R)-eYFP |
| Experimental Models: Organisms/Strains | | |
| Mouse: DAT::Cre | Jackson Laboratories | Slc6a3tm1(cre)Xz |
| Software and Algorithms | | |
| GraphPad Prism | GraphPad Software | Version 6.02 |
| Tarheel | ESA Bioscience | N/A |
| SigmaPlot | Systat Software, Inc. | Version 12.5 |
| MATLAB | MathWorks | R2018a |
| Adobe Illustrator | Adobe | Version CS6 |
| Med-PC | Med Associates | Cat#SOF-735 |

Highlights.

Accumbal dopamine release predictably tracks dopamine neuron self-stimulation

Self-stimulation supports learning about and dopamine encoding of predictive cues

Cue-evoked dopamine does not reflect the expected cost/magnitude of neuronal activation

Dopamine neuron activation drives reinforcement learning and its neural correlates

ACKNOWLEDGMENTS

The authors would like to thank Drs. Christopher Howard, Erik Oleson, Saleem Nicola, and Peter Dayan for helpful discussion and comments on the manuscript. This research was supported by the NIH (grant R01 DA022340 to J.F.C. and grants F32 DA041827 and K99 DA047432 to D.P.C.).

Footnotes

DECLARATION OF INTERESTS

The authors declare no competing interests.

SUPPLEMENTAL INFORMATION

Supplemental Information can be found online at https://doi.org/10.1016/j.celrep.2019.03.055.

REFERENCES

- Bayer HM, and Glimcher PW (2005). Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron 47, 129–141.

- Berridge KC (2007). The debate over dopamine’s role in reward: the case for incentive salience. Psychopharmacology (Berl.) 191, 391–431.

- Beyene M, Carelli RM, and Wightman RM (2010). Cue-evoked dopamine release in the nucleus accumbens shell tracks reinforcer magnitude during intracranial self-stimulation. Neuroscience 169, 1682–1688.

- Bielajew C, and Shizgal P (1986). Evidence implicating descending fibers in self-stimulation of the medial forebrain bundle. J. Neurosci 6, 919–929.

- Calipari ES, Juarez B, Morel C, Walker DM, Cahill ME, Ribeiro E, Roman-Ortiz C, Ramakrishnan C, Deisseroth K, Han MH, and Nestler EJ (2017). Dopaminergic dynamics underlying sex-specific cocaine reward. Nat. Commun 8, 13877.

- Chang CY, Esber GR, Marrero-Garcia Y, Yau HJ, Bonci A, and Schoenbaum G (2016). Brief optogenetic inhibition of dopamine neurons mimics endogenous negative reward prediction errors. Nat. Neurosci 19, 111–116.

- Clark JJ, Sandberg SG, Wanat MJ, Gan JO, Horne EA, Hart AS, Akers CA, Parker JG, Willuhn I, Martinez V, et al. (2010). Chronic microsensors for longitudinal, subsecond dopamine detection in behaving animals. Nat. Methods 7, 126–129.

- Cohen JY, Haesler S, Vong L, Lowell BB, and Uchida N (2012). Neuron-type-specific signals for reward and punishment in the ventral tegmental area. Nature 482, 85–88.

- Corbett D, and Wise RA (1980). Intracranial self-stimulation in relation to the ascending dopaminergic systems of the midbrain: a moveable electrode mapping study. Brain Res. 185, 1–15.

- Covey DP, Roitman MF, and Garris PA (2014). Illicit dopamine transients: reconciling actions of abused drugs. Trends Neurosci. 37, 200–210.

- Covey DP, Dantrassy HM, Zlebnik NE, Gildish I, and Cheer JF (2016). Compromised dopaminergic encoding of reward accompanying suppressed willingness to overcome high effort costs is a prominent prodromal characteristic of the Q175 mouse model of Huntington’s disease. J. Neurosci 36, 4993–5002.

- Covey DP, Dantrassy HM, Yohn SE, Castro A, Conn PJ, Mateo Y, and Cheer JF (2018). Inhibition of endocannabinoid degradation rectifies motivational and dopaminergic deficits in the Q175 mouse model of Huntington’s disease. Neuropsychopharmacology 43, 2056–2063.

- Day JJ, Roitman MF, Wightman RM, and Carelli RM (2007). Associative learning mediates dynamic shifts in dopamine signaling in the nucleus accumbens. Nat. Neurosci 10, 1020–1028.

- Day JJ, Jones JL, Wightman RM, and Carelli RM (2010). Phasic nucleus accumbens dopamine release encodes effort- and delay-related costs. Biol. Psychiatry 68, 306–309.

- Eshel N, Bukwich M, Rao V, Hemmelder V, Tian J, and Uchida N (2015). Arithmetic and local circuitry underlying dopamine prediction errors. Nature 525, 243–246.

- Fibiger HC, LePiane FG, Jakubovic A, and Phillips AG (1987). The role of dopamine in intracranial self-stimulation of the ventral tegmental area. J. Neurosci 7, 3888–3896.

- Fiorillo CD, Tobler PN, and Schultz W (2003). Discrete coding of reward probability and uncertainty by dopamine neurons. Science 299, 1898–1902.

- Flagel SB, Clark JJ, Robinson TE, Mayo L, Czuj A, Willuhn I, Akers CA, Clinton SM, Phillips PEM, and Akil H (2011). A selective role for dopamine in stimulus-reward learning. Nature 469, 53–57.

- Gan JO, Walton ME, and Phillips PEM (2010). Dissociable cost and benefit encoding of future rewards by mesolimbic dopamine. Nat. Neurosci 13, 25–27.

- Garris PA, Kilpatrick M, Bunin MA, Michael D, Walker QD, and Wightman RM (1999). Dissociation of dopamine release in the nucleus accumbens from intracranial self-stimulation. Nature 398, 67–69.

- Hart AS, Rutledge RB, Glimcher PW, and Phillips PEM (2014). Phasic dopamine release in the rat nucleus accumbens symmetrically encodes a reward prediction error term. J. Neurosci 34, 698–704.

- Heien MLAV, Khan AS, Ariansen JL, Cheer JF, Phillips PEM, Wassum KM, and Wightman RM (2005). Real-time measurement of dopamine fluctuations after cocaine in the brain of behaving rats. Proc. Natl. Acad. Sci. USA 102, 10023–10028.

- Hollon NG, Arnold MM, Gan JO, Walton ME, and Phillips PEM (2014). Dopamine-associated cached values are not sufficient as the basis for action selection. Proc. Natl. Acad. Sci. USA 111, 18357–18362.

- Hyman SE, Malenka RC, and Nestler EJ (2006). Neural mechanisms of addiction: the role of reward-related learning and memory. Annu. Rev. Neurosci 29, 565–598.

- Ilango A, Kesner AJ, Keller KL, Stuber GD, Bonci A, and Ikemoto S (2014). Similar roles of substantia nigra and ventral tegmental dopamine neurons in reward and aversion. J. Neurosci 34, 817–822.

- Keiflin R, and Janak PH (2015). Dopamine prediction errors in reward learning and addiction: from theory to neural circuitry. Neuron 88, 247–263.

- Kilpatrick MR, Rooney MB, Michael DJ, and Wightman RM (2000). Extracellular dopamine dynamics in rat caudate-putamen during experimenter-delivered and intracranial self-stimulation. Neuroscience 96, 697–706.

- Kita JM, Parker LE, Phillips PEM, Garris PA, and Wightman RM (2007). Paradoxical modulation of short-term facilitation of dopamine release by dopamine autoreceptors. J. Neurochem 102, 1115–1124.

- Kruk ZL, Cheeta S, Milla J, Muscat R, Williams JEG, and Willner P (1998). Real time measurement of stimulated dopamine release in the conscious rat using fast cyclic voltammetry: dopamine release is not observed during intracranial self stimulation. J. Neurosci. Methods 79, 9–19.

- McClure SM, Daw ND, and Montague PR (2003). A computational substrate for incentive salience. Trends Neurosci. 26, 423–428.

- Mogenson GJ, Takigawa M, Robertson A, and Wu M (1979). Self-stimulation of the nucleus accumbens and ventral tegmental area of Tsai attenuated by microinjections of spiroperidol into the nucleus accumbens. Brain Res. 171, 247–259.

- Montague PR, Dayan P, and Sejnowski TJ (1996). A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J. Neurosci 16, 1936–1947.

- Montague PR, McClure SM, Baldwin PR, Phillips PE, Budygin EA, Stuber GD, Kilpatrick MR, and Wightman RM (2004). Dynamic gain control of dopamine delivery in freely moving animals. J. Neurosci 24, 1754–1759.

- Nicola SM (2010). The flexible approach hypothesis: unification of effort and cue-responding hypotheses for the role of nucleus accumbens dopamine in the activation of reward-seeking behavior. J. Neurosci 30, 16585–16600.

- Nicola SM, Taha SA, Kim SW, and Fields HL (2005). Nucleus accumbens dopamine release is necessary and sufficient to promote the behavioral response to reward-predictive cues. Neuroscience 135, 1025–1033.

- Olds J, and Milner P (1954). Positive reinforcement produced by electrical stimulation of septal area and other regions of rat brain. J. Comp. Physiol. Psychol 47, 419–427.

- Oleson EB, Beckert MV, Morra JT, Lansink CS, Cachope R, Abdullah RA, Loriaux AL, Schetters D, Pattij T, Roitman MF, et al. (2012). Endocannabinoids shape accumbal encoding of cue-motivated behavior via CB1 receptor activation in the ventral tegmentum. Neuron 73, 360–373.

- Owesson-White CA, Cheer JF, Beyene M, Carelli RM, and Wightman RM (2008). Dynamic changes in accumbens dopamine correlate with learning during intracranial self-stimulation. Proc. Natl. Acad. Sci. USA 105, 11957–11962.

- Parkinson JA, Dalley JW, Cardinal RN, Bamford A, Fehnert B, Lachenal G, Rudarakanchana N, Halkerston KM, Robbins TW, and Everitt BJ (2002). Nucleus accumbens dopamine depletion impairs both acquisition and performance of appetitive Pavlovian approach behaviour: implications for mesoaccumbens dopamine function. Behav. Brain Res 137, 149–163.

- Pasquereau B, and Turner RS (2013). Limited encoding of effort by dopamine neurons in a cost-benefit trade-off task. J. Neurosci 33, 8288–8300.

- Phillips PEM, Stuber GD, Heien ML, Wightman RM, and Carelli RM (2003). Subsecond dopamine release promotes cocaine seeking. Nature 422, 614–618.

- Redish AD (2004). Addiction as a computational process gone awry. Science 306, 1944–1947.

- Roesch MR, Calu DJ, and Schoenbaum G (2007). Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat. Neurosci 10, 1615–1624.

- Roitman MF, Stuber GD, Phillips PE, Wightman RM, and Carelli RM (2004). Dopamine operates as a subsecond modulator of food seeking. J. Neurosci 24, 1265–1271.

- Salamone JD, and Correa M (2012). The mysterious motivational functions of mesolimbic dopamine. Neuron 76, 470–485.

- Schelp SA, Pultorak KJ, Rakowski DR, Gomez DM, Krzystyniak G, Das R, and Oleson EB (2017). A transient dopamine signal encodes subjective value and causally influences demand in an economic context. Proc. Natl. Acad. Sci. USA 114, E11303–E11312.

- Schultz W, Dayan P, and Montague PR (1997). A neural substrate of prediction and reward. Science 275, 1593–1599.

- Schultz W, Stauffer WR, and Lak A (2017). The phasic dopamine signal maturing: from reward via behavioural activation to formal economic utility. Curr. Opin. Neurobiol 43, 139–148.

- Sharpe MJ, Chang CY, Liu MA, Batchelor HM, Mueller LE, Jones JL, Niv Y, and Schoenbaum G (2017). Dopamine transients are sufficient and necessary for acquisition of model-based associations. Nat. Neurosci 20, 735–742.

- Steinberg EE, Keiflin R, Boivin JR, Witten IB, Deisseroth K, and Janak PH (2013). A causal link between prediction errors, dopamine neurons and learning. Nat. Neurosci 16, 966–973.

- Steinberg EE, Boivin JR, Saunders BT, Witten IB, Deisseroth K, and Janak PH (2014). Positive reinforcement mediated by midbrain dopamine neurons requires D1 and D2 receptor activation in the nucleus accumbens. PLoS ONE 9, e94771.

- Stuber GD, Wightman RM, and Carelli RM (2005). Extinction of cocaine self-administration reveals functionally and temporally distinct dopaminergic signals in the nucleus accumbens. Neuron 46, 661–669.

- Syed ECJ, Grima LL, Magill PJ, Bogacz R, Brown P, and Walton ME (2016). Action initiation shapes mesolimbic dopamine encoding of future rewards. Nat. Neurosci 19, 34–36.

- Taylor JR, and Robbins TW (1986). 6-Hydroxydopamine lesions of the nucleus accumbens, but not of the caudate nucleus, attenuate enhanced responding with reward-related stimuli produced by intra-accumbens d-amphetamine. Psychopharmacology (Berl.) 90, 390–397.

- Tobler PN, Fiorillo CD, and Schultz W (2005). Adaptive coding of reward value by dopamine neurons. Science 307, 1642–1645.

- Walton ME, and Bouret S (2019). What is the relationship between dopamine and effort? Trends Neurosci 42, 79–91.

- Wanat MJ, Kuhnen CM, and Phillips PEM (2010). Delays conferred by escalating costs modulate dopamine release to rewards but not their predictors. J. Neurosci 30, 12020–12027.

- Witten IB, Steinberg EE, Lee SY, Davidson TJ, Zalocusky KA, Brodsky M, Yizhar O, Cho SL, Gong S, Ramakrishnan C, et al. (2011). Recombinase-driver rat lines: tools, techniques, and optogenetic application to dopamine-mediated reinforcement. Neuron 72, 721–733.

- Wyvell CL, and Berridge KC (2001). Incentive sensitization by previous amphetamine exposure: increased cue-triggered “wanting” for sucrose reward. J. Neurosci 21, 7831–7840.

- Yavich L, and MacDonald E (2000). Dopamine release from pharmacologically distinct storage pools in rat striatum following stimulation at frequency of neuronal bursting. Brain Res. 870, 73–79.

- Yeomans JS, Maidment NT, and Bunney BS (1988). Excitability properties of medial forebrain bundle axons of A9 and A10 dopamine cells. Brain Res. 450, 86–93.

- Yun IA, Wakabayashi KT, Fields HL, and Nicola SM (2004). The ventral tegmental area is required for the behavioral and nucleus accumbens neuronal firing responses to incentive cues. J. Neurosci 24, 2923–2933.
