Key Points

Question

Can computerized provider order entry events be used to identify patients who would need manual medical record review to detect postoperative complications?

Findings

In a cohort study of 21 775 patients who had undergone surgical procedures, the use of computerized provider order entry to screen for postoperative complications appeared to decrease the burden of manual medical record review by 55.4% to 90.3%.

Meaning

Monitoring computerized provider order entry events may augment manual medical record review for selected postoperative complications by excluding from review those patients with low likelihood of complications.

Abstract

Importance

Conventional approaches for tracking postoperative adverse events require manual medical record review, thus limiting the scalability of such efforts.

Objective

To determine if a surveillance system using computerized provider order entry (CPOE) events for selected medications as well as laboratory, microbiologic, and radiologic orders can decrease the manual medical record review burden for surveillance of postoperative complications.

Design, Setting, and Participants

This cohort study reviewed the medical records of 21 775 patients who underwent surgical procedures at a university-based tertiary referral center (University of Utah, Salt Lake City) from July 1, 2007, to August 31, 2017. Patients were included if their case was selected for review by a surgical clinical reviewer as part of the National Surgical Quality Improvement Program. Patients were excluded if they had incomplete follow-up data.

Main Outcomes and Measures

Thirty-day postoperative occurrences of superficial surgical site infection, deep surgical site infection, organ space surgical site infection, urinary tract infection, pneumonia, sepsis, septic shock, deep vein thrombosis requiring therapy, and pulmonary embolism, as defined by the National Surgical Quality Improvement Program. A logistic regression model was developed for each postoperative complication using CPOE features as predictors on a development set, and performance was measured on a holdout internal validation set. The models were internally validated using bootstrapping with 10 000 replications to determine the sensitivity, specificity, positive predictive value, and negative predictive value of the CPOE-based surveillance system.

Results

The study included 21 775 patients who underwent surgical procedures. Among these patients, 11 855 (54.4%) were women and 9920 (45.6%) were men, with a mean (SD) age of 51.7 (16.8) years. Overall, the prevalence of postoperative complications was low, ranging from 0.2% (pulmonary embolism) to 2.6% (superficial surgical site infection). Use of CPOE events to detect patients who experienced at least 1 complication had a sensitivity of 74.8% (95% CI, 71.1%-78.4%), specificity of 86.8% (95% CI, 85.5%-88.3%), positive predictive value of 33.8% (95% CI, 31.2%-36.4%), negative predictive value of 97.5% (95% CI, 97.1%-97.8%), and area under the curve of 0.808 (95% CI, 0.791-0.824). The negative predictive value for individual complications ranged from 98.7% to 100%. Use of CPOE events to screen for adverse events was estimated to diminish the burden of manual medical record review by 55.4% to 90.3%. A CPOE-based surveillance system performed well for both inpatient and outpatient procedures.

Conclusions and Relevance

CPOE-based surveillance of postoperative complications has a high negative predictive value, which demonstrates that this approach can augment the currently used, resource-intensive manual medical record review process.

This cohort study evaluates an alternative approach to monitoring surgical complications by reviewing medication, laboratory test, and radiologic test orders in the electronic health record for patients who just underwent a surgical procedure.

Introduction

Postoperative complication surveillance is a cornerstone of local and national surgical quality improvement efforts in the United States.1 Locally, surveillance systems are an important tool that hospitals use to track incidence of complications and the response to targeted quality improvement interventions. Nationally, surveillance systems aid payers and decision makers in developing health care policy to improve surgical care.2 One such surveillance system is the American College of Surgeons National Surgical Quality Improvement Program (NSQIP).3 The program provides hospitals the tools, training, and resources to collect 30-day risk-adjusted postoperative outcomes, with the goal of providing hospitals the methods and data to drive local quality improvement efforts. The advantage of these systems is the reliability of obtaining clinical data through manual medical record abstraction.4,5 The high-quality abstracted data allow for risk adjustment and accurate surveillance over time. However, manual data abstraction can be costly, typically requiring 1 to 2 full-time data abstractors.6 Because of the cost associated with manual data abstraction, most surveillance systems rely on either a case-based or a time-based sampling method. In addition, manual data abstraction introduces the possibility of inconsistency and variability in abstraction methods. The cost and complexity of abstraction, therefore, limit the scalability and dissemination of these systems across hospitals.

Over the past decade, hospitals and other health care organizations have widely adopted electronic health records (EHRs).7 Most US hospitals, primarily in response to the Health Information Technology for Economic and Clinical Health Act, have adopted comprehensive EHRs that have electronic physician documentation, clinical decision support, and computerized provider order entry (CPOE) capabilities. Comprehensive EHR systems have been shown to decrease adverse events and improve compliance with antibiotic prescribing guidelines and venous thromboembolism prophylaxis protocol.8,9,10,11

The dissemination of EHRs has created an opportunity for developing automated surveillance systems for a variety of conditions.12,13 Surveillance systems based on both structured EHR data and unstructured EHR data using natural language processing (NLP) have been shown to achieve reasonable performance in detecting postoperative complications.14,15 However, the complexity of these systems and lack of interoperability currently make their generalizability across health care systems difficult.16 In addition, these systems have not performed sufficiently well to eliminate the need for manual medical record review.17 However, a hybrid model is possible in which EHR data are used to improve the manual review process.

The goal of the present study is to determine if a surveillance system using common CPOE features derived from routine clinical care can assist surgical clinical reviewers (SCRs) with identifying appropriate medical records to manually review for postoperative complications. We hypothesize that a CPOE-based surveillance system can be used to exclude patients with a low likelihood of complications and thus substantially decrease the medical record review burden of monitoring postoperative complications without compromising accuracy.

Methods

The institutional review board of the University of Utah approved this study and, because the study used only existing data, granted a waiver of informed consent.

Study Design

We performed a retrospective cohort study of patients who underwent surgical procedures at a university-based tertiary referral center (University of Utah, Salt Lake City) from July 1, 2007, to August 31, 2017. Patients were included if their case was selected for review by an NSQIP-trained SCR. Briefly, as part of the NSQIP (which has been described previously18), the SCRs are trained in the NSQIP methods and definitions to examine the medical records of a random selection of patients who had surgical procedures. The NSQIP sampling is based on an 8-day cycle, which is designed to prevent bias in choosing cases for assessment. The SCRs analyze medical records for perioperative complications that occur within 30 days of the operation. Patients with incomplete follow-up information in the EHR are contacted to complete a 30-day follow-up. Patients were excluded from the present study if they had incomplete follow-up data.

Data Collection

The University of Utah has maintained an EHR with CPOE capability for both inpatient and outpatient encounters since 2007, and the data are stored in the university enterprise data warehouse. For this study, we obtained all electronic orders for patients in the enterprise data warehouse, including orders for selected medications (eg, antibiotics, anticoagulants, neuromuscular relaxants, and vasopressors), laboratory tests (eg, basic metabolic panel, complete blood cell count, blood glucose, prothrombin time, arterial blood gas, and vancomycin level), microbiologic orders (eg, urine culture, wound culture, respiratory culture, and blood culture), and radiologic orders (eg, chest, abdomen, and pelvis computed tomography; duplex venous ultrasonography; abscess drainage; and image-guided procedures); see eTable 1 in the Supplement for CPOE elements included in the final models. Orders that were temporary, canceled, or not completed were excluded. We elected to include only the orders placed 2 to 30 days after the date of operation. This limitation was aimed at excluding common postoperative order sets used as part of routine care, such as perioperative antibiotics. Individual orders were treated as binary variables, either present if at least 1 order was placed during the postoperative period or absent if no specific order was placed.
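The windowing and binarization rule described above can be sketched as follows. This is a minimal illustration, not the study's code: the record layout `(order_name, order_date, status)`, the status string `"completed"`, and the order names are all hypothetical.

```python
from datetime import date

def order_features(operation_date, orders, feature_names, first_day=2, last_day=30):
    """Return {feature: 0 or 1}; 1 means at least one completed order of that
    type was placed first_day..last_day days after the operation."""
    features = {name: 0 for name in feature_names}
    for name, order_date, status in orders:
        # Temporary, canceled, or not completed orders are excluded.
        # The status vocabulary here is an assumption.
        if status != "completed":
            continue
        days_after = (order_date - operation_date).days
        if first_day <= days_after <= last_day and name in features:
            features[name] = 1
    return features

operation = date(2015, 3, 1)
orders = [
    ("cefazolin", date(2015, 3, 1), "completed"),        # day 0: routine perioperative order, outside window
    ("wound_culture", date(2015, 3, 9), "completed"),    # day 8: counted
    ("chest_ct", date(2015, 3, 12), "canceled"),         # canceled: excluded
    ("blood_culture", date(2015, 4, 15), "completed"),   # day 45: outside window
]
result = order_features(
    operation, orders, ["cefazolin", "wound_culture", "chest_ct", "blood_culture"]
)
print(result)
```

Only the wound culture is flagged in this toy example; the day-0 antibiotic is ignored, which is the intent of starting the window on postoperative day 2.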

Outcomes

Guided by clinical experience and the literature, we elected a priori to examine 9 common postoperative complications: superficial surgical site infection (SSI), deep SSI, organ space SSI, urinary tract infection, pneumonia, sepsis, septic shock, deep vein thrombosis (DVT) requiring therapy, and pulmonary embolism (PE). In addition, we created a composite outcome of any complication if at least 1 of these complications was present. These complications were defined according to standard NSQIP definitions (which differ from National Healthcare Safety Network and Agency for Healthcare Research and Quality definitions) and were chosen because of the complexity of screening for them, in contrast to complications associated with easily identifiable elements in the EHR, such as acute myocardial infarction with troponin elevation. Outcomes were modeled as binary variables (present or absent).

Statistical Analysis

Analysis was performed with Python, version 3.1 (Python Software Foundation) and R Statistical software package (R Foundation for Statistical Computing). Univariate analysis was performed using χ2 tests for discrete variables. Model comparisons were performed using the test for equality of proportions to calculate 2-sided P values. P < .05 was used to indicate statistical significance.

We modeled the association of orders with each individual binary outcome using multivariable logistic regression. For CPOE feature selection, we used recursive feature elimination with 10-fold cross-validation.19 We used bootstrapping with downsampling as a model selection technique.20 Features associated with each individual outcome in more than 70% of 500 random samples were retained in the model. During each iteration, model fit was assessed on the basis of area under the curve (AUC), and the top-performing features were retained in the model.

We created classification models for each outcome of interest (the 9 postoperative complications) using multivariable logistic regression. We categorized CPOE features as binary variables (present or absent) and used these features as independent variables in each multivariable logistic regression model. We did not assess any interaction terms in model development. The features for each outcome are listed in eTable 2 in the Supplement. We trained logistic regression models for each outcome using bootstrapping with downsampling.20,21 During each iteration, the data set was randomly split into a development set (67%) and a validation set (33%). The development set was then randomly downsampled to create a 1:1 ratio of no complication to complication. The model was trained on the downsampled data set to obtain the feature coefficients. The trained model was then tested on the full internal validation set to obtain classification performance for these metrics: sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), area under the receiver operating characteristic curve, number needed to screen, and percent reduction in medical record review.22 We performed 10 000 bootstrap iterations to generate the mean feature coefficients, mean classification performance, and 95% CI for each metric.
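One iteration of the split-and-downsample step can be sketched as below. This is an illustrative sketch using only the Python standard library on synthetic labels; the function name and the 8% synthetic prevalence are assumptions, and the logistic regression fit itself is omitted (any standard implementation could be trained on the returned development indices and scored on the untouched validation indices).

```python
import random

def split_and_downsample(labels, rng, dev_fraction=0.67):
    """One iteration: random development/validation split, then downsample the
    development set to a 1:1 ratio of no complication to complication.
    Returns (downsampled development indices, validation indices)."""
    indices = list(range(len(labels)))
    rng.shuffle(indices)
    n_dev = round(dev_fraction * len(indices))
    dev, val = indices[:n_dev], indices[n_dev:]

    positives = [i for i in dev if labels[i] == 1]
    negatives = [i for i in dev if labels[i] == 0]
    n = min(len(positives), len(negatives))
    # Keep n cases from each class; the validation set is left at its natural prevalence.
    balanced_dev = rng.sample(positives, n) + rng.sample(negatives, n)
    return balanced_dev, val

# Synthetic cohort: 1000 patients, 80 with a complication (hypothetical numbers).
labels = [1] * 80 + [0] * 920
rng = random.Random(0)
balanced_dev, val = split_and_downsample(labels, rng)

n_cases = sum(labels[i] for i in balanced_dev)
print(len(balanced_dev), n_cases, len(val))
```

Repeating this step many times with a fresh random split, and averaging the coefficients and validation metrics, gives the bootstrap estimates described in the text. Evaluating on the full validation set matters because PPV and NPV depend on prevalence, which downsampling distorts.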

The number needed to screen was defined as the number of medical records that needed to be manually reviewed to identify 1 complication and was calculated as 1/PPV. We defined the percent reduction in medical record review as the percentage decrease in the number of records to analyze if only the records flagged by the CPOE-based model were evaluated. We started by calculating the number of CPOE-flagged records to review, which was equal to (True Positives + False Positives), which in turn was equal to (Prevalence × Total Number)/PPV. The percent reduction in medical record review was then calculated as (Total Medical Records – Flagged Medical Records)/Total Medical Records.
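The two definitions above reduce to short formulas. The sketch below applies them, as stated in the text, to the rounded prevalence and PPV reported for the composite outcome (8.2% and 33.8%); the function names are illustrative.

```python
def number_needed_to_screen(ppv):
    """Records that must be reviewed to find 1 complication: 1 / PPV."""
    return 1.0 / ppv

def percent_reduction_in_review(prevalence, ppv):
    """Percent reduction if only flagged records are reviewed.
    Flagged fraction = (Prevalence x Total) / PPV / Total = prevalence / ppv."""
    flagged_fraction = prevalence / ppv
    return 100.0 * (1.0 - flagged_fraction)

nns = number_needed_to_screen(0.338)
reduction = percent_reduction_in_review(0.082, 0.338)
print(round(nns, 1), round(reduction, 1))
```

With these rounded inputs the results are about 3.0 records per complication and a reduction of about 75.7%, close to the composite-outcome values reported in Table 2; small differences are expected because the reported figures are bootstrap means of unrounded quantities. Note that True Positives + False Positives equals True Positives/PPV exactly, and True Positives equals Sensitivity × Prevalence × Total Number, so the expression used here matches the flagged count only when sensitivity is near 100%; otherwise it overstates the flagged count, which makes the estimated reduction conservative.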

Sensitivity Analysis

We performed 2 sensitivity analyses using our predictive models on the full data set. In the first sensitivity analysis, we varied the probability threshold for the classification of each complication from 10% to 90% to generate area under the receiver operating characteristic curves for the full data set.22 In the second sensitivity analysis, we split the data set into inpatient and outpatient surgical procedures and measured the classification performance (sensitivity, specificity, PPV, NPV, and AUC) for each subset. Using the test of equality of proportion, we determined whether the sensitivity and specificity of the models were the same between inpatient and outpatient procedures.
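The first sensitivity analysis, varying the probability threshold from 10% to 90%, can be illustrated with the sketch below. The predicted probabilities and labels are made-up toy values, and the helper name is hypothetical; the point is only how each threshold yields its own sensitivity, specificity, PPV, and NPV.

```python
def metrics_at_threshold(probabilities, labels, threshold):
    """Classify a record as flagged when its predicted probability is at or
    above the threshold, then compute the standard 2x2 table metrics."""
    tp = fp = tn = fn = 0
    for p, y in zip(probabilities, labels):
        flagged = p >= threshold
        if flagged and y == 1:
            tp += 1
        elif flagged:
            fp += 1
        elif y == 1:
            fn += 1
        else:
            tn += 1

    def ratio(numerator, denominator):
        return numerator / denominator if denominator else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }

# Toy predicted probabilities and true outcomes (hypothetical values).
probs = [0.05, 0.15, 0.30, 0.45, 0.60, 0.80, 0.95, 0.20]
labels = [0, 0, 0, 1, 0, 1, 1, 1]

# Thresholds 0.1, 0.2, ..., 0.9, mirroring the 10% to 90% range in the text.
sweep = {t / 10: metrics_at_threshold(probs, labels, t / 10) for t in range(1, 10)}
for threshold, m in sweep.items():
    print(threshold, round(m["sensitivity"], 2), round(m["specificity"], 2))
```

Plotting sensitivity against 1 − specificity across the thresholds traces a receiver operating characteristic curve; low thresholds favor sensitivity and high thresholds favor specificity.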

Results

Univariate Analysis

We identified 21 775 patients who underwent surgical procedures from July 1, 2007, to August 31, 2017. Of these patients, 11 855 (54.4%) were women and 9920 (45.6%) were men, with a mean (SD) age of 51.7 (16.8) years. Patient and operative characteristics are shown in Table 1. The patients were predominantly female (11 855 [54.4%]), were white (16 695 [76.7%]), and had American Society of Anesthesiologists Physical Status Classification 2 (11 005 [50.5%]). Gastrointestinal (15 492 [71.1%]), genitourinary (56 [0.3%]), and gynecologic (29 [0.1%]) procedures as well as skin and soft tissue procedures (5368 [24.7%]) were the most common. Most procedures were nonemergent (19 183 [88.1%]) and resulted in a postoperative inpatient admission (11 257 [51.7%]). Overall, the incidence of postoperative complications ranged from 0.2% (PE) to 2.6% (superficial SSI).

Table 1. Baseline Characteristics of Study Sample.

| Variable | No. (%) |

|---|---|

| All patients, No. | 21 775 |

| Patient characteristic | |

| Age, mean (SD), y | 51.7 (16.8) |

| Female sex | 11 855 (54.4) |

| Race/ethnicity (a) | |

| American Indian or Alaska Native | 294 (1.3) |

| Asian | 257 (1.2) |

| Black or African American | 210 (1.0) |

| Native Hawaiian or Other Pacific Islander | 82 (0.4) |

| Unknown/not reported | 4185 (19.2) |

| White | 16 695 (76.7) |

| Hispanic | 1137 (5.1) |

| ASA classification (b) | |

| ASA 1 - No disturbance | 2841 (13.1) |

| ASA 2 - Mild disturbance | 11 005 (50.6) |

| ASA 3 - Severe disturbance | 6944 (31.9) |

| ASA 4 - Life threatening | 917 (4.2) |

| ASA 5 - Moribund | 60 (0.3) |

| Procedure characteristic | |

| Category | |

| Endocrine | 290 (1.3) |

| Gastrointestinal | 15 492 (71.1) |

| Genitourinary | 56 (0.3) |

| Gynecologic | 29 (0.1) |

| Skin and soft tissue | 5368 (24.7) |

| Thoracic | 16 (0.1) |

| Vascular | 512 (2.4) |

| Outpatient procedure | 10 527 (48.3) |

| Emergency procedure | 2583 (11.9) |

| Wound classification | |

| Clean | 10 847 (49.8) |

| Clean/contaminated | 7070 (32.5) |

| Contaminated | 2056 (9.4) |

| Dirty/infected | 1802 (8.3) |

| Outcomes | |

| Superficial SSI | 559 (2.6) |

| Deep SSI | 76 (0.3) |

| Organ space SSI | 494 (2.3) |

| UTI | 302 (1.4) |

| Pneumonia | 210 (1.0) |

| Sepsis | 399 (1.8) |

| Septic shock | 192 (0.9) |

| Deep vein thrombosis | 121 (0.6) |

| Pulmonary embolism | 54 (0.2) |

| Any complication | 1795 (8.2) |

Abbreviations: ASA, American Society of Anesthesiologists; SSI, surgical site infection; UTI, urinary tract infection.

(a) Some patients had more than one category of race/ethnicity.

(b) The ASA status of 8 patients was missing.

The use of postoperative orders is shown in eTable 1 in the Supplement. Patients who experienced at least 1 included complication were more likely to have at least 1 laboratory order (odds ratio [OR], 12.2; 95% CI, 10.6-14.0), microbiologic order (OR, 13.6; 95% CI, 12.2-15.1), radiologic order (OR, 8.54; 95% CI, 7.7-9.5), or medication order (OR, 12.3; 95% CI, 10.5-14.5).

Multivariable Classification Models

We developed a multivariable logistic regression classification model for each individual complication using postoperative orders as the model covariates. The final model for each outcome is shown in eTable 2 in the Supplement. The number of features in each model ranged from 2 (PE and deep SSI) to 14 (any complication). The features in each model correlated clinically with each complication. For example, to classify a deep SSI, an order for wound culture (adjusted OR [aOR], 10.1; 95% CI, 3.4-33.6) and an order for glycopeptide antibiotic (aOR, 9.7; 95% CI, 3.7-37.7) were independently associated with the outcome. To classify a PE, a chest computed tomography order (aOR, 7.1; 95% CI, 2.1-24.7) and an anticoagulation order (aOR, 7.5; 95% CI, 2.9-21.2) were independently associated with the outcome.

Performance

The performance of the classification models on 10 000 bootstrap iterations is shown in Table 2. The model to predict at least 1 complication had a sensitivity of 74.8% (95% CI, 71.1%-78.4%), specificity of 86.8% (95% CI, 85.5%-88.3%), AUC of 0.808 (95% CI, 0.791-0.824), PPV of 33.8% (95% CI, 31.2%-36.4%), and NPV of 97.5% (95% CI, 97.1%-97.8%). Among the individual complications, the sensitivity was highest for DVT (89.0%; 95% CI, 79.3%-97.6%) and specificity was highest for organ space SSI (92.9%; 95% CI, 91.4%-94.5%). The classification model for organ space SSI performed best (AUC, 0.907; 95% CI, 0.884-0.928), and the classification model for superficial SSI performed worst (AUC, 0.671; 95% CI, 0.651-0.715).

Table 2. Bootstrap Performance of Postoperative Complication Surveillance Models.

| Complication | Prevalence, % | Sensitivity, % (95% CI) | Specificity, % (95% CI) | PPV (95% CI) | NPV (95% CI) | AUC (95% CI) | Reduction in Medical Record Review, % (95% CI) | NNS |

|---|---|---|---|---|---|---|---|---|

| Superficial SSI | 2.6 | 64.0 (55.4-74.3) | 72.8 (65.1-76.3) | 5.9 (4.7-7.0) | 98.7 (98.4-99.0) | 0.671 (0.651-0.715) | 56.5 (45.1-63.6) | 17.0 (14.2-21.4) |

| Deep SSI | 0.3 | 71.1 (56.0-85.7) | 92.5 (92.0-93.0) | 3.2 (2.0-4.5) | 99.9 (99.8-100) | 0.818 (0.742-0.891) | 89.1 (82.9-92.2) | 31.1 (22.5-49.0) |

| Organ space SSI | 2.3 | 88.6 (83.3-93.3) | 92.9 (91.4-94.5) | 22.5 (18.7-27.3) | 99.7 (99.6-99.8) | 0.907 (0.884-0.928) | 90.0 (87.9-91.7) | 4.4 (3.7-5.3) |

| UTI | 1.4 | 86.9 (79.6-93.3) | 89.2 (86.0-91.9) | 10.4 (7.5-13.8) | 99.8 (99.7-99.9) | 0.880 (0.852-0.907) | 86.7 (81.5-90.0) | 9.6 (7.2-13.3) |

| Pneumonia | 1.0 | 87.3 (78.9-94.2) | 90.6 (89.3-93.3) | 8.4 (6.5-10.4) | 99.9 (99.8-99.9) | 0.890 (0.850-0.923) | 88.6 (85.1-90.8) | 11.9 (9.6-15.5) |

| Sepsis | 1.8 | 88.9 (82.8-94.0) | 87.4 (85.7-89.9) | 11.5 (9.5-14.1) | 99.8 (99.6-99.9) | 0.881 (0.855-0.904) | 84.3 (81.0-87.2) | 8.7 (7.1-10.5) |

| Septic shock | 0.9 | 86.3 (77.0-93.8) | 92.5 (91.8-93.2) | 9.2 (7.3-11.1) | 99.9 (99.8-99.9) | 0.894 (0.850-0.931) | 90.5 (88.0-92.1) | 10.8 (9.0-13.6) |

| DVT | 0.6 | 89.0 (79.3-97.6) | 83.0 (75.8-85.9) | 2.9 (1.8-4.0) | 99.9 (99.9-100) | 0.860 (0.817-0.901) | 81.1 (69.0-86.2) | 34.1 (24.9-55.9) |

| PE | 0.2 | 86.1 (47.1-100) | 77.3 (74.0-96.0) | 1.1 (0.5-3.7) | 100.0 (99.9-100) | 0.817 (0.712-0.876) | 77.7 (54.7-93.3) | 89.8 (27.0-182.8) |

| Any complication | 8.2 | 74.8 (71.1-78.4) | 86.8 (85.5-88.3) | 33.8 (31.2-36.4) | 97.5 (97.1-97.8) | 0.808 (0.791-0.824) | 75.6 (73.6-77.4) | 3.0 (2.7-3.2) |

Abbreviations: AUC, area under the curve; DVT, deep vein thrombosis; NNS, number needed to screen; NPV, negative predictive value; PE, pulmonary embolism; PPV, positive predictive value; SSI, surgical site infection; UTI, urinary tract infection.

The value in using CPOE events for surveillance of postoperative complications is demonstrated in the NPV for each model. The NPV ranged from 98.7% (superficial SSI) to 100% (PE), which resulted in a false omission rate (1 – NPV) of 0% to 1.4%. If willing to accept this low false omission rate, SCRs would need to evaluate only those patient records flagged by each model, and the medical record review burden would decrease by 55.4% to 90.3%. The number of medical records needed to be screened to identify 1 complication would be reduced from between 39 and 403 to between 5 and 90 with the use of a predictive model that incorporates postoperative orders (Table 2).
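The baseline figures of 39 and 403 records appear to correspond to the reciprocal of the observed prevalence, that is, total patients divided by the number of cases. The sketch below reproduces them from the Table 1 counts under that assumption; the variable names are illustrative.

```python
total_patients = 21775

# Case counts taken from Table 1 for the most and least common complications.
cases = {"superficial SSI": 559, "PE": 54}

# Without a model, every record is reviewed, so the expected number of
# records per detected complication is total / cases (1 / prevalence).
baseline_nns = {name: total_patients / n for name, n in cases.items()}

for name, value in baseline_nns.items():
    print(name, round(value, 1))
```

This gives roughly 39 records per superficial SSI and roughly 403 per PE, which can be compared with the model-based numbers needed to screen in Table 2.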

Sensitivity Analysis

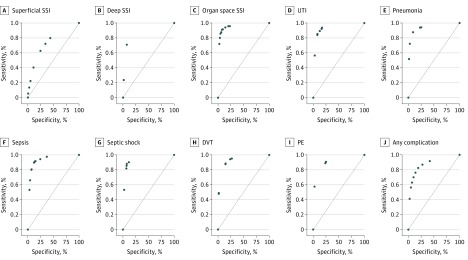

We performed 2 sensitivity analyses to demonstrate the robustness of the method. First, we varied the probability threshold for each model to determine its association with classification performance. The resulting receiver operating characteristic curves are shown in the Figure. Changing the probability threshold for each model can be used to maximize sensitivity (minimize false-negatives) or maximize specificity (decrease manual medical record review). For example, decreasing the classification probability threshold for organ space SSI to 10% would result in a sensitivity of 96.5% and NPV of 99.9%, thus minimizing the false-negative classification. Increasing the probability threshold to 90% would result in a specificity of 97.5% and PPV of 38.9%, thus decreasing the medical record review burden.

Figure. Receiver Operating Characteristic Curves for Computerized Provider Order Entry System–Based Postoperative Complication Surveillance Models.

Receiver operating characteristic curves. DVT indicates deep vein thrombosis; PE, pulmonary embolism; SSI, surgical site infection; and UTI, urinary tract infection.

One potential disadvantage of using CPOE events from the EHR is the uncertainty of capturing orders from outpatient visits outside the initial episode of care. To assess and compare the performance of the models in the outpatient setting, we performed a sensitivity analysis by applying each model to the entire data set stratified by inpatient and outpatient operations (Table 3). In general, the specificity for each model statistically significantly increased for outpatient procedures compared with inpatient procedures. For most models, no substantial change in sensitivity was found between inpatient and outpatient operations.

Table 3. Sensitivity Analysis on Total Study Sample by Inpatient and Outpatient Procedures.

| Outcome | Prevalence, %: Inpatient | Prevalence, %: Outpatient | Sensitivity, %: Inpatient | Sensitivity, %: Outpatient | P Value (Sensitivity) | Specificity, %: Inpatient | Specificity, %: Outpatient | P Value (Specificity) |

|---|---|---|---|---|---|---|---|---|

| Superficial SSI | 3.8 | 1.3 | 69.6 | 41.0 | <.001 | 58.5 | 93.0 | <.001 |

| Deep SSI | 0.6 | 0.1 | 73.8 | 54.5 | .34 | 86.7 | 98.7 | <.001 |

| Organ space SSI | 4.1 | 0.3 | 91.5 | 82.4 | .14 | 86.0 | 98.7 | <.001 |

| UTI | 2.4 | 0.3 | 90.0 | 83.9 | .45 | 78.5 | 96.3 | <.001 |

| Pneumonia | 1.8 | 0.1 | 88.9 | 63.6 | .05 | 83.0 | 99.1 | <.001 |

| Sepsis | 3.2 | 0.3 | 91.4 | 68.8 | .002 | 78.1 | 98.4 | <.001 |

| Septic shock | 1.7 | 0.1 | 88.7 | 66.7 | .32 | 85.9 | 99.0 | <.001 |

| DVT | 1.0 | 0.1 | 89.9 | 75.0 | .29 | 72.9 | 98.2 | <.001 |

| PE | 0.4 | 0.1 | 93.2 | 80.0 | .48 | 56.4 | 94.3 | <.001 |

| Any complication | 13.7 | 2.4 | 81.1 | 46.9 | <.001 | 74.9 | 96.9 | <.001 |

Abbreviations: DVT, deep vein thrombosis; PE, pulmonary embolism; SSI, surgical site infection; UTI, urinary tract infection.
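The P values in Table 3 come from a test of equality of proportions. As a rough illustration of that kind of test, the sketch below implements a pooled two-proportion z test with a 2-sided normal P value. This is an assumption about the general form only: the article does not state whether a continuity correction was applied, and the counts used here are hypothetical, not study data.

```python
import math

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Pooled two-proportion z test (no continuity correction).
    Returns (z statistic, 2-sided P value)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    # For a standard normal Z, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical example: 90 of 100 inpatient cases flagged vs 75 of 100 outpatient cases.
z, p_value = two_proportion_z_test(90, 100, 75, 100)
print(round(z, 2), round(p_value, 4))
```

When the number of complications in a stratum is small, as for several outpatient outcomes, a large difference in sensitivity can still yield a nonsignificant P value.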

Discussion

We have shown that the CPOE events from the EHR can be used as a tool to aid medical record review for surveillance of postoperative complications. The novel and important findings of the present study include the (1) simplicity in using clinically relevant CPOE events for postoperative complication surveillance, (2) high NPV for all complications examined, and (3) generalizability for both inpatient and outpatient procedures. Each detection model incorporated 2 to 14 common orders; however, substantial variation in performance was found within the 9 complications examined. Superficial SSI detection had the worst performance with 64% sensitivity (AUC, 0.671) and 73% specificity. Meanwhile, DVT had the highest sensitivity (89.0%), and organ space SSI had the highest specificity (92.9%). Overall, the use of CPOE features to detect patients experiencing at least 1 complication had a sensitivity of 74.8%, specificity of 86.8%, and AUC of 0.808. The method had a similar performance between inpatient and outpatient procedures.

Previous studies have demonstrated the value of using EHR data for complication surveillance. A study by Branch-Elliman et al13 developed a prediction model for SSI using several clinical variables. The AUC of the model was 0.93, with an NPV of 98% for patients with very low probability of complications. Pindyck et al17 subsequently validated the Branch-Elliman et al13 model in a second independent data set and found similar performance (AUC, 0.86; NPV, 98.2%-99.6%).

We separated individual SSI subtypes because of the differences in clinical presentation. Our model for organ space SSI included similar clinical variables with similar performance (AUC, 0.907). Our model for superficial SSI included similar variables but had lower classification performance (AUC, 0.671). Both models for organ space SSI and superficial SSI had excellent ability to exclude patients with low risk for complications (NPV, 99.7% vs 98.6%; Table 2). Our study did not include the use of International Classification of Diseases, Ninth Revision or International Statistical Classification of Diseases and Related Health Problems, Tenth Revision codes because of the delay in obtaining these elements and the variable accuracy of these codes for surveillance. We have expanded on this work to include other postoperative complications such as urinary tract infection (UTI), pneumonia, DVT, and PE.

Alternative approaches to postoperative complication surveillance involve the use of NLP. In 2 studies by Murff et al15 and FitzHenry et al,23 an NLP-based system for detecting postoperative complications was developed in the Veterans Health Administration system. These complications included acute renal failure, cardiac arrest, DVT, acute myocardial infarction, pneumonia, PE, sepsis, UTI, and SSI. Overall, the sensitivity of this NLP-based surveillance system ranged from 56% to 95%, and its specificity ranged from 63% to 97%. The system performed best for cardiac arrest and worst for wound infections, and it compared inpatient and outpatient surgical procedures with similar performance in each group. Potential disadvantages of an NLP-based system include the difficulty of portability or transferring such a system between EHRs. Current efforts to port NLP algorithms involve the clinical document architecture–based ontologies; however, most EHR vendors have not adopted these paradigms.24 The CPOE-based surveillance approach we used does not require any substantial preprocessing and could be integrated with common clinical decision support systems.

An important advantage of a CPOE-based surveillance approach is the ability to detect complications that occur after hospital discharge. In a study by Merkow et al,25 most readmissions after surgical procedures were related to new-onset complications after discharge. This study demonstrates that a substantial number of complications occur after hospital discharge, which represent important reasons for readmission. These complications are an ideal target for hospital quality improvement initiatives. The advantage of using EHR-based surveillance systems is the ability to include data from both inpatient and outpatient sources. Structured data, such as International Classification of Diseases, Ninth Revision or International Statistical Classification of Diseases and Related Health Problems, Tenth Revision codes, are not suited for identifying these complications because these codes are collected after the episode of care.5 Our approach performs well for both inpatient and outpatient procedures and improves specificity for outpatient procedures. This improved specificity likely reflects the decreased prevalence of complications in this population. However, our results demonstrate the possibility that a missing order in the outpatient setting would not negatively affect the usefulness of the approach.

Our goal was to identify approaches that aid in detecting postoperative complications; thus, we elected not to include risk factors for complications in our models. Several risk factors for postoperative complications have been identified, including procedure-specific complication risks, American Society of Anesthesiologists Physical Status Classification, and patient comorbidities.12 The purpose of demonstrating the use of CPOE events was to show the ability to perform not risk prediction, which still requires accurate medical record review,18 but case detection, regardless of the underlying patient risk. Integrating both patient and procedure risk factors into the prediction model will likely improve the performance at a modest cost of increasing the complexity of the underlying model. In addition, it will increase the technical complexity of disseminating this approach across EHR systems.

The implication of this study is the ability to considerably decrease the medical record review burden for postoperative complications surveillance. Decreasing the effort required to perform manual review allows hospitals and other health care organizations to perform close to 100% complications surveillance compared with the currently used sampling method. By combining our approach with NSQIP, hospitals can identify targets for quality improvement efforts using NSQIP hospital comparison reports. Complications identified as high outliers at a given hospital system can then be collected using CPOE-based surveillance. This process will allow hospitals to identify priorities for quality improvement efforts and implement evidence-based strategies to improve clinical care. In addition, after quality improvement efforts have been implemented, the CPOE-based approach can be used to monitor progress after implementation.

Limitations

This study is limited by being conducted at a single academic health care system and lacking external validation in a more expansive secondary data set. We attempted to demonstrate the generalizability of our approach by splitting the data into development and validation sets with 10 000 bootstrap iterations. We also defined complications according to standard NSQIP definitions. In addition, no standardized terminologies exist for most nonmedication orders; thus, developing a surveillance system that could easily be used with commercial EHR vendors may be difficult.

Conclusions

We have demonstrated the utility of CPOE events for surveillance of postoperative complications. This approach reduces the need for manual medical record review and allows targeted surveillance strategies for postoperative complications. In addition, it performs well for inpatient and outpatient surgical procedures. Future efforts will focus on further standardizing this approach and validating it on a larger scale.

eTable 1. Postoperative Order Utilization by Complication

eTable 2. Multivariable Logistic Regression Models and Feature Coefficients for Each Complication

References

1. Battles JB, Farr SL, Weinberg DA. From research to nationwide implementation: the impact of AHRQ’s HAI prevention program. Med Care. 2014;52(2)(suppl 1):S91-S96. doi: 10.1097/MLR.0000000000000037

2. West N, Eng T. Monitoring and reporting hospital-acquired conditions: a federalist approach. Medicare Medicaid Res Rev. 2015;4(4):E1-E16. doi: 10.5600/mmrr.004.04.a04

3. Hall BL, Hamilton BH, Richards K, Bilimoria KY, Cohen ME, Ko CY. Does surgical quality improve in the American College of Surgeons National Surgical Quality Improvement Program: an evaluation of all participating hospitals. Ann Surg. 2009;250(3):363-376.

4. Ju MH, Ko CY, Hall BL, Bosk CL, Bilimoria KY, Wick EC. A comparison of 2 surgical site infection monitoring systems. JAMA Surg. 2015;150(1):51-57. doi: 10.1001/jamasurg.2014.2891

5. Stevenson KB, Khan Y, Dickman J, et al. Administrative coding data, compared with CDC/NHSN criteria, are poor indicators of health care-associated infections. Am J Infect Control. 2008;36(3):155-164. doi: 10.1016/j.ajic.2008.01.004

6. Hollenbeak CS, Boltz MM, Wang L, et al. Cost-effectiveness of the National Surgical Quality Improvement Program. Ann Surg. 2011;254(4):619-624. doi: 10.1097/SLA.0b013e318230010a

7. Adler-Milstein J, Jha AK. HITECH Act drove large gains in hospital electronic health record adoption. Health Aff (Millwood). 2017;36(8):1416-1422. doi: 10.1377/hlthaff.2016.1651

8. Classen DC, Resar R, Griffin F, et al. ‘Global trigger tool’ shows that adverse events in hospitals may be ten times greater than previously measured. Health Aff (Millwood). 2011;30(4):581-589. doi: 10.1377/hlthaff.2011.0190

9. Samore MH, Bateman K, Alder SC, et al. Clinical decision support and appropriateness of antimicrobial prescribing: a randomized trial. JAMA. 2005;294(18):2305-2314. doi: 10.1001/jama.294.18.2305

10. Borab ZM, Lanni MA, Tecce MG, Pannucci CJ, Fischer JP. Use of computerized clinical decision support systems to prevent venous thromboembolism in surgical patients: a systematic review and meta-analysis. JAMA Surg. 2017;152(7):638-645. doi: 10.1001/jamasurg.2017.0131

11. Haut ER, Lau BD, Kraenzlin FS, et al. Improved prophylaxis and decreased rates of preventable harm with the use of a mandatory computerized clinical decision support tool for prophylaxis for venous thromboembolism in trauma. Arch Surg. 2012;147(10):901-907. doi: 10.1001/archsurg.2012.2024

12. Anderson JE, Chang DC. Using electronic health records for surgical quality improvement in the era of big data. JAMA Surg. 2015;150(1):24-29. doi: 10.1001/jamasurg.2014.947

13. Branch-Elliman W, Strymish J, Itani KM, Gupta K. Using clinical variables to guide surgical site infection detection: a novel surveillance strategy. Am J Infect Control. 2014;42(12):1291-1295. doi: 10.1016/j.ajic.2014.08.013

14. Hu Z, Melton GB, Moeller ND, et al. Accelerating chart review using automated methods on electronic health record data for postoperative complications. AMIA Annu Symp Proc. 2017;2016:1822-1831.

15. Murff HJ, FitzHenry F, Matheny ME, et al. Automated identification of postoperative complications within an electronic medical record using natural language processing. JAMA. 2011;306(8):848-855. doi: 10.1001/jama.2011.1204

16. Martínez-Costa C, Kalra D, Schulz S. Improving EHR semantic interoperability: future vision and challenges. Stud Health Technol Inform. 2014;205:589-593.

17. Pindyck T, Gupta K, Strymish J, et al. Validation of an electronic tool for flagging surgical site infections based on clinical practice patterns for triaging surveillance: operational successes and barriers. Am J Infect Control. 2018;46(2):186-190. doi: 10.1016/j.ajic.2017.08.026

18. Shiloach M, Frencher SK Jr, Steeger JE, et al. Toward robust information: data quality and inter-rater reliability in the American College of Surgeons National Surgical Quality Improvement Program. J Am Coll Surg. 2010;210(1):6-16. doi: 10.1016/j.jamcollsurg.2009.09.031

19. Guyon I, Weston J, Barnhill S, Vapnik V. Gene selection for cancer classification using support vector machines. Mach Learn. 2002;46(1):389-422. doi: 10.1023/A:1012487302797

20. Lin WJ, Chen JJ. Class-imbalanced classifiers for high-dimensional data. Brief Bioinform. 2013;14(1):13-26. doi: 10.1093/bib/bbs006

21. Austin PC, Tu JV. Bootstrap methods for developing predictive models. Am Stat. 2004;58(2):131-137. doi: 10.1198/0003130043277

22. Hanley JA, McNeil BJ. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology. 1982;143(1):29-36. doi: 10.1148/radiology.143.1.7063747

23. FitzHenry F, Murff HJ, Matheny ME, et al. Exploring the frontier of electronic health record surveillance: the case of postoperative complications. Med Care. 2013;51(6):509-516. doi: 10.1097/MLR.0b013e31828d1210

24. Oniki TA, Zhuo N, Beebe CE, et al. Clinical element models in the SHARPn consortium. J Am Med Inform Assoc. 2016;23(2):248-256. doi: 10.1093/jamia/ocv134

25. Merkow RP, Ju MH, Chung JW, et al. Underlying reasons associated with hospital readmission following surgery in the United States. JAMA. 2015;313(5):483-495. doi: 10.1001/jama.2014.18614
