Abstract

High-content biological microscopy targets high-resolution imaging across large fields-of-view (FOVs). Recent works have demonstrated that computational imaging can provide efficient solutions for high-content microscopy. Here, we use speckle structured illumination microscopy (SIM) as a robust and cost-effective solution for high-content fluorescence microscopy with simultaneous high-content quantitative phase (QP). This multi-modal compatibility is essential for studies requiring cross-correlative biological analysis. Our method uses laterally-translated Scotch tape to generate high-resolution speckle illumination patterns across a large FOV. Custom optimization algorithms then jointly reconstruct the sample’s super-resolution fluorescent (incoherent) and QP (coherent) distributions, while digitally correcting for system imperfections such as unknown speckle illumination patterns, system aberrations and pattern translations. Beyond previous linear SIM works, we achieve resolution gains of 4× the objective’s diffraction-limited native resolution, resulting in 700 nm fluorescence and 1.2 μm QP resolution, across a FOV of 2 × 2.7 mm², giving a space-bandwidth product (SBP) of 60 megapixels.

1. Introduction

The space-bandwidth product (SBP) metric characterizes information content transmitted through an optical system; it can be thought of as the number of resolvable points in an image (i.e. the system’s field-of-view (FOV) divided by the size of its point spread function (PSF) [1, 2]). Typical microscopes collect images with SBPs of <20 megapixels, a practical limit set by the systems’ optical design and camera pixel count. For large-scale biological studies in systems biology and drug discovery, fast high-SBP imaging is desired [3–10]. The traditional solution for increasing SBP is to use an automated translation stage to scan the sample laterally, then stitch together high-content images. However, such capabilities are costly, have long acquisition times and require careful auto-focusing, due to small depth-of-field (DOF) and axial drift of the sample over large scan ranges [11].

Instead of using high-resolution optics and mechanically scanning the FOV, new approaches for high-content imaging use a low-NA objective (with a large FOV) and build up higher resolution by computationally combining a sequence of low-resolution measurements [12–25]. Such approaches typically illuminate the sample with customized patterns that encode high-resolution sample information into low-resolution features, which can then be measured. These methods reconstruct features smaller than the diffraction limit of the objective, using concepts from synthetic aperture [26–28] and super-resolution (SR) [29–34]. Though the original intent was to maximize resolution, it is important to note that by increasing resolution, SR techniques also increase SBP, and therefore have application in high-content microscopy. Eliminating the requirement for long-distance mechanical scanning means that acquisition is faster and less expensive, while focus requirements are also relaxed by the larger DOF of low-NA objectives.

Existing high-content methods generally use either an incoherent imaging model to reconstruct fluorescence [18–25], or a coherent model to reconstruct absorption and quantitative phase (QP) [12–17]. Both have achieved gigapixel-scale SBP (milli-/centi- meter scale FOV with sub-micron resolution). However, none have demonstrated cross-compatibility with both coherent (phase) and incoherent (fluorescence) imaging. Here, we demonstrate multi-modal high-content imaging via a computational imaging framework that allows super-resolution fluorescence and QP. Our method is based on structured illumination microscopy (SIM), which is compatible with both incoherent [26, 32, 33, 36] and coherent [37–42] sources of contrast [35, 43–45].

Though most SIM implementations have focused on super-resolution, some previous works have recognized its suitability for high-content imaging [18–24]. However, these predominantly relied on fluorescence imaging with calibrated illumination patterns, which are difficult to realize in practice because lens-based illumination has finite SBP. Here, we use random speckle illumination, generated by scattering through Scotch tape, in order to achieve both high-NA and large FOV illumination. Our method is related to blind SIM [46]; however, instead of using many random speckle patterns (which restricts resolution gain to ∼1.8×), we translate the speckle laterally, enabling resolution gains beyond that of previous methods [46–52] (see Appendix D). Previous works also use high-cost spatial-light-modulators (SLMs) [53] or galvanometer/MEMS mirrors [41, 54] for precise illumination, as well as expensive objective lenses for aberration correction. We eliminate both of these requirements by performing computational self-calibration, solving for the translation trajectory and the field-dependent aberrations of the system.

Our proposed framework enables three key advantages over existing methods:

- resolution gains of 4× the native resolution of the objective (linear SIM is usually restricted to 2×) [46–52, 55, 56],
- synergistic use of both the fluorescent (incoherent) and quantitative-phase (coherent) signal from the sample to enable multi-modal imaging,
- algorithmic self-calibration to significantly relax hardware requirements, enabling low-cost and robust imaging.

In our experimental setup, the Scotch tape is placed just before the sample and mounted on a translation stage (Fig. 1). This generates disordered speckles at the sample that are much smaller than the PSF of the imaging optics, encoding SR information. Nonlinear optimization methods are then used to jointly reconstruct multiple calibration quantities: the unknown speckle illumination pattern, the translation trajectory of the pattern, and the field-dependent system aberrations (on a patch-by-patch basis). These are subsequently used to decode the SR information of both fluorescence and phase. Compared to traditional SIM systems that use high-NA objective lenses, our system utilizes a low-NA low-cost lens to ensure large FOV. The Scotch tape generated speckle illumination is not resolution-bound by any imaging lens; this is what allows us to achieve 4× resolution gains. The result is high-content imaging at sub-micron resolutions across millimeter scale regions. Various previous works have achieved cost-effectiveness, high-content (large SBP), or multiple modalities, but we believe this to be the first to simultaneously encompass all three.

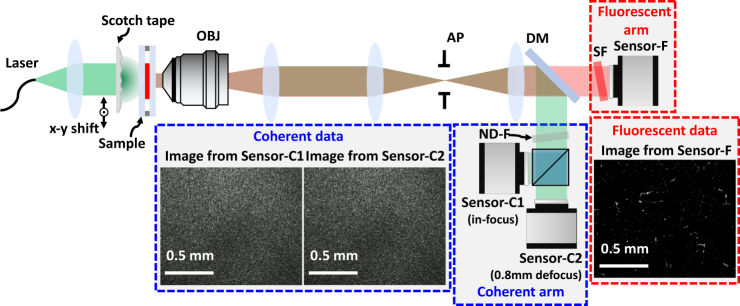

Fig. 1.

Structured illumination microscopy (SIM) with laterally-translated Scotch tape as the patterning element, achieving 4× resolution gain. Our imaging system has both an incoherent arm, where Sensor-F captures raw fluorescence images (at the emission wavelength, $\lambda_{em} = 605$ nm) for fluorescence super-resolution, and a coherent arm, where Sensor-C1 and Sensor-C2 capture images with different defocus (at the laser illumination wavelength, $\lambda_{ex} = 532$ nm) for both super-resolution phase reconstruction and speckle trajectory calibration. OBJ: objective, AP: adjustable iris-aperture, DM: dichroic mirror, SF: spectral filter, ND-F: neutral-density filter.

2. Theory

SIM generally achieves super-resolution by illuminating the sample with a high spatial-frequency pattern that mixes with the sample’s information content to form low-resolution "beat" patterns (i.e. moiré fringes). Measurements of these "beat" patterns allow elucidation of sample features beyond the diffraction-limited resolution of the imaging system. Maximum achievable resolution in SIM is set by the sum of the numerical apertures (NAs) of the illumination pattern, $\mathrm{NA}_{illum}$, and the imaging system, $\mathrm{NA}_{sys}$. Thus, SIM enables a resolution gain factor (over the system’s native resolution) of $(\mathrm{NA}_{illum} + \mathrm{NA}_{sys})/\mathrm{NA}_{sys}$ [33]. The minimum resolvable feature size is inversely related to this bound, $d \propto 1/(\mathrm{NA}_{illum} + \mathrm{NA}_{sys})$.

Linear SIM typically maximizes resolution by using either: 1) a high-NA objective in epi-illumination configuration, or 2) two identical high-NA objectives in transmission geometry [33, 35]. Both result in a maximum of 2× resolution gain because $\mathrm{NA}_{illum} \le \mathrm{NA}_{sys}$, which corresponds to an SBP increase by a factor of 4×. Given the relatively low native SBP of high-NA imaging lenses, such increases are not sufficient to qualify as high-content imaging. Though nonlinear SIM techniques can enable higher resolution gains [34], they require either fluorophore photo-switching or saturation capabilities, which can be associated with photobleaching and low SNR, and are not compatible with coherent QP techniques.

In this work, we aim for >2× resolution gain; hence, we need the illumination NA to be larger than the detection NA, without using a high-resolution illumination lens (that would restrict the illumination FOV). To achieve this, we use a wide-area high-angle scattering element, layered Scotch tape, on the illumination side of the sample (Fig. 1). Multiple scattering within the tape creates a speckle pattern with finer features than the PSF of the imaging system, i.e. $\mathrm{NA}_{illum} > \mathrm{NA}_{sys}$. This means that spatial frequencies beyond 2× the objective’s cutoff are mixed into the measurements, which makes resolution gains greater than two possible.
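As a quick numerical illustration of the gain relation described above, the following minimal sketch evaluates (NA_illum + NA_sys)/NA_sys for two cases. The function names and the example NA values are illustrative assumptions, not part of the reported method.

```python
def resolution_gain(na_illum: float, na_sys: float) -> float:
    """Linear-SIM resolution gain over the native system resolution."""
    if na_sys <= 0 or na_illum < 0:
        raise ValueError("na_sys must be positive and na_illum non-negative")
    return (na_illum + na_sys) / na_sys


def required_illumination_na(target_gain: float, na_sys: float) -> float:
    """Illumination NA needed to reach a target gain with a given detection NA."""
    if target_gain < 1:
        raise ValueError("target_gain must be at least 1")
    return (target_gain - 1.0) * na_sys


# Conventional linear SIM: the pattern is projected through the detection objective.
conventional = resolution_gain(na_illum=0.1, na_sys=0.1)
# Scattering-based illumination that exceeds the detection NA (example values).
speckle = resolution_gain(na_illum=0.3, na_sys=0.1)
print(f"conventional gain: {conventional:.1f}x, speckle gain: {speckle:.1f}x")
```

With a 0.1 NA detection path, an illumination NA of about 0.3 is what a 4× gain would require under this relation.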

The following sections outline the algorithm that we use to reconstruct large SBP fluorescence and QP images from low-resolution acquisitions of a sample illuminated by a laterally-translating speckle pattern. Unlike conventional SIM reconstruction methods that use analytic linear inversion, our strategy relies instead on joint-variable iterative optimization, where both the sample and illumination speckle (which is unknown) are reconstructed [25, 55, 56].

2.1. Super-resolution fluorescence imaging

Fluorescence imaging requires an incoherent imaging model. The intensity at the sensor is a low-resolution image of the sample’s fluorescent distribution, obeying the system’s incoherent resolution limit, $d_{sys} = \lambda_{em}/(2\mathrm{NA}_{sys})$, where $\lambda_{em}$ is the emission wavelength. The speckle pattern generated through the Scotch tape excites the fluorescent sample with features of minimum size $d_{illum} = \lambda_{ex}/(2\mathrm{NA}_{illum})$, where $\lambda_{ex}$ is the excitation wavelength and $\mathrm{NA}_{illum}$ is set by the scattering angles exiting the Scotch tape. Approximating the excitation and emission wavelengths as similar ($\lambda_{ex} \approx \lambda_{em}$), the resolution limit of the SIM reconstruction is $d_{SIM} \approx [1/d_{sys} + 1/d_{illum}]^{-1}$, with a resolution gain factor of $d_{sys}/d_{SIM}$. This factor is mathematically unbounded; however, it will be practically limited by the illumination NA and SNR (see Appendix D).

2.1.1. Incoherent forward model for fluorescence imaging

Plane-wave illumination of the Scotch tape, positioned at the $\ell$-th scan-point, $\mathbf{r}_\ell$, creates a speckle illumination pattern, $p_f(\mathbf{r})$, at the plane of the fluorescent sample, $o_f(\mathbf{r})$, where subscript f identifies variables in the fluorescence channel. The fluorescent signal is imaged through the system to give an intensity image at the camera plane:

$$I_{f,\ell}(\mathbf{r}) = \left[ o_f(\mathbf{r}) \cdot \mathcal{C}\{ p_f(\mathbf{r} - \mathbf{r}_\ell) \} \right] \otimes h_f(\mathbf{r}), \quad \ell = 1, \ldots, N_{img}, \tag{1}$$

where r is the 2D spatial coordinates $(x, y)$, $h_f(\mathbf{r})$ is the system PSF, $\otimes$ denotes 2D convolution, and Nimg is the total number of images captured. The subscript $\ell$ describes the acquisition index.

In this formulation, $I_{f,\ell}$, $o_f$, and $h_f$ are 2D $M \times M$-pixel distributions. To accurately model different regions of the pattern translating into the object’s FOV with incrementing $\ell$, we initialize $p_f$ as an $N \times N$ pixel 2D distribution, with $N > M$, and introduce a cropping operator $\mathcal{C}$ to select the $M \times M$ region of the scanning pattern that illuminates the sample.
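The incoherent forward model can be sketched numerically as follows: translate a large pattern, crop the region that overlaps the sample, multiply by the sample, and low-pass filter with the OTF. This is a minimal sketch under stated assumptions. The cone-shaped OTF, the central crop, the Fourier-shift translation, and all function names are illustrative choices and not the calibrated quantities of the experiment.

```python
import numpy as np


def shift_pattern(pattern, shift_yx):
    """Translate a 2D array by a (possibly sub-pixel) shift via the Fourier shift theorem."""
    fy = np.fft.fftfreq(pattern.shape[0])[:, None]
    fx = np.fft.fftfreq(pattern.shape[1])[None, :]
    phase_ramp = np.exp(-2j * np.pi * (fy * shift_yx[0] + fx * shift_yx[1]))
    return np.real(np.fft.ifft2(np.fft.fft2(pattern) * phase_ramp))


def crop_center(arr, m):
    """Cropping operator: keep the central m x m region (assumed crop location)."""
    y0 = (arr.shape[0] - m) // 2
    x0 = (arr.shape[1] - m) // 2
    return arr[y0:y0 + m, x0:x0 + m]


def cone_otf(m, cutoff):
    """Toy real-valued OTF that falls linearly to zero at `cutoff` (cycles/pixel)."""
    fy = np.fft.fftfreq(m)[:, None]
    fx = np.fft.fftfreq(m)[None, :]
    return np.clip(1.0 - np.hypot(fy, fx) / cutoff, 0.0, None)


def fluorescence_image(sample, pattern, shift_yx, otf):
    """One low-resolution fluorescence frame for a given pattern translation."""
    illum = crop_center(shift_pattern(pattern, shift_yx), sample.shape[0])
    excited = sample * illum  # excitation is the product of sample and pattern
    return np.real(np.fft.ifft2(np.fft.fft2(excited) * otf))


rng = np.random.default_rng(0)
M, N = 32, 48  # sample and pattern sizes in pixels, with N > M
sample = rng.random((M, M))
pattern = rng.random((N, N))
otf = cone_otf(M, cutoff=0.2)
image = fluorescence_image(sample, pattern, (1.5, -0.75), otf)
```

Because the toy OTF equals one at zero frequency, the total signal of each simulated frame equals the total excited fluorescence, which is a convenient sanity check.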

2.1.2. Inverse problem for fluorescence imaging

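We next formulate a joint-variable optimization problem to extract SR estimates of the sample, $o_f(\mathbf{r})$, and illumination distributions, $p_f(\mathbf{r})$, from the raw fluorescence measurements, $I_{f,\ell}(\mathbf{r})$, as well as refine the estimate of the system’s PSF, $h_f(\mathbf{r})$ [25] (aberrations) and speckle translation trajectory, $\mathbf{r}_\ell$. We start with a crude initialization from raw observations of the speckle made using the coherent imaging arm (more details in Sec. 2.3). Defining $f_f$ as a joint-variable cost function that measures the difference between the raw intensity acquisitions and the expected intensities from estimated variables via the forward model, we have:

$$\min_{o_f,\, p_f,\, h_f,\, \mathbf{r}_1, \ldots, \mathbf{r}_{N_{img}}} f_f = \sum_{\ell=1}^{N_{img}} \left\| I_{f,\ell}(\mathbf{r}) - \left[ o_f(\mathbf{r}) \cdot \mathcal{C}\{ p_f(\mathbf{r} - \mathbf{r}_\ell) \} \right] \otimes h_f(\mathbf{r}) \right\|_2^2. \tag{2}$$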

To solve, a sequential gradient descent [57, 58] algorithm is used, where the gradient is updated once for each measurement. The sample, speckle pattern, system’s PSF and scanning positions are updated by sequentially running through Nimg measurements within one iteration. After the sequential update, an extra Nesterov’s accelerated update [59] is included for both the sample and pattern estimate, to speed up convergence. Appendix A contains a detailed derivation of the gradient with respect to the sample, structured pattern, system’s PSF and the scanning position based on the linear algebra vectorial notation. The algorithm is described in Appendix B.
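The sequential update can be illustrated with a deliberately reduced sketch that refines only the sample while the illumination patterns and OTF are assumed known. The pattern, PSF, and position updates and the Nesterov step are omitted here, and the step-size rule, toy OTF, and names are assumptions for illustration rather than the settings used in this work.

```python
import numpy as np


def lowpass(x, otf):
    """Apply the incoherent low-pass filter."""
    return np.real(np.fft.ifft2(np.fft.fft2(x) * otf))


def lowpass_adjoint(x, otf):
    """Adjoint of `lowpass` (uses the conjugated OTF)."""
    return np.real(np.fft.ifft2(np.fft.fft2(x) * np.conj(otf)))


def sequential_sample_update(images, illums, otf, n_iter=20):
    """Sequential gradient descent on sum_l ||I_l - H(o * P_l)||^2 w.r.t. the sample o."""
    sample = np.mean(images, axis=0)  # widefield-like initialization
    otf_max2 = np.max(np.abs(otf)) ** 2
    for _ in range(n_iter):
        for image, illum in zip(images, illums):  # one gradient step per frame
            residual = image - lowpass(sample * illum, otf)
            grad = -2.0 * illum * lowpass_adjoint(residual, otf)
            lipschitz = 2.0 * otf_max2 * np.max(illum ** 2)  # safe step-size bound
            sample = sample - grad / lipschitz
    return sample


rng = np.random.default_rng(1)
M = 16
truth = rng.random((M, M))
pattern = rng.random((M + 8, M + 8)) + 0.1
fy = np.fft.fftfreq(M)[:, None]
fx = np.fft.fftfreq(M)[None, :]
otf = np.clip(1.0 - np.hypot(fy, fx) / 0.25, 0.0, None)  # toy real OTF
shifts = [(dy, dx) for dy in range(4) for dx in range(4)]
illums = [np.roll(pattern, s, axis=(0, 1))[:M, :M] for s in shifts]
images = [lowpass(truth * p, otf) for p in illums]  # noise-free simulated frames
estimate = sequential_sample_update(images, illums, otf)
```

In this noise-free toy setting each per-frame step uses a step size below the inverse Lipschitz constant of that frame's cost, so the estimate cannot move away from the true sample.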

2.2. Super-resolution quantitative-phase imaging

In this section, we present our coherent model for SR quantitative-phase (QP) imaging. A key difference between the QP and fluorescence imaging processes is that the detected intensity at the image plane for coherent imaging is nonlinearly related to the sample’s QP [1, 38]. Thus, solving for a sample’s QP from a single intensity measurement is a nonlinear and ill-posed problem. To circumvent this, we use intensity measurements from two planes, one in-focus and one out-of-focus, to introduce a complex-valued operator that couples QP variations into measurable intensity fluctuations, making the reconstruction well-posed [60, 61]. The defocused measurements are denoted by a new subscript variable z. Figure 1 shows our implementation, where two defocused sensors are positioned at z0 and z1 in the coherent imaging arm.

Generally, the resolution for coherent imaging is roughly half that of its incoherent counterpart [1]. For our QP reconstruction, the resolution limit is $d_{SIM} = [1/d_{sys} + 1/d_{illum}]^{-1}$, where the coherent resolution of the native system and the speckle are $d_{sys} = \lambda_{ex}/\mathrm{NA}_{sys}$ and $d_{illum} = \lambda_{ex}/\mathrm{NA}_{illum}$, respectively.
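The incoherent and coherent resolution limits can be compared with a short calculation. This is a sketch under stated assumptions: the 0.605 μm emission wavelength is an assumed value chosen to be consistent with the widefield resolutions quoted in Sec. 3.1.1, the 0.532 μm excitation wavelength is the laser line, and the function names are hypothetical.

```python
def incoherent_resolution_um(wavelength_um, na_sys, na_illum=0.0):
    """Minimum resolvable period for fluorescence: lambda / (2 (NA_sys + NA_illum))."""
    return wavelength_um / (2.0 * (na_sys + na_illum))


def coherent_resolution_um(wavelength_um, na_sys, na_illum=0.0):
    """Minimum resolvable period for coherent imaging: lambda / (NA_sys + NA_illum)."""
    return wavelength_um / (na_sys + na_illum)


LAMBDA_EM_UM = 0.605  # assumed emission wavelength
LAMBDA_EX_UM = 0.532  # laser excitation wavelength

native_fluor = incoherent_resolution_um(LAMBDA_EM_UM, na_sys=0.1)
sim_fluor = incoherent_resolution_um(LAMBDA_EM_UM, na_sys=0.1, na_illum=0.35)
native_qp = coherent_resolution_um(LAMBDA_EX_UM, na_sys=0.1)
sim_qp = coherent_resolution_um(LAMBDA_EX_UM, na_sys=0.1, na_illum=0.35)
print(f"fluorescence: {native_fluor:.2f} um -> {sim_fluor:.2f} um")
print(f"QP:           {native_qp:.2f} um -> {sim_qp:.2f} um")
```

With an illumination NA near 0.35, these expressions give values close to the 700 nm fluorescence and 1.2 μm QP resolutions reported in the abstract.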

2.2.1. Coherent forward model for phase imaging

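Assuming an object with 2D complex transmittance function $o_c(\mathbf{r})$ is illuminated by a speckle field, $p_c(\mathbf{r})$, where subscript c refers to the coherent imaging channel, positioned at the $\ell$-th scanning position $\mathbf{r}_{\ell z}$, we can represent the intensity image formed via coherent diffraction as:

$$I_{c,\ell z}(\mathbf{r}) = \left| g_{c,\ell z}(\mathbf{r}) \right|^2 = \left| \left[ o_c(\mathbf{r}) \cdot \mathcal{C}\{ p_c(\mathbf{r} - \mathbf{r}_{\ell z}) \} \right] \otimes h_{c,z}(\mathbf{r}) \right|^2, \quad \ell = 1, \ldots, N_{img}, \quad z = z_0, z_1, \tag{3}$$

where $g_{c,\ell z}(\mathbf{r})$ and $h_{c,z}(\mathbf{r})$ are the complex electric-fields at the imaging plane and the system’s coherent PSF at defocus distance z, respectively. The comma in the subscript separates the channel index, c or f, from the scanning-position and acquisition-number indices, $\ell$ and z. Nimg here indicates the total number of translations of the Scotch tape. The defocused PSF can be further broken down into $h_{c,z}(\mathbf{r}) = h_c(\mathbf{r}) \otimes h_z(\mathbf{r})$, where $h_c(\mathbf{r})$ is the in-focus coherent PSF and $h_z(\mathbf{r})$ is the defocus kernel. Similar to Section 2.1.1, $I_{c,\ell z}$, $o_c$, $g_{c,\ell z}$, and $h_{c,z}$ are 2D distributions with dimensions of $M \times M$ pixels, while $p_c$ is of size $N \times N$ pixels ($N > M$). $\mathcal{C}$ is a cropping operator that selects the sub-region of the pattern that interacts with the sample. The sample’s QP distribution is simply the phase of the object’s complex transmittance, $\angle o_c(\mathbf{r})$.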
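The role of the defocused plane can be seen in a small simulation of the coherent forward model. This is a minimal sketch under stated assumptions: an angular-spectrum defocus kernel, an all-pass pupil, and plane-wave rather than speckle illumination are used purely to isolate the effect, and the pixel size, defocus distance, and names are hypothetical.

```python
import numpy as np


def defocus_kernel(shape, pixel_um, wavelength_um, z_um):
    """Angular-spectrum transfer function for propagation over z_um."""
    fy = np.fft.fftfreq(shape[0], d=pixel_um)[:, None]
    fx = np.fft.fftfreq(shape[1], d=pixel_um)[None, :]
    arg = 1.0 / wavelength_um ** 2 - fx ** 2 - fy ** 2
    propagating = arg > 0
    kz = 2.0 * np.pi * np.sqrt(np.where(propagating, arg, 0.0))
    return np.where(propagating, np.exp(1j * kz * z_um), 0.0)


def coherent_intensity(transmittance, field, pupil, kernel):
    """Intensity of the coherently imaged field at a given defocus."""
    exit_wave = transmittance * field
    image_field = np.fft.ifft2(np.fft.fft2(exit_wave) * pupil * kernel)
    return np.abs(image_field) ** 2


M, pixel_um, wavelength_um = 64, 0.5, 0.532
yy, xx = np.mgrid[:M, :M] - M // 2
phase = 0.5 * np.exp(-(xx ** 2 + yy ** 2) / (2 * 3.0 ** 2))  # weak phase bump (rad)
transmittance = np.exp(1j * phase)  # pure phase object
field = np.ones((M, M), dtype=complex)  # plane wave stands in for the speckle field
pupil = np.ones((M, M))  # all-pass pupil for illustration

in_focus = coherent_intensity(
    transmittance, field, pupil, defocus_kernel((M, M), pixel_um, wavelength_um, 0.0))
defocused = coherent_intensity(
    transmittance, field, pupil, defocus_kernel((M, M), pixel_um, wavelength_um, 20.0))
```

In this idealized case the in-focus intensity of a pure phase object is featureless, while the defocused plane converts phase variations into intensity contrast.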

2.2.2. Inverse problem for phase imaging

We now take the raw coherent intensity measurements, $I_{c,\ell z}(\mathbf{r})$, and the registered trajectory, $\mathbf{r}_{\ell z}$, from both of the defocused coherent sensors (more details in Sec. 2.3) as input to jointly estimate the sample’s SR complex-transmittance function, $o_c(\mathbf{r})$, and illumination complex-field, $p_c(\mathbf{r})$, as well as the aberrations inherent in the system’s PSF, $h_c(\mathbf{r})$. The optimization also further refines the scanning trajectory, $\mathbf{r}_{\ell z}$. Based on the forward model, we formulate the joint inverse problem:

$$\min_{o_c,\, p_c,\, h_c,\, \{\mathbf{r}_{\ell z}\}} f_c = \sum_{\ell, z} \left\| \sqrt{I_{c,\ell z}(\mathbf{r})} - \left| \left[ o_c(\mathbf{r}) \cdot \mathcal{C}\{ p_c(\mathbf{r} - \mathbf{r}_{\ell z}) \} \right] \otimes h_{c,z}(\mathbf{r}) \right| \right\|_2^2. \tag{4}$$

Here, we adopt an amplitude-based cost function, fc, which robustly minimizes the distance between the estimated and measured amplitudes in the presence of noise [57, 61, 62]. We optimize the pattern trajectories, $\mathbf{r}_{\ell z_0}$ and $\mathbf{r}_{\ell z_1}$, separately for each coherent sensor, in order to account for any residual misalignment or timing-mismatch (see Sec. 2.3). As in the fluorescence case, sequential gradient descent [57, 58] was used to solve this inverse problem.

2.3. Registration of coherent images

Knowledge of the Scotch tape scanning position, $\mathbf{r}_{\ell z}$, reduces the complexity of the joint sample and pattern estimation problem and is necessary to achieve SR reconstructions with greater than 2× resolution gain. Because our fluorescent sample is mostly transparent, the main scattering component in the acquired raw data originates from the Scotch tape. Thus, using a sub-pixel registration algorithm [63] between successive coherent-camera acquisitions, which are dominated by the scattered speckle signal, is sufficient to initialize the scanning trajectory of the Scotch tape,

| (5) |

where $\mathcal{R}$ is the registration operator. These initial estimates of $\mathbf{r}_{\ell z}$ are then updated, alongside $o_f(\mathbf{r})$, $p_f(\mathbf{r})$, $o_c(\mathbf{r})$, and $p_c(\mathbf{r})$ using the inverse models described in Sec. 2.1.2 and 2.2.2. In the fluorescence problem described in Sec. 2.1.2, we only use the trajectory from the in-focus coherent sensor at z = 0 for initialization, so we omit the subscript z in $\mathbf{r}_\ell$.
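To make the registration step concrete, the sketch below estimates the translation between two speckle frames from the peak of their circular cross-correlation, refined by a parabolic fit. This is a simplified stand-in and not the algorithm of [63]. The function names and the test data are hypothetical.

```python
import numpy as np


def _parabolic_offset(left, center, right):
    """Sub-sample peak offset from a three-point parabolic fit."""
    denom = left - 2.0 * center + right
    return 0.0 if denom == 0 else 0.5 * (left - right) / denom


def register_shift(reference, moved):
    """Estimate (dy, dx) such that `moved` is `reference` translated by (dy, dx)."""
    ref = reference - reference.mean()
    mov = moved - moved.mean()
    cross_power = np.fft.fft2(mov) * np.conj(np.fft.fft2(ref))
    corr = np.real(np.fft.ifft2(cross_power))  # circular cross-correlation
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    shift = []
    for axis, idx in enumerate(peak):
        n = corr.shape[axis]
        # 1D cut through the peak along `axis` (other axis fixed at the peak).
        line = np.take(corr, peak[1 - axis], axis=1 - axis)
        frac = _parabolic_offset(line[(idx - 1) % n], line[idx], line[(idx + 1) % n])
        pos = idx + frac
        shift.append(pos - n if pos > n / 2 else pos)  # wrap to a signed shift
    return tuple(shift)


rng = np.random.default_rng(0)
reference = rng.random((64, 64))  # stand-in for a speckle-dominated frame
moved = np.roll(reference, (3, -5), axis=(0, 1))
estimated = register_shift(reference, moved)
```

Shifts estimated this way would serve only as an initialization, since the trajectory is refined further inside the joint optimization.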

3. Experimental results

Figure 1 shows our experimental setup. A green laser beam (BeamQ, 532 nm, 200 mW) is collimated through a single lens. The resulting plane wave illuminates the layered Scotch tape (4 layers of 3M 810 Scotch Tape, S-9783), creating a speckle pattern at the sample. The Scotch tape is mounted on a 3-axis piezo-stage (Thorlabs, MAX311D) to enable lateral speckle scanning. The transmitted light from the sample then travels through a 4f system formed by the objective lens (OBJ) and a single lens. In order to control the NA of our detection system (necessary for our verification experiment), an extra 4f system with an adjustable iris-aperture (AP) in the Fourier space is added. Then, the coherent and fluorescent light are optically separated by a dichroic mirror (DM, Thorlabs, DMLP550R), since they have different wavelengths. The fluorescence is further spectrally filtered (SF) before imaging onto Sensor-F (PCO.edge 5.5). The (much brighter) coherent light is ND-filtered and then split by another beam-splitter before falling on the two defocused coherent sensors, Sensor-C1 and Sensor-C2 (FLIR, BFS-U3-200S6M-C). Sensor-C1 is focused on the sample, while Sensor-C2 is defocused by 0.8 mm.

For our initial verification experiments, we use a 40× objective (Nikon, CFI Achro 40×) with NA = 0.65 as our system’s microscope objective (OBJ). Later high-content experimental demonstrations switch to a 4× objective (Nikon, CFI Plan Achro 4×) with NA = 0.1.

3.1. Super-resolution verification

3.1.1. Fluorescence super-resolution verification

We start with a proof-of-concept experiment to verify that our method accurately reconstructs a fluorescent sample at resolutions greater than twice the imaging system’s diffraction-limit. To do so, we use the higher-resolution objective (40×, NA 0.65) and a tunable Fourier-space iris-aperture (AP) that allows us to artificially reduce the system’s NA ($\mathrm{NA}_{sys}$), and therefore, resolution. With the aperture mostly closed (to $\mathrm{NA}_{sys} = 0.1$), we acquire a low-resolution SIM dataset, which is then used to computationally reconstruct a super-resolved image of the sample with resolution corresponding to an effective NA = 0.4. This reconstruction is then compared to the widefield image of the sample acquired with the aperture open to $\mathrm{NA}_{sys} = 0.4$, for validation.

Results are shown in Fig. 2, comparing our method against widefield fluorescence images at NAs of 0.1 and 0.4, with no Scotch tape in place. The sample is a monolayer of 1 μm diameter microspheres, with center emission wavelength $\lambda_{em} = 605$ nm. At 0.1 NA, the expected resolution is $\lambda_{em}/(2\mathrm{NA}) \approx 3.0$ μm and the microspheres are completely unresolvable. At 0.4 NA, the expected resolution is $\lambda_{em}/(2\mathrm{NA}) \approx 0.76$ μm and the microspheres are well-resolved. With Scotch tape and 0.1 NA, we acquire a set of measurements as we translate the speckle pattern in 267 nm increments on a 26 × 26 rectangular grid, giving $N_{img} = 676$ acquisitions in total (details in Sec. 4).

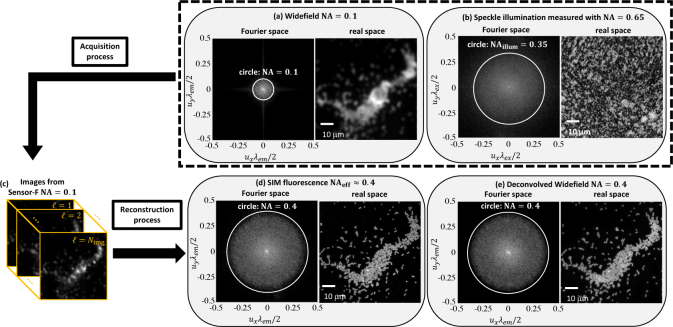

Fig. 2.

Verification of fluorescence super-resolution with 4× resolution gain. Widefield images, for comparison, were acquired at (a) 0.1 NA and (e) 0.4 NA by adjusting the aperture size. (b) The Scotch tape speckle pattern creates much higher spatial frequencies (∼0.35 NA) than the 0.1 NA detection system can measure. (c) Using the 0.1 NA aperture, we acquire low-resolution fluorescence images for different lateral positions of the Scotch tape. (d) The reconstructed SIM image contains spatial frequencies up to ∼0.4 NA and is in agreement with (e) the deconvolved widefield image with the system operating at 0.4 NA.

Figure 2(d) shows the final SR reconstruction of the fluorescent sample in real space, along with the amplitude of its Fourier spectrum. Individual microspheres can be clearly resolved, and results match well with the 0.4 NA deconvolved widefield image (Fig. 2(e)). Fourier-space analysis confirms our resolution improvement factor to be 4×, which suggests that the Scotch tape produces $\mathrm{NA}_{illum} \approx 0.3$. To verify, we fully open the aperture and observe that the speckle pattern contains spatial frequencies up to $\mathrm{NA}_{illum} \approx 0.35$ (Fig. 2(b)).

3.1.2. Coherent super-resolution verification

To quantify super-resolution in the coherent imaging channel, we use the low-resolution objective (4×, NA 0.1) to image a USAF1951 resolution chart (Benchmark Technologies). This phase target provides different feature sizes with known phase values, so is a suitable calibration target to quantify both the coherent resolution and the phase sensitivity of our technique.

Results are shown in Fig. 3. The coherent intensity image (Fig. 3(a)) acquired with 0.1 NA (no tape) has low resolution ($\lambda_{ex}/\mathrm{NA}_{sys} \approx 5.3$ μm), so hardly any features can be resolved. In Fig. 3(b), we show the “ground truth” QP distribution at 0.4 NA, as provided by the manufacturer.

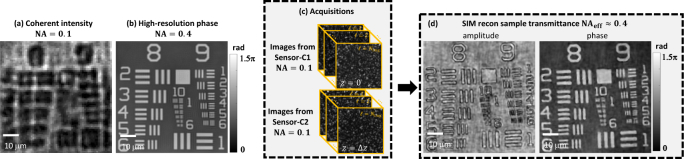

Fig. 3.

Verification of coherent quantitative phase (QP) super-resolution with 4× resolution gain. (a) Low-resolution intensity image and (b) “ground truth” phase at NA=0.4, for comparison. (c) Raw acquisitions of the speckle-illuminated sample intensity from two focus planes, collected with 0.1 NA. (d) Reconstructed SR amplitude and QP, demonstrating 4× resolution gain.

After inserting the Scotch tape, it was translated in 400 nm increments on a 36 × 36 rectangular grid, giving $N_{img} = 1296$ total acquisitions (details in Sec. 4) at each of the two defocused coherent sensors (Fig. 3(c)). Figure 3(d,e) shows the SR reconstruction for the amplitude and phase of this sample, resolving features up to group 9 element 5 (1.23 μm separation). Thus, our coherent reconstruction has a ∼4× resolution gain compared to the brightfield intensity image.

3.2. High-content multi-modal microscopy

Of course, artificially reducing resolution in order to validate our method required using a moderate-NA objective, which precluded imaging over the large FOVs allowed by low-NA objectives. In this section, we demonstrate high-content fluorescence imaging with the low-resolution, large FOV objective (4×, NA 0.1) to visualize a 2.7 × 3.3 mm² FOV (see Fig. 4(a)). We note that this FOV is more than 100× larger than that allowed by the 40× objective used in the verification experiments, so is suitable for large SBP imaging.

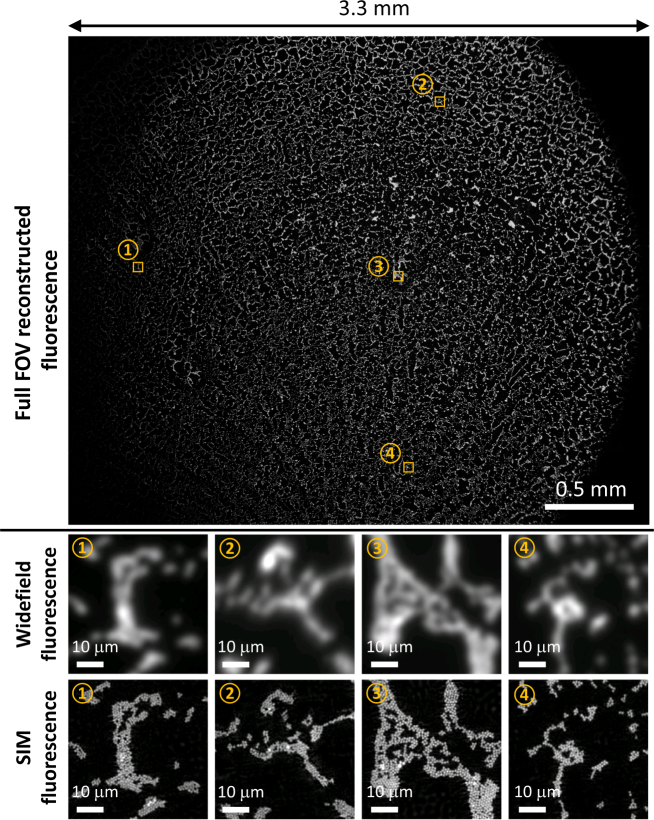

Fig. 4.

Reconstructed super-resolution fluorescence with 4× resolution gain across the full FOV (See Visualization 1 (71MB, tif) ). Four zoom-ins of regions-of-interest (ROIs) are compared to their widefield counterparts.

Within the imaged FOV for our 1 μm diameter microsphere monolayer sample, we zoom in to four regions-of-interest (ROI), labeled ①, ②, ③, and ④. Widefield fluorescence imaging cannot resolve individual microspheres, as expected. Using our method, however, gives a factor 4× resolution gain across the whole FOV and enables resolution of individual microspheres. Thus, the SBP of the system, natively ∼5.3 mega-pixels of content, was increased to ∼85 mega-pixels, a factor of 16×. Though this is still not in the Gigapixel range, this technique is scalable and could reach that range with a higher-SBP objective and sensors.
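The SBP scaling quoted above follows from the fact that an isotropic resolution gain in 2D multiplies the number of resolvable points by the gain squared. A minimal sketch of this arithmetic, with hypothetical function names:

```python
import math


def sbp_after_gain(native_sbp_mp: float, gain: float) -> float:
    """SBP (megapixels) after an isotropic 2D resolution gain."""
    return native_sbp_mp * gain ** 2


def gain_for_target_sbp(native_sbp_mp: float, target_sbp_mp: float) -> float:
    """Resolution gain that would be needed to reach a target SBP."""
    return math.sqrt(target_sbp_mp / native_sbp_mp)


reconstructed_sbp = sbp_after_gain(5.3, 4.0)
gigapixel_gain = gain_for_target_sbp(5.3, 1000.0)
print(f"reconstructed SBP: {reconstructed_sbp:.1f} MP")
print(f"gain needed for 1 gigapixel from the same native SBP: {gigapixel_gain:.1f}x")
```

The second number indicates why a higher-SBP objective and sensor, rather than resolution gain alone, would be the practical route to the gigapixel range.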

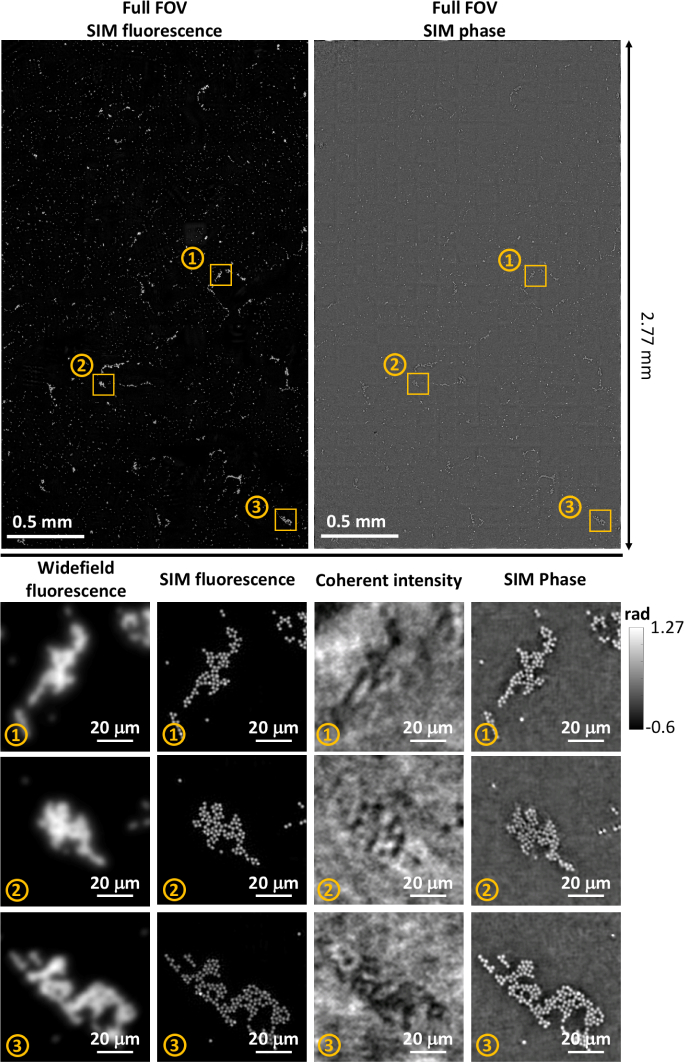

We next include the QP imaging channel to demonstrate high-content multimodal imaging, as shown in Fig. 5. The multimodal FOV is smaller (2×2.7 FOV) than that presented in Fig. 4 because our coherent detection sensors have a lower pixel-count than our fluorescence detection sensor. Figure 5 includes zoom-ins of three ROIs to visualize the multimodal SR.

Fig. 5.

Reconstructed multimodal (fluorescence and quantitative phase) high-content imaging (See Visualization 2 (18.6MB, tif) and Visualization 3 (57MB, tif) ). Zoom-ins for three ROIs compare the widefield, super-resolved fluorescence, coherent intensity, and super-resolved phase reconstructions.

As expected, the widefield fluorescence image and the on-axis coherent intensity image do not allow resolution of individual 2 μm microspheres, since the theoretical resolution for fluorescence imaging is $\lambda_{em}/(2\mathrm{NA}) \approx 3.0$ μm and for QP imaging is $\lambda_{ex}/\mathrm{NA} \approx 5.3$ μm. However, our SIM reconstruction with ∼4× resolution gain enables clear separation of the microspheres in both channels. Our fluorescence and QP reconstructions match well, which is expected since the fluorescent and QP signal originate from identical physical structures in this particular sample.

The full-FOV reconstructions (Fig. 4 and 5) are obtained by dividing the FOV into small patches, reconstructing each patch, then stitching together the high-content images. Patch-wise reconstruction is computationally favorable because of its low-memory requirement, but also allows us to correct field-dependent aberrations. Since we process each patch separately using our self-calibration algorithm, we solve for each patch’s PSF independently and correct the local aberrations digitally. The reconstruction takes approximately 15 minutes for each channel on a high-end GPU (NVIDIA, TITAN Xp) for a patch with FOV of .
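The patch-wise strategy can be sketched as a simple tiling of the FOV into overlapping windows, each of which would be reconstructed independently and then stitched. The patch size, overlap, and function names below are hypothetical, since the tiling parameters are not specified here.

```python
def patch_bounds(length: int, patch: int, overlap: int):
    """Start/stop indices of overlapping 1D patches that cover [0, length)."""
    if not 0 <= overlap < patch:
        raise ValueError("overlap must satisfy 0 <= overlap < patch")
    if patch >= length:
        return [(0, length)]
    step = patch - overlap
    # Regular starts, plus a final patch aligned with the end of the axis.
    starts = list(range(0, length - patch, step)) + [length - patch]
    return [(s, s + patch) for s in starts]


def tile_fov(height: int, width: int, patch: int, overlap: int):
    """All (row-range, column-range) pairs for a 2D field of view."""
    return [(ys, xs)
            for ys in patch_bounds(height, patch, overlap)
            for xs in patch_bounds(width, patch, overlap)]


tiles = tile_fov(height=100, width=70, patch=40, overlap=10)
for (y0, y1), (x0, x1) in tiles:
    print(f"rows {y0}:{y1}, cols {x0}:{x1}")
```

Overlap between neighboring patches gives the stitching step a margin in which to blend reconstructions that were calibrated with different local PSFs.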

4. Discussion

Unlike many existing high-content imaging techniques, one benefit of our method is its easy compatibility for simultaneous QP and fluorescence imaging. This arises from SIM’s unique ability to multiplex both coherent and incoherent signals into the system aperture [35]. Furthermore, existing high-content fluorescence imaging techniques that use micro-lens arrays [18–23] are resolution-limited by the physical size of the lenslets, which typically have . Recent work [24] has introduced a framework in which gratings with sub-diffraction slits allow sub-micron resolution across large FOVs - however, this work is heavily limited by SNR, due to the primarily opaque grating, as well as tight required axial alignment. Though the Scotch tape used in our proof-of-concept prototype also induced illumination angles within a similar range as micro-lens arrays (), we could in future use a stronger scattering media to achieve , enabling further SR and thus larger SBP.

The main drawback of our technique is that we use around translations of the Scotch tape for each reconstruction, which results in long acquisition times (∼ 180 seconds for shifting, pausing, and capturing) and higher photon requirements. Heuristically, for both fluorescence and QP imaging, we found that a sufficiently large scanning range (larger than low-NA diffraction limited spot sizes) and finer scan steps (smaller than the targeted resolution) can reduce distortions in the reconstruction. Tuning such parameters to minimize the number of acquisitions without degrading reconstruction quality is thus an important subject for future endeavors.
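The two heuristics above (scan range larger than the native diffraction-limited spot, step smaller than the target resolution) can be checked for a candidate scan grid before acquisition. This sketch uses the grid parameters of the fluorescence verification experiment as example inputs. The helper names are hypothetical.

```python
def scan_grid(n_steps: int, step_um: float):
    """(y, x) positions in micrometers of an n_steps x n_steps raster scan."""
    return [(iy * step_um, ix * step_um)
            for iy in range(n_steps) for ix in range(n_steps)]


def check_scan(n_steps: int, step_um: float, native_res_um: float, target_res_um: float):
    """Evaluate the scan-range and step-size heuristics for a square grid."""
    span_um = (n_steps - 1) * step_um
    return {
        "n_images": n_steps ** 2,
        "span_um": span_um,
        "range_ok": span_um > native_res_um,  # range exceeds low-NA spot size
        "step_ok": step_um < target_res_um,   # step finer than targeted resolution
    }


# 26 x 26 grid with 267 nm steps; 3.0 um native and 0.76 um target resolution.
report = check_scan(n_steps=26, step_um=0.267, native_res_um=3.0, target_res_um=0.76)
positions = scan_grid(26, 0.267)
print(report)
```

A helper of this kind could be used to explore how far the number of acquisitions can be reduced while both conditions still hold.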

5. Conclusion

We have presented a large-FOV multimodal SIM fluorescence and QP imaging technique. We use Scotch tape to efficiently generate high-resolution features over a large FOV, which can then be measured with both fluorescent and coherent contrast using a low-NA objective. A computational optimization-based self-calibration algorithm corrected for experimental uncertainties (scanning-position, aberrations, and random speckle pattern) and enabled super-resolution fluorescence and quantitative phase reconstruction with a factor of 4× resolution gain.

Appendix A: Gradient derivation

A.1. Vectorial notation

A.1.1. Fluorescence imaging vectorial model

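In order to solve the multivariate optimization problem in Eqs. (2) and (4) and derive the gradient of the cost function, it is more convenient to consider a linear algebra vectorial notation of the forward models. The fluorescence SIM forward model in Eq. (1) can be alternatively expressed as

$$\mathbf{I}_{f,\ell} = \mathbf{H}_f \, \mathrm{diag}\!\left( \mathbf{S}(\mathbf{r}_\ell) \mathbf{p}_f \right) \mathbf{o}_f, \tag{6}$$

where $\mathbf{I}_{f,\ell}$, $\mathbf{H}_f$, $\mathbf{S}(\mathbf{r}_\ell)$, $\mathbf{p}_f$, and $\mathbf{o}_f$ designate the raw fluorescent intensity vector, diffraction-limit low-pass filtering operation, pattern translation/cropping operation, speckle pattern vector, and sample’s fluorescent distribution vector, respectively. The 2D-array variables described in (1) are all reshaped into column vectors here. $\mathbf{H}_f$ and $\mathbf{S}(\mathbf{r}_\ell)$ can be further broken down into their individual vectorial components:

$$\mathbf{H}_f = \mathbf{F}_M^{-1} \, \mathrm{diag}(\tilde{\mathbf{h}}_f) \, \mathbf{F}_M, \qquad \mathbf{S}(\mathbf{r}_\ell) = \mathbf{Q} \, \mathbf{F}_N^{-1} \, \mathrm{diag}\!\left( \mathbf{e}(\mathbf{r}_\ell) \right) \mathbf{F}_N, \tag{7}$$

where $\tilde{\mathbf{h}}_f$ is the OTF vector and $\mathbf{e}(\mathbf{r}_\ell)$ is the vectorization of the function $\exp(-j 2\pi \mathbf{u} \cdot \mathbf{r}_\ell)$, where u is spatial frequency. The notation $\mathrm{diag}(\mathbf{a})$ turns an $n \times 1$ vector, a, into an $n \times n$ diagonal matrix with diagonal entries from the vector entries. $\mathbf{F}_M$ and $\mathbf{F}_N$ denote the $M \times M$-point and $N \times N$-point 2D discrete Fourier transform matrix, respectively, and Q is the cropping matrix.

With this vectorial notation, the cost function for a single fluorescence measurement is

$$f_{f,\ell} = \mathbf{f}_{f,\ell}^T \mathbf{f}_{f,\ell} = \left\| \mathbf{I}_{f,\ell} - \mathbf{H}_f \, \mathrm{diag}\!\left( \mathbf{S}(\mathbf{r}_\ell) \mathbf{p}_f \right) \mathbf{o}_f \right\|_2^2, \tag{8}$$

where $\mathbf{f}_{f,\ell} = \mathbf{I}_{f,\ell} - \mathbf{H}_f \, \mathrm{diag}(\mathbf{S}(\mathbf{r}_\ell) \mathbf{p}_f) \, \mathbf{o}_f$ is the cost vector and T denotes the transpose operation.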
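The vectorial notation can be checked numerically in a small 1D analogue, where the low-pass operator is a DFT matrix sandwich around a diagonal OTF matrix and the translation/cropping operator is a cropped Fourier-shift matrix. This is a sketch under stated assumptions: the 1D setting, the random PSF, the integer shift, and cropping to the first M entries are illustrative choices, and the function names are hypothetical.

```python
import numpy as np


def dft_matrix(n):
    """n-point DFT matrix F such that F @ x equals np.fft.fft(x)."""
    return np.fft.fft(np.eye(n))


def lowpass_matrix(otf):
    """H = F^-1 diag(otf) F."""
    F = dft_matrix(otf.size)
    return np.linalg.inv(F) @ np.diag(otf) @ F


def shift_crop_matrix(n, m, shift):
    """S = Q F^-1 diag(e) F, with e the Fourier shift ramp and Q keeping m rows."""
    F = dft_matrix(n)
    ramp = np.exp(-2j * np.pi * np.fft.fftfreq(n) * shift)
    Q = np.eye(n)[:m]
    return Q @ np.linalg.inv(F) @ np.diag(ramp) @ F


n, m, shift = 8, 5, 2
rng = np.random.default_rng(0)
psf = rng.random(m)
psf /= psf.sum()
otf = np.fft.fft(psf)  # OTF vector of length m
sample = rng.random(m)
pattern = rng.random(n)

H = lowpass_matrix(otf)
S = shift_crop_matrix(n, m, shift)
image_matrix = H @ np.diag(S @ pattern) @ sample  # matrix form of the forward model

# Same model evaluated with FFTs instead of explicit matrices.
illum = np.roll(pattern, shift)[:m]
image_fft = np.fft.ifft(np.fft.fft(sample * illum) * otf)

measured = image_fft.real
cost_vector = measured - image_matrix.real
cost = float(cost_vector @ cost_vector)  # f^T f for a perfectly consistent model
```

The explicit matrices are useful for deriving gradients, while in practice the same operations would be evaluated with FFTs as in the second form.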

A.1.2. Coherent imaging vectorial model

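As with the fluorescence vectorial model, we can rewrite Eq. (3) using vectorial notation:

$$\mathbf{I}_{c,\ell z} = \left| \mathbf{g}_{c,\ell z} \right|^2 = \left| \mathbf{H}_{c,z} \, \mathrm{diag}\!\left( \mathbf{S}(\mathbf{r}_{\ell z}) \mathbf{p}_c \right) \mathbf{o}_c \right|^2, \tag{9}$$

where

$$\mathbf{H}_{c,z} = \mathbf{F}_M^{-1} \, \mathrm{diag}(\tilde{\mathbf{h}}_c) \, \mathrm{diag}(\tilde{\mathbf{h}}_z) \, \mathbf{F}_M. \tag{10}$$

$\mathbf{o}_c$ and $\mathbf{p}_c$ are the sample transmittance function vector and structured field vector, respectively. $\tilde{\mathbf{h}}_c$ and $\tilde{\mathbf{h}}_z$ are the system pupil function and the deliberate defocus pupil function, respectively. With this vectorial notation, we can then express the cost function for a single coherent intensity measurement as

$$f_{c,\ell z} = \mathbf{f}_{c,\ell z}^T \mathbf{f}_{c,\ell z} = \left\| \sqrt{\mathbf{I}_{c,\ell z}} - \left| \mathbf{g}_{c,\ell z} \right| \right\|_2^2, \tag{11}$$

where $\mathbf{f}_{c,\ell z} = \sqrt{\mathbf{I}_{c,\ell z}} - |\mathbf{g}_{c,\ell z}|$ is the cost vector for the coherent intensity measurement.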

A.2. Gradient derivation

A.2.1. Gradient derivation for fluorescence imaging

To optimize Eq. (2) for the variables , , and , we first derive the necessary gradients of the fluorescence cost function. Consider taking the gradient of with respect to , we can represent the gradient row vector as

| (12) |

Turning the row gradient vector into a column vector in order to update the object vector in the right dimension, the final gradient becomes

| (13) |

To compute the gradient of , we first rewrite the cost vector as

| (14) |

Now, we can write the gradient of the cost function with respect to the pattern vector in row and column vector form as

| (15) |

Similar to the derivation of the pattern function gradient, it is easier to work with the rewritten form of the cost vector expressed as

| (16) |

The gradient of the cost function with respect to the OTF vector in the row and column vector form are expressed, respectively, as

| (17) |

where denotes entry-wise complex conjugate operation on any general vector a. One difference between this gradient and the previous one is that the variable to solve, , is now a complex vector. When turning the gradient row vector of a complex vector into a column vector, we have to take a Hermitian operation, , on the row vector following the conventions in [64]. We will have more examples of complex variables in the coherent model gradient derivation.

For taking the gradient of the scanning position, we again rewrite the cost vector :

| (18) |

We can then write the gradient of the cost function with respect to the scanning position as

| (19) |

where q is either the x or y spatial coordinate component of . is the vectorial notation of the spatial frequency function in the q direction.

To numerically evaluate these gradients, we represent them in the functional form as:

| (20) |

where $a^*$ stands for complex conjugate of any general function, $a$, $\mathcal{F}$ is the Fourier transform operator, and $\mathcal{P}$ is a zero-padding operator that pads an image to size $N \times N$ pixels. In this form, $I_{f,\ell}$, $o_f$, and $\tilde{h}_f$ are $M \times M$ 2D images, while $p_f$ is an $N \times N$ image. The gradients for the sample and the structured pattern are of the same size as $o_f$ and $p_f$, respectively. Ideally, the gradient of the scanning position in each direction is a real number. However, due to imperfect implementation of the discrete differentiation in each direction, the gradient will have a small imaginary part, which is dropped in the update of the scanning position.
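Analytic gradients of this kind are easy to get wrong, so a finite-difference check is a useful safeguard. The sketch below does this for the sample and for the already translated and cropped pattern in the single-frame fluorescence cost. It is a minimal sketch under stated assumptions: the toy OTF and names are hypothetical, and mapping the pattern gradient back to the full pattern (inverse shift and zero-padding) is not shown.

```python
import numpy as np


def cone_otf(m, cutoff=0.25):
    """Toy real-valued OTF that falls linearly to zero at `cutoff` (cycles/pixel)."""
    fy = np.fft.fftfreq(m)[:, None]
    fx = np.fft.fftfreq(m)[None, :]
    return np.clip(1.0 - np.hypot(fy, fx) / cutoff, 0.0, None)


def lowpass(x, otf):
    return np.real(np.fft.ifft2(np.fft.fft2(x) * otf))


def lowpass_adjoint(x, otf):
    return np.real(np.fft.ifft2(np.fft.fft2(x) * np.conj(otf)))


def cost(sample, illum, image, otf):
    """Single-frame cost ||I - H(o * P)||^2."""
    residual = image - lowpass(sample * illum, otf)
    return float(np.sum(residual ** 2))


def gradients(sample, illum, image, otf):
    """Analytic gradients of the cost w.r.t. the sample and the cropped pattern."""
    residual = image - lowpass(sample * illum, otf)
    back = lowpass_adjoint(residual, otf)  # back-projected residual
    return -2.0 * illum * back, -2.0 * sample * back


def finite_difference(fun, x, index, eps=1e-4):
    """Central finite difference of `fun` w.r.t. one pixel of `x`."""
    step = np.zeros_like(x)
    step[index] = eps
    return (fun(x + step) - fun(x - step)) / (2.0 * eps)


rng = np.random.default_rng(0)
M = 12
sample, illum, image = (rng.random((M, M)) for _ in range(3))
otf = cone_otf(M)
g_sample, g_illum = gradients(sample, illum, image, otf)
pixel = (3, 7)
fd_sample = finite_difference(lambda o: cost(o, illum, image, otf), sample, pixel)
fd_illum = finite_difference(lambda p: cost(sample, p, image, otf), illum, pixel)
```

Because the cost is quadratic in each variable separately, the central difference agrees with the analytic value up to rounding error.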

A.2.2. Gradient derivation for coherent imaging

For the coherent imaging case, we will derive the gradients of the cost function in Eq. (11) with respect to the sample transmittance function , speckle field , pupil function , and the scanning position . First, we take the gradient of with respect to , which gives the gradient in row and column vector forms as

| (21) |

where the operation denotes entry-wise division between the two vectors, and . In addition, the detailed calculation of can be found in the Appendix of [57].
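The entry-wise division can be illustrated with a small sketch. It assumes an amplitude-based cost of the form f(o) = ||√I − |Ao|||², which is a common choice in phase retrieval but is an assumption here; the matrix `A` is a hypothetical stand-in for the linear forward model, and the function names are illustrative.

```python
import numpy as np

def amp_cost(o, A, I):
    """Assumed amplitude-based cost: || sqrt(I) - |A o| ||^2."""
    g = A @ o
    return float(np.sum((np.sqrt(I) - np.abs(g)) ** 2))

def amp_grad(o, A, I, eps=1e-12):
    """Column-vector gradient of amp_cost with respect to the complex vector o.

    The term g / |g| is the entry-wise division; eps guards against
    division by zero.
    """
    g = A @ o
    resid = g - np.sqrt(I) * g / (np.abs(g) + eps)
    return A.conj().T @ resid

rng = np.random.default_rng(1)
A = rng.normal(size=(8, 5)) + 1j * rng.normal(size=(8, 5))
o_true = rng.normal(size=5) + 1j * rng.normal(size=5)
I = np.abs(A @ o_true) ** 2                      # noiseless intensities
o = o_true + 0.1 * (rng.normal(size=5) + 1j * rng.normal(size=5))

# Finite-difference check of the directional derivative 2 * Re(grad^H d).
d = rng.normal(size=5) + 1j * rng.normal(size=5)
t = 1e-6
numeric = (amp_cost(o + t * d, A, I) - amp_cost(o - t * d, A, I)) / (2 * t)
analytic = 2 * np.real(np.vdot(amp_grad(o, A, I), d))
```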

Next, we take the gradient with respect to the pattern field vector, , and write down the corresponding row and column vectors as

| (22) |

In order to calculate , we need to reorder the dot multiplication of and as we did in deriving the gradient of the pattern for fluorescence imaging.

To correct for aberrations, we need to estimate the system pupil function, . The gradient with respect to the pupil function can be derived as

| (23) |

Finally, the gradient of the scanning position for refinement can be derived as

| (24) |

where q is either the x or y spatial coordinate component of .

To numerically evaluate these gradients, we express them in functional form, as we did for the gradients of the fluorescence model:

| (25) |

Appendix B: Reconstruction algorithm

Using the gradients derived in Appendix A, we summarize here the reconstruction algorithms for fluorescence imaging and coherent imaging.

B.1. Algorithm for fluorescence imaging

First, we initialize the sample, , with the mean image of all the structured-illumination images, , which is approximately a widefield diffraction-limited image. As for the structured pattern, , we initialize it with an all-ones image. The initial OTF, , is set as a non-aberrated incoherent OTF. Initial scanning positions are obtained from the registration of the in-focus coherent speckle images, (z = 0).
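The initialization described above might be sketched as follows. This is a simplified illustration, not the authors' code: it assumes square images, ignores any upsampling to the super-resolution grid, and builds the non-aberrated incoherent OTF as the Fourier transform of the squared magnitude of the coherent PSF of a circular pupil. The `cutoff` parameter (in cycles per pixel) and all function names are hypothetical.

```python
import numpy as np

def circ_pupil(n, cutoff):
    """Binary pupil: ones where |u| <= cutoff (cycles/pixel), zeros elsewhere."""
    u = np.fft.fftfreq(n)
    ux, uy = np.meshgrid(u, u, indexing="xy")
    return (np.hypot(ux, uy) <= cutoff).astype(float)

def ideal_incoherent_otf(n, cutoff):
    """Aberration-free incoherent OTF: Fourier transform of |coherent PSF|^2."""
    psf_coherent = np.fft.ifft2(circ_pupil(n, cutoff))
    psf_incoherent = np.abs(psf_coherent) ** 2
    otf = np.fft.fft2(psf_incoherent)
    return otf / otf[0, 0]  # normalise so that the DC gain is 1

def init_fluorescence(stack, pattern_shape, cutoff):
    """Initial guesses for the sample, the pattern, and the OTF.

    stack has shape (number of images, n, n).
    """
    sample0 = stack.mean(axis=0)        # approximately a widefield image
    pattern0 = np.ones(pattern_shape)   # all-ones structured pattern
    otf0 = ideal_incoherent_otf(stack.shape[-1], cutoff)
    return sample0, pattern0, otf0

# Illustrative call on random data; sizes and cutoff are arbitrary.
stack = np.random.default_rng(0).random((5, 64, 64))
sample0, pattern0, otf0 = init_fluorescence(stack, (80, 80), cutoff=0.1)
```

The resulting OTF has support out to twice the pupil cutoff, as expected for incoherent imaging.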

In the algorithm, Kf is the total number of iterations (Kf = 100 is generally enough for convergence). At every iteration, we sequentially update the sample, structured pattern, system’s OTF and the scanning position using each single frame from to . A Nesterov acceleration step is applied on the sample and the structured pattern at the end of each iteration. The detailed algorithm is summarized in Algorithm 1.

Algorithm 1.

Fluorescence imaging reconstruction

| Require: , , | |

| 1. | initialize |

| 2. | initialize with all one values |

| 3. | initialize with the non-aberrated incoherent OTF |

| 4. | initialize with the scanning position from the registration step |

| 5. | for do |

| 6. | Sequential gradient descent |

| 7. | for do |

| 8. | |

| 9. | |

| 10. | |

| 11. | , where δ is chosen to be small |

| 12. | |

| 13. | Scanning position refinement |

| 14. | = |

| 15. | = |

| 16. | end for |

| 17. | Nesterov’s acceleration |

| 18. | if k = 1 then |

| 19. | |

| 20. | |

| 21. | |

| 22. | else |

| 23. | |

| 24. | |

| 25. | |

| 26. | end if |

| 27. | end for |
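The acceleration step can be illustrated with a generic Nesterov (FISTA-style) momentum sketch. It is a textbook form applied to a full gradient on a toy least-squares problem, and is not claimed to match the exact update in Algorithm 1, where the acceleration follows a pass of sequential per-frame updates. The function name, the toy problem, and the step size are all illustrative.

```python
import numpy as np

def nesterov_descent(grad, x0, step, n_iter):
    """Gradient descent with Nesterov momentum (FISTA-style schedule)."""
    x_prev = x0.copy()  # previous un-accelerated estimate
    y = x0.copy()       # accelerated (look-ahead) point
    t = 1.0
    for _ in range(n_iter):
        x = y - step * grad(y)                      # gradient step at look-ahead
        t_next = (1 + np.sqrt(1 + 4 * t ** 2)) / 2  # momentum schedule
        y = x + ((t - 1) / t_next) * (x - x_prev)   # extrapolate
        x_prev, t = x, t_next
    return x_prev

# Toy problem: minimise 0.5 * ||A x - b||^2.
rng = np.random.default_rng(0)
A = rng.normal(size=(20, 5))
x_true = rng.normal(size=5)
b = A @ x_true
L = np.linalg.norm(A, 2) ** 2  # Lipschitz constant of the gradient

x0 = np.zeros(5)
n_iter = 200
x_hat = nesterov_descent(lambda x: A.T @ (A @ x - b), x0, 1.0 / L, n_iter)
final_cost = 0.5 * np.sum((A @ x_hat - b) ** 2)
```

On the first iteration the momentum weight is zero, so the look-ahead point equals the plain gradient update, which mirrors the separate k = 1 branch in the algorithm.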

B.2. Algorithm for coherent imaging

For coherent imaging, we initialize with all ones. The pattern, , is initialized with the mean of the square root of the registered coherent in-focus intensity stack. The pupil function is initialized with a circ function (a 2D function filled with ones within the defined radius) with the radius defined by the objective NA. Finally, we initialize the scanning position, , from the registration of the intensity stacks, , for the respective focal planes.
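A minimal sketch of this initialization is given below. It assumes the pupil radius in spatial-frequency units is NA/λ, and for simplicity it gives the pattern the same size as one image, which may not hold in the actual reconstruction. The numerical values (pixel size, wavelength, NA) and all names are illustrative assumptions, not the system parameters.

```python
import numpy as np

def circ_pupil(shape, pixel_size, wavelength, na):
    """Circ function: ones where the spatial frequency is within NA / wavelength."""
    fy = np.fft.fftfreq(shape[0], d=pixel_size)
    fx = np.fft.fftfreq(shape[1], d=pixel_size)
    fxx, fyy = np.meshgrid(fx, fy, indexing="xy")
    return (np.hypot(fxx, fyy) <= na / wavelength).astype(complex)

def init_coherent(registered_stack, pixel_size, wavelength, na):
    """Initial guesses for the sample, the speckle field, and the pupil.

    registered_stack has shape (number of images, rows, cols) and holds
    registered in-focus intensity images.
    """
    shape = registered_stack.shape[-2:]
    sample0 = np.ones(shape, dtype=complex)                          # all ones
    pattern0 = np.sqrt(registered_stack).mean(axis=0).astype(complex)
    pupil0 = circ_pupil(shape, pixel_size, wavelength, na)
    return sample0, pattern0, pupil0

# Illustrative call: lengths in micrometres, random data as a placeholder.
stack = np.random.default_rng(0).random((4, 32, 48))
sample0, pattern0, pupil0 = init_coherent(stack, pixel_size=0.5,
                                          wavelength=0.532, na=0.1)
```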

For the coherent imaging reconstruction, we use a total number of iterations to converge. We sequentially update , , , and for each defocused plane (total number of defocused planes is Nz) per iteration. Unlike for our fluorescence reconstructions, we do not use the extra Nesterov’s acceleration step in the QP reconstruction.

Algorithm 2.

Coherent imaging reconstruction

| Require: , , | |

| 1: | initialize with all one values |

| 2: | initialize |

| 3: | initialize with all one values within a defined radius set by the objective NA |

| 4: | initialize with the scanning position from the registration step |

| 5: | for do |

| 6: | Sequential gradient descent |

| 7: | for do |

| 8: | |

| 9: | |

| 10: | if then |

| 11: | |

| 12: | |

| 13: | |

| 14: | , where δ is chosen to be small |

| 15: | else |

| 16: | Do the same update but save to |

| 17: | end if |

| 18: | |

| 19: | Scanning position refinement |

| 20: | |

| 21: | |

| 22: | end for |

| 23: | end for |

Appendix C: Sample preparation

Results presented in this work targeted super-resolution of 1 μm and 2 μm diameter polystyrene microspheres (Thermo Fisher) that were fluorescently tagged to emit at a center wavelength of nm. Monolayer samples of these microspheres were prepared by placing microsphere dilutions (60 μL stock solution/500 μL isopropyl alcohol) onto #1.5 coverslips and then allowing them to air-dry. High-index oil ( at λ = 532 nm) was subsequently placed on the coverslip to index-match the microspheres. An adhesive spacer followed by another #1.5 coverslip was placed on top of the original coverslip to ensure a uniform sample layer for imaging.

Appendix D: Posedness of the problem

In this paper, we illuminate the sample with an unknown speckle pattern to encode both large-FOV and high-resolution information into our measurement. To decode the high-resolution information, we need to jointly estimate the speckle pattern and the sample. This framework shares similar characteristics with the work on blind SIM first introduced by [46], where completely random speckle patterns were sequentially projected onto the sample. Unfortunately, the reconstruction formulation proposed in that work is especially ill-posed due to randomness between the illumination patterns, i.e., if Nimg raw images are taken, there would be Nimg + 1 unknown variables to solve for (Nimg illumination patterns and 1 sample distribution). To better condition this problem, priors based on speckle statistics [46–52] and sample sparsity [48, 51] can be introduced, pushing blind SIM to 2× resolution gain. However, to implement high-content microscopy using SIM, we desire a resolution gain of . Even with priors, we found that this degree of resolution gain was not experimentally achievable with uncorrelated and random speckle illuminations, because the reconstruction formulation is so ill-posed.

In this work, we improve the posedness of the problem by illuminating with a translating speckle pattern, as opposed to randomly changing speckle patterns. Because each individual illumination pattern at the sample is a laterally shifted version of every other illumination pattern, the posedness of the reconstruction framework dramatically increases. Previous works [25, 55, 56] have also demonstrated this concept to effectively achieve beyond resolution gain.
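As a rough, back-of-the-envelope comparison of the two formulations, the unknowns can be counted as follows. The count treats each image as a single unknown array and ignores differences in array size and sampling, so it is only indicative.

```latex
\begin{align*}
\text{random speckle (blind SIM):}\quad
  & \underbrace{N_{\mathrm{img}}}_{\text{patterns}} + \underbrace{1}_{\text{sample}}
    \ \text{unknown images from } N_{\mathrm{img}} \text{ raw images},\\
\text{translated speckle:}\quad
  & \underbrace{1}_{\text{pattern}} + \underbrace{1}_{\text{sample}}
    \ \text{unknown images, plus } 2N_{\mathrm{img}} \text{ scalar shifts } (x, y),
    \ \text{from } N_{\mathrm{img}} \text{ raw images}.
\end{align*}
```

With a translated pattern the number of unknown images stays fixed as more raw images are acquired, whereas with random patterns it grows with every acquisition.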

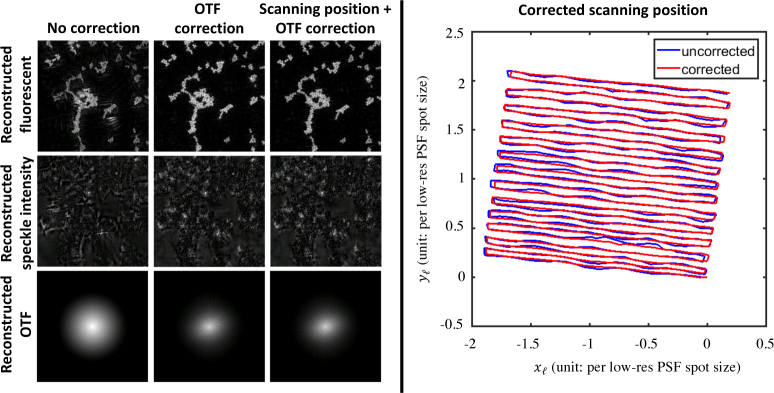

Appendix E: Self-calibration analysis

In Sec. 2.1.2 and 2.2.2, we presented the inverse problem formulation for super-resolution fluorescence and QP. We note that those formulations also included terms to self-calibrate for unknowns in the system’s experimental OTF and the illumination pattern’s scan-position. Here we demonstrate how these calibrations are important for our reconstruction quality.

Fig. 6.

Algorithmic self-calibration significantly improves fluorescence super-resolution reconstructions. Here, we compare the reconstructed fluorescence image, speckle intensity, and OTF with no correction, OTF correction, and both OTF correction and scanning position correction. The right panel shows the overlay of the uncorrected and corrected scanning position trajectories.

To demonstrate the improvement in our fluorescence imaging reconstruction due to the self-calibration algorithm, we select a region of interest from the dataset presented in Fig. 4. Figure 6 shows the comparison of the SR reconstruction with and without self-calibration. The SR reconstruction with no self-calibration contains severe artifacts in reconstructions of both the speckle illumination pattern and the sample’s fluorescent distribution. With OTF correction, dramatic improvements in the fluorescence SR image are evident. OTF correction is especially important when imaging across a large FOV (Fig. 4 and 5) due to space-varying aberrations.

Further self-calibration to correct for errors in the initial estimate of the illumination pattern’s trajectory enables further refinement of the SR reconstruction. The illumination trajectory is smoother after self-calibration. We expect this calibration step to have important ramifications in cases where the physical translation stage is less stable or its incremental translation is less accurate.

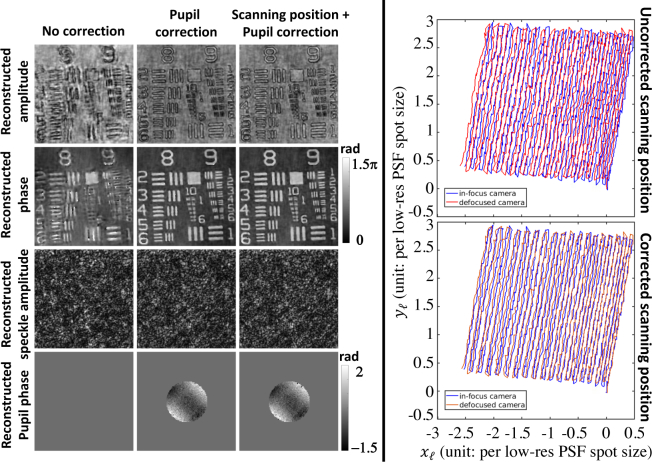

Fig. 7.

Algorithmic self-calibration significantly improves coherent super-resolution reconstructions. We show a comparison of reconstructed amplitude, phase, speckle amplitude, and phase of the pupil function with no correction, pupil correction, and both pupil correction and scanning position correction. The right panel shows the overlay of the scanning position trajectories for the in-focus and defocused cameras before and after correction.

We also test how the self-calibration affects our phase reconstruction, using the same dataset as in Fig. 3. Similar to the conclusion from the fluorescence self-calibration demonstration, pupil correction (coherent OTF) plays an important role in reducing SR reconstruction artifacts as shown in Fig. 7. The reconstructed pupil phase suggests that our system aberration is mainly caused by astigmatism. Further refinement of the trajectory of the illumination pattern improves the SR resolution by resolving one more element (group 9 element 6) of the USAF chart. Paying more attention to the uncorrected and corrected illumination trajectory, we find that the self-calibrated trajectory of the illumination pattern tends to align the trajectories from the two coherent cameras. We also notice that the trajectory from the quantitative-phase channels seems to jitter more compared to the fluorescence channel. We hypothesize that this is due to longer exposure time for each fluorescence acquisition, which would average out the jitter.

Funding

Gordon and Betty Moore Foundation’s Data-Driven Discovery Initiative (GBMF4562); Ruth L. Kirschstein National Research Service Award (F32GM129966).

Disclosures

The authors declare that there are no conflicts of interest related to this article.

References

- 1. Goodman J., Introduction to Fourier optics (Roberts & Co., 2005).

- 2. Lohmann A. W., Dorsch R. G., Mendlovic D., Zalevsky Z., Ferreira C., “Space-bandwidth product of optical signals and systems,” J. Opt. Soc. Am. A 13, 470–473 (1996). doi:10.1364/JOSAA.13.000470

- 3. Mccullough B., Ying X., Monticello T., Bonnefoi M., “Digital microscopy imaging and new approaches in toxicologic pathology,” Toxicol Pathol. 32 (suppl 2), 49–58 (2004). doi:10.1080/01926230490451734

- 4. Kim M. H., Park Y., Seo D., Lim Y. J., Kim D.-I., Kim C. W., Kim W. H., “Virtual microscopy as a practical alternative to conventional microscopy in pathology education,” Basic Appl. Pathol. 1, 46–48 (2008). doi:10.1111/j.1755-9294.2008.00006.x

- 5. Dee F. R., “Virtual microscopy in pathology education,” Human Pathol 40, 1112–1121 (2009). doi:10.1016/j.humpath.2009.04.010

- 6. Pepperkok R., Ellenberg J., “High-throughput fluorescence microscopy for systems biology,” Nat. Rev. Mol. Cell Biol. 7, 690–696 (2006). doi:10.1038/nrm1979

- 7. Yarrow J. C., Totsukawa G., Charras G. T., Mitchison T. J., “Screening for cell migration inhibitors via automated microscopy reveals a Rho-kinase Inhibitor,” Chem. Biol. 12, 385–395 (2005). doi:10.1016/j.chembiol.2005.01.015

- 8. Laketa V., Simpson J. C., Bechtel S., Wiemann S., Pepperkok R., “High-content microscopy identifies new neurite outgrowth regulators,” Mol. Biol. Cell 18, 242–252 (2007). doi:10.1091/mbc.e06-08-0666

- 9. Trounson A., “The production and directed differentiation of human embryonic stem cells,” Endocr. Rev. 27(2), 208–219 (2006). doi:10.1210/er.2005-0016

- 10. Eggert U. S., Kiger A. A., Richter C., Perlman Z. E., Perrimon N., Mitchison T. J., Field C. M., “Parallel chemical genetic and genome-wide RNAi screens identify cytokinesis inhibitors and targets,” PLoS Biol. 2, e379 (2004). doi:10.1371/journal.pbio.0020379

- 11. Starkuviene V., Pepperkok R., “The potential of high-content high-throughput microscopy in drug discovery,” Br. J. Pharmacol 152, 62–71 (2007).

- 12. Xu W., Jericho M. H., Meinertzhagen I. A., Kreuzer H. J., “Digital in-line holography for biological applications,” PNAS 98, 11301–11305 (2001). doi:10.1073/pnas.191361398

- 13. Bishara W., Su T.-W., Coskun A. F., Ozcan A., “Lensfree on-chip microscopy over a wide field-of-view using pixel super-resolution,” Opt. Express 18, 11181–11191 (2010). doi:10.1364/OE.18.011181

- 14. Greenbaum A., Luo W., Khademhosseinieh B., Su T.-W., Coskun A. F., Ozcan A., “Increased space-bandwidth product in pixel super-resolved lensfree on-chip microscopy,” Scientific Reports 3, 1717 (2013). doi:10.1038/srep01717

- 15. Zheng G., Horstmeyer R., Yang C., “Wide-field, high-resolution Fourier ptychographic microscopy,” Nat. Photon. 7, 739–745 (2013). doi:10.1038/nphoton.2013.187

- 16. Tian L., Li X., Ramchandran K., Waller L., “Multiplexed coded illumination for Fourier ptychography with an LED array microscope,” Biomed. Opt. Express 5, 2376–2389 (2014). doi:10.1364/BOE.5.002376

- 17. Tian L., Liu Z., Yeh L., Chen M., Zhong J., Waller L., “Computational illumination for high-speed in vitro Fourier ptychographic microscopy,” Optica 2, 904–911 (2015). doi:10.1364/OPTICA.2.000904

- 18. Pang S., Han C., Kato M., Sternberg P. W., Yang C., “Wide and scalable field-of-view Talbot-grid-based fluorescence microscopy,” Opt. Lett. 37, 5018–5020 (2012). doi:10.1364/OL.37.005018

- 19. Orth A., Crozier K., “Microscopy with microlens arrays: high throughput, high resolution and light-field imaging,” Opt. Express 20, 13522–13531 (2012). doi:10.1364/OE.20.013522

- 20. Orth A., Crozier K., “Gigapixel fluorescence microscopy with a water immersion microlens array,” Opt. Express 21, 2361–2368 (2013). doi:10.1364/OE.21.002361

- 21. Pang S., Han C., Erath J., Rodriguez A., Yang C., “Wide field-of-view Talbot grid-based microscopy for multicolor fluorescence imaging,” Opt. Express 21, 14555–14565 (2013). doi:10.1364/OE.21.014555

- 22. Orth A., Crozier K. B., “High throughput multichannel fluorescence microscopy with microlens arrays,” Opt. Express 22, 18101–18112 (2014). doi:10.1364/OE.22.018101

- 23. Orth A., Tomaszewski M. J., Ghosh R. N., Schonbrun E., “Gigapixel multispectral microscopy,” Optica 2, 654–662 (2015). doi:10.1364/OPTICA.2.000654

- 24. Chowdhury S., Chen J., Izatt J., “Structured illumination fluorescence microscopy using Talbot self-imaging effect for high-throughput visualization,” arXiv:1801.03540 (2018).

- 25. Guo K., Zhang Z., Jiang S., Liao J., Zhong J., Eldar Y. C., Zheng G., “13-fold resolution gain through turbid layer via translated unknown speckle illumination,” Biomed. Opt. Express 9, 260–274 (2018). doi:10.1364/BOE.9.000260

- 26. Lukosz W., “Optical systems with resolving powers exceeding the classical limit. II,” J. Opt. Soc. Am. 57, 932–941 (1967). doi:10.1364/JOSA.57.000932

- 27. Schwarz C. J., Kuznetsova Y., Brueck S. R. J., “Imaging interferometric microscopy,” Opt. Lett. 28, 1424–1426 (2003). doi:10.1364/OL.28.001424

- 28. Kim M., Choi Y., Fang-Yen C., Sung Y., Dasari R. R., Feld M. S., Choi W., “High-speed synthetic aperture microscopy for live cell imaging,” Opt. Lett. 36, 148–150 (2011). doi:10.1364/OL.36.000148

- 29. Hell S. W., Wichmann J., “Breaking the diffraction resolution limit by stimulated emission: stimulated-emission-depletion fluorescence microscopy,” Opt. Lett. 19, 780–782 (1994). doi:10.1364/OL.19.000780

- 30. Betzig E., Patterson G. H., Sougrat R., Lindwasser O. W., Olenych S., Bonifacino J. S., Davidson M. W., Lippincott-Schwartz J., Hess H. F., “Imaging intracellular fluorescent proteins at nanometer resolution,” Science 313, 1642–1645 (2006). doi:10.1126/science.1127344

- 31. Rust M. J., Bates M., Zhuang X., “Sub-diffraction-limit imaging by stochastic optical reconstruction microscopy (STORM),” Nature Methods 3, 793–795 (2006). doi:10.1038/nmeth929

- 32. Heintzmann R., Cremer C., “Laterally modulated excitation microscopy: improvement of resolution by using a diffraction grating,” Proc. SPIE 3568, 185–196 (1999). doi:10.1117/12.336833

- 33. Gustafsson M. G., “Surpassing the lateral resolution limit by a factor of two using structured illumination microscopy,” Journal of Microscopy 198, 82–87 (2000). doi:10.1046/j.1365-2818.2000.00710.x

- 34. Gustafsson M. G. L., “Nonlinear structured-illumination microscopy: wide-field fluorescence imaging with theoretically unlimited resolution,” PNAS 102, 13081–13086 (2005). doi:10.1073/pnas.0406877102

- 35. Chowdhury S., Eldridge W. J., Wax A., Izatt J. A., “Structured illumination multimodal 3D-resolved quantitative phase and fluorescence sub-diffraction microscopy,” Biomed. Opt. Express 8, 2496–2518 (2017). doi:10.1364/BOE.8.002496

- 36. Li D., Shao L., Chen B.-C., Zhang X., Zhang M., Moses B., Milkie D. E., Beach J. R., Hammer J. A., Pasham M., Kirchhausen T., Baird M. A., Davidson M. W., Xu P., Betzig E., “Extended-resolution structured illumination imaging of endocytic and cytoskeletal dynamics,” Science 349, aab3500 (2015). doi:10.1126/science.aab3500

- 37. von Olshausen P., Rohrbach A., “Coherent total internal reflection dark-field microscopy: label-free imaging beyond the diffraction limit,” Opt. Lett. 38, 4066–4069 (2013). doi:10.1364/OL.38.004066

- 38. Chowdhury S., Dhalla A.-H., Izatt J., “Structured oblique illumination microscopy for enhanced resolution imaging of non-fluorescent, coherently scattering samples,” Biomed. Opt. Express 3, 1841–1854 (2012). doi:10.1364/BOE.3.001841

- 39. Chowdhury S., Izatt J. A., “Structured illumination quantitative phase microscopy for enhanced resolution amplitude and phase imaging,” Biomed. Opt. Express 4, 1795–1805 (2013). doi:10.1364/BOE.4.001795

- 40. Gao P., Pedrini G., Osten W., “Structured illumination for resolution enhancement and autofocusing in digital holographic microscopy,” Opt. Lett. 38, 1328–1330 (2013). doi:10.1364/OL.38.001328

- 41. Lee K., Kim K., Kim G., Shin S., Park Y., “Time-multiplexed structured illumination using a DMD for optical diffraction tomography,” Opt. Lett. 42, 999–1002 (2017). doi:10.1364/OL.42.000999

- 42. Chowdhury S., Eldridge W. J., Wax A., Izatt J., “Refractive index tomography with structured illumination,” Optica 4, 537–545 (2017). doi:10.1364/OPTICA.4.000537

- 43. Chowdhury S., Eldridge W. J., Wax A., Izatt J. A., “Structured illumination microscopy for dualmodality 3D sub-diffraction resolution fluorescence and refractive-index reconstruction,” Biomed. Opt. Express 8, 5776–5793 (2017). doi:10.1364/BOE.8.005776

- 44. Schürmann M., Cojoc G., Girardo S., Ulbricht E., Guck J., Müller P., “Three-dimensional correlative single-cell imaging utilizing fluorescence and refractive index tomography,” J. Biophoton. p. e201700145 (2017).

- 45. Shin S., Kim D., Kim K., Park Y., “Super-resolution three-dimensional fluorescence and optical diffraction tomography of live cells using structured illumination generated by a digital micromirror device,” arXiv:1801.00854 (2018).

- 46. Mudry E., Belkebir K., Girard J., Savatier J., Moal E. L., Nicoletti C., Allain M., Sentenac A., “Structured illumination microscopy using unknown speckle patterns,” Nat. Photon. 6, 312–315 (2012). doi:10.1038/nphoton.2012.83

- 47. Ayuk R., Giovannini H., Jost A., Mudry E., Girard J., Mangeat T., Sandeau N., Heintzmann R., Wicker K., Belkebir K., Sentenac A., “Structured illumination fluorescence microscopy with distorted excitations using a filtered blind-SIM algorithm,” Opt. Lett. 38, 4723–4726 (2013). doi:10.1364/OL.38.004723

- 48. Min J., Jang J., Keum D., Ryu S.-W., Choi C., Jeong K.-H., Ye J. C., “Fluorescent microscopy beyond diffraction limits using speckle illumination and joint support recovery,” Scientific Reports 3, 2075 (2013). doi:10.1038/srep02075

- 49. Jost A., Tolstik E., Feldmann P., Wicker K., Sentenac A., Heintzmann R., “Optical sectioning and high resolution in single-slice structured illumination microscopy by thick slice blind-SIM reconstruction,” PLoS ONE 10, e0132174 (2015). doi:10.1371/journal.pone.0132174

- 50. Negash A., Labouesse S., Sandeau N., Allain M., Giovannini H., Idier J., Heintzmann R., Chaumet P. C., Belkebir K., Sentenac A., “Improving the axial and lateral resolution of three-dimensional fluorescence microscopy using random speckle illuminations,” J. Opt. Soc. Am. A 33, 1089–1094 (2016). doi:10.1364/JOSAA.33.001089

- 51. Labouesse S., Allain M., Idier J., Bourguignon S., Negash A., Liu P., Sentenac A., “Joint reconstruction strategy for structured illumination microscopy with unknown illuminations,” arXiv:1607.01980 (2016).

- 52. Yeh L.-H., Tian L., Waller L., “Structured illumination microscopy with unknown patterns and a statistical prior,” Biomed. Opt. Express 8, 695–711 (2017). doi:10.1364/BOE.8.000695

- 53. Förster R., Lu-Walther H.-W., Jost A., Kielhorn M., Wicker K., Heintzmann R., “Simple structured illumination microscope setup with high acquisition speed by using a spatial light modulator,” Opt. Express 22, 20663–20677 (2014). doi:10.1364/OE.22.020663

- 54. Dan D., Lei M., Yao B., Wang W., Winterhalder M., Zumbusch A., Qi Y., Xia L., Yan S., Yang Y., Gao P., Ye T., Zhao W., “DMD-based LED-illumination Super-resolution and optical sectioning microscopy,” Scientific Reports 3, 1116 (2013). doi:10.1038/srep01116

- 55. Dong S., Nanda P., Shiradkar R., Guo K., Zheng G., “High-resolution fluorescence imaging via pattern-illuminated Fourier ptychography,” Opt. Express 22, 20856–20870 (2014). doi:10.1364/OE.22.020856

- 56. Yilmaz H., Putten E. G. V., Bertolotti J., Lagendijk A., Vos W. L., Mosk A. P., “Speckle correlation resolution enhancement of wide-field fluorescence imaging,” Optica 2, 424–429 (2015). doi:10.1364/OPTICA.2.000424

- 57. Yeh L.-H., Dong J., Zhong J., Tian L., Chen M., Tang G., Soltanolkotabi M., Waller L., “Experimental robustness of Fourier ptychography phase retrieval algorithms,” Opt. Express 23, 33213–33238 (2015). doi:10.1364/OE.23.033214

- 58. Bottou L., “Large-scale machine learning with stochastic gradient descent,” International Conference on Computational Statistics pp. 177–187 (2010).

- 59. Nesterov Y., “A method for solving the convex programming problem with convergence rate O(1/k²),” Dokl. Akad. Nauk SSSR 269, 543–547 (1983).

- 60. Streibl N., “Phase imaging by the transport equation of intensity,” Opt. Commun. 49, 6–10 (1984). doi:10.1016/0030-4018(84)90079-8

- 61. Fienup J. R., “Phase retrieval algorithms: a comparison,” Appl. Opt. 21, 2758–2769 (1982). doi:10.1364/AO.21.002758

- 62. Gerchberg R. W., Saxton W. O., “Phase determination for image and diffraction plane pictures in the electron microscope,” Optik 34, 275–284 (1971).

- 63. Guizar-Sicairos M., Thurman S. T., Fienup J. R., “Efficient subpixel image registration algorithms,” Opt. Lett. 33, 156–158 (2008). doi:10.1364/OL.33.000156

- 64. Kreutz-Delgado K., “The Complex Gradient Operator and the CR-Calculus,” arXiv:0906.4835v1 (2009).