Magnetic analog memristor boosts new circuit design for a neuromorphic on-the-fly learning system.

Abstract

Neuromorphic computing uses electronics to solve complicated learning and cognition problems efficiently, as the human brain does. To efficiently implement the functionality of biological neurons, nanodevices and their implementations in circuits are exploited. Here, we describe a general-purpose spiking neuromorphic system that can solve on-the-fly learning problems, based on magnetic domain wall analog memristors (MAMs) that exhibit many different states with persistence over the lifetime of the device. The research includes micromagnetic and SPICE modeling of the MAM, CMOS neuromorphic analog circuit design of synapses incorporating the MAM, and the design of hybrid CMOS/MAM spiking neuronal networks in which the MAM provides variable synapse strength with persistence. Simulations of this neuromorphic system show that the MAM-boosted design can achieve persistence, can demonstrate deterministic fast on-the-fly learning with the potential for reduced circuitry complexity, and can provide increased capabilities over an all-CMOS implementation.

INTRODUCTION

Bioplausible neuromorphic systems exploiting the brain’s computational methods contain hardware realizations of neural plasticity and complex synaptic connections. Neuromorphic systems range from those built with custom asynchronous analog circuits to those built with conventional synchronous digital processors (1–4), and from those that mimic biological behavior precisely to those that mimic it coarsely. One of the main challenges in constructing these neuromorphic systems is the need for persistent memory embedded in the neural processing. This need, coupled with the advantages of small, lower-power circuitry, self-assembly, and greater three-dimensional connectivity, has led neuromorphic researchers to examine the use of nanotechnologies in conjunction with custom analog circuits (5–8).

Spintronic devices have been proposed as promising hardware candidates for neuromorphic computing because of properties such as nonvolatility, low power consumption, and compatibility with CMOS (complementary metal-oxide semiconductor) technologies (9–12). Numerous theoretical neuromorphic proposals based on spintronic devices have been explored, and some of them have been experimentally demonstrated (13–16). Recently, it has also been reported that a spintronic device incorporating a magnetic domain wall (DW) exhibits the functionality of an analog memristor (17, 18), which makes it possible to build neuromorphic circuits that differ from existing proposals. This magnetic domain wall analog memristor (MAM) potentially provides a uniform programming signal and a long retention time, both of which are required for implementing persistent memory in analog synapse circuits.

Here, we describe a brain-plausible neuromorphic on-the-fly learning system with hybrid CMOS/MAM technologies, with the advantages of on-chip, online, and timing perceptive learning without forgetting. This spiking neuromorphic system embeds spike timing–dependent plasticity (STDP) learning, which supports learning during operation and as circumstances change. The proposed system is fully designed with transistor-level circuit details, and no external computing units are required for the demonstrated applications. This is the first application of an STDP-based learning analog hardware implementation that learns to detect differences in the timing of signals. In this system, the network learns the temporal relation of the input sequence using the same neuron and synapse designs. A broad range of perceptual learning tasks can benefit from this brain-plausible design. Our design includes a physical model of the MAM, analog neuromorphic circuits with CMOS/MAM hybridization, and neuronal networks composed of analog circuit neural element models. The spintronic device enables deterministic memristive behavior with ultra-low-energy operation, which has inspired a feasible VLSI (very large scale integration) neuromorphic design that achieves an on-the-fly learning process with spike train signals.

RESULTS

Magnetic domain wall analog memristor

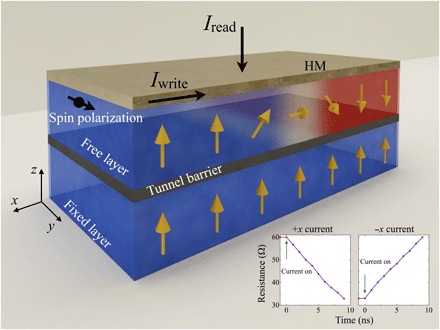

As the key component of our neuromorphic architecture, the characteristic behavior of the MAM is analyzed first. The structure of a MAM is illustrated in Fig. 1. It consists of a heavy metal (HM) layer, a magnetic free layer with perpendicular magnetic anisotropy (PMA), a tunnel barrier, and a magnetic fixed layer. The magnetic free layer hosts a magnetic DW that separates the spin-up (blue) and spin-down (red) regions. When an electrical current flows in the negative x direction through the HM, a y-polarized spin current is injected into the free layer via the spin Hall effect (Fig. 1) and drives a DW motion against the direction of the current. Because of pinning effects (both intrinsic and extrinsic), a critical current is required to initiate DW motion. Only a current with amplitude above the critical value can trigger DW motion, and the critical current here is 0.1 mA (~1 × 10¹¹ A m⁻²). The tunnel magnetoresistance (TMR) of this device can be read out by a vertical (z direction) electrical current. Note that the reading current used here is well below the critical current of the DW motion, so it does not affect the position of the DW. The TMR is determined by the relative magnetization direction between the two magnetic layers and thus depends on the explicit position of the DW in the free layer. Because the DW motion is almost continuous, depending on the sample shape, defects, and other variations, the TMR also changes continuously, imitating the continuously varying strength of an analog synapse.
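The two values quoted for the critical threshold (0.1 mA and about 1 × 10¹¹ A m⁻²) fix the effective cross-section of the in-plane current path, so pulse currents can be cross-checked against the current densities used in the micromagnetic simulations. The short Python sketch below does that arithmetic. It assumes, hypothetically, that the heavy-metal strip has the same 108-nm width as the free layer; the resulting HM thickness is an inference, not a value stated in the text.

```python
# Hedged sketch: relate write-pulse current to current density for the MAM.
# Assumption (not stated in the text): the heavy-metal (HM) strip carrying the
# in-plane current has the same 108-nm width as the free layer.

I_CRIT = 0.1e-3   # critical current, A (from the text)
J_CRIT = 1e11     # approximate critical current density, A/m^2 (from the text)
WIDTH = 108e-9    # free-layer width, m (from the text)


def cross_section(current_a: float, density_a_per_m2: float) -> float:
    """Effective cross-sectional area (m^2) implied by I = J * A."""
    return current_a / density_a_per_m2


def current_for_density(density_a_per_m2: float, area_m2: float) -> float:
    """Current (A) that produces the given density through area A."""
    return density_a_per_m2 * area_m2


area = cross_section(I_CRIT, J_CRIT)             # about 1e-15 m^2
hm_thickness = area / WIDTH                      # about 9.3 nm under the width assumption
pulse_current = current_for_density(5e11, area)  # density used in the Fig. 1 inset

print(f"effective area        = {area:.2e} m^2")
print(f"implied HM thickness  = {hm_thickness * 1e9:.1f} nm")
print(f"current at 5e11 A/m^2 = {pulse_current * 1e3:.2f} mA")
```

Under that assumption, a 5 × 10¹¹ A m⁻² pulse corresponds to about 0.5 mA, consistent with the ±0.5-mA Set/Reset amplitude quoted for the synapse circuit and roughly five times the approximate critical current.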

Fig. 1. Schematic view of the MAM.

Yellow arrows indicate the magnetization direction. An electrical current flowing along the x direction can induce a DW motion in the magnetic free layer. The TMR of this device is read out using a vertical current (z direction). Inset: Calculated resistance of the device after injecting both positive and negative current pulses with an amplitude of 5 × 10¹¹ A m⁻² and a duration of 1 ns.

Combining the in-plane and out-of-plane currents, this MAM device achieves the functionalities of an analog memristor. A fixed in-plane current pulse with amplitude above the critical value is used to move the DW. Each incoming current pulse changes the DW position and the corresponding resistance state, which can be read out via the out-of-plane current. Here, we consider a magnetic free layer with geometry 1000 nm × 108 nm × 1 nm, in which a Néel-type magnetic DW is stabilized. A series of micromagnetic simulations were performed to capture the microscopic dynamics of the MAM (for details of the micromagnetic simulation, see Methods). PMA variations, which are common in experiments and could affect the device performance, were also considered in the simulations. Fifty MAM devices with different PMA variations were simulated and implemented in our circuit simulations. The calculated resistance (averaged over 50 devices) as a function of the input current pulse is shown in Fig. 1 (inset) and is fitted to the reported experimental values (18). Depending on the current direction, the device resistance either increases or decreases quasi-linearly, which is similar to the functionality of the Set/Reset signal for an analog memristor.

To demonstrate the potential of this novel spintronic analog memristor in neuromorphic circuits and systems, an integrated SPICE model is used for circuit simulation. We extracted the micromagnetic simulation results as a lookup table and implemented it in Verilog-A to create a SPICE model. The MAM is assumed to be a four-terminal device, where two terminals are used for the resistor and the other two terminals are used for controlling resistance Set/Reset. The resistance is decreased by the Set signal until a minimum value is reached and increased by the Reset signal until a maximum value is reached. The result is shown in Fig. 2D. The synaptic plasticity of the following neuromorphic circuits is based on this device.

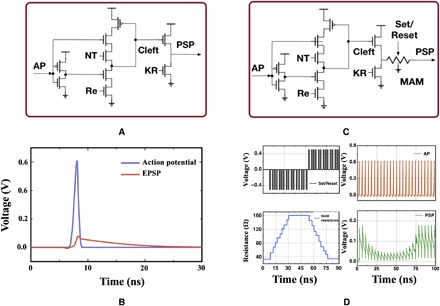

Fig. 2. Synapse circuit implementation and simulation results.

(A) BioRC synapse circuit. NT, neurotransmitter quantity; Re, reuptake control; KR, K+ channel receptor quantity control. (B) Simulation results of the synapse circuit with 45-nm CMOS. (C) Resistive multistate synapse circuit. (D) Simulation result of resistive multistate synapse circuit with hybrid of 45-nm CMOS and MAM.

Multistate synapse circuit

Figure 2 (A and B) shows a Biomimetic Real-Time Cortex (BioRC) analog CMOS excitatory synapse circuit in 45-nm technology (19) and its transient simulation results. The input action potential (AP) consists of spikes generated by a CMOS axon hillock circuit (20) with a maximum amplitude of 0.65 V. The excitatory postsynaptic potential (EPSP) of this particular synapse circuit has a magnitude of approximately 14% of the AP and lasts about five times as long as the AP. This simplified BioRC synapse design realizes short-term memory through the duration of the EPSP and can support long-term memory through the neurotransmitter knob NT, which controls the neurotransmitter concentration in the synapse. Other BioRC synapses also allow control of ion channel receptor concentration, providing another memory mechanism (21). However, because of charge leakage, the BioRC synapse as implemented in CMOS does not provide persistent memory unless the NT and receptor controls are generated continuously. To mimic a multistate biological synapse, the resistance properties of the MAM are exploited in this neuromorphic system. Figure 2 (C and D) shows the circuit design and simulation results. The Set/Reset signal varies the resistance of the MAM, and the output voltage of the synapse circuit is applied to the resistance terminal of the MAM, resulting in an excitatory postsynaptic current (EPSC) output. A load capacitor is connected at the MAM output to measure the EPSP variation in voltage. The input AP is generated by an axon hillock circuit, and the Set/Reset signals are applied as stimuli. The resistance of the MAM changes deterministically with each Set/Reset signal, which is a fixed current pulse with ±0.5-mA amplitude and 1-ns duration generated by a pulse generator. The MAM also contains DW pinning areas near its ends so that, once its resistance reaches the maximum (minimum) value, it no longer responds to the Reset (Set) signal.

On-chip learning method

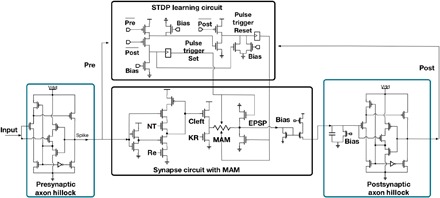

A learning method, STDP, is embedded in this spiking neuromorphic hardware system. STDP is implemented as a circuit in 45-nm CMOS and embedded with a synapse and a MAM to represent the basic learning element shown in Fig. 3.

Fig. 3. Illustration of basic STDP learning element implementation including pre/postsynaptic neurons simplified to the axon hillock, synapse circuit with MAM, STDP learning circuit, current mirror for isolation, and capacitor for current integration.

STDP learning

In a spiking neuromorphic system, it is natural to use biomimetic STDP as the learning method. In STDP, the weight dynamics depend not only on the current weight but also on the timing relationship between presynaptic and postsynaptic APs (22). This means that, besides the synaptic weight, each synapse keeps track of the recent presynaptic spike history. In our STDP model, every time a presynaptic spike arrives at the synapse, it causes charge Xpre to accumulate in the diffusion capacitance of a transistor, and this charge then decays gradually. Every time the postsynaptic neuron spikes, charge Xpost is likewise accumulated and then decays. When a postsynaptic spike arrives at the synapse by backpropagation from the axon hillock, the weight change Δω is calculated on the basis of the presynaptic charge. A simplified STDP mathematical model is given below

| (1) |

where η is the amount of weight change of a synapse at each time step, Xpre is the accumulated charge from the pre signal, Xpost is the accumulated charge from the post signal, and C is a constant greater than Xpre. The equation assumes that both Xpre and Xpost rise instantly and then decay over time. If the post signal arrives before the pre signal decays to 0, the weight of this synapse is increased by η. If only the post signal arrives, which means that the output neuron fires without any preceding presynaptic firing, the weight of this synapse is decreased by η.
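The verbal rule above can be illustrated with a small Python sketch that tracks an exponentially decaying presynaptic trace and applies ±η at each postsynaptic spike. It is not a reconstruction of Eq. 1: the exponential decay, the time constant, and the threshold below which Xpre counts as "decayed to 0" are hypothetical choices made only for illustration.

```python
import math
from typing import Optional

# Hypothetical parameters, for illustration only (not taken from the paper).
ETA = 1.0         # weight change per learning event (the text's eta)
TAU_PRE = 10e-9   # decay time constant of the presynaptic trace X_pre, s
EPS = 1e-3        # trace level treated as "decayed to 0"


def pre_trace(t_pre: Optional[float], t: float) -> float:
    """X_pre at time t: jumps to 1 at t_pre, then decays exponentially."""
    if t_pre is None or t_pre > t:
        return 0.0
    return math.exp(-(t - t_pre) / TAU_PRE)


def stdp_update(weight: float, t_pre: Optional[float], t_post: float) -> float:
    """Weight after a postsynaptic spike at t_post.

    +ETA if the presynaptic trace has not yet decayed (pre before post);
    -ETA if there was no preceding presynaptic spike or its trace has decayed.
    """
    if pre_trace(t_pre, t_post) > EPS:
        return weight + ETA
    return weight - ETA


# Usage: pre at 0 ns, post at 5 ns -> strengthen; then a lone post -> weaken.
w = stdp_update(0.0, 0.0, 5e-9)
w = stdp_update(w, None, 50e-9)
```

Because the update only fires on a postsynaptic spike, the sketch, like the text, has no separate rule for a presynaptic spike arriving after the postsynaptic one; such a spike simply does not count toward the next update.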

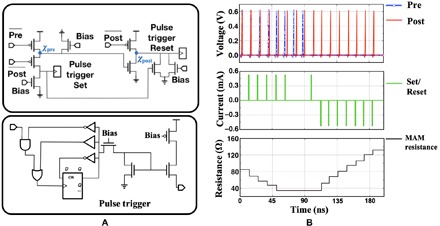

The novel biomimetic circuit implementation of this STDP equation is shown in Fig. 4A; only eight transistors and two pulse generators are used in the circuit. The circuit operates on charges, avoiding continuous current to save power. Each synapse in this design has its own STDP circuit, and the power consumption of memory operations can be reduced. η is analogous to the resistance change of the MAM for each pulse. Xpre and Xpost are analogous to electrical charges, and these charge signals decay over time through biasing transistors connected to ground. If and only if the pre signal arrives first, the gate of the connected post-gated transistor is charged. If the post signal then arrives, the Set pulse is triggered and the Reset signal is inhibited by discharging. The resistance of the MAM is decreased by one Set pulse, which increases the strength of this synapse. If only the post signal arrives, the Set signal is not triggered because the pre transistor gate has not been precharged, and the post signal triggers the Reset pulse without discharging inhibition. The resistance of the MAM is increased by one Reset pulse, which decreases the strength of this synapse. All the charging nodes in this circuit are discharged by a constant bias transistor to implement the differential timing factor dQ/dt. In the mathematical model, the amplitude of Δω is a continuous value depending on the product of η and the differential equation. In the circuit implementation, however, the amplitude of Δω is a discrete value: the resistance change of the MAM for each pulse. A positive-edge input triggers this circuit to generate one current pulse output with fixed amplitude and duration, in this case 0.5 mA and 1 ns. The simulation results shown in Fig. 4B present three scenarios:

Fig. 4. STDP circuit implementation and simulation results.

(A) STDP learning circuit. (B) Simulation results of the STDP learning circuit and the MAM response.

1) Scenario 1: If both pre and post spikes arrive in sequence and the timing interval is short enough, the Set pulse is triggered and the resistance of the MAM is decreased. Once the resistance of the MAM reaches its minimum value, the MAM no longer responds to the Set pulse.

2) Scenario 2: If both pre and post spikes arrive in sequence with a timing interval slightly longer than in the first scenario, neither Set nor Reset is triggered. This scenario is shown in Fig. 4B around 70 ns. The reason is that the decayed Xpre can no longer enable the Set pulse trigger, but it is still strong enough to discharge Xpost and thus prevent the post signal from triggering the Reset signal.

3) Scenario 3: If only the post signal arrives, or the time interval between the pre and post signals is long enough, the Reset signal is triggered and the resistance is increased. Once the resistance of the MAM reaches its maximum value, the MAM no longer responds to the Reset pulse.
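The three scenarios behave like a two-threshold test on the decayed presynaptic charge at the moment of the post spike, followed by a saturating one-step resistance update. The Python sketch below encodes that reading. All numbers (the decay constant, the hypothetical thresholds V_SET and V_INHIBIT, and the normalized resistance range and step) are illustrative assumptions rather than circuit values from the paper.

```python
import math
from typing import Optional, Tuple

# Hypothetical values, chosen only to reproduce the three scenarios qualitatively.
TAU = 20e-9        # decay constant of the precharged pre node, s
V_SET = 0.6        # normalized X_pre still able to fire the Set pulse generator
V_INHIBIT = 0.05   # normalized X_pre still able to discharge X_post (inhibit Reset)
R_MIN, R_MAX, R_STEP = 1.0, 1.9, 0.1   # normalized MAM resistance range and step


def stdp_event(r: float, t_pre: Optional[float], t_post: float) -> Tuple[str, float]:
    """Return (pulse, new_resistance) for a post spike arriving at t_post."""
    x_pre = 0.0
    if t_pre is not None and t_pre <= t_post:
        x_pre = math.exp(-(t_post - t_pre) / TAU)
    if x_pre >= V_SET:
        # Scenario 1: Set pulse lowers resistance (stronger synapse), clamped at R_MIN.
        return "set", max(R_MIN, r - R_STEP)
    if x_pre >= V_INHIBIT:
        # Scenario 2: too weak to Set, but strong enough to inhibit Reset.
        return "none", r
    # Scenario 3: lone or late post spike; Reset raises resistance, clamped at R_MAX.
    return "reset", min(R_MAX, r + R_STEP)
```

With these assumed values, a 5-ns pre-to-post interval triggers Set, a 30-ns interval falls into the dead zone of scenario 2, and a 100-ns interval (or a lone post spike) triggers Reset; the clamps mimic the saturation provided by the pinning areas.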

Neuronal networks

We connect neuron and synapse circuits in a networked manner to create a neuronal network. Unlike traditional artificial neural networks processing mathematical models of neural elements on digital processors, our neuromorphic hardware models an asynchronous neuronal network with custom analog circuits modeling biological neurons to a first order. Both pattern recognition and timing perceptive neuronal networks are presented in this section, and the details are discussed below.

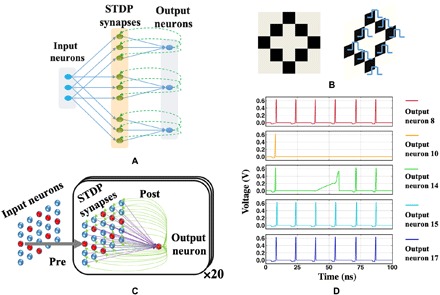

Feed-forward neuronal network for pattern recognition

The basic model of the feed-forward neuronal network is shown in Fig. 5A. Figure 5C shows the configuration of the feed-forward neuronal network, which consists of 25 input neurons, 20 output neurons, and 500 synapses with random initial strengths. The randomness generation circuit is introduced in (23). The input neuron is simplified to the axon hillock circuit shown in Fig. 3. The EPSC output of each synapse is connected to a current mirror (24), as shown in Fig. 3. All isolated EPSC outputs from the current mirrors are connected together to realize linear current addition. The summed current feeds an RC (resistance·capacitance) element (10 MΩ/500 fF) that forms a simplified dendritic arbor and converts the current to a voltage output. The output of the dendritic arbor is then connected to the axon hillock of each output neuron. The pattern in Fig. 5B is fed into the neuronal network, and the simulation results are shown in Fig. 5D. The pattern is 5 × 5 pixels with 1-bit binary values; it is encoded as 0.7-V pulses of 0.1-ns duration and repeated six times with a 15-ns interval between repetitions. These pulses activate the input neurons, and the network then learns this pattern adaptively. After the first repetition, five output neurons fired spikes in response to this pattern. If the input pattern stimulates an output neuron, the synapses that received both pre and post signals are strengthened according to STDP learning scenario 1 or keep the same strength according to scenario 2, while the synapses that received only the post signal are weakened according to scenario 3.
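The input encoding and the dendritic RC element can be checked with simple arithmetic. The Python sketch below builds the pulse schedule for a 5 × 5 binary pattern (0.7 V, 0.1 ns, six presentations 15 ns apart) and computes the time constant of the 10-MΩ/500-fF arbor. The example pattern is hypothetical (it is not the Fig. 5B pattern), and firing all active pixels simultaneously at the start of each presentation is an assumption.

```python
# Hedged sketch of the input encoding described in the text.
# Assumptions: all active pixels pulse simultaneously at the start of each
# presentation, and the example pattern below is hypothetical (not Fig. 5B).

PULSE_V = 0.7        # pulse amplitude, V
PULSE_W = 0.1e-9     # pulse width, s
REPEATS = 6          # number of presentations
INTERVAL = 15e-9     # interval between presentations, s

pattern = [          # hypothetical 5 x 5 binary pattern (a plus sign)
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [1, 1, 1, 1, 1],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
]


def encode(pattern):
    """Return (input_neuron_index, start_time_s, amplitude_V, width_s) tuples."""
    pixels = [v for row in pattern for v in row]   # input neuron i <- pixel i
    return [(i, k * INTERVAL, PULSE_V, PULSE_W)
            for k in range(REPEATS)
            for i, v in enumerate(pixels) if v]


pulses = encode(pattern)     # 9 active pixels x 6 presentations
tau = 10e6 * 500e-15         # RC time constant of the dendritic arbor, s
n_synapses = 25 * 20         # all-to-all input/output connections
```

The resulting τ = 5 µs is roughly 330 times the 15-ns presentation interval, which suggests that leakage through the 10-MΩ resistor is small over a single presentation, so the arbor behaves close to an integrator on that time scale.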

Fig. 5. Neuronal network configuration for pattern recognition.

(A) Feed-forward neuronal network example. Each input neuron receives image pattern pulses from one pixel, generating a presynaptic spike for synapses. The STDP synapses are initialized with random weights and receive pre and post spikes. The output neurons receive and integrate EPSPs using current adders. If the voltage of the capacitor accumulating the EPSPs exceeds 0.4 V, the output neuron generates a post spike. The network has m input neurons, n output neurons, and m × n synapses. In this example, three input neurons, three output neurons, and nine synapses are shown. (B) Pattern example input to the neural network. The pattern is converted into pulses and fed to the input neurons six times in succession. (C) Network configuration of 25 input neurons, 500 synapses, and 20 output neurons. This network is simulated using HSPICE at the transistor level. (D) Simulation results of the pattern recognition. Neurons 8, 14, 15, and 17 learned this pattern, while neuron 10 did not. Other nonresponding output neurons are not shown here.

Owing to the randomized initial synapse strengths, output neurons 8, 15, and 17 have relatively stronger initial states and favorable timing dynamics, so they learn the pattern during the first trial. Output neuron 10 has reasonable initial synapse states, but the delay between its pre and post signals is too long, so its synapses are weakened rather than strengthened. Output neuron 14 weakens its synapses during the first trial, like output neuron 10, but the timing is favorable for trials 3 and 4. This is because STDP learning scenario 2 occurs for neuron 14 during the first trial, so neuron 14 still receives a high enough EPSP to fire, and STDP eventually strengthens its synapses to learn this pattern. After training with six trials, this pattern is stably recognized by four output neurons. A more detailed simulation result of learning three successive patterns can be found in the Supplementary Materials. Because unsupervised learning is implemented here, multiple neurons may fire for the same pattern. The trained neurons firing for the same pattern can be clustered into one class using traditional clustering techniques such as the winner-take-all method (25) or the normalization method (26). The pattern capacity of this neuronal network depends on the number of post neurons. For instance, in the above network configuration, the maximum number of recognized patterns is 20, and each recognizable pattern has a certain variation tolerance. For more patterns, this network can be scaled with more post neurons. For deeper scaling of this type of network, adding more layers can increase its accuracy. However, the extra layers just implement an averaging function for a set of patterns or features.

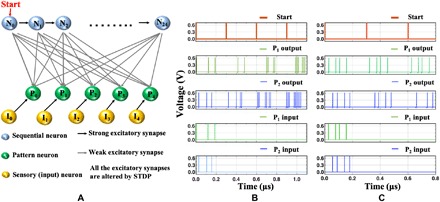

Neuronal network for timing perceptive learning

A neuronal network for timing perceptive learning is also implemented using the same circuit elements introduced above in a different network configuration. This example is provided to further highlight the role of MAMs, STDP, and persistent memory in learning and recall. The neuronal network is not intended to mimic biological mechanisms and is different from previously reported continuous in situ learning (27–29). Beyond general pattern recognition, the neuronal network proposed here can also learn the temporal relationship of a pattern sequence. It recognizes a sequence of spikes from the input neurons, where each input neuron represents a different pattern to be recognized. The neuronal network is illustrated in Fig. 6A: 25 neurons are connected as a sequential neuron chain with strong excitatory synapses, and another 5 neurons representing patterns are fully connected with the neuron chain through STDP excitatory synapses, with the sequential neurons on the presynaptic side and the pattern neurons on the postsynaptic side. The sensory neurons are connected as inputs to the pattern neurons in a one-to-one manner through strong excitatory synapses. The pattern neurons can represent signals or symbols that are a complex combination of signals from the sensory neurons. The learning process of this neuronal network is shown in Fig. 6B. After the start signal triggers the neuron chain from N0, the neurons fire successively from N0 to N24, behaving like a clock. While the neuron chain is firing, any firing sequence of the pattern neurons P0 to P4 is learned by the STDP synapses between the sequential neurons and the pattern neurons. After the learning process, this neuronal network enters the recall process, shown in Fig. 6B around 0.3, 0.6, and 0.9 μs.
During the recall process, the sequential neuron chain is triggered by the recall signal to fire successively, and the pattern neurons fire according to the learning result while the sensory neurons are quiet. Because of the subsequent STDP, the strong excitatory synapses between the sensory neurons and the pattern neurons are depressed and become weak excitatory synapses. This depression is designed to be very strong so that the connection breaks in one shot and potentially redundant learning is avoided. As a result of the recall process, the learning result is reinforced, and the pattern neurons are isolated from the sensory neurons to complete the abstraction. When multiple sequential neurons connect to one pattern neuron, STDP leads to forgetting during the recall process. For example, in Fig. 6A, N1 and N24 can both trigger the P0 pattern neuron through a learned strong excitatory synapse. If N1 fires and triggers P0, the synapse between N24 and P0 is weakened and may be forgotten. This issue is solved by setting the amplitude of strengthening to be five times larger than the amplitude of weakening. After several cycles of recall, the pattern neurons can be completely isolated from the sensory neurons with little influence on the synapses between the pattern neurons and the sequential neurons, as shown in Fig. 6C.

Fig. 6. Neuronal network configuration for timing perceptive learning.

(A) Neuronal network for timing perceptive learning. (B) Simulation result of the timing perceptive learning without timing factor calibration. (C) Simulation result of the timing perceptive learning with timing factor calibration.

The delay between successive sequential neurons is about 5.9 ns, so a full pass through the 25-neuron chain takes less than 150 ns, and the start signal is given every 300 ns to start each period of the neuron chain. The most important difference between the learning period and the recall period is the timing shift of the pattern neuron firing. During learning, the pre and post signals occur within a small time window, but during the recall process, the post signal is triggered by the pre signal. The timing shift is ±3 ns, so it does not significantly affect the accuracy of the learned sequence. However, because our neuromorphic system is an online learning system, this shift triggers learning for the next sequential neuron during the recall period. As a result, the learned sequence shifts every period, and the recall process produces an erroneous duplication, like the P1 and P2 outputs shown in Fig. 6B. This issue is solved in Fig. 6C by determining the minimum overlap time window for two successive STDP processes in this circuit configuration. By applying and adjusting the overlap time window, this neuronal network can handle both the learning and recall processes without extra control. The missing signal in the third period of the S2 output is due to multiple sequential neurons connecting to one pattern neuron, as discussed above. Multiple versions of this neuronal network can be combined and managed by a higher-level architecture for more complicated applications.
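Because the sequential chain behaves like a clock, a pattern neuron's firing time can be mapped to the chain neuron that fired most recently before it. The Python sketch below makes that mapping explicit using the 5.9-ns stage delay and 25 chain neurons from the text. The mapping rule (most recent preceding chain neuron) and the example firing times for P0 to P2 are hypothetical simplifications, not the circuit's actual STDP window.

```python
# Hedged sketch: the sequential neuron chain as a clock.
# Assumptions: N0 fires at the start signal (t = 0 of each period), each stage
# adds a fixed 5.9-ns delay, and a pattern spike is associated with the most
# recent chain neuron that fired at or before it.

STAGE_DELAY = 5.9e-9   # delay between successive sequential neurons, s
N_CHAIN = 25           # sequential neurons N0..N24
PERIOD = 300e-9        # start-signal interval, s


def chain_index(t_in_period: float) -> int:
    """Index of the last sequential neuron that fired at or before t."""
    k = int(t_in_period // STAGE_DELAY)
    return min(k, N_CHAIN - 1)


chain_span = STAGE_DELAY * (N_CHAIN - 1)   # N0 -> N24, about 141.6 ns

# Hypothetical firing times of pattern neurons during a learning period.
learned = {"P0": 7e-9, "P1": 40e-9, "P2": 100e-9}
synapses = {p: chain_index(t) for p, t in learned.items()}   # pattern -> chain neuron

# A +3-ns recall-time shift can push a spike into the next stage's window.
shifted = chain_index(learned["P1"] + 3e-9)
```

In this toy mapping, shifting the P1 spike by +3 ns, about half of a 5.9-ns stage, moves it from N6's window into N7's, which illustrates how the recall-time shift described above can cause learning on the next sequential neuron.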

DISCUSSION

We presented a neuromorphic system with magnetic analog memristor, analog circuit implementation, and spiking-based on-the-fly learning neuronal networks. All the circuit components are built using conventional CMOS components except the MAM. Other memristive devices can also be used for the synapse circuit in our framework with an appropriate pulse trigger. This work is designed to be a biomimetic implementation of neuromorphic computing system elements as opposed to conventional neural networks, as the subunits of biological neural compartments are efficiently realized with analog circuits and the operation of each neuron is independent of the others and asynchronous. For instance, the subunits in the central nervous system include multiple synaptic terminals (so-called boutons) of a presynaptic neuron, postsynaptic terminals, dendritic arbors, and axon hillock of the postsynaptic neuron. Moreover, each biological synapse contains presynaptic release sites, cleft, and postsynaptic ion channels. Furthermore, our implementation of plasticity of the learning synapse through changes in the individual MAM is analogous to the fact that each of the synaptic connections between a pair of biological neurons is individually plastic. The large number of MAM devices during the potentiation/depression of the learning synapse circuit is analogous to the intermediate process of biological short-term and long-term memory and can also be used to realize structural plasticity in future circuits.

The proposed architecture also offers several advantages in terms of reliability. Even if the resistance amplitudes of the devices vary somewhat, the design continues to provide synaptic efficacy and plasticity. In addition, each device within a synapse is programmed less frequently than a reused integrated memory would be, which effectively increases the overall lifetime of a MAM synapse. The potentiation and depression are event-driven processes, further improving power efficiency and mitigating endurance-related issues. The power consumption of the MAM can be further reduced by optimizing material parameters such as the effective spin Hall angle and the damping constant of the free layer (30–33).

In summary, we propose a novel hybrid neuromorphic system comprising MAM devices with nonideal characteristics to efficiently implement on-the-fly learning when processing spike signals. This system is shown to overcome several important challenges that are characteristic of traditional memristive devices proposed for neuromorphic circuits, such as asymmetric conductance response, limited resolution and dynamic range, and device-level variability (34). The analog circuit-based STDP design also offers low transistor cost, low power consumption, and intrinsic tolerance for process variations (35). Hence, the proposed system and the corresponding simulations are an important step toward the realization of highly efficient, large-scale neuronal networks based on advanced nanodevices with typical experimentally observed nonideal characteristics. Our results could also benefit applications such as neuromorphic robots, context understanding, and video processing.

METHODS

Details of micromagnetic simulation

The micromagnetic simulations were carried out using MuMax3 (36) with the damping-like spin torque (τDL) and the field-like spin torque (τFL) terms

| (2) |

where s is the magnetization unit vector and σ is the spin polarization. We considered a magnetic DW device with size 1000 nm × 108 nm × 1 nm. The simulation cell size was 2 nm × 2 nm × 1 nm. The coefficient η describes the amplitude of τDL and depends on the current density j, the spin Hall angle θSH = 0.1 of the HM, and the thickness d of the magnetic free layer. ηFL = ϵη denotes the amplitude of the field-like spin torque, and we took ϵ = 0.1 in the simulation. We assumed a thick HM layer through which almost all of the current flows; thus, we could neglect the in-plane spin transfer torque for the DW motion. The exchange interaction J = 15 pJ/m, PMA Ku = 800 kJ/m³, Dzyaloshinskii-Moriya interaction D = 0.5 mJ/m², damping constant α = 0.3, saturation magnetization Ms = 1000 kA/m, and magnetic dipole-dipole interaction were taken into account in the simulation.
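A few of the quantities above can be checked with back-of-the-envelope arithmetic: the number of simulation cells implied by the geometry, and the size of the damping-like torque. The Python sketch below assumes the commonly used spin Hall effective-field expression B_DL = ħθSH j/(2eMs d); the paper's own normalization of η is not reproduced here and may differ (for example, by a factor of the gyromagnetic ratio). The current density 5 × 10¹¹ A m⁻² is the value used for the Fig. 1 inset.

```python
# Hedged sketch: back-of-the-envelope numbers for the micromagnetic setup.
# Assumption: the damping-like torque amplitude is expressed as the commonly used
# spin Hall effective field B_DL = hbar * theta_SH * j / (2 * e * Ms * d);
# the paper's own normalization of eta may differ.

HBAR = 1.054571817e-34   # reduced Planck constant, J s
E = 1.602176634e-19      # elementary charge, C

LENGTH, WIDTH, THICK = 1000e-9, 108e-9, 1e-9   # free-layer size, m (from the text)
CELL = (2e-9, 2e-9, 1e-9)                      # simulation cell, m (from the text)
THETA_SH = 0.1           # spin Hall angle (from the text)
MS = 1000e3              # saturation magnetization, A/m (from the text)
EPSILON = 0.1            # field-like / damping-like ratio (from the text)

n_cells = (round(LENGTH / CELL[0]) * round(WIDTH / CELL[1])
           * round(THICK / CELL[2]))           # 500 x 54 x 1 cells


def damping_like_field(j: float) -> float:
    """Spin Hall damping-like effective field (T) for current density j (A/m^2)."""
    return HBAR * THETA_SH * j / (2 * E * MS * THICK)


b_dl = damping_like_field(5e11)   # roughly 16.5 mT
b_fl = EPSILON * b_dl             # field-like amplitude, following eta_FL = epsilon * eta
```

Under this assumed expression, the Fig. 1 pulse density gives a damping-like field of roughly 16 mT and a field-like field about one tenth of that, and the mesh contains 27,000 cells.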

To further account for weak disorder, which is common in DW devices, random grain shapes were also included with an average grain size of 20 nm. The random grain shapes were implemented with Voronoi tessellation, and the PMA was randomly distributed over all grains following a Gaussian distribution with an SD of 0.02 Ku. Fifty devices were simulated with different random PMA distributions. The corresponding lookup tables were used in the circuit simulations, and there was no obvious performance change in the circuit simulations. Pinning areas near the ends of the magnetic free layer were simulated by setting a larger PMA constant, which could be achieved experimentally either by tuning the thickness of the magnetic free layer or by adding an adjacent pinning layer.
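The disorder model can be illustrated with NumPy: scatter grain seeds at the density implied by a 20-nm average grain size, assign each 2-nm cell to its nearest seed (a discrete Voronoi tessellation), draw one Gaussian PMA value per grain with an SD of 0.02 Ku, and raise the PMA near both ends to form pinning areas. This is an independent sketch, not the MuMax3 input used in the paper; the uniform random seed placement, the 20-nm pinning-strip width, and the 1.2× PMA boost are hypothetical choices.

```python
import numpy as np

# Hedged sketch of the grain-disorder model (an independent illustration, not the
# MuMax3 input used in the paper). Hypothetical choices: uniform random grain
# seeds, a 20-nm pinning strip at each end, and a 1.2x PMA boost in those strips.

KU = 800e3                        # mean PMA constant, J/m^3 (from the text)
SIGMA = 0.02 * KU                 # grain-to-grain SD (from the text)
GRAIN_NM, CELL_NM = 20.0, 2.0     # average grain size and in-plane cell size, nm
NX, NY = 500, 54                  # cells along x (1000 nm) and y (108 nm)
PIN_CELLS, PIN_FACTOR = 10, 1.2   # hypothetical pinning strip width (cells) and boost


def pma_map(seed: int) -> np.ndarray:
    """Per-cell PMA constant (J/m^3) for one simulated device."""
    rng = np.random.default_rng(seed)
    n_grains = round(NX * NY * CELL_NM**2 / GRAIN_NM**2)   # 270 grains
    seeds = rng.uniform([0.0, 0.0], [NX * CELL_NM, NY * CELL_NM], size=(n_grains, 2))

    # Cell centres, flattened to an (NX * NY, 2) array of (x, y) in nm.
    x = (np.arange(NX) + 0.5) * CELL_NM
    y = (np.arange(NY) + 0.5) * CELL_NM
    xx, yy = np.meshgrid(x, y, indexing="ij")
    pts = np.column_stack([xx.ravel(), yy.ravel()])

    # Discrete Voronoi tessellation: each cell belongs to its nearest seed.
    d2 = ((pts**2).sum(axis=1)[:, None]
          - 2.0 * pts @ seeds.T
          + (seeds**2).sum(axis=1)[None, :])
    grain_id = d2.argmin(axis=1).reshape(NX, NY)

    # One Gaussian PMA value per grain, broadcast to the cells of that grain.
    ku = rng.normal(KU, SIGMA, size=n_grains)[grain_id]

    # Pinning areas near both ends of the free layer: larger PMA.
    ku[:PIN_CELLS, :] *= PIN_FACTOR
    ku[-PIN_CELLS:, :] *= PIN_FACTOR
    return ku


devices = [pma_map(s) for s in range(50)]   # fifty device variants
```

Seeding each device separately keeps the fifty variants reproducible, which mirrors the idea of generating one lookup table per simulated device.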

The device resistance was estimated on the basis of the micromagnetic simulation results and calibrated to the experimental values (18, 37–39). The TMR can be expressed in terms of x, L, RP, and RAP (17), where x is the position of the DW and L is the length of the magnetic layer. RP and RAP are the device resistances for the parallel and antiparallel magnetization configurations, respectively, and the TMR ratio is taken to be 90%. The effect of voltage on the TMR was also considered in the lookup table (38).
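The expression from (17) is not reproduced in this text, so the Python sketch below assumes a common two-channel picture instead: the part of the junction on one side of the DW is in the parallel state, the rest is antiparallel, and the two parts conduct in parallel with areas proportional to x and L − x. Which side is parallel, and the normalized RP = 1, are assumptions; only the 90% TMR ratio comes from the text.

```python
def mtj_resistance(x: float, length: float, r_p: float, tmr_ratio: float = 0.9) -> float:
    """Read resistance with the DW at position x (0 <= x <= length).

    Hypothetical two-channel model: the fraction x/length of the junction is
    parallel (resistance r_p for the full area), the remainder is antiparallel,
    and the two fractions conduct in parallel.
    """
    if not 0.0 <= x <= length:
        raise ValueError("DW position must lie inside the free layer")
    r_ap = r_p * (1.0 + tmr_ratio)        # TMR ratio = (R_AP - R_P) / R_P
    frac = x / length
    conductance = frac / r_p + (1.0 - frac) / r_ap
    return 1.0 / conductance


# Normalized R_P = 1: resistance falls from 1.9 (x = 0) to 1.0 (x = L).
profile = [mtj_resistance(x, 1000.0, 1.0) for x in (0.0, 250.0, 500.0, 750.0, 1000.0)]
```

In this model the conductance, rather than the resistance, is linear in the DW position; the quasi-linear resistance-versus-pulse behavior in Fig. 1 also depends on how far the DW moves per pulse, which the sketch does not model.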

SPICE

The SPICE model of the MAM was developed with a lookup table method in Verilog-A. The numerical simulation results were extracted as lookup tables and then embedded in a Verilog-A model block. Electrical terminals were defined as a, b, c, and d. Terminals a and b were used as reading ports: a resistor was assigned between a and b, and its value was indexed from the lookup tables. Terminals c and d were used as writing ports with a constant resistance assigned in between. An internal variable “state” was updated iteratively according to the voltage between c and d, and its value was then used as the lookup index to retrieve the instantaneous resistance value. All the circuit simulations were executed on HSPICE version L-2016.06 for linux64. The PTM (Predictive Technology Model) 45-nm LP (Low Power) library from ASU (Arizona State University) (19) was used for the CMOS models in this work.
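The lookup-table behavior can be mimicked outside SPICE with a small behavioral model. The Python sketch below keeps an integer state that each above-threshold write pulse across c-d moves by one step, clamps it at the table ends to mimic the pinning areas, and returns the a-b read resistance from the table. It is not the authors' Verilog-A code: the table values, the 100-Ω write resistance, and the sign convention (positive c-to-d current acts as Set) are hypothetical; only the 0.1-mA critical current comes from the text.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MAMLookupModel:
    """Behavioural stand-in for the four-terminal lookup-table MAM model.

    Hypothetical: table values, write resistance, and the sign convention
    (positive c-to-d current = Set) are illustrative, not the authors' values.
    """
    table: List[float]        # read resistance (ohm) per state, ascending
    r_write: float = 100.0    # constant resistance between c and d, ohm
    i_crit: float = 0.1e-3    # DW depinning threshold, A (from the text)
    state: int = 0            # index into table

    def apply_write_pulse(self, v_cd: float) -> None:
        """Update the state for one write pulse of voltage v_cd across c-d."""
        i_write = v_cd / self.r_write
        if abs(i_write) < self.i_crit:
            return  # below the critical current: the DW does not move
        step = -1 if i_write > 0 else 1   # Set lowers, Reset raises the resistance
        # Clamp at the table ends, mimicking the DW pinning areas.
        self.state = min(max(self.state + step, 0), len(self.table) - 1)

    def read_resistance(self) -> float:
        """Resistance between read terminals a and b."""
        return self.table[self.state]


# Usage with a hypothetical 11-entry table spanning 1.0 to 1.9 kOhm.
lut = [1000.0 + 90.0 * k for k in range(11)]
mam = MAMLookupModel(table=lut, state=5)
mam.apply_write_pulse(-0.05)   # -0.5 mA Reset pulse: state 5 -> 6
mam.apply_write_pulse(0.005)   # 0.05 mA, below threshold: no change
```

Indexing by a discrete state rather than integrating a continuous DW position keeps each Set/Reset pulse deterministic, which matches the fixed-amplitude, fixed-duration pulses produced by the STDP circuit.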

Acknowledgments

Funding: Micromagnetic simulations and part of the SPICE simulations were supported as part of the Spins and Heat in Nanoscale Electronic Systems (SHINES), an Energy Frontier Research Center funded by the U.S. Department of Energy, Office of Science, Basic Energy Sciences under award no. DE-SC0012670. Author contributions: K.Y. and Y.L. conceived the hybrid MAM/CMOS idea and performed the simulations. R.K.L. and A.C.P. provided technical guidance, research inputs, and supervision. All authors discussed the results and prepared the manuscript. Competing interests: The authors declare that they have no competing interests. Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. Additional data related to this paper may be requested from the authors.

SUPPLEMENTARY MATERIALS

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/5/4/eaau8170/DC1

Fig. S1. Simulation results of the successive pattern recognitions.

REFERENCES AND NOTES

1. Davies M., Srinivasa N., Lin T.-H., Chinya G., Cao Y., Choday S. H., Dimou G., Joshi P., Imam N., Jain S., Liao Y., Lin C.-K., Lines A., Liu R., Mathaikutty D., McCoy S., Paul A., Tse J., Venkataramanan G., Weng Y.-H., Wild A., Yang Y., Wang H., Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).

2. Merolla P. A., Arthur J. V., Alvarez-Icaza R., Cassidy A. S., Sawada J., Akopyan F., Jackson B. L., Imam N., Guo C., Nakamura Y., Brezzo B., Vo I., Esser S. K., Appuswamy R., Taba B., Amir A., Flickner M. D., Risk W. P., Manohar R., Modha D. S., A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 345, 668–673 (2014).

3. George S., Kim S., Shah S., Hasler J., Collins M., Adil F., Wunderlich R., Nease S., Ramakrishnan S., A programmable and configurable mixed-mode FPAA SoC. IEEE Trans. VLSI Syst. 24, 2253–2261 (2016).

4. Indiveri G., Linares-Barranco B., Hamilton T. J., van Schaik A., Etienne-Cummings R., Delbruck T., Liu S.-C., Dudek P., Häfliger P., Renaud S., Schemmel J., Cauwenberghs G., Arthur J., Hynna K., Folowosele F., Saighi S., Serrano-Gotarredona T., Wijekoon J., Wang Y., Boahen K., Neuromorphic silicon neuron circuits. Front. Neurosci. 5, 73 (2011).

5. Parker A. C., Joshi J., Hsu C.-C., Singh N. A. D., A carbon nanotube implementation of temporal and spatial dendritic computations, in 2008 51st Midwest Symposium on Circuits and Systems (IEEE, 2008), pp. 818–821.

6. Indiveri G., Linares-Barranco B., Legenstein R., Deligeorgis G., Prodromakis T., Integration of nanoscale memristor synapses in neuromorphic computing architectures. Nanotechnology 24, 384010 (2013).

7. Al-Shedivat M., Naous R., Cauwenberghs G., Salama K. N., Memristors empower spiking neurons with stochasticity. IEEE J. Emerg. Sel. Top. Circuits Syst. 5, 242–253 (2015).

8. Bichler O., Suri M., Querlioz D., Vuillaume D., DeSalvo B., Gamrat C., Visual pattern extraction using energy-efficient “2-PCM synapse” neuromorphic architecture. IEEE Trans. Electron. Devices 59, 2206–2214 (2012).

9. Locatelli N., Mizrahi A., Accioly A., Querlioz D., Kim J. V., Cros V., Grollier J., Spin torque nanodevices for bio-inspired computing, in 2014 14th International Workshop on Cellular Nanoscale Networks and their Applications (CNNA) (IEEE, 2014).

10. Locatelli N., Cros V., Grollier J., Spin-torque building blocks. Nat. Mater. 13, 11–20 (2014).

11. Hosomi M., Yamagishi H., Yamamoto T., Bessho K., Higo Y., Yamane K., Yamada H., Shoji M., Hachino H., Fukumoto C., Nagao H., Kano H., A novel nonvolatile memory with spin torque transfer magnetization switching: Spin-RAM (IEDM Technical Digest, IEEE, 2005), pp. 459–462.

12. Engel B. N., Åkerman J., Butcher B., Dave R. W., DeHerrera M., Durlam M., Grynkewich G., Janesky J., Pietambaram S. V., Rizzo N. D., Slaughter J. M., Smith K., Sun J. J., Tehrani S., A 4-Mb toggle MRAM based on a novel bit and switching method. IEEE Trans. Magn. 41, 132–136 (2005).

13. Sharad M., Augustine C., Panagopoulos G., Roy K., Proposal for neuromorphic hardware using spin devices. arXiv:1206.3227 [cond-mat.dis-nn] (14 June 2012).

14. Yogendra K., Fan D., Jung B., Roy K., Magnetic pattern recognition using injection-locked spin-torque nano-oscillators. IEEE Trans. Electron. Devices 63, 1674–1680 (2016).

15. Srinivasan G., Sengupta A., Roy K., Magnetic tunnel junction based long-term short-term stochastic synapse for a spiking neural network with on-chip STDP learning. Sci. Rep. 6, 29545 (2016).

16. Torrejon J., Riou M., Araujo F. A., Tsunegi S., Khalsa G., Querlioz D., Bortolotti P., Cros V., Yakushiji K., Fukushima A., Kubota H., Yuasa S., Stiles M. D., Grollier J., Neuromorphic computing with nanoscale spintronic oscillators. Nature 547, 428–431 (2017).

17. Wang X., Chen Y., Xi H., Li H., Dimitrov D., Spintronic memristor through spin-torque-induced magnetization motion. IEEE Electron Device Lett. 30, 294–297 (2009).

18. Lequeux S., Sampaio J., Cros V., Yakushiji K., Fukushima A., Matsumoto R., Kubota H., Yuasa S., Grollier J., A magnetic synapse: Multilevel spin-torque memristor with perpendicular anisotropy. Sci. Rep. 6, 31510 (2016).

19. Zhao W., Cao Y., New generation of predictive technology model for sub-45 nm early design exploration. IEEE Trans. Electron. Devices 53, 2816–2823 (2006).

20. Barzegarjalali S., Yue K., Parker A. C., Noisy neuromorphic circuit modeling obsessive compulsive disorder, in 2016 29th IEEE International System-on-Chip Conference (SOCC) (IEEE, 2016), pp. 327–332.

21. Joshi J., Parker A. C., Hsu C.-C., A carbon nanotube cortical neuron with spike-timing-dependent plasticity, in 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE, 2009), pp. 1651–1654.

22. Markram H., Lübke J., Frotscher M., Sakmann B., Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 275, 213–215 (1997).

23. Yue K., Parker A. C., Noisy neuromorphic neurons with RPG on-chip noise source, in 2017 International Joint Conference on Neural Networks (IJCNN) (IEEE, 2017), pp. 1225–1229.

24. Mead C., Ismail M., Analog VLSI Implementation of Neural Systems (Springer Science & Business Media, 2012), vol. 80.

25. Indiveri G., A current-mode hysteretic winner-take-all network, with excitatory and inhibitory coupling. Analog Integr. Circuits Signal Process. 28, 279–291 (2001).

26. Diehl P. U., Cook M., Unsupervised learning of digit recognition using spike-timing-dependent plasticity. Front. Comput. Neurosci. 9, 99 (2015).

27. Kim S., Ishii M., Lewis S., Perri T., BrightSky M., Kim W., Jordan R., Burr G., Sosa N., Ray A., Han J. P., NVM neuromorphic core with 64k-cell (256-by-256) phase change memory synaptic array with on-chip neuron circuits for continuous in-situ learning, in 2015 IEEE International Electron Devices Meeting (IEDM) (IEEE, 2015), pp. 17–1.

28. Serrano-Gotarredona T., Masquelier T., Prodromakis T., Indiveri G., Linares-Barranco B., STDP and STDP variations with memristors for spiking neuromorphic learning systems. Front. Neurosci. 7, 2 (2013).

29. Pérez-Carrasco J. A., Zamarreno-Ramos C., Serrano-Gotarredona T., Linares-Barranco B., On neuromorphic spiking architectures for asynchronous STDP memristive systems, in Proceedings of 2010 IEEE International Symposium on Circuits and Systems (ISCAS) (IEEE, 2010), pp. 1659–1662.

30. Dc M., Grassi R., Chen J.-Y., Jamali M., Hickey D. R., Zhang D., Zhao Z., Li H., Quarterman P., Lv Y., Li M., Manchon A., Mkhoyan K. A., Low T., Wang J.-P., Room-temperature high spin–orbit torque due to quantum confinement in sputtered BixSe(1–x) films. Nat. Mater. 17, 800–807 (2018).

31. Khang N. H. D., Ueda Y., Hai P. N., A conductive topological insulator with large spin Hall effect for ultralow power spin–orbit torque switching. Nat. Mater. 17, 808–813 (2018).

32. Okada A., He S., Gu B., Kanai S., Soumyanarayanan A., Lim S. T., Tran M., Mori M., Maekawa S., Matsukura F., Ohno H., Panagopoulos C., Magnetization dynamics and its scattering mechanism in thin CoFeB films with interfacial anisotropy. Proc. Natl. Acad. Sci. U.S.A. 114, 3815–3820 (2017).

33. Parkin S. S. P., Hayashi M., Thomas L., Magnetic domain-wall racetrack memory. Science 320, 190–194 (2008).

34. Koo H.-J., So J.-H., Dickey M. D., Velev O. D., Towards all-soft matter circuits: Prototypes of quasi-liquid devices with memristor characteristics. Adv. Mater. 23, 3559–3564 (2011).

35. Cameron K. L., Boonsobhak V., Murray A. F., Renshaw D. S., Spike timing dependent plasticity (STDP) can ameliorate process variations in neuromorphic VLSI. IEEE Trans. Neural Netw. 16, 1626–1637 (2005).

36. Vansteenkiste A., Leliaert J., Dvornik M., Helsen M., Garcia-Sanchez F., Van Waeyenberge B., The design and verification of MuMax3. AIP Adv. 4, 107133 (2014).

37. Parkin S. S. P., Kaiser C., Panchula A., Rice P. M., Hughes B., Samant M., Yang S.-H., Giant tunnelling magnetoresistance at room temperature with MgO (100) tunnel barriers. Nat. Mater. 3, 862–867 (2004).

38. Yuasa S., Nagahama T., Fukushima A., Suzuki Y., Ando K., Giant room-temperature magnetoresistance in single-crystal Fe/MgO/Fe magnetic tunnel junctions. Nat. Mater. 3, 868–871 (2004).

39. Yakushiji K., Fukushima A., Kubota H., Konoto M., Yuasa S., Ultralow-voltage spin-transfer switching in perpendicularly magnetized magnetic tunnel junctions with synthetic antiferromagnetic reference layer. Appl. Phys. Express 6, 113006 (2013).
