Abstract

Optical coherence tomography (OCT) images of the retina are a powerful tool for diagnosing and monitoring eye disease. However, they are plagued by speckle noise, which reduces image quality and reliability of assessment. This paper introduces a novel speckle reduction method inspired by the recent successes of deep learning in medical imaging. We present two versions of the network to reflect the needs and preferences of different end-users. Specifically, we train a convolution neural network to denoise cross-sections from OCT volumes of healthy eyes using either (1) mean-squared error, or (2) a generative adversarial network (GAN) with Wasserstein distance and perceptual similarity. We then interrogate the success of both methods with extensive quantitative and qualitative metrics on cross-sections from both healthy and glaucomatous eyes. The results show that the former approach provides state-of-the-art improvement in quantitative metrics such as PSNR and SSIM, and aids layer segmentation. However, the latter approach, which puts more weight on visual perception, outperformed for qualitative comparisons based on accuracy, clarity, and personal preference. Overall, our results demonstrate the effectiveness and efficiency of a deep learning approach to denoising OCT images, while maintaining subtle details in the images.

1. Introduction

Optical coherence tomography (OCT) has emerged as a powerful imaging modality that allows for non-invasive acquisition of depth-resolved retinal images at microscopic resolution. Measurements performed on OCT images (e.g. layer thickness) have proven themselves to be clinically useful biomarkers for diagnosis and monitoring of diseases such as glaucoma [1,2] and age-related macular degeneration (AMD) [3–6]. However, OCT images are inherently affected by speckle noise, which degrades the image quality, masks image features, and makes it difficult to perform qualitative assessment and quantitative measurements reliably. Therefore, image enhancement is a critical step required in the analysis pipeline to ensure the reliability of disease assessment.

Speckle is the cause of the grainy appearance of OCT images, and is dependent on both the wavelength of the imaging beam and the structural characteristics of the tissue. Therefore, in addition to noise, the speckle also contains information about the textural characteristics and clinical features of the tissue. Separation of these noise and information components is an ongoing challenge, and multiple approaches have been suggested to address it, which can be roughly characterised into frame averaging methods [7], and digital suppression methods [8]. For OCT of the retina, frame averaging operates on the principle that small changes in the subject (such as eye movement) induce changes in the speckle pattern between repeated measurements, which can then be removed through registration of the images and averaging [7]. However, in circumstances where multiple repetitions of the volume are not available, there exists a need to remove speckle noise from single scans without loss of detail. Many digital suppression methods have been proposed (see [8] for a review on speckle suppression specifically in OCT, and [9] for a more recent review of noise suppression in ultrasound images), including spatial domain filtering approaches, where filtering is applied directly to the noisy image [10,11], and transform domain filtering, where images undergo a transformation (such as a wavelet transform or sparse representation) prior to denoising [12–16].

Spatial domain approaches such as variational models can be negatively affected by artefacts like the staircase effect [17]. While the incorporation of total generalised variation regularisation proposed by [11] eliminates this effect, it also serves to remove small structural features in the image. Other approaches, including non-local means filtering [18] require long processing times, particularly for large images, which may be intractable for diagnostic applications. Transform domain filtering approaches have shown much success at denoising, but since they rely on fixed mathematical models they have limited adaptability, have difficulties in distinguishing subtle details from speckle noise, and are prone to artefacts.

In this work, we present a novel deep-learning based algorithm for speckle noise reduction in OCT images. Deep learning approaches are new to the medical image denoising scene, but have already shown promise in enhancing ultrasound [19] and CT [20–22]. Results thus far have shown that deep learning can produce perceptually realistic, enhanced images, that are more convincing, and often faster, than those produced by traditional techniques. The success of such networks arises from their ability to exploit spatial correlations at multiple resolutions using a hierarchical network structure. However, these networks generally require large amounts of high quality data to train their numerous parameters. The additional requirement that both noisy and denoised images are jointly available means that previous studies have relied on introducing artificial perturbations into images to simulate the effects of noise. This is an inherent limitation, since the generated images may not accurately represent the statistical properties of noise in real images, therefore, the trained networks may not perform successfully when applied to real images. To tackle this issue, we encourage our network to remove speckle noise without losing small structural details by training the network to transform noisy OCT images into their enhanced counterparts obtained through frame averaging. Previous studies have suggested that using only mean squared error (MSE) between the input and ground truth images is not a sufficient objective function to produce perceptually convincing enhancements, and have thus implemented generative adversarial network (GAN) architectures and additional objective functions to improve on image quality [21]. However, while perceptual similarity is preferable, it does not convey whether the enhanced image is any more useful to the clinician. Therefore, we implement both a MSE-trained CNN, and a GAN architecture, and introduce several metrics to evaluate both image similarity and enhancement usefulness. The remainder of the paper is structured as follows: In Section 2 we discuss the proposed method for denoising OCT frame data. Section 3 presents a discussion of the datasets, and the evaluation process for gaining quantitative and qualitative results. The results of our method, and a comparison to results using standard algorithms, are presented in Section 4. Our work is summarised in Section 5. Here, conclusions are also drawn and suggestions for future work are given.

2. Method

Let IR ∈ ℝM×N denote a cross section (hereafter called a B-scan) from a raw OCT volume, and IFA indicate the frame averaged version of the B-scan, created by registering and averaging R consecutively acquired OCT scans (see Section 3 for further details regarding data acquisition and pre-processing). This enhancement process serves to remove speckle noise in the images, and accentuate low contrast structures that are otherwise obscured by noise. However, the denoised images are only available during training. Therefore, our goal is to find a function G that maps IR to IFA

| (1) |

To achieve this, we train a generator network as a feed-forward convolutional neural network (CNN) GθG parametrised by θG. GθG maps IR into a certain distribution IE. We train the parameters θG by minimising an enhancement-specific loss function L, with the aim of making IE as close as possible to IFA.

2.1. Network architecture

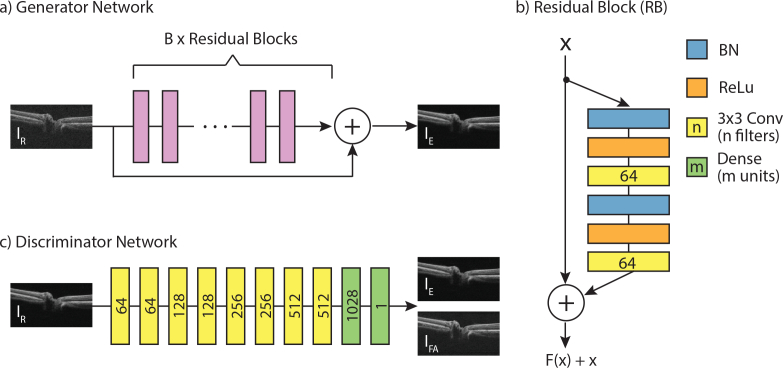

The overall view of the proposed network GθG is shown in Fig. 1(a). We employ a residual network architecture [23], which is modular in style with multiple connecting blocks of identical structure (shown in Fig. 1(b)). The residual blocks employ pre-activation with batch normalisation (BN) and rectified linear units (ReLU), which improves training and generalisation of the network [23]. Pre-activation precedes two convolutional layers with small 3 × 3 kernels and 64 feature maps. The blocks include a skip link, which combines the signals pre- and post-processing through addition. This helps information to flow through the network, and improves gradient flow during back-propagation. Another skip connection combines the signal prior to the residual blocks with that after processing through addition. This is followed by another pre-activation and convolutional layer, and a final convolutional layer with feature depth of one. The output of this final layer is IE.

Fig. 1.

Illustration of the proposed networks. The input (IR) to the generator network (a) is a raw B-scan from the OCT scanner, which undergoes processing by B residual blocks (b) to produce an enhanced image (IE). The numbers of filters for the convolutional layers, and the number of units for the dense layers, are indicated by numbers on the blocks. The discriminator network (c, described in 2.3) aims to estimate the Wasserstein metric between real (IFA) and generated (IE) data distributions.

We train two versions of the generator network GθG. The first version is trained via a mean-squared error loss, and is referred to as CNN-MSE. The MSE loss favours high peak signal to noise ratio (PSNR), which is a widely-used metric for quantitatively evaluating the quality of OCT images. However, convolutional neural networks trained solely with MSE loss often display overly smooth textures, and lack high-frequency features. Considering the importance of clear layer boundaries in OCT B-scan analysis, this approach alone may not be optimal. Therefore, we trained a second version, referred to as CNN-WGAN, with the aim of creating images which are perceptually indistinguishable from IFA by formulating the problem in terms of a Wasserstein generative adversarial network (WGAN) [24], and utilising a perceptual loss in addition to the MSE loss. In the next section, we will describe the implementation of these methods.

2.2. Perceptual loss

The perceptual loss is based on high-level features extracted from a pre-trained network [25]. This ensures that the network is trained to replicate image similarities more robustly compared to using per-pixel losses. The perceptual loss is defined as the Euclidean distance between the feature representations of the enhanced image (GθG (IR)) and the frame-averaged reference image (IFA) given by a pre-trained VGG19 network [26].

| (2) |

where, ϕi,j indicates the feature map obtained by the j-th convolution, after ReLU activation, prior to the i-th pooling layer, and Wi,j and Hi,j describe the dimensions of the respective feature maps within the VGG network.

2.3. Adversarial loss

Along with the generator network, a generative adversarial network (GAN) involves a discriminator network, DθD, parametrised by θD (shown in Fig. 1). The generator network is trained to produce realistic images, while the discriminator network is trained to identify which images are real versus those that are generated. Here, we implement a WGAN, an improved version of the original GAN, which uses the Earth Mover’s distance [27] to compare two data distributions (that of IFA and IE). We optimise both networks in an alternating manner (fixing one and updating the other) to solve the following min-max problem:

| (3) |

where, the first two terms represent the estimation of the Wasserstein distance, and the final term performs a gradient penalty to enforce the Lipschitz constraint, with penalty coefficient λ. is uniformly sampled along pairs of IE and IFA samples. This results in improved stability during training. Additionally, we impose gradient penalty [28], which has been shown to improve convergence of the WGAN compared to gradient clipping. With this approach, our generator can learn to create solutions that are highly similar to real images and thus difficult to classify by D.

Thus, the overall loss of the CNN-WGAN architecture is given by:

| (4) |

where λ1 and λ2 are weighting parameters to control the trade-off between the three components of the loss.

3. Experiments

3.1. Data acquisition and pre-processing

Six OCT volumes were acquired from both eyes of 38 healthy patients on a Cirrus HD-OCT Scanner (Zeiss, Dublin, CA) at a single visit. The scans were centred on the optic nerve head (ONH) and were 200 × 200 ×1024 voxel per cube, acquired from a region 6mm × 6mm × 2mm. These scans were then registered and averaged to create the “ground truth” denoised image. The scan with the highest signal strength (as provided by the scanner software) was chosen as the reference image, and the remaining five volumes were registered to it in two steps. First, a 2-D Elastix registration [29] of the en-face images addressed rotation and translation differences between the consecutive scans (x–y axis registration). Next, individual A-scans from the reference and moving scans were cross-correlated to find the translation that best aligned the scans (Z-axis registration). The resulting volumes were averaged to remove noise. This process produced 76 “denoised” OCT volumes, however 7 volumes were not used for further training or analysis due to inaccurate registration as recognized upon visual inspection, resulting in a cohort of 69 volumes from healthy eyes.

A second set of scans were also acquired from a single eye of 6 glaucoma patients using the same protocol i.e. six ONH-centred scans were acquired on the same visit. The denoised ground truth was generated as described above. Note, however, that this set was not used in the training procedure and was only used to evaluate the method.

3.1.1. Training details

The 69 OCT volumes were split into a training, validation and testing subsets, containing 51, 7 and 11 volumes, respectively. In this way, B-scans from volumes in the hold-out test set were never seen during training. B-scans within 10 voxels of the edge of the volume were not considered during training, since they often contained artefacts from the registration process. Therefore, each denoised volume had 6 corresponding raw volumes, with 180 B-scans, resulting in 55080 B-scan pairs. Patches of size 176 × 176 were extracted from these scans for training, and augmentation was performed by randomising the position of the patch (ensuring that each patch showed a portion of retina), and randomly flipping each patch horizontally. Pairs of patches underwent normalisation prior to training, as such:

| (5) |

where I and Ĩ are the original and the normalised B-scans respectively, and μT and σT are the mean and standard deviation of the training set. This transformation was also applied to validation and hold-out set images. In our experiments, both networks were optimized using Adam algorithm [30], the hyper parameters of which were empirically set as α = e − 5, β1 = 0.5, β2 = 0.9. Mini-batch size was 4. The penalty coefficient was set as λ = 10 as suggested in [28], and the loss weighting parameters were empirically set as λ1 = 1e − 3 and λ2 = 2e − 6. Training was performed in Tensorflow (https://www.tensorflow.org/) on a NextScale nx360 m4 server with 64 GB of RAM, 800GB SSD, and an NVIDIA Tesla K40 Graphics Processing Unit (GPU) with NVIDIA cuda (v9.0) and cu-dnn (v7.0.5) libraries (http://www.nvidia.com). Training was monitored, and stopped when no further improvement was observed. Training of CNN-MSE and CNN-WGAN took approximately 5 hours and 22 hours respectively. Testing was performed on 1980 B-scans (11 volumes × 180 slices). While the network was trained on patches of images, the fully-convolutional architecture of the network means that images of arbitrary size may be used during testing, therefore entire B-scans where enhanced. Prior to further analytics, the enhanced images were scaled back to their original distribution by:

| (6) |

3.1.2. Quantitative metrics

We compare the performance of CNN-MSE and CNN-WGAN to two well-known image enhancement techniques: block-matching 3D (BM3D) algorithm [31], implemented using the pyBM3D wrapper in Python, and double-density dual-tree complex wavelet transform (DD-CDWT) [32] (which has shown more recent, promising results on OCT data [33]), implemented using MATLAB (http://eeweb.poly.edu/iselesni/WaveletSoftware/dt2D.html). To fairly compare the speed of the different methods, all were implemented on a MacBook Pro with 2.9 GHz Intel Core i5, 8GB 1867 MHz DDR3. Performance was gauged using standard error metrics such as PSNR, structural similarity index (SSIM), multi-scale structural similarity index (MS-SSIM) metrics, and MSE. SSIM was calculated for pixels considered to be part of the retina. These metrics were computed on a hold-out set of data from the healthy cohort and the entire glaucoma cohort. Statistical significance was determined using paired two-sided Wilcoxon signed-rank tests [34] at p < 0.05.

Additionally, the impact of the proposed enhancement techniques on the visibility of structures of interest was gauged by having multiple independent experts manually annotate five surfaces: the internal limiting membrane (ILM), the posterior border of the retinal nerve fibre layer (RNFL), the ganglion cell and inner plexiform layer (GCIPL), the inner nuclear layer (INL) and Bruch’s membrane (BM) shown in Fig. 4(a). Manual annotations were obtained on 22 slices from the original volumes (2 from each volume of the test set), plus the same images enhanced by CNN-MSE and CNN-WGAN. Half of the slices were chosen from a central section of the volume (containing the cross-section of the ONH), while the other half were chosen at random from a peripheral section (lacking the ONH). The optic nerve head itself was omitted from the annotations. The slices were also repeated in the set and shuffled before being presented to the annotators.

Fig. 4.

a) Portion of a B-scan with surface annotations for five layers. Intra-observer (b) and inter-observer (c) annotation location difference (AAD), averaged over the columns of all annotated B-scans for ILM and GCIPL surfaces (mean ± standard error). Statistical significance at p < 0.001 indicated by *. Results for the remaining layers are shown in Figure 6.

With the assumption that retinal surfaces would be easier to identify with a higher degree of repeatability in clearer images, unsigned variability in the location of the surface location between observers (inter-observer), and for the same observer between multiple repeated scans (intra-observer), titled Average Annotation Difference (AAD) was calculated as follows:

| (7) |

Where l represents the surface location on the ath column (or A-scan) of the nth B-scan. The subscript on l indicates the first versus second observer (for inter-observer), or first versus second repeat of the annotation (for intra-observer). A three-way ANOVA was used to compare the effect of the annotator (or annotator pair, for inter-observer AAD), the enhancement technique, and the surface annotated on intra- and inter-observer variability. Statistical significance was then determined using a Tukey-Kramer post-hoc analysis.

3.1.3. Qualitative metrics

We performed a mean opinion score test to quantify the ability of different approaches to reconstruct perceptually convincing images. Given six different representations of a B-scan - unprocessed (“Raw”), enhanced using CNN-WGAN,CNN-MSE, BM3D and DD-CDWT, and frame averaged, three experts were asked to rank the images from best to worst on three metrics:

-

1.

Ability to discriminate structures (e.g. retinal layers, blood vessels) (1 = most clear, 6 = least clear)

-

2.

Accuracy with which the image represents what you might see at a cellular/microscopic level (1 = most accurate, 6 = least accurate)

-

3.

Personal preference for use in clinical practice (1 = most preferred, 6 = least preferred)

The metrics were specifically chosen to understand the differences in perceived clarity (metric 1), accuracy (metric 2), and personal preference (metric 3) between various versions of the same scan. Each observer was simultaneously shown all 6 versions of each of 55 B-scans (5 from each of the 11 test set volumes), in a randomized order, and asked to rank each of the three metrics in turn. Inter-observer agreement was calculated using Cohen’s Kappa Score [35], and statistical significance between observers in their preference for CNN-MSE versus CNN-WGAN was calculated using a Binomial Test (with Bonferroni correction for multiple comparisons).

4. Results

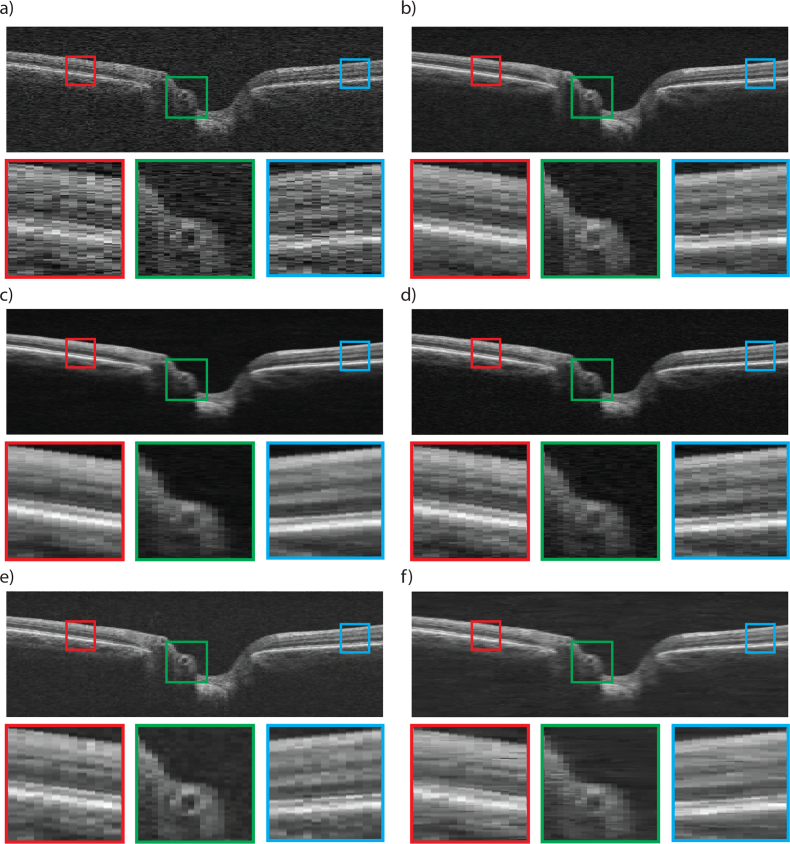

To show the denoising effect of the proposed networks, a representative B-scan from the optic nerve head of a healthy person within the test set is shown in Fig. 2, as it appears in the raw image (a), ground truth achieved through averaging (b), post-processing of the raw scan with CNN-MSE (c), CNN-WGAN (d), BM3D (e), and DD-CDWT (f). The B-scans consisted of 200 A-Scans, each with 1024 pixels. While the scans were kept at this aspect ratio during processing, for display purposes they were resized for better visualisation.

Fig. 2.

OCT Image from a healthy volume captured by Cirrus HD-OCT Scanner (a), and corresponding 6-frame averaged image (b). The result of post-processing of (a) with CNN-MSE (c), CNN-WGAN (d), BM3D (e), and DD-CDWT (f). Three zoomed in, colour coded sections are shown below each B-scan (best viewed in colour).

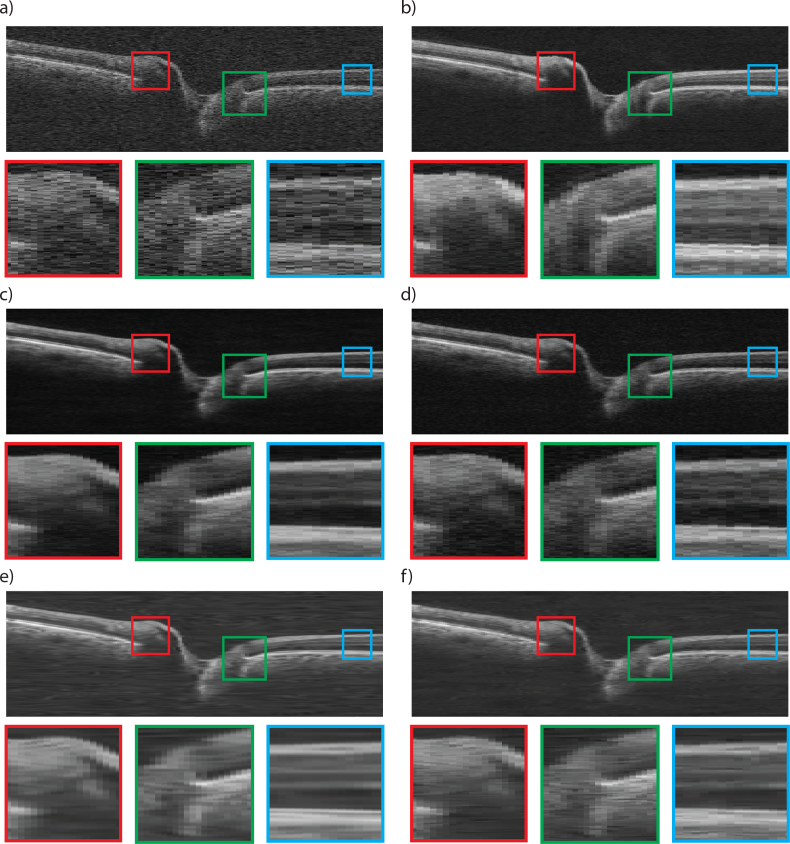

As can be observed, the BM3D method (e) does not over-smooth the image, but still shows some residual noise, and has poor contrast. In comparison, DD-CDWT (f) introduces over smoothing, resulting in loss of layer boundary distinction (particularly evident in the red and blue insets). The images produced by CNN-MSE (c) also show signs of smoothing in that it attains homogeneity within each layer, but does not lose layer separation (as is the case for DD-CDWT), and layers can still be clearly distinguished. CNN-WGAN (d) produces images most similar perceptually to the frame-averaged scans (b), in that it maintains layer texture and boundary clarity without introducing artefacts. Fig. 3 shows a representative scan from a glaucomatous volume. Even though no glaucomatous scans were used during network training, both CNN enhancement methods produce enhanced images with a high degree of accuracy for structural detail. In particular, the border tissue/Bruch’s membrane configuration (green box), which is an important indicator of disc margin in glaucomatous volumes [36] is best preserved in the CNN-WGAN enhancement. Additionally, the two CNN methods do not blur the edges between layers (blue box), as does DD-CDWT. BM3D performs well in the horizontally flat region of the retina indicated by the blue box, but is outperformed by the CNN methods in the other two highlighted regions.

Fig. 3.

OCT Image from a glaucomatous volume captured by Cirrus HD-OCT Scanner (a), and corresponding 6-frame averaged image (b). The result of post-processing of (a) with CNN-MSE (c), CNN-WGAN (d), BM3D (e), and DD-CDWT (f). Three zoomed in, colour coded sections are shown below each B-scan (best viewed in colour).

The average times required to process a B-scan by each method are shown in Table 1. The times reported are averaged over 200 B-scans, with the proposed methods (CNN-MSE and CNN-WGAN) processing all 200 simultaneously.

Table 1.

Time required to process a single B-scan, averaged over 200 B-scans.

| Method | Time (seconds) |

|---|---|

| BM3D | 7.84 |

| DD-CDWT | 0.72 |

| CNN-WGAN | 0.68 |

| CNN-MSE | 0.68 |

The results show that the proposed solutions out-perform existing methods in terms of speed of use. Additionally, the networks are highly parallelizable, and therefore lend themselves to further speed increases on more powerful systems, particularly those with access to a GPU.

4.1. Quantitative results

For quantitative results, we calculated the PSNR, SSIM, MS-SSIM and MSE between six different image types (Raw, BM3D, DD-CDWT, CNN-WGAN and CNN-MSE) and their corresponding frame-averaged scan. The summary data are shown in Table 2. The best performing technique in each metric is shown in bold.

Table 2.

Mean ± standard deviation of the peak signal to noise ratio(PSNR), structural similarity ratio (SSIM), multi-scale structural similarity ratio (MS-SSIM) and mean squared error (MSE) for 1980 healthy B-scans and 1080 B-scans from patients with glaucoma, using the BM3D, DD-CDWT, and the proposed CNN-WGAN and CNN-MSE networks. The best results are shown in bold. All pairwise comparisons (excluding SSIM on glaucoma images processed by BM3D and DD-CDWT) were statistically significant (p < 0.0001).

| Healthy | PSNR | SSIM | MS-SSIM | MSE |

|---|---|---|---|---|

| Raw | 26.82±0.67 | 0.62±0.04 | 0.79±0.02 | 136.67±22.43 |

| BM3D | 31.17±1.27 | 0.74±0.04 | 0.88±0.02 | 51.53±17.29 |

| DD-CDWT | 31.21±1.19 | 0.75±0.04 | 0.89±0.02 | 51.22±15.92 |

| CNN-WGAN | 31.83±1.21 | 0.77±0.03 | 0.92±0.01 | 44.52±14.34 |

| CNN-MSE | 32.28±1.27 | 0.78±0.03 | 0.92±0.01 | 40.28±13.44 |

| Glaucoma | PSNR | SSIM | MS-SSIM | MSE |

|---|---|---|---|---|

| Raw | 25.46±0.67 | 0.58±0.04 | 0.76±0.02 | 188.32±52.53 |

| BM3D | 29.12±1.12 | 0.74±0.03 | 0.88±0.03 | 83.34±44.76 |

| DD-CDWT | 29.44±1.18 | 0.74±0.03 | 0.89±0.03 | 77.84±46.15 |

| CNN-WGAN | 29.92±1.27 | 0.76±0.03 | 0.91±0.02 | 72.85±46.07 |

| CNN-MSE | 30.21±1.12 | 0.78±0.03 | 0.92±0.03 | 70.04±44.91 |

We observe that the CNN-MSE network delivers the best results on all metrics, with all pairwise comparisons showing statistical significance (p < 0.0001) (excluding the comparison between SSIM on glaucoma images processed by BM3D and DD-CDWT, for which p > 0.05). The CNN-MSE network is also able to greatly improve the quality of glaucomatous volumes, despite having been trained using only healthy volumes. Since visual inspection and quantitative comparison of the enhancement techniques showed that the CNN-based methods were superior to both BM3D and DD-CDWT, these were excluded from further analyses.

While these results show that CNN-MSE is objectively better in terms of image metrics, it has previously been posited that such a network might overly blur images, which in this application might result in reduced layer boundary visibility. Therefore, we define a quantitative assessment based on the effect upon the end user. We asked three experts to annotate five surfaces (ILM, RNFL, GCIPL, INL and BM) in raw images, and those having undergone enhancement with CNN-MSE and CNN-WGAN. Fig. 4(a) shows a section of B-scan, processed by CNN-WGAN, with layer annotations indicated. Each observer annotated each image twice, and the average difference in location of annotations (AAD, defined in Equation 7), both between observers (inter-observer), and for the one observer between repeated scans (intra-observer) was calculated. The intra- and inter-observer AAD for two of the surfaces are shown in Figs. 4(b) and (c).

The results of intra-observer analysis show that compared to raw images, both networks significantly improved the repeatability of layer annotations for all three annotators (p < 0.001, this and all further comparisons in this section were determined via a three-way AVONA with Tukey-Kramer post-hoc analysis). Additionally, this also occurred for all surfaces when controlling for grader (all p < 0.001). Differences between the AAD metric were more pronounced for surfaces that were more difficult to label, as shown in Fig. 4(b) by the difference in AAD between ILM and GCIPL. Controlling for grader, CNN-MSE resulted in significantly smaller AAD values for both GCIPL and BM (both p < 0.001), though there was no significant difference for the other surfaces. Controlling for surface type, AAD for graders 1 and 2 with CNN-MSE was significantly less than that for CNN-WGAN (both p < 0.001), however there was no significant difference for grader 3 (p = 0.850). Overall, grader 3 was more consistent, with significantly smaller AAD for raw images than that for both graders 2 and 3 (both p < 0.001), this was also true for CNN-WGAN images (both p < 0.001).

Results were similar when comparing annotations between graders (Fig. 4(c)). Again, CNN-MSE and CNN-WGAN both resulted in lower AAD than raw (both p < 0.001) for all graders, and this was true for all surfaces when controlling for grader (all p < 0.001). Controlling for surface type, AAD for CNN-MSE was significantly less for two grader pairs (p = 0.003 for 1 vs 2, and p = 0.023 for 1 vs 3), though not significant for the third (p = 0.860). Controlling for grader pairs, CNN-MSE produced significantly improved AAD for RNFL and BM compared to CNN-WGAN (p = 0.030 and p < 0.001, respectively).

Overall, the results show that both enhancement methods drastically improve the reliability and repeatability of manual surface annotations, and that for more difficult surfaces, CNN-MSE sometimes outperforms CNN-WGAN.

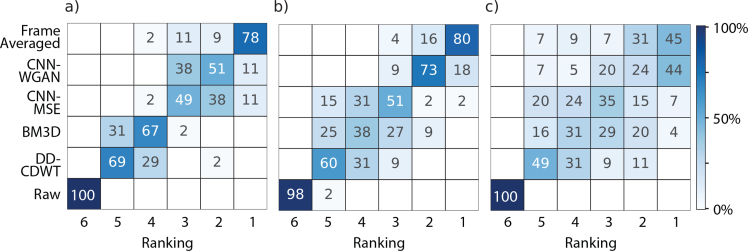

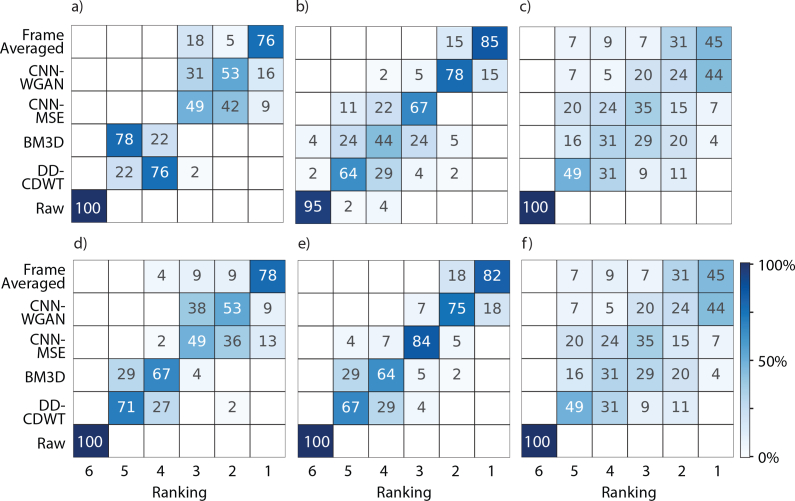

4.2. Qualitative results

As well as clarifying layer boundary locations, another potential utility for our OCT image enhancement technique is quality improvement for visual inspection of complex anatomic pathologic structures. Therefore, here, we demonstrate the capabilities of the current techniques to improve the perceptual quality of provided images. To sufficiently investigate the qualitative differences between the images we asked three observers to rank the six different versions in three metrics, representative of clarity, accuracy and personal preference (listed in full in section 3.1.3). Observers were shown six versions of the same image: the frame-averaged image, the result of enhancement by CNN-MSE, CNN-WGAN, BM3D and DD-CDWT, and the raw version, which was the input to the four enhancement techniques. The results for clarity are shown in Fig. 5. For the respective results for accuracy and personal preference, see Figure 7 in the Appendix.

Fig. 5.

Qualitative results for perceived clarity for 55 B-scans ranked by observer 1 (a), observer 2 (b) and observer 3 (c) for images acquired by 6-frame averaging (“Ground Truth”), CNN-WGAN, CNN-MSE, BM3D, DD-CDWT and the respective un-processed images (“Raw”). Colour indicates the percentage of images, for the respective image type, in each rank position (where 1=highest rank, 6=lowest rank). Results for accuracy and personal preference are shown in Figure 7.

All three graders ranked the ground truth version the highest in terms of clarity for the majority of images. This is not surprising, given that the frame averaged version represents the ideal scenario, and is what both CNN approaches were trained to reproduce. Impressively, the enhanced scans produced by the two CNN approaches were sometimes ranked higher, with grader 3 rating 44% of the CNN-WGAN scans most clear, almost equal to the percentage of frame-averaged images rated highest (45%). To determine whether different graders shared enhancement preferences for particular images, the inter-observer agreement was measured using Cohen’s Kappa Score (Table 3). A kappa score below 0.2 indicates no agreement, while between 0.21 − 0.39 is considered a minimal level of agreement, and 0.40 − 0.59 indicates weak agreement [35].

Table 3.

Inter-observer agreement as measured by Cohen’s kappa score between the three experts (labelled 1–3) for each of the qualitative metrics.

| 1 v 2 | 1 v 3 | 2 v 3 | |

|---|---|---|---|

| Clarity | 0.429 | 0.188 | 0.250 |

| Accuracy | 0.432 | 0.158 | 0.174 |

| Preference | 0.479 | 0.191 | 0.182 |

These kappa scores reflect weak agreement between graders 1 and 2, and minimal to no agreement between 3 and the others. This is likely due to the inclusion of two enhancement methods (CNN-MSE and CNN-WGAN) that provide similar outcomes, causing the graders to split their preference between the two. A similar effect occurs between BM3D and DD-CDWT, though they are collectively rated lower by all graders. The very low kappa scores for comparisons between grader 3 and the other graders reflects the larger variability grader 3 showed in ratings for all three questions, particularly for CNN-MSE, BM3D and DD-CDWT. While graders 1 and 2 were clear in their prederence for the CNN-based methods. To determine whether graders ranked CNN-MSE or CNN-WGAN higher, the number of times that each was ranked above the other was tallied, and the difference from chance calculated with a binomial test (at a Bonferroni-corrected significance level of p = 0.0056). This showed that all three graders ranked CNN-WGAN images significantly higher than CNN-MSE in all three metrics, however this was only significant for graders 2 and 3 (p < 0.001 for all comparisons).

5. Discussion and future work

In this paper, we demonstrated a novel approach for denoising OCT images using deep learning. We proposed two networks, identical in structure but trained with different objective functions, to remove speckle noise from B-scans. The first network, CNN-MSE, was trained solely to minimise the mean-squared error between the image it produced and one obtained with 6-frame averaging. The second network, CNN-WGAN, was trained using a combination of mean-squared error, perceptual loss, and an adversarial loss. The perceptual loss utilized features representing patterns at multiple pixel scales extracted from a pre-trained network, and therefore is ideal for encouraging edge and texture preservation. The adversarial loss served to push our proposed solution to the natural image manifold using a discriminator network that is trained to differentiate between the enhanced images and image obtained through frame averaging. In contrast to statistical denoising methods, by training the network using averaged images we make no assumptions as to the noise distribution, or presence of artefacts in the images.

Our results show that CNN-MSE quantitatively improves images better than compared methods. Specifically, CNN-MSE showed the highest metrics for PSNR, SSIM, MS-SSIM, and MSE, which were significantly better than the other methods; this was closely followed by CNN-WGAN. Additionally, both CNN-MSE and CNN-WGAN resulted in improved layer annotation by three independent observers, with CNN-MSE showing slightly better results for particularly difficult surfaces. An automated segmentation method using Iowa Reference Algorithms (Retinal Image Analysis Lab, Iowa Institute for Biomedical Imaging, Iowa City, IA [37,38]) was also tested (not shown), but did not provide satisfactory segmentations for any of the enhanced B-scans or for the frame-averaged B-scans. This is likely due to the inclusion of a variety of denoising steps in the method’s pipeline, which merely result in blurring of the layer bourdaries. This will likely be the same in other machine-learning based approaches [39–42] since these techniques also incorporate denoising as well as multi-scale features such as Guassian and Gabor filters.

However, these quantitative improvements were not reflected in the qualitative analysis. This analysis showed that all three graders thought that CNN-WGAN images represented better the details at a microscopic level, and showed structures more clearly than CNN-MSE. This contradiction between the most successful enhancement for quantitative versus qualitative analysis suggests that there exists a dichotomy between the ideal image for the purpose of further analysis, and that for visual inspection. However, given the efficiency of the proposed methods, it is realistic that both methods of enhancement could be used by clinicians for different tasks, based on personal preference.

Since our ground truth images were obtained by averaging only 6 repeated images, they still contained an element of noise (as is evident by observing the background of the images). As such, it is possible that much of the texture that was encouraged by the CNN-WGAN network in its generated images was due to noise. Furthermore, if images without noise had been available, the CNN-WGAN network would likely produce images without noise, but still with accurate textural detail (which the MSE network tends to blur slightly). Without access to ground truth images that have been completely denoised, it is not possible to assess whether this is the case. However, this would be of interest to investigate in the future, possibly by averaging more images. Interestingly, the hint of noise that is shown in the CNN-WGAN likely contributed to these images being ranked most highly in the qualitative assessment, since overly smoothed images (such as those produced by the CNN-MSE network) may suggest that important details have been blurred, thereby reducing confidence in the image.

The frame averaged scans were ranked higher more often by the observers for all metrics, however such an approach requires multiple volumes to be acquired at each visit, followed by registration and frame-averaging. While some devices provide real-time averaging to reduce speckle, this still requires the eye to be kept open and fixated on a target for a longer time, which can be difficult, particularly for elderly patients. Additionally, such systems cannot be used on previously recorded data where multiple scans have not been acquired. Our proposed solution has the advantage of providing a very close approximation of a frame-averaged image, but operates using only a single input image. Therefore, the enhancement can be applied to any existing scans. While training of the network is computationally demanding and can take a long time, particularly for CNN-WGAN, which requires several updates to the discriminator for each generator update, the trained model operates quickly and effectively using only the resources provided by a laptop computer. Additionally, we’ve shown that both CNN methods can generalise to OCT volumes with pathologies that were not part of the training set (as shown by their success at enhancing glaucomatous volumes). It would be of interest in the future to train the network with images containing pathologies, including more dramatic structural pathologies (such as those associated with AMD), since it is likely that this would further improve the performance for unhealthy B-scan enhancement.

6. Conclusion

The proposed deep learning-based enhancement method provides significant improvements in image quality metrics for OCT B-scans, while preserving structural features of the retinal layers. Furthermore, we have shown that by training the same deep neural network in two different ways, perceptually distinct results can be achieved, that may be used interchangeably dependent on the task and personal preference. Despite being trained using only healthy OCT volumes, the two CNN’s presented herein were able to generalise to glaucomatous scans. The fact that such drastic enhancement can be achieved in a short time using only a single scan as input is a testament to effectiveness of deep learning as a viable solution in the clinical setting.

Appendix

Fig. 6.

Intra-observer (a) and inter-observer (b) annotation location difference (AAD), averaged over the columns of all annotated B-scans for BM, INL and RNFL surfaces (mean ± standard error). Statistical significance at p < 0.001 indicated by *.

Fig. 7.

Qualitative results for perceived accuracy (a–c) and personal preference (d–f) for 55 B-scans ranked by observer 1 (a, d), observer 2 (b, e) and observer 3 (c, f) for images acquired by 6-frame averaging (“Ground Truth”), CNN-WGAN, CNN-MSE, BM3D, DD-CDWT and the respective un-processed images (“Raw”). Colour indicates the percentage of images, for the respective image type, in each rank position (where 1=highest rank, 6=lowest rank).

Disclosures

KJH, BJA, MHL, and RG: IBM Research Australia (E).

References

- 1.Bowd C., Weinreb R. N., Williams J. M., Zangwill L. M., “The retinal nerve fiber layer thickness in ocular hypertensive, normal, and glaucomatous eyes with optical coherence tomography,” Arch. Ophthalmol. 118, 22–26 (2000). 10.1001/archopht.118.1.22 [DOI] [PubMed] [Google Scholar]

- 2.Medeiros F. A., Zangwill L. M., Bowd C., Vessani R. M., Susanna R., Weinreb R. N., “Evaluation of retinal nerve fiber layer, optic nerve head, and macular thickness measurements for glaucoma detection using optical coherence tomography,” Am. J. Ophthalmol. 139, 44–55 (2005). 10.1016/j.ajo.2004.08.069 [DOI] [PubMed] [Google Scholar]

- 3.Srinivasan V. J., Wojtkowski M., Witkin A. J., Duker J. S., Ko T. H., Carvalho M., Schuman J. S., Kowalczyk A., Fujimoto J. G., “High-definition and 3-dimensional imaging of macular pathologies with high-speed ultrahigh-resolution optical coherence tomography,” Ophthalmology 113, 2054–2065 (2006). 10.1016/j.ophtha.2006.05.046 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Farsiu S., Chiu S. J., O’Connell R. V., Folgar F. A., Yuan E., Izatt J. A., Toth C. A., “Quantitative classification of eyes with and without intermediate age-related macular degeneration using optical coherence tomography,” Ophthalmology 121, 162–172 (2014). 10.1016/j.ophtha.2013.07.013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Chiu S. J., Izatt J. A., O’Connell R. V., Winter K. P., Toth C. A., Farsiu S., “Validated automatic segmentation of AMD pathology including drusen and geographic atrophy in SD-OCT images,” Investig. Ophthalmol. Vis. Sci. 53, 53–61 (2012). 10.1167/iovs.11-7640 [DOI] [PubMed] [Google Scholar]

- 6.Schuman S. G., Koreishi A. F., Farsiu S., Jung S. ho, Izatt J. A., Toth C. A., “Photoreceptor layer thinning over drusen in eyes with age-related macular degeneration imaged in vivo with spectral-domain optical coherence tomography,” Ophthalmology 116, 488–496 (2009). 10.1016/j.ophtha.2008.10.006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Jørgensen T. M., Thomadsen J., Christensen U., Soliman W., Sander B., “Enhancing the signal-to-noise ratio in ophthalmic optical coherence tomography by image registration–method and clinical examples,” J. biomedical optics 12, 041208 (2012). 10.1117/1.2772879 [DOI] [PubMed] [Google Scholar]

- 8.Ozcan A., Bilenca A., Desjardins A. E., Bouma B. E., Tearney G. J., “Speckle reduction in optical coherence tomography images using digital filtering,” J. Opt. Soc. Am. A, Opt. image science, vision 24, 1901–1910 (2007). 10.1364/JOSAA.24.001901 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Contreras Ortiz S. H., Chiu T., Fox M. D., “Ultrasound image enhancement: A review,” Biomed. Signal Process. Control. 7, 419–428 (2012). 10.1016/j.bspc.2012.02.002 [DOI] [Google Scholar]

- 10.Yu H., Gao J., Li A., “Probability-based non-local means filter for speckle noise suppression in optical coherence tomography images,” Opt. Lett. 41, 994 (2016). 10.1364/OL.41.000994 [DOI] [PubMed] [Google Scholar]

- 11.Duan J., Lu W., Tench C., Gottlob I., Proudlock F., Samani N. N., Bai L., “Denoising optical coherence tomography using second order total generalized variation decomposition,” Biomed. Signal Process. Control. 24, 120–127 (2016). 10.1016/j.bspc.2015.09.012 [DOI] [Google Scholar]

- 12.Fang L., Li S., Nie Q., Izatt J. A., Toth C. A., Farsiu S., “Sparsity based denoising of spectral domain optical coherence tomography images,” Biomed. Opt. Express 3, 927–942 (2012). 10.1364/BOE.3.000927 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Fang L., Li S., Cunefare D., Farsiu S., “Segmentation based sparse reconstruction of optical coherence tomography images,” IEEE Transactions on Med. Imaging 36, 407–421 (2017). 10.1109/TMI.2016.2611503 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Jian Z., Yu Z., Yu L., Rao B., Chen Z., Tromberg B. J., “Speckle attenuation in optical coherence tomography by curvelet shrinkage,” Opt. Lett. 34, 1516 (2009). 10.1364/OL.34.001516 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Xu J., Ou H., Lam E. Y., Chui P. C., Wong K. K. Y., “Speckle reduction of retinal optical coherence tomography based on contourlet shrinkage,” Opt. Lett. 38, 2900 (2013). 10.1364/OL.38.002900 [DOI] [PubMed] [Google Scholar]

- 16.Mayer M. A., Borsdorf A., Wagner M., Hornegger J., Mardin C. Y., Tornow R. P., “Wavelet denoising of multiframe optical coherence tomography data,” Biomed. Opt. Express 3, 572 (2012). 10.1364/BOE.3.000572 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Duan J., Tench C., Gottlob I., Proudlock F., Bai L., “Optical coherence tomography image segmentation,” in International Conference on Image Processing (ICIP), (IEEE, 2015), pp. 4278–4282. [Google Scholar]

- 18.Aum J., Kim J.-h., Jeong J., “Effective speckle noise suppression in optical coherence tomography images using nonlocal means denoising filter with double Gaussian anisotropic kernels,” Appl. Opt. 54, D43 (2015). 10.1364/AO.54.000D43 [DOI] [Google Scholar]

- 19.Perdios D., Besson A., Arditi M., Thiran J.-P., “A deep learning approach to ultrasound image recovery,” in 2017 IEEE International Ultrasonics Symposium (IUS), (IEEE, 2017), pp. 1–4. [Google Scholar]

- 20.Gondara L., “Medical image denoising using convolutional denoising autoencoders,” in 2016 IEEE 16th International Conference on Data Mining Workshops (ICDMW), (IEEE, 2016), pp. 241–246. 10.1109/ICDMW.2016.0041 [DOI] [Google Scholar]

- 21.Yang Q., Yan P., Zhang Y., Yu H., Shi Y., Mou X., Kalra M. K., Wang G., “Low dose CT image denoising using a generative adversarial network with wasserstein distance and perceptual loss,” IEEE Transactions on Med. Imaging 37, 1348–1357 (2018). 10.1109/TMI.2018.2827462 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Chen H., Zhang Y., Kalra M. K., Lin F., Chen Y., Liao P., Zhou J., Wang G., “Low-dose CT with a residual encoder-decoder convolutional neural network (RED-CNN),” IEEE Transactions on Med. Imaging 36, 2524–2535 (2017). 10.1109/TMI.2017.2715284 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.He K., Zhang X., Ren S., Sun J., “Identity mappings in deep residual networks,” in European Conference on Computer Vision (Springer, Cham, 2016), pp. 630–645. [Google Scholar]

- 24.Arjovsky M., Chintala S., Bottou L., “Wasserstein generative adversarial networks,” in Proceedings of The 34th International Conference on Machine Learning, (2017), pp. 1–32. [Google Scholar]

- 25.Ledig C., Theis L., Huszar F., Caballero J., Cunningham A., Acosta A., Aitken A., Tejani A., Totz J., Wang Z., Shi W., “Photo-realistic single image super-resolution using a generative adversarial network,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition,(2017), pp. 4681–4690. [Google Scholar]

- 26.Simonyan K., Zisserman A., “Very deep convolutional networks for large-scale image recognition,” International Conference on Learning Representations, (2014). [Google Scholar]

- 27.Rubner Y., Tomasi C., Guibas L. J., “Earth mover’s distance as a metric for image retrieval,” Int. J. Comput. Vis. 40, 99–121 (2000). 10.1023/A:1026543900054 [DOI] [Google Scholar]

- 28.Gulrajani I., Ahmed F., Arjovsky M., Dumoulin V., Courville A., “Improved training of wasserstein GANs,” in Advances In Neural Information Processing Systems, (2017), pp. 5767–5777. [Google Scholar]

- 29.Klein S., Staring M., Murphy K., Viergever M. A., Pluim J. P., “Elastix: A toolbox for intensity-based medical image registration,” IEEE Transactions on Med. Imaging 29, 196–205 (2010). 10.1109/TMI.2009.2035616 [DOI] [PubMed] [Google Scholar]

- 30.Kingma D. P., Ba J., “Adam: a method for stochastic optimization,” \International Conference on Learning Representations (2014). [Google Scholar]

- 31.Dabov K., Foi A., Katkovnik V., Egiazarian K., “Image denoising by sparse 3-D transform-domain collaborative filtering,” IEEE Transactions on Image Process. 16, 2080–2095 (2007). 10.1109/TIP.2007.901238 [DOI] [PubMed] [Google Scholar]

- 32.Selesnick I. W., “The double-density dual-tree DWT,” IEEE Transactions on Signal Processing, 52 (2004), pp. 1304–1314. 10.1109/TSP.2004.826174 [DOI] [Google Scholar]

- 33.Chitchian S., Mayer M. A., Boretsky A. R., van Kuijk F. J., Motamedi M., “Retinal optical coherence tomography image enhancement via shrinkage denoising using double-density dual-tree complex wavelet transform,” J. Biomed. Opt. 17, 116009 (2012). 10.1117/1.JBO.17.11.116009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Gibbons J. D., Chakraborti S., Nonparametric Atatistical Inference (Chapman & Hall/Taylor & Francis, 2003). [Google Scholar]

- 35.McHugh M. L., “Interrater reliability: the kappa statistic,” Biochem. Medica 22, 276–282 (2012). 10.11613/BM.2012.031 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Reis A. S., Sharpe G. P., Yang H., Nicolela M. T., Burgoyne C. F., Chauhan B. C., “Optic disc margin anatomy in patients with glaucoma and normal controls with spectral domain optical coherence tomography,” Ophthalmology 119, 738–747 (2012). 10.1016/j.ophtha.2011.09.054 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Li K., Wu X., Chen D. Z., Sonka M., “Optimal surface segmentation in volumetric images - A graph-theoretic approach,” IEEE Transactions on Pattern Analysis Mach. Intell. 28, 119–134 (2006). 10.1109/TPAMI.2006.19 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Garvin M. K., Abràmoff M. D., Abràmoff M. D., Wu X., Russell S. R., Burns T. L., Sonka M., “Automated 3-D Intraretinal Layer Segmentation of Macular Spectral-Domain Optical Coherence Tomography Images,” IEEE Transactions on Med. Imaging 28, 1436–1447 (2009). 10.1109/TMI.2009.2016958 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Chiu S. J., Li X. T., Nicholas P., Toth C. A., Izatt J. A., Farsiu S., “Automatic segmentation of seven retinal layers in SDOCT images congruent with expert manual segmentation,” Opt. Express 18, 19413 (2010). 10.1364/OE.18.019413 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Antony B., Abràmoff M. D., Tang L., Ramdas W. D., Vingerling J. R., Jansonius N. M., Lee K., Kwon Y. H., Sonka M., Garvin M. K., “Automated 3-D method for the correction of axial artifacts in spectral-domain optical coherence tomography images,” Biomed. optics express 2, 2403–2416 (2011). 10.1364/BOE.2.002403 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Lang A., Carass A., Sotirchos E., Calabresi P., Prince J. L., “Segmentation of retinal OCT images using a random forest classifier,” in International Society for Optical Engineering the International Society for Optical Engineering, vol. 8669 Ourselin S., Haynor D. R., eds. (International Society for Optics and Photonics, 2013), pp. 1–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Lee K., Niemeijer M., Garvin M. K., Kwon Y. H., Sonka M., Abramoff M. D., “Segmentation of the optic disc in 3-D OCT scans of the optic nerve head,” IEEE Transactions on Med. Imaging 29, 159–168 (2010). 10.1109/TMI.2009.2031324 [DOI] [PMC free article] [PubMed] [Google Scholar]