Abstract

Objective

To review how web-based prognostic tools for cancer patients and clinicians describe aleatory (risk estimates) and epistemic (imprecision in risk estimates) uncertainties.

Methods

We reviewed prognostic tools available online and extracted all uncertainty descriptions. We adapted an existing classification framework and used it to classify each extracted statement by how it presented uncertainty.

Results

We reviewed 222 different prognostic risk tools, which produced 772 individual estimates. When describing aleatory uncertainty, almost all (90%) prognostic tools included a quantitative description, such as “chances of survival after surgery are 10%”, although they varied in their use of percentages, natural frequencies, and graphics. Only 14% of tools described epistemic uncertainty. Of those that did, most used qualitative language such as “about” or “up to”, while 22 tools provided quantitative descriptions using confidence intervals or ranges.

Conclusions

Considerable heterogeneity exists in the way uncertainties are communicated in cancer prognostic tools. Few tools describe epistemic uncertainty. This variation likely reflects a lack of evidence and consensus on risk communication, particularly for epistemic uncertainty.

Practice Implications

As precision medicine seeks to improve prognostic estimates, the community may not be equipped with the tools to communicate the results accurately and effectively to clinicians and patients.

Keywords: Prognosis, Uncertainty, Survival, Communication

1. Introduction

In the era of precision medicine there is an increasing focus on the production and use of individualized prognostic estimates in clinical care. Individualized information about prognosis can help patients make better-informed decisions about treatment and cope more effectively with their disease by reducing uncertainty about the future [1,2]. The production and clinical use of individualized prognostic information have been facilitated by clinical prediction models, which are often implemented as online prognostic tools. These tools have proliferated in oncology [1,3,4] and hold great promise to improve clinical care. However, their utility depends on the quality and completeness of the data and on the methodological adequacy of the statistical models on which they are based. Shortcomings in these factors limit both the ability of prognostic models to account for individual variation in disease progression (and, in some cases, in response to treatment) and the effective translation of individualized prognostic information into actionable clinical decisions [5].

Individualized prognostic information can be complex and challenging to communicate [1,6]. In particular, the inherent uncertainties of prognostic information need to be communicated and understood if patients and clinicians are to engage in informed and shared decision making [1,3]. These uncertainties are predominantly of two types. The first is aleatory uncertainty, which stems from the “fundamental indeterminacy or randomness of future outcomes” [7] and is most frequently expressed by point estimates of probability or ‘risk’. Stratifying already uncertain data into smaller subgroups, however, often increases the uncertainty of prognostic estimates, because each estimate is then based on a smaller sample [3]. The second main type is epistemic uncertainty, which stems from inadequacies in existing knowledge of the probability of future outcomes. Epistemic uncertainty arises from what has been termed ‘ambiguity’ (the ‘lack of reliability, credibility, or adequacy’ of information about future outcomes [7]) and is manifest as imprecision in probability estimates, which is commonly communicated using ranges or confidence intervals around a point estimate.

Recommendations on best practices for risk communication, both in and outside health care, have centered on how to communicate absolute risks (e.g. as percentages, natural frequencies, or both), how graphical representations (e.g. icon/dot arrays, risk ladders using a logarithmic scale, analogies) can be used to help people visualize and contextualize risk [8–13], and how to specify the time interval over which the risk applies [10,11]. These approaches deal implicitly with aleatory uncertainty, although empirical evidence suggests that people have difficulty understanding the notion of randomness or chance that probability estimates aim to convey [14]. Little research has been conducted to inform guidelines on whether or how best to communicate epistemic uncertainty. Although recent expert guidelines have recommended that patient decision aids describe “uncertainty around the probabilities,” there are no evidence-based recommendations about how to do so [13].
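As a concrete illustration of the absolute-risk formats discussed above, the sketch below renders one point estimate of risk as a percentage, as a natural frequency, and as both. The function name `describe_risk`, the default reference group of 100, and the outcome wording are our own assumptions for illustration; this is not code from any of the reviewed tools.

```python
def describe_risk(probability: float, denominator: int = 100,
                  outcome: str = "will survive") -> dict[str, str]:
    """Render one point estimate of risk (aleatory uncertainty) in three formats.

    Hypothetical helper for illustration; not taken from any reviewed tool.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie between 0 and 1")
    # Percentage format, rounded to a whole number (Python rounds halves to even).
    percent = round(probability * 100)
    # Natural frequency format: people out of a reference group of `denominator`.
    count = round(probability * denominator)
    frequency = f"{count} in {denominator} people {outcome}"
    return {
        "percentage": f"{percent}% {outcome}",
        "natural_frequency": frequency,
        "both": f"{frequency} ({percent}%)",
    }


if __name__ == "__main__":
    print(describe_risk(0.5)["both"])  # prints "50 in 100 people will survive (50%)"
    print(describe_risk(0.1, denominator=1000)["natural_frequency"])
```

Holding the denominator fixed (here 100) across all estimates in a tool keeps the natural frequencies directly comparable with one another.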

The objective of this study was to review a comprehensive cross-section of currently available prognostic tools in oncology, to investigate whether and how aleatory and epistemic uncertainties are communicated. Oncology is the leading area in the development of prognostic tools in the era of precision medicine. By reviewing this area and highlighting the current degree of consistency in approaches to communicating these uncertainties, we hoped to identify existing gaps in knowledge and to inform future research and recommendations on improving the communication of uncertainty in prognostic information.

2. Methods

We sought to identify a cross-section of available interactive cancer prognostic tools. We focused on prognostic models rather than risk models (which estimate the risk of developing cancer). We drew on two previous searches that used similar methodology. As our primary search strategy, we employed a method we previously used to identify interactive cancer prognostic tools, which combined searches of Google and Medline with input from cancer experts [1]. In the Google and Medline searches, terms describing cancer (e.g. cancer, lymphoma), prognosis (e.g. prognosis, survival) and tool (e.g. tool, calculator) were combined. In Google, the first 10 pages of results were reviewed for relevant results. This search identified 22 websites that contained a total of 107 web-based tools covering 89 different cancers [1]. To maximize comprehensiveness, we augmented this inventory with the results of a more focused search conducted as part of a different project examining the state of the field of prognostic modeling for prostate and head and neck cancers. That search identified 60 web-based prognostic tools for these cancers (38 of which would also have been identified by our primary search), some of which were contained within web-based tools that dealt with multiple cancer types.

The websites identified by either search strategy were searched to identify all available interactive cancer prognostic tools, and tools designed for any cancer on these websites were considered for inclusion in this review. The aim was not to perform an exhaustive search, but rather to obtain a robust cross-section of easily accessible and widely used websites and tools, and to understand variation in how aleatory and epistemic uncertainties are communicated. Including the second search strategy (targeting prostate and head and neck cancers) might have resulted in an over-representation of tools for these cancers. However, these cancers are common (e.g. prostate cancer is the most common type of cancer in men in the USA and Canada), and many of the prognostic tools for these cancers were contained within broader web-based tools that dealt with multiple cancer types. We therefore believe our search identified the majority of interactive prognostic websites and tools for all cancers.

We included any prognostic tool that was written in English, cancer-related, and interactive (utilizing patient data to produce prognostic estimates). If a single tool developer produced multiple tools for different cancers, we included them all. We did not seek to understand if the same underlying algorithm was being used in tools on different websites. Tools that predicted the likelihood of developing cancer, provided only promotional health material, or focused only on the prevention of cancer were excluded. Prognostic tools were also excluded if they were unavailable on the Internet, provided a statistical model but no interactive prognostic tool, or required financial payment for access.

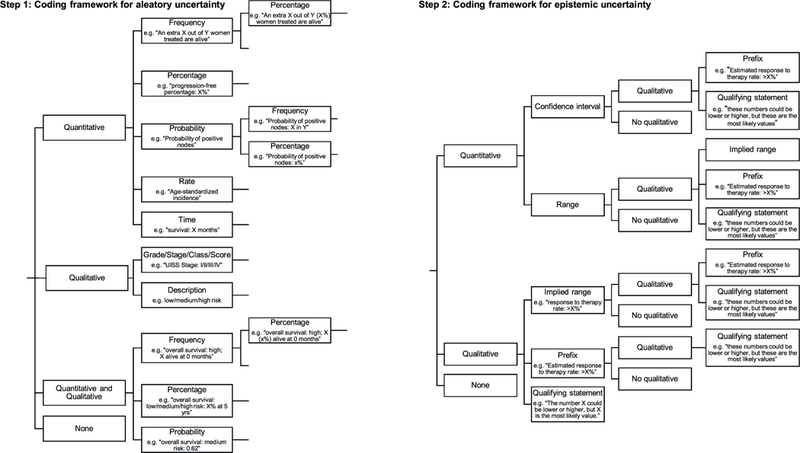

All risk estimates were extracted directly from the identified tools. An existing extraction framework, previously used to classify aleatory and epistemic uncertainty in patient decision support interventions [15], was adapted to describe the communication of risk estimates in interactive cancer prognostic tools. The existing framework first separated components of information into aleatory and epistemic uncertainty and considered each in turn. The communication of aleatory uncertainty was first classified as quantitative (e.g. “50 in 100 will survive”), qualitative (e.g. “with treatment people may survive …”), or both quantitative and qualitative (e.g. “50 in 100 may survive”). Within each of these categories the information was subcategorized by presentation format. Quantitative communication of aleatory uncertainty was further classified according to whether frequencies, percentages, or combinations of frequencies and percentages were used. Epistemic uncertainty was then classified according to whether it was described quantitatively (e.g. a range: “5–10 in 100”), qualitatively (e.g. an implied range: “up to 5 in 100”), both quantitatively and qualitatively (e.g. “up to 5–10 in 100”), or not at all. For the purposes of this study we added subcategories to the aleatory uncertainty classification: quantitative probability (e.g. “probability of positive nodes”), rate (e.g. death rate or “age-standardized incidence”) and estimated survival time; and qualitative grade, stage, class or score (e.g. “UISS stage IV”). We also added the subcategory “use of qualifying statements” to the qualitative epistemic uncertainty classification (e.g. “the number X could be lower or higher, but X is the most likely value”) (Fig. 1). Tools often provided more than one prognostic risk estimate, sometimes communicated in different ways; in these cases each estimate was extracted separately.
Sometimes the descriptions changed with the inputs: when the estimated risk was extremely high or low, some tools switched from presenting no epistemic uncertainty to presenting an implied range (greater than or less than, e.g. <1%; >99%). In these cases we took an inclusive approach and classified the tool as using a qualitative implied range to convey epistemic uncertainty. We present results primarily at the tool level, to avoid our description of ‘standard practice’ being skewed either by a large number of tools from a small number of developers or by tools containing many estimates whose uncertainty was communicated using a single method. We then supplement these results with estimate-level counts to convey the variation in presentation in more detail.
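To make the epistemic branch of the adapted framework concrete, the sketch below encodes its categories as an enumeration and assigns a category to a single risk statement using simple keyword cues. This is a hypothetical illustration only: the reviewers in this study applied the framework by hand, and the cue patterns (`PREFIX`, `IMPLIED`, `STATEMENT`, `RANGE`) are our assumptions. Keyword cues like these cannot resolve ambiguous wording (e.g. whether “about X%” signals imprecision or mere rounding), which is one reason the framework required reviewer judgement.

```python
import re
from enum import Enum


class Epistemic(Enum):
    """Epistemic-uncertainty categories, paraphrasing the adapted framework."""
    NONE = "not communicated"
    QUAL_PREFIX = "qualitative, prefix"                       # e.g. "about 50%"
    QUAL_IMPLIED_RANGE = "qualitative, implied range"         # e.g. "up to 5 in 100"
    QUAL_STATEMENT = "qualitative, qualifying statement"      # e.g. "could be lower or higher"
    QUANT_RANGE = "quantitative, range/confidence interval"   # e.g. "5-10 in 100"
    BOTH = "quantitative and qualitative"                     # e.g. "up to 5-10 in 100"


# Hypothetical keyword cues (assumptions, not the study's coding rules).
PREFIX = re.compile(r"\b(about|around|approximately|estimated)\b", re.I)
IMPLIED = re.compile(r"\bup to\b|[<>]|\b(less|greater) than\b", re.I)
STATEMENT = re.compile(r"lower or higher|higher or lower", re.I)
RANGE = re.compile(r"\d+(\.\d+)?\s*%?\s*(-|–|to)\s*\d+|\bCI\b|confidence interval", re.I)


def classify_epistemic(text: str) -> Epistemic:
    """Assign one epistemic category to a single risk statement."""
    quantitative = bool(RANGE.search(text))
    qualitative = any(p.search(text) for p in (PREFIX, IMPLIED, STATEMENT))
    if quantitative and qualitative:
        return Epistemic.BOTH
    if quantitative:
        return Epistemic.QUANT_RANGE
    # Check the more specific qualitative cues first.
    if STATEMENT.search(text):
        return Epistemic.QUAL_STATEMENT
    if IMPLIED.search(text):
        return Epistemic.QUAL_IMPLIED_RANGE
    if PREFIX.search(text):
        return Epistemic.QUAL_PREFIX
    return Epistemic.NONE


if __name__ == "__main__":
    for s in ["up to 5–10 in 100", "5–10 in 100", "up to 5 in 100", "50 in 100 will survive"]:
        print(f"{s!r}: {classify_epistemic(s).value}")
```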

Fig. 1.

Framework for defining aleatory and epistemic uncertainty estimates in prognostic tools.

Five reviewers were involved in the extraction and classification process (MB, LS, NB, MH, HK). One reviewer (MB) extracted all risk estimates from all of the prognostic tools identified by the search strategy. The same reviewer (MB) then reviewed these tools for eligibility (applying our inclusion and exclusion criteria) with two other reviewers (MH & NB). Eligible tools were then classified using our adapted framework by two reviewers (HK & MB), and this classification was verified in a second stage (LS & MB). We included this double, independent extraction process to verify the classification of all estimates in each tool, because we recognized that applying the classification framework involved a degree of judgement. Any conflicts in classification were resolved by discussion among the five reviewers (MB, LS, NB, MH, HK) until consensus was reached.

3. Results

3.1. Prognostic tool characteristics

In total, 222 different prognostic risk tools from 34 different websites were included in the review; these produced 772 individual prognostic estimates (each tool included between 1 and 26 individual risk estimates) (Table 1). The prognostic tools reviewed covered 36 different types of cancer, most commonly prostate (48 tools, 22%), breast (24 tools, 11%) and kidney (23 tools, 10%), along with five other cancer categories. The most prominent websites were the Fox Chase Cancer Center (FCCC) and QxMD (a for-profit tool development company that translates clinical research into online tools), which contributed 24% and 18% of the prognostic tools, respectively. Most prognostic tools were developed in the United States (207 tools, 93%), with most of the remainder from Europe (13 tools, 6%).

Table 1.

Prognostic tools included (N = 222).

| Characteristics | N (%) |
|---|---|
| Website | |
| FCCC | 54 (24%) |
| QxMD | 39 (18%) |
| Lerner | 31 (14%) |
| MSK | 24 (11%) |
| KCI | 16 (7%) |
| MD Anderson | 12 (5%) |
| MAASTRO | 11 (5%) |
| CancerMath | 9 (4%) |
| Other | 26 (12%) |
| Country Developed | |
| USA | 207 (93%) |
| Europe | 13 (6%) |
| Unknown | 2 (1%) |
| Year developed | |
| Pre-2012 (2007 to 2011) | 59 (27%) |
| 2012 onwards | 14 (6%) |
| No Year Provided | 149 (67%) |
| Type of Cancer | |
| Prostate | 48 (22%) |
| Breast | 24 (11%) |
| Kidney | 23 (10%) |
| Blood | 22 (10%) |
| Bladder | 11 (5%) |
| Lung | 11 (5%) |
| Colon | 8 (4%) |
| Other Cancers | 75 (34%) |

FCCC: Fox Chase Cancer Center.

MSK: Memorial Sloan Kettering.

KCI: Kinetic Concepts Inc.

MAASTRO: Maastricht Radiation Oncology.

The majority of prognostic tools reviewed (184 tools, 83%) included at least one direct reference to the statistical models used to estimate prognosis. Of these, 171 tools (77%) listed one or more references; 10 tools (5%) supplemented the references with information on the number of patients on which the prognostic estimate was based (six from MD Anderson Cancer Center, three from the Maastricht Radiation Oncology (MAASTRO) clinic, one from Memorial Sloan Kettering (MSK)); and three tools (1%) augmented the reference with information about a source database or the number of clinical trials used to generate the data on which the statistical model was based. Fourteen tools (6%) provided indirect references to the source database or study design(s) on which the estimate was based; of these, one tool described multiple clinical trials with consistent results (e.g. risk reduction of 0.7), and one reported that it was based on evidence from studies conducted at large research institutions with surgeons who perform a high volume of procedures. A total of 24 tools (11%) provided no references on the underlying statistical models or their derivation. Of the 772 estimates, 482 (62%) focused on mortality, 298 (39%) on progression or recurrence of a cancer (22, 3%, on combined “progression or death”), and a few (12, 2%) on other aspects such as the chance of side-effects.

3.2. Representations of uncertainty in prognostic estimates

3.2.1. Aleatory uncertainty

Nearly all prognostic tools (200 tools, 90%) included at least one quantitative statement to describe aleatory uncertainty, and usually represented this uncertainty as a point estimate of risk (Table 2). For example, “my chances of surviving my kidney cancer 1 year after surgery are: 10%.” Twenty-two prognostic tools (10%) described aleatory uncertainty using only qualitative estimates of risk. An example was “Risk group: low/low-intermediate/high-intermediate/high.” Those that did not describe aleatory uncertainty tended to focus on a survival time.

Table 2.

Descriptions of uncertainty from included prognostic tools (N = 222).

| Included at least one description reporting aleatory uncertainty, by type | N (%)ᵃ |
|---|---|
| Qualitative, Description (e.g. low/medium/high risk) | 36 (16%) |
| Qualitative, Score | 34 (15%) |
| Quantitative, Frequency | 35 (16%) |
| Quantitative, Percentage | 169 (76%) |
| Quantitative, Both frequency and percentage | 1 (<1%) |
| Quantitative, Other (e.g. probability, rate) | 10 (5%) |
| Quantitative, Time | 36 (16%) |
| Quantitative & Qualitative | 6 (3%) |
| Included at least one description with epistemic uncertainty, by type | N (%)ᵃ |
| No epistemic uncertainty | 190 (86%) |
| Qualitative | 9 (4%) |
| Qualitative, Implied Range | 25 (11%) |
| Qualitative, Prefix | 25 (11%) |
| Quantitative, Range/Confidence Interval | 15 (7%) |
| Included at least one description with graphics | N (%)ᵃ |
| No graphic | 173 (80%) |
| Graphic provided | 85 (38%) |
| Bar chart | 41 (18%) |
| Icon/dot array | 31 (14%) |
| Survival/mortality curve | 17 (8%) |
| Graph/chart | 10 (4%) |
| Pie chart | 8 (4%) |
| Choice of graphic offered | 9 (5%) |

ᵃ Many tools included multiple descriptions, so the percentages sum to more than 100%.
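Why tool-level percentages can exceed 100%, and how tool-level counts differ from the estimate-level counts described in the Methods, can be shown with a small sketch: a tool is counted once in a category if at least one of its estimates falls there, whereas estimate-level counts tally every estimate. The function names and the toy data below are hypothetical and are not the study's data.

```python
from collections import Counter


def tool_level_counts(estimates):
    """Count tools with at least one estimate in each category.

    `estimates` is an iterable of (tool_id, category) pairs, one per extracted
    risk estimate. Returns (Counter of tools per category, number of tools).
    """
    categories_by_tool: dict[str, set[str]] = {}
    for tool_id, category in estimates:
        categories_by_tool.setdefault(tool_id, set()).add(category)
    counts = Counter()
    for categories in categories_by_tool.values():
        counts.update(categories)  # each tool adds at most 1 to each category
    return counts, len(categories_by_tool)


def estimate_level_counts(estimates):
    """Count every extracted estimate in its category."""
    return Counter(category for _, category in estimates)


def as_percent(counts, total):
    """Convert counts to whole-number percentages of `total`."""
    return {category: round(100 * n / total) for category, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical toy data: tool A reports three percentages and a survival time.
    toy = [("A", "percentage"), ("A", "percentage"), ("A", "percentage"),
           ("A", "time"), ("B", "percentage"), ("C", "frequency")]
    tools, n_tools = tool_level_counts(toy)
    print(as_percent(tools, n_tools))  # tool-level shares sum to more than 100%
    print(as_percent(estimate_level_counts(toy), len(toy)))
```

Reporting primarily at the tool level keeps a single tool with many estimates (like tool A here) from dominating the summary.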

A small number of prognostic tools (7 tools, 3%) represented aleatory uncertainty by combining multiple qualitative and quantitative expressions of risk, for example, “Local control: low/medium/high risk: X% at 5 yrs” (6 tools, 3%), or both frequencies and percentages (1 tool, <1%). All seven of these tools also included other risk estimates communicated using quantitative and/or qualitative methods. No tool provided additional qualitative language aimed at reinforcing the idea of randomness or the meaning of chance.

3.2.2. Epistemic uncertainty

The vast majority of prognostic tools (190 tools, 86%), accounting for 615 risk estimates (80%), did not include any representation of epistemic uncertainty (Table 2). Of the 32 prognostic tools (14%) that did communicate epistemic uncertainty, the most common method was qualitative language. These tools conveyed imprecision by using qualifying or hedging terms such as ‘estimated’ or ‘about’ to qualify estimates of probability (e.g. “Estimated median survival: X%”; 25 tools, 78% of the 32) or by communicating an implied range (e.g. “up to X%”; 25 tools, 78%). Further, some tools represented probability estimates as ‘less than’ or ‘greater than’ a number (e.g. “less than X%”) when risks were close to 100% or 0%. Quantitative representations of epistemic uncertainty were presented by 22 prognostic tools, using confidence intervals (13 tools, 6% of all 222 tools) or ranges (9 tools, 4%). Only 9 of the 222 prognostic tools (4%) included additional qualitative language aimed at reinforcing the idea of limitations in knowledge about risk (for example, “due to the fact that a model can never be completely the same as ‘the real world’, X could be lower or higher”).
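The switch to ‘less than’ or ‘greater than’ near 0% or 100% can be expressed as a simple display rule. The sketch below assumes cut-offs of 1% and 99% (matching the <1% and >99% examples in the Methods); the thresholds actually used by the reviewed tools are not reported here, so the function name `format_percentage` and its defaults are hypothetical.

```python
def format_percentage(probability: float, floor: float = 0.01,
                      ceiling: float = 0.99) -> str:
    """Format a risk estimate, switching to an implied range near 0% or 100%.

    Hypothetical display rule; the cut-offs are assumptions.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie between 0 and 1")
    if probability < floor:
        # Implied range: the display no longer commits to a point estimate.
        return f"<{floor * 100:.0f}%"
    if probability > ceiling:
        return f">{ceiling * 100:.0f}%"
    # Otherwise show the point estimate with no epistemic qualifier.
    return f"{probability * 100:.0f}%"


if __name__ == "__main__":
    for p in (0.004, 0.42, 0.995):
        print(p, "->", format_percentage(p))
```

Under such a rule the same tool shows no epistemic uncertainty for most inputs but an implied range for extreme ones, which is why these tools were classified inclusively.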

3.2.3. Combined aleatory and epistemic uncertainty

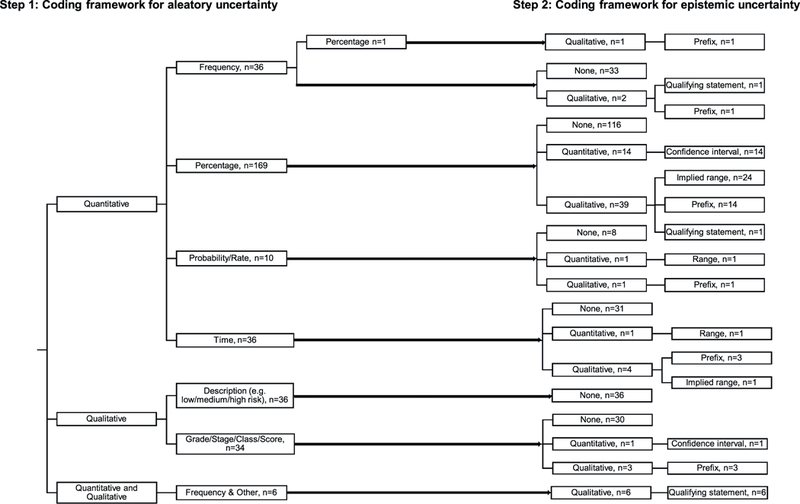

A total of 16 discrete combinations of aleatory and epistemic uncertainty were identified among the 772 risk estimates extracted from the prognostic tools (Fig. 2). The 10 most common combinations, which accounted for 96% of the risk estimates that combined aleatory and epistemic uncertainty, are shown in Table 3.

Fig. 2.

Classification of prognostic tools according to their use of combinations of aleatory and epistemic uncertainty using our framework.

Of the 222 tools, 200 provided only quantitative descriptions (some tools included more than one type of quantitative description), 22 provided only qualitative descriptions, and 7 included combined qualitative and quantitative descriptions.

Table 3.

The 10 most common classifications of risk estimates, with hypothetical and real examples (N = 166 (22%) of 772 risk descriptions).

| Aleatory uncertainty | Epistemic uncertainty | Example description (hypothetical) | Example (real) | Source | N (%) of descriptions |
|---|---|---|---|---|---|
| Quantitative, Percentage | Qualitative, Implied Range | 5 year survival: >99% | The chance that I will be free from kidney cancer after 12 years is: (greater than) X% | FCCC - Preoperative recurrence calculator | 35 (5%) |
| Quantitative, Time | Qualitative, Prefix | Estimated median survival: 5 years | Predicted median survival: surgery + chemo: X months | KCI - Pancreatic Cancer Adjuvant Therapy | 35 (5%) |
| Quantitative, Percentage | Quantitative, Confidence Interval | 5 year survival: 5–10% | 12 months: predicted survival probability: X% (Y-Z) | Duke - Second Line Chemotherapy | 30 (4%) |
| Quantitative, Time | Quantitative, Range | Overall survival time: X-Y years | PSA: every X-Y months (for 5y, then every year) | FCCC - NCCN guidelines prostate cancer recommended follow-up | 27 (3.5%) |
| Qualitative and quantitative, Percentage | Qualitative, Prefix | Estimated 5-year survival: X%, low | Estimated probabilities for overall survival for this particular rectal cancer patient are: low/medium/high risk: X% at 5 yrs | MAASTRO - Models local recurrences, distant metastases & overall survival | 10 (1%) |
| Quantitative, Frequency | Qualitative | Estimated survival: X in XXX are alive at the end of 5 years. These numbers are based on a single, small study so may change as further research is reported. | If there would be a group of 100 patients with the same characteristics as this individual patient, X patients would have no local recurrence and Y patients would be alive 2 years after the radiotherapy treatment. Due to the fact that a model can never be completely the same as the “real world”, these numbers could be lower or higher, but these are the most likely values | MAASTRO - Model local recurrences & overall survival (larynx) | 9 (1%) |
| Qualitative and quantitative, Percentage | Quantitative, Confidence Interval | Estimated 5-year survival: X%, 95% CI X%, X% | Probability of dyspnea >2 within 6 months after the start of R(CH)T: X%. 95% confidence interval Y% - Z% | MAASTRO - Model lung dysphagia | 4 (<1%) |
| Quantitative, Percentage | Quantitative, Range | 5-year survival: between X% and Y% | Extracapsular extension (ECE): range: X% to Y% | CapCalculator - estimated extent of disease at surgery | 4 (<1%) |
| Quantitative, Percentage | Qualitative, Prefix | 5-year survival: around X% | About 50 percent of patients who do not have organ-confined cancer have long-term freedom from recurrence following surgery | MSK - Prostate cancer - preradical prostatectomy | 3 (<1%) |
| Quantitative, Probability | Quantitative, Confidence Interval | Probability of 5 year survival: X.X, 95% CI X.X, X.X | Predicted 12 months survival probability: X (Y-Z confidence interval) | Metastatic Castrate Resistant Prostate Cancer Patients prediction tool | 3 (<1%) |

The most common combinations of aleatory and epistemic uncertainty were quantitative percentages along with qualitative implied ranges (35 risk estimates, 5%) or quantitative confidence intervals (30 risk estimates, 4%); quantitative risk estimates expressed as time with qualitative prefixes (35 risk estimates, 5%) or quantitative ranges (27 risk estimates, 4%); or quantitative frequencies with a qualitative description (9 risk estimates, 1%) (e.g., ‘the number X could be lower or higher, but X is the most likely value’).

The 6 remaining discrete combinations of aleatory and epistemic uncertainty presentation (those not presented in Table 3) accounted for 4% of all combined uncertainty representations. We found no systematic difference between the methods used to describe mortality estimates and those used to describe progression or recurrence estimates.

3.2.4. Graphical communication of uncertainty

Only 93 tools (42%) presented graphics to support the communication of either type of uncertainty in prognostic information. For tools that did use graphical representation to support at least one statement, this was most commonly prespecified by the website (85 tools, 38%), although a small number of tools allowed users to specify the type of graphic they would prefer to use to represent the risk (9 tools, 5%).

4. Discussion and conclusion

4.1. Discussion

Our study examines how currently available cancer prognostic tools describe both aleatory and epistemic uncertainty when communicating prognostic information. Our primary finding is that most tools communicate aleatory uncertainty in quantitative terms, using point estimates of risk, but do not communicate epistemic uncertainty. Furthermore, there was considerable heterogeneity in how aleatory uncertainty was described, ranging from percentages and frequencies of mortality (e.g., ‘50%’ or ‘50 in 100 people survive 5 years’) or progression at a given time point, to median survival times (e.g., ‘median survival is 5 years’). Most of the few tools that did describe epistemic uncertainty used qualitative representations; a small minority communicated epistemic uncertainty in quantitative terms that conveyed imprecision (e.g., 95% confidence intervals and risk ranges).

A growing body of empirical research has begun to identify several best practices in risk communication [10,13,16]. Studies have focused on the use of natural frequencies with the aid of graphical interfaces. In this regard, the finding that most prognostic tools used percentages instead of natural frequencies, without graphical displays, is a departure from recommendations, although the value of graphical information is equivocal. Whilst it might be argued that many of these tools are designed primarily for clinicians, who then bear responsibility for communicating prognostic information to patients, there is considerable evidence that clinicians, as well as patients, struggle to understand risk information [17]. This problem highlights the need to design prognostic tools in ways that ensure proper understanding of prognostic information, using recommended best practices in risk communication. Unfortunately, best practices for communicating uncertainty in prognostic information remain to be defined.

As precision medicine seeks to improve prognostic estimates, particularly the prognosis for patients receiving particular treatments based on their genomic profiles, our review suggests that the community may not be equipped with the tools needed to communicate the results of these studies accurately and effectively to clinicians and patients. A previous commentary points out the contradiction in the use of the term ‘precision’ in precision medicine, noting that tailoring treatments to increasingly small subgroups of patients and individuals will ‘demand a greater tolerance of and ability to calculate and interpret probabilities of uncertainty by physicians and patients’ [3]. There is a fundamental trade-off between greater accuracy in point estimates of risk (decreased aleatory uncertainty) and lower precision in these estimates at the individual level (increased epistemic uncertainty), manifest as wider confidence intervals as the sample sizes used to generate these estimates are reduced. This trade-off needs to be made explicit, but our review suggests that prognostic tools in cancer do not fully describe these uncertainties. This is, perhaps, expected given the lack of both evidence and expert recommendations on best practices for communicating uncertainty in the wider risk communication literature [18]. However, it is not clear from our review whether certain developers have even considered communicating these uncertainties, and epistemic uncertainty in particular. Even among the few existing tools that represent imprecision using confidence intervals, there is evidence (e.g., the use of multiple decimal places in risk estimates) that implies inconsistency or discomfort with the task of communicating epistemic uncertainty.
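The trade-off between smaller subgroups and wider confidence intervals can be shown numerically. The sketch below computes a 95% Wilson score interval for the same observed survival proportion (60%) in subgroups of decreasing size. The Wilson interval is one standard choice that we assume for illustration, and the subgroup sizes are hypothetical; tools built on regression models would derive their intervals differently.

```python
import math


def wilson_interval(events: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion events / n (95% by default)."""
    if n <= 0 or not 0 <= events <= n:
        raise ValueError("need n > 0 and 0 <= events <= n")
    p = events / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


if __name__ == "__main__":
    # Same observed 5-year survival (60%) in ever smaller hypothetical subgroups.
    for events, n in [(600, 1000), (60, 100), (12, 20)]:
        low, high = wilson_interval(events, n)
        print(f"n={n:>4}: {events / n:.0%} (95% CI {low:.0%} to {high:.0%})")
```

With these inputs the interval spans roughly 57% to 63% for 1,000 patients but roughly 39% to 78% for 20, even though the point estimate is unchanged.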

There are concerns about potential negative outcomes of describing epistemic uncertainty in prognostic tools. Communicating epistemic uncertainty can lead to more pessimistic appraisals of risks and avoidance of decision making, a set of responses known as ambiguity aversion [12,13]. Furthermore, some studies have found that communicating epistemic uncertainty can increase worry [19,20]. Although developers might want to avoid this effect, such worry may also help people understand the limitations of risk evidence and avoid an artificial sense of certainty [21,22], leading to more appropriately cautious and deliberative decisions [23]. Moreover, although in theory the notion of randomness or indeterminacy is implicit in all risk estimates, in practice people have difficulty understanding these concepts and attribute excess certainty to risk estimates [14]. Yet few studies have examined how the communication and understanding of aleatory uncertainty can be improved [24–27]. Our review highlights the need for more empirical research to understand not only the methods and outcomes of communicating both aleatory and epistemic uncertainty in prognostic tools, but also the extent of, and reasons for, variation in the clinical communication of uncertainty. Although it was not a primary objective of this study, given the uncertainty about potential negative outcomes it was notable that few web-based tools provided links to sources of additional support for users with questions. And although a few tools included some information about factors affecting the quality of risk evidence (e.g., clinical studies and patient populations used to derive prognostic models), no tools evaluated or explicitly discussed the quality of the risk evidence. As all the web-based tools we reviewed in this study are accessible to patients, this lack of attention to the quality of prognostic estimates is a major limitation of existing tools.

There are several limitations to our study. Our search strategy, while based on an existing published approach, led us to focus disproportionately on a specific set of cancers. While we included tools for other cancers by the same developers, we may have missed tools that are available only for these other cancers, and these might differ. Moreover, the strategy will have missed some developers, and we did not include mobile applications, which are increasingly used by clinicians. Next, even though two independent reviewers extracted the data, we may still have misclassified some descriptions, particularly where estimates were open to interpretation. For example, the description “about X%” may indicate that the author intended to convey epistemic uncertainty around the percentage, or may simply indicate that the percentage has been rounded to the nearest whole number. Furthermore, we did not calculate inter-rater reliability, which would have allowed us to quantify the degree of agreement in classifying the presentation of risk estimates; however, we believe that our extensive double, independent extraction process led to a high degree of consensus. Finally, our study's objective was descriptive, and we did not review studies that have measured how effective the prognostic tools are in supporting actionable decisions.
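For readers unfamiliar with the inter-rater statistic mentioned above, Cohen's kappa is one common measure for two reviewers: it compares observed agreement with the agreement expected by chance from each reviewer's category frequencies. The sketch below is an illustration only (this study did not compute kappa), and the example labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters who each assign one category per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement implied by each rater's marginal category frequencies.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / n**2
    if expected == 1.0:
        # Both raters used one identical category throughout; kappa is
        # undefined, and this sketch returns 1.0 by convention.
        return 1.0
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical epistemic-uncertainty labels for four risk estimates.
    a = ["prefix", "prefix", "range", "none"]
    b = ["prefix", "range", "range", "none"]
    print(round(cohens_kappa(a, b), 3))  # prints 0.636
```

Here the reviewers agree on three of four estimates (75%), but because some agreement is expected by chance, kappa is lower (7/11, about 0.64).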

4.2. Conclusion

The findings of our study highlight the need for a more deliberate approach to the communication of uncertainty in prognostic tools. Focused attention on this task is needed to better define knowledge gaps, to achieve greater consistency in the extent and manner in which aleatory and epistemic uncertainties are communicated, and ultimately to help patients and their clinicians make better-informed decisions.

4.3. Practice implications

For developers of prognostic tools, we recommend following best practices in risk communication for describing aleatory uncertainty [10,13,16]. When deciding whether and how to describe epistemic uncertainty, developers should consider the context and the potential influence on decisions [15]. Caution is advised about using qualitative representations of epistemic uncertainty without first understanding how they might be interpreted. Users of prognostic tools and communicators of uncertain evidence should recognize that different approaches to conveying the same risk information exist, and that some approaches might be more understandable to some individuals than others.

Acknowledgements

This project was funded by the Canadian Centre for Applied Research in Cancer Control (ARCC). ARCC receives core funding from the Canadian Cancer Society Research Institute (Grant #2105–703549). We also acknowledge Judy Chiu for her support on the project.

Funding

This work was supported by the Canadian Cancer Society Research Institute (Grant #2105–703549). N. Bansback is a Canadian Institutes of Health Research New Investigator. M. Harrison is supported by a Michael Smith Foundation for Health Research Scholar Award. P.K.J. Han and B. Rabin were supported by the US National Cancer Institute (Contract HHSN2612012000010 l).

Declarations of interest

None.

References

- [1]. Rabin BA, Gaglio B, Sanders T, Nekhlyudov L, Dearing JW, Bull S, et al., Predicting cancer prognosis using interactive online tools: a systematic review and implications for cancer care providers, Cancer Epidemiol. Biomark. Prev 22 (2013) 1645–1656.

- [2]. Hagerty RG, Butow PN, Ellis PM, Dimitry S, Tattersall MHN, Communicating prognosis in cancer care: a systematic review of the literature, Ann. Oncol 16 (2005) 1005–1053.

- [3]. Hunter DJ, Uncertainty in the era of precision medicine, N. Engl. J. Med 375 (2016) 711–713.

- [4]. Shariat SF, Karakiewicz PI, Roehrborn CG, Kattan MW, An updated catalog of prostate cancer predictive tools, Cancer 113 (2008) 3075–3099.

- [5]. Weeks JC, Cook EF, O’Day SJ, Peterson LM, Wenger N, Reding D, et al., Relationship between cancer patients’ predictions of prognosis and their treatment preferences, JAMA 279 (1998) 1709–1714.

- [6]. Epstein RM, Street RL Jr., Patient-Centered Communication in Cancer Care: Promoting Healing and Reducing Suffering, APA PsycNET. Available from URL: http://psycnet.apa.org/psycextra/481972008-001.

- [7]. Han PKJ, Klein WMP, Arora NK, Varieties of uncertainty in health care: a conceptual taxonomy, Med. Decis. Mak 31 (2011) 828–838.

- [8]. Hammitt JK, Graham JD, Willingness to pay for health protection: inadequate sensitivity to probability? J. Risk Uncertain 18 (1999) 33–62.

- [9]. Corso PS, Hammitt JK, Graham JD, Valuing mortality-risk reduction: using visual aids to improve the validity of contingent valuation, J. Risk Uncertain 23 (2001) 165–184.

- [10]. Trevena LJ, Zikmund-Fisher BJ, Edwards A, Gaissmaier W, Galesic M, Han PKJ, et al., Presenting quantitative information about decision outcomes: a risk communication primer for patient decision aid developers, BMC Med. Inform. Decis. Mak 13 (Suppl 2) (2013) S7.

- [11]. Fagerlin A, Zikmund-Fisher BJ, Ubel PA, Helping patients decide: ten steps to better risk communication, J. Natl. Cancer Inst 103 (2011) 1436–1443.

- [12]. Han PKJ, Joekes K, Elwyn G, Mazor KM, Thomson R, Sedgwick P, et al., Development and evaluation of a risk communication curriculum for medical students, Patient Educ. Couns 94 (2014) 43–49.

- [13]. Elwyn G, O’Connor A, Stacey D, Volk R, Edwards A, Coulter A, et al., Developing a quality criteria framework for patient decision aids: online international Delphi consensus process, BMJ 333 (2006) 417.

- [14]. Han PKJ, Lehman TC, Massett H, Lee SJ, Klein WM, Freedman AN, Conceptual problems in laypersons’ understanding of individualized cancer risk: a qualitative study, Health Expect 12 (2009) 4–17.

- [15]. Bansback N, Bell M, Spooner L, Pompeo A, Han PKJ, Harrison M, Communicating uncertainty in benefits and harms: a review of patient decision support interventions, Patient 10 (2017) 311–319.

- [16]. Trevena LJ, Barratt A, Butow P, Caldwell P, A systematic review on communicating with patients about evidence, J. Eval. Clin. Pract 12 (2006) 13–23.

- [17]. Gigerenzer G, Gaissmaier W, Kurz-Milcke E, Schwartz LM, Woloshin S, Helping doctors and patients make sense of health statistics, Psychol. Sci. Public Interest 8 (2007) 53–96.

- [18]. Han PK, Conceptual, methodological, and ethical problems in communicating uncertainty in clinical evidence, Med. Care Res. Rev 70 (2013) 14S–36S.

- [19]. Han PKJ, Klein WMP, Lehman T, Killam B, Massett H, Freedman AN, The communication of uncertainty regarding individualized cancer risk estimates: effects and influential factors, Med. Decis. Mak 31 (2011) 354–366.

- [20]. Lipkus IM, Klein WM, Rimer BK, Communicating breast cancer risks to women using different formats, Cancer Epidemiol. Biomark. Prev 10 (2001) 895–898.

- [21]. Han PK, Klein WM, Lehman TC, Massett H, Lee SC, Freedman AN, Laypersons’ responses to the communication of uncertainty regarding cancer risk estimates, Med. Decis. Mak 29 (2009) 391–403.

- [22]. Schapira MM, Nattinger AB, McHorney CA, Frequency or probability? A qualitative study of risk communication formats used in health care, Med. Decis. Mak 21 (2001) 459–467.

- [23]. Politi MC, Lewis CL, Frosch DL, Supporting shared decisions when clinical evidence is low, Med. Care Res. Rev 70 (2013) 113S–128S.

- [24]. Han PK, Klein WM, Killam B, Lehman T, Massett H, Freedman AN, Representing randomness in the communication of individualized cancer risk estimates: effects on cancer risk perceptions, worry, and subjective uncertainty about risk, Patient Educ. Couns 86 (2012) 106–113.

- [25]. Stone ER, Sieck WR, Bull BE, Yates JF, Parks SC, Rush CJ, Foreground: background salience: explaining the effects of graphical displays on risk avoidance, Organ. Behav. Hum. Decis. Process 90 (2003) 19–36.

- [26]. Stone ER, Yates JF, Parker AM, Effects of numerical and graphical displays on professed risk-taking behavior, J. Exp. Psychol. Appl 3 (1997) 243.

- [27]. Schirillo JA, Stone ER, The greater ability of graphical versus numerical displays to increase risk avoidance involves a common mechanism, Risk Anal 25 (2005) 555–566.