Abstract

Background

Melanoma has one of the fastest rising incidence rates of any cancer. It accounts for a small percentage of skin cancer cases but is responsible for the majority of skin cancer deaths. History‐taking and visual inspection of a suspicious lesion by a clinician is usually the first in a series of ‘tests’ to diagnose skin cancer. Establishing the accuracy of visual inspection alone is critical to understanding the potential contribution of additional tests to assist in the diagnosis of melanoma.

Objectives

To determine the diagnostic accuracy of visual inspection for the detection of cutaneous invasive melanoma and atypical intraepidermal melanocytic variants in adults with limited prior testing and in those referred for further evaluation of a suspicious lesion. Studies were separated according to whether the diagnosis was recorded face‐to‐face (in‐person) or based on remote (image‐based) assessment.

Search methods

We undertook a comprehensive search of the following databases from inception up to August 2016: CENTRAL; CINAHL; CPCI; Zetoc; Science Citation Index; US National Institutes of Health Ongoing Trials Register; NIHR Clinical Research Network Portfolio Database; and the World Health Organization International Clinical Trials Registry Platform. We studied reference lists and published systematic review articles.

Selection criteria

Test accuracy studies of any design that evaluated visual inspection in adults with lesions suspicious for melanoma, compared with a reference standard of either histological confirmation or clinical follow‐up. We excluded studies reporting data for ‘clinical diagnosis’ where dermoscopy may or may not have been used.

Data collection and analysis

Two review authors independently extracted all data using a standardised data extraction and quality assessment form (based on QUADAS‐2). We contacted authors of included studies where information related to the target condition or diagnostic threshold was missing. We estimated summary sensitivities and specificities per algorithm and threshold using the bivariate hierarchical model. We investigated the impact of: in‐person test interpretation; use of a purposely developed algorithm to assist diagnosis; and observer expertise.

Main results

We included 49 publications reporting on a total of 51 study cohorts with 34,351 lesions (including 2499 cases), providing 134 datasets for visual inspection. Across almost all study quality domains, the majority of study reports provided insufficient information to allow us to judge the risk of bias, while in three of four domains that we assessed we scored concerns regarding applicability of study findings as 'high'. Selective participant recruitment, lack of detail regarding the threshold for deciding on a positive test result, and lack of detail on observer expertise were particularly problematic.

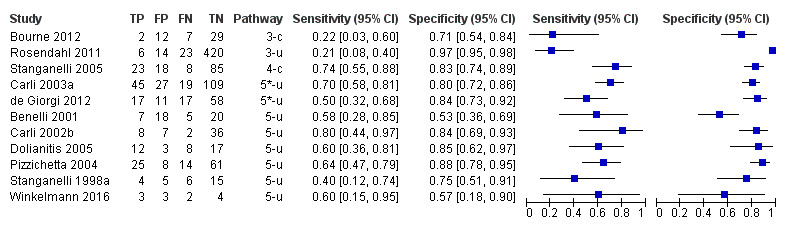

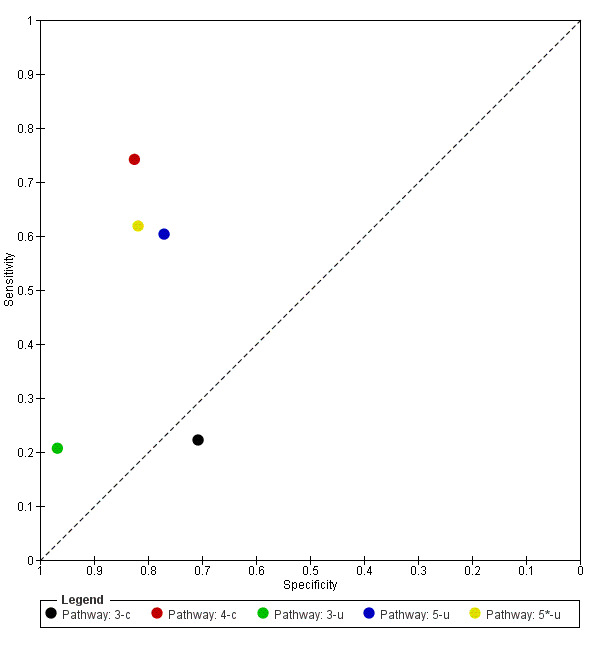

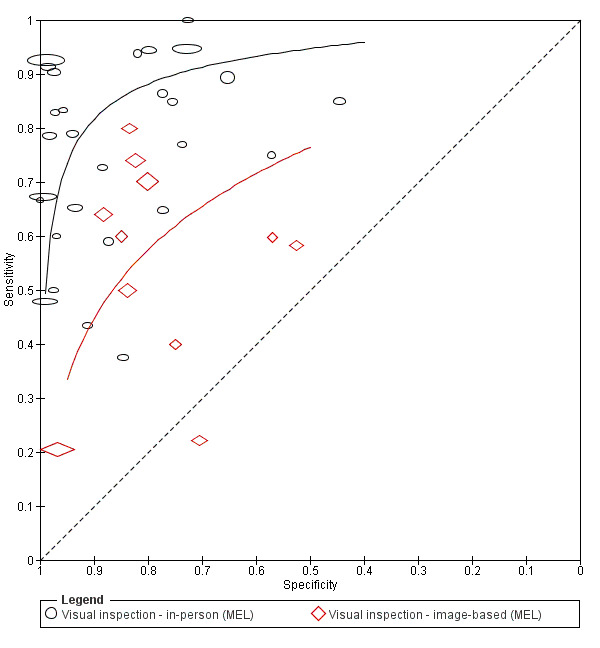

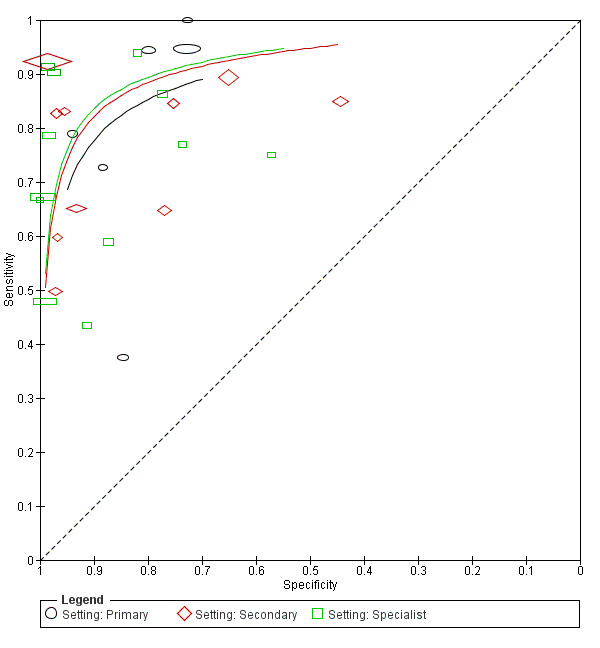

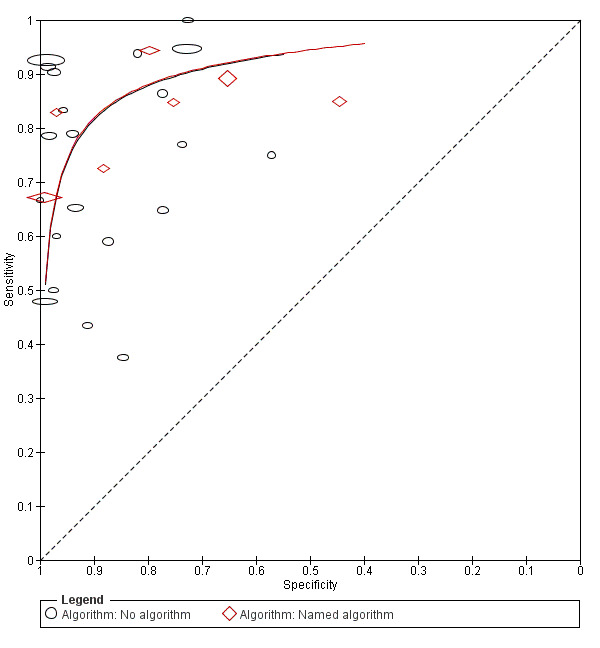

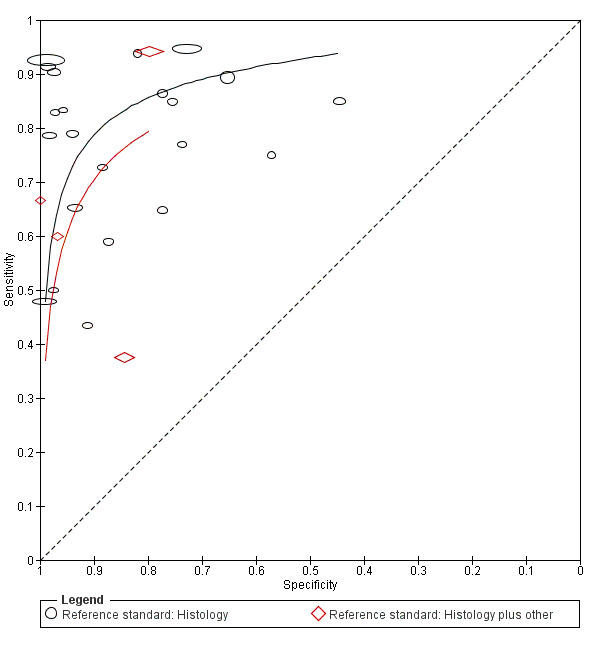

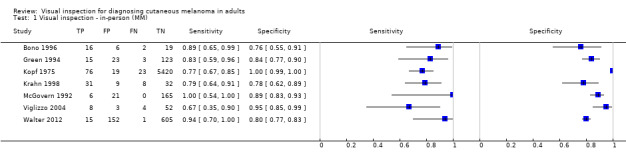

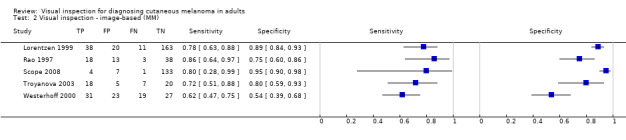

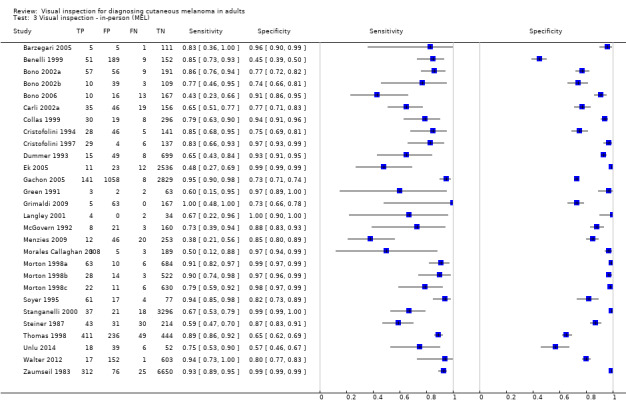

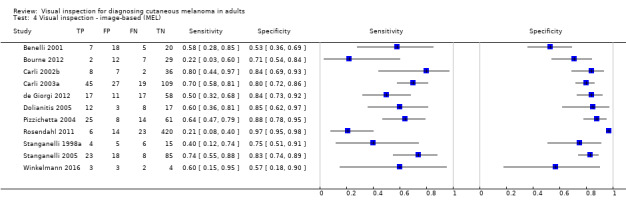

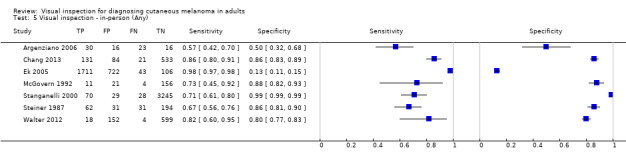

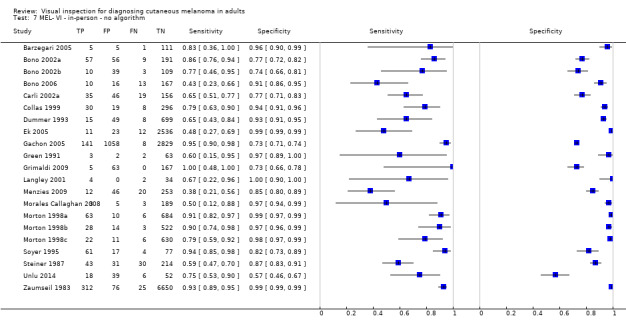

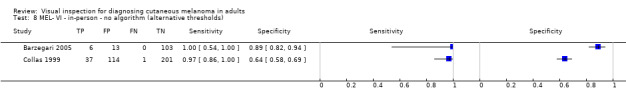

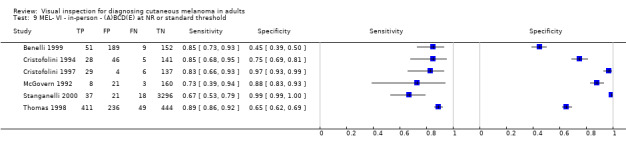

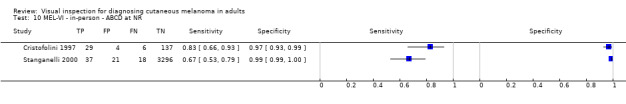

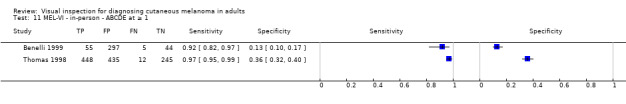

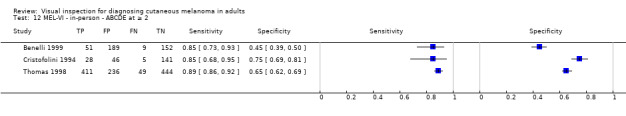

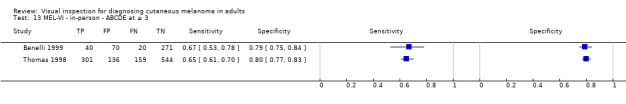

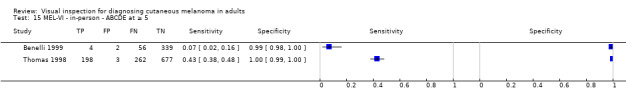

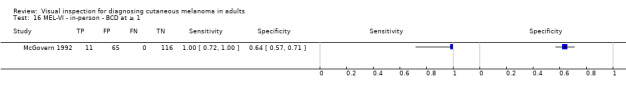

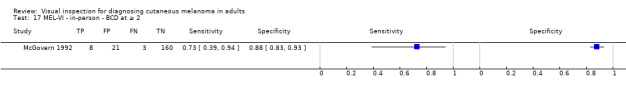

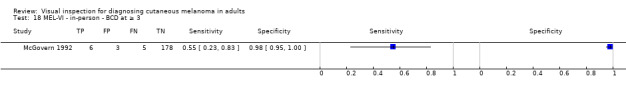

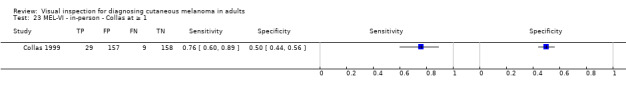

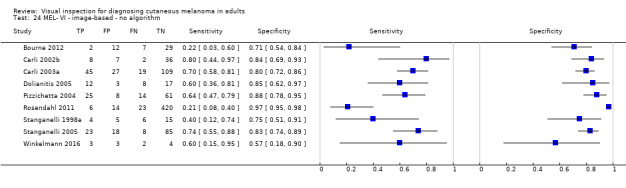

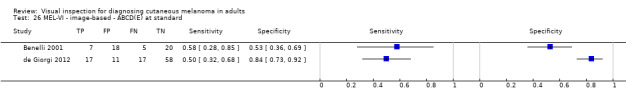

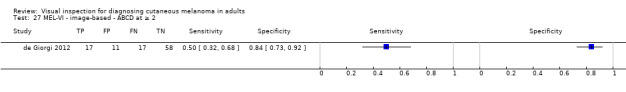

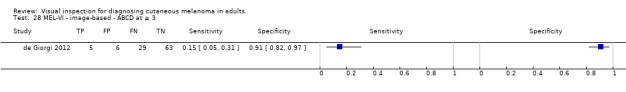

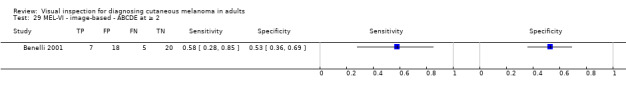

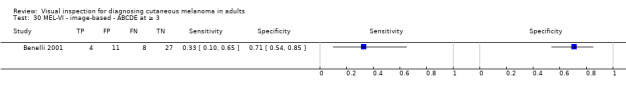

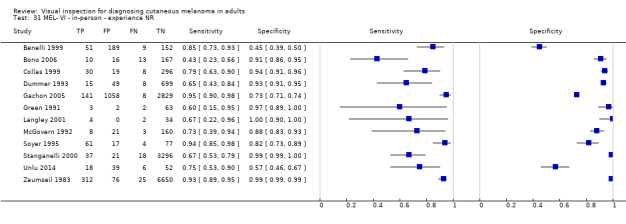

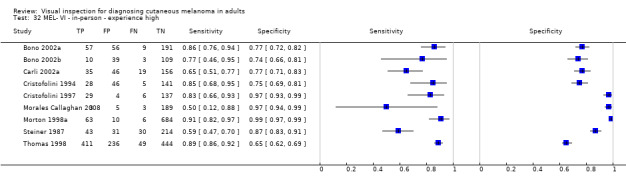

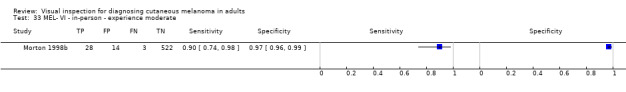

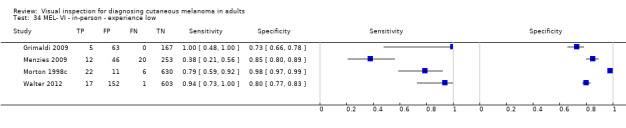

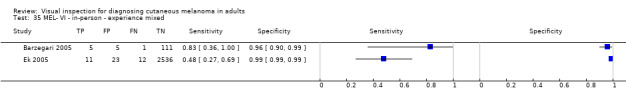

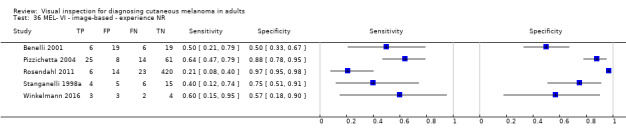

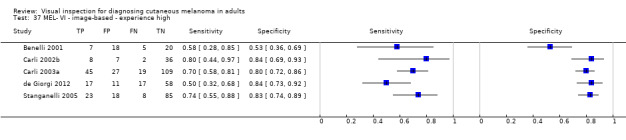

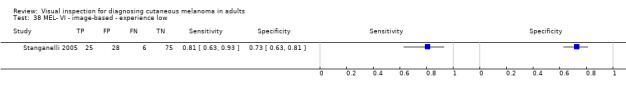

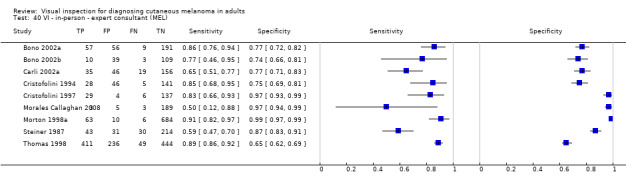

Attempts to analyse studies by degree of prior testing were hampered by a lack of relevant information and by the restricted inclusion of lesions selected for biopsy or excision. Accuracy was generally much higher for in‐person diagnosis compared to image‐based evaluations (relative diagnostic odds ratio of 8.54, 95% CI 2.89 to 25.3, P < 0.001). Meta‐analysis of in‐person evaluations that could be clearly placed on the clinical pathway showed a general trade‐off between sensitivity and specificity, with the highest sensitivity (92.4%, 95% CI 26.2% to 99.8%) and lowest specificity (79.7%, 95% CI 73.7% to 84.7%) observed in participants with limited prior testing (n = 3 datasets). Summary sensitivities were lower for those referred for specialist assessment but with much higher specificities (e.g. sensitivity 76.7%, 95% CI 61.7% to 87.1%, and specificity 95.7%, 95% CI 89.7% to 98.3%, for lesions selected for excision, n = 8 datasets). These differences may be related to differences in the spectrum of included lesions, differences in the definition of a positive test result, or to variations in observer expertise. We did not find clear evidence that accuracy is improved by the use of any algorithm to assist diagnosis in all settings. Attempts to examine the effect of observer expertise in melanoma diagnosis were hindered by poor reporting.

Authors' conclusions

Visual inspection is a fundamental component of the assessment of a suspicious skin lesion; however, the evidence suggests that melanomas will be missed if visual inspection is used on its own. The evidence to support its accuracy in the range of settings in which it is used is flawed and very poorly reported. Although published algorithms do not appear to improve accuracy, there is insufficient evidence to suggest that the ‘no algorithm’ approach should be preferred in all settings. Despite the volume of research evaluating visual inspection, further prospective evaluation of the potential added value of using established algorithms according to the prior testing or diagnostic difficulty of lesions may be warranted.

Keywords: Adult, Aged, Humans, Middle Aged, Algorithms, Diagnostic Errors, Melanoma, Melanoma/diagnosis, Melanoma/diagnostic imaging, Physical Examination, Physical Examination/methods, Sensitivity and Specificity, Skin Neoplasms, Skin Neoplasms/diagnosis, Skin Neoplasms/diagnostic imaging

Plain language summary

How accurate is visual inspection of skin lesions with the naked eye for diagnosis of melanoma in adults?

What is the aim of the review?

Melanoma is one of the most dangerous forms of skin cancer. The aim of this Cochrane Review was to find out how accurate checking suspicious skin lesions (lumps, bumps, wounds, scratches or grazes) with the naked eye (visual inspection) can be to diagnose melanoma (diagnostic accuracy). The Review also investigated whether diagnostic accuracy was different depending on whether the clinician was face to face with the patient (in‐person visual inspection), or looked at an image of the lesion (image‐based visual inspection). Cochrane researchers included 49 studies to answer this question.

Why is it important to know the diagnostic accuracy of visual examination of skin lesions suspected to be melanomas?

Not recognising a melanoma when it is present (a false‐negative test result) delays surgery to remove it (excision), risking cancer spreading to other organs in the body and possibly death. Diagnosing a skin lesion (a mole or area of skin with an unusual appearance in comparison with the surrounding skin) as a melanoma when it is not (a false‐positive result) may result in unnecessary surgery, further investigations, and patient anxiety. Visual inspection of suspicious skin lesions by a clinician using the naked eye is usually the first of a series of ‘tests’ to diagnose melanoma. Knowing the diagnostic accuracy of visual inspection alone is important to decide whether additional tests, such as a biopsy (removing a part of the lesion for examination under a microscope) are needed to improve accuracy to an acceptable level.

What did the review study?

Researchers wanted to find out the diagnostic accuracy of in‐person compared with image‐based visual inspection of suspicious skin lesions. Researchers also wanted to find out whether diagnostic accuracy improved when doctors used a 'visual inspection checklist', and whether it varied with how experienced they were in visual inspection (level of clinical expertise). They considered the diagnostic accuracy of the first visual inspection of a lesion, for example, by a general practitioner (GP), and of lesions that had been referred for further evaluation, for example, by a dermatologist (doctor specialising in skin problems).

What are the main results of the review?

Only 19 studies (17 in‐person studies and 2 image‐based studies) were clear whether the test was the first visual inspection of a lesion or was a visual inspection following referral (for example, when patients are referred by a GP to skin specialists for visual inspection).

First in‐person visual inspection (3 studies)

The results of three studies of 1339 suspicious skin lesions suggest that in a group of 1000 lesions, of which 90 (9%) actually are melanoma:

‐ An estimated 268 will have a visual inspection result indicating melanoma is present. Of these, 185 will not be melanoma and will result in an unnecessary biopsy (false‐positive results).

‐ An estimated 732 will have a visual inspection result indicating that melanoma is not present. Of these, seven will actually have melanoma and would not be sent for biopsy (false‐negative results).

Two further studies restricted to 4228 suspicious skin lesions that were all selected to be excised found similar results.

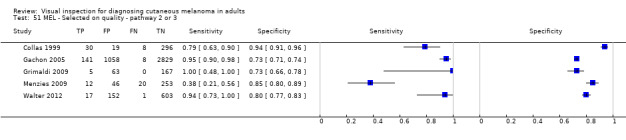

In‐person visual inspection after referral, all lesions selected to be excised (8 studies)

The results of eight studies of 5331 suspicious skin lesions suggest that in a group of 1000 lesions, of which 90 (9%) actually are melanoma:

‐ An estimated 108 will have a visual inspection result indicating melanoma is present, and of these, 39 will not be melanoma and will result in an unnecessary biopsy (false‐positive results).

‐ Of the 892 lesions with a visual inspection result indicating that melanoma is not present, 21 will actually be melanoma and would not be sent for biopsy (false‐negative results).

Overall, the number of false‐positive results (diagnosing a skin lesion as a melanoma when it is not) was observed to be higher and the number of false‐negative results (not recognising a melanoma when it is present) lower for first visual inspections of suspicious skin lesions compared to visual inspection following referral.

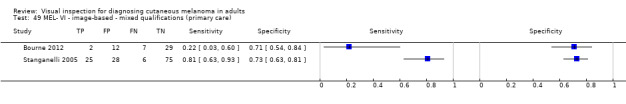

Visual inspection of images of suspicious skin lesions (2 studies)

Accuracy was much lower for visual inspection of images of lesions compared to visual inspection in person.

Value of visual inspection checklists

There was no evidence that use of a visual inspection checklist or the level of clinical expertise changed diagnostic accuracy.

How reliable are the results of the studies of this review?

The majority of included studies diagnosed melanoma by lesion biopsy and confirmed that melanoma was not present by biopsy or by follow‐up over time to make sure the skin lesion remained negative for melanoma. In these studies, biopsy, clinical follow‐up, or specialist clinician diagnosis were the reference standards (means of establishing final diagnoses). Biopsy or follow‐up are likely to have been reliable methods for deciding whether patients really had melanoma. In a few studies, experts diagnosed the absence of melanoma (expert diagnosis), which is less likely to have been a reliable method for deciding whether patients really had melanoma. There was lots of variation in the results of the studies in this review and the studies did not always describe fully the methods they used, which made it difficult to assess their reliability.

Who do the results of this review apply to?

Thirteen studies were undertaken in Europe (68%), with the remainder undertaken in Asia (n = 1), Oceania (n = 4), and North America (n = 1). Mean age ranged from 30 to 73.6 years (reported in 10 studies). The percentage of individuals with melanoma ranged between 4% and 20% in first visualised lesions and between 1% and 50% in studies of referred lesions. In the majority of studies, the lesions were unlikely to be representative of the range of those seen in practice, for example, only including skin lesions of a certain size or with a specific appearance. In addition, variation in the expertise of clinicians performing visual inspection and in the definition used to decide whether or not melanoma was present across studies makes it unclear as to how visual inspection should be carried out and by whom in order to achieve the accuracy observed in studies.

What are the implications of this review?

Error rates from visual inspection are too high for it to be relied upon alone. Although not evaluated in this review, other technologies need to be used to ensure accurate diagnosis of skin cancer. There is considerable variation and uncertainty about the diagnostic accuracy of visual inspection alone for the diagnosis of melanoma. There is no evidence to suggest that visual inspection checklists reliably improve the diagnostic accuracy of visual inspection, so recommendations cannot be made about when they should be used. Despite the existence of numerous research studies, further, well‐reported studies assessing the diagnostic accuracy of visual inspection with and without visual inspection checklists and by clinicians with different levels of expertise are needed.

How up‐to‐date is this review?

The review authors searched for and used studies published up to August 2016.

Summary of findings

Summary of findings. What is the diagnostic accuracy of visual inspection for the detection of cutaneous invasive melanoma and atypical intraepidermal melanocytic variants in adults?

| Question | What is the diagnostic accuracy of visual inspection for the detection of cutaneous invasive melanoma and atypical intraepidermal melanocytic variants in adults? |
| --- | --- |
| Population | Adults with lesions suspicious for melanoma |
| Index test | Visual inspection with or without the use of any established algorithms or checklist to aid diagnosis |
| Target condition | Cutaneous invasive melanoma and atypical intraepidermal melanocytic variants |
| Reference standard | Histology with or without long‐term follow‐up |
| Action | If accurate, positive results ensure melanoma lesions are not missed but are appropriately referred and excised and those with negative results can be safely reassured and discharged. |
| Quantity of evidence | Number of studies: 49 (a); total lesions: 34,351; total cases: 2499 |
| Limitations: risk of bias | Potential risk for participant selection from case‐control design (6), inappropriate exclusion criteria (7) or lack of detail (27/49). All index test interpretation was blinded to reference standard diagnosis. Index test thresholds not clearly pre‐specified (22/33 in‐person evaluations; 13/16 image‐based). Low risk for reference standard (42/49); high concern from use of expert diagnosis (6). Blinding of reference standard to visual inspection diagnosis not reported in any study. High risk for participant flow due to differential verification (11), and exclusions following recruitment (15); 37 studies did not mention timing of tests. |
| Limitations: applicability of evidence to question | Participant selection restricted to those with melanocytic lesions only (10), or to those with histopathology results (37) and included multiple lesions per participant (14). No description of index test diagnostic thresholds (24 in‐person; 13 image‐based) or reporting of average or consensus diagnoses (7 in‐person; 13 image‐based). Clinical images interpreted blinded to clinical information (11/16). Little information given concerning the expertise of the histopathologist (40/49). |
| Findings | 37 studies (providing 39 datasets) reported accuracy data for the primary target condition. We separated them a priori into in‐person (n = 28) and image‐based (n = 11) evaluations. Subsequent analysis confirmed differences in accuracy according to the different approaches to diagnosis (P < 0.001). Attempts to analyse studies by degree of prior testing were hampered by a lack of relevant information provided in the study publications and by the inclusion of lesions selected for biopsy or excision. Of the 28 in‐person evaluations, we could only clearly place 17 on the clinical pathway, and considered 11 to have provided insufficient information to allow us to identify the pathway (coded ‘unclear’ on pathway). The findings presented are based on results for in‐person evaluations that could be clearly placed on the clinical pathway. |

Test: In‐person visual inspection using any or no algorithm at any threshold

| Data | Number of datasets | Total lesions | Total melanomas |
| --- | --- | --- | --- |
| All in‐person evaluations | 28 | 25,604 | 1748 |
| Studies clearly placed on the clinical pathway | 17 | 14,700 | 622 |

Place on pathway: participants with limited prior testing (all lesions)

| Datasets (n) | Lesions (n) | Melanomas (n) | Sensitivity (95% CI) | Specificity (95% CI) |
| --- | --- | --- | --- | --- |
| 3 | 1339 | 55 | 92% (26 to 100) | 80% (74 to 85) |

| Numbers in a cohort of 1000 lesions (b) | TP | FP | FN | TN | PPV | NPV |
| --- | --- | --- | --- | --- | --- | --- |
| At a prevalence of 4% | 37 (10 to 40) | 195 (252 to 147) | 3 (30 to 0) | 765 (708 to 813) | 16% (4 to 21) | 100% (96 to 100) |
| At a prevalence of 9% | 83 (24 to 90) | 185 (239 to 139) | 7 (66 to 0) | 725 (671 to 771) | 31% (9 to 39) | 99% (91 to 100) |
| At a prevalence of 16% | 148 (42 to 160) | 171 (221 to 129) | 12 (118 to 0) | 669 (619 to 711) | 46% (16 to 55) | 98% (84 to 100) |

Place on pathway: participants with limited prior testing (only lesions selected for excision)

| Datasets (n) | Lesions (n) | Melanomas (n) | Sensitivity (95% CI) | Specificity (95% CI) |
| --- | --- | --- | --- | --- |
| 2 | 4228 | 160 | 90% (70 to 97) | 81% (67 to 90) |

| Numbers in a cohort of 1000 lesions (b) | TP | FP | FN | TN | PPV | NPV |
| --- | --- | --- | --- | --- | --- | --- |
| At a prevalence of 4% | 36 (28 to 39) | 180 (312 to 96) | 4 (12 to 1) | 780 (648 to 864) | 17% (8 to 29) | 99% (98 to 100) |
| At a prevalence of 9% | 81 (63 to 88) | 170 (296 to 91) | 9 (27 to 2) | 740 (614 to 819) | 32% (18 to 49) | 99% (96 to 100) |
| At a prevalence of 16% | 144 (112 to 156) | 157 (273 to 84) | 16 (48 to 4) | 683 (567 to 756) | 48% (29 to 65) | 98% (92 to 99) |

Place on pathway: referred participants (all lesions)

| Datasets (n) | Lesions (n) | Melanomas (n) | Sensitivity (95% CI) | Specificity (95% CI) |
| --- | --- | --- | --- | --- |
| 2 | 3494 | 61 | 75% (49 to 90) | 99% (95 to 100) |

| Numbers in a cohort of 1000 lesions (b) | TP | FP | FN | TN | PPV | NPV |
| --- | --- | --- | --- | --- | --- | --- |
| At a prevalence of 4% | 30 (20 to 36) | 13 (51 to 4) | 10 (20 to 4) | 947 (909 to 956) | 69% (28 to 90) | 99% (98 to 100) |
| At a prevalence of 9% | 67 (44 to 81) | 13 (48 to 4) | 23 (46 to 9) | 897 (862 to 906) | 84% (48 to 96) | 98% (95 to 99) |
| At a prevalence of 16% | 119 (78 to 144) | 12 (45 to 3) | 41 (82 to 16) | 828 (795 to 837) | 91% (64 to 98) | 95% (91 to 98) |

Referred participants (only lesions selected for excision)

| Datasets (n) | Lesions (n) | Melanomas (n) | Sensitivity (95% CI) | Specificity (95% CI) |
| --- | --- | --- | --- | --- |
| 8 | 5331 | 258 | 77% (62 to 87) | 96% (90 to 98) |

| Numbers in a cohort of 1000 lesions (b) | TP | FP | FN | TN | PPV | NPV |
| --- | --- | --- | --- | --- | --- | --- |
| At a prevalence of 4% | 31 (25 to 35) | 41 (99 to 16) | 9 (15 to 5) | 919 (861 to 944) | 43% (20 to 68) | 99% (98 to 99) |
| At a prevalence of 9% | 69 (56 to 78) | 39 (94 to 15) | 21 (34 to 12) | 871 (816 to 895) | 64% (37 to 84) | 98% (96 to 99) |
| At a prevalence of 16% | 123 (99 to 139) | 36 (87 to 14) | 37 (61 to 21) | 804 (753 to 826) | 77% (53 to 91) | 96% (92 to 98) |

Referred participants with equivocal lesions (only lesions selected for excision)

| Datasets (n) | Lesions (n) | Melanomas (n) | Sensitivity (95% CI) | Specificity (95% CI) |
| --- | --- | --- | --- | --- |
| 2 | 930 | 88 | 85% (56 to 96) | 89% (79 to 95) |

| Numbers in a cohort of 1000 lesions (b) | TP | FP | FN | TN | PPV | NPV |
| --- | --- | --- | --- | --- | --- | --- |
| At a prevalence of 4% | 34 (22 to 38) | 101 (197 to 48) | 6 (18 to 2) | 859 (763 to 912) | 25% (10 to 44) | 99% (98 to 100) |
| At a prevalence of 9% | 76 (50 to 86) | 96 (187 to 46) | 14 (40 to 4) | 814 (723 to 865) | 44% (21 to 66) | 98% (95 to 100) |
| At a prevalence of 16% | 136 (89 to 154) | 88 (172 to 42) | 24 (71 to 6) | 752 (668 to 798) | 61% (34 to 79) | 97% (90 to 99) |

CI: confidence interval; FN: false‐negative; FP: false‐positive; NPV: negative predictive value; PPV: positive predictive value; TN: true negative; TP: true positive

(a) 37 of the 49 included studies (reporting on 39 cohorts of lesions) provide data for the primary target condition (defined as detection of cutaneous invasive melanoma and atypical intraepidermal melanocytic variants) and are the main focus of this 'Summary of findings' table; the summary of methodological quality is based on the full sample of 49 studies.

(b) We estimated the number of true positives (TP), false‐positives (FP), false‐negatives (FN) and true negatives (TN) for a hypothetical cohort of 1000 lesions at the median and interquartile ranges of prevalence (25th and 75th percentiles), at average sensitivity and specificity and using the lower and upper limits of the 95% confidence intervals, denoted in brackets (lower limit to upper limit).
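The cohort calculation described in the table footnote can be reproduced with a short sketch. The function name `cohort_counts` and its parameters are illustrative assumptions rather than anything used by the review authors; the inputs are the summary estimates reported for participants with limited prior testing (sensitivity 92.4%, specificity 79.7%) at the median prevalence of 9%.

```python
def cohort_counts(prevalence, sensitivity, specificity, cohort_size=1000):
    """Expected 2x2 counts and predictive values for a hypothetical cohort.

    Hypothetical helper for illustration; not taken from the review.
    """
    with_melanoma = cohort_size * prevalence
    without_melanoma = cohort_size - with_melanoma

    tp = with_melanoma * sensitivity      # melanomas correctly flagged
    fn = with_melanoma - tp               # melanomas missed
    tn = without_melanoma * specificity   # benign lesions correctly cleared
    fp = without_melanoma - tn            # benign lesions flagged as melanoma

    return {
        "TP": round(tp), "FP": round(fp), "FN": round(fn), "TN": round(tn),
        # Predictive values use the unrounded counts
        "PPV": tp / (tp + fp),
        "NPV": tn / (tn + fn),
    }


result = cohort_counts(prevalence=0.09, sensitivity=0.924, specificity=0.797)
print(result)
```

With these inputs the sketch gives 83 true positives, 185 false positives, 7 false negatives and 725 true negatives, matching the 9% prevalence row for participants with limited prior testing. The bracketed ranges in the table would be obtained by repeating the calculation at the confidence limits for sensitivity and specificity.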

Background

This review is one of a series of Cochrane Diagnostic Test Accuracy (DTA) reviews on the diagnosis and staging of melanoma and keratinocyte skin cancers conducted for the National Institute for Health Research (NIHR) Cochrane Systematic Reviews Programme. Appendix 1 shows the content and structure of the programme. Appendix 2 provides a glossary of terms used, and a table of acronyms used is provided in Appendix 3.

Target condition being diagnosed

Melanoma is one of the most aggressive forms of skin cancer, with the potential to metastasise to other parts of the body via the lymphatic system and blood stream. It accounts for a small percentage of skin cancer cases but is responsible for up to 75% of skin cancer deaths (Boring 1994; Cancer Research UK 2017).

Melanoma arises from uncontrolled proliferation of melanocytes, the epidermal cells that produce pigment or melanin. It most commonly arises in the skin but can occur in any organ that contains melanocytes, including mucosal surfaces, the back of the eye, and lining around the spinal cord and brain. Cutaneous melanoma refers to a skin lesion with malignant melanocytes present in the dermis, and includes superficial spreading, nodular, acral lentiginous, and lentigo maligna melanoma variants (see Figure 1). Melanoma in situ refers to malignant melanocytes that are contained within the epidermis and have not yet invaded the dermis, but are at risk of progression to melanoma if left untreated. Lentigo maligna, a subtype of melanoma‐in‐situ in chronically sun‐damaged skin, denotes another form of proliferation of abnormal melanocytes. Lentigo maligna can progress to invasive melanoma if its growth breaches the dermo‐epidermal junction during a vertical growth phase (when it becomes known as 'lentigo maligna melanoma'); however, its rate of malignant transformation is both lower and slower than for melanoma in situ (Kasprzak 2015). Melanoma in situ and lentigo maligna are both atypical intraepidermal melanocytic variants.

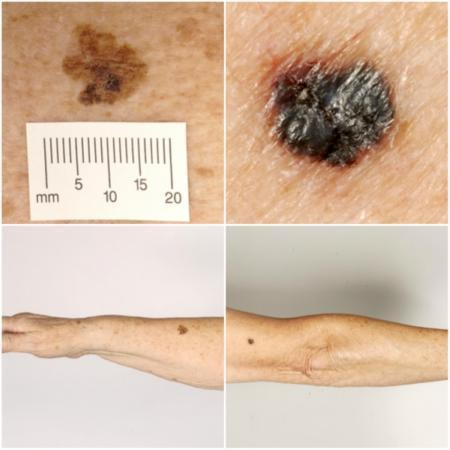

Figure 1. Sample photographs of superficial spreading melanoma (left) and nodular melanoma (right). Copyright © 2010 Dr Rubeta Matin: reproduced with permission.

The incidence of melanoma rose to over 200,000 newly diagnosed cases worldwide in 2012 (Erdmann 2013; Ferlay 2015), with an estimated 55,000 deaths (Ferlay 2015). The highest incidence is observed in Australia with 13,134 new cases of melanoma of the skin in 2014 (ACIM 2017) and in New Zealand with 2341 registered cases in 2010 (HPA and MelNet NZ 2014). For 2014 in the USA, the predicted incidence was 73,870 per annum and the predicted number of deaths was 9940 (Siegel 2015). The highest rates in Europe are seen in north‐western Europe and the Scandinavian countries, with the highest incidence reported in Switzerland: 25.8 per 100,000 in 2012. Rates in England have tripled from 4.6 and 6.0 per 100,000 in men and women, respectively, in 1990, to 18.6 and 19.6 per 100,000 in 2012 (EUCAN 2012). In the UK, melanoma has one of the fastest rising incidence rates of any cancer and has the biggest projected increase in incidence between 2007 and 2030 (Mistry 2011). In the decade leading up to 2013, age‐standardised incidence increased by 46%, with 14,500 new cases in 2013 and 2459 deaths in 2014 (Cancer Research UK 2017). While overall incidence rates are higher in women than in men, the rate of incidence in men is increasing faster than in women (Arnold 2014).

The rising incidence in melanoma is thought to be primarily related to an increase in recreational sun exposure and use of tanning beds, and an increasingly ageing population with higher lifetime ultraviolet (UV) exposure, in conjunction with possible earlier detection (Belbasis 2016; Linos 2009). Putative risk factors are reviewed in detail elsewhere (Belbasis 2016), but can be broadly divided into host or environmental factors. Host factors include fair skin and light hair or eye colour; older age (Geller 2002); male sex (Geller 2002); previous skin cancer (Tucker 1985); predisposing skin lesions, for example, high melanocytic naevus counts (Gandini 2005), clinically atypical naevi (Gandini 2005), or large congenital naevi (Swerdlow 1995); genetically inherited skin disorders, for example, xeroderma pigmentosum (Lehmann 2011); and a family history of melanoma (Gandini 2005). Environmental factors include recreational, occupational, and work‐related exposure to sunlight (both cumulative and episodic burning) (Armstrong 2017; Gandini 2005); artificial tanning (Boniol 2012); and immunosuppression, for example, in organ transplant recipients or HIV‐positive individuals (DePry 2011). Lower socioeconomic class may be associated with delayed presentation and thus more advanced disease at diagnosis (Reyes‐Ortiz 2006).

A database of over 40,000 US patients from 1998 onwards, which assisted the development of the Eighth Edition American Joint Committee on Cancer (AJCC) Staging System, indicated a five‐year survival of 99% for stage IA melanoma (melanoma ≤ 1 mm thick without ulceration, mitosis or involvement of the lymph nodes), dropping to anything between 32% and 93% in stage III disease (melanoma of any thickness with metastasis to the lymph nodes) depending on tumour thickness, the presence of ulceration and number of involved nodes (Gershenwald 2017). Before the advent of targeted and immuno‐therapies, stage IV melanoma (melanoma disseminated to distant sites/visceral organs) was associated with median survival of six to nine months, one‐year survival rate of 25%, and three‐year survival of 15% (Balch 2009; Korn 2008).

Between 1975 and 2010, five‐year relative survival for melanoma (i.e. not including deaths from other causes) in the USA increased from 80% to 94%, with survival for localised, regional, and distant disease estimated at 99%, 70%, and 18%, respectively in 2010 (Cho 2014). Overall mortality rates, however, showed little change, at 2.1 deaths per 100,000 in 1975 and 2.7 per 100,000 in 2010 (Cho 2014). Increasing incidence in localised disease over the same period (from 5.7 to 21 per 100,000) suggests that much of the observed improvement in survival may be due to earlier detection and heightened vigilance (Cho 2014). New targeted therapies for stage IV melanoma (e.g. BRAF inhibitors) have improved survival and immunotherapies are evolving such that long‐term survival is being documented (Pasquali 2018). No new data regarding the survival prospects for people with stage IV disease were analysed for the AJCC Eighth Edition Staging Guidelines due to lack of contemporary data (Gershenwald 2017).

Treatment of melanoma

For primary melanoma, the mainstay of definitive treatment is early detection and excision of the lesion, to remove both the tumour and any malignant cells that might have spread into the surrounding skin (Garbe 2016; Marsden 2010; NICE 2015a; SIGN 2017; Sladden 2009). Recommended surgical margins vary according to tumour thickness (Garbe 2016) and stage of disease at presentation (NICE 2015a).

Index test(s)

For the purposes of our series of reviews, each component of the diagnostic process, including visual inspection or clinical examination, is considered a diagnostic or index ‘test', the accuracy of which can be established in comparison with a reference standard of diagnosis, either alone or in combination with other available technologies that may assist the diagnostic process.

Clinical history‐taking to identify risk factors and visual inspection of the lesion, surrounding skin and comparison with other lesions on the rest of the body is fundamental to the diagnosis of skin cancer. The strongest common phenotypic risk factor is the presence of atypical naevi; typically the presence of over a hundred moles or naevi of abnormal appearance that may pose diagnostic challenges (Goodson 2010; Rademaker 2010; Salerni 2012). In the UK, clinical examination is typically done at two decision points – first in the general practice (GP) surgery, where a decision is made to refer or not to refer, and then a second time by a dermatologist or other secondary care clinician, where a decision is made to biopsy or not. Specialist advice can also be sought using teledermatology, where lesion images are forwarded with variable clinical information (such as age, gender, and location of lesion) to specialist clinics or to commercial organisations for interpretation. The accuracy of these diagnostic encounters (defined as the proportion of 'correct' diagnoses, i.e. true positive plus true negative diagnoses out of the total number of diagnoses) is known to vary according to qualifications and experience (Morton 1998; Westerhoff 2000); the accuracy of ‘image‐based’ as opposed to face‐to‐face diagnosis is less clear.
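The definition of accuracy given above (true positive plus true negative diagnoses out of the total number of diagnoses) can be written out as a small worked example. The function name `overall_accuracy` is a hypothetical helper; the counts are the per‐1000‐lesion figures at 9% prevalence from the 'Summary of findings' table for two in‐person settings.

```python
def overall_accuracy(tp, fp, fn, tn):
    """Proportion of correct diagnoses: (TP + TN) / all diagnoses."""
    return (tp + tn) / (tp + fp + fn + tn)


# Counts per 1000 lesions at 9% prevalence ('Summary of findings' table)
limited_prior_testing = overall_accuracy(tp=83, fp=185, fn=7, tn=725)
referred_for_excision = overall_accuracy(tp=69, fp=39, fn=21, tn=871)

print(f"Limited prior testing: {limited_prior_testing:.3f}")
print(f"Referred, selected for excision: {referred_for_excision:.3f}")
```

On these figures the referred setting has the higher overall proportion of correct diagnoses even though it misses more melanomas (21 versus 7 per 1000), which illustrates why sensitivity and specificity are reported separately rather than as a single accuracy figure.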

Research into the cognitive processes involved in dermatological diagnoses suggests that two main strategies are employed simultaneously and iteratively (Elstein 2002; Norman 1989; Norman 2009). Non‐analytical pattern recognition formulates an initial hypothesis; identification is made implicitly, without conscious thought or reference to specific rules and hidden from the conscious view of the diagnostician (Norman 2009). Analytical pattern recognition, using more explicit rules based on conscious analytical reasoning, is then employed to test the initial hypothesis. Analytical pattern recognition has been described as the “careful and systematic gathering of data and weighing the elicited information against mental rules” (Norman 2009). The balance between non‐analytical and analytical reasoning varies between clinicians, according to factors such as experience and familiarity with the diagnostic question.

Various attempts have been made to formalise the 'mental rules' involved in analytical pattern recognition for melanoma, ranging from setting out criteria that should be considered (e.g. ‘pattern analysis’; Friedman 1985; Sober 1979) to formal scoring systems with explicit numerical thresholds (MacKie 1985; MacKie 1990). The most commonly used algorithms are described in detail in Appendix 4.

The ABCD (asymmetry, border irregularity, colour variegation, diameter > 6 mm) algorithm of clinical warning signs was developed in 1985 to help distinguish melanoma from a benign naevus (Friedman 1985), and then extended to include an E for 'enlargement' criterion (Thomas 1998). As a result of its simplicity, ABCD(E) is now widely advocated for use by non‐experts or lay persons (American Academy of Dermatology 2015). The approach has been criticised for its inability to capture nodular and amelanotic melanomas, which account for a relatively small proportion (~15% to 20%) of incident melanomas but a large proportion (~50%) of melanoma‐related deaths (Moreau 2013; Shaikh 2012). In addition, up to a third of melanomas may be smaller than 6 mm in diameter (Maley 2014), a proportion which is likely to increase due to improved skin surveillance. The validity of ABCD(E) as a useful tool for the lay public has also been called into question (Aldridge 2011a; Girardi 2006; Liu 2005). Subsequent modifications have been suggested, including altering the meaning of the ABCD acronym for use in paediatric populations (Cordoro 2013); changing 'D' to 'dark' (Goldsmith 2014); or changing the acronym altogether (e.g. CCC for colour, contour, and change (Moynihan 1994); or "Do UC" the melanoma for different, uneven, changing (Yagerman 2014)). To date, the latter three have not been evaluated in populations with lesions suggestive of melanoma.

The seven‐point checklist assessing change in size, shape, colour, inflammation, crusting or bleeding, sensory change, or diameter of 7 mm or more was developed by UK researchers as a guide to help non‐dermatologists detect possible melanoma (MacKie 1985; MacKie 1990). The revised, weighted version (MacKie 1990), is currently recommended for GP use in the evaluation of pigmented lesions (NICE 2015a). A primary care‐based evaluation found moderately good performance for the identification of clinically significant lesions (including malignant and premalignant lesions as disease‐positive) in primary care (sensitivity and specificity for the presence of at least three features were 62.7% and 65.0%, respectively), with higher sensitivity for the detection of melanoma (80.6%) at the expense of low specificity (61.7%) (Walter 2013).

Unlike most formalised rules, the 'ugly duckling' sign is based on differential pattern recognition, where abnormal lesion identification is achieved by noticing the odd one out, that is, a melanoma will be the pigmented lesion that does not match the rest of a person's naevi, for example a very dark or pale/pink lesion that is different in colour compared to the rest of the pigmented naevi (Grob 1998). Although 'ugly duckling' is inherently a form of subjective pattern recognition, sensitivity has been reported to be 100% for pigmented‐lesion experts and 85% for non‐clinicians (Scope 2008). The assumption that an individual has a "normal" naevus phenotype is debatable, however. Many individuals have multiple 'atypical' pigmented lesions which, although very similar morphologically, allow malignancy to easily disguise itself amidst an abnormal complex of pigmented lesions (also referred to as ‘The Little Red Riding Hood’ phenomenon) (Mascaro 1998).

Clinical pathway

The diagnosis of melanoma can take place in primary, secondary, and tertiary care settings by both generalist and specialist healthcare providers. In the UK, people with concerns about a new or changing lesion will usually present first to their GP or, less commonly, directly to a specialist in secondary care, which could include a dermatologist, plastic surgeon, general surgeon or other specialist surgeon (such as an ear, nose, and throat (ENT) specialist or maxillofacial surgeon), or ophthalmologist (Figure 2). Current UK guidelines recommend that all suspicious pigmented lesions presenting in primary care should be assessed by taking a clinical history and visual inspection using the seven‐point checklist (MacKie 1990); lesions suspected to be melanoma should be referred urgently for appropriate specialist assessment within two weeks (Chao 2013; Marsden 2010; NICE 2015b; SIGN 2017).

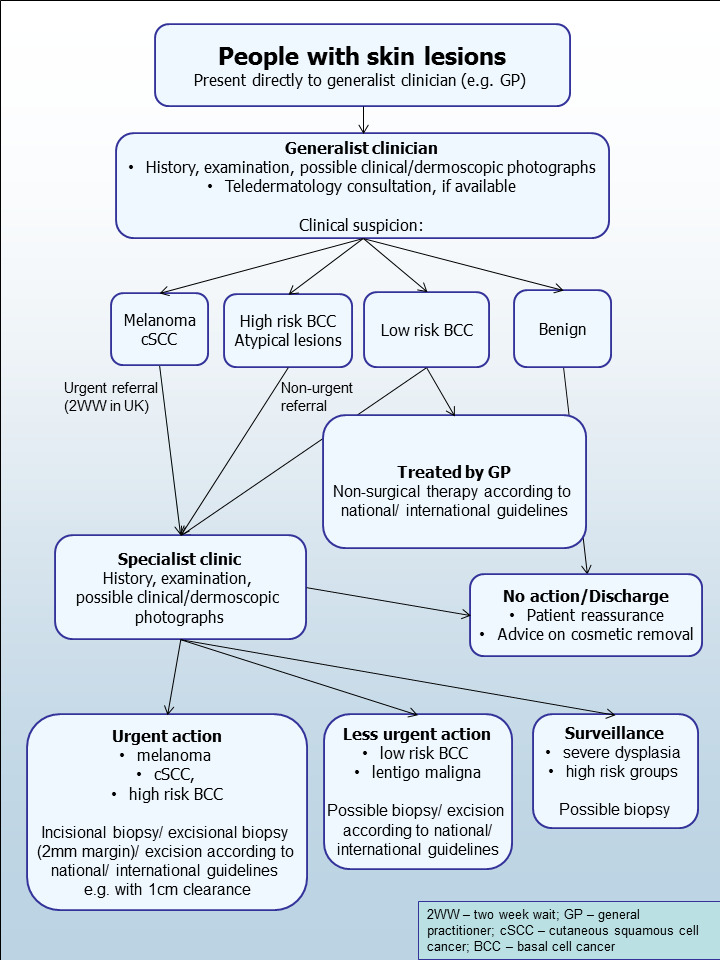

Figure 2. Current clinical pathway for people with skin lesions.

Teledermatology consultations can aid more appropriate triage of lesions into urgent referral; non‐urgent secondary care referral (e.g. for suspected basal cell carcinoma (BCC)); or where available, referral to an intermediate care setting, for example, clinics run by GPs with a special interest in dermatology. The distinction between setting and examiner qualifications and experience is important as specialist clinicians might work in primary care settings (for example, in the UK, GPs with a special interest in dermatology and skin surgery who have undergone appropriate training), and generalists might practise in secondary care settings (for example, plastic surgeons who do not specialise in skin cancer). The level of skill and experience in skin cancer diagnosis will vary for both generalist and specialist care providers and will also impact on test accuracy.

The specialist clinician will also use history‐taking and visual inspection of the lesion (in comparison with other lesions on the skin), usually in conjunction with dermoscopic examination, to inform a clinical decision. If melanoma is suspected, then urgent excision biopsy is recommended; for suspected cutaneous squamous cell carcinoma (cSCC), urgent excision with predetermined surgical margins is recommended. Other lesions such as BCC or pre‐malignant lesions such as lentigo maligna may also be referred for a diagnostic biopsy, followed by appropriate treatment or further surveillance or reassurance and discharge.

Prior test(s)

Although smartphone applications and community‐based teledermatology services can increasingly be directly accessed by people who have concerns about a skin lesion (Chuchu 2018), visual inspection of a suspicious lesion by a clinician is usually the first in a series of tests to diagnose skin cancer. In the UK, the first visual inspection of a suspicious lesion usually takes place in primary care; however, in some countries, people with suspicious lesions can present directly to a secondary care setting. Considering the degree of prior testing that study participants have undergone is key to interpretation of resulting test accuracy indices, which are known to vary according to the spectrum or case‐mix of included participants (Lachs 1992; Leeflang 2013; Moons 1997; Usher‐Smith 2016). Studies of people with suspicious lesions at the initial clinical presentation stage ('test‐naïve') are likely to have a wider range of differential diagnoses and include a higher proportion of people with benign diagnoses compared with studies of participants who have been referred for a specialist opinion on the basis of visual inspection (with or without dermoscopy) by a generalist practitioner. Furthermore, studies in more specialist settings may focus on equivocal or difficult‐to‐diagnose lesions, rather than lesions with a more general level of clinical suspicion. A simple categorisation of studies according to primary, secondary, or specialist setting may not always adequately reflect differences in spectrum.

Role of index test(s)

Visual inspection and history‐taking are key to diagnosing skin cancer and are always undertaken as part of a clinical examination regardless of examiner experience and whatever additional technologies are available. For the generalist practitioner, the key is to minimise the proportion of people who are referred unnecessarily and identify those lesions that require urgent referral. For the specialist, the aim is not only to identify those in need of urgent excision due to invasive cancer, but also to identify high‐risk lesions, with considerable potential to progress to invasive disease, such as those with severe dysplasia or in situ disease, for example, lentigo maligna. Given differences in setting, prior testing, observer qualifications, experience and training, the anticipated performance in terms of accuracy is likely to vary.

When diagnosing potentially life‐threatening conditions such as melanoma, the consequences of falsely reassuring a person that they do not have skin cancer can be serious and potentially fatal, as the resulting delay to diagnosis means that the window for successful early treatment may be missed. To minimise these false‐negative diagnoses, a good diagnostic test will demonstrate high sensitivity and a high negative predictive value (NPV), where very few of those with a negative test result will actually have a melanoma. Giving falsely positive test results (meaning the test has poor specificity and a high false‐positive rate) resulting in the removal of lesions that turn out to be benign is arguably less of an error than missing a potentially fatal melanoma, but is not cost free. False‐positive diagnoses not only cause unnecessary scarring from the biopsy or excision procedure, but also increase patient anxiety whilst they await the definitive histology results and increase healthcare costs as the number needed to remove to yield one melanoma diagnosis increases.
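
The accuracy measures named above can all be derived from a single 2x2 table. The following is a minimal sketch for illustration only: the class and function names are our own, and the counts are hypothetical rather than taken from any included study.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Counts from a 2x2 diagnostic table (lesion-level)."""
    tp: int  # melanomas correctly called positive
    fp: int  # benign lesions called positive
    fn: int  # melanomas missed (called negative)
    tn: int  # benign lesions correctly called negative


def sensitivity(t: TwoByTwo) -> float:
    # Proportion of melanomas that test positive
    return t.tp / (t.tp + t.fn)


def specificity(t: TwoByTwo) -> float:
    # Proportion of benign lesions that test negative
    return t.tn / (t.tn + t.fp)


def negative_predictive_value(t: TwoByTwo) -> float:
    # Proportion of negative results that are truly benign
    return t.tn / (t.tn + t.fn)


def number_needed_to_remove(t: TwoByTwo) -> float:
    # Test-positive lesions removed per melanoma found
    return (t.tp + t.fp) / t.tp


# Hypothetical counts, chosen only to make the arithmetic easy to follow
table = TwoByTwo(tp=45, fp=180, fn=5, tn=770)
print(f"sensitivity = {sensitivity(table):.3f}")
print(f"specificity = {specificity(table):.3f}")
print(f"NPV         = {negative_predictive_value(table):.3f}")
print(f"NNR         = {number_needed_to_remove(table):.1f}")
```

In this hypothetical table a fall in specificity raises the false-positive count and therefore the number needed to remove, while sensitivity and the false-negative count are unchanged.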

Alternative test(s)

We have reviewed a number of other tests as part of our series of Cochrane diagnostic test accuracy (DTA) reviews on the diagnosis of melanoma. In particular, dermoscopy has become an essential tool for the specialist clinician and is increasingly being taken up in primary care settings. Dermoscopy (also referred to as dermatoscopy or epiluminescence microscopy or ELM) uses a hand‐held microscope and incident light (with or without oil immersion) to reveal subsurface images of the skin at increased magnification of x 10 to x 100 (Kittler 2011). Used alongside clinical examination, dermoscopy has been shown in some studies to increase the sensitivity of clinical diagnosis of melanoma from around 60% to as much as 90% (Bono 2006; Carli 2002a; Kittler 1999; Stanganelli 2000) with much smaller effects in others (Benelli 1999; Bono 2002a). The accuracy of dermoscopy depends on the experience of the examiner (Kittler 2011), with accuracy when used by untrained or less experienced examiners potentially no better than clinical inspection alone (Binder 1997; Kittler 2002).

Pattern analysis (Pehamberger 1993; Steiner 1987) is thought to be the most specific and reliable technique to aid dermoscopy interpretation when used by specialists (Maley 2014); however, dermoscopic histological correlations have been established and diagnostic algorithms developed based on colour, aspect, pigmentation pattern, and skin vessels (e.g. the ABCD rule for dermoscopy (Nachbar 1994; Stolz 1994), the Menzies approach (Menzies 1996), and the seven‐point dermoscopy checklist (Annessi 2007; Argenziano 1998; Argenziano 2001; Gereli 2010; amongst others)). Dermoscopy used in addition to visual inspection (in‐person evaluations) or used alone (dermoscopic image interpretation remotely from the patient concerned) is the subject of a separate systematic review (Dinnes 2018).

Other relevant tests that we have looked at as part of this series of reviews include teledermatology, mobile phone applications, reflectance confocal microscopy, optical coherence tomography, computer‐assisted diagnosis or artificial intelligence‐based techniques, and high‐frequency ultrasound (Dinnes 2015a). Evidence permitting, we will compare the accuracy of available tests in an overview review, exploiting within‐study comparisons of tests and allowing the analysis and comparison of commonly used diagnostic strategies where tests may be used singly or in combination.

We also considered and excluded a number of tests from review, including tests used in the context of monitoring people, such as total body photography of those with large numbers of typical or atypical naevi, and finally, histopathological confirmation following lesion excision. The latter is the established reference standard for melanoma diagnosis and will be one of the standards against which we evaluate the index tests in these reviews.

Rationale

Our series of reviews of diagnostic tests used to assist clinical diagnosis in either clinical practice or in a research setting aims to identify the most accurate approaches to diagnosis and to provide clinical and policy decision‐makers with the highest possible standard of evidence on which to base diagnostic and treatment decisions. With increasing rates of melanoma and a trend to adopt the use of dermoscopy and other high‐resolution image analysis in primary care, the anxiety around missing early cases needs to be balanced against the risk of over‐referrals, to avoid sending too many people with benign lesions for a specialist opinion. It is questionable whether all skin cancers picked up by sophisticated techniques contribute to morbidity and mortality or whether newer technologies run the risk of increasing false‐positive diagnoses. It is also possible that use of some technologies, for example, widespread use of dermoscopy in primary care with no training, could actually result in harm by missing melanomas if they are used as replacement technologies for traditional history‐taking and clinical examination of the entire skin. Many branches of medicine have noted the danger of such "gizmo idolatry" amongst doctors (Leff 2008). The trend toward remote interpretation of dermatology images (whether clinical or dermoscopic images) and the use of remote technologies that do not involve clinicians without substantive evidence could further disrupt clinical pathways and healthcare payments as they may attract custom from the worried well, leaving an ever decreasing pool of qualified doctors to pick up any resulting problems.

There are few available systematic reviews in the field. The literature searches for the most comprehensive systematic reviews of visual inspection were carried out up to 2007 (Vestergaard 2008) or are focused on specific clinical questions, for example, specific healthcare professionals (Corbo 2012 including only direct comparisons of the accuracy of primary care physicians versus dermatologists, and Loescher 2011 reviewing the skin cancer detection skills of advanced practice nurses) or settings (Herschorn 2012 including direct comparisons of visual inspection versus dermoscopy in primary care). More recently, Harrington and colleagues (Harrington 2017) published a systematic review of clinical prediction rules (or published algorithms) used to assist the diagnosis of melanoma; however, the requirement for a clinical prediction rule does not allow comparison of accuracy with and without the use of an algorithm.

The critical question about the accuracy of visual inspection alone and the impact of examiner, prior patient testing, underlying risk status, and the use of images for diagnosis needs to be answered before the potential contribution of additional diagnostic tests can be set in context and appropriately placed in the diagnostic pathway.

This review follows a generic protocol that covers the full series of Cochrane DTA reviews for the diagnosis of melanoma (Dinnes 2015a). The Background and Methods sections of this review therefore use some text that was originally published in the protocol (Dinnes 2015a) and text that overlaps some of our other reviews (Dinnes 2018).

Objectives

To determine the diagnostic accuracy of visual inspection for the detection of cutaneous invasive melanoma and atypical intraepidermal melanocytic variants in adults.

Accuracy was estimated separately according to the prior testing undergone by study participants:

those with limited prior testing, that is, primary presentation; and

those referred for further evaluation of a suspicious lesion, that is, referred participants.

Accuracy was also estimated separately according to whether the diagnosis was recorded based on a face‐to‐face (in‐person) encounter or based on remote (image‐based) assessment.

Secondary objectives

For the identification of cutaneous invasive melanoma and atypical intraepidermal melanocytic variants:

to determine the diagnostic accuracy of individual algorithms used to assist visual inspection; and

to determine the effect of observer experience on diagnostic accuracy.

For the alternative definitions of the target condition:

to determine the diagnostic accuracy of visual inspection for the detection of invasive melanoma alone in adults;

to determine the diagnostic accuracy of visual inspection for the detection of any skin cancer or skin lesion with a high risk of progression to melanoma in adults (i.e. requiring excision).

Investigation of sources of heterogeneity

We set out to address a range of potential sources of heterogeneity for investigation across our series of reviews, as outlined in our generic protocol (Dinnes 2015a) and described in Appendix 5; however, our ability to investigate these was necessarily limited by the available data on each individual test reviewed.

The sources of heterogeneity that we investigated for visual inspection were:

in‐person versus image‐based evaluations;

study setting: primary, community or private care versus secondary versus specialist clinics;

use of a diagnostic algorithm: no algorithm reported versus any named algorithm used;

type of reference standard: histology alone versus histology plus clinical follow‐up or other reference standard; and

disease prevalence: ≤ 10% versus > 10%. We chose the 10% cut‐off based on advice from clinical co‐authors (RB, HW).

Methods

Criteria for considering studies for this review

Types of studies

We included test accuracy studies that allow comparison of the result of the index test with that of a reference standard, including the following:

studies where all participants receive a single index test and a reference standard;

studies where all participants receive more than one index test and a reference standard;

studies where participants are allocated (by any method) to receive different index tests or combinations of index tests and all receive a reference standard (between‐person comparative studies (BPC));

studies that recruit series of participants unselected by true disease status (referred to as case series for the purposes of this review);

diagnostic case‐control studies that separately recruit diseased and non‐diseased groups (see Rutjes 2005); however, we did not include studies that compared results for malignant lesions to those for healthy skin (i.e. with no lesion present);

both prospective and retrospective studies; and

studies where previously acquired clinical or dermoscopic images were retrieved and prospectively interpreted for study purposes.

We excluded studies from which we could not extract 2x2 contingency data or if they included fewer than five melanoma cases or fewer than five benign lesions. The size threshold of five is arbitrary. However, such small studies are unlikely to add precision to the estimate of accuracy.

Studies available only as conference abstracts were excluded; however, attempts were made to identify full papers for potentially relevant conference abstracts (Searching other resources).

Participants

We included studies in adults with pigmented skin lesions or lesions suspicious for melanoma or those at high risk of developing melanoma, including those with a family history or previous history of melanoma skin cancer, atypical or dysplastic naevus syndrome, or genetic cancer syndromes.

We excluded studies that recruited only participants with malignant or benign diagnoses.

We excluded studies conducted in children or that clearly reported inclusion of more than 50% of participants aged 16 and under.

Index tests

Studies reporting accuracy data for visual inspection alone, with either image‐based or in‐person diagnosis, were eligible for inclusion. For in‐person visual inspection, diagnosis is undertaken in a clinic setting with the patient present (face‐to‐face diagnosis). For these studies we assumed that patient history‐taking would have taken place and is likely to have contributed to lesion diagnosis; however, we did not specifically extract details of patient history‐taking due to anticipated poor reporting in the primary studies. For image‐based studies, diagnosis is based on clinical or ‘macro’ images (photographs), remotely from the study participant. For these studies, we extracted any additional patient information that was provided to assist diagnosis.

We included all established algorithms or checklists to assist diagnosis by visual inspection. We included studies developing new algorithms or methods of diagnosis (i.e. derivation studies) if they:

used a separate independent 'test set' of participants or images to evaluate the new approach; or

investigated lesion characteristics that had previously been suggested as associated with melanoma and the study reported accuracy based on the presence or absence of particular combinations of characteristics.

We excluded studies if they:

used a statistical model to produce a data‐driven equation, or algorithm based on multiple diagnostic features, with no separate test set;

used cross‐validation approaches such as 'leave‐one‐out' cross‐validation (Efron 1983);

evaluated the accuracy of the presence or absence of individual lesion characteristics or morphological features, with no overall diagnosis of malignancy;

reported accuracy data for ‘clinical diagnosis’ with no clear description as to whether the reported data related to visual inspection alone;

were based on the experience of a particular skin cancer clinic, where dermoscopy may or may not have been used on an individual patient‐basis.

Although primary care clinicians can in practice be specialists in skin cancer, we considered primary care physicians as generalist practitioners and dermatologists as specialists. Within each group, we extracted any reporting of special interest or accreditation in skin cancer.

Target conditions

We defined the primary target condition as the detection of:

any form of invasive cutaneous melanoma or atypical intraepidermal melanocytic variants (i.e. including melanoma in situ, or lentigo maligna, which has a risk of progression to invasive melanoma).

We considered two additional definitions of the target condition in secondary analyses, namely the detection of:

any form of invasive cutaneous melanoma alone;

any skin lesion requiring excision. This latter definition includes melanoma plus other forms of skin cancer, such as BCC and cSCC, as well as melanoma in situ, lentigo maligna, and lesions with severe melanocytic dysplasia.

The diagnosis of the keratinocyte skin cancers, BCC and cSCC, as primary target conditions is the subject of a separate series of reviews (Dinnes 2015b).

Reference standards

The ideal reference standard is histopathological diagnosis in all eligible lesions. A qualified pathologist or dermatopathologist should perform histopathology. Ideally, reporting should be standardised detailing a minimum dataset to include the histopathological features of melanoma to determine the American Joint Committee on Cancer (AJCC) Staging System (e.g. Slater 2014). We did not apply reporting of a minimum dataset as a necessary inclusion criterion, but extracted any pertinent information.

Partial verification (applying the reference test only to a subset of those undergoing the index test) was of concern given that lesion excision or biopsy are unlikely to be carried out for all benign‐appearing lesions within a representative population sample. Therefore, to reflect what happens in reality, we accepted clinical follow‐up of benign‐appearing lesions as an eligible reference standard, whilst recognising the risk of differential verification bias (as misclassification rates of histopathology and follow‐up will differ).

Additional eligible reference standards included cancer registry follow‐up and 'expert opinion' with no histology or clinical follow‐up. Cancer registry follow‐up is considered less desirable than active clinical follow‐up, as follow‐up is not carried out within the control of the study investigators. Furthermore, if participant‐based analyses as opposed to lesion‐based analyses are presented, it may be difficult to determine whether the detection of a malignant lesion during follow‐up is the same lesion that originally tested negative on the index test.

All of the above were considered eligible reference standards with the following caveats:

all study participants with a final diagnosis of the target disorder must have a histological diagnosis, either subsequent to the application of the index test or after a period of clinical follow‐up; and

at least 50% of all participants with benign lesions must have either a histological diagnosis or clinical follow‐up to confirm benignity.
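
The two caveats above amount to a simple eligibility check on each study's verification counts. The sketch below is illustrative only; the function name, argument names, and example counts are hypothetical and do not come from the review's data extraction form.

```python
def reference_standard_eligible(
    n_disease_positive: int,
    n_disease_positive_with_histology: int,
    n_benign: int,
    n_benign_verified: int,
) -> bool:
    """Apply the two caveats on eligible reference standards.

    n_benign_verified counts benign lesions confirmed by either
    histology or clinical follow-up.
    """
    # Caveat 1: every final diagnosis of the target disorder needs histology
    all_positives_have_histology = (
        n_disease_positive_with_histology == n_disease_positive
    )
    # Caveat 2: at least 50% of benign lesions need histology or follow-up
    enough_benign_verified = (
        n_benign > 0 and n_benign_verified / n_benign >= 0.5
    )
    return all_positives_have_histology and enough_benign_verified


# Hypothetical study: 20 melanomas (all with histology),
# 200 benign lesions of which 120 were excised or followed up
print(reference_standard_eligible(20, 20, 200, 120))  # True
# Same study, but only 80 of 200 benign lesions verified
print(reference_standard_eligible(20, 20, 200, 80))   # False
```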

Search methods for identification of studies

Electronic searches

The Information Specialist (SB) carried out a comprehensive search for published and unpublished studies. A single large literature search was conducted to cover all topics in the programme grant (see Appendix 1 for a summary of reviews included in the programme grant). This allowed for the screening of search results for potentially relevant papers for all reviews at the same time. A search combining disease related terms with terms related to the test names, using both text words and subject headings was formulated. The search strategy was designed to capture studies evaluating tests for the diagnosis or staging of skin cancer. As the majority of records were related to the searches for tests for staging of disease, a filter using terms related to cancer staging and to accuracy indices was applied to the staging test search, to try to eliminate irrelevant studies, for example, those using imaging tests to assess treatment effectiveness. A sample of 300 records that would be missed by applying this filter was screened and the filter adjusted to include potentially relevant studies. When piloted on MEDLINE, inclusion of the filter for the staging tests reduced the overall numbers by around 6000. The final search strategy, incorporating the filter, was subsequently applied to all bibliographic databases as listed below (Appendix 6). The final search result was cross‐checked against the list of studies included in five systematic reviews; our search identified all but one of the studies, and this study was not indexed on MEDLINE. The Information Specialist devised the search strategy, with input from the Information Specialist from Cochrane Skin. No additional limits were used.

We searched the following bibliographic databases to 29 August 2016 for relevant published studies:

MEDLINE via OVID (from 1946);

MEDLINE In‐Process & Other Non‐Indexed Citations via OVID; and

Embase via OVID (from 1980).

We searched the following bibliographic databases to 30 August 2016 for relevant published studies:

the Cochrane Central Register of Controlled Trials (CENTRAL; 2016, Issue 7) in the Cochrane Library;

the Cochrane Database of Systematic Reviews (CDSR; 2016, Issue 8) in the Cochrane Library;

Cochrane Database of Abstracts of Reviews of Effects (DARE; 2015, Issue 2);

CRD HTA (Health Technology Assessment) database, 2016, Issue 3; and

CINAHL (Cumulative Index to Nursing and Allied Health Literature via EBSCO from 1960).

We searched the following databases for relevant unpublished studies using a strategy based on the MEDLINE search:

CPCI (Conference Proceedings Citation Index), via Web of Science™ (from 1990; searched 28 August 2016); and

SCI Science Citation Index Expanded™ via Web of Science™ (from 1900, using the 'Proceedings and Meetings Abstracts' Limit function; searched 29 August 2016).

We searched the following trials registers using the search terms 'melanoma', 'squamous cell', 'basal cell' and 'skin cancer' combined with 'diagnosis':

Zetoc (from 1993; searched 28 August 2016).

The US National Institutes of Health Ongoing Trials Register (www.clinicaltrials.gov); searched 29 August 2016.

NIHR Clinical Research Network Portfolio Database (www.nihr.ac.uk/research‐and‐impact/nihr‐clinical‐research‐network‐portfolio/); searched 29 August 2016.

The World Health Organization International Clinical Trials Registry Platform (apps.who.int/trialsearch/); searched 29 August 2016.

We aimed to identify all relevant studies regardless of language or publication status (published, unpublished, in press, or in progress) and applied no date limits.

Searching other resources

We have included information about potentially relevant ongoing studies in the Characteristics of ongoing studies tables. We have screened relevant systematic reviews identified by the searches for their included primary studies, and included any missed by our searches. We have checked the reference lists of all included papers, and subject experts within the author team reviewed the final list of included studies. We did not conduct any citation searching.

Data collection and analysis

Selection of studies

At least one review author (JDi or NC) screened titles and abstracts, with any queries discussed and resolved by consensus. A pilot screen of 539 MEDLINE references showed good agreement (89% with a kappa of 0.77) between screeners. We included at initial screening primary test accuracy studies and test accuracy reviews (for scanning of reference lists) of any test used to investigate suspected melanoma, BCC, or cSCC. Both a clinical reviewer (from one of a team of twelve clinician reviewers) and a methodologist reviewer (JDi or NC) independently applied the inclusion criteria (Appendix 7) to all full‐text articles; disagreements were resolved by consensus or by a third party (JDe, CD, HW, and RM). We contacted authors of eligible studies when insufficient data were presented to allow for the construction of 2x2 contingency tables.
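
For readers unfamiliar with the kappa statistic reported for the pilot screen, the sketch below shows how raw agreement and Cohen's kappa are computed for two screeners making include/exclude decisions. The cell counts are hypothetical (the pilot screen's cross-tabulation is not reported here), so the output does not reproduce the 89% and 0.77 quoted above.

```python
def agreement_and_kappa(
    both_include: int, only_a: int, only_b: int, both_exclude: int
) -> tuple[float, float]:
    """Return (observed agreement, Cohen's kappa) for two screeners."""
    n = both_include + only_a + only_b + both_exclude
    observed = (both_include + both_exclude) / n
    # Marginal probability that each screener says "include"
    a_include = (both_include + only_a) / n
    b_include = (both_include + only_b) / n
    # Agreement expected by chance alone, from the marginals
    expected = a_include * b_include + (1 - a_include) * (1 - b_include)
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical cross-tabulation of 100 screened references
observed, kappa = agreement_and_kappa(
    both_include=40, only_a=5, only_b=5, both_exclude=50
)
print(f"observed agreement = {observed:.2f}, kappa = {kappa:.2f}")
```

Kappa is lower than raw agreement because it discounts the agreement two screeners would reach by chance given how often each one includes a reference.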

Data extraction and management

One clinical (as detailed above) and one methodologist reviewer (JDi, NC or LFR) independently extracted data concerning details of the study design, participants, index test(s) or test combinations and criteria for index test positivity, reference standards, and data required to populate a 2x2 diagnostic contingency table for each index test using a piloted data extraction form. We extracted data at all available index test thresholds. We resolved disagreements by consensus or by consulting a third party (JDe, CD, HW, and RM).

We contacted authors of included studies where information related to final lesion diagnoses or diagnostic thresholds was missing. In particular, invasive cSCC (included as disease‐positive for one of our secondary objectives) is not always differentiated from ‘in situ’ variants such as Bowen’s disease (which we did not consider as disease‐positive for any of our definitions of the target condition). We contacted authors of conference abstracts published from 2013 to 2015 to ask whether full data were available. If no full paper was identified, we marked conference abstracts as 'pending' and will revisit them in a future review update.

Dealing with multiple publications and companion papers

Where we identified multiple reports of a primary study, we maximised yield of information by collating all available data. Where there were inconsistencies in reporting or overlapping study populations, we contacted study authors for clarification in the first instance. If this contact with authors was unsuccessful, we used the most complete and up‐to‐date data source where possible.

Assessment of methodological quality

We assessed risk of bias and applicability of included studies using the QUADAS‐2 checklist (Whiting 2011), tailored to the review topic (see Appendix 8) and piloted it on a small number of included full‐text articles. One clinical (as detailed above) and one methodologist reviewer (JDi, NC or LFR) independently assessed quality for the remaining studies; we resolved any disagreement by consensus or by consulting a third party where necessary (JDe, CD, HW, and RM).

Statistical analysis and data synthesis

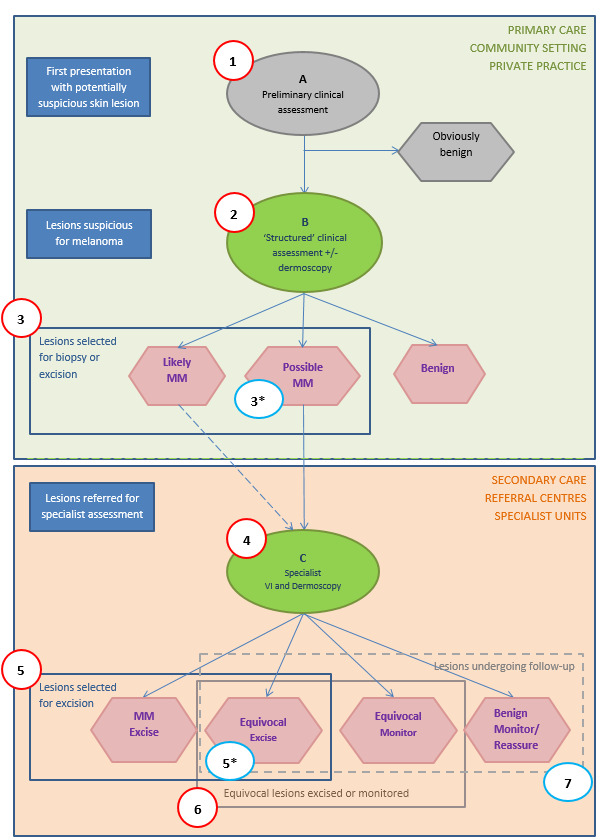

We conducted separate analyses according to the point that study participants reached in the clinical pathway (numbered from 1 to 7 in Figure 3), the clarity with which the pathway could be determined (clear or unclear), and the evaluation of in‐person versus image‐based diagnosis.

Figure 3. Clinical pathway

Our unit of analysis was the lesion rather than the participant. This is because firstly, in skin cancer, initial treatment is directed to the lesion rather than systemically (thus it is important to be able to correctly identify cancerous lesions for each person), and secondly, it is the most common way in which the primary studies reported data. Although there is a theoretical possibility of correlations of test errors when the same people contribute data for multiple lesions, most studies include very few people with multiple lesions and any potential impact on findings is likely to be very small, particularly in comparison with other concerns regarding risk of bias and applicability. Where an individual study assessed multiple algorithms, we selected datasets on the following preferential basis:

‘no algorithm’ reported; data presented for clinician’s overall diagnosis or management decision;

pattern analysis or pattern recognition;

ABCD algorithm (or derivatives of);

seven‐point checklist (also referred to as Glasgow/MacKie checklist).

Where multiple thresholds per algorithm were reported, we included the standard or most commonly used threshold. If data for multiple observers were reported, we used data for the most experienced observer, using single observer diagnosis in preference to a consensus or average across observers. If we were unable to choose a dataset based on the above ‘rules’, we made a random selection of one dataset per study. To allow comparisons of tests, we have included data on the accuracy of dermoscopy in a separate review in our series (Dinnes 2018).
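The selection rules above amount to an ordered set of preferences with a random tie-break. The following Python sketch is one illustrative reading of those rules, not the procedure actually used by the review team. The `Dataset` fields, the numeric experience scale, and the relative ordering of the observer rules are all assumptions made for the example.

```python
import random
from dataclasses import dataclass

# Preference order for algorithms as listed in the text (lower = preferred).
ALGORITHM_RANK = {
    "no algorithm": 0,
    "pattern analysis": 1,
    "ABCD": 2,
    "seven-point checklist": 3,
}


@dataclass(frozen=True)
class Dataset:
    # All field names are hypothetical and exist only for this illustration.
    study: str
    algorithm: str
    standard_threshold: bool  # True for the standard or most common threshold
    observer_experience: int  # larger = more experienced (assumed scale)
    single_observer: bool     # False for consensus or averaged diagnoses


def preference_key(d):
    """Smaller tuples are preferred; unknown algorithms rank last."""
    return (
        ALGORITHM_RANK.get(d.algorithm, len(ALGORITHM_RANK)),
        not d.standard_threshold,
        -d.observer_experience,
        not d.single_observer,
    )


def select_dataset(candidates, rng):
    """Pick one dataset per study; choose at random only if the rules tie."""
    best = min(preference_key(d) for d in candidates)
    tied = [d for d in candidates if preference_key(d) == best]
    return tied[0] if len(tied) == 1 else rng.choice(tied)


# Hypothetical candidate datasets from a single study.
candidates = [
    Dataset("Study A", "ABCD", True, 2, True),
    Dataset("Study A", "no algorithm", True, 1, False),
    Dataset("Study A", "no algorithm", True, 3, True),
]
chosen = select_dataset(candidates, random.Random(0))
```

Passing an explicit `random.Random` instance keeps any random selection reproducible, which may be useful when documenting which dataset was chosen.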

For each analysis, we plotted estimates of sensitivity and specificity on coupled forest plots and in receiver operating characteristic (ROC) space. For tests where commonly used thresholds were reported, we estimated summary operating points (summary sensitivities and specificities) with 95% confidence intervals and prediction regions using the bivariate hierarchical model (Chu 2006; Reitsma 2005). Where inadequate data were available for the model to converge, we simplified the model, first by assuming no correlation between estimates of sensitivity and specificity and secondly by setting estimates of near‐zero variance terms to zero (Takwoingi 2015). Where all studies reported 100% sensitivity (or 100% specificity), we summed the number with disease (or no disease) across studies and used it to compute a binomial exact 95% confidence interval.
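As a rough illustration of the final step, the pooled exact interval can be sketched in Python. The Clopper-Pearson construction is assumed here as the 'binomial exact' method, since the text does not name one, and the per-study counts are hypothetical.

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def bisect_decreasing(f, lo=0.0, hi=1.0, iters=100):
    """Root of a decreasing function f on [lo, hi], found by bisection."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) > 0:
            lo = mid  # root lies to the right of mid
        else:
            hi = mid
    return (lo + hi) / 2


def exact_ci(x, n, level=0.95):
    """Clopper-Pearson interval for x events out of n (assumed method)."""
    alpha = 1 - level
    # Lower limit solves P(X >= x | p) = alpha / 2.
    lower = 0.0 if x == 0 else bisect_decreasing(
        lambda p: alpha / 2 - (1 - binom_cdf(x - 1, n, p))
    )
    # Upper limit solves P(X <= x | p) = alpha / 2.
    upper = 1.0 if x == n else bisect_decreasing(
        lambda p: binom_cdf(x, n, p) - alpha / 2
    )
    return lower, upper


# Hypothetical example: three studies, each reporting 100% sensitivity.
diseased_per_study = [12, 8, 10]
n_total = sum(diseased_per_study)          # pooled number with disease
lower, upper = exact_ci(n_total, n_total)  # every diseased lesion detected
```

When every case is detected, the lower limit has the closed form `(alpha / 2) ** (1 / n)`, so the interval narrows only slowly as the pooled number with disease grows.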

For computation of likely numbers of true‐positive, false‐positive, false‐negative and true‐negative findings in the 'Summary of findings' tables, we applied these indicative values to the lower quartile, median and upper quartile of the prevalence observed in the study groups. We have reported these numbers for the average operating point on the SROC curve in 'Summary of findings' tables.
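The arithmetic behind such tables can be sketched as follows. This is a minimal illustration that assumes a hypothetical cohort of 1000 lesions and made-up values for sensitivity, specificity and prevalence. None of the numbers are results from this review.

```python
def expected_counts(sensitivity, specificity, prevalence, cohort=1000):
    """Expected 2x2 cell counts for a cohort of the given (assumed) size."""
    diseased = prevalence * cohort
    non_diseased = cohort - diseased
    tp = sensitivity * diseased      # diseased lesions correctly detected
    fn = diseased - tp               # diseased lesions missed
    tn = specificity * non_diseased  # benign lesions correctly ruled out
    fp = non_diseased - tn           # benign lesions wrongly flagged
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn}


# Hypothetical operating point and prevalence quartiles.
summary_sensitivity = 0.90
summary_specificity = 0.80
prevalences = {"lower quartile": 0.05, "median": 0.10, "upper quartile": 0.20}

table = {
    label: expected_counts(summary_sensitivity, summary_specificity, prev)
    for label, prev in prevalences.items()
}
```

Holding sensitivity and specificity fixed while varying prevalence shows how the balance of false positives to false negatives shifts between low- and high-prevalence settings.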

Investigations of heterogeneity

We investigated heterogeneity, and made comparisons between algorithms and according to observer experience, by comparing summary ROC curves using the hierarchical summary receiver operating characteristic (HSROC) model (Rutter 2001). HSROC curves allow incorporation of data at different thresholds and from different algorithms or checklists. We used an HSROC model that assumed a constant SROC shape between tests and subgroups, but allowed for differences in threshold and accuracy by addition of covariates. We assessed the significance of the differences between tests or subgroups by the likelihood ratio test assessing differences in both accuracy and threshold, and by a Wald test on the parameter estimate testing for differences in accuracy alone. We fitted simpler models when convergence was not achieved due to small numbers of studies, first assuming symmetric SROC curves (setting the shape term to zero), and then setting random‐effects variance estimates to zero. We have presented estimates of accuracy from HSROC models as diagnostic odds ratios (DORs) (estimated where the SROC curve crosses the sensitivity = specificity line) with 95% confidence intervals. We have presented differences between tests and subgroups from HSROC analyses as relative diagnostic odds ratios (RDORs) with 95% confidence intervals.
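To make the reported quantities concrete, the following sketch shows the arithmetic linking sensitivity, specificity, the DOR and the RDOR. It does not fit an HSROC model. The function names and input values are hypothetical and serve only to illustrate the definitions.

```python
from math import sqrt


def dor(sensitivity, specificity):
    """Diagnostic odds ratio: odds of a positive test in the diseased
    divided by the odds of a positive test in the non-diseased."""
    return (sensitivity / (1 - sensitivity)) * (specificity / (1 - specificity))


def q_point(dor_value):
    """Sensitivity (equal to specificity) at the point where the curve
    crosses the sensitivity = specificity line, given the DOR there."""
    root = sqrt(dor_value)
    return root / (1 + root)


def rdor(dor_a, dor_b):
    """Relative DOR of test or subgroup A versus B; 1 means no difference."""
    return dor_a / dor_b


# Hypothetical values for two tests at the sensitivity = specificity point.
dor_a = dor(0.80, 0.80)
dor_b = dor(0.75, 0.75)
relative = rdor(dor_a, dor_b)
```

At the point where sensitivity equals specificity, the DOR reduces to the squared odds of a correct result, which is why `q_point` can recover that shared value from the DOR alone.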

We fitted bivariate models using the xtmelogit command in STATA 15 and HSROC models using the NLMIXED procedure in the SAS statistical software package (SAS 2012; version 9.3; SAS Institute, Cary, NC, USA) and the metadas macro (Takwoingi 2010).

Sensitivity analyses

We planned sensitivity analyses, restricting analyses to studies at the least risk of bias; however, these were not carried out due to insufficient study numbers.

Assessment of reporting bias

Because of uncertainty about the determinants of publication bias for diagnostic accuracy studies and the inadequacy of tests for detecting funnel plot asymmetry (Deeks 2005), we did not perform tests to detect publication bias.

Results

Results of the search

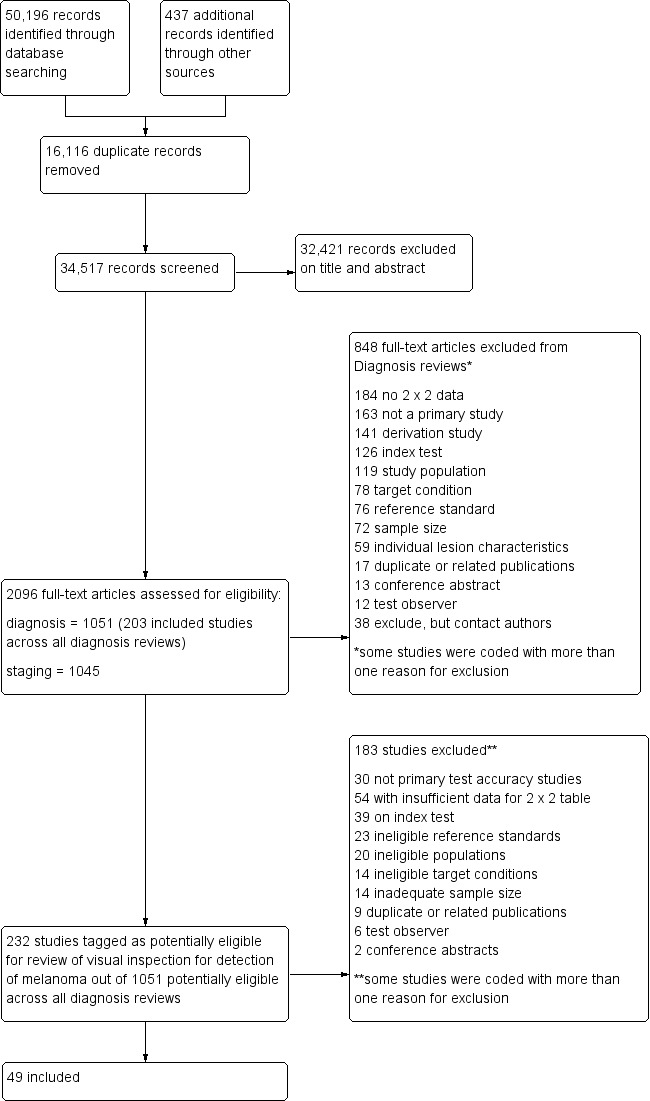

The Information Specialist identified a total of 34,517 unique references and we screened them for inclusion. Of these, we reviewed 1051 full‐text papers for eligibility for any one of the suite of reviews of tests to assist in the diagnosis of melanoma or keratinocyte skin cancer. Of the 1051 full‐text papers assessed, we excluded 848 from all reviews in our series (see Figure 4; PRISMA flow diagram of search and eligibility results).

Figure 4. PRISMA flow diagram.

Of the 232 studies tagged as potentially eligible for this review of visual inspection, we included 49 publications, reporting 49 individual studies. Exclusions were mainly due to the inability to construct a 2×2 contingency table based on the data presented (n = 54); the use of ineligible index tests (n = 39) (for example: reporting of data for visual inspection and dermoscopy only (n = 12), reporting of data for ‘clinical diagnosis’ (n = 11), or for serial use of the index test in a follow‐up context (n = 7)); or not meeting our requirements for an eligible reference standard (n = 23). Other reasons for exclusion included ineligible study populations (n = 20) (for example, recruiting only malignant or only benign lesions (n = 18)), inadequate sample size (n = 14), ineligible definition of the target condition (n = 14) or test interpretation by medical students or laypeople (n = 6). A list of the 183 publications excluded from this review, with reasons for exclusion, is provided in Characteristics of excluded studies; a list of all studies excluded from the full series of reviews is available as a separate pdf (please contact skin.cochrane.org for a copy of the pdf).

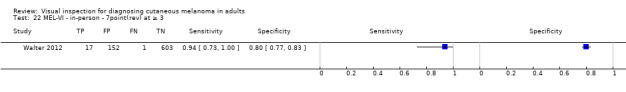

We contacted the authors of 14 publications for the purposes of this review of visual inspection and, to date, have received responses about seven publications. One response allowed the inclusion of the study in the review (Walter 2012); five provided clarifications on the methods used in included studies (Bono 2006; Bourne 2012; Rosendahl 2011; Stanganelli 2000; Walter 2012); one replied with the information needed but the two studies could not be included due to the evaluation of ‘clinical diagnosis’ (Youl 2007a; Youl 2007b); and five replied but were not able to provide the information requested in relation to eight study publications, one of which we could still include (Menzies 2009) and seven we could not (Fabbrocini 2008; Freeman 1963; Heal 2008; Menzies 2009; Warshaw 2009a; Warshaw 2009b; Warshaw 2010).

The 49 included study publications report on a total of 51 cohorts of lesions and 134 datasets with 34,351 lesions and 2499 malignancies. The total number of study participants with suspicious lesions cannot be estimated due to lack of reporting in study publications. Two thirds of studies (n = 32; 65%) also reported accuracy data for diagnosis using dermoscopy; these comparisons are reported in Dinnes 2018. Seven studies reported data for additional tests including teledermatology (n = 1) and computer‐assisted diagnosis techniques (n = 6).

Methodological quality of included studies

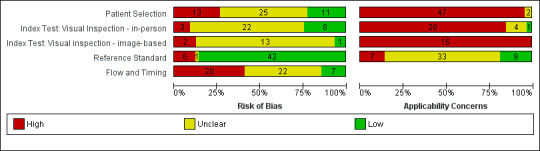

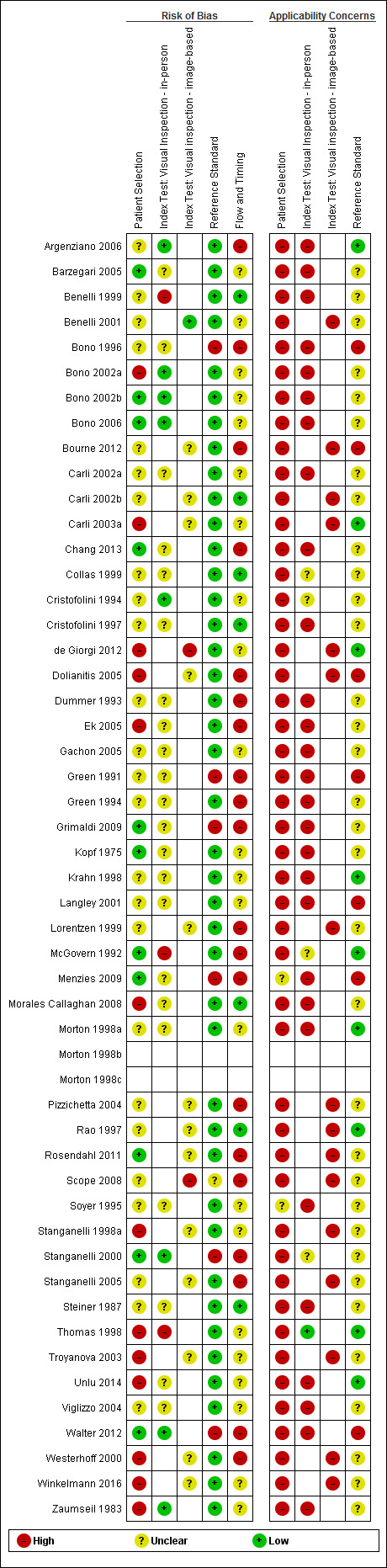

We have summarised the overall methodological quality of all included studies (n = 49) in Figure 5 and Figure 6.

Figure 5. Risk of bias and applicability concerns graph: review authors' judgements about each domain presented as percentages across included studies

Figure 6. Risk of bias and applicability concerns summary: review authors' judgements about each domain for each included study

The majority of study reports provided insufficient information across almost all study quality domains to allow us to judge the risk of bias, while we scored applicability of study findings as of ‘High’ concern in three of four domains assessed.

Participant selection

We judged only 22% of studies (n = 11) at low risk of bias for participant selection and 27% (n = 13) at high risk of bias. Ten studies (20%) either used a case‐control type design with separate selection of melanoma cases and lesions with benign diagnoses (n = 6) or did not clearly describe the study design used (n = 5). Over half (55%; n = 27) reported random or consecutive participant recruitment; the remaining 45% did not describe recruitment methods. Over half of studies (53%) did not describe whether they had applied any exclusion criteria and we judged them at unclear risk of bias. Seven studies (14%) applied inappropriate participant exclusions, excluding ‘difficult to diagnose’ lesions such as awkwardly located lesions (Bono 2002a; Morales Callaghan 2008; Unlu 2014); those with disagreement on histopathology (de Giorgi 2012; Ek 2005; Zaumseil 1983); or dermoscopically ‘peculiar’ lesions (Carli 2003a).

We considered almost all cohorts (96%; n = 47) at high concern for applicability of participants. In the majority of cases (n = 41), high concern was due to restricted study populations: inclusion of only melanocytic (n = 10) or amelanotic (n = 1) lesions; restriction by lesion diameter (Bono 2002b; Bono 2006; Steiner 1987); or, most commonly, inclusion of lesions selected for excision based on the clinical or dermoscopic diagnosis or selected retrospectively from histopathology databases (n = 37). We judged only four cohorts to have included a representative patient population (Grimaldi 2009; Menzies 2009; Stanganelli 2000; Walter 2012). Fourteen cohorts also included multiple lesions per participant, with only eight clearly including a similar number of participants and lesions (Bono 2002a; Bono 2002b; Bono 2006; Bourne 2012; Collas 1999; Krahn 1998; Pizzichetta 2004; Unlu 2014).

Index test

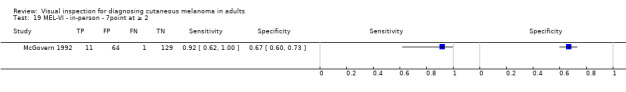

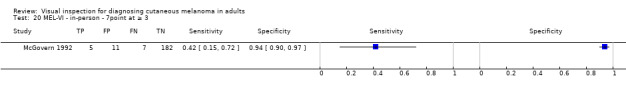

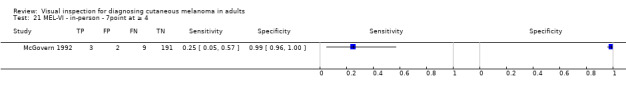

For the index test domain, we considered studies separately according to whether they reported in‐person evaluations of visual inspection (n = 33) or evaluations based on interpretation of clinical images (image‐based evaluations; n = 16). For the in‐person evaluations, we judged 24% (n = 8) at low risk of bias, and 9% (n = 3) at high risk; 22 (67%) did not provide sufficient information to allow us to judge the risk of bias fully. We considered that all studies made the diagnosis blinded to the reference standard result; 24% (n = 8) also clearly reported pre‐specification of the diagnostic threshold, five of the eight using named algorithms (Argenziano 2006; Cristofolini 1994; Stanganelli 2000; Walter 2012; Zaumseil 1983) and three by the same author team (Bono 2002a; Bono 2002b; Bono 2006) describing the process by which they had reached the diagnosis. Three studies developed new algorithms (Thomas 1998) or evaluated multiple thresholds for test positivity (Benelli 2001; McGovern 1992). Reporting was poorer for the image‐based evaluations, with over three quarters of studies (n = 13) not providing sufficient information to allow us to judge the risk of bias fully, one study (6%) judged at low risk of bias and two (12%) at high risk. Again, we considered that all the studies had made the diagnosis blinded to the reference standard result, with one prospectively testing two pre‐specified diagnostic thresholds (Benelli 2001) and two (de Giorgi 2012; Scope 2008) testing multiple diagnostic thresholds.

We recorded high concern for the applicability of the index tests for 85% (n = 28) of in‐person evaluations. High concern was primarily due to a lack of description of the diagnostic thresholds used (n = 24), but also resulted from presentation of average (Argenziano 2006) or consensus diagnoses (Barzegari 2005; Benelli 1999; Carli 2002a; Cristofolini 1997; Morales Callaghan 2008; Steiner 1987) as opposed to the diagnosis of a single observer. We also judged two studies to have reported diagnosis by non‐expert observers (Menzies 2009; Walter 2012), both of which reported diagnoses by large groups of primary care practitioners. In reality, specific expertise in diagnosing pigmented lesions does vary amongst such examiners: Menzies 2009 required a history of excision or referral of at least 10 pigmented skin lesions over the previous 12‐month period but excluded those already using dermoscopy or digital monitoring of lesions, and Walter 2012 excluded those with specialist dermatology training but reported some training in dermatology for almost a quarter of participating GPs. We judged almost three quarters of studies (n = 24) to have applied and interpreted the ‘test’ in a clinically applicable manner; nine (27%) provided sufficient detail of the threshold used and 11 (33%) described the observers as expert or experienced. All image‐based studies were of high concern for applicability, due to the image‐based nature of interpretation limiting the clinical applicability of findings but also the lack of detail on the thresholds used (n = 13). A higher proportion (62%; n = 10) described the observers as expert or experienced.

Reference standard