Abstract

Daily life often requires the coordination of our actions with those of another partner. After 50 years (1968–2018) of behavioral neurophysiology of motor control, the neural mechanisms that allow such coordination in primates are unknown. We studied this issue by recording cell activity simultaneously from dorsal premotor cortex (PMd) of two male interacting monkeys trained to coordinate their hand forces to achieve a common goal. We found a population of “joint-action cells” that discharged preferentially when monkeys cooperated in the task. This modulation was predictive in nature, because in most cells neural activity led in time the changes of the “own” and of the “other” behavior. These neurons encoded the joint-performance more accurately than “canonical action-related cells”, activated by the action per se, regardless of the individual versus interactive context. A decoding of joint-action was obtained by combining the two brains' activities, using cells with directional properties distinguished from those associated to the “solo” behaviors. Action observation-related activity studied when one monkey observed the consequences of the partner's behavior, i.e., the cursor's motion on the screen, did not sharpen the accuracy of joint-action cells' representation, suggesting that it plays no major role in encoding joint-action. When monkeys performed with a non-interactive partner, such as a computer, joint-action cells' representation of the other (non-cooperative) behavior was significantly degraded. These findings provide evidence of how premotor neurons integrate the time-varying representation of the self-action with that of a co-actor, thus offering a neural substrate for successful visuomotor coordination between individuals.

SIGNIFICANCE STATEMENT The neural bases of intersubject motor coordination were studied by recording cell activity simultaneously from the frontal cortex of two interacting monkeys, trained to coordinate their hand forces to achieve a common goal. We found a new class of cells, preferentially active when the monkeys cooperated, rather than when the same action was performed individually. These “joint-action neurons” offered a neural representation of joint-behaviors by far more accurate than that provided by the “canonical action-related cells”, modulated by the action per se regardless of the individual/interactive context. A neural representation of joint-performance was obtained by combining the activity recorded from the two brains. Our findings offer the first evidence concerning neural mechanisms subtending interactive visuomotor coordination between co-acting agents.

Keywords: interpersonal coordination, joint-action, monkeys, motor systems, premotor cortex, social interactions

Introduction

The advent of the analysis of single-cell activity in the brain of behaving monkeys, now 50 years ago (Evarts, 1968; Mountcastle et al., 1975), has dramatically advanced the study of the neural control of movement, extending it to complex forms of motor cognition. This approach undoubtedly expanded our understanding of the way the cerebral cortex encodes action at different levels of complexity (for review, see Caminiti et al., 2017). However, it has so far provided a “solipsistic” representation of action in different neural centers, because all studies have been performed in monkeys engaged in individual cognitive-motor tasks, i.e., in single brains in action.

Recently new attention has been devoted to the neural correlates of social cognition in monkeys. It has been shown that neural activity in the posterior parietal cortex of monkeys distinguishes situations of possible interaction between agents from those of mere “co-presence” (Fujii et al., 2007), whereas in medial frontal cortex single-unit activity encodes the errors made by a partner monkey during a common task (Yoshida et al., 2012) or encodes the result of social decisions in reward allocation paradigms (Chang et al., 2013). Finally, in the anterior cingulate cortex neural activity seems to reflect the anticipation of the opponent's choice even before this is known (Haroush and Williams, 2015), as in the prisoner's dilemma game. Altogether, these studies offer compelling evidence that aspects of social behavior can be encoded at cellular and population level. However, the outcome of social operations is transformed into action by the motor system, often thanks to coordinated behavior between interacting agents, i.e., through joint-action. To date, the neurophysiology of intersubjects' motor coordination has never been studied in nonhuman primates through the analysis of the time-evolving interplay of neural activity recorded simultaneously from two co-acting brains.

We studied the neural mechanisms of joint-action by analyzing the neural activity recorded simultaneously from corresponding dorsal premotor areas (PMd; F7/F2) of two interacting monkeys. The animals exerted hand forces on their isometric joysticks to guide a visual cursor on a screen, either individually (SOLO condition) or through a reciprocally coordinated action (TOGETHER condition) necessary to achieve a common goal. During the SOLO performance of one monkey, the other animal observed the outcome of the partner's action (OBS-OTHER condition), consisting of the cursor's motion on the screen. Our findings show the existence in dorsal premotor cortex of a novel class of neurons, “joint-action neurons”, whose activity is modulated preferentially when a monkey coordinates his own action with that of another monkey in a dyad.

In another task, monkeys were required to coordinate their own action with a computer in a “simulated TOGETHER” condition (SIM-TOGETHER), rather than with a real partner. In the absence of bidirectional coordination, this new scenario forced the acting monkey to adjust his hand-force output, hence the cursor's trajectory, to that generated by the computer, and not vice versa. We reasoned that if cell activity in premotor cortex reflected the mutual and time-evolving interaction and adaptation of the two monkeys, then the accuracy of neural activity in encoding the joint performance would be degraded when a monkey performed the task with a non-interactive partner, which was indeed the case. Our aim was therefore to analyze the neural mechanisms subtending the ability to coordinate our own actions with those of an interactive or non-interactive partner, which are common conditions in real life. Our findings offer the first evidence of a neural representation, at single-cell and population levels, of joint-performance in nonhuman primates. This representation, predictive in nature, allowed the reconstruction of the dyadic performance by merging the activity recorded from the two co-acting brains.

Materials and Methods

Animals

Two adult male rhesus monkeys (Macaca mulatta; Monkey S and Monkey K; body weight 7.5 and 8.5 kg, respectively) were used in this study. During experimental procedures, all efforts were made to minimize animal suffering. Animal care, housing, and surgical procedures were in conformity with European Directive 63-2010 EU and Italian (DL 26-2014) laws on the use of nonhuman primates in scientific research. Monkeys were housed in pairs in a room with other monkeys, allowing auditory and visual contact. Monkeys S and K were housed in separate cages, in front of each other. At ∼3 months after onset of training, before the recording sessions, animals underwent surgery for head-post implantation. After pre-anesthesia with ketamine (10 mg/kg, i.m.), they were anesthetized with a mix of oxygen/isoflurane (1–3% to effect). A titanium headpost was implanted on the skull under aseptic conditions. After surgery, the animals were allowed to fully recover for at least 7 d, under treatment with antibiotics and pain relievers, as per veterinary prescription. Then, they returned to daily training sessions until they reached a stable performance in all tasks in which they had been trained. This generally occurred 7 months after the first surgery. At the completion of training, the animals were pre-anesthetized and then anesthetized as above, and a circular recording chamber (18 mm diameter) was implanted on the skull, over the left frontal lobe, to allow recording from dorsal premotor cortex (F2/F7; PMd; area 6). Monkeys S and K underwent all surgical procedures on successive days. Recording of neural activity started after 5–6 d of recovery, under strict veterinary control, and only when both animals were able to perform the tasks as in the immediate pre-surgery time. Recording sessions were performed for 3–4 d per week, 3–4 h per day, and lasted for ∼2 months.
At the end of the recording sessions, the dura was opened, and reference points were placed at known chamber coordinates to delimitate the recording region and facilitate recognition of the entry points of microelectrode penetrations. These were later reconstructed relative to key anatomic landmarks, such as the central sulcus and the arcuate sulcus in the frontal lobe.

Experimental design

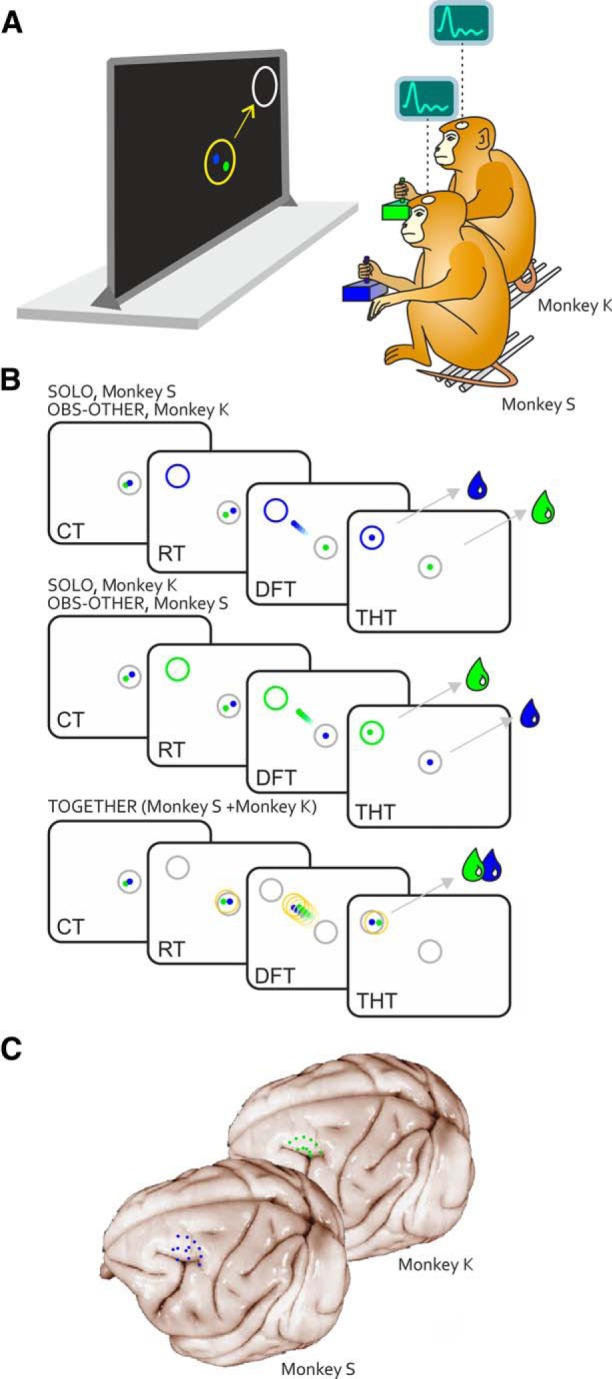

Animals were placed together in a darkened, sound-attenuated chamber and seated side-to-side on two primate chairs (Fig. 1A), with their head fixed in front of a 40 inch monitor (100 Hz, 800 × 600 resolution, 32-bit color depth; monitor-eye distance 150 cm). They were trained to control a visual cursor on the screen by applying a hand force on an isometric joystick (ATI Industrial Automation), which measured the forces in two dimensions (Fx and Fy), with a sampling frequency of 1000 Hz. The two cursors, 0.6 degrees of visual angle (DVA) in diameter, were presented on a dark background and each consisted of a filled circle (blue, Monkey S; green, Monkey K). During the experiment, the animals used the right arm, contralateral to the recording chamber, while their left arm was gently restrained. The animals were unable to interact outside the task, because visual contact with the partner was limited and physical contact was prevented by the distance between them (60 cm). The NIH-funded software package REX was used to control stimulus presentation and to collect behavioral events, eye movement, and force data. In all task conditions, eye movements were sampled (at 220 Hz) through an infrared oculometer (Arrington Research) and stored together with the joystick force signal and key events, which were sampled at 1 kHz. Fixation accuracy, when requested, was controlled through circular windows (5 DVA diameter) around the targets.

Figure 1.

Apparatus, tasks and recording sites. A, Monkeys S and K controlled each an individual visual cursor (Monkey S, blue dot; Monkey K, green dot) on a screen, by applying a force pulse on an isometric joystick with one hand. B, Initially, each animal had to move its own cursor within a central target from an offset position, by exerting a static force for a variable CT, until a peripheral target was presented in 1 of 8 positions. In the SOLO condition, one monkey called into action individually by the color of the peripheral target (Monkey S, blue; Monkey K, green), within a subjective RT, had to exert a dynamic force pulse on the joystick (DFT). During Monkey S's SOLO trials, Monkey K observed on the screen the motion of the other monkey visual cursor (OBS-OTHER condition), and vice versa. In the SOLO and OBS-OTHER conditions, each animal was rewarded (green/blue drop) depending on its own successful performance, regardless of the behavior of its mate. When the color of the peripheral target was white (A, represented in grey in B), both monkeys had to act jointly (TOGETHER trial), to move together a large common object (yellow circle) toward the peripheral target, by coordinating their forces. To successfully perform the task and receive simultaneously a drop of fruit juice, the animals had to keep their cursors within a maximal inter-cursor distance for the entire DFT and until the end of the THT. Lack of intersubject coordination resulted in unsuccessful trials and neither animal was rewarded. C, Reconstruction of recording sites in areas F7/F2 of Monkey S and Monkey K.

Behavioral tasks.

Monkeys were trained in an isometric directional task, characterized by a standard center-out structure, where the animals had to apply isometric hand forces (see previous paragraph) on a joystick to move their own cursor from a central position of the screen to one of eight peripheral targets, as described below. This task was executed in two conditions (SOLO and TOGETHER). In all instances, the task began with the presentation of an outlined white circle (2 DVA diameter) in the center of the screen. The animals had to move their cursors within the circle from an offset position and hold them there for a variable control time (CT; 500–600 ms), by exerting a static hand force. Then a peripheral target (outlined circle, 2 DVA diameter) was presented in one of eight possible locations at 45° angular intervals, around a circumference of 8 DVA radius. The color (blue, green, or white) of the target circle instructed the monkeys about the condition and, therefore, the action required (Fig. 1B).

In the SOLO condition, one monkey at a time performed the task individually, by moving its cursor from the center toward the peripheral target (Fig. 1B; Movie 1). The color of the target instructed which of the two animals had to act (blue, Monkey S; green, Monkey K). To move the cursor to the target, the monkey applied the proper amount of force in the appropriate direction, in the absence of arm displacement, therefore under isometric conditions. Typically, a force pulse of 1 N resulted in a cursor displacement of 2.5 DVA. Therefore, the animal had to increase its force on the joystick by 3.2 N (8 DVA ÷ 2.5 DVA/N) to bring the cursor from the central to the peripheral position (8 DVA eccentricity). To obtain a liquid reward, in the SOLO task each animal had to individually move its cursor to the peripheral target within a subjective reaction time (RT; upper limit 800 ms) and dynamic force time (DFT; upper limit 2000 ms), and hold it within the final location for a variable target holding time (THT; 50–100 ms). Because of the isometric nature of the task, the partner received no visual cue about the performed action other than the resulting motion of a visual cursor on the screen.

Example of typical SOLO trials of Monkey S (blue cursor) and Monkey K (green cursor), corresponding to OBS-OTHER trials of Monkey K and Monkey S, respectively. During the CT (only the last 300 ms are represented in the movie) Monkey K and Monkey S, by exerting a static hand force on their joysticks, keep their cursors (blue, Monkey S; green, Monkey K) in the central target (white circle) for a variable time, until a peripheral target appears in one of eight possible positions (0 ms on the temporal axis). The color of this target (blue or green) indicates which monkey (S or K) will have to act. After a subjective RT, by applying a dynamic force on the joystick, the animal called into action moves its cursor from the central toward the peripheral target (DFT), and keeps it there for a short THT, to receive a liquid reward. For the entire duration of the trial (CT, RT, DFT, THT), to be rewarded the other monkey has to keep its own cursor within the central target, while observing the motion of the visual cursor controlled by its partner. The time evolution of the trial and the cursors' speed are reported at the bottom. Note the different timings of the cursor's speed for the two animals, when acting individually.

In the TOGETHER task, the peripheral target was white. It instructed both monkeys to guide together a common visual object (yellow circle; Fig. 1B; Movie 2), which appeared simultaneously with the peripheral target, from the center toward the latter. The moving yellow circle controlled by both animals was centered at any instant at the midpoint of the two cursors. Therefore, the monkeys had to coordinate the motion of their cursors, in space and time, by maintaining a maximum inter-cursor distance limit (ICDmax; 5 DVA), which coincided with the diameter of the yellow circle. An inter-cursor distance >ICDmax resulted in the abortion of the trial. Once the yellow circle was successfully dragged to the final location and kept there for a short THT (50–100 ms), both monkeys received an equal amount of liquid reward, simultaneously. The amount of reward dispensed was identical across task conditions.
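The geometric rule of the TOGETHER task can be illustrated with a minimal sketch. It assumes cursor positions are given as (x, y) pairs in DVA; the function name `joint_object_state` and the sample coordinates are hypothetical and are not taken from the REX task code.

```python
import math

ICD_MAX_DVA = 5.0  # maximum inter-cursor distance stated in the text


def joint_object_state(cursor_s, cursor_k, icd_max=ICD_MAX_DVA):
    """Return (midpoint, inter-cursor distance, abort flag) for one time sample.

    The yellow circle is centered at the midpoint of the two cursors;
    the trial is aborted when the inter-cursor distance exceeds icd_max.
    """
    (xs, ys), (xk, yk) = cursor_s, cursor_k
    midpoint = ((xs + xk) / 2.0, (ys + yk) / 2.0)
    icd = math.hypot(xs - xk, ys - yk)
    return midpoint, icd, icd > icd_max


# Hypothetical sample: cursors exactly 5 DVA apart are still within the limit.
midpoint, icd, abort = joint_object_state((1.0, 2.0), (4.0, 6.0))
```

In a real session this check would run on every sample of the DFT and THT epochs, since a single violation aborts the trial.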

Example of a typical TOGETHER trial, where both Monkeys S and K move their cursors, by coordinating their forces to bring together a common visual object (yellow circle) from the central to the peripheral target. After a variable control time, the white color of the peripheral target instructs the monkeys on the joint-action condition. The animals must coordinate in time and space their hand output forces, to keep their cursors within a distance limit (ICDmax, 5 DVA), which corresponds to the diameter of the yellow cursor. An inter-cursor distance above this limit results in the abortion of the trial. Once the yellow cursor reaches the final target, overlapping it for a short THT, both monkeys receive a liquid reward. At any instant, the center of the moving yellow circle coincides with the midpoint of the two cursors controlled by the monkeys. Note the synchronization of the cursor speeds when monkeys act together.

To prevent anticipatory movements, trials were aborted automatically when the reaction time was <50 ms. Trials were presented in an intermingled fashion and pseudorandomized in a block of a minimum of 192 trials, consisting of 8 replications for each of the 8 target directions and the 3 distinct conditions (2 SOLO conditions, 1 for each animal, and 1 TOGETHER condition; 3 conditions × 8 directions × 8 replications = 192).
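The block structure described above can be sketched as follows. This is only an illustration: the condition labels are hypothetical names, and a plain seeded shuffle is assumed as the pseudorandomization scheme, which the text does not specify.

```python
import random
from collections import Counter

CONDITIONS = ["SOLO_S", "SOLO_K", "TOGETHER"]  # hypothetical labels
DIRECTIONS_DEG = [i * 45 for i in range(8)]    # 8 targets at 45 degree intervals
REPLICATIONS = 8


def build_block(seed=None):
    """Return one intermingled block of (condition, direction) trials."""
    rng = random.Random(seed)
    trials = [
        (cond, direction)
        for cond in CONDITIONS
        for direction in DIRECTIONS_DEG
        for _ in range(REPLICATIONS)
    ]
    rng.shuffle(trials)  # assumption: simple shuffle, no ordering constraints
    return trials


block = build_block(seed=0)
counts = Counter(block)  # every (condition, direction) pair appears 8 times
```

The block is described as a minimum of 192 trials, so aborted trials were presumably repeated; that bookkeeping is not modeled here.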

When one animal moved its cursor in a SOLO condition, the other, to get a reward, had to keep its own cursor inside the central target by exerting a static hand force until the end of the SOLO trial of its mate. Because during this time the animal observed the result of the partner's action, in the form of motion of a cursor on the screen (see Results for the description of the observation behavior), this condition was labeled as “OTHER OBSERVATION” (OBS-OTHER; Fig. 1B; Movie 1). When the color of the peripheral target was blue, Monkey S had to act while Monkey K observed the partner's performance on the screen, and vice versa when the color of the peripheral target was green. Under both SOLO and OBS-OTHER conditions, the reward delivery to each animal depended only on the success of its own performance, which was independent of the co-actor's behavior. No task requirements were imposed on eye movements, because we were interested in studying the natural behavior of each animal under this condition.

Neurophysiological recording of neural signals during the above-mentioned tasks resulted in three sets of data for each monkey, relative to the three behavioral conditions, in which the animal acted either individually (SOLO) or in a cooperative joint-action task (TOGETHER), or observed the outcome of the partner's action (OBS-OTHER).

As a control condition, referred to as “simulated”, in separate sessions we repeated the experiment with one single animal sitting alone in front of a computer screen. The monkey was required to perform three tasks (SOLO, OBS-OTHER, SIM-TOGETHER), which were virtually identical to those previously reported, with the important difference that the joint-action condition (SIM-TOGETHER) was simulated through a computer. In the SIM-TOGETHER trials, each animal had to calibrate its hand-force output to guide its own cursor in coordination with another cursor controlled by a PC, instead of by another monkey. The computer simulated the behavior of the other partner by displaying typical cursor motions collected from previous behavioral sessions. Therefore, in the SIM-TOGETHER trials Monkey K had to act jointly with the cursor's motion previously collected from Monkey S, and vice versa. This new task differed from the real joint-action only in its social/interactive aspects. In fact, during the SIM-TOGETHER task the acting monkey sat alone in the recording room, rather than with a conspecific, as in the real TOGETHER condition. Moreover, and most important, in the SIM-TOGETHER condition the PC that generated the cursor's trajectories was not interacting with the performing animal. Therefore, in this case success depended only on the unidirectional coordination of the active animal with the PC, unlike the TOGETHER condition, in which the two animals could reciprocally adjust/adapt their behavior online to achieve their common goal. Under this simulated condition, the RT differences (or the differences in the cursors' arrival times) were computed between the RTs (or the arrival times) of the acting animal and those of the fictitious mate. As in the “real” interaction experimental setup, one session consisted of a minimum of 192 trials presented in an intermingled fashion and in a pseudorandom order (8 replications × 8 target directions × 3 conditions = 192).

Given the controversial influence of eye related signals on the neural activity of premotor neurons (Cisek and Kalaska, 2002), a saccadic eye movement task (EYE) was used as control, to evaluate this influence on the activity recorded during the other task conditions. Each monkey performed the task individually. A typical trial started when the monkey fixated a white square central target for a variable CT (700–1000 ms). Then, 1 of 8 peripheral targets was presented at a 45° angular interval on a circle of 8 DVA radius. To obtain a liquid reward, the monkey was required to make a saccade to the target and keep fixation there for a variable THT (300–400 ms). Fixation accuracy was controlled through circular windows (5 DVA diameter) around the targets.

Electrophysiological recordings.

We recorded unfiltered neural activity using two separate 5-channel multiple-electrode arrays for extracellular recording (Thomas Recording) from the two brains, by adjusting the depth of each electrode (quartz-insulated platinum-tungsten fibers 80 μm diameter, 0.8–2.5 MΩ impedance), so as to isolate the signs of neural activity of single cells. Electrodes were equidistantly disposed in a linear array with an inter-electrode distance of 0.3 mm and were guided through the intact dura into the cortical tissue (Eckhorn and Thomas, 1993) through a remote controller. The raw neural signal was amplified, digitized, and optically transmitted to a digital signal processing unit (RA16PA-RX5–2, Tucker-Davis Technologies) where it was stored together with the key behavioral events at 24 kHz for discrimination of the action potentials of individual cells. For this, we filtered the raw signal using a bidirectional FIR bandpass filter to obtain local field potentials (1–200 Hz) and multiunit activity (MUA; 0.3–5 kHz). MUA data were further analyzed in real time to obtain a threshold-triggered, window-discriminated single-unit activity. Only waveforms that crossed a threshold were stored and spike sorted, using a custom off-line MATLAB sorting routine (MathWorks).

Statistical analyses

Behavioral data.

The RT was defined as the time elapsing from the presentation of the peripheral target to the onset of the cursor's movement. It was followed by the DFT, corresponding to the time of dynamic force application on the isometric joystick, which determined the cursor's motion time (cMT) on the screen. The cMT was defined as the time from the movement onset of the cursor to its entry into the peripheral target. Therefore, the cMT was an expression of the dynamic force applied by the animal to the isometric joystick (DFT). The cursor's motion onset was defined as a change in the cursor velocity exceeding 3 SD of the signal calculated during the 50 ms before and after the target onset, for at least 90 ms.
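The onset criterion above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the speed trace is sampled at 1 kHz, the threshold is taken as the baseline mean plus 3 SD (the text only says "exceeding 3 SD"), and the function name `detect_motion_onset` and the synthetic trace are hypothetical.

```python
import statistics


def detect_motion_onset(speed, target_idx, fs_hz=1000, n_sd=3.0,
                        baseline_ms=50, min_duration_ms=90):
    """Return the sample index of cursor-motion onset, or None if not found.

    Baseline: samples from 50 ms before to 50 ms after target onset.
    Onset: first sample after target onset at which the speed stays above
    the threshold for at least min_duration_ms.
    """
    half = int(baseline_ms * fs_hz / 1000)
    baseline = speed[max(0, target_idx - half): target_idx + half]
    # Assumption: threshold = baseline mean + n_sd * baseline SD.
    threshold = statistics.mean(baseline) + n_sd * statistics.pstdev(baseline)
    min_len = int(min_duration_ms * fs_hz / 1000)
    run = 0
    for i in range(target_idx, len(speed)):
        run = run + 1 if speed[i] > threshold else 0
        if run == min_len:
            return i - min_len + 1  # first sample of the supra-threshold run
    return None


# Synthetic trace: small baseline jitter, a 20 ms blip (too short to count),
# then sustained motion starting 200 ms after target onset.
speed = [0.0 if i % 2 == 0 else 0.2 for i in range(1000)]
speed[600:620] = [5.0] * 20
speed[700:] = [5.0] * 300
target_idx = 500
onset = detect_motion_onset(speed, target_idx)
rt_ms = (onset - target_idx) * 1000 / 1000  # RT in ms at 1 kHz sampling
```

The duration requirement is what rejects the brief blip, so the RT is measured from the start of the sustained force pulse.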

Modulation of cell activity.

Quantitative analysis of cell activity in SOLO and TOGETHER conditions was performed on two epochs of interest (RT and DFT). First, the mean firing frequency of cell activity in each epoch (CT, RT, DFT) and trial and across task conditions was computed. For each task, the modulation of neural activity was assessed by using a two-way ANOVA (Factor 1: epoch, Factor 2: target position). This analysis was repeated for the two epochs of interest, considering CT versus RT or CT versus DFT as the two levels for Factor 1. A cell was defined as modulated in one epoch of the above tasks, if Factor 1, Factor 2, or the interaction term was significant (p < 0.05). In the OBS-OTHER trials, the cell modulation was evaluated during the RT and DFT (cMT), defined by the behavior of the acting (observed) monkey. Modulation in the EYE task was assessed as above (two-way ANOVA, Factor 1: epoch, Factor 2: target position), considering eye RT, and eye MT as epochs-of-interest.

A different two-way ANOVA (Factor 1: target position, Factor 2: condition) was used to assess the difference in a cell's activity between the SOLO and the TOGETHER conditions. Because each cell's modulation was computed using multiple tests, we applied a Bonferroni correction for multiple comparisons, dividing the critical p value (α = 0.05) by the number of comparisons used to assess neural modulation. This resulted in different α levels for the epoch modulation test (α = 0.05/3 = 0.017) and for the condition modulation test (α = 0.05/2 = 0.025). A cell showing a significant Factor 2 (condition, SOLO vs TOGETHER) or interaction factor, was considered as modulated by the task condition. A significant Factor 2 identifies a cell with different mean activity between the two conditions, whereas a significant interaction term corresponds to a different relation of cell activity with target position (different directional tuning curve), across task conditions.

Signal detection analysis.

We performed a receiver operating characteristics analysis (ROC; Lennert and Martinez-Trujillo, 2011; Brunamonti et al., 2016) to evaluate the performance of different types of cells (“canonical action-related cells” and joint-action cells; see Results) in disentangling the two action contexts. For this, the area under the ROC curve (auROC) was first computed. The curves were generated in each 40 ms bin and in 10 ms increments, within a time interval spanning from 500 ms before to 800 ms after target presentation. The discriminability latency of neurons was defined as the time from target presentation at which auROC values exceeded the mean + 2 SD of the auROC measured during the CT. The nonparametric Wilcoxon rank sum test (MATLAB function “ranksum”) was used to evaluate differences between mean auROC values associated with different populations of cells (“canonical action-related neurons” vs “joint-action neurons”), or with different contexts (TOGETHER vs SIM-TOGETHER conditions) for a given set of cells.
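A sliding-window auROC and the latency criterion can be sketched as below. This is a simplified illustration, not the authors' MATLAB code: spike trains are assumed to be lists of spike times in ms relative to target presentation, the auROC is computed from pairwise comparisons of spike counts, and the baseline is assumed to be the set of windows ending at or before target presentation. All function names are hypothetical.

```python
import statistics


def au_roc(sample_a, sample_b):
    """Area under the ROC curve: P(b > a) + 0.5 * P(b == a)."""
    wins = 0.0
    for b in sample_b:
        for a in sample_a:
            if b > a:
                wins += 1.0
            elif b == a:
                wins += 0.5
    return wins / (len(sample_a) * len(sample_b))


def window_starts(t_start_ms=-500, t_end_ms=800, width_ms=40, step_ms=10):
    """Start times of 40 ms windows advanced in 10 ms steps."""
    return list(range(t_start_ms, t_end_ms - width_ms + 1, step_ms))


def sliding_auroc(trials_a, trials_b, width_ms=40, step_ms=10):
    """Return [(window_start_ms, auROC)] comparing two conditions."""
    curve = []
    for t0 in window_starts(width_ms=width_ms, step_ms=step_ms):
        counts_a = [sum(t0 <= s < t0 + width_ms for s in tr) for tr in trials_a]
        counts_b = [sum(t0 <= s < t0 + width_ms for s in tr) for tr in trials_b]
        curve.append((t0, au_roc(counts_a, counts_b)))
    return curve


def discriminability_latency(curve, width_ms=40, n_sd=2.0):
    """First post-target window whose auROC exceeds baseline mean + n_sd SD."""
    baseline = [v for t, v in curve if t + width_ms <= 0]
    threshold = statistics.mean(baseline) + n_sd * statistics.pstdev(baseline)
    for t, v in curve:
        if t >= 0 and v > threshold:
            return t
    return None


# Synthetic example: identical pre-target firing, extra spikes after 105 ms
# in the second condition only.
solo = [[-400, -200] for _ in range(5)]
together = [[-400, -200, 105, 115, 125] for _ in range(5)]
latency = discriminability_latency(sliding_auroc(solo, together))
```

Because the latency is reported as the start of the first discriminating window, it can precede the first differing spike by up to one window width.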

Directional modulation of cell activity and population coding.

The directional modulation of cell activity was first assessed by means of the two-way ANOVA analysis described above (Factor 1: epoch, Factor 2: target position). A cell was labeled as directionally modulated in one epoch if the Factor 2 or the interaction factor were significant (p < 0.05).

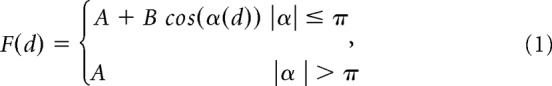

Then, the directional tuning of cell activity was computed through a nonlinear fitting procedure. A truncated cosine function was fitted into the experimental firing rates using the least square method. The fitting model was described by the following directional tuning function:

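$$
F(d) =
\begin{cases}
A + B\cos\big(\alpha(d)\big), & |\alpha(d)| < \pi \\
A - B, & \text{otherwise}
\end{cases}
\tag{1}
$$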

where F is the firing rate (in Hz) as a function of the direction of movement (d) expressed in radians. The argument of the cosine function, α(d) = (d − PD) · π/TW, contains two fitting parameters: the preferred direction (PD) expressed in radians, and the tuning width (TW; in radians). The other two parameters resulting from the fitting procedure (A and B) are linked to the tuning gain (TG) and to the baseline firing rate (BF) by the relations: TG = 2B and BF = A − B.
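The tuning function can be evaluated with a short sketch. It assumes the common form of the truncated cosine, F = A + B·cos(α) for |α| < π and F = A − B otherwise, which agrees with the stated relations TG = 2B and BF = A − B. The wrapping of the angular difference d − PD into [−π, π) is a further assumption, and the parameter values are purely illustrative.

```python
import math


def truncated_cosine(d, A, B, PD, TW):
    """Firing rate (Hz) for movement direction d (radians).

    A, B: fitted parameters (tuning gain TG = 2B, baseline BF = A - B)
    PD:   preferred direction (radians)
    TW:   tuning width (radians)
    """
    # Assumption: the angular difference is wrapped into [-pi, pi).
    delta = (d - PD + math.pi) % (2 * math.pi) - math.pi
    alpha = delta * math.pi / TW
    if abs(alpha) < math.pi:
        return A + B * math.cos(alpha)
    return A - B  # outside the tuned range the cell fires at baseline


# Illustrative parameters: peak 15 Hz at PD, baseline 5 Hz, TW = 90 degrees.
A, B, PD, TW = 10.0, 5.0, math.pi / 2, math.pi / 2
peak = truncated_cosine(PD, A, B, PD, TW)
baseline = truncated_cosine(PD + math.pi, A, B, PD, TW)
```

With this form the rate falls from A + B at the preferred direction to A − B once the direction is TW radians away from PD. The least-squares fit described in the text would adjust A, B, PD, and TW to minimize the squared difference between this function and the measured rates.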

To classify a cell as “directionally-tuned” we assessed the statistical significance of its directional tuning, by using a bootstrap procedure which tested whether the degree of directional bias of the tuning curve could have occurred by chance (Georgopoulos et al., 1988). The parameter used in this procedure was the tuning strength (TS), defined as the amplitude of the mean vector expressing the firing rate in polar coordinates (Crammond and Kalaska, 1996). This quantity represents the directional bias of the firing rate, i.e., TS = 1 identifies a cell that only discharges for movements in one direction, whereas TS = 0 represents a cell with uniform activity across all directions (Batschelet, 1981). A shuffling procedure randomly reassigned single-trial data to different target directions and the TS from the shuffled data was determined. This step was repeated 1000 times, obtaining a bootstrapped distribution of TS, from which a 95% (one-tailed) confidence limit was evaluated. A cell was labeled as directionally-tuned in a specific epoch if the TS value calculated from the original unshuffled data was higher than the computed confidence limit (p < 0.05).
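The shuffling test can be sketched as follows. The normalization of TS (length of the rate-weighted mean vector divided by the summed rate) is an assumption chosen so that TS = 1 for a cell firing in a single direction and TS = 0 for uniform firing. The synthetic cell, the seeds, and the function names are hypothetical.

```python
import math
import random


def tuning_strength(rates_by_dir):
    """Length of the rate-weighted mean vector, normalized by total rate."""
    total = sum(rates_by_dir.values())
    if total == 0:
        return 0.0
    x = sum(r * math.cos(d) for d, r in rates_by_dir.items())
    y = sum(r * math.sin(d) for d, r in rates_by_dir.items())
    return math.hypot(x, y) / total


def mean_rates(trials):
    """trials: list of (direction_rad, firing_rate) -> mean rate per direction."""
    sums, counts = {}, {}
    for d, r in trials:
        sums[d] = sums.get(d, 0.0) + r
        counts[d] = counts.get(d, 0) + 1
    return {d: sums[d] / counts[d] for d in sums}


def shuffle_test(trials, n_shuffles=1000, seed=0):
    """Return (observed TS, 95% one-tailed limit, significant?)."""
    rng = random.Random(seed)
    observed = tuning_strength(mean_rates(trials))
    dirs = [d for d, _ in trials]
    rates = [r for _, r in trials]
    null = []
    for _ in range(n_shuffles):
        rng.shuffle(rates)  # reassign single-trial rates to directions
        null.append(tuning_strength(mean_rates(list(zip(dirs, rates)))))
    null.sort()
    limit = null[min(n_shuffles - 1, int(round(0.95 * n_shuffles)))]
    return observed, limit, observed > limit


# Synthetic cosine-tuned cell: 8 directions x 8 trials with Gaussian noise.
gen = random.Random(1)
directions = [k * math.pi / 4 for k in range(8)]
trials = [(d, max(0.0, 20 + 15 * math.cos(d) + gen.gauss(0, 2)))
          for d in directions for _ in range(8)]
observed, limit, significant = shuffle_test(trials)
```

Shuffling whole single-trial rates preserves the cell's overall firing statistics while destroying any relation to direction, which is what the null distribution needs to represent.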

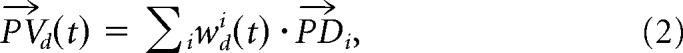

To study the directional congruence between the overall population activity and the behavioral output as a function of time, a population vector (PV) analysis was performed. For this purpose, the mean firing rate of each cell and the mean cursor position were calculated for each target direction in overlapping bins (width 80 ms, step 20 ms) after aligning the data to the dynamic force onset in each trial. The analysis was performed on the neural and behavioral data in a time window spanning from 300 ms before to 400 ms after force onset.

The population vector for a given direction d at a given time bin t, was calculated in standard fashion as follows:

$$
\overrightarrow{PV}_d(t) = \sum_{i} w_{di}(t)\,\overrightarrow{PD}_i \tag{2}
$$

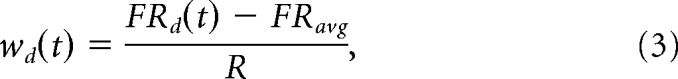

where PD⃗i is the preferred direction of the ith cell, computed in a given condition (SOLO or TOGETHER, see below), and wdi(t) is its weighted discharge rate during DFT when monkeys guided the cursor motion in direction d, at time t. The weight function for a given cell was defined as follows:

$$
w_{di}(t) = \frac{FR_d(t) - FR_{avg}}{R} \tag{3}
$$

where FRd(t) is the firing rate of that cell at time t for movements toward direction d, FRavg is its discharge rate averaged over all directions, and R = (max(FRd) − min(FRd))/2 represents the half-range of its activity across directions (Georgopoulos et al., 1988). By using this weight function the contribution of each cell to the population vector is normalized, ranging from −1 to +1 for symmetrically distributed firing rates.
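The weighting and summation can be illustrated for a single time bin. This sketch assumes the weight takes the form (FRd(t) − FRavg)/R, as implied by the definitions above, and that FRavg and R are computed from the same bin's rates across directions. The eight idealized cosine-tuned cells are synthetic.

```python
import math


def cell_weight(fr_d_t, fr_by_dir):
    """Normalized contribution of one cell: (FR_d(t) - FR_avg) / R."""
    fr_avg = sum(fr_by_dir) / len(fr_by_dir)
    half_range = (max(fr_by_dir) - min(fr_by_dir)) / 2.0
    return (fr_d_t - fr_avg) / half_range if half_range > 0 else 0.0


def population_vector(cells, d_index):
    """Sum of preferred-direction unit vectors scaled by each cell's weight.

    cells: list of (PD_rad, rates) where rates[k] is the firing rate for
    target direction k in the time bin under consideration.
    """
    x = y = 0.0
    for pd, rates in cells:
        w = cell_weight(rates[d_index], rates)
        x += w * math.cos(pd)
        y += w * math.sin(pd)
    return x, y


# Synthetic population: 8 cells with PDs on the 8 target directions and
# cosine tuning (10 Hz mean, 8 Hz modulation depth).
targets = [k * math.pi / 4 for k in range(8)]
cells = [(pd, [10 + 8 * math.cos(t - pd) for t in targets]) for pd in targets]
pv_x, pv_y = population_vector(cells, d_index=2)  # movement toward 90 degrees
pv_angle = math.atan2(pv_y, pv_x)
```

For this idealized, uniformly distributed population the vector points along the movement direction. With real data the same computation would be repeated in each 80 ms bin to obtain the time course of PV.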

The PV associated to the TOGETHER condition was computed for two different subpopulations of cells, the first consisting of those directionally-tuned in the SOLO (“tuned S”; n = 144) condition and the second with neurons tuned in the TOGETHER (“tuned T”; n = 135) task. For each set, the PDs used to calculate the PV in (Eq. 2) were those computed in the SOLO and TOGETHER task, respectively. The PV was computed from sets with identical cell numerosity (n = 135), to avoid any possible bias due to an uneven number of neurons included in each subpopulation.

Reconstruction of neural trajectories.

For each subset of cells, a neural representation of the cursor's movement trajectory based on the information encoded in the population vector was constructed as follows. The predicted distance traveled by the cursor during time bin t was assumed to be proportional to the length of the population vector at time t − ΔT, where ΔT is the time lag between neural activity and behavior, defined as the time difference resulting in the best fit (highest correlation coefficient) between the instantaneous magnitude of the decoded trajectory and that of the dynamic force. Thus, a representation of the predicted cursor trajectory (“decoded trajectory”) for each target direction was obtained by connecting tip to tail all the PV(t − ΔT) (t = 1, 2, …, n) for that direction, normalized to the maximum vector length (Georgopoulos et al., 1988). The decoded trajectories obtained from the two sets of tuned S and tuned T cells were then compared with the actual mean cursor trajectory. To this end, the latter was also expressed as a series of vectors {D⃗(t)}, each describing the displacement of the cursor between two consecutive time bins. Therefore, to evaluate the similarity of the decoded trajectories to the actual ones, we computed for each direction d, at each time t, the angular difference between PV⃗d(t − ΔT) and D⃗(t). For the direct comparison between actual and decoded trajectories, the cursor's vector series was also normalized to the maximum vector length. Finally, the angular differences obtained from the two sets of cells were statistically compared through a Wilcoxon rank sum test.
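The tip-to-tail construction and the angular comparison can be sketched as below. The vectors are assumed to be already shifted by the lag ΔT, the series is assumed to contain at least one nonzero vector, and the function names and sample values are hypothetical.

```python
import math


def decoded_trajectory(pv_series):
    """Chain 2D vectors tip to tail after normalizing to the longest vector.

    pv_series: list of (x, y) population vectors, one per time bin,
    already shifted by the neural-behavioral lag.
    """
    max_len = max(math.hypot(x, y) for x, y in pv_series)
    points = [(0.0, 0.0)]
    for x, y in pv_series:
        px, py = points[-1]
        points.append((px + x / max_len, py + y / max_len))
    return points


def angular_difference(v1, v2):
    """Absolute angle (radians, 0..pi) between two 2D vectors."""
    a = math.atan2(v1[1], v1[0]) - math.atan2(v2[1], v2[0])
    return abs((a + math.pi) % (2 * math.pi) - math.pi)


# Hypothetical series of population vectors pointing upward.
trajectory = decoded_trajectory([(0.0, 1.0), (0.0, 2.0), (0.0, 1.0)])
# Decoded vector along +x versus an actual displacement along +y.
diff = angular_difference((1.0, 0.0), (0.0, 1.0))
```

Applying `angular_difference` bin by bin to the decoded and actual vector series yields the distributions that the rank sum test compares.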

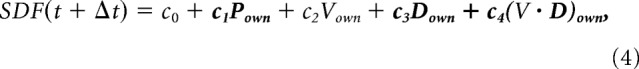

Multiple linear regression of neural activity.

To investigate the relation between neural activity and kinematic parameters of the two cursors guided by each monkey during the execution (DFT) of the TOGETHER trials, a multiple linear regression analysis was performed. The spike density function (SDF) was first calculated by replacing each spike with a Gaussian (SD = 30 ms), obtaining the time evolution of the firing rate with a sampling frequency of 1 kHz. Both the SDF and the behavioral parameters were down-sampled to 100 Hz, aligned to DFT onset and averaged across all replications for the TOGETHER condition, independently for each direction. These signals included all time bins between 150 ms before and 350 ms after the DFT onset. The neural data were then placed tip to tail, resulting in a long signal representing the evolution of the firing rate during the TOGETHER task in all directions. This procedure was repeated for each kinematic parameter and the resulting data were finally used to test the validity of the following linear model (Model 1, vectors in bold type):

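$$
f(t + \Delta t) = b_0 + \mathbf{b}_P \cdot \mathbf{P}_{\mathrm{own}}(t) + b_V V_{\mathrm{own}}(t) + \mathbf{b}_D \cdot \mathbf{D}_{\mathrm{own}}(t) + \mathbf{b}_{VD} \cdot (V \cdot \mathbf{D})_{\mathrm{own}}(t) + \varepsilon(t)
$$

where f is the firing rate (SDF) of the cell, b₀ and the b terms are the regression coefficients, ε is the residual error, Δt is the time lag between the neural activity and the kinematic parameters, and P, V, D, and V·D are, respectively, the position, tangential velocity (magnitude of the velocity vector), movement direction, and velocity vector of the own cursor on the screen.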
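The preprocessing that precedes the regression (Gaussian smoothing of the spike train at 1 kHz, followed by down-sampling to 100 Hz) can be sketched as below. This is a hedged illustration only: the function names, the truncation of the kernel at ±4 SD, and the use of block averaging for down-sampling are assumptions, since the text does not specify these details.

```python
import numpy as np

def spike_density(spike_times_ms, duration_ms, sd_ms=30.0):
    """Firing rate (spikes/s) sampled at 1 kHz from spike times given in ms."""
    train = np.zeros(duration_ms)
    np.add.at(train, np.asarray(spike_times_ms, dtype=int), 1.0)
    half = int(4 * sd_ms)                      # assumed kernel support: +/- 4 SD
    t = np.arange(-half, half + 1)
    kernel = np.exp(-0.5 * (t / sd_ms) ** 2)
    kernel /= kernel.sum()                     # unit area: one spike stays one spike
    # Convolution yields spikes per ms; multiply by 1000 to obtain spikes per s.
    return np.convolve(train, kernel, mode="same") * 1000.0

def downsample_mean(signal, factor=10):
    """1 kHz -> 100 Hz by averaging non-overlapping blocks (assumed method)."""
    n = (len(signal) // factor) * factor
    return signal[:n].reshape(-1, factor).mean(axis=1)
```

In this sketch the smoothed signal of each trial would subsequently be aligned to DFT onset and averaged across replications, separately for each direction.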

The regression was repeated after shifting the SDF with respect to the behavioral data, using time lags ranging from −300 to +300 ms, in 10 ms steps. The time lag that yielded the highest R2 was then identified and the corresponding R2 was collected. This analysis was then repeated with the parameters of the cursor controlled by the “other” monkey (Model 2), i.e.:

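$$
f(t + \Delta t) = b_0 + \mathbf{b}_P \cdot \mathbf{P}_{\mathrm{other}}(t) + b_V V_{\mathrm{other}}(t) + \mathbf{b}_D \cdot \mathbf{D}_{\mathrm{other}}(t) + \mathbf{b}_{VD} \cdot (V \cdot \mathbf{D})_{\mathrm{other}}(t) + \varepsilon(t)
$$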

As a result, for each cell we obtained two R2 values, R2own and R2other, representing the goodness of coding of the own and of the other cursor motion, respectively. A t test (α = 0.05) was used to compare the distributions of R2own and R2other obtained for different sets of cells.
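The lag search that yields one R2 value per model can be illustrated with the following sketch. It is not the authors' implementation: the function names are hypothetical, the sign convention (firing rate at t + lag regressed on kinematics at t, so that a negative lag means neural activity leads) is an assumption, and the signal is treated as a single continuous segment rather than as eight directions placed tip to tail.

```python
import numpy as np

def r_squared(y, X):
    """R^2 of an ordinary least-squares fit of y on X plus an intercept."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    return 1.0 - resid.var() / y.var()

def best_lag(rate, kin, max_lag_bins=30):
    """Scan lags from -max_lag_bins to +max_lag_bins (10 ms bins at 100 Hz).

    rate: 1-D array, down-sampled firing rate.
    kin:  2-D array (time x predictors), cursor kinematics.
    Assumed convention: rate[t + lag] is regressed on kin[t].
    Returns (lag in bins, R^2) for the best-fitting lag.
    """
    n = len(rate)
    t = np.arange(max_lag_bins, n - max_lag_bins)  # same samples for every lag
    best = (None, -np.inf)
    for lag in range(-max_lag_bins, max_lag_bins + 1):
        r2 = r_squared(rate[t + lag], kin[t])
        if r2 > best[1]:
            best = (lag, r2)
    return best
```

Calling `best_lag` once with the own-cursor kinematics and once with the other-cursor kinematics would give, under these assumptions, the pair of values corresponding to R2own and R2other for a cell.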

Results

Motor behavior during joint-action vs solo action and observation

The analysis of the motor behavior of Monkeys S and K is briefly summarized here to aid comprehension of the neural data. The details of this analysis have been reported in a previous behavioral study (Visco-Comandini et al., 2015), which included results from a larger dataset obtained from a total of three pairs of animals (monkey couples: S–K, C–D, B–K).

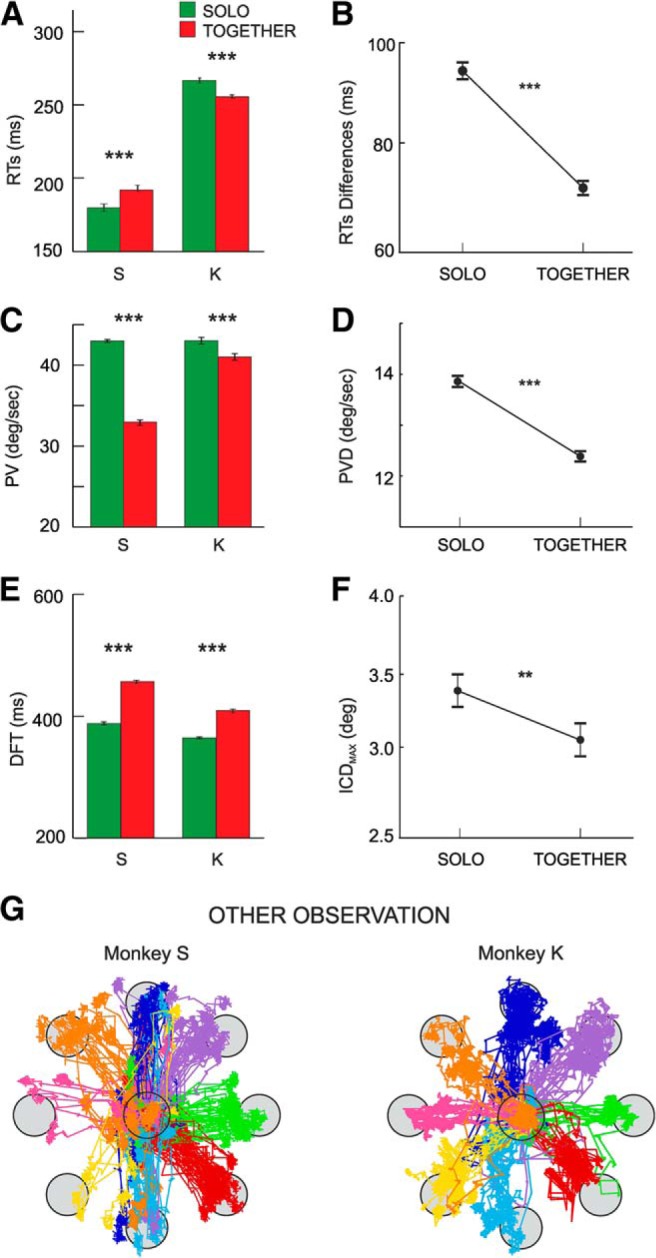

In brief, joint-action control was achieved through a progressive shaping of each monkey's action parameters to those of the partner. Relative to SOLO trials, Monkey S lengthened, whereas Monkey K shortened, its RTs to the target presentation during joint-action trials (Fig. 2A). This helped to achieve optimal reciprocal temporal coordination, by minimizing the differences between the two animals' RTs when acting together (Fig. 2B). This strategy, achieved through a progressive and reciprocal behavioral adaptation of the two monkeys, led to a significant reduction of the overall number of errors observed during training. During the execution phase, to drag the common cursor to the target, both monkeys kept the output force typical of the SOLO task unchanged but lowered the rate of change of instantaneous force application, which resulted in a decrease of the cursor's peak velocity (Fig. 2C). We also observed a significant attenuation of the difference between the cursors' speeds generated by the two animals when moving them in the TOGETHER trials (Fig. 2D), and this helped to synchronize their joint behavior. The overall reduction of velocity peaks led to a natural lengthening of the time necessary to bring the cursor from the center to the periphery (Fig. 2E). This resulted in the optimization of the spatial intersubject coordination when the animals acted together, as also suggested by the significant decrease of the inter-cursor distance (Fig. 2F) observed during joint-action with respect to the SOLO trials. In conclusion, the above results illustrate the monkeys' ability to modulate their individual performance (Fig. 2A,C,E) to maximize the dyadic motor outcome. They also provide evidence of the animals' effort to optimize their reciprocal spatiotemporal coordination (Fig. 2B,D,F), by synchronizing their behavior during both planning (RT) and execution time (DFT).

Figure 2.

Behavioral data measured during SOLO (green) and TOGETHER (red) trials averaged across sessions. A, Mean RTs of Monkeys S and K across task conditions. B, Mean differences between RTs of Monkeys S and K in SOLO and TOGETHER trials. C, Mean values of cursor peak velocities (PV) across task conditions. D, Mean differences between peak velocities (PVD) of the two animals in SOLO and TOGETHER trials. E, Mean duration of DFT. F, Mean values of the maximum inter-cursor distance (ICDMAX) between the stimuli, controlled by Monkeys S and K in the SOLO and TOGETHER conditions across trials. In A–F, error bars represent the SE, and asterisks indicate significant differences (t-test; **p < .01; ***p < .001). G, Examples of eye trajectories recorded during the OBS-OTHER condition of one monkey, during which its partner was performing the SOLO trials. For each monkey, data refer to pooled trials collected in two typical recording sessions.

During the performance of SOLO trials by one of the two monkeys, the second animal was involved in the OBS-OTHER condition, in which it was required to maintain its own cursor within the central target (see Materials and Methods) until the end of its partner's performance. To study the natural behavior of each animal when its partner performed the SOLO task, no constraints were imposed on eye movements. The results showed that, when one of the two monkeys was performing the task individually, the other was indeed engaged in observing the cursor's motion controlled by its partner. Figure 2G shows the eye trajectories recorded during the OBS-OTHER trials of the two monkeys, as observed in two typical sessions for each animal. The eyes moved in the direction of the eight peripheral targets reached by the moving cursor controlled by the partner.

Canonical action-related and joint-action neurons: single-cell properties and discrimination accuracy

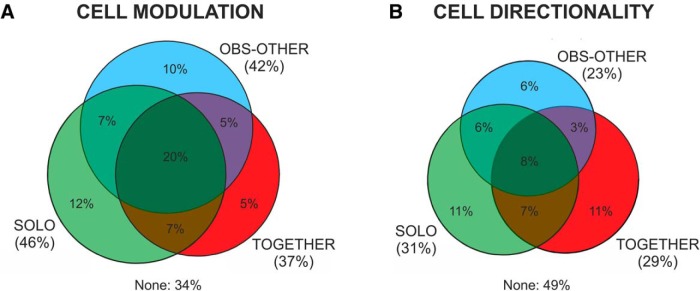

The neural activity of 471 cells (204 from Monkey S; 267 from Monkey K) was recorded from corresponding dorsal premotor cortex (areas F7/F2) of Monkeys S and K (Fig. 1C). These areas were deemed to be good candidates to encode joint-action, because of the link of area F7 with the executive control system of prefrontal cortex (Luppino et al., 2003; for review, see Caminiti et al., 2017), as well as with premotor area F2, which in turn projects to motor cortex (Johnson et al., 1996). Neural activity was recorded simultaneously from the two interacting animals in 36 sessions (1–3 sessions/day), with an average of 6 units/session in Monkey S, and 7 units/session in Monkey K. We found that 311/471 (66%) cells were modulated (two-way ANOVA, p < 0.05; see Materials and Methods) in at least one of the three tasks. We found that 216/471 (45.8%) cells were activated during the DFT in the SOLO trials, whereas 176/471 (37.3%) were modulated during the DFT of the joint-action (TOGETHER) trials. Many cells (199/471, 42.2%) were activated while the animal merely observed the consequences of the partner's action (OBS-OTHER condition), that is, a cursor's motion on the screen. In many instances, single-cell activity was modulated in more than one task condition (Fig. 3A). The analysis of the directional properties of these cells revealed that 144/471 (30.6%) were directionally tuned when each monkey acted alone, 108/471 (22.9%) during observation of the partner's action, and 135/471 (28.7%) while acting with the partner (Fig. 3B).

Figure 3.

Proportion of cells modulated (A) and directionally tuned (B) in the DFT epoch of three different tasks (SOLO, TOGETHER, OBS-OTHER). Percentages are calculated with respect to a total of N = 471 cells recorded in the experiment with the co-presence of two interacting monkeys.

By studying the cell firing rate during the EYE task, we found that 186/471 (39.4%) neurons showed eye-movement modulated activity. It is worth noting that the neuronal modulation found during the OBS-OTHER condition is not necessarily associated with the putative influence of eye-movement signals. In fact, 54.8% (110/199) of the cells activated during the observation of the other's action were not active during the saccadic task, used as a control.

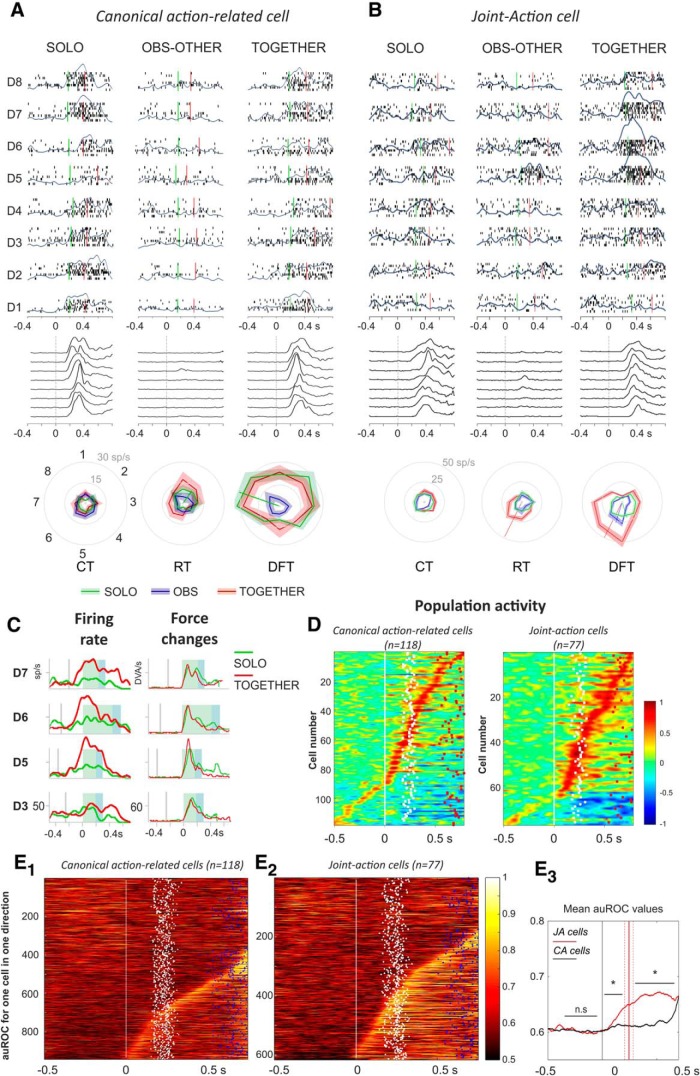

The study of neural activity revealed two main patterns of activation (Fig. 4). A first group of cells (118/471; 25.0%) was active in the SOLO condition, and their activity was not significantly different when monkeys acted in the TOGETHER task (Fig. 4A; two-way ANOVA, term “condition” and/or condition × “target position”, p > 0.05). Therefore, the activity of these canonical action-related cells depended on the action per se, regardless of the context (individual/interactive). An example of this type of cell is shown in Figure 4A. For this unit, the firing rate did not change across types of action during either RT or DFT (two-way ANOVA; RT: condition, F(1,128) = 3.28, p = 0.07; condition × target position, F(7,128) = 0.48, p = 0.85; DFT: condition, F(1,128) = 0.7, p = 0.40; condition × target position, F(7,128) = 0.47, p = 0.85).

Figure 4.

Neural activity of joint-action and canonical action-related cells. A, Neural activity of a canonical action-related neuron in the form of rastergrams (5 replications) and SDFs (superimposed gray curves) in 3 task conditions (SOLO, OBS-OTHER, TOGETHER) and 8 directions (D1–D8), aligned to the target presentation (0 s). The corresponding behavior is shown below each condition by representing the changes of force application (black curves). Cell activity was modulated during DFT, both when the monkey acted individually (SOLO; two-way ANOVA; p < 0.05) and jointly with the partner in the TOGETHER condition (two-way ANOVA, p < 0.05) and it was not significantly different across task conditions (DFT; SOLO vs TOGETHER; two-way ANOVA, p > 0.05). The cell was silent both during the OBS-OTHER and EYE tasks (data not shown; two-way ANOVA, p > 0.05). Polar plots (bottom) show that the cell was directionally tuned during DFT and its preferred direction (PD) did not change from SOLO to TOGETHER condition. B, Neural activity of a joint-action cell, modulated during DFT, both when the monkey performed the action individually (SOLO; two-way ANOVA; p < 0.05) and, in a much stronger fashion, during joint-action with the partner (TOGETHER condition; two-way ANOVA, p < 0.05). The cell was poorly modulated (two-way ANOVA, p < 0.05) in the OBS-OTHER and silent in the EYE condition (data not shown; two-way ANOVA, p > 0.05). Conventions and symbols as in A. C, Direct comparison of the SDFs (left) of the joint-action cell shown in B, observed during the SOLO (green) and TOGETHER (red) task, in directions D3, D5, D6, and D7. Data are aligned to DFT (green shading) onset, followed by THT (blue shading). The corresponding force changes are shown on the right (thin curves). D, SDFs for the population of canonical action-related and joint-action cells (each row corresponding to 1 cell), aligned to the target presentation (0 s, white line). Dots indicate onset (white) and end (red) of DFT. Color bar, Normalized activation relative to CT. E, ROC plots for canonical action-related (E1) and joint-action (E2) cells, evaluating the discrimination between action conditions (SOLO vs TOGETHER). Colors show the auROC values of each individual cell, rank-ordered according to the time after target presentation at which auROC values exceeded the mean plus two times the SD of the auROC measured during the CT. In each graph, time of alignment (0 ms; white line) corresponds to the target presentation; dots indicate times of start of force application (white) and times of cursor arrival on peripheral target (blue). Color bar, auROC values ranging from 0.5 (no discrimination) to 1 (high discrimination). E3, Comparison of mean auROC values across cells of the two subpopulations [canonical action-related (CA), black; joint-action (JA), red] and across directions (Wilcoxon rank sum test, *p < 0.05).

A second population consisted of 77/471 (16.3%) cells active when the animal performed the task jointly with the partner (TOGETHER; Fig. 4B), and whose activity differed from that of the SOLO condition (two-way ANOVA, term condition and/or condition × target position, p < 0.05). These units, labeled as joint-action cells, changed their firing rate across action conditions, despite the same amount of force applied by the monkeys in the two tasks (Fig. 4B,C). The joint-action neuron reported in Figure 4B showed a significant increase in firing rate in the TOGETHER trials, relative to the SOLO action, both during action preparation (RT; two-way ANOVA: condition, F(1,128) = 9.93, p = 0.0021; condition × target position, F(7,128) = 2.93, p = 0.0075) and execution (DFT; two-way ANOVA: condition, F(1,128) = 61.34, p = 2.85 × 10⁻¹²; interaction, F(7,128) = 6.27, p = 3.21 × 10⁻⁶). The significant interaction between the two main factors (condition, target position) highlights changes occurring also in the directional properties of this cell across tasks.

In the TOGETHER task, joint-action cells showed an overall population activity (Fig. 4D) stronger than canonical action-related ones. A signal detection ROC analysis was applied to these two cell populations, separately, to evaluate their overall contribution to solo- and joint-action. The neural power in discriminating these actions was estimated by computing the auROC in each direction of force application. Neurons were sorted according to their discriminability latency, defined as the time from target presentation at which the auROC values became greater than the mean + 2 SD of the auROC measured during the CT. Joint-action cells signaled the different action performance contexts with variable latencies, ranging from 150 ms after target presentation, throughout the execution time of the task (Fig. 4E1–E2). The analysis of the temporal evolution of the mean auROC values across cells from each subpopulation (Fig. 4E3) indicates a significantly higher selectivity for the action context in the joint-action cells than in the canonical action-related ones. This emerged during the preparation (Wilcoxon rank sum test, RT: z = −4.02, p = 5.84 × 10⁻⁵) and persisted during the execution phase (Wilcoxon rank sum test, DFT: z = −10.831, p = 2.46 × 10⁻²⁷) of the task. The emergence of these signals during the RTs suggests that modulation of joint-action neurons is not merely because of potential differences in animal behaviors across task conditions. This aspect has been thoroughly investigated by using a control task consisting of a simulated joint-action, as described further on (see Figs. 7, 8).
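The auROC measure and the discriminability latency defined above can be sketched as follows. The sketch rests on several assumptions that are not stated in the text: the function names are hypothetical, the auROC is computed from pairwise comparisons of single-trial firing rates, values below 0.5 are folded onto the 0.5–1 range, and the sample SD is used for the threshold.

```python
import statistics

def auroc(a, b):
    """Probability that a value drawn from b exceeds one drawn from a (ties count 0.5)."""
    wins = sum((y > x) + 0.5 * (y == x) for x in a for y in b)
    return wins / (len(a) * len(b))

def folded_auroc(a, b):
    """auROC folded onto [0.5, 1], so that only discriminability matters (assumed)."""
    auc = auroc(a, b)
    return max(auc, 1.0 - auc)

def discrimination_latency(auroc_ct, auroc_post, times_post_ms):
    """First time after target presentation at which auROC exceeds mean + 2 SD
    of the values measured during the control time (CT); None if it never does."""
    threshold = statistics.mean(auroc_ct) + 2 * statistics.stdev(auroc_ct)
    for t, value in zip(times_post_ms, auroc_post):
        if value > threshold:
            return t
    return None
```

Here `a` and `b` would hold the firing rates of one cell in SOLO and TOGETHER trials for a given direction and time bin, and cells would be rank-ordered by the latency returned.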

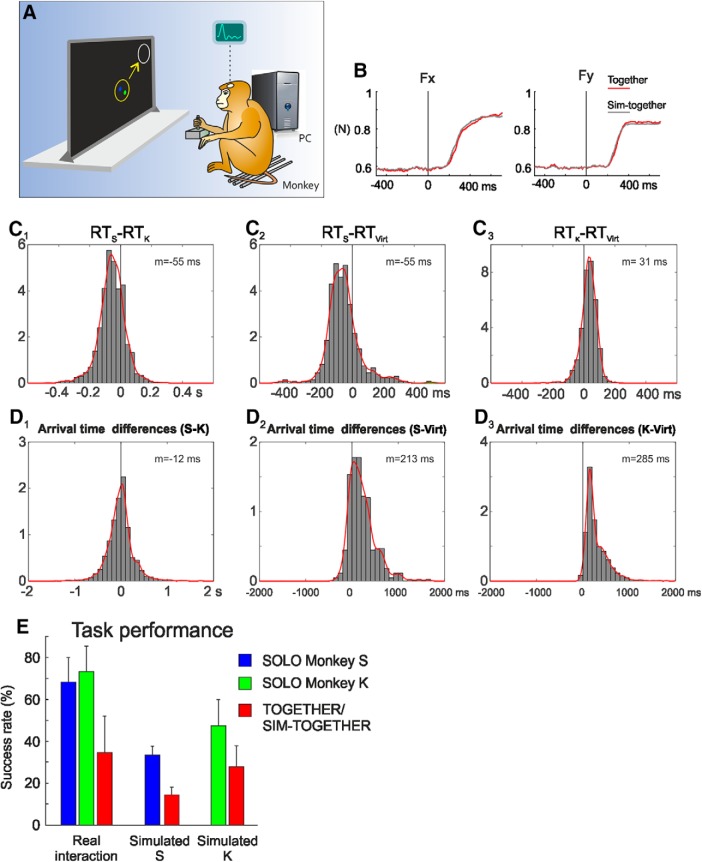

Figure 7.

Simulated joint-action. A, Behavioral setup of the SIM-TOGETHER condition. Each monkey controlled his hand force to guide the motion of a visual cursor in coordination with another one controlled by a computer. B, Comparison of mean Fx and Fy components of the force applied in the real (TOGETHER, red) and simulated (SIM-TOGETHER, gray) joint-action tasks. C, Distribution of the differences between the RTs of two interacting monkeys (Monkeys S and K; C1) measured during the TOGETHER trials, or between the RTs of one acting monkey (Monkey S, C2; Monkey K, C3) with those of the respective virtual partner (Virt; C2–C3). D, Distribution of the differences between the arrival times (i.e., time at which the cursor entered the peripheral target) of two interacting monkeys (Monkeys S and K; D1) measured during the TOGETHER trials, or between the arrival times of one acting monkey (Monkey S, D2; Monkey K, D3) with those of the respective virtual partner (Virt; D2–D3). C, D, The mean values (m) of the distributions are reported. E, Comparison of mean (±SD) success rates (%) across sessions, for different types of trials (SOLO, TOGETHER, SIM-TOGETHER), performed in different experimental conditions (real interaction, simulated with Monkey S or K).

Figure 8.

Neural activity in the simulated joint-action. A, Proportion of cells modulated and directionally tuned in the DFT epoch of three different tasks (SOLO, OBS-OTHER, SIM-TOGETHER). Percentages are calculated with respect to a total of N = 257 cells recorded in the simulated joint-action experiment, where each monkey had to control its own cursor in coordination with a computer-guided moving cursor. B, ROC plots for joint-action cells (JA) recorded during the simulated sessions. Conventions and symbols as in Figure 4E. C, Comparison of mean auROC values evaluated for two sets of cells (CA and JA), in the experimental conditions TOGETHER (T), SIM-TOGETHER (SIM-T) and in three epochs of interest (CT, RT, DFT). For statistics, see Results. D, Comparison of mean auROC values of joint-action cells across experimental conditions (SIM-TOGETHER, pink; TOGETHER, red), aligned to target presentation (0 ms). Vertical lines and shaded area indicate mean DFT onset ±SD for SIM-TOGETHER (gray) and TOGETHER (red) conditions. Red and gray dots indicate the times at which mean auROC values exceeded after target presentation the mean +2 SD, of the auROC measured during the CT. *p < 0.05 Wilcoxon rank sum test. E, Cumulative frequency distributions of correlation coefficients obtained by modeling the neural activity, recorded in the SIM-TOGETHER experiment, with the inter-cursor distance. No significant differences (Wilcoxon rank sum test) were found when comparing the distribution of R2 obtained for CA (green) and JA (pink) neurons.

Representation of joint-action through population vector coding

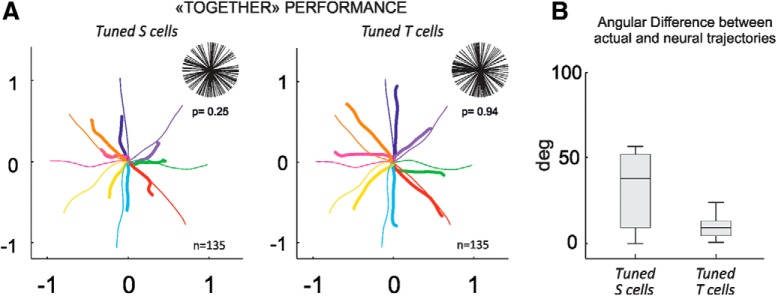

A critical question of this study is whether the neural representation of joint-action, obtained by combining the activity of the two interacting brains, simply emerges from the sum of the neural representation of each individual (SOLO) action, or whether this representation critically depends on the contribution of a dedicated set of cells. For this, the decoded neural trajectories of the joint-action outcome (TOGETHER) were reconstructed from the neural activity of two different sets of cells, the first directionally-tuned in the SOLO (tuned S; n = 144) action, the second directionally-tuned in the TOGETHER (tuned T; n = 135) task. The neural representation of the combined forces exerted by each animal was obtained by adding tip-to-tail the population vectors computed over time. Because the population vector critically depends on the uniformity of the distribution of the preferred directions (Fig. 5A, top), this was tested through a Rayleigh's test (tuned S, z = 2.1, p = 0.25; tuned T, z = 0.06, p = 0.94). Furthermore, to avoid any possible bias due to an uneven number of neurons included in each set used for the population vector computation, this analysis was performed on two sets with identical cell numerosity (n = 135). The decoded trajectories were then compared with the actual ones, obtained by averaging across sessions the force-guided trajectories of the common object (yellow circle; Fig. 1A) that the animals moved together. The latter was located at the midpoint of the two cursors controlled by each monkey.
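The uniformity check on the preferred directions can be illustrated with a minimal Rayleigh test. This is a sketch under stated assumptions rather than the procedure actually used: the function name is hypothetical, and the p value relies on the first-order large-sample approximation p ≈ exp(−z), which may differ from the correction applied by a dedicated circular-statistics package.

```python
import math

def rayleigh_test(angles_deg):
    """Rayleigh test for non-uniformity of circular data.

    Returns (z, p), with z = n * R_bar^2, where R_bar is the mean resultant
    length, and p approximated by exp(-z) (assumed large-sample approximation).
    """
    n = len(angles_deg)
    c = sum(math.cos(math.radians(a)) for a in angles_deg)
    s = sum(math.sin(math.radians(a)) for a in angles_deg)
    r_bar = math.hypot(c, s) / n
    z = n * r_bar ** 2
    return z, min(1.0, math.exp(-z))
```

A large p value, as expected for evenly spread preferred directions, indicates no detectable departure from uniformity, which is the condition required for an unbiased population vector.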

Figure 5.

Neural representation of joint-action through population vector analysis. A, Decoded (thick colored) and actual (thin colored) cursor's motion trajectories reconstructed from directionally tuned S and tuned T cells, after combining the activity of the two interacting brains. For each subpopulation, in the top-right corner the distribution of preferred directions is plotted with relative statistics on the uniformity of the distributions (Rayleigh's test). B, Boxplot of the angular differences between vectors defining the decoded and actual trajectories at each time t, computed for two different sets of cells.

The decoded trajectories of the TOGETHER condition (Fig. 5A) were very accurate when computed from the combined activity of the subset of directionally tuned T units recorded from the two brains. A poorer neural representation of joint-action was instead obtained by combining the activity of directionally tuned S cells recorded from the two monkeys, as suggested by the larger angular difference of the decoded versus actual trajectories for the tuned S cells compared with the tuned T cells (Wilcoxon rank sum test, z = 7.67, p = 1.757 × 10⁻¹⁴; Fig. 5B).

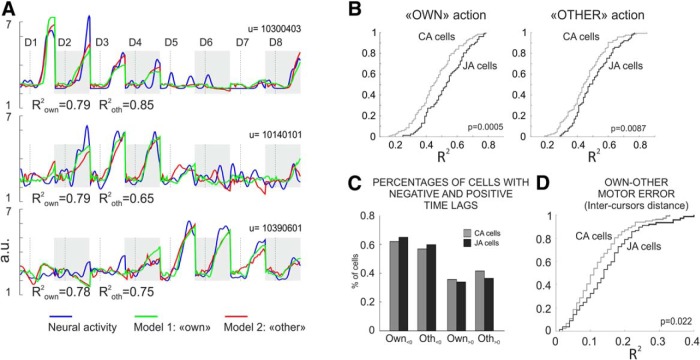

Joint-action neurons encode the own and the other behavior better than canonical action-related cells

To analyze the different functional roles of the two subsets of cells during joint behavior, we investigated the neural representation of the output of both the own (executed) and the other (observed) action during the co-acting condition. To this aim, for each individual cell we performed two multiple linear regressions (MLR). The activity recorded during the TOGETHER task was modeled with the kinematics (position, direction, and velocity) of the own (Model 1) or of the other (Model 2) visual cursor. Both canonical action-related and joint-action cells showed an excellent encoding of the two actions' output (Fig. 6A) during joint performance. However, the distributions of the coefficients of determination (R2) obtained from each regression applied to the two subsets of cells (Fig. 6B) showed that the joint-action neurons provided a more accurate representation of the cursors' kinematics resulting from the two actions (Wilcoxon rank sum test: own, z = −3.46, p = 5.34 × 10⁻⁴; other, z = −2.62, p = 0.0087; Fig. 6B) compared with that of the canonical action-related cells. For the two sets of neurons we then computed the distributions of the time lags obtained from the regression applied to the own and other action. In both, we found that the activity of most cells led rather than followed the variation of the cursor's kinematics (Fig. 6C), pointing to a predominance of feedforward over feedback mechanisms in encoding both types of action.

Figure 6.

Neural representation of the own and of the other performance during joint-action. A, Example of neural representation of the own and of the other cursor kinematics, for three premotor cells. For each unit (U), the three curves represent neural activity in the form of SDF (blue), the activity obtained from Model 1 (own cursor; green) and from Model 2 (other cursor, red). The SDF has been superimposed on the two other curves at 0 lag, after appropriate time shifting, by using the ΔT that yielded the best R2 in the MLR. The activities refer to the TOGETHER trials in the eight directions (D1–D8) placed tip-to-tail (highlighted by alternating gray shading), for a time interval spanning from 150 ms before to 300 ms after DFT onset (vertical dashed line). B, Cumulative frequency distributions of correlation coefficients relative to the output kinematics (cursor's motion) of the own (left) and the other (right) action computed for the joint-action (JA; black) and canonical action-related cells (CA; gray). C, Percentages of cells with negative and positive time lags for Model 1 (Own) and Model 2 (Other). D, Representation of the Own-Other motor error (inter-cursor distance) by JA and CA neurons. Cumulative frequency distributions of correlation coefficients obtained by modeling the neural activity of the CA (gray) and JA (black) neurons with the inter-cursor distance. B, D, The p values refer to Wilcoxon rank sum statistics.

Finally, to successfully coordinate their actions in the TOGETHER trials the two animals kept their cursors close to each other inside the common object that they dragged to the target. To study whether a specific role emerged for joint-action cells in evaluating the instantaneous distance between the cursors controlled by each animal, here defined as 'Own-Other motor error', we performed a new linear regression with the inter-cursor distance as predictor. Even in this case, the joint-action neurons differed from the canonical action-related cells in their overall more accurate representation (Wilcoxon rank sum test: z = −2.29, p = 0.022; Fig. 6D) of this key parameter of joint-action.

Neural activity and behavior during simulated joint-action

To further evaluate the role of joint-action cells, each monkey performed a simulated joint-action task (SIM-TOGETHER), by controlling its own cursor in coordination with a computer-guided one (Fig. 7A). For any given animal, the computer simulated the behavior of its respective real partner, by displaying on the screen the cursor trajectories of the latter, stored from previous behavioral sessions. The sensory-motor context of these trials was virtually identical to that of the real joint-action (same visual scene, same applied forces, same cursor's kinematics and motion's law; Fig. 7B). The major differences consisted in the absence of the partner monkey in the experimental environment and, more importantly, in the absence of a bidirectional interaction between the acting animal and its (now virtual) partner. In the new context of the SIM-TOGETHER task, the PC was passively displaying a moving cursor, without any kind of interaction/adaptation with that controlled by the active animal. Therefore, the success for the latter was guaranteed only by its unidirectional adaptive coordination with the PC-generated cursor's motion, unlike the TOGETHER condition in which the two animals could reciprocally adjust online their behavior. We have recorded the activity of 257 cells (68 in Monkey S in 7 sessions; 189 in Monkey K in 37 sessions) under this simulated form of joint-action.

Both the behavioral and the neural results were consistent with, although not identical to, those observed during the real joint-action. First, by comparing the RTs of TOGETHER trials in the real and simulated conditions, Monkey S did not show any significant difference (197 ± 54 and 198 ± 60 ms, respectively), whereas for Monkey K we observed a significant decrease (from 254 ± 54 ms for the real interaction to 191 ± 36 ms for the SIM-TOGETHER condition). This RT decrease can be explained by the shorter RTs (156 ± 27 ms) of its virtual mate, relative to the 197 ± 54 ms of the real interactive animal (Monkey S). Second, we studied the differences between RTs of the two interacting animals (RTS − RTK) in the TOGETHER condition (Fig. 7C1) and between the RTs of one animal and its virtual mate in the SIM-TOGETHER trials (Fig. 7C2–C3), as a measure of synchronicity in initiating the force application in the two tasks. Comparing the distribution of these differences across tasks, we found that in both conditions Monkey S tended to anticipate, whereas Monkey K tended to follow, the action onset of the real or virtual partners (Fig. 7C1–C3). Third, concerning the execution phase, both animals showed longer DFTs in the simulated condition (Monkey S: 527 ± 268 ms; Monkey K: 400 ± 229 ms) than in the real one (Monkey S: 398 ± 213 ms; Monkey K: 330 ± 221 ms), suggesting a different strategy for each animal to coordinate its own action with the cursor motion of the artificial partner in the SIM-TOGETHER task.

The new strategy of acting more slowly during the simulated joint-performance was confirmed by the analysis of the differences between the arrival times at the peripheral targets of the two cursors in the two experimental sets. In the TOGETHER condition the two animals were rather synchronous during the execution phase (Fig. 7D1; mean of arrival times difference: 12 ms; mode: 24 ms), because of the bidirectional nature of their coordination. On the contrary, in the simulated trials they both followed their respective fictitious mate, with a mean difference of the active monkey of 155 ms (Monkey S; Fig. 7D2) and of 207 ms (Monkey K; Fig. 7D3) with respect to the arrival time of the computer-guided cursor. This result, in line with the slower action observed above, highlights the interactive versus the non-interactive nature of the real versus simulated joint-action, respectively. In the SIM-TOGETHER trials, to successfully coordinate its own action with the non-cooperative partner, the active subject necessarily had to follow the other's behavior. Interestingly, the behavioral error rates associated with both the SOLO and SIM-TOGETHER trials, studied across experimental sessions, revealed an overall decrease of animal performance in the simulated context compared with when the monkeys were working together in the same room (Fig. 7E). Within this general decrease, the success rate associated with the SIM-TOGETHER trials was, as in the real interaction experiment, significantly lower than that observed for the SOLO ones.

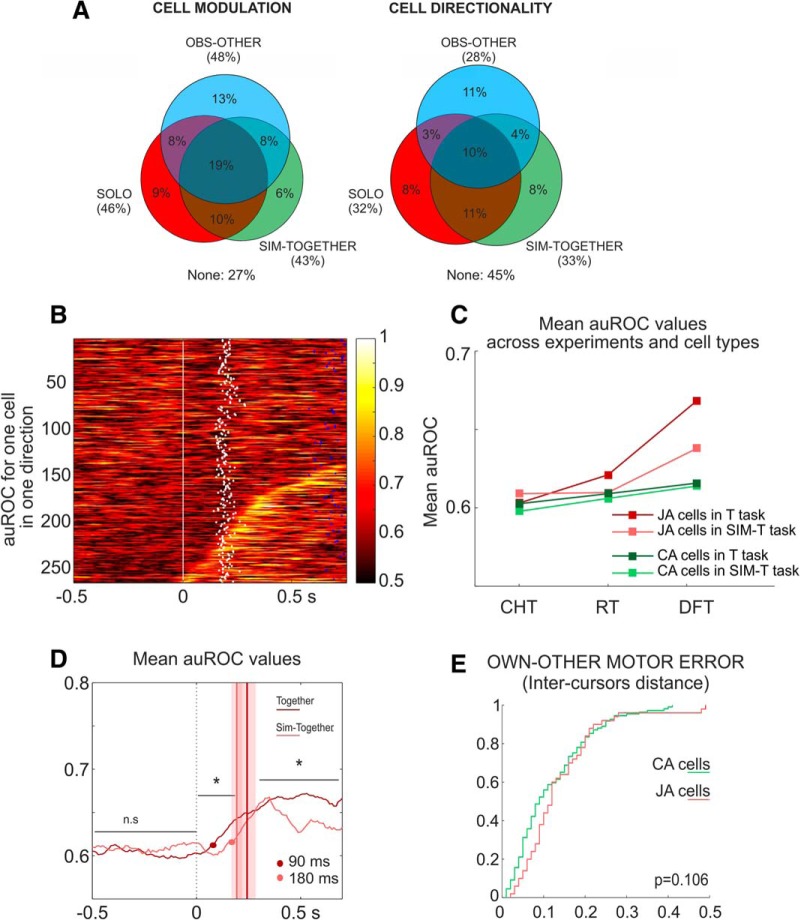

At the neural level (Fig. 8A), we found a set of joint-action cells (33/257; 13%), i.e., active in the SIM-TOGETHER trials and whose neural activity discriminated the two action conditions, as indicated by a ROC analysis (Fig. 8B). In this simulated context as well, the joint-action cells provided a more accurate representation of the output of the two actions (Wilcoxon rank sum test, own: z = −2.53, p = 0.011, other: z = −2.89, p = 0.0038, data not shown) compared with that of the canonical action-related cells. Similarly to the real interaction, the analysis of the temporal evolution of the mean auROC values across cells of the two subpopulations indicates a significantly higher selectivity for the action context in joint-action cells than in the canonical action-related neurons (Fig. 8C). However, in the simulated joint-action, the significant difference between the mean auROC values in the SOLO and SIM-TOGETHER trials was found only during the execution phase (DFT: Wilcoxon rank sum test, z = −4.79, p = 1.68 × 10⁻⁶; Fig. 8C), and not during action planning (RT: Wilcoxon rank sum test, z = −1.03, p = 0.302). Furthermore, when comparing the two sets of joint-action cells recorded in the two different experimental contexts, the discrimination power was significantly reduced (Wilcoxon rank sum test, RT: z = 2.64, p = 0.008; DFT: z = 3.80, p = 1.44 × 10⁻⁴; Fig. 8C,D) during the simulated joint-performance compared with the real interaction. No differences between the two experimental set-ups were observed for the canonical action-related cells, in either epoch of interest (Wilcoxon rank sum test; RT: z = 1.32, p = 0.186, DFT: z = 0.64, p = 0.517; Fig. 8C). It is worth noting that the similarity in the amount of force applied in the real and the simulated trials (Fig. 7B) rules out the possibility that significant changes of the auROC values observed in Figure 8, C and D, were merely because of variation in force modulation across experiments.
Finally, another critical difference that emerges in the SIM-TOGETHER condition, with respect to the real interaction, concerns the ability of the joint-action cells to encode the 'Own-Other motor error'. In the absence of a bidirectional interaction, the joint-action neurons did not offer a better encoding than canonical action-related cells (Wilcoxon rank sum test: z = −1.61, p = 0.106) of the instantaneous distance between the two cursors, representing the output of the own and other action (Fig. 8E), as was instead observed in the real interaction task.

The role of observation-related activity in encoding of joint-action

We finally asked whether the neural encoding of the own and of the partner's action was influenced when the observation-related activity, evaluated through the analysis of cell modulation during the OBS-OTHER trials, was included in the regression analysis. For this, we have compared the joint-action (n = 53) and canonical action-related cells (n = 32), which were modulated also during the observation of the cursor motion determined by the partner (OBS-OTHER). For the second set, we have included in the analysis only those units which showed also a different modulation (two-way ANOVA, Factor 1: target position, Factor 2: condition) between the SOLO and OBS task, to guarantee the existence of an action-related modulation for these neurons. For the subset of canonical action-related cells, we found a significant improvement in the neural representation of the own (Wilcoxon rank sum test, z = −2.27, p = 0.0229) and of the other action (Wilcoxon rank sum test, z = −2.09, p = 0.0366), when they also had observation-related activity. On the contrary, for joint-action cells the presence or absence of observation-related response did not influence the overall accuracy of the cortical representation of the kinematics of both the own and the other's action (Wilcoxon rank sum test, own z = −1.06, p = 0.288, other z = −0.23, p = 0.817). Therefore, encoding intersubject coordination by joint-action neurons seems to be independent from their perceptual representation of the observed action in a solo scenario, as in the OBS-OTHER task.

Discussion

The motor-cognitive aspects that allow individuals to coordinate their actions with others have received significant theoretical (Bratman, 1992; Vesper et al., 2010) and experimental (Sebanz et al., 2006; Aglioti et al., 2008; Newman-Norlund et al., 2008) consideration. However, the neural underpinning of joint-action is still unknown, and the role of the mirror system in this context remains debated (Newman-Norlund et al., 2008; Kokal and Keysers, 2010).

Joint-action cells in primate premotor cortex

In dorsal premotor cortex we found a population of joint-action neurons that distinguish whether an identical action is performed solo or while taking into account a partner's behavior. During the TOGETHER trials, these cells provide a more accurate representation of both the own and the other actions, which together shape the joint-performance, than canonical action-related cells, whose neural activity was not influenced by the coordination with a partner. Therefore, our findings support the existence of a simultaneous co-representation of both the own and other behavior by premotor neurons, in line with recent experiments in the hippocampus of bats (Omer et al., 2018) and rats (Danjo et al., 2018), which show the integration of information about the “self” and the other as an essential neural process for social behavior.

In premotor neurons, the distinction between the two action contexts emerged during the reaction time and persisted during task execution. This anticipatory encoding is consistent with the behavioral data from interacting monkeys (Visco-Comandini et al., 2015), as well as from developing children and adults (Satta et al., 2017), who show predictive adaptation of their own action to that of the partner in tasks similar to those of this experiment. Our results are in line with human EEG studies (Kourtis et al., 2013) highlighting a predictive representation of the partner's action during the reaction time. Furthermore, the analysis of temporal delays between neural activity and the behavioral parameters, measured for the own and the other actions, showed that the time lags were mostly negative for both canonical action-related and joint-action cell populations, supporting the view of a co-representation of the two behaviors based on feedforward processes. This agrees with the dyadic motor plan view of Sacheli et al. (2018), who demonstrate how a successful joint-performance relies on the active prediction of the partner's action, rather than on its passive imitation or observation. Our data offer experimental evidence for a single-unit mechanism subtending intersubject motor coordination, which is reminiscent of predictive coding (Rao and Ballard, 1999; Friston, 2005), proposed in humans to automatically anticipate others' mental states (Thornton et al., 2019). Further investigations are nevertheless needed to assess whether the neural processes described here can be fully explained in terms of predictive coding schemes (Friston, 2005).
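The sign convention behind these time lags can be illustrated with a small sketch. It is not the analysis pipeline of the study: it assumes that the lag is taken as the shift (in time bins) that maximizes the Pearson correlation between a binned firing-rate signal and a behavioral signal, and the function names `pearson` and `best_lag` and the toy signals are hypothetical. Under this convention, a negative lag means that the neural signal leads the behavior.

```python
import math

def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)

def best_lag(neural, behavior, max_lag):
    """Return (lag, r) maximizing the correlation of neural[t + lag] with behavior[t].

    Assumed convention: lag < 0 means neural activity precedes the behavior.
    """
    best = (0, -2.0)  # any real correlation exceeds -2
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(neural[t + lag], behavior[t])
                 for t in range(len(behavior))
                 if 0 <= t + lag < len(neural)]
        if len(pairs) < 3:
            continue  # too few overlapping samples to correlate
        r = pearson([p[0] for p in pairs], [p[1] for p in pairs])
        if r > best[1]:
            best = (lag, r)
    return best

# Toy example: the behavioral signal repeats the neural signal two bins later.
neural = [0, 0, 1, 3, 1, 0, 0, 0, 0, 0]
behavior = [0, 0, 0, 0, 1, 3, 1, 0, 0, 0]
lag, r = best_lag(neural, behavior, max_lag=3)
```

In this toy case the behavior is a copy of the neural signal delayed by two bins, so the best lag is −2.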

From a computational perspective, joint-action neurons could play an ideal role in combining predictions of the current state of the own body with those of the partner's state (Wolpert et al., 2003). Although neural transformations from sensory signals to motor commands are uniquely governed at the neural level, those from motor commands to their consequences strictly depend on the physics of our body and of the external world (Wolpert and Ghahramani, 2000). However, an accurate prediction of such consequences, through internal modeling of both our own body and the external environment, is fundamental for successful behavior. In the context of motor interactions, external changes are largely influenced by the others' behavior. To cope with such state changes, joint-action neurons might represent an ideal neural substrate to integrate the current state predictions of the own action with that of the entity to interact with (not necessarily represented by a conspecific), thus offering an accurate online computation of the behavioral differences between self- and other-action. This difference, here referred to as an 'Own-Other motor error', is a critical parameter for a successful joint-performance and is indeed better encoded by the joint-action neurons than by the canonical action-related cells. Here, the term “Own-Other” is used from a computational perspective, to consider the presence of two or more behavioral states (Wolpert et al., 2003) to be integrated for the task achievement. From a motor control perspective, this circumstance does not necessarily imply the co-presence of a biological partner, but generalizes to conditions in which our own action must be coordinated with a computer or a robotic device (see 'Motor coordination with an artificial non-interactive partner').
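As a concrete reading of this quantity, the sketch below computes the instantaneous distance between the two cursors, sample by sample. It assumes that each cursor trajectory is available as a list of (x, y) screen positions sampled at common time points; the function name `own_other_motor_error` and the example coordinates are hypothetical.

```python
import math

def own_other_motor_error(own_xy, other_xy):
    """Euclidean distance between the own and the other cursor at each time sample."""
    if len(own_xy) != len(other_xy):
        raise ValueError("trajectories must have the same number of samples")
    return [math.hypot(ox - px, oy - py)
            for (ox, oy), (px, py) in zip(own_xy, other_xy)]

# Hypothetical cursor positions (arbitrary screen units) at three time samples.
own = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
other = [(0.0, 0.0), (1.0, 3.0), (5.0, 4.0)]
error = own_other_motor_error(own, other)
```

An error of zero at a given sample means that the two cursors overlap at that instant; larger values indicate poorer coordination.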

An important clue to understanding the role of different classes of premotor cells in encoding joint-behavior resides in their ability to represent the outcome of the two brains' actions, corresponding to the cursor trajectories on the screen. We combined the activity recorded from the two interacting premotor areas during the TOGETHER trials, for two different subpopulations, consisting of cells directionally tuned in the SOLO (tuned S) or in the TOGETHER (tuned T) tasks. The latter had tuning properties shaped by the joint-performance, contrary to the former, whose tuning function was associated with the individual action. The decoded cursor trajectories of joint-action were computed through the analysis of the time-varying population vectors obtained from the two subsets of neurons. We found that the combination of the tuned T cells recorded from premotor cortex of the two interacting brains provided a representation of joint performance far more accurate than that of the tuned S neurons studied in the same condition. This confirms that when a goal is shared between two co-agents, the outcome of their interaction cannot be reduced to the linear combination of the two individual actions (Woodworth, 1939), but rather emerges thanks to their “co-representation” (Obhi and Sebanz, 2011; Wenke et al., 2011).
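The logic of a population-vector decoding of this kind can be sketched as follows. This is a simplified illustration, not the procedure of the study: it assumes that each cell is summarized by a preferred direction and a baseline rate, weights each preferred-direction unit vector by the deviation of the firing rate from baseline, and integrates the resulting population vectors over time bins to obtain a trajectory. The names `population_vector` and `decode_trajectory`, the `gain` parameter, and all numbers are hypothetical.

```python
import math

def population_vector(rates, baselines, preferred_dirs):
    """Sum of preferred-direction unit vectors weighted by rate change from baseline."""
    x = sum((r - b) * math.cos(pd)
            for r, b, pd in zip(rates, baselines, preferred_dirs))
    y = sum((r - b) * math.sin(pd)
            for r, b, pd in zip(rates, baselines, preferred_dirs))
    return x, y

def decode_trajectory(rate_bins, baselines, preferred_dirs, gain=1.0):
    """Integrate population vectors across time bins, starting at the origin."""
    pos = (0.0, 0.0)
    trajectory = [pos]
    for rates in rate_bins:
        vx, vy = population_vector(rates, baselines, preferred_dirs)
        pos = (pos[0] + gain * vx, pos[1] + gain * vy)
        trajectory.append(pos)
    return trajectory

# Hypothetical population pooled from both animals: combining the two brains
# is modeled here simply by concatenating their cells into one list.
preferred_dirs = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]  # radians
baselines = [10.0, 10.0, 10.0, 10.0]                            # spikes/s
rate_bins = [[14.0, 10.0, 6.0, 10.0],                           # one row per time bin
             [14.0, 10.0, 6.0, 10.0]]
trajectory = decode_trajectory(rate_bins, baselines, preferred_dirs)
```

In this sketch, using tuned S or tuned T cells would only change which preferred directions and baselines are passed in; the decoded trajectory can then be compared with the actual cursor path.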

Motor coordination with an artificial non-interactive partner

A simulated joint-action task (SIM-TOGETHER) was used to study the neural processes of interpersonal coordination under sensory-motor conditions identical to those of natural joint-action, but different in the interactive versus non-interactive nature of the task and in the absence of a second monkey in the recording room. Behavioral data showed that when acting with a virtual non-interactive mate, the monkey was forced to follow the other action. Even under these circumstances, as during real interactions, we found joint-action related cells whose activity was modulated by the action context (individual or to be coordinated with the virtual mate). This suggests that these cells are associated with interpersonal motor coordination (i.e., with “acting with”, whoever the co-actor is), rather than signaling the presence of another monkey in the action space. Nevertheless, joint-action neurons were more strongly modulated by the more complex task demands of natural interactions. In the latter, the monkey had to deal with continuously refreshed, and therefore less predictable, inputs generated by its partner's intentions, unlike in the simulated condition, which was characterized by more stereotyped and repeated behavioral patterns, represented by the cursor trajectories controlled by the virtual mate.

Under this scenario, the power of joint-action neurons to discriminate individual actions from those performed concurrently with the artificial agent not only decays drastically during task execution, but also vanishes during the planning phase. These cells are therefore sensitive to the level of interactivity, which is higher in tasks where two subjects must coordinate in a reciprocal and naturalistic fashion to accomplish a common goal. Thus, the relevance of these neurons in social cognition might reside in allowing coordinated motor interactions among individuals, because being social implies, among other cognitive attitudes, being able to flexibly adjust one's own action to that of a conspecific (Munuera et al., 2018).

The role of observation in joint-action

A crucial mechanism for understanding how the actions performed by others are represented in our brain is provided by the mirror system (di Pellegrino et al., 1992; Gallese et al., 1996; Rizzolatti and Sinigaglia, 2010), which offers empirical evidence for a unifying process of action perception and execution. This mechanism has also been discussed as a way to understand shared cooperative contexts (Pacherie and Dokic, 2006). It has been argued that mirror activity is a biologically tuned phenomenon (Tai et al., 2004), since this system is poorly active when an action is performed by an artificial effector (e.g., a robot), and therefore in the absence of an effective motor matching representation. However, single-cell studies in monkeys do show mirror-like responses to tool-based actions (Fogassi et al., 2005; Umiltà et al., 2008), suggesting that mirroring can also be found as a result of the internalization of an unambiguous motor representation after long training. Furthermore, in motor and dorsal premotor cortex, the directional tuning of cells remains similar during both observation and execution of familiar tasks (Tkach et al., 2007), even in abstract contexts, as when guiding a cursor in the absence of any visible arm displacement. In our experiment, we did observe neurons with mirror-like responses, consisting of neural activity modulation during both force application and observation trials, when the monkey observed on the screen the motion of the visual cursor determined by the other animal.

However, the matching operations performed by joint-action neurons, between the own (executed) kinematics and the other (observed) behavior, occur independently of mirror-like mechanisms. Our results show, in fact, that the presence or absence of observation-related activity in joint-action cells does not influence the accuracy with which they encode the two interacting behaviors. Therefore, it is tempting to speculate that the functional role of these premotor cells is grounded in predictive coding (Friston, 2005), rather than in feedback mechanisms associated with the sensory observation-related input. In canonical action-related neurons, instead, mirroring improves the representation of both actions, suggesting that mirror-like processes can partially contribute to the representation of the joint outcome, through the observation-derived signals processed by these neurons. For this population, observation-related activity can enhance the encoding not only of the other, but also of the own action. This is consistent with the idea that mirror neurons might contribute to self-action monitoring, as held by theoretical predictions (Bonaiuto and Arbib, 2010) and also suggested by experimental findings in monkeys (Maranesi et al., 2015). Therefore, joint-action representation can emerge from concurrent operations of different populations of cells, such as the canonical action-related and joint-action neurons, each contributing, albeit differently, to the sensory-motor processing of the own and the other behavior.

Footnotes

The authors declare no competing financial interests.

This work was supported by the MIUR of Italy (Grant N. 2010XPMFW4_004) and by SAPIENZA-University of Rome (C26A15NXPT) to A.B.-M. We thank Paul B. Johnson for his valuable contribution to laboratory setup, and Roberto Caminiti for his useful comments and suggestions during the preparation of the paper.

References

- Aglioti SM, Cesari P, Romani M, Urgesi C (2008) Action anticipation and motor resonance in elite basketball players. Nat Neurosci 11:1109–1116. 10.1038/nn.2182

- Batschelet E. (1981) Circular statistics in biology. New York: Academic.

- Bonaiuto J, Arbib MA (2010) Extending the mirror neuron system model: II. What did I just do? A new role for mirror neurons. Biol Cybern 102:341–359. 10.1007/s00422-010-0371-0

- Bratman M. (1992) Shared cooperative activity. Philos Rev 101:327–341. 10.2307/2185537

- Brunamonti E, Genovesio A, Pani P, Caminiti R, Ferraina S (2016) Reaching-related neurons in superior parietal area 5: influence of the target visibility. J Cogn Neurosci 28:1828–1837. 10.1162/jocn_a_01004

- Caminiti R, Borra E, Visco-Comandini F, Battaglia-Mayer A, Averbeck BB, Luppino G (2017) Computational architecture of the parieto-frontal network underlying cognitive-motor control in monkeys. eNeuro 4:ENEURO.0306-16.2017. 10.1523/ENEURO.0306-16.2017

- Chang SW, Gariépy JF, Platt ML (2013) Neuronal reference frames for social decisions in primate frontal cortex. Nat Neurosci 16:243–250. 10.1038/nn.3287

- Cisek P, Kalaska JF (2002) Modest gaze-related discharge modulation in monkey dorsal premotor cortex during a reaching task performed with free fixation. J Neurophysiol 88:1064–1072. 10.1152/jn.00995.2001