Abstract

Objective. To revise the Self-Assessment of Perceived Level of Cultural Competence (SAPLCC) instrument and validate it within a national sample of pharmacy students.

Methods. A cross-sectional study design was used with a convenience sample of pharmacy schools across the country. The target population was Doctor of Pharmacy (PharmD) students enrolled in the participating pharmacy programs. Data were collected using the SAPLCC. Exploratory factor analysis with principal components extraction and varimax rotation was used to identify the factor structure of the SAPLCC instrument.

Results. Eight hundred seventy-five students from eight schools of pharmacy completed the survey. Exploratory factor analysis resulted in the selection of 14 factors that explained 76.6% of the total variance and the grouping of 75 of the 86 items in the SAPLCC into six domains: knowledge (16 items), skills (11 items), attitude (15 items), encounters (11 items), abilities (13 items), and awareness (9 items). Using a more diverse, representative sample of pharmacy students resulted in important revisions to the constructs of the SAPLCC and allowed the identification of a new factor: social determinants of health.

Conclusion. The 75-item SAPLCC is a reliable instrument covering a full range of domains that can be used to measure pharmacy students’ perceived level of cultural competence at baseline and upon completion of the pharmacy program.

Keywords: cultural competence, student assessment, pharmacy education, questionnaire, multi-institutional study

INTRODUCTION

Culturally competent communication and service are required of pharmacists and other health care providers to decrease health care disparities and improve access to quality care for a growing multicultural, multilingual, and diverse population.1 To measure change in the provision of culturally competent services, the Agency for Healthcare Research and Quality developed the Consumer Assessment of Healthcare Providers and Systems (CAHPS) cultural competence item set to capture patients’ perspectives on the cultural competence of their health care providers.2 As a way to measure the quality of culturally competent services, The Joint Commission developed a roadmap of standards requiring the provision of culturally competent and patient-centered care in hospitals.3 Accreditation agencies including the Accreditation Council for Pharmacy Education (ACPE) have included training in cultural sensitivity as a requirement in health care-related academic programs and called for the incorporation of and student exposure to cultural factors in didactic and experiential curricula.4 The Center for the Advancement of Pharmacy Education (CAPE) includes cultural sensitivity (Standard 3.5) and self-awareness (Standard 4.1) as key elements of educational outcomes.5 Similarly, the Healthy People 2020 initiative has included specific goals for new objectives to increase the percentages of medical, dental, and pharmacy programs providing content in cultural diversity in required courses.6

Implementing cultural competence training in health care academic programs, however, is still a challenge, especially when students lack motivation to become culturally competent, do not value the impact that culturally competent services can have on patient outcomes, and may be resistant to dealing with their personal beliefs and stereotypes.7-9 A 2013 review of pharmacy programs incorporating curricula in cultural competence, conducted by the American College of Clinical Pharmacy (ACCP), found that there is a great need to assess the impact of this training and its educational outcomes, particularly for the provision of patient-centered, culturally sensitive health care.10 This ACCP review recommended that schools implement a pilot curriculum in cultural competence including specific learning objectives and assessment techniques. Because of the limitations of class time and lack of faculty preparation in cultural competence training, Echeverri and colleagues11,12 have recommended first conducting a baseline assessment of students’ perceived level of competence and then targeting culturally diverse educational interventions to address the identified needs.

Considering the lack of a specific instrument for assessing cultural competence in pharmacy students, in 2010, Echeverri and colleagues adapted two complementary scales with the permission of the respective authors: the Clinical Cultural Competency Questionnaire (CCCQ),13 and the California Brief Multicultural Competency Scale (CBMCS).14 The validation process of the CCCQ allowed the identification of nine constructs for curriculum development in cultural competence,15 and the validation process of the CBMCS confirmed the existence of four different constructs needed to measure multicultural competence.16 Although both measures were found to be reliable instruments, the CBMCS was too brief to comprehensively evaluate training needs if used alone, and the CCCQ did not include specific domains that were included in the CBMCS and considered critical when teaching cultural competence such as awareness of racial dynamics and self-reflection.17,18 The new instrument was called the Self-Assessment of Perceived Level of Cultural Competence (SAPLCC). Published pilot studies in which the SAPLCC was administered to pharmacy students have confirmed that it is a reliable and practical instrument that allows for the creation of student profiles in cultural competence and the identification of students’ needs for training.11,12 Initial validation of the SAPLCC was restricted to one school with a student body that was predominantly Asian and African American. Accordingly, the objective for this study was to validate the SAPLCC within a more diverse, national sample of pharmacy students. A more representative sample population would provide additional validity evidence supporting the SAPLCC constructs and inform a well-rounded development of cultural competence curricula in pharmacy programs.

METHODS

A cross-sectional study design was used with a convenience sample of pharmacy schools in the United States. Faculty members at schools known to be delivering curricula in cultural competence were invited to participate. The target respondent population was limited to students enrolled in any year of an ACPE-accredited PharmD program in the US. Efforts were made to include at least one school from each US geographical region. The study was approved by the institutional review board of each participating school. Collaboration agreements to share de-identified data were signed by each school.

Data collection was conducted online. A customized link to the survey instrument was created for each participating program. Students were contacted during class sessions by the respective faculty members at the schools participating in the study. Email invitations were sent out by the investigators to their own students in the fall of 2015. The email included the rationale for the study, an invitation to participate, and a dedicated link to the survey specifically created for each school. Students’ completion of the survey instrument was considered to be implicit consent to participate in the study. Because each school conducted the survey at a different time, data collection lasted for six months. Because the data were collected online, the start and end times at which each student completed the survey instrument were documented. This allowed us to calculate the average time taken by students to complete the questionnaire.

The SAPLCC questionnaire included 86 items organized into six main educational domains: knowledge (16 items), skills (16 items), attitudes (17 items), encounters (12 items), abilities (15 items), and awareness (10 items). The items were reorganized according to the domains previously identified16,17 and coded accordingly for easy identification. Although no modifications were made to any item in the SAPLCC, in this study we changed the item order and codes to make the items easier to identify within each domain. Because of this reorganization, the item codes do not match those in previous publications of the SAPLCC.9,11,12 The SAPLCC scale for all responses ranged from one to four (1=not at all, 2=a little, 3=quite a bit, 4=very). Demographic data collected included race/ethnicity, academic year, gender, and age.

Exploratory factor analysis (EFA) with principal components extraction and varimax rotation was used to identify the relevant factors in the SAPLCC instrument. Because we considered this study to be an update to the original validation of the scale, we replicated Echeverri’s methodology.15 The appropriateness of using factor analysis was evaluated through the Bartlett test for sphericity and the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy. The number of factors to be retained was decided based on the Kaiser criterion by dropping all factors with eigenvalues less than 1.0. Item statistics and item-total correlations were calculated. An item-total correlation greater than 0.5 was considered acceptable. Items that showed cross-loadings higher than 0.35 or an extraction communality lower than 0.50 were eliminated. The Cronbach alpha was used to calculate the reliability of the extracted factors, and a value of 0.7 was considered acceptable. The data were analyzed using IBM SPSS, version 19 (IBM Corp, Armonk, NY).
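The factorability checks and the Kaiser retention rule described above can be illustrated with a minimal Python sketch. It is not the SPSS procedure used in the study: it relies on NumPy, the function names (`simulate_items`, `bartlett_sphericity`, `kmo`, `kaiser_retained`) are hypothetical, and the data are simulated rather than SAPLCC responses. Varimax rotation and the p value for the Bartlett statistic are deliberately left out.

```python
import numpy as np


def simulate_items(n=500, seed=0):
    """Simulate 6 continuous items driven by 2 latent factors (illustrative only)."""
    rng = np.random.default_rng(seed)
    latent = rng.normal(size=(n, 2))
    loadings = np.zeros((6, 2))
    loadings[:3, 0] = 0.8  # items 1-3 load on factor 1
    loadings[3:, 1] = 0.8  # items 4-6 load on factor 2
    noise = rng.normal(size=(n, 6)) * 0.6
    return latent @ loadings.T + noise


def bartlett_sphericity(data):
    """Return (chi_square, degrees_of_freedom) for Bartlett's test of sphericity."""
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(corr)
    chi_square = -(n - 1 - (2 * p + 5) / 6.0) * logdet
    dof = p * (p - 1) // 2
    return chi_square, dof


def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(corr)
    # Partial correlations are obtained from the inverse correlation matrix.
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale
    off_diagonal = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diagonal] ** 2)
    a2 = np.sum(partial[off_diagonal] ** 2)
    return r2 / (r2 + a2)


def kaiser_retained(data):
    """Count components kept under the Kaiser criterion (eigenvalue >= 1.0)."""
    corr = np.corrcoef(data, rowvar=False)
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]  # descending order
    return int(np.sum(eigenvalues >= 1.0)), eigenvalues


if __name__ == "__main__":
    data = simulate_items()
    chi_square, dof = bartlett_sphericity(data)
    n_retained, eigenvalues = kaiser_retained(data)
    print("Bartlett chi-square:", round(chi_square, 1), "df:", dof)
    print("KMO:", round(kmo(data), 3))
    print("Components retained:", n_retained)
    print("Variance explained by retained components:",
          round(eigenvalues[:n_retained].sum() / eigenvalues.sum(), 3))
```

Because the eigenvalues of a correlation matrix sum to the number of items, the share of total variance explained by the retained components is the sum of their eigenvalues divided by the item count.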

RESULTS

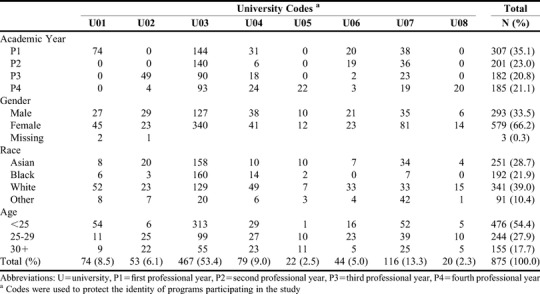

In total, 875 students from eight of the 11 pharmacy programs invited completed the SAPLCC (Table 1). The pharmacy programs were located in different regions of the country: one in the Midwest, one in the East, three in the South, and three in the West. Five programs were located at private universities and three at public universities. All except one program were four years in length. University codes were used to protect the identity of the programs participating in the study (U01 to U08). Most of the students were female (66.2%) and younger than 25 years (54.4%). Most participants self-identified as one of the three main racial categories represented in the pharmacy profession: white (39.0%), black/African American (21.9%), and Asian (28.7%). The remaining participants (10.4%) represented other racial/ethnic groups (eg, Hispanic, American Indian, multiple races). Most participants (58.1%) were enrolled in one of the first two years of a PharmD program.

Table 1.

Student Demographics (N=875)

Considering the different characteristics of the curriculum in cultural competence, some of the participating universities targeted the study to the incoming class (U01), others targeted specific upper-level classes (U02, U05 and U08), and the remainder targeted the entire pharmacy student body (U03, U04, U06 and U07). Because the study used convenience sampling, sample size calculation was not done a priori. However, given that the objective of this study was to validate the SAPLCC scale using an exploratory factor analysis, a sample size greater than 500 was considered adequate for the data analysis and interpretation.19

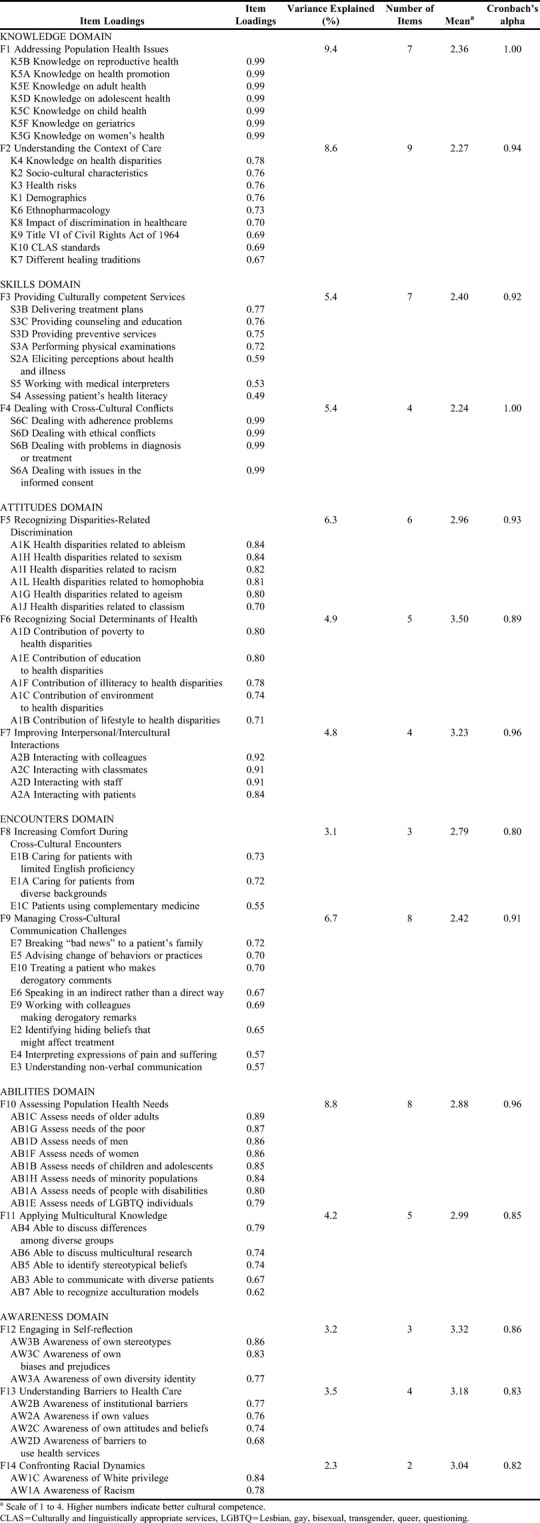

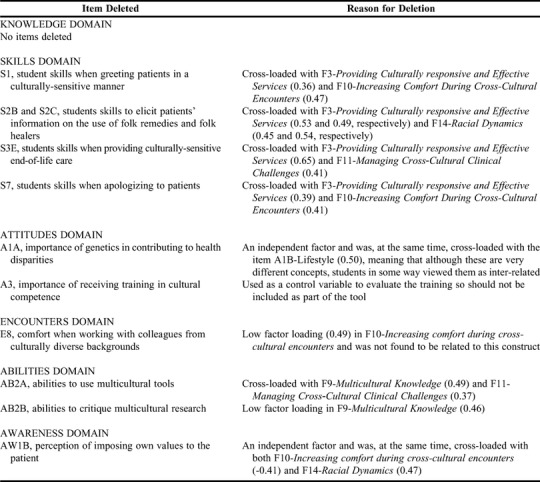

Exploratory factor analysis resulted in the retention of 75 of the 86 items in the SAPLCC, which were organized in 14 factors and explained 76.6% of the total variance. The 14 factors were grouped into six domains (Table 2). All extracted items had adequate extraction communality (h²>0.50) and corrected item-total correlations (>0.20). Cronbach alpha was 0.95 for the sum scale, supporting the claim that the SAPLCC is a reliable instrument. Internal consistency reliability coefficients for each factor were consistently above 0.80, suggesting that the subscales are also reliable when considered independently (Table 2). The appropriateness of factor analysis as the statistical technique in this study was confirmed by the Bartlett test statistic for sphericity and the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy (chi-square=112342.6, p<.001; KMO value=0.935). The scale structure, items under each domain and factor, and the reliability statistics are presented in Table 2. Overall, 11 items were eliminated during the factor extraction process either because they did not have a high structure coefficient (≥0.55) or because they cross-loaded with other factors (≥0.35) (Table 3). No items were eliminated because their deletion caused the alpha to increase, they had a reduced range of responses, or they showed extreme means. However, in the Skills domain, S4 (assessing health literacy) and S5 (working with medical interpreters) were retained even though they had a low structure coefficient (<0.55). We opted to keep these items in the survey on conceptual grounds, given the importance of these two skills when working with minority and multilingual populations, and on statistical grounds, because they did not cross-load and their removal did not increase subscale reliability. The scale and scoring methods are available from the corresponding author upon request.
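The reliability statistics reported above (Cronbach alpha and corrected item-total correlations) can be sketched as follows. This is an illustrative NumPy implementation of the standard formulas, not the authors' SPSS output; the function names and the small response matrix are hypothetical.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach alpha for a respondents-by-items matrix of scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1)
    total_variance = items.sum(axis=1).var(ddof=1)
    return (k / (k - 1)) * (1 - item_variances.sum() / total_variance)


def corrected_item_total(items):
    """Correlation of each item with the sum of the remaining items."""
    items = np.asarray(items, dtype=float)
    total = items.sum(axis=1)
    return np.array([
        np.corrcoef(items[:, j], total - items[:, j])[0, 1]
        for j in range(items.shape[1])
    ])


# Hypothetical responses from five students to a three-item subscale (1-4 scale).
responses = np.array([
    [2, 3, 3],
    [4, 4, 3],
    [1, 2, 2],
    [3, 3, 4],
    [2, 2, 1],
])

if __name__ == "__main__":
    print("alpha:", round(cronbach_alpha(responses), 3))  # 6/7, about 0.857
    print("corrected item-total r:", np.round(corrected_item_total(responses), 3))
```

When every respondent gives the same answer to all items in a subscale, the item variances sum to exactly 1/k of the total-score variance and alpha equals 1, which is the pattern that warrants a closer look at possible redundancy or low response variation.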

Table 2.

Factor Structure of the Self-Assessment of Perceived Level of Cultural Competence

Table 3.

Items Deleted During Validation Process for the Self-Assessment of Perceived Level of Cultural Competence

As shown in Table 2, F1 and F4 had Cronbach alphas of 1 and all items had coefficient loadings of 0.99. Cronbach alpha is a commonly used indicator of scale reliability (internal consistency) and presumes that all items in the construct have the same coefficient loadings and are equally important measures of the construct (Rasch model),20 which is correct in both F1 and F4. Considering that alpha values are directly and positively affected by the number of items that make up a scale, Netemeyer recommends that researchers have a reason for including each item in a questionnaire and that they examine carefully the scale length, average level of inter-item correlations, unnecessary redundancy of item wording, and complexity of the constructs.21 We repeated the factor analysis after deleting all the items in these two factors, with no important change in the Cronbach alpha values. Factor 1 (F1) assesses students’ knowledge to address population health issues, which are of high importance when working as health care providers. Factor 1 is the dominant factor in the scale (9.4% of variance explained). Although the items sound repetitive, each one refers to a completely different concept (health promotion, reproductive health, women’s health, etc), and they cannot be seen as redundant.

Factor 4 (F4) assesses students’ skills in dealing with cross-cultural conflicts. The items in this factor are relevant (explained 5.37% of variance) to all health care providers, who should be prepared to adequately handle disagreements with patients because of different cultural and/or religious points of view. We considered that the high Cronbach alphas may have been due more to low variation in respondents’ answers than to redundancy of the questions. Both F1 and F4 were among the lowest mean scores of the factors (2.36 and 2.24, respectively), meaning that a low percentage of students (13.5% and 13.1%, respectively) perceived they had the knowledge and skills in the topics covered by these factors. Although it makes sense to delete items that are not important in order to have a shorter measure, in this case, we considered that deleting the items would have an adverse impact on the measure’s conceptualization because of the relevance of these topics when measuring and developing curriculum in cultural competence.
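The dependence of alpha on scale length noted in the discussion of F1 and F4 can be made concrete with the standardized form of the coefficient. This is a textbook identity offered as an illustration, not a calculation from the study data; $k$ denotes the number of items and $\bar{r}$ the average inter-item correlation, and the worked values assume $\bar{r}=0.5$.

```latex
\alpha_{\text{std}} = \frac{k\,\bar{r}}{1 + (k-1)\,\bar{r}}
\qquad
\begin{aligned}
k = 3,\ \bar{r} = 0.5 &: \quad \alpha_{\text{std}} = \frac{1.5}{2.0} = 0.75 \\
k = 10,\ \bar{r} = 0.5 &: \quad \alpha_{\text{std}} = \frac{5.0}{5.5} \approx 0.91
\end{aligned}
```

With the average inter-item correlation held fixed, adding items alone raises alpha, and as $\bar{r}$ approaches 1 the coefficient approaches 1 regardless of scale length.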

Normally, three of the factors in the SAPLCC would have been considered weak because they have three or fewer items: F8 (3 items), F12 (3 items), and F14 (2 items). However, together these three factors have an important impact on the overall scale (8.7% of total variance). Considering that the items in these factors satisfied the criteria defined, were not cross-loaded with other items in the instrument, had high Cronbach alphas, and would be highly relevant when implementing training in cultural competence, we opted to keep them in the instrument.

Students’ mean overall SAPLCC score was 2.74 (scale of 1 to 4 on which 1 is the lowest) indicating that students believed they had at least some cultural competence knowledge and skills. Considering that many studies report students’ tendency to self-assess their own skills higher than their actual performance,22,23 we revised the factors with high and low subscale mean scores (Table 2). The factors in the attitudes (F6, F7) and the awareness (F12, F13 and F14) domains had higher scores (>3), while the factors in the knowledge (F1, F2) and skills (F3 and F4) domains had lower scores (<2.4).

DISCUSSION

Although previous studies15,16 of the SAPLCC grouped 68 items into 13 different constructs identified during the validation processes, in this study we found 75 items grouped into 14 constructs to be a more reliable structure of the integrated measure. In the current study, items in F6 (Recognizing Social Determinants of Health) were independent from the other factors, showing that social determinants of health should be considered when developing cultural competence curriculum. The importance of social determinants of health was acknowledged by their inclusion in the 2013 revised educational outcomes defined by the Center for the Advancement of Pharmacy Education (CAPE), which states that student pharmacists should be able to recognize social determinants of health that need to be addressed in order to diminish disparities and inequities in access to quality care.5

In addition to the identification of a new factor, there were notable changes to items within the SAPLCC. Five additional items (K1, K2, K3, K4, and K8) that were not included previously were added to F2 (Understanding the Context of Care). Considering the continually increasing diversity of the US population, it makes sense to include these cultural competence topics in pharmacy education curricula. Health care providers must understand important cultural aspects in the context of care such as the demographics of the population they serve, health disparities and health risks of that specific population, and the impact that discrimination and ethno-pharmacology have in patients’ access to quality care.

A third notable result from this study was the deletion of the item AW1B-Power Imbalance. In this study AW1B was an independent factor that was cross-loaded with F14, “confronting racial dynamics,” and also cross-loaded with items measuring students’ skills to elicit patients’ information on the use of folk remedies (S2B) and folk healers (S2C), which were deleted during the process (Table 3). AW1B also cross-loaded negatively with F8 (“increasing comfort during cross-cultural encounters”), meaning that power imbalance (imposing one’s own values onto the patient) may have an important negative impact on provider-patient comfort level during the clinical encounter. These results may indicate that imposing one’s own values on the patient may be more detrimental when there is discordance between the provider’s health beliefs and those of the patient. Culturally competent health care providers should be prepared to address the complex differences between traditional and holistic views of health and illness, which may often arise during multicultural encounters. Although AW1B-Power Imbalance, S2B-Folk Remedies and S2C-Folk Healers were deleted during the process, similar concepts are included in item S2A, which addresses patients’ perceptions about health and illness, and item E1C, which addresses patients’ use of complementary and alternative medicine.

Based on our findings, the SAPLCC appears to be a reliable instrument with very high internal consistency (Cronbach alpha=0.95) in this broad sample of student pharmacists. We also concluded that the SAPLCC instrument can be used to reliably measure students’ perceptions of their level of cultural competence. Although the subscales were also reliable (Cronbach alpha over 0.8, Table 2), there are important points that need to be considered when making the decision to use the SAPLCC for curriculum development and student self-assessment of cultural competence. One of the advantages of the SAPLCC is that its structure and organization allow for the division of the instrument into subscales, each with high reliability coefficients (Table 2). Based on the results of this study, the SAPLCC could be used as a baseline measurement in a given PharmD program. SAPLCC results can be used to identify the topics that most need to be addressed across a group, and also to make decisions regarding domains and factors to focus on within a specific curriculum.11,12 We also recommend that pharmacy schools administer the SAPLCC again at the end of the cultural competence curriculum to measure change in students’ perceived level of cultural competence. If the curriculum lasts more than one year, we recommend retesting students, at least in those domains and factors included in the teaching. Considering the SAPLCC results from the perspective of curricular assessment, it appears we are addressing the impact of different social determinants on health disparities as well as underlining the importance of self-reflection to identify and address discrimination and improve intercultural interactions, but there is still a need to focus more on increasing students’ knowledge and skills to connect health issues to the context of care that results in the provision of effective cross-cultural competent services.

Although the SAPLCC is a long instrument in terms of number of items, most respondents completed it in an average of 14-15 minutes. In order to increase response rates and answer quality, we recommend that schools administer the SAPLCC electronically as an in-class academic activity. Electronic administration of the SAPLCC generates immediate results that the facilitator could use to initiate an in-class discussion of the need to have a cultural competence curriculum. If students have the opportunity to view results and discuss rationale and main components to be addressed in the curriculum, they may be more engaged and motivated to become culturally competent. This may be especially true if the SAPLCC is used in conjunction with a particular learning activity.

Despite methodological steps to strengthen the study findings, some limitations still exist. These include the potential for social desirability bias, the use of self-assessment instead of actual performance measures, average time needed for survey completion, and limited generalizability to other health care provider subgroups. Social desirability bias may exist in self-reported responses for students with conflicting views towards certain domains of cultural competence in the SAPLCC. The potential for this bias was reduced in most universities by administering the survey as an anonymous, non-graded, voluntary class activity, and not having faculty members present during survey completion. Still, the potential for social desirability cannot be completely dismissed and should be examined in greater detail in future studies. As stated in the name, SAPLCC is a self-assessment tool of perceived level of competence, which may differ from actual knowledge and performance. Considering that ACPE4 requires students to be able to “examine and reflect on personal knowledge, skills, abilities, beliefs, biases, motivation, and emotions that could enhance or limit their personal and professional growth” (Standard 4.1), the SAPLCC may be used as one of the tools to document students’ self-assessment of and reflection on learning needs (Standard 10). However, because students tend to overestimate their cultural competences,24 we highly recommend that administration of the SAPLCC be accompanied with other tools such as standardized patients, objective structured clinical examinations (OSCEs), and implicit bias association tests to measure students’ actual performance. Although the average time required for a student to complete the SAPLCC was 14 to 15 minutes, some participants needed considerably more time to complete the survey. In those cases, response fatigue and/or distractions may have potentially affected the quality of their answers.
Because the survey was completed online in the students’ own time instead of as an in-class activity, it is impossible to know what factors may have influenced students’ completion time. While some students may have answered quickly without interruptions and/or without reflecting much on each question, others may have focused intently on each question or been doing other activities at the same time. Finally, these findings represent one subgroup of future health care providers (pharmacy students) at a convenience sample of pharmacy schools and therefore may not be generalizable to students in other professions (eg, nursing students, medical students, etc). Further research is warranted among students and providers in other professions, particularly given the movement towards interprofessional education and practice.

CONCLUSION

The SAPLCC demonstrated acceptable reliability in this diverse sample of pharmacy students from a broad range of institutions. The psychometric evidence from this study supports a full range of distinct domains that can be used to measure both the baseline and post-education cultural competence (knowledge, skills, attitudes, encounters, abilities, and awareness) level of pharmacy students. Using a more diverse representative sample of pharmacy students resulted in important revisions to the constructs and allowed the identification of a new factor, F6-Recognizing social determinants of health, which is aligned with the 2013 CAPE outcomes. Continued evaluation of the SAPLCC factor structure is highly recommended, especially conducting a higher order analysis for those factors with overly high Cronbach alphas. Data collected also may be used for the construction of student profiles of cultural competence, which could identify the main constructs on which to focus during curricular revision within an institution.

ACKNOWLEDGMENTS

The authors would like to thank Dr. Rajan Radhakrishnan (University of Charleston School of Pharmacy) and Dr. Krystal Moorman (University of Utah College of Pharmacy) for facilitating data collection at their respective institutions. At the time of the research, Dr. Alkhateeb was affiliated with the University of Texas at Tyler, Tyler, TX.

Dr. Margarita Echeverri’s contribution to the study was supported in part by funds from the Bureau of Health Professions, Health Resources and Services Administration, Department of Health and Human Services, under grant number D34HP00006, and by the National Institute on Minority Health and Health Disparities, grant number S21MD0000100.

REFERENCES

- 1. Goode TD, Dunne C. Policy Brief 1: Rationale for Cultural Competence in Primary Care. National Center for Cultural Competence; Washington, DC: Georgetown University Center for Child and Human Development; 2003. https://nccc.georgetown.edu/documents/Policy_Brief_1_2003.pdf

- 2. Agency for Healthcare Research and Quality (AHRQ). CAHPS Cultural Competence Item Set. Content last reviewed September 2016. AHRQ, Rockville, MD. http://www.ahrq.gov/cahps/surveys-guidance/item-sets/cultural/index.html.

- 3. The Joint Commission. Advancing Effective Communication, Cultural Competence, and Patient- and Family-Centered Care: A Roadmap for Hospitals. Oakbrook Terrace, IL: The Joint Commission, 2010, https://www.jointcommission.org/roadmap_for_hospitals/.

- 4. Accreditation Council for Pharmacy Education (ACPE). 2016 Accreditation standards and key elements for the professional program in pharmacy leading to the Doctor of Pharmacy degree, Chicago, Illinois: ACPE, https://www.acpe-accredit.org/pdf/Standards2016FINAL.pdf.

- 5. Medina MS, Plaza CM, Stowe CD, et al. Center for the Advancement of Pharmacy Education: 2013 Educational Outcomes. Am J Pharm Educ. 2013;77(8):Article 162. doi: 10.5688/ajpe778162.

- 6. U.S. Department of Health & Human Services (DHHS). Healthy People 2020, Educational and Community-Based Programs: Objectives. http://healthypeople.gov/2020/topicsobjectives2020/objectiveslist.aspx?topicId=11.

- 7. Murray-Garcia JL, Harrell S, Garcia JA, et al. Self-reflection in multicultural training: be careful what you ask for. Acad Med. 2005;80(7):694–701. doi: 10.1097/00001888-200507000-00016.

- 8. Poirier TI, Butler LM, Devraj R, Gupchup GV, Santanello C, Lynch JC. A Cultural Competency Course for Pharmacy Students. Am J Pharm Educ. 2009;73(5):Article 81. doi: 10.5688/aj730581.

- 9. Echeverri M. In: Cultural Diversity: International Perspective, Impacts on the Workplace and Educational Challenges. 1st ed. Snider M, editor. New York: Nova Science Publishers, Inc; 2014. pp. 49–67. https://www.novapublishers.com/catalog/product_info.php?products_id=50914 Chapter 2, Factors Influencing Students’ Perceptions of Training in Cultural Diversity Competence.

- 10. American College of Clinical Pharmacy. O’Connell MB, Rodriguez M, Poirier T, Karaoui LR, Echeverri M, et al. Cultural Competency in Health Care and Its Implications for Pharmacy, Part 3A: Emphasis on Pharmacy Education Curriculums and Future Directions. White Paper approved by the American College of Clinical Pharmacy Board of Regents. Pharmacotherapy. 2013;33(12):e347–e367. doi: 10.1002/phar.1353. http://www.accp.com/docs/positions/whitePapers/ACCP_CultComp_3A.pdf

- 11. Echeverri M, Brookover C, Kennedy K. Assessing Pharmacy Students’ Self-Perception of Cultural Competence. J Health Care Poor Underserved. 2013;24(10):64–92. doi: 10.1353/hpu.2013.0041.

- 12. Echeverri M, Dise T. Racial Dynamics and Cultural Competence Training in Medical and Pharmacy Education. J Health Care Poor Underserved. 2017;28(1):266–278. doi: 10.1353/hpu.2017.0023.

- 13. Like RC. Clinical Cultural Competency Questionnaire (pre-training version). New Brunswick, NJ: Center for Healthy Families and Cultural Diversity, Department of Family Medicine, UMDNJ-Robert Wood Johnson Medical School; 2001. http://rwjms.rutgers.edu/departments_institutes/family_medicine/chfcd/grants_projects/documents/Pretraining.pdf

- 14. Gamst G, Dana RH, Der-Karabetian A, et al. Cultural competency revised: the California Brief Multicultural Competency Scale. Meas Eval Couns Dev. 2004;37(3):163–183.

- 15. Echeverri M, Brookover C, Kennedy K. Nine constructs of cultural competence for curriculum development. Am J Pharm Educ. 2010;74(10):Article 181. doi: 10.5688/aj7410181.

- 16. Echeverri M, Brookover C, Kennedy K. Factor analysis of a modified version of the California Brief Multicultural Competence Scale with minority pharmacy students. Adv Health Sci Educ. 2011;16:1–18. doi: 10.1007/s10459-011-9280-9.

- 17. Boutin-Foster C, Foster JC, Konopasek L. Physician, know thyself: the professional culture of medicine as a framework for teaching cultural competence. Acad Med. 2008;83(1):106–111. doi: 10.1097/ACM.0b013e31815c6753.

- 18. Watt K, Abbott P, Reath J. Developing cultural competence in general practitioners: an integrative review of the literature. BMC Family Practice. 2016;17:158. doi: 10.1186/s12875-016-0560-6.

- 19. Comrey AL, Lee HB. A First Course in Factor Analysis. 2nd edition. Hillsdale, NJ: Lawrence Erlbaum; 1992. 430 pp.

- 20. Tavakol M, Dennick R. Making sense of Cronbach’s alpha. Int J Med Educ. 2011;2:53–55. doi: 10.5116/ijme.4dfb.8dfd.

- 21. Netemeyer R. Can a Reliability Coefficient Be Too High? Chapter III, Measurement, in Iacobucci D. (ed.), J Consum Psychol: Special Issue on Methodological and Statistical Concerns of the Experimental Behavioral Researcher. 2001;10 (1&2), Mahwah, NJ: Lawrence Erlbaum Associates, p. 56-58. http://www2.owen.vanderbilt.edu/dawn.iacobucci/jcpmethodsissue/iii-meas-coef-alpha.pdf.

- 22. Ross JA. The reliability, validity, and utility of self-assessment. Prac Assess Res Eval. 2006;11(10):1–13.

- 23. Brown GTL, Andrade HL, Chen F. Accuracy in student self-assessment: directions and cautions for research. Assess Educ Princ, Pol Prac. 2015;22(4):444–457.

- 24. Gozu A, Beach MC, Price EG, et al. Self-administered instruments to measure cultural competence of health professionals: a systematic review. Teach Learn Med. 2007;19(2):180–190. doi: 10.1080/10401330701333654.