Abstract

Chest digital tomosynthesis (CDT) provides less of the image information required for diagnosis than computed tomography. Moreover, the radiation dose received by patients is higher in CDT than in chest radiography. Thus, CDT has not been actively used in clinical practice. To increase the usefulness of CDT, the radiation dose should be reduced to the level used in chest radiography. Given the trade-off between image quality and radiation dose in medical imaging, a strategy for generating high-quality images from limited data is needed. We investigated a novel approach for acquiring low-dose CDT images based on learning-based algorithms, such as deep convolutional neural networks. We used both simulation and experimental imaging data and focused on restoring images reconstructed from sparse sampling data to the quality of images reconstructed from full sampling data. We developed a deep learning model based on end-to-end image translation using U-net. The input and output images used to develop the model were CDT images reconstructed from 11 and 81 projections, respectively. To estimate the radiation dose of the proposed method, we investigated effective doses using Monte Carlo simulations. The proposed deep learning model effectively restored images with degraded quality due to lack of sampling data. Quantitative evaluation using the structure similarity index measure (SSIM) confirmed that the SSIM increased by approximately 20% when using the proposed method. The effective dose required when using sparse sampling data was approximately 0.11 mSv, similar to that used in chest radiography (0.1 mSv) based on a report by the Radiological Society of North America. We investigated a new approach for reconstructing tomosynthesis images using sparse projection data. The model-based iterative reconstruction method has previously been used for conventional sparse sampling reconstruction. However, model-based computing requires high computational power, which limits fast three-dimensional image reconstruction and thus clinical applicability. We expect that the proposed learning-based reconstruction strategy will generate images with excellent quality quickly and thus have the potential for clinical use.

Keywords: Chest digital tomosynthesis, Deep learning, Sparse sampling, Low dose

Introduction

Digital tomosynthesis is a three-dimensional (3D) imaging technique based on reconstruction of several planar radiographs, and has mainly been used for breast- and chest-related diagnoses and, to some extent, for orthopedic, angiographic, and dental investigations [1, 2]. Digital tomosynthesis of the chest has garnered special attention because it can be used to provide 3D diagnostic images with much lower radiation doses than those required for computed tomography [1–4]. However, since the use of ionizing radiation on the human body is governed by the principle of keeping doses as low as reasonably achievable, research is needed to further lower the radiation dose used in chest digital tomosynthesis (CDT), which requires multiple projections for 3D reconstruction.

To reduce the radiation dose delivered to patients during 3D medical imaging, including tomosynthesis, strategies such as sparse sampling or reduction of tube current have been used when acquiring projections. Model-based iterative reconstruction (MBIR) has been used to achieve superior 3D imaging quality in such limited conditions [5, 6]. MBIR is the most computationally demanding strategy, as it models the entire process, including the shape of the focal spot on the anode, the shape of the emerging X-ray beam, and its 3D interaction with the voxel, as well as the two-dimensional (2D) interaction of the beam with the detector [7]. Therefore, the image reconstruction process is too slow for clinical use.

We propose an alternative image reconstruction strategy based on a learning-based algorithm, called convolutional neural networks (CNNs). CNNs are multi-layered fully trainable models that can capture and represent complex high-dimensional input-output relationships [8]. CNNs have become the method of choice in many fields of computer vision and have been applied to medical imaging. One advantage is the ability of CNNs to learn complex relationships between images. For example, end-to-end image translation models can learn which image features to compute and how to map the features to the desired outputs [8, 9]. Thus, we applied CNNs to learn the direct image translation between sparse sampling low-dose CDT images and full sampling high-quality CDT images.

Research on improving medical image quality using CNNs has been actively conducted in the recent past. For example, in previous studies, CNNs were used for 3D reconstruction of low-dose CT, as well as applications such as metal artifact reduction, scatter correction, and super resolution [10–13]. CNNs have also been used for low-dose reconstruction of breast tomosynthesis, indicating that high-quality imaging with low radiation doses is possible with the use of deep learning technologies [13]. Most medical image quality research is based on fully convolutional networks such as U-net, which are trained to reduce the mean square error between low-quality and high-quality images. Here, we studied the implementation of sparse sampling CDT imaging using deep learning technologies and investigated the usefulness of the developed network using simulated imaging data obtained from different patients, as well as experimentally obtained phantom data.

Methods

Data Acquisition

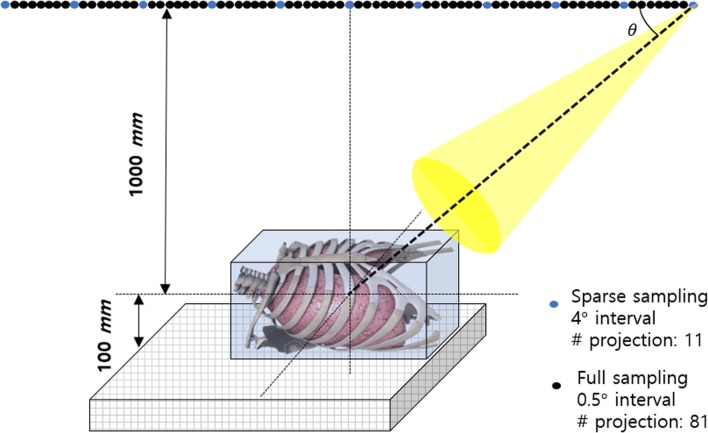

We performed a study using CDT reconstructed images obtained using computer simulation and an actual experiment. First, we obtained chest tomosynthesis projection data by projecting CT volume data using a computer simulation. The SPIE-American Association of Physicists in Medicine CT challenge dataset was used for the computer simulation [14]. Thirty and five patient datasets were used as training and testing data, respectively. Images classified as testing data were not used in any part of the training process. Figure 1 is a schematic of the computer simulation used to generate chest tomosynthesis projections. We implemented the geometry of a prototype CDT system (LISTEM, Wonju, Korea) using computer simulation. The X-ray tube and the flat panel detector were moved linearly in opposite directions. The coordinates of the X-ray tube and the detector were determined by the X-ray tube angle, source to detector distance (SDD), and object to detector distance (ODD).

Fig. 1.

Schematic of chest tomosynthesis geometry. The X-ray tube and detector were moved linearly in opposite directions. The numbers of projections obtained in the sparse and full sampling modes were 11 and 81, respectively

The angles used in the simulation ranged from −20° to +20°. Matlab was used for the simulation. Line integrals through the clinical CT volume data, computed in the three-dimensional coordinate system, were used as projection data.

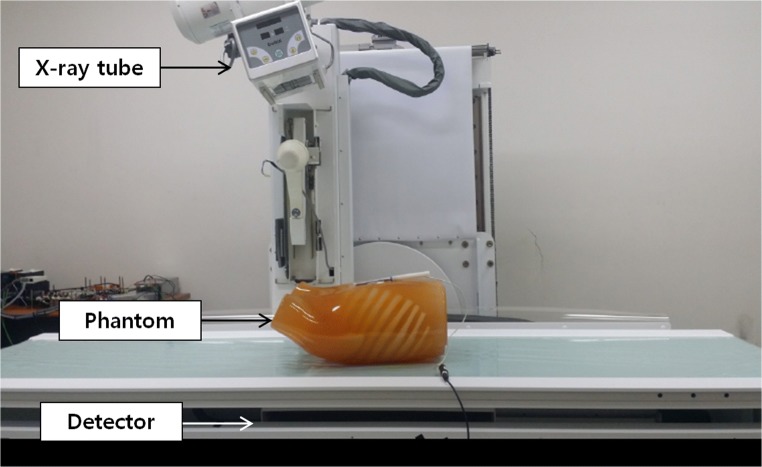

Second, to generate CDT projections, we used a prototype CDT system (LISTEM, Wonju, Korea) [15, 16] consisting of an X-ray tube (E7869X, Toshiba, Japan) with a rhenium-tungsten-faced molybdenum target, a thallium-activated cesium iodide scintillator flat panel digital detector (Pixium RF 4343, Thales, France), a main controller, and a reconstruction server. The SDD and ODD were 1100 and 100 mm, respectively. The prototype CDT system X-ray tube and detector were moved linearly. Normally, projections within a 40-degree range separated by 0.5 degrees are obtained using CDT. Using the sparse sampling mode of the prototype CDT system, we acquired images at 4-degree intervals in a 40-degree range. Table 1 details the specifications of the developed prototype CDT system, and Fig. 2 shows the developed prototype CDT system. In this study, an anthropomorphic human chest phantom (LUNGMAN, Kagaku, Japan) was used. To reconstruct 3D volume data with limited angles, we used filtered back projection algorithms. Experimentally obtained CDT reconstructed images were used as test data.
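
The two sampling modes described above can be illustrated with a minimal sketch that enumerates the tube angles for each mode. The function name `projection_angles` and its parameters are hypothetical and are not taken from the prototype system's software; the sketch only assumes a 40-degree range centered on 0 degrees with a fixed angular step.

```python
def projection_angles(span_deg=40.0, step_deg=4.0):
    """Return tube angles (degrees) covering [-span/2, +span/2] at a fixed step.

    Hypothetical helper: the names and defaults are illustrative assumptions.
    """
    n_steps = round(span_deg / step_deg)
    return [-span_deg / 2 + i * step_deg for i in range(n_steps + 1)]


sparse_angles = projection_angles(step_deg=4.0)  # sparse sampling mode
full_angles = projection_angles(step_deg=0.5)    # full sampling mode

# Number of projections in each mode and the fraction kept by sparse sampling.
print(len(sparse_angles), len(full_angles))
print(f"{len(sparse_angles) / len(full_angles):.3f}")
```

Under these assumptions, every sparse-mode angle is also a full-mode angle, so a sparse acquisition could in principle be emulated by subsampling a full acquisition.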

Table 1.

Parameters of the developed prototype chest digital tomosynthesis (CDT) system. The sparse sampling mode of the developed prototype CDT system uses approximately one eighth of the projection data of the full sampling mode (11 of 81 projections)

| Parameter | Specification |
|---|---|
| Radiography tube | E7869X (Toshiba, Japan) |
| Detector | CsI(Tl) scintillator FPD (Pixium RF 4343, Thales, France) |
| Source to detector distance | 1100 mm |
| Object to detector distance | 100 mm |
| Motion | Linear translation motion |
| Sparse sampling mode | Sampling 40-degree angles in 4-degree intervals (11 projections) |
| Full sampling mode | Sampling 40-degree angles in 0.5-degree intervals (81 projections) |
| Reconstruction matrix | 1024 × 1024 × 80 |
| Volume resolution | 0.5 × 0.5 × 1 mm³ |
| Volume size | 512 × 512 × 80 mm³ |
| Algorithms | Filtered back projection |

Fig. 2.

Prototype chest digital tomosynthesis system under development. The system was based on radiographic/fluoroscopic systems, and a radiography tube and detector were operated using a linear translation mode

Image Restoration with an Image-to-Image Translation Deep Learning Model

In this study, we designed 2D CNN models to directly learn the relationship between sparsely sampled low-dose CDT images and fully sampled high-dose CDT images. The model can be trained by collecting low-dose CDT images reconstructed with sparse sampling projection data and the corresponding high-dose CDT images reconstructed with full sampling projection data. Many different CNN models have been proposed in the computer vision literature, and their architectures are very flexible [8]. In this study, we built upon recent developments in semantic image segmentation, where a deep learning model can be trained end-to-end to directly produce a dense label map for object segmentation in a 2D image [9, 17]. In particular, we used a U-net architecture [17]. Figure 3 provides a schematic of the developed deep learning architecture based on a U-net.

Fig. 3.

Architecture of the proposed chest digital tomosynthesis (CDT) image restoration model. We used 3 × 3 convolution and deconvolution kernels with 2 × 2 strides. The encoding and decoding layers were directly connected by shortcut connections

The proposed U-net consisted of two main parts: an encoding part and a decoding part. The encoding part behaved as a traditional CNN that learns to extract complex features from input data. The decoding part reconstructed the output image from the features extracted from the input data. A key innovation of U-net, compared with a convolutional auto-encoder, which is the simplest end-to-end CNN architecture, is the application of direct connections across the encoding and decoding parts; thus, high-resolution features from input data are preserved for the predicted images [9]. The encoding part of the proposed model consisted of a series of convolutional processes with 3 × 3 filter sizes and 2 × 2 strides. The decoding part was symmetric to the encoding part and consisted of a series of transposed convolutional processes, usually referred to as deconvolution processes [17]. Each convolutional layer was activated using a ReLU activation function. Network training was performed to reduce the mean square error between the network output images and the corresponding full sampling images. We used the adaptive moment estimation (Adam) optimizer. The learning rate and total number of training epochs were 0.002 and 3000, respectively. To train the deep learning architecture, we used the TensorFlow library with graphics processing unit acceleration [18].
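
To make the symmetry between the encoding and decoding parts concrete, the following sketch traces feature-map sizes through a stride-2 encoder and its mirrored decoder. It is a shape bookkeeping exercise only, not the trained network: the depth of four levels, the use of "same" padding, and the function name `unet_shapes` are assumptions made for illustration and are not stated in the text.

```python
import math


def unet_shapes(size=1024, depth=4, stride=2):
    """Trace feature-map side lengths through a symmetric encoder-decoder.

    Assumptions (not from the paper): 'same' padding and a depth of 4.
    """
    encoder = [size]
    for _ in range(depth):
        # 3x3 convolution with stride 2 and 'same' padding: out = ceil(in / stride)
        encoder.append(math.ceil(encoder[-1] / stride))

    decoder = [encoder[-1]]
    skips = []
    for level in range(depth - 1, -1, -1):
        # Transposed convolution with stride 2 and 'same' padding: out = in * stride
        upsampled = decoder[-1] * stride
        if level > 0:
            # Shortcut connection: pair with the encoder map of the same level.
            skips.append((upsampled, encoder[level]))
        decoder.append(upsampled)
    return encoder, decoder, skips


enc, dec, skips = unet_shapes()
print("encoder:", enc)
print("decoder:", dec)
print("skip pairs (decoder, encoder):", skips)
```

Each shortcut connection can only concatenate feature maps of equal spatial size, which is why the decoder must mirror the encoder level by level.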

Quantitative Image Evaluation and Effective Dose Measurement

We performed quantitative measurements using the structure similarity index measure (SSIM) to evaluate how closely the images restored from sparse sampling CDT reconstructions matched the CDT images reconstructed with full sampling data. The SSIM represents the similarity between two images. Positive SSIM values range from 0 to 1. A value of 0 denotes no correlation between images, and a value of 1 denotes two identical images [19, 20]. For the original and processed signals (x and y), the SSIM is given as:

$$\mathrm{SSIM}(x, y) = l(x, y) \cdot c(x, y) \cdot s(x, y)$$

where l, c, and s are the luminance, contrast, and structural components, respectively, calculated as follows:

$$l(x, y) = \frac{2\mu_x \mu_y + C_1}{\mu_x^2 + \mu_y^2 + C_1}, \qquad c(x, y) = \frac{2\sigma_x \sigma_y + C_2}{\sigma_x^2 + \sigma_y^2 + C_2}, \qquad s(x, y) = \frac{\sigma_{xy} + C_3}{\sigma_x \sigma_y + C_3}$$

where μx and μy represent the means of the original and processed images, and σx and σy are the corresponding standard deviations. σxy is the covariance of the two images. Positive constants C1, C2, and C3 are used to avoid a null denominator.
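
As a rough illustration of how the luminance, contrast, and structural components combine, the sketch below computes a single global SSIM value for two small images stored as flat lists. It assumes the commonly used product form of the three components and the common default constants C1 = (0.01L)², C2 = (0.03L)², and C3 = C2/2 for dynamic range L; these choices, the function name `ssim_global`, and the use of global rather than windowed statistics are assumptions and may differ from the evaluation actually performed.

```python
import math
from statistics import fmean


def ssim_global(x, y, dynamic_range=1.0):
    """Global SSIM of two equally sized images given as flat lists of pixels."""
    # Assumed stabilizing constants (common defaults, not taken from the paper).
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    c3 = c2 / 2

    mu_x, mu_y = fmean(x), fmean(y)
    var_x = fmean([(a - mu_x) ** 2 for a in x])
    var_y = fmean([(b - mu_y) ** 2 for b in y])
    cov_xy = fmean([(a - mu_x) * (b - mu_y) for a, b in zip(x, y)])
    sd_x, sd_y = math.sqrt(var_x), math.sqrt(var_y)

    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast = (2 * sd_x * sd_y + c2) / (var_x + var_y + c2)
    structure = (cov_xy + c3) / (sd_x * sd_y + c3)
    return luminance * contrast * structure


reference = [0.1, 0.5, 0.9, 0.3, 0.7, 0.2]
degraded = [0.2, 0.4, 0.8, 0.5, 0.6, 0.1]
print(ssim_global(reference, reference))  # identical images give a value of about 1
print(ssim_global(reference, degraded))   # a degraded image gives a lower value
```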

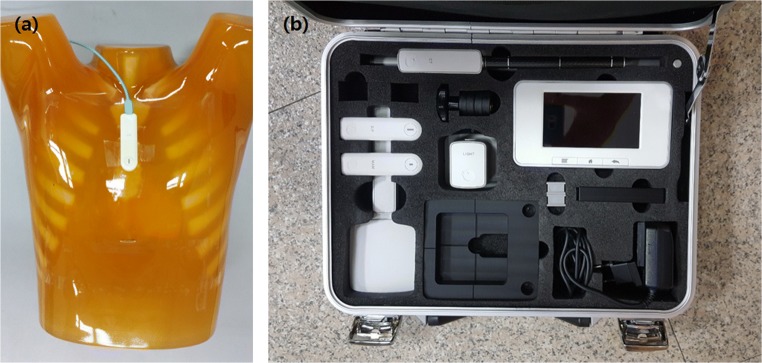

To estimate the effective dose, we converted the experimentally measured entrance exposure to the effective dose using PCXMC simulation tools [21]. Figure 4 shows the ion chamber (Raysafe X2, Unfors Raysafe, Sweden) and its location for entrance exposure measurement. We measured the entrance exposure by attaching an ion chamber sensor to the phantom surface. We estimated the effective CDT dose using PCXMC 2.0 software, which simulates effective doses with both ICRP 60 and 103 references. ORNL standard phantom information was used in the simulation, with a height of 178.6 cm and a mass of 73.2 kg. For accurate estimation, we considered the source-to-object distance, which varies with projection angle [22–25].

Fig. 4.

Location of the ion chamber sensor for entrance exposure measurement (a). The ion chamber (Raysafe X2, Unfors Raysafe, Sweden) used (b)

Results

Figure 5 provides the results of the simulation studies using sparse sampling data, the proposed method for restoring degraded images due to lack of sampling data, and full sampling data. The quality of the images reconstructed using sparse sampling data was significantly degraded. Anatomical structures in the lung field were obscured by strong streak artifacts and noise. However, the proposed image restoration strategy, based on deep learning, obtained high-quality reconstructed images with sparse sampling projection data.

Fig. 5.

Results of simulation studies using sparse sampling data, the proposed method, and full sampling data

Figure 6 shows images obtained in the experimental studies. As in the simulation studies, the proposed method effectively reduced the degradation of diagnostic images caused by sparse sampling in the experimental studies. In particular, the streak artifacts appearing over the image were well resolved without distorting the complex anatomical structures in the thoracic cage.

Fig. 6.

Results of experimental studies using sparse sampling data, the proposed method, and full sampling data

To quantitatively assess the performance of the image restoration deep learning model, we measured the SSIM. As shown in Table 2, the SSIM between reconstructed images acquired via the proposed method and full sampling data was approximately 20% higher than that between reconstructed images acquired via sparse and full sampling data in both simulation and experimental studies. The values shown in Table 2 refer to the average SSIMs calculated using large amounts of testing data.

Table 2.

The structure similarity index measure in both simulation and experimental studies

| | Without proposed method | With proposed method |
|---|---|---|
| Simulation | 0.723 | 0.871 |
| Experiment | 0.700 | 0.864 |

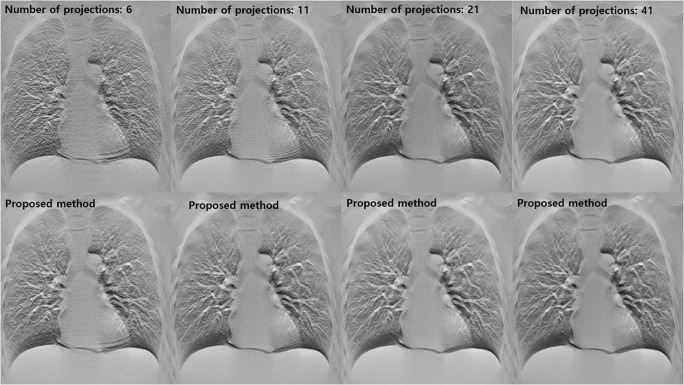

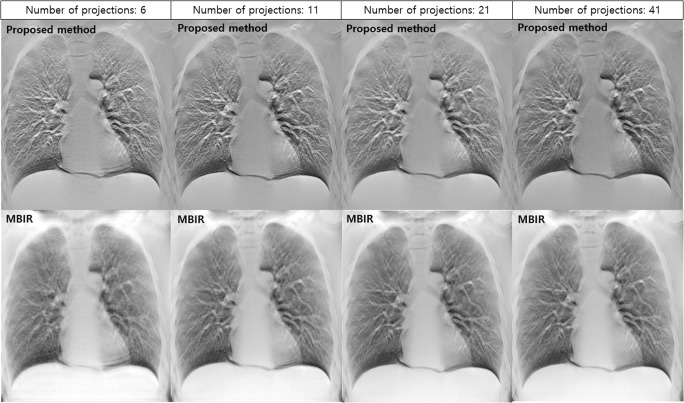

Figure 7 shows the CDT reconstructed images acquired using various sparse sampling conditions. The reconstructed images deteriorated further with increasing sparsity. However, the proposed method effectively improved the low-quality diagnostic images obtained using various degrees of sparsity.

Fig. 7.

Results of experimental studies using various sparse sampling data. The images in the first row are conventional CDT reconstructed images obtained using various sparse sampling conditions and those in the second row are images restored using the proposed method

Table 3 summarizes the quantitative assessment of results obtained using various sparse sampling conditions. As the sparsity of the projection sampling increased, the SSIM decreased markedly. The SSIM evaluation confirmed that the proposed method improved the quality of the diagnostic images under every sampling condition tested. However, when the sampling condition was excessively sparse, the restored diagnostic image was not sufficiently close to the ground truth image. This indicates that there is a limit to the image quality that the proposed method can restore.

Table 3.

Measurement of the structure similarity index measure when using various sparse sampling conditions

| Number of projections | 6 | 11 | 21 | 41 |
|---|---|---|---|---|
| Sparse sampling | 0.599 | 0.700 | 0.832 | 0.915 |
| Proposed method | 0.792 | 0.864 | 0.902 | 0.961 |
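
The trend in Table 3 is easier to read as a relative gain per sampling condition. The short sketch below recomputes that gain from the tabulated SSIM values; the variable names are illustrative, and the relative gain is simply (restored − sparse) / sparse.

```python
projections = [6, 11, 21, 41]
ssim_sparse = [0.599, 0.700, 0.832, 0.915]    # conventional reconstruction
ssim_restored = [0.792, 0.864, 0.902, 0.961]  # after the proposed method

# Relative SSIM gain (percent) for each sampling condition.
gains = [
    100 * (after - before) / before
    for before, after in zip(ssim_sparse, ssim_restored)
]

for n, before, after, gain in zip(projections, ssim_sparse, ssim_restored, gains):
    print(f"{n:>2} projections: {before:.3f} -> {after:.3f} ({gain:.1f}% relative gain)")
```

The relative gain is roughly 32%, 23%, 8%, and 5% for 6, 11, 21, and 41 projections, respectively, so the benefit shrinks as the input already approaches the full sampling image, while the absolute SSIM reached with 6 projections remains the lowest.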

Figure 8 shows a comparison between the proposed method and MBIR. Since images reconstructed using MBIR and filtered back projection (FBP) have different characteristics, absolute comparisons between the two image types are not easy. The MBIR used in this study was an iterative reconstruction method based on maximum likelihood expectation maximization (MLEM). According to previous studies, MLEM is an iterative reconstruction technique that is most commonly studied in digital tomosynthesis [26]. Although MBIR is considered an advanced reconstruction technique, images reconstructed using MBIR were further degraded when the sampling condition was excessively sparse. In particular, microscopic structures were not apparent in MBIR images reconstructed using six projections.
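
For readers unfamiliar with MLEM, the multiplicative update it applies at each iteration can be sketched on a toy problem. The 3 × 2 system matrix, the noiseless measurements, and the function name `mlem` below are purely illustrative assumptions; they do not represent the tomosynthesis system model or the implementation used for Fig. 8.

```python
def mlem(system_matrix, measurements, n_iter=50):
    """Maximum likelihood expectation maximization for y ~ A x with x >= 0."""
    n_rows, n_cols = len(system_matrix), len(system_matrix[0])
    estimate = [1.0] * n_cols  # strictly positive starting image
    # Sensitivity: column sums of A (back projection of a vector of ones).
    sensitivity = [
        sum(system_matrix[i][j] for i in range(n_rows)) for j in range(n_cols)
    ]
    for _ in range(n_iter):
        # Forward projection of the current estimate.
        forward = [
            sum(system_matrix[i][j] * estimate[j] for j in range(n_cols))
            for i in range(n_rows)
        ]
        # Ratio of measured to estimated projections.
        ratio = [
            measurements[i] / forward[i] if forward[i] > 0 else 0.0
            for i in range(n_rows)
        ]
        # Back project the ratio and apply the multiplicative update.
        estimate = [
            estimate[j] / sensitivity[j]
            * sum(system_matrix[i][j] * ratio[i] for i in range(n_rows))
            for j in range(n_cols)
        ]
    return estimate


# Toy example: two unknown voxels observed through three ray sums.
A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [2.0, 3.0, 5.0]  # noiseless data generated from the voxel values [2, 3]
print(mlem(A, y))
```

Each iteration requires one forward projection and one back projection over the whole volume, which is the main reason iterative methods are slower than a single filtered back projection.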

Fig. 8.

Comparisons between reconstructed images obtained using the proposed method and those obtained using MBIR under various sampling conditions

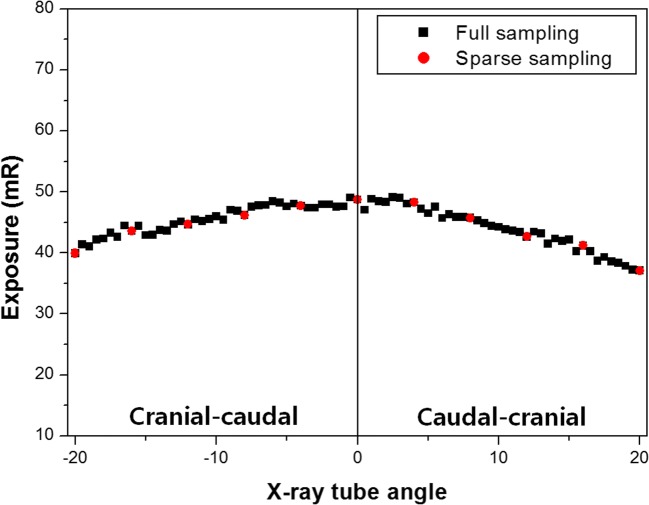

We used our developed CDT system to acquire projections from various angles in step-and-shoot mode. Since the developed CDT system moves linearly, the source-to-object distance differed at different angles. In this study, quantitative dose evaluation was therefore performed for the different geometric conditions of the CDT system according to the X-ray tube angle. In addition, exposure and effective doses were compared between the full and sparse sampling modes. Figure 9 presents the entrance exposure results measured using an ion chamber. Each value is the average of three measurements, to reduce the influence of measurement error. As the X-ray tube angle moved further from 0 degrees, the source-to-object distance became larger. Therefore, the measured entrance exposure gradually decreased according to the inverse square law. Compared with the full sampling mode, the sparse sampling mode uses approximately one eighth as many projections, and the total entrance exposure was dramatically reduced.
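
The inverse square argument above can be made concrete with a simplified geometric sketch. It assumes that the tube travels along a line parallel to the detector, that the tube angle is measured at the object, and that the central source-to-object distance is SDD − ODD = 1000 mm; the function names are hypothetical, and attenuation, beam collimation, and the exact position of the ion chamber on the phantom surface are ignored.

```python
import math

SDD_MM = 1100.0  # source to detector distance (Table 1)
ODD_MM = 100.0   # object to detector distance (Table 1)
SOD_CENTRAL_MM = SDD_MM - ODD_MM  # assumed source-to-object distance at 0 degrees


def source_to_object_distance(angle_deg):
    """Source-to-object distance (mm) for a linearly translating tube."""
    return SOD_CENTRAL_MM / math.cos(math.radians(angle_deg))


def relative_exposure(angle_deg):
    """Exposure relative to the 0-degree projection under the inverse square law."""
    return (SOD_CENTRAL_MM / source_to_object_distance(angle_deg)) ** 2


for angle in (0, 4, 8, 12, 16, 20):
    print(
        f"{angle:>2} deg: distance = {source_to_object_distance(angle):7.1f} mm, "
        f"relative exposure = {relative_exposure(angle):.3f}"
    )
```

Under these assumptions, the relative exposure equals cos² of the tube angle, so the outermost projections at ±20 degrees would receive about 88% of the central exposure.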

Fig. 9.

Measured entrance exposures according to radiation (X-ray) tube angle. Black squares denote the entrance exposures obtained using the full sampling mode. The red circles denote the entrance exposures obtained using the sparse sampling mode. The black line indicates the 0-degree X-ray tube angle. Positive X-ray tube angles to the right of the black line corresponded to cranial-caudal projections and the negative X-ray tube angles to the left of the black line corresponded to caudal-cranial projections

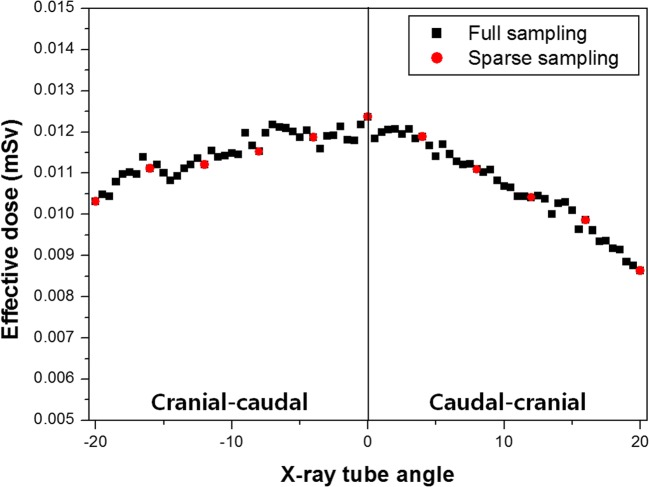

Figure 10 presents the effective doses obtained by simulation with the PCXMC 2.0 dose simulation tool. As the effective dose is a factor associated with biological susceptibility, the effective dose tended to be higher in the cranial-caudal projection, where the main organs irradiated were in the abdominal and thoracic cavities. As with the entrance exposure, the effective dose was lower in the sparse sampling mode because fewer projections were acquired.

Fig. 10.

Effective doses measured according to the radiation (X-ray) tube angle. Black squares denote effective doses obtained in the full sampling mode. Red circles denote effective doses obtained in the sparse sampling mode. The black line indicates the 0-degree X-ray tube angle. Positive X-ray tube angles to the right of the black line correspond to cranial-caudal projections and the negative X-ray tube angles to the left of the black line correspond to caudal-cranial projections

Table 4 shows the total entrance exposures and effective doses, obtained by summing the entrance exposures and effective doses at each angle. Both the total entrance exposure and effective dose were approximately eight times higher in the full sampling mode than in the sparse sampling mode.

Table 4.

Total entrance exposures and effective doses measured in the full and sparse sampling modes

| | Full sampling mode | Sparse sampling mode |
|---|---|---|
| Total entrance exposure (mR) | 3621.28 | 465.72 |
| Total effective dose (mSv) | 0.89 | 0.11 |
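
The "approximately eight times" statement can be checked directly from the values in Table 4, alongside the ratio of projection counts in the two modes (81 and 11). This is simple arithmetic on the tabulated values and makes no further assumptions:

```latex
\frac{3621.28\ \text{mR}}{465.72\ \text{mR}} \approx 7.78, \qquad
\frac{0.89\ \text{mSv}}{0.11\ \text{mSv}} \approx 8.09, \qquad
\frac{81\ \text{projections}}{11\ \text{projections}} \approx 7.36
```

Both dose ratios are close to, and slightly above, the ratio of projection counts.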

Discussion

The deep learning-based sparse sampling reconstruction developed in this study can be used to perform excellent image restoration. The U-net model, which was developed for image segmentation and used in this study, was successfully applied to image translation and restored image quality deteriorated by sparse sampling in CDT. As shown in Figs. 5 and 6, our developed deep learning model effectively predicted high-resolution, high-quality images for diagnosis. Qualitatively, the restored images were similar to full sampling images. Existing image-based de-noising methods are effective for removing image noise, but not for removing artifacts caused by the lack of sampling. For example, the repetitive pattern across the diagnostic images shown in Fig. 6 could not be removed by current de-noising techniques. However, it was possible to reduce not only noise, but also artifacts, using the proposed deep learning-based image restoration technology. Although image restoration technology based on deep learning has great advantages and potential for clinical application, there are a few factors to consider.

First, to develop an image restoration model using deep learning, paired diagnostic imaging data consisting of low- and high-quality images are necessary [9–11]. The paired data should be obtained in the same patient using the same diagnostic device. However, such medical imaging databases are not easy to construct in clinical practice. Moreover, there are not very many clinical databases containing data obtained using CDT, which was recently introduced as a medical imaging technique. Therefore, like most previous image restoration studies based on deep learning, we attempted to train the proposed network using simulation data. Although training the network using simulation data can be used to show feasibility for clinical application, there is uncertainty regarding whether such training is applicable to actual diagnostic images. We therefore attempted to verify the developed network in experimental studies. As seen in Fig. 6, the proposed method effectively eliminated image noise and artifacts in the experimentally obtained images, even though it was trained using simulation data. This result suggested that, even in the absence of sufficient data for training, good results may be expected when using simulation data.

Second, various clinical validations are required for the clinical application of deep learning-based image processing techniques. In this study, we only evaluated the resulting images using a quantitative image quality metric. However, it is also necessary to evaluate, through clinical insight, whether images generated using deep learning can be used clinically and whether the improvement in image quality is clinically meaningful.

Finally, it is necessary to confirm whether the developed network shows consistent performance when using various CDT images with different characteristics. Many factors affect the quality of diagnostic images, including acquisition conditions, the imaging device used, and patient movement [27–29]. Especially in tomosynthesis, the sampling condition is a factor that has great influence on image quality [30]. Therefore, we attempted to verify the reproducibility and reliability of the proposed network by diversifying the sparse sampling conditions. As shown in Fig. 7, even when there were differences in image quality due to changes in the sparse sampling conditions, the proposed method was effective for image restoration.

MBIR is the latest reconstruction technology that can be used to realize excellent image quality [31, 32]. Therefore, in this study, we compared image quality between MBIR and the proposed method. As shown in Fig. 8, we were able to reduce the occurrence of streak artifacts significantly using MBIR, although the images obtained using MBIR were not as sharp as those obtained using FBP. In order to distinguish the lung nodule from the vessel in the lung field, high spatial resolution was absolutely necessary when using CDT. Therefore, it is expected that the proposed method would be capable of realizing excellent-quality FBP images under sparse sampling conditions and contribute to the diagnoses of chest conditions.

As shown in the dose evaluation results for the sparse sampling mode, the radiation dose used for CDT reconstruction was greatly reduced as the number of acquired projections decreased. Quantitative evaluation results (Table 4) indicated that the effective patient dose in the sparse sampling mode was approximately 0.11 mSv, similar to that used for chest radiography (0.1 mSv), as specified in the RSNA report [33]. In general, chest examination data acquired using chest radiography contain various positional views, including posterior-anterior, lateral, and decubitus. Thus, there is a possibility of further lowering the effective dose when diagnosing chest conditions using the proposed low-dose CDT system.

Conclusions

In conclusion, we developed a new deep learning-based method for generating high-quality low-dose CDT reconstructed images. The proposed technique enables the generation of high-quality 3D reconstructed images using radiation doses equivalent to those used in conventional radiography. The proposed technique is expected to improve the clinical value and usefulness of CDT.

Acknowledgements

This work was supported by the Radiation Technology R&D program through the National Research Foundation of Korea funded by the Ministry of Science, ICT & Future Planning (No. NRF-2017M2A2A6A01070263).

References

- 1. Vikgren J, Zachrisson S, Svalkvist A, Johnsson AA, Boijsen M, Flinck A, Kheddache S, Båth M. Comparison of chest tomosynthesis and chest radiography for detection of pulmonary nodules: human observer study of clinical cases. Radiology. 2008;249:1031–1041. doi: 10.1148/radiol.2492080304.

- 2. Dobbins JT, III, McAdams HP. Chest tomosynthesis: technical principles and clinical update. Eur J Radiol. 2009;72:244–251. doi: 10.1016/j.ejrad.2009.05.054.

- 3. Tingberg A. X-ray tomosynthesis: a review of its use for breast and chest imaging. Radiat Prot Dosim. 2010;139:100–107. doi: 10.1093/rpd/ncq099.

- 4. Dobbins JT, III, McAdams HP, Godfrey JD, Li CM. Digital tomosynthesis of the chest. J Thorac Imaging. 2008;23:86–92. doi: 10.1097/RTI.0b013e318173e162.

- 5. Katsura M, Matsuda I, Akahane M, Yasaka K, Hanaoka S, Akai H, Sato J, Kunimatsu A, Ohtomo K. Model-based iterative reconstruction technique for radiation dose reduction in chest CT: comparison with the adaptive statistical iterative reconstruction technique. Eur Radiol. 2012;22:1613–1623. doi: 10.1007/s00330-012-2452-z.

- 6. Samei E, Richard S. Assessment of the dose reduction potential of a model-based iterative reconstruction algorithm using a task-based performance metrology. Med Phys. 2015;41:314–323. doi: 10.1118/1.4903899.

- 7. Nishida J, Kitagawa K, Nagata M, Yamazaki A, Nagasawa N, Sakuma H. Model-based iterative reconstruction for multi-detector row CT assessment of the Adamkiewicz artery. Radiology. 2014;270:282–291. doi: 10.1148/radiol.13122019.

- 8. Xu L, Ren JSJ, Liu C, Jia J. Deep convolutional neural network for image deconvolution. Adv Neural Inf Proces Syst. 2014;27:1790–1798.

- 9. Han X. MR-based synthetic CT generation using a deep convolutional neural network method. Med Phys. 2017;44:1408–1419. doi: 10.1002/mp.12155.

- 10. Kang E, Min J, Ye JC. A deep convolutional neural network using directional wavelets for low-dose X-ray CT reconstruction. Med Phys. 2017;44:e360–e375. doi: 10.1002/mp.12344.

- 11. Gjesteby L, Yang Q, Xi Y, Zhou Y, Zhang J, Wang G: Deep learning methods to guide CT image reconstruction and reduce metal artifacts, Proc Vol 10132 Med Imag. Phys of Med Imag 10132, 2017

- 12. Dong C, Loy CC, He K, Tang X. Image super-resolution using deep convolutional networks. IEEE Trans Pattern Anal Mach Intell. 2015;38:295–307. doi: 10.1109/TPAMI.2015.2439281.

- 13. Liu J, Zarshenas A, Qadir A, Wei Z, Yang L, Fajardo L, Suzuki K: Radiation dose reduction in digital breast tomosynthesis (DBT) by means of deep learning-based supervised image processing. Proc Vol 10574 Med Imag, Imag Process 10574, 2018

- 14. Medical imaging database. Available at https://wiki.cancerimagingarchive.net/display/Public/SPIE-AAPM+Lung+CT+Challenge. Accessed 24 August 2018

- 15. Choi S, Lee S, Lee H, Lee D, Choi S, Shin J, Seo C-W, Kim H-J. Development of a prototype chest digital tomosynthesis (CDT) R/F system with fast image reconstruction using graphic processing unit (GPU) programming. Nucl Inst Methods Phys Res A. 2017;848:174–181. doi: 10.1016/j.nima.2016.12.027.

- 16. Lee D, Choi S, Lee H, Kim D, Choi S, Kim H-J. Quantitative evaluation of anatomical noise in chest digital tomosynthesis, digital radiography, and computed tomography. J Instrum. 2017;12:T04006. doi: 10.1088/1748-0221/12/04/T04006.

- 17. Ronneberger O, Fischer P, Brox T: U-Net: Convolutional networks for biomedical image segmentation. Med Image Comput Comput Assist Interv 234–241, 2015

- 18. An open-source software library for machine learning. Available at https://www.tensorflow.org/. Accessed 1 September 2018

- 19. Horé A, Ziou D: Image quality metrics: PSNR vs. SSIM. Int Conf Pattern Recog 2366–2369, 2010

- 20. Eskicioglu AM, Fisher PS. Image quality measures and their performance. IEEE Trans Commun. 1995;43:2959–2965. doi: 10.1109/26.477498.

- 21. A Monte Carlo simulation tool for calculating patient dose. Available at http://www.stuk.fi/palvelut/pcxmc-a-monte-carlo-program-for-calculating-patient-doses-in-medical-x-ray-examinations. Accessed 1 September 2018

- 22. Khelassi-Toutaoui N, Berkani Y, Tsapaki V, Toutaoui AE, Merad A, Frahi-Amroun A, Brahimi Z. Experimental evaluation of PCXMC and prepare codes used in conventional radiology. Radiat Prot Dosim. 2008;131:374–378. doi: 10.1093/rpd/ncn183.

- 23. Ladia A, Messaris G, Delis H, Panayiotakis G. Organ dose and risk assessment in paediatric radiography using the PCXMC 2.0. J Phys Conf Ser. 2015;637:012014. doi: 10.1088/1742-6596/637/1/012014.

- 24. Sabol JM. A Monte Carlo estimation of effective dose in chest tomosynthesis. Med Phys. 2009;36:5480–5487. doi: 10.1118/1.3250907.

- 25. Lee C, Park S, Lee JK. Specific absorbed fraction for Korean adult voxel phantom from internal photon source. Radiat Prot Dosim. 2007;123:360–368. doi: 10.1093/rpd/ncl167.

- 26. Sechopoulos I. A review of breast tomosynthesis. Part II. Image reconstruction, processing and analysis, and advanced applications. Med Phys. 2013;40:014302. doi: 10.1118/1.4770281.

- 27. Fahrig R, Dixon R, Payne T, Morin RL, Ganguly A, Strobel N. Dose and image quality for a cone-beam C-arm CT system. Med Phys. 2006;33:4541–4550. doi: 10.1118/1.2370508.

- 28. Goldman LW. Principles of CT: radiation dose and image quality. J Nucl Med Technol. 2007;35:213–225. doi: 10.2967/jnmt.106.037846.

- 29. Barrett JF, Keat N. Artifacts in CT: recognition and avoidance. RadioGraphics. 2004;24:1679–1691. doi: 10.1148/rg.246045065.

- 30. Abbas S, Lee T, Shin S, Lee R, Cho S. Effects of sparse sampling schemes on image quality in low-dose CT. Med Phys. 2013;40:111915. doi: 10.1118/1.4825096.

- 31. Hara AK, Paden RG, Silva AC, Kujak JL, Lawder HJ, Pavlicek W. Iterative reconstruction technique for reducing body radiation dose at CT: feasibility study. AJR Am J Roentgenol. 2009;193:764–771. doi: 10.2214/AJR.09.2397.

- 32. Beister M, Kolditz D, Kalender WA. Iterative reconstruction methods in X-ray CT. Phys Med. 2012;28:94–108. doi: 10.1016/j.ejmp.2012.01.003.

- 33. Radiation Dose in X-ray and CT Exam. Available at https://www.radiologyinfo.org/en/info.cfm?pg=safety-xray. Accessed 1 September 2018