Abstract

Background:

We sought to quantify the efficiency and acceptability of Internet-based recruitment for engaging an especially hard-to-reach cohort (college students with type 1 diabetes, T1D) and to describe the approach used for implementing a health-related trial entirely online using off-the-shelf tools, including how participant safety and data validity concerns were addressed.

Method:

We recruited youth (ages 17-25 years) with T1D via a variety of social media platforms and other outreach channels. We quantified response rate and participant characteristics across channels with engagement metrics tracked via Google Analytics and participant survey data. We developed decision rules to identify invalid (duplicative/false) records (N = 89) and compared them to valid cases (N = 138).

Results:

Facebook was the highest yield recruitment source; demographics differed by platform. Invalid records were prevalent; invalid records were more likely to be recruited from Twitter or Instagram and differed from valid cases across most demographics. Valid cases closely resembled characteristics obtained from Google Analytics and from prior data on platform user-base. Retention was high, with complete follow-up for 88.4%. There were no safety concerns and participants reported high acceptability for future recruitment via social media.

Conclusions:

We demonstrate that recruitment of college students with T1D into a longitudinal intervention trial via social media is feasible, efficient, acceptable, and yields a sample representative of the user-base from which they were drawn. Given observed differences in characteristics across recruitment channels, recruiting across multiple platforms is recommended to optimize sample diversity. Trial implementation, engagement tracking, and retention are feasible with off-the-shelf tools using preexisting platforms.

Keywords: adolescent, alcohol, diabetes mellitus (type 1), health education, Internet, social media, young adult

The transition into emerging adulthood is a critical period when health outcomes may be imperiled for adolescents and young adults (AYA) with type 1 diabetes (T1D),1-4 making it especially important to develop strategies to engage and support them. Effective disease self-management and self-regulation of risk behaviors are vital for avoiding diabetes complications5,6 and minimizing costs.7 Movement away from established care relationships and supports, common for college-attending AYA, can undermine self-management and expose youth to potentially devastating health consequences.4,8,9 College students with T1D may be especially vulnerable to these risks; many reside in settings that are ill-equipped to identify and support their health needs.10 The older adolescent and young adult years are a high-risk period for many risk behaviors, including substance use.11 Youth who are under the influence of any substance may have higher risk for poor self-care and nontreatment. In particular, the profoundly “alcogenic” features of college environments12,13 may promote consumption of alcohol, including at heavy and binge levels,8,14 increasing risks for acute hypoglycemia15 and treatment nonadherence.16 Despite the potential health problems for youth with T1D in college,17 we know little about how best to engage them around diabetes self-management and avoidance of risk behaviors.

Consistent with their developmental status, successful interventions are likely to require a patient-centered focus, emphasis on shared decision making and autonomy, and peer and/or provider directives reinforcing the value of maintaining health and avoiding risks.18-21 The model may require flexibility and delivery on a web-enabled platform given the mobile, digitally oriented nature of this group. Still, recruiting AYA participants into studies targeting health behaviors remains challenging.22,23 Internet-based research, particularly social media-based research, has gained traction for reaching AYA;24-27 98% of AYA use the Internet28 and 88% use social media.29 Web-based technologies hold promise for expanding the reach of health research,30-35 providing an opportunity to cast a broader net than traditional recruitment and extending recruitment to previously hard-to-reach populations, including AYA.36 However, web-enabled studies may experience unique problems with recruitment, retention, safety concerns, and validity and representativeness of samples.37,38

An emerging evidence base has begun to describe the areas of opportunity and barriers to engaging hard-to-reach populations in Internet-enabled research.39-45 We sought to contribute to this literature by ascertaining the utility of various Internet-based methods for engaging college students with T1D in completing a comparative effectiveness trial testing competing versions of an educational intervention designed to influence diabetes self-management and alcohol consumption behaviors. In addition to measuring study interest and participation across recruitment strategies, we further describe the implementation of our study, including consideration and balance of issues related to conducting an entirely online health-related study within the auspices of academic research and human subject oversight; technical implementation of the content delivery and data collection platform; approach to monitoring safety and ensuring validity of study data and protecting against invalid (duplicate/false) records.

Methods

Participatory Research / Partnership Building

To promote recruitment, we established partnerships with two diabetes advocacy groups with large AYA followings—TuDiabetes (a program of Beyond Type 1) and College Diabetes Network (CDN). Study investigators contacted these groups, presented aligned goals, and proposed utilizing their networks for targeted recruitment. We worked closely with liaisons from both groups to select the platforms, iterate on recruitment materials, and distribute recruitment messages. CDN was provided a monetary donation to their nonprofit organization for their collaboration efforts.

Study Design and Procedures

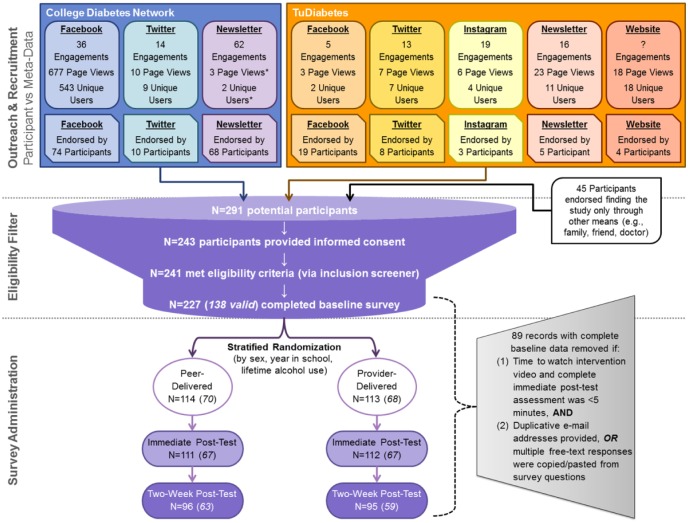

Recruitment messages were posted on the social media accounts (ie, Facebook, Twitter, Instagram) of the two advocacy groups and were also distributed via direct newsletters to the email distribution lists of both networks and via a website banner on one network’s website. The two collaborating networks provided the research team with engagement metrics for each recruitment message posted (Figure 1). The recruitment message posted on each platform promoted the study (brief descriptions, inclusion criteria) and provided a unique link directing participants to one of eight identical versions of the study website, with visit and user metrics separately tracked using Google Analytics (GA). The eight identical landing pages included general study information, research team contact information, and a GA disclaimer.

Figure 1.

Trial recruitment flow diagram.

Figure depicts engagement metrics, both metadata and participant-reported data, across sampling frames and by recruitment modalities/platforms. “Engagements” are the sum of active interactions with a post and were provided by either the College Diabetes Network (CDN) or TuDiabetes. For Facebook this includes shares and likes; for the newsletter this includes a click on the recruitment post within the newsletter email; for Twitter this includes retweets, likes, links clicked, @replies, or mentions; for Instagram this includes likes and comments on the recruitment post; engagements with the website banner were not quantified. Information on the total number of page views and unique users who interacted with each unique recruitment landing page was derived from Google Analytics; as the CDN newsletter email also contained the link that was intended for their Facebook post, these metrics may be conservative (indicated with an asterisk). Removal of invalid records (N = 89) from the analytic sample and associated decision rules are shown.

Each recruitment platform was intended to be uniquely paired to its own landing page for tracking (eg, CDN’s Facebook post directed users to a different landing page than CDN’s Twitter post). In two instances, a newsletter included a second link in addition to the correct one: the CDN email newsletter also included the link to the webpage designated for tracking CDN Facebook engagement, and the TuDiabetes newsletter also included the link designated for tracking TuDiabetes Instagram engagement. Some landing page visits may therefore be miscounted. We tracked traffic to each page during the recruitment period (Appendix 1) and determined the device on which the page was accessed (mobile, tablet, desktop), the referral source, and basic demographic characteristics (Appendix 2).

Landing pages directed participants to click an outbound link that transferred them to a Research Electronic Data Capture (REDCap) survey,46 hosted on secure servers at Boston Children’s Hospital. The number of clicks on the outbound link was tracked and quantified with GA; however, survey entry directly through the web address (ie, when the link was copied and distributed) would not be captured via GA tracking. After entering the survey, participants viewed an online consent form containing the language included in a typical written consent form. Of the 291 survey entries, 243 participants consented by selecting “Yes, I consent to participate in this study.” at the end of the online form (versus a converse decline statement). No respondents declined consent (which would have exited them from the survey after again displaying the study safety information), but 48 participants exited the survey prior to affirming or declining consent.

Following consent, there were three screening questions to ascertain if respondents were between the ages of 17 and 25 years, had received a diagnosis of T1D, and were currently attending or enrolled in a college/university; respondents who did not meet inclusion criteria (N = 2) were exited from the survey and directed to the study safety resources and contact information. The 241 participants who met these criteria were directed to complete the remainder of the baseline survey; 14 participants did not complete the baseline survey and were subsequently not randomized to either version of the intervention video.
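The counts reported above can be arranged as a simple enrollment funnel. The following is a minimal sketch for checking the arithmetic; the stage labels and the `funnel_report` helper are illustrative names, not part of the study software.

```python
# Enrollment funnel counts as reported in the text; stage labels are illustrative.
FUNNEL = [
    ("Entered survey", 291),
    ("Consented", 243),
    ("Met inclusion criteria", 241),
    ("Completed baseline (randomized)", 227),
]


def funnel_report(stages):
    """Return (label, n, lost_from_previous_stage, pct_of_first_stage) per stage."""
    first = stages[0][1]
    rows = []
    prev = first
    for label, n in stages:
        rows.append((label, n, prev - n, round(100 * n / first, 1)))
        prev = n
    return rows


for label, n, lost, pct in funnel_report(FUNNEL):
    print(f"{label:<34}{n:>4}  lost: {lost:>2}  ({pct}% of entries)")
```

The per-stage losses (48, 2, and 14) match the numbers of respondents who exited before consent, did not meet inclusion criteria, and did not complete the baseline survey, respectively.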

Intervention

Upon baseline survey completion, participants were automatically randomized to receive one of two brief educational videos using a randomization module programmed in REDCap that executed a stratified randomization scheme based on sex, year in college, and lifetime alcohol use. REDCap automatically showed participants the appropriate intervention video, either narrated by an endocrinologist (provider) or a college student with T1D (peer), which was housed on a private YouTube channel and embedded in REDCap. Aside from the spokesperson delivering the message, all other video content was identical.
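For readers unfamiliar with stratified randomization, the general idea can be sketched as follows. This is not the REDCap module itself: the permuted-block approach, the block size of 4, the seed, and the class and argument names are all assumptions made for illustration, since the allocation details are not reported here.

```python
import random
from collections import defaultdict

ARMS = ("peer", "provider")


class StratifiedRandomizer:
    """Permuted-block randomization kept separately for each stratum."""

    def __init__(self, block_size=4, seed=None):
        # block_size is an assumption; the study's allocation table is not described.
        if block_size % len(ARMS) != 0:
            raise ValueError("block_size must be a multiple of the number of arms")
        self.block_size = block_size
        self.rng = random.Random(seed)
        self.pending = defaultdict(list)  # stratum -> assignments left in current block

    def assign(self, sex, year_in_college, lifetime_alcohol_use):
        """Return the arm for the next participant in the given stratum."""
        stratum = (sex, year_in_college, lifetime_alcohol_use)
        if not self.pending[stratum]:
            # Start a new block with equal numbers of each arm, in random order.
            block = list(ARMS) * (self.block_size // len(ARMS))
            self.rng.shuffle(block)
            self.pending[stratum] = block
        return self.pending[stratum].pop()


randomizer = StratifiedRandomizer(block_size=4, seed=2019)
print(randomizer.assign("female", "junior", True))
```

Within each stratum, every completed block contains the same number of peer and provider assignments, which keeps the arms balanced on the stratification variables even in a small sample.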

Following receipt of the intervention, participants were directed to complete a brief (“immediate”) follow-up survey. Two weeks after completion of the initial session (baseline survey, intervention video, and immediate follow-up survey), participants were sent a follow-up survey via automated email invitation through REDCap. If the participant did not complete the two-week follow-up survey, they received up to two additional automated email reminders. Participants received a $20 gift card for an online retailer for completion of each of the two survey time points (“initial” session and the two-week follow-up survey).

Safety

Each recruitment landing page provided a link to a separate safety resources page (identified with: “Please click here if you are interested in learning more about Protecting Your Health, if you are in crisis, or if you think you may need help regarding a substance abuse problem”; the substance use language was featured given the goal of addressing alcohol use risk). The safety resource page included the website and phone number for the National Suicide Prevention Lifeline and contact information and websites for national substance use and mental health services. A safety protocol was developed to convey these resources directly to participants if they displayed signs of distress/crisis in communication with the research team via the study email. The study email included an automatic reply to any incoming message that contained these resources and notified participants that messages were not guaranteed to be viewed instantly and that the research account was not routinely monitored on evenings or weekends. These safety procedures were critical for study approval by the Institutional Review Board.

Survey Measures

Survey data were also used to ascertain platform yield; participants were asked how they heard about the survey and invited to select all that apply from a preset list, including “other” (free text).

Participants provided demographic data (eg, age, sex, race/ethnicity, parental education, school year, enrollment, and college/university attended) and health/diabetes management information (eg, age diagnosed, last hemoglobin A1c [HbA1c], insulin pump use, continuous glucose monitoring [CGM] use, average blood glucose tests per day, self-rated health). Participants also provided data on several measures designed to assess the effectiveness of the intervention (ie, alcohol/substance use behaviors and attitudes). Participants were asked about their likelihood of study participation based on hypothetical recruitment methods and rated each of seven options from “very unlikely” to “very likely.” Survey time and date stamps tracked completion across time-points and determined time to complete each study component.

Assessing Data Validity

Because we relied on existing software that did not have a setting to prevent duplicative or fraudulent entries, we applied a series of decision rules to identify invalid records (ie, the same individual completing the survey multiple times) and excluded them post hoc. Records were categorized as invalid if (1) the time to watch the video and complete the immediate follow-up survey was <5 minutes AND (2) a repeated email address was noted across multiple records (where repetition is defined as identical replication of a previous email, with or without only one differentiating number or letter, or a pattern of multiple, three or more, appearances of the same word combination; N = 54 records from seven email clusters, with each cluster likely from a distinct individual) OR multiple qualitative/free text responses were verbatim “copy and paste” from survey text (N = 35). Applying these rules identified 89 invalid records out of a total of 227; these records were subsequently excluded from our analytic sample.
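A minimal sketch of how rules of this kind might be applied programmatically is given below. Several choices are assumptions rather than the study procedure: the rules are grouped as "fast completion AND (repeated email OR pasted free text)", every member of an email cluster is flagged, the "same word combination" pattern is omitted, and the `Record` fields, function names, and example addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Record:
    record_id: int
    email: str
    minutes_to_complete: float  # video plus immediate follow-up survey
    pasted_free_text: bool      # reviewer-coded: free text copied verbatim from survey text


def within_one_edit(a: str, b: str) -> bool:
    """True if a and b are identical or differ by one substitution, insertion, or deletion."""
    if abs(len(a) - len(b)) > 1:
        return False
    if len(a) > len(b):
        a, b = b, a  # make a the shorter (or equal-length) string
    i = j = edits = 0
    while i < len(a) and j < len(b):
        if a[i] == b[j]:
            i += 1
            j += 1
            continue
        edits += 1
        if edits > 1:
            return False
        if len(a) == len(b):
            i += 1  # substitution: advance both strings
        j += 1      # otherwise skip the extra character in the longer string
    return edits + (len(b) - j) <= 1


def flag_invalid(records, max_minutes=5.0):
    """Return record_ids flagged as invalid under the assumed rule grouping."""
    emails = [r.email.strip().lower() for r in records]
    repeated = set()
    for i in range(len(records)):
        for j in range(i + 1, len(records)):
            if within_one_edit(emails[i], emails[j]):
                repeated.update((records[i].record_id, records[j].record_id))
    return {
        r.record_id
        for r in records
        if r.minutes_to_complete < max_minutes
        and (r.record_id in repeated or r.pasted_free_text)
    }


example = [
    Record(1, "sam1@example.com", 2, False),
    Record(2, "sam2@example.com", 1, False),
    Record(3, "alex@example.org", 12, False),
    Record(4, "pat@example.net", 3, True),
    Record(5, "lee@example.net", 15, True),
]
print(sorted(flag_invalid(example)))
```

In this hypothetical example, records 1 and 2 share a near-identical address and were completed quickly, record 4 was completed quickly with pasted text, and record 5 is retained because its completion time exceeds the threshold. Flags of this kind would still warrant manual review before exclusion.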

Statistical Analysis

All analyses were performed using SAS 9.4 (Cary, NC). To ascertain the impact of excluding invalid records from our sample, we calculated descriptive statistics for the full sample (N = 227), valid cases (N = 138), and invalid records (N = 89); differences in characteristics between valid and invalid records were assessed using appropriate bivariate tests. To compare the features of (valid) participants (N = 138) across recruitment platform, we calculated descriptive statistics for each recruitment platform and sampling frame endorsed by participants; as participants could endorse multiple referral sources, differences in characteristics were assessed for each source (eg, saw recruitment materials on Facebook vs did not see any recruitment materials on Facebook) using appropriate bivariate tests. Overall summaries of outreach platform acceptability among valid cases were characterized descriptively. Finally, sample representativeness was assessed by comparing descriptive characteristics of valid cases to user features derived from GA and to previously described characteristics of the CDN source population;47 differences were assessed using appropriate bivariate tests.
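As an illustration of one such bivariate test, the sketch below computes a Pearson chi-square statistic for a 2×2 table using the sex-by-validity counts reported in Table 1. The analyses in this study were run in SAS; this Python version, the choice of an uncorrected chi-square test, and the function name are assumptions made for illustration only.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the 2x2 table [[a, b], [c, d]].

    Returns (statistic, p_value). With 1 degree of freedom, the upper-tail
    probability equals erfc(sqrt(statistic / 2)).
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("every row and column needs at least one observation")
    stat = n * (a * d - b * c) ** 2 / denom
    return stat, math.erfc(math.sqrt(stat / 2))


# Sex by record validity, counts taken from Table 1:
#            valid  invalid
# male         27      54
# female      111      35
stat, p = chi_square_2x2(27, 54, 111, 35)
print(f"chi-square = {stat:.2f}, p = {p:.2e}")
```

The resulting P value falls well below .0001, consistent with the value reported for sex in Table 1.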

Results

Although no participant communication to study emails indicated safety concerns or sought additional safety information, there were two visits to the safety resource information page listed on the study website.

Over half of all study webpage users (337 of 596, Appendix 1) accessed the study website within the first day after recruitment posts went live and 105 participants progressed through to study consent within the first day. Facebook was the highest yield recruitment source (endorsed by 41% of respondents; Figure 1).

Among the 227 data records, 60.8% were determined to be valid cases (Table 1). Compared to valid cases, invalid records were significantly more likely to have been recruited from TuDiabetes Twitter or Instagram posts and differed substantially across most sociodemographic and health characteristics.

Table 1.

Descriptive Characteristics of Valid Versus Invalid Records.

| | Total | Valid | Invalid | P value |
|---|---|---|---|---|
| | 227 | 138 (60.8%) | 89 (39.2%) | |
| Participation | | | | |
| Completed immediate follow-up | 223 (98.2%) | 134 (97.1%) | 89 (100.0%) | .1051 |
| Time to complete immediate follow-up (in minutes) | | | | <.0001 |
| Median (interquartile range) | 8.00 (2.0-13.0) | 12.00 (9.0-16.0) | 1.00 (1.0-3.0) | |
| Completed 2-week follow-up | 192 (84.6%) | 122 (88.4%) | 70 (78.7%) | .0469 |
| Randomization | | | | .8499 |
| Peer-delivered materials | 114 (50.2%) | 70 (50.7%) | 44 (49.4%) | |
| Provider-delivered materials | 113 (49.8%) | 68 (49.3%) | 45 (50.6%) | |
| Sociodemographics | | | | |
| Age at baseline (years) | | | | .1292 |
| Mean (standard deviation) | 20.63 (1.57) | 20.49 (1.53) | 20.83 (1.62) | |
| Sex | | | | <.0001 |
| Male | 81 (35.7%) | 27 (19.6%) | 54 (60.7%) | |
| Female | 146 (64.3%) | 111 (80.4%) | 35 (39.3%) | |
| Race/ethnicity | | | | .0005 |
| White, non-Hispanic | 201 (88.5%) | 114 (82.6%) | 87 (97.8%) | |
| Hispanic or non-white | 26 (11.5%) | 24 (17.4%) | 2 (2.2%) | |
| Parental education | | | | <.0001 |
| ≤High school or unknown | 17 (7.5%) | 11 (8.0%) | 6 (6.7%) | |
| Some college, no degree | 26 (11.5%) | 20 (14.5%) | 6 (6.7%) | |
| Associate’s degree | 85 (37.4%) | 14 (10.1%) | 71 (79.8%) | |
| Bachelor’s degree | 54 (23.8%) | 48 (34.8%) | 6 (6.7%) | |
| Graduate degree | 45 (19.8%) | 45 (32.6%) | 0 (0.0%) | |
| Year in school | | | | <.0001 |
| Freshman | 43 (18.9%) | 19 (13.8%) | 24 (27.0%) | |
| Sophomore | 62 (27.3%) | 42 (30.4%) | 20 (22.5%) | |
| Junior | 87 (38.3%) | 42 (30.4%) | 45 (50.6%) | |
| Senior | 21 (9.3%) | 21 (15.2%) | 0 (0.0%) | |
| 5th year or grad student | 14 (6.2%) | 14 (10.1%) | 0 (0.0%) | |
| Enrollment status | | | | .0006 |
| Full-time | 210 (92.5%) | 121 (87.7%) | 89 (100.0%) | |
| Part-time or other | 17 (7.5%) | 17 (12.3%) | 0 (0.0%) | |
| Region of college/university | | | | .0685 |
| US, Northeast | 77 (33.9%) | 38 (27.5%) | 39 (43.8%) | |
| US, Midwest | 53 (23.3%) | 35 (25.4%) | 18 (20.2%) | |
| US, South | 76 (33.5%) | 51 (37.0%) | 25 (28.1%) | |
| US, West | 17 (7.5%) | 10 (7.2%) | 7 (7.9%) | |
| Outside US | 4 (1.8%) | 4 (2.9%) | 0 (0.0%) | |
| Health and diabetes management | | | | |
| Age at diagnosis (years) | | | | <.0001 |
| Mean (standard deviation) | 12.11 (4.81) | 10.88 (5.17) | 14.03 (3.41) | |
| Last hemoglobin A1c (HbA1c, %) | | | | .1721 |
| Mean (standard deviation) | 7.57 (1.23) | 7.62 (1.26) | 7.50 (1.19) | |
| Insulin pump use | | | | <.0001 |
| Current user | 205 (90.3%) | 116 (84.1%) | 89 (100.0%) | |
| Not a current user | 22 (9.7%) | 22 (15.9%) | 0 (0.0%) | |
| Continuous glucose monitoring (CGM) use | | | | <.0001 |
| Current user | 172 (75.8%) | 83 (60.1%) | 89 (100.0%) | |
| Not a current user | 55 (24.2%) | 55 (39.9%) | 0 (0.0%) | |
| Average blood sugar checks | | | | .0195 |
| 0-2 times/day | 48 (21.1%) | 25 (18.1%) | 23 (25.8%) | |
| 3-4 times/day | 91 (40.1%) | 52 (37.7%) | 39 (43.8%) | |
| 5-6 times/day | 59 (26.0%) | 36 (26.1%) | 23 (25.8%) | |
| 7+ times/day | 29 (12.8%) | 25 (18.1%) | 4 (4.5%) | |
| Self-rated health | | | | <.0001 |
| Fair/poor | 25 (11.0%) | 14 (10.1%) | 11 (12.4%) | |
| Good | 105 (46.3%) | 61 (44.2%) | 44 (49.4%) | |
| Very good | 67 (29.5%) | 54 (39.1%) | 13 (14.6%) | |
| Excellent | 30 (13.2%) | 9 (6.5%) | 21 (23.6%) | |
| Recruitment source | | | | |
| Sampling frame (a) | | | | |
| College Diabetes Network (CDN) | 148 (65.2%) | 87 (63.0%) | 61 (68.5%) | .3961 |
| TuDiabetes | 40 (17.6%) | 13 (9.4%) | 27 (30.3%) | <.0001 |
| Other source | 45 (19.8%) | 42 (30.4%) | 3 (3.4%) | <.0001 |
| Platform (a) | | | | |
| CDN Facebook | 74 (32.6%) | 49 (35.5%) | 25 (28.1%) | .2444 |
| CDN Twitter | 10 (4.4%) | 4 (2.9%) | 6 (6.7%) | .1684 |
| TuDiabetes Facebook | 19 (8.4%) | 8 (5.8%) | 11 (12.4%) | .0813 |
| TuDiabetes Twitter | 8 (3.5%) | 0 (0.0%) | 8 (9.0%) | .0030 |
| TuDiabetes Instagram | 3 (1.3%) | 0 (0.0%) | 3 (3.4%) | .0299 |
| CDN Newsletter | 68 (30.0%) | 37 (26.8%) | 31 (34.8%) | .1978 |
| TuDiabetes Newsletter | 5 (2.2%) | 1 (0.7%) | 4 (4.5%) | .0589 |
| TuDiabetes Website | 4 (1.8%) | 3 (2.2%) | 1 (1.1%) | .5571 |
| Other (from free text response) | | | | .8970 |
| Friend | 19 (8.4%) | 18 (13.0%) | 1 (1.1%) | |
| Family/doctor | 11 (4.8%) | 10 (7.2%) | 1 (1.1%) | |
| Other | 19 (8.4%) | 18 (13.0%) | 1 (1.1%) | |

Column percentages are shown. Note that invalid records likely do not reflect unique individuals, instead representing duplicative entries from a small number of persons.

(a) Sampling frame and platform are not mutually exclusive.

Characteristics of valid cases also differed by recruitment platform (Table 2). Cases recruited from Twitter were more likely to be male and reported a lower HbA1c on average. Cases recruited from a website banner were more likely to be non-white or Hispanic, and have been diagnosed at an older age. Other qualitative (nonsignificant) differences in year in school, region, and diabetes management were observed. Differences between individuals recruited from CDN (more educated parents) versus TuDiabetes (older age, living outside the United States, in worse health) were also noted.

Table 2.

Descriptive Characteristics by Recruitment Platform and Sampling Frame Among Valid Cases.

| | Recruitment platform | | | | | Sampling frame | |

|---|---|---|---|---|---|---|---|

| | Facebook | Twitter | Newsletter | Website | Other only | CDN | TuDiabetes |

| Total (N = 138) | 68 (60.8%) | 4 (2.9%) | 39 (28.3%) | 5 (3.6%) | 28 (20.3%) | 87 (63.0%) | 13 (9.4%) |

| Participation | |||||||

| Immediate follow-up | ** | ||||||

| Completed | 98.5% | 100.0% | 97.4% | 100.0% | 92.9% | 100.0% | 92.3% |

| Time to complete (minutes) | † | * | |||||

| Median (interquartile range) | 12.0 (8.0-15.0) | 13.5 (12.0-20.5) | 14.0 (11.0-20.0) | 11.0 (8.0-13.0) | 12.0 (10.0-18.0) | 12.0 (9.0-18.0) | 12.0 (8.0-19.0) |

| Two week follow-up | |||||||

| Completed | 88.2% | 75.0% | 87.2% | 100.0% | 85.7% | 90.8% | 84.6% |

| Randomization | |||||||

| Peer-delivered materials | 45.6% | 50.0% | 48.7% | 40.0% | 57.1% | 50.6% | 46.2% |

| Provider-delivered materials | 54.4% | 50.0% | 51.3% | 60.0% | 42.9% | 49.4% | 53.8% |

| Sociodemographics | |||||||

| Age at baseline (years) | † | † | † | * | |||

| Mean (standard deviation) | 20.65 (1.43) | 20.00 (0.82) | 20.44 (1.60) | 22.20 (2.39) | 20.04 (1.26) | 20.33 (1.37) | 21.77 (1.79) |

| Sex | ** | ||||||

| Male | 16.2% | 75.0% | 12.8% | 40.0% | 21.4% | 18.4% | 15.4% |

| Female | 83.8% | 25.0% | 87.2% | 60.0% | 78.6% | 81.6% | 84.6% |

| Race/ethnicity | * | † | |||||

| White, non-Hispanic | 85.3% | 100.0% | 84.6% | 40.0% | 78.6% | 87.4% | 69.2% |

| Hispanic or non-white | 14.7% | 0.0% | 15.4% | 60.0% | 21.4% | 12.6% | 30.8% |

| Parental education | ** | ||||||

| ≤High school or Unknown | 7.4% | 0.0% | 7.7% | 20.0% | 7.1% | 5.7% | 15.4% |

| Some college, no degree | 16.2% | 0.0% | 7.7% | 0.0% | 21.4% | 10.3% | 23.1% |

| Associate’s degree | 7.4% | 0.0% | 12.8% | 20.0% | 10.7% | 5.7% | 23.1% |

| Bachelor’s degree | 39.7% | 50.0% | 41.0% | 20.0% | 21.4% | 44.8% | 7.7% |

| Graduate degree | 29.4% | 50.0% | 30.8% | 40.0% | 39.3% | 33.3% | 30.8% |

| Year in school | |||||||

| Freshman | 7.4% | 0.0% | 17.9% | 0.0% | 25.0% | 12.6% | 0.0% |

| Sophomore | 33.8% | 50.0% | 20.5% | 20.0% | 32.1% | 27.6% | 30.8% |

| Junior | 36.8% | 50.0% | 33.3% | 20.0% | 17.9% | 36.8% | 30.8% |

| Senior | 14.7% | 0.0% | 15.4% | 20.0% | 17.9% | 14.9% | 15.4% |

| 5th year or grad student | 7.4% | 0.0% | 12.8% | 40.0% | 7.1% | 8.0% | 23.1% |

| Enrollment status | |||||||

| Full-time | 85.3% | 100.0% | 89.7% | 80.0% | 92.9% | 87.4% | 84.6% |

| Part-time or other | 14.7% | 0.0% | 10.3% | 20.0% | 7.1% | 12.6% | 15.4% |

| Region of college/university | ** | ||||||

| US, Northeast | 25.0% | 25.0% | 25.6% | 20.0% | 35.7% | 26.4% | 23.1% |

| US, Midwest | 22.1% | 50.0% | 35.9% | 40.0% | 21.4% | 29.9% | 23.1% |

| US, South | 39.7% | 25.0% | 30.8% | 20.0% | 35.7% | 37.9% | 15.4% |

| US, West | 10.3% | 0.0% | 5.1% | 0.0% | 7.1% | 4.6% | 23.1% |

| Outside US | 2.9% | 0.0% | 2.6% | 20.0% | 0.0% | 1.1% | 15.4% |

| Health and diabetes management | |||||||

| Age at diagnosis (years) | * | ||||||

| Mean (standard deviation) | 10.90 (5.36) | 12.75 (7.59) | 10.59 (4.92) | 16.00 (5.57) | 9.80 (4.94) | 10.79 (4.98) | 11.88 (6.39) |

| Last Hb A1c (%) | * | ||||||

| Mean (standard deviation) | 7.70 (1.45) | 6.43 (0.85) | 7.47 (1.18) | 7.20 (0.82) | 7.75 (0.89) | 7.58 (1.35) | 7.84 (1.38) |

| Insulin pump use | * | ||||||

| Current user | 88.2% | 75.0% | 87.2% | 60.0% | 21.4% | 87.4% | 61.5% |

| Not a current user | 11.8% | 25.0% | 12.8% | 40.0% | 78.6% | 12.6% | 38.5% |

| CGM use | † | ||||||

| Current user | 58.8% | 50.0% | 64.1% | 40.0% | 39.3% | 64.4% | 38.5% |

| Not a current user | 41.2% | 50.0% | 35.9% | 60.0% | 60.7% | 35.6% | 61.5% |

| Average blood sugar checks | |||||||

| 0-2 times/day | 16.2% | 25.0% | 20.5% | 0.0% | 21.4% | 18.4% | 15.4% |

| 3-4 times/day | 32.4% | 50.0% | 46.2% | 40.0% | 32.1% | 42.5% | 30.8% |

| 5-6 times/day | 30.9% | 0.0% | 20.5% | 40.0% | 25.0% | 23.0% | 38.5% |

| 7+ times/day | 20.6% | 25.0% | 12.8% | 20.0% | 25.0% | 16.1% | 15.4% |

| Self-rated health | * | ||||||

| Fair/poor | 13.2% | 0.0% | 10.3% | 0.0% | 7.1% | 9.2% | 30.8% |

| Good | 44.1% | 25.0% | 35.9% | 80.0% | 46.4% | 40.2% | 46.2% |

| Very good | 33.8% | 75.0% | 48.7% | 20.0% | 42.9% | 44.8% | 15.4% |

| Excellent | 8.8% | 0.0% | 5.1% | 0.0% | 3.6% | 5.7% | 7.7% |

Column percentages are shown. Recruitment sources (Facebook, Twitter, Newsletter, Website) are not mutually exclusive; “Other Only” includes those who did not endorse or mention any of the aforementioned platforms. Sampling frames (CDN, TuDiabetes) are not mutually exclusive. As such, each comparison is between those who endorsed a given recruitment source/sampling frame and those who did not (eg, the 68 participants who endorsed seeing the study via Facebook, shown, were compared against the 70 participants who did not endorse seeing the study via Facebook, omitted).

Statistical significance is denoted as: †P < .10. *P < .05. **P < .01.

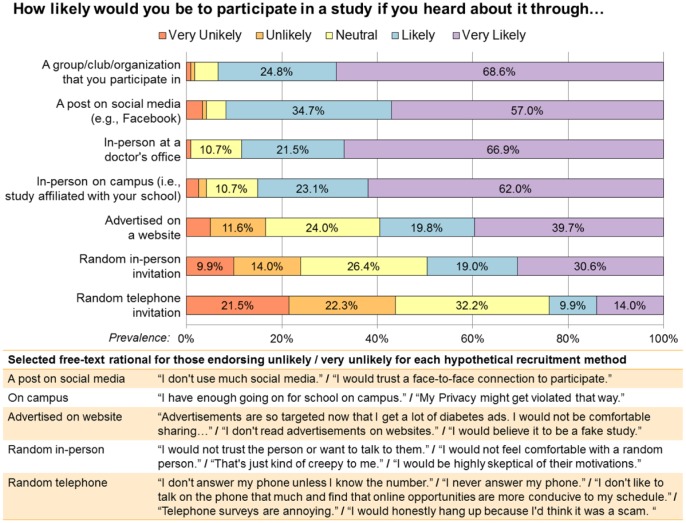

Participants reported high acceptability for future recruitment through a post on social media, with 91.7% reporting that they would be likely or very likely to participate (Figure 2). Lowest acceptability was for web-based advertisement (59.5%), random in-person invitation (49.6%), and random telephone invitation (24.0%). For the least accepted forms of recruitment, participants reported concerns about credibility/trustworthiness and overwhelmingly noted that they do not answer phone calls from unknown numbers.

Figure 2.

Acceptability of recruitment methods.

At two-week follow-up, all participants (N = 122 valid cases) were asked to report their likelihood of participating in a study if they heard about it through each of seven potential recruitment methods, rating their likelihood on a five-point Likert scale from “very unlikely” (red) to “very likely” (purple). Prevalence of reported likelihood for each method is shown in the horizontal bars. Respondents who endorsed being unlikely or very unlikely to participate for a hypothetical recruitment method were then asked to explain why; selected free-text responses that are representative of these reasons are provided.

Finally, the analytic sample of valid cases closely resembled the user distribution of sex and country, obtained from GA, and was similar to the CDN population with respect to reported population characteristics (Appendix 2).

Discussion

We demonstrate that recruitment of a traditionally hard-to-reach population (college students with T1D) into a longitudinal comparative effectiveness intervention trial via outreach through social media is feasible, efficient, acceptable to participants, and yields a sample that is largely representative of the user-base from which the sample was drawn. As there are subtle differences in participant characteristics across different recruitment platforms, researchers may wish to consider diversifying recruitment efforts across platforms to maximize sample diversity in future research. Tracking of initial engagement with recruitment posts and subsequent drop-off at each stage of the study is facilitated by off-the-shelf software and preexisting platforms.

A major criticism of Internet-based recruitment is that the underlying population (“denominator”) is ill-defined37 and it may thus be hard to identify issues with sample representativeness. Although these issues are not always clearly addressed even when more traditional recruitment methods are utilized, we demonstrate that relying on well-defined social media spaces and careful tracking of engagement from initial post through study completion can be used to shed light on the potential for selection bias and issues with external validity/generalizability. User demographics derived from recruitment landing pages were taken to reflect the population of invited participants, and though limited, aligned closely with demographics of valid cases. Moreover, more extensive comparison of demographics revealed that valid cases were similar to the predominant sampling frame (CDN), with small differences in year in school and age. Although this sample is likely not representative of all college students with T1D (eg, given our higher proportion of female respondents), these analyses suggest participant loss during sampling efforts and refusal were nondifferential and led to a sample that was fairly representative of the sampling frame. Importantly, achieving sample representativeness was likely affected by relying on different modalities (ie, social media versus Internet-based) and platforms (eg, Facebook, Twitter) as differences in user features are known to exist across social media platforms and users (versus nonusers),29 and these underlying differences were reflected in our data as well.

Overall, social media appears to be a highly acceptable way to recruit young people to participate in clinical research, with acceptability nearly equivalent to that of recruitment in a doctor’s office but perhaps with higher potential for retention, as we achieved complete follow-up for 88.4% of valid cases. Youth exist online to a greater degree than older populations,29 so it is unsurprising that hosting all trial components virtually enabled their participation. Interestingly, when asked why they were unlikely to participate in studies with a phone invitation, participants overwhelmingly noted that they generally do not answer their phones (especially from unknown callers) and that their schedules are often not conducive to phone calls; as such, the utility of using telephone calls for initial recruitment or even study follow-up for AYA may be limited. Endeavoring to provide youth with opportunities to participate in research virtually, including through approaches that support online consent and follow-up, may optimize representativeness and retention.

Undertaking this study in partnership with two large and very different organizations enabled highly efficient implementation of a rigorous trial with online distributed cohorts, use of online consenting and safety procedures, and collection of valuable information about recruiting and about health status and behaviors. Summaries of this information were made available to partners to “close the loop” and ensure optimal use of study information, consistent with principles of community-based participatory research within an online ecosystem. Limitations were present (Table 3), notably the high prevalence of invalid records, which likely reflects provision of a small remuneration for participation (common in research) without a mechanism to gatekeep duplicate entries (ie, a “one IP address, one survey” algorithm or use of browser cookies, both advisable for future studies). While such gatekeeping protections are not guaranteed to prevent all duplicate entries by motivated individuals, they likely would have reduced the number observed here. Application of post hoc decision rules based on process measures (eg, time to complete) enabled exclusion of invalid records and could be applied similarly in other studies.
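As an illustration of how post hoc decision rules of this kind might be applied, the following minimal Python sketch flags records that were completed implausibly quickly or that share an email address with another record. The field names, the 120-second threshold, and the duplicate-email rule are assumptions chosen for illustration; they are not the rules used in this study.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Record:
    record_id: str
    email: str
    seconds_to_complete: float


# Hypothetical threshold: surveys finished faster than this are treated as suspect.
MIN_SECONDS = 120.0


def flag_invalid(records, min_seconds=MIN_SECONDS):
    """Return {record_id: [reasons]} for records that trip any decision rule."""
    # Normalize emails so that case and stray whitespace do not hide duplicates.
    email_counts = Counter(r.email.strip().lower() for r in records)
    flags = {}
    for r in records:
        reasons = []
        if r.seconds_to_complete < min_seconds:
            reasons.append("too_fast")
        if email_counts[r.email.strip().lower()] > 1:
            reasons.append("duplicate_email")
        if reasons:
            flags[r.record_id] = reasons
    return flags
```

Flagged records would still warrant manual review before exclusion, since a shared email or a fast completion time is suggestive rather than conclusive.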

Table 3. Lessons Learned and Implications for Future Studies.

| Lesson learned | Technical approach and design implications |
|---|---|
| Participatory research and partnership building | While buying ads on social media platforms has gained momentum as a recruitment source for research, some populations do not find ad-based recruitment acceptable or compelling (as evidenced by study data; Figure 2). Rather, this population (college students) values a trusted source. As such, partnering with existing organizations (trusted by their user base) was a highly efficient and acceptable method to solicit participation. Creating successful collaborations involved engaging organizations in study design decisions and offering data feedback as a value add for collaborators. |
| Human subject protections and participant safety | Standard practice for implementing participant protections, including use of informed consent and provision of clear notification around safety monitoring, can be adapted to an entirely online environment. However, researchers must balance asking survey questions where the responses would warrant a clinical response (eg, acute suicidality) with the fact that participants may not have a direct connection to care, and they must consider their ethical responsibility to act on information provided as part of a survey. For research that is not able to provide access to a clinician in response to such safety concerns, appropriately scoping the survey is warranted. |
| Data security and privacy | As with any research, careful attention to data security and patient privacy is vital. Researchers should consider how data collected virtually is stored and transmitted (this is especially true if collecting sensitive data). Using secure servers to host websites and data collection via the REDCap platform, which has built-in functionality to store data securely, were helpful. As email addresses (considered Protected Health Information) were collected for follow-up and remuneration, a process was implemented to store this information separately from survey data (with potential for linkage) to protect against inadvertent disclosure and generate a deidentified dataset. |
| Use of off-the-shelf tools | Especially in instances of limited budgets or timelines (that would prohibit working with a web developer to build/customize needed software/platforms), existing tools are available and can be adapted to the needs of this study. Use of these existing tools was cost effective and allowed for quick implementation. |
| Virtual clinical trial | A substantial hurdle to running a trial virtually was the need to randomize participants in real time and in an automated fashion (anticipating that many participants would enter the survey outside of traditional working hours). Although REDCap did not initially have the capacity to perform such automated randomization, the research team worked with their developers to create, implement, and test an algorithm to accomplish these goals. Importantly, hosting the entirety of the trial in a virtual environment likely facilitated participation and enabled heterogeneity in the study sample (in terms of geographic location and other participant characteristics), while carefully designing access routes into the trial and controlling participant experiences during participation helped to protect internal validity. |
| Recruitment from multiple platforms | As differences are known to exist in the user base of different social media platforms and across the networks of our partner organizations, it was important to diversify recruitment efforts to maximize sample yield and improve external validity. Duplicating efforts across platforms and organizations also likely had a multiplier effect whereby the cost to us was minimal but resulted in participation gains. |
| Use of online distributed cohorts and issues with generalizability | Our data and those of others indicate that adolescents/young adults prefer to be reached/engaged via online channels, the use of which provides important access to diverse and traditionally hard-to-reach groups. Nevertheless, there are limitations in understanding the denominator (underlying population) in many social media/online spaces and so generalizability remains a concern. While this study was strengthened by assessment of sample representativeness and implemented well-defined recruitment paths, efforts are unlikely to yield a perfectly representative sample of larger populations that exist off-line. But just as there are potential biases from the fact that users of our recruitment platforms may differ from nonusers (as well as that responders may also differ from nonresponders), parallel biases exist in other recruitment methods (eg, clinic attendees and nonattendees); so care should always be taken to understand whether factors that may contribute to populations filtering into a sampling frame and then into a sample are likely to be associated with key characteristics under investigation and to select appropriate recruitment strategies accordingly. |
| Protection against duplicative/false/invalid records | A major concern of Internet-based research is the potential for false participation, especially when monetary compensation may encourage duplicative entries. Careful application of anticipatory or post hoc protections is recommended to minimize invalid records. These considerations are essential and feasible at the pre- and post-data collection stages; for instance, using browser cookies or allowing one survey entry per IP address (pre) or creating decision rules or implementing test questions (eg, asking specific questions that only the population of interest would have easy knowledge of and eliminating records that provide incorrect or illogical answers) to identify records that are unlikely to be valid (post). Further, instead of offering compensation for each completed record (as done here), remuneration in the form of a lottery or by donation to an outside organization may be less likely to incentivize duplicative/false records. |
| Academic setting | Rigorous research operating in an outside setting can be done such that it aligns with the core values and practices of human subject protections and IRB governance, an important advance that protects the pathway of academic research undertaken in partnership with private and outside organizations. |
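The need for real-time, automated randomization noted in Table 3 can be illustrated with a minimal Python sketch of permuted-block randomization, which keeps arms balanced as participants enroll at any hour. This is a generic technique offered as an assumption; the algorithm actually built with the REDCap developers is not described here, and the arm names, block size, and class name below are hypothetical.

```python
import random


class BlockRandomizer:
    """Assign participants to arms on demand using permuted blocks."""

    def __init__(self, arms=("intervention", "control"), block_size=4, seed=None):
        if block_size % len(arms) != 0:
            raise ValueError("block_size must be a multiple of the number of arms")
        self._arms = tuple(arms)
        self._block_size = block_size
        self._rng = random.Random(seed)
        self._queue = []  # assignments remaining in the current block

    def _new_block(self):
        # Each block contains every arm equally often, in shuffled order.
        block = list(self._arms) * (self._block_size // len(self._arms))
        self._rng.shuffle(block)
        return block

    def assign(self):
        """Return the arm for the next enrolling participant."""
        if not self._queue:
            self._queue = self._new_block()
        return self._queue.pop()
```

In use, a single `BlockRandomizer` instance would be called once per consenting participant; within every completed block of four assignments, each arm appears exactly twice. A production system would also need to persist the queue and log each assignment so that allocation survives restarts and can be audited.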

Conclusion

The unique challenges of studies recruiting via social media often raise concerns about safety, efficiency, generalizability, and validity. We demonstrate the feasibility of implementing a rigorous trial that engaged and retained a hard-to-reach group, using tracking to quantify sample representativeness and post hoc protections to minimize invalid records. Partnering with multiple platforms and using off-the-shelf tools can optimize sample diversity, increasing external validity at no cost to internal validity. In light of our success in virtually recruiting for and conducting a comparative-effectiveness trial targeting a traditionally hard-to-reach population, researchers may wish to implement similar strategies for both observational and experimental studies, the results of which can further be used to evaluate the effectiveness and acceptability of these methods.

Footnotes

Abbreviations: AYA, adolescent(s) and young adult(s); CDN, College Diabetes Network; CGM, continuous glucose monitoring; GA, Google Analytics; HbA1c, hemoglobin A1c; T1D, type 1 diabetes.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: We wish to acknowledge the generous funding support for this project provided by the Boston Children’s Hospital Research Faculty Council Awards Committee Pilot Research Project Funding FP01017994 (co-PIs: LEW and ERW), the Agency for Healthcare Research and Quality K12HS022986 (PI: Finkelstein), and the Conrad N. Hilton Foundation Clinical Research 20140273 (co-PIs: Levy and ERW). The trial was registered at ClinicalTrials.gov (#NCT02883829).

Supplemental Material: Supplemental material for this article is available online.

References

- 1. Barnard K, Sinclair JM, Lawton J, Young AJ, Holt RI. Alcohol-associated risks for young adults with type 1 diabetes: a narrative review. Diabet Med. 2012;29:434-440.

- 2. Barnard KD, Dyson P, Sinclair JM, et al. Alcohol health literacy in young adults with type 1 diabetes and its impact on diabetes management. Diabet Med. 2014;31:1625-1630.

- 3. Harjutsalo V, Feodoroff M, Forsblom C, Groop PH, FinnDiane Study G. Patients with type 1 diabetes consuming alcoholic spirits have an increased risk of microvascular complications. Diabet Med. 2014;31:156-164.

- 4. Jaser SS, Yates H, Dumser S, Whittemore R. Risky business: risk behaviors in adolescents with type 1 diabetes. Diabetes Educ. 2011;37:756-764.

- 5. Edwards D, Noyes J, Lowes L, Haf Spencer L, Gregory JW. An ongoing struggle: a mixed-method systematic review of interventions, barriers and facilitators to achieving optimal self-care by children and young people with type 1 diabetes in educational settings. BMC Pediatr. 2014;14:228.

- 6. Polfuss M, Babler E, Bush LL, Sawin K. Family perspectives of components of a diabetes transition program. J Pediatr Nurs. 2015;30:748-756.

- 7. American Diabetes Association. Economic costs of diabetes in the US in 2012. Diabetes Care. 2013;36:1033-1046.

- 8. Hingson RW, Zha W, Weitzman ER. Magnitude of and trends in alcohol-related mortality and morbidity among U.S. college students ages 18-24, 1998-2005. J Stud Alcohol Drugs Suppl. 2009;16:12-20.

- 9. Weitzman ER, Magane KM, Wisk LE, Allario J, Harstad E, Levy S. Alcohol use and alcohol-interactive medications among medically vulnerable youth. Pediatrics. 2018;142:1-9. https://pediatrics.aappublications.org/content/pediatrics/142/4/e20174026.full.pdf

- 10. Lemly DC, Lawlor K, Scherer EA, Kelemen S, Weitzman ER. College health service capacity to support youth with chronic medical conditions. Pediatrics. 2014;134:885-891.

- 11. Wisk LE, Weitzman ER. Substance use patterns through early adulthood: results for youth with and without chronic conditions. Am J Prev Med. 2016;51:33-45.

- 12. Park CL. Positive and negative consequences of alcohol consumption in college students. Addict Behav. 2004;29:311-321.

- 13. Walters ST, Bennett ME, Noto JV. Drinking on campus. What do we know about reducing alcohol use among college students? J Subst Abuse Treat. 2000;19:223-228.

- 14. Weitzman ER, Nelson TF, Wechsler H. Taking up binge drinking in college: the influences of person, social group, and environment. J Adolesc Health. 2003;32:26-35.

- 15. White ND. Alcohol use in young adults with type 1 diabetes mellitus. Am J Lifestyle Med. 2017;11:433-435.

- 16. Weitzman ER, Ziemnik RE, Huang Q, Levy S. Alcohol and marijuana use and treatment nonadherence among medically vulnerable youth. Pediatrics. 2015;136:450-457.

- 17. Hanna KM, Weaver MT, Slaven JE, Fortenberry JD, DiMeglio LA. Diabetes-related quality of life and the demands and burdens of diabetes care among emerging adults with type 1 diabetes in the year after high school graduation. Res Nurs Health. 2014;37:399-408.

- 18. Garg S, Chase HP. Treatment of adolescents with type 1 diabetes mellitus. J Pediatr Endocrinol Metab. 2004;17:805-806.

- 19. Jaser SS, White LE. Coping and resilience in adolescents with type 1 diabetes. Child Care Health Dev. 2011;37:335-342.

- 20. MacNaught N, Holt P. Type 1 diabetes and alcohol consumption. Nurs Stand. 2015;29:41-47.

- 21. Chiauzzi E, Green TC, Lord S, Thum C, Goldstein M. My student body: a high-risk drinking prevention web site for college students. J Am Coll Health. 2005;53:263-274.

- 22. Bost ML. A descriptive study of barriers to enrollment in a collegiate Health Assessment Program. J Community Health Nurs. 2005;22:15-22.

- 23. Faden VB, Day NL, Windle M, et al. Collecting longitudinal data through childhood, adolescence, and young adulthood: methodological challenges. Alcohol Clin Exp Res. 2004;28:330-340.

- 24. Chu JL, Snider CE. Use of a social networking web site for recruiting Canadian youth for medical research. J Adolesc Health. 2013;52:792-794.

- 25. Pedersen ER, Helmuth ED, Marshall GN, Schell TL, PunKay M, Kurz J. Using Facebook to recruit young adult veterans: online mental health research. JMIR Res Protoc. 2015;4:e63.

- 26. Ramo DE, Prochaska JJ. Broad reach and targeted recruitment using Facebook for an online survey of young adult substance use. J Med Internet Res. 2012;14:e28.

- 27. Ramo DE, Rodriguez TM, Chavez K, Sommer MJ, Prochaska JJ. Facebook recruitment of young adult smokers for a cessation trial: methods, metrics, and lessons learned. Internet Interv. 2014;1:58-64.

- 28. Pew Research Center Internet and Technology. Internet/Broadband Fact Sheet. Washington, DC: Pew Research Center Internet and Technology; 2018.

- 29. Smith A, Anderson M. Social Media Use in 2018. Washington, DC: Pew Research Center; 2018.

- 30. Lane TS, Armin J, Gordon JS. Online recruitment methods for web-based and mobile health studies: a review of the literature. J Med Internet Res. 2015;17:e183.

- 31. Chiauzzi E, Pujol LA, Wood M, et al. painACTION-back pain: a self-management website for people with chronic back pain. Pain Med. 2010;11:1044-1058.

- 32. Pagoto SL, Waring ME, Schneider KL, et al. Twitter-delivered behavioral weight-loss interventions: a pilot series. JMIR Res Protoc. 2015;4:e123.

- 33. Spruijt-Metz D, Hekler E, Saranummi N, et al. Building new computational models to support health behavior change and maintenance: new opportunities in behavioral research. Transl Behav Med. 2015;5:335-346.

- 34. Weitzman ER, Adida B, Kelemen S, Mandl KD. Sharing data for public health research by members of an international online diabetes social network. PLOS ONE. 2011;6:e19256.

- 35. Weitzman ER, Kelemen S, Mandl KD. Surveillance of an online social network to assess population-level diabetes health status and healthcare quality. Online J Public Health Inform. 2011;3:1-12. https://journals.uic.edu/ojs/index.php/ojphi/article/view/3797/3124

- 36. Whitaker C, Stevelink S, Fear N. The use of Facebook in recruiting participants for health research purposes: a systematic review. J Med Internet Res. 2017;19:e290.

- 37. Chunara R, Wisk LE, Weitzman ER. Denominator issues for personally generated data in population health monitoring. Am J Prev Med. 2017;52:549-553.

- 38. Hays RD, Liu H, Kapteyn A. Use of Internet panels to conduct surveys. Behav Res Methods. 2015;47:685-690.

- 39. Bailey J, Mann S, Wayal S, Abraham C, Murray E. Digital media interventions for sexual health promotion-opportunities and challenges: a great way to reach people, particularly those at increased risk of sexual ill health. BMJ. 2015;350:h1099.

- 40. Blandford A, Gibbs J, Newhouse N, Perski O, Singh A, Murray E. Seven lessons for interdisciplinary research on interactive digital health interventions. Digit Health. 2018;4:2055207618770325.

- 41. Lavallee DC, Wicks P, Alfonso Cristancho R, Mullins CD. Stakeholder engagement in patient-centered outcomes research: high-touch or high-tech? Expert Rev Pharmacoecon Outcomes Res. 2014;14:335-344.

- 42. Michie S, Yardley L, West R, Patrick K, Greaves F. Developing and evaluating digital interventions to promote behavior change in health and health care: recommendations resulting from an international workshop. J Med Internet Res. 2017;19:e232.

- 43. Murray E, Hekler EB, Andersson G, et al. Evaluating digital health interventions: key questions and approaches. Am J Prev Med. 2016;51:843-851.

- 44. Wicks P. Could digital patient communities be the launch pad for patient-centric trial design? Trials. 2014;15:172.

- 45. Wicks P, Stamford J, Grootenhuis MA, Haverman L, Ahmed S. Innovations in e-health. Qual Life Res. 2014;23:195-203.

- 46. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377-381.

- 47. Saylor J, Lee S, Ness M, Ambrosino JM, et al. Positive health benefits of peer support and connections for college students with type 1 diabetes mellitus. Diabetes Educ. 2018;44:340-347.
