Abstract

This article proposes quantitative answers to meta-scientific questions including ‘how much knowledge is attained by a research field?’, ‘how rapidly is a field making progress?’, ‘what is the expected reproducibility of a result?’, ‘how much knowledge is lost from scientific bias and misconduct?’, ‘what do we mean by soft science?’, and ‘what demarcates a pseudoscience?’. Knowledge is suggested to be a system-specific property measured by K, a quantity determined by how much of the information contained in an explanandum is compressed by an explanans, which is composed of an information ‘input’ and a ‘theory/methodology’ conditioning factor. This approach is justified on three grounds: (i) K is derived from postulating that information is finite and knowledge is information compression; (ii) K is compatible and convertible to ordinary measures of effect size and algorithmic complexity; (iii) K is physically interpretable as a measure of entropic efficiency. Moreover, the K function has useful properties that support its potential as a measure of knowledge. Examples given to illustrate the possible uses of K include: the knowledge value of proving Fermat’s last theorem; the accuracy of measurements of the mass of the electron; the half life of predictions of solar eclipses; the usefulness of evolutionary models of reproductive skew; the significance of gender differences in personality; the sources of irreproducibility in psychology; the impact of scientific misconduct and questionable research practices; the knowledge value of astrology. Furthermore, measures derived from K may complement ordinary meta-analysis and may give rise to a universal classification of sciences and pseudosciences. Simple and memorable mathematical formulae that summarize the theory’s key results may find practical uses in meta-research, philosophy and research policy.

Keywords: meta-research, meta-science, reproducibility, bias, knowledge, pseudoscience

1. Introduction

A science of science is flourishing in all disciplines and promises to boost discovery on all research fronts [1]. Commonly branded ‘meta-science’ or ‘meta-research’, this rapidly expanding literature of empirical studies, experiments, interventions and theoretical models explicitly aims to take a ‘bird’s eye view’ of science and a decidedly cross-disciplinary approach to studying the scientific method, which is dissected and experimented upon as any other topic of academic inquiry. To fully mature into an independent field, meta-research needs a fully cross-disciplinary, quantitative and operationalizable theory of scientific knowledge—a unifying paradigm that, in simple words, can help tell apart ‘good’ from ‘bad’ science.

This article proposes such a meta-scientific theory and methodology. By means of analyses and practical examples, it suggests that a system-specific quantity named ‘K’ can help answer meta-scientific questions including ‘how much knowledge is attained by a research field?’, ‘how rapidly is a field making progress?’, ‘what is the expected reproducibility of a result?’, ‘how much knowledge is lost from scientific bias and misconduct?’, ‘what do we mean by soft science?’, and ‘what demarcates a pseudoscience?’.

The theoretical and methodological framework proposed in this article is built upon basic notions of classic and algorithmic information theory, which have been rarely used in a meta-research context. The key innovation introduced is a function that, it will be argued, quantifies the essential phenomenology of knowledge, scientific or otherwise. This approach rests upon a long history of advances made in combining epistemology and information theory. The concept that scientific knowledge consists in pattern encoding can be traced back at least to the polymath and father of positive philosophy August Comte (1798–1857) [2], and the connection between knowledge and information compression ante litteram to the writings of Ernst Mach (1838–1916) and his concept of ‘economy of thought’ [3]. Claude Shannon’s theory of communication gave a mathematical language to quantify information [4], whose applications to physical science were soon examined by Léon Brillouin (1889–1969) [5]. The independent works of Solomonoff, Kolmogorov and Chaitin gave rise to algorithmic information theory, which dispenses of the notion of probability in favour of that of complexity and compressibility of strings [6]. The notion of learning as information compression was formalized in Rissanen’s minimum description length principle [7], which has fruitful and expanding applications in statistical inference and machine learning [8,9]. From a philosophical perspective, the relation between knowledge and information was explored by Fred Dretske [10], and a computational philosophy of science was elaborated by Paul Thagard [11]. To the best of the author’s knowledge, however, the main ideas and formulae presented in this article were never proposed before (see Discussion for further details).

The article is organized as follows. In §2, the core mathematical approach is presented. This verges on a single equation, the K function, whose terms are described in §2.1, and whose derivation and justification are described in §2.2 by a theoretical, a statistical and a physical argument. Section 2.3 explains and discusses properties of the K function. These properties further support the claim that K is a universal quantifier of knowledge, and they lay out the bases for developing a methodology. The methodology is illustrated in §3, which offers practical examples of how the theory may help answer typical meta-research questions. These questions include: how to quantify theoretical and empirical knowledge (§3.1 and 3.2, respectively), how to quantify scientific progress within or across fields (§3.3), how to forecast reproducibility (§3.4), how to estimate the knowledge value of null and negative results (§3.5), how to compare the knowledge costs of bias, misconduct and QRP (§3.6) and how to define a ‘soft’ science (§3.8) and a pseudoscience (§3.7). These results are expressed in simple and memorable formulae (table 1), and are further summarized in §4, where the theory’s predictions, limitations and testability are discussed. The essay’s sections make cross-reference to each other but can be read in any order with little loss of comprehensibility.

Table 1.

K theory’s answers to meta-scientific questions.

| question | formula | interpretation | section |

|---|---|---|---|

| How much knowledge is contained in a theoretical system? | K = h | Logico-deductive knowledge is a lossless compression of noise-free systems. Its value is inversely related to complexity and directly related to the extent of domain of application. | 3.1 |

| How much knowledge is contained in an empirical system? | K = k × h | Empirical knowledge is lossy compression. It is encoded in a theory/methodology whose predictions have a non-zero error. It follows that Kempirical < Ktheoretical. | 3.2 |

| How much progress is a field making? | Progress occurs to the extent that explanandum and/or explanatory power expand more than the explanans. This is the essence of consilience. | 3.3 | |

| How reproducible is a research finding? | The ratio between the K of a study and its replication Kr is an exponentially declining function of the distance between their systems and/or methodologies. | 3.4 | |

| What is the value of a null or negative result? | The knowledge yielded by a single conclusive negative result is an exponentially declining function of the total number of hypotheses (theories, methods, explanations or outcomes) that remain untested. | 3.5 | |

| What is the cost of research fabrication, falsification, bias and QRP? | The K corrected for a questioned methodology is inversely proportional to the methodology’s relative description length times the bias it generates (B). | 3.6 | |

| When is a field a pseudoscience? | A pseudoscience results from a hyper-biased theory/methodology that produces net negative knowledge. Conversely, a science has . | 3.7 | |

| What makes a science ‘soft’? | Compared to a harder science (H), a softer science (S) yields relatively lower knowledge at the cost of relatively more complex theories and methods. | 3.8 |

2. Analysis

2.1. The quantity of knowledge

At the core of the theory and methodology proposed, which will henceforth be called ‘K-theory’, is the claim that knowledge is a system-specific property measured by a quantity symbolized by a ‘K’ and given by the function

| 2.1 |

in which each term represents a quantify of information. What is information? In a very general and intuitive sense, information consists in questions we do not have answers to, or, equivalently, it consists in answers to those questions. Any object or event y that has a probability p(y) carries a quantity of information equal to

| 2.2 |

that quantifies the number of questions with A possible answers that we would need to ask to determine y. The logarithm’s base, A, could have any value, but we will always assume that A = 2 and therefore that information is measured in ‘bits’, i.e. in binary questions. Shannon’s entropy

| 2.3 |

is the expected value of the information in a random variable Y. A sequence of events, objects or random variables, for example, a string of bits 101100011 · · ·, is of course just another object, event or random variable, and therefore is quantifiable by the same logic [6,12].

The three terms in function (2.1) are defined as follows:

-

—

Y constitutes the explanandum, latin for ‘what is to the explained’. Examples of explananda include: response variables in regression analysis, physical properties to be measured, experimental outcomes, unknown answers to questions.

-

—

X and τ together constitute the explanans, latin for ‘what does the explaining’. In particular,

-

(a)

X will be referred to as the ‘input’, and it will represent information acquired externally. Examples of inputs include: results of any measurement, explanatory variables in regression analysis, physical constants, arbitrary methodological decisions and all other factors that are not ‘rigidly’ encoded in the theory or methodology.

-

(b)

τ will be referred to as the ‘theory’ or ‘methodology’. A typical τ is likely to contain both a description of the relation between Y and X, as well as a specification of all other conditions that allow the relationship between X and Y to manifest. Examples of τ include: an algorithm to reproduce Y, a description of a physical law relating Y to X, a description of the methodology of a study or a field (i.e. description of how subjects are selected, how measurements are made, etc.).

-

(a)

Specific examples of all of these terms will be offered repeatedly throughout the essay. Mathematically, all three terms ultimately consist of sequences, produced by random variables and therefore characterized by a specific quantity of information. In the cases most typically discussed in this essay, explanandum and input will be assumed to be sequences of lengths nY and nX, respectively, resulting from a series of independent identically distributed random variables, Y and X, with discrete alphabets , probability distributions pY, pX and therefore Shannon entropy H(Y) and H(X).

The object representing the theory or methodology τ will be typically more complex than Y and X, because it will consist in a sequence of independent random variables (henceforth, RVs) that have distinctive alphabets (are non-identical) and are all uniformly distributed. This sequence of RVs represents the sequence of choices that define a theory and/or methodology. Indicating with T a RV with uniform probability distribution PT, resulting from a sequence of l RVs Ti ∈ {T1, T2 … Tl} each with a probability distribution , we have

| 2.4 |

The alphabet of each individual RV composing τ may have size greater than or equal to 2, with equality corresponding to a binary choice. For example, let τ correspond to the description of three components of a study’s method: τ = (‘randomized’, ‘human subject’, ‘female’). In the simplest possible condition, this sequence represents a draw from three independent binary choices: 1 = ‘randomized vs not’, 2 = ‘human vs not’, 3 = ‘female vs not’. Representing each choice as a binary RV Ti, the probability of τ is Pr{T1 = τ1} × Pr{T2 = τ2} × Pr{T3 = τ3} = 0.53 = 0.125 and its information content is 3 bits.

Equivalent and useful formulations of equation (2.1) are

| 2.5 |

and

| 2.6 |

in which

| 2.7 |

will be referred to as the ‘effect’ component, because it embodies what is often quantified by ordinary measures of effect size (§2.2.2), and

| 2.8 |

will be referred to as the ‘hardness’ component, because it quantifies the informational costs of a methodology, which is connected to the concept of ‘soft science’, as will be explained in §3.8.

2.2. Why K is a measure of knowledge

Why do we claim that equation (2.1) quantifies the essence of knowledge? This section will offer three different arguments. First, a theoretical argument, which illustrates the logic by which the K function was originally derived, i.e. following two postulates about the nature of information and knowledge. Second, a statistical argument, which illustrates how the K function includes the quantities that are typically computed in ordinary measures of effect size. Third, a physical argument, which explains how the K function, unlike ordinary measures of effect size or information compression, has a direct physical interpretation in terms of negentropic efficiency.

2.2.1. Theoretical argument: K as a measure of pattern encoding

Equation (2.1) is the mathematical translation of two postulates concerning the nature of the phenomenon we call knowledge:

-

(i)

Information is finite. Whatever its ultimate nature may be, reality is knowable only to the extent that it can be represented as a set of discrete, distinguishable states. Although in theory the number of states could be infinite (countably infinite, that is), physical limitations ensure that the number of states that are actually represented and processed never is or can be infinite.

-

(ii)

Knowledge is information compression. Knowledge is manifested as an encoding of patterns that connect states, thereby permitting the anticipation of states not yet presented, based on states that are presented. All forms of biological adaptation consist in the encoding of patterns and regularities by means of natural selection. Human cognition and science are merely highly derived manifestations of this process.

Physical, biological and philosophical arguments in support of these two postulates are offered in appendix A.

The most general quantification of patterns between finite states is given by Shannon’s mutual information function

| 2.9 |

in which is Shannon’s entropy (equation (2.3)). The mutual information function is completely free from any assumption concerning the random variables involved (figure 1). In order to turn equation (2.9) into an operationalizable quantity of knowledge, we formalize the following properties:

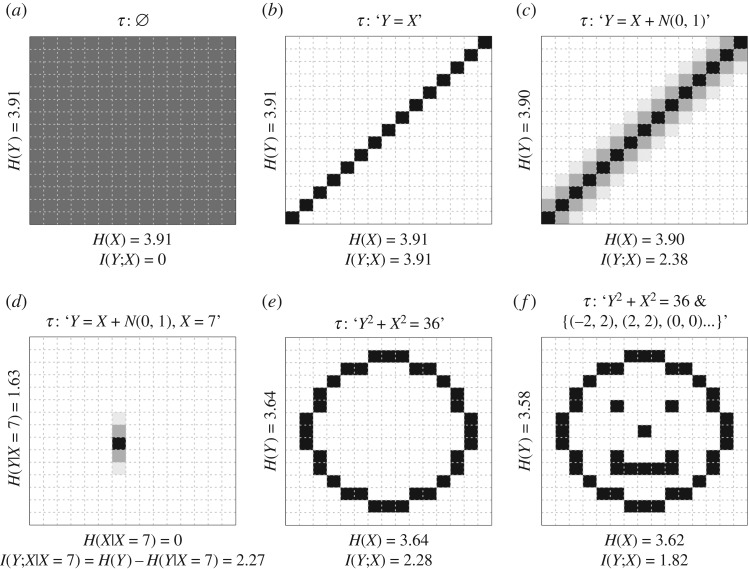

Figure 1.

Pictorial representation of various patterns, with corresponding values of entropy and mutual information. The descriptions of the patterns τ are purely illustrative and not necessarily literal descriptions of what the pattern encodings would look like in practice. The intensity of grey in each cell represents the relative probability of occurrence of different cell values, with black= 1 and white= 0. The entropy and mutual information values were calculated by normalizing the cell values in the table or at the margins. For further details, see the source code in electronic supplementary material.

-

(i)

The pattern between Y and X is explicitly expressed by a conditioning. We therefore posit the existence of a third random variable, T, with alphabet , such that H(Y, X|T) = H(Y|T) + H(Y|X, T), or H(Y, X|T) = H(Y) + H(X) if . Unlike Y and X, T is assumed to be uniformly distributed, and therefore the size of its alphabet is , where n is the minimum number of bits required to describe each τ in the set. The uniform distribution of T also implies that H(T) = −logPr{T = τ} = n.

-

(ii)

The mutual information expressing the pattern as described above is standardized (i.e. divided by the total information content of its own terms), in order to allow comparisons between different systems.

The two requirement above lead us to formulate knowledge as resulting from the contextual, system-specific connection of the quantities, defined by the following equation:

| 2.10 |

in which, to simplify the notation, we will typically use H(Y) in place of H(Y|T) and H(X) in place of H(X|T).

Note how, at this stage, the value computed by equation (2.10) is potentially very low, because is the average value of the conditional entropy for every possible theory of description length −log p(τ). The more complex is the average , the larger is the number of possible theories of equivalent description length, and therefore the smaller is the proportion of theories τi that yield H(Y|X, T = τi) < H(Y) (because most realizable theories are likely to be nonsensical).

Knowledge is realized because, from all possible theories, only a specific theory (or possibly a subset of theories) is selected (figure 2). This selection is not merely a mathematical fiction, but is typically the result of Darwinian natural selection and/or other analogous neurological, memetic and computational processes. The details of how a τ is arrived at, however, need not concern us because, in mathematical terms, the result of a selection process is the same: the selection ‘fixes’ the random variable T in equation (2.10) on a particular realization , with two consequences. On the one hand, the entropy of T goes to zero (because there is no longer any uncertainty about T), but on the other hand, the selection itself entails a non-zero amount of information.

Figure 2.

Pictorial representation of a set of theories of a given description length that condition the relation between two variables. This set constitutes the alphabet of the uniformly distributed random variable T, from which a specific theory/methodology, in this case τ55, is selected. For further discussion, see text.

Since T has a uniform distribution, the information necessary to identify this realization of T is simply −logP(T = τ) = log 2l(τ) = l(τ), which is the shortest description length of τ (e.g. the minimum number of binary questions needed to identify τ in the alphabet of T). This quantity constitutes an informational cost that needs to be computed in the standardized equation (2.10). Therefore, we get

| 2.11 |

Equation (2.1) is arrived at by generalizing (2.11) to the case in which the knowledge encoded by τ is applied to multiple independent realizations of explanandum and/or input, which are counted by the nY and nX terms, respectively.

2.2.2. Statistical argument: K as a universal measure of effect size

Despite having been derived theoretically and being potentially applicable to phenomena of any kind, i.e. not merely statistical ones, equation (2.1) bears structural similarities with ordinary measures of statistical effect size. Such similarities ought not to be surprising, in retrospect. Statistical measures of effect size are intended to quantify knowledge about patterns between variables, and so K would be expected to reflect them. Indeed, structural analogies between the K function and other measures of effect size offer further support for the theoretical argument made above that K is a general quantifier of knowledge.

To illustrate such similarities, it is useful to point out that the value of the K function can be approximated from the quantization of any continuous probability distribution. For information to be finite as required by the K function, the entropy of a normally distributed quantized random variable XΔ can be approximated by , in which σ is the standard deviation rescaled to a lowest decimal (for example, from σ = 0.123 to σ = 123, further details in appendix B).

There is a clear structural similarity between the k component of equation (2.6) and the coefficient of determination R2. Since the entropy of a random variable is a monotonically increasing function of the variable’s dispersion (e.g. its variance), this measure is directly related to K. For example, if Y and Y|X are continuous normally distributed RVs with variance σY and σY|X, respectively, then R2 is a function of K,

| 2.12 |

in which TSS is the total sum of squares, SSE is the sum of squared errors, n is the sample size and f(·) represents an undefined function. The adjusted coefficient of determination is also directly related to K since

| 2.13 |

with A = (n − 1)/(n − k − 1).

From this relation follows that multiple ordinary measures of statistical effects size used in meta-analysis are also functions of K. For example, for any two continuous random variables, R2 = r2, with r the correlation coefficient. And since most popular measures of effect size used in meta-analysis, including Cohen’s d and odds ratios, are approximately convertible to and from r [13], they are also convertible to K.

The direct connection between K and measures of effect size like Cohen’s d implies that K is also related to the t and the F distributions, which are constructed as ratios between the amount of what is explained and what remains to be explained, and are therefore constructed similarly to an ‘odds’ transformation of K

| 2.14 |

Other more general tests, such as the Chi-squared test, can be shown to be an approximation of the Kullback–Leibler distance between the probability distributions of observed and expected frequencies [12]. Therefore, they are a measure of the mutual information between two random variables, i.e. the same measure on which the K function is built.

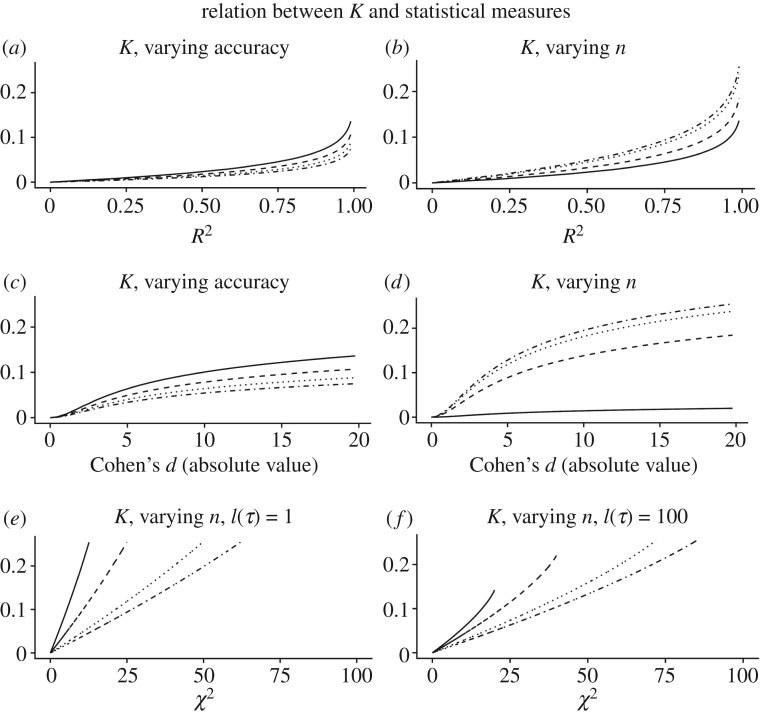

Figure 3 illustrates how these are not merely structural analogies, because K can be approximately or exactly converted to ordinary measures of effect size. As the figure illustrates, K stands in one-to-one correspondence with ordinary measures of effect sizes, but its specific value is modulated by additional variables that are critical to knowledge and that are ignored by ordinary measures of effect size. Such variables include the size of the theory or methodology describing the pattern, which is always non-zero, the number of repetitions (which, depending on analyses, may correspond to the sample size or to the intended total number of uses of a τ); the resolution (e.g. accuracy of measurement, §2.3.6); distance in time and space and methods (§2.3.5) and Ockham’s razor (§2.3.1). The latter property also makes K conceptually analogous to measures of minimum description length, discussed below.

Figure 3.

Relation between K and common measures of effect size, with varying conditions of accuracy (i.e. of resolution, see §2.3.6), number of repetitions n (i.e. the nY in equation (2.1)) and size of τ. The relation with R2 and Cohen’s d was derived assuming a normal distribution of the explanandum. Increasing accuracy, in this case, corresponded to calculating entropies with a standard deviation measured with one additional significant digit, at each step, from solid line to dotted line. The values of n for R2 and Cohen’s d were, from dotted to solid line, 1, 2, 10, 100, respectively. The relation with χ2 was derived from the probability distribution of a 2 × 2 contingency table. From solid to dotted line, the value of n was 20, 40, 80, 100, and the description length of τ was 1 bit for (a,c,e), and 100 bits for (b,d,f). The code used to generate these and all other figures is available in electronic supplementary material.

Minimum description length principle. The minimum description length (MDL) principle is a formalization of the principle of inductive inference and of Ockham’s razor that has many potential applications in statistical inference, particularly with regard to the problem of model selection [8]. In its most basic formulation, the MDL principle states that the best model to explain a dataset is the one that minimizes the quantity

| 2.15 |

in which L(H) is the description length of the hypothesis (i.e. a candidate model for the data) and L(D|H) is the description length of the data given the model. The K equation has equivalent properties to equation (2.15), with L(H) ≡ −log p(τ) and L(D|H) ≡ nYH(Y|X, τ). Therefore, the values that minimize equation (2.15) maximize the K function.

The reader may question why, if K is equivalent to existing statistical measures of effect size and MDL, we could not just use the latter to quantify knowledge. There are at least three reasons. The first reason is that only K is a universal measure of effect size. The quantity measured by K is completely free from any distributional assumptions about the subject matter being assessed. It can be applied not only to quantitative data with any distribution (e.g. figure 1), but also to any other explanandum that has a finite description length (although this potential application will not be examined in detail in this essay). In essence, K can be applied to anything that is quantifiable in terms of information, which means any phenomenon that is the object of cognition—any phenomenon amenable to being ‘known’.

The second reason is that, as illustrated above, K takes into account factors that are overlooked by ordinary measures of effect size or model fit, and therefore is a more complete representation of knowledge phenomena (figure 3).

The third reason is that, unlike any of the statistical and algorithmic approaches mentioned above, K has a straightforward physical interpretation, which is presented in the next section.

2.2.3. Physical argument: K as a measure of negentropic efficiency

The physical interpretation of equation (2.1) follows from the physical interpretation of information, which was revealed by the solution to the famous paradox known as Maxwell’s Demon. In the most general formulation of this Gedankenexperiment, the demon is an organism or a machine that is able to manipulate molecules of a gas, for example, by operating a trap door, and is thus able to segregate molecules that move at higher speed from those that move at lower speed, seemingly without dissipation. This created a theoretical paradox as it would contradict the second law of thermodynamics, according to which no process can have as its only result the transfer of heat from a cooler to a warmer body.

In one variant of this paradox, called the ‘pressure demon’, a cylinder is immersed in a heat bath and has a single ‘gas’ molecule moving randomly inside it. The demon inserts a partition right in the middle of the cylinder, thereby trapping the molecule in one half of the cylinder’s volume. It then operates a measurement to assess in which half of the cylinder the molecule is, and pushes down, with a reversible process, a piston in the half that is empty. The demon could then remove the partition, allowing the gas molecule to push the piston up, and thus extract work from the system, apparently without dissipating any energy.

Objections to the paradox that involve the energetic costs of operating the machine or of measuring the position of the particle [5] were proven to be invalid, at least from a theoretical point of view [6,14]. The conclusive solution to the paradox was given in 1982 by Charles Bennett, who showed that dissipation in the process occurred as a byproduct of the demon’s need to process information [15]. In order to know which piston to lower, the demon must memorize the position of the molecule, storing one bit of information, and it must eventually re-set its memory to prepare it for the next measurement. The recording of information can occur with no dissipation, but the erasure of it is an irreversible process that will produce heat that is at least equivalent to the work extracted from the system, i.e kTln2 joules, in which k is Boltzmann’s constant. This solution to the paradox proved that information is a measurable physical quantity.

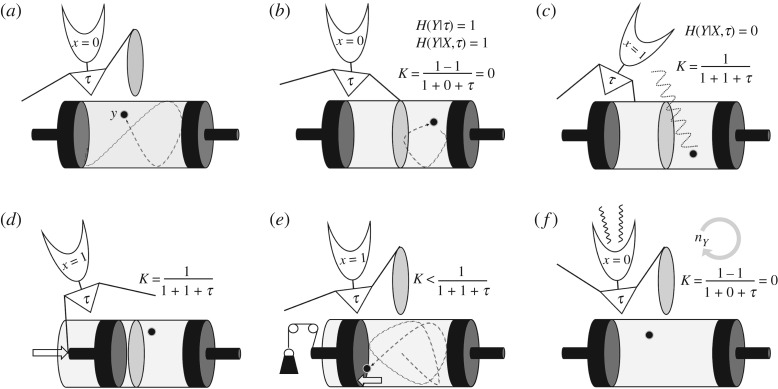

Figure 4 illustrates how the K function relates to Maxwell’s pressure demon. The explanandum H(Y) (which is a shorthand for H(Y|τ), as explained previously) quantifies the entropy, i.e. the amount of uncertainty about the molecule’s position relative to the partition in the cylinder. The input H(X) is the external information obtained by a measurement. The input corresponds to the colloquial notion of ‘information’ as something that is acquired and ‘gives form’ (to subsequent choices, actions, etc.). Since this latter notion of information is a counterpart to the physical notion of information as entropy, it may be perhaps more correctly defined as negentropy [5].

Figure 4.

Illustration of Maxwell’s ‘pressure demon’ paradox, and how it relates to K. (a) The system is set up, described by τ, with a default memory state X = 0. (b) A partition is placed in the cylinder, generating one bit of information in the explanandum Y. The demon has zero knowledge about the molecule’s position. (c) A measurement is made, allowing the position of the molecule to be stored in memory. An amount K of knowledge is now possessed by the demon and put to use. (d) One of the pistons is pushed down allowing work to be extracted from the system. (e) Work is extracted at the expense of the demon’s knowledge. (f) The demon’s knowledge is now zero and its memory is re-set, dissipating entropy in the environment. The cycle will be repeated nY times. See text for further explanations.

The theory τ contains a description of the information-processing structure that allows the Pressure Demon to operate. The extent of this description will depend in part on how the system is defined. A minimal description will include at least an encoding of the identity relation between the state of X and that of Y, i.e. ‘X = Y’ as distinguished from its alternative, ‘X ≠ Y’. This theory requires at least a binary alphabet and therefore one bit of memory storage. A more comprehensive description will include a description of the algorithm that enables the negentropy in X to be exploited—something like ‘if X = left, press down right piston, else, press left piston’. Multiple other aspects of the system may be included in τ. The amount of information contained in the explanandum, for example, is a function of where the partition is laid down, a variable that a truly complete algorithm would need to specify. The broadest possible physical description of the pressure demon ought to encode instructions to set up the entire system, i.e. the heat bath, the partition etc. In other words, a complete τ contains the genetic code to reproduce pressure demons.

The description length of τ will, intuitively, also depend on the language used to describe it. Moreover, some descriptions might be less succinct than others and contain redundancies, unnecessary complexities, etc. From a physical point of view, however, it is well understood that each τ would be characterized by its own specific minimum amount of information, a quantity known as Kolmogorov complexity [6]. This is defined as the shortest program that, if fed into a universal Turing machine, would output the τ and then halt. Mathematical theorems prove that this quantity cannot be computed directly—at least in the sense that one can never be sure to have found the shortest possible program. In practice, however, the Kolmogorov complexity of an object is approximated, by excess, by any information compression algorithm and is independent of the encoding language used, up to a constant. This means that, even though we cannot measure the Kolmogorov complexity in absolute terms, we can measure it rather reliably in relative terms. A τ that is more complex, and/or more redundant than another τ will necessarily have, all else being equal, a longer description length.

Whether we take τ to represent the theoretical shortest possible description length for the demon (in which case −log p(τ) quantifies its Kolmogorov complexity), or whether we assume that it is a realistic, suboptimal description (in which case the description length −log p(τ) is best interpreted in relative terms), the K function expresses the efficiency with which the demon converts information into work. At the start of the cycle, the demon’s K is zero. After measuring the particle’s position, the demon has stored one bit of information (or less, if the partition is not placed in the middle of the cylinder, but we will here assume that it is), and has knowledge K > 0, with the magnitude of K inversely related to the description length of τ. By setting the piston and removing the partition, the demon puts its knowledge to use and extracts k ln 2 of work from it. Once the piston is fully pushed out, the demon no longer knows where the molecule is (K = 0) and yet still has one bit stored in memory, a trace of its last experience. The demon has now two possible options. First, as in Bennett’s solution to the paradox, it can simply erase that bit, re-setting X to the initial state H(X) = 0 and releasing k ln 2 in the environment. At each cycle, the negentropy is renewed via a new measurement, whereas the fixed τ component remains unaltered. Since the position of the molecule at each cycle is independent of previous positions, the total cumulative explanandum (the total entropy that the demon has reduced) grows by one bit, whereas the theory component remains unaltered. For n cycles, the total K is therefore

| 2.16 |

which to the limit of infinite cycles is

| 2.17 |

The value of K = 1/2 constitutes the absolute limit for knowledge that requires a direct measurement and/or a complete and direct description of the explanandum.

Alternatively, the demon could keep the value of X in memory and allocate new memory space for the information to be gathered in the next cycle ([6]). As Bennett also pointed out, in practice it could not do so forever. In any physical implementation of the experiment, the demon would eventually run out of memory space and would be forced to erase some of it, releasing the entropy locked in it. If, ad absurdum, the demon stored an infinite amount of information, then at each cycle the input would grow by one bit yielding

| 2.18 |

which to the limit of infinite cycles is

| 2.19 |

again independent of τ. This is a further argument to illustrate how information is necessarily finite, as we postulated (§2.2.1, see also §2.3.6 for another mathematical argument and appendix A for philosophical and scientific arguments).

More realistically, we can imagine that the number of physical bits available to the demon is finite. As cycles progress, the demon could try to allocate as many resources as possible to the memory X, for example, by reducing the space occupied by τ. This is why knowledge entails compression and pattern encoding (see also §2.3.1).

Elaborations on the pressure demon experiment shed further light on the meaning of K and its implications for knowledge. First, let us imagine that the movement of the gas molecule is not actually random, but that, acted upon by some external force, the molecule periodically and regularly finds itself alternatively on the right and left side of the cylinder, and expands from there. If the demon kept a sufficiently long record of past measurements, say a number z of bits, it might be able to discover the pattern. Its τ could then store a new, slightly expanded algorithm, such as ‘if last position was left, new position is right, else, new position is left’. With this new theory, and one bit of input to determine the initial position of the molecule, the demon could extract unlimited amounts of energy from the heat bath. In this case,

| 2.20 |

which to the limit of infinite cycles is

| 2.21 |

Therefore, the maximum amount of knowledge expressed in a system asymptotically approaches 1. As we would expect, it is higher than the maximum value of 1/2 attained by mere descriptions. Note, however, that K can never actually be equal to 1, since n is never actually infinite and τ cannot be 0.

Intermediate cases are also easy to imagine, in which the behaviour of the molecule is predictable only for a limited number of cycles, say c. In such case, K would increase as the number of necessary measurements nX is reduced to nX/c. At any rate, this example illustrated how the demon’s ability to implement knowledge (in order to extract work, create order, etc.) is determined by the presence of regularities in the explanandum as well as the efficiency with which the demon can identify and encode patterns. Since this ability is higher when the explanans is minimized, the demon (the τ) is selected to be as ‘intelligent’ and ‘informed’ as possible.

As a final case, let us imagine instead that the gas molecule moves at random and that its position is measurable only to limited accuracy. A single measurement yields the position of the molecule with an error η. However, each additional measurement reduces η by a fraction a. The demon, in this case, could benefit from increasing the number of measurements. Indicating with m the number of measurements and with τm the corresponding theory we have

| 2.22 |

that to the limit of infinite cycles is

| 2.23 |

The work extracted at each cycle will be k ln 2 (1 − η × a−m). Therefore, K expresses the efficiency with which work can be extracted from a system, given a certain error rate a and number of measurements m.

2.3. Properties of knowledge

This section will illustrate how K possesses properties that a measure of knowledge would be expected to possess. In addition to offering support for the three arguments given above, these properties underlie some of the results presented in §3.

2.3.1. Ockham’s razor is relative.

As discussed in §2.2.2, the K function encompasses the MDL principle, and therefore computes a quantification of Ockham’s razor. However, the K formulation of Ockham’s razor highlights a property that other formulations overlook: that Ockham’s razor is relative to the size of the explanandum and the number of times a given theory or explanation can be used. For a given Y and X and two alternative theories τ and τ′ that have the same effect H(Y|X, τ) = H(Y|X, τ′) and that can be applied to a number of repetitions nY and n′Y, respectively, we have that

| 2.24 |

and similarly for the case in which τ = τ′ while nX H(X) ≠ n′X H(X′),

| 2.25 |

Therefore, the relative epistemological value of the simplicity of an explanans, i.e. Ockham’s razor, is modulated by the number of times that the explanans can be applied to the explanandum.

2.3.2. Prediction is more costly than explanation, but preferable to it.

The K function can be used to quantify either explanatory or predictive efficiency. The expected (average) explanatory or predictive efficiency of an explanans with regard to an explanandum is measured when the terms of the K function are entropies, i.e. expectation values of uncertainties. If instead the explanandum is an event that has already occurred and that carries information −logP(Y = y), K quantifies the value of an explanation, whose information cost includes the surprisal of explanatory conditions −logP(X = x) and the complexity of the theory linking such conditions to the event, −logP(T = τ). Inference to the best explanation and/or model is, in both these cases, driven by the maximization of K.

If instead it is the explanans, that is pre-determined and fixed, then its predictive power is quantified by how divergent its predictions are relative to observations. To any extent that observations do not match predictions, the observed and predicted distributions will have a non-zero informational divergence, which quantifies the extra amount of information that would be needed to ‘adjust’ the predictions to make them match the observations. It follows that, indicating with the tilde sign the predictive theory, we can calculate an ‘adjusted’ K as

| 2.26 |

in which Kobs = kobsh = K(Y; X, τ) is the K observed, and is the Kullback–Leibler divergence between the observed and the predicted distribution (proof in appendix C). Since , Kadj ≤ Kobs, with equality corresponding to perfect fit between observations and predictions. An analogous formula could be derived for the case in which the explanandum is a sequence, in which case the distance would be calculated following methods suggested in §3.3.3.

Now, note that the observed K is the explanatory K, and therefore is always greater or equal to the predictive K for individual observations. When evidence cumulates, then the explanans of an explanatory K is likely to expand, reducing the cumulative K (§3.3). Replacing a ‘flexible’ explanation with a fixed one avoids these latter cumulative costs, allowing a fixed explanans to be applied to a larger number of cases nY, with no cumulative increase in its complexity.

Therefore, predictive knowledge is simply a more generalized, unchanging form of explanatory knowledge. As intuition would suggests, prediction can never yield more knowledge than a post hoc explanation for a given event (e.g. an experimental outcome). However, predictive knowledge becomes cumulatively more valuable to the extent that it allows to explain, with no changes, a larger number of events, backwards or forwards in time.

2.3.3. Causation entails correlation and is preferable to it

Properties of the K function also suggests why the knowledge we gain from uncovering a cause–effect relation is often, but not always, more valuable than that derived from a mere correlation. Definitions of causality have a long history of subtle philosophical controversies [16], but no definition of causality can dispense with counterfactuals and/or with assuming that manipulating present causes can change future effects [17]. The difference between a mere correlation and a causal relation can be formalized as the difference between two types of conditional probabilities, P(Y = y|X = x) and P(Y = y|do(X = x)), where ‘do(X = x)’ is a shorthand for ‘X|do(X = x)’ and the ‘do’ function indicates the manipulation of a variable. In general, correlation without causation entails P(Y = y) ≤ P(Y = y|X = x) and P(Y = y) = P(Y = y|do(X = x)) whereas causation entails P(Y = y) ≤ P(Y = y|X = x) ≤ P(Y = y|do(X = x)).

If knowledge is exclusively correlational, then K(Y; X = x, τ) > 0 and K(Y; do(X = x), τ) = 0, otherwise K(Y; X = x, τ) > 0 and K(Y; do(X = x), τ) > 0. Hence, all else being equal, the knowledge attainable via causation is larger under a broader set of conditions. Moreover, note that in the correlational case knowledge is only attained once an external input of information is obtained, which has an informational cost nYH(X) > 0. In the causal case, instead, the input has no informational cost, i.e. H(X|do(X = x)) = 0, because there is no uncertainty about the value of X, at least to the extent that the manipulation of the variable is successful. However, the explanans is expanded by an additional τdo(X=x), which is the description length of the methodology to manipulate the value of X. Therefore, the value of causal knowledge is defined as

| 2.27 |

It follows that there is always an such that . Specifically, assuming τ to be constant, causal knowledge is superior to correlational knowledge when .

2.3.4. Knowledge growth requires lossy information compression

Both theoretical and physical arguments suggest that K is maximized when τ is minimized (§2.2). A simple calculation shows that such minimization must eventually consist in the encoding of concisely described patterns, even if such patterns offer an incomplete account of the explanandum, because otherwise knowledge cannot grow indefinitely.

Let τ be a theory that is not encoding a relation between RVs X and Y, but merely lists all possible (x, y) pairs of elements from the respective alphabets, i.e. and . To take the simplest possible example, let each element correspond to one element of . Clearly, such τ would always yield H(Y|X, τ) = 0, but its description length will grow with the factorial of the size of the alphabet. Indicating with s the size of the two alphabets, which in our example have the same length, the size of τ would be proportional to log(s!). As the size of the alphabet grows, knowledge declines because

| 2.28 |

independent of the probability distribution of Y and X. Therefore, as the explanandum is expanded (i.e. its total information and/or complexity grows), knowledge rapidly decreases, unless τ is something other than a listing of (x, y) pairs. In other words, knowledge cannot grow unless τ consists in a relatively short description of some pattern that exploits a redundancy. The knowledge cost of a finite level of error or missing information H(Y|X, τ) > 0 will soon be preferable to an exceedingly complex τ.

2.3.5. Decline with distance in time, space and/or explanans

Everyone’s experience of the physical world suggests that our ability to predict future states of empirical phenomena tends to become less accurate the more ‘distant’ the phenomena are from us, in time or space. Perhaps less immediately obvious, the same applies to explanations: the further back we try to go in time, the harder it becomes to connect the present state of phenomena to past events. These experiences suggest that any spatio-temporal notion of ‘distance’ is closely connected to the information-theoretic notion of ‘divergence’. In other words, our perception that a distance in time or space separates us from objects or events is cognitively intertwined, if not indeed equivalent, to our diminished ability to access and process information about those objects or events and, therefore, to our knowledge about them.

One of the most remarkable properties of K is that it expresses how knowledge changes with informational distances between systems. It can be shown that, under most conditions in which a system contains knowledge, divergence in any component of the system will lead to a decline of K that can be described by a simple exponential function of the form

| 2.29 |

in which A is an arbitrary basis, Y′, X′, τ′ is a system having an overall distance (i.e. informational divergence) from Y, X, τ, and defines the decline rate (proof in appendix D).

2.3.6. Knowledge has an optimal resolution

Accuracy of measurement is a special case of the general informational concept of resolution, quantifiable as the number of bits that are available to describe explanandum and explanans. It can be shown both analytically and empirically that any system Y, X, τ is characterized by a unique optimal resolution that maximizes K (the full argument is offered in appendix E).

We may start by noticing how, even if empirical data is assumed to be measurable to infinite accuracy (against one of the postulates in §2.2.1), the resulting K value will be inversely proportional to measurement accuracy, unless special conditions are met. When K is measured on a continuous, normal and quantized random variable YΔ (§2.2.2), to the limit of infinite accuracy only one of two values is possible,

| 2.30 |

with representing Shannon’s differential entropy function. The upper limit in equation (2.30) occurs if and when h(Y|X, τ) > 0, i.e. by assumption there is a non-zero residual uncertainty that needs to be measured. When this is the case, then the two information terms n brought about by the quantization cancel each other out in the numerator (because the explanandum and the residual error are necessarily measured at the same resolution). This is the typical case of empirical knowledge. The lower limit in equation (2.30) presupposes a priori that h(Y|X, τ) = 0, i.e. the explanandum is perfectly known via the explanans and there is no residual error to be quantized. This case is only represented by logico-deductive knowledge.

We can define empirical systems as intermediate cases, i.e. cases that have a non-zero conditional entropy and have a finite level of resolution. We can show (see appendix E) that all empirical systems have ‘K-optimal’ resolutions and , such that

| 2.31 |

As the resolution increases, K will increase up to a maximal value and then decline.

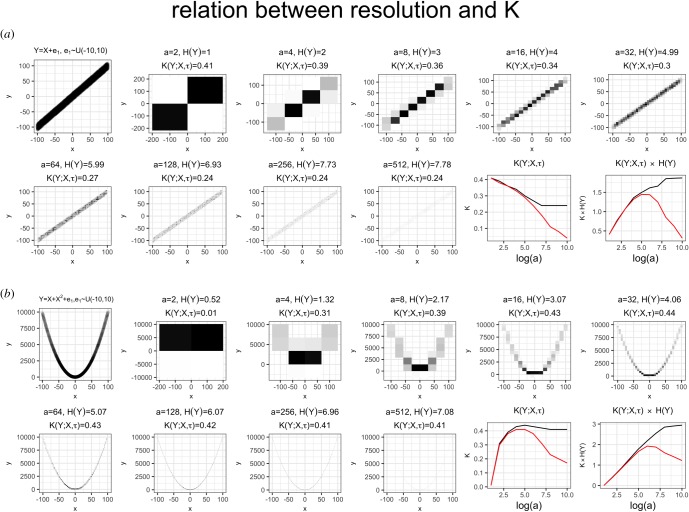

A system’s optimal resolution is partially determined by the shape of the relation between explanandum and explanans in ways that are likely to be system-specific. Two simulations in figure 5 illustrate how both K and H(Y)K may vary depending on resolution.

Figure 5.

Illustrative example of how K varies in relation to the resolution measured for Y and X, depending on the shape of the pattern encoded. The figures and all the calculations were derived from a simulated dataset, in which the pattern linking explanandum to explanans was assumed to have noise with uniform distribution, as described in the top-left plot of each panel. Black line: entropies and K values calculated by maximum-likelihood method (i.e. counting frequencies in each bin). Red line: entropies and K values calculated using the ‘shrink’ method described in [18] (the R code used to generate the figures is provided in electronic supplementary material). Note how the value of K and its rescaled version H(Y)K have a unique maximum.

The dependence of K on resolution reflects its status as a measure of entropic efficiency (§2.2.3) and entails that, to compare systems for which the explanandum is measured to different levels of accuracy, the K value needs to be rescaled. Such rescaling can be attained rather simply, by multiplying the value of K by the entropy of the corresponding explanandum,

| 2.32 |

The resulting product quantifies in absolute terms how many bits are extracted from the explanandum by the explanans.

3. Results

This section will illustrate, with practical examples, how the tools developed so far can be used to answer meta-scientific questions. Each of the questions is briefly introduced by a problem statement, followed by the answer, which comprises a mathematical equation, an explanation and one or more examples. Most of the examples are offered as suggestions of potential applications of the theory, and the specific results obtained should not be considered conclusive.

3.1. How much knowledge is contained in a theoretical system?

Problem: Unlike empirical knowledge, which is amenable to errors that can be verified against experiences, knowledge derived from logical and deductive processes conveys absolute certainty. It might therefore seem impossible to compare the knowledge yield of two different theories, such as two mathematical theorems. The problem is made even deeper by the fact that any logico-deductive system is effectively a tautology, i.e. a system that derives its own internal truths from a set of a priori axioms. How can we quantify the knowledge contained such a system?

Answer: The value of theoretical knowledge is quantified as

| 3.1 |

in which K corresponds to equation (2.1) and h to equation (2.8).

Explanation: Logico-deductive knowledge, like all other forms of knowledge, ultimately consists in the encoding of patterns. Mathematical knowledge, for example, is produced by revealing previously unnoticed logical connections between a statement with uncertainty H(Y) and another statement, which may or may not have uncertainty H(X) (depending on whether X has been proven, postulated or conjectured), via a set of passages described in a proof τ. The latter consists in the derivation of identities, creating an error-free chain of connections such that P(Y|X, τ) = 1.

When the proof of the theorem is correct, the effect component k in equation (2.6), is always equal to one, yielding equation (3.1). However, when the chain of connections τ is replaced with a τ′ at a distance dτ > 0 from it, k is likely to be zero, because even minor modifications of τ (for example, changing a passage in the proof of a theorem) break the chain of identities and invalidate the conclusion. This is equivalent to the case λτ ≈ ∞. Therefore, the reproducibility (§3.4) of mathematical knowledge, as it is embodied in a theorem, is either perfect or null,

| 3.2 |

Alternative valid proofs, however, might also occur, and their K value will be inversely proportional to their length, since a shorter proof yields a higher h.

Once a theorem is proven, its application will usually not require invoking the entire proof τ. In K, we can formalize this fact by letting τ be replaced by a single symbol encoding the nature of the relationship itself. The entropy of τ will in this case be minimized to that of a small set of symbols, e.g. {=, ≠, >, < · · ·}. In such case, the value of the knowledge obtained will be primarily determined by nY, which is the number of times that the theorem will be invoked and used. This leads to the general conclusion that the value of a theory is inversely related to its complexity and directly related to the frequency of its use.

3.1.1. Example: The proof of Fermat’s last theorem

Fermat’s last theorem (henceforth, FLT) states that there is no solution to the equation an + bn = cn when all terms are positive integers and n > 2. The French mathematician Pierre de Fermat (1607–1665) claimed to have proven such statement, but his proof was never found. In 1995, Andrew Wiles published a proof of FLT, winning a challenge that had engaged mathematicians for three centuries [19]. How valuable was Wiles’ contribution?

We can describe the explanandum of FLT as a binary question: ‘does an + bn = cn have a solution’? In absence of any proof τ, the answer can only be obtained by calculating the result for any given set of integers [a, b, c, n]. Let nY be the total plausible number of times that this result could be calculated. Of course, we cannot estimate this number exactly, but we are assured that this number is an integer (because a calculation is either made or not), and that it is finite (because the number of individuals, human or otherwise, who have, will, or might do calculations is finite). Therefore, the explanandum is nYH(Y). For simplicity, we might assume that in absence of any proof, individuals making the calculations are genuinely agnostic about the result, such that H(Y) = 1.

Indicating with τ the maximally succinct (i.e. maximally compressed) description of this proof, the knowledge yielded by it is

| 3.3 |

Here we assume that any input is contained in the proof τ. The information size of the latter is certainly calculable in principle, since, in its most complete form, it will consist in an algorithm that derives the result from a small set of axioms and operations.

Wiles’ proof of FLT is over 100 pages long and is based on highly advanced mathematical concepts that were unknown in Fermat’s times. This suggests that Fermat’s proof (assuming that it existed and was correct) was considerably simpler and shorter than Wiles’. Mathematicians are now engaged in the challenge of discovering such a simple proof.

How would a new, simpler proof compare to the one given by Wiles? Indicating this simpler proof with τ′ and ignoring nY because it is constant and independent of the proof, the maximal gain in knowledge is

| 3.4 |

Equation (3.4) reflects the maximal gain in knowledge obtained by devising a simpler, shorter proof of a previously proven theorem.

Given two theorems addressing different questions, in the more general case, the difference in knowledge yield will depend on the lengths of the respective proofs as well as the number of computations that each theorem allows to be spared. The general formula is, indicating with Y′ and τ′ an explanandum and explanans different from Y and τ,

| 3.5 |

3.2. How much knowledge is contained in an empirical system?

Problem: Science is at once a unitary phenomenon and highly diversified and complex one. It is unitary in its fundamental objectives and in general aspects of its procedures, but it takes a myriad different forms when it is realized in individual research fields, whose diversity of theories, methodologies, practices, sociologies and histories mirrors that of the phenomena being investigated. How can we compare the knowledge obtained in different fields, about different subject matters?

Answer: The knowledge produced by a study, a research field, and generally a methodology is quantified as

| 3.6 |

in which K is given by equation (2.1), k by equation (2.7) and h by equation (2.8).

Explanation: Knowledge entails a reduction of uncertainty, attained by the processing of stored information by means of an encoded procedure (an algorithm, a ‘theory’, a ‘methodology’). Equation (3.6) quantifies the efficiency with which uncertainty is reduced. This is a scale-free, system-specific property. The system is uniquely defined by a combination of explanandum, explanans and theory, the information content of which is subject to physical constraints. Such physical constraints ensure that, among other properties, every system Y, X, τ has an optimal resolution, non-zero and non-infinite, and therefore a unique identifiable value K (§2.3.6). As discussed in §2.3.6, this quantity can also be rescaled to K × H(Y), which gives the total net number of bits that are extracted from the explanandum by the explanans. Since k ≤ 1, theoretical knowledge is typically, although not necessarily always, larger than empirical knowledge. Equation (3.6) applies to descriptive knowledge as well as correlational or causal knowledge, as examples below illustrate.

3.2.1. Example 1: The mass of the electron

Decades of progressively accurate measurements have led to a current estimate of the mass of the electron of me = 9.10938356 ± 11 × 10−31 kg (based on the NIST recommended value [20]), with the error term representing the standard deviation of normally distributed errors. Since this is a fixed number of 39 significant digits, the explanandum is quantified by the amount of storage required to encode it, i.e. a string of information content −logP(Y = y) = 39 × log(10), and the residual uncertainty is quantified by the entropy of the normal distribution of errors with σ = 11. These measurements are obtained by complex methodologies that are in principle quantifiable as a string of inputs and algorithms, −log p(x) −log p(τ). However, the case of physical constants is similar to that of a mathematical theorem, in that the explanans becomes negligible to the extent that the value obtained can be used in a very large number of subsequent applications. Therefore, we estimate our current knowledge of the mass of the electron to be

| 3.7 |

with the last approximation due to the case that the value can be stored and used for a very large nY times, yielding h ≈ 1. More accurate calculations would require estimating the h component, too. In particular, to compare K(me) to the K value of another constant, the relative frequency of use would need to be taken into account. The corresponding rescaled value is K(me) × 39log 10 ≈ 124 bits.

Note that the specific value of K depends on the scale or unit in which me is measured. If it is measured in grams (10−3 kg), for example, then K(me) = 0.954. This reflects the fact that units of measurement are just another definable component of the system: there is no ‘absolute’ value of K, but solely one that is relative to how the system is defined. The relativity of K may lead to difficulties when comparing systems that are widely different from each other (§3.8). However, results obtained comparing systems that are adequately similar to each other are coherent and consistent, as illustrated in the next paragraph.

We could be tempted to ‘cheat’ by rescaling the value of me to a lower number of digits, in order to ignore the current measurement error. For example, we could quantify knowledge for the mass measured to 36 significant digits only (which is likely to cover over three standard deviations of errors, and therefore over of possible values). By doing so, we would obtain K(me) ≈ 1, suggesting that at that level of accuracy, we have virtually perfect knowledge of the mass of the electron. This is indeed the case: we have virtually no uncertainty about the value of me in the first few dozen significant digits. However, note that the rescaled value of K is K(me) × 36 log10 = 119.6 bits. Therefore, by lowering the resolution, our knowledge increased in relative but not in absolute terms.

It should be emphasized that we are measuring here the knowledge value of the mass of the electron in the narrowest possible sense, i.e. by restricting the system to the mass itself. However, the knowledge we derive by measuring (describing) phenomena such as a physical constant has value also in a broader context, in its role as an input required to know other phenomena, as the next example illustrates.

3.2.2. Example 2: Predicting an eclipse

The total solar eclipse that occurred in North America on 21 August 2017 (henceforth, E2017) was predicted with a spatial accuracy of 1–3 km, at least in publicly accessible calculations [21]. This error is mainly due to irregularities in the Moon’s surface and, to a lesser extent, to irregularities of the shape of the Earth. Both sources of error can be reduced further with additional information and calculations (and thus a longer explanans), but we will limit our analysis to this estimate and therefore assume an average prediction error of 4 km2.

What is the value of the explanans for this knowledge? The theory component of the explanans consists in calculations based on the JPL DE405 solar system ephemeris, obtained via numerical integration of 33 equations of motion, derived from a total of 21 computations [22]. In the words of the authors, these equations are deemed to be ‘correct and complete to the level of accuracy of the observational data’ [22], which means that this τ can be used for an indefinite number nY of computations, suggesting that we can assume −logp(τ)/nY ≈ 0.

The input is in this case a defined object of information content H(X) = −logp(x). It contains 98 values of initial conditions, physical constants and parameters, measured to up to 20 significant digits, plus 21 auxiliary constants used to correct previous data, and the radii of 297 asteroids [22]. Assuming for simplicity that on average these inputs take five digits, we estimate the total information of the input to be at least (98 + 21 + 297) × 5 × log10 ≈ 6910 bits. The accuracy of predictions is primarily determined by the accuracy of measurement of these parameters, which moreover are in many cases subject to revision. Therefore, in this case nX/nY > 0, and the value of H(X) is less appropriately neglected. Nonetheless, we will again assume for simplicity that nY ≫ nX and thus h ≈ 1.

Therefore, since the surface of the Earth is approximately 510 072 000 km2, we estimate our astronomical knowledge to be

| 3.8 |

and a rescaled value of K(E2017; X, τ) × log (510 072 000) = 26.9261.

Therefore, the value of K for predicting eclipses is smaller than that obtained for physical constants (§3.2.1). However, our analysis is not complete and it still over-estimates the K value of predicting an eclipse for at least two reasons. First, because the assumption of a negligible explanans for eclipse prediction is a coarser approximation than for physical constants, since physical constant are required to predict eclipses, and not vice versa. Secondly, and most importantly, our knowledge about eclipses is susceptible to declining with distance between explanans and explanandum. This is in stark contrast to the case of physical constants, which are, by definition, unchanging in time and space, such that λy ≈ 0.

What is λ in the case of eclipses? We will not examine here the possible effects of distance in methods, and we will only estimate the knowledge loss rate over time. We can do so by taking the most distant prediction made using the JPL DE405 ephemeris for a total solar eclipse: the one that will occur on 26 April AD 3000 [21]. The estimated error is approximately 7.8° of longitude, which at the predicted latitude of peak eclipse (21.1° N, 18.4° W) corresponds to an error of approximately 815 km in either direction. Therefore, the estimated K for predicting an eclipse 982 years from now is

| 3.9 |

Solving K(E3000; X, τ) = K(E2017; X, τ) × 2−λ×982 yields a knowledge loss rate of

| 3.10 |

per year. Which corresponds to a knowledge half life of λ−1 ≈ 667 years. Therefore our knowledge about the position of eclipses, based on the JPL DE405 methodology, is halved for every 667 years of time-distance to predictions.

3.3. How much progress is a research field making?

Problem: Knowledge is a dynamic quantity. Research fields are known to be constantly evolving, splitting and merging [23]. As evidence cumulates, theories and methodologies are modified, enlarged or simplified, and may be extended to encompass new explananda and explanantia, or conversely may be re-defined to account more accurately for a narrower set of phenomena. To what extent do these dynamics determine scientific progress?

Answer: Progress occurs if and only if the following condition is met:

| 3.11 |

in which H(X′) ≡ ΔH(X) and −logp(τ′) ≡ −Δlogp(τ) are expansions or reductions of explanantia, and k = (H(Y) − H(Y|X, τ))/H(Y), k′ = (H(Y) − H(Y|X, X′, τ, τ′))/H(Y), h = nYH(Y)/(nYH(Y) + nXH(X) − log p(τ)) (see appendix F).

Explanation: Knowledge occurs when progressively larger explananda are accounted for by relatively smaller explanantia. This is the essence of the process of consilience, which has been recognized for a long time as the fundamental goal of the scientific enterprise [24]. Consilience drives progress at all levels of generality of scientific knowledge. At the research frontier, where new research fields are being created by identifying new explananda and/or new combinations of explanandum and explanans, K grows by a process of ‘micro-consilience’. A ‘macro-consilience’ may be said to occur when knowledge-containing systems are extended and unified across fields, disciplines and entire domains. Equation (3.11) quantifies the conditions for consilience to occur both at the micro- and macro-level.

The inequality (3.11) is satisfied under several conditions. First, when the explanantia X′ and/or τ′ produce a sufficiently large improvement in the effect, from k to k′. Second, equation (3.11) is satisfied even when explanatory power is lost, i.e. when k′ ≤ k, if ΔH(X) − Δlog p(τ) is sufficiently negative. This entails that input, theory or methodology are being reduced or simplified. Finally, if ΔH(X) − Δlog p(τ) = 0, condition (3.11) is satisfied provided that k′ > k, which would occur by expansion of the explanandum. In all cases, the conditions for consilience are modulated by the extent of application of the theories themselves, quantified by the nX and nY indices.

3.3.1. Example 1: Evolutionary models of reproductive skew

Reproductive skew theory is an ambitious attempt to explain reproductive inequalities within animal societies according to simple principles derived from kin selection theory ([25] and references within). In its earliest formulation, reproductive skew was predicted to be determined by a ‘transactional’ dynamic between dominant and subordinate individuals, according to the condition,

| 3.12 |

in which pmin is the minimum proportion of reproduction required by the subordinate to stay, xs and xd are the number of offspring that the subordinate and dominant, respectively, would produce if breeding independently, r is the genetic relatedness between subordinate and dominant and k is the productivity of the group. The theory was later expanded to include an alternative ‘compromise’ model approach, in which skew was determined by direct intra-group conflict. Subsequent elaborations of this theory have extended its range of possible conditions and assumptions, leading to a proliferation of models whose overall explanatory value has been increasingly questioned [25].

We can use equation (3.11) to examine the conditions under which introducing a new parameter or a new model would constitute net progress within reproductive skew theory, using data from a comprehensive review [25]. In particular, we will focus on one of the earliest and most stringent predictions of transactional models, which concerns the correlation between skew and dominant-subordinate genetic relatedness. Contradicting earlier reported success [26], empirical tests in populations of 21 different species failed to support unambiguously transactional models in all but one case (data taken from table 2.2 in [25]).

Since this analysis is intended as a mere illustration, we will make several simplifying assumptions. First, we will assume that all parameters in the model are measurable to two significant digits, and that their prior expected distributions are uniform (in other words, any group from any species may exhibit a skew and relatedness ranging from 0.00 to 0.99, and individual and group productivities ranging from 0 to 99). Therefore, we assume that each of these parameters has an information content equal to 2log 10 = 6.64 bits. Second, we will assume that the data reported by [25] are a valid estimate of the average success rate of reproductive skew theory in any non-tested species. Third, we will assume that all of the parameters relevant to the theory are measured with no error. For example, we assume that for any organism in which a ‘success’ for the theory is reported, reproductive skew is explained or predicted exactly. Fourth, we will assume that the extent of applications of skew theory, i.e. nY, is sufficiently large to make the τ component (which contains a description of equation (3.12) as well as any other condition necessary to make reproductive skew predictions work) negligible. These assumptions make our analysis extremely conservative, leading to an over-estimation of K values.

Indicating with Y, Xs, Xd, Xr, Xk the values of pmin, xs, xd, r, k in equation (3.12), we obtain the value corresponding to the K of transactional models

| 3.13 |

and

| 3.14 |

Plugging these values in equation (3.11) and re-arranging, we derive the minimal amount of increase in explanatory power that would justify adding a new parameter input X′,

| 3.15 |

This suggests, for example, that if X′ is a new parameter measured to two significant digits, with H(X′) = 2log 10, adding it to equation (3.12) would represent theoretical progress if k′ > 1.2k, in other words if it increased the explanatory power of the theory by 20%. If instead X′ represented the choice between transactional theory and a new model then, assuming conservatively that H(X′) = 1, we have k′ > 1.03k, suggesting that any improvement above 3% would justify it.

Did the introduction of a single ‘compromise’ model represent a valuable extension of transactional theory? The informational cost of expanding transactional theory consists not only in the equations τ′ that need to be added to the theory, but also in the additional binary variable X′ that determines the choice between the two models for each new species to which the theory is applied. We will assume conservatively that the choice equals one bit. According to Nonacs & Hager [25], compromise models were successfully tested in 2 out of the 21 species examined. Therefore, the k = 3/21 = 0.14 attained by adding a compromise model amply compensated for the corresponding increased complexity of reproductive skew theory.

The analysis above refers to results for tests of reproductive skew theory across groups within populations. When comparing the average skew of populations, conversely, transactional models were compatible with virtually all of the species tested, especially with regard to the association of relatedness with reproductive skew [25]. In this case, if we interpret these data as suggesting that k ≈ 1, i.e. that transactional models are compatible with every species encountered, then progress within the field (the theory) could only be achieved by simplifying equation (3.12). This could be obtained by removing or recoding the parameters with the lowest predictive power, or by deriving the theory in question from more general theories. The latter is what the authors of the review did, by suggesting that the cross-population success of the theory is explainable more economically in terms of kin selection theory, from which these models are derived [25].

These results are merely preliminary and likely to over-estimate the benefits of expanding skew theory. In addition to the conservative assumptions made above, we have assumed that only one transactional model and one compromise model exist, whereas in reality several variants of these models have been produced, which entails that the choice X′ is not simply binary, and therefore H(X′) is likely to be larger than 1. Moreover, we have assumed that the choice between transactional and compromise models is made a priori, for example based on some measurable property of organisms that tells beforehand which type of model applies. If the choice is made after the variables are known then the costs of this choice have to be accounted for, with potentially disastrous consequences (§3.6).

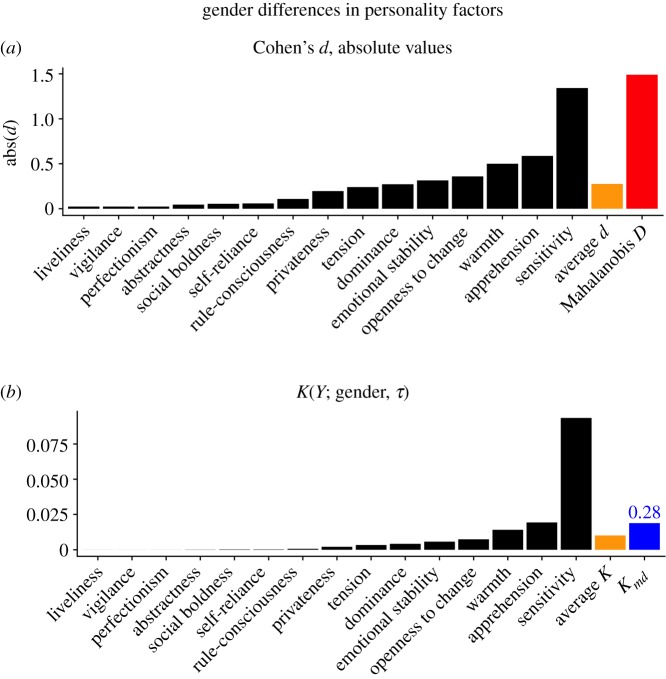

3.3.2. Example 2: gender differences in personality factors

In 2005, psychologist Janet Hyde proposed a ‘gender similarity hypothesis’, according to which men and women are more similar than different on most (but not all) psychological variables [27]. According to her review of the literature, human males and females exhibit average differences that, for most measured personality factors, are of small magnitude (i.e. Cohen’s d less than or equal to 0.35). Assuming that these traits are normally distributed within each gender, this finding implies that the empirical distributions of male and female personality factors overlap by more than 85% in most cases.

The gender similarity hypothesis was challenged by Del Giudice et al. [28], on the basis that, even assuming that the distributions of individual personality factors do overlap substantially, the joint distribution of these factors might not. For example, if Mahalanobis distance D, which is the multivariate equivalent of Cohen’s d, was applied to 15 psychological factors measured on a large sample of adult males and females, the resulting effect was large (D = 1.49), suggesting an overlap of or less [28] (figure 6a).

Figure 6.

Uni- and multivariate analyses of gender differences in personality factors. (a) Cohen’s d and Mahalanobis D calculated in [28]. (b) K values calculated on a dataset of one million individuals, reproduced using the covariance matrices for males and females estimated in [28]. Orange bar: average of unidimensional K values. Blue bar: Kmd, calculated assuming that all factors are orthogonal, as in equation (3.16). The number above the blue bar represents the rescaled values H(Y)Kmd. For further details, see text.

The multivariate approach proposed by Del Giudice was criticized by Hyde primarily for being ‘uninterpretable’ [29], because it is based on a distance in 15-dimensional space, calculated from the discriminant function. This suggests that such a measure is intended to maximize the difference between groups. Indeed, Mahalanobis D will always be larger than the largest unidimensional Cohen’s d included in its calculation (figure 6a).

The K function offers an alternative approach to examine the gender differences vs similarities controversy, using simple and intuitive calculations. With K, we can quantify directly the amount of knowledge that we gain, on average, about an individual’s personality by knowing their gender. Since most people self-identify as male and female in roughly similar proportions, knowing the gender of an individual corresponds to an input of one bit. In the most informative scenario, males and females would be entirely separated along any given personality factor, and knowing gender would return exactly one bit along any dimension. Therefore, we can test to what extent the gender factor is informative by setting up a one-bit information in each of the explananda: we divide the population in two groups, corresponding to values above and below the median for each dimension.

The resulting measure, which we will call ‘multi-dimensional K’ are psychologically realistic and intuitively interpretable and are calculated as

| 3.16 |

in which z is the number of dimensions considered and is the theory linking gender to each dimension i.

Note that, whereas the maximum value attainable by the unidimensional K is 1/2, that of Kmd is 15/16 = 0.938. This value illustrates how, as the explanandum is expanded to new dimensions, Kmd could approach indefinitely the value of 1, value that would entail that input about gender yields complete information about personality. Whether it does so, and therefore the extent to which applying the concept of gender to multiple dimensions represents progress, is determined by conditions in (3.11).

To illustrate the potential applications of these measures, the values of K, average K, as well as Kmd were calculated from a dataset (N=106) simulated using the variance and covariance of personality factors estimated by [28,30]. All unidimensional personality measures were split in lower and upper 50% percentile, yielding one bit of potentially knowable information. In Kmd, these were then recombined, yielding a 15-bit total explanandum.

Figure 6b reports results of this analysis. As expected, the unidimensional K values are closely correlated with their corresponding Cohen’s d values (figure 6a,b, black bars). However, the multi-dimensional K value offers a rather different picture from that of Mahalanobis D. Kmd is considerably smaller than the largest unidimensional effect measured, and is in the range of the second-largest effect. Indeed, unlike Mahalanobis D, Kmd is somewhat intermediate in magnitude, although larger than a simple average (given by the orange bar in figure 6b).

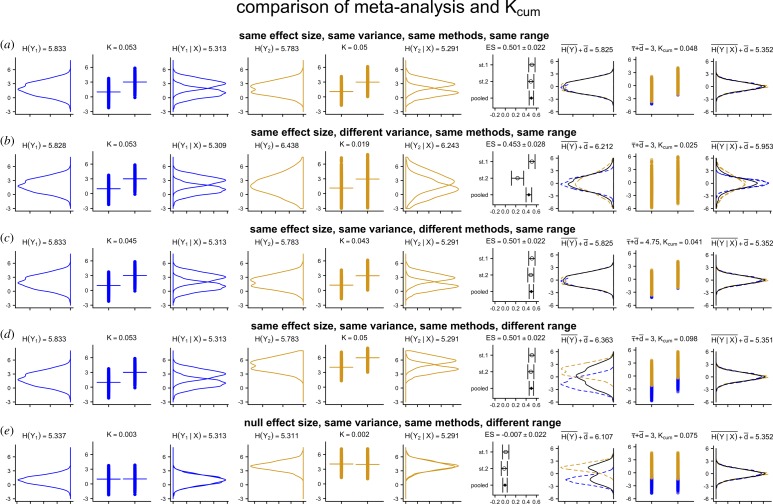

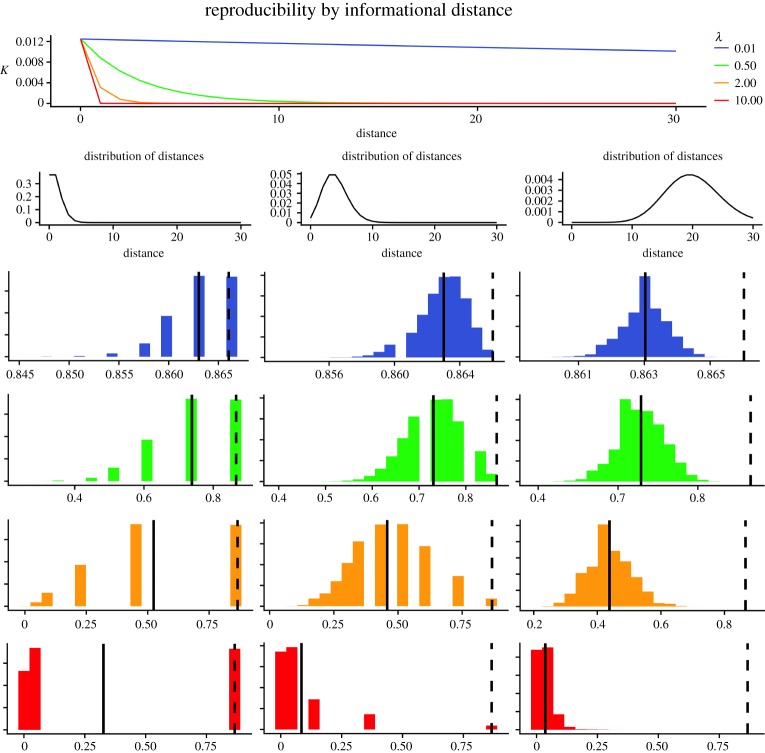

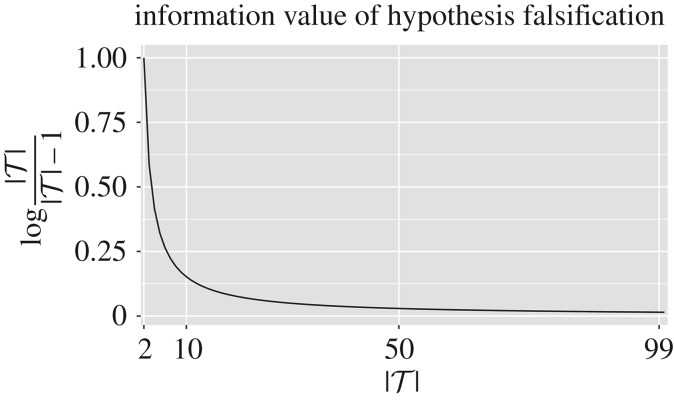

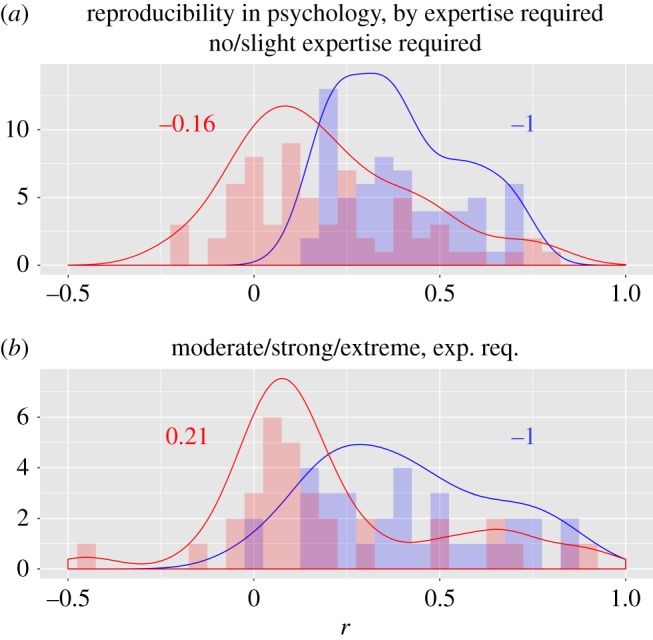

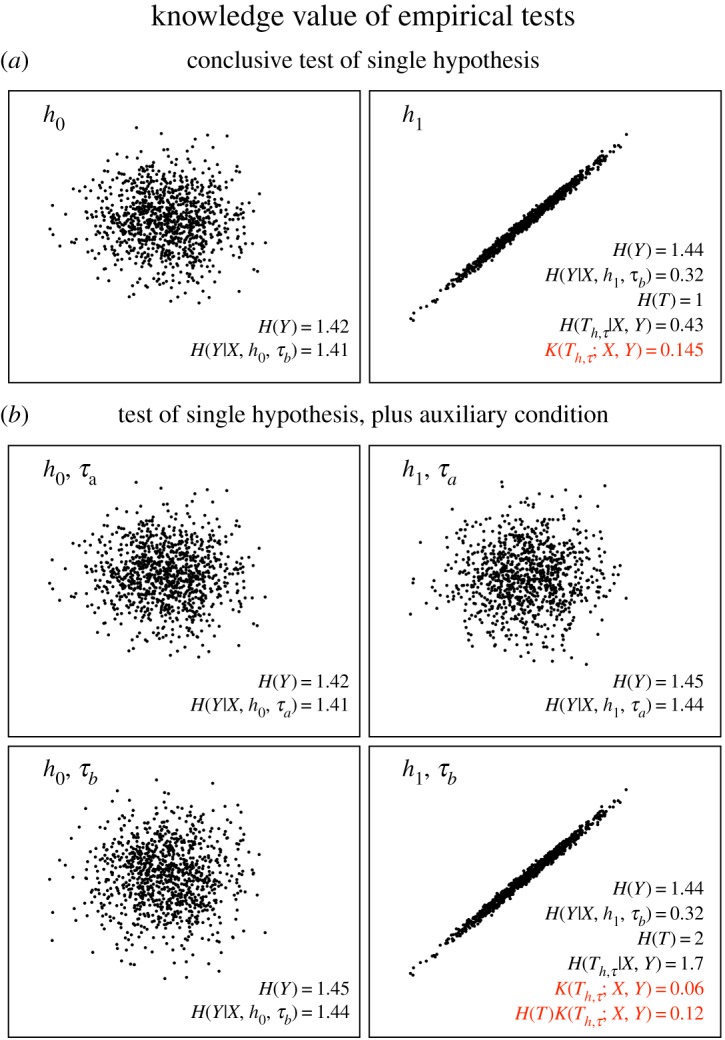

Therefore, we conclude that the overall knowledge conferred by gender about the 15 personality factors together is comparable to some of the larger, but not the largest, values obtained on individual factors. This is a more directly interpretable comparison of effects, which stems from the unique properties of K.