Abstract

Background

Over the past decade, nearly half of internal medicine residencies have implemented block clinic scheduling; however, the effects on residency-related outcomes are unknown. The authors systematically reviewed the impact of block versus traditional ambulatory scheduling on residency-related outcomes, including (1) resident satisfaction, (2) resident-perceived conflict between inpatient and outpatient responsibilities, (3) ambulatory training time, (4) continuity of care, (5) patient satisfaction, and (6) patient health outcomes.

Method

The authors reviewed the following databases: Ovid MEDLINE, Ovid MEDLINE InProcess, EBSCO CINAHL, EBSCO ERIC, and the Cochrane Library from inception through March 2017 and included studies of residency programs comparing block to traditional scheduling with at least one outcome of interest. Two authors independently extracted data on setting, participants, schedule design, and the outcomes of interest.

Results

Of 8139 studies, 11 studies of fair to moderate methodologic quality were included in the final analysis. Overall, block scheduling was associated with marked improvements in resident satisfaction (n = 7 studies, effect size range − 0.3 to + 0.9), resident-perceived conflict between inpatient and outpatient responsibilities (n = 5, effect size range + 0.3 to + 2.6), and available ambulatory training time (n = 5). Larger improvements occurred in programs implementing short (1 week) ambulatory blocks. However, block scheduling may result in worse physician continuity (n = 4). Block scheduling had inconsistent effects on patient continuity (n = 4), satisfaction (n = 3), and health outcomes (n = 3).

Discussion

Although block scheduling improves resident satisfaction, conflict between inpatient and outpatient responsibilities, and ambulatory training time, there may be important tradeoffs with worse care continuity.

Electronic supplementary material

The online version of this article (10.1007/s11606-019-04887-x) contains supplementary material, which is available to authorized users.

KEY WORDS: systematic review, block, ambulatory, scheduling, X + Y

BACKGROUND

In 2009, the Accreditation Council for Graduate Medical Education (ACGME) updated internal medicine residency program guidelines to recommend that internal medicine residencies increase ambulatory training time and develop training schedules to reduce conflicts between inpatient and outpatient responsibilities.1 These recommendations were the culmination of two decades of effort by many professional societies—including the Society of General Internal Medicine, the American College of Physicians, the Association of Program Directors in Internal Medicine (APDIM), the Alliance for Academic Internal Medicine, and the American Board of Internal Medicine (ABIM)—calling for a major redesign of ambulatory resident training to better prepare residents for independent outpatient practice.2–13

Although not formally required by the ACGME, many internal medicine residency programs interpreted the updated 2009 guidelines as an implicit recommendation to implement “block” ambulatory scheduling, also known as “X + Y” scheduling,12 in which residents have X weeks of uninterrupted inpatient rotations followed by Y weeks of uninterrupted outpatient rotations. This was a marked transition away from traditional ambulatory scheduling, in which residents are expected to conduct primary care continuity clinics one half-day per week irrespective of other concurrent clinical responsibilities. Traditional scheduling was thought to create a conflict between continuity clinic and inpatient responsibilities, resulting in a disjointed ambulatory training experience and negative resident attitudes towards ambulatory training.12 Additionally, many programs found it challenging for residents to attain the ACGME-required minimum number of clinic sessions under the traditional scheduling model, and wanted scheduling models that would improve residents’ continuity of care with their patient panels.14 Many programs were therefore eager to pilot alternative training models. The ACGME-sponsored Education Innovation Project (EIP), a 10-year pilot project initiated in 2006 that allowed 21 high-performing internal medicine residency programs to operate under 40% fewer requirements in order to foster innovation in accreditation, facilitated early adoption of block scheduling by participating programs. By 2015, nearly half (43%) of all internal medicine residency programs, including those without EIP support, had adopted block scheduling.14

Despite the popularity and prevalence of block scheduling, it is unclear whether this strategy has had the intended effect on residency-related outcomes. Thus, the objective of our study was to conduct a high-quality synthesis of the current literature to assess the impact of block ambulatory scheduling on residency-related outcomes, including resident satisfaction with ambulatory training, resident-perceived conflict between inpatient and outpatient responsibilities, continuity of care, ambulatory training time, patient satisfaction, and patient health outcomes compared to traditional ambulatory scheduling.

METHODS

Data Sources and Searches

We systematically searched Ovid MEDLINE, Ovid MEDLINE InProcess, EBSCO CINAHL, EBSCO ERIC, and the Cochrane Library (Cochrane Database of Systematic Reviews, Database of Abstracts of Reviews of Effects, and Cochrane Central Register of Controlled Trials) from inception through March 2017 for studies of residency programs comparing traditional to block ambulatory scheduling. Subject headings and text words for the concepts of graduate medical education, internship, or residency were combined with those for care setting (ambulatory care, ambulatory care facilities, hospital outpatient clinics, primary health care) or scheduling (continuity of patient care, personnel staffing and scheduling, appointments and schedules, rotations, clinics or schedules, including block/random/4 + 1/6 + 2 schedules). Reference lists of included articles were hand-searched to identify additional eligible studies. The search strategies are provided in detail in Online Appendix 1.

Study Selection

Two authors (A.D. and H.L.) independently conducted title/abstract and full-text review. Disagreements were resolved through discussion and consensus. Inclusion criteria were (1) full text in English; (2) study population included residents in primary care specialties, which we defined as family medicine, pediatrics, internal medicine, and obstetrics/gynecology; (3) the study evaluated a difference between traditional ambulatory scheduling (defined as primary care clinic scheduled weekly, including during inpatient rotations) and block ambulatory scheduling (defined as primary care clinic scheduled only during dedicated longitudinal primary care or other specialty outpatient rotations, with rotations lasting anywhere from 1 week to 1 year in duration); (4) the presence of any type of comparison group as a control (i.e., pre-post, historical, or concurrent comparison groups were all acceptable); and (5) at least one of the following outcomes of interest was reported: resident satisfaction with ambulatory training, resident-perceived conflict between inpatient and outpatient duties, continuity of care, change in ambulatory training time (i.e., number of scheduled resident continuity clinic sessions), patient satisfaction, and/or patient health outcomes.

Data Extraction and Quality Assessment

Using a standardized data extraction form, two reviewers (A.D. and H.L.) independently extracted data on the setting, number of participants, definitions of traditional and block ambulatory scheduling, and outcomes of interest as described above. Disagreements were resolved through discussion and consensus. If consensus could not be achieved, a third author (O.K.N.) reviewed discrepancies. Corresponding authors were contacted by e-mail for missing data, with up to three attempts at contact made per study.

For resident satisfaction, we extracted data for the survey item, or the average composite score for multiple survey items, that most closely matched the question “What is your overall satisfaction with ambulatory training?” Similarly, for resident-perceived conflict, we extracted data for the survey item that most closely matched the question “Does the current schedule minimize conflict between inpatient and outpatient responsibilities?” In every study, both outcomes were measured on that study’s own 5-point Likert scale. To facilitate comparison across studies, we calculated the effect size (the absolute difference in scores between comparison groups divided by the standard deviation associated with that measure) when possible.15 Generally, values of ≥ 0.2, ≥ 0.5, and ≥ 0.8 are considered small, medium, and large effect sizes, respectively.15, 16 If standard deviations were not reported, we calculated them from reported 95% confidence intervals when possible.
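The effect size described above is a standardized mean difference. A minimal Python sketch of the calculation follows, assuming that the “standard deviation associated with that measure” is a pooled SD of the two comparison groups and that each reported 95% confidence interval is a large-sample (z-based) interval around a single group mean. The function names are hypothetical and are not taken from the authors’ Stata analysis.

```python
import math

Z_95 = 1.959963984540054  # two-sided 95% quantile of the standard normal


def sd_from_ci(lower: float, upper: float, n: int, z: float = Z_95) -> float:
    """Back-calculate a group SD from a 95% CI around that group's mean.

    Assumes a large-sample interval of the form mean +/- z * SD / sqrt(n);
    for small groups a t quantile with n - 1 degrees of freedom would replace z.
    """
    return math.sqrt(n) * (upper - lower) / (2 * z)


def pooled_sd(sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Pooled SD of two independent groups, weighted by degrees of freedom."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def effect_size(mean_block: float, mean_traditional: float, sd: float) -> float:
    """Difference in mean Likert scores divided by the SD.

    Positive values mean a higher score under block scheduling.
    """
    return (mean_block - mean_traditional) / sd


def magnitude(es: float) -> str:
    """Label |effect size| using the >= 0.2 / >= 0.5 / >= 0.8 thresholds."""
    size = abs(es)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"
```

Taking the absolute value in `magnitude` means a worsening of the same size (e.g., an effect size of − 0.3) receives the same label as an improvement; the sign is carried separately by `effect_size`.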

We extracted data on continuity of care for both “physician continuity,” defined as the proportion of a physician’s total visits during which the physician saw a patient from his or her own panel, and “patient continuity,” defined as the proportion of a patient’s total visits during which the patient was seen by his or her own physician. For both measures, we calculated the percent difference in continuity as the difference in visit proportions between block and traditional scheduling.
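The two continuity measures share a numerator (visits in which a resident and his or her own paneled patient meet) but differ in denominator. The sketch below illustrates this on visit-level records. The record format, the pooling of all visits into a single ratio, and the reading of “percent difference” as a percentage-point difference are assumptions for illustration, not the included studies’ exact methods.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical record format: one (patient_id, clinician_id) pair per clinic visit.
Visit = Tuple[str, str]


def physician_continuity(visits: List[Visit], pcp_of: Dict[str, Optional[str]],
                         residents: Set[str]) -> float:
    """Share of resident visits in which the resident saw a patient on his or her own panel."""
    resident_visits = [(p, c) for p, c in visits if c in residents]
    own = sum(1 for p, c in resident_visits if pcp_of.get(p) == c)
    return own / len(resident_visits)


def patient_continuity(visits: List[Visit], pcp_of: Dict[str, Optional[str]]) -> float:
    """Share of paneled patients' visits in which the patient saw his or her own resident."""
    paneled_visits = [(p, c) for p, c in visits if pcp_of.get(p) is not None]
    own = sum(1 for p, c in paneled_visits if pcp_of[p] == c)
    return own / len(paneled_visits)


def continuity_difference(block: float, traditional: float) -> float:
    """Block minus traditional continuity, in percentage points."""
    return (block - traditional) * 100


# Toy data: p4 has no assigned resident; "fac1" is a faculty physician, not a resident.
pcp_of = {"p1": "r1", "p2": "r1", "p3": "r2", "p4": None}
residents = {"r1", "r2"}
visits = [("p1", "r1"), ("p2", "r2"), ("p3", "r2"),
          ("p4", "r1"), ("p4", "r2"), ("p1", "fac1")]
print(physician_continuity(visits, pcp_of, residents))  # 0.4
print(patient_continuity(visits, pcp_of))               # 0.5
```

In the toy data, residents’ visits with the unpaneled patient p4 lower only physician continuity, while the faculty visit lowers only patient continuity. This is one reason the two measures can move in different directions within the same program.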

For ambulatory training time, we extracted data on the number of clinic sessions that occurred during the study period. Since studies varied in the length of follow-up time, we calculated the percent change in clinic sessions with block versus traditional scheduling to facilitate comparison across studies.

For patient satisfaction, we extracted the reported mean overall patient satisfaction survey scores. Because none of the studies reporting this outcome used the same or comparable surveys, we calculated the percent change in patient satisfaction with block versus traditional scheduling to facilitate comparison across studies.
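Both the clinic-session and patient-satisfaction comparisons above use a relative (percent) change, which is unitless. Studies with different follow-up lengths or different satisfaction surveys can therefore be placed on a common scale, assuming that within each study both arms are measured over comparable periods with the same instrument. A minimal sketch with hypothetical inputs:

```python
def percent_change(traditional: float, block: float) -> float:
    """Relative change from traditional to block scheduling, as a percent of the traditional value."""
    if traditional == 0:
        raise ValueError("percent change is undefined when the traditional value is 0")
    return (block - traditional) / traditional * 100


# Hypothetical inputs: clinic sessions over the same follow-up window, then mean satisfaction scores.
print(percent_change(120, 180))            # 50.0
print(round(percent_change(4.0, 4.1), 1))  # 2.5
```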

For patient health outcomes, we extracted data on any preventive or chronic disease measures that were reported in included studies (e.g., proportion of patients with diabetes with LDL < 100 mg/dL). Because reported patient health outcomes varied widely across studies, we described these results qualitatively in the manuscript text.

Of note, four studies assessed the effect of block scheduling on different outcomes within subgroups of a twelve-site cohort of programs participating in the ACGME EIP.17–20 In these studies, the subgroups were traditional scheduling, combination block and traditional scheduling, and block scheduling. We extracted data only for the traditional and block scheduling subgroups, excluding the combination subgroup.

We assessed the quality of included studies using both the Medical Education Research Study Quality Instrument (MERSQI) and the Newcastle-Ottawa Scale-Education (NOS-E) for methodological quality assessment of educational intervention studies.21, 22 Because of minimal variation in NOS-E scores, we included only MERSQI scores in the main manuscript text; details of the NOS-E scoring are available in Online Appendix 2.

Data Synthesis and Analysis

For data synthesis and analysis, we grouped studies into those of programs with “short” ambulatory blocks of 1-week duration and those with “other” ambulatory blocks, defined as ambulatory blocks lasting ≥ 3 weeks. These definitions were based on face validity, given the lack of a uniform standard duration for ambulatory blocks among residency training programs. In addition, previous studies have grouped together programs with ambulatory blocks lasting from ≥ 3 weeks to 1 year in duration for analysis.17–19, 23
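The grouping rule can be stated precisely as a small classifier over schedule labels. The sketch below uses the “X inpatient weeks + Y clinic weeks” convention from Table 1. It assumes a “1 year” label means 52 clinic weeks, and it flags 2-week ambulatory blocks (e.g., the 6 + 2 schedules named in the search strategy) as unclassified, because they fall outside both definitions. The function names are hypothetical.

```python
import re

WEEKS_PER_YEAR = 52  # assumption used to convert "N year" labels


def clinic_weeks(schedule: str) -> int:
    """Ambulatory block length in weeks, from an 'X + Y' or 'N year(s)' label."""
    text = schedule.strip().lower()
    match = re.fullmatch(r"(\d+)\s*\+\s*(\d+)", text)
    if match:
        return int(match.group(2))  # Y = consecutive clinic weeks
    match = re.fullmatch(r"(\d+)\s*years?", text)
    if match:
        return int(match.group(1)) * WEEKS_PER_YEAR
    raise ValueError(f"unrecognized schedule label: {schedule!r}")


def block_group(schedule: str) -> str:
    """Return 'short' (1-week blocks), 'other' (>= 3 weeks), or 'unclassified'."""
    weeks = clinic_weeks(schedule)
    if weeks == 1:
        return "short"
    if weeks >= 3:
        return "other"
    return "unclassified"  # e.g., 2-week ambulatory blocks


for label in ["4 + 1", "3 + 1", "3 + 3", "8 + 4", "1 year", "6 + 2"]:
    print(label, "->", block_group(label))
```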

Several issues precluded the performance of a meta-analysis, including heterogeneity in study settings, block scheduling interventions, and outcome measurements; statistical heterogeneity; and missing data needed for meta-analysis of outcomes data. Thus, aside from a subgroup meta-analysis of resident satisfaction scores among programs implementing “short” ambulatory blocks, for which we report a pooled standardized mean difference (equivalent to a pooled estimate of effect size), we focused on qualitatively synthesizing the results for specific outcomes of interest and the methodological quality of studies.
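The text does not state which pooling model produced the subgroup estimate. The sketch below shows one standard approach, inverse-variance fixed-effect pooling of standardized mean differences with Cochran’s Q and I². It uses a common large-sample approximation for the variance of each SMD, without a small-sample (Hedges) correction. When I² is 0%, Q does not exceed its degrees of freedom, so a DerSimonian–Laird random-effects model gives the same estimate. All names are hypothetical, and the code is not the authors’ Stata procedure.

```python
import math
from typing import List, Tuple

Z_95 = 1.959963984540054  # two-sided 95% quantile of the standard normal


def smd_variance(d: float, n1: int, n2: int) -> float:
    """Large-sample approximation to the variance of a standardized mean difference."""
    return (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))


def pool_fixed_effect(effects: List[float],
                      variances: List[float]) -> Tuple[float, float, float, float]:
    """Inverse-variance fixed-effect pooling.

    Returns (pooled SMD, lower 95% limit, upper 95% limit, I-squared in percent).
    """
    weights = [1 / v for v in variances]
    total = sum(weights)
    pooled = sum(w * d for w, d in zip(weights, effects)) / total
    se = math.sqrt(1 / total)
    q = sum(w * (d - pooled) ** 2 for w, d in zip(weights, effects))  # Cochran's Q
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, pooled - Z_95 * se, pooled + Z_95 * se, i2
```

Identical study effects give I² = 0%, while two precise but conflicting estimates (e.g., 0.0 and 1.0, each with variance 0.01) give I² = 98%.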

For tests of statistical significance, we included reported p values when available from the original text of included studies. If p values were not reported, we calculated p values using two-sample tests for comparison of means or proportions as appropriate to the reported outcome measure when adequate data were available either in the study text or through author correspondence. We conducted all statistical analyses using Stata 12.1 (StataCorp, College Station, TX).
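When only summary statistics are available, two-sample tests can be run directly from them. Stata provides the immediate commands `prtesti` and `ttesti` for this purpose. The stdlib-only Python sketch below uses large-sample z-tests. The comparison of means is a normal approximation rather than a t-test, so it gives somewhat smaller p values in small samples. The function names are hypothetical.

```python
import math
from typing import Tuple


def _two_sided_p(z: float) -> float:
    """Two-sided p value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> Tuple[float, float]:
    """Pooled two-proportion z-test (e.g., share of visits with own physician)."""
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("test undefined when the pooled proportion is 0 or 1")
    z = (x1 / n1 - x2 / n2) / se
    return z, _two_sided_p(z)


def two_mean_z_test(m1: float, sd1: float, n1: int,
                    m2: float, sd2: float, n2: int) -> Tuple[float, float]:
    """Large-sample z-test for a difference in means (e.g., Likert scores)."""
    se = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    z = (m1 - m2) / se
    return z, _two_sided_p(z)
```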

RESULTS

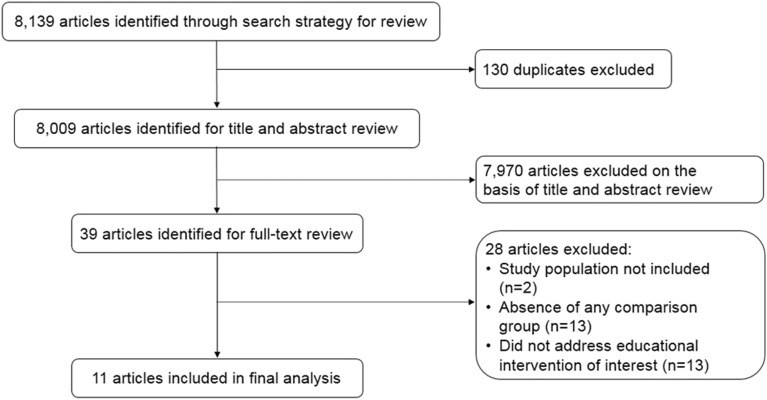

Of 8139 articles identified from our search strategy, 39 articles met criteria for full-text review, and 11 were included in our final analysis (Fig. 1).17–20, 23–29 Although we searched for studies across various primary care specialties, all identified studies took place within internal medicine residency programs in the USA between 2008 and 2016 (Table 1).

Figure 1.

Study selection flowchart.

Table 1.

Characteristics of Included Studies (n = 11 studies)

| Study | Block schedule typea | Residency setting (all IM residencies) | Number of residents in analysis | Study follow-up (months) | Control group for comparison | Resident satisfaction | Inpatient-outpatient conflict | Care continuity | Ambulatory training time | Patient satisfaction | Patient outcomes |
|---|---|---|---|---|---|---|---|---|---|---|---|
| “Short” ambulatory blocks (1 week, n = 4) | | | | | | | | | | | |
| Chaudry et al., 201324 | 4 + 1 | University-based program in Long Island, NY | 82b | 9 | Pre-post | X | X | | X | | |
| Harrison et al., 201425 | 3 + 1 | University-based program in Syracuse, NY | 80b | 10 | Concurrentc | X | X | X | | | |
| Heist et al., 201426 | 4 + 1 | University-based program in Denver, CO | 38d | 5 | Historicale | | | X | X | | |
| Mariotti et al., 201027 | 4 + 1 | Community-based program in Allentown, PA | 34 | 7 | Pre-post | X | | X | X | | |
| Other ambulatory block designs (n = 7) | | | | | | | | | | | |
| Bates et al., 2016f23 | 3 + 3 | University-based program in Boston, MA | 84b | 12 | Pre-post | X | X | X | X | | |
| Francis et al.f, g, June 201417 | Varioush: 4 + 4, 8 + 4, 8 + 8, “Long” | University and community programs in 12 sites | 326 | 9 | Concurrentj | X | X | | | | |
| Francis et al., Sept 201418 | | University and community programs in 11 sites | 305i | | | | | | | X | |
| Francis et al., 201519 | | University and community programs in 12 sites | 463 | | | | | X | X | | |
| Francis et al., 201620 | | University and community programs in 12 sites | 463 | | | | | | | | X |
| Warm et al., 2008f, k28 | 1 year | University-based program in Cincinnati, OH | 69 | 12 | Pre-post | X | X | X | | X | X |
| Wieland et al., 2013f29 | 4 + 4 | University-based program in Rochester, MN | 56 | 12 | Pre-post | X | | X | | X | X |

IM internal medicine

aBlock schedule type reported as “X inpatient weeks + Y clinic weeks”; i.e., 4 + 1 should be interpreted as “4 inpatient weeks + 1 clinic week.” Programs were further grouped by ambulatory block length, i.e., “short” ambulatory blocks of ≤ 1 week and “other” ambulatory blocks of ≥ 3 weeks

bOnly second- and third-year residents included in analysis

cInterns maintained a traditional ambulatory schedule and were compared to second- and third-year residents who experienced a block schedule

dOnly first-year residents included in analysis

eInterns from academic year 2011–2012 maintained a traditional ambulatory schedule and were compared to interns from academic year 2012–2013 who experienced a block schedule

fBlock scheduling implementation and evaluation were supported by the Educational Innovations Project

gAll Francis et al. studies were evaluations of block scheduling in the same cohort of residents; each study reported different outcomes as noted in the table. Although the cohort consisted of a total of 713–730 residents across 12 programs, the n reported here reflects the number of residents included in analyses comparing traditional and block scheduling programs and excludes those in “mixed” programs with components of both traditional and block scheduling

hAll of the listed models were included in one “block scheduling” comparison group

iOne site using a different patient satisfaction survey from the 11 other sites was excluded from this analysis

jTwelve residency programs that were part of the Educational Innovations Project participated in this cohort. Programs with traditional scheduling were compared to those with any form of block scheduling

kLong block scheduling was defined in this study as 12 months of clinic during the 17th through 28th months of residency

Of the 11 included studies, 7 were single-site studies.23–29 The remaining 4 were multi-site studies of the twelve-site cohort of programs participating in the EIP.17–20 Two of the single-site studies took place in programs that were also part of this larger twelve-site cohort.28, 29 One study received ACGME EIP support for block scheduling implementation and evaluation but was not part of the Francis et al. evaluations.23 Overall, about half of the studies were pre-post evaluations (n = 5).23, 24, 27–29 One single-site study25 and the four studies from the twelve-site cohort had concurrent comparison groups17–20; one study used a historical comparison group.26

Block ambulatory scheduling strategies were heterogeneous, and study sample sizes ranged from 34 to 463 residents, with between 5 and 12 months of study follow-up (Table 1).

Resident Satisfaction

Seven studies assessed changes in resident satisfaction with ambulatory training associated with block versus traditional ambulatory scheduling (Table 2).17, 23–25, 27–29 Five studies found improvements in resident satisfaction with ambulatory training following the implementation of block clinic scheduling.23–25, 27, 28 Three of these five studies were of programs with short ambulatory blocks,24, 25, 27 which showed the largest and statistically significant changes (p ≤ 0.05) in resident satisfaction (effect size range + 0.7 to + 0.9; subgroup meta-analysis pooled standardized mean difference + 0.83, 95% CI + 0.59 to + 1.08, p < 0.001; I² = 0.0%). One study28 of the other ambulatory block designs found an increase in resident satisfaction (0.9-point mean increase on a 5-point Likert scale, p = 0.02); however, there were no statistically significant changes in resident satisfaction among the rest of the “other” ambulatory block design studies.

Table 2.

Effect of Block Scheduling on Residency-Related Outcomes

| Study | Block schedule type | Resident satisfaction (n = 7): effect size* (absolute change) | Inpatient-outpatient conflict (n = 5): effect size* (absolute change) | Physician continuity (n = 4): percent change† | Patient continuity (n = 4): percent change‡ | Ambulatory training time (n = 5): number of added clinic sessions | Ambulatory training time: percent change§ | Patient satisfaction (n = 3): percent changeǁ | Patient health outcomes (n = 3): various measures |
|---|---|---|---|---|---|---|---|---|---|
| “Short” ambulatory blocks (1 week) | | | | | | | | | |
| Chaudry et al., 201324 | 4 + 1 | 0.9 (+ 1.0) | 1.4 (+ 3.4) | | | + 60 (in 9 months) | + 66.7% | | |
| Harrison et al., 201425‡‡ | 3 + 1 | 0.7 (+ 0.6) | 2.6 (+ 2.5) | | | | | | |
| Heist et al., 201426 | 4 + 1 | | | − 15.0% | − 8.7% | + 6 (in 10 months) | + 32.3% | | |
| Mariotti et al., 201027‡‡ | 4 + 1 | 0.7 (+ 0.8) | | | | + 72 (in 7 months) | + 66.7% | | |
| Other ambulatory block designs | | | | | | | | | |
| Bates et al., 201623 | 3 + 3 | NA¶ | NA# | + 14.0% | | NA (in 12 months) | + 11.0% | | |
| Francis et al., June 201417 | Various: 4 + 4, 8 + 4, 8 + 8, “Long” | 0.0 (+ 0.0) | 0.3 (+ 0.5) | | | | | | |
| Francis et al., Sept 201418 | | | | | | | | + 1.1% | |
| Francis et al., 201519 | | | | − 10.2% | + 35.5% | − 7 (in 12 months) | − 13.9% | | |
| Francis et al., 201620 | | | | | | | | | See text |
| Warm et al., 200828 | 1 year | NA** (+ 0.9) | NA** (+ 1.6) | | + 14.0% | | | + 3.5% | See text |
| Wieland et al., 201329 | 4 + 4 | − 0.3 (− 0.1)†† | | − 14.8% | − 10.2% | | | + 0.2%** | See text |

IM internal medicine, NA not available

*Effect size = absolute change in outcome between intervention and control groups/standard deviation. Effect sizes of ≥ 0.2, ≥ 0.5, and ≥ 0.8 denote small, medium, and large magnitudes of change, respectively. Negative values denote a change for the worse (i.e., worsened resident satisfaction or worsened perception of inpatient-outpatient conflict). An effect size of 0 indicates no difference. All values were statistically significant unless otherwise noted

†Calculated as the change in the percentage of visits that a resident physician saw his or her assigned primary care patients with the transition to block scheduling. A negative value denotes worse continuity after implementation of block scheduling. All values were statistically significant unless otherwise noted

‡Calculated as the change in the percentage of visits a patient was seen by his or her assigned resident physician after transition to block scheduling. A negative value denotes worse continuity after implementation of block scheduling

§Calculated as the percent change in resident continuity clinic sessions with block scheduling compared to traditional scheduling. A positive value denotes an increase in number of clinic sessions after implementation of block scheduling, whereas a negative value denotes a decrease

ǁCalculated as the percent change in self-reported patient satisfaction scores after transition to block scheduling. A positive value denotes an increase in patient satisfaction

¶Reported as proportion of residents with “positive” responses (i.e., score of 4 or 5 on a 5-point Likert scale). The proportion of positive responses increased from 75% to 81% after implementation of block scheduling. Mean values for resident satisfaction scores not reported

#Reported as proportion of residents with “positive” responses (i.e., score of 4 or 5 on a 5-point Likert scale). The proportion of positive responses increased from 16% to 98% after implementation of block scheduling. Mean values for resident-perceived conflict scores not reported

**Standard deviation not available for calculation of effect size

††Change was not statistically significant

‡‡These two studies evaluated continuity qualitatively, through residents’ perspectives on continuity discussed during focus groups. No quantitative data were available to report

Conflict Between Inpatient and Outpatient Responsibilities

Five studies assessed change in resident-perceived conflict between inpatient and outpatient responsibilities with block scheduling (Table 2).17, 23–25, 28 All five demonstrated statistically significant reductions in resident-perceived conflict between inpatient and outpatient responsibilities, with effect sizes ranging from 0.3 to 2.6 among the studies for which effect sizes could be calculated (n = 3).17, 24, 25 Two of these five studies were evaluations of programs with short ambulatory blocks, which showed the largest improvements in perceived inpatient-outpatient conflict (effect sizes 1.4 and 2.6; p ≤ 0.001 and p ≤ 0.01, respectively).24, 25 The three remaining studies did not have short ambulatory blocks,17, 23, 28 but all showed statistically significant improvements in perceived conflict, as outlined in Table 2.

Care Continuity

Four studies assessed changes in physician continuity of care with block scheduling, i.e., the proportion of visits in the study period during which a resident physician saw his or her own assigned primary care patients (Table 2).19, 23, 26, 29 Of these, three19, 26, 29 demonstrated worse physician continuity (− 10.2 to − 15.0% of visits with own assigned patients), while only one study23 found an improvement in continuity (+ 14.0% of visits) with block scheduling. Only one study26 took place in a program with a short ambulatory block.

Four studies evaluated changes in patient continuity of care with block scheduling, i.e., the proportion of visits in the study period during which a patient saw his or her assigned resident physician.19, 26, 28, 29 There was an inconsistent effect of block scheduling on patient continuity, with two studies19, 28 reporting improved continuity (+ 14.0 to + 35.5% visits with own physician, Table 2), and two studies26, 29 reporting worse continuity (− 8.7 to − 10.2% visits with own physician). Only one study took place in a program with a short ambulatory block.26

Ambulatory Training Time

Five studies assessed changes in ambulatory training time, i.e., changes in the number of scheduled resident continuity clinic sessions with block scheduling (Table 2).19, 23, 24, 26, 27 The three studies conducted in programs with short ambulatory blocks24, 26, 27 had the largest relative increases in ambulatory time (range, + 32.3 to + 66.7% more clinic sessions than with traditional scheduling). The remaining two studies19, 23 were conducted in programs without short ambulatory blocks; of these, one23 found a modest increase in ambulatory time (+ 11.0%) and one19 found a decrease (− 13.9%).

Patient Satisfaction

Three studies assessed changes in patient satisfaction with block scheduling, using a variety of measurement tools (Table 2).18, 28, 29 None found a meaningful change in patient satisfaction after transitioning from traditional to block ambulatory scheduling (range, + 0.2 to + 3.5%).

Patient Health Outcomes

Three studies evaluated the effect of block scheduling on various preventive and chronic disease management quality metrics as surrogates for patient health outcomes.20, 28, 29 Each study assessed between 5 and 20 metrics. Only one metric was reported in all three studies: the proportion of patients with diabetes whose serum LDL level was < 100 mg/dL. Block scheduling had no consistent effect on this measure or on any other patient health outcome measure.

Quality Assessment of Study Methods

Most studies were of fair to moderate quality by the MERSQI criteria22 because of lack of a concurrent control group (n = 5)23, 24, 27–29; being conducted at a single site (n = 7)23–29; the use of resident-reported rather than “objective” outcomes (n = 4)17, 23, 25, 27; the use of primarily descriptive analyses (n = 1)23; and lack of assessment of patient health outcomes (n = 8)17–19, 23–27 (Table 3). NOS-E scores ranged from 2 to 3 out of a possible maximum of 6, with 10 of 11 studies (91%) scoring 2, suggesting overall fair methodologic quality (Appendix Table 2).

Table 3.

Quality Assessment of Study Methodsa

| Study | Study designb | Sampling (number of programs)c | Survey response rated | Types of outcomes measurese | Validity of evaluation toolsf | Data analysis appropriate?g | Data analysis strategyh | Reported outcome(s)i | Total quality scorea |
|---|---|---|---|---|---|---|---|---|---|
| “Short” ambulatory blocks (1 week) | | | | | | | | | |
| Chaudry et al., 201324 | Pre-post | 1 | > 75% | Objective | Providedj | Appropriate | Beyond descriptive | Satisfaction, general facts | 11.5 |
| Harrison et al., 201425 | Non-randomized trial | 1 | > 75% | Self-assessed | Providedj | Appropriate | Beyond descriptive | Satisfaction, general facts | 10 |
| Heist et al., 201426 | Non-randomized trial | 1 | NA | Objective | NAk | Appropriate | Beyond descriptive | Behaviors | 10.5 |
| Mariotti et al., 201027 | Pre-post | 1 | 50–74% | Self-assessed | Providedj | Appropriate | Beyond descriptive | Satisfaction, general facts | 9 |
| Other ambulatory block designs | | | | | | | | | |
| Bates et al., 201623 | Pre-post | 1 | > 75% | Self-assessed | Providedj | Appropriate | Descriptive | Satisfaction, general facts | 8.5 |
| Francis et al., June 201417 | Non-randomized trial | > 3 | > 75% | Self-assessed | Provided | Appropriate | Beyond descriptive | Satisfaction, general facts | 13 |
| Francis et al., Sept 201418 | Non-randomized trial | > 3 | > 75% | Objective | Provided | Appropriate | Beyond descriptive | Patient health care outcomes | 17 |
| Francis et al., 201519 | Non-randomized trial | > 3 | > 75% | Objective | Provided | Appropriate | Beyond descriptive | Satisfaction, general facts | 13.5 |
| Francis et al., 201620 | Non-randomized trial | > 3 | NA | Objective | Provided | Appropriate | Beyond descriptive | Patient health care outcomes | 17 |
| Warm et al., 200828 | Pre-post | 1 | NR | Objective | Provided | Appropriate | Beyond descriptive | Patient health care outcomes | 14.5 |
| Wieland et al., 201329 | Pre-post | 1 | 50–74% | Objective | Provided | Appropriate | Beyond descriptive | Patient health care outcomes | 15 |

NA not applicable, NR not reported

aAssessed using the Medical Education Research Study Quality Instrument (MERSQI). The total possible score is 18. Green denotes higher methodologic quality, yellow denotes moderate quality, and orange denotes fair quality

bStudy design defined as post-test only (1 point), “pre-post” (one group compared before and after intervention; 1.5 points); “non-randomized trial” (separate concurrent control and intervention groups; 2 points), randomized controlled trial (3 points)

cPotential number of institutions is 1 (0.5 points), 2 (1 point), or ≥ 3 (1.5 points). Studies involving a greater number of institutions are considered higher quality

dSurvey response rate on post-test data; < 50% or not reported (0.5 points), 50–74% (1 point), ≥ 75% (1.5 points)

ePotential types of outcomes measures are assessment by a study participant (1 point), or objective (3 points)

fValidity of evaluation tools is defined as content validity or using existing tools (1 point), internal structure validity (1 point), and correlation with other variables (1 point)

gAppropriateness of data analysis is defined as analysis that was appropriate for the study design (1 point) or not appropriate for study design (0 points)

hData analysis strategy is defined as descriptive only (1 point) or beyond descriptive analysis (2 points)

iReported outcomes are defined as satisfaction, attitudes, perceptions, opinions, general facts (1 point), knowledge or skills (1.5 points), behaviors (2 points), and patient health outcomes (3 points)

jValidity was based on expert opinion only

kNo survey instruments were used in this study
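The footnotes above define the MERSQI point value of each column, so a study’s total is the sum of its row. The sketch below encodes those point values. It makes three assumptions: “Provided” with expert opinion only (footnote j) counts as a single point of validity evidence; “not applicable” items (no survey, no instrument) contribute 0 points, which reproduces the Heist et al. total; and no further adjustment is made for non-applicable items. The example response rate of 80% is a hypothetical stand-in for the table’s “> 75%” category, and the function names are hypothetical.

```python
from typing import Optional

DESIGN_POINTS = {"post-test only": 1.0, "pre-post": 1.5,
                 "non-randomized trial": 2.0, "randomized controlled trial": 3.0}
OUTCOME_TYPE_POINTS = {"self-assessed": 1.0, "objective": 3.0}
ANALYSIS_POINTS = {"descriptive": 1.0, "beyond descriptive": 2.0}
REPORTED_OUTCOME_POINTS = {"satisfaction, general facts": 1.0, "knowledge or skills": 1.5,
                           "behaviors": 2.0, "patient health care outcomes": 3.0}


def institution_points(n_programs: int) -> float:
    """1 institution: 0.5; 2: 1.0; 3 or more: 1.5."""
    if n_programs >= 3:
        return 1.5
    return 1.0 if n_programs == 2 else 0.5


def response_rate_points(rate: Optional[float], survey_used: bool = True) -> float:
    """rate in percent (None = not reported); no survey = not applicable, scored 0."""
    if not survey_used:
        return 0.0
    if rate is None or rate < 50:
        return 0.5
    return 1.0 if rate < 75 else 1.5


def mersqi_total(design: str, n_programs: int, response_rate: Optional[float],
                 outcome_type: str, validity_items: int, analysis_appropriate: bool,
                 analysis: str, reported_outcome: str, survey_used: bool = True) -> float:
    """Sum of item scores; validity_items counts validity evidence types (0-3)."""
    return (DESIGN_POINTS[design]
            + institution_points(n_programs)
            + response_rate_points(response_rate, survey_used)
            + OUTCOME_TYPE_POINTS[outcome_type]
            + validity_items
            + (1.0 if analysis_appropriate else 0.0)
            + ANALYSIS_POINTS[analysis]
            + REPORTED_OUTCOME_POINTS[reported_outcome])


# Chaudry et al. row: pre-post, 1 program, > 75% response, objective, expert-opinion validity.
print(mersqi_total("pre-post", 1, 80, "objective", 1, True,
                   "beyond descriptive", "satisfaction, general facts"))  # 11.5
```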

DISCUSSION

In this systematic review, we identified 11 studies comparing the effect of block to traditional ambulatory scheduling on various residency-related outcomes. Included studies were generally of fair to moderate methodologic quality. Overall, block scheduling resulted in moderate to large improvements in resident satisfaction with ambulatory training and in resident-perceived conflict between inpatient and outpatient responsibilities, as well as large relative increases in the number of clinic sessions scheduled for ambulatory training time. However, these gains may have come at the expense of worsened care continuity, with the majority of studies that examined physician continuity of care reporting decreases in continuity.19, 26, 29

One of the major initial motivations for implementing block scheduling was to improve continuity for residents in their primary care practices14; it is therefore important to recognize that “blocking” resident clinic time was not shown to improve residents’ accessibility to their assigned patients. Residents have previously identified many systems-level factors that affect continuity of care, including clinic triage scripts that deemphasize continuity, residents’ inefficient use of non-physician care resources, and an imbalance in clinic scheduling that does not favor continuity over acute visits.30 Other factors may therefore also have affected continuity of care in these studies. However, with residents spending weeks at a time entirely removed from the ambulatory setting, it is not surprising that continuity may have been affected. In a block system, residents on their ambulatory block must often see the patients of colleagues who are not on their ambulatory block, which could decrease the overall proportion of visits that residents spend seeing patients from their own panel.

Of note, only one26 of the studies that examined physician continuity of care used a short ambulatory block; therefore, it is not clear whether short ambulatory blocks in general decrease physician continuity of care. The short ambulatory block studies differed in other ways as well. Programs with short ambulatory blocks had larger improvements in ambulatory training time and larger reductions in resident-perceived conflict between inpatient and outpatient responsibilities than programs without short ambulatory blocks. Resident satisfaction improved significantly with short ambulatory block designs, whereas it was unclear whether resident satisfaction changed with “other” designs. Although one study of an “other” design28 did show an increase in resident satisfaction, that study implemented the so-called long-block: 12 months of clinic during months 17 through 28 of residency. This unique design may explain why its results differed from those of the remaining “other” ambulatory block designs.

That short ambulatory block designs produced different results from the “other” ambulatory block designs was unexpected. One explanation could be participation in the EIP. All of the studies of “other” ambulatory block designs17–20, 23, 28, 29 included programs enrolled in the ACGME-sponsored EIP, and these programs were, by definition, high-performing, given their inclusion in the EIP. Their residents may therefore already have been more satisfied with their ambulatory training, their schedules may already have had less built-in conflict between inpatient and outpatient responsibilities, and they may already have had more ambulatory sessions in place, leaving less room for large improvements in these outcomes. Because of this confounding factor, we cannot conclude that the short ambulatory block design is superior to other ambulatory block designs.

An additional consideration for programs contemplating block scheduling is the potentially prohibitive cost of the administrative resources needed to implement it. The 2015 APDIM survey found that one in five internal medicine residency program directors lacked the administrative resources needed to implement a change to block scheduling.14 In our systematic review, all of the programs without short ambulatory blocks (including three single-site studies23, 28, 29 and all programs in the multi-site Francis et al studies17–20) received EIP support for implementing block scheduling. Short ambulatory blocks may entail fewer costs and challenges, but none of the studies in this review explicitly reported this information. Therefore, program leadership considering a transition to block scheduling should assess, before implementation, the administrative resources required and possible methods for ensuring continuity of care within the program’s specific clinic system.

Our findings should be interpreted in light of several limitations. First, although we conducted a systematic search, we may have missed articles in the gray literature. Second, study quality was limited because most studies were observational and single-site. Although multi-site randomized controlled trials (RCTs) are challenging to conduct for medical education interventions, future studies could address this limitation through initiatives by professional societies such as the ACGME, AAIM, or ABIM to coordinate multi-site pragmatic and/or cluster-randomized RCTs, if this area of investigation remains a priority. Third, few studies assessed the effect of block scheduling on patient satisfaction, chronic disease management, or preventive care measures, precluding robust conclusions about its effect on patient-related outcomes. Fourth, included studies were markedly heterogeneous in study setting, type of block scheduling intervention, and outcome measurement tools. We addressed heterogeneity in outcome measurement by standardizing our reporting of outcomes as effect sizes and percent differences when possible to facilitate comparisons. Future studies could address this limitation with coordinated multi-site evaluations of curricular innovations that plan to use the same standardized and/or validated evaluation tools across sites. Finally, this body of literature is limited because it largely ignores the potential impact of block scheduling on inpatient residency-related outcomes.

CONCLUSIONS

In conclusion, there is fair- to moderate-quality evidence that block scheduling results in marked improvements in resident satisfaction, perceived conflict between inpatient and outpatient responsibilities, and ambulatory training time, but that it may worsen physician continuity of care. Residency programs considering the transition to block ambulatory scheduling should weigh the potential benefits of block scheduling against potential tradeoffs in continuity and the cost of the administrative resources needed for the transition.

Electronic Supplementary Material

Online Appendix 1. Search strategy. Online Appendix 2. Quality assessment of study methods using the Newcastle Ottawa Score for Education (NOS-E) (DOCX 26 kb)

Acknowledgements

The authors thank Lynne Kirk, MD, FACP, for her advice, input, and helpful comments on a draft of this manuscript and Anil Makam, MD, MAS, for his input on the analytic approach.

Funding/Support:

Dr. Nguyen received support from UT Southwestern KL2 Scholars Program (NIH/NCATS KL2 TR0001103). Ms. Mayo and Dr. Nguyen also received support from the AHRQ-funded UT Southwestern Center for Patient-Centered Outcomes Research (AHRQ R24 HS022418). The study sponsors had no role in study design, data analysis, drafting of the manuscript, or decision to publish these findings.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Footnotes

Previous Presentations

We presented preliminary findings from this study at the Society of General Internal Medicine Annual Meeting in Washington, D.C., in April 2017.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Accreditation Council for Graduate Medical Education (ACGME). 2009. http://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/140_internal_medicine_2016.pdf. Accessed 6 Dec 2018.

- 2. Blumenthal D, Gokhale M, Campbell EG, Weissman JS. Preparedness for clinical practice: reports of graduating residents at academic health centers. J Am Med Assoc. 2001;286(9):1027–34. doi: 10.1001/jama.286.9.1027.

- 3. Bowen JL, Irby DM. Assessing quality and costs of education in the ambulatory setting: a review of the literature. Acad Med. 2002;77(7):621–80. doi: 10.1097/00001888-200207000-00006.

- 4. Cantor JC, Baker LC, Hughes RG. Preparedness for practice. Young physicians’ views of their professional education. J Am Med Assoc. 1993;270(9):1035–40. doi: 10.1001/jama.1993.03510090019005.

- 5. Petersdorf RG, Goitein L. The future of internal medicine. Ann Intern Med. 1993;119(11):1130–7. doi: 10.7326/0003-4819-119-11-199312010-00011.

- 6. Walter D, Whitcomb ME. Venues for clinical education in internal medicine residency programs and their implications for future training. Am J Med. 1998;105(4):262–5.

- 7. Wiest FC, Ferris TG, Gokhale M, Campbell EG, Weissman JS, Blumenthal D. Preparedness of internal medicine and family practice residents for treating common conditions. J Am Med Assoc. 2002;288(20):2609–14. doi: 10.1001/jama.288.20.2609.

- 8. Keirns CC, Bosk CL. Perspective: the unintended consequences of training residents in dysfunctional outpatient settings. Acad Med. 2008;83(5):498–502. doi: 10.1097/ACM.0b013e31816be3ab.

- 9. Sisson SD, Boonyasai R, Baker-Genaw K, Silverstein J. Continuity Clinic Satisfaction and Valuation in Residency Training. J Gen Intern Med. 2007;22(12):1704–10. doi: 10.1007/s11606-007-0412-0.

- 10. Fitzgibbons JP, Bordley DR, Berkowitz LR, Miller BW, Henderson MC. Redesigning residency education in internal medicine: A position paper from the association of program directors in internal medicine. Ann Intern Med. 2006;144(12):920–6. doi: 10.7326/0003-4819-144-12-200606200-00010.

- 11. Holmboe ES, Bowen JL, Green M, Gregg J, DiFrancesco L, Reynolds E, et al. Reforming Internal Medicine Residency Training. J Gen Intern Med. 2005;20(12):1165–72. doi: 10.1111/j.1525-1497.2005.0249.x.

- 12. Weinberger SE, Smith LG, Collier VU. Education Committee of the American College of P. Redesigning training for internal medicine. Ann Intern Med. 2006;144(12):927–32. doi: 10.7326/0003-4819-144-12-200606200-00124.

- 13. Meyers FJ, Weinberger SE, Fitzgibbons JP, Glassroth J, Duffy FD, Clayton CP, et al. Redesigning residency training in internal medicine: the consensus report of the Alliance for Academic Internal Medicine Education Redesign Task Force. Acad Med. 2007;82(12):1211–9. doi: 10.1097/ACM.0b013e318159d010.

- 14. Association of Program Directors in Internal Medicine (APDIM). https://www.amjmed.com/article/S0002-9343(17)30987-7/pdf. Accessed 6 Dec 2018.

- 15. Sullivan GM, Feinn R. Using Effect Size—or Why the P Value Is Not Enough. J Grad Med Educ. 2012;4(3):279–82. doi: 10.4300/JGME-D-12-00156.1.

- 16. Reed D, Price EG, Windish DM, Wright SM, Gozu A, Hsu EB, et al. Challenges in systematic reviews of educational intervention studies. Ann Intern Med. 2005;142(12_Part_2):1080–9. doi: 10.7326/0003-4819-142-12_Part_2-200506211-00008.

- 17. Francis MD, Thomas K, Langan M, Smith A, Drake S, Gwisdalla KL, et al. Clinic Design, Key Practice Metrics, and Resident Satisfaction in Internal Medicine Continuity Clinics: Findings of the Educational Innovations Project Ambulatory Collaborative. J Grad Med Educ. 2014;6(2):249–55. doi: 10.4300/JGME-D-13-00159.1.

- 18. Francis MD, Warm E, Julian KA, Rosenblum M, Thomas K, Drake S, et al. Determinants of Patient Satisfaction in Internal Medicine Resident Continuity Clinics: Findings of the Educational Innovations Project Ambulatory Collaborative. J Grad Med Educ. 2014;6(3):470–7. doi: 10.4300/JGME-D-13-00398.1.

- 19. Francis MD, Wieland ML, Drake S, Gwisdalla KL, Julian KA, Nabors C, et al. Clinic Design and Continuity in Internal Medicine Resident Clinics: Findings of the Educational Innovations Project Ambulatory Collaborative. J Grad Med Educ. 2015;7(1):36–41. doi: 10.4300/JGME-D-14-00358.1.

- 20. Francis MD, Julian KA, Wininger DA, Drake S, Bollman K, Nabors C, et al. Continuity Clinic Model and Diabetic Outcomes in Internal Medicine Residencies: Findings of the Educational Innovations Project Ambulatory Collaborative. J Grad Med Educ. 2016;8(1):27–32. doi: 10.4300/JGME-D-15-00073.1.

- 21. Cook DA, Reed DA. Appraising the Quality of Medical Education Research Methods: The Medical Education Research Study Quality Instrument and the Newcastle–Ottawa Scale-Education. Acad Med. 2015;90(8):1067–76. doi: 10.1097/ACM.0000000000000786.

- 22. Reed DA, Beckman TJ, Wright SM, Levine RB, Kern DE, Cook DA. Predictive validity evidence for medical education research study quality instrument scores: quality of submissions to JGIM’s Medical Education Special Issue. J Gen Intern Med. 2008;23(7):903–7. doi: 10.1007/s11606-008-0664-3.

- 23. Bates CK, Yang J, Huang G, Tess AV, Reynolds E, Vanka A, et al. Separating Residents’ Inpatient and Outpatient Responsibilities: Improving Patient Safety, Learning Environments, and Relationships With Continuity Patients. Acad Med. 2016;91(1):60–4. doi: 10.1097/ACM.0000000000000849.

- 24. Chaudhry SI, Balwan S, Friedman KA, Sunday S, Chaudhry B, DiMisa D, et al. Moving Forward in GME Reform: A 4 + 1 Model of Resident Ambulatory Training. J Gen Intern Med. 2013;28(8):1100–4. doi: 10.1007/s11606-013-2387-3.

- 25. Harrison JW, Ramaiya A, Cronkright P. Restoring Emphasis on Ambulatory Internal Medicine Training—The 3∶1 Model. J Grad Med Educ. 2014;6(4):742–5. doi: 10.4300/JGME-D-13-00461.1.

- 26. Heist K, Guese M, Nikels M, Swigris R, Chacko K. Impact of 4 + 1 Block Scheduling on Patient Care Continuity in Resident Clinic. J Gen Intern Med. 2014;29(8):1195–9. doi: 10.1007/s11606-013-2750-4.

- 27. Mariotti JL, Shalaby M, Fitzgibbons JP. The 4∶1 Schedule: A Novel Template for Internal Medicine Residencies. J Grad Med Educ. 2010;2(4):541–7. doi: 10.4300/JGME-D-10-00044.1.

- 28. Warm EJ, Schauer DP, Diers T, Mathis BR, Neirouz Y, Boex JR, et al. The Ambulatory Long-Block: An Accreditation Council for Graduate Medical Education (ACGME) Educational Innovations Project (EIP). J Gen Intern Med. 2008;23(7):921–6. doi: 10.1007/s11606-008-0588-y.

- 29. Wieland ML, Halvorsen AJ, Chaudhry R, Reed DA, McDonald FS, Thomas KG. An Evaluation of Internal Medicine Residency Continuity Clinic Redesign to a 50/50 Outpatient–Inpatient Model. J Gen Intern Med. 2013;28(8):1014–9. doi: 10.1007/s11606-012-2312-1.

- 30. Wieland ML, Jaeger TM, Bundrick JB, Mauck KF, Post JA, Thomas MR, Thomas KG. Resident physician perspectives on outpatient continuity of care. J Grad Med Educ. 2013;5(4):668–73. doi: 10.4300/JGME-05-04-40.
