Abstract

Complexity and heterogeneity are intrinsic to neurobiological systems, manifest in every process, at every scale, and are inextricably linked to the systems’ emergent collective behaviours and function. However, the majority of studies addressing the dynamics and computational properties of biologically inspired cortical microcircuits tend to assume (often for the sake of analytical tractability) a great degree of homogeneity in both neuronal and synaptic/connectivity parameters. While simplification and reductionism are necessary to understand the brain’s functional principles, disregarding the existence of the multiple heterogeneities in the cortical composition, which may be at the core of its computational proficiency, will inevitably fail to account for important phenomena and limit the scope and generalizability of cortical models. We address these issues by studying the individual and composite functional roles of heterogeneities in neuronal, synaptic and structural properties in a biophysically plausible layer 2/3 microcircuit model, built and constrained by multiple sources of empirical data. This approach was made possible by the emergence of large-scale, well curated databases, as well as the substantial improvements in experimental methodologies achieved over the last few years. Our results show that variability in single neuron parameters is the dominant source of functional specialization, leading to highly proficient microcircuits with much higher computational power than their homogeneous counterparts. We further show that fully heterogeneous circuits, which are closest to the biophysical reality, owe their response properties to the differential contribution of different sources of heterogeneity.

Author summary

Cortical microcircuits are highly inhomogeneous dynamical systems whose information processing capacity is determined by the characteristics of their heterogeneous components and their complex interactions. The high degree of variability that characterizes macroscopic population dynamics, both during ongoing, spontaneous activity and during active processing states, reflects the underlying complexity and heterogeneity, which has the potential to dramatically constrain the space of functions that any given circuit can compute, leading to richer and more expressive information processing systems. In this study, we identify different tentative sources of heterogeneity and assess their differential and cumulative contribution to the microcircuit’s dynamics and information processing capacity. We study these properties in a generic Layer 2/3 cortical microcircuit model, built and constrained by multiple sources of experimental data, and demonstrate that heterogeneity in neuronal properties and microconnectivity structure are important sources of functional specialization, greatly improving the circuit’s processing capacity, while capturing various important features of cortical physiology.

Introduction

Heterogeneity and diversity are ubiquitous design principles in neurobiology (or in any biological system, for that matter), covering components and mechanisms at every descriptive scale [1]. While many of these specializations, as well as their inherent complexity and diversity, are functionally meaningful, intrinsically linked and responsible for the brain’s computational capacity and efficiency (see e.g. [2–4]), others are bound to reflect epiphenomena, by-products of evolution, bearing little or no functional significance [5], or to subserve metabolic/maintenance tasks [6] that, while crucial for healthy function, are not directly involved in the computational process.

In order to study the functional role of heterogeneity in cortical processing, we need to modularize complexity [7]: exploit the degenerate nature of the system [8, 9] and heuristically identify groups of components that may behave as singular modules (depending on the scale and processes of interest). Once these tentative ‘building blocks’ are identified, we need to specify adequate levels of descriptive complexity that may shed light onto the underlying functional principles. These pursuits, however, pose severe epistemological problems as we currently have no clear intuition as to what ‘adequacy’ means in this context (see, e.g. [10–12]).

Despite substantial progress, clearly identifying the system’s core ‘building blocks’ [3, 13] and systematically characterizing their relative contributions and potential functional roles is still a daunting task, given the multiple spatial and temporal scales at which they operate, their complex, nested interactions and the often incomplete or inconsistent empirical evidence. Nevertheless, when studying neuronal computation, one needs to keep in mind that, despite its tremendous complexity or because of it, the brain is a machine ‘fit-for-purpose’, optimized to process information and operate in complex, dynamic and uncertain environments, whose spatiotemporal structure it must extract in order to reliably compute [1, 4]. As such, it is important to disentangle and quantify which components of the system modulate functional neurodynamics, and how, in meaningful ways that can be paralleled with related experimental observations, and to what degree these specializations affect the system’s operations.

In the next section, we attempt to identify an appropriate partition of potential building blocks of complexity and heterogeneity in neocortical microcircuits. We then proceed to building a biologically inspired and strongly data-driven microcircuit model that explicitly employs this partition in an attempt to understand and quantify the differential functional roles and consequences of these different sources of heterogeneity applying systematic and generic benchmarks. We will focus on generic properties in both the microcircuit architecture and its information processing capacity. Rather than modelling specific microcircuits in specific cortical regions and corresponding functional specializations (as in e.g. [14, 15]), we generalize our approach and collate data pertaining to layer 2/3 microcircuit features (anatomical and physiological) from different cortical regions, while evaluating the differential contributions of different forms of heterogeneity on the system’s dynamics and generic computational properties, focusing particularly on their suitability for continuous, online processing with fading memory [16–18]. The approach also aims at providing an intermediate level of descriptive complexity for studying computation in cortical microcircuit models, as discussed below.

Heterogeneous building blocks in the neocortical circuitry

On a macroscopic level, hierarchical modularity is easily identifiable as a parsimonious design principle underlying various structural and functional aspects of cortical organization [19–23]. Different anatomophysiological [24, 25], genetic / biochemical [26–30] or functional [31–33] criteria give rise to slightly different modular parcellations but, in combination, these criteria reveal the relevant ‘building blocks’, the most important features whose variations and recombinations give rise to the complexity and diversity of the cortical tissue [34].

For convenience, these features can be coarsely (and tentatively) grouped into neuronal, synaptic and structural components (see also [13]). Neuronal features refer to the different cell classes and their laminar and regional distributions [35] along with their characteristic electrophysiological and biochemical diversity [36, 37]. Synaptic components refer to a molecular default organization characterized by variations in the differential expression and transcription of genes involved in synaptic transmission [26, 28], which is reflected, for example, in regional receptor architectonics [38–40]. Structural aspects include variations in cortical thickness and laminar depth [41] along with neuronal and synaptic density [42, 43] and input-output (both local and long-range) connectivity patterns [44, 45]. In combination, these features highlight default organizational principles whose variations across the cortical sheet are likely to contribute to the corresponding functional specializations.

Based either on morphological, electrophysiological or biochemical features (or, preferably, a combination thereof), several different classes of neurons can be identified throughout the neocortex (see e.g. [35–37, 46–48]). Apart from pronounced regional and laminar differences in the types of neurons that make up the cortex and their relative spatial distributions, every microcircuit in every cortical column is composed of diverse neuron types, with heterogeneous properties and heterogeneous behaviour.

Electrochemical communication between these diverse neuronal classes is an intricate, dynamic and very complex process involving a multitude of nested inter- and intracellular signalling networks [49–51]. Their functional range spans multiple spatial and temporal scales [52–54] and has, arguably, the most critical role in modulating microcircuit dynamics and information processing within and across neuronal populations [2, 3, 55]. The specificities of receptor composition and kinetics underlie the substantial diversity observed in the elicited post-synaptic potentials [56, 57] across different synapse and neuronal types (see, e.g. [58–62]). This occurs because the receptors mediating these events have distinct biochemical and physiological properties depending on the type of neuron they are expressed in and, naturally, the type of neurotransmitter they are responsive to. These varying properties have known and non-negligible implications in the characteristic kinetics of synaptic transmission events occurring between different neurons [63] and strongly constrain the circuit’s operations.

Additionally, cortical microcircuits are not randomly coupled, but over-express specific connectivity motifs [64–70], which bias and skew the network’s degree distributions [71] and/or introduce correlations among specific connections [72], thus selectively modifying the impact of specific pre-synaptic neurons on their post-synaptic targets. Analogously to the heterogeneities in neuronal and synaptic properties, such structural features are known to significantly impact the circuit’s properties [73–77].

Descriptive adequacy

As discussed above, the variations in the anatomy and physiology of a cortical microcircuit are experimentally well established and have been shown to influence computational properties. However, the absence of complete descriptions of biophysical heterogeneity, and of well-substantiated empirical evidence to support them (primarily due to technical limitations), has forced computational studies to take a simplified approach. This has additional advantages, such as a greater likelihood of analytical tractability and lower overhead for the researcher in specifying the network parameters. Simplified, homogeneous models of spiking networks have proven to be a valuable tool for a theoretically grounded exploration of microcircuit dynamics emerging from the interaction of excitatory and inhibitory populations [78–81].

The generic principles established by studying simple balanced random networks have subsequently been applied to model specific cortical microcircuits with integrated connectivity maps and realistic numbers of neurons and synapses [82]. This approach revealed that some prominent features of spontaneous and stimulus-evoked activity and its dynamic flows through a cortical column can be accounted for by the macroscopic connectivity structure, mediated by local and long-range interactions [83]. However, by focusing on emergent dynamics, these studies neglect the functional aspects and the fact that cortical interactions serve computational purposes (but, see [84] for a study on the computational properties of the [82] microcircuit model). In addition, although there are good reasons for taking a minimalist approach, assuming uniformity and homogeneity on every component of the system tends to lack cogency with respect to established anatomical and physiological facts and to disregard biophysical and biochemical plausibility.

Some of these limitations were circumvented by [85], who not only accounted for detailed and empirically-informed connectivity maps, but also employed more biologically motivated models of neuronal and synaptic dynamics and placed them in an explicit functional/computational context. In line with the results obtained with the simpler microcircuit models [82, 84], this study demonstrated that considering realistic structural constraints is beneficial and significantly improves the computational capabilities of the circuit. Several other studies provide important steps to move away from homogeneous systems by incorporating variability (e.g. [75, 86, 87]), but tend to do so in a relatively arbitrary manner and/or focusing on specific forms of heterogeneity while retaining homogeneity in other components (depending on the scientific objectives of the study).

A completely different set of priorities for modelling cortical microcircuits is espoused by [88] in the framework of the continuous efforts of the Blue Brain project [89]. The Blue Brain approach lies at the other extreme of the descriptive scale, in that it attempts to model a cortical column in full detail, explicitly accounting for the complexities of cellular composition (based on neuronal morphology and electrophysiology), synaptic anatomy and physiology, as well as thalamic innervation, essentially constituting an in silico reconstruction of a cortical column (see also [90]). This approach is, naturally, extremely computationally expensive and its explanatory power is limited. The model complexity at this end of the spectrum is so close to the biophysical reality that it might not lend itself to a comprehensive understanding of dissociable and important functional principles any more readily than studying the real thing does. Nonetheless, it provides valuable insights in that it carefully replicates many in vivo and in vitro responses of a real cortical column, while generating a wealth of complete and comprehensive data [88, 91].

Thus, we conclude that while simpler models are preferable, as they are generic enough to be broadly insightful and allow us to uncover general principles, we should ask the question: what is the cost of simplification? If a model simplifies away the core computational elements of the system, our ability to account for its operations is lost. The findings discussed above indicate that heterogeneity may be critical for the mechanisms of computation; therefore models aiming at uncovering computational principles in specific biophysical systems, such as a cortical column or microcircuit, should account for these features.

In this study, we attempt to bridge this descriptive gap by building microcircuit models, inspired and constrained by the composition of Layer 2/3, that account for key heterogeneities in neuronal, synaptic and structural properties. We implement all types of heterogeneity such that they can be switched on or off, thus enabling us to systematically disentangle and evaluate the roles played by the different types of heterogeneity in the different tentative building blocks, and how they collectively interact to shape the circuit’s dynamics and information processing capacity.

The choices and characteristics of the models and parameter sets used throughout this study, as well as the general microcircuit composition are constrained and inspired by multiple sources of experimental data (see section Data-driven microcircuit model and S1 Appendix) and account for the prevalence of different neuronal sub-types and their heterogeneous physiological and biochemical properties, the specificities of instantaneous synaptic kinetics and its inherent diversity as well as specific structural biases in cortical micro-connectivity. All models and model parameters were, as far as possible, chosen to directly match relevant experimental reports and minimize the introduction of arbitrary model parameters, in order to ensure that the effects observed are caused by realistic forms of complexity and heterogeneity and avoid imposing excessive assumptions or preconceptions on the systems studied, i.e. to “allow biology to speak for itself”.

In section Data-driven microcircuit model (complemented by the Methods section and the Supplementary Materials), we explain all the details of the models and model parameters used to build and constrain the microcircuit, as well as the underlying empirical observations that motivate the choices. After specifying and fixing all the relevant parameters to, as closely as possible, match multiple sources of empirical data, we study the effects of heterogeneity on population dynamics in a quiet state, where the circuit is passively excited by background noise (section Emergent population dynamics) and in an active state, where the circuit is directly engaged in information processing (section Active processing and computation). We evaluate the circuit’s sensitivity and responsiveness, as well as its memory and processing capacity, demonstrating a clear and unambiguous role of heterogeneity in shaping the proficiency of the system by greatly increasing the space of computable functions.

Results

Data-driven microcircuit model

In this section, we describe the process of building a complex data-driven cortical microcircuit model capturing some of the fundamental features of layer 2/3. We specify the detailed architecture, composition and dynamics of the microcircuits explored throughout this study as well as the motivation behind all model and parameter choices. In each relevant section, we highlight the differences between the respective homogeneous and heterogeneous conditions. A summarized, tabular description of the main models is provided in S1 Table, along with a list of the primary sources of experimental data used to constrain the model parameters, provided in S1 Appendix.

All the circuits analysed throughout this study are composed of N = 2500 point neurons (roughly corresponding to the size of a layer 2/3 microcircuit in an anatomically defined cortical column; [92]), divided into NE = 0.8 N excitatory, glutamatergic neurons and NI = 0.2 N inhibitory, GABAergic neurons. In addition, we further subdivide the inhibitory population in two sub-classes, I1 and I2 (with and ), corresponding to fast-spiking and non-fast-spiking interneurons, respectively (see Neuronal properties). Accordingly, there are nine different synapse types (all possible connections between neuronal populations), with distinct, specific response properties (see Synaptic properties). Similarly, there are nine connection probabilities from which random connections are drawn (see Structural properties).
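As a minimal sketch of the bookkeeping described above, the following snippet derives the excitatory and inhibitory population sizes from N and enumerates the nine connection types. The variable names are illustrative assumptions, not taken from the authors' code, and the split of the inhibitory population into I1 and I2 is deliberately left out because its proportions are not restated here.

```python
from itertools import product

N = 2500                 # total number of point neurons (from the text)
N_E = round(0.8 * N)     # excitatory, glutamatergic neurons
N_I = N - N_E            # inhibitory, GABAergic neurons (I1 and I2 together)

populations = ["E", "I1", "I2"]

# One connection type per ordered (source, target) pair of populations.
connection_types = [f"{src}->{tgt}" for src, tgt in product(populations, repeat=2)]

print(N_E, N_I)
print(connection_types)
```

Each entry of `connection_types` would carry its own synaptic response properties and its own connection probability.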

For each of the key features of neuronal, synaptic and structural properties, we differentiate between the homogeneous case, where all properties are identical, and the heterogeneous case, where properties are drawn from appropriately chosen distributions. In this way, we can tease apart the differential effects of the three sources of heterogeneity considered here: neuronal, synaptic and structural.
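The on/off design can be illustrated with a short sketch that enumerates every combination of the three heterogeneity sources. The names `SOURCES` and `conditions` are hypothetical, and the study may examine only a subset of these combinations.

```python
from itertools import product

# The three sources of heterogeneity distinguished in the text.
SOURCES = ("neuronal", "synaptic", "structural")

# Every on/off combination, from fully homogeneous to fully heterogeneous.
conditions = [dict(zip(SOURCES, flags)) for flags in product((False, True), repeat=len(SOURCES))]

for condition in conditions:
    active = [name for name, on in condition.items() if on]
    print(active if active else "fully homogeneous")
```

The first entry corresponds to the fully homogeneous circuit and the last to the fully heterogeneous one.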

For consistency, all the circuits’ structural (and synaptic) features are constrained primarily by the composition of layer 2/3 in the C2 barrel column in the mouse primary somatosensory cortex (S1), given the availability of direct, complete and significantly explored experimental datasets (e.g. [92–94]).

Neuronal properties

We divide the neurons into one excitatory (E: glutamatergic, pyramidal neurons) and two inhibitory (I1: GABAergic, fast spiking interneurons, I2: GABAergic, non-fast spiking) classes, consistent with the reports in [92, 93] and [94]. The three classes differ in relative excitability and firing properties, providing substantially more electrophysiological diversity than commonly exhibited in cortical models on the abstraction level of point neurons (e.g. [81, 82, 95–97]), while still being of a manageable degree of complexity. All neurons are modelled using a simplified adaptive leaky integrate-and-fire scheme (see Methods and [98, 99]).

The parameters used for the different neuron types (summarized in Table 1) were chosen to match the respective ranges reported in the literature, considering both the data collected in the NeuroElectro database [36, 100], encompassing tens of unique data points from different experimental sources (see S1 Appendix), and those reported in [92–94, 101, 102], given the completeness of these reports and the direct similarities with our case study.

Table 1. Single neuron parameter sets.

In the heterogeneous condition, the three neuronal types have the values of the described parameters randomly drawn from a normal or lognormal distribution. The parameter values for these distributions were determined taking into account multiple sources of experimental data (see S1 Appendix). Note that, to make comparisons simpler, the values displayed for the lognormal distributions correspond to the mean and standard deviation of the distribution, not the actual μ and σ parameters (see Materials and methods).

| Parameter | Homogeneous | | | Heterogeneous | | | Description |
|---|---|---|---|---|---|---|---|
| | E | I1 | I2 | E | I1 | I2 | |
| Eleak[mV] | −76.43 | −64.33 | −61 | | | | resting membrane potential |
| Vthresh[mV] | −44.45 | −38.97 | −34.44 | | | | spike threshold |
| Vreset[mV] | −54.18 | −57.47 | −47.11 | | | | reset potential |
| Gleak[nS] | 4.64 | 9.75 | 4.61 | | | | leak conductance |
| Cm[pF] | 116.52 | 104.52 | 102.87 | | | | membrane capacitance |
| tref[ms] | 2.05 | 0.52 | 1.34 | | | | absolute refractory time |
| τm[ms] | 25.11 | 10.72 | 22.33 | | | | membrane time constant |
| Rm[MΩ] | 215.54 | 102.53 | 217.10 | | | | input resistance |
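The last two rows of Table 1 appear consistent with the standard leaky-integrator relations τm = Cm / Gleak and Rm = 1 / Gleak. The sketch below checks this for the homogeneous values; the function names are illustrative, and the small residual differences presumably reflect rounding of the tabulated values.

```python
# Homogeneous values from Table 1: (Cm in pF, Gleak in nS) per neuron class.
params = {"E": (116.52, 4.64), "I1": (104.52, 9.75), "I2": (102.87, 4.61)}

def membrane_time_constant_ms(c_m_pf, g_leak_ns):
    # pF / nS = ms, so no unit conversion is needed.
    return c_m_pf / g_leak_ns

def input_resistance_mohm(g_leak_ns):
    # 1 / nS = GOhm, hence the factor of 1000 to obtain MOhm.
    return 1000.0 / g_leak_ns

derived = {
    name: (membrane_time_constant_ms(c_m, g_l), input_resistance_mohm(g_l))
    for name, (c_m, g_l) in params.items()
}

for name, (tau_m, r_m) in derived.items():
    print(f"{name}: tau_m = {tau_m:.2f} ms, R_m = {r_m:.2f} MOhm")
```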

Due to the nature of the chosen neuronal formalism (see Neuronal dynamics), some model parameters have no direct experimental counterpart and were instead determined from their relations to other variables for which direct experimental data exist. For example, Vreset is not, strictly speaking, a biophysically meaningful variable; its value was chosen considering the data for afterhyperpolarization potentials EAHP relative to the resting membrane potentials EL. Whenever such mismatches between model parameters and experimental measures occurred, or when parameters had to be derived relative to others, we selected the values that best matched the experimental data. Overall, we observed a remarkable consistency in the ranges of parameter values considered across the different data sources.

Neuronal heterogeneity

In the homogeneous condition, the parameters for all neurons of a given class are fixed and chosen as a representative example of that class (see left side of Table 1 and bold fI curves in Fig 1). To incorporate neuronal heterogeneity, the specific values of each parameter are independently drawn from a probability distribution, specific to each neuron class (see right side of Table 1 and individual fI curves in Fig 1).

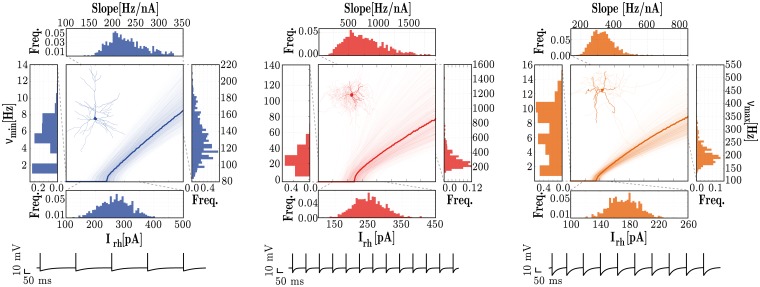

Fig 1. Response properties of the three different neuronal types, E (left), I1 (middle) and I2 (right).

For each neuronal class, the central panels depict single neuron fI curves and the marginal panels give the corresponding distributions of the neuron’s rheobase currents (Irh[pA], bottom), minimum firing rates (νmin[Hz], left), maximum firing rates (νmax[Hz], right) and the slope of the fI curve (Slope[Hz/nA], top). The data was obtained from 1000 neurons of each class. The membrane potential traces depicted at the bottom correspond to the response of the homogeneous neurons (bold traces in the fI curves) to a stimulus step of amplitude Irh + 10 pA, for a duration of 1 second.

To ensure comparability among conditions, we tuned the intrinsic adaptation parameters (a, b, see Neuronal dynamics) independently for each neuron type, in order to retain the following relations among neuronal classes:

Rheobase current (excitability): Irh(E) ≈ Irh(I1) > Irh(I2)

Slope of f-I curve (gain): g(I1) > g(I2) > g(E)

Minimum firing rate: νmin(I1) > νmin(I2) > νmin(E)

Maximum firing rate: νmax(I1) > νmax(I2) > νmax(E)

This led to the following values (a, b) for sub-threshold and spike-triggered adaptation, respectively: E = (4, 30), I1 = (0, 0), I2 = (2, 10).

One of the advantages of using the modelling approach presented in this study is the possibility to directly interpret the biophysical meaning of the different parameters. In this case, these results highlight the fact that fast spiking interneurons (I1) do not exhibit any form of intrinsic adaptation, which is a reasonable result for a neuron whose primary role is to respond quickly and provide dense and fast, feed-forward inhibition [103]. It should be noted that, after fixing all the neuronal parameters as described above, the absolute values of these four properties did not exactly match the corresponding experimental reports. For example, the values obtained for the rheobase currents were, on average, larger than the ranges reported experimentally, for all 3 neuron classes (see S2 Table). These discrepancies in absolute values and their potential impact were ameliorated by ensuring the above relations between the key response properties of the different neuronal classes were retained.

Synaptic properties

With three neuronal populations, as described above, there are nine possible types of synaptic connection, i.e. syn ∈ {EE, EI1, EI2, I1E, I2E, I1I1, I1I2, I2I1, I2I2}. Synapse types can be grouped by transmitter composition and/or post-synaptic effect, as excitatory synE = {EE, I1E, I2E} and inhibitory synI = {EI1, EI2, I1I1, I1I2, I2I1, I2I2}, as illustrated in Fig 2a. For simplicity, we consider all synaptic transmission as being mediated by either glutamate (excitatory synapses) or gamma-aminobutyric acid (GABA, inhibitory synapses), as illustrated in Fig 2b. This is a reasonable simplification given that these are, by far, the primary neurotransmitters used in the neocortex, as demonstrated by immunohistochemistry [104] and receptor autoradiography studies [39, 105, 106]. Additionally, this is a common assumption underlying the great majority of theoretical and computational studies.

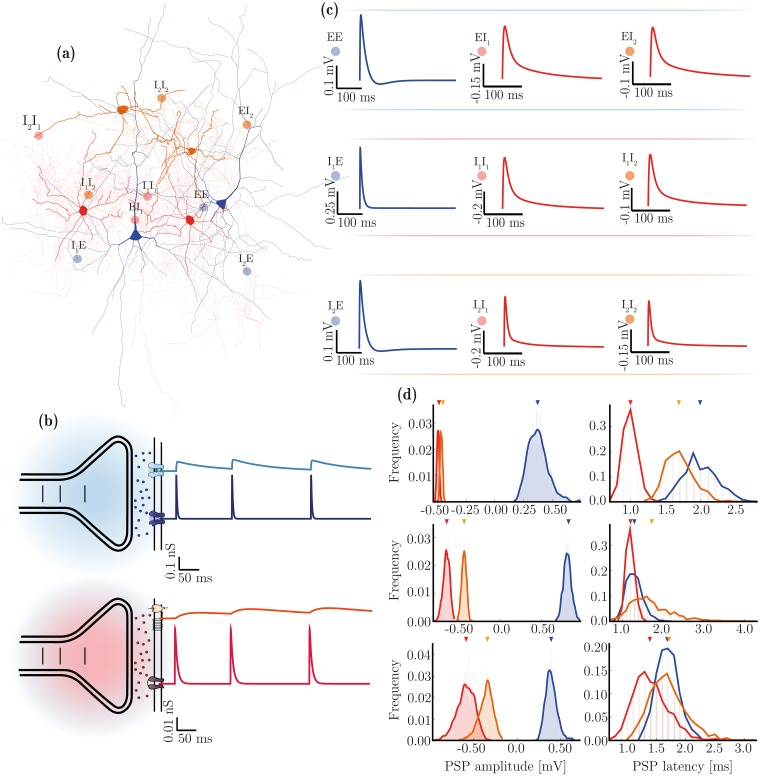

Fig 2. Diversity of synaptic transmission in the microcircuit.

(a) Illustration of the three neuron and nine synapse types considered in this study. (b) Characteristics of synaptic transmission in excitatory (blue) and inhibitory (red) synapses, comprising fast and slow components. (c) Kinetics of spike-triggered PSPs dependent on pre- and post-synaptic neuron type and determined by the specific receptor kinetics and composition in the postsynaptic neuron. The depicted traces are the PSPs at rest in E neurons (top row) and at a fixed holding potential of −55 mV for inhibitory neurons (I1: middle row, I2: bottom row), as a function of receptor composition, and correspond to the values reported in Table 2 (last column). (d) Distributions of PSP amplitudes and latencies after rescaling (by wsyn and dsyn, respectively, see Table 3) in the homogeneous (top arrows) and heterogeneous (distributions) conditions, for synapses onto E (top), I1 (middle) and I2 (bottom) neurons. Note that the latency distributions are discrete because, for technical reasons, they can only assume values that are multiples of the simulation resolution.

To accommodate the wealth of data available regarding the phenomenology of synaptic transmission and to provide a significant step forward from the traditional approaches, we chose a relatively complex biophysical model [99, 107–109], primarily due to its plausibility but also due to the availability of direct parameters in the experimental literature (e.g. [109]). The model, described fully in the Materials and Methods, captures the detailed kinetics of single receptor conductances (Fig 2b).

The use of this model allows us to specify different receptor parameters depending on the neuron type they are expressed in (see Table 2), in order to directly match empirical data on receptor kinetics and relative conductance ratios in different neuronal classes. In the absence of such direct data for specific synapses (particularly those involving I2 neurons), we tuned these parameters to match the resulting PSP/PSC kinetics, as well as the relative ratios of total charge elicited by the receptors that compose such synapses. For a detailed list of the data sources used to constrain these parameters, consult S1 Appendix.

Table 2. Differential receptor expression in the different neuron types.

The kinetics and relative conductances of the different receptors that make up an inhibitory or excitatory synapse onto each neuron result in post-synaptic potentials with equally distinct kinetics. The parameters were chosen based on the corresponding receptor conductance data, if directly available, and/or on the characteristics of the resulting PSPs, yielding a substantial diversity of postsynaptic responses (see Fig 2c). (*) The PSP values reported in this table were obtained by fitting a double exponential function to single, spike-triggered PSPs, recorded at rest for E neurons and at a fixed holding potential of −55 mV for I neurons.

| Neuron Type | Receptors | Conductance parameters | | | | | | Resulting PSPs (*) | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | | | E[mV] | τrise[ms] | r | | | Jsyn[mV] | τrise[ms] | τdecay[ms] |
| E | AMPA | 0.9 | 0 | 0.3 | 1 | 2 | - | 0.82 | 3.16 | 17.8 |
| | NMDA | 0.14 | 0 | 1 | 0 | - | 100 | | | |
| | GABAA | 0.15 | −75 | 0.25 | 1 | 6 | - | −0.1 | 3.2 | 64.3 |
| | GABAB | 0.009 | −90 | 30 | 0.8 | 200 | 600 | | | |
| I1 | AMPA | 1.6 | 0 | 0.1 | 1 | 0.7 | - | 0.5 | 0.8 | 10.7 |
| | NMDA | 0.003 | 0 | 1 | 0 | - | 100 | | | |
| | GABAA | 1 | −75 | 0.1 | 1 | 2.5 | - | −0.25 | 1.65 | 17.5 |
| | GABAB | 0.022 | −90 | 25 | 0.8 | 50 | 400 | | | |
| I2 | AMPA | 0.8 | 0 | 0.2 | 1 | 1.8 | - | 0.6 | 2.4 | 17.3 |
| | NMDA | 0.012 | 0 | 1 | 0 | - | 100 | | | |
| | GABAA | 0.7 | −75 | 0.2 | 1 | 5 | - | −0.6 | 3.2 | 42 |
| | GABAB | 0.025 | −90 | 25 | 0.8 | 150 | 500 | | | |
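To make the "Resulting PSPs" columns more concrete, the following sketch evaluates a double exponential PSP shape for the excitatory PSP onto E neurons (Jsyn = 0.82 mV, τrise = 3.16 ms, τdecay = 17.8 ms). The exact functional form used for the fits is not given in this section; the difference-of-exponentials form, normalized so that the peak equals Jsyn, is a common choice and is an assumption here, as are the function names.

```python
import math

def peak_time(tau_rise, tau_decay):
    """Time (ms) at which the difference of exponentials reaches its extremum."""
    return tau_rise * tau_decay / (tau_decay - tau_rise) * math.log(tau_decay / tau_rise)

def double_exp_psp(t_ms, j_syn, tau_rise, tau_decay):
    """Assumed PSP shape: difference of exponentials, scaled so the peak equals j_syn."""
    if t_ms < 0.0:
        return 0.0  # no response before the presynaptic spike
    t_pk = peak_time(tau_rise, tau_decay)
    norm = math.exp(-t_pk / tau_decay) - math.exp(-t_pk / tau_rise)
    shape = math.exp(-t_ms / tau_decay) - math.exp(-t_ms / tau_rise)
    return j_syn * shape / norm

# Excitatory PSP onto E neurons, values from Table 2.
t_pk = peak_time(3.16, 17.8)
print(f"peak at {t_pk:.2f} ms, amplitude {double_exp_psp(t_pk, 0.82, 3.16, 17.8):.2f} mV")
```

Passing a negative `j_syn` (for example −0.1 mV for the inhibitory PSP onto E neurons) yields a hyperpolarizing response with the same shape.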

Having fixed the kinetics of post-synaptic responses according to neuron class (Table 2), we finally rescale the PSP amplitudes (wsyn) and latencies (dsyn) independently for each synapse type (see below), in order to account for the effects of different presynaptic neuron classes and to explicitly match the data reported in [93]. As a result of this parameter fitting process, the responses generated by the synaptic model are good matches to the responses experimentally observed in the nine types of biological synapses represented in this study.

Synaptic heterogeneity

As all the receptor parameters are fixed and neuron-specific (Table 2), we introduce synaptic heterogeneity by simply distributing the individual values of weights and delays (Fig 2d and Table 3). Whereas in the homogeneous condition, synaptic efficacies (wsyn) and conduction delays (dsyn) are fixed and equal for all connections of a given type, in the heterogeneous condition, these values are randomly drawn from lognormal distributions, left-truncated at 0 for weight distributions and 0.1 for delay distributions, and parameterized such that the distributions’ means are equal to the homogeneous value. For consistency among the various data sources, we fix the connectivity parameters, including not only structural aspects, but also synaptic weights and delays, to match the data reported in [93]. It is worth noting that variability in delay distributions could also be considered structural since they are primarily determined by morphological features (axonal length). However, the synaptic delays were chosen to match the observed latencies in postsynaptic responses. So, for convenience and simplicity, we treat variations in synaptic weights and delays as synaptic properties.
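The sampling scheme described above can be sketched as follows. The conversion from a target mean and standard deviation to the μ and σ of the underlying normal distribution is the standard lognormal identity; the use of rejection sampling for the left truncation, the function names, and the reading of the E → E delay entries in Table 3 as a mean of 1.8 and a standard deviation of 0.25 are assumptions. The sketch does not correct the mean for the truncation (negligible for these values) and does not discretize delays to the simulation resolution.

```python
import math
import random

def lognormal_params(mean, sd):
    """Convert the mean and s.d. of a lognormal variable to (mu, sigma) of the underlying normal."""
    sigma2 = math.log(1.0 + (sd / mean) ** 2)
    return math.log(mean) - 0.5 * sigma2, math.sqrt(sigma2)

def sample_truncated_lognormal(mean, sd, lower, rng):
    """Draw one value from a lognormal, rejecting samples below `lower`."""
    mu, sigma = lognormal_params(mean, sd)
    while True:
        x = rng.lognormvariate(mu, sigma)
        if x >= lower:
            return x

rng = random.Random(1234)  # fixed seed for reproducibility
# Assumed E -> E delay statistics (mean 1.8, s.d. 0.25), left-truncated at 0.1.
delays = [sample_truncated_lognormal(1.8, 0.25, 0.1, rng) for _ in range(20000)]
print(f"sample mean = {sum(delays) / len(delays):.3f}, min = {min(delays):.3f}")
```

For weights, the same function would be called with `lower=0.0` and the corresponding mean and standard deviation for each connection type.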

Table 3. Synaptic and structural parameters in the microcircuit.

Each of the nine connection types (which can be grouped as indicated) is characterized by a specific connection density, weight and delay. In the homogeneous condition, weights and delays are fixed and equal to the mean values for all synapses of a given type, whereas in the heterogeneous condition they are independently drawn from lognormal distributions with the corresponding mean and standard deviation. The last two rows in the table are the connection-specific structural bias parameters, used to skew the network’s degree and weight distributions. The indicated values were taken directly from [110] and [71]. The cases marked with - or x correspond to connections that were either tested, revealing no significant effect (-), or untested due to missing data (x). In both cases, we set the corresponding values to 0.

| Parameter | E → E | E → I1 | E → I2 | I1 → E | I2 → E | I1 → I1 | I1 → I2 | I2 → I1 | I2 → I2 | Description |
|---|---|---|---|---|---|---|---|---|---|---|
| | synE | synE | synE | synI | synI | synI | synI | synI | synI | Grouping |
| | EE | IE | IE | EI | EI | II | II | II | II | Grouping |
| psyn[%] | 16.8 | 57.5 | 24.4 | 60 | 46.5 | 55 | 24.1 | 37.9 | 38.1 | Connection density |
| | 0.45 | 1.65 | 0.638 | 5.148 | 4.85 | 2.22 | 1.4 | 1.47 | 0.83 | Synaptic weights |
| | 0.10 | 0.10 | 0.11 | 0.11 | 0.11 | 0.14 | 0.25 | 0.10 | 0.2 | |
| | 1.8 | 1.2 | 1.5 | 0.8 | 1.5 | 1 | 1.2 | 1.5 | 1.5 | Synaptic delays |
| | 0.25 | 0.2 | 0.2 | 0.1 | 0.2 | 0.1 | 0.3 | 0.5 | 0.3 | |
| | 5 | 5 | 0 | - | 0 | - | - | x | x | Degree distribution bias |
| | 5 | 0 | 0 | - | - | 0 | - | x | x | |
| | 1 | 1 | 1 | - | x | - | - | x | x | Weight correlation bias |
| | 0 | 1 | 0 | - | x | 0 | - | x | x | |

The mean weight values were chosen to rescale the PSP amplitude of each synapse type to the target value. Additionally, as described below, if both structural and synaptic heterogeneity conditions are considered simultaneously, the weight distributions are skewed in order to introduce structural weight correlations (see Generating structural heterogeneity in Materials and methods).

Structural properties

The structure of the network is defined by the density parameter psyn, which is specific for each of the nine connection types. For consistency with the results and methods presented in [110] and [71], we set the connection densities (psyn) to the values reported in [93] (see Table 3). However, it is worth noting that the values reported in the literature can vary substantially, possibly reflecting methodological/technical limitations and/or the fact that connection density is a highly region- / species-specific feature. In fact, among all the complex parameter sets used throughout this study, the single parameter that was most difficult to reconcile across multiple sources was psyn. In the homogeneous case, random connections are created between neurons in a source population pre and target population post (with pre, post ∈ {E, I1, I2}) with a probability given by psyn.
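As an illustration of the homogeneous case, the sketch below draws each potential connection independently with probability psyn. The population sizes are hypothetical, and the treatment of self-connections is not specified in the text above, so none are excluded here.

```python
import numpy as np

def random_connectivity(n_pre, n_post, p_syn, rng):
    """Boolean matrix A[i, j]: True if pre neuron i connects to post neuron j."""
    return rng.random((n_pre, n_post)) < p_syn

rng = np.random.default_rng(seed=2)
# Population sizes are hypothetical; 16.8% is the E -> E density in Table 3
A_ee = random_connectivity(1000, 1000, 0.168, rng)
out_degree = A_ee.sum(axis=1)   # connections made by each presynaptic neuron
in_degree = A_ee.sum(axis=0)    # connections received by each postsynaptic neuron
density = A_ee.mean()           # realized connection density
```

With independent draws, both degree distributions are binomial and therefore narrow, which is the baseline that the structural bias parameters are meant to skew.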

Structural heterogeneity

In order to account for structural heterogeneity, we bias the network’s degree distributions by modifying the structure of the connectivity matrix Asyn, following the methods introduced in [71, 110] and validated against the same primary sources of experimental data used in this study.

By controlling the skewness of the out-/in-degree distributions (kout/in parameters, see Generating structural heterogeneity in Materials and methods), we can generate completely random, uniform connectivity (kout/in = 0, Fig 3a) or highly structured in-/out-degree distributions, with a larger variance in the number of connections per neuron (kout/in > 0, Fig 3b). For the structural heterogeneity condition, these parameters were fixed to the values that were shown to provide a better fit for the experimentally determined connectivity data (see [71, 110] and Table 3).

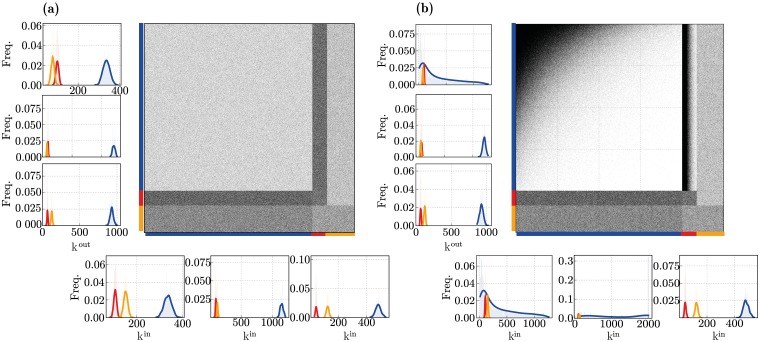

Fig 3. Microcircuit connectivity for (a) homogeneous and (b) heterogeneous network structures.

The large panels show the circuit’s complete connectivity matrix Asyn, comprising all connections among all neuron classes. The panels on the left sides show the corresponding out-degree (kout) distributions for the neuronal populations (E, I1 and I2 from top to bottom); the panels at the bottom show the corresponding in-degree (kin) distributions (E, I1 and I2 from left to right).

Additionally, in conditions where synaptic heterogeneity is also present (fully heterogeneous circuit), structural heterogeneity is further expressed as a bias in the synaptic efficacies for all incoming and outgoing connections to a given neuron. Following [71, 110] and [72], this bias is implemented by rescaling individual synaptic efficacies in order to introduce correlations between them (see Table 3 and Materials and methods). It should be noted that structural heterogeneity only modified connections from E neurons, as most of the other connections were shown to have negligible effects (marked with - in Table 3) or were not successfully tested (marked with x), due to technical constraints. Even though this is likely to be incomplete, it appears to be sufficient to capture the most significant structural effects and their impact on population dynamics, while explicitly accounting for the experimental data in [93]. So, for consistency, we implemented and parameterized structural heterogeneity in the same manner as that reported in [71] and [110].

Emergent population dynamics

Throughout this study, and in order to isolate the effects of different sources of heterogeneity, we consider five different microcircuits: fully homogeneous (Hom), structural (Str), neuronal (Neu) or synaptic (Syn) heterogeneity in isolation and a fully heterogeneous circuit (Het), accounting for the combined effects. In this section, we set out to quantify and evaluate the specific impact of the different forms of heterogeneity on the characteristics of population activity. To do so, we consider the circuit’s responses to an unspecific and stochastic external input, modelling cortical background / ongoing activity (see Input specifications in Materials and methods). We determine and compare the circuit’s responsiveness by looking at the population rate transfer functions, as exemplified in Fig 4a for I2 neurons (complete results are provided in S1 Fig), and summarize the results by the change in absolute gain (ΔGain) and offset (ΔOffset) introduced by each source of heterogeneity, relative to the homogeneous condition (Fig 4b).

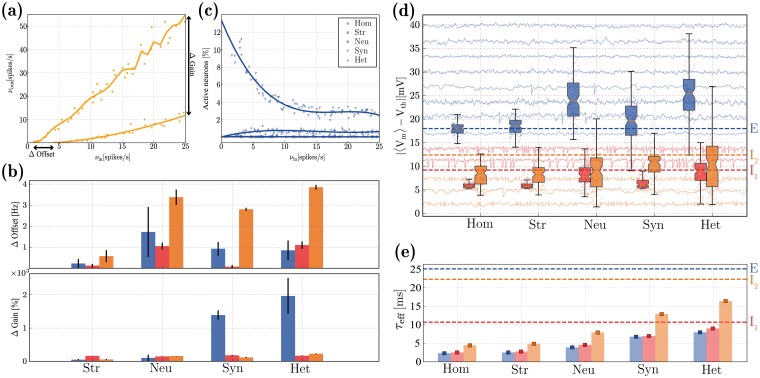

Fig 4. Characteristics of population activity in the quiet state.

(a): Rate transfer function of the I2 neurons in the homogeneous (dots) and structurally heterogeneous (squares) conditions. (b): Change in absolute gain (ΔGain, expressed as a percentage of rate gain relative to the baseline homogeneous condition) and offset (ΔOffset), see annotations in (a), for the E (blue), I1 (red) and I2 (orange) populations, for each heterogeneous condition (structural, neuronal, synaptic, and all forms combined), relative to the homogeneous condition. Error bars indicate the standard deviation across ten simulations per condition. (c): Fraction of active E neurons in the different conditions as a function of input rate νin. (d): Distributions of absolute distances between neurons’ mean membrane potentials 〈Vm〉 and their firing threshold Vth, for the three neuronal populations considered, under the different heterogeneity conditions. The dashed lines indicate the corresponding values reported in [94]. For illustrative purposes, we depict Vm traces for a small set of randomly chosen neurons in the background. (e): Effective membrane time constants (τeff = Cm/〈Gtotal〉) in the noise-driven regime, for the different neuronal classes and conditions. Error bars correspond to the means and standard deviations across each population. The dashed lines indicate the baseline values (τ0 = Cm/gleak). All results depicted were calculated over an observation period of 10 s. The results depicted in (d, e) were acquired with a fixed input rate of νin = 10 spikes/s and correspond to the distributions across the population, for a single simulation per condition.

All heterogeneous conditions, particularly neuronal and synaptic, cause a slight offset for all neuron types (more significant for I2 neurons), making them more responsive (firing at lower input rates), but the effect is not substantial (Fig 4b, top). In most of the conditions analysed, the E population is rather unresponsive, with less than 1% of the neurons active (Fig 4c) and firing at rates below 1 spike/s, regardless of the input rate. While structural and neuronal heterogeneity are incapable of circumventing this effect, synaptic heterogeneity appears to be important for the network to fire at more reasonable rates (albeit still very sparsely), resulting in a substantial modulation of the gain of the rate transfer function (Fig 4b, bottom).

It should be noted that the impact of structural heterogeneity alone is mitigated by the low E rates, since the structural bias exists only within excitatory synapses or between excitatory neurons and fast-spiking interneurons (i.e. E → E and E → I1, see Table 3). So, if the E population rarely fires, it is difficult to ascertain the effects of structural heterogeneity, suggesting either that its relevance pertains mostly to active states, when population activity is slightly higher, or that it is negligible at this scale.

The extremely sparse firing of E neurons that we observe is consistent with physiological measurements in layer 2/3 (e.g. [92, 94, 111–114]), but it significantly limits the degree to which we can quantify the effects of heterogeneity on population activity. So, in order to obtain a greater insight, we look at the sub-threshold responses and characteristics of membrane potential dynamics (Fig 4d and 4e). Excitatory neurons are always significantly hyperpolarized, with their mean membrane potentials kept far from threshold (Fig 4d, blue) and thus require much stronger depolarizing inputs to fire, compared with both inhibitory types. The inhibitory populations are, on average, much more depolarized and their membrane potentials fluctuate closer to their firing thresholds, particularly I1 (Fig 4d, red). Qualitatively, the ratio of average degree of depolarization among the different populations is retained across all conditions, with I1 neurons being strongly depolarized, followed by I2 and E and is consistent with experimental reports for circuits in a state of quiet wakefulness (Fig 4d, dashed lines). This feature stems directly from the electrophysiological properties of the different neuronal classes and the interactions among the 3 populations (given that it is already observed in the homogeneous circuit). Both synaptic and neuronal heterogeneity greatly increase the variability in the distribution of mean membrane potentials across all the neurons and cause a slight overlap between E and I2 populations, an effect that is also consistent with experimental evidence [94].

Active synapses contribute to the total membrane conductance and cause a deviation from the resting membrane time constant [115, 116]. This shunting effect may be mild in sparsely active circuits [117], but it provides a form of activity-dependent modulation of single neurons’ integrative properties [118], which constrain the circuit’s responsiveness. In the absence of synaptic input, I1 neurons have faster responses, characterized by a short baseline membrane time constant (τ0 = Cm/gleak ≈ 10.7 ms), whereas I2 and E neurons are slower (τ0 ≈ 22.3 and 25.1 ms, respectively) and can thus integrate their synaptic inputs over a larger time scale (dashed lines in Fig 4e). This relationship between the neuronal classes (τeff(I1) < τeff(I2) < τeff(E)) is a consequence of the neurons’ physiological properties and is consistent with empirical evidence [59, 118, 119]. However, when driven by external input, the ratio is modified and I2 neurons respond slowest, i.e. τeff(I2) > τeff(I1) > τeff (E). The presence of heterogeneous synapses is important to ameliorate the magnitude of this shunting effect (Fig 4e), which is very substantial in all conditions. It should be noted that, while the sparsity of recurrent activity (particularly that of E neurons), would prompt us to expect a very minor reduction in τeff, the observed results are caused by the large synaptic input provided as background.
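The shunting effect can be made concrete with a small sketch of the two definitions used in Fig 4e, τ0 = Cm/gleak and τeff = Cm/〈Gtotal〉. It assumes that Gtotal is the leak conductance plus the summed synaptic conductances; the capacitance and conductance values are hypothetical and are not the fitted parameters of the model.

```python
import numpy as np

def membrane_time_constant(c_m, g_leak, g_syn=None):
    """Return C_m / <G_total> in ms, for C_m in pF and conductances in nS.

    g_syn: optional array (n_receptor_types, n_time_steps) of synaptic
    conductance traces; G_total(t) is the leak plus their sum at time t.
    """
    if g_syn is None:
        return c_m / g_leak                      # baseline tau_0
    g_total = g_leak + np.sum(g_syn, axis=0)     # total conductance over time
    return c_m / np.mean(g_total)                # effective tau_eff

# Hypothetical values, not the fitted parameters of the model
c_m, g_leak = 250.0, 10.0                        # pF, nS
g_syn = np.vstack([np.full(1000, 5.0),           # stand-in excitatory conductance
                   np.full(1000, 10.0)])         # stand-in inhibitory conductance
tau_0 = membrane_time_constant(c_m, g_leak)
tau_eff = membrane_time_constant(c_m, g_leak, g_syn)
```

In this toy case the synaptic conductances add 15 nS to a 10 nS leak, so the time constant drops from 25 ms to 10 ms, which shows how a large background input can shorten τeff even when recurrent activity is sparse.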

Excitation/inhibition balance

The balance of excitation and inhibition is one of the most important and widely observed features in the neocortex. It plays a pervasive role in modulating and stabilizing circuit dynamics [120], shifting the population state [121–123], selectively gating and routing signals [124–126] and maintaining sparse, distributed dynamics [114, 117], critical for adequate processing and computation [127–129].

As demonstrated in the previous section (Fig 4), the different sources of heterogeneity significantly influence the circuit’s responsiveness, partially by modifying how the different neurons and neuronal populations receive and integrate their synaptic inputs. These differences can also be observed in the amplitude and time course of the total excitatory and inhibitory drive onto each neuron, as can be seen in Fig 5. The results shown are a compound effect of the kinetics of the specific receptors involved and the post-synaptic currents they elicit, the physiological properties of the different neuronal classes as well as the density of specific connections.

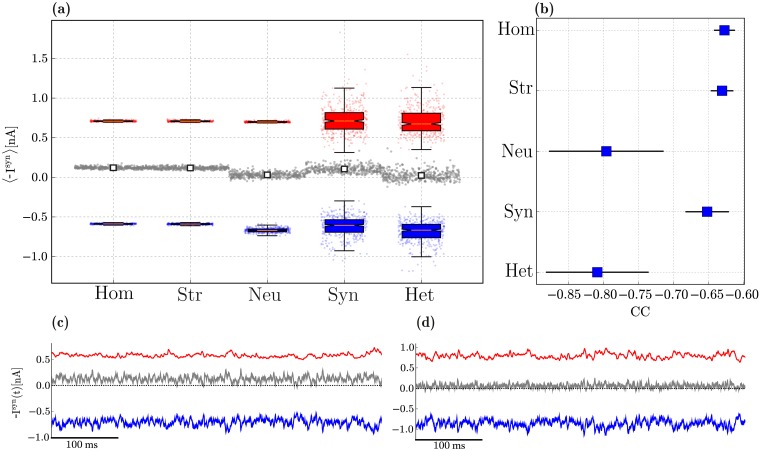

Fig 5. Balance of excitation and inhibition in excitatory neurons driven by background, Poissonian input at νin = 10 spikes/s.

(a): Distribution of mean amplitudes of excitatory (blue) and inhibitory (red) membrane currents onto E neurons in the different conditions, as well as the absolute difference between them (grey). For each condition, we show the single data points, where the currents onto a given neuron are summarized as a set of 3 points (〈Iexc〉 in blue, 〈Iinh〉 in red and |〈Iinh〉 − 〈Iexc〉| in grey). Overlaid on top of these data points are the distributions across all the neurons, summarized as box-plots: the box represents the first and third quartiles (IQR); the median is marked in red; the whiskers are placed at 1.5 IQR and the outliers can be seen in the underlying data points. The white markers in the middle display the mean difference of synaptic amplitudes across all neurons, for each condition. (b): zero-lag correlation coefficient between excitatory and inhibitory synaptic currents (mean and standard deviation across the E population). (c, d): Examples of the total excitatory and inhibitory synaptic currents received by a randomly chosen E neuron in the homogeneous and fully heterogeneous conditions, respectively. Results in (a) and (b) were gathered from 10 simulations per condition.

In a globally balanced state, the amplitudes of excitatory and inhibitory synaptic currents cancel each other on average. This occurs in our microcircuit model only in the presence of neuronal heterogeneity (Fig 5a). Variability in connectivity structure is indistinguishable from the homogeneous condition, whereas variability in synaptic weights and delays significantly increases the variance in the distribution of post-synaptic current amplitudes, but does not shift the mean. This results in an inhibition-dominated synaptic input, resembling that of the homogeneous condition (see also Fig 5c), despite the substantially different distributions.

Apart from being balanced on average, a condition of “detailed” balance [126, 130] is characterized by E and I currents that closely track each other and are strongly anti-correlated (Fig 5b). In the homogeneous circuit, excitatory and inhibitory currents are most weakly anti-correlated (CC ≈ −0.63, see also Fig 5c). Synaptic heterogeneity causes a slight improvement, but the most important contribution to this effect comes from neuronal heterogeneity. In this condition, the mean correlation coefficient reaches CC ≈ −0.8, although it also introduces a greater variance than synaptic or structural heterogeneity (see also Fig 5d).
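A zero-lag correlation coefficient of this kind can be computed as in the sketch below. The current traces are synthetic stand-ins, and the sign convention (excitatory currents negative, inhibitory currents positive) is an assumption made for the illustration.

```python
import numpy as np

def ei_correlation(i_exc, i_inh):
    """Zero-lag Pearson correlation between two current traces."""
    return np.corrcoef(i_exc, i_inh)[0, 1]

rng = np.random.default_rng(seed=3)
n = 100_000
shared = rng.normal(size=n)                       # fluctuation common to both inputs
# Assumed sign convention: excitatory currents negative, inhibitory positive
i_exc = -(2.0 + shared + rng.normal(size=n))
i_inh = 3.0 + shared + rng.normal(size=n)
cc = ei_correlation(i_exc, i_inh)
```

The larger the shared component relative to the independent noise, the closer the coefficient gets to −1, which corresponds to inhibition tracking excitation more tightly.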

Both global and detailed balance thus appear to be emergent properties of heterogeneous microcircuits, primarily due to neuronal diversity, but encompassing also a clear influence of synaptic diversity. As is the case with all results presented so far, the fully heterogeneous circuit retains several key properties of interest, but appears to inherit them from different sources. As before, the very sparse firing of the E population masks the effects of structural heterogeneity, and no significant deviation from the homogeneous condition is observed.

Active processing and computation

In order to induce a functional state, engaging the circuit in active processing, we introduce an additional input signal, directly encoded as a piece-wise constant somatic current (see Input specifications in Materials and methods). We began by tuning the input amplitudes (of both background input firing rate νin and external input current ρu) independently, for each condition, in order to approximate the relative ratio of mean firing rates among the different populations (see S2 Fig), i.e. we attempted to find a combination of input parameters that allows the mean firing rates to remain within realistic bounds (νE ∈ [0.5, 5] for the E population, with corresponding bounds for the I1 and I2 populations, considering the values reported in [93, 94, 111, 114]).

We consider the circuit’s responses to this input signal as an active state, as opposed to the condition explored in the previous sections, where the circuit was driven solely by background, stochastic input (noise). It is worth noting, however, that the similarities between what we call quiet and active states and their biological counterparts are limited (see Discussion). In the following, we show that despite these limitations, the actively engaged circuit operates in similar dynamic regimes to its biological counterpart and maintains the key statistical features that are most likely to play a significant role in modulating the circuit’s processing capacity.

In this section, we assess the microcircuit’s capacity to compute complex functions of the input signal, as described in the Materials and methods. Note that we purposefully removed any predetermined structure in the input signal, such that the measurements reflect the properties of the system and not the acquisition of structural information in the input. If we were to consider naturalistic sensory input as the driving signal, this would not be the case. Furthermore, we intentionally focus on generic information processing as the ability to perform arbitrary transformations on an input signal and not on specific functions which might be performed by specific microcircuits.

Spiking activity in the active state

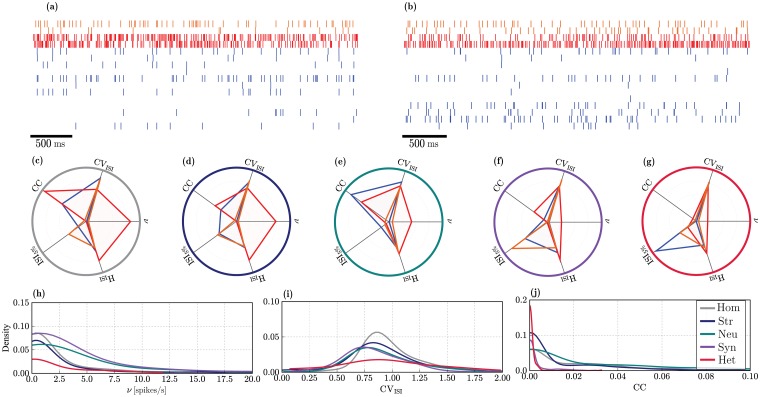

Due to the extremely sparse firing observed in the quiet state, an adequate comparison of spiking statistics is only sensible in the active condition. While no explicit effort was made to constrain the circuit’s operating point when tuning the input parameters (the focus was purely on average firing rates), all conditions operate in an asynchronous irregular regime (exemplified by the raster plots in Fig 6a). This regime is characterized by low pairwise correlations (CC < 0.03) and high coefficients of variation (CVISI ≈ 0.9), for all neuron classes, in all conditions. Each condition generates different spiking responses with slight variations in activity statistics. The profiles of the activity statistics for the three neuronal classes are summarized in Fig 6c–6g for the five different conditions. The complete results, displaying the specific profiles for the different classes and conditions separately, can be consulted in S3 Fig.
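For reference, the coefficient of variation of the inter-spike intervals is the standard deviation of the intervals divided by their mean. The sketch below computes it for two synthetic spike trains (not model output): a Poisson train, for which the value is close to 1, and a perfectly regular train, for which it is close to 0.

```python
import numpy as np

def cv_isi(spike_times):
    """Coefficient of variation of the inter-spike intervals of one spike train."""
    isi = np.diff(np.sort(spike_times))
    return isi.std() / isi.mean()

rng = np.random.default_rng(seed=4)
# Synthetic trains for illustration: mean interval 0.2 s, i.e. 5 spikes/s
poisson_train = np.cumsum(rng.exponential(scale=0.2, size=20_000))
regular_train = np.arange(0.0, 100.0, 0.2)
cv_poisson = cv_isi(poisson_train)
cv_regular = cv_isi(regular_train)
```

A population value such as CVISI ≈ 0.9 therefore indicates firing that is nearly as irregular as a Poisson process.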

Fig 6. Statistical properties of spiking activity in the active state for the E (blue), I1 (red) and I2 (orange) populations.

(a, b): Example raster plots depicting the activity of a small, randomly chosen subset of neurons, over a recording period of 2.5s in a homogeneous and fully heterogeneous circuit, respectively. (c)-(g): Activity statistics profiles for the different populations (overlaid) in the different conditions. The radial axes represent: mean pairwise correlation coefficient (CC), coefficient of variation of the inter-spike intervals (CVISI), mean firing rate (ν[spikes/s]), entropy (HISI[bits]) and burstiness (ISI5%[s]) of the firing patterns. The values along the radial axes are normalized to the largest value across all neuron classes in each condition. The units have been removed for better legibility (see S3 Fig). (h)-(j): Distribution of firing rates ν[spikes/s], CVISI and CC for the E population in the different heterogeneity conditions (see legend in (j)). The results depicted are population averages, obtained from one simulation per condition.

In the homogeneous condition (Fig 6a and 6c), I1 neurons exhibit the most distinctive profile, with the highest amount of synchrony and the largest firing rates as well as a very bursty and regular firing relative to any other neuronal class and any other condition. Consistent with empirical observations [94, 114, 131, 132], E neurons retain an extremely low firing rate (νE ≤ 1 spikes/s), even when stimulated by the extra input current that characterizes the active state. The main difference is that a larger fraction of the population is engaged and actively firing. This result demonstrates that sparse firing of E neurons is a stable characteristic of layer 2/3 microcircuits, emerging from the strong and dense inhibition provided primarily by I1 neurons that, firing at very high rates, strongly inhibit the E population, regardless of the variations introduced by different sources of heterogeneity and the addition of the extra excitatory input.

The impact of the various heterogeneity conditions particularly affects the degree of synchronization and burstiness across the different populations. Only the firing rates of I1 neurons are significantly modified by heterogeneity, whereas for all other neuron classes, they remain consistently low. Irregularity and randomness in the firing patterns are mostly unaffected as is clear by observing the similarity in the respective axes (HISI and CVISI in Fig 6c–6g).

The effects of structural heterogeneity (Fig 6d) are only noticeable on the neuronal classes that are directly affected (E and I1, see Table 3); no changes in activity statistics are observable for the I2 population (orange profiles in Fig 6c and 6d). Excitatory neurons fire less synchronously and exhibit a much lower tendency to fire in short spike bursts, compared with the homogeneous condition. On the other hand, I1 neurons show a slight decrease in synchrony and firing rate.

Diversity in neuronal parameters (Fig 6e) strongly affects the response properties of I2 neurons, slightly increasing their firing rates and correlation coefficient. The most noticeable effect, however, is a greatly increased tendency to fire in short bursts (ISI5% ≈ 1s), which is the most significant deviation from the standard profile exhibited by this neuronal class in all other conditions (for a more complete comparison, consult S3 Fig). Heterogeneous E neurons have a higher tendency to fire synchronously (albeit still with very low CC), compared to any other condition. As for the I1 population, the most significant effect of neuronal heterogeneity is a reduction of the mean firing rate.

Synaptic heterogeneity (Fig 6f) causes a clear alteration of the firing profile of all neuron classes, particularly E and I1, resulting in a noticeable decrease in synchronization in all populations, thus having a marked decorrelating effect. It also produces a substantial reduction in the tendency for burst spiking in the E and I2 populations. The firing rates of both inhibitory populations are reduced (due to the chosen input parameters, see S2 Fig) which, consequently, leads to a slight increase in the firing rates of E neurons, which also fire slightly more regularly.

Interestingly, the firing profile observed in the fully heterogeneous circuit (Fig 6b and 6g) exhibits some unique features, different from any of those created by the individual sources of heterogeneity in isolation. Particularly prominent is the complete abolishment of any degree of synchronization in any of the neuronal populations, which show the smallest correlation coefficients of all the cases considered. This effect is likely primarily acquired from synaptic heterogeneity, but goes further than the effect observed there. The firing profile of I2 neurons in the fully heterogeneous circuit retains all the features observed in the homogeneous circuit, indicating that the variations introduced by neuronal and synaptic heterogeneity are counteracted by the complex interactions between the different sources of heterogeneity.

Overall, the statistics of population activity clearly demonstrate that the fully heterogeneous circuit is more than the sum of its parts, i.e. the variations introduced by the combination of multiple sources of heterogeneity cannot be fully accounted for by their individual effects and lead to more complex interactions that strongly modulate the circuit’s operating point. In addition, all heterogeneity conditions give rise to similar distributions (Fig 6h–6j), i.e. lognormal distributions of firing rates (argued to be a beneficial feature [72, 133]) and correlation coefficients as well as a Gaussian distribution of CVISI, with mean close to 1. The different conditions simply modulate the parameters of the distributions: synaptic and neuronal heterogeneity broaden the firing rate distributions; synaptic heterogeneity alone is responsible for skewing the CCs to smaller values, an effect that is stronger and more pronounced in the fully heterogeneous circuit. It should be noted that the tails of the rate distributions in networks with heterogeneous neurons and/or synapses fall beyond the range typically observed in layer 2/3 microcircuits. The extra somatic current with which we emulate the active state drives the targeted sub-population to fire excessively in these conditions, which highlights a limitation of our approach (see Limitations and future work).

Temporal tuning and memory capacity

Online processing requires the continuous acquisition and integration of temporally extended information arriving through multiple time-varying input streams. As such, cortical microcircuits need to retain information over time (fading memory) and combine it in meaningful ways. This generic operating principle thus constitutes an important feature underlying cortical information processing (see e.g. [4, 134–136]), and is primarily determined by architectural constraints that potentially modulate the circuit’s operating timescales. In this section, we investigate the effect of heterogeneity on the ability of our microcircuit model to retain information over long timescales, enabling it to operate over a broad dynamic range.

The baseline, homogeneous circuit already exhibits these properties (Fig 7a), with an average intrinsic time constant (measured as the decay of the membrane potential autocorrelation functions, see Materials and methods) of ≈ 126.75 ms in the quiet state and a relatively broad dynamic range. Thus, even when all the system’s components are homogeneous, neurons appear to operate at relatively long time scales. This can be partially attributed to the fact that each input elicits very small, sub-millivolt responses [137, 138] and these neurons are strongly hyperpolarized. Therefore, the microcircuit complexity, in combination with the nature of the sub-threshold dynamics, is inherently sufficient to allow it to operate over a large and long temporal range and to rapidly switch to the timescales of its primary driving input (Fig 7a, top).
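One simple way to estimate a timescale from the decay of an autocorrelation function is sketched below. It is not necessarily the procedure described in Materials and methods: it assumes a single-exponential decay and fits a line to the logarithm of the autocorrelation over the initial stretch where it stays above a chosen floor. The test signal is a synthetic first-order autoregressive process with a known time constant, standing in for a membrane potential trace.

```python
import numpy as np

def autocorrelation(x, max_lag):
    """Normalized autocorrelation of x for lags 0..max_lag (in samples)."""
    x = x - x.mean()
    var = np.dot(x, x) / len(x)
    return np.array([np.dot(x[:len(x) - k], x[k:]) / (len(x) - k) / var
                     for k in range(max_lag + 1)])

def intrinsic_timescale(v_m, dt, max_lag, floor=0.2):
    """Time constant of an assumed exponential decay, from a log-linear fit."""
    acf = autocorrelation(v_m, max_lag)
    below = np.nonzero(acf < floor)[0]
    stop = below[0] if below.size else len(acf)   # fit only where acf >= floor
    lags = np.arange(stop) * dt
    slope, _ = np.polyfit(lags, np.log(acf[:stop]), 1)
    return -1.0 / slope

# Synthetic stand-in for a membrane potential trace with a known 20 ms timescale
rng = np.random.default_rng(seed=5)
dt, tau_true, n = 1.0, 20.0, 400_000              # ms, ms, samples
phi = np.exp(-dt / tau_true)
noise = rng.normal(size=n)
v = np.empty(n)
v[0] = noise[0]
for i in range(1, n):
    v[i] = phi * v[i - 1] + noise[i]
tau_est = intrinsic_timescale(v, dt, max_lag=200)
```

The estimate depends on the chosen floor and on the length of the recording, so it is best treated as a relative measure when comparing conditions.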

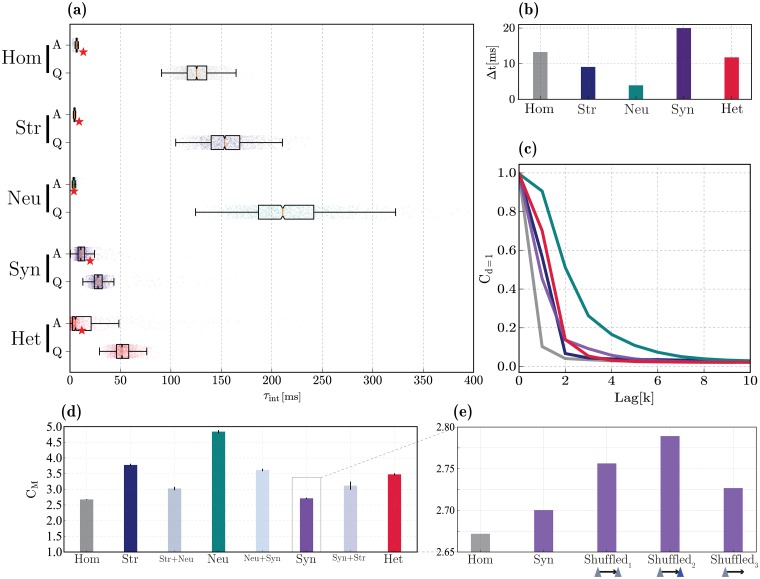

Fig 7. Temporal tuning and linear memory capacity.

(a): Distributions of intrinsic timescales (τint) in the quiet (Q, bottom) and active (A, top) states, for each of the different conditions. (b): Optimal stimulus resolution (Δt) that allows each circuit to perfectly track its input signal (see also red star markers in (a) and S4 Fig). (c): Fading memory functions for the different heterogeneity conditions, determined as the ability to reconstruct the input signal at different delays (k). Colours as in panel (d) below. (d): Total memory capacity, corresponding to the area under the curves in (c). Apart from the main conditions depicted in (c), pair-wise combinations among conditions are depicted in between. (e): Effects of synaptic heterogeneity on memory capacity, in conditions where the weight distributions are fixed, but re-shuffled such that the strongest weights are assigned to the connections among the input population (Shuffled1), from the input population to all excitatory neurons (Shuffled2), or from the input population to all neurons (Shuffled3). Results depicted in (b), (d) and (e) were gathered from 10 simulations per condition.

In order to reliably compute, it is beneficial if the system’s high-dimensional dynamics become transiently ‘enslaved’ by the input [16]. This would correspond to a switch in the system’s intrinsic timescale to the dominant input time scale, allowing the intrinsic fluctuations to help the circuit track the input dynamics. The observed features of having a broad dynamic range in the quiet state, along with the ability to rapidly switch to the shorter timescale of the driving input during active processing appear to stem primarily from the microcircuit’s composition and dynamics (homogeneous condition). However, they are present to greater extents in the presence of structural and neuronal heterogeneity (see Fig 7a). The same pattern is true for memory capacity, whereby neuronal heterogeneity causes a large extension of the circuit’s memory range, leading to a slowly fading and relatively long memory store (Fig 7c and 7d).

We express the memory functions as the ability to use the current state of the circuit x[n] to reconstruct the input sequence at different time lags u[n − k] for k ∈ [0, 1, 2, …, T] (as depicted in Fig 7c). The total memory capacity CM (Fig 7d) is then the sum of the individual capacities at different lags k and thus characterizes the maximum extent to which information about past inputs is retained in the current state. It is worth noting, however, that these results should be interpreted cautiously as the different circuits exhibit different responsiveness to the input signal. For comparability, the time constant at which the input varies (Δt) was chosen independently for each condition as the value that maximizes the circuit’s ability to reconstruct its input, i.e. the value that allowed optimal performance at 0 time lag (see Fig 7b and S4 Fig).
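The memory function described above can be sketched as follows. The capacity at each lag is taken here to be the squared correlation between u[n − k] and a linear reconstruction from x[n], which is a common convention and an assumption on our part. The ridge readout, the train/test split and the toy delay-line "circuit" are likewise illustrative choices rather than the procedure used in the study.

```python
import numpy as np

def memory_function(states, u, max_lag, ridge=1e-6):
    """Capacity per lag k: squared correlation between u[n-k] and its
    linear reconstruction from the state x[n] (states: n_units x n_steps)."""
    n_steps = states.shape[1]
    capacities = np.zeros(max_lag + 1)
    for k in range(max_lag + 1):
        x = states[:, k:].T                            # x[n] for n >= k
        target = u[:n_steps - k]                       # u[n - k]
        x = np.hstack([x, np.ones((x.shape[0], 1))])   # add a bias column
        half = x.shape[0] // 2                         # fit on first half
        gram = x[:half].T @ x[:half] + ridge * np.eye(x.shape[1])
        w = np.linalg.solve(gram, x[:half].T @ target[:half])
        prediction = x[half:] @ w                      # evaluate on second half
        capacities[k] = np.corrcoef(prediction, target[half:])[0, 1] ** 2
    return capacities

# Toy "circuit": a noisy delay line that stores the last 5 inputs
rng = np.random.default_rng(seed=6)
n_steps, n_delays = 5000, 5
u = rng.uniform(-1.0, 1.0, n_steps)
states = np.zeros((n_delays, n_steps))
for j in range(n_delays):
    states[j, j:] = u[:n_steps - j]                    # unit j holds u[n - j]
states += 0.01 * rng.normal(size=states.shape)
capacities = memory_function(states, u, max_lag=10)
total_capacity = capacities.sum()                      # analogue of C_M
```

For this delay line the capacity is close to 1 for lags 0 to 4 and close to 0 beyond, so the total is approximately 5. In a recurrent circuit the function typically decays gradually instead, which is what the fading memory curves in Fig 7c depict.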

The memory range of structurally heterogeneous networks is similar to that of the fully heterogeneous circuit (CM ≈ 3.65 and 3.49, respectively) and both are markedly larger than in the corresponding homogeneous case (CM ≈ 2.67, Fig 7c and 7d). Thus, despite having a barely noticeable effect on microcircuit dynamics and state transitions, structural heterogeneity appears to have non-negligible functional effects. This is somewhat surprising, in light of all the results discussed so far, but consistent with e.g. [139], who proposed that a heterogeneous network structure can give rise to broad and diverse temporal tuning.

The fact that physiological diversity in single neuron properties (neuronal heterogeneity) extends the dynamic range and memory capacity is to be expected, since it directly decreases redundancy and adds variability to the population responses. However, the magnitude of the effect is very significant, nearly doubling the memory capacity, thus making neuronal heterogeneity stand out as the most functionally relevant condition. In the presence of neuronal heterogeneity alone, the circuit becomes much more responsive, with broader temporal tuning and memory capacity (Fig 7) and capable of achieving optimal performance in reconstructing the input signal, even when it varies at short timescales (peak reconstruction performance is achieved with Δt ≈ 3.9 ms versus Δt ≈ 13.3 ms in the homogeneous circuit, see Fig 7b and S4 Fig).

Counter-intuitively, synaptic heterogeneity appears to make the circuit ‘sluggish’, in the sense that it is less responsive and incapable of tracking fast fluctuations in the input signal (Δt ≈ 20 ms, Fig 7b and S4 Fig). These circuits also have a very small memory capacity (CM ≈ 2.7), similar to that of the homogeneous circuit. Accordingly, diversity in synaptic components enforces a very narrow temporal tuning, skewed towards short timescales in the quiet state (mean τint ≈ 30 ms), and reduces the circuit’s ability to acquire the input timescale (Fig 7a and 7b).

These apparently deleterious effects of synaptic heterogeneity hint at an important limitation of our implementation (further discussed in Limitations and future work): heterogeneous connection strengths in biological circuits are not randomly assigned, but result from learning and adaptation processes and subserve the development of a functional architecture tailored to the circuit’s processing demands (see, e.g. [185]). For simplicity, however, we did not introduce any form of synaptic adaptation in our microcircuit model and we randomly distributed the connection strengths across the network, which may have precluded us from capturing the functional relevance of synaptic heterogeneity.

In order to investigate whether non-random features of the distribution of synaptic weights play a role in the memory capacity, we performed an additional test in which the weight distributions were retained as in the original implementation, but the individual values were re-shuffled according to three different assumptions about which connections are most likely to have the strongest weights (see Fig 7e): connections within the sub-population of E neurons that were directly stimulated (Shuffled1); connections between these input-driven neurons and other E neurons (Shuffled2); and connections from the input-driven neurons to every other neuron in the microcircuit (Shuffled3, i.e. also involving synapses onto I1 and I2 populations). The results depicted in Fig 7e demonstrate that each of these modifications improves the circuit’s memory capacity, relative to the random synaptic heterogeneity condition. These improvements are only marginal (reaching a maximum value of CM ≈ 2.78), but substantiate the claim that the non-random nature of synaptic heterogeneity is functionally meaningful and ought to be carefully scrutinized. Among the conditions tested, the strengthening of excitatory synapses connecting the input-driven neurons to the remaining E neurons (Shuffled2) appears to be the most beneficial.
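One way such a re-shuffling could be implemented is sketched below. The sketch keeps the set of weight values unchanged and permutes them so that the largest values fall on a chosen subset of connections. The exact procedure used in the study is not specified in this section, so the function `reshuffle_weights`, the lognormal weights, the network size and the set of stimulated neurons are all hypothetical and serve only to illustrate the idea for a Shuffled1-like condition.

```python
import numpy as np

def reshuffle_weights(weights, preferred, rng):
    """Permute `weights` so that the largest values land on the connections
    flagged in `preferred`; the overall weight distribution is unchanged."""
    weights = np.asarray(weights, dtype=float)
    preferred = np.asarray(preferred, dtype=bool)
    ordered = np.sort(weights)[::-1]           # strongest weights first
    n_pref = int(preferred.sum())
    out = np.empty_like(weights)
    # Strongest values go to preferred connections, in random order
    out[preferred] = rng.permutation(ordered[:n_pref])
    # Remaining values are spread randomly over all other connections
    out[~preferred] = rng.permutation(ordered[n_pref:])
    return out

rng = np.random.default_rng(1)
n_neurons, n_syn = 100, 2000                    # hypothetical network size
pre = rng.integers(0, n_neurons, n_syn)         # presynaptic neuron of each synapse
post = rng.integers(0, n_neurons, n_syn)        # postsynaptic neuron of each synapse
w = rng.lognormal(mean=0.0, sigma=1.0, size=n_syn)  # assumed weight distribution

stimulated = np.arange(20)                      # hypothetical input-driven E neurons
# Shuffled1-like mask: connections within the stimulated sub-population
within = np.isin(pre, stimulated) & np.isin(post, stimulated)
w_shuffled1 = reshuffle_weights(w, within, rng)
```

Masks for the other two conditions would differ only in which postsynaptic targets are included, for example `np.isin(pre, stimulated)` combined with a test on the target population.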

Overall, these results demonstrate a clear relation between the system’s responsiveness to temporal fluctuations in the input signal, the intrinsic timescales that characterize the neurons’ activity and the circuit’s memory capacity. There appears to be a ‘push-and-pull’ phenomenon caused by the interactions of neuronal and synaptic heterogeneity, whereby the former significantly boosts the circuit’s dynamic range and memory capacity, whereas the latter pulls it back to values similar to the homogeneous condition. Additionally, the independent sources of heterogeneity co-modulate each other’s effects in unexpected ways (Fig 7d). For example, structural and neuronal heterogeneity, which individually have the most noticeable positive impact on the circuit’s memory capacity, fail to do so when combined. On the other hand, the negative impact of synaptic heterogeneity alone is ameliorated when it is combined with either neuronal or structural heterogeneity.

Processing capacity

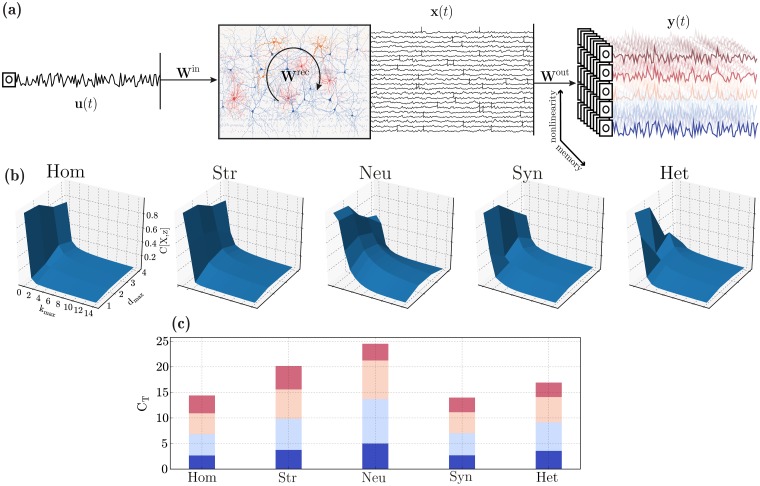

To complement the results of the previous sections and determine the microcircuit’s suitability for online processing with fading memory, we adopt the notion of information processing capacity introduced in [140], which allows us to quantify the system’s ability to employ different modes of information processing and, by combining them, determine the total computational capacity of the circuit (for a formal description, see Processing capacity in Materials and methods). By this definition, the memory capacity discussed in the previous section corresponds to the capacity to reconstruct the set of k different linear functions (degree 1 Legendre polynomials) of the input u, each corresponding to a specific time lag, see also [141]. As such, it corresponds to the fraction of the total capacity associated with linear functions (since no products are involved) and measures the circuit’s linear processing capacity (d = 1 in Fig 8). Accordingly, degrees d ≥ 2 correspond to larger and increasingly complex sets of non-linear basis functions (products of Legendre polynomials, see illustrative example in Fig 8a) and thus require increasingly more sophisticated computational capabilities.

Fig 8. Computational capacity in the different heterogeneity conditions.

(a) Illustration of the setup used to assess computational capacity. An input signal, u, is used to drive a sub-set of E neurons and the population responses, x, are recorded and gathered in state matrix X. These states are then used to reconstruct a set of time-dependent functions z = f(u−k). These target functions vary in complexity (degree of nonlinearity, color-coded) and memory requirements. (b) Normalized capacity space, i.e. ability to reconstruct functions of u at different maximum delays (kmax, memory) and degrees (dmax, complexity/nonlinearity). For any given function, the capacity is normalized such that C[X, z] = 1 corresponds to perfect reconstruction of z. (c) Total processing capacity, expressed as the sum of all capacities for a given degree (the incremental color code in each bar corresponds to the maximum degree for each segment, varying from 1 to 4 and is also illustrated in (a)).

We can thus distinguish between computational complexity / non-linearity (specified by the maximum degree of the basis functions used, dmax) and memory (specified by the maximum delay taken into consideration, kmax). By evaluating a very large set of functions of u, we can quantify the circuit’s information processing capacity over the space of basis functions (Fig 8b). The total capacity CT (Fig 8c), defined as the sum of the individual capacities (C[X, z]) for all the different functions z tested, thus quantifies the circuit’s ability to compute multiple transformations of u with variable degrees of complexity, and provides a summarized description of the system’s information processing capacity (see Processing capacity in Materials and methods).
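To make the construction of the target functions concrete, the sketch below builds two degree-2 targets as products of Legendre polynomials of delayed inputs and estimates C[X, z] as one minus the normalized mean squared error of a linear readout, following the general definition in [140]. It is a minimal sketch and not the procedure used in the study: the helper names `legendre_target` and `capacity`, the ridge term, the train/test split and the three-variable toy state are assumptions made for the example.

```python
import numpy as np
from numpy.polynomial.legendre import legval

def legendre_target(u, degrees):
    """z[n] = prod_k P_{degrees[k]}(u[n-k]); a degree of 0 skips that lag."""
    max_lag = len(degrees) - 1
    n = len(u) - max_lag
    z = np.ones(n)
    for k, d in enumerate(degrees):
        if d > 0:
            delayed = u[max_lag - k : max_lag - k + n]   # u[n - k]
            z *= legval(delayed, [0] * d + [1])          # Legendre polynomial P_d
    return z

def capacity(X, z, ridge=1e-8):
    """1 - MSE / <z^2> for a linear readout, fitted on the first half of the
    data and evaluated on the second half (negative estimates are set to 0)."""
    A = np.hstack([X, np.ones((len(X), 1))])
    half = len(z) // 2
    w = np.linalg.solve(A[:half].T @ A[:half] + ridge * np.eye(A.shape[1]),
                        A[:half].T @ z[:half])
    mse = np.mean((A[half:] @ w - z[half:]) ** 2)
    return max(0.0, 1.0 - mse / np.mean(z[half:] ** 2))

rng = np.random.default_rng(2)
u = rng.uniform(-1.0, 1.0, size=4000)

# Hypothetical toy state: u[n], u[n-1] and u[n-1]^2, aligned with n >= 1
u_now, u_prev = u[1:], u[:-1]
X = np.column_stack([u_now, u_prev, u_prev ** 2])

z_quadratic = legendre_target(u, (0, 2))   # P_2(u[n-1]), total degree 2
z_product = legendre_target(u, (1, 1))     # P_1(u[n]) * P_1(u[n-1]), total degree 2
cap_quadratic = capacity(X, z_quadratic)
cap_product = capacity(X, z_product)
total_capacity = cap_quadratic + cap_product   # analogue of CT over this tiny set
```

In this toy example the first target is almost perfectly reconstructed, because the state contains u[n − 1]², whereas the cross-term product is not linearly accessible and its capacity is close to zero. Summing such capacities over a large family of `degrees` tuples, grouped by total degree and maximum delay, gives the kind of decomposition shown in Fig 8b and 8c.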

In line with the results of the previous section, heterogeneity in neuronal parameters has the most significant effect, greatly extending the space of computable functions, both linear and nonlinear. Because they retain contextual information for longer (extended memory range), these circuits have a high capacity even for relatively large delays, as demonstrated by the slowly decaying memory curves in Fig 8b. As a consequence, the total capacity of microcircuits with heterogeneous neurons is the largest among all the conditions (Fig 8c).

Despite its very modest effects on population activity, structural heterogeneity has very interesting consequences for the microcircuit’s processing capacity, particularly for the ability to compute complex nonlinear functions. Although the memory functions decay abruptly (almost as abruptly as in the homogeneous condition, Fig 8b), the circuits achieve a larger capacity for more complex functions (Fig 8c), and the total capacity at d = 4 (the largest degree evaluated) is the largest among all conditions tested, as can be seen by comparing the top crimson bars in Fig 8c.

Also in line with the results for d = 1 (linear memory capacity), synaptic heterogeneity has a deleterious effect on processing capacity, reducing it to values smaller than the homogeneous case (CT ≈ 13.9 versus CT ≈ 14.4 for the homogeneous condition, Fig 8c). Consequently, the beneficial effects introduced by both structural and neuronal heterogeneity are counteracted by the negative effect of synaptic heterogeneity, as observed in the previous section. These cancelling effects result in the fully heterogeneous circuit having a total capacity that is only modestly superior to the homogeneous case (CT ≈ 16.9).

Under idealized conditions, the total capacity is bounded by the number of linearly independent state variables of the dynamical system, which, in the limit of T → ∞, equals N (the number of neurons) in systems that perfectly obey the fading memory property and whose neurons’ activity is linearly independent (for proofs, see [140]). In that respect, the values we have obtained for the total capacity are very modest, close to only 1% of the theoretical limit. On the one hand, this is due to methodological limitations (we could only investigate a short range of dmax); on the other, it suggests that important aspects neglected in this study may significantly boost the total capacity and at least partially explain the difference (see Limitations and future work).

Nevertheless, the results show a pattern consistent with that observed for the memory capacity, i.e. the functional consequences of the different sources of heterogeneity are consistent across the ability to compute linear and non-linear functions with fading memory, as the circuits with the largest linear memory capacity are also the ones with the largest non-linear processing capacity (namely those with neuronal heterogeneity). However, these results also indicate that neuronal heterogeneity has its main effect on memory (kmax), greatly extending the capacity to compute functions of u−k for larger values of k (Fig 8b), while structural heterogeneity (which has the second largest functionally beneficial effect) boosts the ability to compute more complex functions, with a main effect on dmax.

Discussion

Heterogeneity and diversity in cellular, biochemical and physiological properties seen within and across cortical regions and layers exert a significant influence on population dynamics. Although often disregarded by the reduced models commonly used in computational neuroscience, these features of the neural tissue may well be partially responsible for the high computational proficiency and functional properties of these systems. In order to understand the functional relevance of the different ‘building blocks’ and their inherent complexity and diversity, it is important to start from relatively simple formalisms and gradually account for the biological complexity while maintaining coherence with the relevant empirical observations at multiple levels. The present study proposes a data-driven modelling approach as an exploratory strategy to systematically uncover the computational benefits of different microcircuit features in an attempt to elucidate and quantify the biophysical substrates of neural computation.

We have focused on the composition of layer 2/3 cortical microcircuits, since their highly recurrent connectivity [142, 143] and sparse, asynchronous activity [93, 111, 113, 117, 142, 144] are ideally suited to study the nature of sparse distributed processing in cortical microcircuit models. The relatively small extent of the neuritic processes (in comparison with deeper layers) makes it acceptable to assume that the potential role of dendritic compartmentalization [145, 146] and other effects caused by the detailed neuronal morphology [147, 148] are negligible, allowing us to use simple point-neuron models with limited loss, which would not be the case if we accounted for the deeper layers. Additionally, the input/output relations and unique position of layer 2/3 in a cortical column suggest a particularly prominent computational role, as it must integrate and process multiple streams of information in meaningful ways.

In an attempt to disentangle the role played by heterogeneity in different components of the system, we tentatively partitioned it into neuronal, structural and synaptic components (see Introduction, Data-driven microcircuit model and Supplementary Materials). These different sources of heterogeneity differentially influence the characteristics of population responses: from introducing variability in how different neurons and neuronal classes respond to and integrate their synaptic inputs, to variations in the magnitude and distribution of those inputs, among other effects. These, often subtle, differences have complex effects at the population level and strongly condition the system’s operating point. The fully heterogeneous circuit provides the closest approximation to the biophysical reality, exhibiting important commonalities, and appears to inherit different features from different sources of heterogeneity. Naturally, given the simplifications required, our conclusions on the effects of the various heterogeneities are primarily qualitative. However, the extent to which the model responses differ from the empirical observations can also be informative about the potential impact of microcircuit features and processes that were not explicitly considered or were overlooked or oversimplified, as we discuss in the following section.

Collecting, validating and organizing experimental data relevant for these types of studies is still a monumental challenge. Manual annotation and parameter extraction are cumbersome, error-prone strategies and only feasible on well-constrained systems and well-defined problems. The creation and active curation of stable and reliable large-scale databases (of which good examples exist: Allen Brain Atlas [149], NeuroMorpho [150], NeuroElectro [100], NMC [91], ICGenealogy [151], to name a few), along with standard and widely accepted registration and sharing practices [152, 153], are increasingly a priority in a community-driven effort to better constrain neuroscience models and integrate knowledge from multiple disciplines. In addition, automated parameter extraction and estimation, as well as model fitting and comparison, are increasingly necessary for studies in this direction. These are complex challenges, as they must meet the requirements of an ever-changing scientific field that, consequently, does not lend itself easily to standardization.