Abstract

Background

The shortage and disproportionate distribution of health care workers worldwide is further aggravated by the inadequacy of training programs, difficulties in implementing conventional curricula, deficiencies in learning infrastructure, or a lack of essential equipment. Offline digital education has the potential to improve the quality of health professions education.

Objective

The primary objective of this systematic review was to evaluate the effectiveness of offline digital education compared with various controls in improving learners’ knowledge, skills, attitudes, satisfaction, and patient-related outcomes. The secondary objectives were (1) to assess the cost-effectiveness of the interventions and (2) to assess adverse effects of the interventions on patients and learners.

Methods

We searched 7 electronic databases and 2 trial registries for randomized controlled trials published between January 1990 and August 2017. We used Cochrane systematic review methods.

Results

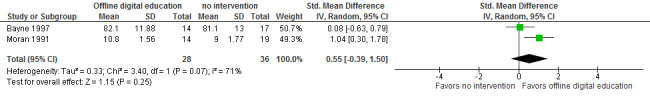

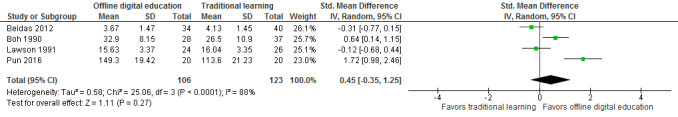

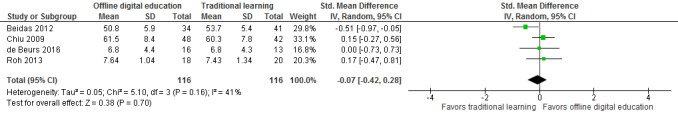

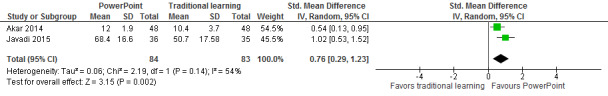

A total of 27 trials involving 4618 individuals were included in this systematic review. Meta-analyses found that compared with no intervention, offline digital education (CD-ROM) may increase knowledge in nurses (standardized mean difference [SMD] 1.88; 95% CI 1.14 to 2.62; participants=300; studies=3; I²=80%; low certainty evidence). A meta-analysis of 2 studies found that compared with no intervention, the effects of offline digital education (computer-assisted training [CAT]) on nurses’ and physical therapists’ knowledge were uncertain (SMD 0.55; 95% CI –0.39 to 1.50; participants=64; I²=71%; very low certainty evidence). A meta-analysis of 2 studies found that compared with traditional learning, a PowerPoint presentation may improve the knowledge of patient care personnel and pharmacists (SMD 0.76; 95% CI 0.29 to 1.23; participants=167; I²=54%; low certainty evidence). A meta-analysis of 4 studies found that compared with traditional training, the effects of computer-assisted training on skills in community (mental health) therapists, nurses, and pharmacists were uncertain (SMD 0.45; 95% CI –0.35 to 1.25; participants=229; I²=88%; very low certainty evidence). A meta-analysis of 4 studies found that compared with traditional training, offline digital education may have little or no effect on satisfaction scores in nurses and mental health therapists (SMD –0.07; 95% CI –0.42 to 0.28; participants=232; I²=41%; low certainty evidence). A total of 2 studies found that offline digital education may have little or no effect on patient-centered outcomes when compared with no intervention or blended learning. For skills and attitudes, the results were mixed and inconclusive. None of the studies reported adverse or unintended effects of the interventions. Only 1 study reported costs of interventions. The risk of bias was predominantly unclear and the certainty of the evidence ranged from low to very low.

Conclusions

There is some evidence to support the effectiveness of offline digital education in improving learners’ knowledge, but the evidence for the other outcomes is insufficient in both quality and quantity. Future high-quality studies are needed to increase generalizability and inform use of this modality of education.

Keywords: randomized controlled trial, systematic review, medical education

Introduction

Background

There is no health care system without health professionals. The health outcomes of people rely on well-educated nurses, pharmacists, dentists, and other allied health professionals [1]. Unfortunately, these professionals are in short supply and high demand [2,3]. Almost 1 billion people are negatively affected by the lack of access to adequately trained health professionals, suffering ill-health or dying [4,5]. In many low- and middle-income countries (LMICs), this situation is further aggravated by the difficulties in implementing traditional learning programs; deficiencies in health care systems and infrastructure; and lack of essential supplies, poor management, corruption, or low remuneration [6].

Digital education, also known as e-learning, is an umbrella term encompassing a broad spectrum of educational interventions characterized by their tools, technological contents, learning objectives or outcomes, pedagogical approaches, and delivery settings. These include, but are not limited to, online and offline computer-based digital education, massive open online courses (MOOCs), mobile learning (mLearning), serious gaming and gamification, digital psychomotor skill trainers, virtual reality, and virtual patient scenarios [7]. Digital education aims to improve the quality of teaching by facilitating access to resources and services, as well as remote exchange of information and peer-to-peer collaboration [8]; it is also being increasingly recognized as one of the key strategic platforms to build strong education and training systems for health professionals worldwide [9]. The United Nations and the World Health Organization consider digital education an effective means of addressing the educational needs of health professionals, especially in LMICs.

This review focused on offline digital education, which refers to the use of personal computers or laptops to deliver stand-alone multimedia materials without the need for internet or local area network connections [10]. The educational content can be delivered via videoconferences, emails, and audio-visual learning materials kept in magnetic storage (for example, floppy disks), optical storage (for example, CD-ROM or digital versatile disk), flash memory, multimedia cards, or external hard disks, or downloaded from a networked connection, as long as the learning activities do not rely on this connection [11].

There are several potential benefits of offline digital education, such as unrestrained knowledge transfer, enriched accessibility, and greater significance of health professions education [12]. Further benefits include flexibility and adaptability of educational content [13], so that learners can absorb curricula at a convenient pace, place, and time [14]. The interventions can also be used to deliver an interactive, associative, and perceptual learning experience by combining text, images, audio, and video across visual, auditory, and spatial components, further improving health professionals’ learning outcomes [15,16]. By doing so, offline digital education can potentially stimulate neurocognitive development (memory, thinking, and attention) by enhancing changes in the efficiency of chemical synaptic transmission between neurons, increasing specific neuronal connections, creating new patterns of neuronal connectivity, and generating new neurons [17]. Finally, health professionals better equipped with knowledge, skills, or professional attitudes as a result of offline digital education might improve the quality of health care service provision, as well as patient-centered and public health outcomes, and reduce the costs of health care.

Objectives

This systematic review was one of a series of reviews evaluating the scope for implementation and the potential impact of a wide range of digital health education interventions for postregistration and preregistration health professionals. The objective of this systematic review was to evaluate the effectiveness of offline digital education compared with various controls in improving learners’ knowledge, skills, attitudes, satisfaction, and patient-centered outcomes.

Methods

At the time of conducting and reporting the review, we used and adhered to the systematic review methods as recommended by the Cochrane Collaboration [18]. For a detailed description of the methodology, please refer to the study by Car et al [7].

Search Strategy and Data Sources

We searched the following databases (from January 1990 to August 2017): MEDLINE (via Ovid), Excerpta Medica dataBASE (via Elsevier), Web of Science, Educational Resource Information Center (via Ovid), Cochrane Central Register of Controlled Trials, The Cochrane Library, PsycINFO (via Ovid), and the Cumulative Index to Nursing and Allied Health Literature (via EBSCO). The search strategy for MEDLINE is presented in Multimedia Appendix 1. We searched for papers in English but considered eligible studies in any language. We also searched 2 trial registries (EU Clinical Trials Register and ClinicalTrials.gov), screened reference lists of all included studies and pertinent systematic reviews, and contacted the relevant investigators for further information.

Eligibility Criteria

Only randomized controlled trials (RCTs) and cluster RCTs (cRCTs) were eligible for inclusion in this review. Eligible trials enrolled postregistration health professionals other than medical doctors (who were covered in a separate review [19]); used either stand-alone or blended offline digital education with any type of control (active or inactive); and measured knowledge, skills, attitudes, satisfaction, and patient-centered outcomes (as primary outcomes) as well as adverse effects or costs (as secondary outcomes).

We excluded crossover trials, stepped wedge design, interrupted time series, controlled before and after studies, and studies of doctors (including medical diagnostics and treatment technologies) or medical students. Participants were not excluded on the basis of sociodemographic characteristics such as age, gender, ethnicity, or any other related characteristics.

Data Selection, Extraction, and Management

The search results from the different electronic databases were combined in a single EndNote (X8.2) library, and duplicate records of the same reports were removed. In total, 2 reviewers independently screened titles and abstracts to identify studies that potentially met the inclusion criteria. The full-text versions of these articles were retrieved and read in full. Finally, 2 review authors independently assessed articles against the eligibility criteria, and 2 reviewers independently extracted the data for each of the included studies using a structured data extraction form and the Covidence Web-based software (Veritas Health Innovation, Melbourne, Australia). We extracted all relevant data on the characteristics of participants, intervention, comparator group, and outcome measures. For continuous data, we reported means and SDs; for dichotomous data, we reported odds ratios (ORs) and their 95% CIs. For studies with multiple arms, we compared the relevant intervention arm with the least active control arm to avoid double counting of data. Any disagreements were resolved through discussion between the 2 authors, and if no consensus was reached, a third author acted as an arbiter.
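To make the dichotomous summary measure concrete, the following minimal Python sketch computes an odds ratio and a Wald-type 95% CI from a 2x2 table. The function name `odds_ratio_ci`, the 0.5 continuity correction for zero cells, and the event counts in the usage line are illustrative assumptions; they are not taken from the review or from any included trial.

```python
import math


def odds_ratio_ci(events_a, total_a, events_b, total_b, z=1.96):
    """Odds ratio (group A vs group B) with a Wald-type confidence interval.

    Hypothetical helper for illustration only. A 0.5 continuity correction
    is applied to every cell when any cell is zero (an assumed convention).
    """
    a, b = events_a, total_a - events_a   # group A: events, non-events
    c, d = events_b, total_b - events_b   # group B: events, non-events
    if 0 in (a, b, c, d):
        a, b, c, d = (x + 0.5 for x in (a, b, c, d))
    odds_ratio = (a * d) / (b * c)
    # Standard error of the log odds ratio
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


if __name__ == "__main__":
    # Made-up counts: 20/40 events in one arm vs 10/40 in the other
    print(odds_ratio_ci(20, 40, 10, 40))
```

An interval that includes 1 indicates that the data are compatible with no difference between groups.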

Assessment of Risk of Bias

In total, 2 reviewers independently assessed the risk of bias of the included studies using the Cochrane Collaboration’s Risk of Bias tool [18]. Studies were assessed for risk of bias in the following domains: random sequence generation; allocation concealment; blinding of participants or personnel; blinding of outcome assessment; completeness of outcome data (attrition bias); selective outcome reporting (reporting bias); validity and reliability of outcome measures; baseline comparability; and consistency in intervention delivery. For cRCTs, we also assessed and reported the risk of bias associated with an additional domain: selective recruitment of cluster participants. Judgments concerning the risk of bias for each study fell under 3 categories: high, low, or unclear risk of bias.

Data Synthesis

Data were synthesized using Review Manager version 5.3. Where studies were homogeneous enough (in terms of their populations, interventions, comparator groups, outcomes, and study designs) to allow meaningful conclusions, we pooled them in a meta-analysis using a random-effects model and presented results as standardized mean differences (SMDs). We assessed heterogeneity through visual inspection of the overlap of confidence intervals in forest plots and by calculating the chi-square test and the I² inconsistency statistic [18].
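The pooling step described above can be sketched in a few lines of Python. This is a simplified illustration, not the Review Manager implementation: it assumes the SMD is computed as Hedges' g with a common large-sample variance approximation, and that between-study variance is estimated with the DerSimonian-Laird method. The function names and any input values are hypothetical.

```python
import math


def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Return (g, variance) for one two-arm study (assumed formulas)."""
    df = n1 + n2 - 2
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    d = (m1 - m2) / sd_pooled
    j = 1 - 3 / (4 * df - 1)              # small-sample correction factor
    var_d = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    return j * d, j ** 2 * var_d


def pool_random_effects(effects):
    """DerSimonian-Laird pooling of a list of (estimate, variance) pairs."""
    k = len(effects)
    w = [1 / v for _, v in effects]       # inverse-variance (fixed) weights
    sw = sum(w)
    fixed = sum(wi * y for wi, (y, _) in zip(w, effects)) / sw
    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (y - fixed) ** 2 for wi, (y, _) in zip(w, effects))
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    w_re = [1 / (v + tau2) for _, v in effects]
    pooled = sum(wi * y for wi, (y, _) in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    i2 = max(0.0, (q - (k - 1)) / q) * 100 if q > 0 else 0.0
    return {
        "smd": pooled,
        "ci": (pooled - 1.96 * se, pooled + 1.96 * se),
        "tau2": tau2,
        "q": q,
        "i2": i2,
    }
```

Each study's `hedges_g` output is passed to `pool_random_effects` as an `(estimate, variance)` pair; the returned `tau2`, `q`, and `i2` correspond to the Tau², χ², and I² values shown alongside forest plots.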

Summary of Findings Tables

We prepared the Summary of Findings (SoF) tables to present the results for each of the primary outcomes. We converted results into absolute effects when possible and provided a source and rationale for each assumed risk cited in the table(s) when presented. A total of 2 authors (PP and MS) independently rated the overall quality of evidence as implemented and described in the GRADEprofiler (GRADEproGDT Web-based version) and Chapter 11 of the Cochrane Handbook for Systematic Reviews of Interventions [20]. We considered the following criteria to assess the quality of evidence: limitations of studies (risk of bias), inconsistency of results, indirectness of the evidence, imprecision, and publication bias. We downgraded the quality where appropriate. This was done for all primary outcomes reported in the review.
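The downgrading logic can be illustrated with a short Python sketch. It assumes the usual GRADE convention that evidence from randomized trials starts at high certainty and is lowered by 1 or 2 levels per domain, with a floor of very low. The names `grade_certainty`, `LEVELS`, and `DOMAINS` are hypothetical, and the example ratings are not the judgments made in this review.

```python
LEVELS = ["very low", "low", "moderate", "high"]
DOMAINS = (
    "risk_of_bias",
    "inconsistency",
    "indirectness",
    "imprecision",
    "publication_bias",
)


def grade_certainty(downgrades, start="high"):
    """Return the certainty level after applying per-domain downgrades.

    `downgrades` maps a domain name to 0, 1, or 2 levels (illustrative).
    """
    unknown = set(downgrades) - set(DOMAINS)
    if unknown:
        raise ValueError(f"unknown domains: {sorted(unknown)}")
    if any(v not in (0, 1, 2) for v in downgrades.values()):
        raise ValueError("each domain is downgraded by 0, 1, or 2 levels")
    index = LEVELS.index(start) - sum(downgrades.values())
    return LEVELS[max(index, 0)]          # cannot go below "very low"


if __name__ == "__main__":
    # Made-up example: one level each for risk of bias and inconsistency
    print(grade_certainty({"risk_of_bias": 1, "inconsistency": 1}))
```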

Results

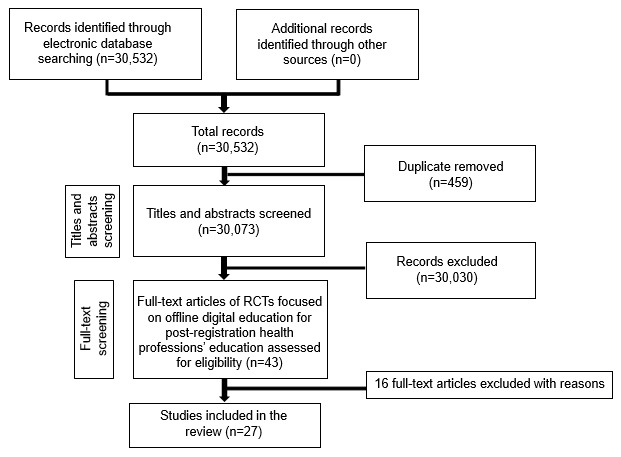

Our searches yielded a total of 30,532 citations, of which 27 studies with 4618 participants were included (Figure 1). For characteristics of excluded studies, please refer to Multimedia Appendix 2.

Figure 1.

Study flow diagram. RCT: randomized controlled trial.

Included Studies

Details of each trial are presented in Table 1 and Multimedia Appendix 3; a summary is given below. The included trials were published between 1990 and 2016, and originated from Brazil 3.7% (n=1), Hong Kong 3.7% (n=1), Iran 7.4% (n=2), Korea 3.7% (n=1), the Netherlands 11.1% (n=3), Norway 3.7% (n=1), Taiwan 11.1% (n=3), Turkey 3.7% (n=1), the United Kingdom 7.4% (n=2), and the United States 44.4% (n=12). A total of 5 trials employed a cluster design [21-25], whereas the remaining studies used a parallel group design. The majority of studies (51.8%) were conducted in nurses [23,24,26-37], followed by pharmacists (14.8%) [38-41], mental health therapists (11.1%) [21,25,42], dentists (7.4%) [43,44], midwives [22], physical therapists [45], patient care personnel [46], and substance abuse counselors [47]. The evaluated interventions included blended learning [35]; CD-ROM and emails [43]; computer-assisted instruction (CAI), computer-based training, or computer-mediated training [22,23,26,28,29,34,36,38,41,42,44-46]; CD-ROM [24,30-33,37,47]; PowerPoint presentation [39,40,46]; and software [21,25,27]. The duration of the intervention ranged from 50 min [28,38] to 3 months [21,25,31,46]. The intensity ranged from 15 min [44] to 2.4 h [37]. Comparison groups included no intervention [22,26,32,33,37,43-45,47], blended learning [21,25], and traditional learning [23,24,27-31,34-36,38-42,46]. Primary outcomes included knowledge in 20 studies [21-24,26-48], skills in 9 studies [22,24,31,35,37,38,41-43], attitudes in 7 studies [26,30,35,40,41,44,45], satisfaction in 9 studies [23,25,28,33,35,36,38,40,42], and patient-centered outcomes in 2 studies [22,25].

Table 1.

Characteristics of included studies

| Author (year), reference, country | Population/health profession (N) | Field of study/condition/health problem | Intervention type | Control | Outcomes (measurement instrument) | Results (continuous or dichotomous) |
|---|---|---|---|---|---|---|
| Akar (2014) [46], Turkey | Patient care personnel (96) | Testicular cancer | PowerPoint presentation | T<sup>a</sup> | Knowledge (MCQ 26-items) | Mean (SD) 12.0 (1.9) vs 10.4 (3.7); P=.005 |
| Albert (2006) [43], United States | Dentists (184) | Tobacco addiction | CD-ROM and email | NL<sup>b</sup> | 1. Skills | 1. P<.01 |
| | | | | | 2. Knowledge | 2. P<.05 |
| Bayne (1997) [26], United States | Nurses (67) | Drug overdose | CAI<sup>c</sup> | NL | 1. Knowledge (test 20-items) | 1. Mean (SD) 82.1 (11.88) vs 81.1 (13.0) |
| | | | | | 2. Satisfaction | 2. —<sup>d</sup> |
| | | | | | 3. Attitude (Q<sup>e</sup>) | 3. — |
| Beidas (2012) [42], United States | Mental health therapist (115) | Anxious children | CBL<sup>f</sup> | T | 1. Skills (checklist) | 1. Mean (SD) 17.4 (1.81) vs 17.4 (1.83) |
| | | | | | 2. Knowledge (test 20-items) | 2. Mean (SD) 3.6 (1.47) vs 4.1 (1.45) |
| | | | | | 3. Satisfaction (Q) | 3. Mean (SD) 50.8 (5.9) vs 53.7 (5.4); (P<.001) |
| Boh (1990) [38], United States | Pharmacists (105) | Osteoarthritis | Computer-based simulation | T | 1. Knowledge (MCQ<sup>g</sup> 25-items) | 1. Mean (SD) 76.0 (8.59) vs 65.73 (9.65); (P<.005) |
| | | | | | 2. Skills (simulation) | 2. Mean (SD) 32.9 (8.15) vs 26.5 (10.90) |
| | | | | | 3. Satisfaction (Q) | 3. — |
| Bredesen (2016) [27], Norway | Nurses (44) | Pressure ulcer prevention | Software | T | 1. Knowledge (a. Braden scale and b. pressure ulcer classification) | a. NS<sup>h</sup> |
| | | | | | | b. Fleiss kappa=0.20 (0.18-0.22) vs 0.27 (0.25-0.29) |
| Chiu (2009) [28], Taiwan | Nurses (84) | Stroke | CAI | T | 1. Knowledge (Q 15-items) | 1. Mean (SD) 34.7 (2.4) vs 33.7 (5.0); (P=.21) |
| | | | | | 2. Satisfaction (Q 16-items) | 2. Mean (SD) 61.5 (8.40) vs 60.3 (7.80); (P=.51) |
| Cox (2009) [29], United States | Nurses (60) | Pressure ulcers | CBL | T | Knowledge (single choice questionnaire) | Mean (SD) 90.3 (4.9) vs 92.9 (3.3); (P=.717) |
| de Beurs (2015) [21], the Netherlands | Psychiatric departments (567<sup>i</sup>) | Suicide prevention | Software (train-the-trainer)<sup>k</sup> | BL<sup>j</sup> | Knowledge (Q 15-items) | Mean (SD) 26.6 (3.1) vs 24.1 (2.3) |
| de Beurs (2016) [25], the Netherlands | Psychiatric departments (881<sup>i</sup>) | Suicide prevention | Software (train-the-trainer)<sup>k</sup> | BL | 1. Patient-centered outcome (Beck scale 19-items) | 1. Mean (SD) 4.2 (13.4) vs 4.9 (10.5) |
| | | | | | 2. Satisfaction (4-point scale) | 2. Mean (SD) 6.8 (4.4) vs 6.8 (4.3) |
| Donyai (2015) [39], United Kingdom | Pharmacy professional (48) | Continuing professional development case scenarios | PowerPoint presentation | T | Knowledge (score) | Mean difference 9.9; 95% CI 0.4 to 19.3; (P=.04) |
| Ebadi (2015) [30], Iran | Nurses (90) | Biological incidents | CD-ROM | T | 1. Knowledge (MCQ 34-items) | 1. Mean (SD) 24.3 (5.1) vs 13.9 (3.2); (P<.001) |
| | | | | | 2. Attitude (visual analogue scale 0-100) | 2. Mean (SD) 81.59 (15.21) vs 54.4 (20.24); (P<.001) |
| Gasko (2012) [31], United States | Nurse anesthetists (29) | Regional anesthesia | CD-ROM | T | Skills (Q 16 criteria) | Mean (SD) 33 (7) vs 35 (10); (P<.05) |
| Hsieh (2006) [44], United States | Dentists (174) | Domestic violence | CBL | NL | 1. Knowledge (Q 24-items) | 1. (P<.01) |
| | | | | | 2. Attitude | 2. (P<.01) |
| Ismail (2013) [22], United Kingdom | Midwives (25) | Perineal trauma | CBL | NL | Patient-centered outcomes | Delta=0.7%; 95% CI −10.1 to 11.4; (P=.89) |
| Javadi (2015) [40], Iran | Pharmacists (71) | Contraception and sexual dysfunctions | PowerPoint presentation | T | 1. Knowledge (MCQ 23-items) | 1. Mean (SD) 68.46 (16.60) vs 50.75 (17.58); (P<.001) |
| | | | | | 2. Satisfaction (Q 5-items) | 2. — |
| | | | | | 3. Attitude (scale 14-items) | 3. Median 28 vs 27; (P=.18) |
| Lawson (1991) [41], United States | Pharmacists (50) | Financial management | CBL | T | 1. Skills (Q 25-items) | 1. Mean (SD) 15.63 (3.37) vs 16.04 (3.35) |
| | | | | | 2. Attitude | 2. (P=.082) |
| Liu (2014) [32], Taiwan | Psychiatric nurses (216) | Case management | CD-ROM | NL | Knowledge (MCQ 20-items) | Delta=0.37; 95% CI –3.3 to 4.0; (P=.84) |
| Liu (2014) [33], Taiwan | Nursing personnel (40) | Nursing care management | CD-ROM | NL | Knowledge (Q) | Mean (SD) 91 (8.6) vs 58 (20.4) |
| Moran (1991) [45], United States | Physical therapists (41) | Wound care | CAI | NL | 1. Knowledge (test 13-items) | 1. Mean (SD) 10.85 (1.56) vs 9.05 (1.77); (P<.004) |
| | | | | | 2. Attitude (survey) | 2. — |
| Padalino (2007) [34], Brazil | Nurses (49) | Quality training program | CBL | T | Knowledge (Q) | Mean (SD) 19.4 (1.7) vs 17.8 (3.2); (P=.072) |
| Pun (2016) [35], Hong Kong | Nurses (40) | Hemodialysis management | BL | T | 1. Knowledge (MCQ and fill-in-the-blank questions) | 1. Mean (SD) 24 (1.03) vs 17.45 (2.74); (P<.001) |
| | | | | | 2. Skills (checklist 39-items) | 2. Mean (SD) 149.3 (19.42) vs 113.65 (21.23); (P<.001) |
| | | | | | 3. Attitude (3-item checklist 7-point Likert scale) | 3. Mean (SD) 1.83 (0.03)<sup>l</sup> |
| | | | | | 4. Satisfaction (7-point Likert scale) | 4. Range 2.10 to 2.75 (0.55 to 0.94)<sup>l</sup> |
| Roh (2013) [36], Korea | Nurses (38) | Advanced life support | CBL | T | Satisfaction (Q 20-items) | Mean (SD) 7.64 (1.04) vs 7.43 (1.34); (P=.588) |
| Rosen (2002) [23], United States | Nurses (173) | Mental health and aging | CBL | T | 1. Knowledge (test) | 1. Mean (SD) 90.0 (9.1) vs 84.0 (11.2); (P=.004) |
| | | | | | 2. Satisfaction (Q) | 2. (P<.0001) |
| Schermer (2011) [24], the Netherlands | Nurses (1135) | Spirometry | CD-ROM | T | Skills (test) | OR<sup>m</sup> 1.2, 95% CI 0.6 to 2.5; (P=.663) |
| Schneider (2006) [37], United States | Nurses (30) | Medication administration | CD-ROM | NL | Skills (observation) | OR 0.38, 95% CI 0.19 to 0.74; (P=.004) |
| Weingardt (2006) [47], United States | Substance abuse counselor (166) | Substance abuse | CD-ROM | NL | Knowledge (MCQ) | (P<.01) |

<sup>a</sup>T: traditional.

<sup>b</sup>NL: no learning.

<sup>c</sup>CAI: computer-assisted instruction.

<sup>d</sup>—: not reported.

<sup>e</sup>Q: questionnaire.

<sup>f</sup>CBL: computer-based learning.

<sup>g</sup>MCQ: multiple choice questionnaire.

<sup>h</sup>NS: not significant.

<sup>i</sup>Total number of patients or professions.

<sup>j</sup>BL: blended learning.

<sup>k</sup>Intervention also included blended learning (Web-based plus traditional learning).

<sup>l</sup>Data for the intervention group only.

<sup>m</sup>OR: odds ratio.

Risk of Bias in Included Studies

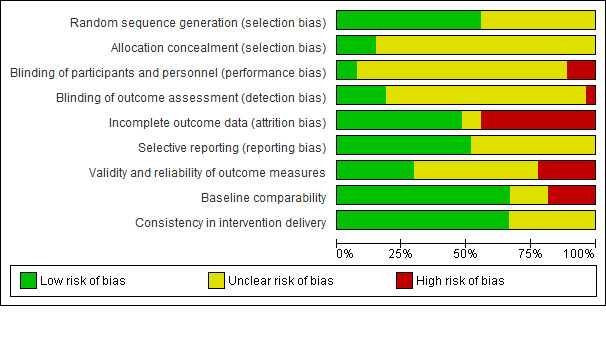

We present our judgments about each risk of bias item for all included studies as (summary) percentages in Figure 2.

Figure 2.

Risk of bias graph: review authors' judgments about each risk of bias item presented as percentages across all included studies.

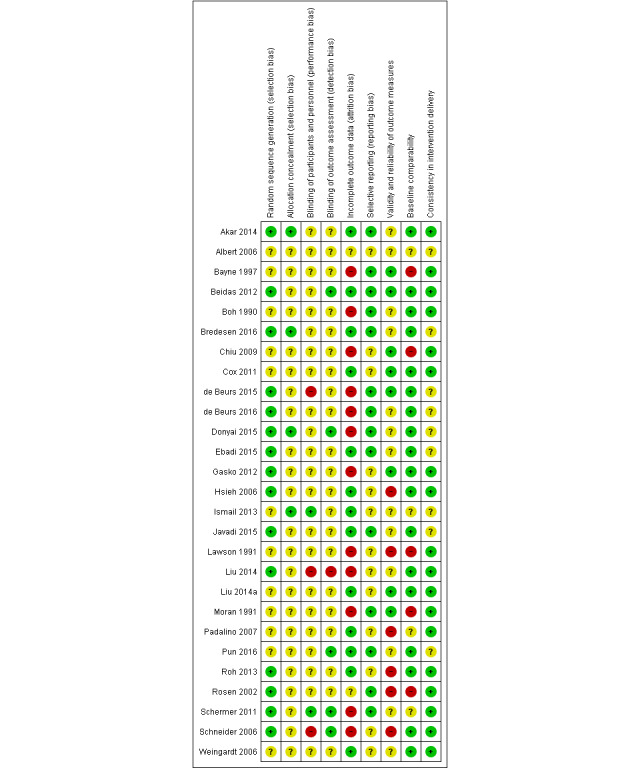

Figure 3 shows separate judgments about each risk of bias item for each included study.

Figure 3.

Risk of bias summary: review authors' judgments about each risk of bias item for each included study.

The risk of bias was predominantly low for random sequence generation (55.5% of the studies), selective reporting, baseline comparability, and consistency in intervention delivery. The risk of bias was predominantly unclear for allocation concealment and for blinding of participants, personnel, and outcome assessors. A total of 12 studies (44.4%) had a high risk of attrition bias; 6 studies (22.2%) had a high risk of bias for validity and reliability of outcome measures; and 5 studies (18.5%) had a high risk of bias for baseline comparability. In total, 3 studies (11.1%) had a high risk of performance bias, and 1 study (3.7%) had a high risk of detection bias. For cRCTs, all 5 studies had a low risk of bias for selective recruitment of cluster participants.

Effects of Interventions

Offline Digital Education (CD-ROM) Versus No Intervention or Traditional Learning

Primary Outcomes

Knowledge

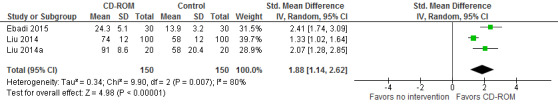

A meta-analysis of 3 studies [30,32,33] considered to be homogeneous enough found that compared with no intervention, offline digital education (CD-ROM) may increase knowledge in nurses (SMD 1.88; 95% CI 1.14 to 2.62; low certainty evidence; Figure 4). There was a substantial level of heterogeneity among the pooled studies (Tau²=0.34; χ²=9.90; P=.007; I²=80%).

Figure 4.

Forest plot of comparison: Offline digital education (CD-ROM) versus no intervention, outcome: Knowledge.

A total of 2 studies did not report sufficient data that could be included in the meta-analysis. Weingardt [47] reported that compared with no intervention, CD-ROM probably improves substance abuse counselors’ knowledge (P<.01; moderate certainty evidence). Albert [43] reported that compared with no intervention, CD-ROM and email may slightly improve dentists’ knowledge (P<.05; low certainty evidence).

Skills

Schneider [37] reported an increase in nurses’ skills (decreased core 1 error rates) between baseline and postintervention periods in the intervention group (OR 0.38, 95% CI 0.19 to 0.74; P=.004; low certainty evidence). Albert [43] reported that compared with no intervention, the offline digital education (CD-ROM and email) intervention may slightly improve dentists’ skills (P<.01; low certainty evidence). Gasko [31] reported that the CD-ROM intervention may have little or no effect on nurse anesthetists’ skills compared with traditional learning (mean 33 [SD 7] vs mean 35 [SD 10]; low certainty evidence). Schermer [24] reported that compared with traditional training (joint baseline workshop), CD-ROM may slightly improve the rate of adequate tests (32.9% vs 29.8%; OR 1.2, 95% CI 0.6 to 2.5; P=.663; low certainty evidence).

Satisfaction

Liu [33] reported that 87% of participants in the CD-ROM groups agreed or strongly agreed that the program was flexible (mean 4.28; low certainty evidence). There was no comparison group for this outcome. For a summary of the effects of these comparisons on knowledge, skills, and satisfaction, see SoF in Multimedia Appendix 4.

Offline Digital Education (Computer-Assisted Training) Versus No Intervention or Traditional Learning

Primary Outcomes

Knowledge

A meta-analysis of 2 studies [26,45] considered to be homogeneous enough found that compared with no intervention, the effects of offline digital education (computer-assisted training [CAT]) on nurses’ and physical therapists’ knowledge were uncertain (SMD 0.55; 95% CI –0.39 to 1.50; very low certainty evidence; Figure 5).

Figure 5.

Forest plot of comparison: Offline digital education (computer-assisted training) versus no intervention, outcome: Knowledge.

A substantial level of heterogeneity among the pooled studies was detected (Tau²=0.33; χ²=3.40; P=.07; I²=71%). One study [44] did not present data that could be included in the meta-analysis for this outcome. Hsieh [44] reported that compared with no intervention, offline digital education may improve dentists’ knowledge (P<.01; low certainty evidence).

Beidas [42] reported that compared with routine training, offline digital education (computer training) may have little or no effect on community mental health therapists’ knowledge postintervention (mean 17.45 [SD 1.83] vs mean 17.42 [SD 1.81]; P=.26; low certainty evidence). Boh [38] found that compared with traditional learning, an intervention (audio cassette and microcomputer simulation) may improve pharmacists’ knowledge postintervention (mean 65.7 [SD 9.6] vs mean 76 [SD 8.5]; P<.005; low certainty evidence). Chiu [28] reported that compared with traditional training, offline digital education (CAI) may slightly improve nurses’ knowledge at 4 weeks (mean 33.7 [SD 5.0] vs mean 34.7 [SD 2.4]; P=.21; low certainty evidence). Cox [29] found that compared with traditional training, offline digital education (computer-based learning) may have little or no effect on nurses’ knowledge postintervention (mean 92.9 [SD 3.3] vs mean 90.3 [SD 4.9]; P=.717; low certainty evidence). Padalino [34] reported that compared with traditional classroom training, offline digital education (computer-mediated training) may slightly improve nurses’ knowledge postintervention (mean 17.8 [SD 3.2] vs mean 19.4 [SD 1.7]; P=.072; low certainty evidence). Rosen [23] found that compared with usual education, offline digital education (computer-based training) may improve nurses’ knowledge at 6 months (mean 84 [SD 11.2] vs mean 90 [SD 9.1]; P=.004; low certainty evidence). Taken together, these results suggest that computer-assisted interventions may slightly improve various health professionals’ knowledge, but the quality of evidence was low and results were mixed.

Skills

A meta-analysis of 4 studies [35,38,41,42] found that compared with traditional training, the effects of CAT on skills in community (mental health) therapists, nurses, and pharmacists were uncertain (SMD 0.45; 95% CI –0.35 to 1.25; very low certainty evidence; Figure 6). Heterogeneity among the pooled studies was considerable (Tau²=0.58; χ²=25.06; P<.0001; I²=88%).

Figure 6.

Forest plot of comparison: Offline digital education (computer-assisted training) versus traditional learning, outcome: Skills.

Attitudes

In Moran [45], 93% of respondents agreed or strongly agreed with the statement that computer-assisted instruction was helpful. There was no comparison group for this outcome (low certainty evidence). Hsieh [44] reported that compared with no intervention, a computer-based tutorial may improve dentists’ attitudes (P<.01; low certainty evidence). Lawson [41] found that compared with traditional education, offline digital education may have little or no effect on participants’ attitudes concerning expected helpfulness (P=.082; low certainty evidence).

Satisfaction

A meta-analysis of 4 studies [25,28,36,42] considered to be homogeneous enough found that compared with traditional training, offline digital education may have little or no effect on satisfaction scores in nurses and mental health therapists (SMD –0.07; 95% CI –0.42 to 0.28; low certainty evidence; Figure 7). A moderate level of heterogeneity among the pooled studies was detected (Tau²=0.05; χ²=.10; P=.16; I²=41%).

Figure 7.

Forest plot of comparison: Offline digital education (computer-assisted training) versus traditional learning, outcome: Satisfaction.

A total of 2 studies [23,38] were not included in the meta-analysis for this outcome as they did not report a sufficient amount of data for pooling. Boh [38] found that compared with traditional learning, offline digital education (audio cassette and microcomputer simulation) may have little or no effect on pharmacists’ satisfaction postintervention (low certainty evidence). Rosen [23] found that compared with usual education, offline digital education (computer-based training) may improve nurses’ satisfaction at 6 months (P<.0001; low certainty evidence).

Patient-Centered Outcomes

Ismail [22] reported that compared with no intervention, offline digital education may have little or no effect on the average percentage of women reporting perineal pain on sitting and walking at 10 to 12 days (mean difference [MD]=0.7%; 95% CI −10.1 to 11.4; P=.89; low certainty evidence).

Secondary Outcomes

Only 1 study [26] mentioned the costs of offline digital education. Bayne and Bindler [26] reported the costs as US $54 per participant in the computer-assisted group compared with US $23 per participant in the no intervention control group. For a summary of the effects of these comparisons on all outcomes, see SoF in Multimedia Appendix 4.

Offline Digital Education (Software, PowerPoint) Versus Blended Learning or Traditional Learning

Primary Outcomes

Knowledge

A meta-analysis of 2 studies [40,46] considered to be homogeneous enough found that compared with traditional learning, a PowerPoint presentation may improve the knowledge of patient care personnel and pharmacists (SMD 0.76; 95% CI 0.29 to 1.23; low certainty evidence; Figure 8). A considerable level of heterogeneity among the pooled studies was detected (Tau²=0.06; χ²=2.19; P=.14; I²=54%).

Figure 8.

Forest plot of comparison: Offline digital education (PowerPoint) versus traditional learning, outcome: Knowledge.

One study did not report sufficient data to be included in the meta-analysis. Donyai [39] reported that compared with traditional learning, a PowerPoint presentation may improve pharmacy professionals’ knowledge (MD=9.9; 95% CI 0.4 to 19.3; P=.04).

de Beurs [21] reported that compared with blended learning, offline digital education (software) may improve mental health professionals’ knowledge (mean [SD] 26.6 (3.1) vs 24.1 (2.3); P<.001; low certainty evidence).

Satisfaction

de Beurs [25] reported that compared with blended learning, offline digital education (software) may have little or no effect on patients’ satisfaction at 3 months (mean [SD] 6.8 (4.4) vs 6.8 (4.3); low certainty evidence).

Patient-Centered Outcomes

de Beurs [25] reported that compared with blended learning, offline digital education (software) may have little or no effect on patients’ suicidal ideation at 3 months (mean [SD] 4.2 (13.4) vs 4.9 (10.5); low certainty evidence). For a summary of the effects of these comparisons on all outcomes, see SoF in Multimedia Appendix 4.

There were not enough data in any of the pooled analyses to allow sensitivity analyses to be conducted. Similarly, given the small number of trials contributing data to outcomes within different comparisons in this review, a formal assessment of potential publication bias was not feasible.

Discussion

We summarized and critically evaluated the evidence for the effectiveness of offline digital education in improving knowledge, skills, attitudes, satisfaction, and patient-centered outcomes among postgraduate health professionals other than medical doctors. A total of 27 studies with 4618 participants met the eligibility criteria. The studies were highly diverse and spanned different professions. We found evidence to support the effectiveness of certain types of offline digital education, such as CD-ROM and PowerPoint, compared with no intervention or traditional learning in improving learners’ knowledge. For other comparators, and for learners’ skills, attitudes, satisfaction, and patient-related outcomes, the evidence was less compelling.

Overall Completeness and Applicability of Evidence

We identified 4 studies from upper middle-income countries (Brazil, Iran, and Turkey), and the remaining studies were conducted in high-income countries (Hong Kong, Korea, the Netherlands, Norway, the United Kingdom, and the United States). Only 4 studies (15%) were conducted during the 1990s, and the remaining studies were from 2000 onward. In 15 studies (55.6%), information about the frequency of the interventions was missing, which often made it difficult to analyze and interpret the findings in depth. Similarly, economic evaluations of the interventions were missing in 26 (96%) studies.

Quality of the Evidence

Overall, the quality of evidence was low or very low. We assessed the quality of evidence using the Grading of Recommendations, Assessment, Development, and Evaluations system and presented the findings in SoF Multimedia Appendix 4 for all comparisons. The reasons for downgrading the evidence most commonly pertained to the high risk of bias. For instance, only 13 (48.1%) of the studies reported complete outcome data. Reducing the dropout rate might reduce the risk of attrition bias and further improve the quality of the studies. Only 8 studies (29.6%) had a low risk of bias for validity and reliability of outcome measures. This issue of nonvalidated measurement tools has repeatedly been raised, and addressing it is paramount to advancing the field [49]. Only 2 studies (7.4%) adequately described blinding of participants and personnel. As with many educational interventions, blinding of participants or personnel might prove challenging. However, we highlight the need for more adequate descriptions of masking to further reduce the risk of performance bias and allow clearer judgments to be made. We also downgraded the overall quality of evidence for inconsistency (where there was a high level of heterogeneity, ie, I2>50%). Overall, there was a moderate-to-considerable level of heterogeneity in the meta-analyses (I2 range 41% to 88%), and 4 of the 5 meta-analyses had I2>50%. Further reasons for downgrading included indirectness (we downgraded once, for 1 outcome only, where there were differences in the population used). Participants were not homogeneous and included nurses, pharmacists, mental health therapists, dentists, midwives and obstetricians, physical therapists, patient care personnel, and substance abuse counselors. Other sources of indirectness stemmed from heterogeneous interventions (their duration, frequency, and intensity), comparison groups, and outcome assessment tools, which ranged from multiple choice or single choice questionnaires, tests, observations, checklists, scales, surveys, and visual analogue scales to simulations. Finally, we also downgraded for imprecision where the sample size was small. The included studies also failed to provide details of sample size and power calculations and may therefore have been underpowered and unable to detect change in learning outcomes.
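The inconsistency criterion described above (I2>50%) can be illustrated with the I2 values reported in the abstract for the 5 meta-analyses. The sketch below simply tabulates those values and applies the threshold; the dictionary labels are shorthand chosen for this illustration, not the review's own names for the comparisons.

```python
# I2 values (percent) as reported in the abstract for the 5 meta-analyses.
i2_by_meta_analysis = {
    "CD-ROM vs no intervention (knowledge)": 80,
    "CAT vs no intervention (knowledge)": 71,
    "PowerPoint vs traditional learning (knowledge)": 54,
    "CAT vs traditional training (skills)": 88,
    "Offline digital education vs traditional training (satisfaction)": 41,
}

INCONSISTENCY_THRESHOLD = 50  # downgrade for inconsistency when I2 exceeds this value

# Meta-analyses that would be flagged for inconsistency under the stated rule.
flagged = [name for name, i2 in i2_by_meta_analysis.items()
           if i2 > INCONSISTENCY_THRESHOLD]

lowest = min(i2_by_meta_analysis.values())
highest = max(i2_by_meta_analysis.values())

print(f"{len(flagged)} of {len(i2_by_meta_analysis)} meta-analyses had I2>50%")
print(f"I2 range {lowest}% to {highest}%")
```

This reproduces the figures quoted in the text: 4 of the 5 meta-analyses exceed the threshold, and the I2 values range from 41% to 88%.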

Strengths and Limitations of the Review

This systematic review has several important strengths, including comprehensive searches without any language limitations; robust screening, data extraction, and risk of bias assessments; and a critical appraisal of the evidence. However, some limitations must be acknowledged when interpreting the results of this study. Notably, we considered subgroup analyses to be unfeasible because of the insufficient number of studies under the respective outcomes and professional groups. Nevertheless, we minimized potential biases in the review process and maintained its internal validity by strictly adhering to the guidelines outlined by Higgins et al [18].

Agreements and Disagreements With Other Studies or Reviews

A review by Al-Jewair [50] found some evidence to support the effectiveness of computer-assisted learning in improving the knowledge of undergraduate or postgraduate orthodontic students or orthodontic educators, but no definite conclusions were reached, and future research was recommended. Rosenberg [51] concluded that computer-aided learning is as effective as other methods of teaching and can be used as an adjunct to traditional education or as a means of self-instruction for dental students. Based on 4 RCTs with mixed results, Rosenberg [52] was unable to reach any conclusions on knowledge gains and recommended more high-quality trials evaluating the effectiveness of computer-aided learning in orthodontics. However, we are aware of newer technologies currently being evaluated for the same outcomes; MOOCs or mLearning can play a very important role in health professions education, such as improving clinical knowledge and promoting lifelong learning [53,54]. We are also aware of recent reviews that reached similar conclusions [55-62]. For example, digital education seems to be at least as effective as (and sometimes more effective than) traditional education in improving dermatology, diabetes management, or smoking cessation–related skills and knowledge [58,61,62]. Most of these reviews, however, stressed the inconclusiveness of overall findings, mainly because of the low certainty of the evidence.

Conclusions

Offline digital education may play a role in the education of health professionals, especially in LMICs, where access to Web-based digital education is lacking for a variety of reasons, including cost, and there is some evidence to support the effectiveness of these interventions in improving the knowledge of health professionals. However, because of the existing gaps in the evidence base, including limited evidence for other outcomes, a lack of subgroup analyses (for example, by CD-ROM or PowerPoint), and the low and very low quality of the evidence, the overall findings are inconclusive. More research is needed, especially research evaluating patient-centered outcomes, costs, and safety (adverse effects); involving those subgroups; and originating from LMICs. Such research should be adequately powered, be underpinned by learning theories, use valid and reliable outcome measures, and blind outcome assessors.

Acknowledgments

This review was conducted in collaboration with the World Health Organization, Health Workforce Department. We would like to thank Carl Gornitzki, GunBrit Knutssön, and Klas Moberg from the University Library, Karolinska Institutet, Sweden, for developing the search strategy. We gratefully acknowledge funding from Nanyang Technological University Singapore, Singapore, eLearning for health professionals education grant.

Abbreviations

- CAI: computer-assisted instruction
- CAT: computer-assisted training
- cRCT: cluster randomized controlled trial
- LMIC: low- and middle-income country
- MD: mean difference
- mLearning: mobile learning
- MOOC: massive open online course
- OR: odds ratio
- RCT: randomized controlled trial
- SMD: standardized mean difference
- SoF: Summary of Findings

Multimedia Appendix 1: MEDLINE (Ovid) search strategy.

Multimedia Appendix 2: Characteristics of excluded studies.

Multimedia Appendix 3: Results of the included studies.

Multimedia Appendix 4: Summary of findings tables.

Footnotes

Authors' Contributions: JC and PP conceived the idea for the review. PP and MMB wrote the review. BMK, MS, UD, MK, and AS extracted data and appraised for risk of bias, and all authors commented on the review and made revisions following the first draft.

Conflicts of Interest: None declared.

References

- 1. Anand S, Bärnighausen T. Human resources and health outcomes: cross-country econometric study. Lancet. 2004;364(9445):1603–9. doi: 10.1016/S0140-6736(04)17313-3.

- 2. Campbell J, Dussault G, Buchan J, Pozo-Martin F, Guerra Arias M, Leone C, Siyam A, Cometto G. World Health Organization. 2013. A universal truth: no health without a workforce. https://www.who.int/workforcealliance/knowledge/resources/GHWA-a_universal_truth_report.pdf?ua=1

- 3. Chen L. Striking the right balance: health workforce retention in remote and rural areas. Bull World Health Organ. 2010 May;88(5):323, A. doi: 10.2471/BLT.10.078477. http://europepmc.org/abstract/MED/20461215

- 4. Moszynski P. One billion people are affected by global shortage of healthcare workers. Br Med J. 2011 Feb 1;342:d696. doi: 10.1136/bmj.d696.

- 5. Frenk J, Chen L, Bhutta ZA, Cohen J, Crisp N, Evans T, Fineberg H, Garcia P, Ke Y, Kelley P, Kistnasamy B, Meleis A, Naylor D, Pablos-Mendez A, Reddy S, Scrimshaw S, Sepulveda J, Serwadda D, Zurayk H. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet. 2010 Dec 4;376(9756):1923–58. doi: 10.1016/S0140-6736(10)61854-5.

- 6. Nkomazana O, Mash R, Shaibu S, Phaladze N. Stakeholders' perceptions on shortage of healthcare workers in primary healthcare in Botswana: focus group discussions. PLoS One. 2015;10(8):e0135846. doi: 10.1371/journal.pone.0135846. http://dx.plos.org/10.1371/journal.pone.0135846

- 7. Car J, Carlstedt-Duke J, Tudor Car L, Posadzki P, Whiting P, Zary N, Atun R, Majeed A, Campbell J, Digital Health Education Collaboration. Digital education for health professions: methods for overarching evidence syntheses. J Med Internet Res. 2019 Dec 14;21(2):e12913. doi: 10.2196/12913. http://www.jmir.org/2019/2/e12913/

- 8. DeBate RD, Severson HH, Cragun DL, Gau JM, Merrell LK, Bleck JR, Christiansen S, Koerber A, Tomar SL, McCormack Brown KR, Tedesco LA, Hendricson W. Evaluation of a theory-driven e-learning intervention for future oral healthcare providers on secondary prevention of disordered eating behaviors. Health Educ Res. 2013 Jun;28(3):472–87. doi: 10.1093/her/cyt050. http://europepmc.org/abstract/MED/23564725

- 9. Kahiigi EK, Ekenberg L, Hansson H, Tusubira FF, Danielson M. Exploring the e-Learning state of art. EJEL. 2008;6(2):77–87.

- 10. Rasmussen K, Belisario JM, Wark PA, Molina JA, Loong SL, Cotic Z, Papachristou N, Riboli-Sasco E, Tudor Car L, Musulanov EM, Kunz H, Zhang Y, George PP, Heng BH, Wheeler EL, Al Shorbaji N, Svab I, Atun R, Majeed A, Car J. Offline eLearning for undergraduates in health professions: a systematic review of the impact on knowledge, skills, attitudes and satisfaction. J Glob Health. 2014 Jun;4(1):010405. doi: 10.7189/jogh.04.010405. http://europepmc.org/abstract/MED/24976964

- 11. George PP, Papachristou N, Belisario JM, Wang W, Wark PA, Cotic Z, Rasmussen K, Sluiter R, Riboli-Sasco E, Car L, Musulanov EM, Molina JA, Heng BH, Zhang Y, Wheeler EL, Al Shorbaji N, Majeed A, Car J. Online eLearning for undergraduates in health professions: a systematic review of the impact on knowledge, skills, attitudes and satisfaction. J Glob Health. 2014 Jun;4(1):010406. doi: 10.7189/jogh.04.010406. http://europepmc.org/abstract/MED/24976965

- 12. Greenhalgh T. Computer assisted learning in undergraduate medical education. Br Med J. 2001 Jan 6;322(7277):40–4. doi: 10.1136/bmj.322.7277.40. http://europepmc.org/abstract/MED/11141156

- 13. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. J Am Med Assoc. 2008 Sep 10;300(10):1181–96. doi: 10.1001/jama.300.10.1181.

- 14. Lam-Antoniades M, Ratnapalan S, Tait G. Electronic continuing education in the health professions: an update on evidence from RCTs. J Contin Educ Health Prof. 2009;29(1):44–51. doi: 10.1002/chp.20005.

- 15. Adams AM. Pedagogical underpinnings of computer-based learning. J Adv Nurs. 2004 Apr;46(1):5–12. doi: 10.1111/j.1365-2648.2003.02960.x.

- 16. Brandt MG, Davies ET. Visual-spatial ability, learning modality and surgical knot tying. Can J Surg. 2006 Dec;49(6):412–6. http://www.canjsurg.ca/vol49-issue6/49-6-412/

- 17. Mahan JD, Stein DS. Teaching adults-best practices that leverage the emerging understanding of the neurobiology of learning. Curr Probl Pediatr Adolesc Health Care. 2014 Jul;44(6):141–9. doi: 10.1016/j.cppeds.2014.01.003.

- 18. Higgins JP, Green S. Cochrane Handbook for Systematic Reviews of Interventions. 2018. http://www.cochrane-handbook.org/

- 19. Hervatis V, Kyaw B, Semwal M, Dunleavy G, Tudor Car L, Zary N, Car J. Cochrane Database System Review. 2016. Aug 10, [2019-04-17]. Offline and computer-based eLearning interventions for medical students' education. https://www.crd.york.ac.uk/prospero/display_record.php?RecordID=45679

- 20. Schünemann HJ, Oxman AD, Higgins JPT, Vist GE, Glasziou P, Guyatt GH. Cochrane Handbook for Systematic Reviews of Interventions. UK: 2011. [2019-04-17]. Chapter 11: Presenting results and 'Summary of findings' tables. https://handbook-5-1.cochrane.org/chapter_11/11_presenting_results_and_summary_of_findings_tables.htm

- 21. Cox J, Roche S, van Wynen E. The effects of various instructional methods on retention of knowledge about pressure ulcers among critical care and medical-surgical nurses. J Contin Educ Nurs. 2011 Feb;42(2):71–8. doi: 10.3928/00220124-20100802-03.

- 22. Padalino Y, Peres HH. E-learning: a comparative study for knowledge apprehension among nurses. Rev Lat Am Enfermagem. 2007;15(3):397–403. doi: 10.1590/s0104-11692007000300006. http://www.scielo.br/scielo.php?script=sci_arttext&pid=S0104-11692007000300006&lng=en&nrm=iso&tlng=en

- 23. Albert D, Gordon J, Andrews J, Severson H, Ward A, Sadowsky D. Use of electronic continuing education in dental offices for tobacco cessation (PA2-7). Society for Research on Nicotine and Tobacco 12th Annual Meeting; February 15-18, 2006; Orlando, Florida. 2006.

- 24. Hsieh NK, Herzig K, Gansky SA, Danley D, Gerbert B. Changing dentists' knowledge, attitudes and behavior regarding domestic violence through an interactive multimedia tutorial. J Am Dent Assoc. 2006 May;137(5):596–603. doi: 10.14219/jada.archive.2006.0254.

- 25. Weingardt KR, Villafranca SW, Levin C. Technology-based training in cognitive behavioral therapy for substance abuse counselors. Subst Abus. 2006 Sep;27(3):19–25. doi: 10.1300/J465v27n03_04.

- 26. Ismail KM, Kettle C, Macdonald SE, Tohill S, Thomas PW, Bick D. Perineal Assessment and Repair Longitudinal Study (PEARLS): a matched-pair cluster randomized trial. BMC Med. 2013 Sep 23;11:209. doi: 10.1186/1741-7015-11-209. https://bmcmedicine.biomedcentral.com/articles/10.1186/1741-7015-11-209

- 27. Bayne T, Bindler R. Effectiveness of medication calculation enhancement methods with nurses. J Nurs Staff Dev. 1997;13(6):293–301.

- 28. Bredesen IM, Bjøro K, Gunningberg L, Hofoss D. Effect of e-learning program on risk assessment and pressure ulcer classification - a randomized study. Nurse Educ Today. 2016 May;40:191–7. doi: 10.1016/j.nedt.2016.03.008.

- 29. Chiu S, Cheng K, Sun T, Chang K, Tan T, Lin T, Huang Y, Chang J, Yeh S. The effectiveness of interactive computer assisted instruction compared to videotaped instruction for teaching nurses to assess neurological function of stroke patients: a randomized controlled trial. Int J Nurs Stud. 2009 Dec;46(12):1548–56. doi: 10.1016/j.ijnurstu.2009.05.008.

- 30. Gasko J, Johnson A, Sherner J, Craig J, Gegel B, Burgert J, Sama S, Franzen T. Effects of using simulation versus CD-ROM in the performance of ultrasound-guided regional anesthesia. AANA J. 2012 Aug;80(4 Suppl):S56–9.

- 31. Liu W, Chu K, Chen S. The development and preliminary effectiveness of a nursing case management e-learning program. Comput Inform Nurs. 2014 Jul;32(7):343–52. doi: 10.1097/CIN.0000000000000050.

- 32. Schneider PJ, Pedersen CA, Montanya KR, Curran CR, Harpe SE, Bohenek W, Perratto B, Swaim TJ, Wellman KE. Improving the safety of medication administration using an interactive CD-ROM program. Am J Health Syst Pharm. 2006 Jan 1;63(1):59–64. doi: 10.2146/ajhp040609.

- 33. Boh LE, Pitterle ME, Demuth JE. Evaluation of an Integrated Audio Cassette and Computer Based Patient Simulation Learning Program. Am J Pharm Educ. 1990;54:15–22. https://eric.ed.gov/?id=EJ408826

- 34. Javadi M, Kargar A, Gholami K, Hadjibabaie M, Rashidian A, Torkamandi H, Sarayani A. Didactic lecture versus interactive workshop for continuing pharmacy education on reproductive health: a randomized controlled trial. Eval Health Prof. 2015 Sep;38(3):404–18. doi: 10.1177/0163278713513949.

- 35. Lawson K, Shepherd M, Gardner V. Using a computer-simulation program and a traditional approach to teach pharmacy financial management. Am J Pharm Educ. 1991;55:226–35. https://www.researchgate.net/publication/234701225_Using_a_Computer-Simulation_Program_and_a_Traditional_Approach_to_Teach_Pharmacy_Financial_Management

- 36. Beidas RS, Edmunds JM, Marcus SC, Kendall PC. Training and consultation to promote implementation of an empirically supported treatment: a randomized trial. Psychiatr Serv. 2012 Jul;63(7):660–5. doi: 10.1176/appi.ps.201100401. http://europepmc.org/abstract/MED/22549401

- 37. Moran ML. Hypertext computer-assisted instruction for geriatric physical therapists. Phys Occup Ther Geriatr. 2009 Jul 28;10(2):31–53. doi: 10.1080/J148v10n02_03.

- 38. Schermer TR, Akkermans RP, Crockett AJ, van Montfort M, Grootens-Stekelenburg J, Stout JW, Pieters W. Effect of e-learning and repeated performance feedback on spirometry test quality in family practice: a cluster trial. Ann Fam Med. 2011;9(4):330–6. doi: 10.1370/afm.1258. http://www.annfammed.org/cgi/pmidlookup?view=long&pmid=21747104

- 39. Ebadi A, Yousefi S, Khaghanizade M, Saeid Y. Assessment competency of nurses in biological incidents. Trauma Mon. 2015 Nov;20(4):e25607. doi: 10.5812/traumamon.25607. http://europepmc.org/abstract/MED/26839862

- 40. Pun S, Chiang VC, Choi K. A computer-based method for teaching catheter-access hemodialysis management. Comput Inform Nurs. 2016 Oct;34(10):476–83. doi: 10.1097/CIN.0000000000000262.

- 41. Roh YS, Lee WS, Chung HS, Park YM. The effects of simulation-based resuscitation training on nurses' self-efficacy and satisfaction. Nurse Educ Today. 2013 Feb;33(2):123–8. doi: 10.1016/j.nedt.2011.11.008.

- 42. Rosen J, Mulsant BH, Kollar M, Kastango KB, Mazumdar S, Fox D. Mental health training for nursing home staff using computer-based interactive video: a 6-month randomized trial. J Am Med Dir Assoc. 2002;3(5):291–6. doi: 10.1097/01.JAM.0000027201.90817.21.

- 43. de Beurs DP, de Groot MH, de Keijser J, van Duijn E, de Winter RF, Kerkhof AJ. Evaluation of benefit to patients of training mental health professionals in suicide guidelines: cluster randomised trial. Br J Psychiatry. 2016 Dec;208(5):477–83. doi: 10.1192/bjp.bp.114.156208.

- 44. Liu W, Rong J, Liu C. Using evidence-integrated e-learning to enhance case management continuing education for psychiatric nurses: a randomised controlled trial with follow-up. Nurse Educ Today. 2014 Nov;34(11):1361–7. doi: 10.1016/j.nedt.2014.03.004.

- 45. Donyai P, Alexander AM. Training on the use of a bespoke continuing professional development framework improves the quality of CPD records. Int J Clin Pharm. 2015 Dec;37(6):1250–7. doi: 10.1007/s11096-015-0202-4.

- 46. de Beurs DP, de Groot MH, de Keijser J, Mokkenstorm J, van Duijn E, de Winter RF, Kerkhof AJ. The effect of an e-learning supported Train-the-Trainer programme on implementation of suicide guidelines in mental health care. J Affect Disord. 2015 Apr 1;175:446–53. doi: 10.1016/j.jad.2015.01.046. https://linkinghub.elsevier.com/retrieve/pii/S0165-0327(15)00050-6

- 47. Akar SZ, Bebiş H. Evaluation of the effectiveness of testicular cancer and testicular self-examination training for patient care personnel: intervention study. Health Educ Res. 2014 Dec;29(6):966–76. doi: 10.1093/her/cyu055.

- 48. Monteiro RS, Letchworth P, Evans J, Anderson M, Duffy S. Obstetric emergency training in a rural South African hospital: simple measures improve knowledge. Obstetric Anaesthesia 2016; May 19-20, 2016; Manchester Central Convention Complex, UK. 2016 May 11.

- 49. Law GC, Apfelbacher C, Posadzki PP, Kemp S, Tudor Car L. Choice of outcomes and measurement instruments in randomised trials on eLearning in medical education: a systematic mapping review protocol. Syst Rev. 2018 Dec 17;7(1):75. doi: 10.1186/s13643-018-0739-0. https://systematicreviewsjournal.biomedcentral.com/articles/10.1186/s13643-018-0739-0

- 50. Al-Jewair TS, Azarpazhooh A, Suri S, Shah PS. Computer-assisted learning in orthodontic education: a systematic review and meta-analysis. J Dent Educ. 2009 Jun;73(6):730–9.

- 51. Rosenberg H, Grad HA, Matear DW. The effectiveness of computer-aided, self-instructional programs in dental education: a systematic review of the literature. J Dent Educ. 2003 May;67(5):524–32. http://www.jdentaled.org/cgi/pmidlookup?view=long&pmid=12809187

- 52. Rosenberg H, Sander M, Posluns J. The effectiveness of computer-aided learning in teaching orthodontics: a review of the literature. Am J Orthod Dentofacial Orthop. 2005 May;127(5):599–605. doi: 10.1016/j.ajodo.2004.02.020.

- 53. Zhou Y, Yang Y, Liu L, Zeng Z. [Effectiveness of mobile learning in medical education: a systematic review]. Nan Fang Yi Ke Da Xue Xue Bao. 2018 Nov 30;38(11):1395–400. doi: 10.12122/j.issn.1673-4254.2018.11.20. http://www.j-smu.com/Upload/html/2018111395.html

- 54. Liyanagunawardena TR, Williams SA. Massive open online courses on health and medicine: review. J Med Internet Res. 2014 Aug 14;16(8):e191. doi: 10.2196/jmir.3439. http://www.jmir.org/2014/8/e191/

- 55. Kyaw BM, Saxena N, Posadzki P, Vseteckova J, Nikolaou CK, George PP, Divakar U, Masiello I, Kononowicz AA, Zary N, Car LT. Virtual reality for health professions education: systematic review and meta-analysis by the Digital Health Education collaboration. J Med Internet Res. 2019 Jan 22;21(1):e12959. doi: 10.2196/12959. http://www.jmir.org/2019/1/e12959/

- 56. Dunleavy G, Nikolaou CK, Nifakos S, Atun R, Law GC, Car LT. Mobile digital education for health professions: systematic review and meta-analysis by the Digital Health Education Collaboration. J Med Internet Res. 2019 Feb 12;21(2):e12937. doi: 10.2196/12937. http://www.jmir.org/2019/2/e12937/

- 57. Car LT, Kyaw BM, Dunleavy G, Smart NA, Semwal M, Rotgans JI, Low-Beer N, Campbell J. Digital problem-based learning in health professions: systematic review and meta-analysis by the Digital Health Education Collaboration. J Med Internet Res. 2019 Feb 28;21(2):e12945. doi: 10.2196/12945. http://www.jmir.org/2019/2/e12945/

- 58. Semwal M, Whiting P, Bajpai R, Bajpai S, Kyaw BM, Car LT. Digital education for health professions on smoking cessation management: systematic review by the Digital Health Education Collaboration. J Med Internet Res. 2019 Mar 4;21(3):e13000. doi: 10.2196/13000. http://www.jmir.org/2019/3/e13000/

- 59. Wahabi HA, Esmaeil SA, Bahkali KH, Titi MA, Amer YS, Fayed AA, Jamal A, Zakaria N, Siddiqui AR, Semwal M, Car LT, Posadzki P, Car J. Medical doctors' offline computer-assisted digital education: systematic review by the Digital Health Education Collaboration. J Med Internet Res. 2019 Mar 1;21(3):e12998. doi: 10.2196/12998. http://www.jmir.org/2019/3/e12998/

- 60. George PP, Zhabenko O, Kyaw BM, Antoniou P, Posadzki P, Saxena N, Semwal M, Car LT, Zary N, Lockwood C, Car J. Online digital education for postregistration training of medical doctors: systematic review by the Digital Health Education Collaboration. J Med Internet Res. 2019 Feb 25;21(2):e13269. doi: 10.2196/13269. http://www.jmir.org/2019/2/e13269/

- 61. Huang Z, Semwal M, Lee SY, Tee M, Ong W, Tan WS, Bajpai R, Car LT. Digital health professions education on diabetes management: systematic review by the Digital Health Education Collaboration. J Med Internet Res. 2019 Feb 21;21(2):e12997. doi: 10.2196/12997. http://www.jmir.org/2019/2/e12997/

- 62. Xu X, Posadzki PP, Lee GE, Car J, Smith HE. Digital education for health professions in the field of dermatology: a systematic review by Digital Health Education collaboration. Acta Derm Venereol. 2019 Feb 1;99(2):133–38. doi: 10.2340/00015555-3068. https://www.medicaljournals.se/acta/content/abstract/10.2340/00015555-3068
