Abstract

Introduction

Academic health centers are reorganizing in response to dramatic changes in the health‐care environment. To improve value, they and other health systems must become learning health systems, specifically systems that have the capacity to understand performance across the continuum of care and use that information to achieve continuous improvements in efficiency and effectiveness. While learning health system concepts have been well described, the practical steps to create such a system are not well defined. Establishing the necessary infrastructure is particularly challenging at academic health centers due to their tripartite missions and complex organizational structures.

Methods

Using an evidence‐based framework, this article describes a series of organizational‐level interventions implemented at an academic health center to create the structures and processes to support the functions of a learning health system.

Results

Following implementation of changes from 2008 to 2013, system‐level performance improved in multiple domains: patient satisfaction, population health screenings, improvement education, and patient engagement.

Conclusions

This experience can be applied to health systems that wrestle with making system‐level change when existing cultures, structures, and processes vary. Using an evidence‐based framework is useful when developing the structures and processes that support the functions of a learning health system.

Keywords: academic health center, learning health system, quality improvement

1. QUESTIONS OF INTEREST

How can an evidence‐based framework be applied to accomplish a series of organizational interventions that support the creation of a learning health system with documented performance improvements related to patient satisfaction, population health screenings, improvement education, and patient engagement?

2. INTRODUCTION

The Institute of Medicine (IOM) provides a vision of the future health‐care system as one capable of continuous learning.1 Critical characteristics of this learning health system have been well articulated: alignment of incentives to reward high value care, real‐time access to science to guide care while simultaneously capturing information about the care experience to improve care, effective partnerships between clinicians and patients, and a supportive culture.1 However, creating the organizational system to support continuous learning is a daunting undertaking, particularly in traditional academic health centers (AHCs).2, 3

Existing literature has focused on the development of learning health systems in highly integrated delivery systems, which may have greater success aligning resources to achieve these changes. For example, Greene and colleagues describe the Group Health model in which research and care delivery are integrated to inform care improvements.4 Similarly, Psek describes 9 important components when operationalizing a continuous learning system at Geisinger Health System.5

Creating a learning health system in an AHC involves additional difficulties. Academic health centers have variable organizational structures, spanning the spectrum from “loose” affiliation to full integration.6 Aligning culture, strategy, and resources is particularly challenging when negotiating across the academic departments, hospital administration, and faculty group practice governing entities typically found in these institutions and is frequently challenged by severely constrained financial resources.7, 8, 9 Academic individualism, entrepreneurship, and autonomy challenge efforts to standardize care, often impeding efforts to implement evidence‐based care models within and between departments.10

In this paper, we describe one AHC's evolution toward a learning health system. Over a 5‐year period, purposeful changes in organizational structure and process were implemented to support the goal of consistently delivering high value care and continuously learning to improve care. This experience represents a practical guide for building the infrastructure to support health systems aspiring to achieve the IOM vision.

3. SETTING

University of Wisconsin (UW) Health is a public academic health system consisting of 6 hospitals, 90 regionally based clinics, and a physician practice plan. The 1400‐member faculty physician practice group provides care during ~2.4 million outpatient visits and ~28,000 hospitalizations per year at the university hospital and trains more than 550 residents and fellows across 60 accredited programs. Among AHCs, UW Health is distinguished by its balance of advanced tertiary and quaternary care with primary care. Nearly 400 primary care providers care for 360,000 medically homed patients at over 40 different clinic practice locations. The organization identifies patients for whom it provides a medical home as those who had an identified primary care provider and a telephone contact or clinic visit across the organization within the last 3 years.

From 2008 to 2013, UW Health provided clinical services at delivery sites that were owned and operated by one of the 3 separate legal entities that constituted UW Health (School of Medicine and Public Health, UW Hospital and Clinics, and UW Medical Foundation). These clinical delivery sites had significant differences in complexity, including differences between union and nonunion workforces, teaching status, and clinic regulatory and accreditation requirements. This fragmented and complex system was most evident in primary care, as each of these 3 entities had clinics delivering the same clinical service but variable authority to develop and monitor individual clinical standards, guidelines, protocols, and procedures.

In 2008, UW Health began a series of ambitious redesign efforts to achieve the triple aim of better care, better health, and lower costs while simultaneously supporting the research and education missions of the AHC. Quality improvement leaders developed an evidence‐based framework to organize and coordinate complex redesign efforts, providing a simple model to identify critical domains of change at each level of the health system.11 Using this framework, we describe the organization‐level changes implemented from 2008 to 2013 that accomplish the functions of the learning health system (Table 1). This project was exempt from review by the institutional review board because it did not constitute research as defined under 45 CFR 46.102(d).

Table 1.

University of Wisconsin (UW) Health organizational changes and core components of the learning health system

| Change Domains | Organizational Capabilities of the Learning Health System | New UW Health Organizational Infrastructure Supporting the Learning Health System | Examples |
|---|---|---|---|
| Goals and strategies | • Identify problems and potential solutions • Prioritization • Organization • Funding • Align incentives • Ethics and oversight | • Integrated strategic planning process • Integrated governance structure to establish enterprise‐wide improvement goals and provide oversight • System‐level quality department • Internal pay for performance program | • Regular presentations to organizational leadership • Integrated 3 separate quality improvement departments to create the single UW Health Quality, Safety, and Innovation Department • One‐page UW Health scorecard communicating annual improvement goals for inpatient and ambulatory care in patient experience, clinical metrics, and costs • UW Health Quality Council chaired by chief executives of faculty practice and hospital; members included chairs from all academic departments and senior leaders in nursing, operations, and IT |
| Culture | • Patient and family engagement • Culture of learning supported by leaders | • Patient‐ and family‐centered principles consistently guide redesign initiatives • Patients engaged as partners in redesign • Senior leadership supported strategic plan | • Patient engagement microsystem training program: 47 teams engaged patients as members on improvement teams • More than 150 patient and family advisory councils established (see Figure 5)12, 13, 14 • Internal policy work, including establishing protocols for patient volunteers (eg, childcare and transportation) and HIPAA privacy |
| People and processes | • Design • Implement • People and partnerships • Clinician‐patient partnership | • Leadership dyads (physician leaders and clinic/inpatient unit managers) • Established multisectoral partnerships • Standardized care models | • Program was initiated with 41 primary care dyads and 42 inpatient dyads • Standard care models for previsit planning, office visits including role optimization, and chronic care management were developed and have been sequentially implemented across primary care sites • Developed an innovation grant program with insurance partner15 |
| Learning infrastructure | • Evaluate • Adjust • Disseminate • Data and analytics • Evaluation and methodology • Deliverables | • Transparent performance reporting • Center to evaluate evidence and maintain system‐level knowledge base • Care Model Oversight Committee • Standard training and education in improvement science • Multidisciplinary university partnerships for research and education | • Center for Clinical Knowledge Management allowed for system‐level quality work and established practice guidelines, clinical decision‐support tools, nurse delegation protocols, and a system for ongoing knowledge management • Maintenance of Certification Portfolio Program • Worked with Health Innovation Program, multidisciplinary patient advocacy center/law, department of industrial and system engineering, and department of economics |
| Technology | • Science and informatics providing real‐time access to knowledge and data | • EHR embedded tools for clinician and patient decision making | • Health maintenance best practice alerts • Registries established • Patient portal • EHR user optimization16 |

4. METHODS

A series of organization‐level changes were implemented in 5 domains of change (Table 1): goals and strategies, culture, people and processes, learning infrastructure, and technology.

4.1. Goals and strategies

Clear communication and alignment of efforts are needed to achieve system goals but are a difficult task in the AHC with its traditional academic departments and complex governance structures.10 University of Wisconsin Health employed 3 strategies to achieve this: (1) integrated strategic planning, (2) a unified governance structure for establishing improvement goals, and (3) an internal pay‐for‐performance program.

Beginning in 2008, the 3 organizations that comprised UW Health initiated a multiyear strategic planning process. Led by the chief executive officers of the hospital and physician group practice plan and the dean of the school of medicine and public health, the combined strategic plan clearly established common goals. A single, organizational quality council was chartered in 2010. The council prioritized improvement needs by using a standard set of criteria, established annual system‐wide inpatient and outpatient improvement goals, and tackled barriers to progress. The council was co‐chaired by the chief executive officers, and membership included chairs of all 16 academic departments, critical administrative officers, and senior operational leaders at the clinics and hospital. The UW Health Quality, Safety, and Innovation Department provided centralized leadership and improvement resources for achieving the goals set by the quality council. Internal pay‐for‐performance programs were developed to incentivize the work needed to achieve these goals. University of Wisconsin Health pay‐for‐performance programs were implemented in ambulatory and inpatient settings and designed collaboratively with local insurers and physician and administrative leaders. Programs rewarded both improvement and achievement of threshold quality performance goals.

4.2. Culture

Culture can be described as the values, norms, and beliefs that help to define “who we are and how we do things here.”17 Establishing a culture of continuous learning is particularly challenging in academic health settings where there is a healthy tension among the goals of research, education, and clinical care.

At UW Health, patient‐ and family‐centered care provided the unifying principles in support of a common culture. Changes in insurance markets and payment models further reinforced the importance of understanding the patient experience and engaging patients and families in care decisions. From senior leaders to frontline care teams, patient‐ and family‐centeredness was universally identified as a set of values and principles around which the organization would rally.

To this end, the values of patient‐ and family‐centered care were “hard wired” throughout the organization. Patient experience survey results were transparently reported at the physician and advanced practice practitioner level. Goals for patient experience were tied to financial incentives for all academic departments (measured at the department level), and additional incentives were provided to primary care providers. Institution‐wide celebrations were hosted through the year to celebrate outstanding performance in patient experience. Education, training, and engagement goals were introduced to support frontline clinic team successes in involving patients and families in improving care.12, 13

4.3. People and processes

Management teams were critically important in learning how to design, implement change, and continuously adjust processes in response to performance data. Leadership dyads were established at all system levels, ranging from the chief ambulatory medical officer teamed with the chief ambulatory administrative officer to leadership of each patient care unit by a local medical director and clinic manager. Dyads were responsible for reviewing performance, supporting process improvement work, and achieving clinic/unit goals. Ongoing education and training was provided to these leadership teams in support of their roles.

New partnerships were established to engage critical stakeholders in developing sustainable improvements. Working with external partners, such as local insurers and neighboring health‐care providers, provided new opportunities for collaborative learning and funding for innovations in care.15 Patient engagement in redesign efforts provided invaluable insights to creating patient‐centered models of care in both the inpatient and outpatient clinical settings.12 Participation of patient and family advisors increased dramatically over the years, with advisors routinely populating senior level committees and actively working as team members on major projects such as new facility design.

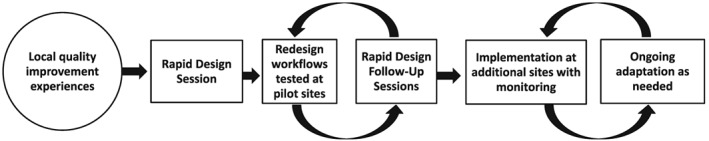

The organization managed risk by testing new processes prior to formal implementation. For example, new workflows for primary care were developed in rapid design sessions with various stakeholders including patients, front line providers and staff, and clinic leadership. The workflows from these design sessions were piloted in a few clinics and then modified in an iterative process (Figure 1) prior to spread across the organization. Ongoing monitoring determined the need for additional changes.

Figure 1.

Testing and implementation process

4.4. Learning infrastructure

Critical to success as a learning system, UW Health created a unified center to serve as the institution's knowledge management resource, the Center for Clinical Knowledge Management (CCKM). The CCKM evaluated internal and external evidence to define the institutional knowledge base that served as the foundation for standardized care processes and clinical decision support tools (guidelines, protocols, electronic health record [EHR] alerts, and order sets). Staffed by a multidisciplinary team including nurses, pharmacists, IT programmers, data analysts, and individuals trained in information services, the center followed standard processes for evaluating existing evidence, creating clinical support tools, monitoring data to evaluate the effectiveness of the tools, and regularly updating all practice guides. Critical stakeholders across the health system (including payers) were engaged in data review and in establishing and maintaining the repository of knowledge from which organizational evidence‐based tools are created.

Simplifying and standardizing process improvement approaches across the organization supported continuous improvement. This organization‐wide improvement approach, branded as the UW Health Improvement Network, facilitated communication about improvement science and promoted standard improvement education and training across traditional silos. The UW Health Improvement Network builds on the Associates in Process Improvement's Model for Improvement,18 Dartmouth microsystem principles,19 and Lean quality improvement methodology. Basic online education about quality improvement was provided to all members of the health system, establishing a “common vocabulary” and providing exposure to a standard improvement method. Teams working on high‐priority improvement goals received support from centralized staff who educated and coached teams by using a common system of improvement tools and methods.

An essential phase of the rapid‐learning health‐care system includes the ability to evaluate the effects of process improvements and then adjust and refine the changes based on this assessment.4 An example of this infrastructure was UW Health's Care Model Oversight Committee, which consisted of leaders and managers from ambulatory operations, primary care clinical services, and the quality improvement department with oversight for the development and dissemination of the standard model of primary care at UW Health. The committee reviewed quantitative and qualitative data collected at implementation sites, recommended modifications of the care model, and monitored dissemination across all primary care clinics. Examples of implementation data included staff and patient satisfaction with tested interventions, time studies to measure the impact of interventions on patient clinic visit time, and changes in predefined quality metrics (vaccine rates, chronic disease monitoring tests, etc.). Care team members were interviewed by improvement coaches during testing, and this input was discussed by the committee. The committee was governed by a charter, voted on issues, and reported to the organization‐wide quality council, which then decided on modifications. Workflows and care team roles for previsit planning, patient rooming, well‐child visits, care coordination, and other care processes were optimized based on these analyses; decisions were made by consensus. This iterative learning capability was an important characteristic of UW Health's system‐level improvement initiatives.

University of Wisconsin Health implemented a Maintenance of Certification (MOC) Portfolio Program as a combined education and care improvement strategy. The program was co‐led by the departments of quality improvement and continuing professional development, creating a strong collaborative partnership between quality improvement and education. The MOC Portfolio Program increased physician engagement and promoted a standardized organizational approach to improvement by requiring physicians to submit projects for MOC Part IV credit by using standard templates for project reporting (an A3) formatted to lead improvement teams through a set of standard improvement processes. Physicians were able to work individually or in teams and could submit their own local improvement project for MOC Part IV credit or enroll in UW Health MOC programs implementing standardized care models to improve quality in an area aligned with organizational strategic goals. For example, during the time period described in this paper, the organization was focused on improving performance in diabetes care. Primary care clinic teams received education in diabetes management including the use of a diabetes registry, data reports, endorsed guidelines for testing and medication titration, and patient self‐management resources. Physicians completing the UW Health MOC Part IV documents attesting their participation in model implementation could receive Part IV MOC credit for their work.

Engaging researchers in the pursuit of the learning health system is challenging in AHCs where research has traditionally been focused on basic sciences; creating a research agenda to understand improvement and implementation is a less well‐developed path to academic advancement.20 Since 2008, UW Health quality improvement leaders and health service researchers purposefully built a strong collaborative partnership for evaluation and dissemination. Health service researchers from the UW Health Innovation Program (HIP) have collaborated with improvement and operational leaders on the design and evaluation of interventions related to improvements in prevention, chronic care, acute care, and organizational design. Researchers have made important contributions to improvement efforts by providing subject matter expertise and informing intervention design and data analyses.

Effective delivery system interventions are often confined to the site of discovery due to a failure to publish and disseminate successes through scholarly venues. Leveraging new partnerships among the academic health system, health service research, and university departments (law, engineering, and economics) expanded dissemination opportunities from local to diverse international audiences. Effective programs, tools, and other materials are available for free to the public through the HIP's online registration‐based portal, HIPxChange.org.21 For example, our toolkit on engaging patients in care redesign is available at https://www.hipxchange.org/PatientEngagement.

4.5. Technology

The EHR holds great promise as a tool for continuous learning. At UW Health, a single EHR (Epic Systems) is used in all clinical delivery sites. Epic modules that support administrative functions are integrated with the clinical platform (eg, professional and hospital billing, pharmacy, registration, and scheduling services). Internal working groups determine which clinical builds are prioritized in line with organizational priorities. Embedded clinical decision support tools are internally built (health maintenance, best practice alerts, and standardized order sets) and are linked to supporting education materials (literature, UpToDate, guidelines, and expert consensus). This education can be accessed through links that are provided when the decision support tool is presented to an ordering physician. Responses to practice alerts were monitored by the CCKM, providing important feedback on how the clinical decision support was used (or not used). This information from frontline care teams was used to improve EHR decision supports and update the institution's knowledge base.

Translating data to information is an important competency of the learning health system. Registries combined with clinical decision support tools in the EHR were designed to support care teams as they provided care to individual patients and managed populations. Improvement teams were informed by combined clinical and financial reports, allowing clinicians working with operational leaders to identify opportunities to improve value.

Performance reporting was done at the organization level and cascaded down to department, clinic, and, where appropriate, physician level. Reports were accessible through the EHR, providing information on performance related to high priority improvement goals and linkages to resources available to support improvement efforts. Whenever possible, reports were formatted to provide information on current performance relative to external benchmarks and internal targets and longitudinal reports showing changes in performance over time. Over the years, there was increased use of statistical process control charts (or 95% confidence intervals) to evaluate the impact of interventions.
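As an illustration of how a statistical process control chart can flag a change in performance, the following is a minimal sketch of a p‐chart with 3‐sigma limits. The chart type, the function names, and the monthly counts are assumptions chosen for illustration; the text does not specify which control charts UW Health used.

```python
from math import sqrt


def p_chart_limits(events, sizes):
    """Return (center, limits) for a p-chart with 3-sigma control limits.

    events[i] is the number of patients meeting the measure in period i,
    sizes[i] is the number of eligible patients in period i.
    """
    if len(events) != len(sizes) or not sizes:
        raise ValueError("events and sizes must be non-empty and of equal length")
    center = sum(events) / sum(sizes)  # pooled proportion across all periods
    limits = []
    for n in sizes:
        sigma = sqrt(center * (1 - center) / n)
        lower = max(0.0, center - 3 * sigma)
        upper = min(1.0, center + 3 * sigma)
        limits.append((lower, upper))
    return center, limits


def out_of_control(events, sizes):
    """Return indices of periods whose proportion falls outside the limits."""
    _, limits = p_chart_limits(events, sizes)
    flagged = []
    for i, (x, n) in enumerate(zip(events, sizes)):
        lower, upper = limits[i]
        if not lower <= x / n <= upper:
            flagged.append(i)
    return flagged


# Hypothetical monthly counts (not UW Health data).
screened = [70, 72, 68, 71, 95]
eligible = [100, 100, 100, 100, 100]
flagged_periods = out_of_control(screened, eligible)
```

In this hypothetical series only the final period falls outside the limits, which is the kind of signal a team might examine after introducing an intervention.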

4.6. Measures

We measured performance across the Triple Aim (patient experience, population health, and cost), creating an organizational scorecard to communicate a parsimonious set of improvement goals for the inpatient and ambulatory services. An additional goal for our redesign was an increase in workforce capacity for local problem solving and sustaining improvements, which we measured by workforce education in improvement science and patient engagement.

Data collection and reporting were done at all levels of the health system including physician, unit and clinic, academic and operational department, and aggregated performance across the whole system. In addition to mandatory performance reporting to fulfill demands from various external programs (value‐based purchasing, meaningful use, Healthcare Effectiveness Data and Information Set reporting, etc.), reports were generated to provide information on strategic performance improvement programs directed to key strategic goals. Patient experience was measured across the organization by using standard Hospital Consumer Assessment of Healthcare Providers and Systems data in the inpatient setting and a vendor in the ambulatory setting that supported physician‐level reporting. Unadjusted percentages on patient experience of care were compared from 2010 to 2015. The organization used a standardized mail survey administered by Avatar International to measure patient experience of care. The survey was mailed to a randomly selected group of primary care patients who had been seen in the clinic in the past 2 weeks. One question in the survey asks to what degree a patient is willing to “recommend a provider's office without hesitation to others.” The aggregate percentage of patients seen in primary care who answered “strongly agree” (the top positive response) to this question was compared over time.
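The top‐box calculation described here can be sketched as follows: the monthly percentage of “strongly agree” responses is computed and the monthly scores are then averaged within each quarter. The record layout, function names, and sample responses are hypothetical and are not taken from the vendor's data format.

```python
from collections import defaultdict

TOP_BOX = "strongly agree"  # the top positive response on the 5-point scale


def monthly_top_box(responses):
    """Return {(year, month): percent answering the top-box response}.

    responses is an iterable of (year, month, answer) tuples.
    """
    counts = defaultdict(lambda: [0, 0])  # [top-box count, total responses]
    for year, month, answer in responses:
        tally = counts[(year, month)]
        tally[1] += 1
        if answer.strip().lower() == TOP_BOX:
            tally[0] += 1
    return {key: 100.0 * top / total for key, (top, total) in counts.items()}


def quarterly_average(monthly):
    """Average monthly top-box scores within each (year, quarter)."""
    by_quarter = defaultdict(list)
    for (year, month), pct in monthly.items():
        quarter = (month - 1) // 3 + 1
        by_quarter[(year, quarter)].append(pct)
    return {key: sum(vals) / len(vals) for key, vals in by_quarter.items()}


# Hypothetical survey responses (not UW Health data).
sample = [
    (2010, 1, "Strongly agree"), (2010, 1, "Strongly agree"),
    (2010, 1, "Strongly agree"), (2010, 1, "Agree"),
    (2010, 2, "Strongly agree"), (2010, 2, "Disagree"),
    (2010, 3, "Strongly agree"), (2010, 3, "Strongly agree"),
]
quarterly_scores = quarterly_average(monthly_top_box(sample))
```

Averaging monthly scores gives each month equal weight regardless of how many surveys were returned; pooling all responses in a quarter would be an alternative design with different weighting.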

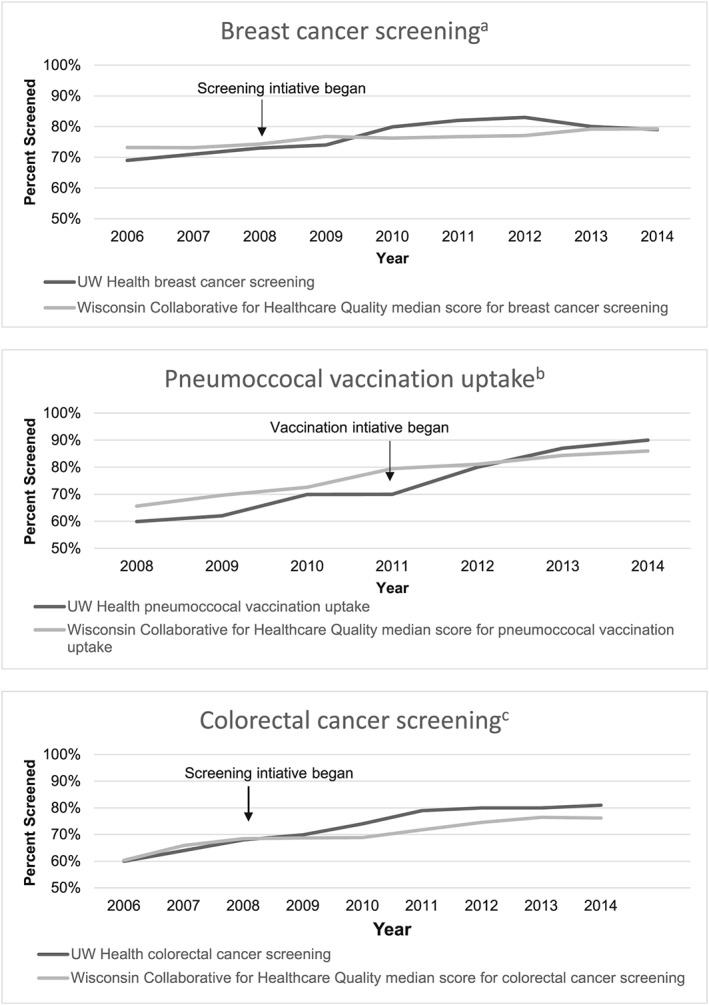

Population health measurement focused on publicly reported performance in the Wisconsin Collaborative for Healthcare Quality (WCHQ). The WCHQ is a voluntary state‐wide consortium of physician groups, health systems, and health plans that has reported on clinical quality measures since 2004. Measure specifications and data standards are listed on the WCHQ website.22 Over 20 measures are publicly reported by WCHQ, but a focused number of measures were used to report progress on the organizational strategic initiatives in chronic and preventative care. Specific initiatives were determined by organizational leaders after reviewing performance compared with peers, capacity and readiness to improve at our organization, and the potential impact on the health of the populations we serve.

Three population health metrics in preventive care were obtained from publicly reported data available from WCHQ and were compared at baseline and after organizational redesign efforts. Pneumococcal vaccination was measured by the percentage of adults greater than or equal to 65 years who had a pneumococcal vaccination. Colorectal cancer screening measured the percentage of adults aged 50 to 75 years who received this screening according to WCHQ reporting specifications. Breast cancer screening measured the number of women who received a mammogram within the previous 24 months compared with all eligible women between the ages of 50 and 74.
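To make the numerator and denominator of such a measure concrete, the following is a simplified sketch of the breast cancer screening rate as defined above. The `Patient` record and its fields are hypothetical, and the actual WCHQ specifications contain additional eligibility and exclusion rules that are not modeled here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Patient:
    # Hypothetical record layout used only for this illustration.
    age: int
    sex: str  # "F" or "M"
    months_since_mammogram: Optional[int]  # None if no mammogram on record


def breast_screening_rate(patients):
    """Percent of eligible women (ages 50 to 74) screened in the last 24 months."""
    eligible = [p for p in patients if p.sex == "F" and 50 <= p.age <= 74]
    if not eligible:
        return None  # rate is undefined without an eligible population
    screened = [
        p for p in eligible
        if p.months_since_mammogram is not None and p.months_since_mammogram <= 24
    ]
    return 100.0 * len(screened) / len(eligible)


# Hypothetical panel (not UW Health data).
panel = [
    Patient(55, "F", 12),    # eligible, screened
    Patient(60, "F", 30),    # eligible, screening overdue
    Patient(70, "F", None),  # eligible, never screened
    Patient(45, "F", 6),     # below the eligible age range
    Patient(65, "M", None),  # not in the eligible population
    Patient(74, "F", 24),    # eligible, screened
]
rate = breast_screening_rate(panel)
```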

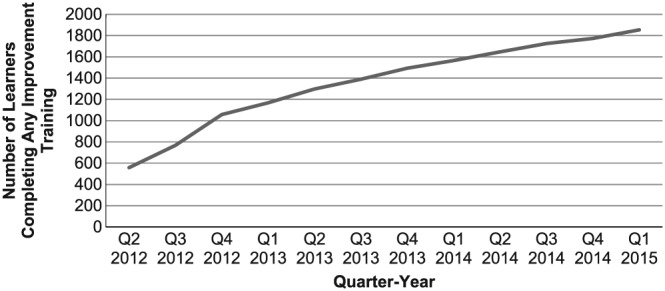

Education in improvement science was measured by the number of faculty and staff who completed the UW Health Improvement Network internal training courses. The number of UW Health staff and faculty completing these trainings was compared over time.

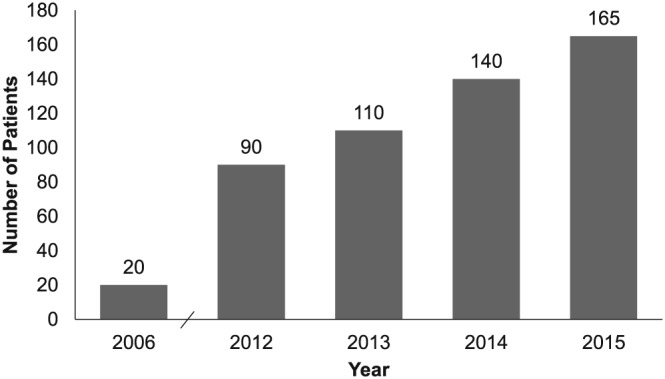

Patient engagement was measured by the number of patient and family advisory councils at UW Health clinics in 2006 and from 2012 to 2015. Data from 2007 to 2010 are not available.

5. RESULTS

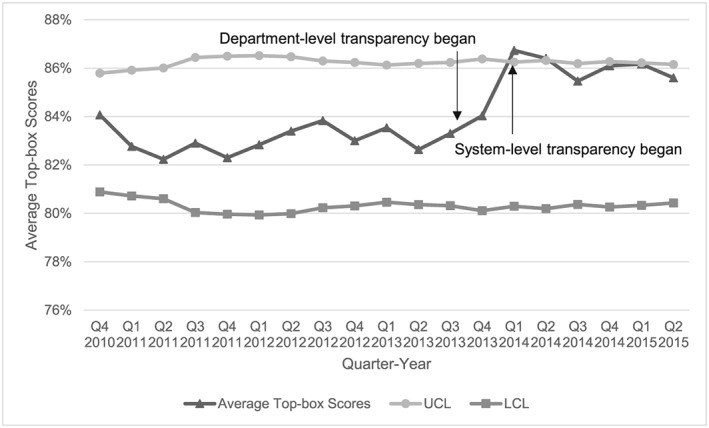

The new and enhanced structures and processes described above were implemented during 2008 to 2013. The impact of any single component of these efforts is difficult to measure, and time between implementation and effect varied. However, changes in performance before and after the implementation period in areas that were identified as strategic priorities likely reflect the impact of the emerging infrastructure. Examples of system‐level performance during this period of redesign include patient satisfaction (Figure 2), population health screenings (Figure 3), workforce process improvement education (Figure 4), and patient engagement (Figure 5).

Figure 2.

Top‐box performance of University of Wisconsin (UW) Health primary care patients on the survey item “recommend a provider's office without hesitation to others.” Monthly scores from the Avatar International satisfaction survey were averaged across quarters. The top‐box score is the percentage of patients who answered “strongly agree” (response options: strongly agree, agree, neither agree nor disagree, disagree, and strongly disagree) to the statement “I would recommend this provider's office without hesitation to others”

Figure 3.

University of Wisconsin (UW) Health population health screening improvements over time compared with the median score of participating organizations in the Wisconsin Collaborative for Healthcare Quality. Note: Details on all Wisconsin Collaborative for Healthcare Quality (WCHQ) measure specifications can be reviewed at www.wchq.org. (a) Breast cancer screening rates are the values reported to the WCHQ that measure the percentage of eligible women who received a mammogram in the previous 24 months. From 2006 to 2009, this included women aged 40 to 68; in 2010, the screening age was changed to 50 to 74 years. (b) Pneumococcal vaccination rates are the values reported to WCHQ that measure the percentage of eligible adults greater than or equal to 65 years who had a pneumococcal vaccination. (c) Colorectal cancer screening rates are the values reported to WCHQ that measure the percent of eligible adult patients who received a colorectal cancer screening in the appropriate screening period (this varies by screening test, eg, 10 year interval for colonoscopy)

Figure 4.

University of Wisconsin (UW) Health staff and faculty educated in the UW Health Improvement Network. Improvement training refers to the UW Health Improvement Network internal courses

Figure 5.

Growth in University of Wisconsin (UW) Health patient and family advisory councils over time. Data from 2007 to 2010 were not available

Between 2010 and 2015, the improvement trend in patient satisfaction (as measured by a contracted external vendor) was 0.078 points per month and significant at P < .001 (Figure 2). A series of interventions were implemented over this period. In November 2013, the first stage of department‐level transparency was implemented (providers in the same department could see scores), and then in March 2014, this was expanded across the system so that any provider being surveyed could see the results of any other provider. We did not test the impact of specific interventions; however, the introduction of transparent reporting in 2013 seemed to quickly drive up scores.
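A trend expressed in points per month can be estimated as the slope of a least‐squares line fitted to the monthly scores. The sketch below assumes ordinary least squares with equally spaced months and uses made‐up scores; the text does not state which model produced the reported trend, and no significance test is included.

```python
def trend_slope(scores):
    """Least-squares slope (points per month) for equally spaced monthly scores."""
    n = len(scores)
    if n < 2:
        raise ValueError("at least two monthly scores are required")
    months = range(n)  # month index 0, 1, 2, ...
    mean_t = sum(months) / n
    mean_y = sum(scores) / n
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(months, scores))
    sxx = sum((t - mean_t) ** 2 for t in months)
    return sxy / sxx


# Hypothetical monthly top-box scores (not UW Health data).
example_scores = [70.0, 70.5, 71.0, 71.5, 72.0, 72.5]
slope = trend_slope(example_scores)
```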

The percentage of individuals receiving mammograms, pneumococcal vaccinations, and colorectal cancer screening tests increased over time from 2009 to 2014 (Figure 3). As reported to WCHQ, mammogram screening rates increased from 74% to 79%, pneumococcal vaccination rates increased from 62% to 90%, and colorectal cancer screening rates increased from 69% to 81%.

As described above, training and education in improvement science and skills was offered through a series of tiered courses, combining didactics and experiential learning. The number of learners who completed these formal courses in improvement science tripled between 2012 and 2015 (Figure 4). This number does not account for additional individuals participating on UW Health improvement teams who received coaching support and education while doing “real” work; that number approaches 7000. In addition to quantitative data, qualitative data were collected longitudinally for one of the major education programs, and this information was used to continuously update education and training. For example, staff interviews demonstrated the value of improvement tools that were provided as part of this education. In the words of one staff participant in the microsystems training program: “…we learnt how to analyze a problem – that fishbone thing, any problem, any place in your life – what can be helped and what can be changed.”

Between 2012 and 2016, the number of patient and family advisory councils increased by 83% from 90 to 165 (Figure 5).
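The reported percentage follows directly from the two counts given above, as this short worked calculation shows:

```latex
\[
\frac{165 - 90}{90} \times 100\% = \frac{75}{90} \times 100\% \approx 83.3\%
\]
```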

6. DISCUSSION

Given the size and inherent complexity of the AHC, one could logically assume that incremental, rather than transformative, change is the only path forward on the journey to becoming a learning health system. Our results challenge this assumption. Using an evidence‐based framework, changes in structures and processes were implemented across multiple domains of change. We focused on constructing an infrastructure that supported continuous learning with the goal of improving the value of health services and integrating research and education.

This article contributes to existing knowledge about learning health systems (IOM4, 5) by providing information about critical infrastructure requirements to support organizational competencies for continuous learning. Our paper describes a series of interventions implemented at the organizational level of the health system; however, these interventions had an impact throughout the entire system, including patient care, services delivered by frontline care teams, and interactions with insurers. Interventions to achieve strategic goals in quality of care and patient experience in a relatively short period of time were purposefully designed to impact the various levels of the health system in critical domains11 without attempting to identify the relative impact of each intervention. Individual organizations seeking to design system change can use this framework to build from their strengths and identify specific areas that need growth.23 The selection of specific interventions will need to vary according to local context and priorities.

Funding new infrastructure is challenging, particularly in loosely integrated systems and those challenged by shrinking operating margins.10 Aligned goals and integrated strategic planning facilitated the necessary financial and in‐kind support from all 3 entities at UW Health. Financial risks were managed through a disciplined improvement and change management approach. Interventions were piloted at multiple sites, and care models were adjusted based on the results prior to system‐wide dissemination. Governance committees used data from tested models to identify required resources for large‐scale change. Resources were requested through operating and strategic budgets. Additional funding was available from pay‐for‐performance programs and insurance partners.

The scale of organizational change described required unwavering support from senior leaders. Aligning goals and strategies across the triple AHC missions of clinical care, research, and education was particularly challenging. Focusing organizational redesign to achieve improvements in the value of delivering patient and family‐centered health‐care services provided the required alignment to galvanize senior leaders responsible for clinical operations. Leaders in health services research and education also were critical partners in designing new infrastructure.

In conclusion, all health systems are facing increasing pressures to transform care delivery to improve value. Our paper identifies the structures and processes that align with the change domains in a learning health system and outlines how they were coordinated to achieve improvement across an AHC. This experience can be applied to other health systems that wrestle with making system‐level change when existing missions, cultures, structures, and processes vary. While other health‐care systems may require a different infrastructure to achieve the goals of a learning health system, similarly mapping these structures and processes against the change domains can serve as a useful organizational framework for developing a learning health system.

CONFLICT OF INTERESTS

The authors have no conflicts of interest to disclose.

ACKNOWLEDGMENTS

The authors would like to thank Betsy Clough and Amy Smyth, UW Health Quality, Safety, and Innovation, for their help with data reporting and Zaher Karp for editing. This work was supported by the Primary Care Academics Transforming Healthcare Writing Collaborative, the HIP, the UW School of Medicine and Public Health from The Wisconsin Partnership Program, and the Community‐Academic Partnerships core of the UW Institute for Clinical and Translational Research through the National Center for Advancing Translational Sciences (grant number UL1TR000427) and the UW Carbone Cancer Center Support Grant from the National Cancer Institute (grant number P30CA014520).

Kraft S, Caplan W, Trowbridge E, et al. Building the learning health system: Describing an organizational infrastructure to support continuous learning. Learn Health Sys. 2017;1:e10034. doi:10.1002/lrh2.10034

REFERENCES

- 1. Institute of Medicine. Best care at lower cost: the path to continuously learning health care in America. In: Institute of Medicine, ed. Committee on the Learning Health Care System. Washington, DC: National Academies Press; 2013.

- 2. Dzau VJ, Cho A, Ellaissi W, et al. Transforming academic health centers for an uncertain future. N Engl J Med. 2013;369:991‐993.

- 3. Friedman C, Rubin J, Brown J, et al. Toward a science of learning systems: a research agenda for the high‐functioning learning health system. J Am Med Inform Assoc. 2015;22:43‐50.

- 4. Greene SM, Reid RJ, Larson EB. Implementing the learning health system: from concept to action. Ann Intern Med. 2012;157:207‐210.

- 5. Psek WA, Stametz RA, Bailey‐Davis LD, et al. Operationalizing the learning health care system in an integrated delivery system. EGEMS (Wash DC). 2015;3:1122.

- 6. Barrett DJ. The evolving organizational structure of academic health centers: the case of the University of Florida. Acad Med. 2008;83:804‐808.

- 7. Dzau V, Gottleib G, Steven L, Schlichting N, Washington E. Essential stewardship priorities for academic health systems. In: Institute of Medicine, ed. Learning Health System Series. Washington, DC: Institute of Medicine; 2014.

- 8. Dzau VJ, Ackerly DC, Sutton‐Wallace P, et al. The role of academic health science systems in the transformation of medicine. Lancet. 2010;375:949‐953.

- 9. Washington AE, Coye MJ, Feinberg DT. Academic health centers and the evolution of the health care system. JAMA. 2013;310:1929‐1930.

- 10. Enders T, Conway J. Advancing the academic health system for the future: a report from the AAMC Advisory Panel on Health Care. Association of American Medical Colleges, 2014.

- 11. Kraft S, Carayon P, Weiss J, Pandhi N. A simple framework for complex system improvement. Am J Med Qual. 2015;30:223‐231.

- 12. Caplan W, Davis S, Kraft SA, et al. Engaging patients at the front lines of primary care redesign: operational lessons for an effective program. Jt Comm J Qual Patient Saf. 2015;40:533‐540.

- 13. Davis S, Berkson S, Gaines ME, et al. Engaging patients in team‐based practice redesign: critical reflections on program design. J Gen Intern Med. 2016;31(6):688‐695.

- 14. Davis S, Gaines ME. Patient engagement in redesigning care toolkit. Center for Patient Partnerships, UW Health Innovation Program, 2014.

- 15. Kraft S, Strutz E, Kay L, Welnick R, Pandhi N. Strange bedfellows: a local insurer/physician practice partnership to fund innovation. J Healthc Qual. 2015;37:298‐310.

- 16. Pandhi N, Yang WL, Karp Z, et al. Approaches and challenges to optimising primary care teams' electronic health record usage. Inform Prim Care. 2014;21:142‐151.

- 17. Ferlie EB, Shortell SM. Improving the quality of health care in the United Kingdom and the United States: a framework for change. Milbank Q. 2001;79:281‐315.

- 18. Langley GL, Moen R, Nolan KM, Nolan TW, Norman CL, Provost LP. The Improvement Guide: A Practical Approach to Enhancing Organizational Performance. San Francisco, CA: Jossey‐Bass Publishers; 2009.

- 19. Dartmouth College. Microsystem Academy. 2011.

- 20. Grumbach K, Lucey CR, Johnston SC. Transforming from centers of learning to learning health systems: the challenge for academic health centers. JAMA. 2014;311:1109‐1110.

- 21. Health Innovation Program. HIPxChange. 2016.

- 22. Wisconsin Collaborative for Healthcare Quality. Wisconsin Collaborative for Healthcare Quality. 2015.

- 23. Weiss JM, Pickhardt PJ, Schumacher JR, et al. Primary care provider perceptions of colorectal cancer screening barriers: implications for designing quality improvement interventions. Gastroenterol Res Pract. 2017;2017:1619747.